Abstract

This paper presents an effective numerical approach for solving a class of space-time fractional diffusion equations. By discretizing the time-fractional derivative, the original problem is transformed into a semi-discrete problem, which is then solved by the fractional reproducing kernel collocation method (FRKCM). The stability and convergence of the method are analyzed, and numerical examples demonstrate its feasibility and reliability.

1 Introduction

This paper focuses on the numerical solution of the space-time fractional diffusion equation

with the initial condition

and the boundary conditions

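where \(0<\alpha <1\) and \(1<\beta <2\), a(x, t) is a nonnegative function, f(x, t) is the source term, and u(x, t) is the unknown function. \({^C_0D_t^\alpha }u(x,t)\) is the left Caputo time-fractional derivative of order \(\alpha \), defined as

\[{^C_0D_t^\alpha }u(x,t)=\frac{1}{\Gamma (1-\alpha )}\int _0^t (t-\xi )^{-\alpha }\frac{\partial u(x,\xi )}{\partial \xi }\,\textrm{d}\xi ,\]

and \({^{C}_{0}D_{x}^{\beta }}u(x,t)\) is the left Caputo space-fractional derivative of order \(\beta \), defined as

\[{^{C}_{0}D_{x}^{\beta }}u(x,t)=\frac{1}{\Gamma (2-\beta )}\int _0^x (x-\xi )^{1-\beta }\frac{\partial ^2 u(\xi ,t)}{\partial \xi ^2}\,\textrm{d}\xi .\]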
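As a quick sanity check on these definitions, recall that the Caputo derivative of a monomial has a well-known closed form: \({^C_0D_x^{\beta }}x^k=\frac{\Gamma (k+1)}{\Gamma (k+1-\beta )}x^{k-\beta }\) for integers \(k\ge \lceil \beta \rceil \), and zero for smaller nonnegative integers k. The Python sketch below evaluates this formula. The function name `caputo_monomial` is an illustrative choice and does not come from the paper.

```python
import math


def caputo_monomial(k: int, order: float, x: float) -> float:
    """Left Caputo derivative (lower limit 0) of x**k, evaluated at x > 0.

    `k` is a nonnegative integer and `order` a positive non-integer.
    """
    m = math.ceil(order)  # number of classical derivatives taken inside the integral
    if k < m:
        # The m-th classical derivative of x**k is identically zero.
        return 0.0
    return math.gamma(k + 1) / math.gamma(k + 1 - order) * x ** (k - order)


beta = 1.5
for k in (0, 1, 2, 3):
    print(k, caputo_monomial(k, beta, 0.5))
```

For \(1<\beta <2\), constants and linear terms are annihilated, while \(x^2\) is mapped to \(2x^{2-\beta }/\Gamma (3-\beta )\), a fractional power of x.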

Fractional diffusion equations were introduced to describe anomalous diffusion phenomena in the transport processes of complex or disordered systems [1,2,3,4,5,6,7]. For flow in porous media, the fractional space derivative models large motions through highly conductive layers or fractures, while the fractional time derivative describes particles that remain motionless for extended periods of time [4].

Recently, the reaction-diffusion equation, a classical parabolic partial differential equation, has attracted much attention, with research interest focused on the well-posedness of its solutions [8,9,10,11,12,13,14], especially for models with a Caputo time-fractional derivative [9]. The authors of [15] introduced a new hybrid scheme based on a finite difference method and a Chebyshev collocation method for solving the space-time fractional advection–diffusion equation, and investigated the corresponding convergence and stability. Baseri et al. [16] employed shifted Chebyshev and rational Chebyshev polynomials to obtain the approximate solution of (1.1). In [17], the authors treated this equation using normalized Bernstein polynomials. Feng et al. [18] applied the finite element method to obtain a numerical solution of the space-time fractional diffusion equation.

Over the past 30 years, the numerical analysis theory of reproducing kernels has been widely used in solving various differential equations [19,20,21,22,23,24,25,26,27]. The reproducing kernel particle method was proposed in 1995 by Liu et al. [28] as an extension of the smoothed particle hydrodynamics method. Mahdavi et al. applied the gradient reproducing kernel in conjunction with the collocation method to solve second- and fourth-order equations [29]. In solving fractional differential equations, the approximate solution given by the fractional reproducing kernel method is more accurate than that of the traditional integer-order reproducing kernel method [30]. It is therefore important to seek efficient numerical algorithms for solving fractional-order problems. Chen et al. [31] first constructed a new fractional weighted reproducing kernel space and presented the exact representation of its reproducing kernel function. In 2021, the authors of [32] presented a new numerical method for a class of fractional differential equations based on the fractional reproducing kernel method, which can reduce computational cost and provide highly accurate approximate solutions.

In this paper, a new numerical algorithm is proposed to solve the space-time fractional diffusion equation. First, the time-fractional derivative is discretized by the L1 scheme, a classical discretization of the time-fractional derivative that is widely used in the literature [33,34,35,36]. This difference approximation uses the solution at all past time levels, which ensures the stability of the discretization. After this treatment, the space-time fractional problem (1.1)–(1.3) is transformed into a semi-discrete problem that contains only the spatial fractional derivative. Then the fractional reproducing kernel collocation method (FRKCM) is proposed to solve the semi-discrete problem. A fractional reproducing kernel space is selected according to the order of the space-fractional derivative, and the reproducing kernel function and its fractional derivative are calculated. To obtain the approximate solution of the semi-discrete problem, a fractional basis is constructed from the reproducing kernel and polynomial functions. Compared with the traditional practice of using integer-order polynomials as the basis, a fractional-order polynomial basis is more effective for fractional partial differential equations. Next, for three different types of collocation points, the undetermined coefficients in the approximate solution are determined by the collocation method. Compared with the traditional reproducing kernel method, FRKCM avoids the Schmidt orthogonalization process. Finally, the uniform convergence, stability and error estimate of the approximate solution are analyzed. In addition, a global error estimate and a stability analysis are carried out for the semi-discrete iterative scheme obtained from the difference approximation of the time-fractional derivative. Numerical examples with several types of collocation points indicate the universality and flexibility of the method.

The remaining sections are organized as follows. Section 2 presents a new numerical method to solve (1.1)–(1.3). In Sect. 3, the stability and convergence analysis is carried out. Numerical examples are shown in Sect. 4 to demonstrate the efficiency and accuracy of the method. Section 5 ends the paper with a brief conclusion.

2 Numerical method

In this section, we construct the numerical method for solving problem (1.1)–(1.3).

2.1 Construction of the basis

First, we adopt the L1 scheme described in [36] to approximate the time-fractional derivative.

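Let \(t_{n}=n\Delta t,\ n=0,1,\ldots ,N\), where \(\Delta t=\frac{T}{N}\) is the time step. Then

\[{^C_0D_t^\alpha }u(x,t_n)=\frac{1}{\Gamma (1-\alpha )}\sum _{j=0}^{n-1}\int _{t_j}^{t_{j+1}}(t_n-\xi )^{-\alpha }\frac{\partial u(x,\xi )}{\partial \xi }\,\textrm{d}\xi . \tag{2.1}\]

We approximate \(\dfrac{\partial u(x,\xi )}{\partial \xi }\) in (2.1) by \(\dfrac{u(x,t_{j+1})-u(x,t_j)}{\Delta t}\) on each subinterval. Explicitly, the L1 approximation of the time-fractional derivative of order \(\alpha \) at \(t=t_n\) is given by

\[{^C_0D_t^\alpha }u(x,t_n)=\frac{1}{\alpha _0}\sum _{j=0}^{n-1}b_j\left[ u(x,t_{n-j})-u(x,t_{n-j-1})\right] +R_{n,u}, \tag{2.2}\]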

where \(\alpha _{0}=\Gamma (2-\alpha )\Delta t^{\alpha }\), \(b_{j}=(j+1)^{1-\alpha }-j^{1-\alpha }\) and \(R_{n,u}\) is the truncation error.
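The coefficients \(\alpha _0\) and \(b_j\) are all that is needed to evaluate the L1 approximation numerically. The Python sketch below assumes the standard form of the L1 formula, \(\frac{1}{\alpha _0}\sum _{j=0}^{n-1}b_j\left[ u(t_{n-j})-u(t_{n-j-1})\right] \), and applies it to the test function \(u(t)=t^2\), whose Caputo derivative is \(2t^{2-\alpha }/\Gamma (3-\alpha )\). The function names and the test function are illustrative choices, not taken from the paper.

```python
import math


def l1_weights(n: int, alpha: float) -> list:
    """Weights b_j = (j+1)^(1-alpha) - j^(1-alpha) for j = 0, ..., n-1."""
    return [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(n)]


def l1_caputo(samples, n: int, dt: float, alpha: float) -> float:
    """L1 approximation of the Caputo derivative of order alpha at t_n = n*dt.

    `samples[k]` must hold u(t_k) for k = 0, ..., n.
    """
    alpha0 = math.gamma(2 - alpha) * dt ** alpha
    b = l1_weights(n, alpha)
    return sum(b[j] * (samples[n - j] - samples[n - j - 1]) for j in range(n)) / alpha0


alpha, N, T = 0.5, 200, 1.0
dt = T / N
samples = [(k * dt) ** 2 for k in range(N + 1)]      # u(t) = t^2 on the time grid
approx = l1_caputo(samples, N, dt, alpha)
exact = 2.0 * T ** (2 - alpha) / math.gamma(3 - alpha)
print(approx, exact, abs(approx - exact))
```

The weights start at \(b_0=1\), decrease monotonically, and telescope to \(\sum _{j=0}^{n-1}b_j=n^{1-\alpha }\), which is easy to confirm with `l1_weights`.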

It is easy to verify the following properties for the coefficients \(b_{j}\)

Denote by \(u^n(x)\) the approximate solution of \(u(x,t_n)\). The semi-discrete form of (1.1) at the points \(\left\{ {t_{n}}\right\} _{n=0}^{N}\) is given by

The boundary conditions (1.3) can be discretized as follows

Here, we briefly introduce some necessary background on reproducing kernels, which will be used throughout the rest of the paper.

Definition 2.1

([31]) The reproducing kernel space \(W_2^1[0,1]\) is defined by

and endowed with the inner product and norm respectively

Definition 2.2

([31]) The fractional reproducing kernel space \(W_2^\beta [0,1]\) is defined by

and endowed with the inner product and norm respectively

Lemma 2.1

([31]) The expression of the reproducing kernel function of \(W_{2}^{\beta }[0,1]\) is

with the corresponding left Caputo fractional derivative

Now, based on the reproducing kernel K(x, y) in fractional reproducing kernel space \(W_{2}^{\beta }[0,1]\), the approximate solution of (2.4)–(2.5) can be constructed.

Theorem 2.1

Suppose \(\left\{ x_{i}\right\} _{i=1}^{M}\) is a dense subset in [0, 1], then \(\left\{ 1,x^{2},K(x,x_{i}) \right\} _{i=1}^{M} \) are linearly independent on \(W_2^\beta [0,1]\).

Proof

Assume that there exist constants \(\left\{ \mu _i\right\} _{i=1}^{M+2}\) such that

Taking \(h_k(x)\in W_2^\beta [0,1]\) such that

Then we have the third order derivative of (2.9) with respect to x

and

Since \(\left\langle K^{(3)}(x,x_i),h_k{(x)}\right\rangle _\beta =h_k^{(3)}(x_i)\), we see

then

which means that \(\mu _1=\mu _2=0\), and completes the proof. \(\square \)

Define the approximate solution space

Apparently, the number of basis functions in the space \(S_{M+2}\) is \(M+2\), and \(S_{M+2}\) is a subspace of \(W_2^\beta [0,1].\)

2.2 Approximate method

Define a linear operator \(L:W_{2}^{\beta }[0,1]\longrightarrow W_{2}^{1}[0,1]\) as follows

Lemma 2.2

\(L:W_{2}^{\beta }[0,1]\longrightarrow W_{2}^{1}[0,1]\) is a bounded linear operator.

Proof

In view of the definition of L, it is obvious that L is a linear operator.

According to reproducing property of K(x, y), we have

By virtue of the Cauchy-Schwarz inequality, we obtain

where \(\partial _{x}LK\) is continuous on [0, 1], and its norm is bounded.

Then

The proof is completed. \(\square \)

Next, we describe how to solve the semi-discrete problem (2.4)–(2.5) using the presented FRKCM. We seek the approximate solution of problem (2.4)–(2.5)

such that the collocation equations

where \(\left\{ x_{k}\right\} _{k=1}^{M}\subset [0,1]\) are the collocation points specified below.

In the numerical experiments below, we choose three different types of collocation points

Once the undetermined coefficients \(\left\{ a_{i}\right\} _{i=1}^{M+2} \) are obtained by solving (2.12)–(2.14), the approximate solution \(u^n_{m}(x)\) is determined. Therefore, the algorithm for solving the space-time fractional diffusion problem (1.1)–(1.3) can be divided into two steps. First, we discretize the time-fractional derivative to obtain the semi-discrete problem (2.4)–(2.5); then we use the FRKCM to solve this semi-discrete problem. The above process is summarized as Algorithm 1.
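The time-marching half of this two-step procedure can be sketched in a few lines of Python. The sketch is not the paper's Algorithm 1. It replaces the spatial FRKCM solve by a scalar stand-in, that is, it assumes the model problem \({^C_0D_t^\alpha }u=-\lambda u+f(t)\) with \(u(0)=0\), so that the "spatial solve" at each time level is a single division. The function `march_l1`, the parameter `lam` and the manufactured solution \(u(t)=t^2\) are all illustrative assumptions.

```python
import math


def march_l1(alpha: float, lam: float, f, T: float, N: int) -> list:
    """Implicit L1 time stepping for D_t^alpha u = -lam*u + f(t), u(0) = 0.

    With b_0 = 1 the L1 formula at t_n rearranges to
        (1 + alpha0*lam) * u^n
            = u^{n-1} - sum_{j=1}^{n-1} b_j (u^{n-j} - u^{n-j-1}) + alpha0 * f(t_n),
    so every step needs the whole history of earlier time levels.
    """
    dt = T / N
    alpha0 = math.gamma(2 - alpha) * dt ** alpha
    b = [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(N)]
    u = [0.0]
    for n in range(1, N + 1):
        history = sum(b[j] * (u[n - j] - u[n - j - 1]) for j in range(1, n))
        rhs = u[n - 1] - history + alpha0 * f(n * dt)
        # In the full method this division is replaced by the spatial collocation solve.
        u.append(rhs / (1.0 + alpha0 * lam))
    return u


alpha, lam, T, N = 0.5, 1.0, 1.0, 100


def source(t: float) -> float:
    # Chosen so that u(t) = t^2 solves the model problem exactly.
    return 2.0 * t ** (2 - alpha) / math.gamma(3 - alpha) + lam * t ** 2


u_num = march_l1(alpha, lam, source, T, N)
print(abs(u_num[-1] - T ** 2))
```

The history sum makes the cost of the time loop grow quadratically in N, which is the usual price of the nonlocal memory in the L1 scheme.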

3 Stability and convergence analysis

3.1 The stability and convergence of the approximate solution

We first investigate the stability analysis of the approximate solution given by FRKCM.

Theorem 3.1

Suppose \({\widetilde{f}}(x,t_n)=f(x,t_n)+\delta \), where \(\delta \) is a perturbation and \(L{\widetilde{u}}^n_m(x)={\widetilde{f}}(x,t_n)\), then

where H is a nonnegative constant.

Proof

where H is a nonnegative constant. The proof is completed. \(\square \)

Next, we present three lemmas for analysing the convergence of the approximate solution.

Lemma 3.1

Suppose \(u^n(x)\) is the solution of the semi-discrete problem (2.4)–(2.5), \(u^n_m(x)\) is the approximate solution of \(u^n(x)\), \(\left\{ x_i\right\} _{i=1}^{\infty }\) is a dense set in [0, 1], then

Proof

Let R(x, y) be the reproducing kernel function of the reproducing kernel space \(W_2^1[0,1]\). From (2.10), there holds

Taking the inner product of (3.1) with \(R(x,x_i)\), we obtain

Due to the reproducing property of reproducing kernel, (3.2) becomes

It follows from (2.12) to (2.14) that

Taking the inner product of (3.4) with \(R(x_k,x_i)\), then we have

also

Combining (3.3) and (3.5), it can be concluded

The proof is completed. \(\square \)

Lemma 3.2

([37]) \(X=\left\{ u^n_{m}(x)|\ \Vert u^n_{m}(x)\Vert _{\beta }\le \gamma \right\} \) is a compact set of C[0, 1], where \(\gamma \) is a constant.

Lemma 3.3

Suppose that \(u^n(x)\) is the solution of the semi-discrete problem (2.4)–(2.5), \(u^n_m(x)\) is the approximate solution of \(u^n(x)\), and \(\left\{ x_{i}\right\} _{i=1}^{\infty }\) is a dense set in [0, 1]. Then \(\Vert u^n_m(x)-u^n(x)\Vert _\beta \rightarrow 0.\)

Proof

Since \(u^n_{m}(x)=a_{1}+a_{2}x^{2}+\sum _{i=1}^{M}a_{i+2}K(x,x_{i})\) is continuous on [0, 1], we know \(\Vert u^n_{m}(x)\Vert _{\beta }\le \gamma \), where \(\gamma \) is a constant. From Lemma 3.2, it is known that X is a compact set, hence there exists a convergent subsequence \(\left\{ u^n_{m_{l}}(x)\right\} _{l=1}^{\infty } \subset \left\{ u^n_{m}(x)\right\} _{m=1}^{\infty }\subset X\).

Assume that the subsequence \(\lbrace u^n_{m_{l}}(x)\rbrace _{l=1}^{\infty }\) converges to \(u(x,t_n)\in X\), i.e.

According to Lemma 3.1, we have

Hence,

As \(l\rightarrow \infty \), take the limit of (3.6) to get

Due to the fact that \(Lu^n(x)-f(x,t_n)\) is a continuous function and \(\left\{ x_{i}\right\} _{i=1}^{\infty }\) is a dense set, we have

Thus, \(u^n(x)\) is the solution of the semi-discrete problem (2.4)–(2.5).

Since L is a bounded linear operator,

As \(l\rightarrow \infty \), take the limit of (3.7) to get

Since \(L^{-1}:W_2^1[0,1]\rightarrow W_2^\beta [0,1]\) exists, we have

\(\square \)

Now, we prove that the approximate solution uniformly converges to the solution of the semi-discrete problem (2.4)–(2.5).

Theorem 3.2

Suppose that \(u^n(x)\) is the solution of the semi-discrete problem (2.4)–(2.5), \(u^n_m(x)\) is the approximate solution of \(u^n(x)\), then \(u^n_{m}(x)\) is uniformly convergent to \(u^n(x)\).

Proof

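From the reproducing property and Lemma 3.3, we have

\[|u^n_m(x)-u^n(x)|=\left| \left\langle u^n_m(\cdot )-u^n(\cdot ),K_x(\cdot )\right\rangle _\beta \right| \le \Vert u^n_m-u^n\Vert _\beta \,\Vert K_x(\cdot )\Vert _\beta \le \eta \,\Vert u^n_m-u^n\Vert _\beta \rightarrow 0,\]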

where \(\Vert K_x(\cdot )\Vert _\beta =\sqrt{\langle K_x(\cdot ),K_x(\cdot ) \rangle _\beta }=\sqrt{K_x(x)}<\eta \). Namely, \(u^n_{m}(x)\) uniformly converges to \(u^n(x)\). \(\square \)

Here, we establish error estimation for the approximate solution given by FRKCM.

Theorem 3.3

Let \(\Delta x=\frac{1}{M}\), where M is a positive integer, let \(u^n(x)\) be the solution of the semi-discrete problem (2.4)–(2.5), and let \(u^n_m(x)\) be the approximate solution of \(u^n(x)\). Then \(|u^n_m(x)-u^n(x)|\le o\left( \Delta x\right) \).

Proof

There exists \(x_i\in [0,1]\), such that \(|x-x_i|\le \Delta x\). From Lemma 3.1, we obtain

thus,

As \(L^{-1}\) exists,

From Lagrange mean value theorem, we have

Therefore,

Making use of Lemma 3.3 and the bounded properties of \(\Vert L^{-1}\Vert \) and \(\left\| \dfrac{\partial }{\partial _{\xi }}LK(\xi ,\cdot )\right\| \), it is easy to verify that

The proof is completed. \(\square \)

3.2 The stability and convergence of the semi-discrete scheme

Now, we introduce the following lemmas for the stability of the semi-discrete scheme (2.4).

Lemma 3.4

Suppose \(u(x)\in W_2^\beta [0,1]\), then \(u(x)\in W_2^{\frac{\beta }{2}}[0,1] \).

Proof

It is enough to show that \(\dfrac{\partial }{\partial x}\left( {_0^CD_x^{\frac{\beta }{2}}}u(x)\right) \in L^{2}[0,1]\). In fact

Obviously, \(\dfrac{1}{\Gamma ({1-\frac{\beta }{2}})}u'(0)x^{-\frac{\beta }{2}}\in L^2[0,1]\), and

thereby \(\dfrac{1}{\Gamma ({1-\frac{\beta }{2}})}\int _{0}^{x}(x-t)^{-\frac{\beta }{2}}u''(t)\textrm{d}t \in L^1[0,1]\).

According to the fact, \(\dfrac{1}{\Gamma ({1-\frac{\beta }{2}})}\int _{0}^{x}(x-t)^{-\frac{\beta }{2}}u''(t)\textrm{d}t \in L^2[0,1]\), we have \(\dfrac{\partial }{\partial x}\left( {_0^CD_x^{\frac{\beta }{2}}}u(x)\right) \in L^{2}[0,1]\). From the definition of \(W_2^{\frac{\beta }{2}}[0,1]\) given by (2.6), we know that \(u(x)\in W_2^{\frac{\beta }{2}}[0,1]\). \(\square \)

Lemma 3.5

([38, 39]) For \(u,v \in W_{2}^{\frac{\beta }{2}}[0,1]\), we have

Lemma 3.6

Let \(u^n(x)\in W_{2}^{\beta }[0,1](n=1,2,\ldots ,N)\) be the solution of the semi-discrete problem (2.4)–(2.5), then

where \(C>0\).

Proof

Here we use induction to prove (3.8). First (2.4) can be transformed into the following equivalent form in case of \(n=1\).

Multiplying (3.9) by \(u^1(x)\) and integrating on [0, 1] gives

According to Lemma 3.5, we see

By using the properties of \(b_{j}\) given by (2.3) and (3.11), (3.10) can be rewritten as follows

where \(C>0\), which demonstrates that (3.8) is valid for the case \(n=1\).

Now assume that the inequality (3.8) is true for \(n=1,2,\ldots ,N-1\). Taking \(n=N\) in (2.4), we obtain

Multiplying (3.12) by \(u^N(x)\) and integrating on [0, 1] gives

By Lemma 3.5, the properties of \(b_{j}\) given by (2.3) and the Cauchy-Schwarz inequality, we arrive at

where \(C>0\). \(\square \)

Here, we prove that the semi-discrete scheme (2.4) is unconditionally stable.

Theorem 3.4

Suppose that \(u^n(x)\in W_{2}^{\beta }[0,1]\) is the solution of the semi-discrete problem (2.4)–(2.5) at \(t=t_n\). Then, the semi-discrete scheme (2.4) is unconditionally stable.

Proof

Suppose that \(v^n(x)\) is the solution of (2.4) with the corresponding initial condition v(x, 0), so that \(v(x,t_n),\ n=1,2,\ldots ,N\), is also a solution of the semi-discrete problem (2.4)–(2.5). Similar to (2.4), there holds

Let \(\varepsilon ^{n}=u^n(x)-v^n(x)\) be the error, then subtracting (2.4) from (3.15) gives

Further, with the help of Lemma 3.6 and (3.16), we obtain

which means that the semi-discrete problem (2.4)–(2.5) is unconditionally stable. \(\square \)
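What unconditional stability means in practice can be illustrated on a scalar stand-in for the spatial operator. The Python sketch below assumes the model problem \({^C_0D_t^\alpha }u=-\lambda u\) with \(\lambda \ge 0\), which is not the paper's equation. By linearity, the difference of two L1 solutions started from initial data that differ by \(\varepsilon ^0\) satisfies the same homogeneous recursion, so it is enough to propagate \(\varepsilon ^0\) itself. The function name and parameter values are illustrative.

```python
import math


def propagate_perturbation(eps0: float, alpha: float, lam: float,
                           dt: float, steps: int) -> list:
    """Propagate an initial perturbation eps0 through the homogeneous L1 recursion
    (1 + alpha0*lam) * eps^n = eps^{n-1} - sum_{j=1}^{n-1} b_j (eps^{n-j} - eps^{n-j-1}).
    """
    alpha0 = math.gamma(2 - alpha) * dt ** alpha
    b = [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(steps)]
    eps = [eps0]
    for n in range(1, steps + 1):
        history = sum(b[j] * (eps[n - j] - eps[n - j - 1]) for j in range(1, n))
        eps.append((eps[n - 1] - history) / (1.0 + alpha0 * lam))
    return eps


# The perturbation should never exceed its initial size, whatever the step size.
for dt in (10.0, 1.0, 0.01):
    eps = propagate_perturbation(1e-3, alpha=0.7, lam=5.0, dt=dt, steps=50)
    print(dt, max(abs(e) for e in eps))
```

The bound holds for every step size because the right-hand side is a combination of earlier perturbations with nonnegative weights \(1-b_1\), \(b_j-b_{j+1}\) and \(b_{n-1}\) that sum to one, divided by a factor of at least one.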

Next, we introduce a lemma to prove the convergence order of the semi-discrete scheme (2.4).

Lemma 3.7

([40]) Suppose that \(\theta \) is a nonnegative constant, \(s_{m}\) and \(r_{k}\) are nonnegative sequences, and the sequence \(v_{m}\) satisfies

then \(v_{m}\) satisfies

Finally, we show that the convergence order of the semi-discrete scheme (2.4) is \(O(\Delta t)\).

Theorem 3.5

Suppose u(x, t) is the exact solution of (1.1)–(1.3), and \(\eta ^{n}=u(x,t_n)-u^n(x),\ (n=1,2,\ldots ,N)\) is the error function. Then the semi-discrete scheme (2.4) has convergence order \(O(\Delta t)\).

Proof

First (1.1) can be rewritten as

where \(R_{n,u}\) is the truncation error satisfying \(R_{n,u}\le C_{u}O(\Delta t^{2-\alpha })\) (see [35], pp. 1535–1538), and \(C_{u}\) is a constant depending only on u.

Subtracting (2.4) from (3.17), we obtain

Multiplying (3.18) by \(\eta ^{n}\) and integrating on [0, 1], we obtain

By virtue of the Cauchy–Schwarz inequality, Lemma 3.5 and the properties of \(b_{j}\) given by (2.3), we obtain

which leads to

Summing up for n from 1 to M, and due to \(\Vert \eta ^{0}\Vert _{L^2}=0\), we have

and also, the inequality (3.19) can be rewritten as

Employing Lemma 3.7 and the properties of \(b_{j}\) given by (2.3), we have

where \(C>0\). The proof is completed. \(\square \)
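The truncation-error bound \(O(\Delta t^{2-\alpha })\) quoted from [35] in the proof above can be observed numerically. The Python sketch below applies the standard L1 formula to the test function \(u(t)=t^2\) at \(t=1\) and estimates the order from two successive step sizes. The test function, the grid sizes and the name `l1_error` are illustrative assumptions.

```python
import math


def l1_error(alpha: float, N: int, T: float = 1.0) -> float:
    """Absolute error of the L1 approximation of D_t^alpha (t^2) at t = T with N steps."""
    dt = T / N
    alpha0 = math.gamma(2 - alpha) * dt ** alpha
    u = [(k * dt) ** 2 for k in range(N + 1)]
    approx = sum(
        ((j + 1) ** (1 - alpha) - j ** (1 - alpha)) * (u[N - j] - u[N - j - 1])
        for j in range(N)
    ) / alpha0
    exact = 2.0 * T ** (2 - alpha) / math.gamma(3 - alpha)
    return abs(approx - exact)


alpha = 0.5
errors = {N: l1_error(alpha, N) for N in (64, 128, 256)}
# Halving the step size should reduce the error by about 2^(2 - alpha).
order = math.log(errors[128] / errors[256]) / math.log(2.0)
print(order, 2 - alpha)
```

The observed order describes the consistency of the time discretization only. The theorem's \(O(\Delta t)\) rate is a bound on the error of the semi-discrete solution and is more conservative.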

4 Numerical results

In this section, four examples are solved to verify our theoretical findings. Denote by \(u^N_m(x)\) the approximate solution determined by the method presented in this paper. Example 4.1 is taken from [15, 16], and Examples 4.2 and 4.3 are taken from [17] and [18], respectively; we compare our numerical results with theirs. All computations were performed using Mathematica 9.0. The accuracy of the proposed method is measured using the \(\Vert e\Vert _{abs}\), \( \Vert e\Vert _{L_2}\) and \( \Vert e\Vert _{L_{\infty }}\) error norms for the test problems. The error norms and the convergence rates in the time and space directions are respectively defined as
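For readers who want to reproduce such tables, the Python sketch below shows one common way to compute discrete error norms and an observed convergence rate from two runs. The root-mean-square form of the discrete \(L_2\) norm and the rate formula \(\log (e_1/e_2)/\log (n_2/n_1)\) are conventional choices assumed here; they may differ in detail from the definitions used in the paper. The function names are illustrative.

```python
import math


def error_norms(approx, exact):
    """Return (max-norm error, root-mean-square error) on a common set of sample points."""
    diffs = [abs(a - e) for a, e in zip(approx, exact)]
    linf = max(diffs)
    l2 = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return linf, l2


def observed_order(err_coarse: float, err_fine: float,
                   n_coarse: int, n_fine: int) -> float:
    """Observed convergence order when the number of steps grows from n_coarse to n_fine."""
    return math.log(err_coarse / err_fine) / math.log(n_fine / n_coarse)


# Made-up errors that halve when the number of steps doubles: first-order behaviour.
print(observed_order(1.0e-2, 5.0e-3, 10, 20))
```

The same `observed_order` function applies in the space direction with M in place of N.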

Example 4.1

Consider the following space-time fractional diffusion problem

The exact solution is \(u(x,t)=x^2(1-x)e^{-t}\). \(\Vert e\Vert _{abs}\) is compared with [15, 16] when \(N=M=3\) for different values of \(\alpha \) at \(T=1\). The numerical results presented in Table 1 indicate that, for errors of the same order, the present method is fast and needs only a few nodes. Table 2 shows the maximum absolute error \(|Lu^n_m(x)-Lu(x,T)|\) for \(N=2,\ M=2\) with the three types of collocation points at \(T=1\). These tables also report the CPU time consumed in obtaining the numerical solution, which shows that our method runs in a short time.

Example 4.2

Consider the following space-time fractional diffusion problem

The exact solution is \(u(x,t)=x^3e^{-t}\) for a suitable source term. Table 3 compares \(\Vert e\Vert _{L_\infty }\) with the Galerkin method in [17] for \(N=30,\ M=4\) and different values of \(\alpha \in (0,1)\). Table 4 shows the maximum absolute errors \(|Lu^n_m(x)-Lu(x,T)|\) with \(N=2,\ M=4\) for different values of \(\alpha \) with the three types of collocation points at \(T=1\), together with the CPU time for each \(\alpha \), which is very short. The numerical results of this example demonstrate that our method is more accurate and faster.

Example 4.3

Consider the following space-time fractional diffusion problem

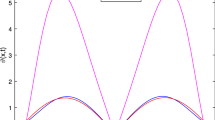

The exact solution is \(u(x,t)=t^2x^2\). Table 5 compares \(\Vert e\Vert _{L_2}\) of our method with that of the finite element method in [18]. We can see from this table that the accuracy obtained by the present method is one order of magnitude higher than that of the method in [18]. Figure 1 shows \(\Vert e\Vert _{abs}\) for \(\alpha =0.3,0.6,0.9\) with \(M=4\) and \(N=10,20\) at \(T=1\). Figure 2 depicts \(\Vert e\Vert _{abs}\) for \(M=4\), \(N=10,20,50,100\) and \(\alpha =0.3\) at \(T=1\). These results illustrate that the accuracy of the approximate solution improves as N increases, which suggests that the proposed method is stable. Table 6 reports the convergence order with \(\alpha =0.9\) at \(T=1,\ M=3,\ 4\) and different values of N. From this table, one can observe that the obtained convergence orders are in close agreement with the theoretical order \(O(\Delta t)\) in the time direction.

Example 4.4

Consider the following space-time fractional diffusion problem

The exact solution is \(u(x,t)=tx\sin (\pi x)\). Tables 7 and 8 display the results of the FRKCM. Table 7 lists \(\Vert e\Vert _{abs}\) of the present method for \(N=2,\ M=4\) and \(\beta =1.4,\ 1.6,\ 1.8\) at \(T=1\). The convergence orders and \(\Vert e\Vert _{L_\infty }\) are shown in Table 8 at \(T=1\) with \(\alpha =0.9\), \(N=2\) for different values of \(\alpha \) and M. It is clear that the observed order of convergence in space supports the theoretical result \(o(\Delta x)\).

5 Conclusion

In this paper, the semi-discrete process and FRKCM have been used for approximating the solution of the space-time fractional diffusion equations. We established stability and convergence analysis of the approximate solution. The unconditional stability of the semi-discrete scheme is rigorously discussed, and we have proved that the semi-discrete scheme is of \(O(\Delta t)\) convergence. Numerical examples demonstrate the feasibility and reliability of our algorithm.

References

Donatelli, M., Krause, R., Mazza, M., Trotti, K.: All-at-once multigrid approaches for one-dimensional space-fractional diffusion equations. Calcolo 58, 45 (2021)

Jiang, D., Li, Z.: Coefficient inverse problem for variable order time-fractional diffusion equations from distributed data. Calcolo 59(4), 1–28 (2022)

del Teso, F.: Finite difference method for a fractional porous medium equation. Calcolo 51(4), 615–638 (2014)

Meerschaert, M.M., Benson, D.A., Scheffler, H.P., Baeumer, B.: Stochastic solution of space-time fractional diffusion equations. Phys. Rev. E 65, 041103 (2002)

Che, H., Wang, Y., Li, Z.: Novel patterns in a class of fractional reaction-diffusion models with the Riesz fractional derivative. Math. Comput. Simul. 202, 149–163 (2022)

Cartea, A., del Castillo-Negrete, D.: Fractional diffusion models of option prices in markets with jumps. Phys. A 374(2), 749–763 (2007)

Ding, Y., Ye, H.: A fractional-order differential equation model of HIV infection of CD4+ T-cells. Math. Comput. Model. 50(3), 386–392 (2009)

Xu, R., Chen, Y., Yang, Y., Chen, S., Shen, J.: Global well-posedness of semilinear hyperbolic equations, parabolic equations and Schrödinger equations. Electron. J. Differ. Equ. 2018(55), 1–52 (2018)

Tuan, N.H., Au, V.V., Xu, R.: Semilinear Caputo time-fractional pseudo-parabolic equations. Commun. Pure Appl. Anal. 20(2), 583–621 (2021)

Wang, X., Xu, R.: Global existence and finite time blowup for a nonlocal semilinear pseudo-parabolic equation. Adv. Nonlinear Anal. 10(1), 261–288 (2021)

Lian, W., Wang, J., Xu, R.: Global existence and blow up of solutions for pseudo-parabolic equation with singular potential. J. Differ. Equ. 269(6), 4914–4959 (2020)

Xu, R., Wang, X., Yang, Y.: Blowup and blowup time for a class of semilinear pseudo-parabolic equations with high initial energy. Appl. Math. Lett. 83, 176–181 (2018)

Xu, R., Wei, L., Yi, N.: Global well-posedness of coupled parabolic systems. Sci. China Math. 63, 321–356 (2020)

Xu, R., Yang, Y., Chen, S., Su, J., Shen, J., Hang, S.: Nonlinear wave equations and reaction-diffusion equations with several nonlinear source terms of different signs at high energy level. ANZIAM J. 54(3), 153–170 (2013)

Aghdam, Y.E., Mesgrani, H., Javidi, M., Nikan, O.: A computational approach for the space-time fractional advection-diffusion equation arising in contaminant transport through porous media. Eng. Comput. 37(4), 3615–3627 (2021)

Baseri, A., Abbasbandy, S., Babolian, E.: A collocation method for fractional diffusion equation in a long time with Chebyshev functions. Appl. Math. Comput. 322, 55–65 (2018)

Baseri, A., Babolian, E., Abbasbandy, S.: Normalized Bernstein polynomials in solving space-time fractional diffusion equation. Adv. Differ. Equ. 2017(1), 346 (2017)

Feng, L.B., Zhuang, P., Liu, F., Turner, I., Gu, Y.T.: Finite element method for space-time fractional diffusion equation. Numer. Algorithms 72(3), 749–767 (2016)

Hu, H.Y., Chen, J.S., Hu, W.: Error analysis of collocation method based on reproducing kernel approximation. Numer. Methods Partial Differ. Equ. 27(3), 554–580 (2011)

Chi, S.W., Chen, J.S., Hu, H.Y., Yang, J.P.: A gradient reproducing kernel collocation method for boundary value problems. Int. J. Numer. Methods Eng. 93(13), 1381–1402 (2013)

Wang, D., Wang, J., Wu, J.: Superconvergent gradient smoothing meshfree collocation method. Comput. Methods Appl. Mech. Eng. 340, 728–766 (2018)

Yang, J., Yao, H., Wu, B.: An efficient numerical method for variable order fractional functional differential equation. Appl. Math. Lett. 76, 221–226 (2018)

Du, H., Chen, Z., Yang, T.: A stable least residue method in reproducing kernel space for solving a nonlinear fractional integro-differential equation with a weakly singular kernel. Appl. Numer. Math. 157, 210–222 (2020)

Aluru, N.R.: A point collocation method based on reproducing kernel approximations. Int. J. Numer. Methods Eng. 47(6), 1083–1121 (2000)

Li, X., Wang, H., Wu, B.: A stable and efficient technique for linear boundary value problems by applying kernel functions. Appl. Numer. Math. 172, 206–214 (2022)

Zhang, X., Du, H.: A generalized collocation method in reproducing kernel space for solving a weakly singular Fredholm integro-differential equations. Appl. Numer. Math. 156, 158–173 (2020)

Geng, F.: A new higher order accurate reproducing kernel-based approach for boundary value problems. Appl. Math. Lett. 107, 106494 (2020)

Liu, W.K., Jun, S., Zhang, Y.F.: Reproducing kernel particle methods. Int. J. Numer. Methods Fluids 20(8–9), 1081–1106 (1995)

Mahdavi, A., Chi, S.W., Zhu, H.: A gradient reproducing kernel collocation method for high order differential equations. Comput. Mech. 64(5), 1421–1454 (2019)

Abbaszadeh, M., Dehghan, M.: A Galerkin meshless reproducing kernel particle method for numerical solution of neutral delay time-space distributed-order fractional damped diffusion-wave equation. Appl. Numer. Math. 169, 44–63 (2021)

Chen, Z., Wu, L., Lin, Y.: Exact solution of a class of fractional integro-differential equations with the weakly singular kernel based on a new fractional reproducing kernel space. Math. Methods Appl. Sci. 41(10), 3841–3855 (2018)

Zhang, R., Lin, Y.: A new algorithm for fractional differential equation based on fractional order reproducing kernel space. Math. Methods Appl. Sci. 44(2), 2171–2182 (2021)

Oldham, K., Spanier, J.: The fractional calculus, theory and applications of differentiation and integration to arbitrary order. Dover Publications (2006)

Langlands, T., Henry, B.: The accuracy and stability of an implicit solution method for the fractional diffusion equation. J. Comput. Phys. 205(2), 719–736 (2005)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225(2), 1533–1552 (2007)

Gao, G.H., Sun, Z.Z., Zhang, H.W.: A new fractional numerical differentiation formula to approximate the Caputo fractional derivative and its applications. J. Comput. Phys. 259, 33–50 (2014)

Wang, Y., Chaolu, T., Chen, Z.: Using reproducing kernel for solving a class of singular weakly nonlinear boundary value problems. Int. J. Comput. Math. 87(2), 367–380 (2010)

Ervin, V.J., Roop, J.P.: Variational solution of fractional advection dispersion equations on bounded domains in \(\mathbb{R} ^d\). Numer. Methods Partial Differ. Equ. 23(2), 256–281 (2007)

Huang, J., Nie, N., Tang, Y.: A second order finite difference-spectral method for space fractional diffusion equations. Sci. China Math. 57(6), 1303–1317 (2014)

Quarteroni, A., Valli, A.: Numerical Approximation of Partial Differential Equations, vol. 23. Springer Science & Business Media (2008)

About this article

Cite this article

Sun, R., Yang, J. & Yao, H. Numerical solution of the space-time fractional diffusion equation based on fractional reproducing kernel collocation method. Calcolo 60, 31 (2023). https://doi.org/10.1007/s10092-023-00525-5

DOI: https://doi.org/10.1007/s10092-023-00525-5