Abstract

This paper deals with an inverse problem of determining a time-dependent potential in a diffusion equation with a temporal fractional derivative of variable order from a distributed observation. We study the existence, uniqueness and regularity estimates of the solution to the forward problem by using the Fredholm alternative for compact operators. Based on a newly established coercivity for fractional derivatives of variable order, we prove a uniqueness result for the inverse potential problem. Numerically, we transform the inverse potential problem into an optimization problem with Tikhonov regularization. An iterative thresholding algorithm is proposed to find the minimizers by means of a newly constructed adjoint system, whose well-posedness is also verified. Several numerical experiments are presented to show the accuracy and efficiency of the proposed algorithm.

1 Introduction

In statistical mechanics, the time-fractional diffusion equation (tFDE) can be derived by assuming that the jump length and the waiting time between two successive jumps are independent and follow, respectively, a Gaussian distribution and a power-law distribution for large times; see, e.g., Metzler and Klafter [30]. Because tFDEs perform well in capturing the behavior of diffusion processes with memory effects, they have attracted increasing attention in many fields, such as rheology [1], transport theory [8], viscoelasticity [28] and non-Markovian stochastic processes [31].

However, in attempting to describe some complex dynamical systems, several researchers were confronted with the situation that the fractal dimension of the heterogeneous medium, determined by the Hurst index [29], changes as the geometrical structure or the properties of the medium change. Mathematically, this means that the fractional orders in tFDEs vary in time or in space.

Fractional models involving variable-order derivatives are better able to capture the behavior of complex dynamical systems than their constant-order counterparts in a large class of physical, biological and physiological diffusion phenomena. Examples include shape memory polymers, owing to changes in their microstructure [23], shale gas production under hydraulic fracturing [37], tritium transport through a highly heterogeneous medium at the macrodispersion experimental site [39], and polymer gels [34] and magnetorheological elastomers [5] in imposed electric and magnetic fields. We also refer to [9] for the relaxation processes and reaction kinetics of proteins under a changing temperature field. These works indicate that the response of the above diffusion processes to changes in temperature, electrostatic field strength or magnetic field strength may be better described by variable-order elements than by constant-order ones.

Variable-order tFDEs have appeared in more and more applications, but the mathematical analysis of variable-order tFDEs is relatively new and still at an early stage. For variable-order tFDEs with a time-independent principal elliptic part, Umarov and Steinberg [42] proved the existence and uniqueness of a solution to the Cauchy problem via the explicit representation formula (see, e.g., [21, 33]) of the solution for constant-order fractional PDEs. Wang and Zheng [44] studied the well-posedness of initial-boundary value problems for variable-order tFDEs in which the principal elliptic part is time independent, so that the Mittag-Leffler function and the eigenfunction expansion argument, a widely used technique in the analysis of tFDEs, can be used to derive the desired results. Kian, Soccorsi and Yamamoto [16] studied tFDEs with space-dependent variable order, proving the t-analyticity of the solution and then using the Laplace transform technique to derive the well-posedness. For numerical methods for solving variable-order tFDEs, we refer to the work of W. Chen and his group [11, 37, 38], F. Liu and his group [2, 3, 53], Wu, Baleanu, Xie and Zeng [46], Zaky, Doha, Taha and Baleanu [49] and the references therein.

In this paper, assuming \(T\in (0,\infty )\) and \(\Omega\) is an open bounded domain in \({\mathbb {R}}^d\) with a sufficiently smooth boundary \(\partial \Omega\), we will consider the following initial-boundary value problem of variable-order tFDE:

where \((\nu _1,\nu _2,\ldots ,\nu _d)\) is the unit outward normal vector at the boundary \(\partial \Omega\), and L(t) is a symmetric uniformly elliptic operator defined by

with \(a_{ij}(x,t) = a_{ji}(x,t)\) \((1\le i,j \le d),\ x\in {\overline{\Omega }}\) and \(a_{ij}\in C^1(\overline{\Omega _T})\) such that

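for some positive constant \(a_0\). In the system (1.1), by \(\partial ^{\alpha (\cdot )}_t\) we denote the Caputo fractional derivative of temporal variable order \(\alpha (t)\in (0,1)\):

$$\begin{aligned} \partial _t^{\alpha (t)} g(t) := \frac{1}{\Gamma (1-\alpha (t))}\int _0^t (t-\tau )^{-\alpha (t)}\, g'(\tau )\,d\tau . \end{aligned}$$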

The coefficient q depends only on x, the potential function p is only t-dependent, and they are assumed to be in function spaces \(L^\infty (\Omega )\), \(L^\infty (0,T)\) respectively. We assume the source term \(f\in L^2(\Omega _T)\). In the above variable-order tFDE (1.1), the variable order, diffusion coefficient and potential are all time dependent, hence the previous analytical techniques, such as the Fourier expansion and Laplace transform argument used in [16, 42, 44], do not work in the current context, which increases the difficulty in the study of the wellposedness for the forward problem.

In addition, parameters such as the fractional order, source, potential and initial value are usually unknown and cannot be measured directly in most practical applications. They should be inferred, as an inverse problem, from suitable additional data that can be easily obtained from the forward problem. This practical issue has received wide attention in theoretical and numerical research on tFDEs in recent years. We do not intend to give a full list of the works. We refer to Huang, Li and Yamamoto [12], Jiang, Li, Liu and Yamamoto [13], and Liu, Rundell and Yamamoto [26] for inverse source problems, and to Chen, Liu, Jiang, Turner and Burrage [4], Li, Zhang, Jia and Yamamoto [20], and Li, Imanuvilov and Yamamoto [22] for recovering the fractional orders. Kian, Li, Liu and Yamamoto [18], Kian, Oksanen, Soccorsi and Yamamoto [17], and Jin and Rundell [14] are concerned with the inverse problem of determining a spatially varying potential. For recovering a t-dependent potential, we refer to Zhang and Zhou [50] and Sun, Zhang and Wei [40]. For backward problems, we refer to Liu and Yamamoto [25], Floridia, Li and Yamamoto [7], and Wang and Liu [43]. We also refer to Jin and Rundell [15] for a survey of inverse problems for fractional differential equations. Most existing works are concerned with inverse problems for (constant-order) tFDEs, and studies on inverse problems for the variable-order case remain scarce. Wang, Wang, Li and Wang [45] investigated a tFDE involving a space- and time-dependent variable-order fractional derivative, in which a homotopy regularization algorithm was applied to solve the inverse problem of simultaneously determining the fractional order and the diffusion coefficient. Zheng, Cheng and Wang [51] proved the uniqueness of determining the variable fractional order in initial-boundary value problems for variable-order tFDEs in one space dimension from observed values of the unknown solution inside the spatial domain.

As far as we know, there is no theoretical analysis or numerical algorithm for inverse coefficient problems in variable-order tFDEs with a time-dependent principal elliptic part, since the usual analytical techniques, e.g., the Mittag-Leffler function representation and the Laplace transform argument, do not work here. In view of the importance of inverse coefficient problems, we consider here the theoretical analysis and numerical reconstruction of the time-dependent potential from energy-type measured data. We choose the integral-type observation for two reasons. First, the energy method helps us establish the mathematical theory; second, the integral averages the error of point measurements, which makes the inversion algorithm more stable.

Inverse Problem 1.1

We assume \(u\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\) is the solution to the system (1.1). We intend to determine the temporally varying potential \(p\in L^\infty (0,T)\) by measuring the energy data

As this is, as far as we know, the first paper to study the uniqueness and numerical algorithms for the reconstruction of the time-dependent potential in a variable-order tFDE with a time-dependent principal elliptic part, we would like to point out the main difficulties of the reconstruction and the novelties of this paper. The essential difficulties are

1. the fractional order, diffusion coefficient and potential are time varying, so the usual analytical techniques, such as the Mittag-Leffler function representation and the Laplace transform argument, are not applicable;

2. the inverse problem of identifying the potential is nonlinear, so only a few mathematical tools are available. Indeed, it is difficult to give an exact formula for the solution operator of our inverse problem. Moreover, techniques based on linearity, such as compact operator theory and the Fredholm alternative, are no longer applicable to our inverse problem.

The main novelties of this paper include

1. we prove the well-posedness of the forward problem (1.1) by regarding the fractional-order term as a perturbation of the first-order time derivative and using the theoretical results for parabolic equations;

2. we obtain the uniqueness for the inverse potential problem by energy methods, on the basis of a newly established coercivity of the variable-order time-fractional derivative in Lemma 4.1;

3. numerically, we construct a new well-posed adjoint system and propose an iterative thresholding algorithm, which makes the numerical implementation easy and computationally cheap.

2 Main results and outline

For the initial-boundary value problem (1.1), we will first prove the well-posedness of the solution. Before stating the main theorem, we list several restrictions on the coefficients.

Assumption 2.1

(a) \(\alpha (\cdot )\in C[0,T]\) such that \(0<\alpha (\cdot )<1\) in [0, T].

(b) \(p(\cdot )\in L^\infty (0,T)\), \(q(\cdot )\in L^\infty (\Omega )\) and \(f\in L^2(\Omega _T)\).

Under Assumption 2.1, by regarding the fractional-order term as a perturbation of the first-order time derivative, the existence, uniqueness and regularity of the solution can be proved by the Fredholm alternative for compact operators. We now state the first main theorem.

Theorem 2.1

Under Assumption 2.1, the initial-boundary value problem (1.1) admits a unique weak solution \(u\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\) such that

The above theorem indicates that the solution has the same full regularity as its parabolic counterpart. Most importantly, based on this regularity, one can show the coercivity of the variable-order time-fractional derivative (see Lemma 4.1), from which we can further derive the uniqueness of the inverse problem.

Theorem 2.2

Let Assumption 2.1 hold, and suppose further that ess \(\inf _{\Omega } q(x) > 0\) and \(\alpha (\cdot )\in C^1[0,T]\). Let \(u_1, u_2\in H^1(0,T;L^2(\Omega )) \cap L^2(0,T;H^2(\Omega ))\) be the solutions to (1.1) with respect to \(p_1,p_2\in L_+^\infty (0,T):=\{p\in L^\infty (0,T); p\ge 0, \text{ a.e. } t\in (0,T) \}\). Then the observation data

for any \(t\in (0,T)\) implies

The remaining parts of this manuscript are structured as follows. Section 3 is concerned with the well-posedness of the initial-boundary value problem (1.1). In Sect. 4, we address a coercivity of the variable order time-fractional derivatives, based on which we prove a uniqueness result for an inverse problem of the determination of the potential from the energy type observed value of the unknown solution. In Sect. 5, we transform the inverse problem into an optimization problem with Tikhonov regularization, which will be solved by an iterative thresholding algorithm. Several examples are also given to show the accuracy and efficiency of our algorithm. Finally, the concluding remarks are given in Sect. 6.

3 Well-posedness of the forward problem

In this section, we are concerned with the existence and uniqueness of the solution to the initial-boundary value problem (1.1), as well as with regularity estimates for the solution in a suitable topology. Since the coefficients in (1.1) depend on t and/or x, the Fourier expansion argument in Sakamoto and Yamamoto [33] can no longer be used directly. Here we introduce a novel method that avoids the expansion argument. The key idea is to regard the fractional-order term as a source term and to use the theoretical results for parabolic equations to construct a compact operator; the well-posedness of the problem (1.1) then follows from the Fredholm alternative.

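For this purpose, we introduce the Riemann-Liouville integral operator \(J^{\alpha }\) of order \(\alpha >0\): for any \(v(t)\in L^2(0,T)\),

$$\begin{aligned} J^{\alpha } v(t) := \frac{1}{\Gamma (\alpha )}\int _0^t (t-\tau )^{\alpha -1} v(\tau )\,d\tau , \quad 0<t<T. \end{aligned}$$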

Then we first establish the following useful lemma for later use.

Lemma 3.1

Let \(\alpha (\cdot )\in C[0,T]\) satisfy \(0< \alpha (t)<1\) for any \(t\in [0,T]\), and let \(\varepsilon >0\) be small enough that \(1-\varepsilon -\alpha (t)>0\) for any \(t\in [0,T]\). Then the following estimate

is valid for any \(g\in H^{1}(0,T)\) satisfying \(g(0)=0\).

Proof

Firstly, from Wang and Zheng [44, Lemma 2.2], it follows that

From the assumptions on g, it follows that \(g\in H^{1-\varepsilon }(0,T)\), and then we can find an element \(h\in L^2(0,T)\) such that \(g=J^{1-\varepsilon } h\) in view of Gorenflo, Luchko and Yamamoto [10, Theorem 2.1]. Then from the definition of \(J^{1-\varepsilon }\) and the Fubini lemma, we see that

Since \(1-\varepsilon -\alpha (t)>0\) for any \(t\in [0,T]\), we further see that

Noting the fact that

where \({\overline{\alpha }}:=\sup _{t\in [0,T]} \alpha (t)\), we see that \(\partial _t^{\alpha (\cdot )} g\) can be estimated as follows

Further, we employ the Young inequality for the convolution to derive

Finally, noting that \(\Vert h\Vert _{L^2(0,T)} \sim \Vert g\Vert _{H^{1-\varepsilon }(0,T)}\), see e.g., Gorenflo, Luchko and Yamamoto [10, Theorem 2.1], Kubica and Yamamoto [19], we finish the proof of the lemma. \(\square\)

The above lemma indicates that the definition of the variable-order Caputo derivative \(\partial _t^{\alpha (\cdot )}\) can be extended to the fractional Sobolev space \(H^{1-\varepsilon }(0,T)\) by following the argument used in Gorenflo, Luchko and Yamamoto [10]. Indeed, in the case of \(1-\varepsilon -\alpha (t)>0\), \(\forall t\in [0,T]\), by noting the relation (3.2), for \(g\in H^{1-\varepsilon }(0,T)\) satisfying \(g(0)=0\), we define

Here \(g_n\in H^{1-\varepsilon }(0,T)\) such that \(g_n(0)=0\). We still denote the extended operator by \(\partial _t^{\alpha (\cdot )}\) if there is no conflict. Then Lemma 3.1 immediately implies the following corollary.

Corollary 3.1

Let \(\alpha (\cdot )\in C[0,T]\) satisfy \(0< \alpha (t)<1\) for any \(t\in [0,T]\), and let \(\varepsilon >0\) be small enough that \(1-\varepsilon -\alpha (t)>0\) for any \(t\in [0,T]\). Then the following estimate

is valid for any \(g\in H^{1-\varepsilon }(0,T)\) satisfying \(g(0)=0\).

We also need a uniqueness result for the following system

Lemma 3.2

Suppose \(v\in H^1(0,T;L^2(\Omega )) \cap L^2(0,T; H^2(\Omega ))\) is a solution to the system (3.4), then \(v\equiv 0\) in \(\Omega _T\).

Proof

By regarding the term \(-q\partial _t^{\alpha (\cdot )}v\) as a new source term, we multiply the equation in (3.4) by v and \(\partial _t v\) separately, and follow the argument used in the proof of [6, Theorem 5, p.360] to obtain

where C is a constant which is independent of t. Moreover, from the definition of the Caputo derivative of variable order, in view of the Hölder inequality, it follows that

Here \(\varepsilon >0\) is sufficiently small and \(\Gamma _0:=\sup _{t\in [0,T]} \frac{1}{\Gamma (1-\alpha (t))}\). Now using the inequality (3.3), we conclude that \((t-\tau )^{-\alpha (t)} \le \max \{1,T^{{\overline{\alpha }}}\}(t-\tau )^{-{\overline{\alpha }}}\). Therefore, the above estimate (3.6) can be further treated as follows

Similarly, we can obtain

Substituting the above two estimates into (3.5), and choosing \(\varepsilon >0\) sufficiently small, we see that (3.5) can be rephrased as follows

We conclude from the Fubini lemma that

Finally, from the Gronwall inequality, we derive \(\partial _t v=0\) in \(\Omega _T\), which combined with \(v(x,0)=0\) in \(\Omega\) implies \(v=0\) in \(\Omega _T\).

The proof of the lemma is finished. \(\square\)

Now we are ready to show the well-posedness of the problem (1.1).

Lemma 3.3

Let \(T>0\) and \(\alpha (\cdot )\in C[0,T]\) such that \(\alpha (t)\in (0,1)\) for \(t\in [0,T]\), and we suppose \(f\in L^2(\Omega _T)\). Then the initial-boundary value problem (1.1) admits a unique weak solution \(u\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\) satisfying

Proof

By the superposition principle, we consider the following two subproblems of (1.1) separately:

and

with the same initial and boundary conditions as in (1.1). Here in the above equation (3.8), we assume \(u\in H^{1-\varepsilon }(0,T;L^2(\Omega ))\). In view of Lemma 3.1, we see from the theoretical results for parabolic equations that there exist unique solutions to the above two problems (3.7) and (3.8). We denote them by \(u_f\) and \(K_\alpha u\), respectively. It remains to show that there exists a unique solution \(u\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\) of the operator equation \(u=K_\alpha u + u_f\).

From Lemma 3.1 and the well-known regularity for parabolic equations (e.g., [24, Section 4.7.1, p.243]), we have \(u_f, K_\alpha u\in H^1(0,T; L^2(\Omega ))\cap L^2(0,T; H^2(\Omega ))\) such that

and

By [41, Theorem 2.1] and [24, Theorem 16.2, Chapter 1], we see that \(H^1(0,T; L^2(\Omega ))\cap L^2(0,T; H^2(\Omega ))\) is compactly embedded in \(H^{1-\varepsilon }(0,T; L^2(\Omega ))\), which further implies that \(K_\alpha\): \(H^{1-\varepsilon }(0,T; L^2(\Omega )) \rightarrow H^{1-\varepsilon }(0,T; L^2(\Omega ))\) is a compact operator. By the Fredholm alternative for compact operators, \(u=K_\alpha u + u_f\) admits a unique solution in \(H^{1-\varepsilon }(0,T; L^2(\Omega ))\) as long as

Here I denotes the identity operator.

Therefore, we need to show that \((I-K_\alpha )v = 0\) implies \(v=0\). Indeed, noting that \(v=K_\alpha v\) is just the solution to (3.4), we obtain from Lemma 3.2 that \(I-K_\alpha\) is one-to-one on \(H^{1-\varepsilon }(0,T; L^2(\Omega ))\). Hence, by the Fredholm alternative, there exists a unique solution of \(u=K_\alpha u + u_f\), and the solution admits the following regularity estimates

We further derive from (3.9) and (3.10) that

and

Combining all the above estimates, we finish the proof of the lemma. \(\square\)

4 Uniqueness of the inverse potential problem

In this section, we shall prove the uniqueness theorem proposed in Sect. 2. For the sake of convenience in fractional calculus, we will first establish several estimates related to the Caputo derivatives of variable order which will be useful for the proof of Theorem 2.2.

The first lemma indicates the coercivity of the Caputo derivative.

Lemma 4.1

(Coercivity) Let \(\alpha (\cdot )\in C[0,T]\) such that \(\alpha (t)\in (0,1)\), \(t\in [0,T]\). Then the following coercivity inequality

holds true for any function \(g\in H^1(0,T)\).

Proof

For simplicity, we denote

Then it is sufficient to prove \(I \ge 0\). The proof is done by direct calculations. By the definition of Caputo fractional derivative, we find

By a direct calculation, we further claim that

Indeed, by noting that

where in the second inequality we used the Hölder inequality. Thus the claim (4.1) is true. Finally, by integration by parts, we see that

This completes the proof of the lemma. \(\square\)

Lemma 4.2

Let \(\alpha (\cdot )\in C^1[0,T]\) satisfy \(\alpha (t)\in (0,1)\), \(t\in [0,T]\). Then there exists a positive constant \(C=C(T,\alpha )\) such that the following inequality

is valid for any \(g\in H^1(0,T)\) with \(g(0)=0\), where \({\overline{\alpha }}:=\sup _{t\in [0,T]} \alpha (t)\).

Proof

From the notation of \(\partial _t^{\alpha (\cdot )}\), and using the Fubini lemma, we see that

Here in the last equality we used the fact \(g(0)=0\) and the estimate

Moreover, by letting \({\tilde{\tau }}=\frac{\tau -\eta }{t-\eta }\) and a direct calculation, we obtain

Now noting the facts that \(\frac{1}{\Gamma (1-\alpha (\cdot ))} \in C^1[0,T]\) and \(\alpha (\cdot )\in C^1[0,T]\) satisfying \(0<\alpha (t)<1\) for \(t\in [0,T]\), we derive

and

Consequently, collecting all the above estimates, we arrive at

Since \(0<{\overline{\alpha }}<1\), we see that the two functions \({{\tilde{\tau }}}^{-{\overline{\alpha }}} (1+|\ln {\tilde{\tau }}|)\) and \({{\tilde{\tau }}}^{ - {\overline{\alpha }}}\) are integrable on [0, 1], hence

Taking the above inequality into (4.2), we conclude that

which completes the proof of the lemma. \(\square\)

Now we are ready to give the proof of Theorem 2.2.

Proof of Theorem 2.2

Let \(u_1\) and \(u_2\) be the solutions to the fractional diffusion equations (1.1) with respect to \(p_1\) and \(p_2\). Then we see that \(w:=u_1-u_2\) admits the following initial-boundary value problem

with the homogeneous initial and boundary conditions. An equivalent form of (4.3) reads as

Summing the above two equations implies

Now multiplying the above equation by w and integrating the resulting equation over \(\Omega\), we have

where \(\langle \cdot ,\cdot \rangle\) denotes the inner product in \(L^2(\Omega )\). Further, in view of the observation

we see that \(\langle u_1+u_2,w\rangle = \langle u_1,u_1\rangle - \langle u_2,u_2\rangle =0\), which implies

By integration by parts and the ellipticity of the operator L(t), we see that

Moreover, we conclude from Lemma 4.1 that

Now taking the above two estimates into (4.7) and noting \(p_1,p_2\ge 0\), we derive

Integrating with respect to t over (0,t) on both sides of the above inequality and using the fact \(\Vert w(0)\Vert _{L^2(\Omega )}=0\) and the estimate in Lemma 4.2, we obtain

Moreover, since \({\overline{\alpha }}\in (0,1)\), we see that there exists a sufficiently small \(\varepsilon >0\) such that \({\overline{\alpha }}+\varepsilon <1\) and \(1+|\ln t| \le Ct^{-\varepsilon }\) are valid for any \(t\in (0,T)\), therefore we have

We further use the Gronwall inequality to derive that \(w=0\) in \(\Omega _T\), that is, \(u_1=u_2\). We thus have \(p_1=p_2\) from (4.4) and the positivity of the observation data, which completes the proof of the theorem. \(\square\)

Remark 4.1

We point out here that the assumption on the observation data \(\left( \int _\Omega u^2(x,t)dx\right) ^{\frac{1}{2}} >0\) for \(t\in (0,T)\) is essential for our proof; it may be achieved by choosing a positive source. Indeed, one may prove \(u(x,t)>0\) for any \((x,t)\in \Omega _T\) in view of the maximum principle for the fractional diffusion equation. We postpone the proof of the positivity of the solution to the appendix.

5 Numerical inversion

In this section, we develop an effective numerical method and present several numerical experiments for the reconstruction of the unknown potential p(t) in the domain (0, T) from the additional data \(\left( \int _\Omega u^2(x,t) dx\right) ^{\frac{1}{2}}\) in (0, T).

5.1 Tikhonov regularization

We write the solution of system (1.1) as u(p) in order to emphasize its dependence on the unknown function p. Here and henceforth, we set \(p^*\in L_+^\infty (0,T):=\{p\in L^\infty (0,T); p\ge 0, \text{ a.e. } t\in (0,T) \}\) as the true solution to the proposed Inverse Problem 1.1 and investigate the numerical reconstruction by noise contaminated observation data \(E^{\delta }(t)\) in (0, T). Here \(E^{\delta }\) satisfies \(\left\| \Vert u(p^*)\Vert _{L^2(\Omega )} - E^\delta (t)\right\| _{L^2(0,T)}\le \delta\) with the noise level \(\delta\).

In the framework of the Tikhonov regularization technique, we propose the following output least squares functional related to our inverse potential problem:

where \(\beta >0\) is the regularization parameter.
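As a rough illustration of how an output least-squares functional of this kind can be evaluated on a uniform time grid, the sketch below assumes the common form \(\Phi (p)=\frac{1}{2}\big \Vert \Vert u(p)\Vert _{L^2(\Omega )}-E^\delta \big \Vert _{L^2(0,T)}^2+\frac{\beta }{2}\Vert p\Vert _{L^2(0,T)}^2\). The factors \(\frac{1}{2}\), the names `trapezoid` and `tikhonov_functional`, and the trapezoidal quadrature are assumptions made for this example only.

```python
def trapezoid(values, dt):
    """Composite trapezoidal rule on a uniform grid with step dt."""
    if len(values) < 2:
        return 0.0
    return dt * (sum(values) - 0.5 * (values[0] + values[-1]))


def tikhonov_functional(norm_u, e_delta, p, beta, dt):
    """Discrete value of the assumed functional.

    norm_u  : samples of ||u(p)(., t_n)||_{L^2(Omega)} on the time grid
    e_delta : noisy observation samples E^delta(t_n)
    p       : samples of the potential p(t_n)
    beta    : regularization parameter (> 0)
    dt      : uniform time step
    """
    misfit = [(nu - e) ** 2 for nu, e in zip(norm_u, e_delta)]
    penalty = [pk ** 2 for pk in p]
    # Assumed form: 1/2 * data misfit + beta/2 * L^2 penalty on p.
    return 0.5 * trapezoid(misfit, dt) + 0.5 * beta * trapezoid(penalty, dt)
```

With a perfect data fit the value reduces to the penalty term alone, which is a convenient sanity check when the forward solver is being debugged.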

As with the majority of efficient iterative methods, we need information about the Gâteaux derivative \(\Phi '(p)\) of the objective functional \(\Phi (p)\). For an arbitrarily fixed direction \(\xi \in L^{2}(0,T)\), a direct calculation implies

where \(u'(p)\xi\) denotes the Gâteaux derivative of u(p) in the direction \(\xi\) and it is the solution to the following variable-order time-fractional differential equation:

In view of the fact that \(u(p)\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\), by an argument similar to that used in the proof of Lemma 3.3, we can show that the above problem (5.3) admits a unique solution \(u'(p)\xi \in H^1(0,T;L^2(\Omega )) \cap L^2(0,T;H^2(\Omega ))\).

Remark 5.1

It is not practical to find the minimizer of the functional \(\Phi\) directly from the above formula (5.2) for the Gâteaux derivative. Indeed, to compute \(\Phi '(p)\) via (5.2), one would have to solve system (5.3) for \(u'(p)\xi\) with \(\xi\) varying in \(L^2(0,T)\), which is difficult and computationally expensive.

5.2 Adjoint system and its well-posedness

In order to reduce the computational cost of the Gâteaux derivative \(\Phi '(p)\), we first define the backward Riemann-Liouville integral operator \(J_T^{1-\alpha (t)}\) and the backward Riemann-Liouville fractional derivative \(D_T^{\alpha (t)}\): for any \(g(t)\in L^2(0,T)\),

By the Fubini Lemma and the integration by parts, for any \(g_1,g_2\in L^2(0,T)\) with \(g_1(0)=0\) and \(\lim _{t\rightarrow T}J_T^{1-\alpha (t)}g_2(t)=0\), we have

Then we shall introduce the following backward variable order time-fractional differential equation:

Now we are ready to propose the following lemma for getting the adjoint relation.

Lemma 5.1

For any fixed direction \(\xi \in L^{2}(0,T)\), the following equality holds:

Proof

By the variational forms of the systems (5.3) and (5.5), for any \(\varphi ,\psi \in L^2(0,T;H^1(\Omega ))\), we have

As \((u'(p)\xi )(x,0)=0=z(x,T)\), we obtain by the integration by parts with respect to t and (5.4) that

Hence, taking \(\varphi =z\) and \(\psi =u'(p)\xi\) in (5.7) and (5.8) respectively, we derive

We finish the proof of the lemma. \(\square\)

Next, we shall show that the new system (5.5) is well-posed. Correspondingly, in a similar manner as the proof of Lemma 3.3, one can show the following well-posedness result for the backward boundary value problem (5.5). Indeed, by the change of the variable \({\tilde{t}}=T-t\) and setting \({\tilde{v}}({\tilde{t}}):=z(t)=z(T-{\tilde{t}})\), we first see that \(\partial _t z(t) = -\partial _{{\tilde{t}}} {\tilde{v}}({\tilde{t}})\) and

Then we can equivalently change the problem (5.5) to the following initial-boundary value problem

We notice that

Then in order to prove the well-posedness of the above initial-boundary value problem, it is sufficient to show that \(d_{{\tilde{t}}}^{\alpha (t)} {\tilde{v}} \in L^2(0,T;L^2(\Omega ))\) for any \({\tilde{v}}\in {_0}H^{1-\varepsilon }(0,T;L^2(\Omega ))\) with small enough \(\varepsilon >0\).

Lemma 5.2

Let \(\alpha (\cdot )\in C^1[0,T]\) satisfy \(0< \alpha (t)<1\) for any \(t\in [0,T]\), and let \(\varepsilon >0\) be small enough that \(1-\varepsilon -\alpha (t)>0\) for any \(t\in [0,T]\). Then the following estimate

is valid for any \(g\in H^{1-\varepsilon }(0,T)\) satisfying \(g(0)=0\).

Proof

From the assumptions on g, we can find \(h\in L^2(0,T)\) such that \(g=J^{1-\varepsilon } h\); then from the definitions of \(d_t^{\alpha (t)}\) and \(J^{1-\varepsilon }\) and the Fubini lemma, we see that

Here in the last inequality we used

as \(\eta \rightarrow t\). Moreover, we can show the following assertion

This can be done by the change of variables. In fact, by letting \({\tilde{\tau }}=\frac{\tau -\eta }{t-\eta }\), we have

Now by noting the facts that \(\frac{1}{\Gamma (1-\alpha (\cdot ))} \in C^1[0,T]\), \(1-\varepsilon -\alpha (t)>0\) and \(\alpha (\cdot )\in C^1[0,T]\), we can show that the assertion (5.9) is true. Collecting all the above estimates, from the Young inequality for the convolution, it follows that

Then the proof of the lemma can be finished by noting that \(\Vert h\Vert _{L^2(0,T)} \sim \Vert g\Vert _{H^{1-\varepsilon }(0,T)}\), see Gorenflo, Luchko and Yamamoto [10, Theorem 2.1]. \(\square\)

Now, by an argument similar to the proof of Lemma 3.3, we have the well-posedness of the problem (5.5).

Lemma 5.3

Let \(T>0\) and \(\alpha (\cdot )\in C^1[0,T]\) such that \(\alpha (t)\in (0,1)\) for any \(t\in [0,T]\). We suppose \(\Vert u(p)(\cdot ,t)\Vert _{L^2(\Omega )} >0\) for any \(t\in [0,T]\) and \(p\in L^2(0,T)\). Then the problem (5.5) admits a unique weak solution \(z\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\) such that

On the basis of the above regularity result of the solution to the adjoint system (5.5), we now aim to give a weak form of the solution to the problem (5.5). Let \(z\in H^1(0,T;L^2(\Omega ))\cap L^2(0,T; H^2(\Omega ))\) be the solution to the problem (5.5). The use of integration by parts implies that the solution z to the problem (5.5) admits the following weak form

where \({\tilde{f}}:=\left[ \frac{E^{\delta }(t)}{\Vert u(p)(\cdot ,t)\Vert _{L^2(\Omega )}} - 1\right] u(p)\), and \(\psi \in H^1(0,T;L^2(\Omega ))\cap L^2(0,T;H^2(\Omega ))\) satisfying \(\psi (\cdot ,0)=0\).

5.3 Iterative thresholding algorithm

Due to the adjoint relation (5.6), we find that for any \(\xi \in L^2(0,T)\),

Here we write the solution z to system (5.5) as z(p) to emphasize its dependence on p(t). This suggests a characterization of the solution to the minimization problem (5.1), i.e., the function \(p^*(t)\in L^2(0,T)\) is a minimizer of the functional \(\Phi (p)\) in (5.1) only if it satisfies the variational equation

Now we are ready to propose the iterative thresholding algorithm (see, e.g., [32, 48]) for the reconstruction.

Algorithm 5.1

Choose a tolerance parameter \(\epsilon >0\) and an initial value \(p^{0}\), and set \(k:=0\).

1. Compute

$$\begin{aligned} p^{k+1} =\frac{1}{A+\beta }\Big (Ap^k-\int _\Omega u(p^k)\,z(p^k)dx\Big ). \end{aligned}$$(5.11)

2. If \(\frac{\Vert p^{k+1}-p^{k}\Vert _{L^2(0,T)}}{\Vert p^{k}\Vert _{L^2(0,T)}}\le \epsilon\), stop the iteration; otherwise set \(k:=k+1\) and go to Step 1.

Remark 5.2

Here \(A>0\) is a tuning parameter, and for the convergence of Algorithm 5.1 it should satisfy \(A\Vert p-q\Vert _{L^2(0,T)}^2\ge \int _0^T|\Vert u(p)\Vert _{L^2(\Omega )}-\Vert u(q)\Vert _{L^2(\Omega )}|^2dt\) for any \(p,\,q\in L^\infty _+(0,T)\) (we refer to [48] for more details). We can see from (5.11) that at each iteration step, we only need to solve the forward problem (1.1) once for \(u(p^k)\) and then the backward problem (5.5) once for \(z(p^k)\). As a result, the numerical implementation of Algorithm 5.1 is easy and computationally cheap.
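The update (5.11) and the stopping rule of Algorithm 5.1 can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the callable `coupling` is a hypothetical placeholder that must return the time samples of \(\int _\Omega u(p)\,z(p)\,dx\) (hiding one forward solve and one adjoint solve), and the names `l2_norm`, `iterative_thresholding` and `max_iter` are assumptions introduced here.

```python
import math


def l2_norm(values, dt):
    """Discrete L^2(0,T) norm via the composite trapezoidal rule."""
    squares = [v * v for v in values]
    integral = dt * (sum(squares) - 0.5 * (squares[0] + squares[-1]))
    return math.sqrt(integral)


def iterative_thresholding(p0, coupling, A, beta, eps, dt, max_iter=500):
    """Iterate p^{k+1} = (A p^k - c(p^k)) / (A + beta), cf. (5.11).

    coupling(p) is a user-supplied (hypothetical) callable returning the
    samples of int_Omega u(p) z(p) dx on the time grid. The iterate p^k
    is assumed to have a nonzero L^2 norm so the relative change exists.
    """
    p = list(p0)
    for k in range(max_iter):
        c = coupling(p)
        p_next = [(A * pk - ck) / (A + beta) for pk, ck in zip(p, c)]
        diff = [a - b for a, b in zip(p_next, p)]
        rel_change = l2_norm(diff, dt) / l2_norm(p, dt)
        p = p_next
        if rel_change <= eps:  # Step 2 of Algorithm 5.1
            return p, k + 1
    return p, max_iter
```

For a quick check without any PDE solver, one may pass a toy `coupling` such as `lambda p: [pk - gk for pk, gk in zip(p, g)]`; the iteration then contracts towards \(g/(1+\beta )\), which mirrors the shrinking effect of the regularization term.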

5.4 Numerical experiments

In this subsection, we present several numerical examples in one- and two-dimensional spaces to show the accuracy and efficiency of the proposed iterative thresholding Algorithm 5.1. To begin with, we fix the general settings of the reconstructions as follows. Without loss of generality, in (1.1) we set

The noisy data \(u^\delta\) is obtained by adding some uniform random noise to the exact data \(\Vert u(p^*)\Vert _{L^2(\Omega )}\), which means

where \(R(-1,1)\) is a uniformly distributed random function taking values in \([-1,1]\).
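As an illustration of how such noisy data might be generated, the sketch below perturbs the exact observation multiplicatively. The relative form \(E(t)\,(1+\delta R(-1,1))\) is an assumption made for this example, and the function name `add_uniform_noise` is hypothetical.

```python
import numpy as np

def add_uniform_noise(exact, delta, rng=None):
    """Perturb exact data by uniform noise of relative size delta.

    Assumes the multiplicative model exact * (1 + delta * R), where R is
    uniform in [-1, 1); this model is an assumption of the sketch.
    """
    rng = np.random.default_rng() if rng is None else rng
    exact = np.asarray(exact, dtype=float)
    r = rng.uniform(-1.0, 1.0, size=exact.shape)
    return exact * (1.0 + delta * r)
```

With \(\delta =0.03\), every sample of the returned array differs from the exact value by at most 3% of that value.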

Let \(p^{k}\) be the numerical reconstruction produced by Algorithm 5.1 at the kth iteration. Besides the illustrative figures, we mainly evaluate the numerical performance by the relative \(L^2\)-norm error
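A discrete version of this error measure can be sketched as follows, assuming the usual definition \(e_k=\Vert p^k-p^*\Vert _{L^2(0,T)}/\Vert p^*\Vert _{L^2(0,T)}\) and a uniform time grid with step `dt`. The trapezoidal quadrature and the function names are choices made for the illustration.

```python
import numpy as np

def l2_norm_time(v, dt):
    """Trapezoidal approximation of the L^2(0,T) norm on a uniform grid."""
    v = np.asarray(v, dtype=float)
    w = np.full(v.shape, dt)
    w[0] = w[-1] = 0.5 * dt          # end points carry half weight
    return np.sqrt(np.sum(w * v ** 2))

def relative_l2_error(p_k, p_star, dt):
    """Relative L^2(0,T) error between a reconstruction and the exact potential."""
    p_k = np.asarray(p_k, dtype=float)
    p_star = np.asarray(p_star, dtype=float)
    return l2_norm_time(p_k - p_star, dt) / l2_norm_time(p_star, dt)
```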

We divide the space-time region \(\Omega \times [0,1]\) into equidistant meshes. All the variable-order time-fractional differential equations in Algorithm 5.1 are solved by the continuous linear finite element method in space. For the time discretization, we use the forward difference scheme to approximate the first order time derivative, and a finite difference scheme similar to the one proposed in [52] to approximate the variable-order time-fractional derivative, i.e.,

where \(d_j^m:=(j+1)^{1-\alpha _{m+1}}-j^{1-\alpha _{m+1}}\) for \(j=0,1,\ldots ,m\).
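To make the role of the weights \(d_j^m\) concrete, the sketch below evaluates an L1-type approximation of the variable-order Caputo derivative at the grid points \(t_{m+1}=(m+1)\tau\). The formula \(\frac{\tau ^{-\alpha _{m+1}}}{\Gamma (2-\alpha _{m+1})}\sum _{j=0}^m d_j^m\,(u^{m+1-j}-u^{m-j})\) with \(\alpha _{m+1}=\alpha (t_{m+1})\) is assumed here as the standard form built on these weights; it is not taken verbatim from the paper, and the function name is hypothetical.

```python
import numpy as np
from math import gamma

def l1_variable_order(u, tau, alpha):
    """L1-type approximation of the variable-order Caputo derivative.

    u     : samples u^0, ..., u^M at t_n = n * tau
    tau   : uniform time step
    alpha : callable t -> alpha(t) with values in (0, 1)
    Returns the approximations at t_1, ..., t_M.
    """
    u = np.asarray(u, dtype=float)
    M = u.size - 1
    out = np.empty(M)
    for m in range(M):
        a = alpha((m + 1) * tau)                       # alpha_{m+1}
        j = np.arange(m + 1)
        d = (j + 1.0) ** (1.0 - a) - j ** (1.0 - a)    # weights d_j^m
        incr = u[m + 1 - j] - u[m - j]                 # u^{m+1-j} - u^{m-j}
        out[m] = tau ** (-a) / gamma(2.0 - a) * np.dot(d, incr)
    return out
```

Since the sum of the weights telescopes to \((m+1)^{1-\alpha _{m+1}}\), the approximation reproduces \(t^{1-\alpha (t)}/\Gamma (2-\alpha (t))\) exactly for \(u(t)=t\), which gives a quick sanity check of an implementation.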

We begin with two numerical experiments on the reconstruction of the time-dependent potential in system (1.1) with \(d=1\). We set \(\Omega =(0,1)\) and divide the space-time region \([0,1]\times [0,1]\) into \(40\times 40\) equidistant meshes. We fix \(f(x,t)=5+2\pi x t\), \(a(x,t)=1+x+t\) and set the tuning parameter \(A=2\), the tolerance parameter \(\epsilon =7\times 10^{-3}\) and the regularization parameter \(\beta =0.02\).

Example 5.1

In this example, we take the exact time-dependent component \(p^*(t)=\sin (\pi t)\) in [0, 1] and set the initial guess \(p^0(t)=\frac{3}{2}-6(t-\frac{1}{2})^2\) in [0, 1].

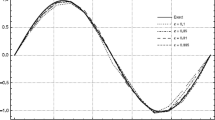

Figure 1 (left) shows the exact and reconstructed solutions, together with the iteration number k and the relative error \(e_k\), for the noise level \(\delta =3\%\). The numerical reconstruction appears to be quite satisfactory in the presence of 3% noise in the data.

Example 5.2

In this example, we take the exact time-dependent component \(p^*(t)=1-t\) in [0, 1] and set the initial guess \(p^0(t)=5(\ln 4-\ln (3+t))\) in [0, 1].

Figure 1 (right) shows the exact and reconstructed solutions, together with the iteration number k and the relative error \(e_k\), for the noise level \(\delta =3\%\). The numerical reconstruction again appears to be quite satisfactory in the presence of 3% noise in the data.

Next we carry out two numerical experiments on the reconstruction of the time-dependent potential in system (1.1) with \(d=2\). We set \(\Omega =(0,1)^2\) and divide the space-time region \([0,1]^2\times [0,1]\) into \(40^2\times 40\) equidistant meshes. We fix \(f(x_1,x_2,t)=5+2\pi (x_1+x_2) t\), \(a(x_1,x_2,t)=1+x_1+x_2+t\) and set the tuning parameter \(A=2\), the tolerance parameter \(\epsilon =8\times 10^{-3}\) and the regularization parameter \(\beta =0.02\).

Example 5.3

In this example, we take the exact time-dependent component \(p^*(t)=\sin (\pi t)\) in [0, 1] and set the initial guess \(p^0(t)=\frac{3}{2}-6(t-\frac{1}{2})^2\) in [0, 1].

Example 5.4

In this example, we take the exact time-dependent component \(p^*(t)=1-t\) in [0, 1] and set the initial guess \(p^0(t)=5(\ln 4-\ln (3+t))\) in [0, 1].

Figure 2 (left) and (right) show the exact and reconstructed solutions, together with the iteration number k and the relative error \(e_k\), for the noise level \(\delta =3\%\) in Example 5.3 and Example 5.4, respectively. The numerical reconstructions also appear to be quite satisfactory in the presence of 3% noise in the data in the two dimensional case.

6 Concluding remarks

In this paper, we have discussed the inverse problem of recovering a temporally varying potential in the variable order time-fractional diffusion equation from additional data of nonlinear and nonlocal type. We have shown the well-posedness of the forward problem by using the Fredholm alternative principle for compact operators and also proved the uniqueness of the inverse problem by assuming that the measurement is strictly positive. Numerically, in order to reduce the computational cost of the Fréchet derivative of the minimizing functional, a new backward adjoint system has been constructed, whose well-posedness has also been verified. The iterative thresholding algorithm has been proposed and several numerical experiments have been presented to show its feasibility and efficiency.

Finally, it would be interesting to investigate what happens with the inverse problem if the additional data can touch zero. Moreover, it is known that the conditional stability results hold for the inverse problems for recovering the spatially varying potential in parabolic or hyperbolic equations based on Carleman estimates. Unfortunately, such techniques do not work in our case. It will be a challenging and interesting direction of research to investigate the stability of the inverse problem.

References

Bagley, R.L., Torvik, P.J.: On the fractional calculus model of viscoelastic behavior. J. Rheol. 30(1), 133–155 (1986)

Chen, C., Liu, F., Burrage, K.: Numerical analysis for a variable-order nonlinear cable equation. J. Comput. Appl. Math. 236, 209–224 (2011)

Chen, C., Liu, F., Anh, V., Turner, I.: Numerical methods for solving two-dimensional variable-order anomalous subdiffusion equation. Math. Comput. 81(277), 345–366 (2012)

Chen, S., Liu, F., Jiang, X., Turner, I., Burrage, K.: Fast finite difference approximation for identifying parameters in a two-dimensional space-fractional nonlocal model with variable diffusivity coefficients. SIAM J. Numer. Anal. 54(2), 606–624 (2016)

Davis, L.C.: Model of magnetorheological elastomers. J. Appl. Phys. 85(6), 3348–3351 (1999)

Evans, L.: Partial Differential Equations. American Mathematical Society (1998)

Floridia, G., Li, Z., Yamamoto, M.: Well-posedness for the backward problems in time for general time-fractional diffusion equation. Atti Accad. Naz. Lincei Rend. Lincei Mat. Appl. 31(3), 593–610 (2020)

Giona, M., Roman, H.E.: Fractional diffusion equation for transport phenomena in random media. Phys. A 185, 82–97 (1992)

Glöckle, W.G., Nonnenmacher, T.F.: A fractional calculus approach to self-similar protein dynamics. Biophys. J. 68, 46–53 (1995)

Gorenflo, R., Luchko, Y., Yamamoto, M.: Time-fractional diffusion equation in the fractional Sobolev spaces. Fract. Calc. Appl. Anal. 18, 799–820 (2015)

Gu, Y., Sun, H.: A meshless method for solving three-dimensional time fractional diffusion equation with variable-order derivatives. Appl. Math. Model. 78, 539–549 (2020)

Huang, X., Li, Z., Yamamoto, M.: Carleman estimates for the time-fractional advection-diffusion equations and applications. Inverse Prob. 35, 045003 (2019)

Jiang, D., Li, Z., Liu, Y., Yamamoto, M.: Weak unique continuation property and a related inverse source problem for time-fractional diffusion-advection equations. Inverse Prob. 33(5), 055013 (2016)

Jin, B., Rundell, W.: An inverse problem for a one-dimensional time-fractional diffusion problem. Inverse Prob. 28(7), 075010 (2012)

Jin, B., Rundell, W.: A tutorial on inverse problems for anomalous diffusion processes. Inverse Prob. 31(3), 035003 (2015)

Kian, Y., Soccorsi, E., Yamamoto, M.: On time-fractional diffusion equations with space-dependent variable order. Ann. Henri Poincaré 19, 3855–3881 (2018)

Kian, Y., Oksanen, L., Soccorsi, E., Yamamoto, M.: Global uniqueness in an inverse problem for time fractional diffusion equations. J. Differ. Equ. 264(2), 1146–1170 (2018)

Kian, Y., Li, Z., Liu, Y., Yamamoto, M.: The uniqueness of inverse problems for a fractional equation with a single measurement. Math. Ann. 2020: 1–31 (2020)

Kubica, A., Yamamoto, M.: Initial-boundary value problems for fractional diffusion equations with time-dependent coefficients. Fract. Calc. Appl. Anal. 21(2), 276–311 (2018)

Li, G., Zhang, D., Jia, X., Yamamoto, M.: Simultaneous inversion for the space-dependent diffusion coefficient and the fractional order in the time-fractional diffusion equation. Inverse Prob. 29(6), 065014 (2013)

Li, Z., Liu, Y., Yamamoto, M.: Initial-boundary value problem for multi-term time-fractional diffusion equation with positive constants coefficients. Appl. Math. Comput. 257, 381–397 (2015)

Li, Z., Imanuvilov, O.Y., Yamamoto, M.: Uniqueness in inverse boundary value problems for fractional diffusion equations. Inverse Prob. 32(1), 015004 (2016)

Li, Z., Wang, H., Xiao, R., Yang, S.: A variable order fractional differential equation model of shape memory polymers. Chaos Solit. Fract. 102, 473–485 (2017)

Lions, J.L., Magenes, E.: Non-homogeneous Boundary Value Problems and Applications, vol. 1. Springer, Berlin (1972)

Liu, J.J., Yamamoto, M.: A backward problem for the time-fractional diffusion equation. Appl. Anal. 89(11), 1769–1788 (2010)

Liu, Y., Rundell, W., Yamamoto, M.: Strong maximum principle for fractional diffusion equations and an application to an inverse source problem. Fract. Calc. Appl. Anal. 19(4), 888–906 (2016)

Luchko, Y.: Maximum principle for the generalized time-fractional diffusion equation. J. Math. Anal. Appl. 351(1), 218–223 (2009)

Mainardi, F.: Fractional Calculus and Waves in Linear Viscoelasticity: An Introduction to Mathematical Models. World Scientific, Singapore (2010)

Meerschaert, M.M., Sikorskii, A.: Stochastic Models for Fractional Calculus. De Gruyter Studies in Mathematics (2011)

Metzler, R., Klafter, J.: The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

Metzler, R., Klafter, J.: Boundary value problems for fractional diffusion equations. Phys. A 278, 107–125 (2000)

Ramlau, R., Teschke, G.: A Tikhonov-based projection iteration for nonlinear ill-posed problems with sparsity constraints. Numer. Math. 104, 177–203 (2006)

Sakamoto, K., Yamamoto, M.: Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382, 426–447 (2011)

Shiga, T.: Deformation and viscoelastic behavior of polymer gels in electric fields. Proc. Jpn. Acad. Ser. B 74(1), 6–11 (1998)

Sokolov, I.M., Klafter, J.: Field-induced dispersion in subdiffusion. Phys. Rev. Lett. 97(14), 140602 (2006)

Sun, L., Wei, T.: Identification of the zeroth-order coefficient in a time fractional diffusion equation. Appl. Numer. Math. 111, 160–180 (2017)

Sun, H., Chang, A., Zhang, Y., Chen, W.: A review on variable-order fractional differential equations: mathematical foundations, physical models, numerical methods and applications. Fract. Calc. Appl. Anal. 15, 141–160 (2012)

Sun, H., Chen, W., Li, C., Chen, Y.: Finite difference schemes for variable-order time fractional diffusion equation. Int. J. Bifurc. Chaos 22(4), 1250085 (2012)

Sun, H., Zhang, Y., Chen, W., Reeves, D.M.: Use of a variable-index fractional-derivative model to capture transient dispersion in heterogeneous media. J. Contam. Hydrol. 157, 47–58 (2014)

Sun, L., Zhang, Y., Wei, T.: Recovering the time-dependent potential function in a multi-term time-fractional diffusion equation. Appl. Numer. Math. 135, 228–245 (2019)

Temam, R.: Navier–Stokes Equations. Revised North-Holland, Netherlands (1979)

Umarov, S.R., Steinberg, S.T.: Variable order differential equations with piecewise constant order-function and diffusion with changing modes. ZAA 28, 131–150 (2009)

Wang, L., Liu, J.: Data regularization for a backward time-fractional diffusion problem. Comput. Math. Appl. 64(11), 3613–3626 (2012)

Wang, H., Zheng, X.: Wellposedness and regularity of the variable-order time-fractional diffusion equations. J. Math. Anal. Appl. 475, 1778–1802 (2019)

Wang, S., Wang, Z., Li, G., Wang, Y.: A simultaneous inversion problem for the variable-order time fractional differential equation with variable coefficient. Math. Probl. Eng. 2019, 1–13 (2019)

Wu, G., Baleanu, D., Xie, H., Zeng, S.: Discrete fractional diffusion equation of chaotic order. Int. J. Bifurc. Chaos 26(1), 281–286 (2016)

Xu, T., Lü, S., Chen, W., Chen, H.: Finite difference scheme for multi-term variable-order fractional diffusion equation. Adv. Differ. Equ. 2018(1), 103 (2018)

Yu, J., Liu, Y.K., Yamamoto, M.: Theoretical stability in coefficient inverse problems for general hyperbolic equations with numerical reconstruction. Inverse Probl. 34, 045001 (2018)

Zaky, M.A., Doha, E.H., Taha, T.M., Baleanu, D.: New recursive approximations for variable-order fractional operators with applications. Math. Model. Anal. 23(2), 227–239 (2018)

Zhang, Z., Zhou, Z.: Recovering the potential term in a fractional diffusion equation. IMA J. Appl. Math. 82(3), 579–600 (2017)

Zheng, X., Cheng, J., Wang, H.: Uniqueness of determining the variable fractional order in variable-order time-fractional diffusion equations. Inverse Probl. 35 (2019)

Zheng, X., Wang, H.: An optimal-order numerical approximation to variable-order space-fractional diffusion equations on uniform or graded meshes. SIAM J. Numer. Anal. 58, 330–352 (2020)

Zhuang, P., Liu, F., Anh, V., Turner, I.: Numerical methods for the variable-order fractional advection-diffusion equation with a nonlinear source term. SIAM J. Numer. Anal. 47(3), 1760–1781 (2009)

Acknowledgements

The first author is financially supported by National Natural Science Foundation of China (no. 11871240, no. 12271197) and self-determined research funds of CCNU from the colleges’ basic research and operation of MOE (no. CCNU20TS003). The second author thanks National Natural Science Foundation of China (no. 11801326, no. 12271277).


Appendix

In this part, we show that the solution to the problem (1.1) is strictly positive provided that the source term satisfies \(f>0\) in \(\Omega _T\). We first give the following useful lemma.

Lemma 6.1

Assume \(g\in C[0,T]\) such that \(\frac{d}{dt}g\in C(0,T]\cap L^1(0,T)\) and g(t) attains its minimum value at \(t=t_0\in (0,T)\), then \(\partial _t^{\alpha (t_0)} g(t_0)\le 0\).

Proof

Let \(h(t) = g(t) - g(t_0)\) for \(t\in (0,T)\), then \(h \ge 0\). From the definition of the variable order Caputo derivative, we see that \(\partial _t^{\alpha (\cdot )} h(t) = \partial _t^{\alpha (\cdot )} g(t)\). Therefore, for any \(0<\delta <t_0\) which will be chosen later, we obtain by a direct calculation that

We will estimate \(I_1^\delta\) and \(I_2^\delta\) separately. For \(I_1^\delta\), since \(\frac{d}{dt}h\in L^1(0,T)\), we see that \(\int _0^\eta |\frac{d}{d\tau } h(\tau )| d\tau\) is continuous with respect to \(\eta \in (0,\delta )\). Therefore for any \(\varepsilon >0\), there exists \(0<\delta _0<t_0\) such that for any \(0<\delta <\delta _0\),

It remains to evaluate \(I_2^\delta\). Firstly, noting \(\frac{d}{dt}h \in C(0,T]\) and \(h(t_0)=0\), we conclude for any \(\tau \in (\delta ,t_0)\) that

which implies that

Therefore, by integration by parts, a direct calculation yields that

It is not difficult to check that the last term on the right hand side of the above equality is well defined. Indeed, since \(\frac{d}{dt}h\in C(0,T]\) and \(h(t_0)=0\), we see that

Moreover, using the fact that \(\Gamma (1-\alpha (t_0))>0\), we see that \(I_2^\delta \le 0\). Combining all the above results, we finally see that \(I_1^\delta +I_2^\delta < \varepsilon\) holds true for any \(\varepsilon >0\), that is, \(\partial _t^{\alpha (t_0)} h(t_0) < \varepsilon\).

Since \(\varepsilon >0\) is arbitrary, we can assert that \(\partial _t^{\alpha (t_0)} h(t_0) =\partial _t^{\alpha (t_0)} g(t_0)\le 0\). This completes the proof of the lemma. \(\square\)
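For orientation, the computation behind the proof of Lemma 6.1 can be sketched as follows. This is a hedged reconstruction consistent with the steps described in the proof, assuming the variable-order Caputo derivative is \(\partial _t^{\alpha (t)} g(t)=\frac{1}{\Gamma (1-\alpha (t))}\int _0^t (t-\tau )^{-\alpha (t)}\frac{d}{d\tau }g(\tau )\,d\tau\); the constant \(C_\delta\) is notation introduced only for this sketch. The derivative is first split at \(\tau =\delta\):

$$\begin{aligned} \partial _t^{\alpha (t_0)} h(t_0) =\frac{1}{\Gamma (1-\alpha (t_0))}\Big (\int _0^\delta +\int _\delta ^{t_0}\Big )(t_0-\tau )^{-\alpha (t_0)}\frac{d}{d\tau }h(\tau )\,d\tau =:I_1^\delta +I_2^\delta . \end{aligned}$$

The first part is controlled by the integrability of \(\frac{d}{dt}h\) near zero:

$$\begin{aligned} |I_1^\delta |\le \frac{(t_0-\delta )^{-\alpha (t_0)}}{\Gamma (1-\alpha (t_0))}\int _0^\delta \Big |\frac{d}{d\tau }h(\tau )\Big |\,d\tau <\varepsilon . \end{aligned}$$

For the second part, \(|h(\tau )|=\big |\int _\tau ^{t_0}\frac{d}{ds}h(s)\,ds\big |\le C_\delta (t_0-\tau )\) on \((\delta ,t_0)\), so that \((t_0-\tau )^{-\alpha (t_0)}h(\tau )\rightarrow 0\) as \(\tau \rightarrow t_0\), and integration by parts gives

$$\begin{aligned} I_2^\delta =-\frac{(t_0-\delta )^{-\alpha (t_0)}h(\delta )}{\Gamma (1-\alpha (t_0))}-\frac{\alpha (t_0)}{\Gamma (1-\alpha (t_0))}\int _\delta ^{t_0}(t_0-\tau )^{-\alpha (t_0)-1}h(\tau )\,d\tau \le 0, \end{aligned}$$

where both terms are nonpositive because \(h\ge 0\), and the integral is finite because its integrand is bounded by \(C_\delta (t_0-\tau )^{-\alpha (t_0)}\).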

Lemma 6.2

Assume \(f\in C({\overline{\Omega }}_T)\) satisfying \(f(x,t)>0\) and \(p(t)\ge 0\) for any \(t\in (0,T]\). Then for any \((x,t)\in \Omega _T\) we have \(u(x,t)>0\), where \(u\in C({\overline{\Omega }}_T)\cap C^1((0,T];L^2(\Omega )) \cap L^2(0,T;H^2(\Omega ))\) solves the problem (1.1).

Proof

From the maximum principle (see, e.g., Luchko [27]), it follows that \(u(x,t)\ge 0\) for any \((x,t)\in \Omega _T\). If the strict positivity of u fails, we can choose \(t_0\in (0,T)\) and \(x_0\in \Omega\) such that \(u(x_0,t_0)=0\), which means that u attains its minimum value zero at \((x_0,t_0)\). We consequently see that \(L(t_0) u(x_0,t_0)\le 0\) and \(\partial _t^{\alpha (t_0)} u(x_0,t_0)\le 0\), where the last assertion is due to Lemma 6.1. Since \(f(x_0,t_0)>0\), it follows that \(p(t_0)u(x_0,t_0) = f(x_0,t_0) -L(t_0)u(x_0,t_0)-\partial _t^{\alpha (t_0)} u(x_0,t_0)>0\), which contradicts \(u(x_0,t_0)=0\). \(\square\)


About this article

Cite this article

Jiang, D., Li, Z. Coefficient inverse problem for variable order time-fractional diffusion equations from distributed data. Calcolo 59, 34 (2022). https://doi.org/10.1007/s10092-022-00476-3


DOI: https://doi.org/10.1007/s10092-022-00476-3