Abstract

This paper addresses the finite-time stability of a class of fractional-order delayed neural networks with order \(\alpha \) in the two ranges \(0<\alpha \le 0.5\) and \(0.5<\alpha <1\). Using inequality techniques, two new delay-dependent sufficient conditions ensuring stability of such fractional-order neural networks over a finite-time interval are obtained. The obtained conditions are less conservative than those given in earlier references. Two numerical examples are given to show the effectiveness of the proposed method.

1 Introduction

It is well known that neural networks have important potential applications in optimization, signal processing, associative memory, parallel computation, pattern recognition, artificial intelligence, and so on, and that such applications depend heavily on the dynamical behavior of neural networks, especially stability. The study of the stability of neural networks has therefore become one of the most active areas of research [1–6]. Note that these results mainly focus on integer-order neural network models, in which the dynamical behavior of the neurons is described by integer-order derivatives. With the rapid development of fractional calculus, some scholars have argued that it may be appropriate to describe the “memory” of neurons by using fractional-order derivatives [7]. The reason is that fractional calculus is nonlocal and has weakly singular kernels, so it provides an excellent instrument for describing the memory and hereditary properties of dynamical processes. Fractional-order neural networks have since attracted wide attention. Positive and interesting results have been obtained on biological neurons [7, 8], neural network approximation [9], parameter estimation [10], and so on. In particular, the dynamical behavior of fractional-order artificial neural networks has become a recent and promising research topic. The chaotic behavior of fractional-order neural network models has been discussed by numerical simulations in Refs. [11–14], and the stability analysis of fractional-order neural networks has been discussed in Refs. [15–17]. Refs. [18, 19] considered chaotic synchronization in fractional-order neural networks. Mittag-Leffler stability and synchronization of memristor-based fractional-order neural networks were discussed in Ref. [20].

In the stability analysis of neural networks, asymptotic stability is an important concept. It implies convergence of the system trajectories to an equilibrium state over infinite time, and much effort has been devoted to it. However, in some situations, one is interested not only in system stability (in the sense of Lyapunov) but also in the boundedness properties of system responses (for example, technical and practical stability). It should be noted that a system could be stable but still completely useless because it possesses undesirable transient performance. Thus, it is desirable that the trajectories of the dynamical system stay within some bounds during a specific time interval. For this reason, finite-time stability was proposed from a non-Lyapunov point of view [21]. A neural network is said to be finite-time stable if, given a set of admissible initial conditions, the state trajectories of the system remain, over a prespecified finite-time interval, in a bounded region of the state space. Finite-time stability and asymptotic stability are independent concepts, which neither imply nor exclude each other. Some important results on finite-time stability have been obtained, including for fractional-order systems; for instance, sufficient conditions were derived for finite-time stability of linear fractional-order delayed systems in Refs. [22–25].

In recent years, there have been some advances in stability theory and control of fractional differential systems [26–38]. However, because fractional derivatives are nonlocal and have weakly singular kernels, the stability analysis of fractional differential equations is more complex and difficult than that of classical differential equations, so the stability theory of fractional differential equations has developed rather slowly. Much attention has also been paid to robust stability conditions for fractional-order linear systems; see [39–42] and references therein. For fractional-order neural networks, a typical class of fractional-order delayed nonlinear systems, there is still no generally effective way to analyze stability. Although Ref. [43] proposed a fractional-order Lyapunov direct method to analyze asymptotic stability of fractional-order nonlinear delayed systems in the sense of Lyapunov, choosing a suitable Lyapunov function and calculating its fractional-order derivative are very difficult. Here, our contribution is to adopt new methods and obtain new sufficient conditions which guarantee that fractional-order delayed neural networks with order \(\alpha {:}\,0<\alpha <1\) are stable over a finite-time interval.

The rest of the paper is organized as follows. Some necessary definitions, lemmas and the model are given in Sect. 2. The main results are discussed in Sect. 3. Two simple examples are presented in Sect. 4.

Notations: \(\Vert x\Vert =\sum _{i=1}^n|x_i|\) and \(\Vert A\Vert =\max _j\sum _{i=1}^n|a_{ij}|\) are the vector 1-norm and the induced matrix 1-norm (maximum absolute column sum), respectively, where \(x_i\) and \(a_{ij}\) are the elements of the vector \(x\) and the matrix \(A\). \(R^+\) and \(Z^+\) are the sets of positive real numbers and positive integers, respectively.
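As a small illustration of the two norms defined above, the following sketch evaluates them in plain Python. The function names `vector_norm` and `matrix_norm` and the sample data are hypothetical and chosen only for this example.

```python
def vector_norm(x):
    """Vector norm from the Notations paragraph: sum of absolute entries."""
    return sum(abs(xi) for xi in x)


def matrix_norm(a):
    """Matrix norm from the Notations paragraph: maximum absolute column sum.

    The matrix is given as a list of rows.
    """
    n_cols = len(a[0])
    return max(sum(abs(row[j]) for row in a) for j in range(n_cols))


# Hypothetical sample data, not taken from the paper.
x = [1.0, -2.0, 3.0]
a = [[1.0, -2.0],
     [3.0, 4.0]]
print(vector_norm(x))   # |1| + |-2| + |3|
print(matrix_norm(a))   # max(|1| + |3|, |-2| + |4|)
```

The same helpers can be used to obtain \(\Vert A\Vert \), \(\Vert B\Vert \) and \(\Vert C\Vert \) for the weight matrices of a concrete network.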

2 Preliminaries and problem description

In this section, some notation, definitions and well-known results about fractional differential equations are first presented.

Definition 1

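The fractional integral (Riemann–Liouville integral) \(D_{t_0, t}^{-\alpha }\) with fractional order \(\alpha \in R^+\) of function \(x(t)\) is defined as

\[D_{t_0,t}^{-\alpha }x(t)=\frac{1}{\varGamma (\alpha )}\int _{t_0}^{t}(t-\tau )^{\alpha -1}x(\tau )\,\hbox {d}\tau ,\]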

where \(\varGamma (\cdot )\) is the gamma function, \(\varGamma (\tau )=\int _0^\infty t^{\tau -1}e^{-t}\hbox {d}t\).

Definition 2

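The Riemann–Liouville derivative of fractional order \(\alpha \) of function \(x(t)\) is given as

\[{}_{RL}D_{t_0,t}^{\alpha }x(t)=\frac{\hbox {d}^n}{\hbox {d}t^n}D_{t_0,t}^{-(n-\alpha )}x(t)=\frac{1}{\varGamma (n-\alpha )}\frac{\hbox {d}^n}{\hbox {d}t^n}\int _{t_0}^{t}(t-\tau )^{n-\alpha -1}x(\tau )\,\hbox {d}\tau ,\]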

where \(n-1<\alpha <n\in Z^+\).

Definition 3

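The Caputo derivative of fractional order \(\alpha \) of function \(x(t)\) is defined as follows:

\[D_{t_0,t}^{\alpha }x(t)=D_{t_0,t}^{-(n-\alpha )}\frac{\hbox {d}^n}{\hbox {d}t^n}x(t)=\frac{1}{\varGamma (n-\alpha )}\int _{t_0}^{t}(t-\tau )^{n-\alpha -1}x^{(n)}(\tau )\,\hbox {d}\tau ,\]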

where \(n-1<\alpha <n\in Z^+\).

From the definitions of the integer-order and fractional-order derivatives, the integer-order derivative of a function depends only on nearby points, while the fractional derivative depends on the entire history of the function. That is, the next state of a system depends not only on its current state but also on its historical states starting from the initial time. As a result, a model described by fractional-order equations possesses memory, which makes it well suited to describing the state of neurons [16]. In the rest of this paper, we deal with fractional-order delayed neural networks involving the Caputo derivative, and the notation \(D^\alpha \) denotes the Caputo fractional derivative operator \(D_{0,t}^\alpha \).
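The memory property described above can be made concrete with a numerical approximation of the Caputo derivative for \(0<\alpha <1\). The sketch below uses the standard L1 discretization, which is not part of this paper and is swapped in purely for illustration; the function name `caputo_l1` and the test function \(x(t)=t\) are assumptions of the example. Every past increment of \(x\) enters the sum with a nonzero weight, which is exactly the dependence on the whole history.

```python
import math


def caputo_l1(samples, h, alpha):
    """L1 approximation of the Caputo derivative of order alpha (0 < alpha < 1).

    samples holds x(0), x(h), ..., x(n*h); the derivative is approximated
    at the last grid point t_n = n*h.
    """
    n = len(samples) - 1
    total = 0.0
    for k in range(n):
        # Weight of the increment that lies k steps in the past.
        weight = (k + 1) ** (1 - alpha) - k ** (1 - alpha)
        total += weight * (samples[n - k] - samples[n - k - 1])
    return total * h ** (-alpha) / math.gamma(2 - alpha)


# For x(t) = t the Caputo derivative is t^(1 - alpha) / Gamma(2 - alpha).
alpha, h, n = 0.7, 0.01, 200
samples = [i * h for i in range(n + 1)]
approx = caputo_l1(samples, h, alpha)
exact = (n * h) ** (1 - alpha) / math.gamma(2 - alpha)
print(approx, exact)
```

The L1 scheme is exact for piecewise linear functions, so the two printed values agree up to rounding for this particular test function; for general smooth functions it is only an approximation.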

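The dynamic behavior of a continuous fractional-order delayed neural network can be described by the following differential equation:

\[D^{\alpha }x_i(t)=-c_ix_i(t)+\sum _{j=1}^{n}a_{ij}f_j(x_j(t))+\sum _{j=1}^{n}b_{ij}g_j(x_j(t-\tau ))+I_i,\quad i=1,2,\ldots ,n, \tag{1}\]

or equivalently

\[D^{\alpha }x(t)=-Cx(t)+Af(x(t))+Bg(x(t-\tau ))+I, \tag{2}\]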

where \(0<\alpha <1\); \(n\) is the number of units in the neural network; \(x(t)=(x_1(t),\ldots , x_n(t))^\mathrm{T}\in R^n\) is the state vector at time \(t\); \(f(x(t))=(f_1(x_1(t)),\,f_2(x_2(t)), \ldots , f_n(x_n(t)))^\mathrm{T}\) and \(g(x(t))=(g_1(x_1(t)), g_2(x_2(t)),\ldots , g_n(x_n(t)))^\mathrm{T}\) denote the neuron activation functions, and \(f(x)\), \(g(x)\) are Lipschitz continuous, that is, there exist positive constants \(F, G\) such that \(\Vert f(u)-f(v)\Vert \le F\Vert u-v\Vert ,\,\Vert g(u)-g(v)\Vert \le G\Vert u-v\Vert \) for all \(u,v \in R^n\); \(C,A,B\) are constant matrices; \(C=\mathrm{diag}(c_i)\) with \(c_i>0\), where \(c_i\) is the rate at which the \(i\)th unit resets its potential to the resting state in isolation when disconnected from the network and external inputs; \(A=[a_{ij}]_{n\times n}\) and \(B=[b_{ij}]_{n\times n}\) are the connection weight matrix and the delayed connection weight matrix, respectively; \(\tau \) is the transmission delay, a nonnegative constant; and \(I=(I_1,I_2, \ldots , I_n)^\mathrm{T}\) is an external bias vector. The initial conditions associated with system (1) are of the form \(x_i(t)=\phi _i(t),\ t\in [-\tau ,0],\ i\in N=\{1,2,\ldots ,n\}\), with the norm \(\Vert \phi \Vert =\sup _{\theta \in [-\tau ,0]}\Vert \phi (\theta )\Vert \). With a given initial function, system (1) is defined over the time interval \(J=[t_0, t_0+T]\), where \(T\) may be any positive real number.
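To see how such a model can be simulated, the following is a minimal sketch, assuming system (1) has the vector form \(D^\alpha x(t)=-Cx(t)+Af(x(t))+Bg(x(t-\tau ))+I\) suggested by the parameter description above. It integrates the equivalent Volterra integral equation with an explicit product-rectangle rule, a simple scheme chosen for this illustration and not the method used in the paper. The function name `simulate`, the step size and the two-neuron weights in the usage part are hypothetical.

```python
import math


def simulate(alpha, c, a, b, bias, f, g, tau, phi, h, steps):
    """Explicit product-rectangle scheme for a fractional delayed network.

    c: diagonal entries of C; a, b: weight matrices as lists of rows;
    phi: initial function on [-tau, 0] returning a list of n values;
    tau is assumed to be an integer multiple of the step size h.
    Returns the list of states at t = 0, h, ..., steps*h.
    """
    n = len(c)
    delay_steps = int(round(tau / h))
    xs = [list(phi(0.0))]
    rhs = []  # right-hand side evaluated at t_0, t_1, ...

    def state(k):
        # States before t = 0 come from the initial function.
        return xs[k] if k >= 0 else list(phi(k * h))

    for m in range(steps):
        x_now, x_delayed = state(m), state(m - delay_steps)
        fx = [f(v) for v in x_now]
        gx = [g(v) for v in x_delayed]
        rhs.append([
            -c[i] * x_now[i]
            + sum(a[i][j] * fx[j] for j in range(n))
            + sum(b[i][j] * gx[j] for j in range(n))
            + bias[i]
            for i in range(n)
        ])
        # x(t_{m+1}) = x(0) + h^alpha / Gamma(alpha + 1)
        #              * sum_j [(m + 1 - j)^alpha - (m - j)^alpha] * rhs_j
        scale = h ** alpha / math.gamma(alpha + 1)
        new_state = []
        for i in range(n):
            acc = sum(((m + 1 - j) ** alpha - (m - j) ** alpha) * rhs[j][i]
                      for j in range(m + 1))
            new_state.append(xs[0][i] + scale * acc)
        xs.append(new_state)
    return xs


# Hypothetical two-neuron network; these weights are NOT the paper's.
traj = simulate(
    alpha=0.7,
    c=[0.1, 0.1],
    a=[[0.2, -0.1], [0.1, 0.2]],
    b=[[-0.5, 0.2], [0.2, -0.5]],
    bias=[0.0, 0.0],
    f=math.tanh, g=math.tanh,
    tau=0.1,
    phi=lambda t: [0.08, 0.05],
    h=0.01, steps=400,
)
print(traj[-1])
```

The quadratic cost in the number of steps comes from the memory sum over all earlier right-hand sides; this is the price of the nonlocal kernel and is acceptable for short horizons such as \(J=[0,4]\).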

Definition 4

[23] The solution of system (1) is said to be finite-time stable w.r.t. \(\{t_0, J, \delta , \varepsilon \}\) if and only if \(\Vert \varphi (t_0)-\phi (t_0)\Vert <\delta \) implies \(\Vert y(t, t_0, \varphi )-x(t, t_0, \phi )\Vert <\varepsilon \) for any two solutions \(x(t, t_0, \phi )\) and \(y(t, t_0, \varphi )\) and all \(t\in J=[t_0, t_0+T]\), where \(\delta , \varepsilon \) are positive real numbers, the index \(\varepsilon \) stands for the set of all allowable states of the system, and the index \(\delta \) stands for the set of all initial states of the system (\(\delta <\varepsilon \)).
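Definition 4 can be checked empirically on sampled trajectories. The sketch below is only such an empirical check, under the assumption that two solutions have already been computed on a common time grid over \(J\); passing it is evidence, not a proof, since only finitely many time points and a single pair of solutions are examined. The function name and the sample data are hypothetical.

```python
def finite_time_stable_on_samples(x_traj, y_traj, phi0, varphi0, delta, eps):
    """Check the implication of Definition 4 on sampled trajectories.

    x_traj, y_traj: lists of state vectors sampled on the same grid over J.
    phi0, varphi0: the two initial states at t_0.
    Returns True if the premise fails (nothing to check) or if the
    distance between the trajectories stays below eps at every sample.
    """
    def norm(v):
        return sum(abs(component) for component in v)

    initial_gap = norm([p - q for p, q in zip(varphi0, phi0)])
    if initial_gap >= delta:
        return True  # the implication holds vacuously
    return all(
        norm([yi - xi for xi, yi in zip(x, y)]) < eps
        for x, y in zip(x_traj, y_traj)
    )


# Hypothetical sampled data for two solutions of a two-neuron system.
x_samples = [[0.00, 0.00], [0.10, 0.10]]
y_samples = [[0.05, 0.00], [0.20, 0.10]]
print(finite_time_stable_on_samples(
    x_samples, y_samples, [0.0, 0.0], [0.05, 0.0], delta=0.1, eps=1.0))
```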

In order to obtain main results, the following lemmas are presented for subsequent use.

Lemma 1

[44] If \(x(t)\in C^m[0,\infty )\) and \(m-1<\alpha <m\in Z^+\), then

Lemma 2

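[45] (Hölder inequality) Assume that \(p,q>1\) and \(\frac{1}{p}+\frac{1}{q}=1\). If \(|f(\cdot )|^p\), \(|g(\cdot )|^q \in L^1(E)\), then \(f(\cdot )g(\cdot )\in L^1(E)\) and

\[\int _E|f(x)g(x)|\,\mathrm {d}x\le \left( \int _E|f(x)|^p\,\mathrm {d}x\right) ^{\frac{1}{p}}\left( \int _E|g(x)|^q\,\mathrm {d}x\right) ^{\frac{1}{q}},\]

where \(L^1(E)\) is the Banach space of all Lebesgue measurable functions \(f{:}\,E\rightarrow R\) with \(\int _E|f(x)|\mathrm {d}x<\infty \).

For \(p=q=2\), it reduces to the Cauchy–Schwarz inequality:

\[\left( \int _E|f(x)g(x)|\,\mathrm {d}x\right) ^2\le \int _E|f(x)|^2\,\mathrm {d}x\int _E|g(x)|^2\,\mathrm {d}x.\]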

Lemma 3

[46] Let \(u(t), \omega (t), v(t)\) and \(h(t)\) be nonnegative continuous functions on \(R^+\), and let \(r\ge 1\) be a real number. If

then

where \( W(t)=\exp \left( -\int _0^tv(s)\omega ^r(s)\mathrm {d}s\right) \).
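In the proofs of Sect. 3, Lemma 3 is applied with \(v(t)=1\) and a constant \(\omega (t)\). Under that assumption the weight \(W(t)\) simplifies as sketched here; the symbol \(\omega _0\) for the constant value is introduced only for this illustration.

```latex
W(t)=\exp\left(-\int_0^t 1\cdot\omega_0^{\,r}\,\mathrm{d}s\right)=e^{-\omega_0^{\,r}t},
\qquad\text{e.g. } \omega_0=M_1+M_2e^{-\tau},\ r=2
\ \Longrightarrow\ W(t)=e^{-(M_1+M_2e^{-\tau})^2t}.
```

Since \(\omega _0>0\), this gives \(0<W(t)<1\) for \(t>0\), which is the range in which Lemma 4 is later applied to \(x=W(t)\).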

Lemma 4

[45] (Generalized Bernoulli inequality) If \(r\in R^+\), \(x<1\) and \(x\ne 0\), then, for \(0<r<1\)

or

3 Main results

In this section, two sufficient conditions are proposed for finite-time stability of a class of fractional-order delayed neural networks, for order \(0.5< \alpha <1\) (Theorem 1) and for order \(0<\alpha \le 0.5\) (Theorem 2).

Theorem 1

When \(\frac{1}{2}<\alpha <1\), if

where \(M_1=\frac{(\Vert C\Vert +\Vert A\Vert F)\sqrt{2\varGamma (2\alpha -1)}}{\varGamma (\alpha )2^\alpha }\), \(M_2=\frac{\Vert B\Vert G\sqrt{2\varGamma (2\alpha -1)}}{\varGamma (\alpha )2^\alpha }\), then system (1) is finite-time stable w.r.t \(\{0, J, \delta , \varepsilon \}\), \(\delta <\varepsilon \).

Proof

Assume that \(x(t)=(x_1(t),\ldots ,x_n(t))^\mathrm{T}\) and \(y(t)=(y_1(t),\ldots ,y_n(t))^\mathrm{T}\) are any two solutions of (1) with different initial conditions \(\phi (0)\) and \(\varphi (0)\), and denote \(e(t)=x(t)-y(t), \sigma (0)=\phi (0)-\varphi (0)\). It follows from Lemma 1 that system (1) is equivalent to the following Volterra fractional integral equation with memory

It is easy to obtain that

By using Lemma 2 (Cauchy–Schwarz inequality), one has

Note that

Substituting (5) into (4), one obtains that

where \(M_1=\frac{(\Vert C\Vert +\Vert A\Vert F)\sqrt{2\varGamma (2\alpha -1)}}{\varGamma (\alpha )2^\alpha }\), \(M_2=\frac{\Vert B\Vert G\sqrt{2\varGamma (2\alpha -1)}}{\varGamma (\alpha )2^\alpha }\).
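The factor \(\sqrt{2\varGamma (2\alpha -1)}/(\varGamma (\alpha )2^\alpha )\) shared by \(M_1\) and \(M_2\) is consistent with the following estimate. This is a sketch under the assumption that the Cauchy–Schwarz step pairs the kernel \((t-s)^{\alpha -1}e^{s}\) with \(e^{-s}\Vert e(s)\Vert \); the substitution variable \(u=t-s\) is introduced only for this illustration.

```latex
\int_0^t (t-s)^{2\alpha-2}e^{2s}\,\mathrm{d}s
 = e^{2t}\int_0^t u^{2\alpha-2}e^{-2u}\,\mathrm{d}u
 < e^{2t}\int_0^\infty u^{2\alpha-2}e^{-2u}\,\mathrm{d}u
 = \frac{\varGamma(2\alpha-1)}{2^{2\alpha-1}}\,e^{2t}
 = \frac{2\varGamma(2\alpha-1)}{4^{\alpha}}\,e^{2t}.
```

Taking the square root and dividing by \(\varGamma (\alpha )\) gives \(\sqrt{2\varGamma (2\alpha -1)}\,e^{t}/(\varGamma (\alpha )2^\alpha )\). The integral converges only when \(2\alpha -1>0\), which is why this argument is restricted to \(\frac{1}{2}<\alpha <1\).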

Since \(e(t)=\sigma (t)\) for \(t\in [-\tau ,0]\) and \(\Vert \sigma (0)\Vert \le \Vert \sigma \Vert =\sup _{\theta \in [-\tau ,0]}\Vert \sigma (\theta )\Vert \), we see that

Combining (6) with (7), it follows that

which implies

Taking \(u_0(t)=\Vert \sigma \Vert +M_2\Vert \sigma \Vert \), \(\omega (t)=(M_1+M_2e^{-\tau })\), \(u(t)=e^{-t}\Vert e(t)\Vert \), \(v(t)=1\) in Lemma 3, one has \(W(t)=e^{-(M_1+M_2e^{-\tau })^2t}\) and

Let \(r=\frac{1}{2}\), \(x=e^{-(M_1+M_2e^{-\tau })^2t}\) in Lemma 4, we have

therefore,

So, if (3) is satisfied and \(\Vert \sigma \Vert <\delta \), then \(\Vert e(t)\Vert <\varepsilon \), \(t\in J\), i.e., system (1) is finite-time stable.

Theorem 2

When \(0<\alpha \le \frac{1}{2}\), if

where \(N_1=(\Vert C\Vert +\Vert A\Vert F)(\frac{\varGamma (p(\alpha -1)+1)}{{\varGamma ^p(\alpha )p^{p(\alpha -1)+1}}})^{\frac{1}{p}}\), \(N_2=\Vert B\Vert G(\frac{\varGamma (p(\alpha -1)+1)}{{\varGamma ^p(\alpha )p^{p(\alpha -1)+1}}})^{\frac{1}{p}}\), \(p=1+\alpha , q=1+\frac{1}{\alpha }\), then system (1) is finite-time stable w.r.t \(\{0, J, \delta , \varepsilon \}\), \(\delta <\varepsilon \).

Proof

Following the proof of Theorem 1, we obtain the following estimate

Set \(p=1+\alpha \) and \(q=1+\frac{1}{\alpha }\); obviously, \(\frac{1}{p}+\frac{1}{q}=1\). It follows from Lemma 2 that

Note that

where \(p(\alpha -1)+1=\alpha ^2>0\).
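The two identities used for this choice of exponents can be verified directly, and the constant in \(N_1\), \(N_2\) is consistent with the estimate in the last line below. This is a sketch assuming the Hölder step pairs the kernel \((t-s)^{\alpha -1}e^{s}\) with \(e^{-s}\Vert e(s)\Vert \); the substitution variable \(u=t-s\) is introduced only for this illustration.

```latex
\frac{1}{p}+\frac{1}{q}=\frac{1}{1+\alpha}+\frac{\alpha}{1+\alpha}=1,
\qquad
p(\alpha-1)+1=(1+\alpha)(\alpha-1)+1=\alpha^2,
\\[4pt]
\int_0^t (t-s)^{p(\alpha-1)}e^{ps}\,\mathrm{d}s
 = e^{pt}\int_0^t u^{\alpha^2-1}e^{-pu}\,\mathrm{d}u
 < \frac{\varGamma\bigl(p(\alpha-1)+1\bigr)}{p^{\,p(\alpha-1)+1}}\,e^{pt}.
```

Because \(\alpha ^2>0\) for every \(\alpha \in (0,1)\), the integral converges without the restriction \(\alpha >\frac{1}{2}\) needed for the Cauchy–Schwarz choice \(p=q=2\).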

Substituting (10) into (9), one gets

From \(\Vert \sigma (0)\Vert \le \Vert \sigma \Vert =\sup _{\theta \in [-\tau ,0]}\Vert \sigma (\theta )\Vert \), it yields

where \(N_1=(\Vert C\Vert +\Vert A\Vert F)(\frac{\varGamma (p(\alpha -1)+1)}{{\varGamma ^p(\alpha )p^{p(\alpha -1)+1}}})^{\frac{1}{p}}\), \(N_2=\Vert B\Vert G(\frac{\varGamma (p(\alpha -1)+1)}{{\varGamma ^p(\alpha )p^{p(\alpha -1)+1}}})^{\frac{1}{p}}\), which means that

Taking \(u_0(t)=\Vert \sigma \Vert +N_2\Vert \sigma \Vert \), \(\omega (t)=(N_1+N_2e^{-\tau })\), \(u(t)=e^{-t}\Vert e(t)\Vert \), \(v(t)=1\) in Lemma 3, one gets \(W(t)=e^{-(N_1+N_2e^{-\tau })^qt}\) and

Let \(r=\frac{1}{q}\) and \(x=e^{-(N_1+N_2e^{-\tau })^qt}\) in Lemma 4, we have

Combining (11) and (12), one obtains

So, if (8) is satisfied and \(\Vert \sigma \Vert <\delta \), then \(\Vert e(t)\Vert <\varepsilon \), \(t\in J\), i.e., system (1) is finite-time stable.

Remark 1

The fractional-order integral-differential operator is an extension of the integer-order integral-differential operator. Under the Caputo definition, one easily sees that the Caputo derivative of a constant is equal to zero. Therefore, it follows from Ref. [47] that there is at least one equilibrium point for system (1). Further, according to Theorems 1 and 2, the following corollaries hold.

Corollary 1

When \(\frac{1}{2}<\alpha <1\), if

where \(M_1=\frac{(\Vert C\Vert +\Vert A\Vert F)\sqrt{2\varGamma (2\alpha -1)}}{\varGamma (\alpha )2^\alpha }\), \(M_2=\frac{\Vert B\Vert G\sqrt{2\varGamma (2\alpha -1)}}{\varGamma (\alpha )2^\alpha }\), then the equilibrium point of system (1) is finite-time stable w.r.t \(\{0, J, \delta , \varepsilon \}\), \(\delta <\varepsilon \).

Corollary 2

When \(0<\alpha \le \frac{1}{2}\), if

where \(N_1=(\Vert C\Vert +\Vert A\Vert F)(\frac{\varGamma (p(\alpha -1)+1)}{{\varGamma ^p(\alpha )p^{p(\alpha -1)+1}}})^{\frac{1}{p}}\), \(N_2=\Vert B\Vert G(\frac{\varGamma (p(\alpha -1)+1)}{{\varGamma ^p(\alpha )p^{p(\alpha -1)+1}}})^{\frac{1}{p}}\), \(p=1+\alpha , q=1+\frac{1}{\alpha }\), then the equilibrium point of system (1) is finite-time stable w.r.t \(\{0, J, \delta , \varepsilon \}\), \(\delta <\varepsilon \).

Remark 2

Reference [16] discussed the uniform stability of system (1), but the result is conservative in that it requires the initial conditions associated with system (1) to be zero. Reference [15] considered the finite-time stability of system (1), but only for fractional order \(\alpha \) lying in \((1,2)\).

Remark 3

References [22–25] considered the finite-time stability of fractional-order linear delayed systems, but did not address nonlinear systems. Here, a typical class of fractional-order nonlinear delayed systems is discussed.

Remark 4

Quite a few delay-independent finite-time stability criteria were derived for fractional-order neural networks with delay in Refs. [15, 48, 49]. Generally, delay-dependent results are less conservative than delay-independent ones when the delays are small. Here, two delay-dependent conditions are established.

Remark 5

From Definition 4, it is not hard to see that a larger estimated time of finite-time stability is better. For comparison, the example proposed in Ref. [50] is considered in the next section. Numerical calculations show that the estimated time of finite-time stability obtained in this paper is larger than that of Ref. [50]. In addition, the results obtained in this paper are slightly simpler in form than those of Ref. [50].

4 Numerical examples

In this section, we consider two simple examples to illustrate the effectiveness of theoretical results.

Example 1

Consider the fractional-order delayed Hopfield neural model (1) with the following parameters, which was presented in Ref. [50]

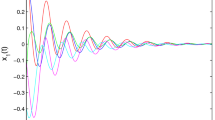

The fractional order is \(\alpha =0.4\) or \(\alpha =0.7\). The activation functions are \(f(x)=g(x)=\tanh x\), and \(\tau =0.1\). Clearly, \(f(x)\) and \(g(x)\) satisfy the Lipschitz condition with \(F=G=1\), and \(\Vert A\Vert =0.3, \Vert B\Vert =0.7, \Vert C\Vert =0.1\). The initial values are chosen as \((0.08, 0.05)^\mathrm{T}\). The task is to check the finite-time stability w.r.t. \(\{t_0=0; J=[0,4]; \delta =0.1; \varepsilon =1; \tau =0.1\}\). When \(\alpha =0.7\), \(M_1=0.3995\), \(M_2=0.692\); according to Corollary 1, the estimated time of finite-time stability is \(0.607\), which is larger than \(0.5542\) in Ref. [50]. The equilibrium point is finite-time stable, as depicted in Fig. 1. When \(\alpha =0.4\), \(N_1=0.6099\), \(N_2=1.0674\). It follows from inequality (14) that the estimated time of finite-time stability is \(0.7522\), which is larger than \(0.6700\) in Ref. [50]. The conditions of Corollary 2 are satisfied, and therefore the equilibrium point is finite-time stable. The numerical simulation is shown in Fig. 2.
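The constants quoted in Example 1 can be recomputed from the formulas for \(M_1, M_2\) (Theorem 1) and \(N_1, N_2\) (Theorem 2) together with the stated norms. The sketch below does only that; the function names are hypothetical, and the stability inequalities themselves are not evaluated here.

```python
import math


def theorem1_constants(alpha, norm_a, norm_b, norm_c, lip_f, lip_g):
    """M_1 and M_2 for 1/2 < alpha < 1, from the formulas in Theorem 1."""
    if not 0.5 < alpha < 1.0:
        raise ValueError("Theorem 1 requires 1/2 < alpha < 1")
    factor = math.sqrt(2.0 * math.gamma(2.0 * alpha - 1.0)) / (
        math.gamma(alpha) * 2.0 ** alpha)
    return (norm_c + norm_a * lip_f) * factor, norm_b * lip_g * factor


def theorem2_constants(alpha, norm_a, norm_b, norm_c, lip_f, lip_g):
    """N_1 and N_2 for 0 < alpha <= 1/2, from the formulas in Theorem 2."""
    if not 0.0 < alpha <= 0.5:
        raise ValueError("Theorem 2 requires 0 < alpha <= 1/2")
    p = 1.0 + alpha
    s = p * (alpha - 1.0) + 1.0  # equals alpha**2
    factor = (math.gamma(s) / (math.gamma(alpha) ** p * p ** s)) ** (1.0 / p)
    return (norm_c + norm_a * lip_f) * factor, norm_b * lip_g * factor


# Norms and Lipschitz constants as stated in Example 1.
m1, m2 = theorem1_constants(0.7, norm_a=0.3, norm_b=0.7, norm_c=0.1,
                            lip_f=1.0, lip_g=1.0)
n1, n2 = theorem2_constants(0.4, norm_a=0.3, norm_b=0.7, norm_c=0.1,
                            lip_f=1.0, lip_g=1.0)
print(round(m1, 4), round(m2, 4))
print(round(n1, 4), round(n2, 4))
```

With these inputs the formulas reproduce \(M_1\approx 0.3995\), \(N_1\approx 0.6099\) and \(N_2\approx 1.0674\). For \(M_2\) they give approximately \(0.6992\), so the printed value \(0.692\) may be missing a digit.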

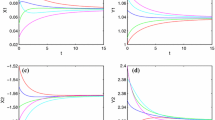

Example 2

A fractional-order Hopfield neural network of three neurons with the following parameters is given

\(\alpha =0.3\) or \(\alpha =0.8\). The activation functions are given by \(f(x)=\sin x, g(x)=\tanh x\), \(\tau =0.2\). Obviously, \(F=G=1\), \(\Vert A\Vert =0.16, \Vert B\Vert =0.14, \Vert C\Vert =0.55\). The initial values are chosen as \((0.1, 0.2, 0.3)^\mathrm{T}\). One has to check the finite-time stability, w.r.t. \(\{t_0=0; J=[0,4]; \delta =0.1; \varepsilon =1; \tau =0.2\}\). When \(\alpha =0.8\), \(M_1=0.5619\), \(M_2=0.1192\), based on inequality (13), the estimated time of finite-time stability is \(0.835\). The equilibrium point is finite-time stable, which is depicted in Fig. 3. When \(\alpha =0.3\), \(N_1=1.3827\), \(N_2=0.2933\). It follows from inequality (14) that the estimated time of finite-time stability is \(0.3183\). The time evolution of states is shown in Fig. 4.

5 Conclusion

In this paper, finite-time stability for a class of fractional-order delayed neural networks with order \(\alpha {:}\, 0<\alpha \le 0.5\) and \( 0.5<\alpha <1\) was considered. Two effective criteria ensuring finite-time stability for this class of fractional-order systems were derived. Illustrative examples were presented to demonstrate the effectiveness of the derived criteria.

References

Rakkiyappan R, Chandrasekar A, Lakshmanan S, Park J, Jung H (2013) Effects of leakage time-varying delays in Markovian jump neural networks with impulse control. Neurocomputing 121:365–378

Rakkiyappan R, Chandrasekar A, Lakshmanan S, Park J (2014) Exponential stability of Markovian jumping stochastic Cohen–Grossberg neural networks with mode-dependent probabilistic time-varying delays and impulses. Neurocomputing 131:265–277

Li T, Wang T, Song A, Fei S (2013) Combined convex technique on delay-dependent stability for delayed neural networks. IEEE Trans Neural Netw Learn Syst 24(9):1459–1466

Wang X, Li C, Huang T, Duan S (2014) Global exponential stability of a class of memristive neural networks with time-varying delays. Neural Comput Appl 24(7–8):1707–1715

Rakkiyappan R, Zhu Q, Chandrasekar A (2014) Stability of stochastic neural networks of neutral type with Markovian jumping parameters: a delay fractioning approach. J Franklin Inst 351(3):1553–1570

Xiao M, Zheng W, Cao J (2013) Bifurcation and control in a neural network with small and large delays. Neural Netw 44:132–142

Lundstrom B, Higgs M, Spain W, Fairhall A (2008) Fractional differentiation by neocortical pyramidal neurons. Nat Neurosci 11:1335–1342

Anastasio T (1994) The fractional-order dynamics of brainstem vestibulooculomotor neurons. Biol Cybern 72:69–79

Anastassiou G (2012) Fractional neural network approximation. Comput Math Appl 64(6):1655–1676

Boroomand A, Menhaj M (2009) Fractional-order Hopfield neural networks. Lecture Notes in Computer Science 5506:883–890

Arena P, Fortuna L, Porto D (2000) Chaotic behavior in noninteger-order cellular neural networks. Phys Rev E 61:776–781

Liu L, Liu C, Liang D (2013) Hyperchaotic behavior in arbitrary-dimensional fractional-order quantum cellular neural network model. Int J Bifurc Chaos 23(3):1350044

Kaslik E, Sivasundaram S (2012) Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw 32:245–256

Huang X, Zhao Z, Wang Z, Li Y (2012) Chaos and hyperchaos in fractional-order cellular neural networks. Neurocomputing 94:13–21

Wu R, Hei X, Chen L (2013) Finite-time stability of fractional-order neural networks with delay. Commun Theor Phys 60(2):189–193

Chen L, Chai Y, Wu R, Ma T, Zhai H (2013) Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 111:190–194

Alofi A, Cao J, Elaiw A, Al-Mazrooei A (2014) Delay-dependent stability criterion of Caputo fractional neural networks with distributed delay. Discret Dyn Nat Soc, 529358

Chen L, Qu J, Chai Y, Wu R, Qi G (2013) Synchronization of a class of fractional-order chaotic neural networks. Entropy 15(8):3265–3276

Zhou S, Li H, Zhu Z (2008) Chaos control and synchronization in a fractional neuron network system. Chaos Solitons Fractals 36(4):973–984

Chen J, Zeng Z, Jiang P (2014) Global Mittag–Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw 51:1–8

Dorato P (1961) Short time stability in linear time-varying systems. In: Proceedings of IRE international convention record part 4:83–87

Zhang X (2008) Some results of linear fractional order time-delay system. Appl Math Comput 197:407–411

Lazarevic M, Spasic A (2009) Finite-time stability analysis of fractional order time-delay systems: Gronwall’s approach. Math Comput Model 49(3–4):475–481

Lazarevic M, Debeljkovic D (2005) Finite time stability analysis of linear autonomous fractional order systems with delayed state. Asian J Control 7(4):440–447

Lazarevic M (2006) Finite time stability analysis of PD\(^\alpha \) fractional control of robotic time-delay systems. Mech Res Commun 33(2):269–279

Aghababa M (2014) A Lyapunov-based control scheme for robust stabilization of fractional chaotic systems. Nonlinear Dyn 78:2129–2140

Roohi M, Aghababa M, Haghighi A (2014) Switching adaptive controllers to control fractional-order complex systems with unknown structure and input nonlinearities. Complexity. doi:10.1002/cplx.21598

Aghababa M (2014) Synchronization and stabilization of fractional second-order nonlinear complex systems. Nonlinear Dyn. doi:10.1007/s11071-014-1411-4

Aghababa M (2014) Fractional modeling and control of a complex nonlinear energy supply-demand system. Complexity. doi:10.1002/cplx.21533

Haghighi A, Aghababa M, Roohi M (2014) Robust stabilization of a class of three-dimensional uncertain fractional-order non-autonomous systems. Int J Ind Math 6(2):133–139

Aghababa M (2014) Control of fractional-order systems using chatter-free sliding mode approach. J Comput Nonlinear Dyn 9(3):031003

Aghababa M (2014) A switching fractional calculus-based controller for normal non-linear dynamical systems. Nonlinear Dyn 75(3):577–588

Aghababa M (2014) Control of nonlinear non-integer-order systems using variable structure control theory. Trans Inst Measure Control 36(3):425–432

Aghababa M (2013) No-chatter variable structure control for fractional nonlinear complex systems. Nonlinear Dyn 73(4):2329–2342

Aghababa M (2013) Design of a chatter-free terminal sliding mode controller for nonlinear fractional-order dynamical systems. Int J Control 86:1744–1756

Aghababa M (2013) A novel terminal sliding mode controller for a class of non-autonomous fractional-order systems. Nonlinear Dyn 73(1–2):679–688

Aghababa M (2012) Finite-time chaos control and synchronization of fractional-order nonautonomous chaotic (hyperchaotic) systems using fractional nonsingular terminal sliding mode technique. Nonlinear Dyn 69(1–2):247–261

Aghababa M (2012) Robust stabilization and synchronization of a class of fractional-order chaotic systems via a novel fractional sliding mode controller. Commun Nonlinear Sci Numer Simul 17:2670–2681

Chen Y, Ahn H, Podlubny I (2007) Robust stability test of a class of linear time-invariant interval fractional-order system using Lyapunov inequality. Appl Math Comput 187(1):27–34

Moornani K, Mohammad H (2009) On robust stability of linear time invariant fractional-order systems with real parametric uncertainties. ISA Trans 48(4):484–490

Lim Y, Oh K, Ahn H (2013) Stability and stabilization of fractional-order linear systems subject to input saturation. IEEE Trans Autom Control 58(4):1062–1067

Deng W, Li C, Lu J (2007) Stability analysis of linear fractional differential system with multiple time delays. Nonlinear Dyn 48(4):409–416

Sadati S, Baleanu D, Ranjbar A, Ghaderi R, Abdeljawad T (2010) Mittag–Leffler stability theorem for fractional nonlinear systems with delay. Abstr Appl Anal, 108651

Li C, Deng W (2007) Remarks on fractional derivatives. Appl Math Comput 187(2):777–784

Mitrinovic D (1970) Analytic inequalities. Springer, Berlin

Willett D (1964) Nonlinear vector integral equations as contraction mappings. Arch Ration Mech Anal 15:79–86

Cao J (1999) Global stability analysis in delayed cellular neural networks. Phys Rev E 59:5940–5944

Yang X, Song Q, Liu Y, Zhao Z (2014) Finite-time stability analysis of fractional-order neural networks with delay. Neurocomputing. doi:10.1016/j.neucom.2014.11.023

Ke Y, Miao C (2014) Stability analysis of fractional-order Cohen–Grossberg neural networks with time delay. Int J Comput Math. doi:10.1080/00207160.2014.935734

Wu R, Lu Y, Chen L (2015) Finite-time stability of fractional delayed neural networks. Neurocomputing 149:700–707

Acknowledgments

This work was supported by the National Natural Science Funds of China for Distinguished Young Scholar under Grant (No. 50925727), the National Natural Science Foundation of China (Nos. 61403115, 61374135), the National Defense Advanced Research Project Grant (Nos. C1120110004, 9140 A27020211DZ5102) and the Key Grant Project of Chinese Ministry of Education under Grant (No. 313018).

Cite this article

Chen, L., Liu, C., Wu, R. et al. Finite-time stability criteria for a class of fractional-order neural networks with delay. Neural Comput & Applic 27, 549–556 (2016). https://doi.org/10.1007/s00521-015-1876-1

DOI: https://doi.org/10.1007/s00521-015-1876-1