Abstract

An improved adaptive Kriging model-based metamodel importance sampling (IS) reliability analysis method is proposed to increase the efficiency of failure probability calculation. First, the silhouette plot method is introduced to judge the optimal number of clusters for k-means to establish the IS density function, thus avoiding the problem of only assuming clusters arbitrarily. Second, considering the prediction uncertainty of the Kriging model, a novel learning function established from the uncertainty of failure probability is proposed for adaptive Kriging model establishment. The proposed learning function is established based on the variance information of failure probability. The major benefit of the proposed learning function is that the distribution characteristic of the IS density function is considered, thus fully reflecting the impact of the IS function on active learning. Finally, the coefficient of variation (COV) information of failure probability is adopted to define a novel stopping criterion for learning function. The performance of the proposed method is verified through different numerical examples. The findings demonstrate that the refined learning strategy effectively identifies samples with substantial contributions to failure probability, showcasing commendable convergence. Particularly notable is its capacity to significantly reduce function call volumes with heightened accuracy for scenarios featuring variable dimensions below 10.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

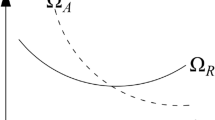

Reliability analysis theory has been widely used in many engineering problems [19, 22]. The most basic requirement is to calculate the failure probability. Presently, reliability methods mainly include numerical simulation methods and moment estimation methods. The most commonly used numerical simulation method is the crude Monte Carlo (MC) method [18]. However, since a large number of function calls are required, the efficiency is quite low. Moment estimation methods use the performance function values at some feature points to calculate moment information, and the failure probability is approximated through the calculated moment information. Presently, the first and second-order reliability methods are two classical methods [16]. These methods use the linear or quadratic function at the design point to approximate the real performance function, which is related to the nonlinearity degree of the performance function. For high-level nonlinear problems, the accuracy might be low.

To increase the efficiency and reduce the number of required function calls of the MC method, researchers often use surrogate models to fit the real performance function, such as Kriging [10, 35, 39, 40, 48, 52], neural networks [3, 23], radial basis functions [9, 26, 51] and support/relevant vector machines [19, 20, 24]. Presently, the most popular surrogate model might be the Kriging model, owing to its built-in error and uncertainty measures [18, 30, 38]. As one of the classical methods, AK-MCS [6] combines the U learning function and the Monte Carlo (MC) method. Based on the idea of AK-MCS, different learning functions [25, 29, 39, 41, 49] and stopping criteria [14, 33] are also proposed. However, there are some shortcomings in the MC-based surrogate methods. First, the candidate sample size of MC is usually quite large. As the values of the learning function should be calculated for all candidate samples, MC may consume computer memory for small failure probability problems [13]. Second, the candidate samples in MC are randomly generated in the whole sample space. This will result in many unnecessary training points with a low contribution to failure probability.

To solve the problems of crude MC, researchers often combine some improved sampling strategies with the Kriging model, such as importance sampling (IS) [31, 50], subset simulation (SS) [2, 17, 32], line sampling [21, 27], region partition sampling methods [11, 27], directional and [47] importance directional sampling [12]. These methods all have their own advantages. In this paper, we mainly focus on the IS methods. Table 1 lists the information on the current IS-based surrogate mode methods.

As listed in Table 1, a commonly used strategy is to establish the IS density function at the design point. However, this strategy has some limitations. First, it is not suitable for reliability problems with multiple failure modes. Second, the design point calculation is usually a constrained optimization problem. For the performance function with high nonlinearity, design points may not be easily available. Another widely used strategy is to use the clustering method to establish the IS density function. This approach is suitable for multiple failure modes, but it requires an artificial assumption of the number of clusters. For most reliability problems, it is quite hard to apply if the shape of the performance function is not known. In addition, the U function is often adopted for the adaptive Kriging-based IS method. The limitation is that the stopping criterion of the U function is too conservative, and it is established only based on the Kriging prediction variance without the distribution information of random samples. The above shortcomings will result in the selection of many unnecessary training samples with a low contribution to failure probability and low computational efficiency. Wen [34] and Xiao [36] have verified that by considering the probability density function in the original distribution, the computation cost of active learning in MC-based Kriging methods is greatly reduced. In the IS method, as random samples are generated based on the IS density function, the information on the IS density function should also be considered in active learning to select the most informative samples. Presently, the learning functions for the MC method are often directly applied to IS, and there is no special research on the adaptive learning function for the IS method. Since Meta-IS-AK includes the advantages of both Meta-IS and AK-IS, the purpose of this paper is to propose an improved Meta-IS-AK method for reliability analysis to further increase efficiency. First, the k-means cluster algorithm in IS density function construction is improved by the silhouette plot. Through the mean silhouette value, the silhouette plot can judge the optimal clusters, thus solving the problem of only an arbitrarily given number of clusters. Second, a novel learning function considering the characteristics of the IS density function is proposed for Kriging model updating. Then a novel stopping criterion is adopted to increase the efficiency of active learning. The proposed active learning strategy is established based on the variance of failure probability caused by the Kriging prediction uncertainty, which is specially used for the IS method.

The rest of the paper is organized as follows: Sect. 2 introduces the concept of explosion Meta-IS-AK. Section 3 introduces the proposed active learning Kriging model for IS. Section 4 introduces the proposed improvements for Meta-IS-AK. Section 5 summarizes the steps of the proposed method. Section 6 uses the numerical model to illustrate the proposed method. Section 7 draws the conclusions.

2 Reliability analysis based on Meta-IS-AK

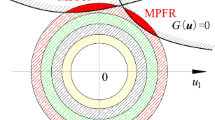

Based on the Kriging model \(g_K \left( {{\varvec{x}}} \right)\), the IS density function \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) in Meta-IS-AK is defined as:

where \(f_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) is the joint probability density function of input variables \({{\varvec{x}}} = \left( {x_1 ,x_2 , \cdot \cdot \cdot ,x_n } \right)\). \(\pi \left( {{\varvec{x}}} \right)\) is the probability classification function:

where \(\mu_{g_K } \left( {{\varvec{x}}} \right)\) and \(\sigma_{g_K } \left( {{\varvec{x}}} \right)\) are the mean and standard deviation of the Kriging predicted value, respectively. \(\phi \left(\bullet \right)\) is the cumulative distribution function of the standard normal distribution. \(P_{f\varepsilon }\) is the augmented failure probability, which is defined as:

The failure probability \(P_f\) based on Eq. (1) is calculated by:

where \(I_F \left( {{\varvec{x}}} \right)\) is the indicator function. If \(g_K \left( {{\varvec{x}}} \right) \le 0\), \(I_F \left( {{\varvec{x}}} \right) = 1\), otherwise \(I_F \left( {{\varvec{x}}} \right) = 0\).\(\alpha_{corr}\) is the correction factor. The estimated \({\mathop{P}\limits^{\frown}}_{f\varepsilon }\) and \({\mathop{\alpha }\limits^{\frown}}_{coor}\) and the corresponding coefficient of variance (COV) are calculated by:

where \({{\varvec{x}}}_f^{\left( i \right)}\) and \({{\varvec{x}}}_h^{\left( j \right)}\) are the random samples generated by \(f_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) and \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\), respectively. \(N_\varepsilon\) and \(N_{corr}\) are the size of random samples and IS samples, respectively.

The estimated failure probability \({\mathop{P}\limits^{\frown}}_f\) and its COV are defined as:

\({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_f } \right)\) should be less than a small constant \(\lambda_{Pf}\) to ensure the robustness of \({\mathop{P}\limits^{\frown}}_f\), which is usually defined as \(\lambda_{Pf} = 5\%\). The failure probability in Meta-IS-AK is calculated in two stages. In the first stage, the updating strategy in Meta-IS based on the k-means algorithm is adopted to approximate \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) and obtain IS samples. By setting the number of clusters \(K\), the clustering centers are taken as the added samples to update the Kriging model. When the leave-one-out estimate \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\) meets the convergence criterion, the first-stage Kriging model is obtained. \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\) is defined as:

where \(m\) is the current size of training samples. \(\pi_{\left( {T/x_T^{\left( i \right)} } \right)} \left( {{{\varvec{x}}}_T^{\left( i \right)} } \right)\) is the probability classification function constructed by the Kriging model without the \(ith\) training sample. As suggested by Zhu [53], the convergence criterion of \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\) and \(m\) should be \({\mathop{\alpha }\limits^{\frown}}_{corrLOO} \in \left[ {0.1,10} \right] \cap m > 30\). If the condition is satisfied, \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) is considered to be convergent. Then \({\mathop{P}\limits^{\frown}}_{f\varepsilon }\) can be calculated through Eq. (6) by generating random samples through \(f_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\). The second stage is to update the Kriging model based on the idea of AK-IS through the generated IS samples and U learning function. When the second-stage Kriging model has sufficient accuracy,\({\mathop{\alpha }\limits^{\frown}}_{corr}\) could be calculated through Eq. (7). Once \({\mathop{P}\limits^{\frown}}_{f\varepsilon }\) and \({\mathop{\alpha }\limits^{\frown}}_{corr}\) are calculated, \({\mathop{P}\limits^{\frown}}_f\) can be easily obtained.

3 The proposed adaptive Kriging model for Meta-IS-AK

This paper proposes a novel active learning strategy considering the influence of \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) for Meta-IS-AK to select the most informative samples. The proposed method consists of two parts: the learning function and the stopping criterion. The two parts will be introduced below.

3.1 The learning function

Based on Eq. (2), \(I_F \left( {{\varvec{x}}} \right)\) predicted by the Kriging model is subjected to a Bernoulli distribution with a mean \(E\left[ {I_F \left( {{\varvec{x}}} \right)} \right]\) and a standard deviation \(V\left[ {I_F \left( {{\varvec{x}}} \right)} \right]\):

Based on Eq. (10), the IS-based failure probability \(P_f^r\) is also a random variable, which is caused by the prediction uncertainty of the Kriging model. The corresponding mean \(E\left[ {P_f^r } \right]\) and variance \(V\left[ {P_f^r } \right]\) can be calculated by:

where \(CV\left[ {I_F \left( {{\varvec{x}}} \right),I_F \left( {{{\varvec{x}}}^{\prime}} \right)} \right]\) is the covariance between \(I_F \left( {{\varvec{x}}} \right)\) and \(I_F \left( {{{\varvec{x}}}^{\prime}} \right)\). As suggested by Dang [4], Cauchy–Schwarz inequality could be adopted to estimate \(CV\left[ {I_F \left( {{\varvec{x}}} \right),I_F \left( {{{\varvec{x}}}^{\prime}} \right)} \right]\), that is:

Then Eq. (12) is changed to:

Based on Eq. (14), the proposed novel variance-based learning function \(VL\left( {{\varvec{x}}} \right)\) is defined as:

\(VL\left( {{\varvec{x}}} \right)\) reflects the uncertainty of Kriging prediction at the sample point \({{\varvec{x}}}\). The larger \(VL\left( {{\varvec{x}}} \right)\) means the greater variance with greater uncertainty. Therefore, the adding point criterion could be defined as: \({{\varvec{x}}}^* = \max \left[ {VL\left( {{\varvec{x}}} \right)} \right]\). Compared with the existing learning functions, the greatest advantage of \(VL\left( {{\varvec{x}}} \right)\) is that \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) is included, which can more fully reflect the distribution characteristic of IS samples. In the Meta-IS-AK, one of the key steps is to calculate \(\alpha_{corr}\). As \(\alpha_{corr}\) is related to \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\), the proposed \(VL\left( {{\varvec{x}}} \right)\) learning function is more suitable for Meta-IS-AK.

3.2 The stopping criterion

The stopping criterion of the U learning function is adopted in the Meta-IS-AK methods. However, this criterion is too conservative, which will lead to too many redundant training samples. To solve the problem, this paper will adopt two different stopping criteria for Meta-IS-AK. The first is the proposed COV-based stopping criterion considering the mean and standard deviation of \(P_f^r\). Based on Eqs. (11) and (14), the estimated \(E\left[ {{\mathop{P}\limits^{\frown}}_f^r } \right]\) and \(V\left[ {{\mathop{P}\limits^{\frown}}_f^r } \right]\) can be calculated by:

where \(N_{IS}\) is the size of generated IS samples. Then the estimated COV of \(P_f^r\) can be calculated by:

\({\text{COV}}\left[ {{\mathop{P}\limits^{\frown}}_f^r } \right]\) should be small enough to ensure the accuracy. Then the COV-based stopping criterion could be defined as: \({\text{COV}}\left[ {{\mathop{P}\limits^{\frown}}_f^r } \right] < \lambda_{thr}\). \(\lambda_{thr}\) is a small constant defining the allowable \({\text{COV}}\left[ {{\mathop{P}\limits^{\frown}}_f^r } \right]\).

The second is the existing error-based stopping criterion. This criterion was first proposed by Wang [33] for AK-MCS. In order to make it more suitable for Meta-AK-IS, this paper adopts the improved error-based stopping criterion proposed by Yun [44], considering the effect of \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\). For a given IS sample, the probability that the predicted sign of \(g_K \left( {{\varvec{x}}} \right)\) is wrong is written as:

where \(I_F^w = 1\) means the event that the predicted sign is wrong. Based on Eq. (19), \(I_F^w = 1\) is also a random variable with a mean \(E\left[ {I_F^w = 1} \right]\) and a variance \(V\left[ {I_F^w = 1} \right]\):

As suggested by Ref. [40], the size of samples \({\mathop{S}\limits^{\frown}}_s\) predicted by the Kriging model that fail but actually reliable follows a normal distribution with a mean \(\, \mu_{{\mathop{S}\limits^{\frown}}_s }\) and a standard deviation \(\sigma_{{\mathop{S}\limits^{\frown}}_s }\), that is:

where \({\mathop{N}\limits^{\frown}}_s\) is the size of predicted reliable samples. Reference [33] suggested that the size of samples \({\mathop{S}\limits^{\frown}}_f\) predicted by the Kriging model that are reliable but actually fail can be approximately represented by a Poisson distribution. As the size of failed samples generated by the Meta-AK-IS is significantly more than that by the MC method, \({\mathop{S}\limits^{\frown}}_f\) is subjected to a normal distribution in this paper with a mean \(\mu_{{\mathop{S}\limits^{\frown}}_f }\) and a standard deviation \(\sigma_{{\mathop{S}\limits^{\frown}}_f }\), that is:

where \({\mathop{N}\limits^{\frown}}_f\) is the size of predicted failed samples.

To ensure accuracy of \(g_K \left( {{\varvec{x}}} \right)\), the difference between \({\mathop{N}\limits^{\frown}}_f\) and \(N_f\), where \(N_f\) is the size of samples, which actually fail, should be small enough. The maximum relative error \(MRE\) should be less than a small constant \(\lambda_{thr}\), that is:

where \({\mathop{S}\limits^{\frown}}_f^u\) and \({\mathop{S}\limits^{\frown}}_s^u\) are the upper bounds of \({\mathop{S}\limits^{\frown}}_f\) and \({\mathop{S}\limits^{\frown}}_s\) respectively, which are calculated by [46]:

where \(\delta\) is a constant reflecting the confidence interval of \({\mathop{S}\limits^{\frown}}_f\) and \({\mathop{S}\limits^{\frown}}_s\). If \(\delta = 3\), the likelihood of \({\mathop{S}\limits^{\frown}}_f\) and \({\mathop{S}\limits^{\frown}}_s\) located in the region \(\left[ {\mu_{{\mathop{S}\limits^{\frown}}_f } - \delta \sigma_{{\mathop{S}\limits^{\frown}}_f } ,\mu_{{\mathop{S}\limits^{\frown}}_f } + \delta \sigma_{{\mathop{S}\limits^{\frown}}_f } } \right]\) and \(\left[ {\mu_{{\mathop{S}\limits^{\frown}}_s } - \delta \sigma_{{\mathop{S}\limits^{\frown}}_s } ,\mu_{{\mathop{S}\limits^{\frown}}_s } + \delta \sigma_{{\mathop{S}\limits^{\frown}}_s } } \right]\) will both reach 99.73%. When \(\lambda_{thr}\) is small enough, the Kriging model has sufficient accuracy. Therefore, the stopping criterion is defined as \(MRE < \lambda_{thr}\).

4 Silhouette plot method

In the Meta-IS-AK method, the k-means clustering algorithm is adopted to cluster the important samples and calculate \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\). To overcome the shortcoming of assuming \(K\) artificially, the silhouette plot method is introduced. Based on the silhouette value, the silhouette plot can determine whether the cluster of each sample is reasonable, which is defined as:

where \(S\left( i \right)\) is the silhouette value of the ith point. \(a\) is the average distance between the ith point and other points in the same cluster. \(b\) is a vector and its elements represent the average distance between the ith point and other points in different clusters. The larger \(S\left( i \right)\) means the more reasonable cluster. In this paper, the mean silhouette value is used to determine \(K\). The clusters correspond to the largest \(\frac{1}{m}\sum\nolimits_{i = 1}^m {S\left( i \right)}\) are selected, and the corresponding cluster centers could be added to the training set.

It is noted that the silhouette plot is used after obtaining cluster centers. Once the important sample set and \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\) are obtained, the optimal clusters should be determined. It is noted that \(K\) should be repeatedly calculated until the condition \({\mathop{\alpha }\limits^{\frown}}_{corrLOO} \in \left[ {0.1,10} \right] \cap m > 30\) is satisfied. Therefore, in the proposed method, \(K\) is continuously changing with Kriging updates. The recommended number of clusters in this article is \(\left[ {m_f ,\max \left( {2m_f ,n} \right)} \right]\), where \(m_f\) represents the number of failure modes and \(n\) is the number of input variables. The feasibility of this strategy will be verified through numerical examples.

5 Summarized of the proposed method

Based on the previous sections, this paper proposes an improved Meta-IS-AK method for reliability analysis with the proposed variance-based learning function and COV-based stopping criterion, which is called the Meta-IS-VL method. The specific steps are summarized as follows:

-

Step 1: Transform the random variables into standard normal space and establish the initial Kriging model. As suggested by Zhang [49], the samples with the population \(N_{ini} = \max \left( {12,n} \right)\) are generated by the Sobol sequence as the initial training set in the interval [-5,5]. Calculate the real values of the performance function of these samples and the initial Kriging model is established.

-

Step 2: Generate IS samples and update the Kriging model. Based on Eq. (1), \(N_{corr}\) IS samples under the current \(g_K \left( {{\varvec{x}}} \right)\) can be generated through the MCMC method.

-

Step 3: Based on the k-means algorithm, the candidate centroids are obtained. Calculate \(S\left( i \right)\) of all IS samples. The \(K\) cluster centers correspond to the largest \(\frac{1}{m}\sum_{i = 1}^m {S\left( i \right)}\) are added to the training set to update the Kriging model.

-

Step 4: Calculate \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\) and judge the convergence criterion. If \({\mathop{\alpha }\limits^{\frown}}_{corrLOO} \in \left[ {0.1,10} \right] \cap m > 30\), output \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) and turn to Step 4. Otherwise, return to Step 2.

-

Step 5: Generate \(N_\varepsilon\) samples based on \(f_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\). Calculate \({\mathop{P}\limits^{\frown}}_{f\varepsilon }\) nd \({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_{f\varepsilon } } \right)\) based on the first-stage Kriging model. If \({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_{f\varepsilon } } \right) < \lambda_{f\varepsilon }\), output \({\mathop{P}\limits^{\frown}}_{f\varepsilon }\) and \({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_{f\varepsilon } } \right)\). Otherwise, expand the size of \(N_\varepsilon\) and repeat Step 5.

-

Step 6: Update the Kriging model. Based on the previous steps, the first-stage Kriging model and the IS samples are obtained. Based on the proposed variance-based learning function, the Kriging model is updated through the selected optimal training samples.

-

Step 7: Judge the stopping criterion. If the stopping criterion defined by Eq. (18) or Eq. (23) is satisfied, output the second-stage Kriging model and turn to Step 7. Otherwise, return to Step 5 and re-update the Kriging model.

-

Step 8: Calculate \({\mathop{\alpha }\limits^{\frown}}_{corr}\) based on the second-stage Kriging model. If \({\text{Cov}}\left( {{\mathop{\alpha }\limits^{\frown}}_{corr} } \right) < \lambda_{corr}\), output \({\mathop{\alpha }\limits^{\frown}}_{corr}\) and turn to Step 8. Otherwise, return to Step 2 and expand the size of \(N_{corr}\).

-

Step 9: Calculate \({\mathop{P}\limits^{\frown}}_f\) using Eq. (8).

In Step 5 and Step 8, \(\lambda_{f\varepsilon }\) and \(\lambda_{corr}\) are both small constants that define the allowable COV. In this paper, \(\lambda_{corr} = 2\%\) and \(\lambda_{f\varepsilon } = 4\%\) are suggested. The reasons are listed as follows: First, it can guarantee that \({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_{f\varepsilon } } \right) < 5\%\), and 5% is usually defined as the maximum allowable COV of failure probability. Second, \({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_{f\varepsilon } } \right)\) is derived from the random samples generated by \(f_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\). For the problems with a small failure probability, the size of \(N_\varepsilon\) is much larger than that of \(N_{corr}\). Although it doesn’t require additional function calls, it still requires a large data storage space. T reduce the required computer memory, the allowable value of \({\text{Cov}}\left( {{\mathop{P}\limits^{\frown}}_{f\varepsilon } } \right)\) could be larger than \({\text{Cov}}\left( {{\mathop{\alpha }\limits^{\frown}}_{corr} } \right)\).

The proposed method is the further development of Ref. [53]. The following improvements are made: (1) The k-means algorithm combined with a silhouette plot are adopted to calculate \({\mathop{\alpha }\limits^{\frown}}_{corrLOO}\) in the first-stage Kriging model construction. Compared with the single k-means, the proposed strategy can judge the optimal number of centroids without a single artificial assumption, which has stronger data robustness. (2) A novel learning function considering the characteristics of \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\) is proposed to update the Kriging model in the second stage. Compared with the U function, the proposed learning function considers the characteristics of the IS density function, which is more suitable for the Meta-IS-AK. (3) The COV-based stopping criterion is introduced considering the distribution characteristics of \(h_{{\varvec{X}}} \left( {{\varvec{x}}} \right)\), which can reduce the number of training samples and improve the efficiency of active learning in the second stage of Kriging model construction.

6 Numerical examples

In this section, four numerical examples are used to illustrate the performance of the proposed Meta-IS-VL method. The MC method is used as the benchmark, and several existing methods are used for comparative calculation. The error- and the proposed COV-based stopping criteria are both adopted to compare the performance, which are called Meta-IS-VL-ESC and Meta-IS-VL-COV, respectively. Each method is repetitively calculated 10 times, and the mean values are taken as the final results. Considering that \(\lambda_{thr}\) will affect convergence and accuracy, \(\lambda_{thr} = 0.01,0.05,{\text{ and }}0.1\) are used, respectively, to obtain the optimal \(\lambda_{thr}\). In this work, the efficiency of each method is measured by the number of required function calls. The robustness will be evaluated through the width of the failure probability and the number of evaluated performance functions.

6.1 A classical series system

This section studies a classical series system [53], as shown in Eq. (26). \(x_1\) and \(x_2\) are both standard independent normal variables. This example is used to elaborate on the intermediate calculation process of the method.

The results of different methods are listed in Table 2. It can be seen that for the proposed Meta-IS-VL, with the increase of \(\lambda_{thr}\), the number of required function calls will decrease, but the relative error will increase. When \(\lambda_{thr} = 0.1\), both the relative errors of error- and COV-based stopping criteria are greater than 2%. Therefore, the suitable \(\lambda_{thr}\) for the proposed Meta-IS-VL should be smaller than 0.05. The required function calls of Meta-IS-AK and Meta-AK-IS2 are 88.2 and 128.6, respectively. The required function calls of Meta-IS-VL-ESC and Meta-IS-VL-COV are 51.4 and 72.3, respectively, and the relative errors are 1.64% and 1.48%, respectively. The results show that the proposed method can significantly improve efficiency without losing accuracy. In addition, the size of the mean required function calls of the Meta-IS-VL-ESC is smaller than that of Meta-IS-VL-COV. From the perspective of mean value, the efficiency of the COV-based stopping criterion is lower than the error-based stopping criterion. The boxplots of failure probability and required function calls of the proposed Meta-IS-VL are shown in Fig. 1. It can be seen that the range of failure probability calculated by the Meta-IS-VL-ESC is between 2.1e−3 and 2.4e−3, while the range of Meta-IS-VL-COV is between 2.18e−3 and 2.3e−3. The results show that the robustness of Meta-IS-VL-COV is higher than that of Meta-IS-VL-ESC. Table 3 shows the calculated parameters of the proposed Meta-IS-VL when \(\lambda_{thr} = 0.01\).

When the Kriging model finishes updating in the first stage, that is, the condition \({\mathop{\alpha }\limits^{\frown}}_{corrLOO} \in \left[ {0.1,10} \right] \cap m > 30\) is satisfied and \(N_{corr}\) important samples are generated, the results of the silhouette plots under different \(K\) are shown in Fig. 2. The mean \(S\left( i \right)\) is listed in Table 4. When \(K > 4\), \(S\left( i \right)\) has negative values. When \(K = 4\), the mean \(S\left( i \right)\) is the largest. Therefore, the optimal \(K\) is 4. Therefore, the optimal number of clusters can be determined with the help of a silhouette plot, thus effectively solving the problem of only assuming \(K\) arbitrarily in the Meta-IS-AK.

Figure 3 illustrates the fitting accuracy of the limit state boundary (LSB) and the distribution of samples. It can be seen that when the condition \({\mathop{\alpha }\limits^{\frown}}_{corrLOO} \in \left[ {0.1,10} \right] \cap m > 30\) is satisfied, the accuracy of the fitted LSB is still quite low. When the Kriging updating by learning function is finished, the fitting accuracy of Meta-IS-VL-COV is higher than that of Meta-IS-VL-ESC, as Meta-IS-VL-ESC fails to identify a corner area. From the boxplots of failure probability, it can be seen that although the relative error of the mean failure probability is small, this phenomenon may lead to low robustness results. Figure 4 illustrates the iteration curves of the performance function value of added training samples and the convergence in the second stage construction process of the Kriging model. It can be seen that when the number of added training samples is greater than 30, the second-stage Kriging model starts to build through the learning function. Compared with the initial and the first-stage training samples, the performance function values of the second-stage training samples are much closer to 0. The results show that the proposed learning function can obtain the training samples with a large contribution to the failure probability.

In addition, the influence of the initial sample size on the results is studied. \(\lambda_{thr} = 0.01\) is used, as it corresponds to the highest accuracy and robustness. The results are shown in Table 5. It can be seen that when \(N_{ini} = 6\), the size of the function call is the smallest, while the relative error is the largest. When \(N_{ini} = 12,\;{\text{ or }}18\) the relative errors and the size of function calls have little difference. When \(N_{ini} = 24\) the relative error has a small difference from the relative error when \(N_{ini} = 12,\;{\text{ or }}18\). However, the size of function calls when \(N_{ini} = 24\) is much higher than that of \(N_{ini} = 12,\;{\text{ or }}18\). This conclusion is suitable for the two stopping criteria. Therefore, the adopted strategy of initial sample size in this example is reasonable.

6.2 An oscillator system

An oscillator system from Song [28] is used in this section, as shown in Fig. 5. The performance function is defined in Eq. (27), and the random variables are listed in Table 6. This example is used to verify the applicability of a nonlinear performance function.

The results are shown in Table 7. The boxplots of failure probability and function calls of the proposed method are shown in Fig. 6. It can be seen that the required function calls of Meta-IS-VL-ESC and Meta-IS-VL-COV when \(\lambda_{thr} = 0.01\) are 57.4 and 70.5, respectively, and the relative errors are 0.36% and 0.57%, respectively. From the perspective of mean value, the results show that the efficiency of the proposed COV-based stopping criterion is lower than that of error-based stopping criterion. However, from boxplots, it can be seen that the failure probability range of the error-based stopping criterion is wider than that of the COV-based stopping criterion. The results illustrate that the robustness of the proposed COV-based stopping criterion is higher than that of error-based stopping criterion. The required function call size of the Meta-IS-AK is 78.9. Compared with the traditional Meta-IS-AK, the proposed method can significantly improve efficiency without losing accuracy. In addition, it can be seen that when \(\lambda_{thr} = 0.{0}1\) the relative errors of the two stopping criteria are both smaller than 1%. When \(\lambda_{thr} = 0.05\) the relative errors are all greater than 3%. When \(\lambda_{thr} = 0.1\) the relative errors are larger than 8%, The results show that the suitable \(\lambda_{thr}\) in this example should be 0.01.

Table 8 shows the required parameters of the proposed Meta-IS-VL method through different stopping criteria. Figure 7 shows the performance function values of the training samples and the convergence in the second-stage Kriging model updating. It can be seen that the performance function values of the added training samples in the second-stage Kriging model updating are much closer to 0 compared with the initial samples. Therefore, the proposed variance-based learning function can effectively select the training samples around the LSB.

When the updating of the Kriging model in the first stage is finished, the mean \(S\left( i \right)\) are listed in Table 9. It can be seen that the mean \(S\left( i \right)\) decreased with the increase of \(K\). \(K = 2\) corresponds to the largest mean \(S\left( i \right)\). Therefore, the optimal \(K\) in this example is 2. The silhouette plots under different \(K\) are shown in Fig. 8.

The influence of the initial sample size when \(\lambda_{thr} = 0.01\) is studied, as shown in Table 10. It can be seen that when \(N_{ini} = 6\), the size of a function call is the smallest, while the relative error is the largest. When \(N_{ini} = 12,\;{\text{ or }}18\) the relative errors and the size of function calls have little difference. When \(N_{ini} = 24\) the relative error is the smallest. However, the size of function calls is also the highest. This conclusion is suitable for the two stopping criteria. Therefore, the adopted initial sample size strategy is suitable in this example.

6.3 A cantilever beam-bar system

A cantilever beam-bar system from Yun [45] is studied in this section, as shown in Fig. 9. The performance function is defined as:

where \(L = 5\).\(M\),\(T\), \(X\) are normal variables with means \(\mu_M = 1000,\mu_T = 110,\mu_X = 150\) and standard deviations \(\sigma_M = 300,\sigma_T = 20,\sigma_X = 30\), respectively. This example is used to evaluate the applicability of the proposed method in a series–parallel hybrid system. The results are listed in Table 11. It can be seen that the relative errors of Meta-IS-VL-ESC and Meta-IS-VL-COV are 1.29% and 1.27%, respectively when \(\lambda_{thr} = 0.01\), and the required function calls are 125.8 and 127.4, respectively. The results show that the proposed method has high accuracy, and the efficiency of the two stopping criteria is basically the same. The number of required function calls of Meta-IS-AK is 177.3, which is much larger than that of the proposed method. This illustrates that the traditional U learning function and its stopping criterion will lead to many redundant training samples. The required function calls of Meta-AK-IS2 is 169.2, and the efficiency is much lower than that of the proposed method. In addition, it can be seen that when \(\lambda_{thr} = 0.05\) the relative errors of the Meta-IS-VL under the two stopping criteria are all greater than 3%. When \(\lambda_{thr} = 0.1\) the relative errors are larger than 10%, The results show that the suitable \(\lambda_{thr}\) in this example should be 0.01.

The boxplots of failure probability and the number of required function calls are shown in Fig. 10. It can be seen that the ranges of failure probability for the two stopping criteria have little difference. The mean required function calls size of the error-based stopping criterion is smaller than that of the COV-based stopping criterion. However, the range widths of COV and ESC stopping criterions are 48 and 95, respectively. Therefore, the robustness of the proposed COV-based stopping criterion is higher than that of the error-based stopping criterion. Table 12 lists the calculated parameters of the proposed Meta-IS-VL method under different stopping criteria. Figure 11 shows the performance function value of the training samples and the convergence performance in the second stage Kriging model.

This example has four basic failure modes, which means that the candidate \(K\) is between 4 and 8. The results of silhouette plots are shown in Fig. 12, and the mean \(S\left( i \right)\) is listed in Table 13. It can be seen that \(K = 7\) corresponds to the largest mean \(S\left( i \right)\). Therefore, the optimal \(K\) in example 1 is 7.

The results of different initial sample size when \(\lambda_{thr} = 0.01\) are shown in Table 14. It can be seen that when \(N_{ini} = 6\), the size of the function call is the smallest, while the relative error is the largest. When \(N_{ini} = 12,\;{\text{ or }}18\) the relative errors and the size of function calls have little difference. When \(N_{ini} = 24\) the relative error has a small difference from the relative error when \(N_{ini} = 12,\;{\text{ or }}18\). However, the size of function calls when \(N_{ini} = 24\) is higher than that for \(N_{ini} = 12,{\text{ or }}18\). This conclusion is suitable for the two stopping criteria. Therefore, the applicability of the initial sample size strategy is verified in this example.

6.4 Latch lock mechanism of hatch

The latch lock mechanism from Ling [15] is studied in this section. The structure is shown in Fig. 13, and information about random variables is demonstrated in Table 15. As reported in Ling [15], the result of MC is 2.1880e−7. This example is used to verify the performance of the proposed method for the problem with a small failure probability.

All variables are subjected to an independent normal distribution, and the performance function is defined as:

The results are listed in Table 16. The relative errors of Meta-IS-AK and Meta-AK-IS2 are 1.77% and 3.45%, respectively. When \(\lambda_{thr} = 0.01,0.05\) the relative errors of Meta-IS-VL-COV and Meta-IS-VL-ESC are all lower than 3%. When \(\lambda_{thr} = 0.1\) the relative errors are greater than 5%. The results show that the suitable \(\lambda_{thr}\) for the proposed method should be smaller than 0.05. The accuracy of Meta-IS-VL-ESC, Meta-IS-VL-COV, and Meta-IS-AK is basically the same. However, the required function calls of Meta-IS-VL-ESC and Meta-IS-VL-COV when \(\lambda_{thr} = 0.01\) are only 40.4 and 39.4, respectively. The efficiency of the proposed method is much higher than that for Meta-IS-AK and Meta-AK-IS2. In addition, the efficiency of the two stopping criteria is basically the same. This example illustrates that for small failure probability problems, the proposed method can further improve the efficiency of Meta-IS-AK without losing accuracy. The boxplots of failure probability and required function call size are shown in Fig. 14. It can be seen that the range of failure probability calculated by the COV-based stopping criterion is narrower than that by the error-based stopping criterion. The required parameters calculated by Meta-IS-VL are listed in Table 17.

The results of the mean \(S\left( i \right)\), when the updating of the Kriging model in the first stage is finished, are listed in Table 18, and the silhouette plots are shown in Fig. 15. The maximum mean silhouette value corresponds to \(K = 3\). The calculation parameters of Meta-IS-VL-ESC and Meta-IS-VL-COV are shown in Table 19, and the performance function values of the training samples and the convergence performance are shown in Fig. 16.

The results corresponding to different initial sample sizes are shown in Table 19. When \(N_{ini} = 6\) the relative error is the largest. When \(N_{ini} = 6\) the size of the required function call is the largest. The numbers of required functions call when \(N_{ini} = {18}\) and \(N_{ini} = 12\) have little difference, and the relative errors are all lower than 2%. When \(N_{ini} = {24}\), the number of required function calls is much higher than \(N_{ini} = {18}\) and \(N_{ini} = 12\). However, the relative errors in the three cases show little difference.

6.5 A high dimensional problem

This section studies a classical high-dimensional problem [30], as shown in Eq. (30).

where \(x_i \sim N\left( {0,\sigma } \right)\). This example is used to study the applicability of a high-dimensional problem. Different \(n\) are studied, including 10, 20, and 30. The results are shown in Tables 20, 21 and 22. It can be seen that when \(n = 10\), Meta-IS-VL still has high accuracy under \(\lambda_{thr} = 0.01\), as the relative errors are all lower than 2%. The sizes of function call in Meta-IS-VL-COV and Meta-IS-VL-ESC are both lower than those in Meta-IS-AK, which means that the efficiency is also higher. In addition, with the increase \(\lambda_{thr}\), the size of required function calls will decrease, while the relative error will decrease. The above conclusions are the same as the previous examples. However, when \(n = {2}0\), the relative errors of the method listed in Table 6 are larger than 10%. When \(n = {3}0\) it completely loses the computing function. The results show that the proposed method may not be suitable for high-dimensional problems.

7 Conclusions

An improved Meta-AK-IS method is proposed to further improve efficiency, which is called the Meta-IS-VL method. First, the k-means cluster algorithm in IS density function construction is improved by the silhouette plot to solve the problem of only assuming clusters arbitrarily. Second, a novel learning function is proposed considering the distribution characteristics of the IS density function; thus, it can fully reflect the impact of the constructed optimal IS function. The COV information is adopted to define a novel stopping criterion for active learning, and the traditional error-based stopping criterion is also adopted to validate the performance. The conclusions are summarized as follows:

-

(1)

From the iteration curves, it can be seen that the performance function values of the added samples in the second stage of Kriging model updating are much closer to 0 compared with the initial samples. This means that the proposed learning function can effectively select the optimal training samples around the LSB.

-

(2)

The influence of the convergence criterion and the size of initial samples on failure probability are studied. The results show that the suitable \(\lambda_{thr}\) should be smaller than 0.05, and the adopted initial sample size strategy is suitable for problems with variable dimensions less than 10.

-

(3)

In examples 1–4, the mean required function calls of Meta-IS-VL-ESC are 51.4, 57.4, 125.8, and 40.4, respectively, and the required function calls of Meta-IS-VL-COV are 72.3, 70.2, 127.4, and 39.4, respectively. The relative errors of the proposed method under the two stopping criteria are all lower than 2%. Compared with the traditional Meta-IS-AK, the proposed Meta-IS-VL can significantly improve efficiency without losing accuracy.

-

(4)

Analysis of the mean required function calls indicates that the efficiency of the proposed COV-based stopping criterion is comparatively lower than the existing error-based stopping criterion. The boxplot representations across examples 1–4 reveal a wider range of failure probability estimations obtained through the error-based criterion in contrast to the COV-based criterion. Additionally, within examples 2–3, the error-based criterion demonstrates a larger range in the size of function calls. Hence, the proposed stopping criterion exhibits higher robustness.

-

(5)

While effective for various scenarios, the proposed method exhibits limitations in handling high-dimensional problems. As demonstrated in example 5, the method showcases commendable accuracy in estimating failure probabilities even with 10 variables. However, as the number of variables exceeds 20, a notable increase in relative error becomes apparent. Consequently, the method's optimal utility is primarily observed in scenarios where variable dimensions are below 10.

Data availability

The supporting codes and data are available from the corresponding author through reasonable request.

References

Cadini F, Santos F, Zio E (2014) An improved adaptive Kriging-based importance technique for sampling multiple failure regions of low probability. Reliab Eng Syst Saf 131:109–117

Chen J, Chen Z, Xu Y, Li H (2021) Efficient reliability analysis combining kriging and subset simulation with two-stage convergence criterion. Reliab Eng Syst Saf 214:107737

Chojaczyk AA, Teixeira AP, Neves LC, Carsoso JB, Soares CG (2015) Review and application of Artificial Neural Networks models in reliability analysis of steel structures. Struct Saf 52:78–89

Dang C, Wei P, Song J, Beer M (2021) Estimation of failure probability function under imprecise probabilities by active learning-augmented probabilistic integration. ASCE-ASME J Risk Uncertain Eng Syst Part A Civ Eng. 7(4):04021054

Dubourg V, Sudret B, Deheeger F (2013) Metamodel-based importance sampling for structural reliability analysis. Probab Eng Mech 33:47–57

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining kriging and Monte Carlo simulation. Struct Saf 33:145–154

Echard B, Gayton N, Lemaire M (2013) A combined importance sampling and kriging reliability method for small failure probabilities with time-demanding numerical models. Reliab Eng Syst Saf 111:232–240

Gaspar B, Teixeira AP, Guedes C (2017) Adaptive surrogate model with active refinement combining Kriging and a trust region method. Reliab Eng Syst Saf 165:277–291

Hong L, Li H, Peng K (2021) A combined radial basis function and adaptive sequential sampling method for structural reliability analysis. Appl Math Model 90:375–393

Jiang C, Qiu H, Gao L, Wang D, Yang Z, Li C (2021) EEK-SYS: System reliability analysis through estimation error-guided adaptive Kriging approximation of multiple limit state surfaces. Reliab Eng Syst Saf 206:107285

Jiang C, Qiu H, Zan Y, Chen L, Gao L, Li P (2019) A general failure-pursuing sampling framework for surrogate-based reliability analysis. Reliab Eng Syst Saf 183:47–59

Jia DW, Wu ZY (2023) Reliability and global sensitivity analysis based on importance directional sampling and adaptive Kriging model. Struct Multidiscip Optim 66:139

Lelièvre N, Beaurepaire P, Mattrand C, Gayton N (2018) AK-MCSi: A Kriging-based method to deal with small failure probabilities and time-consuming models. Struct Saf 73:1–11

Li G, Chen Z, Yang Z, He J (2022) Novel learning functions design based on the probability of improvement criterion and normalization techniques. Appl Math Model 108:376–391

Ling C, Lu Z (2021) Support vector machine-based importance sampling for rare event estimation. Struct Multidiscip Optim 63:1609–1631

Malakzadeh K, Daei M (2020) Hybrid FORM-Sampling simulation method for finding design point and importance vector in structural reliability. Appl Soft Comput 92:106313

MiarNaeimi F, Azizyan G, Rashki M (2019) Reliability sensitivity analysis method based on subset simulation hybrid techniques. Appl Math Model 75:607–626

Moustapha M, Marelli S, Sudret B (2022) Active learning for structural reliability: survey, general framework and benchmark. Struct Saf 96:102174

Pan QJ, Leung YF, Hsu SC (2021) Stochastic seismic slope stability assessment using polynomial chaos expansions combined with relevance vector machine. Geosci Front 21:405–411

Pan Q, Dias D (2017) An efficient reliability method combining adaptive support vector machine and Monte Carlo Simulation. Struct Saf 67:85–95

Papaioannou I, Straub D (2021) Combination line sampling for structural reliability analysis. Struct Saf 88:102025

Rachedi M, Matallah M, Kotronis P (2021) Seismic behavior & risk assessment of an existing bridge considering soil-structure interaction using artificial neural network. Eng Struct 232:111800

Ren Y, Bai G (2011) New neural network response surface methods for reliability analysis. Chinese J Aeronaut 24:25–31

Roy A, Chakraborty S (2020) Support vector regression based metamodel by sequential adaptive sampling for reliability analysis. Reliab Eng Syst Saf 200:106948

Shi Y, Lu Z, He R, Zhou Y, Chen S (2020) A novel learning function based on Kriging for reliability analysis. Reliab Eng Syst Saf 198:106857

Shi L, Sun B, Ibrahim DS (2019) An active learning reliability method with multiple kernel functions based on radial basis function. Struct Multidiscip Optim 60:211–229

Song K, Zhang Y, Shen L, Zhao Q, Song B (2021) A failure boundary exploration and exploitation framework combining adaptive Kriging model and sample space partitioning strategy for efficient reliability analysis. Reliab Eng Syst Saf 216:108009

Song J, Wei P, Valdebenito M, Beer M (2021) Active learning line sampling for rare event analysis. Mech Syst Signal Pr 147:107113

Sun Z, Wang J, Li R, Tong C (2017) LIF: A new Kriging based learning function and its application to structural reliability analysis. Reliab Eng Syst Saf 157:152–165

Teixeira R, Nogal M, O’Connor A (2021) Adaptive approaches in metamodel-based reliability analysis: a review. Struct Saf 89:102019

Thedy J, Liao KW (2021) Multisphere-based importance sampling for structural reliability analysis. Struct Saf 91:102099

Wang D, Qiu H, Gao L, Jiang C (2021) A single-loop Kriging coupled with subset simulation for time-dependent reliability analysis. Reliab Eng Syst Saf 216:107931

Wang Z, Shafieezadeh A (2019) ESC: an efficient error-based stopping criterion for kriging-based reliability analysis method. Struct Multidiscip Optim 59:1621–1637

Wen Z, Pei H, Liu H, Yue Z (2016) A Sequential Kriging reliability analysis method with characteristics of adaptive sampling regions and parallelizability. Reliab Eng Syst Saf 153:170–179

Xiao NC, Yuan K, Zhou CN (2020) Adaptive kriging-based efficient reliability method for structural systems with multiple failure modes and mixed variables. Comput Method Appl M 359:112649

Xiao NC, Zuo MJ, Guo W (2018) Efficient reliability analysis based on adaptive sequential sampling design and cross-validation. Appl Math Model 58:404–420

Xiao S, Oladyshkin S, Nowak W (2020) Reliability analysis with stratified importance sampling based on adaptive Kriging. Reliab Eng Syst Saf 197:106852

Yang X, Cheng X, Liu Z, Wang T (2021) A novel active learning method for profust reliability analysis based on the Kriging model. Eng Comput-Germany 2021:1–14

Yang X, Liu Y, Mi C, Tang C (2018) System reliability analysis through active learning Kriging model with truncated candidate region. Reliab Eng Syst Saf 169:235–241

Yang X, Mi C, Deng D, Liu Y (2019) A system reliability analysis method combining active learning Kriging model with adaptive size of candidate points. Struct Multidiscip Optim 60:137–150

Yang X, Liu Y, Gao Y, Zhang Y, Gao Z (2015) An active learning kriging model for hybrid reliability analysis with both random and interval variables. Struct Multidiscip Optim 51:1003–1016

Yang X, Cheng X (2020) Active learning method combining Kriging model and multimodal-optimization-based importance sampling for the estimation of small failure probability. Int J Numer Methods Eng 121:4843–4864

Yao W, Tang G, Wang N, Chen X (2019) An improved reliability analysis approach based on combined FORM and Beta-spherical importance sampling in critical region. Struct Multidiscip Optim 60:35–58

Yun W, Lu Z, Wang L, Feng K, He P, Dai Y (2021) Error-based stopping criterion for the combined adaptive Kriging and importance sampling method for reliability analysis. Probab Eng Mech 65:103131

Yun W, Lu Z, Jiang X (2018) An efficient reliability analysis method combining adaptive Kriging and modified importance sampling for small failure probability. Struct Multidiscip Optim 58:1383–1393

Zhang X, Lu Z, Cheng K (2021) AK-DS: an adaptive Kriging-based directional sampling method for reliability analysis. Mech Syst Signal Process 156:107610

Zhang X, Lu Z, Cheng K (2022) Cross-entropy-based directional importance sampling with von Mises-Fisher mixture model for reliability analysis. Reliab Eng Syst Saf 220:108306

Zhang X, Wang L, Sørensen JD (2020) AKOIS: An adaptive Kriging oriented importance sampling method for structural system reliability analysis. Struct Saf 82:101876

Zhang X, Wang L, Sørensen JD (2019) REIF: a novel active-learning function toward adaptive Kriging surrogate models for structural reliability analysis. Reliab Eng Syst Saf 185:440–454

Zhao H, Yue Z, Liu Y, Zhang Y (2015) An efficient reliability method combining adaptive importance sampling and Kriging metamodel. Appl Math Model 39:1853–1866

Zhao J, Chen J, Xu L (2019) RBF-GA: an adaptive radial basis function metamodeling with generic algorithm for structural reliability analysis. Reliab Eng Syst Saf 60:211–229

Zhou C, Xiao NC, Zuo MJ, Gao W (2022) An improved Kriging-based approach for system reliability analysis with multiple failure modes. Eng Comput 38:1813–1833

Zhu X, Zhou Z, Yun W (2020) An efficient method for estimating failure probability of the structure with multiple implicit failure domains by combining Meta-IS with IS-AK. Reliab Eng Syst Saf 193:106644

Funding

This study is supported by the National Natural Science Foundation of China under Grant 51278420.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

We declare that we have no financial and personal conflict of interest with other people or organizations.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jia, DW., Wu, ZY. An improved adaptive Kriging model-based metamodel importance sampling reliability analysis method. Engineering with Computers (2024). https://doi.org/10.1007/s00366-023-01941-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00366-023-01941-5