Abstract

This work presents a novel adaptable framework for multi-objective optimization (MOO) in metal additive manufacturing (AM). The framework offers significant advantages by departing from the traditional design of experiments (DoE) and embracing surrogate-based optimization techniques for enhanced efficiency. It accommodates a wide range of process variables such as laser power, scan speed, hatch distance, and optimization objectives like porosity and surface roughness (SR), leveraging Bayesian optimization for continuous improvement. High-fidelity surrogate models are ensured through the implementation of space-filling design and Gaussian process regression. Sensitivity analysis (SA) is employed to quantify the influence of input parameters, while an evolutionary algorithm drives the MOO process. The efficacy of the framework is demonstrated by applying it to optimize SR and porosity in a case study, achieving a significant reduction in SR and porosity levels using data from existing literature. The Gaussian process model achieves a commendable cross-validation \({R}^{2}\) score of 0.79, indicating a strong correlation between the predicted and actual values with minimal relative mean errors. Furthermore, the SA highlights the dominant role of hatch spacing in SR prediction and the balanced contribution of laser speed and power on porosity control. This adaptable framework offers significant potential to surpass existing optimization approaches by enabling a more comprehensive optimization, contributing to notable advancements in AM technology.

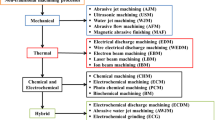

1 Introduction

Additive manufacturing (AM) technologies have undergone remarkable advancements over the past two decades, transitioning from their initial application in rapid prototyping to the production of functional end-use parts. These technologies have shown promising potential across various sectors, including aerospace, automotive, biomedical, and energy applications [1,2,3]. Forecasts indicate that these technologies are poised to evolve into a multi-billion dollar industry within the next decade [4]. These advancements have paved the way for the direct fabrication of end-use components employing a diverse array of metallic alloys, such as stainless steel, titanium alloys, and nickel-based superalloys [3, 5]. Selective laser melting (SLM) is one of the most widely used AM techniques for producing metallic components. SLM can produce intricate, functional parts with outstanding printing resolution, high density, and superior surface finish. Moreover, SLM significantly reduces the need for extensive post-processing, distinguishing it from alternative techniques, such as binder jetting [6].

At its core, SLM employs a high-energy laser beam to selectively fuse fine metallic powder particles, building functional parts layer by layer. The intricacy of this process is rooted in complex physical phenomena that occur at each stage. These include rapid melting, evaporation, solidification, recoil, and reheating arising from the interaction between the laser beam and the material within a single layer or across successive layers [7, 8]. The interplay of high thermal gradients and rapid cooling rates inherent in these phenomena can give rise to defects such as porosity and microcracks in the final manufactured parts [9]. These defects, in turn, degrade the mechanical properties of fabricated components [9, 10]. In addition, the current understanding of the fundamental physical phenomena remains limited, impeding our ability to anticipate the microstructure and properties of the resultant parts, which is a prerequisite for ensuring that they meet the prescribed design specifications.

Optimizing process parameters for metal selective laser melting (SLM) is challenging due to the complex physics involved and the large parameter space [8]. Conventional trial and error approaches and single-factor-at-a-time optimization strategies are insufficient and inefficient [3, 5, 11, 12]. More advanced multivariate techniques, such as the design of experiments (DoE), are required [13,14,15,16,17,18,19]. DoE has been used to optimize a variety of properties in AM, including porosity, surface roughness, fatigue life, and melt pool dimensions [20]. However, classical DoE methods have limited flexibility and efficiency, and they rely on statistical assumptions that may not always be guaranteed [21, 22]. Additionally, it is difficult to adapt classical DoE to multi-objective optimization, which is the case for AM [23].

Given the inherently multivariate and multi-objective nature of AM process optimization, many researchers have found an attractive solution in surrogate modeling [24, 25]. Surrogate models (or data-driven models) are approximate mathematical models that mimic the real system response within the experimental parameter space. They are constructed using a data-driven, bottom-up approach, even when the underlying physics of the AM process is not fully described. Many studies have used surrogate models in AM of metals. For example, Tapia et al. [24] used a Gaussian process (GP)-based surrogate model of the L-PBF process that predicts the melt pool depth in single-track experiments as a function of laser power, scan speed, and laser beam size. Similarly, Meng and Zhang [26] combined experimental and simulated data to predict remelted depths using GP. Surrogate models have also been used to perform MOO of AM. Li et al. [27] proposed a hybrid multi-objective optimization approach that combines an ensemble of metamodels (EM) with the non-dominated sorting genetic algorithm II (NSGA-II) to generate optimal process parameters that improve the energy consumption, tensile strength, and surface roughness of produced parts. Instead of EM, Meng et al. [28] used the response surface methodology in combination with NSGA-II to optimize the structure of a multilayer bio-inspired sandwich of Ti6Al4V. Padhye and Deb [26] carried out an MOO by considering the minimization of surface roughness and build time in the selective laser sintering (SLS) process using evolutionary algorithms, mainly NSGA-II and multi-objective particle swarm optimizers (MOPSO).
More recently, Chaudhry and Soulaimani [29] compared the performance of three optimization techniques, genetic algorithms (GA), particle swarm optimization (PSO), and differential evolution (DE), to optimize residual strains using Sobol-sampled high-fidelity numerical simulations and deep neural networks (DNN) as a surrogate model, since DNNs were found to be much faster for prediction than the polynomial chaos expansion (PCE). One of the key criticisms facing surrogate models in AM is that they are essentially “black-box” models without any physical basis, which may result in MOO procedures that can be described as “blindfolded”, as the optimization is executed without knowledge of the relationship between the input and the output. This lack of interpretability makes it difficult to gain scientific insight into the underlying system or process being optimized, which in turn constrains the transferability of knowledge to other systems [30].

The optimization of additive manufacturing (AM) processes poses a formidable challenge due to their inherently multivariate and multi-objective nature [20, 27, 31]. Numerous process parameters interact in complex ways to influence multiple, often conflicting, quality characteristics [8, 20]. While surrogate modeling offers a computationally efficient alternative to direct optimization, traditional surrogate-based approaches often suffer from a lack of interpretability [30, 32, 33]. This “black box” nature obscures the underlying relationships between process inputs and outputs, hindering scientific understanding and limiting the transferability of optimization results [32]. Furthermore, challenges in designing efficient experiments for surrogate model construction and the lack of continuous improvement mechanisms within these frameworks highlight the need for novel paradigms. These paradigms should aim to optimize multiple facets of the AM process while providing deeper insights into the complex process dynamics.

The present study presents a novel surrogate model-based MOO framework for AM that addresses three critical challenges that have not been discussed in the literature [26,27,28,29]: inefficient experimental design, lack of model interpretability, and rigid optimization frameworks. The proposed framework addresses these challenges by (1) using a space-filling design for data collection that efficiently samples the design space and avoids leaving gaps or undersampling critical regions; (2) using explainable machine learning techniques that make the surrogate models more interpretable and transparent, thus allowing researchers and practitioners to gain deeper insights into the optimization process; and (3) implementing Bayesian optimization, which allows for continuous process optimization, enabling the framework to refine the estimation of the objective function and potentially uncover a better solution.

2 Modeling and optimization framework

This section presents the proposed optimization framework for improving AM processes. The framework can accommodate additional factors for a comprehensive evaluation of the design space, and it systematically covers the parameter space by using a space-filling design, which provides a foundation for reliable predictions and optimization. The probabilistic modeling approach provides robust predictions together with their uncertainties during the optimization process, and an acquisition function balances exploration and exploitation, making the optimization process more efficient and effective. The trade-offs between conflicting objectives are balanced by adopting a multi-objective paradigm, which ensures that the optimization process considers various criteria. In addition, the iterative nature of the process and the robust testing and validation enable learning from previous evaluations, adapting the model, and refining the optimization approach over successive steps.

Figure 1 summarizes the optimization framework, which starts by (Section 2.1) identifying the most critical processing variables and sampling data points \(X=\{{x}_{1},{x}_{2},\dots ,{x}_{d}\}\) based on the specified space; \({x}_{i}\) is a controllable processing parameter and \(d\) is the number of variables. The variable space should be based on previous work. It should also avoid, if known, parameter combinations, such as high scan speeds with low power, which promote defects [10, 34,35,36,37,38] (such as a lack of fusion, porosity, or balling) for the specified alloy. Space-filling designs should be used to ensure that the design space is thoroughly explored and that the effect of each factor is equally represented and accurately captured [39,40,41,42]. This set of techniques attempts to achieve good coverage of the variable space with a limited number of experiments by distributing the experimental points as uniformly as possible without being biased toward a particular region or variable [43]. In addition, this type of experimental design can easily be accommodated in the processing map by actively constraining the data points [44]. Consequently, the surrogate model can better generalize the constrained design space. However, classical experimental design can also be used in this framework.

The second step consists in manufacturing the samples at the given experimental design points and characterizing them. The dimensions and geometry of the pieces could be those of small test cubes if the goal is to optimize the process; however, genuine functional parts could also be used if the goal is to optimize the properties of the part. The characterization step could include many techniques and is set by the optimization objectives; for example, it is known that to improve the fatigue performance of AM parts, the key focus should be on minimizing crack initiation sites, mainly surface roughness and internal defects [12, 45]. In this case, therefore, both quantities of interest (QoI) must be added in the characterization step. It should be noted that the framework is sufficiently flexible to allow adding as many objectives and parameters as needed. In the case study, we used an example involving the optimization of the part density and surface roughness (\({R}_{a}\)); however, other properties could be added to the list of optimization objectives, depending on the application at hand.

After characterization (Section 2.2), each objective \(\{{Y}_{1},{Y}_{2},\dots ,{Y}_{m}\}\) is modeled using a Gaussian process (Kriging model). We assume that the true values differ from the predicted ones by additive noise that follows an independent, identical Gaussian distribution. If measurement uncertainties are available, they can easily be incorporated during training by using heteroscedastic GP regression instead [46]. This step produces one model per optimization objective, that is, \(m\) models in total. The trained models \(\{{f}_{1}, {f}_{2},\dots ,{f}_{m}\}\) are examined for generalizability using cross-validated metrics [47] and can be further inspected through train/test splits of the data points. These black-box models do not reveal the underlying functional relationship between the input and output. Although there are many techniques for interpreting the model, we opted for a variance-based global interpretation technique, known as sensitivity analysis (SA) (Section 2.3), since it makes no assumptions regarding the functional form, produces quantitative measurements that are relatively simple to report, and is model-agnostic [48]. The SA result is a sensitivity index that describes each variable’s contribution to the model’s output. This index can also guide the optimization process [49, 50] in the following run.

The last step before optimization (Section 2.4) defines the objectives. These can include objectives other than the modeled objectives \(Y\); for example, in addition to surface roughness and porosity, we can add energy consumption and laser speed as economic objectives, which allows the decision maker (DM) to assess the potential outcomes of different alternatives, given the materials, geometry, process, mechanical properties, and economic constraints. Finally, the optimization procedure (Section 2.4) is performed, and two types of results are obtained: the optimal processing parameters for each property \({Y}_{i}\) and the non-dominated set of solutions, which is further explained in Section 2.4.1. These two types of results are used to inform the DM and can be employed to define the trade-offs between the different optimization objectives. If the optimization result does not meet the initial goal set by the DM, a Bayesian optimization procedure (Section 2.5) can be initiated using an acquisition function based on the fitted GP models, which allows for continuous refinement and improvement to achieve better results. This process can be iterated until the objective is met or the number of available experiments is exhausted.

The framework is implemented in Python scripts that rely on several libraries. The space-filling design uses the Latin hypercube function of the SciPy library [51]. The NumPy library [52] is used for general array manipulation and serves as a communication link to pass the data from one element to another. The modeling relies on the Gaussian process implementation of the scikit-learn library [53], and the multi-objective optimization uses the NSGA-II algorithm from the pymoo library [54]. The acquisition function for the iterative process improvement is a custom function, and the sensitivity analysis is carried out using the SALib library [55].

2.1 Experimental design

The design of experiments plays a pivotal role in understanding complex systems, identifying causal relationships, and making informed decisions. Classical DoE involves several assumptions of independence, normality, and homogeneity of variance, and in some cases, a linear relationship is assumed between the variables and the response (e.g., response surface methodology) [56]. In addition, complex nonlinear physical phenomena often require the inclusion of higher-order terms, particularly interaction terms between factors. This is because these experiments aim to replicate intricate processes and capture the complexity of the actual physical system, which requires accounting for higher-order effects. Thus, relying solely on corner points, as in factorial designs, would be inadequate for fully characterizing the response. Instead, space-filling (SF) designs should be used to obtain good coverage of the entire design space, allowing the fitting of various nonlinear models that can effectively explain the intricate nature of these systems [39, 57, 58].

One of the most commonly used SF designs is Latin hypercube sampling (LHS) [39]. This sampling technique provides improved sampling efficiency (compared to grid-based designs), reduces sampling bias, and is scalable to high-dimensional spaces [39, 41]. However, a direct implementation of LHS for the optimization of laser-based AM should be reserved for new materials. Otherwise, it can result in a large amount of experimental waste: because LHS distributes the points uniformly over the selected region, a large portion of these points will be placed in regions where process defects are known to occur, including keyhole porosity, balling, and lack-of-fusion porosity (see Fig. 2). Other AM defects [59] can be added to further refine the processing region. When the decision function that separates dense parts from parts with defects is known, a constrained LHS design [44, 60] is more appropriate than a direct implementation of LHS. The constraints can be derived from already established processing maps [61] or by applying regularized logistic regression to literature data for each major defect [62] to obtain the decision surface. The same constraints should also be used during the MOO process by adding them as optimization constraints (see Section 2.4) and during the optimization of the acquisition function in the BO step (Section 2.5).

Fig. 2 The proposed constrained LHS design to avoid common L-PBF defects and obtain defect-free parts (adapted from [63]). The design constraints can also be used as constraints during the MOO procedure and when querying the next design points during the sequential design step
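As an illustration of the constrained design described above, the following minimal sketch draws Latin hypercube batches with SciPy and keeps only the points that satisfy a feasibility constraint. The parameter ranges, the layer thickness, and the volumetric energy density window used as the constraint are hypothetical placeholders, not values from this study; in practice the constraint would come from a processing map or a fitted decision surface. The helper name `constrained_lhs` is likewise introduced here for illustration only.

```python
import numpy as np
from scipy.stats import qmc

# Hypothetical parameter ranges (illustrative only, not taken from the study):
# laser power [W], scan speed [mm/s], hatch distance [mm]
lower = np.array([100.0, 400.0, 0.06])
upper = np.array([400.0, 2000.0, 0.14])
layer_thickness = 0.03  # mm, assumed constant


def energy_density(points):
    """Volumetric energy density P / (v * h * t) in J/mm^3."""
    power, speed, hatch = points.T
    return power / (speed * hatch * layer_thickness)


def constrained_lhs(n_points, e_min=40.0, e_max=120.0, seed=0, max_rounds=100):
    """Draw LHS batches and keep points inside an assumed defect-free window."""
    sampler = qmc.LatinHypercube(d=len(lower), seed=seed)
    kept = np.empty((0, len(lower)))
    for _ in range(max_rounds):
        # Oversample, then scale the unit hypercube to the physical ranges.
        batch = qmc.scale(sampler.random(n=4 * n_points), lower, upper)
        e = energy_density(batch)
        kept = np.vstack([kept, batch[(e >= e_min) & (e <= e_max)]])
        if len(kept) >= n_points:
            return kept[:n_points]
    raise RuntimeError("feasible region too small for the requested design")


design = constrained_lhs(20)
print(design.shape)
```

This rejection-based filter is the simplest way to respect a constraint, but it does not preserve the exact stratification of a Latin hypercube; dedicated constrained LHS algorithms such as those cited above handle this more carefully.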

2.2 Surrogate modeling

Surrogate models are constructed by statistically relating the input data to the output data collected by evaluating the black-box function at a set of carefully selected sample points. They can be used for sensitivity analysis or optimization [64]. Overall, surrogate modeling is a valuable tool for the efficient and effective optimization of complex systems, and it continues to play an increasingly important role in a wide range of fields [24, 65,66,67,68,69].

2.2.1 Gaussian processes

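In the present framework, a multitude of machine learning models can serve as surrogate models. We deliberately chose Gaussian processes (GP) for several compelling reasons, including flexibility (nonlinearity) and uncertainty estimation [70]. Most importantly, this class of ML models can be easily integrated into a Bayesian optimization framework for adaptive sampling (see Section 2.5). GP modeling assumes that any finite collection of \(n\) observations follows a multivariate normal distribution [25, 70], which means that the process can be described using a mean function \(m({\varvec{x}})\) and a covariance function \(k({\varvec{x}},{{\varvec{x}}}^{\boldsymbol{^{\prime}}})\):

\[m({\varvec{x}})={\mathbb{E}}\left[f({\varvec{x}})\right],\qquad k({\varvec{x}},{{\varvec{x}}}^{\boldsymbol{^{\prime}}})={\mathbb{E}}\left[\left(f({\varvec{x}})-m({\varvec{x}})\right)\left(f({{\varvec{x}}}^{\boldsymbol{^{\prime}}})-m({{\varvec{x}}}^{\boldsymbol{^{\prime}}})\right)\right]\]

The GP model can be expressed as follows:

\[f({\varvec{x}})\sim \mathcal{G}\mathcal{P}\left(m({\varvec{x}}),k({\varvec{x}},{{\varvec{x}}}^{\boldsymbol{^{\prime}}})\right)\]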

The mean function is typically set to zero [70]. The choice of the covariance function (also known as the kernel) is the most consequential part of GP modeling [70,71,72]. The covariance function expresses the underlying patterns of the data: smoothness, periodicity, linearity, and nonlinearity. It estimates the dependence between two points in the variable space. In our study, we employed a squared exponential covariance function [70], also known as the radial basis function (RBF), because such functions are smooth, infinitely differentiable, and have a simple functional form that makes them well-suited for modeling smooth physical processes [72, 73], where the output changes gradually with the input. In addition, RBFs have only a few hyperparameters, making them easy to optimize and interpret. The RBF covariance function is expressed as follows:

\[k({\varvec{x}},{{\varvec{x}}}^{\boldsymbol{^{\prime}}})=\mathrm{exp}\left(-\frac{{\Vert {\varvec{x}}-{{\varvec{x}}}^{\boldsymbol{^{\prime}}}\Vert }^{2}}{2{l}^{2}}\right)\]

where \(l>0\) is a hyperparameter that controls the input length scale and thus the smoothness of the prediction [70]. This hyperparameter was optimized by maximizing the log-marginal likelihood (LML) during fitting using the L-BFGS algorithm. Because the LML may have multiple local optima, the optimizer is restarted several times during fitting.

The kernel matrix \(K\) can at times be ill-conditioned, particularly when the underlying kernel is very smooth (as with the RBF kernel) [74]. This near-singularity becomes significant when the measurements are affected by noise. To address this, a noise variance parameter \({\sigma }^{2}\) is added to the diagonal of the kernel matrix, which effectively implements a Tikhonov regularization. The noise parameter represents the noise level in the dataset and can be specified either as a single global value or as an individual value for each data point. This regularization mitigates numerical instabilities during the optimization of the hyperparameters, which would otherwise result in inconsistent or inaccurate parameter estimates [53, 75]. Another option is to include a white noise component in the kernel [53, 70], which automatically estimates the global noise level from the data.
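The modeling choices described in this section (RBF kernel, restarted L-BFGS maximization of the LML, and a white noise component) can be sketched with scikit-learn as follows. The data are synthetic and the kernel bounds and number of restarts are illustrative assumptions, not the settings used in the case study.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel

# Synthetic stand-in for normalized process parameters and one measured objective.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(25, 2))
y = np.sin(3.0 * X[:, 0]) + 0.5 * X[:, 1] + rng.normal(0.0, 0.05, size=25)

# RBF kernel with a single length scale l, plus a white noise term whose
# level is estimated from the data (bounds are illustrative assumptions).
kernel = (
    RBF(length_scale=1.0, length_scale_bounds=(1e-2, 1e2))
    + WhiteKernel(noise_level=1e-2, noise_level_bounds=(1e-6, 1.0))
)

# The default optimizer is L-BFGS-B on the log-marginal likelihood;
# n_restarts_optimizer reruns it from random starts to avoid poor local optima.
gp = GaussianProcessRegressor(
    kernel=kernel, n_restarts_optimizer=10, normalize_y=True, random_state=0
)
gp.fit(X, y)

X_new = np.array([[0.2, 0.8], [0.7, 0.1]])
mean, std = gp.predict(X_new, return_std=True)  # prediction and its uncertainty
lml = gp.log_marginal_likelihood_value_
print(gp.kernel_)  # fitted length scale and noise level
```

Alternatively, known measurement variances can be passed through the `alpha` argument of `GaussianProcessRegressor`, either as a scalar or as one value per data point, which adds them to the diagonal of the kernel matrix.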

2.2.2 Model validation using LOOCV

To evaluate the model’s predictive ability, we used a cross-validation (CV) technique [47]. The main idea is to split the available data into training and validation sets and then use the validation set to estimate the model performance. To avoid the drawback of training the model on only a fraction of the dataset, a variant known as \(k\)-fold cross-validation was used, in which the training dataset is divided into \(k\) equally sized subsets (usually between 3 and 10). The model is trained on the union of \(k-1\) subsets and validated on the remaining subset, and the process is repeated so that every subset serves once as the validation set. Due to the scarcity of data in the current dataset, the \(k\)-fold CV was applied with the setting \(k = n\) (where \(n\) is the number of data points), which is known as leave-one-out cross-validation (LOOCV) [47, 76]. The model performance could then be evaluated using standard performance metrics such as \({R}^{2}\) and \(MAPE\) (mean absolute percentage error). The model evaluation procedure is illustrated in Fig. 3.
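A minimal sketch of this validation procedure with scikit-learn is given below. Because each leave-one-out fold contains a single validation point, the held-out predictions are first collected for all points and the metrics are then computed once over the full set. The data and model settings are synthetic assumptions for illustration.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel
from sklearn.metrics import mean_absolute_percentage_error, r2_score
from sklearn.model_selection import LeaveOneOut, cross_val_predict

# Synthetic data; the offset keeps y away from zero so that MAPE is well behaved.
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(20, 2))
y = 5.0 + np.sin(3.0 * X[:, 0]) + 0.5 * X[:, 1] + rng.normal(0.0, 0.05, size=20)

model = GaussianProcessRegressor(
    kernel=RBF() + WhiteKernel(),
    normalize_y=True,
    n_restarts_optimizer=2,
    random_state=0,
)

# k-fold CV with k = n: each point is predicted by a model trained on the other n - 1.
y_loo = cross_val_predict(model, X, y, cv=LeaveOneOut())

r2 = r2_score(y, y_loo)
mape = mean_absolute_percentage_error(y, y_loo)  # returned as a fraction, not in %
print(f"LOOCV R2 = {r2:.3f}, MAPE = {100 * mape:.2f} %")
```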

2.3 Sensitivity analysis for model explanation

Explainable artificial intelligence (XAI) has emerged as an important field for enhancing the interpretability of surrogate models, particularly when utilizing advanced ML techniques such as neural networks and GP. XAI offers a range of model explanation techniques that aim to provide insights from various perspectives [32, 77]. One approach that has gained significant traction within the XAI community is the variance-based sensitivity analysis [48, 78]. This method has been widely used to evaluate critical systems, and is highly valued thanks to its rigorous theoretical foundation [33].

Sensitivity analysis [48, 79] is used as it offers valuable insight into which model inputs contribute the most to the variability of the model output. SA was applied to improve the understanding of the relationship between model inputs and outputs, to investigate variable interactions, to simplify the model by considering only high-impact inputs, and to guide future experiments. There are two SA approaches available: local and global SA [80]. Local SA focuses on the impact of small perturbations of a single parameter on the model output. In contrast, in global SA, all parameters are varied simultaneously over the entire parameter range, which gives the relative contribution of the individual parameters and the contribution of each interacting parameter pair. There are several types of global sensitivity analyses, including the Sobol method, Fourier amplitude sensitivity analysis, multiparametric sensitivity analysis, and partial rank correlation [79, 81].

In the present work, we adopted the Sobol method due to its specific advantages [82, 83], which include evaluation efficiency, handling of nonlinear relationships, interaction detection, and incorporation of stochasticity. Sobol SA, and SA in general, have proven invaluable for surrogate MOO scenarios [49, 50, 83], aiding in dimensionality reduction by pinpointing influential variables, guiding objective balancing, and enhancing the robustness of the model and the optimization. In addition, SA increases the transparency and interpretability of surrogate models, can reduce computational costs, and helps validate results. It also fosters sensitivity-informed optimization strategies [49], which integrate sensitivity information into the optimization process to adaptively adjust the exploration of the input space. Together, these benefits improve the efficiency, reliability, and comprehensibility of the optimization process, especially in an MOO setting.

2.3.1 Sobol’s sensitivity analysis

Sobol’s method is based on the decomposition of the model output variance into additive variances of the input parameters in increasing dimensionality [81, 84]; this is intended to determine the contribution of each input (based on a single parameter or the interaction of different parameters) to the output variability [81]. Sobol’s analysis makes no assumption about the relationship between model input and output; thus, it can be easily applied to black-box models and allows the full range of input parameters and their interactions to be evaluated. However, Sobol’s method can be computationally expensive, especially for high-dimensional problems, for which efficient estimators are required [85]. The output variance can be attributed to individual input variables and their interactions [81], and the resulting sensitivity indices express these contributions as fractions of the total output variance. These indices can accurately reveal the influence of the individual parameters and their interactions, and their interpretation is straightforward because they are non-negative. A higher index value (closer to 1) indicates greater importance for the analyzed variables or interactions.
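To make the variance decomposition concrete, the sketch below estimates first-order and total-order Sobol indices with plain NumPy using the pick-freeze scheme (the Saltelli 2010 estimator for the first-order index and the Jansen estimator for the total-order index). It is not the SALib implementation used in this framework, whose sampling and estimators may differ; the function `sobol_indices` and the toy model are introduced here only for illustration. In the framework, `model` would be the prediction function of a fitted surrogate.

```python
import numpy as np


def sobol_indices(model, n_inputs, n_base=8192, seed=0):
    """Estimate first-order and total-order Sobol indices on the unit hypercube."""
    rng = np.random.default_rng(seed)
    A = rng.uniform(size=(n_base, n_inputs))
    B = rng.uniform(size=(n_base, n_inputs))
    f_A, f_B = model(A), model(B)

    # Centering the outputs reduces the variance of the first-order estimator.
    f_mean = np.mean(np.concatenate([f_A, f_B]))
    f_A, f_B = f_A - f_mean, f_B - f_mean
    variance = np.var(np.concatenate([f_A, f_B]))

    first = np.empty(n_inputs)
    total = np.empty(n_inputs)
    for i in range(n_inputs):
        AB_i = A.copy()
        AB_i[:, i] = B[:, i]  # matrix A with only column i taken from B
        f_AB = model(AB_i) - f_mean
        first[i] = np.mean(f_B * (f_AB - f_A)) / variance   # Saltelli (2010)
        total[i] = 0.5 * np.mean((f_A - f_AB) ** 2) / variance  # Jansen
    return first, total


def toy_model(X):
    """Additive toy model: analytic first-order indices are 0.8, 0.2, and 0."""
    return 2.0 * X[:, 0] + 1.0 * X[:, 1] + 0.0 * X[:, 2]


first, total = sobol_indices(toy_model, n_inputs=3)
print(np.round(first, 2), np.round(total, 2))
```

For this additive toy model the first-order and total-order indices coincide; a gap between the two for a given input signals that the input acts mainly through interactions.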

2.4 Multi-objective optimization

2.4.1 Multi-objective optimization and Pareto optimal solutions

An optimization problem with a single objective can be formulated as follows:

\[\underset{{\varvec{x}}\in S}{\mathrm{min}}\;f({\varvec{x}})\]

where \(f\) is the objective function and \(S=\{{\varvec{x}}\in {\mathbb{R}}^{m}:h\left({\varvec{x}}\right)=0, g\left({\varvec{x}}\right)\ge 0\}\) is the feasible set defined by the constraints, \(m\) denotes the dimensionality of the problem, and the \(h({\varvec{x}})\) and \(g\left({\varvec{x}}\right)\) functions are the equality and inequality constraints applied during the optimization. These constraints can be used to (a) add range constraints that limit the optimization domain, because most ML models are essentially interpolation models with weak extrapolation capabilities, (b) force the optimization process to navigate only the defect-free region by adding defect constraints, and (c) enforce a certain productivity requirement by adding productivity constraints based on the processing variables.

Multi-objective optimization, on the other hand, takes into consideration many objectives, usually named performance metrics [86, 87], \(\left[{f}_{1}\left({\varvec{x}}\right),{f}_{2}\left({\varvec{x}}\right),\dots ,{f}_{n}\left({\varvec{x}}\right)\right],\) where \(n>1\). In this setting, the scalar concept of “optimality” cannot be applied, and the concept of Pareto optimality is used instead [87]. Other constraints can be added, depending on the desired composition of the optimization objective or other constraints related to the variables themselves [27, 31, 86, 88]. The shape of the Pareto front reveals the nature of the compromise between different objective functions. An example is illustrated in Fig. 4, where the set of points between points A and B defines the Pareto front. MOO problems usually have multiple Pareto optimal solutions; therefore, the selection is not as straightforward as in single-objective optimization. In this case, the optimal solution is subject to the decision-maker’s preferences or to other criteria not included in the optimization (such as another consequence of the selected optimal solution). The selection of an optimal solution may be based on predetermined preferences. These methods are called a priori methods and include, for example, the scalarization technique. The selection may also be based on finding a representative set of Pareto optimal solutions, after which a solution is chosen by the decision maker. Such methods are called a posteriori methods and include the non-dominated sorting genetic algorithm (NSGA-II).
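The notion of Pareto optimality can be illustrated with a short NumPy sketch. It uses the standard dominance definition for minimization (a solution dominates another if it is no worse in every objective and strictly better in at least one) and extracts the non-dominated points from a small set of hypothetical two-objective values; the numbers are made up for illustration.

```python
import numpy as np


def dominates(a, b):
    """True if a is no worse than b everywhere and strictly better somewhere (minimization)."""
    return bool(np.all(a <= b) and np.any(a < b))


def pareto_mask(F):
    """Boolean mask of the non-dominated rows of the objective matrix F."""
    F = np.asarray(F, dtype=float)
    mask = np.ones(len(F), dtype=bool)
    for i in range(len(F)):
        for j in range(len(F)):
            if i != j and dominates(F[j], F[i]):
                mask[i] = False
                break
    return mask


# Hypothetical (surface roughness, porosity) pairs, both to be minimized.
F = np.array([[1.0, 5.0], [2.0, 3.0], [3.0, 4.0], [4.0, 1.0], [5.0, 2.0]])
mask = pareto_mask(F)
print(F[mask])  # the Pareto front: [1, 5], [2, 3], [4, 1]
```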

2.4.2 Scalarization technique

The scalarization technique combines many objectives into a single one using a function \(g({f}_{1}\left({\varvec{x}}\right),{f}_{2}\left({\varvec{x}}\right),\dots ,{f}_{k}\left({\varvec{x}}\right);{\varvec{\theta}})\), where \({\varvec{\theta}}\) is a vector parameter. A simple and intuitive method is the linear scalarization, where the objective functions are weighted by \({w}_{i}\) and summed, and the MOO problem thus becomes a single objective of \({\sum }_{i=1}^{k}{w}_{i}{f}_{i}({\varvec{x}})\).
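As a small worked example of linear scalarization, the sketch below applies different weight vectors to a set of hypothetical, mutually non-dominated objective values and reports which candidate minimizes the weighted sum. The values and weights are illustrative; in practice the objectives are usually normalized to comparable scales before weighting.

```python
import numpy as np


def scalarized_choice(F, weights):
    """Index of the candidate minimizing the weighted sum of its objectives."""
    scores = np.asarray(F, dtype=float) @ np.asarray(weights, dtype=float)
    return int(np.argmin(scores))


# Hypothetical objective values (rows: candidates, columns: f1, f2).
F = np.array([[1.0, 5.0], [2.0, 2.5], [4.0, 1.0]])

weight_sets = [(0.9, 0.1), (0.5, 0.5), (0.1, 0.9)]
choices = [scalarized_choice(F, w) for w in weight_sets]
print(choices)  # [0, 1, 2]: each preference selects a different trade-off
```

The example shows why this is an a priori method: the weights encode the decision maker's preferences before the search, and each weight vector yields a single solution rather than the whole front.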

2.4.3 Nonlinear multi-objective optimization using NSGA-II

NSGA-II (non-dominated sorting genetic algorithm II) [89, 90] is a multi-objective optimization algorithm widely used to solve problems with multiple conflicting objectives. This algorithm is based on the principles of evolutionary computing, specifically genetic algorithms. In NSGA-II, a population of candidate solutions is initialized randomly, and then each individual is evaluated for fitness based on their performance over multiple objectives [90]. These objectives can be conflicting or complementary, and the optimization goal is to find a set of solutions that is not dominated by any other solution in the search space. This set of solutions is called the Pareto set and represents the optimal trade-offs between the multiple objectives. To rank the solutions in the population, NSGA-II uses a non-dominated sorting procedure based on their dominance relationships.

NSGA-II incorporates elitism by always preserving the best solutions from the previous generation, which helps ensure that progress is made toward the optimal solution. The algorithm proceeds to the next generation by selecting parents based on their fitness, using a binary tournament selection scheme. Crossover and mutation operations are then applied to the selected parents to generate new offspring solutions. NSGA-II is highly effective in solving many real-world problems with multiple objectives [89], including engineering design, financial portfolio optimization, and environmental management.

2.5 Bayesian optimization

Bayesian optimization (BO) is an iterative, sample-efficient optimization algorithm, which makes it particularly suitable for optimizing expensive black-box systems. In the present context, “black box” refers to objective functions that lack closed-form representations and derivatives and allow only pointwise evaluations. Although several optimization algorithms can handle black-box functions [43, 89, 91, 92], they are not explicitly designed for sample efficiency and require many function evaluations, which is very expensive in the case of AM. To reduce the number of evaluations needed, BO adopts a model-based (surrogate model) approach with an adaptive sampling strategy. BO has proven effective in many applications, including material design using physical models [93], laboratory experiments [94], and the discovery of new materials using multiple objectives [95].

Classical design of experiments (DOE) and space-filling designs share a limitation: the sampling pattern is fixed before any measurements are taken and cannot adapt to features that emerge during the experiment. In contrast, adaptive sampling [58, 96, 97] is a sequential approach in which a balance between two criteria guides the selection of the next sample location. First, it samples in unexplored regions (e.g., based on the distance from previous samples). Second, it places denser sampling in areas that exhibit interesting behavior, such as rapid changes or nonlinearity.

Bayesian optimization (BO), a form of model-based global optimization (MBGO) [97], employs adaptive sampling to steer the experiment toward a global optimum. Unlike pure adaptive sampling, MBGO incorporates consideration of the optimal value predicted by the modeled objective when determining the sampling locations. BO has two main components [98]:

- A Gaussian process (GP) model for point prediction and for measuring the uncertainty associated with that prediction.

- An acquisition function, which identifies the most promising configuration for the next experiment, considering both the predicted mean and the associated uncertainty.

The GP was already discussed in Section 2.2. The acquisition functions are derived primarily from the mean \(\mu ({\varvec{x}})\) and uncertainty \(\sigma ({\varvec{x}})\) estimates of the GP model, and their computation is inexpensive. The acquisition function balances exploitation (sampling where the mean objective value \(\mu ({\varvec{x}})\) is high) against exploration (sampling where the uncertainty \(\sigma ({\varvec{x}})\) is high), and its global maximum is selected as the next experimental setting. Acquisition functions are designed to take large values in regions where high values of the objective function are likely. Commonly used acquisition functions include the probability of improvement (PI), the expected improvement (EI), and the Gaussian process upper confidence bound (GP-UCB), each of which has its specific advantages [99]. We propose to use the EI criterion, as it has been shown to be better behaved than PI and, unlike GP-UCB, it does not require parameter tuning [99]. The acquisition function is inexpensive to evaluate and to optimize. However, as with the space-filling design, this should be a constrained optimization to prevent the algorithm from exploring regions where process defects are known to occur.
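For illustration, the closed-form EI for a Gaussian predictive distribution can be sketched as below. The sketch assumes a maximization convention, with \({f}_{{\text{best}}}\) the best value observed so far; for objectives that are minimized, such as porosity or surface roughness, the sign of the objective would be flipped first. The candidate means and standard deviations are hypothetical numbers and do not come from a fitted GP.

```python
import math


def expected_improvement(mu: float, sigma: float, f_best: float) -> float:
    """Closed-form EI for maximization, given a Gaussian prediction N(mu, sigma^2)."""
    improvement = mu - f_best
    if sigma <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(improvement, 0.0)
    z = improvement / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))         # standard normal CDF
    return improvement * cdf + sigma * pdf


# Hypothetical candidates: name -> (predicted mean, predicted standard deviation).
candidates = {"A": (1.00, 0.10), "B": (0.80, 0.60), "C": (1.05, 0.00)}
f_best = 1.0

scores = {name: expected_improvement(m, s, f_best) for name, (m, s) in candidates.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

In this toy setting, the candidate with the lowest predicted mean but the largest uncertainty obtains the highest EI, which illustrates the exploration side of the trade-off.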

3 Case study: optimizing surface roughness and part density

As stated in Section 1, this study aims to offer a MOO paradigm that utilizes surrogate models to efficiently optimize the processing parameters of AM and allow for continuous process improvement. In the following, we will leverage the main components to illustrate the applicability of this optimization framework using literature data [100].

The data were collected using a central composite design (CCD) [57] and analyzed with a response surface model. The CCD is essentially a fractional factorial design augmented with a group of points that allow the curvature to be estimated; the distance of the augmented group from the center is set to 1.682 times that of the first group. The independent variables are the laser power, scanning speed, and hatch spacing, with the central and extreme points of the design set to (225 ± 75 W, 1000 ± 300 mm/s, {60, 80, 120} µm), respectively. The data are shown in Fig. 5.
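The structure of such a design can be sketched in coded units as follows. The sketch assumes a full two-level factorial core and a single center point, neither of which is confirmed for the literature data; it is meant only to show where the value 1.682 comes from, since the rotatable choice for three factors is \({({2}^{3})}^{1/4}\approx 1.682\). The function name is hypothetical.

```python
from itertools import product


def central_composite_design(k: int, alpha: float, n_center: int = 1):
    """Coded CCD points: 2^k factorial corners, 2k axial points, n_center centers."""
    factorial = [list(p) for p in product((-1.0, 1.0), repeat=k)]
    axial = []
    for i in range(k):
        for s in (-alpha, alpha):
            point = [0.0] * k
            point[i] = s          # move along one factor axis only
            axial.append(point)
    center = [[0.0] * k for _ in range(n_center)]
    return factorial + axial + center


k = 3                          # laser power, scan speed, hatch spacing
alpha = (2 ** k) ** 0.25       # rotatable axial distance for a full 2^k core
design = central_composite_design(k, alpha)

print(round(alpha, 3), len(design))
for row in design:
    print([round(v, 3) for v in row])
```

Mapping the coded levels to physical settings depends on how the factor ranges are defined in the original experiment and is therefore left out here.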

For comparison, two models were fitted on the same data: a linear model (LM) using the least squares method with a second-order polynomial and interaction terms, and a surrogate model (SM) implemented as a Kriging model with a squared exponential kernel in the SMT Python library [101]. The result of the fitting is shown in Fig. 6 with the actual versus predicted plots. Both models achieve high training scores and fit the data closely. The linear model benefits from the chosen experimental design, since the CCD is specifically designed to allow the curvature of the response to be estimated with a quadratic function; the surrogate model, on the other hand, provides not only the predictions but also homoscedastic confidence intervals (CI), represented by the error bars, for uncertainty quantification. The CI contains the actual value for almost all parameter combinations.

The porosity and surface roughness models were visually inspected using contour plots (Fig. 7) at three laser speeds: 700, 1000, and 1300 mm/s. The contour plots show the trade-off between achieving the finest surface roughness and achieving the ideal density. Optimal porosity required higher laser speeds and reduced hatch distances, whereas optimal surface roughness required lower scan speeds and increased hatch distances. This will later be demonstrated quantitatively using the proposed optimization algorithms.

A sensitivity analysis was performed using 1024 sample points generated with Saltelli’s sampling scheme [102], including interactions, and a resampling number of 100. We used evaluations of the surrogate model for the sensitivity analysis and compared the result to the standardized coefficients of the linear regression. The result of the study is summarized in Fig. 8. The total sensitivity indicates that the laser power and hatch distance contribute equally to the porosity variance. At the same time, the dominating factor for the surface roughness is the hatch distance, which has almost double the influence of the laser speed and power. This is further confirmed by the first-order sensitivity indices, which isolate the effect of a single variable: the hatch distance has almost 2.5 times the impact of laser speed on surface roughness and virtually double the influence of laser power. The contributions of \(P\), \(V\), and \(H\) to the porosity are similar. Second-order sensitivity indices show that the sensitivity due to the interaction of the laser power and laser speed is negligible (below the 0.05 threshold) for both the porosity and surface roughness; moreover, the interaction of the laser power with the hatch distance is also insignificant for the surface roughness, leaving the interaction of the laser speed and hatch distance as the sole significant interaction for the surface roughness. On the other hand, the porosity is influenced by the interaction of laser power and hatch distance (P, H) and the interaction of laser speed and hatch distance (V, H) in near-equal measure.

A regression analysis on the same data, using a second-degree polynomial with interaction terms [100], confirmed the sensitivity analysis results: the interaction terms with negligible sensitivity indices were found to be nonsignificant in the regression analysis, owing to either a high p-value or a small coefficient in the standardized linear regression.

The scalar optimization was applied to the linear and surrogate models using the Powell method, and the optimization constraints were selected as the variable ranges (\(P, V, H\)). Table 1 summarizes the optimization results. The porosity optimization provided the same optimized value for both models, with consistent process parameter values. However, the surface roughness optimization produced different values for the LM and SM models, with differences of up to 10%, and the process parameters did not coincide. This highlights the fact that although both models fit the data well, the shape of the response might still differ due to either model constraints or the selected experimental design. It also highlights the need for uncertainty analysis when modeling physical phenomena in additive manufacturing.
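To make the first-order and total indices concrete, the sketch below estimates them for a toy model with a Saltelli-type "pick and freeze" scheme. It is an illustration under stated assumptions and not the analysis pipeline of the case study: the toy function, the plain pseudo-random sampling (instead of a low-discrepancy sequence), and the sample size are all hypothetical choices. The last lines compute the evaluation budget for a base sample of 1024 with three inputs when second-order indices are requested, assuming the usual \(N(2D+2)\) rule.

```python
import random


def sobol_indices(model, dim, n, seed=0):
    """Estimate first-order and total Sobol indices on the unit hypercube."""
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(dim)] for _ in range(n)]
    B = [[rng.random() for _ in range(dim)] for _ in range(n)]
    fA = [model(a) for a in A]
    fB = [model(b) for b in B]

    pooled = fA + fB
    mean = sum(pooled) / len(pooled)
    var = sum((y - mean) ** 2 for y in pooled) / len(pooled)
    fB_centered = [y - mean for y in fB]  # centering reduces estimator noise

    first, total = [], []
    for i in range(dim):
        # A with column i taken from B ("pick and freeze").
        fABi = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(A, B)]
        s1 = sum(yb * (yab - ya) for ya, yb, yab in zip(fA, fB_centered, fABi)) / n / var
        st = sum((ya - yab) ** 2 for ya, yab in zip(fA, fABi)) / (2 * n) / var
        first.append(s1)
        total.append(st)
    return first, total


def toy_model(x):
    """Additive toy response: the third input has no effect."""
    return x[0] + 2.0 * x[1]


first, total = sobol_indices(toy_model, dim=3, n=4096)
print([round(v, 2) for v in first], [round(v, 2) for v in total])

# Evaluation budget with second-order indices: N * (2D + 2).
budget = 1024 * (2 * 3 + 2)
print(budget)
```

For this additive toy model the analytical first-order indices are 0.2, 0.8, and 0, and the total indices coincide with them because there are no interactions; a gap between the two kinds of index is what signals interaction effects.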

For the MOO using the scalarization technique, the weights (\({w}_{1}\), \({w}_{2}\)) were both set to 0.5, with settings similar to those of the previous single-objective optimization. A more recent technique based on evolutionary algorithms is NSGA II. This technique explores the design space efficiently while escaping suboptimal solutions, and is independent of the selected initial point. The NSGA II algorithm was run with a population size of 200 for 1000 generations and a crossover probability of 0.9, while the offspring population was set to 10, as previous studies [103] have shown that reducing the offspring population and increasing the population size can improve the performance of the algorithm.

The NSGA II results in a set of non-dominated solutions. To narrow down the results into a single point, we use multi-criteria decision making (MCDM). First, we eliminate the effect of objective scales. This can be achieved using normalization, and to that end, we use min–max scaling \((x-{x}_{{\text{min}}})/({x}_{{\text{max}}}-{x}_{{\text{min}}})\) and employ a decomposition method like the achievement scalarizing function (ASF) [104]. Table 2 presents the results of using the scalarization technique and the NSGA II algorithm on the SM model. Both methods have consistent results, with a slight difference seen in the obtained porosity and surface roughness values, resulting from the different selected approaches. The scalarization technique linearly combines the two objectives into one without considering the scale effect between both. This is apparent in Fig. 9, where the solution of the scalarization technique is slightly biased in favor of the surface roughness. In contrast, the NSGA II tries to find the Pareto set of optimal solutions, which it then normalizes, and by using an ASF, it combines the two objectives.
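The selection of a single compromise point can be sketched as follows: the non-dominated set is min–max normalized and each point is scored with one common form of the ASF, \({{\text{max}}}_{i}\left({f}_{i}-{z}_{i}\right)/{w}_{i}\), where \({\varvec{z}}\) is the ideal point (the origin after normalization). The four objective pairs are hypothetical values chosen for illustration and are not the results reported in Table 2.

```python
def min_max_normalize(front):
    """Scale every objective of the front to [0, 1]."""
    k = len(front[0])
    lo = [min(p[j] for p in front) for j in range(k)]
    hi = [max(p[j] for p in front) for j in range(k)]
    return [
        [(p[j] - lo[j]) / (hi[j] - lo[j]) if hi[j] > lo[j] else 0.0 for j in range(k)]
        for p in front
    ]


def asf(point, weights, ideal=None):
    """Achievement scalarizing function: largest weighted distance to the ideal point."""
    if ideal is None:
        ideal = [0.0] * len(point)
    return max((f - z) / w for f, z, w in zip(point, ideal, weights))


# Hypothetical non-dominated set: (porosity in %, surface roughness in µm).
front = [(0.10, 9.0), (0.15, 7.0), (0.30, 6.0), (0.60, 5.5)]
weights = [0.5, 0.5]

normalized = min_max_normalize(front)
scores = [asf(p, weights) for p in normalized]
best = min(range(len(front)), key=scores.__getitem__)
print(best, front[best])
```

Because the scores are computed on normalized objectives, the choice does not depend on the units of porosity and roughness, in contrast to an unnormalized weighted sum.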

Figure 10 shows the non-dominated solution set provided by the NSGA II algorithm with the interpolated change of design variables (\(P\), \(V\), and \(H\)) as a function of the objective functions (porosity and surface roughness). The non-dominated solutions show a typical Pareto curve with a compromise of approximately 3% and 12% between the porosity and surface roughness, respectively. The bottom right point represents the single-objective optimal surface roughness value, and the top left point is the optimal porosity value; both points agree with the scalar optimization of SM presented in Table 3. The scale effect is present, as the optimal solution using the scalarization favors optimizing the surface roughness. The NSGA II approach with the ASF method provides a more balanced solution, as the optimal solution is closer to the ideal point (a reference point that represents, simultaneously, the best possible values for all objectives). Analyzing the effect of design variables on the two objectives reveals an interesting relationship. Along the Pareto front, the porosity increases with increasing hatch distance and simultaneously decreasing laser power and speed; the opposite is true for the surface roughness, which increases with decreasing hatch distance and increasing laser power and speed. This observation qualitatively agrees with the sensitivity analysis results, as the hatch distance was the main contributing factor in the surface roughness.

The optimized processing parameters in Table 2 can be set as the machine parameters for the next printing set-up. However, these parameters depend on the experimental data [105] and on the fitted model [106]; i.e., the optimized parameters are not absolute. In our case, the model is mainly controlled by the covariance function. Meanwhile, the data pose a significant challenge when optimizing the AM process, because expensive experimental runs result in data scarcity and can lead to model overfitting. In addition, a small dataset may capture only some of the variations and complexity of the problem. Although model validation using LOOCV and train/test splits can alleviate some of these problems, we should always strive to get more data. One way to do this efficiently is adaptive sampling, which allows the model to actively select the data points that are most uncertain or informative, improving its knowledge and reducing uncertainty by efficiently allocating the available resources. Using the prediction and the uncertainty of the two GP models, we can quantify the potential improvement of a candidate point in the design space over the current best solution using the EI acquisition function. The EI considers both the predicted value of the candidate point and the uncertainty associated with that prediction. This function can be optimized with the L-BFGS-B algorithm [107, 108] to find, for each model, the candidate with the largest potential improvement. This optimization step should be run multiple times from random starting points, particularly when dealing with highly nonlinear predictions and uncertainties in Gaussian process (GP) models. This approach helps address the sensitivity to initialization and assess the robustness of the solution.

Table 4 presents the candidates selected with this approach. These candidate parameters were chosen based on their impact on surface roughness and porosity levels. Fig. 11 compares the density plots of the predicted surface roughness and porosity, their relative uncertainty, and the expected improvement at fixed values of hatch distance corresponding to the candidate parameters. The density plots of the predicted values of the two models show behaviors that are typical when fitting an ML model to a classical experimental design, in our case the CCD. The CCD belongs to response surface methodology, in which the regression is typically quadratic; thus, the fitted ML model will also assume a similar response. What is more interesting is the density plot of the uncertainty. It shows that the closer we get to an initial design point (observed point), the less uncertain the model is about its prediction. The acquisition function uses this information to either explore new regions of the design space or exploit promising regions where improvements are expected. For the part density, the four points have relatively good coverage of the parameter space; this incites the acquisition function to exploit the domain to get the optimal candidate, whereas, for the surface roughness, a high laser power seems to produce parts with low porosity. Here, the acquisition function decides to explore this region further.

The proposed optimization framework, while comprehensive, is not without limitations. One key limitation is the cost of the first optimization run, as this usually includes a moderately large number of experiments due to the space-filling design. Additionally, the effectiveness of the framework may be influenced by the quality and quantity of available data and by uncertainties in the measurement process; however, this can be mitigated by incorporating heteroscedastic Gaussian processes [46, 109] and robust property measurements. The current version of the framework also assumes that the excluded variables and the part geometry are fixed, and the transferability from one geometry to another may require further exploration of transfer learning techniques coupled with Bayesian optimization [110,111,112]. This can help reduce the number of initial experimental runs for other geometries. Lastly, the framework adopts a classical notion of multiobjective optimization in which each objective is modeled separately; a modern Bayesian multiobjective optimization [113] using the expected hypervolume improvement acquisition function [114] could largely accelerate the optimization process.

To address these limitations and further enhance the optimization framework, future research could explore methods to transfer the knowledge gained from an optimization on a specific AM machine to other processes. Additionally, exploring alternative surrogate modeling techniques could make the framework applicable to a broader range of additive manufacturing scenarios. Although the case study was carried out using custom Python scripts, the overall framework could benefit from software design principles to produce a more coherent code base. Continuous collaboration with industry and stakeholders can provide valuable insights, ensuring the framework remains relevant and effective in addressing emerging challenges in AM.

4 Conclusions

Building upon existing knowledge, this study presents an adaptable multi-objective optimization framework specifically designed for additive manufacturing (AM). The framework offers flexibility, as it accommodates diverse process variables and objectives and thus facilitates the optimization of various AM processes, and adaptability, as continuous process improvement is enabled through Bayesian optimization, leading to a data-driven learning process. Our investigation yielded valuable insights and recommendations:

- Model selection: While initially comparable, Gaussian process models outperform simpler models like linear regressions with interactions in long-term AM optimization.

- Sensitivity analysis: Hatch distance significantly influences surface roughness, emphasizing the need for precise control.

- Multi-objective optimization: NSGA-II outperforms scalarization techniques in ensuring diverse optimal solutions and avoiding scaling effects.

Based on these findings, we recommend the following: utilizing GP models for AM optimization due to their superior long-term reliability compared to simpler models like linear regressions with interactions; prioritizing precise control of hatch distance for achieving the desired surface roughness in AM processes; and selecting NSGA-II over scalarization techniques for MOO to avoid potential biases and obtain a broader range of optimal solutions. This framework, along with the recommendations outlined, holds promising potential for advancing the optimization of diverse AM processes, leading to improved product quality and performance.

References

Guo N, Leu MC (2013) Additive manufacturing: technology, applications and research needs. Front Mech Eng 8:215–243. https://doi.org/10.1007/s11465-013-0248-8

Huang Y, Leu MC, Mazumder J, Donmez A (2015) Additive manufacturing: current state, future potential, gaps and needs, and recommendations. J Manuf Sci Eng 137. https://doi.org/10.1115/1.4028725

Kobryn PA, Semiatin SL (2001) The laser additive manufacture of Ti-6Al-4V. JOM 53:40–42. https://doi.org/10.1007/s11837-001-0068-x

Thomas DS, Gilbert SW (2014) Costs and cost effectiveness of additive manufacturing. Natl Inst Stand Technol. https://doi.org/10.6028/NIST.SP.1176

Sun Z, Tan X, Tor SB, Yeong WY (2016) Selective laser melting of stainless steel 316L with low porosity and high build rates. Mater Des 104:197–204. https://doi.org/10.1016/j.matdes.2016.05.035

Tapia G, Elwany A (2014) A review on process monitoring and control in metal-based additive manufacturing. J Manuf Sci Eng 136. https://doi.org/10.1115/1.4028540

Khairallah SA, Anderson AT, Rubenchik A, King WE (2016) Laser powder-bed fusion additive manufacturing: Physics of complex melt flow and formation mechanisms of pores, spatter, and denudation zones. Acta Mater 108:36–45. https://doi.org/10.1016/j.actamat.2016.02.014

DebRoy T, Wei H, Zuback JS, Mukherjee T, Elmer J, Milewski J et al (2018) Additive manufacturing of metallic components – process, structure and properties. https://doi.org/10.1016/J.PMATSCI.2017.10.001

Gong H, Nadimpalli VK, Rafi K, Starr T, Stucker B (2019) Micro-CT evaluation of defects in Ti-6Al-4V parts fabricated by metal additive manufacturing. Technologies 7:44. https://doi.org/10.3390/technologies7020044

Mukherjee T, DebRoy T (2018) Mitigation of lack of fusion defects in powder bed fusion additive manufacturing. J Manuf Process. https://doi.org/10.1016/J.JMAPRO.2018.10.028

Ni X, Kong D, Zhang L, Dong C, Song J, Wu W (2019) Effect of process parameters on the mechanical properties of Hastelloy X alloy fabricated by selective laser melting. J Mater Eng Perform 28:5533–5540. https://doi.org/10.1007/s11665-019-04275-w

Gockel J, Sheridan L, Koerper B, Whip B (2019) The influence of additive manufacturing processing parameters on surface roughness and fatigue life. Int J Fatigue 124:380–388. https://doi.org/10.1016/j.ijfatigue.2019.03.025

Francois MM, Sun A, King WE, Henson NJ, Tourret D, Bronkhorst CA et al (2017) Modeling of additive manufacturing processes for metals: challenges and opportunities. Curr Opin Solid State Mater Sci 21:198–206. https://doi.org/10.1016/j.cossms.2016.12.001

Bian L, Thompson SM, Shamsaei N (2015) Mechanical properties and microstructural features of direct laser-deposited Ti-6Al-4V. JOM 67:629–638. https://doi.org/10.1007/s11837-015-1308-9

Masoomi M, Thompson SM, Shamsaei N (2017) Laser powder bed fusion of Ti-6Al-4V parts: thermal modeling and mechanical implications. Int J Mach Tools Manuf 118–119:73–90. https://doi.org/10.1016/j.ijmachtools.2017.04.007

Jayanath S, Achuthan A (2019) A computationally efficient hybrid model for simulating the additive manufacturing process of metals. Int J Mech Sci 160:255–269. https://doi.org/10.1016/j.ijmecsci.2019.06.007

Badia S, Chiumenti M, Martín AF, Neiva E (2017) Parallel finite-element analysis of heat transfer in AM processes by metal deposition. https://research.monash.edu/en/publications/parallel-finite-element-analysis-of-heat-transfer-in-am-processes

Kollmannsberger S, Özcan A, D'Angella D, Carraturo M, Kopp P, Zander N, Reali A, Auricchio F, Rank E (2018) Computational modelling of metal additive manufacturing. https://mediatum.ub.tum.de/1467252

Chen S (2019) Investigation of FEM numerical simulation for the process of metal additive manufacturing in macro scale. PhD thesis, Université de Lyon. https://theses.hal.science/tel-02402859

Chia HY, Wu J, Wang X, Yan W (2022) Process parameter optimization of metal additive manufacturing: a review and outlook. J Mater Inform 2:16. https://doi.org/10.20517/jmi.2022.18

Dejaegher B, Vander HY (2011) Experimental designs and their recent advances in set-up, data interpretation, and analytical applications. J Pharm Biomed Anal 56:141–158. https://doi.org/10.1016/j.jpba.2011.04.023

Bowden GD, Pichler BJ, Maurer A (2019) A Design of Experiments (DoE) Approach accelerates the optimization of copper-mediated 18F-fluorination reactions of arylstannanes. Sci Rep 9:11370. https://doi.org/10.1038/s41598-019-47846-6

Rogalewicz M, Smuskiewicz P, Hamrol A, Kujawinska A, Reis LP (2018) Possibilities and limitations of passive experiments conducted in industrial conditions. In: Hamrol A, Ciszak O, Legutko S, Jurczyk M (eds). Springer International Publishing, Cham, pp 869–79. https://doi.org/10.1007/978-3-319-68619-6_84

Tapia G, Khairallah S, Matthews M, King WE, Elwany A (2018) Gaussian process-based surrogate modeling framework for process planning in laser powder-bed fusion additive manufacturing of 316L stainless steel. Int J Adv Manuf Technol 94:3591–3603. https://doi.org/10.1007/s00170-017-1045-z

Gramacy RB (2020) Surrogates: Gaussian process modeling, design, and optimization for the applied sciences. CRC Press, Taylor & Francis Group, Boca Raton

Padhye N, Deb K (2011) Multi-objective optimisation and multi-criteria decision making in SLS using evolutionary approaches. Rapid Prototyp J 17:458–478. https://doi.org/10.1108/13552541111184198

Li J, Hu J, Cao L, Wang S, Liu H, Zhou Q (2021) Multi-objective process parameters optimization of SLM using the ensemble of metamodels. J Manuf Process 68:198–209. https://doi.org/10.1016/j.jmapro.2021.05.038

Meng L, Zhao J, Lan X, Yang H, Wang Z (2020) Multi-objective optimisation of bio-inspired lightweight sandwich structures based on selective laser melting. Virtual Phys Prototyp 15:106–119. https://doi.org/10.1080/17452759.2019.1692673

Chaudhry S, Soulaïmani A (2022) A comparative study of machine learning methods for computational modeling of the selective laser melting additive manufacturing process. Appl Sci 12:2324. https://doi.org/10.3390/app12052324

Carvalho DV, Pereira EM, Cardoso JS (2019) Machine learning interpretability: a survey on methods and metrics. Electronics 8:832. https://doi.org/10.3390/electronics8080832

Asadollahi-Yazdi E, Gardan J, Lafon P (2018) Multi-objective optimization of additive manufacturing process. IFAC-PapersOnLine 51:152–157. https://doi.org/10.1016/j.ifacol.2018.08.250

Molnar C (2022) Interpretable machine learning: a guide for making black box models explainable. Second edition. Munich, Germany: Christoph Molnar

Fel T, Cadène R, Chalvidal M, Cord M, Vigouroux D, Serre T (2021) Look at the variance! efficient black-box explanations with sobol-based sensitivity analysis. https://doi.org/10.48550/arXiv.2111.04138

Gu D, Shen Y (2009) Balling phenomena in direct laser sintering of stainless steel powder: metallurgical mechanisms and control methods. Mater Des 30:2903–2910. https://doi.org/10.1016/j.matdes.2009.01.013

Li R, Liu J, Shi Y, Wang L, Jiang W (2012) Balling behavior of stainless steel and nickel powder during selective laser melting process. Int J Adv Manuf Technol 59:1025–1035. https://doi.org/10.1007/s00170-011-3566-1

Tang M, Pistorius PC, Beuth JL (2017) Prediction of lack-of-fusion porosity for powder bed fusion. Addit Manuf 14:39–48. https://doi.org/10.1016/j.addma.2016.12.001

Bayat M, Thanki A, Mohanty S, Witvrouw A, Yang S, Thorborg J et al (2019) Keyhole-induced porosities in laser-based powder bed fusion (L-PBF) of Ti6Al4V: high-fidelity modelling and experimental validation. Addit Manuf 30:100835. https://doi.org/10.1016/j.addma.2019.100835

Forien J-B, Calta NP, DePond PJ, Guss GM, Roehling TT, Matthews MJ (2020) Detecting keyhole pore defects and monitoring process signatures during laser powder bed fusion: A correlation between in situ pyrometry and ex situ X-ray radiography. Addit Manuf 35:101336. https://doi.org/10.1016/j.addma.2020.101336

Husslage BGM, Rennen G, van Dam ER, den Hertog D (2011) Space-filling Latin hypercube designs for computer experiments. Optim Eng 12:611–630. https://doi.org/10.1007/s11081-010-9129-8

Pronzato L, Müller WG (2012) Design of computer experiments: space filling and beyond. Stat Comput 22:681–701. https://doi.org/10.1007/s11222-011-9242-3

Cioppa TM, Lucas TW (2007) Efficient nearly orthogonal and space-filling Latin hypercubes. Technometrics 49:45–55. https://doi.org/10.1198/004017006000000453

Das S, Tesfamariam S (2022) State-of-the-art review of design of experiments for physics-informed deep learning. https://doi.org/10.48550/arXiv.2202.06416

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Glob Optim 13:455–492. https://doi.org/10.1023/A:1008306431147

Wang S, Lv L, Du L, Song X (2019) An improved LHS approach for constrained design space based on successive local enumeration algorithm. 2019 IEEE 9th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), p 896–9. https://doi.org/10.1109/CYBER46603.2019.9066677

Romano S, Nezhadfar PD, Shamsaei N, Seifi M, Beretta S (2020) High cycle fatigue behavior and life prediction for additively manufactured 17–4 PH stainless steel: effect of sub-surface porosity and surface roughness. Theoret Appl Fract Mech 106:102477. https://doi.org/10.1016/j.tafmec.2020.102477

Kersting K, Plagemann C, Pfaff P, Burgard W (2007) Most likely heteroscedastic Gaussian process regression. Proceedings of the 24th international conference on Machine learning, New York, NY, USA: Association for Computing Machinery, p 393–400. https://doi.org/10.1145/1273496.1273546

Refaeilzadeh P, Tang L, Liu H (2009) Cross-Validation. In: Liu L, Özsu MT (eds) Encyclopedia of database systems. Springer US, Boston, pp 532–8. https://doi.org/10.1007/978-0-387-39940-9_565

Saltelli A (ed) (2008) Global sensitivity analysis: the primer. Wiley, Chichester

Castillo E, Mínguez R, Castillo C (2008) Sensitivity analysis in optimization and reliability problems. Reliab Eng Syst Saf 93:1788–1800. https://doi.org/10.1016/j.ress.2008.03.010

Tanino T (1988) Sensitivity analysis in multiobjective optimization. J Optim Theory Appl 56:479–499. https://doi.org/10.1007/BF00939554

Virtanen P, Gommers R, Oliphant TE, Haberland M, Reddy T, Cournapeau D et al (2020) SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat Methods 17:261–72. https://doi.org/10.1038/s41592-019-0686-2

Harris CR, Millman KJ, van der Walt SJ, Gommers R, Virtanen P, Cournapeau D et al (2020) Array programming with NumPy. Nature 585:357–362. https://doi.org/10.1038/s41586-020-2649-2

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Blank J, Deb K (2020) Pymoo: Multi-objective optimization in Python. IEEE Access 8:89497–89509. https://doi.org/10.1109/ACCESS.2020.2990567

Herman J, Usher W (2017) SALib: An open-source Python library for sensitivity analysis. J Open Source Softw 2:97. https://doi.org/10.21105/joss.00097

Myers RH, Montgomery DC, Anderson-Cook CM (2016) Response surface methodology: process and product optimization using designed experiments, 4th edn. Wiley, Hoboken

Montgomery DC (2017) Design and analysis of experiments, 9th edn. John Wiley & Sons, Inc, Hoboken

Garud SS, Karimi IA, Kraft M (2017) Design of computer experiments: a review. Comput Chem Eng 106:71–95. https://doi.org/10.1016/j.compchemeng.2017.05.010

Mostafaei A, Zhao C, He Y, Reza Ghiaasiaan S, Shi B, Shao S et al (2022) Defects and anomalies in powder bed fusion metal additive manufacturing. Curr Opin Solid State Mater Sci 26:100974. https://doi.org/10.1016/j.cossms.2021.100974

Petelet M, Iooss B, Asserin O, Loredo A (2010) Latin hypercube sampling with inequality constraints. AStA Adv Stat Anal 94:325–339. https://doi.org/10.1007/s10182-010-0144-z

Johnson L, Mahmoudi M, Zhang B, Seede R, Huang X, Maier JT et al (2019) Assessing printability maps in additive manufacturing of metal alloys. Acta Mater 176:199–210. https://doi.org/10.1016/j.actamat.2019.07.005

(2022) Detection, classification and prediction of internal defects from surface morphology data of metal parts fabricated by powder bed fusion type additive manufacturing using an electron beam. Addit Manuf 54:102736. https://doi.org/10.1016/j.addma.2022.102736

Oliveira JP, LaLonde AD, Ma J (2020) Processing parameters in laser powder bed fusion metal additive manufacturing. Mater Des 193:108762. https://doi.org/10.1016/j.matdes.2020.108762

Crestaux T, Le Maître O, Martinez J-M (2009) Polynomial chaos expansion for sensitivity analysis. Reliab Eng Syst Saf 94:1161–1172. https://doi.org/10.1016/j.ress.2008.10.008

Vohra M, Nath P, Mahadevan S, Tina Lee Y-T (2020) Fast surrogate modeling using dimensionality reduction in model inputs and field output: Application to additive manufacturing. Reliab Eng Syst Saf 201:106986. https://doi.org/10.1016/j.ress.2020.106986

Quirante N, Javaloyes J, Ruiz-Femenia R, Caballero JA (2015) Optimization of chemical processes using surrogate models based on a kriging interpolation. Computer Aided Chemical Engineering, vol. 37, Elsevier, p. 179–84. https://doi.org/10.1016/B978-0-444-63578-5.50025-6

Kudela J, Matousek R (2022) Recent advances and applications of surrogate models for finite element method computations: a review. Soft Comput 26:13709–13733. https://doi.org/10.1007/s00500-022-07362-8

Sheng L, Zhao W, Zhou Y, Lin W, Du C, Lou H (2022) A surrogate model based multi-objective optimization method for optical imaging system. Appl Sci 12:6810. https://doi.org/10.3390/app12136810

Poëtte G (2019) A gPC-intrusive Monte-Carlo scheme for the resolution of the uncertain linear Boltzmann equation. J Comput Phys 385:135–162. https://doi.org/10.1016/j.jcp.2019.01.052

Rasmussen CE, Williams CKI (2006) Gaussian processes for machine learning. MIT Press, Cambridge

Durrande N (2017) Kernel design. Presented at the Gaussian Process Summer School, The University of Sheffield. http://gpss.cc/gpuqss16/slides/durrande_school.pdf

Wilson A, Adams R (2013) Gaussian process kernels for pattern discovery and extrapolation. Proceedings of the 30th International conference on machine learning, in Proceedings of Mach Learn Res 28(3):1067–1075. https://proceedings.mlr.press/v28/wilson13.html

Wong Y (1991) How Gaussian radial basis functions work. IJCNN-91-Seattle International Joint Conference on Neural Networks, vol. ii, Seattle, WA, USA: IEEE, p 133–8. https://doi.org/10.1109/IJCNN.1991.155326

Marchildon AL, Zingg DW (2023) A non-intrusive solution to the ill-conditioning problem of the gradient-enhanced Gaussian covariance matrix for Gaussian processes. J Sci Comput 95. https://doi.org/10.1007/s10915-023-02190-w

Basak S, Petit S, Bect J, Vazquez E (2022) Numerical issues in maximum likelihood parameter estimation for Gaussian process interpolation. In: Nicosia G, Ojha V, La Malfa E, La Malfa G, Jansen G, Pardalos PM et al (eds) Machine Learning, Optimization, and Data Science, vol 13164. Springer International Publishing, Cham, pp 116–31. https://doi.org/10.1007/978-3-030-95470-3_9

Mirtaheri SL, Shahbazian R (2022) Machine learning theory to applications, 1st edn. CRC Press, Boca Raton. https://doi.org/10.1201/9781003119258

Zhong X, Gallagher B, Liu S, Kailkhura B, Hiszpanski A, Han TY-J (2022) Explainable machine learning in materials science. Npj Comput Mater 8:1–19. https://doi.org/10.1038/s41524-022-00884-7

Kuhnt S, Kalka A (2022) Global sensitivity analysis for the interpretation of machine learning algorithms. In: Steland A, Tsui K-L (eds) Artificial intelligence, big data and data science in statistics: challenges and solutions in environmetrics, the natural sciences and technology. Springer International Publishing, Cham, pp 155–69. https://doi.org/10.1007/978-3-031-07155-3_6

Frey HC, Patil SR (2002) Identification and review of sensitivity analysis methods. Risk Anal 22(3):553–78. https://doi.org/10.1111/0272-4332.00039

Morio J (2011) Global and local sensitivity analysis methods for a physical system. Eur J Phys 32:1577–1583. https://doi.org/10.1088/0143-0807/32/6/011

Sobol′ IM (2001) Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math Comput Simul 55:271–280. https://doi.org/10.1016/S0378-4754(00)00270-6

Zhang X-Y, Trame MN, Lesko LJ, Schmidt S (2015) Sobol sensitivity analysis: a tool to guide the development and evaluation of systems pharmacology models. CPT Pharmacom Syst Pharmacol 4:69–79. https://doi.org/10.1002/psp4.6

Peter JEV, Dwight RP (2010) Numerical sensitivity analysis for aerodynamic optimization: a survey of approaches. Comput Fluids 39:373–391. https://doi.org/10.1016/j.compfluid.2009.09.013

Saltelli A, Tarantola S, Chan KP-S (1999) A quantitative model-independent method for global sensitivity analysis of model output. Technometrics 41:39–56. https://doi.org/10.1080/00401706.1999.10485594

Puy A, Becker W, Piano SL, Saltelli A (2022) A comprehensive comparison of total-order estimators for global sensitivity analysis. Int J Uncertain Quantif 12:1–18. https://doi.org/10.1615/Int.J.UncertaintyQuantification.2021038133

Ashby MF (2000) Multi-objective optimization in material design and selection. Acta Mater 48(1):359–69. https://doi.org/10.1016/S1359-6454(99)00304-3

Caramia M, Dell'Olmo P, editors (2008) Multi-objective Optimization. Multi-objective management in Freight logistics: increasing capacity, service level and safety with optimization algorithms, London: Springer, p 11–36. https://doi.org/10.1007/978-1-84800-382-8_2

Aboutaleb AM, Mahtabi MJ, Tschopp MA, Bian L (2019) Multi-objective accelerated process optimization of mechanical properties in laser-based additive manufacturing: case study on selective laser melting (SLM) Ti-6Al-4V. J Manuf Process 38:432–444. https://doi.org/10.1016/j.jmapro.2018.12.040

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Computat 6:182–197. https://doi.org/10.1109/4235.996017

Eiben AE, Smith JE (2015) Introduction to evolutionary computing. Springer Berlin Heidelberg, Berlin. https://doi.org/10.1007/978-3-662-44874-8

Powell MJD (2007) A view of algorithms for optimization without derivatives. Technical report DAMTP2007/NA03, Department of applied mathematics and theoretical physics, University of Cambridge, Cambridge

Runarsson TP, Yao X (2000) Stochastic ranking for constrained evolutionary optimization. IEEE Trans Evol Comput 4:284–294. https://doi.org/10.1109/4235.873238

Packwood D (2017) Bayesian optimization for materials science, vol 3. Springer Singapore, Singapore. https://doi.org/10.1007/978-981-10-6781-5

Li C, Rubín de Celis Leal D, Rana S, Gupta S, Sutti A, Greenhill S et al (2017) Rapid Bayesian optimisation for synthesis of short polymer fiber materials. Sci Rep 7:5683. https://doi.org/10.1038/s41598-017-05723-0

Gopakumar AM, Balachandran PV, Xue D, Gubernatis JE, Lookman T (2018) Multi-objective optimization for materials discovery via adaptive design. Sci Rep 8:3738. https://doi.org/10.1038/s41598-018-21936-3

Liu X, Wu Y, Wang B, Ding J, Jie H (2017) An adaptive local range sampling method for reliability-based design optimization using support vector machine and Kriging model. Struct Multidisc Optim 55:2285–2304. https://doi.org/10.1007/s00158-016-1641-9

Liu H, Ong Y-S, Cai J (2018) A survey of adaptive sampling for global metamodeling in support of simulation-based complex engineering design. Struct Multidisc Optim 57:393–416. https://doi.org/10.1007/s00158-017-1739-8

Greenhill S, Rana S, Gupta S, Vellanki P, Venkatesh S (2020) Bayesian optimization for adaptive experimental design: a review. IEEE Access 8:13937–13948. https://doi.org/10.1109/ACCESS.2020.2966228

Snoek J, Larochelle H, Adams RP (2012) Practical Bayesian optimization of machine learning algorithms. In Adv Neural Inf Process Syst Vol. 25. Curran Associates, Inc. https://papers.nips.cc/paper_files/paper/2012/hash/05311655a15b75fab86956663e1819cd-Abstract.html

Deng Y, Mao Z, Yang N, Niu X, Lu X (2020) Collaborative optimization of density and surface roughness of 316L stainless steel in selective laser melting. Materials 13:1601. https://doi.org/10.3390/ma13071601

Bouhlel MA, Hwang JT, Bartoli N, Lafage R, Morlier J, Martins JRRA (2019) A Python surrogate modeling framework with derivatives. Adv Eng Softw 135:102662. https://doi.org/10.1016/j.advengsoft.2019.03.005

Saltelli A, Annoni P, Azzini I, Campolongo F, Ratto M, Tarantola S (2010) Variance based sensitivity analysis of model output. Design and estimator for the total sensitivity index. Comput Phys Commun 181:259–70. https://doi.org/10.1016/j.cpc.2009.09.018

Hort M, Sarro F (2021) The effect of offspring population size on NSGA-II: a preliminary study. Proceedings of the Genetic and Evolutionary Computation Conference Companion, New York, NY, USA: Association for Computing Machinery, p 179–180. https://doi.org/10.1145/3449726.3459479

Li X, Li X, Wang K, Yang S, Li Y (2021) Achievement scalarizing function sorting for strength Pareto evolutionary algorithm in many-objective optimization. Neural Comput Appl 33:6369–6388. https://doi.org/10.1007/s00521-020-05398-1

Villarreal-Marroquin MG, Mosquera-Artamonov JD, Cruz CE, Castro JM (2020) A sequential surrogate-based multiobjective optimization method: effect of initial data set. Wireless Netw 26:5727–5750. https://doi.org/10.1007/s11276-019-02212-2

Gunnell LL, Manwaring K, Lu X, Reynolds J, Vienna J, Hedengren J (2022) Machine learning with gradient-based optimization of nuclear waste vitrification with uncertainties and constraints. Processes 10:2365. https://doi.org/10.3390/pr10112365

Morales JL, Nocedal J (2011) Remark on “algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound constrained optimization.” ACM Trans Math Softw 38:7:1–7:4. https://doi.org/10.1145/2049662.2049669

Byrd RH, Lu P, Nocedal J, Zhu C (1995) A limited memory algorithm for bound constrained optimization. SIAM J Sci Comput 16(5):1190–1208. https://doi.org/10.1137/0916069

Le QV, Smola AJ, Canu S (2005) Heteroscedastic Gaussian process regression. In Proceedings of the 22nd International conference on machine learning. Assoc Comput Mach, ICML ’05. New York, NY, USA, pp 489–96. https://doi.org/10.1145/1102351.1102413

Liu S, Stebner AP, Kappes BB, Zhang X (2021) Machine learning for knowledge transfer across multiple metals additive manufacturing printers. Addit Manuf 39:101877. https://doi.org/10.1016/j.addma.2021.101877

Feurer M, Letham B, Hutter F, Bakshy E (2022) Practical transfer learning for Bayesian optimization. https://doi.org/10.48550/arXiv.1802.02219

Theckel Joy T, Rana S, Gupta S, Venkatesh S (2019) A flexible transfer learning framework for Bayesian optimization with convergence guarantee. Expert Syst Appl 115:656–672. https://doi.org/10.1016/j.eswa.2018.08.023

Daulton S, Eriksson D, Balandat M, Bakshy E (2022) Multi-objective Bayesian optimization over high-dimensional search spaces. https://doi.org/10.48550/arXiv.2109.10964

Daulton S, Balandat M, Bakshy E (2020) Differentiable expected hypervolume improvement for parallel multi-objective Bayesian optimization. http://arxiv.org/abs/2006.05078

Ethics declarations

Competing Interests

The authors declare no competing interests.

About this article

Cite this article

Heddar, M.I.E., Mehdi, B., Matougui, N. et al. Adaptable multi-objective optimization framework: application to metal additive manufacturing. Int J Adv Manuf Technol 132, 1897–1914 (2024). https://doi.org/10.1007/s00170-024-13489-9

DOI: https://doi.org/10.1007/s00170-024-13489-9