Abstract

The question of how methods from the field of artificial intelligence can help improve the conventional frameworks for topology optimisation has received increasing attention over the last few years. Motivated by the capabilities of neural networks in image analysis, different model-variations aimed at obtaining iteration-free topology optimisation have been proposed with varying success. Other works focused on speed-up through replacing expensive optimisers and state solvers, or reducing the design-space have been attempted, but have not yet received the same attention. The portfolio of articles presenting different applications has as such become extensive, but few real breakthroughs have yet been celebrated. An overall trend in the literature is the strong faith in the “magic”of artificial intelligence and thus misunderstandings about the capabilities of such methods. The aim of this article is therefore to present a critical review of the current state of research in this field. To this end, an overview of the different model-applications is presented, and efforts are made to identify reasons for the overall lack of convincing success. A thorough analysis identifies and differentiates between problematic and promising aspects of existing models. The resulting findings are used to detail recommendations believed to encourage avenues of potential scientific progress for further research within the field.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Topology Optimisation (TO) is a mathematical approach to mechanical and multiphysics design aimed at maximising structural performance. Spatial optimisation of the distribution of material within a defined domain subject to sets of physical and geometric constraints, effectively increases the design freedom compared to other design approaches. Since the introduction of the homogenisation approach for topology optimisation (Bendsøe and Kikuchi 1988), the field has become an increasingly popular academic field as well as a practical design tool for industry, also fuelled by the developments in Additive Manufacturing (AM) permitting production of more complex features to better exploit the increased design freedom gained from TO. While the homogenisation approach demonstrated the promise of TO, it was considered to consist of complex operations and resulted in indistinct blurry optimised designs. Therefore, the SIMP (Solid Isotropic Material with Penalisation) approach (Bendsøe 1989; Zhou and Rozvany 1991) soon became the preferred method. This approach considers the relative material-density in each element of the Finite Element (FE) mesh as design variables, allowing for a simpler interpretation and optimised designs with more clearly defined features. Later, alternative approaches to TO emerged, among others, evolutionary algorithms (Xie and Steven 1997), the level-set method (Allaire et al. 2002; Wang et al. 2003), feature-mapping methods (Norato et al. 2004; Guo et al. 2018; Wein et al. 2020) and stochastic metaheuristics such as Simulated Annealing (SA) and Genetic Algorithms (GA). The latter non-gradient based TO algorithms have been proven inefficient and intractable for practical problems (Sigmund 2011).

Common for the implementation of the different solution methods is that they use an iterative procedure to create a complex mapping from problem-defining characteristics (i.e. supports, loads and objective function) to an optimised structure. To ensure coherence with laws of physics the structure is governed by a system of partial differential equations. As the considered approach to TO is based on nested analysis and design, this system of equations must be solved for the intermediate solution in each iterate of these procedures. For problems increasing in size and complexity obtaining this solution becomes a highly computationally expensive process posing a challenge in large-scale topology optimisation. As accuracy and obtained detail of solutions are highly dependent upon the element size in FE-analysis, the applicability of topology optimisation for real-life design cases is limited by this computational complexity. Therefore, current developments within the field are strongly motivated by the desire to either limit the number of iterations needed to obtain an optimised structure or the computational cost of completing an iteration.

The technological development in high-performance computing has not only provided important support in the progress of topology optimisation, but also in other increasingly popular research fields such as Artificial Intelligence (AI). Especially prominent is the growth within the field of Machine Learning (ML) and its subfield Deep Learning (DL), offering promising capabilities in pattern recognition and approximation of complex relations. Machine learning is roughly a collection of model-frameworks for applied function approximations when explicit descriptions of input-target mappings are difficult or impossible (Goodfellow et al. 2018). The field has also evolved towards establishing ML-models for approximating probability distributions rather than predicting specific targets (Lee et al. 2017). Due to such developments within the field, there has been an emergence of algorithms that are able to solve specific tasks, i.e. object or face recognition, better than humans (Goodfellow et al. 2018). It is especially advances within image analysis by Artificial Neural Networks (ANNs) and deep learning which have motivated the growing research interest in applying such technologies to increase the efficiency of topology optimisation. Assuming a regular finite element mesh is used to discretise the design-domain, one obtains a direct relation between pixels in an image and the material-density distribution throughout the elements in the mesh. This makes the application of well-known image analysis type models directly applicable to the discretised domain in terms of data representation. Further, as topology optimisation in itself consists of several approximate mappings achieved by iterative solvers, i.e. for solving the PDEs or optimising the sub-problem in each iteration, the idea of reduced and more direct substitutes for these operations is alluring.

In the past few years there has been an increase in publications applying AI-frameworks in an attempt to reduce the computational cost of TO. Many of these proposed frameworks are motivated by the resemblance between an element-based material distribution and an image, and the significant developments within deep learning for pattern recognition in image analysis. Increasing attention has, however, not yet yielded much significant progress. The existing literature demonstrates several dead ends, where non-transparent presentations of results oversell the promise of model architectures with unrealistic expectations. Neural network models are in some works treated as magic black-boxes with capabilities exceeding human limits, overlooking well-known limitations within the AI field. The idea of iteration-free TO by use of deep learning is particularly prominent, but also problematic. This review article is a reaction to these apparent misconceptions about the current state of AI, and what these models are capable of. Much like Sigmund (2011) was a response to then current trends in non-gradient TO, this review seeks to clarify why many existing AI-applications in TO seem unfruitful.

It is noted that the field of using neural networks for inverse design generally is expanding rapidly, not only in mechanics but also in a wide range of different fields, and not only in TO but also for many other varieties of design parameterisations. This review will mainly concentrate on TO formulations in solid mechanics, but also include some discussions about alternative physics applications. Considering the rapid growth and expansion of this field, there is no guarantee that all relevant works are included in this review, however, it is believed that the selection of papers discussed are representative of the current state of the art.

The paper is structured as follows; Sect. 1.1 gives a brief introduction to machine learning and neural networks, Sect. 2 presents the literature considered in this review and the different applications of neural networks presented in these articles, Sect. 3 addresses how to assess and evaluate such solution frameworks in TO, Sect. 4 discusses the limitations of current AI-technology and how these are reflected in the reviewed literature, Sect. 5 formulates some recommendations for further research into AI-aided TO and Sect. 6 summarises the important findings and comments on future prospects.

1.1 Artificial intelligence and neural networks

Artificial intelligence is a branch of the computer science field aiming to simulate intelligent behaviour using computers (Tiwari et al. 2018). The conceptual idea of AI has been present for decades, but the real acceleration in research interest has only been apparent over the last few years. The resurrection and increasing popularity is a reaction to technological developments improving computing power and techniques, where especially the introduction of GPUs for more efficient parallel processing has been crucial or the determining factor. Currently, AI is one of the hottest research topics due to the prospects of efficient computer-driven applications and the dream of obtaining AI systems capable of matching or succeeding human capabilities. General AI refers to the concept of a machine able to mimic the intelligence of humans and can be applied to serve any relevant function. This type of AI is, however, not yet realised and the feasibility of obtaining such machines is unknown. Research, therefore, mostly concerns itself with the area of Narrow AI, which is designed for specific applications.

Within Narrow AI especially the sub-field of machine learning, and subsequently deep learning, has received increased attention. These sub-disciplines are focused on exploiting existing data to make algorithms or models capable of solving specific problems or serving particular functions.

Machine learning refers to the group of methods engineered to complete specific computational tasks intelligently by learning from existing data. The field distinguishes itself from general computational sciences as it aims to automate the task of analytical model building using data and experience, relieving the degree of human analysis and hardcoded rules needed. This separation between what is seen as human and artificial intelligence is not consistently agreed upon in the scientific community. Some go as far as deeming anything that is programmable as being AI, which would imply that conventional TO is also AI. The authors of this review paper do, however, support the definition presented by Copeland (2016), which describes the distinction by

So rather than hand-coding software routines with a specific set of instructions to accomplish a particular task, the machine is “trained”using large amounts of data and algorithms that give it the ability to learn how to perform the task.

A “traditional” gradient-based algorithm is indeed hand-coded and does not have any such built-in learning aspects. All changes and update rules are pre-programmed. Given deterministic computing conditions and perfect arithmetics, repeated applications will arrive at the exact same final solution, even if the algorithm navigates through a complicated design space. Potential variations in final solutions may be caused by imperfect arithmetics or non-deterministic computing, i.e. due to parallel execution, but these variations are not deliberate actions the algorithm does to improve performance for the next run, and hence nothing is learned. The same can be said about genetic algorithms. Given the same starting conditions and random seed, the algorithm will always converge to the same solution. If later solving a slightly perturbed design problem, there is no mechanism for exploiting knowledge from the previous study to improve the solution obtained for the new problem. With this definition, it is thus not correct to categorise TO in its traditional form as AI.

To illustrate what actually does qualify as AI, based on the presented definition, this section aims at introducing the core concepts of machine learning relevant for topology optimisation. The methods applied in the papers reviewed are specialised models based on versions and combinations of those to be presented in this brief theoretical introduction.

The majority of ML-methods used for topology optimisation are deep learning frameworks, meaning they are based on the use of Artificial Neural Networks (ANNs). Therefore, the following theory will focus on introducing such ANN-based methods. This family of methods is popular due to the associated design-flexibility resulting in possible modifications for a wide variety of applications (Janiesch et al. 2021). An ANN mimics information processing in biological systems by modelling connected processing units referred to as neurons, where the connections between them represent signal transmissions.

General structure of an ANN illustrating how the input-array \({\varvec{x}}\) flows through the layers of the network and is translated to some output-array \({\varvec{y}}\). Different network architectures are achieved by varying the number of hidden layers, the number of nodes within each hidden layer and the connections between the nodes in different hidden layers

In simple terms ANNs are used to represent non-linear functions, mapping some n-dimensional input to some m-dimensional output. Fig. 1 illustrates the general structure of an ANN where the neurons are represented by nodes and the signal transmissions as edges. The network consists of three elementary types of layers, namely input, hidden and output layers. Data are passed to the network in the input layer, and passed on through the hidden layers using the neural connections, before the mapped result is passed to the output layer. During each connection the signal from the origin node is multiplied by a weight and added to a bias before being passed through an activation function associated with the destination node. Different activation functions may be used for separate parts of the network, and the general definition is that such a function determines how the weighted sum of the neural input is transformed to the appropriate neural output. The typical choices of such activation functions is what introduces non-linearity in the ANN.

The different layers in an ANN as such represent nested function evaluations of the input data to obtain the desired output format. The nature of the overall model is determined by the network architecture in terms of number of hidden layers and number of neurons associated with each of these layers, as well as the weights, biases and activation functions used. The weight and bias parameters of a network are determined through the training process, where the model is fitted to the desired application based on available data. Training an ANN can be seen as a form of complex regression analysis or an optimisation problem, aimed at obtaining the best cost function for the model based on the desired input-output characteristics. Depending on the specific application this cost function may include simple measures like prediction accuracy or more complex measures such as distributional transport or equilibria to min-max games as discussed further below. There are several different learning algorithms available for such tasks, these are typically categorised by the characteristics of the desired model and the available data for knowledge extraction.

Figure 2 gives a general overview of learning methods in terms of training strategy and how they relate to TO applications. Supervised and unsupervised learning constitute the most commonly considered strategies for fixed datasets while reinforcement learning is a different experience-based approach (Goodfellow et al. 2016). Transfer learning acts as an extension applicable to any of the other strategies. To further elaborate on the fundamentals of ML it is also relevant to give an introduction to the mathematical models commonly applied within each of these categories.

Supervised learning is used when the training data consists of inputs with known corresponding output values, i.e. the x-values and desired y-values of Fig. 1 are known for each data sample. In this case the parameters of the network are updated as to minimise the prediction error, i.e. the loss function, between the model-obtained (\({\varvec{x}}\)) and target output (\({\varvec{y}}\)) across each of the training samples, such that an approximate map from input to output is achieved. Supervised learning is commonly applied when the aim is to achieve iteration-free TO (Abueidda et al. 2020; Yu et al. 2019) or when an efficient approximation of sensitivities is desired (Qian and Ye 2021). In the first case the inputs could be the problem boundary conditions and applied loads while the outputs are corresponding pre-optimised structures. In the latter case the inputs also include information about the structural design, i.e. the element density values, and the outputs could for instance be the displacement field or strain energy density for each element in the structure.

Unsupervised learning is used when the model should detect underlying patterns without any predefined output images. Instead, the formulation of the loss function alone controls the objective of the learning process. Care is therefore needed to ensure that the loss function measures the performance for the intended task, accounting for all aspects of what defines a desired output. On the other hand, this feature makes unsupervised learning more suited than supervised learning for problems where there are multiple useful outputs for each input. This would, however, require that one is only interested in one of the possible outputs for each input and that there exists an appropriate function for measuring the quality of the output. The unsupervised approach to achieving iteration-free TO could therefore circumvent the generation of pre-optimised structures by including the compliance and volume fraction constraint in the loss function (Halle et al. 2021). Unsupervised learning can also be used directly as an optimisation process of a reparameterised design representation (Chandrasekhar and Suresh 2021c; Deng and To 2021), or post-processing of an optimised structure by de-homogenisation (Elingaard et al. 2022).

Reinforcement learning is a process for discovering policies for how to best choose a sequence of actions to evolve a system from an initial state to reach some predefined goal. The loss function equivalent for this learning procedure is defined by a reward-punishment scheme evaluating the quality of an action. Reinforcement learning differs from unsupervised learning in that the possible system states and actions must be pre-defined. This strategy is useful for conducting optimisation tasks which can be reformulated as a Markov decision process like binary optimisation of trusses by evolutionary strategies (Hayashi and Ohsaki 2020). In this case, the system states correspond to a truss structure, formed by a set of members, the actions are the removal of some structural members, and the punishment or reward is measured by whether the chosen action leads to a violation of constraints (i.e. compliance or stress). As such, the goal is to achieve an optimised structure satisfying the constraints by iteratively removing structural members, and the trained model is used to determine what structural members to remove at each iteration. This learning strategy can also be used for exploration of the design space by choosing different parameter settings for topology optimisation (Sun and Ma 2020; Jang et al. 2022)

Transfer learning refers to when a pre-trained model developed to solve a specific task is re-purposed to a second task using the parameters and biases of the pre-trained network as initial settings in the training process for the new task. Knowledge gained from training the model to handle the initial task is as such used to limit the effort in terms of data samples and computational time needed to obtain good performance for solving a different but related task. The applicability and viability of transfer learning heavily depends on the generality of the initial task or how closely the different tasks are related. Transfer learning could be used to improve the performance of a model trained for iteration-free TO on new problems with boundary conditions, length scale or constraints different from those covered in the training cases for the original model.

Several of the solution frameworks contained in this review incorporate what is deemed active or online learning. This could either indicate that transfer learning is conducted during the optimisation procedure to improve the performance for the specific problem being solved, or that the learning procedure is re-started for each instance, where sequential transfer learning ensures adaption to the current problem. As for transfer learning, both supervised and unsupervised learning can be utilised in this way.

Based on some initial guesses for the weights and biases, an optimisation procedure is used to iteratively update the parameters to improve the performance measured by the loss function. There are different learning algorithms with different settings available to perform this training process, and an appropriate choice of method and settings is up to the designer.

In supervised and unsupervised learning some version of a gradient-based optimisation algorithm like gradient descent and Levenberg-Marquardt is commonly employed to determine the best choice for model parameters. The concept of backpropagation is often exploited to compute the desired gradients. Backpropagation simply refers to the procedure of computing the gradient of the loss function with respect to the model parameters using the chain rule, very similar to TO with multiple filtering operations (Wang et al. 2011). Reinforcement learning problems are represented as Markov Decision processes and differ from the other training categories in that the sequence of actions chosen are dependent. Therefore, the frameworks of the applied training algorithms are inspired by Dynamical Programming or probabilistic methods like Monte Carlo simulation.

In addition to the training procedure setup, the activation functions and the network architecture must be determined. The network architecture is defined by the number of hidden layers, the number of nodes in each of these layers and the connectivity between nodes of different layers. These characteristics are commonly referred to as the network hyperparameters and can both be determined manually or by a separate optimisation-like routine (Goodfellow et al. 2016).

The number of hidden layers is used to distinguish between shallow and Deep Neural Networks (DNNs). It is especially within the deep learning segment that technological advances have had an important influence, as an increasing number of hidden layers and thus an increasing model complexity, requires both more robust learning algorithms and more efficient hardware technology. As the number of parameters in a network grows, so does the memory consumption and time needed for training. Therefore it is crucial to exploit the flexibility in the network structure to increase performance for the desired task, while limiting the size of the network.

There are some well-established network architecture types that form the foundations for most ANN models. Feedforward Neural Networks (FNN) or Multilayer Perceptrons (MLPs) are acyclic ANNs where information only moves forward from the input layer, through the hidden layers sequentially, towards the output layer (Goodfellow et al. 2016). Feedforward neural networks are usually used for supervised learning of data that is neither sequential nor time-dependent. A network is fully connected if each neuron is connected to all neurons in the next layer. Such networks are useful as no special assumptions need to be made about the structure of the input. The drawback is however that this generality may hamper the model performance and require unnecessarily high computational costs.

Convolutional Neural Networks (CNNs) are a special case of feedforward networks which are not fully connected, and where weight sharing is used to make the networks translation equivariant. Translation equivariance means that the network has the same output for given features, regardless of where they are located in the input. CNNs are particularly useful for treating regular grids such as 2D or 3D images (Janiesch et al. 2021). A crucial ingredient in a CNN is the use of an ANN as a filter which can be seen as a sequence of discrete convolutions where each is followed by a non-linear mapping. Considering the input image to be the discrete function being convolved, the network weights define the convolution function while the activation functions introduce the non-linearity in the network. The concept of weight sharing means that the same filter can be placed in different locations of the input image reusing the same weights to extract the same features. Typically, many layers of such filters are used, paired with pooling, downsampling, or upsampling between layers. The term CNN refers to the entirety of the network constructed by these multiple layers of filters. Note that the weights are not shared between layers but only within each layer.

As such, weight sharing allows for the network to be trained to recognise the same objects anywhere in the image, even if the object placement is not varied in the training dataset. Another important benefit of weight sharing is that the size of the network is reduced, in terms of the number of parameters one need to adjust during training. The CNN-architecture therefore allows for using fewer training data samples to create a smaller network with improved performance.

The mentioned network architectures are suitable for supervised training and generating discriminative models, which are usually used for regression or classification with known output features. Alternatively, there are generative models that aim to learn some data distribution through unsupervised learning. One such model is the Variational Autoencoder (VAE) which aims at learning how to efficiently represent the data by compressing it to a latent vector, and consequently how to translate from such a latent vector back to the original input format. The corresponding network thus has an encoder-decoder structure similar to CNN, but the purpose is to accomplish a proficient dimensionality reduction of the data. Based on a trained VAE, new data instances can then be generated by sampling in the latent space and subsequently applying the decoding procedure. This allows for training a VAE to reduce the dimensionality of the design representation such that optimisation can be performed by iteratively updating the latent vector (Guo et al. 2014). The drawback of the VAE is that when used to generate new data one can obtain blurry samples as a consequence of the learned average data representation.

Generative Adversarial Networks (GANs) take a different approach to the generative task by coupling a generator network with a discriminator used to judge the quality of the data samples created by the generator. The training of a GAN constitutes a min-max game between the two networks where the generator aims at improving its ability to create “fake” data samples imitating the available training data, while the discriminator is trained to distinguish whether some input data sample is a generated fake or not. By this procedure the GAN learns how to create new seemingly real data samples. The provided data samples could consist of TO-optimised structures, where the generator then learns to generate new believable structural layouts and the discriminator learns how to detect inappropriate structures. In application the provided data samples could consist of TO-optimised structures, where the generator then learns to generate new believable structural layouts and the discriminator learns how to detect inappropriate structures. The model can then be used within a framework for diversifying design options for a specific mechanism (Oh et al. 2019; Rawat and Shen 2018)

A third family of generative models is Normalising Flows (NFs) which, in contrast to VAEs and GANs, explicitly learns the probability density function of the input data (Kobyzev et al. 2021). These models are constructed by invertible transformations mapping the complex distribution of observed data to a standard Gaussian latent variable. The latent space is in this case of the same dimensionality as the input and does therefore not suffer from the loss of information by averaging as VAEs do, where the latent space commonly serves as a compression of the input data. The invertible nature of the NF models allows for loss-less reconstruction of the input data and generative potential. This generative potential allows for high-dimensional image (Kingma and Dhariwal 2018; Dinh et al. 2016) and point cloud (Yang et al. 2019) generation and could be utilised for TO in a similar manner to VAE and GANs. NFs can further be used to map between image and point cloud representations (Pumarola et al. 2020) which could indicate potential for post-processing of TO optimized structures. To the best of our knowledge, there are no works in the current literature utilising NFs for TO.

As such, an overview of the modelling principles at the core of ML-applications for TO has been presented. How these strategies are combined and exploited to aid in the development of TO solution frameworks is covered in more detail by the following literature review.

2 Literature review

Initially the motivation behind combining AI with TO was related to the increasingly successful utilisation of deep learning models for image analysis and generation. This is reflected within the currently most popular applications of AI in TO, where some NN-architecture is trained in the hopes of generating viable structural images given problem descriptive inputs. In such approaches, one seeks to develop an AI-methodology replacing the need for conventional iterative optimisation methods. Other applications of AI-methods related to sub-procedures of the optimisation process are however also receiving increasing interest, with the hopes that one can develop models to support or fully replace certain computationally expensive components of the solution procedure.

2.1 Overview

The current literature on AI in TO can be categorised into five main groups. For the purpose of this review, these categories are defined as Direct design, Acceleration, Post-processing, Reduction and Design diversity. This section will give a quick overview over what these categories entail and connect them to the principal AI concepts utilised within each category.

Direct design refers to the strategy of creating learning models to directly predict an optimal structure when given some problem descriptive characteristics, and as such the aim is to achieve optimal structures “instantly”, in an iteration-free manner.

Acceleration refers to learning models used as supplements to conventional iterative solution methods, with the aim of reducing the computational costs. This is typically achieved through replacing the FE-analysis with some approximate model at a subset of the iterations, or by constructing a direct mapping between intermediate structures effectively skipping some subset of iterations.

Post-processing is defined as the modification of structures obtained through conventional TO or homogenisation usually aimed at ensuring manufacturability by changing the shape, determining microstructure configurations, smoothing of boundaries or as a substitute for de-homogenisation approaches.

Reduction is performed with the aim of reducing the size of the design space by constructing a model that describes the topology in a more compact way. This reparameterisation then allows for iterative optimisation with fewer design variables, which effectively speeds up the solution procedure. Note that such approaches resemble standard Model Order Reduction methods, but with the distinction that the nature of the AI approaches is different, since these are not explicitly programmed.

Design-diversity concerns generating multiple design solutions to the same topology optimisation problem and is somewhat related to finding the Pareto-front in multi-objective optimisation. A set of several candidate structures exhibiting different desired characteristics are generated providing multiple different design options to choose from.

For ease of describing trends within the different application areas Table 1 sorts most of the reviewed articles into appropriate, more specific sub-categories of each of the five main groups.

2.2 Categorisation

Within each of the five main categories presented, the research is built on similar fundamental ideas and motivations. Further, the resulting model performances exhibit mostly comparable strengths and weaknesses. Therefore, this section will focus on the contents of each category in a collective manner, highlighting works if distinction is deemed necessary.

2.2.1 Direct design

The direct design model approach is currently one of the most popular applications of AI in TO, and the aim is to directly achieve an optimised structure for a given problem definition, completely removing the need for expensive iterative procedures. Commonly this is achieved by implementing neural network architectures popular in image segmentation, like CNN or GAN. The structural design representation is typically defined by element densities within a regular FE-mesh, similar to the conventional SIMP approach, but some base their structural representation on geometrical features inspired by Feature Mapping or Moving Morphable Components (MMC) techniques (Zheng et al. 2021b; Hoang et al. 2022).

The considered optimisation problem is usually minimum compliance subject to a volume constraint, but other applications like thermal conduction problems, Li et al. (2019) and Lin et al. (2018), are also considered. Model inputs consist of boundary conditions, applied forces and volume fraction, given in spatial representation by a sequence of input matrices with dimensions equal to those of the considered FE-mesh. In certain works, additional inputs related to initial stress or strain (Nie et al. 2020b; Yan et al. 2022) and displacement fields (Wang et al. 2021c) are also included. The trained network is then used to map these inputs to some final structural design, either by regression as continuous grey scale element-density values, or by classification as binary black-and-white values indicating whether material is present within an element or not. Garrelts et al. (2021) presented a slightly different approach aiming at training a model to also handle rotated pictures taken of hand-sketched boundary conditions as input, and then mapping this image to an optimised Michell structure.

Most of the direct design models are trained in a supervised (CNN) or semi-supervised (GAN) manner where a large number of optimised structures are used as training output target samples. This means that at least one complete run of conventional TO must be completed for each problem case considered in the training process. Therefore it is critical to limit the size of the needed training dataset as well as the number of elements in each sample to make the computational time for building the desired model viable. This is however in conflict with obtaining a well-performing model that is able to handle a wide variety of different problems, as neural networks perform better on inputs that are similar to the previously seen training samples. These factors are likely the reason for most direct design models in the literature focusing on problems with fixed or very similar support conditions, only varying the volume fraction and applied loads, where the number of and possible placements of loads typically also come with limitations.

Common for most of these network architectures, is that they avoid fully connected layers. Therefore, in theory, they allow for flexibility in terms of the dimensions of and number of elements in the considered FE-mesh. Still, the presented training and test problems are typically restricted to a small fixed mesh where the input matrices and the output image for the network are explicitly defined by the dimensions of this fixed mesh, such that this potential adaptability is not exemplified. Further, when the network is only trained for the same regular mesh dimensions as a direct mapping from boundary conditions to an optimised structure, it is unclear whether the model is readily translated to problems with different mesh dimensions or resolutions, even given the inherent flexibility of the CNN. Zheng et al. (2021c) made some effort to ensure mesh flexibility by designing a network for a larger reference mesh with a mechanism for defining empty elements, such that the mesh dimensions could be varied within this reference domain. Their approach implies that the fixed-dimension reference mesh is defined before training, where it must be large enough to encompass the meshes of all problems the network will be used to solve in the future. In this review, it is found that none of the published direct design models explore whether it is possible to fully exploit the generality offered by CNN-types networks, in terms of input image dimensions.

If the network architecture is designed specifically for certain mesh dimensions, then the network size increases with the number of elements in the mesh, resulting in more parameters to be determined during training and a larger memory consumption for storing the model. In turn, this also increases the cost of obtaining training samples, as finer meshes imply more time needed to optimise a structure using conventional TO. It appears that such perceived mesh dependency of the direct design models is a limiting factor for research within this class of approaches. Further, the expense of generating high-resolution training samples and the increased structural complexity associated with higher resolution FE-meshes, are likely determining factors explaining why most of the current literature only considers low-resolution meshes, typically with fewer than 4,000 elements and at the most 26,000 elements (Lei et al. 2019; Li et al. 2019; Zheng et al. 2021c), which is five orders of magnitude below state-of-the-art TO methods using two billion elements (Baandrup et al. 2020).

Yu et al. (2019) considered 2D coarse grid problem cases with fixed boundary conditions, randomly sampled volume fraction between 0.2 and 0.8, and random single-point directional force application. By repeated sampling and application of open-source topology optimisation code (Andreassen et al. 2011) 100,000 corresponding optimised structures are generated, where a random subset of 80,000 of these are used for training and validating the network, while the remaining 20,000 are used for testing. The restricted sampling space, the large number of generated structures and the random selection of training and test data means that there is a high likelihood of each test-sample being similar to one of the training cases. Still, the reported results show that the prediction ability of the model is lacking when applied to the test-cases as larger structural disconnections are apparent in the predicted structures. Thus, thousands of expensive datasamples are collected to train a network which fails at solving problems strongly related to those seen by the network during training. Nakamura and Suzuki (2020) used the results reported by Yu et al. (2019) as a benchmark for their direct design network. By increasing the number of optimised structures used for training and validation of the model to 330,000, within the same sample-space, they reported a greater prediction accuracy in terms of pixel-wise density errors, as expected when allowing for more than three times the number of training instances. However, the worst case solutions still exhibit structural disconnections, implying a large prediction error in terms of the compliance of the design.

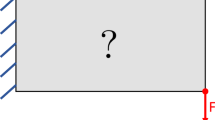

To illustrate both why such disconnections may occur and their effect on the structural performance, a simple test case inspired by the type of problems considered by Yu et al. (2019) and Nakamura and Suzuki (2020), is presented in Fig. 3-4. By reducing the density of two of the elements in the original structure (4a), the central bar is almost disconnected completely (4b). Applying volume-preserving thresholding (Sigmund and Maute 2013) the corresponding solid-void structures (4c) and (4d) are obtained, where a full disconnection is now obtained. The structural compliance with respect to the boundary conditions (Fig. 3) is indicated for each of the four presented structures. The presented test case is as such modelled on a square domain with a clamped left side, subjected to an external single-point load of horizontal magnitude 0.5 and vertical magnitude 1.0 applied to the top right node.

Compliance minimisation example for boundary conditions in Fig. 3 (subject to a volume fraction constraint of 0.2) illustrating the effect of disconnections on a 32x32 mesh. The grey scale structure (a) is obtained by top88(32,32,0.2,3,1.5,2) (Andreassen et al. 2011). Disconnections are imposed (b) and thresholding is applied to obtain the 0-1 counterparts (c)-(d)

Table 2 presents the mean average density error (MAE) as well as the relative increase in compliance (Gap) when comparing the connected and disconnected structures for both the grey scale and black-and-white designs. Firstly, it can be observed that for the grey scale structures, if the MAE was used to assess the difference between the two, they would be nearly identical. However, the compliance of the semi-disconnected structure is 12.9% higher than for the originally connected. After thresholding to fully black-and-white designs this effect is intensified, as the MAE remains below 0.4%, but the compliance is more than doubled. Therefore, if the model is trained with an increased focus on minimising errors related to image-reconstruction (e.g. MAE or Binary-Cross-Entropy) there is a risk of overlooking adverse effects when it comes to model performance. The works of Luo et al. (2021) and Behzadi and Ilies (2021) corroborate this suspicion, as physical performance or topology awareness is included in the loss function of the direct design model and a reduction in structural disconnections is observed. Halle et al. (2021) further considered fully unsupervised learning for direct TO where no disconnections are observed in the illustrated examples, but occurences of discontinuities and a loss of fine features are still presented. Note that with increased physical information embedded in training, FEA is needed each time the loss function is evaluated, making the actual training procedure much more computationally expensive. This could, however, reduce the overall cost of training data generation by obtaining higher accuracy using fewer optimised structures for training.

By presenting both the grey scale and 0-1 designs, other important aspects of comparing optimised structures are also highlighted. Firstly, considering the compliances of the two connected structures from Fig. 4, volume-preserving thresholding improves the structural performance significantly. Secondly, a partial disconnection by a low-density region in grey scale may result in a full disconnection when thresholded to a 0-1 design, which leads to a significant increase in compliance. As such, thresholding is important to reveal the true structural performance. Therefore, in line with the recommendations of Wang et al. (2021b), solid-void designs are advised for a fair comparison of optimised structures.

Based on the presented example, it is explained why MAE alone is not sufficient for either training a direct design model nor evaluating performance of a solution framework, as this measure may erroneously overestimate the performance of a structure. Further, the degree of grey scale may influence compliance comparisons between structures. Either a structure with more grey scale can be at a disadvantage or it can fail to capture a crucial structural disconnection. Hence, it is clearly a fundamental mistake to compare discrete to grey scale designs, or vice versa.

Bielecki et al. (2021) proposed an extended direct design approach utilising a three-step procedure. Given the problem-defining boundary conditions a DNN is first trained to realise a structure by determining the material distribution within the design-domain. Thereafter a CNN is used for structure refinement achieving reduced grey scale and smoother boundaries. In the last step conventional TO is applied for a maximum of 5 iterations to post-process the structure to ensure physical consistency and volume-constraint coherence, i.e. removing disconnections and ensuring that constraints are satisfied. The model was trained separately for 2D and 3D problems with fixed mesh sizes of 80x80 and 20x20x20 elements, respectively. Similar conditions for sampling of training data were utilised in both cases, where different volume fractions and supports or loads in corner nodes constituted the sampling space. To avoid rigid body motion, 3 out of 8 and 6 out of 24 degrees of freedom (DOFs) were fixed throughout for 2D and 3D cases, respectively. In total 614,304 samples were optimised and used for training for the 2D case, while for the more time-consuming 3D-case the training set size was restricted to 45,000 samples. Test-cases used for model assessment are obtained by sampling from the same problem space as for the training data generation. Comparative results are not reported across all 1,000 test samples, but a significant speed-up is obtained and for the presented results the compliance values are at least as good as those obtained by conventional TO. This holds true for both the 2D and 3D cases, but as a significantly smaller fraction of the problem space is used for training the 3D-model, the overlap between training and test problems is expected to be smaller. Due to the large number of optimised structures needed for training, the construction of the model is computationally expensive. Further, as the problem instances considered are sampled within a very restricted subset of possibilities that utilise the same coarse mesh resolution, transferring this framework to problems outside the training instance distribution is expected to increase the computational cost further.

A common trend amongst the papers within the direct design category is the requirement of a large set of TO-optimised structures, which becomes computationally expensive to collect and is likely the reason why these papers only consider very coarse scale fixed meshes and few variations in boundary conditions. Even with a large amount of training data and a very restricted problem space most works present results with poor structural performance. The use of image-reconstruction type loss functions only is a popular way of training the presented ANNs, significantly reducing the training time compared to if structural performance was to be integrated. Image-based errors do, however, not reflect the quality of a structure and thus the network learns based on an incorrect measure. There are other reasons for why the premise of this application category is flawed, which will be covered later in Sects. 3 and 4.

2.2.2 Acceleration

The application of AI-methods for accelerating TO is receiving increasing attention, where approaches both aim at limiting the number of iterations and complex computations needed within a conventional iterative optimisation procedure. The strategies in this category offer a more diverse profile than for the previously described direct design models, but there are some key similarities in the motivational ideas behind the presented works.

Sensitivity analysis An AI-method is considered to apply to sensitivity analysis when the aim is to replace or reduce the need for exact evaluations of sensitivities. Some of the works (Aulig and Olhofer 2013; Olhofer et al. 2014; Aulig and Olhofer 2015) contained in this section are only applicable, in the sense that they actually facilitate a speed-up, in cases where the sensitivities are difficult to obtain by conventional FEA and adjoint analysis. The claim of existence of such TO problems is often heard, but seldom exemplified. Other works (Chi et al. 2021; Qian and Ye 2021; Keshavarzzadeh et al. 2021b) try to reduce the computational load of, or completely eliminate, FE-analysis needed in the TO process.

Common for these approaches is that they aim to train a model to approximate complex computations by some functional relation. With this purpose some feed-forward neural network is constructed and trained as a regression model using supervised learning. Typically the network inputs consist of at least the current element densities. In some cases the loading conditions are also included and for procedures where FEA is still present in some form, displacement or strain energies are also supplied.

A single pass through the network may apply to one individual element, a patch of elements within the structure or all elements in the structure simultaneously. Approaches only considering subsets of elements at a time can allow for increased generalisation ability in that mesh- and problem-dependencies are potentially reduced, but on the other hand, important global information may be overlooked. Further, FE-analysis of a single structure and its density histories can provide a larger set of training samples with varying characteristics. As such, data generation is in most cases significantly cheaper than for the direct design models and there is potential to naturally capture greater input-diversity. By considering such sub-structures, the similarities between training and test data could be expected to increase, even with vastly different boundary conditions and load cases.

Lee et al. (2020) proposed a solution framework based on the conventional Optimality Criteria (OC) method, where two separate CNN-models are trained to predict compliance and volume fraction, respectively. For compliance this means that the need for FEA to evaluate the structural integrity is eliminated, replacing the computations with a less complex functional approximation. The conventional computation of the volume fraction is of linear complexity which is now replaced by some non-linear function represented by the corresponding CNN. The overall idea is that these neural networks will reduce the computational load of each iteration in the optimisation process, resulting in a significant speed-up. Most of the presented experimental results are focused on the network’s ability to predict volume fraction and compliance for a given structure, and thus the integration of the model in a TO process, where element sensitivities are needed, is not detailed. The MBB-beam and cantilever beam with fixed mesh discretisation and varying volume fractions are considered throughout the paper, both for training and testing. A full assessment of the method performance is therefore difficult, due to large similarities between training and test cases. Papadrakakis et al. (1998) and Sasaki and Igarashi (2019) similarly presented ANNs trained to predict objective and constraint values of a structure, aimed at replacing the fitness-evaluations in each iteration of a GA framework. Qiu et al. (2021) trained networks to iteratively remove material from a fully solid domain, similarly to evolutionary structural optimisation, but without the use of FEA in the actual optimisation procedure.

Aulig and Olhofer (2013); Olhofer et al. (2014) and Aulig and Olhofer (2015) focused on designing regression-type ML-models for predicting sensitivities when the adjoint approach is unattainable (not exemplified in their work) such that finite differencing is the only alternative. Standard compliance minimisation problems are used as examples, for which the formulas for exact sensitivities are known. The model inputs are related to the element densities and displacements (computed by FEA). In compliance minimisation the exact gradient of an element with respect to the objective is a function of these same features. This means that a good performance in terms of sensitivity accuracy can be expected for this exact problem formulation, but no conclusions about transferrability to other formulations can be made. A framework for further limiting the computational cost of the finite differencing alternative is also presented. Here the computational load of FEA is reduced by adaptive sampling of elements needed for exact evaluation, reducing the degrees of freedom in the FEA. This strategy is, however, not related to ML or NNs, and thus outside the scope of this review.

If the desired effect of the model is to reduce the computational load of FEA, but not necessarily completely removing this analysis technique from the optimisation procedure, another option for training the model is to facilitate for online learning. Online learning refers to when the model is not trained on pre-collected data, but rather trained during application to adapt to a specific problem. For the considered purpose, this entails that the model is trained during the optimisation run where FEA is then only completed in a subset of iterations and the obtained solutions are used in a sequential transfer learning procedure with an increasing number of data samples. Chi et al. (2021), and similarly Zhang et al. (2021a), presented such an approach where a transfer learning-based procedure is conducted after each set of new training data additions as to iteratively make the model more precise. The authors propose to perform the optimisation as a two-scale approach where a coarse grid version of the structure is subject to FEA at each iteration, while the trained model is applied to map these results to a finer mesh where FEA is only applied at a subset of the iterations. These approaches are as such not only concerned with sensitivity analysis, but utilise a multi-level TO approach to obtain the computational reductions associated with the sensitivity analysis. The presented results show promise and accuracy of the fine grid sensitivity predictions appear to improve with this online-approach. The approach and resulting speed-up are similar to those of multi-resolution techniques (Groen et al. 2017; Nguyen et al. 2012).

The main challenges for ANNs aimed at reducing the computational cost spent on FEA during optimisation are two-fold. Firstly, some approaches build on erroneous premises where the conventional alternatives are assumed less efficient than they really are, leaving these approaches redundant. Secondly, models developed for very specific problem instances become too restricted to be used as a general framework for TO. As for the multi-resolution online-learning approaches, these may be affected by coarse mesh restrictions of fine features when projected to a higher resolution. This phenomenon is common for the two-scale methods reviewed and will be covered in more detail when covering the upscaling category in Section 2.2.3.

Convergence If a model is trained to map between intermediate solution structures with the aim of reducing the total number of iterations in the optimisation procedure, it is said to pertain to accelerating convergence. Typical choices of methods are based on either a direct design-like model (Ye et al. 2021; Joo et al. 2021) or some time-series inspired forecasting (Kallioras et al. 2020). The direct design-type models map the input grey scale image of an intermediate design to an almost converged structure. Alternatively, the time-series inspired methods consider the trajectories of the densities of the individual elements and seek to directly map each element from a given iteration to a close to converged state.

The first approach inherits most of the challenges associated with direct design models. If the full image is to be mapped at once, the constructed model is likely to be mesh-dependent. Ensuring diversity and accuracy for different problem characteristics is difficult and comes with a large computational cost related to data sample generation. It does, however, benefit from the fact that more descriptive data is available as network inputs. From the performed optimisation iterations, used to reach the intermediate structure, both the displacement field and density history of the elements are known and can be used as inputs to the model.

Sosnovik and Oseledets (2017) trained a CNN to translate the grey scale image of the structure obtained after k SIMP iterations to a final black-and-white design. The training dataset was generated by running 100 iterations of SIMP on pseudo-random problem formulations on a 40x40 mesh. As inputs to the network the element densities at iteration \(k\le 100\) and the latest change in densities from iteration \(k{-}1\) to iteration k are supplied as two grey scale images. Different strategies for sampling k in each training sample were tested, and the output target considered was the black-and-white image obtained by thresholding the optimised structure at \(k=100\). The trained model is shown to outperform standard thresholding for the training dataset, but when tested on new problem formulations, heat conduction problems, the performances become similar. Performances are measured by binary similarities between structures, and no measures for compliance or volume fraction of the obtained structures are reported. Structural results are also illustrated for problems similar to the training datasamples on finer grids (up to 72x108 elements), but the effect seems to simply be smoothing of boundaries compared to coarser structures. Joo et al. (2021) proposed a similar approach, but instead of mapping the full structure at once, their model divided the structural image into overlapping sub-modules, which then separately are mapped to an optimised sub-structure, and the complete structure is subsequently obtained by integrating over these sub-modules.

One benefit of considering the structural image as patches or a whole instead of element wise is that some information about the interaction between the elements can be retained and learned by the CNN. In the time-series approach however, there is an assumption of independent density-trajectories of each element which might be problematic exactly because of the fact that the elements must have appropriate interaction to form a viable structure. Some of these interactions may be observable for the network given similarities in their iterative density histories before the mapping is applied. A benefit of this approach is that the generalisation ability of the proposed method is likely to increase as there is less mesh- and problem-dependency reflected in the training samples. Success of this method does, however, depend on the expected iterative trajectories being similar even for different problem definitions. A moving structural member will lead to bell-type trajectories, meaning the direction of the density-trajectory of the concerned elements change several times during the full iteration history. The assumption is therefore that an element’s density-trajectory during the first few iterations is sufficient to distinguish the elements for which this happens, no matter what structural problem is considered.

Kallioras et al. (2020) proposed a time-series approach where the iterative element density histories over the first 36 SIMP iterations were used as input to a neural network which individually maps the element densities to close-to-converged values to form a structure from which SIMP is continued until convergence. The model used is a Deep-Belief Network (DBN) which is a type of ANN where feature detection to achieve dimensionality reduction is conducted in each layer. As such, the input vector of the iterative density history of an element is gradually reduced to a final density value throughout the network. The network was trained on data samples consisting of the iterative history obtained from solving versions of the cantilever and simply supported beams with different length-scales and discretisations. The number of finite elements in these training samples ranged from 1,000-100,000, where four sets of boundary conditions for two different length-scales were solved for each resolution. When testing the model on problems different from the training cases, a computational speed-up is achieved. The speed-up is reported in terms of the number of SIMP-iterations needed to reach convergence, compared to the conventional approach. The obtained solutions have compliance values approximately matching those of the SIMP-obtained benchmarks. It should be noted that the comparisons to the conventional SIMP-approach is done in grey scale, which as shown in Fig. 4 may significantly underestimate the actual stiffness of a structure and thus the comparative results may not be representative.

Common for the presented convergence applications is that they assume part of the iterative density-history early in the optimisation procedure is sufficient to determine the nature of the final result. This can be a problematic assumption for problem instances where structural members move during optimisation causing large changes in both the individual element densities and the density field as a whole. The time-series approach based on individual pixel density histories is especially unlikely to succeed, but there may still be potential for approaches considering the global design change (Muñoz et al. 2022). It is reasonable to assume that given previous iteration history it is possible to predict the density-change after a subset of consecutive iterations, but by eliminating significant parts of the iterative search one is likely to face some of the same challenges as for the direct design applications.

2.2.3 Post-processing

AI-methods are considered as post-processing procedures when an optimised structure is used to generate the model input. As such, this application category pertains to methods for interpolating the given structure to a finer mesh resolution or shape optimisation and feature extraction for manufacturability purposes.

Shape optimisation When the aim of the formulated model is to alter the features of the obtained structure to ensure practical and cost-efficient manufacturability requirements are satisfied the method is said to perform post-processing by shape optimisation. Design aesthetics may also motivate such applications, where for instance Vulimiri et al. (2021) considered TO for minimal compliance while adhering to some reference design for structural patterns like circles or spider-webs.

Two of the works presented in this category, Lin and Lin (2005) and Yildiz et al. (2003), each proposed versions of ANN designed to perform hole-classification in a TO-optimised structure. Lin and Lin (2005) proposed a two-stage procedure where the first ANN is trained to recognise the underlying basic geometric shape of the hole, when given an input in the form of invariant moments describing the geometric characteristics of the hole in the optimised structure. The second stage consists of several ANNs, one for each basic geometry group defined for the first ANN, and they are each trained to fit a detailed shape template for the hole, within their basic geometry group. The input format used is a set of distance and area-ratios of the hole image represented in a dimension-independent manner, and the network uses this information to map the hole to one of twelve predefined geometric shape templates within the considered shape-category. Yildiz et al. (2003) trained a single ANN which uses the grey scale image of the TO-optimised structure as input and computes confidence measures for each hole in the structure, based on the perceived similarities to four basic feature templates. The identified hole-shapes are then used to formulate a feature based part model which is subjected to shape optimisation to obtain the desired final structural layout. The main challenges associated with the presented works relate to the fact that they both rely on manually defined sets of possible shapes. Further, as the sizes of these sets are limited it is unclear whether anything is gained from applying AI-based models to perform the identification tasks. Yildiz et al. (2003) also found, by testing different ANN architectures, that the best results were obtained when the ANN only contained one hidden layer. This raises the question of whether alternative and simpler deterministic methods could achieve similar results.

Hertlein et al. (2021) proposed a direct design type GAN-model with integrated manufacturing constraints for additive manufacturing and paired it with a post-processing procedure utilising conventional TO. The inputs to the model consist of channels relating directly to the 64x64 mesh considered, indicating supports and loads as well as build plate orientation to account for the manufacturability. The input encoding is constructed such that existence of material is encouraged in elements where the optimised structure is expected to have material, here defined by the locations of loads and build plate. The output from the GAN is a grey scale image representing the optimised topology. Training data are obtained running conventional TO (Andreassen et al. 2011) with an integrated overhang filter as presented by Langelaar (2017). It is also suggested that the resulting structure is post-processed by running some number of iterations of this conventional alternative, to further eliminate overhanging features and correct any compliance-related inaccuracies.

Overall, this type of application of AI-technology is not well-studied in the literature. This might be due to the introduction of filters and manufacturability constraint in the TO problem formulation and solution process reducing the need for post-processing, or that alternative conventional methods for post-processing with satisfactory performance exist. It would be of great benefit if ML could be used to extract CAD geometries from optimised designs, as many manufacturing methods require a format for structural representation which is not directly attainable from density-based designs. The viability of obtaining such a model is, however, not guaranteed. There is a body of literature on reverse engineering methods (Buonamici et al. 2018). These methods reconstruct CAD models from acquired 3D data in the form of triangle meshes or point clouds. Some methods produce constructive solid geometry (CSG) models (Du et al. 2018). These methods are often based on detecting primitive shapes in the input (Li et al. 2011) whereas Eck and Hoppe (1996) generated B-Spline patches from the input. Such methods could be exploited for post-processing of TO-optimised structures.

Upscaling The works belonging to the post-processing upscaling category typically consider a coarse grid structure optimised using conventional methods and apply some type of neural network to translate this structure to a finer mesh. There are various approaches to how to format the input to the considered model where Wang et al. (2021a) and Yu et al. (2019) evaluated the entire structural image of element densities as input while Napier et al. (2020) and Xue et al. (2021) divided the structure into patches of element densities that are processed individually but may have some overlap in terms of what elements belong to each patch. The latter option is likely to be the most beneficial in terms of generalisation ability, especially as the models have the potential to be more or less mesh-independent. Further, the patch-based approach may allow for fewer optimised high-resolution structures to be used for training data, and thus overall significant computational cost-savings may be achieved. One concern is, however, that the applied model does not have any concept of the structure as a whole, such that only boundary fine-tuning and no topology refinement is obtained.

Kallioras and Lagaros (2021) proposed a method (DL-scale) that somewhat differs from this approach as they apply deterministic upscaling, iteratively paired with the DBN convergence acceleration framework proposed in Kallioras et al. (2020). This work does therefore not apply AI-methodology for the actual upscaling, but is mentioned here as the reported results still reflect some of the common challenges within this category. Even though it is true that they observe significant speed-up for increasingly finer grids it becomes evident that the solutions obtained by the modified approach has a reduced capability of capturing finer features, when compared to the corresponding SIMP optimised structures. Further, for several of the reported cases, the level of grey scale appears to be higher for the DL-scale obtained structures, and as the minimum compliances compared in each test case are computed for grey scale images, it is unclear whether the overall better objective values obtained using DL-scale actually are representative. The lack of finer structural components when applying upscaling is a common occurrence in the literature, and works like Wang et al. (2021a) illustrate how the attempt to capture fine features might lead to structures with a large degree of blurry grey scale areas or even structural disconnections. Essentially, details on the fine scale are limited by the coarse scale resolution, which effectively works as a crude length-scale constraint.

Elingaard et al. (2022) proposed a CNN for mapping a set of lamination parameters on a coarse mesh to a fine scale design promoting very fine features. The network is as such used as a computationally efficient substitute for de-homogenisation (Pantz and Trabelsi 2008; Groen and Sigmund 2018) to overcome the current bottleneck in extraction of fine scale results in homogenisation-based topology optimisation. As inputs to the network the orientations from a homogenisation-based TO solution are used. The network is then used to upsample this information to an intermediate density field, which is post-processed using a sequence of graphics-based steps running in linear time to obtain the final high-resolution one-scale design. Unsupervised training is utilised to avoid the need for generating expensive targets and cheap input data generation for training is ensured by sampling from a surrogate field of low-frequency sines. The training is as such performed independently of the physical properties of the underlying structural optimisation problem, which makes the method mesh and problem independent. By numerical experiments, it is found that this approach achieves a speed-up of factor 5 to 10, compared to current state-of-the-art de-homogenisation approaches.

Common for the presented two-scale approaches aimed at translating structural information from a coarse to a fine grid, using the same measures, mainly densities or sensitivities, is that the coarse scale mesh imposes length-scale constraints on the fine grid. This means that little information is gained by utilising ANNs to perform this mapping, when compared to conventional interpolation techniques. This challenge does not occur if the ANN is applied for de-homogenisation, as here it serves as a tool for replacing a computational process which is a part of a pre-existing upscaling scheme where details are constructed from coarse scale information based on predefined rules.

2.2.4 Reduction

The typical approach for achieving reduction or problem re-parameterisation by use of AI-methods is to construct one or more inter-connected neural networks with the aim of representing a structure using fewer design variables and thus decrease the computational load of the optimisation procedure. This can be done by training a VAE for feature extraction and exploiting the reduced dimensionality of the obtained latent space to conduct the optimisation on this latent vector. Alternatively, the network can be constructed as a direct surrogate for the optimisation process such that the training of the network is equivalent to solving the given optimisation problem exploiting that the parameters and biases of the network are sufficient as design variables.

Guo et al. (2018) considered a multi-objective thermal conduction problem for which a VAE is trained in a supervised manner, with the aim of minimising the reconstruction-error of the encoder-decoder network. The model is then tested by integration in various conventional optimisation frameworks, including gradient-based methods, genetic algorithms and hybrid versions of the two. By encoding the intermediate structure, design-updates can be executed in the reduced latent space. The new latent vector can then be translated to an interpretable structure by the decoder, which is next subjected to physical analysis. As such, FEA is still needed for the full design space, using the same mesh discretisation, meaning the computational cost of computing objective and sensitivities remains the same as for the conventional methods. Nevertheless, there might be a potential gain in performance by reducing the number of iterations required, as the number of FEAs reported to reach convergence varies between the different solution frameworks tested. However, few test cases are reported and little comparison to state-of-the art procedures is conducted.

Chandrasekhar and Suresh (2021c) used an ANN to re-parameterise the density function, and thus in principle making the density representation independent of the FE-mesh. When integrating the new structural descriptor into a conventional solution framework, the weights and biases of the ANN become the design-variables that are optimised through unsupervised learning with a loss function corresponding to a weighted sum of structural compliance and volume-constraint violation. This is equivalent to conventionally optimising a new design representation, meaning that the network is not subjected to any actual learning. Thus, this is an example of using an ANN without the learning aspect.

Later, Chandrasekhar and Suresh (2021b) showed how this framework can be extended to a multi-material TO problem where the distribution of two or more materials within the structure is obtained simultaneously with the optimised topology, and Chandrasekhar and Suresh (2021a) added a Fourier-series extension to the ANN to impose length-scale control. In either case, FEA is evaluated on the same FE-mesh, which in each iteration is constructed by sampling densities for the needed spatial coordinates using the ANN. As such, conventional physical analysis on a discretised grid is still necessary to compute the sensitivities of the objective (in this case also the loss function) with respect to element densities, after which the sensitivities with respect to network parameters can be determined by classical back-propagation. A promising feature of this application is that because of the analytical density-field representation, sharper structural boundaries can be obtained. Currently, however, the structures are projected on a fixed FE-mesh for analysis which means that the boundary effectively is blurred. Further, there is a loss of fine features in the structures obtained by the new solution procedure, and detail does not appear to increase much with finer meshes. The results presented in Chandrasekhar and Suresh (2021a) also indicate that artefacts from the coarse discretisation may cause non-physical structures in the upscaled results. Moreover, as >90% of the optimisation time is spent on the FEA, this approach is unlikely to provide any promising speed-up unless additional measures are implemented to reduce the efforts needed to complete this evaluation process.

Deng and To (2020) presented a re-parameterisation approach similar to that of Chandrasekhar and Suresh (2021c), but with an increased focus on enabling representation of detailed 3D-geometries. Their method is coined deep representation learning and several different test-cases illustrate the increased ability to achieve structures including finer features. A comparative study to conventional TO is not detailed in the article, but the results do encourage further exploration of this method’s capabilities. Additionally, applications related to post-processing, and more specifically extraction of CAD models for manufacturability, could potentially benefit from this approach.

Other similar versions of re-parameterisation applications are also attempted in the literature. Chen and Shen (2021) perform online training of a GAN to obtain an optimised structure. The model is trained to firstly ensure volume-constraint satisfaction and secondly compliance value minimisation in an iterative manner. Hoyer et al. (2019) and Zhang et al. (2021b) altered the approach to directly enforce the constraints in each iteration, reducing the loss function to compliance only. Deng and To (2021) replaced the level-set function with an ANN, Zehnder et al. (2021) combined the method with a second ANN aimed at predicting displacements to achieve mesh-free TO, and Greminger (2020) ensured manufacturability in each iteration by manipulations in the latent space of a trained GAN. Hayashi and Ohsaki (2020) and Zhu et al. (2021) performed reparameterisation by reinforcement learning for truss structure optimisation. As most of these approaches still perform FE-analysis on the full mesh in each iteration, reported speed-ups are mainly caused by a reduction in the number of iterations until convergence. Another common trend for these works is that the resulting structures have fewer fine scale features than the corresponding solutions obtained by conventional TO. One could therefore speculate whether the reduction in iterations is a result of the re-parameterisation causing a perceived larger filter radius or coarser mesh.

Most of the works utilising ANNs for reductions in the dimensionality of design representation do not rely on typical learning techniques, as the network is re-initialised before optimisation each time. Here the NN architectures are simply used as a reparameterisation of the density field which is then subjected to a conventional optimisation procedure. An evident challenge for such approaches is the decreased ability in representing fine features when using fewer network-defining parameters.

2.2.5 Design-diversity