Abstract

Reliability-based design optimization (RBDO) derives an optimum design that satisfies target reliability and minimizes an objective function by introducing probabilistic constraints that take into account failures caused by uncertainties in random inputs. However, existing RBDO studies treat all failures equally and derive the optimum design without considering the magnitude of failure exceeding the limit state. Since the damage caused by failure varies according to the magnitude of failure, a probabilistic framework that considers the magnitude of failure differently is necessary. Therefore, this study proposes a weighted RBDO (WRBDO) framework that assigns a different weight to each failure according to the magnitude of failure and derives an optimum design that quantitatively reflects the magnitude of failures. In the WRBDO framework, the weight function is modeled based on warranty cost or damage cost according to the magnitude of failure, and the weighted failure is determined by assigning different weights according to the magnitude of failure through the weight function. Then, weighted probabilistic constraints reflecting the weighted failure are evaluated. Sampling-based reliability analysis using the direct Monte Carlo simulation (MCS) is performed to evaluate the weighted probabilistic constraints. Stochastic sensitivity analysis that calculates the sensitivities of the weighted probabilistic constraints is derived, and it is verified through numerical examples that the stochastic sensitivity analysis is more accurate and efficient than the sensitivity analysis using the finite difference method (FDM). To enable the practical application of WRBDO, AK-MCS for WRBDO in which the Kriging model is updated to identify both the limit state and the magnitude of failures in the failure region is proposed. 
The results of various WRBDO problems show that WRBDO yields more conservative designs than conventional RBDO, and that the designs become more conservative as the slope of the weight function and the nonlinearity of the constraint functions increase. The optimum results of a 6D arm model show that, in WRBDO, the cost increases by 3.39% and the number of failure samples decreases by 88.48%, and that the weighted failures of WRBDO are on average 9.1 times larger than those of RBDO. The results of applying AK-MCS for WRBDO to the 6D arm model verify that AK-MCS for WRBDO enables practical application of WRBDO with a small number of function evaluations.


1 Introduction

Reliability-based design optimization (RBDO) has been studied to consider failures caused by uncertainties in the design of engineering systems and has been widely applied in fields such as mechanical engineering (Wang and Wang 2012; Shin and Lee 2014; Wang et al. 2020a), aerodynamics (Lian and Kim 2006; Kim et al. 2011; Hu et al. 2016; Kusano et al. 2020), vehicle safety (Shi and Lin 2016; Wang et al. 2020b), and structural analysis (Papadrakakis et al. 2005; López et al. 2017; Meng et al. 2018; Ni et al. 2020; Yang et al. 2020). RBDO introduces probabilistic constraints to derive the optimum design that minimizes the objective function and satisfies the target reliability. Recently, many studies on analytical and surrogate model-based methods have been conducted to improve the accuracy and efficiency of RBDO. Jiang et al. (2017) proposed an adaptive hybrid single-loop method (AH-SLM) that automatically finds and calculates the approximate most probable point (MPP) or the accurate MPP. An infeasible approximate MPP, which does not satisfy the KKT condition, is replaced by the accurate MPP obtained using an iterative control strategy (ICS) that adaptively updates the step length required to search for the MPP. This method solves the problem of the single-loop method deriving an incorrect MPP in complex nonlinear RBDO problems; however, the error that occurs in the approximation of the limit state function at the MPP still remains. To improve the computational efficiency of the novel second-order reliability method (SORM) that fully integrates a linear combination of non-central random variables, Park and Lee (2018) proposed a method to obtain the PDF of the quadratically approximated function using the convolution integral method. The proposed method achieves better computational efficiency than the existing novel SORM at the same accuracy; however, an error occurs when the nonlinear limit state function is approximated as a quadratic function at the MPP. Meng et al. (2019) proposed an importance learning method (ILM) that considers the importance degree of points on the limit state function during the active learning process, improving the computational efficiency and accuracy of reliability analysis based on the Kriging model; however, it retains the disadvantage that high-dimensional or highly nonlinear problems are not modeled effectively with the Kriging model.

However, when evaluating the probabilistic constraint in the RBDO problem, the aforementioned studies have limitations as the probability of failure is calculated by treating all parts of the failure region exceeding the limit state equally regardless of the extent to which the limit state is exceeded. In actual engineering applications, the damage caused by failure may vary according to the magnitude of failure (Mukhopadhyay et al. 2015; Karagiannis et al. 2017). Since failures have different magnitudes, a different weight should be assigned for each failure, and thus, a probabilistic framework that considers the magnitude of failure in the probability of failure calculation is necessary. Therefore, this study proposes a weighted RBDO (WRBDO) framework that determines the weighted failure according to the magnitude of failure and evaluates the weighted probabilistic constraints reflecting the weighted probability of failure. Weighted failure is determined by the weight function, which is a function of the magnitude of failure, and the weighted probability of failure is calculated as the proportion of the weighted failure among both safe and failure sets. In general, the magnitude of failure far from the limit state can be considered to be higher than that of failure near the limit state. In addition, the nonlinearity of the constraint function in the failure region also affects the magnitude of failure. In actual WRBDO applications, the weight function can be modeled based on warranty cost or damage cost according to the magnitude of failure. First, the function type is determined by considering the trend in warranty or damage costs according to the magnitude of failure. Then, the function parameters can be determined using the relationship between the magnitude of failure and the warranty or damage costs. As the cost incurred by failure increases, the weight function operates so that the weighted failure increases. 
The weight function quantifies how many low-magnitude failures correspond to one high-magnitude failure.

The reliability analysis of WRBDO evaluates the weighted probabilistic constraints. In this study, sampling-based reliability analysis is performed using the direct Monte Carlo simulation (MCS) method, which evaluates the weighted probabilistic constraints through a large number of function evaluations. As in RBDO, the design optimization process of WRBDO requires the sensitivities of the probabilistic responses derived from MCS (Lee et al. 2011). Sensitivity analysis can be performed with the finite difference method (FDM) using the difference between two probabilities of failure; however, a large number of samples is required to obtain an accurate sensitivity because of the statistical noise of MCS, and the number of MCS runs required for the sensitivity analysis increases with the number of random variables. In addition, the accuracy of the sensitivity obtained from FDM is reduced if an inadequate perturbation size is used (Montoya and Millwater 2017). Instead, this study presents a method to calculate the sensitivities of the weighted probabilistic constraints based on stochastic sensitivity analysis, which does not require approximation in calculating the sensitivities (Rahman 2009). The direct MCS method used for sampling-based reliability analysis is accurate but computationally inefficient. Therefore, AK-MCS, an active learning reliability method combining Kriging and MCS, is introduced, and AK-MCS for WRBDO, in which the Kriging model is updated to identify both the limit state and the magnitude of failures, is proposed. Once an accurate Kriging model is established by sequentially adding samples through a learning function that determines the next best sample, MCS can be applied to the Kriging model to evaluate the weighted probabilistic constraints without a heavy computational burden.

The innovations and contributions of this paper can be summarized as follows. The proposed WRBDO derives an optimum design that quantitatively reflects the magnitude of failures in the failure region, and therefore, it alleviates the limitation of the existing RBDOs that classify only safe or failure and cannot reflect the magnitude of failures in the design. Unlike risk-based approaches that achieve the same conservativeness for given magnitude of failures, WRBDO can achieve various conservativeness by reflecting the magnitude of failures differently through the establishment of appropriate weight functions. Since the weight function is established based on the information of the resulting damage or cost according to the magnitude of failure, it can be evaluated that WRBDO secures desirable conservativeness.

The rest of this paper is organized as follows. In Sect. 2, the conventional sampling-based RBDO is briefly reviewed. Section 3 suggests the necessity of the WRBDO framework and presents the proposed WRBDO framework, stochastic sensitivity analysis, and MCS for WRBDO framework. The AK-MCS for WRBDO is proposed in Sect. 4. Section 5 demonstrates the accuracy of sensitivities of the weighted probabilistic constraints through numerical examples consisting of highly nonlinear and/or high-dimensional problems. In addition, WRBDO results obtained using various optimization methods and weight functions are compared, and an engineering example is used to compare the results of RBDO and WRBDO. The AK-MCS for WRBDO is also validated through numerical examples in Sect. 5. Finally, discussions and conclusion are provided in Sect. 6.

2 Fundamentals of sampling-based RBDO

This section briefly reviews fundamentals of the conventional RBDO. Before explaining the sampling-based RBDO, Sect. 2.1 presents a general RBDO formulation. Then, Sect. 2.2 explains the stochastic sensitivity analysis that calculates sensitivities of probabilistic constraints without using sensitivities of limit states. Finally, Sect. 2.3 shows how to obtain the probability of failure and its sensitivity through MCS in the sampling-based RBDO.

2.1 RBDO formulation

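In the conventional RBDO framework, a general RBDO problem is formulated as follows:

$$\begin{aligned} & {\text{find}} \quad {\mathbf{d}} \\ & {\text{minimize}} \quad {\text{Cost}}({\mathbf{d}}) \\ & {\text{subject to}} \quad P[g_{j} ({\mathbf{X}}) > 0] \le P_{{F_{j} }}^{{{\text{Target}}}} ,\quad j = 1, \ldots ,nc \\ & {\mathbf{d}}^{L} \le {\mathbf{d}} \le {\mathbf{d}}^{U} ,\quad {\mathbf{d}} \in {\mathbb{R}}^{nd} ,\quad {\mathbf{X}} \in {\mathbb{R}}^{N} , \end{aligned}$$(1)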

where d is the nd-dimensional design variable vector; X is the N-dimensional random variable vector; \(P[ \cdot ]\) indicates the probability measure; \(P_{{F_{j} }}^{{{\text{Target}}}}\) is the target probability of failure for the jth probabilistic constraint \(g_{j} ({\mathbf{X}})\); dL and dU represent the lower and upper design bounds, respectively; and nc, nd, and N are the number of probabilistic constraints, design variables, and random variables, respectively.

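A reliability analysis evaluates the probabilistic constraint, which involves the probability of failure defined as follows:

$$P_{F} ({{\varvec{\upmu}}}) \equiv P[{\mathbf{X}} \in \Omega_{F} ] = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} = E\left[ {I_{{\Omega_{F} }} ({\mathbf{X}})} \right],$$(2)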

where \({{\varvec{\upmu}}}\) is the vector of means of the random input \({\mathbf{X}} = \{ X_{1} , \ldots ,X_{N} \}^{{\text{T}}}\); \(\Omega_{F}\) is the failure set defined as \(\{ {\mathbf{x}}:g({\mathbf{x}}) > 0\}\); \(f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\) represents the joint probability density function of X; and \(E[ \cdot ]\) is the expectation operator. \(I_{{\Omega_{F} }} ({\mathbf{x}})\) is an indicator function for the failure set and is defined as follows:

$$I_{{\Omega_{F} }} ({\mathbf{x}}) \equiv \begin{cases} 1, & {\mathbf{x}} \in \Omega_{F} \\ 0, & {\text{otherwise}}. \end{cases}$$(3)

2.2 Stochastic sensitivity analysis

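This section explains the stochastic sensitivity analysis for the probabilistic constraints. By taking the partial derivative of the probability of failure in Eq. (2) with respect to the ith design variable μi, the sensitivity of the probability of failure can be obtained as follows:

$$\frac{{\partial P_{F} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \frac{\partial }{{\partial \mu_{i} }}\int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} .$$(4)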

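Since \(I_{{\Omega_{F} }} ({\mathbf{x}})\) is not a function of μi, interchanging the integral and differential operators using Leibniz's rule of differentiation (Browder 1996) yields

$$\frac{{\partial P_{F} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\frac{{\partial f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}\,{\text{d}}{\mathbf{x}}} = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\frac{{\partial \ln f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} = E\left[ {I_{{\Omega_{F} }} ({\mathbf{X}})\frac{{\partial \ln f_{{\mathbf{X}}} ({\mathbf{X}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}} \right].$$(5)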

2.3 MCS for RBDO framework

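In the sampling-based RBDO, MCS is used to calculate the probability of failure and its sensitivity. The probabilistic constraint can be estimated by using MCS as follows:

$$P_{F} ({{\varvec{\upmu}}}) \cong \frac{1}{L}\sum\limits_{l = 1}^{L} {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)} \le P_{F}^{{{\text{Target}}}} ,$$(6)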

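where L is the number of MCS samples, \({\mathbf{x}}^{(l)}\) is the lth realization of X, and \(P_{F}^{{{\text{Target}}}}\) is the target probability of failure. Then, the sensitivity of the probability of failure in Eq. (5) can be obtained as follows:

$$\frac{{\partial P_{F} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} \cong \frac{1}{L}\sum\limits_{l = 1}^{L} {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)\frac{{\partial \ln f_{{\mathbf{X}}} \left( {{\mathbf{x}}^{(l)} ;\,{{\varvec{\upmu}}}} \right)}}{{\partial \mu_{i} }}} .$$(7)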

In Eqs. (6) and (7), a surrogate model can be utilized to reduce the computational burden of calculating the probability of failure and its sensitivity. Even if the surrogate model is used in Eq. (7), only the function values of the surrogate model are used in the indicator function and the sensitivity of the surrogate model is not required, and thus the sensitivity of probability of failure can be obtained accurately and efficiently.
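The MCS estimates of the probability of failure and its stochastic sensitivity can be illustrated with a minimal sketch. It assumes statistically independent normal inputs, for which the score function is \(\partial \ln f_{{\mathbf{X}}} /\partial \mu_{i} = (x_{i} - \mu_{i} )/\sigma_{i}^{2}\); the function name `mcs_pf_and_sensitivity`, its arguments, and the sample size are illustrative choices and are not taken from the paper.

```python
import math
import random


def mcs_pf_and_sensitivity(g, mu, sigma, n_samples=200_000, seed=0):
    """Estimate P_F and dP_F/dmu_i from a single Monte Carlo population.

    Assumption (not from the paper): independent normal inputs
    X_i ~ N(mu_i, sigma_i^2), so d ln f_X / d mu_i = (x_i - mu_i) / sigma_i^2.
    """
    rng = random.Random(seed)
    n = len(mu)
    fail_count = 0
    score_sum = [0.0] * n
    for _ in range(n_samples):
        x = [rng.gauss(mu[i], sigma[i]) for i in range(n)]
        if g(x) > 0.0:  # indicator of the failure set {x : g(x) > 0}
            fail_count += 1
            for i in range(n):
                score_sum[i] += (x[i] - mu[i]) / sigma[i] ** 2
    pf = fail_count / n_samples
    dpf = [s / n_samples for s in score_sum]
    return pf, dpf


if __name__ == "__main__":
    # Linear limit state g(x) = x1 + x2 - 3, used only because its exact
    # probability of failure is known in closed form.
    pf, dpf = mcs_pf_and_sensitivity(lambda x: x[0] + x[1] - 3.0,
                                     [1.0, 1.0], [0.5, 0.5])
    print(pf, dpf)
```

Only function values of `g` enter the estimate, so `g` could equally be a surrogate prediction; no gradient of the limit state is evaluated.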

3 Proposed WRBDO framework

This section explains the proposed WRBDO framework. Section 3.1 explains limitations of the existing RBDO framework and why the proposed WRBDO framework is necessary. Then, Sect. 3.2 presents the WRBDO framework and explains weighted probabilistic constraints that involve the weighted probability of failure calculated using a weight function. Section 3.3 derives sensitivities of the weighted probabilistic constraints. A method that evaluates the weighted probabilistic constraint and its sensitivity using MCS is explained in Sect. 3.4.

3.1 Necessity of WRBDO

As shown in the indicator function of Eq. (3), the reliability analysis of the conventional RBDO framework assigns the same value to all failures in the failure set; however, failures have different magnitude of failure, which is determined by the limit state function value: Fig. 1 shows an example of the limit state and MCS samples to illustrate the magnitude of failures exceeding the limit state, and the color bar indicates the limit state function values representing the magnitude of failures in the failure region. This implies that the conventional RBDO cannot explain different damages caused by each failure, and this is why it is necessary to develop a new RBDO framework where failures are differently evaluated according to their magnitudes. To this end, risk-based design optimization attempts to consider the failure magnitude in design optimization by introducing conditional value-at-risk (CVaR) and buffered probability of failure (bPoF) approaches (Rockafellar and Uryasev 2000; Rockafellar and Royset 2010; Zhang et al. 2016; Royset et al. 2017; Chaudhuri et al. 2020). In the CVaR approach, target reliability α is defined and the constraint is given so that the tail expectation of a set greater than or equal to the α-quantile is less than the failure threshold. The bPoF approach assigns a buffer zone to safe set near the failure threshold so that the expectation of the buffer zone and failure set becomes the failure threshold. Then, the proportion of the sum of the buffer zone and failure set among the total sets is defined as bPoF, and the constraint is given so that bPoF is less than or equal to the probability of failure. These risk-based approaches consider the magnitude of failure, but only simple tail expectation is reflected. In order to consider the magnitude of failure more realistically and delicately, the resulting damage should be considered as well as the magnitude of failure. 
That is, a new probability measure is necessary that quantifies how many low-magnitude failures correspond to one high-magnitude failure.

Therefore, this study proposes a WRBDO framework that defines the weighted probability of failure, which is the proportion of the sum of weighted failures—calculated by assigning weights to failures according to the magnitude of failure—among both safe and failure sets. The WRBDO framework considers the characteristics of the limit state function in the failure region and determines to what extent the magnitude of failure is reflected.

3.2 WRBDO framework

The flowchart for obtaining the weighted probability of failure in the WRBDO framework is shown in Fig. 2. For a given design, the failure region and the magnitude of failures can be specified. As mentioned in Sect. 3.1, the magnitude of failure determined by the limit state function in the failure region differs from failure to failure. One high-magnitude failure corresponds to several low-magnitude failures. For example, one failure with a cost of $1000 can be matched to 10 failures with a cost of $100 each. To reflect this relationship in the reliability analysis, a weight function is introduced to determine the weighted failure, which is defined as follows:

where f is a function that increases with the magnitude of failure in the failure set; three examples of weight functions are shown in Fig. 3. In Eq. (8), the weight is assigned to failure regions only, not to safe regions. The weight function can be modeled based on warranty cost or damage cost according to the magnitude of failure. The function type is determined by taking into account the trend in warranty or damage costs according to the magnitude of failure. Then, the function parameters can be determined using the relationship between the magnitude of failure and the warranty or damage costs. Since the weight function can have a significant effect on the optimization results of WRBDO, sufficient data are required to model the weight function accurately. As the cost incurred by failure increases, the weight function operates so that the weighted failure increases. The weight function quantifies how many low-magnitude failures correspond to one high-magnitude failure. Since the weight function represents the relationship between warranty or damage costs and the magnitude of failure, the number of low-magnitude failures corresponding to one high-magnitude failure can be determined by comparing the warranty or damage costs of high- and low-magnitude failures. Although weights can be similarly assigned to safe regions, only weighted failures are considered in this study.

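The weighted probability of failure, which is calculated by reflecting the weighted failure for the failure set, is expressed as follows:

$$P_{F,W} ({{\varvec{\upmu}}}) \equiv \frac{{\int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{W} \left( {g({\mathbf{x}})} \right)f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} }}{{\int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{W} \left( {g({\mathbf{x}})} \right)f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} + \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{S} }} ({\mathbf{x}})\,f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} }},$$(9)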

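where \(\Omega_{S}\) is the safe set defined as \(\{ {\mathbf{x}}:g({\mathbf{x}}) \le 0\}\) and \(I_{{\Omega_{S} }} ({\mathbf{x}})\) is an indicator function for the safe set defined as follows:

$$I_{{\Omega_{S} }} ({\mathbf{x}}) \equiv \begin{cases} 1, & {\mathbf{x}} \in \Omega_{S} \\ 0, & {\text{otherwise}}. \end{cases}$$(10)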

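The weighted probability of failure in Eq. (9) consists of two probabilities calculated from the failure set and the safe set. The probability calculated in the failure set is given by

$$P_{1} ({{\varvec{\upmu}}}) = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{W} \left( {g({\mathbf{x}})} \right)f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} = E\left[ {I_{{\Omega_{F} }} ({\mathbf{X}})\,f_{W} \left( {g({\mathbf{X}})} \right)} \right],$$(11)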

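and the probability calculated in the safe set is given by

$$P_{2} ({{\varvec{\upmu}}}) = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{S} }} ({\mathbf{x}})\,f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} = E\left[ {I_{{\Omega_{S} }} ({\mathbf{X}})} \right].$$(12)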

Compared to the probability of failure in Eq. (2), the safe region is considered in the calculation of the weighted probability of failure. In addition, the magnitude of failure is reflected through the weight function. Since the multi-dimensional integrals in Eqs. (11) and (12) are difficult to calculate, several approximation techniques can be used to ease the computational difficulties. However, in this study, direct MCS without any approximation is used to calculate the weighted probability of failure in order to present the basic concept of WRBDO, as covered in Sect. 3.4.

3.3 Stochastic sensitivity analysis for WRBDO

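This section explains the derivation of stochastic sensitivity for the weighted probabilistic constraints. By taking a partial derivative of the weighted probability of failure in Eq. (9) with respect to the ith design variable \(\mu_{i}\) and using the chain rule, the sensitivity of the weighted probability of failure can be obtained as follows:

$$\frac{{\partial P_{F,W} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \frac{{P_{2} ({{\varvec{\upmu}}})\dfrac{{\partial P_{1} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} - P_{1} ({{\varvec{\upmu}}})\dfrac{{\partial P_{2} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }}}}{{\left( {P_{1} ({{\varvec{\upmu}}}) + P_{2} ({{\varvec{\upmu}}})} \right)^{2} }}.$$(13)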

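The partial derivative of \(P_{1} ({{\varvec{\upmu}}})\) with respect to \(\mu_{i}\) is given by

$$\frac{{\partial P_{1} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \frac{\partial }{{\partial \mu_{i} }}\int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{W} \left( {g({\mathbf{x}})} \right)f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} ,$$(14)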

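and, similar to Eq. (5), interchanging the integral and differential operators using Leibniz's rule of differentiation yields

$$\frac{{\partial P_{1} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{W} \left( {g({\mathbf{x}})} \right)\frac{{\partial f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}\,{\text{d}}{\mathbf{x}}} = E\left[ {I_{{\Omega_{F} }} ({\mathbf{X}})\,f_{W} \left( {g({\mathbf{X}})} \right)\frac{{\partial \ln f_{{\mathbf{X}}} ({\mathbf{X}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}} \right],$$(15)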

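since \(I_{{\Omega_{F} }} ({\mathbf{x}})\) and \(f_{W} \left( {g({\mathbf{x}})} \right)\) are not functions of \(\mu_{i}\). Similarly, the partial derivative of \(P_{2} ({{\varvec{\upmu}}})\) with respect to \(\mu_{i}\) is given by

$$\frac{{\partial P_{2} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{S} }} ({\mathbf{x}})\frac{{\partial f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}\,{\text{d}}{\mathbf{x}}} = E\left[ {I_{{\Omega_{S} }} ({\mathbf{X}})\frac{{\partial \ln f_{{\mathbf{X}}} ({\mathbf{X}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}} \right].$$(16)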

Finally, the sensitivity of the weighted probability of failure in Eq. (13) can be obtained using Eqs. (11), (12), (15), and (16). As shown in Eqs. (15) and (16), the proposed stochastic sensitivity analysis uses the sensitivity of the joint distribution, which can be obtained analytically, whereas the FDM is based on the perturbation of the probabilistic response. Therefore, the proposed stochastic sensitivity analysis results in higher accuracy and efficiency compared to FDM.
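As an illustration of why the sensitivity of the joint distribution is available analytically, consider the special case of statistically independent normal inputs with means \(\mu_{k}\) and standard deviations \(\sigma_{k}\). This case is assumed here only for illustration; other marginal distributions or correlated inputs lead to different expressions.

$$\ln f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}}) = - \sum\limits_{k = 1}^{N} {\left[ {\ln \left( {\sigma_{k} \sqrt {2\pi } } \right) + \frac{{(x_{k} - \mu_{k} )^{2} }}{{2\sigma_{k}^{2} }}} \right]} \quad \Rightarrow \quad \frac{{\partial \ln f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = \frac{{x_{i} - \mu_{i} }}{{\sigma_{i}^{2} }}.$$

Under this assumption, each sample contributes a closed-form term to the expectations of the sensitivity, so no perturbed design has to be simulated.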

In comparison with Eq. (5), the stochastic sensitivity of the weighted probability of failure in Eq. (13) shows that the magnitude of failure is reflected through the weight function and the sensitivity of the joint distribution in the safe region is considered. If \(f_{W} \left( {g({\mathbf{x}})} \right) = 1\), the weighted probability of failure in Eq. (9) and its sensitivity in Eq. (13) can be simplified as follows:

$$P_{F,W} ({{\varvec{\upmu}}}) = \int_{{{\mathbb{R}}^{N} }} {I_{{\Omega_{F} }} ({\mathbf{x}})\,f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\,{\text{d}}{\mathbf{x}}} = P_{F} ({{\varvec{\upmu}}})$$(17)

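and

$$\frac{{\partial P_{F,W} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} = E\left[ {I_{{\Omega_{F} }} ({\mathbf{X}})\frac{{\partial \ln f_{{\mathbf{X}}} ({\mathbf{X}};\,{{\varvec{\upmu}}})}}{{\partial \mu_{i} }}} \right] = \frac{{\partial P_{F} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }},$$(18)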

respectively. This implies that the conventional sampling-based RBDO is a special case of the proposed WRBDO where \(f_{W} \left( {g({\mathbf{x}})} \right) = 1\).

3.4 MCS for WRBDO framework

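In this study, MCS is used to calculate the weighted probability of failure and its sensitivity. The weighted probabilistic constraint can be estimated by using MCS as follows:

$$P_{F,W} ({{\varvec{\upmu}}}) \cong \frac{{\sum\limits_{l = 1}^{L} {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)f_{W} \left( {g\left( {{\mathbf{x}}^{(l)} } \right)} \right)} }}{{\sum\limits_{l = 1}^{L} {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)f_{W} \left( {g\left( {{\mathbf{x}}^{(l)} } \right)} \right)} + \sum\limits_{l = 1}^{L} {I_{{\Omega_{S} }} \left( {{\mathbf{x}}^{(l)} } \right)} }} \le P_{F,W}^{{{\text{Target}}}} ,$$(19)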

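where \(P_{F,W}^{{{\text{Target}}}}\) is the target weighted probability of failure. To apply MCS in calculating the sensitivity of the weighted probability of failure in Eq. (13), the estimates of Eqs. (11), (12), (15), and (16) are given as follows:

$$P_{1} ({{\varvec{\upmu}}}) \cong \frac{1}{L}\sum\limits_{l = 1}^{L} {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)f_{W} \left( {g\left( {{\mathbf{x}}^{(l)} } \right)} \right)} ,$$(20)

$$P_{2} ({{\varvec{\upmu}}}) \cong \frac{1}{L}\sum\limits_{l = 1}^{L} {I_{{\Omega_{S} }} \left( {{\mathbf{x}}^{(l)} } \right)} ,$$(21)

$$\frac{{\partial P_{1} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} \cong \frac{1}{L}\sum\limits_{l = 1}^{L} {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)f_{W} \left( {g\left( {{\mathbf{x}}^{(l)} } \right)} \right)\frac{{\partial \ln f_{{\mathbf{X}}} \left( {{\mathbf{x}}^{(l)} ;\,{{\varvec{\upmu}}}} \right)}}{{\partial \mu_{i} }}} ,$$(22)

$$\frac{{\partial P_{2} ({{\varvec{\upmu}}})}}{{\partial \mu_{i} }} \cong \frac{1}{L}\sum\limits_{l = 1}^{L} {I_{{\Omega_{S} }} \left( {{\mathbf{x}}^{(l)} } \right)\frac{{\partial \ln f_{{\mathbf{X}}} \left( {{\mathbf{x}}^{(l)} ;\,{{\varvec{\upmu}}}} \right)}}{{\partial \mu_{i} }}} .$$(23)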

The sensitivity analysis of the weighted probability of failure using MCS described above requires neither the sensitivity of the constraint function nor that of the weight function. In addition, since no approximation is involved in the sensitivity analysis, the statistical noise of MCS is the only factor that degrades the accuracy of the sensitivity analysis, and it can be reduced by using a sufficiently large number of MCS samples.
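The procedure of this section can be sketched in a few lines. The sketch assumes that the weighted probability of failure has the ratio form \(P_{1} /(P_{1} + P_{2} )\), as read from the description of the failure-set and safe-set probabilities in Sect. 3.2, and that the inputs are independent normal variables. The function name `mcs_weighted_pf`, the example limit state, and the example weight function are hypothetical, and the sample size is far smaller than the one used in the numerical studies.

```python
import math
import random


def mcs_weighted_pf(g, f_w, mu, sigma, n_samples=200_000, seed=0):
    """Estimate P_FW = P1 / (P1 + P2) and its gradient with respect to mu.

    P1 = E[I_F * f_W(g(X))] and P2 = E[I_S]. Independent normal inputs are
    assumed, so d ln f_X / d mu_i = (x_i - mu_i) / sigma_i^2.
    """
    rng = random.Random(seed)
    n = len(mu)
    p1 = 0.0
    p2 = 0.0
    dp1 = [0.0] * n
    dp2 = [0.0] * n
    for _ in range(n_samples):
        x = [rng.gauss(mu[i], sigma[i]) for i in range(n)]
        score = [(x[i] - mu[i]) / sigma[i] ** 2 for i in range(n)]
        gx = g(x)
        if gx > 0.0:  # failure set: accumulate the weighted failure
            w = f_w(gx)
            p1 += w
            for i in range(n):
                dp1[i] += w * score[i]
        else:  # safe set: unit weight
            p2 += 1.0
            for i in range(n):
                dp2[i] += score[i]
    p1 /= n_samples
    p2 /= n_samples
    dp1 = [v / n_samples for v in dp1]
    dp2 = [v / n_samples for v in dp2]
    denom = p1 + p2
    pfw = p1 / denom
    # Quotient rule applied to P1 / (P1 + P2).
    grad = [(dp1[i] * p2 - p1 * dp2[i]) / denom ** 2 for i in range(n)]
    return pfw, grad


if __name__ == "__main__":
    # Hypothetical 1D example: g(x) = x - 1.5 with a linear weight 1 + 2 g.
    pfw, grad = mcs_weighted_pf(lambda x: x[0] - 1.5,
                                lambda gx: 1.0 + 2.0 * gx,
                                [0.0], [1.0])
    print(pfw, grad)
```

Passing `f_w = lambda gx: 1.0` makes the denominator equal to one and recovers the conventional probability of failure, which mirrors the special case noted in Sect. 3.3.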

4 AK-MCS for WRBDO

In the WRBDO framework, the direct MCS method, which evaluates the weighted probabilistic constraints through a large number of function evaluations, is very accurate but computationally inefficient. Therefore, to enable the practical application of WRBDO, this section proposes AK-MCS for WRBDO, in which the Kriging model is updated to identify both the limit state and the magnitude of failures. Once an accurate Kriging model is established by sequentially adding samples through a learning function that determines the next best sample, MCS can be applied to the Kriging model to evaluate the weighted probabilistic constraints without a heavy computational burden. In the existing AK-MCS for RBDO, the learning function is defined so that samples are added near the limit state, since the classification into safe or failure is the only interest; in WRBDO, however, a newly defined learning function is required to identify both the limit state and the magnitude of failures in the failure region. In Sect. 4.1, the learning function U used in the existing AK-MCS for RBDO and a new learning function proposed for the AK-MCS for WRBDO are explained. Then, the framework of AK-MCS for WRBDO is presented in Sect. 4.2.

4.1 Learning functions

A Kriging predictor is considered as a realization of a stochastic process. Kriging is an interpolation method based on a Gaussian process, and the uncertainty of local predictions can be quantified by the Kriging variance. In AK-MCS, the next best sample is determined through learning functions that consider this uncertainty information, and the Kriging model is sequentially updated by adding new samples. Learning functions represent the active learning characteristics of AK-MCS. In this section, the learning function U used in the existing AK-MCS for RBDO is explained, and a learning function named V is proposed for the AK-MCS for WRBDO.

4.1.1 Learning function U

The learning function U proposed by Echard et al. (2011) is used to identify the limit state in the AK-MCS for RBDO. To select a sample close to the limit state and having a large prediction uncertainty as the next best sample, the learning function U is defined as follows:

$$U({\mathbf{x}}) = \frac{{\left| {\hat{g}({\mathbf{x}})} \right|}}{{\sigma_{{\hat{g}}} ({\mathbf{x}})}},$$(24)

where \(\hat{g}({\mathbf{x}})\) and \(\sigma_{{\hat{g}}}\) are the mean and standard deviation of a Kriging model, respectively. Then, the next best sample x* is given as follows:

$${\mathbf{x}}^{*} = \mathop {\arg \min }\limits_{{{\mathbf{x}} \in S}} U({\mathbf{x}}),$$(25)

where S is a Monte Carlo population. The learning function U focuses on the samples near the limit state, and therefore, the ability to classify safety or failure of the sequentially updated Kriging model can be improved.

4.1.2 Learning function V

To evaluate weighted probabilistic constraints in WRBDO, the identification of the magnitude of failures as well as the limit state is required. To identify them, the learning function V is proposed for the AK-MCS for WRBDO as follows:

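Then, the next best sample x* is given as follows:

$${\mathbf{x}}^{*} = \mathop {\arg \max }\limits_{{{\mathbf{x}} \in S_{F} }} V({\mathbf{x}}),$$(27)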

where SF is a Monte Carlo population in the failure region, indicating that candidates for the next best sample are limited to samples in the failure region. The first term of the learning function V, \(f_{W} \left( {\hat{g}({\mathbf{x}})} \right)\), prioritizes the sample with a large weighted failure. This enables the exploration of the failure region by considering the magnitude of failure and the weight function. The second term of the learning function V, \(\sigma_{{\hat{g}}} ({\mathbf{x}})\), prioritizes the sample with a high prediction uncertainty. This term is also considered in the learning function U. Finally, the last term of the learning function V, \(f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\), prioritizes the sample with a high probability density. This enables the exploration of the limit state because the sample close to the limit state has a high probability density when the mean of random variables μ is located in the safe region. By sequentially updating the Kriging model with the samples obtained through the learning function V consisting of the three terms, both the limit state and the magnitude of failures in the failure region can be accurately identified, and therefore, weighted probabilistic constraints can be evaluated successfully.
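The selection of the next best sample can be sketched as follows. The sketch assumes that V is the product of the three terms described above, which is an assumption of this example rather than a form confirmed by the text. The fallback to U when no sample is predicted to fail follows the procedure of Sect. 4.2. The function names and the example values are hypothetical, and the Kriging mean, Kriging standard deviation, and joint density are taken as precomputed lists over the Monte Carlo population.

```python
import math


def learning_u(g_hat, sigma_hat):
    """U(x) = |g_hat(x)| / sigma_hat(x) (Echard et al. 2011)."""
    if sigma_hat == 0.0:
        return math.inf  # no prediction uncertainty at this sample
    return abs(g_hat) / sigma_hat


def learning_v(g_hat, sigma_hat, density, f_w):
    """Assumed product form: V(x) = f_W(g_hat(x)) * sigma_hat(x) * f_X(x; mu)."""
    return f_w(g_hat) * sigma_hat * density


def next_best_sample(g_hat, sigma_hat, density, f_w):
    """Return the index of the next best sample in the population.

    Candidates for V are the samples predicted to fail (g_hat > 0).
    If there are none, the sample with the minimum U is returned instead.
    """
    failure_idx = [i for i, gh in enumerate(g_hat) if gh > 0.0]
    if failure_idx:
        return max(
            failure_idx,
            key=lambda i: learning_v(g_hat[i], sigma_hat[i], density[i], f_w),
        )
    return min(
        range(len(g_hat)),
        key=lambda i: learning_u(g_hat[i], sigma_hat[i]),
    )


if __name__ == "__main__":
    # Hypothetical Kriging predictions for a four-sample population.
    idx = next_best_sample(
        g_hat=[-1.0, 0.2, 2.0, 0.5],
        sigma_hat=[0.5, 0.1, 0.3, 0.3],
        density=[0.3, 0.2, 0.01, 0.1],
        f_w=lambda g: 1.0 + g,
    )
    print(idx)
```

In this example the sample with the largest predicted failure magnitude is not selected, because its low probability density outweighs its large weight.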

4.2 Framework of AK-MCS for WRBDO

In this section, the active learning process of AK-MCS for WRBDO using the learning function V described in Sect. 4.1.2 is presented. The flowchart of AK-MCS for WRBDO is presented in Fig. 4. The active learning process consists of 8 steps.

(1) Generate an initial design of experiments (DoE) and perform function evaluations on the generated samples.

(2) Construct the Kriging model according to the generated DoE.

(3) Estimate the Kriging predictions, \(\hat{g}({\mathbf{x}})\) and \(\sigma_{{\hat{g}}}\), for the entire Monte Carlo population S.

(4) Evaluate stop criterion 1: if the maximum Kriging standard deviation is less than \(\varepsilon_{1}\), terminate the method and calculate the estimated weighted probability of failure \(\hat{P}_{F,W}\). If the prediction uncertainty of the Kriging model is sufficiently small, the limit state and failure region can be accurately identified without additional samples; \(10^{-10}\) is used as \(\varepsilon_{1}\) in this study.

(5) Evaluate stop criterion 2: if the average relative change of the sum of weighted failures \(\overline{{\delta_{{W_{F} }} }}\) is less than \(\varepsilon_{2}\), terminate the method and calculate the estimated weighted probability of failure \(\hat{P}_{F,W}\). The sum of weighted failures \(W_{F}\) is given as follows:

$$W_{F} = \sum\limits_{l = 1}^{L} {\left\{ {I_{{\Omega_{F} }} \left( {{\mathbf{x}}^{(l)} } \right)f_{W} \left( {g\left( {{\mathbf{x}}^{(l)} } \right)} \right)} \right\}} ,$$(28)

and the average relative change of \(W_{F}\) is given as follows:

$$\overline{{\delta_{{W_{F} }} }} = \frac{1}{2}\sum\limits_{i = N - 1}^{N} {\frac{{\left| {W_{F}^{i} - W_{F}^{i - 1} } \right|}}{{W_{F}^{i - 1} }}} .$$(29)

If the change in \(W_{F}\) is small even when a new sample is added, the Kriging model is considered to be converged; 0.1% is used as \(\varepsilon_{2}\) in this study.

(6) Identify the next best sample. As explained in Sect. 4.1.2, the learning function V uses the Monte Carlo population in the failure region as candidates for the next best sample. Therefore, if a failure region exists, the sample with the maximum learning criterion V becomes the next best sample x*. If a failure region does not exist, the focus is on identifying the limit state using the learning function U, and thus the sample with the minimum learning criterion U becomes the next best sample x*.

(7) Perform function evaluation on x* and update the DoE.

(8) Go back to Step 2 and construct the Kriging model according to the updated DoE.
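The two stop criteria of Steps 4 and 5 can be sketched as below. The sketch reads the index N in Eq. (29) as the current update of the Kriging model, so the average is taken over the last two updates, and it treats the criterion as not yet satisfied while fewer than three values of \(W_{F}\) are available or while a previous \(W_{F}\) is zero. Both conventions, as well as the function names, are assumptions of this example.

```python
def average_relative_change(w_f_history):
    """Average relative change of W_F over the last two updates, Eq. (29)."""
    if len(w_f_history) < 3:
        return float("inf")  # not enough updates to evaluate the criterion
    w = w_f_history[-3:]
    if w[0] == 0.0 or w[1] == 0.0:
        return float("inf")  # relative change undefined without failures
    changes = [abs(w[k] - w[k - 1]) / w[k - 1] for k in (1, 2)]
    return 0.5 * sum(changes)


def should_stop(max_sigma, w_f_history, eps1=1e-10, eps2=1e-3):
    """Return True if stop criterion 1 or stop criterion 2 is met.

    max_sigma   : maximum Kriging standard deviation over the population
    w_f_history : sums of weighted failures W_F, one value per update
    eps1, eps2  : thresholds (1e-10 and 0.1% as stated in Steps 4 and 5)
    """
    if max_sigma < eps1:  # stop criterion 1
        return True
    return average_relative_change(w_f_history) < eps2  # stop criterion 2


if __name__ == "__main__":
    print(average_relative_change([10.0, 10.0, 11.0]))
    print(should_stop(0.1, [100.0, 100.05, 100.1]))
```

If neither criterion is met, the loop continues with Step 6 and a new sample is added to the DoE.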

5 Numerical studies

In this section, the results of numerical studies related to the proposed WRBDO framework are presented. To analyze the characteristics of the WRBDO framework, Sect. 5.1 presents the results obtained by applying the direct MCS on WRBDO. Section 5.2 validates the AK-MCS for WRBDO, which is proposed in Sect. 4 to enable the practical application of WRBDO.

5.1 Direct MCS for WRBDO

Through numerical examples, this section verifies the stochastic sensitivity of the weighted probabilistic constraints derived in Sect. 3.3 and presents observations obtained from the results of the WRBDO applications. In order to focus on analyzing the characteristics of the WRBDO framework, this section performs WRBDO through the direct MCS without using a surrogate model. The FDM using MCS is used for comparison with the proposed stochastic sensitivity analysis. The benchmark is the fitting sensitivity, obtained by differentiating a polynomial fitted to weighted probability of failure values calculated near the design point where the sensitivity is measured. In the numerical examples, the MCS sample size for the stochastic sensitivity, the FDM, and the weighted probability of failure is \(10^{8}\). The results of stochastic sensitivities of the weighted probabilistic constraints for highly nonlinear and high-dimensional functions are explained in Sects. 5.1.1 and 5.1.2, respectively. Section 5.1.3 compares the results of a WRBDO problem obtained using the stochastic sensitivity and FDM. Observations obtained through various weight functions and standard deviations of the design variables are shown in Sect. 5.1.4. In Sect. 5.1.5, an engineering example using a 6D arm model is presented and the optimum results obtained from RBDO and WRBDO are compared.

5.1.1 Sensitivities of the weighted probabilistic constraints for highly nonlinear function

To verify the applicability of the proposed stochastic sensitivity analysis for highly nonlinear functions, a 2D polynomial function is given as follows:

where \(\begin{Bmatrix} Y \\ Z \end{Bmatrix} = \begin{bmatrix} 0.9063 & 0.4226 \\ 0.4226 & -0.9063 \end{bmatrix} \begin{Bmatrix} X_{1} \\ X_{2} \end{Bmatrix}\), and X1, X2 follow a normal distribution. The standard deviations of two random variables are 0.4 and all random variables are assumed to be statistically independent. A linear function given by

is used as the weight function in the failure region. The sensitivities of the weighted probability of failure at several design points are listed in Table 1. For the perturbation size of FDM, 0.1% of \(\mu_{i}\) is used. The fitting sensitivity with respect to \(\mu_{i}\), which is used as the benchmark sensitivity, is obtained by differentiating the function established using 20D polynomial fitting with the weighted probability of failure values calculated on the interval [\(\mu_{i}\) − 1, \(\mu_{i}\) + 1] with a step size of 0.01. The results in Table 1 show that the stochastic sensitivity and the sensitivity obtained using FDM agree well at the design points of the highly nonlinear function. Compared to the sensitivity obtained using FDM, the stochastic sensitivity is closer to the benchmark fitting sensitivity because it involves no approximation other than the statistical noise due to MCS, as explained in Sect. 3.4. In addition, the stochastic sensitivity analysis requires only one MCS at a given design point, whereas FDM requires N + 1 MCSs, where N is the number of design variables. Therefore, the stochastic sensitivity analysis derives the sensitivity of the weighted probabilistic constraints more accurately and efficiently than the sensitivity analysis using FDM, and does not require determination of a perturbation size.
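A minimal sketch of a score-function sensitivity estimate from a single MCS is given below. It is an illustration under stated assumptions rather than the formulation of Sect. 3.3: the weighted probability of failure is assumed to be \(E[f_{W}(g)I_{\Omega_{F}}]\) with failure at \(g > 0\), the weight \(1 + a\,g\) and the linear constraint are hypothetical, and the inputs are independent normal variables, for which the first-order score with respect to \(\mu_{i}\) is \((x_{i} - \mu_{i})/\sigma_{i}^{2}\).

```python
import numpy as np


def weight(g, slope):
    """Assumed weight: 1 + slope*g in the failure region (g > 0), 0 elsewhere."""
    return np.where(g > 0.0, 1.0 + slope * g, 0.0)


def weighted_pf_and_sensitivity(g_func, mu, sigma, slope, n_samples, rng):
    """Estimate P_{F,W} and dP_{F,W}/dmu from one set of MCS samples."""
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    x = rng.normal(mu, sigma, size=(n_samples, mu.size))
    w = weight(g_func(x), slope)
    # First-order score function of independent normal inputs w.r.t. the means.
    score = (x - mu) / sigma**2
    pfw = float(w.mean())
    dpfw_dmu = (w[:, None] * score).mean(axis=0)
    return pfw, dpfw_dmu


# Hypothetical linear constraint: failure when x1 + x2 exceeds 3.
g_linear = lambda x: x[:, 0] + x[:, 1] - 3.0
rng = np.random.default_rng(0)
pfw, grad = weighted_pf_and_sensitivity(g_linear, [1.0, 1.0], [0.4, 0.4], 2.0, 1_000_000, rng)
print(pfw, grad)
```

The same samples give both the constraint value and all N sensitivities, which is why no perturbation size has to be chosen.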

5.1.2 Sensitivities of the weighted probabilistic constraints for high-dimensional functions

Sensitivity analysis for 9D, 20D, and 30D polynomial functions is performed to verify that the proposed stochastic sensitivity analysis works well for high-dimensional functions. Table 2 shows the high-dimensional constraint functions, the properties of random variables, and the sensitivities of weighted probability of failure. All random variables are assumed to be statistically independent, and the function presented in Eq. (31) is used as the weight function. As in Sect. 5.1.1, the stochastic sensitivity and the sensitivity obtained using FDM agree well, and the stochastic sensitivity is closer to the fitting sensitivity compared to the sensitivity obtained using FDM. Therefore, the proposed stochastic sensitivity analysis can be used to accurately and efficiently derive the sensitivity of weighted probability of failure even for highly nonlinear and high-dimensional functions.
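For comparison, a forward-difference FDM estimate can be sketched as below, which makes the N + 1 MCS runs explicit. The weight function, the constraint, and the use of common random numbers across runs are assumptions made for this illustration; the paper's FDM settings beyond the 0.1% perturbation are not reproduced here.

```python
import numpy as np


def weighted_pf(g_values, slope):
    """Assumed P_{F,W} estimator: mean of (1 + slope*g) over failure samples (g > 0)."""
    w = np.where(g_values > 0.0, 1.0 + slope * g_values, 0.0)
    return float(w.mean())


def fdm_sensitivity(g_func, mu, sigma, slope, n_samples, rel_step=1e-3, seed=0):
    """Forward-difference sensitivity of P_{F,W}; requires nonzero means."""
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n_samples, mu.size))  # common random numbers (assumption)
    n_runs = 0

    def run(mean):
        nonlocal n_runs
        n_runs += 1
        return weighted_pf(g_func(mean + sigma * z), slope)

    base = run(mu)  # one MCS at the design point
    grad = np.empty(mu.size)
    for i in range(mu.size):  # one additional MCS per design variable
        h = rel_step * mu[i]
        shifted = mu.copy()
        shifted[i] += h
        grad[i] = (run(shifted) - base) / h
    return grad, n_runs


# Hypothetical 3D linear constraint: failure when the sum exceeds 4.2.
g_sum = lambda x: x.sum(axis=1) - 4.2
grad_fdm, runs = fdm_sensitivity(g_sum, [1.0, 1.0, 1.0], [0.4, 0.4, 0.4], 2.0, 1_000_000)
print(grad_fdm, runs)
```

Because only the few samples that cross the limit state within the perturbation contribute to the indicator part of the difference, the FDM estimate is noticeably noisier than a score-function estimate at the same sample size.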

5.1.3 WRBDO using the proposed stochastic sensitivity analysis

This section shows how the proposed stochastic sensitivity is applied to the WRBDO problem. Consider a 2D mathematical WRBDO problem formulated as follows:

where the weight function and three constraint functions are given as follows:

where \({\mathbf{d}} = \mu ({\mathbf{X}})\) is the design vector and \({\mathbf{x}}({\mathbf{d}})^{(l)}\) is the lth realization of \({\mathbf{X}}({\mathbf{d}})\). The cost function and three constraint functions are plotted in Fig. 5, and the deterministic optimum \({\mathbf{d}}^{0} = [5.1969,0.7405]^{{\text{T}}}\) obtained from deterministic design optimization is used as the initial design point to enhance the efficiency of the WRBDO. The properties of random variables are presented in Table 3 and they are assumed to be statistically independent. The target-weighted probability of failure (\(P_{F,W}^{{{\text{Target}}}}\)) is 2.275% for all three constraints as shown in Eq. (32).

Table 4 shows the WRBDO results obtained using various optimization methods. Sequential quadratic programming (SQP) is a gradient-based optimization method, and the optimization results obtained using the stochastic sensitivity and FDM are compared. Genetic algorithm (GA) is a non-gradient approach and is used to verify whether the proposed stochastic sensitivity works properly in the WRBDO framework. The optimum designs obtained using the stochastic sensitivity and FDM are identical; however, the number of iterations (the number of design points required to reach the optimum design) is smaller when the stochastic sensitivity is used. This indicates that, because the proposed sensitivity is more accurate, the WRBDO converges faster to the optimum design. The optimum results obtained using SQP and GA are nearly identical, indicating that the proposed stochastic sensitivity analysis works properly in the WRBDO framework.

5.1.4 WRBDO with various weight functions

Various weight functions are applied to the problem presented in Sect. 5.1.3 to observe the effect of the weight function on the results of WRBDO. Three cases are compared: a different weight function is used for each case, and the weight function of each case applies equally to the three constraint functions of Eqs. (34), (35), and (36). Each weight function is linear in the failure region, and the slope of the linear function is different for each case. The weight function of the first case is given as follows:

Since the weight function has the same value in both the safe region and the failure region, the magnitude of failure is not considered, and thus, the same result as in the existing RBDO is derived. The weight function presented in Eq. (33) is used in the second case. The weight function of the third case is given as follows:

Table 5 presents the WRBDO results of three cases, and the optimum designs are depicted in Fig. 6. The WRBDO designs of Cases 2 and 3 are more conservative than the RBDO design of Case 1, resulting in higher costs. Compared to Case 1, the costs of Cases 2 and 3 increase by 1.37% and 4.29%, respectively. The results also show that the weight function affects the optimum design. As the slope of the weight function in the failure region increases, the magnitude of failure is more significantly reflected, resulting in a more conservative design. This can be verified by comparing the number of failure samples, which is the number of samples in the failure region, in Cases 1, 2, and 3. As the slope of the weight function in the failure region increases, the decrease rate of the number of failure samples also increases. Compared to Case 1, the numbers of failure samples of g1 and g2 in Case 2 decrease by 21.98% and 24.37%, respectively, whereas in Case 3, the numbers of failure samples of g1 and g2 decrease by 56.16% and 59.54%, respectively. The decrease in the number of failure samples is due to the increased weight of each failure sample.
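The effect of the slope at a fixed design can be illustrated with a short sketch. The constraint function, the design point, and the slopes (0, 1, and 3) are hypothetical and are not those of Cases 1 to 3; the weighted probability of failure is again assumed to be the sample mean of \(1 + a\,g\) over the failure samples (\(g > 0\)).

```python
import numpy as np


def summarize(g_values, slope):
    """Return (number of failure samples, conventional P_F, assumed P_{F,W})."""
    failed = g_values > 0.0
    weights = np.where(failed, 1.0 + slope * g_values, 0.0)
    return int(failed.sum()), float(failed.mean()), float(weights.mean())


rng = np.random.default_rng(1)
x = rng.normal(loc=[2.0, 2.0], scale=[0.4, 0.4], size=(200_000, 2))
g = x[:, 0] ** 2 * x[:, 1] - 16.0  # hypothetical nonlinear constraint

results = {slope: summarize(g, slope) for slope in (0.0, 1.0, 3.0)}
for slope, (n_fail, pf, pfw) in results.items():
    print(f"slope={slope}: n_fail={n_fail}, P_F={pf:.5f}, P_FW={pfw:.5f}")
```

With zero slope the weighted and conventional probabilities coincide. For a steeper slope the same failure samples produce a larger weighted probability, so the optimizer must move the design away from the limit state to meet the same target, which is what reduces the number of failure samples at the optimum.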

In addition, the nonlinearity of the constraint function affects the WRBDO design. The numbers of failure samples of g1 and g2 in Case 1 are almost the same. However, in Cases 2 and 3, the numbers of failure samples of g2 are 3.05% and 7.71% lower than those of g1, respectively. The smaller number of failure samples for g2 indicates that the WRBDO design is more conservative for g2 than for g1. This is because the nonlinearity of g2 is higher than that of g1, so the failure samples of g2 tend to have relatively high weights, and thus, the design is derived to reduce the number of samples in the failure region. As the slope of the weight function increases, the weight given to the magnitude of failure increases, resulting in a more conservative design with fewer failure samples. From the perspective of the conventional probability of failure, this has the same effect as decreasing the target probability of failure in RBDO. Therefore, under the same number of MCS samples, the accuracy of the reliability analysis decreases, which in turn reduces the accuracy of the cost and the optimum design. For a clear comparison between the WRBDO design and the RBDO design, RBDO is performed by setting the conventional probabilities of failure of the WRBDO designs in Cases 2 and 3 (1.775% for g1 and 1.721% for g2 in Case 2, and 0.997% for g1 and 0.920% for g2 in Case 3) as the target probabilities of failure of the RBDO problem. The results show that the resulting RBDO designs are exactly the same as the WRBDO designs in Cases 2 and 3; in this sense, the weighted probability of failure of WRBDO provides RBDO with a more conservative target probability of failure.
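The equivalence between a WRBDO design and an RBDO design with a tightened target can be reproduced on a toy problem. The sketch below is an assumption-laden illustration, not the problem of Sect. 5.1.3: there is one normal design variable with mean \(\mu\), failure occurs when \(X > c\), the hypothetical weight is \(1 + a(X - c)\), and the "cost" is reduced by pushing \(\mu\) as close to \(c\) as the constraint allows. Closed-form probabilities replace MCS so that the comparison is free of sampling noise.

```python
import math


def tail_prob(z):
    """Standard normal upper-tail probability."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def pf(mu, c, sigma):
    """Conventional probability of failure P[X > c]."""
    return tail_prob((c - mu) / sigma)


def pfw(mu, c, sigma, slope):
    """Assumed weighted probability E[(1 + slope*(X - c)) * 1{X > c}]."""
    z = (c - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return tail_prob(z) + slope * sigma * (pdf - z * tail_prob(z))


def largest_feasible_mean(prob_func, target, lo, hi, tol=1e-12):
    """Bisection for the largest mean with prob_func(mean) <= target (prob_func increasing)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if prob_func(mid) <= target:
            lo = mid
        else:
            hi = mid
    return lo


c, sigma, slope, target = 3.0, 0.4, 2.0, 0.02275
lo, hi = c - 10.0 * sigma, c

mu_rbdo = largest_feasible_mean(lambda m: pf(m, c, sigma), target, lo, hi)
mu_wrbdo = largest_feasible_mean(lambda m: pfw(m, c, sigma, slope), target, lo, hi)

# Conventional P_F at the WRBDO design, reused as the RBDO target.
equiv_target = pf(mu_wrbdo, c, sigma)
mu_rbdo_equiv = largest_feasible_mean(lambda m: pf(m, c, sigma), equiv_target, lo, hi)

print(mu_rbdo, mu_wrbdo, equiv_target, mu_rbdo_equiv)
```

In this toy setting the weighted design sits farther from the limit state than the RBDO design with the same nominal target, and rerunning RBDO with the equivalent conventional target recovers the weighted design.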

In order to observe the effect of standard deviations of design variables on WRBDO results, optimum results obtained with different standard deviations of random variables are compared. Table 6 presents the optimum results obtained when the standard deviations of X1 and X2 are 0.7, and the optimum designs are depicted in Fig. 7. As the standard deviations of random variables increase, the number of failure samples for the same design increases, resulting in more conservative designs with increased cost for all three cases. However, the WRBDO designs have a higher cost increase rate compared to the RBDO design. As the standard deviations of random variables increase, the costs of Cases 1, 2, and 3 increase by 19.65%, 23.57%, and 32.85%, respectively. This is because the magnitude of failure increases as the standard deviations increase, and the magnitude of failure increases more critically as the slope of the weight function increases. This can be explained by comparing the number of failure samples at the optimum designs. In Case 1, even if the standard deviations increase, the number of failure samples at the optimum design remains the same because the magnitude of failure is not considered and all failure samples are treated equally. However, in Cases 2 and 3, since the weight of each failure sample increases as the standard deviations increase, the number of failure samples in optimum designs decreases. In Cases 2 and 3, the averaged decrease rates of the number of failure samples are 18.30% and 35.59%, respectively. The larger average decrease rate in Case 3 than in Case 2 is due to the tendency that the weight of the failure sample increases as the slope of the weight function increases. As in the case where the standard deviations of X1 and X2 are 0.4, if the conventional probability of failure corresponding to the weighted probability of failure of WRBDO is set as the target probability of failure of RBDO, the RBDO results in the same design as WRBDO.

Furthermore, the increase in magnitude of failure caused by increasing standard deviations is affected by the nonlinearity of the constraint function. As the standard deviations increase, the numbers of failure samples of g1 decrease by 7.31% and 15.76% in Cases 2 and 3, respectively, while the numbers of failure samples of g2 decrease by 29.28% and 55.41% in Cases 2 and 3, respectively. In the case of g2, since the nonlinearity is higher than that of g1, the weight of the failure sample in g2 tends to be larger than that of g1 as the standard deviation increases, resulting in a larger decrease rate of the number of failure samples. As the standard deviation increases, the target probability of failure from the perspective of the conventional probability of failure decreases since the effect of the weight function increases, and therefore, more MCS samples are required to maintain the accuracy of the reliability analysis. Once an appropriate weight function is selected according to the problem, a WRBDO design reflecting the given standard deviation can be derived.

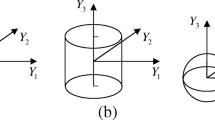

5.1.5 Engineering example: 6D arm model

This section compares the results of RBDO and WRBDO for the 6D arm model provided in the Hyperworks tutorial (Altair 2017). The loading and boundary conditions for the arm model are shown in Fig. 8, where six random variables consisting of morphing parameters denoted as d are used to control the geometry of the arm. Properties of the random variables are shown in Table 7, and the MCS sample size for the weighted probability of failure is \(10^{5}\). The WRBDO for the 6D arm model is formulated as follows:

where the objective is to minimize the volume of the arm and failure is defined as the maximum displacement exceeding the target displacement. The weight function is modeled based on the vehicle repair cost caused by potholes. The arm is a part of the vehicle, and the repair cost varies with the magnitude of failure of the arm because damage to the arm also damages other parts. The repair cost ranges from less than $250 to over $1000 (AAA Exchange 2017). By considering the repair cost and the variance of displacement, the weight function is assumed to be given as follows:

The optimum design results of RBDO and WRBDO are listed in Table 8, which shows that WRBDO yields a more conservative optimum design than RBDO: the cost increases by 3.39% and the number of failure samples decreases by 88.48%. In WRBDO, the magnitude of failure exceeding the maximum displacement determines the weight of each failure through the weight function modeled according to the repair cost; therefore, one high-magnitude failure is counted as multiple low-magnitude failures, resulting in fewer failure samples than in RBDO. The weighted failures of WRBDO are on average 9.1 times larger than those of RBDO. From the perspective of the weighted probability of failure, the RBDO design is infeasible.

To further investigate the characteristic of WRBDO, the histograms of \(g({\mathbf{x}})\) derived from the optimum designs of RBDO and WRBDO are compared in Fig. 9. The Y-axis indicates the frequency of \(g({\mathbf{x}})\) obtained by propagating uncertainties, and the figure shows that the conventional probability of failure of the WRBDO design is smaller than that of the RBDO design. In addition, the figure shows that high magnitudes of failures of WRBDO design almost disappear because the weight function assigns high weights to high magnitudes of failures. Strictly setting reliability constraints can lead to similar results; however, it just derives more reliable designs than required without considering any information on the magnitude of failure. The risk-based approaches consider the magnitude of failure to obtain the optimal design, but only simple tail expectation is reflected and information on damage or repair according to the magnitude of failure is not considered. Unlike risk-based approaches, different conservativeness can be induced in WRBDO depending on the nature of the weight function. As presented in this engineering example, WRBDO can be considered to secure desirable conservativeness because it considers information on resulting repair cost according to the magnitude of failure by defining an appropriate weight function. As in Sect. 5.1.4, if the conventional probability of failure corresponding to the weighted probability of failure of WRBDO is set as the target probability of failure of the RBDO problem, the RBDO design is the same as the WRBDO design.

5.2 Validation of AK-MCS for WRBDO

In this section, the AK-MCS for WRBDO proposed in Sect. 4 is validated through numerical examples. To validate the AK-MCS for WRBDO, the results of AK-MCS obtained using learning function U and learning function V are compared. The AK-MCS for RBDO using U in AK-MCS is referred to as AK-MCS + U, and the AK-MCS for WRBDO using V in AK-MCS is referred to as AK-MCS + V. For fair comparison, the stop criteria presented in Sect. 4.2 are equally applied to both methods. The results obtained through direct MCS are used as benchmarks. Section 5.2.1 compares the reliability analysis results obtained using AK-MCS + U and AK-MCS + V. WRBDO results of 3D mathematical problem and 6D arm model obtained by applying AK-MCS + U and AK-MCS + V are presented in Sects. 5.2.2 and 5.2.3, respectively.

5.2.1 Reliability analysis in WRBDO: 2D mathematical problem

Consider a 2D constraint function formulated as follows:

where the weight function is given as follows:

The properties of random variables, which are assumed to be statistically independent, are presented in Table 9. To validate AK-MCS + V, reliability analysis results of AK-MCS + U and AK-MCS + V are compared. AK-MCS employs 10 initial samples obtained by Latin hypercube sampling, and the result obtained from direct MCS with \(10^{7}\) samples is used as a benchmark. The results of reliability analysis are summarized in Table 10, and the results of sample distribution are shown in Fig. 10. The results show that AK-MCS + V requires fewer additional samples and more accurately derives \(P_{F,W}\) than AK-MCS + U. Compared to AK-MCS + U, the number of added samples and error in AK-MCS + V decrease by 55.26% and 85.5%, respectively. It is verified that AK-MCS + V can perform accurate reliability analysis in WRBDO even with a small number of function evaluations. From the sample distribution results, it can be seen that samples are added only near the limit state when using AK-MCS + U, whereas samples are added throughout the failure region as well as near the limit state when using AK-MCS + V. Since the Kriging model generated by AK-MCS + U cannot accurately predict the magnitude of failures, the error of \(P_{F,W}\) becomes large.
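Why learning function U concentrates samples near the limit state can be seen from its definition in the original AK-MCS (Echard et al. 2011), \(U = |\mu_{G}|/\sigma_{G}\), where \(\mu_{G}\) and \(\sigma_{G}\) are the Kriging prediction and its standard deviation. The sketch below is only an illustration: the helper names and the candidate values are hypothetical, the threshold \(U \ge 2\) is the criterion of the original AK-MCS rather than the stop criteria of Sect. 4.2, and learning function V is not reproduced here.

```python
import numpy as np


def learning_function_u(mean, std):
    """U = |mean| / std; small U means the sign of g is uncertain."""
    std = np.maximum(np.asarray(std, dtype=float), 1e-12)  # guard against zero std
    return np.abs(np.asarray(mean, dtype=float)) / std


def pick_next_sample(mean, std, u_stop=2.0):
    """Return the index of the most sign-uncertain candidate and a convergence flag."""
    u = learning_function_u(mean, std)
    idx = int(np.argmin(u))
    return idx, bool(u[idx] >= u_stop)


# Hypothetical Kriging predictions at four candidate points (failure when g > 0).
pred_mean = np.array([-2.0, 0.1, 1.5, 3.0])
pred_std = np.array([0.5, 0.2, 0.1, 1.0])

u_values = learning_function_u(pred_mean, pred_std)
next_idx, converged = pick_next_sample(pred_mean, pred_std)
print(u_values, next_idx, converged)
```

The last candidate lies deep in the failure region with a large prediction uncertainty, yet its U value already exceeds the threshold because only the sign of the prediction matters to U. Its predicted magnitude, and hence its weight, may still be inaccurate, which is consistent with the larger \(P_{F,W}\) error reported for AK-MCS + U.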

5.2.2 WRBDO of 3D mathematical problem

This section shows how the AK-MCS for WRBDO is applied to the WRBDO problem. Consider a 3D mathematical WRBDO problem formulated as follows:

where the weight function and constraint function are given as follows:

The properties of random variables are presented in Table 11, and they are assumed to be statistically independent. The target-weighted probability of failure (\(P_{F,W}^{{{\text{Target}}}}\)) is 5%. AK-MCS employs 20 initial samples obtained by Latin hypercube sampling, and the results obtained from direct MCS with \(10^{6}\) samples are used as a benchmark.

The WRBDO results are summarized in Table 12, and the relative error of \(P_{F,W}\) according to design point is shown in Fig. 11. The results show that the AK-MCS + V requires fewer additional samples and more accurately derives optimum design than AK-MCS + U. Compared to AK-MCS + U, the number of added samples and the error of \(P_{F,W}\) in AK-MCS + V decrease by 51.54% and 97.93%, respectively. As shown in Fig. 11, AK-MCS + V accurately calculates \(P_{F,W}\) at every changed design point, and therefore, the optimum design can be accurately derived. However, in the case of AK-MCS + U, an inaccurate optimum design is derived because inaccurate \(P_{F,W}\) is calculated at almost every changed design point. This numerical example verifies that AK-MCS + V can accurately perform WRBDO with a small number of function evaluations.

5.2.3 Engineering example: 6D arm model using AK-MCS for WRBDO

The results of applying AK-MCS to the 6D arm model presented in Sect. 5.1.5 are compared in this section.

AK-MCS employs 30 initial samples obtained by Latin hypercube sampling, and the results obtained from direct MCS with \(10^{5}\) samples are used as a benchmark. The WRBDO results are summarized in Table 13 and the relative error of \(P_{F,W}\) according to design point is shown in Fig. 12. Similar to the results of Sect. 5.2.2, AK-MCS + V derives a more accurate optimum design with fewer additional samples compared to AK-MCS + U. Compared to AK-MCS + U, the number of added samples and the error of \(P_{F,W}\) in AK-MCS + V decrease by 60.78% and 91.37%, respectively. As shown in Fig. 12, AK-MCS + V calculates \(P_{F,W}\) more accurately at every changed design point compared to AK-MCS + U, resulting in accurate optimum design. This numerical example verifies that AK-MCS + V enables practical application of WRBDO with a small number of function evaluations.

6 Discussions and conclusion

This study proposes a WRBDO framework that considers the magnitude of failure. By defining a weight function that reflects the magnitude of failure exceeding the limit state, weighted failures can be determined, and the number of low-magnitude failures that corresponds to one high-magnitude failure can be quantified. Then, the weighted probability of failure is calculated as the probability of weighted failure. The stochastic sensitivity for the weighted probabilistic constraints is also derived, enabling accurate and efficient WRBDO. From the sensitivity results of the weighted probability of failure for highly nonlinear and/or high-dimensional functions, it is verified that the proposed stochastic sensitivity analysis is more accurate and efficient than the sensitivity analysis using FDM. This is because the stochastic sensitivities involve no approximation other than the statistical noise of MCS, whereas FDM requires more MCSs to obtain the sensitivity of the probabilistic response. To enable the practical application of WRBDO, the AK-MCS for WRBDO is proposed. In the AK-MCS for WRBDO, the Kriging model is sequentially updated by using learning function V to identify both the limit state and the magnitude of failures in the failure region, and MCS is applied to the established Kriging model to evaluate weighted probabilistic constraints without computational burden.

The characteristics of the WRBDO framework are analyzed through numerical examples performing WRBDO with direct MCS. The WRBDO results obtained through various optimization methods indicate that using the stochastic sensitivity yields the same optimum design as using FDM, but converges to the optimum design faster. In addition, the results obtained from the non-gradient approach GA and the gradient-based approach SQP are similar, which verifies that the proposed stochastic sensitivity analysis works properly in the WRBDO framework. The WRBDO results obtained using various weight functions show that WRBDO yields more conservative designs than a conventional RBDO, and more conservative designs are derived as the slope of the weight function increases. The nonlinearity of the constraint function also affects the WRBDO design, and a more conservative design is obtained as the nonlinearity increases. Furthermore, the WRBDO results with different standard deviations of design variables show that as the slope of the weight function and the nonlinearity of the constraint function increase, the influence of the standard deviations on the optimum design increases, resulting in a more conservative design. An engineering example using the 6D arm model is used for practical application of RBDO and WRBDO. The optimum results show that WRBDO leads to a more conservative design than RBDO, and the weighted failures of WRBDO are on average 9.1 times larger than those of RBDO. It is noteworthy that the RBDO design becomes the same as the WRBDO design if the conventional probability of failure corresponding to the weighted probability of failure of WRBDO is set as the target probability of failure of the RBDO problem. In actual applications, RBDO only requires the company to set the target probability of failure, whereas WRBDO requires the company to set the weight function and target-weighted probability of failure, which can be cumbersome; however, the weighted probability of failure of WRBDO is meaningful in that it can provide RBDO with a more conservative target probability of failure.

The AK-MCS for WRBDO is validated through numerical examples. Through the results of reliability analysis in WRBDO, it is verified that AK-MCS + V using learning function V in AK-MCS can perform accurate reliability analysis in WRBDO with a small number of function evaluations. The results of 3D mathematical problem show that AK-MCS + V can accurately perform WRBDO with a small number of function evaluations. The results obtained by performing WRBDO of the 6D arm model using AK-MCS + V verify that AK-MCS + V enables practical application of WRBDO with a small number of function evaluations.

This study is novel in that it alleviates the limitation of existing RBDO, which treats all failures equally, by numerically quantifying the weight between failures according to the magnitude of failure. Unlike risk-based approaches, different degrees of conservativeness can be achieved in WRBDO depending on the nature of the weight function, and WRBDO can be regarded as securing desirable conservativeness because it considers information on the resulting damage or cost according to the magnitude of failure.

In this study, a sampling-based WRBDO framework using the direct MCS and the AK-MCS for WRBDO is presented. In future work, a framework that combines WRBDO with analytical methods such as the first-order reliability method (FORM), SORM, and dimension reduction method (DRM) can be presented.

Abbreviations

- \({\mathbf{d}}\): Design variable vector
- \({\mathbf{X}}\): Random variable vector
- \(P[ \cdot ]\): Probability measure
- \(g({\mathbf{X}})\): Constraint function
- \(P_{F}^{{{\text{Target}}}}\): Target probability of failure
- \({\mathbf{d}}^{L}\): Lower design bound
- \({\mathbf{d}}^{U}\): Upper design bound
- \(P_{F}\): Probability of failure
- \({{\varvec{\upmu}}}\): Vector of the mean of the random input
- \(f_{{\mathbf{X}}} ({\mathbf{x}};\,{{\varvec{\upmu}}})\): Joint probability density function of \({\mathbf{X}}\)
- \(E[ \cdot ]\): Expectation operator
- \(\Omega_{F}\): Failure set
- \(I_{{\Omega_{F} }} ({\mathbf{x}})\): Indicator function for the failure set
- \(f_{W}\): Weight function
- \(P_{F,W}\): Weighted probability of failure
- \(\Omega_{S}\): Safe set
- \(I_{{\Omega_{S} }} ({\mathbf{x}})\): Indicator function for the safe set
- \(P_{F,W}^{{{\text{Target}}}}\): Target-weighted probability of failure
- \(S\): Monte Carlo population
- \(S_{F}\): Monte Carlo population in the failure region

References

AAA Exchange (2017) Potholes and vehicle damage. AAA Exchange. https://exchange.aaa.com/automotive/automotive-trends/potholes-and-vehicle-damage/. Accessed 25 Jan 2021

Altair (2017) Altair HyperStudy tutorials. Altair. https://www.altairhyperworks.com. Accessed 25 Jan 2021

Browder A (1996) Mathematical analysis: an introduction. Springer, Berlin

Chaudhuri A, Norton M, Kramer B (2020) Risk-based design optimization via probability of failure, conditional value-at-risk, and buffered probability of failure. AIAA SciTech Forum, Orlando, FL

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining Kriging and Monte Carlo simulation. Struct Saf 33:145–154

Hu WF, Choi KK, Cho H (2016) Reliability-based design optimization of wind turbine blades for fatigue life under dynamic wind load uncertainty. Struct Multidisc Optim 54(4):953–970

Jiang C, Qiu H, Gao L, Cai X, Li P (2017) An adaptive hybrid single-loop method for reliability-based design optimization using iterative control strategy. Struct Multidisc Optim 56:1271–1286

Karagiannis GM, Chondrogiannis S, Krausmann E, Turksever ZI (2017) Power grid recovery after natural hazard impact. European Commission, Luxembourg

Kim S, Jun S, Kang H, Park Y, Lee DH (2011) Reliability based optimal design of a helicopter considering annual variation of atmospheric temperature. J Mech Sci Technol 25(5):1095–1104

Kusano I, Montoya MC, Baldomir A, Nieto F, Jurado JÁ, Hernández S (2020) Reliability based design optimization for bridge girder shape and plate thickness of long–span suspension bridges considering aeroelastic constraint. J Wind Eng Ind Aerod 202:104176

Lee I, Choi KK, Noh Y, Zhao L, Gorsich D (2011) Sampling-based stochastic sensitivity analysis using score functions for RBDO problems with correlated random variables. J Mech Des 133(2):21003

Lian Y, Kim NH (2006) Reliability-based design optimization of a transonic compressor. AIAA J 44(2):368–375

López C, Bacarreza O, Baldomir A, Hernández S, Aliabadi M (2017) Reliability-based design optimization of composite stiffened panels in post-buckling regime. Struct Multidisc Optim 55(3):1121–1141

Meng Z, Zhou H, Hu H, Keshtegar B (2018) Enhanced sequential approximate programming using second order reliability method for accurate and efficient structural reliability-based design optimization. Appl Math Model 62:562–579

Meng Z, Zhang D, Li G, Yu B (2019) An importance learning method for non-probabilistic reliability analysis and optimization. Struct Multidisc Optim 59:1255–1271

Montoya A, Millwater H (2017) Sensitivity analysis in thermoelastic problems using the complex finite element method. J Therm Stresses 40(3):302–321

Mukhopadhyay S, Jones MI, Hallett SR (2015) Compressive failure of laminates containing an embedded wrinkle; experimental and numerical study. Compos Part A Appl Sci Manuf 73:132–142

Ni P, Li J, Hao H, Yan W, Du X, Zhou H (2020) Reliability analysis and design optimization of nonlinear structures. Reliab Eng Syst Saf 198:106860

Papadrakakis M, Lagaros N, Plevris V (2005) Design optimization of steel structures considering uncertainties. Eng Struct 27(9):1408–1418

Park JW, Lee I (2018) A study on computational efficiency improvement of novel SORM using the convolution integration. J Mech Des 140(2):024501

Rahman S (2009) Stochastic sensitivity analysis by dimensional and score functions. Probab Eng Mech 24(3):278–287

Rockafellar RT, Uryasev S (2000) Optimization of conditional value-at-risk. J Risk 2:21–41

Rockafellar RT, Royset JO (2010) On buffered failure probability in design and optimization of structures. Reliab Eng Syst Saf 95:499–510

Royset JO, Bonfiglio L, Vernengo G, Brizzolara S (2017) Risk-adaptive set-based design and applications to shaping a hydrofoil. J Mech Des 139(10):101403

Shi L, Lin SP (2016) A new RBDO method using adaptive response surface and first-order score function for crashworthiness design. Reliab Eng Syst Saf 156:125–133

Shin J, Lee I (2014) Reliability-based vehicle safety assessment and design optimization of roadway radius and speed limit in windy environments. J Mech Des 136(8):1006–1019

Wang Z, Wang P (2012) A nested extreme response surface approach for time-dependent reliability-based design optimization. J Mech Des 134(12):12100701–12100714

Wang W, Dai S, Zhao W, Wang C, Ma T, Chen Q (2020a) Reliability-based optimization of a novel negative Poisson’s ratio door anti-collision beam under side impact. Thin-Walled Struct 154:106863

Wang Z, Zhang Y, Song Y (2020b) A modified conjugate gradient approach for reliability-based design optimization. IEEE Access 8:16742–16749

Yang M, Zhang D, Han X (2020) New efficient and robust method for structural reliability analysis and its application in reliability-based design optimization. Comput Methods Appl Mech Eng 366:113018

Zhang W, Rahimian H, Bayraksan G (2016) Decomposition algorithms for risk-averse multistage stochastic programs with application to water allocation under uncertainty. Inf J Comput 28(3):385–404

Acknowledgements

This research was supported by the KAIST-funded Global Singularity Research Program for 2021 (N11210060).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication of results

The code for this paper is available at the website: (https://drive.google.com/file/d/1Zskpr_Sjqyj-hx3r8dbE1wAbE8uIyq1S/view?usp=sharing).

Additional information

Responsible Editor: Axel Schumacher

Cite this article

Lee, U., Lee, I. Sampling-based weighted reliability-based design optimization. Struct Multidisc Optim 65, 20 (2022). https://doi.org/10.1007/s00158-021-03133-5

DOI: https://doi.org/10.1007/s00158-021-03133-5