Abstract

In many practical applications, probabilistic and bounded uncertainties often arise simultaneously, and these uncertainties can be described by using probability and convex set models. However, the computing cost becomes unacceptable when directly solving the reliability-based design optimization (RBDO) problem with these uncertainties involved. To address this issue, in this study, a sequential sampling strategy by extending classical sequential optimization and reliability assessment (SORA) method for RBDO is developed. The proposed strategy can successively select sample points to update the surrogate model at each step of the optimization process. New samples for reliability constraints are mainly chosen from the local region around the approximate minimum performance target point (MPTP) and worst-case point (WCP). Typical design examples, including one engineering application, are investigated to demonstrate the efficiency and accuracy of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Various methods have been developed to treat uncertainties related to reliability analysis and reliability-based design optimization (RBDO) of engineering systems (Au et al. 2003; Chen et al. 1997; Du and Chen 2004; Wang et al. 2011; Youn et al. 2005, Liu et al. 2016; Liu and Wen 2018). In RBDO, reliability is defined as the probability that system performance will meet its marginal value while taking uncertainties into account. While uncertainty can be probabilistic and/ or non-probabilistic. In the former case, the uncertainty is generally characterized using random variable and random field. At the academic level, tremendous efforts have focused on improving the accuracy and efficiency of RBDO techniques. For example, new reliability estimation methods have been developed to improve the efficiency and stability of numerical algorithms (Most and Knabe 2010; Youn et al. 2005; Youn et al. 2008), including two main optimization strategies, namely, the double-loop and decoupled approaches (Chen et al. 1997; Cheng et al. 2006; Du and Chen 2004; Liang et al. 2007). In traditional RBDO, reliability analysis requires the precise probability distribution of uncertain variables, which may be impossible to obtain for some uncertainties due to limited experimental data. To overcome the deficiency of the probability approach, in the last two decades a number of attempts have been made to apply non-probabilistic models (the convex model or interval model) for RBDO. Ben-Haim (1994) and Ben-Haim and Elishakoff (1995) first proposed the concept of non-probabilistic reliability based on convex model theory. Since then, non-probabilistic reliability-based design optimization using convex models has been addressed by a number of studies(such as anti-optimization technique (Elishakoff et al. 1994), the interval analysis method (Qiu and Elishakoff 1998), the convex model superposition method (Qiu and Elishakoff 1998). Kang and his colleagues (Kang and Luo 2009; Luo et al. 2009b) proposed a non-probabilistic reliability-based continuum topology optimization method based on convex models, in which reliability constraints are reformulated into equivalent constraints on the concerned performance. They further extended this method to address nonlinear topology optimization problems (Kang and Luo 2009). Cheng (2009) proposed a novel robust reliability optimization method for designing mechanical components based on a non-probabilistic interval model. Jiang et al. (2011a) proposed a correlation analysis technique of convex models when non-probabilistic uncertainties were involved. In addition, RBDO method considering dependent interval variables was also investigated via using the sequence optimization strategy (Du 2012).

The researches discussed previously consider only one kind of uncertainties; in other words, either probabilistic uncertainty or non-probabilistic uncertainty are involved. However, it is well-known that practical engineering normally consists of various kinds of uncertainties. Some uncertainties can be characterized with probabilistic models with precise distributions, while others need to be treated with non-probabilistic models with bounded information. New strategies should be further developed to deal with such circumstances. To this end, several structural reliability analysis methods have been emerged recently in the presence of mixed uncertain variables. Guo and Lu (2002) developed a hybrid probabilistic and non-probabilistic reliability approach based on interval analysis for linear problems via a two-stage limit-state function. Penmetsa and Grandhi (2002) used a function approximation method to improve the efficiency of reliability analysis with random and interval variables. For direct reliability analysis and inverse reliability analysis, Du et al. (2005), and Guo and Du (2009) proposed a semi-analytic method for hybrid reliability sensitivity analysis with both random and interval variables. Qiu and Wang (2010) and Wang and Qiu (2010) put forward two hybrid models through the use of interval arithmetic, namely, a probabilistic and non-probabilistic hybrid model and probabilistic and interval hybrid model. An iterative procedure was developed by Luo et al. (2009a) for obtaining the worst-case point and the most likely point from a probability and convex set model. Later, different forms of limit state strips resulting from the interval uncertainties were considered and summarized, based on which some more efficient hybrid reliability analysis methods (Jiang et al. 2013; Jiang et al. 2011a) were further formed.

Compared to reliability analyses, research on RBDO of hybrid uncertainty systems remains in its infancy. Du et al. (2005) addressed a hybrid RBDO problem in which reliability was determined with the worst-case combination of interval variables. Kang and Luo (2010) presented an RBDO approach based on a probability and convex set hybrid model. Yao et al. (2013) quantified mixed uncertainties from statistical laws and evidence theory and then attempted to formulate an optimization design obtained from the given hybrid reliability index. Wang et al. (2016) investigated a new formulation and numerical solution for the RBDO of structures exhibiting random and uncertain-but-bounded (interval and convex) mixed uncertainties. Xia et al. (2015) proposed a hybrid perturbation random moment method and hybrid perturbation inverse mapping method that transform nested loop optimizations into single loop optimizations. The sequential optimization and reliability assessment (SORA) method is one of the most famous strategy to efficiently solve the RBDO problems. However, algorithms that use SORA methods to solve RBDO problems with hybrid models have seldom been studied.

The difficulty of direct evaluation of probabilistic models of RBDO with complex practical problems using expensive black-box simulation models renders the computational cost extremely prohibitive. To improve practical utility, a preferable approach is to employ approximation models, which are often referred to as metamodels, to replace the actual limit-state functions with expensive simulation models during reliability analysis and RBDO. To improve the efficiency of RBDO, a variety of metamodelling techniques have been applied to replace true constraint function evaluations. Youn and Choi (2004) and Lee and Song (2011) used moving least square methods for RBDO. Kim and Choi (2008) proposed an a response surface method (RSM) with prediction interval estimation. Lee and Jung (2008) suggested a constraint boundary sampling (CBS) method and Kriging model for RBDO. Bect et al. (2012) proposed the sequential design of computer experiments for the estimation of the probability of failure. Basudhar and Missoum (2008) proposed adaptive explicit decision functions for probabilistic design and optimization using support vector machines (SVM). Hu and Youn (2009) applied adaptive-sparse polynomial chaos expansion to the reliability analysis and design of complex engineering systems. Bichon et al. (2008) proposed an efficient global reliability analysis method for nonlinear implicit performance functions. Echard et al. (2011) proposed an active learning reliability method combining Kriging and Monte Carlo simulation. Cheng and Li (2008) proposed a reliability analysis method using artificial neural network (ANN)-based genetic algorithms. Zhao et al. (2011b) used the RSM and sequential sampling for probabilistic design. Basudhar and Missoum(2008) proposed an adaptive sampling technique for RBDO based on support vector machine (SVM). Picheny et al. (2010) addressed the issue of designing metamodel experiments with sufficient accuracy for a certain level of the response value. Zhao et al. (2011a) proposed a dynamic Kriging approach for design optimization. Chan et al. (2010) developed a modified efficient global optimization algorithm for measuring maximal reliability in a probabilistic constrained space; Zhuang and Pan (2012) proposed a new sequential sampling method for design under uncertainty. Wang and Wang (2013) developed a maximum confidence enhancement (MCE)-based sequential sampling approach for RBDO using surrogate models. Chen et al. (2014) proposed a local adaptive sampling method for RBDO using the Kriging model. Li et al. (2016) presented a new local approximation method that employs the most probable point (MPP) for RBDO. Applications of various metamodelling techniques have been extensively studied in support of conventional probability-based structural reliability analysis and design. In the conventional probability model, the limit state defines a unique surface referred to as the limit state surface. However, when random and uncertain-but-bounded variables coexist, there are a cluster of limit-state surfaces that form a critical region of the band in the standard space for all possible values of bounded uncertainties. Due to these characteristics, it is quite difficult to accurately construct a cluster of limit-state surfaces from an approximating model, and thus some computational costs are wasted in reliability assessments of an inactive probabilistic constraint. The proposed method involves local sampling around the minimum performance target point (MPTP) and worst-case point (WCP) (i.e., worst-case realization of bounded uncertainties) rather than requiring the whole limit state boundaries within the design region to be accurate. Since probability and convex set theory is fundamentally different from conventional probability theory, the applicability of different metamodelling techniques to RBDO with the hybrid model may vary considerably, and it seems very important for developing corresponding efficient algorithms.

In this paper, a sequential sampling method is proposed to solve RBDO problem based on the hybrid model of probability and convex set, which significantly improves the computational efficiency and accuracy of RBDO. The remainder of this paper is organized as follows. A definition of reliability based on probability and convex set hybrid models is introduced in Section 2. A sequential optimization reliability assessment method is introduced in Section 3. The Kriging technique employed and a sequential improving sampling strategy are proposed in Section 4. Three numerical examples are investigated in Section 4. Finally, conclusions are given in Section 5.

2 Reliability definition based on probability and convex set hybrid models

2.1 Classical probabilistic-based reliability model

In a reliability analysis where only random variables X are involved, the failure probability can be given as

where X denotes an m-dimensional vector of independent random variables, G(⋅) is a limit-state function, G(X) ≤ 0 defines the failure event, and Pfdenotes the failure probability, which can be computed from the following equation:

where pX(X) denotes the joint probability density function (PDF) of X. It appears to be difficult to directly solve the above integral, and the first-order reliability method (FORM) is widely used in uncertainty analysis. When applying FORM, random variables X = (X1, X2, ⋯, Xm) in X-space can be changed to standard normal random variables u = (u1, u2, ⋯, um) in u-space:

where \( {F}_{X_i} \) is the cumulative distribution function (CDF) of the random variable Xi and Φ−1denotes the inverse CDF of the standard normal distribution. A limit-state function G in u-space can be written as

where T is a probability transformation function.

The integral included in Eq. (2) can be rewritten in u-space:

wherepu(u)is the joint CDF of u. Eq. (6) can ultimately be solved using the reliability index approach (RIA) (Liu and Der Kiureghian 1991; Tu et al. 1999)where an equivalent optimization problem should be constructed as follows:

where ‖ ⋅ ‖ denotes the vector norm. The optimum solutionu of the above problem is called the most probable point (MPP) or the design point.

Due to its efficiency, computational robustness and convenience in sensitivity analysis, the performance measurement approach (PMA) is widely used for reliability-based design optimization(Lee et al. 2002; Tu et al. 1999). The basic premise of PMA is to determine the minimum performance target point (MPTP) rather than the MPP. Using PMA, the feasibility of a given design can be evaluated by solving the following optimization problem:

where βt denotes the target reliability index. The optimum point \( {\mathbf{u}}_{\mathrm{MPTP}}^R \) is the MPTP.

2.2 Non-probabilistic reliability model based on a convex model

Convex models are used to describe bounded uncertain variables without using a precise probability distribution. The two convex models that are most widely used are the “interval model” and the “ellipsoidal model”. In practical situations, it might be suitable to classify bounded uncertain variables into different groups such that variables in the same group are correlated, whereas variables of different groups are uncorrelated. Denote the vector of convex variables as Y ∈ Rn. Suppose the uncertain variables are divided into NE groups; then, Y can be written as

where \( \widehat{{\boldsymbol{Y}}_i} \) is the nominal value of Yi, Wi is a symmetric positive-definite matrix called the characteristic matrix of an ellipsoid, and εi is a real number defining the magnitude of parameter variability. ni is the number of bounded uncertainties in the i-th group, and \( {\sum}_{i=1}^{N_E}{n}_i=n \). As shown in Fig. 1 (a)–(c), there are three specific multi-ellipsoid cases of problems involving three uncertain parameters in which the parameters may be divided into three groups (Y = {[Y1], [Y2], [Y3]}T), two groups (Y = {[Y1, Y2], [Y3]}T) and one group (Y = {[Y1, Y2, Y3]}T). We direct the reader to research articles by Luo et al. (2009a).

In Eq.(10), the ith characteristic matrix can be decomposed by solving the following eigenvalue problem:

where Qi is an orthogonal matrix, Λi is the diagonal matrix of eigenvalues of Wi and Ι is the identity matrix.

By introducing the vectors defined as

The convex model in (9) becomes

where qi is the normalized or standard vector of the ith group of uncertain variablesYi.Here, the ellipsoids are transformed into spheres of unit radius in the normalized space (q-space).E is the ellipsoid set.

For the more general case of a multi-ellipsoid model for k groups of uncertainties, the non-probabilistic reliability index η can be defined as

2.3 Reliability definition based on probability and convex set mixed model

In practical engineering problems, random and bounded variables may coexist. In order to assess structural reliability, a quantitative definitions of failure states is required. We assume that the expected performance of the structure is denoted by G(X, Y)>0.After the normalization of original uncertain variables XandYinto u and q as described in (4) and (12), the limit-state functionG(X, Y) is transformed into the corresponding normalized limit-state function g(u, q). Due to the presence of convex variables, the limit state g(u, q) = 0will form a cluster of limit-state surfaces in probability space, as shown in Fig. 2. Its lower boundary surface SL and upper boundary surface SR can be expressed as:

In fact, SL and SR are two limit-state surfaces with different q values, which have the nearest and furthest distances to the origin, respectively. We can calculate the reliability indices of the two bounding limit-state surfaces, respectively, whereby form the mixed reliability index βm (Kang and Luo 2010; Luo et al. 2009a):

where βL and βR represent the reliability indices of the lower and upper bounding limit state surfaces. Correspondingly, the failure probability of the structure is also an interval:

where\( {P}_f^L \)and \( {P}_f^U \)are the minimum and maximum failure probability values, respectively, corresponding to the minimum limit state function.

With respect to practical problems, the maximal failure probability is generally our greatest concern. Thus, to ensure design safety, we use the maximal failure probability (or the minimal reliability) to measure the design’s reliability. The following double-layer optimization problem can be obtained:

where βLrepresents the minimum reliability index, from which the maximum failure probability \( {P}_f^U=\phi \left(-{\beta}^L\right) \) can be approximated. Obviously, Eq.(18) is a very complex optimization problem. However, it is equivalent to the following single-layer optimization problem:

A study (Lee et al. 2002) has shown that PMA can be easily applied. The equivalent optimization problem for PMA can be expressed as

where optimal solutions \( {\mathbf{u}}_{PMPP}^R \) and q∗ shown in Eq.(20) are the minimum performance target point (MPTP) and worst-case point, (WCP), respectively.\( g\left({\mathbf{u}}_{PMPP}^R,{\mathbf{q}}^{\ast}\right) \) is the performance value of the limit state function andβtis the prescribed target reliability index.

3 Reliability-based design optimization under probability and convex set hybrid models

The general reliability-based design optimization problem based on probability and convex set hybrid models can be expressed as

wheref(d) is the objective function;d = {d1, d2, ..., dn}T denotes the vector of design variables, which take the mean (or nominal) value when their variations are modelled as random (or bounded) uncertainties; βL[gj(d, u, q) ≥ 0] is the mixed reliability index corresponding to the jth performance function; and βt, j(j = 1, 2, ..., ng) is the prescribed target value of the reliability index.

The optimization problem equivalent to (21) in PMA terms is expressed as

where Zj(d)is the minimum target performance value.

As shown in Eq.(22), this is a typical nested optimization problem. The inner reliability analysis loop is embedded within the overall optimization loop. Thus, numerous function evaluations may be required.

Among them, the WCP q∗ is defined as

Obviously, the formula (23) and (24) can be integrated into a single level problem:

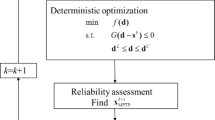

Various methods have been proposed as the means to improve computational efficiency levels. Du and Chen (2004) developed the sequential optimization and reliability assessment (SORA) method for efficiently solving RBDO problems. SORA performs RBDO with sequential cycles of deterministic optimization and reliability analysis. After an optimal design point is identified from the deterministic optimization loop, PMA is performed on this point in the reliability analysis loop. The result of the reliability analysis is then used to formulate a new deterministic optimization model for the following cycle to improve the reliability. In this paper, SORA is extended to solve the proposed reliability-based optimization problem using the hybrid uncertainty model. The deterministic optimization problem of the (k + 1)th cycle is formulated as

where \( {\mathbf{u}}_{MPTP,j}^{R,(k)} \)andq∗, (k)are the jth MPTP and WCP, respectively, from the reliability analysis conducted in the kth cycle. This process is repeated until \( {g}_j\left(\mathbf{d},{\mathbf{u}}_{MPTP,j}^R,{\mathbf{q}}^{\ast}\right)\ge 0, \) (j = 1, 2, ..., ng) and the objective function becomes stable.

4 A sequential sampling method for reliability-based design optimization

4.1 Kriging theory

In this study, the Kriging model (Sakata et al. 2003; Simpson et al. 2001) is used. Kriging assumes that the deterministic response of a system is a stochastic process y(x)(x = {X Y}T) comprising a regression model and a stochastic error as

wheref(x) is the known regression function, and Z(x) is a stationary stochastic process with zero mean, zero variance σ2 and non-zero covariance. The f(x) term provides a global approximation of the design response. Z(x)defines ‘localized’ deviations that can be formulated as

whereRis the n0 × n0 symmetric positive definite matrix with a unit diagonal, and R(xi, xj) is the correlation function between sample points xiand xj. The correlation function R(xi, xj) used in the study is

where θk is an unknown correlation parameter, npis the number of design variables, and \( \mid {x}_k^i-{x}_k^j\mid \) is the distance between the kth components of points \( {x}_k^i \) and \( {x}_k^j \). The estimator \( \tilde{y}\left(\mathbf{x}\right) \) for the response y(x) made at an untried point x can be formulated by

whererT(x) is the correction vector between a predicted x and n0sample points, vector y represents the responses at each sampling point, and fis an n0 vector. Vector rT(x) and the scalar parameter \( \widehat{\beta} \) are given by

The estimation of variance\( {\widehat{\sigma}}^2 \) for Eq. (28) is given by

The maximum likelihood estimate of θk included in Eq. (29) can be obtained by maximizing the following expression:

More information on the calculation method can be found in the relevant literature (Sakata et al. 2003; Simpson et al. 2001). When constructing Kriging models, the selection of sampling points can be very important. The design of experiment (DoE) method can be used for the effective selection of a minimum number of sampling points from the design space. Many different DoE methods, such as the factorial, Koshal, composite, Latin hypercube, and D-optimal design methods, have been proposed (Myers and Montgomery 1995). In this paper, the Latin hypercube sampling (LHS) scheme (Morris and Mitchell 1995) is adopted to generate sample points from non-deterministic design spaces. LHS performs considerably well in generating a representative distribution of sample points from a design space with uncertain variables (Jiang et al. 2008; Zhao et al. 2010; Zhuang and Pan 2012). In this paper, LHS is used for the initial sampling to construct Kriging models.

4.2 Sequential improvement sampling

Since being proposed by Jones (1998), the efficient global optimization (EGO) has been widely employed in various areas. EGO involves first constructing a Kriging model based on the initial set of small samples and then adding new samples that maximize the expected improvement function (EI).The improvement of a sample X for global minimum of G(X) is given as

whereGminis the best solution obtained from all sampled training points. Suppose there are currently n training points; Gminis equal tomin{G(X(1)), ..., G(X(n))};G(X)follows a normal distribution, \( N\left(\tilde{G}\left(\mathbf{X}\right),{s}^2\left(\mathbf{X}\right)\right) \); and \( \tilde{G}\left(\mathbf{X}\right) \)ands(X)represent the Kriging predictor and its standard error, respectively. The expected improvement function is

whereΦ(⋅) and ϕ(⋅)are the cumulative distribution function and probability density function, respectively, of a standard Gaussian variable. In a previous study (Zhuang and Pan 2012), to compare EI with different constraints, the relative improvement criterion (ERI) is expressed as follows:

Therefore, the sequence sampling criterion for RBDO optimization with hybrid models can be expressed as

where Y∗ is the WCP, which is converted from the q space by (12) and (24).

Thus, a sampling criterion is used to select a new sample:

By maximizing ERI, we can find a new training point.

A flowchart of the sequential sampling process is shown in Fig. 3, and the procedures are as follows:

-

(1)

Initialize the sampling space Eval, which is a combination of the current design and uncertainty spaces. In other words, (d, X, Y) corresponds to a sample point forming the sampling space for the simulation, and LHD is used to sample points in Eval. Set the number of samples as Ns and the iterative step as k = 0. Use expensive high fidelity computational models to calculate the objective and constraint functions for all sample points.

-

(2)

The responses of objective and constraint functions are computed for these points. Kriging models \( \tilde{f}\left(\mathbf{d},\mathbf{X},\mathbf{Y}\right) \) and \( \tilde{G}\left(\mathbf{d},\mathbf{X},\mathbf{Y}\right) \)are constructed. Where d, X and Y are used as input variables for the Kriging model.

-

(3)

A deterministic optimization is performed through (26), and the design variables can then be obtained. In the first optimization cycle, set \( {\mathbf{u}}_{MPTP,j}^{R,(0)}=0,{\mathbf{q}}^{\ast, (0)}=0 \).

-

(4)

Given d, the original X and Y spaces are transformed into standard normalizedu and qspaces, respectively. The reliability analysis is performed according to Eq.(20) the MPTP is found, the variables are transformed back to the X and Y space, evaluated through the computer experiment,and it becomes the current minimum Gmin, If there are multiple constraints, each constraint will produce Gi, min, (j = 1, 2, ..., ng).The currentGi, min, (j = 1, 2, ..., ng)can be obtained and added to update the Kriging model.

-

(5)

The point which maximizes ERI values on the \( \tilde{G}\left(\mathbf{X},\mathbf{Y}\right) \) function is chosen as the next sampling point, and is added to the original Eval to update the Kriging model\( \tilde{G} \). Repeat step (5) to choose the maximum ERI in the constraint until the maximum ERI is less thanε.ε is a small positive number used as the convergence criterion for the maximization of ERI.

If there is only one constraint, the point with maximum ERI will be added to Eval; if there are multiple constraints, the point with the largest ERI will be added to Eval. this sample is used to update only the surrogate of the constraint that has the largest ERI value.

-

(6)

The MPTP is found based on the updated Kriging model. If all the \( {\tilde{g}}_{i,\mathrm{MPTP}}^{(k)}\ge 0 \), the design variables d can be obtained. If any \( {\tilde{g}}_{i,\mathrm{MPTP}}^{(k)}<0 \), proceed to step (3) and perform another deterministic optimization cycle.

In Step (1), a basic requirement for an initial design is that the entire input space should be sampled uniformly so that all regions of the whole space have same opportunities to be searched. In addition, the number of points in the initial design is dependent on the dimension of the input space, that is, the higher the dimension of the input space, the greater the number of sampling points in the initial design.

The i-th original ellipsoid model in Eq. (13) becomes a unit model. By virtue of the spherical coordination transformation

With \( {q}_{i,{n}_i} \) the nth component of qi, the uncertain space of Eq. (13) becomes

where ρi is the radical coordinate and αi, jis the jth angular coordinate for the ith ellipsoid.

For convex variables, the samples are chosen with a uniform distribution in the space defined by Eq. (13) and mapped into the original space defined in Eq. (12) from Eq. (40).

In Step (5), the optimal solution of eq. (39) is the point on the circle whose origin is located in the u space with a radius of βt. This point is considered to bring the maximum improvement to the approximation model \( \tilde{G} \) function estimation subject to the MPTP and WCP constraints. The corresponding point in the X-space is depicted in Fig. 4. The limit state \( \tilde{G} \)= 0 forms a critical failure region, the two bounds of this region can be represented as \( \underset{\mathbf{Y}}{\min }G\left(\mathbf{X},\mathbf{Y}\right)=0 \) and \( \underset{\mathbf{Y}}{\max }G\left(\mathbf{X},\mathbf{Y}\right)=0 \),respectively. The plus signs represent the initial sample points, and point (\( {\mu}_{X_1} \),\( {\mu}_{X_2} \)) is the optimal solution obtained via deterministic optimization in step (3).

As the current MPTP and WCP may not be sufficiently accurate due to the prediction error of metamodel \( \tilde{G}\left(\mathbf{X},\mathbf{Y}\right) \), the ERI criterion is employed to find a new sampling point (denoted by the square mark) on the ellipsis. Then, the Kriging metamodel is reconstructed and the prediction error in the neighbourhood of MPTP and WCP will decrease. It is not necessary to perform uniform sampling on the two bounds of the limit state surface(i.e. \( \underset{\mathbf{Y}}{\min }G\left(\mathbf{X},\mathbf{Y}\right)=0 \)and \( \underset{\mathbf{Y}}{\max }G\left(\mathbf{X},\mathbf{Y}\right)=0 \)). The sampling criterion can help identify the regions that should be densified, such as the boundary of a critical failure region (i.e.\( \underset{\mathbf{Y}}{\min }G\left(\mathbf{X},\mathbf{Y}\right)=0 \)) which we most care about and neighborhood of MPTP. The precision of the approximation of the bounds of \( \underset{\mathbf{Y}}{\min}\tilde{G}\left(\mathbf{X},\mathbf{Y}\right)=0 \) is gradually improved at each step. The major focus is to improve the precision of the local critical regions rather than the whole hybrid space. In other words, the samples are distributed uniformly in the entire hybrid space by the LHD method, which will make the accuracy of Kriging model low.

5 Numerical examples

This section presents three examples to illustrate capacities of the proposed method. The results of the nested optimization method, which calls the true probabilistic constraint functions, are considered standard reference values. The results of the Kriging-based methods are compared with those of the analytical method to evaluate the errors. All programme codes were tested in MATLAB. DACE toolbox developed by Lophaven et al. (2002) was used in the numerical examples and engineering application.

5.1 Numerical example 1

Two random design variables, two non-probabilistic parameters and two probabilistic constraints are considered in numerical example 1:

where the design variables d = {d1, d2}T represent the mean values of x1 and x2,respectively. The coefficients of variation (COVs) for x1 and x2 are both 0.03. The variation ranges of y1 and y2 are given by \( y\in \left\{\mathrm{y}|{\left({\mathrm{y}}_i-{\widehat{y}}_i\right)}^{\mathrm{T}}{\mathrm{W}}_1\left({y}_i-{\widehat{y}}_i\right)\le {0.5}^2\right\}, \) where their nominal values are \( \widehat{y}={\left\{{\widehat{y}}_1,{\widehat{y}}_2\right\}}^T={\left\{0.25,2\right\}}^T \) and the characteristic matrix \( {\mathrm{W}}_1=\left[\begin{array}{cc}4& 0\\ {}0& 1\end{array}\right] \). The target reliability index βt, 1 = βt, 2 = 3.0, which denotes the structure’s probability of failure, must be valued at less than 0.135%.

In Fig. 5, the optimal solution is in the narrow neighbourhood between the limit-state surfaces G1 = 0 and G2 = 0. The initial Latin hypercube sampling is shown in Fig. 6 with 20 initial sample points. Kriging models \( {\tilde{G}}_1 \) and \( {\tilde{G}}_2 \) are created from these sample points. The sample points are evenly distributed throughout the entire sampled space. The limit-state surface \( {\tilde{G}}_2=0 \) is inexact around the region of the optimal design point. The results of the sequential sampling method are shown in Fig. 7. Additional sample points appear in both feasible and infeasible regions and are clustered around the optimal solution of d1 and d2.

The iteration history is shown in Table 1. Initially, the optimal solution based on the approximate model is close to the solution of the real function, but the approximate response\( {\tilde{G}}_2 \) is very different from the accurate response G2 under the same design variables. The relative error between the approximate response and accurate response decreases with each iteration, which indicates that the initial approximation model is not sufficiently accurate. However, the boundaries of constraints 1 and 2 around the optimal solution are well fitted after several sequential sampling steps.

The summary results of the optimization for example 1 are shown in Table 2. The nested optimization involves 558 function calls. However, using the present method, the total number of function calls is reduced to only 54. Thus, the total number of function calls required is less than that of the conventional nested optimization for test function 1.

We repeated the RBDO based on Kriging 10 times, and summarized the results of relative error. It can be clearly seen from Fig. 8 that all relative errors are very small, revealing that the effect of the randomness on optimization results could be negligible.

5.2 Numerical example 2

Test function 2 includes two random design variables, two non-probabilistic parameters and three probabilistic constraints:

where design variables d = {d1, d2}T represent the mean values of x1 and x2, respectively. The standard deviations of x1 and x2 are σ1 = σ2 = 0.3.The variation ranges of y1 and y2are given by \( \mathbf{y}\in \left\{\mathbf{y}|{\left({\mathbf{y}}_i-{\widehat{\mathbf{y}}}_i\right)}^{\mathrm{T}}{\mathrm{W}}_1\left({\mathbf{y}}_i-{\widehat{\mathbf{y}}}_i\right)\le 1\right\}, \) where their nominal values are \( \widehat{y}={\left\{{\widehat{y}}_1,{\widehat{y}}_2\right\}}^T={\left\{5,10\right\}}^T \) and the characteristic matrix is \( {\mathrm{W}}_1=\left[\begin{array}{cc}4& 0\\ {}0& 1\end{array}\right] \). The target reliability index is βt, j = 3.0(j = 1,2,3).

The objective function decreases down and to the left in the design region. The third constraint G3 = 0 is highly nonlinear. The optimal design point ‘*’ for RBDO is located at (3.5878, 3.1678) as shown in Fig. 9. The area enclosed by the three constraint functions is the feasible region. This circle surrounding the optimal design point is the βt-circle.

The initial 30 LHS sample points are denoted by ‘+’ (plus) signs in Fig. 10, and the additional 52 sample points selected by the sequential ERI strategy for constraints G1, G2 and G3 are represented by ‘×’ signs in Fig. 11. As shown in Fig. 10, the approximate limit-state surfaces of \( {\tilde{G}}_1 \)=0 and \( {\tilde{G}}_3 \)=0 are far from the accurate limit-state surfaces initially. As shown in Fig. 11, additional samples are positioned in the feasible and infeasible regions and cluster around the optimal design point. Consequently, the approximate limit-state surfaces \( {\tilde{G}}_1 \)=0, \( {\tilde{G}}_2 \)=0, and \( {\tilde{G}}_3 \)=0 are more accurate in the area surrounding the optimal solution and limit state function boundaries.

The iteration history of the present method is shown in Table 3. Initially, the optimal solution based on the approximate model agrees with the solution of the real function, but the approximate response\( {\tilde{G}}_1 \) and \( {\tilde{G}}_3 \) is far from the accurate response G1 and G3 under the same design variables. The difference between the approximate response and accurate response decreases as the iterations proceed. This result shows that the initial approximation model has globally low accuracy, and approximations in the boundary regions can achieve high accuracy after several sequential sampling steps.

As shown in Table 4, in terms of efficiency, the present method requires 12 iterations and a total of 82 sample evaluations, whereas the nested optimization method requires 264 evaluations. Thus, for this problem, our method again exhibits high precision and efficiency.

We repeated the Kriging-based RBDO 10 times and summarized the various results of relative error. Figure 12 clearly shows that all relative errors are small, revealing that the effect of randomness on the optimization results is negligible.

5.3 Numerical example 3

This example is modified from Refs.(Hu and Du 2015; Jiang et al. 2011b; Wang et al. 2016). As shown in in Fig. 13, a ten-bar truss is subjected to forcesF1,F2and F3. The vertical displacement on joint 2,dy, must be less than the allowable value dymax = 0.004 m.The Young’s modulus E of the material is treated as a normal random variable, whereas F1,F2and F3 are assumed to be interval variables. The details of the uncertainty properties are listed in Table 5.

In this problem, the truss members’ cross-sectional areasAi(i = 1, 2, ..., 10) are taken as design variables, and the design objective is to minimize the total area of the structure. According to the above displacement constraint, the target reliability index βt is 2.5, i.e., the target failure probability value is 6.21 × 10−3. This reliability-based design optimization problem can be expressed as

in which d is computed as described previously (Au et al. 2003)

where

whereai, j(i = 1, 2; j = 1, 2) and bi(i = 1, 2)can be defined as

The results obtained from the two different methods are given in Table 6. There are ten random design variables, one random parameter and three non-probabilistic parameters in this illustrative example, which will increase the number of calculations compared to the previous examples. The total number of evaluations conducted for the actual constraint function is 544, with 350 initial sample points and 194 additional samples. The total number of functions requires nested optimization with a true function of 3706. Thus, the proposed method is highly efficient compared to conventional nested optimization. In this high-dimension hybrid space, high-accuracy approximation models are very difficult to construct from limited samples with a uniform distribution; nevertheless, accurate results can be obtained by supplementing the approach with the sequential sampling method. The example shows that the proposed method is highly efficient and accurate for the problem of reliability-based optimization with many variables.

The randomness of the Latin hypercube sampling technique for generating initial samples as shown in Fig. 14 has some effects on the optimization result, specifically, the relative errors are generally smaller than 0.7%. Nevertheless, it may be safe to say that the accuracy of the Kriging approximation model can be guaranteed by the sequence sampling method.

6 Engineering application

This section focuses on a family of practical engineering applications, namely, vehicle crashworthiness designs. Thin-walled beam structures are typically used to absorb energy during vehicle frontal and rear collisions. Therefore, it is important to investigate the design optimization of thin-walled beam structures with regard to vehicle crashworthiness (Xiang et al. 2006; Zhao et al. 2010).

The thin-walled beam design problem described herein is taken from numerical studies in the literature (Xiang et al. 2006; Zhao et al. 2010). Here, the crashworthiness of the closed-hat beam shown in Fig. 15 is investigated. The closed-hat beam is impacting a rigid wall with an initial velocity of 13.8 m/s. The beam is formed by a hat beam and a web plate connected with uniformly distributed spot-welding points along the two rims of the hat beam.

The FE model comprises 5760 shell elements. A 300-kg mass is attached to the free end of the beam during the crash analysis to supply crushing energy. The impact duration time is 30 ms. The FEM simulation is performed using the explicit non-linear finite element software package LS-DYNA. Typical deformation behaviours of the numerical model are shown in Fig. 16.

Based on previous research (Xiang et al. 2006; Zhao et al. 2010), the closed-hat beam will be optimized to maximize the absorbed energy when subjected to an average normal impact force on the rigid wall. The plate thickness t, height h, width w, and spacing d between each spot-welding point have prominent effects on the crashworthiness of a closed-hat beam, and hence these four parameters are used as design variables in this application. Due to manufacturing variability and measurement errors, Young’s modulus and yield stress values are treated as bounded uncertainties described by a two-dimensional ellipsoid model. The plate thickness t, height h, width w, and spacing d between each spot-welding point are assumed to be normally distributed random variables. The uncertainty properties are given in Table 7.

Thus, the hybrid RBDO problem can be formulated as

where the objective function f and constraint F represent the absorbed energy of the closed-hat beam and the axial impact force, respectively. Both parameters are obtained via FEM simulation. The target reliability index βt is set to 3.0 for the average force constraints. The starting point of the optimization is set at (\( {d}_1^0,{d}_2^0,{d}_3^0,{d}_4^0 \))T = (1.5 mm, 30 mm, 70 mm, 70 mm)T.

Initially, 50 sampling points in the design and uncertainty spaces are generated through LHS to construct Kriging model approximations of the objective functions. The stopping criterion of the sequential ERI sampling strategy is set to a maximum of ERI < 0.005. The results are given in Table 8. The optimal design vector is found to be (0.81 mm, 19.07 mm, 92.99 mm, 101.94 mm) T, with 62 additional sample points selected by sequential ERI and 112 FEM evaluations.

7 Conclusion

In this article, a new sequential sampling method is introduced and integrated into the SORA to solve kriging-based RBDO problems with both stochastic and uncertain-but-bounded uncertainties. Based on the kriging approximation model, an ERI criterion for selecting additional samples and improving RBDO solutions is proposed. The sampling strategy focuses on the neighborhood of current RBDO solution and maximally improves the MPTP and WCP estimation, while ignore other areas of the constraint function that are not important to the RBDO solution.

Several examples are tested to verify the accuracy and efficiency of the proposed method. The optimization results of the sequence sampling method are almost the same as those of the nested optimization, which confirms that the proposed sequence sampling method is very accurate. In addition, the sequential sampling method uses the smallest number of samples, which indicates that the computational cost can be significantly reduced.The present method is also applied to a practical engineering problem, namely, thin-walled beam crashworthiness analysis. A stable solution is obtained using only a small number of iterations and FEM evaluations, illustrating the fine practicability of the proposed method. Additional work is required to improve the accuracy of RBDO methods. For example, different approximation models (e.g., response surface methods and radial basis functions) may be used to fit implicit constraint functions. In addition, the method may be extended and applied to more complex problems, such as multi-objective reliability optimization.

References

Au F, Cheng Y, Tham L, Zeng G (2003) Robust design of structures using convex models. Comput Struct 81(28):2611–2619

Basudhar A, Missoum S (2008) Adaptive explicit decision functions for probabilistic design and optimization using support vector machines. Comput Struct 86(19–20):1904–1917

Bect J, Ginsbourger D, Ling L (2012) Sequential design of computer experiments for the estimation of a probability of failure. Stat Comput 22(3):773–793

Ben-Haim Y (1994) A non-probabilistic concept of reliability. Struct Saf 14(4):227–245

Ben-Haim Y, Elishakoff I (1995) Discussion on: A non-probabilistic concept of reliability. Struct Saf 17(3):195–199

Bichon BJ, Eldred MS, Swiler LP, Mahadevan S, McFarland JM (2008) Efficient Global Reliability Analysis for Nonlinear Implicit Performance Functions. AIAA J 46(10):2459–2468

Chan KY, Papalambros PY, Skerlos SJ (2010) A method for reliability-based optimization with multiple non-normal stochastic parameters: a simplified airshed management study. Stoch Env Res Risk A 24(1):101–116

Chen X, Hasselman TK, Neill DJ Reliability based structural design optimization for practical applications. In: Proceedings of the 38th AIAA/ASME/ASCE/AHS/ASC structures, structural dynamics, and materials conference, 1997. p 2724–2732

Chen Z, Qiu H, Gao L, Li X, Li P (2014) A local adaptive sampling method for reliability-based design optimization using Kriging model. Struct Multidiscip Optim 49(3):401–416

Cheng G, Xu L, Jiang L (2006) A sequential approximate programming strategy for reliability-based structural optimization. Comput Struct 84(21):1353–1367

Cheng J, Li QS (2008) Reliability analysis of structures using artificial neural network based genetic algorithms. Comput Methods Appl Mech Eng 197(45–48):3742–3750

Cheng X Robust reliability optimization of mechanical components using non-probabilistic interval model. In: Computer-Aided Industrial Design & Conceptual Design, 2009 CAID & CD 2009 IEEE 10th International Conference on, 2009. IEEE, p 821–825

Du X (2012) Reliability-based design optimization with dependent interval variables. Int J Numer Methods Eng 91(2):218–228

Du X, Chen W (2004) Sequential Optimization and Reliability Assessment Method for Efficient Probabilistic Design. J Mech Des 126(2):225–233

Du X, Sudjianto A, Huang B (2005) Reliability-based design with the mixture of random and interval variables. J Mech Des 127(6):1068–1076

Echard B, Gayton N, Lemaire M (2011) AK-MCS: An active learning reliability method combining Kriging and Monte Carlo Simulation. Struct Saf 33(2):145–154

Elishakoff I, Haftka RT, Fang J (1994) Structural design under bounded uncertainty—Optimization with anti-optimization. Comput Struct 53(6):1401–1405

Guo J, Du X (2009) Reliability sensitivity analysis with random and interval variables. Int J Numer Methods Eng 78(13):1585–1617

Guo S, Lu Z (2002) Hybrid probabilistic and non-probabilistic model of structural reliability. Chinese J Mech Strength 24(4):524–526

Hu C, Youn BD Adaptive-Sparse Polynomial Chaos Expansion for Reliability Analysis and Design of Complex Engineering Systems. In: ASME 2009 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, 2009

Hu Z, Du X (2015) A random field approach to reliability analysis with random and interval variables. ASCE-ASME Journal of Risk and Uncertainty in Engineering Systems, Part B. Mech Eng 1(4):041005

Jiang C, Bi R, Lu G, Han X (2013) Structural reliability analysis using non-probabilistic convex model. Comput Methods Appl Mech Eng 254:83–98

Jiang C, Han X, Liu GP (2008) A sequential nonlinear interval number programming method for uncertain structures. Comput Methods Appl Mech Eng 197(49–50):4250–4265

Jiang C, Han X, Lu G, Liu J, Zhang Z, Bai Y (2011a) Correlation analysis of non-probabilistic convex model and corresponding structural reliability technique. Comput Methods Appl Mech Eng 200(33):2528–2546

Jiang C, Li W, Han X, Liu L, Le P (2011b) Structural reliability analysis based on random distributions with interval parameters. Comput Struct 89(23):2292–2302

Jones DR (1998) Efficient Global Optimization of Expensive Black-Box Functions. J Glob Optim 13(4):455–492

Kang Z, Luo Y (2009) Non-probabilistic reliability-based topology optimization of geometrically nonlinear structures using convex models. Comput Methods Appl Mech Eng 198(41):3228–3238

Kang Z, Luo Y (2010) Reliability-based structural optimization with probability and convex set hybrid models. Struct Multidiscip Optim 42(1):89–102

Kim C, Choi KK (2008) Reliability-Based Design Optimization Using Response Surface Method With Prediction Interval Estimation. J Mech Des 130(12):121401–121401–12

Lee J, Song CY (2011) Role of conservative moving least squares methods in reliability based design optimization: a mathematical foundation. J Mech Des 133(12):121005

Lee JO, Yang YS, Ruy WS (2002) A comparative study on reliability-index and target-performance-based probabilistic structural design optimization. Comput Struct 80(3–4):257–269

Lee TH, Jung JJ (2008) A sampling technique enhancing accuracy and efficiency of metamodel-based RBDO: Constraint boundary sampling. Comput Struct 86(13):1463–1476

Li X, Qiu H, Chen Z, Gao L, Shao X (2016) A local Kriging approximation method using MPP for reliability-based design optimization. Comput Struct 162(C):102–115

Liang J, Mourelatos ZP, Nikolaidis E (2007) A single-loop approach for system reliability-based design optimization. J Mech Des 129(12):1215–1224

Liu P-L, Der Kiureghian A (1991) Optimization algorithms for structural reliability. Struct Saf 9(3):161–177

Liu J, Wen G (2018) Continuum topology optimization considering uncertainties in load locations based on the cloud model. Eng Optim 50(6):1041–1060

Liu J, Wen G, Xie YM (2016) Layout optimization of continuum structures considering the probabilistic and fuzzy directional uncertainty of applied loads based on the cloud model. Struc Multidiscip Optim 53(1):81–100

Lophaven SN, Nielsen HB, Søndergaard J (2002) A MATLAB Kriging toolbox. Technical University of Denmark. Kongens Lyngby. Technical Report No. IMM-TR-2002-12

Luo Y, Kang Z, Li A (2009a) Structural reliability assessment based on probability and convex set mixed model. Comput Struct 87(21):1408–1415

Luo Y, Kang Z, Luo Z, Li A (2009b) Continuum topology optimization with non-probabilistic reliability constraints based on multi-ellipsoid convex model. Struct Multidiscip Optim 39(3):297–310

Morris MD, Mitchell TJ (1995) Exploratory designs for computational experiments. J Stat Plan Infer 43(3):381–402

Most T, Knabe T (2010) Reliability analysis of the bearing failure problem considering uncertain stochastic parameters. Comput Geotech 37(3):299–310

Myers RH, Montgomery DC (1995) Response Surface Methodology: Process and Product in Optimization Using Designed Experiments. J. Wiley

Penmetsa RC, Grandhi RV (2002) Efficient estimation of structural reliability for problems with uncertain intervals. Comput Struct 80(12):1103–1112

Picheny V, Ginsbourger D, Roustant O, Haftka RT, Kim NH (2010) Adaptive Designs of Experiments for Accurate Approximation of Target Regions. J Mech Des 132(7):461–471

Qiu Z, Elishakoff I (1998) Antioptimization of structures with large uncertain-but-non-random parameters via interval analysis. Comput Methods Appl Mech Eng 152(3):361–372

Qiu Z, Wang J (2010) The interval estimation of reliability for probabilistic and non-probabilistic hybrid structural system. Eng Fail Anal 17(5):1142–1154

Sakata S, Ashida F, Zako M (2003) Structural optimization using Kriging approximation. Comput Methods Appl Mech Eng 192(7):923–939

Simpson TW, Mauery TM, Korte JJ, Mistree F (2001) Kriging models for global approximation in simulation-based multidisciplinary design optimization. AIAA J 39(12):2233–2241

Tu J, Choi KK, Park YH (1999) A New Study on Reliability-Based Design Optimization. J Mech Des 121(4):557–564

Wang J, Qiu Z (2010) The reliability analysis of probabilistic and interval hybrid structural system. Appl Math Model 34(11):3648–3658

Wang L, Wang X, Wang R, Chen X (2016) Reliability-based design optimization under mixture of random, interval and convex uncertainties. Archive of Applied Mechanics:1–27

Wang P, Hu C, Youn BD (2011) A generalized complementary intersection method (GCIM) for system reliability analysis. J Mech Des 133(7):071003

Wang Z, Wang P (2013) A Maximum Confidence Enhancement Based Sequential Sampling Scheme for Simulation-Based Design. J Mech Des 136(2):021006

Xia B, Lü H, Yu D, Jiang C (2015) Reliability-based design optimization of structural systems under hybrid probabilistic and interval model. Comput Struct 160:126–134

Xiang Y, Wang Q, Fan Z, Fang H (2006) Optimal crashworthiness design of a spot-welded thin-walled hat section. Finite Elem Anal Des 42(10):846–855

Yao W, Chen X, Huang Y, Gurdal Z, van Tooren M (2013) Sequential optimization and mixed uncertainty analysis method for reliability-based optimization. AIAA J 51(9):2266–2277

Youn BD, Choi K, Du L (2005) Adaptive probability analysis using an enhanced hybrid mean value method. Struct Multidiscip Optim 29(2):134–148

Youn BD, Choi KK (2004) A new response surface methodology for reliability-based design optimization. Comput Struct 82(2):241–256

Youn BD, Xi Z, Wang P (2008) Eigenvector dimension reduction (EDR) method for sensitivity-free probability analysis. Struct Multidiscip Optim 37(1):13–28

Zhao L, Choi KK, Lee I (2011a) Metamodeling Method Using Dynamic Kriging for Design Optimization. AIAA J 49(9):2034–2046

Zhao L, Choi KK, Lee I, Gorsich D (2011b) Conservative Surrogate Model Using Weighted Kriging Variance for Sampling-Based RBDO. J Mech Des 135(91003):1–10

Zhao Z, Han X, Jiang C, Zhou X (2010) A nonlinear interval-based optimization method with local-densifying approximation technique. Struct Multidiscip Optim 42(4):559–573

Zhuang X, Pan R (2012) A sequential sampling strategy to improve reliability-based design optimization with implicit constraint functions. J Mech Des 134(2):021002

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (11302033, 11772070, 11672104), the Open Fund of State Key Laboratory of Automotive Simulation and Control (20121105), and Scientific Research Fund of Hunan Provincial Education Department (17C0044).

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editor: KK Choi

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, F., Liu, J., Wen, G. et al. Extending SORA method for reliability-based design optimization using probability and convex set mixed models. Struct Multidisc Optim 59, 1163–1179 (2019). https://doi.org/10.1007/s00158-018-2120-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-018-2120-2