Abstract

Sampling efficiency is important for simulation-based design optimization. While Bayesian optimization (BO) has been successfully applied in engineering problems, the cost associated with large-scale simulations has not been fully addressed. Extending the standard BO approaches to multi-fidelity optimization can utilize the information of low-fidelity models to further reduce the optimization cost. In this work, a multi-fidelity Bayesian optimization approach is proposed, in which hierarchical Kriging is used for constructing the multi-fidelity metamodel. The proposed approach quantifies the effect of HF and LF samples in multi-fidelity optimization based on a new concept of expected further improvement. A novel acquisition function is proposed to determine both the location and fidelity level of the next sample simultaneously, with the consideration of balance between the value of information provided by the new sample and the associated sampling cost. The proposed approach is compared with some state-of-the-art methods for multi-fidelity global optimization with numerical examples and an engineering case. The results show that the proposed approach can obtain global optimal solutions with reduced computational costs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Bayesian optimization (BO) is a metamodel-based global optimization approach, where the search process is assisted by constructing and updating a metamodel iteratively, and the sequential sampling is guided by an acquisition function to incorporate uncertainty (Ghoreishi and Allaire 2019; Tran et al. 2019b). The construction of metamodels helps improve the search efficiency, while the sequential sampling guided by the acquisition function reduces the overall number of samples. The sequential sampling strategy is particularly helpful when high-cost simulations or physical experiments are involved. Different definitions of acquisition functions have been developed to balance between exploration and exploitation, such as expected improvement (EI), probability of improvement, and lower confidence bound. BO with the EI acquisition function is also called efficient global optimization (EGO) by some researchers.

Similar to other metamodel-based global optimization methods (Queipo et al. 2005; Wang and Shan 2007), the computational challenge for BO to solve large-scale problems still exists, because the number of samples to cover the search space grows exponentially as the dimension of the space increases. Multi-fidelity (MF) surrogate modeling is one approach to reduce the cost by combining sample points predicted by high-fidelity (HF) and low-fidelity (LF) models to construct the surrogates, since running LF models is less costly (Huang et al. 2006; Jones 2001; Shu et al. 2019a; Xiong et al. 2008; Zhou et al. 2017). The existing MF metamodels can be categorized as three types. The first type is the scaling function-based MF metamodeling, which tunes the LF model according to the HF model responses (Chang et al. 1993; Zhou et al. 2015). The second type is space-mapping MF metamodels, in which a transformation operator is applied to map the LF design space to the HF space and the optimal sample point in the HF space can be estimated (Bakr et al. 2001; Bandler et al. 1994; Koziel et al. 2006). The third type is MF Kriging models, such as the co-Kriging model (Kennedy and O'Hagan 2000) and hierarchical Kriging model (Han and Görtz 2012). Co-kriging models are constructed with the information of covariance between the LF and HF samples. However, they are constructed based on the nested HF sample points, which adds limitations in their applications. In hierarchical Kriging models, the LF Kriging model is directly used as the trend of the MF metamodel, without the requirement of nested sample points (Han and Görtz 2012; Zhang et al. 2018). Hierarchical Kriging allows designers to choose sample points more freely in the optimization process. Because of its flexibility in sampling, hierarchical Kriging received much more attentions in engineering design optimization (Courrier et al. 2016; Palar and Shimoyama 2017; Zhang et al. 2015). MF metamodels for multi-objective optimization (Shu et al. 2019b; Zhou et al. 2016), incorporating gradient information (Song et al. 2017; Ulaganathan et al. 2015), and adaptive hybrid scaling method (Gano et al. 2005) have also been developed.

The acquisition-guided sequential sampling has been applied in MF metamodel-based design optimization. For instance, Xiong et al. (2008) applied the lower confidence bound in sequential sampling to construct MF metamodels. Kim et al. (2017) used the EI acquisition function for the hierarchical Kriging model. However, these methods merely adopt the high-fidelity simulation data to update the MF metamodels. The acquisition functions were only applied to determine the locations of new samples, while the different costs associated with LF and HF samplings are not considered. To solve this problem, Huang et al. (2006) developed an augmented EI acquisition function for co-Kriging, in which EI is augmented by the correlation of predictions between different fidelity models and a ratio of sampling costs so that both the sample location and the fidelity level can be determined by maximizing the acquisition. Liu et al. (2018) improved the augmented EI criterion with the consideration of the sample cluster issue to reduce the computational cost of the co-Kriging. Ghoreishi et al. (2018) proposed to identify the next best fidelity information source and the best location in the input space via a value-gradient policy. Then they considered more information sources with different fidelity levels and explicitly account for the computational cost associated with individual sources (Ghoreishi et al. 2019). Zhang et al. (2018) proposed a multi-fidelity global optimization approach based on the hierarchical Kriging model, in which an MFEI acquisition function is extended from EI with different uncertainty levels corresponding to the samples of low and high fidelities. Tran et al. (2020) proposed to combine the overall posterior variance reduction and computational cost ratio to select the fidelity level.

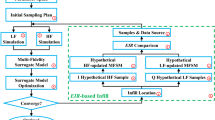

In this paper, a new MF Bayesian optimization (MFBO) approach with the hierarchical Kriging model is developed. A MF acquisition function based on a new concept of expected further improvement is proposed, which enables the simultaneous selections of both location and fidelity level for the next sample. The different costs of HF and LF samples as well as the extra information of HF samples are considered altogether. A constrained MF acquisition function for unknown constraints is also introduced. The proposed MFBO approach is compared with the standard EGO method and the MFEI method (Zhang et al. 2018) using five numerical examples and one engineering case.

The remainder of this paper is organized as follows. In the “Background” section, the hierarchical Kriging model and standard EGO method are reviewed. In the “The proposed MFBO approach” section, the proposed MFBO approach and the new acquisition function are described in details. Five numerical examples and one engineering case study with the comparisons of results are presented in the “Examples and results” section, followed by concluding remarks in the “Concluding remarks” section 5.

2 Background

2.1 Hierarchical Kriging

Hierarchical Kriging is a MF metamodeling method, in which the LF Kriging model is taken to predict the overall trend whereas the HF samples are used to correct the LF model. The metamodel can be expressed as

where \( {\hat{y}}_l\left(\boldsymbol{x}\right) \) is the predicted mean of the LF Kriging model, which is constructed based on LF sample points, β0 is a scaling factor, and Z(x) is a stationary random process with zero mean and a covariance of

where σ2 is the process variance. R(x, x') is the spatial correlation function which only depends on the distance between two design sites, x and x'. Given HF sample points Xh = {xh, 1, xh, 2, …, xh, n} and their responses fh(Xh) = {f(xh, 1), f(xh, 2), …, f(xh, n)}, the predicted mean and variance of the hierarchical Kriging model at an unobserved point can be calculated as

and

respectively, where r(x) is the correlation vector with elements ri(x) = R(x, xi), xi ∈ Xh. R is the correlation matrix with elements R(i, j) = R(xi, xj), xi, xj ∈ Xh. F is the vector of predictions by the LF Kriging model at the locations of HF samples. The Gaussian correlation function

is used in this paper. The hyper-parameters of hierarchical Kriging can be trained by maximizing the likelihood function:

More details of hierarchical Kriging can be found in Han and Görtz (2012).

2.2 Efficient global optimization approach

The EGO is a Bayesian optimization method where the EI acquisition function is used. The standard EGO method was originally proposed by Jones et al. (Jones et al. 1998) for expensive black-box problems. For Kriging and hierarchical Kriging models, the prediction at an unsampled point x can be regarded as a random variable and obeys a normal distribution \( Y\left(\boldsymbol{x}\right)\sim N\left(\hat{y}\left(\boldsymbol{x}\right),{\sigma}^2\left(\boldsymbol{x}\right)\right) \), where \( \hat{y}\left(\boldsymbol{x}\right) \) and σ2(x) are the predicted mean and variance. The improvement at x for a minimization problem is

where fmin is the best solution in the current sample set. The expected improvement is

By expressing the right-hand side of (8) as an integral, one can obtain the EI in the closed form as

where ϕ(•) and Φ(•) are the probability density function and cumulative distribution function of the standard normal distribution, respectively. The EGO method helps obtain the next sample point by maximizing the EI function expressed in (9). Then the new sample point is used to update the metamodel. The iteration continues until the algorithm converges. More details of EGO can be found in Jones et al. (1998).

3 The proposed MFBO approach

The standard EGO method provides a way to select a new sample point in single-fidelity optimization. However, in MF optimization, the decision of choosing the next sample at either HF or LF level needs to be made to update the MF metamodel. In the proposed MFBO, a new acquisition function is developed to support the sequential sampling strategy for selecting sample points of different fidelity levels adaptively in MF optimization.

3.1 Acquisition function based on the expected further improvement

In general, HF sample points are more expensive to obtain but can provide more precise information, whereas LF sample points are less costly but less reliable. In MF optimization, the sample points in both HF and LF levels are chosen to update the MF metamodel. Here, a new acquisition function is defined so that the choice of fidelity level incorporates the considerations of both cost and benefit in LF and HF samples.

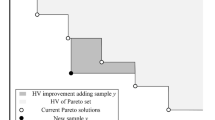

With the sequential sampling, the maximum EI gradually decreases as the BO algorithm converges to the optimal solution. If a sample point x∗ is selected to update the metamodel, the EI will decrease from EI(x∗) calculated from (9) to zero. Therefore, the reduction of the EI value can be equivalently used to guide the sequential sampling. In the proposed MFBO, the reduction of EI value incorporates the different effects of LF and HF samples. If a HF sample is chosen at x∗, the HF effect on reducing the EI is

where EI(·) is the EI function of hierarchical Kriging based on the existing HF samples and LF samples. If a LF sample at this location \( {\boldsymbol{x}}_l^{\ast } \) is chosen instead, the LF effect on reducing the EI, which is named further improvement given that \( {\boldsymbol{x}}_l^{\ast } \) is taken, is calculated as

where Yl(·) is the LF metamodel with predicted mean \( {\hat{y}}_l\left(\cdotp \right) \) and variance σl2(x∗), \( EI\left({\boldsymbol{x}}^{\ast}\left|{Y}_l\left({\boldsymbol{x}}_l^{\ast}\right)\right.\right) \) represents the EI if calculated by HF sample x∗ given that a LF sample \( {\boldsymbol{x}}_l^{\ast } \) was taken instead at the same location of x∗ with the predicted LF response \( {Y}_l\left({\boldsymbol{x}}_l^{\ast}\right) \). Note that \( {Y}_l\left({\boldsymbol{x}}_l^{\ast}\right) \) is a random variable which follows a Gaussian distribution. Therefore, both the conditional expected value \( EI\left({\boldsymbol{x}}^{\ast}\left|{Y}_l\left({\boldsymbol{x}}_l^{\ast}\right)\right.\right) \) and the conditional further improvement in (11) vary according to \( {Y}_l\left({\boldsymbol{x}}_l^{\ast}\right) \). The overall expected value of \( EI\left({\boldsymbol{x}}^{\ast}\left|{Y}_l\left({\boldsymbol{x}}_l^{\ast}\right)\right.\right) \) is obtained as

Note that \( EI\left({\boldsymbol{x}}^{\ast}\left|{Y}_l\left({\boldsymbol{x}}_l^{\ast}\right)\right.\right) \) in (12) is calculated based on the HF metamodel with HF sample x∗. Thus the expected further improvement is

Considering the different costs of HF and LF samples and assuming that the cost ratio of a HF sample to a LF sample is T, we define a new acquisition function as

to decide both the sample location and the fidelity level, where fidelity equals 1 for LF level and fidelity equals 2 for HF level. The location and fidelity level can be obtained by maximizing the acquisition function.

The proposed approach can be further extended to problems with multiple fidelity levels. The expected value of \( EI\left({\boldsymbol{x}}^{\ast}\left|{Y}_j\left({\boldsymbol{x}}_j^{\ast}\right)\right.\right) \) at the jth fidelity level can be calculated similarly as in (12), whereas \( EI\left({\boldsymbol{x}}^{\ast}\left|{Y}_j\left({\boldsymbol{x}}_j^{\ast}\right)\right.\right) \) itself is calculated based on metamodel \( {Y}_{j+1}\left(\boldsymbol{x}\right)={\beta}_{\mathrm{j}}{\hat{y}}_j\left(\boldsymbol{x}\right)+{Z}_j\left(\boldsymbol{x}\right) \) that is similar to (1). The acquisition function in (14) can be adjusted to include all fidelity levels with different cost ratios accordingly.

Because of the multiple integral in (12), direct calculation of the acquisition function in (14) can be computationally expensive. An alternative approach can be taken here to search the maximum of the acquisition function. From (11), it is seen that ΔEIl(x∗) tends to be large when EI(x∗) is large. ΔEIl(x∗) has a similar trend as EI(x∗). Thus, the location of the new LF sample point tends to be selected near the location where a large EI is obtained. Therefore, the EI function for HF sampling can be used in search of maximum, which approximates the true location of the maximum expected further improvement. From the sample location, the acquisition function in (14) can be evaluated based on the surrogates at both fidelity levels, and the fidelity level which leads to a larger acquisition value is selected. The worst-case scenario of this heuristic searching approach is that its searching efficiency is the same as the standard EGO.

3.2 Constrained acquisition function

In general, the constraints in engineering optimization can be divided into two categories: known constraints and unknown constraints. Known constraints can be evaluated easily and analytically without running a simulation. In contrast, unknown constraints are much more complex and usually related to design performance. Whether they are satisfied or not can only be determined after running a simulation. In this work, the penalty function approach (Coello 2000; Shu et al. 2017) is used to handle known constraints where the objective function is penalized. Researchers have proposed different approaches to hand unknown constraints such as constrained EI (Schonlau et al. 1998) and surrogates of constraints (Gardner et al. 2014; Gelbart et al. 2014; Tran et al. 2019a). Here, unknown constraints are incorporated in the new acquisition function.

For an unknown constraint g(x)≤0, we define an indication function F(x) as

Since g(x) cannot be evaluated without running a simulation, we can also construct a hierarchical Kriging model and assume that the prediction of g(x) obeys a normal distribution \( g\left(\boldsymbol{x}\right)\sim N\left(\hat{g}\left(\boldsymbol{x}\right),{\sigma}_g^2\left(\boldsymbol{x}\right)\right) \). Then, the constrained acquisition function is defined as

In general, the correlation between F(x) and a(x, fidelity) can be ignored. Thus, cov[F(x), a(x, fidelity)] = 0. According to the definition of F(x), E[F(x)] can be calculated as

Then (16) can be expressed as

4 Examples and results

In this section, five numerical examples and one engineering case study are used to demonstrate the applicability and performance of the proposed approach. The formulations of the five numerical examples and the respective optimal solutions (Cai et al. 2016; Zhang et al. 2018; Zhou et al. 2016) are listed in Table 1. fh(x) and fl(x) represent the HF and LF models, respectively. gh(x)and gl(x) represent the HF and LF constraints, respectively. xbest is the optimal solution and fh(xbest) is the corresponding response.

The proposed approach is compared with the standard EGO (Jones et al. 1998) and the MFEI method (Zhang et al. 2018). Note that the cost difference between HF and LF samples is not considered in the MFEI method, in contrast to our approach. The computational cost is calculated as

where nh and nl are the numbers of HF and LF samples, respectively. T is the cost ratio.

4.1 The one-dimensional example

The first numerical example in Table 1 is used for the illustration of the proposed approach as well as a detailed comparison between different approaches. Here we assume that the cost of a HF sample point is 4 times of a LF sample point (T = 4).

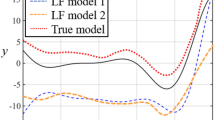

The initial hierarchical Kriging model is constructed based on six LF sample points Sl = {0.0, 0.2, 0.4, 0.6, 0.8, 1.0} and three HF sample points Sh = {0.0, 0.5, 1.0}. The initial samples are uniformly distributed in the design space. The initial sample points, the constructed HF and LF models, the initial hierarchical Kriging model, and the EI function are shown in Fig. 1a. The maximum value of EI function is at x1 = 0.9093. At this location, \( \frac{1}{T}\Delta {EI}_{\mathrm{h}}\left({x}_1\right)=1.8598 \) and E[ΔEIl(x1)] = 6.7459. Hence, a LF sample point is added at this location.

The updated hierarchical Kriging model and EI function are shown in Fig. 1b. Similarly, a LF sample point is added at x2 = 0.8232 in the second iteration, where \( \frac{1}{T}\Delta {EI}_{\mathrm{h}}\left({x}_2\right)=1.7698 \) and E[ΔEIl(x2)] = 5.7174. The updated hierarchical Kriging model and EI function are shown in Fig. 1c. The maximum value of EI function in this iteration is at x3 = 0.7211. At this location, \( \frac{1}{T}\Delta {EI}_{\mathrm{h}}\left({x}_3\right)=0.8018 \) and E[ΔEIl(x3)] = ‐ 1.8252. Hence, a HF sample point is added in the third iteration.

The searching process of the proposed approach in the first numerical example is listed in Table 2. The proposed approach requires three LF samples and three HF samples to find the optimal solution. The termination criterion for this numerical example is set as

where fmin is the best observed objective function and ε is set to be 0.01.

The convergences of the three approaches for the first numerical example are compared in Fig. 2. The numbers of total HF and LF sample points, including the initial samples, and the computational costs of the three approaches are listed in Table 3. Note that the initial samples also need to be included in estimating the overall costs. For the standard EGO which itself is for single-fidelity optimization, the same numbers of initial LF and HF samples are recorded for comparison. After the initial model is constructed, the sample points added in the following iterations are counted as HF samples. It is seen from Table 3 that the proposed approach requires the least computational cost to find the optimal solution for the first example. The convergence criterion in Eq. (20) is applied in all three approaches.

4.2 Numerical examples for Cases 2 to 4

For numerical examples of Cases 2 to 4, Latin hypercube sampling (LHS) (Park 1994; Wang 2003) is used to generate the initial HF and LF sample sets. The sizes of the initial HF and LF sample sets are set to be 3 times and 6 times of the dimensions of the problems, respectively. To account for the influence of randomness, each of these cases is solved 30 times with each of the three approaches. The results for the average numbers of LF and HF sample points are compared in Table 4. The same convergence criterion in (20) is applied for all cases. To illustrate the effect of the cost ratio on the proposed approach, two different cost ratios (T = 4 and T = 10) are tested for the proposed approach. Based on the acquisition functions in (14) and (18), the proposed approach tends to select more LF sample points if the cost of LF sampling is lower (i.e., a higher cost ratio).

For Case 2, the EGO and proposed approach require almost the same number of LF and HF sample points, while the MFEI method samples much more LF sample points than the other two approaches. However, this does not reduce the number of HF samples required in the MFEI method. For Case 3 and Case 4, the MFEI method and the proposed approach require fewer HF sample points than the EGO by supplementing with LF sample points. One key difference between the MFEI method and the proposed approach is that the cost ratio is not considered in the MFEI method. The proposed approach tends to sample more LF sample points and fewer HF sample points as the cost ratio increases.

The computational costs of different approaches for T = 4 and T = 10 according to (18) are listed in Table 5. For Case 2, the EGO and the proposed approach have similar computational costs, which are lower than that of the MFEI method. For Case 3 and Case 4, the MFEI method is more efficient than EGO, and the proposed approach has the lowest cost among the three approaches.

4.3 Case 5: a high-dimensional example

The fifth numerical example is used to test the ability of the different approaches to solve high-dimensional optimization problems. LHS is applied to generate the 40 initial HF samples and 100 initial LF samples. In this example, we set the maximum number of iterations to 200 to observe the convergence process of different optimization approaches.

The convergences of the objective values along with the computational costs for the three different approaches are compared in Fig. 3. The best observed objectives, the numbers of LF and HF sample points, and the corresponding computational costs (T = 4) for the three approaches after convergence are listed in Table 6.

From Fig. 3 and Table 6, it is seen that the standard EGO and the proposed approach can obtain a better optimal solution than the MFEI approach. Compared to the EGO, the proposed approach has a lower computational cost to converge to the optimal solution. After 200 iterations, the cost of MFEI is the least, since the MFEI approach added the most LF samples and the fewest HF samples. The overreliance on LF samples led to the missing out on the opportunities to reach a better solution.

4.4 Engineering case study: impedance optimization of the long base

As an engineering case study, the proposed approach is applied to optimize the long base of a ship. The simulation model of the problem consists of a cylindrical shell and a long base, which is shown in Fig. 4. The optimization objective is to maximize the minimum impedance of the pedestal while keeping the weight below 3.4 tons. The mechanical impedance of a vibrating system is the complex ratio of a harmonic excitation to its response. In this example, the impedance is the origin impedance, which is the complex ratio of a harmonic excitation to its response at the same location. To calculate the impedance, two unit harmonic forces are loaded in the Y-axis direction at point A and point B of the long base in Fig. 4. The frequency of the unit harmonic forces ranges from 0 to 350 Hz. The displacements at the ends of the cylindrical shell and the part of the base connected to the bulkhead are fixed to zeros. The six design variables shown in Fig. 4 are listed in Table 7. Other fixed parameters related to materials and geometry are shown in Table 8.

For the HF model, the step size of frequency calculation is chosen to be 2.5 Hz. For the LF model, the step size of calculation is 10 Hz. The computational cost of the HF model is 4 times of the LF model (T = 4). The convergence of the objective values along with the computational costs for the three approaches is plotted in Fig. 5. The best observed objectives, the numbers of LF and HF sample points, and the corresponding computational costs for the three approaches after convergence are listed in Table 9. For further comparison, the simulation resolution is further reduced to the step size of 25 Hz and applied as the LF model (T = 10). The results are also listed in Table 9.

From Fig. 5 and Table 9, it is seen that the MFEI method and the proposed approach can obtain a better optimal solution than the standard EGO. Compared to the EGO and MFEI, the proposed approach has a lower computational cost to converge to the optimal solution. The proposed approach can rely more on LF sample points when the LF model is cheaper. This indicates that the proposed approach can adjust the sampling process adaptively according to the cost of obtaining extra information.

5 Concluding remarks

In this paper, a MFBO approach for global optimization is proposed based on the hierarchical Kriging model and a new acquisition function. In the new acquisition function, the value of LF sample points is quantified as the expected further improvement and the cost ratio between HF and LF sampling is considered. Both the location and fidelity level of the next sample point are determined simultaneously by maximizing the acquisition function. For constrained problems, the acquisition function can be further generalized with the surrogates of constraints. The proposed approach has been demonstrated with five numerical problems and one engineering design case. Compared to single-fidelity BO and an existing multi-fidelity BO method, the new approach incorporates the sampling cost differences in the sequential process and shows a higher level of efficiency.

The major limitation of the proposed acquisition function is the cost of direct computation. In this paper, a heuristic approach is taken in search of the maximum of acquisition based on the EI of the HF model. The search efficiency is usually better than or at least not worse than the standard EGO. In future work, efficient computational methods for the new acquisition function with the expected further improvement will be investigated. Numerical integration methods such as quadrature and importance sampling can be helpful.

In the proposed acquisition function, the cost ratio of HF to LF samples plays a major role. In all examples of this paper, the ratios were assumed to be known a priori. When the costs of HF and LF simulations are not previously known in simulation-based design optimization, an initial ratio can be estimated. During the sequential sampling process, the cost ratio can be updated on the fly once the simulations are run and actual costs become available. Thus, the acquisition function can be adjusted adaptively. Nevertheless, the overall sampling cost proposed in (19) to evaluate the performance of MFBO approaches requires further study for its fairness in comparisons.

Scalability has been a major issue for Kriging-based metamodeling. The number of samples increases exponentially as the dimension of the searching space increases. Approaches such as batch parallelization (Tran et al. 2019a, 2019b) and sparse Gaussian process (McIntire et al. 2016; Zhang et al. 2019) have been applied in Bayesian optimization to alleviate the dimensionality challenge of Kriging. Yet much work of Bayesian optimization for high-dimensional problems remains.

References

Bakr MH, Bandler JW, Madsen K, Søndergaard J (2001) An introduction to the space mapping technique. Optim Eng 2:369–384

Bandler JW, Biernacki RM, Chen SH, Grobelny PA, Hemmers RH (1994) Space mapping technique for electromagnetic optimization. IEEE Trans Microw Theory Tech 42:2536–2544

Cai X, Qiu H, Gao L, Yang P, Shao X (2016) An enhanced RBF-HDMR integrated with an adaptive sampling method for approximating high dimensional problems in engineering design. Struct Multidiscip Optim 53:1209–1229

Chang KJ, Haftka RT, Giles GL, Kao I-J (1993) Sensitivity-based scaling for approximating structural response. J Aircr 30:283–288

Coello CAC (2000) Use of a self-adaptive penalty approach for engineering optimization problems. Comput Ind 41:113–127

Courrier N, Boucard P-A, Soulier B (2016) Variable-fidelity modeling of structural analysis of assemblies. J Glob Optim 64:577–613

Gano SE, Renaud JE, Sanders B (2005) Hybrid variable fidelity optimization by using a kriging-based scaling function. AIAA J 43:2422–2433

Gardner JR, Kusner MJ, Xu ZE, Weinberger KQ, Cunningham JP (2014) Bayesian optimization with inequality constraints. In: ICML. pp. 937–945

Gelbart MA, Snoek J, Adams RP (2014) Bayesian optimization with unknown constraints. arXiv preprint arXiv:1403.5607

Ghoreishi SF, Allaire D (2019) Multi-information source constrained Bayesian optimization. Struct Multidiscip Optim 59:977–991

Ghoreishi SF, Molkeri A, Srivastava A, Arroyave R, Allaire D (2018) Multi-information source fusion and optimization to realize ICME: application to dual-phase materials. J Mech Des 140:111409

Ghoreishi SF, Molkeri A, Arróyave R, Allaire D, Srivastava A (2019) Efficient use of multiple information sources in material design. Acta Mater 180:260–271

Han Z-H, Görtz S (2012) Hierarchical kriging model for variable-fidelity surrogate modeling. AIAA J 50:1885–1896

Huang D, Allen TT, Notz WI, Miller RA (2006) Sequential kriging optimization using multiple-fidelity evaluations. Struct Multidiscip Optim 32:369–382

Jones DR (2001) A taxonomy of global optimization methods based on response surfaces. J Glob Optim 21:345–383

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Glob Optim 13:455–492

Kennedy MC, O'Hagan A (2000) Predicting the output from a complex computer code when fast approximations are available. Biometrika 87:1–13

Kim Y, Lee S, Yee K, Rhee D-H (2017) High-to-low initial sample ratio of hierarchical kriging for film hole array optimization. J Propuls Power 34:108–115

Koziel S, Bandler JW, Madsen K (2006) A space-mapping framework for engineering optimization—theory and implementation IEEE transactions on microwave. Theory Tech 54:3721–3730

Liu Y, Chen S, Wang F, Xiong F (2018) Sequential optimization using multi-level co-Kriging and extended expected improvement criterion. Struct Multidiscip Optim 58:1155–1173

McIntire M, Ratner D, Ermon S. (2016). Sparse Gaussian processes for Bayesian optimization. Proceedings of the Thirty-Second Conference on Uncertainty in Artificial Intelligence, pp. 517-526

Palar PS, Shimoyama K (2017) Multi-fidelity uncertainty analysis in CFD using hierarchical kriging. In: 35th AIAA Applied Aerodynamics Conference, p. 3261

Park J-S (1994) Optimal Latin-hypercube designs for computer experiments. J Stat Plan Inference 39:95–111

Queipo NV, Haftka RT, Shyy W, Goel T, Vaidyanathan R, Tucker PK (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41:1–28

Schonlau M, Welch WJ, Jones DR (1998) Global versus local search in constrained optimization of computer models. Lecture Notes-Monograph Series 11-25

Shu L, Jiang P, Wan L, Zhou Q, Shao X, Zhang Y (2017) Metamodel-based design optimization employing a novel sequential sampling strategy. Eng Comput 34:2547–2564

Shu L, Jiang P, Song X, Zhou Q (2019a) Novel approach for selecting low-fidelity scale factor in multifidelity metamodeling. AIAA J 57:5320–5330

Shu L, Jiang P, Zhou Q, Xie T (2019b) An online variable-fidelity optimization approach for multi-objective design optimization. Struct Multidiscip Optim 60:1059–1077

Song C, Song W, Yang X (2017) Gradient-enhanced hierarchical kriging model for aerodynamic design optimization. J Aerosp Eng 30:04017072

Tran A, Sun J, Furlan JM, Pagalthivarthi KV, Visintainer RJ, Wang Y (2019a) pBO-2GP-3B: a batch parallel known/unknown constrained Bayesian optimization with feasibility classification and its applications in computational fluid dynamics. Comput Methods Appl Mech Eng 347:827–852

Tran A, Tran M, Wang Y (2019b) Constrained mixed-integer Gaussian mixture Bayesian optimization and its applications in designing fractal and auxetic metamaterials. Struct Multidiscip Optim 59:2131–2154

Tran A, Wildey T, McCann S (2020) sMF-BO-2CoGP: a sequential multi-fidelity constrained Bayesian optimization framework for design applications. J Comput Inf Sci Eng 20:031007

Ulaganathan S, Couckuyt I, Ferranti F, Laermans E, Dhaene T (2015) Performance study of multi-fidelity gradient enhanced kriging. Struct Multidiscip Optim 51:1017–1033

Wang GG (2003) Adaptive response surface method using inherited Latin hypercube design points. J Mech Des 125:210–220

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. J Mech Des 129:370–380

Xiong Y, Chen W, Tsui K-L (2008) A new variable-fidelity optimization framework based on model fusion and objective-oriented sequential sampling. J Mech Des 130:111401

Zhang Y, Han Z-H, Liu J, Song W-P (2015) Efficient variable-fidelity optimization applied to benchmark transonic airfoil design. In: 7th Asia-Pac int Symp Aerosp Technol, Cairns, Australia, pp. 25–27

Zhang Y, Han Z-H, Zhang K-S (2018) Variable-fidelity expected improvement method for efficient global optimization of expensive functions. Struct Multidiscip Optim 58:1431–1451

Zhang J, Yao X, Liu M, Wang Y. (2019) A Bayesian discrete optimization algorithm for permutation problems. Proceedings of 2019 IEEE Symposium Series on Computational Intelligence (SSCI 2019), pp.871-881

Zhou Q, Shao X, Jiang P, Zhou H, Shu L (2015) An adaptive global variable fidelity metamodeling strategy using a support vector regression based scaling function. Simul Model Pract Theory 59:18–35

Zhou Q, Shao X, Jiang P, Gao Z, Wang C, Shu L (2016) An active learning metamodeling approach by sequentially exploiting difference information from variable-fidelity models. Adv Eng Inform 30:283–297

Zhou Q, Wang Y, Choi S-K, Jiang P, Shao X, Hu J (2017) A sequential multi-fidelity metamodeling approach for data regression. Knowl-Based Syst 134:199–212

Acknowledgements

This research was supported by the National Natural Science Foundation of China (Grant No. 51775203, 51805179, and 51721092). The support of the China Scholarship Council is also appreciated.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication of results

Data or implementation code are available upon request.

Additional information

Responsible Editor: Nestor V Queipo

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Shu, L., Jiang, P. & Wang, Y. A multi-fidelity Bayesian optimization approach based on the expected further improvement. Struct Multidisc Optim 63, 1709–1719 (2021). https://doi.org/10.1007/s00158-020-02772-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-020-02772-4