Abstract

The Vold–Kalman (VK) order tracking filter plays a vital role in the order analysis of noise in various fields. However, owing to the limited accuracy of the double-precision floating-point data type, the order of the filter cannot be too high. This accuracy limit also prevents the filter from using a smaller bandwidth, so the extracted order signal contains more noise. In this paper, the Python mpmath arbitrary-precision floating-point arithmetic library is used to implement a high-order VK filter. Based on this library, a filter with arbitrary bandwidth and arbitrary difference order can be implemented whenever necessary. With the proposed algorithm, a narrower transition band and a flatter passband can be obtained, a good filtering effect is retained even when the sampling rate of the speed signal is far lower than that of the measured signal, and narrowband signals can be extracted from signals with large bandwidth. The test cases in this paper show that the proposed algorithm has a better filtering effect, better frequency selectivity, and stronger anti-interference ability than the double-precision algorithm.

1 Introduction

Order analysis is used in a variety of applications, from basic plant machinery testing to complex automotive engine testing. It is often combined with acoustic measurements to analyze the noise, vibration, and harshness (NVH) qualities of an engine or vehicle as a whole. Automotive engineers often use order tracking methods for product evaluation and development, design validation, production testing, quality evaluation, and troubleshooting. Brandt et al. [1] reviewed the basic ideas behind different kinds of order analysis methods and compared their main advantages and limitations.

In particular, the VK filter is a vital technique in order analysis. The main framework of the filter was presented in [2,3,4], and on this basis the algorithm now appears in almost all NVH-related commercial software on the market. Because of the importance of this research, the algorithm is named after its main author. Based on the conventional Kalman filter, the VK filter was proposed by Vold and Leuridan in 1993 [2]. The authors found that conventional tracking filters (analog or digital implementations) have limited resolution when the reference RPM changes rapidly. They therefore proposed the application of nonstationary Kalman filters for tracking periodic components in such noise and vibration signals, namely, the VK filter. Vold then introduced the mathematical background of the VK filter [3]. This was the first presentation of the second-generation algorithm and its theoretical foundations. This new algorithm enables the simultaneous estimation of multiple orders, effectively decoupling close and crossing orders. In another paper published the same year [4], the authors explored the advantages of the filter in detail, including: (1) RPM estimation accuracy, even for fast-changing events such as gear shifts, (2) higher-order Kalman filters, with improved shapes for extracting modulated orders, and (3) decoupling of close and even crossing orders by use of multiple RPM references. Vold et al. [5] reported the development of a new VK filter for decoupling interacting orders in multi-axle systems. Building on the first- and second-generation VK filters, a number of studies provided further understanding of the mathematical derivation of the filters, the physical meaning of their parameters, and the relationship between these parameters [6,7,8,9,10,11]. Herlufsen et al. [6] described the filter characteristics of the VK order tracking filter, investigating both the frequency response and the time response in terms of their time–frequency relationship. Pelant et al. [7] derived the detailed formulation of the filter, while Tuma [8] reported the bandwidth calculation formula for the 1st–4th order of the filter and established the relationship between the bandwidth and the weight coefficient. As an extension, the present paper presents a calculation formula for the filter bandwidth at arbitrary orders. Blough [9] explained the formulations and behavior of the filter in very straightforward and practical terms through the use of both equations and example datasets. Čala and Beneš [10] described the implementation of both first- and second-generation VK order tracking filters, with a focus on optimizing the calculations. It is worth mentioning that Vold et al. [11] considered the bandwidth of the VK filter to be limited by the numerical conditioning of the least-squares normal equations associated with its application. This suggests that even narrower bandwidths may be achieved by a direct least-squares solution using a banded version of the QR algorithm. As a more general approach, the present study adopts another method based on an arbitrary-precision floating-point arithmetic library. Related methods that, like the VK filter, recast the filtering problem as an optimization problem have also appeared, although they have not yet become the mainstream approach. Amadou et al. [12] proposed another method that converges quickly and provides a small estimation error compared to those used for the linear time-invariant model. An offline processing approach using the preconditioned conjugate gradient method has also been proposed [13]. Pan et al. [14] further studied the theoretical basis, numerical implementation, and parameters of the VK filter. It should be pointed out that the VK filter is very useful in many fields of sound analysis, and even in fault diagnosis [15, 16].

When the order of the VK filter is large, the filter has a flat passband and a steep transition band. At the same time, smaller filter bandwidths better isolate the influence of noise and other vibration signals. However, both a higher order and a smaller bandwidth produce larger matrix entries, which can exceed what double-precision data can represent exactly. None of the research mentioned above solves this problem effectively. This is the main problem considered in this paper: how to obtain higher-order and narrower-passband VK filters for an arbitrary desired order and bandwidth.

The remainder of this paper is organized as follows. Section 2 describes the relevant VK filter in detail and gives the pseudocode of the related algorithm. Using an arbitrary-precision floating-point arithmetic library, the extension of this VK filter to any higher order is explained. Section 3 presents the results from three test cases to verify the effectiveness of the algorithm. Finally, Sect. 4 gives the conclusions of this study.

2 VK Filter Formulation

This section discusses the VK filter algorithm and its numerical implementation in detail. The numerical implementation is given in the form of pseudocode. Readers can realize the algorithm with the Python programming language and its arbitrary-precision numerical library, or contact the author to obtain the source code. Requests are welcome, and the relevant source code will later be released on GitHub.

2.1 Analytical Solution of VK Filter

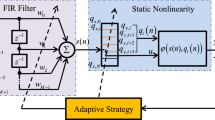

In this section, the analytical solution of the VK filter is derived. The filter rests on two basic equations, the data equation and the structural equation, which correspond to the measurement equation and the state equation of the standard Kalman filter, respectively. Minimizing the weighted sum of squares of the error terms of the two equations yields the analytical solution of the VK filter.

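The recorded signal \( y\left( n \right) \) is modeled as follows:

\[ y\left( n \right) = x\left( n \right){\text{e}}^{j\theta \left( n \right)} + \eta \left( n \right) \quad (1) \]

where \( \theta \left( n \right) \) is the phase of an ideal signal, that is, the integral of the angular velocity, \( \theta \left( n \right) = \sum\limits_{i = 0}^{n} {\omega \left( i \right)\Delta t} \), and \( \eta \left( n \right) \) represents the noise term. The complex envelope \( x\left( n \right) \) represents the signal amplitude and phase fluctuations. This equation is named the data equation.

The matrix representation is

\[ {y} = {Cx} + {\eta} \quad (2) \]

where C is the diagonal matrix with entries \( {\text{e}}^{j\theta \left( n \right)} \), and the square of the error vector norm is

\[ {\eta}^{H} {\eta} = \left( {y} - {Cx} \right)^{H} \left( {y} - {Cx} \right) \quad (3) \]

The structural equation can be described by the following higher-order difference equation:

\[ \Delta^{n_{\text{d}}} x\left( n \right) = \varepsilon \left( n \right) \quad (4) \]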

where \( \Delta \) represents the difference computation symbol, \( n_{\text{d}} \) is the order of the difference equation, and the value of \( \varepsilon \left( n \right) \) should be sufficiently small so that the complex envelope \( x\left( n \right) \) changes very slowly. The difference equation is

To deduce the formula and programming conveniently, the coefficients of the difference equation are expressed as \( {d}_{{v}} \). This is a vector of elements

Thus, the difference equation can be described as follows:

where \( i = 0,1, \ldots ,n_{\text{d}} \). The matrix representation is

which can be written as

The dimension of A is \( \left( {N - n_{\text{d}} + 1} \right) \times N \).
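The construction of the coefficient vector and of A can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the coefficients are taken to be the usual alternating binomial coefficients \( (-1)^{i} \binom{n_{\text{d}}}{i} \), and one row is built per complete difference stencil, which gives \( N - n_{\text{d}} \) rows (the row range implied by \( S_{u} \) in Sect. 2.2). The names `diff_coeffs`, `build_A`, and `apply` are hypothetical helpers, not taken from the pseudocode of this paper.

```python
from math import comb

def diff_coeffs(nd):
    """Coefficients of the nd-th order difference: (-1)**i * C(nd, i), i = 0..nd."""
    return [(-1) ** i * comb(nd, i) for i in range(nd + 1)]

def build_A(N, nd):
    """Dense difference matrix; row k applies the stencil to samples k .. k + nd."""
    dv = diff_coeffs(nd)
    A = [[0] * N for _ in range(N - nd)]   # assumption: one row per complete stencil
    for k in range(N - nd):
        for i, c in enumerate(dv):
            A[k][k + i] = c
    return A

def apply(A, x):
    """Matrix-vector product A x for a list-of-lists matrix."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]

# A quadratic envelope is annihilated by the third-order difference,
# so the structural error eps = A x is exactly zero for it.
x = [n * n for n in range(8)]
eps = apply(build_A(8, 3), x)
```

The dense matrix is only practical for small N; it is used here to make the structure visible, not as a recommended storage scheme.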

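The optimization objective is to minimize

\[ J = r^{2} {\varepsilon}^{H} {\varepsilon} + {\eta}^{H} {\eta} \quad (10) \]

where r is the weight factor. We compute

\[ \frac{\partial J}{\partial {x}^{H}} = r^{2} {A}^{T} {A}{x} + {C}^{H} {C}{x} - {C}^{H} {y} = 0 \quad (11) \]

which, because \( {C}^{H} {C} = {E} \), can be expressed as

\[ \left( r^{2} {A}^{T} {A} + {E} \right){x} = {C}^{H} {y} \quad (12) \]

and so

\[ {x} = \left( r^{2} {A}^{T} {A} + {E} \right)^{-1} {C}^{H} {y} \quad (13) \]

The above formula gives the analytical solution of the VK filter for a single-order signal. For the purpose of convenience, define a new matrix

\[ {B} = r^{2} {A}^{T} {A} + {E} \quad (14) \]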

With regular data types, the limited accuracy of the double-precision floating-point type means that the identity matrix E is lost to rounding when it is added to \( r^{2} {A}^{T} {A} \) if the weight factor r is too large. In the following sections, this issue will be discussed further and the relationship between r and the bandwidth of the filter in the steady state will be considered.
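The loss of the identity matrix can be reproduced with a single scalar. The sketch below uses \( 2^{60} \) as an arbitrary stand-in for a large diagonal entry of \( r^{2} {A}^{T} {A} \), and Python's standard `decimal` module as a stand-in for an arbitrary-precision library; both choices are illustrative assumptions.

```python
from decimal import Decimal, getcontext

big = 2.0 ** 60                   # stand-in for a large diagonal entry of r^2 A^T A
absorbed = (big + 1.0) == big     # True: the 1 contributed by E vanishes in double precision

getcontext().prec = 50            # 50 significant decimal digits
big_hp = Decimal(2) ** 60
kept = (big_hp + 1) != big_hp     # True: the 1 survives at the higher precision
```

Once the added 1 is absorbed, the matrix B is numerically indistinguishable from \( r^{2} {A}^{T} {A} \), which is singular, so the solve can no longer be trusted.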

2.2 Frequency Response of VK Filter in Steady State

In this section, the basic principles of the VK filter are described from the perspective of the frequency domain, which contributes to a deeper understanding of the filter and provides a reference for setting reasonable weight coefficients in engineering practice. Before giving the exact calculation process, it should be emphasized that a larger weight coefficient always means a narrower bandwidth. Thus, larger weight coefficients are needed to achieve narrower bandwidths, even beyond the computational range of double-precision floating-point numbers. Firstly, by exploiting the structure of the analytical solution of the VK filter, the frequency response of the filter is obtained. The pseudocode for calculating the frequency response of the filter is then given.

The dimension of matrix A is \( \left( {N - n_{\text{d}} + 1} \right) \times N \), and its elements can be represented as follows:

According to the matrix multiplication formula:

Thus, according to (15), assuming that \( A^{{T}} A\left( {i,j} \right) \) is nonzero, the following relationship holds:

This transforms to

Let \( S_{u} = \hbox{min} \left( {N - n_{\text{d}} , i ,j} \right) \) and \( S_{d} = \hbox{max} \left( {i - n_{\text{d}} , j - n_{\text{d}} ,1} \right) \). According to (15)–(18), the following relationships can be obtained:

Further,

If \( A^{{T}} A\left( {i,j} \right) \) is nonzero, then \( S_{u} \ge S_{d} \), that is, \( \left| {i - j} \right| \le n_{\text{d}} \), which means that each row of the matrix \( {A}^{{T}} {A} \) has at most \( 2n_{\text{d}} + 1 \) nonzero elements, all lying within \( n_{\text{d}} \) positions of the main diagonal.

Let us exploit the structure of \( {A}^{{T}} {A} \) and go a step further. As \( {A}^{{T}} {A} \) is symmetric, the case \( i \ge j \) is first considered. Assuming that \( i \ge n_{\text{d}} + 1 \) and \( j \le N - n_{\text{d}} \), then

In the same way, when \( i < j \), assuming that \( j \ge n_{\text{d}} + 1 \) and \( i \le N - n_{\text{d}} \),

From (21) and (22), it can be seen that the \( 2n_{\text{d}} + 1 \) nonzero elements are the same in every row of the matrix, except for the first \( n_{\text{d}} \) rows and the last \( n_{\text{d}} \) rows. Because \( {A}^{{T}} {A} \) is symmetric, \( {B} = r^{2} {A}^{{T}} {A} + {E} \) has the same structure as \( {A}^{{T}} {A} \). Denote by the vector b the diagonal element of a row of B together with the \( n_{\text{d}} \) elements after it. The elements of b can be calculated as follows:

As mentioned above, B has the same structure as \( {A}^{{T}} {A} \), which means B is a sparse symmetric matrix with \( 2n_{\text{d}} + 1 \) nonzero diagonals. According to the following relations:

we have that

After performing a Z transformation, the following formulas are obtained:

Substituting \( z = {\text{e}}^{j\omega } \) into the above, the frequency response of the filter is obtained as

Through Euler’s formula, the following mathematical relations are obtained:

Substituting (30) into (29), the final frequency response function of the filter is:

The pseudocode for calculating the frequency response of the VK filter in the steady state is given in Algorithm 1.
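A short Python sketch of the steady-state frequency response is given here for orientation. It is not Algorithm 1 itself. It assumes that the interior band of \( {A}^{{T}} {A} \) is the autocorrelation of the alternating binomial difference coefficients, so that its k-th element is \( (-1)^{k} \binom{2n_{\text{d}}}{n_{\text{d}} + k} \), and that the response is the reciprocal of the cosine series built from b. Under the same assumption the series collapses to the closed form \( 1/\left( 1 + r^{2} \left( 2 - 2\cos \omega \right)^{n_{\text{d}}} \right) \), which is included as a cross-check. All function names are hypothetical.

```python
from math import comb, cos

def ata_vector(nd):
    """Interior band of A^T A: ata[k] = (-1)**k * C(2*nd, nd + k), k = 0..nd."""
    return [(-1) ** k * comb(2 * nd, nd + k) for k in range(nd + 1)]

def b_vector(nd, r):
    """Interior band of B = r^2 A^T A + E (diagonal first)."""
    b = [r * r * a for a in ata_vector(nd)]
    b[0] += 1
    return b

def freq_response(omega, nd, r):
    """Steady-state response from the band of B (omega in rad/sample)."""
    b = b_vector(nd, r)
    return 1 / (b[0] + 2 * sum(b[k] * cos(k * omega) for k in range(1, nd + 1)))

def freq_response_closed(omega, nd, r):
    """Equivalent closed form under the same assumptions."""
    return 1 / (1 + r * r * (2 - 2 * cos(omega)) ** nd)

h_dc = freq_response(0.0, 2, 10.0)          # unit gain at zero frequency offset
h_series = freq_response(0.3, 3, 5.0)
h_closed = freq_response_closed(0.3, 3, 5.0)
```

The cosine series subtracts large, nearly equal terms when r is large, which is the same cancellation that limits the double-precision filter; the closed form is less sensitive but hides the link to the matrix B.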

In the following deduction process, both \( {A}^{{T}} {A} \) and B play an important role, but because both matrices are \( N \times N \) dimensional, the number of elements in these matrices increases sharply with the signal dimension N, resulting in a dramatic increase in memory requirements. Thus, the elements of these two matrices are not all stored but are instead calculated when they are needed. The method of calculating \( {A}^{{T}} {A} \) and B is given in Algorithm 2.
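The on-demand evaluation described above can be sketched as follows. This is an illustration rather than Algorithm 2: it assumes 1-based indices, alternating binomial difference coefficients, and \( N - n_{\text{d}} \) rows in A, and it evaluates one element of \( {A}^{{T}} {A} \) by summing over the rows between \( S_{d} \) and \( S_{u} \). The names `ata_element` and `b_element` are hypothetical.

```python
from math import comb

def diff_coeffs(nd):
    """Assumed difference coefficients (-1)**i * C(nd, i), i = 0..nd."""
    return [(-1) ** i * comb(nd, i) for i in range(nd + 1)]

def ata_element(i, j, N, nd):
    """Element (i, j) of A^T A for 1-based i, j, without storing A."""
    dv = diff_coeffs(nd)
    s_d = max(i - nd, j - nd, 1)          # first row of A touching columns i and j
    s_u = min(N - nd, i, j)               # last such row
    # An empty range (s_u < s_d) means |i - j| > nd, so the element is zero.
    return sum(dv[i - k] * dv[j - k] for k in range(s_d, s_u + 1))

def b_element(i, j, N, nd, r):
    """Element (i, j) of B = r^2 A^T A + E."""
    return r * r * ata_element(i, j, N, nd) + (1 if i == j else 0)

interior = ata_element(5, 5, 9, 3)        # interior diagonal entry
corner = ata_element(1, 1, 9, 3)          # first-row entry differs from the interior
outside = ata_element(1, 6, 9, 3)         # outside the band
```

Only \( n_{\text{d}} + 1 \) products are needed per element, so memory use stays independent of N at the cost of recomputing elements when they are requested again.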

2.3 Relationship Between the Filter Bandwidth and the Weighting Coefficient

On the basis of the frequency response function derived in the previous section, the relationship between the filter bandwidth and the weighting coefficient r is now discussed. Finally, an analytical solution for the weight coefficients is obtained for a certain bandwidth. For convenience, a new vector ata is introduced, which satisfies the following relations:

Substituting this into (23) and (24) yields

Substituting this into (31) gives

The cutoff frequency satisfies the following relationship:

By specifying the bandwidth of the filter, the weight coefficient can be calculated as

The above formula describes the relationship between the weight coefficient and the bandwidth, and gives the physical meaning of the weight coefficient. That is, the bandwidth of the filter depends directly on the value of the weight coefficient. The larger the weight coefficient, the smaller the bandwidth of the filter. This relationship is established when the signal enters the steady state, but this does not mean that the VK filter is only suitable for steady-state systems; in fact, it is highly suitable for the unsteady state. When the bandwidth is known, the weight coefficients are computed as described in Algorithm 3.
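The link between bandwidth and weight coefficient can be illustrated with a small Python sketch. It is not Algorithm 3. It assumes the closed-form steady-state response \( 1/\left( 1 + r^{2} \left( 2 - 2\cos \omega \right)^{n_{\text{d}}} \right) \), defines the cutoff as the frequency offset where the gain falls to \( 1/\sqrt 2 \), and treats the bandwidth as the full width of the passband, so that the cutoff lies at half the bandwidth. These conventions and the function names are assumptions and may differ from those used in this paper.

```python
from math import cos, pi, sqrt

def weight_from_bandwidth(bw_hz, fs_hz, nd):
    """Weight r that places the assumed -3 dB point at +/- bw_hz / 2."""
    omega_c = pi * bw_hz / fs_hz                  # half bandwidth in rad/sample
    return sqrt((sqrt(2) - 1) / (2 - 2 * cos(omega_c)) ** nd)

def gain(omega, nd, r):
    """Assumed steady-state gain at frequency offset omega (rad/sample)."""
    return 1 / (1 + r * r * (2 - 2 * cos(omega)) ** nd)

r_wide = weight_from_bandwidth(2.0, 800.0, 4)     # 2 Hz bandwidth at 800 Hz sampling
r_narrow = weight_from_bandwidth(0.8, 800.0, 4)   # 0.8 Hz bandwidth needs a larger weight
g_cut = gain(pi * 2.0 / 800.0, 4, r_wide)         # close to 1/sqrt(2) by construction
```

Under these assumptions the weight grows roughly as the inverse \( n_{\text{d}} \)-th power of the bandwidth, which is why narrow, high-order filters reach the precision limit so quickly.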

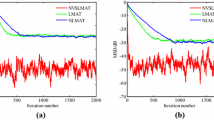

Once the order and bandwidth of the filter have been determined, the weight coefficient of the filter can be obtained. The frequency response function of the filter is then given by (35). As shown in Fig. 1, the frequency response of the filter varies with the difference order. The higher the order, the flatter the passband and the narrower the transition band. In other words, filters with high difference orders offer better frequency selectivity. Note that, when the difference order is 7, the response curve fluctuates in the passband. For difference orders of 8 or more, the filter cannot be designed at this bandwidth. This phenomenon is a consequence of using double-precision floating-point numbers.

As shown in Fig. 2, there is no such problem for an algorithm using arbitrary-precision floating-point numbers. With high-precision floating-point numbers, the passband fluctuation at difference order 7 disappears, and filters with a difference order of 8 or more can be designed without fluctuation.

2.4 Maximum of Weight Coefficient

The results in the previous section indicate that higher-order filters produce flatter passbands and steeper transition bands. A higher order also means that the diagonal elements of \( {A}^{{T}} {A} \) are larger. A smaller bandwidth ensures better frequency selectivity but requires a greater weight factor r. Both these factors increase the value of the diagonal elements of \( r^{2} {A}^{{T}} {A} \). If the diagonal elements of \( r^{2} {A}^{{T}} {A} \) are too large, the limited accuracy of double-precision floating-point numbers implies that, when 1 is added to them, the 1 is lost to rounding and the solution fails. In this section, the minimum bandwidth, i.e., the maximum weight coefficient, is derived for different filter orders. Firstly, the double-precision data type is examined, and then arbitrary-precision numbers are explored.

Firstly, the way in which the precision of arbitrary-precision floating-point numbers is measured is introduced. Two terms are involved, prec and dps. The term prec denotes the binary precision (measured in bits), whereas dps denotes the decimal precision (measured in decimal places). The two are approximately related by prec ≈ 3.33 × dps. For example, a precision of roughly 333 bits is required to hold an approximation of π that is accurate to 100 decimal places (actually, slightly more than 333 bits are used). Double-precision floating-point numbers, on most systems, correspond to 53 bits of precision. For double-precision floating-point numbers, the largest value up to which every integer can be represented exactly is \( 2^{53} \) = 9,007,199,254,740,992.

However, approaching this number should be avoided, because the lost fractional part will affect the accuracy of the result.

Assuming that 10 bits are reserved to ensure the accuracy of the calculation, the maximum value of the diagonal elements of the matrix should be less than \( 2^{43} \) = 8,796,093,022,208.
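The two precision settings and the \( 2^{53} \) boundary can be inspected directly in mpmath. The sketch below only reads and sets the `mp.prec` and `mp.dps` attributes of the global context; the variable names are illustrative.

```python
from mpmath import mp, mpf

mp.prec = 53                                   # same mantissa width as double precision
dps_double = mp.dps                            # decimal places available at 53 bits
lost = (mpf(2) ** 53 + 1) == mpf(2) ** 53      # True: 2**53 + 1 cannot be represented

mp.dps = 100                                   # request 100 decimal places
prec_100 = mp.prec                             # slightly more than 333 bits
kept = (mpf(2) ** 53 + 1) != mpf(2) ** 53      # True: the extra 1 is now retained

mp.prec = 53                                   # restore the default setting
```

Setting either attribute updates the other, so a filter implementation only needs to choose one of them, typically dps, before any mpf values are created.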

The maximum element value of \( {A}^{{T}} {A} \) is found on the diagonal of the matrix. More accurately, it will be the first element of the \( {\mathrm{ata}} \) vector, that is, \( {\text{ata}}\left[ 0 \right] \). To ensure the accuracy of the calculation results, the following equation should be satisfied:

The weight coefficients corresponding to different orders of difference are shown in Fig. 3.

The relation between the cutoff frequency and the weight coefficient of the filter is given by (35). Using this formula, the minimum bandwidth, corresponding to the maximum weight coefficient, can be calculated. Solving for the weight coefficient is fairly straightforward when the bandwidth, namely, the cutoff frequency, is known. The reverse process, however, is more complicated, because it requires solving a nonlinear equation. An analytical solution cannot be obtained, so the cutoff frequency must be found with a numerical algorithm. At the same time, to realize an algorithm for an arbitrary difference order and avoid the inaccuracy of double-precision floating-point numbers, an algorithm for solving nonlinear equations based on arbitrary-precision numbers is needed. For this purpose, intersection-based solvers such as ‘anderson’ or ‘ridder’ are recommended. They usually converge quickly and are very reliable. These solvers are especially suitable when there is only one solution and its interval is known, which is the case when determining the cutoff frequency for a given weight coefficient. The minimum bandwidth for the double-precision data type (prec = 53) is shown in Fig. 4.
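The bracketing idea behind these solvers can be sketched with a plain bisection loop in mpmath. Bisection is used here only as a simple stand-in for the ‘anderson’ and ‘ridder’ solvers, which mpmath exposes through the `solver` argument of `findroot`. The response function, the \( 1/\sqrt 2 \) cutoff definition, the working precision of 50 decimal places, and the function names are assumptions made for illustration.

```python
from mpmath import mp, mpf, cos, sqrt, pi

mp.dps = 50   # working precision in decimal places (illustrative choice)

def gain(omega, r, nd):
    """Assumed steady-state response 1 / (1 + r^2 (2 - 2 cos(omega))^nd)."""
    return 1 / (1 + r ** 2 * (2 - 2 * cos(omega)) ** nd)

def cutoff_by_bisection(r, nd, tol=mpf(10) ** -40):
    """Find the offset in (0, pi) where the gain drops to 1/sqrt(2)."""
    target = 1 / sqrt(mpf(2))
    lo, hi = mpf(0), +pi          # gain is 1 at 0 and decreases monotonically up to pi
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gain(mid, r, nd) > target:
            lo = mid              # still inside the passband
        else:
            hi = mid              # already past the cutoff
    return (lo + hi) / 2

r = mpf(10) ** 8                  # a weight well beyond the comfortable double range
omega_c = cutoff_by_bisection(r, nd=4)
```

Because the gain is monotonic on the bracket, exactly one root exists and the loop cannot diverge; faster bracketing solvers reach the same root in fewer function evaluations.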

The following describes an extension to arbitrary-precision floating-point numbers, based on which the maximum allowable weight coefficients under the corresponding accuracy can be calculated (or parameters with more practical physical significance, i.e., the minimum bandwidth). Similar to double-precision data, two parameters are required, \( {\mathrm{dps}} \), which again denotes the number of decimal places, and \( {dps}_{{{re}}} \), which denotes the number of reserved decimal places needed to ensure the accuracy of the calculation. The maximum weight coefficient can be calculated by the following formula:
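One possible reading of these limits is sketched below in exact integer arithmetic. It assumes that the criterion is \( r^{2} \cdot {\text{ata}}\left[ 0 \right] \) not exceeding \( 2^{43} \) for double precision (53 bits with 10 reserved) and \( 10^{{\text{dps}} - {\text{dps}}_{\text{re}}} \) for arbitrary precision, and that \( {\text{ata}}\left[ 0 \right] = \binom{2n_{\text{d}}}{n_{\text{d}}} \), the sum of the squared binomial difference coefficients. The functions return the integer part of the maximum weight; their names are hypothetical.

```python
from math import comb, isqrt

def ata0(nd):
    """Largest element of A^T A: sum of squared difference coefficients = C(2*nd, nd)."""
    return comb(2 * nd, nd)

def max_weight_double(nd, reserved_bits=10):
    """Largest integer r with r^2 * ata[0] <= 2**(53 - reserved_bits)."""
    limit = 2 ** (53 - reserved_bits)
    return isqrt(limit // ata0(nd))

def max_weight_decimal(nd, dps, dps_re):
    """Largest integer r with r^2 * ata[0] <= 10**(dps - dps_re)."""
    limit = 10 ** (dps - dps_re)
    return isqrt(limit // ata0(nd))

r_double = max_weight_double(4)             # 4th-order filter, double precision
r_hp = max_weight_decimal(4, dps=50, dps_re=5)
```

Python integers are unbounded, so the same code works for any dps without overflow, and raising dps by two decimal places raises the admissible weight by about a factor of ten.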

2.5 Numerical Implementation of VK Filter

This section describes how a numerical method can be used to solve the analytic solution of the filter. Although various numerical methods have been developed to solve the above equation, the relevant numerical algorithms should be discussed for two main reasons. The first, and most important, reason is that an arbitrary-precision floating-point arithmetic library is used to implement the filter. The second reason is that full use should be made of the structural characteristics of sparse matrices to accelerate the calculation. Thus, the problem is not whether the problem can be solved, but how to solve it efficiently and how to embed it into the arbitrary-precision floating-point arithmetic library.

The filter solution can be obtained by solving the linear equation \( {\mathrm{Bx}} = {y}^{{{\prime }}} \) using Cholesky factorization. In this method, the matrix is decomposed into the product of a lower-triangular matrix and its transpose, which can be expressed as \( {\mathrm{B}} = {LL}^{{T}} \). The Cholesky–Banachiewicz and Cholesky–Crout algorithms can be expressed as follows:

The lower-triangular matrix L has only \( n_{\text{d}} + 1 \) nonzero diagonals (this is proved below).

For the first column of L,

For the second column of L

and so on. It can be inferred that

From a rigorous point of view, mathematical induction can be used to prove that the above equation holds. In this way, it can be proved that L is a lower-triangular matrix with \( n_{\text{d}} + 1 \) nonzero diagonals. This structural feature greatly reduces the computational complexity of Cholesky factorization. Equations (40)–(43) can be rewritten as follows:

As L is banded, the memory requirements can be reduced by storing only the nonzero diagonals of L. The resulting matrix is called \( {\mathrm{Ls}} \). L is an \( N \times N \) dimensional matrix, whereas storing its \( n_{\text{d}} + 1 \) nonzero diagonals requires \( {\mathrm{Ls}} \) to be only \( N \times \left( {n_{\text{d}} + 1} \right) \) dimensional.

After Cholesky factorization, the solution of the VK filter can be obtained by forward reduction and backward substitution:

Let

Then,

Equations (53) and (54) can be solved using a row-by-row method. Firstly, \( {y^{\prime}} \) is obtained; this is equal to \( {C}^{{H}} {y} \). The pseudocode for this process is given in Algorithm 5.

The process of solving (52) is forward reduction using the following equations:

The process of solving (53) is backward substitution using the following equations:

Ultimately, the filter solution is obtained. As mentioned above, the complex envelope \( x_{k} \) represents the signal amplitude and phase fluctuations. It does not represent the time-domain solution of the filter. In fact, the time-domain solution of the filter can be calculated as follows:

where \( {\text{real}} \) represents the real part of the complex number. Equations (55)–(59) can be represented by the pseudocode in Algorithm 6.
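The complete solution path (banded Cholesky factorization, forward reduction, backward substitution, and reconstruction of the waveform) can be sketched compactly in Python. This is a double-precision illustration under stated assumptions, not a transcription of Algorithms 4–6: indices are 0-based, the band of L is stored with the diagonal in column 0, the difference coefficients are alternating binomials, and the waveform is formed as twice the real part of \( x\left( n \right){\text{e}}^{j\theta \left( n \right)} \) because a real sinusoid is being modeled by a single complex exponential. The names `vk_filter` and `order_waveform` are hypothetical.

```python
import cmath
from math import comb, cos, pi, sqrt

def vk_filter(y, theta, r, nd):
    """Complex envelope x solving (r^2 A^T A + E) x = C^H y with a banded Cholesky factor."""
    N = len(y)
    dv = [(-1) ** i * comb(nd, i) for i in range(nd + 1)]

    def B(i, j):
        # Element of B computed on demand (0-based indices)
        lo, hi = max(0, i - nd, j - nd), min(N - nd - 1, i, j)
        s = sum(dv[i - k] * dv[j - k] for k in range(lo, hi + 1))
        return r * r * s + (1.0 if i == j else 0.0)

    # Banded factor: Ls[i][d] holds L[i][i - d] for d = 0..nd
    Ls = [[0.0] * (nd + 1) for _ in range(N)]
    for i in range(N):
        first = max(0, i - nd)
        for j in range(first, i + 1):
            s = B(i, j) - sum(Ls[i][i - k] * Ls[j][j - k] for k in range(first, j))
            Ls[i][i - j] = sqrt(s) if i == j else s / Ls[j][0]

    # y' = C^H y
    yp = [cmath.exp(-1j * t) * v for t, v in zip(theta, y)]

    # Forward reduction: L z = y'
    z = [0j] * N
    for i in range(N):
        acc = sum(Ls[i][i - k] * z[k] for k in range(max(0, i - nd), i))
        z[i] = (yp[i] - acc) / Ls[i][0]

    # Backward substitution: L^T x = z
    x = [0j] * N
    for i in range(N - 1, -1, -1):
        acc = sum(Ls[k][k - i] * x[k] for k in range(i + 1, min(N, i + nd + 1)))
        x[i] = (z[i] - acc) / Ls[i][0]
    return x

def order_waveform(x, theta):
    """Time-domain order signal; the factor 2 is an assumption of this sketch."""
    return [2 * (xn * cmath.exp(1j * t)).real for xn, t in zip(x, theta)]

# Noise-free check: a 10 Hz cosine sampled at 100 Hz, 2nd-order filter, 4 Hz bandwidth
fs, f0, N, nd = 100.0, 10.0, 400, 2
theta = [2 * pi * f0 * n / fs for n in range(N)]
y = [cos(t) for t in theta]
r = sqrt((sqrt(2) - 1) / (2 - 2 * cos(pi * 4.0 / fs)) ** nd)
x = vk_filter(y, theta, r, nd)
y_hat = order_waveform(x, theta)
```

The cost is proportional to \( N n_{\text{d}}^{2} \) instead of \( N^{3} \). Moving to arbitrary precision amounts to replacing the float and complex values and the `sqrt` and `exp` calls with their arbitrary-precision counterparts, which is the step this paper is concerned with.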

3 Validation of VK Filter Algorithm

This section presents three test cases that verify the effectiveness of the proposed algorithm. In the first test case, the filter effectiveness is tested under different intensities of background white noise by adding white noise to a sine wave signal. The second test case is similar to the first, but another sine wave signal with a different frequency is added. In the second test case, the two sine wave signals with different frequencies are accurately extracted and the noise is isolated. In the third case, actual measurement data are used. This is a MATLAB example, and so the MATLAB algorithm is compared with the algorithm presented in this paper.

3.1 Extraction of a Signal from Background White Noise

In this section, the VK filter is used to extract a sine waveform signal from background white noise. The data for testing the algorithm are shown in Fig. 5. The added noise obeys a Gaussian probability distribution. Three sets of noise with different expectations are added to the sine wave signal with an amplitude of 1 and frequency of 2 Hz. The expectations of the noise signals are 0.5, 1, and 1.5, respectively. As can be seen from Fig. 5, the greater the expected white noise, the more violent the fluctuation is. Note that the sampling frequency of the signal is 800 Hz.
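A test signal of this kind can be generated with the Python standard library, as sketched below. The noise values 0.5, 1, and 1.5 are interpreted here as the standard deviation of zero-mean Gaussian noise, which is one possible reading of the description above and should be treated as an assumption; the function name, the seed, and the record length are likewise illustrative.

```python
import math
import random

def noisy_sine(n_samples, fs=800.0, freq=2.0, amp=1.0, noise_level=0.5, seed=0):
    """Sine wave plus zero-mean Gaussian noise with standard deviation noise_level."""
    rng = random.Random(seed)                  # fixed seed for repeatable test data
    t = [n / fs for n in range(n_samples)]
    clean = [amp * math.sin(2 * math.pi * freq * tn) for tn in t]
    noisy = [c + rng.gauss(0.0, noise_level) for c in clean]
    return t, clean, noisy

# Three records with increasing noise, 10 s each at 800 Hz
records = {level: noisy_sine(8000, noise_level=level) for level in (0.5, 1.0, 1.5)}
```

The phase needed by the filter follows directly from the known frequency, \( \theta \left( n \right) = 2\pi f n/f_{s} \), so no separate speed signal is required for this synthetic case.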

A 4th-order VK filter with a bandwidth of 2 Hz is designed. Note that the arbitrary-precision arithmetic capability allows an arbitrary bandwidth and order to be chosen. The filtering effect is shown in Fig. 6. More noise passes through when the bandwidth is 2 Hz, and the greater the noise, the greater the distortion of the result.

For comparison, a 4th-order VK filter with a bandwidth of 0.8 Hz is designed. As shown in Fig. 7, although greater noise still produces greater local distortion of the waveform, at the macroscopic scale the filtering results almost coincide with the sine wave signal. This shows that a narrower bandwidth can better isolate the influence of noise. Note that the design parameters of this filter are beyond the range of double-precision data. With the help of an arbitrary-precision arithmetic library, a narrowband signal can be extracted from the full signal with a high sampling frequency. This is the advantage of arbitrary-precision floating-point arithmetic algorithms.

3.2 Extraction of a Multi-component Signal from Background White Noise

In this section, two sine waveform signals of different frequencies are extracted from background white noise. The frequencies of these two signals are 2 Hz and 4 Hz, respectively, and both have an amplitude of 1. Similarly, the added noise obeys a Gaussian probability distribution and has an expectation of 1.5. The signals with and without noise are shown in Fig. 8. The signal without noise is obtained by adding two sine wave signals. This signal, with added white noise, yields the signal with noise.

A 4th-order VK filter with a bandwidth of 1 Hz is designed. As shown in Fig. 9, the frequencies of the two extracted signals are essentially 2 Hz and 4 Hz, respectively, and their amplitudes match those of the original signals. The two sine wave signals can be extracted from the noise, and there is no interference between them. There is a slight fluctuation in the amplitude, and a smaller bandwidth could be set to suppress this fluctuation. However, this cannot be achieved without the algorithm based on arbitrary-precision floating-point arithmetic, which demonstrates the advantage of the arbitrary-precision algorithm. Test cases with more than two signals could be considered, but this would make the figure appear very cluttered. Two signals are sufficient to verify the feasibility of the algorithm and are easier to understand and demonstrate.

3.3 Extraction from Real Measurement Signal

The data processed in this section are derived from actual measurement signals, namely, vibration data from an accelerometer in the cabin of a helicopter during a run-up and coast-down of the main motor. The data are taken from the MATLAB Signal Processing Toolbox.

A helicopter has several rotating components, including the engine, gearbox, and the main and tail rotors. Each component rotates at a known, fixed rate with respect to the main motor, and each may contribute some unwanted vibrations. The frequency of the dominant vibration components can be related to the rotational speed of the motor to investigate the source of high-amplitude vibrations. The helicopter in this example has four blades in both the main and the tail rotors. Important components of vibration from a helicopter rotor may be found at integer multiples of the rotational frequency of the rotor when the vibration is generated by the rotor blades. The signal in this test case is a time-dependent voltage, vib, sampled at a rate of 500 Hz. The data include the angular speed of the turbine engine and a vector t of time instants. The ratios of rotor speed to engine speed for each rotor are stored in the variables mainRotorEngineRatio and tailRotorEngineRatio and have values of 0.0520 and 0.0660, respectively. The signal is shown in Fig. 10.

A filter with a difference order of 4 and a bandwidth of 1 Hz was designed. The orders to be extracted are 0.0520 and 0.0660, which have the two largest amplitudes of all the orders. The filtering result is shown in Fig. 11. For comparison, Fig. 12 shows the filtering result of the 3rd-order filter using the MATLAB algorithm. From these figures, it can be seen that the envelope fluctuation of the MATLAB result is noticeably larger than that given by the algorithm proposed in this paper. Because the graphs in Fig. 12 are drawn in MATLAB, their appearance differs slightly from those produced by the Python code.

4 Conclusions

This paper has presented the theory and numerical implementation of a VK filter in detail. Using the pseudocode given in this paper, the VK filter algorithm based on arbitrary-precision floating-point numbers can be easily realized. The main body of this paper is Sect. 2, where the analytical solution of an arbitrary-order VK filter was given and the relationship between the filter bandwidth and the weighting coefficient r was obtained. The frequency response for various difference orders was also derived. In this process, the use of arbitrary-precision floating-point numbers successfully avoids the problem of passband fluctuations in high-order filters. Finally, a numerical method that exploits the banded structure of the matrices was used to compute the VK filter with reduced computational complexity, which makes the use of arbitrary-precision arithmetic practical.

The three test cases show that the proposed algorithm has a better filtering effect, better frequency selectivity, and stronger anti-interference ability than the double-precision algorithm. The main contribution of this paper is to overcome the limit on how narrow the bandwidth of the VK filter can be by using an arbitrary-precision floating-point arithmetic library. Based on this library, a filter with arbitrary bandwidth and arbitrary difference order can be implemented whenever necessary. From the point of view of practical application, the numerical implementation of the algorithm is also given in detail, so that implementing the related algorithms in Python following the ideas and methods of this paper is straightforward.

References

Brandt, A., Lagö, T.L., Ahlin, K., et al.: Main principles and limitations of current order tracking methods. Sound Vib. 39, 19–22 (2005)

Vold, H., Leuridan, J.: High resolution order tracking at extreme slew rates, using Kalman tracking filters. SAE Paper Number 931288 (1993)

Vold, H., Mains, M., Blough, J.: Theoretical foundations for high performance order tracking with the Vold–Kalman tracking filter. SAE Paper Number 972007 (1997)

Vold, H., Deel, J.: Vold–Kalman order tracking: new methods for vehicle sound quality and drive train NVH applications. SAE Paper Number 972033 (1997)

Vold, H., Mains, M., Corwin-Renner, D.: Multiple axle order tracking with the Vold–Kalman tracking filter. Sound Vib. Mag. 31, 30–34 (1997)

Herlufsen, H., Gade, S., Konstantin-Hansen, H., et al.: Characteristics of the Vold–Kalman order tracking filter. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing 6, 3895–3898 (2000)

Pelant, P., Tuma, J., Benes, T.: Vold–Kalman order tracking filtration in car noise and vibration measurements. In: Proceedings of Internoise, Prague (2004)

Tuma, J.: Setting the passband width in the Vold–Kalman order tracking filter. In: 12th International Congress on Sound and Vibration (ICSV12), Paper 719, Lisbon (2005)

Blough, J.R.: Understanding the Kalman/Vold–Kalman order tracking filters formulation and behavior. In: Proceedings of the SAE Noise and Vibration Conference, SAE paper No. 2007-01-2221 (2007)

Čala, M., Beneš, P.: Implementation of the Vold–Kalman order tracking filters for online analysis. In: 23rd International Congress on Sound and Vibration 2016 (ICSV 23) 1, 367–374 (2016)

Vold, H., Miller, B., Reinbrecht, C., et al.: The Vold–Kalman order tracking filter implementation and application. In: 2017 International Operational Modal Analysis Conference

Amadou, A., Julien, R., Edgard, S., et al.: A new approach to tune the Vold–Kalman estimator for order tracking. Ciba-Geigy A-G, Switz (2016)

Feldbauer, C., Holdrich, R.: Realisation of a Vold–Kalman tracking filter—a least square problem. In: Proceedings of the COST G-6 Conference on Digital Audio Effects (DAFX-000), Verona, Italy (2000)

Pan, M.C., Chu, W.C., Le, D.D.: Adaptive angular-velocity Vold–Kalman filter order tracking–theoretical basis, numerical implementation and parameter investigation. Mech. Syst. Signal. Process. 81, 148–161 (2016)

Zhao, D., Li, J.Y., Cheng, W.D., et al.: Vold-Kalman generalized demodulation for multi-faults detection of gear and bearing under variable speeds. Procedia Manuf. 26, 1213–1220 (2018)

Feng, Z.P., Zhu, W.Y., Zhang, D.: Time-frequency demodulation analysis via Vold-Kalman filter for wind turbine planetary gearbox fault diagnosis under nonstationary speeds. Mech. Syst. Signal. Process. 128, 93–109 (2019)

Acknowledgements

The paper is supported by the National Science Foundation for Young Scientists of China, Intelligent collaboration control of all-terrain vehicle via active attitude, and four-wheel steering control systems (Grant No. 51705185).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Ge, L., Ma, F., Shi, J. et al. Numerical Implementation of High-Order Vold–Kalman Filter Using Python Arbitrary-Precision Arithmetic Library. Automot. Innov. 2, 178–189 (2019). https://doi.org/10.1007/s42154-019-00065-1

DOI: https://doi.org/10.1007/s42154-019-00065-1