Abstract

This paper studies the finite-time synchronization problem of Cohen–Grossberg neural networks (CGNNs) with discontinuous neuron activations and mixed time-delays. Within the extended differential inclusion framework, the well-known finite-time stability theorem and a generalized Lyapunov approach are used to realize finite-time synchronization control of the drive-response system. Different from conventional controllers, we propose two classes of novel switching state-feedback controllers that include the discontinuous factor \(\mathrm{sign}(\cdot )\). With these controllers, the synchronization error of the CGNNs converges to zero in finite time. Moreover, we provide an upper-bound estimate of the settling time for synchronization. Finally, two examples with simulation experiments demonstrate the validity of the theoretical results.


1 Introduction

Since 1983, Cohen–Grossberg neural networks (CGNNs) have attracted considerable attention due to their potential applications in pattern recognition, parallel computing, signal and image processing, associative memory, combinatorial optimization, etc. (see [1]). Many interesting results on the dynamical behaviors of CGNNs have been obtained (see, for example, [2–5, 26–28]). However, most previous results on CGNNs did not consider jump discontinuities of the neuron activations. Discontinuous or non-Lipschitz activation functions are significant in neural networks and can be used to solve programming problems and various control problems (see [6–8]). The study of dynamical behaviors of neural networks with discontinuous activations has become an active research topic. For instance, the paper [29] gave a global stability analysis of a general class of discontinuous neural networks with linear growth activation functions. In [30], the authors discussed the existence and stability of periodic solutions for BAM neural networks with discontinuous neuron activations and impulses. The paper [31] investigated exponential state estimation for Markovian jumping neural networks with discontinuous activation functions and mixed time-varying delays. In recent years, within the Filippov differential inclusion framework, several attempts have been made to investigate the existence and convergence of periodic solutions, almost periodic solutions, or equilibrium points of CGNNs with discontinuous neuron activations (see [9–11]). Nevertheless, there is still little research on finite-time synchronization of CGNNs with discontinuous activation functions.

Finite-time synchronization requires that the trajectories of the error states converge to the desired target within a finite time and remain there thereafter. As a powerful tool, finite-time synchronization control plays an important role in the field of artificial neural networks. On the one hand, an unknown dynamical neuron system can be understood through a well-known dynamical neuron system via finite-time synchronization. On the other hand, finite-time synchronization control shortens the convergence time of the error states; that is, finite-time synchronization converges faster than asymptotic and exponential synchronization (see [12]). Note that a dynamical neuron system with discontinuous neuron activations may also exhibit unstable behaviors such as periodic oscillation and chaos. In particular, if time-delays between neuron signals occur in a discontinuous neuron system, oscillation or chaos becomes more pronounced. Therefore, it is necessary to realize finite-time synchronization control of CGNNs with discontinuous activations and time-delays. In addition, finite-time synchronization of a drive-response system is easier to achieve with non-smooth controllers such as sliding mode controllers and switching controllers, whereas classical continuous linear controllers usually have difficulty realizing finite-time synchronization of discontinuous neural network systems. There are two main reasons: (1) classical continuous linear controllers have difficulty dealing with the uncertain differences between the Filippov solutions of the drive system and the response system with discontinuous factors; (2) classical continuous linear controllers have difficulty eliminating the influence of time-delays on the error states of discontinuous neuron systems.
It should be emphasized that, unlike classical continuous linear controllers, switching state-feedback controllers usually include the discontinuous function \(\mathrm{sign}(\cdot )\), which can effectively overcome the above two difficulties.

Up to now, some theoretical results on synchronization of neural networks with discontinuous activations have been obtained. For example, the authors of [13] dealt with the quasi-synchronization problem of neural networks with discontinuous neuron activations, but the state error of the drive-response system can only be kept within a small region around zero. In [32], the adaptive exponential synchronization problem of delayed Cohen–Grossberg neural networks with discontinuous activations was considered. In [14, 39], exponential synchronization of time-delayed neural networks with discontinuous activations was investigated by constructing suitable Lyapunov functionals. In [15], the periodic synchronization problem of time-delayed neural networks with discontinuous activations was discussed via a switching control approach. However, the synchronization error in [13–15, 32, 39] is not guaranteed to vanish within a finite time, so the convergence time can be very long. The paper [16] handled finite-time synchronization of complex networks with discontinuous node dynamics, but discrete and distributed time-delays between neuron signals were not considered. To the best of the authors' knowledge, only a few papers have studied finite-time synchronization of neural networks with both time-delays and discontinuous neuron activations.

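Inspired by the above discussions, this paper considers a class of discontinuous Cohen–Grossberg neural network models with both discrete and distributed time-delays, described as follows:

$$\begin{aligned} \frac{\mathrm{d}x_{i}(t)}{\mathrm{d}t}& =-d_{i}(x_{i}(t))[a_{i}(x_{i}(t))-\sum \limits _{j=1}^{n}b_{ij}f_{j}(x_{j}(t))\nonumber \\& \quad -\sum \limits _{j=1}^{n}c_{ij}f_{j}(x_{j}(t-\tau _{j}(t)))-\sum \limits _{j=1}^{n}w_{ij}\int _{t-\delta _{j}(t)}^{t}f_{j}(x_{j}(s))\mathrm{d}s-J_{i}], \end{aligned}$$(1)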

where \(i\in \mathbb {N}\doteq \{1,2,\ldots ,n\},\) n is the number of units in the delayed network system (1); \(x_{i}(t)\) denotes the state variable of the ith unit at time t; \(f_{j}(\cdot )\) represents the activation function of the jth neuron; \(d_{i}(x_{i}(t))>0\) represents the amplification function of the ith neuron; \(a_{i}(x_{i}(t))\) denotes an appropriately behaved function; \(b_{ij}\) represents the connection strength of the jth neuron on the ith neuron; \(c_{ij}\) denotes the discrete time-delayed connection strength of the jth neuron on the ith neuron; \(w_{ij}\) denotes the distributed time-delayed connection strength of the jth neuron on the ith neuron; \(J_{i}\) is the neuron input to the ith unit; \(\tau _{j}(t)\) is the discrete time-varying delay at time t and is a continuous function satisfying \(0\le \tau _{j}(t)\le \tau ^{M},\) \(\dot{\tau }_{j}(t)\le \tau _{j}^{D}<1,\) where \(\tau ^{M}=\max \limits _{j\in \mathbb {N}}\{\sup \limits _{t\ge 0}\tau _{j}(t)\}\) and \(\tau _{j}^{D}\) are nonnegative constants; \(\delta _{j}(t)\) denotes the distributed time-varying delay at time t and is a continuous function satisfying \(0\le \delta _{j}(t)\le \delta ^{M}\), \(\dot{\delta }_{j}(t)\le \delta _{j}^{D}<1\), where \(\delta ^{M}=\max \limits _{j\in \mathbb {N}}\{\sup \limits _{t\ge 0}\delta _{j}(t)\}\) and \(\delta _{j}^{D}\) are nonnegative constants.
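To make the roles of the terms in (1) concrete, here is a minimal forward-Euler sketch of the drive system. It assumes constant delays \(\tau _{j}\) and \(\delta _{j}\) (as in the examples of Sect. 4), approximates the distributed-delay integral by a Riemann sum, and evaluates the activation pointwise, so near a discontinuity it only crudely approximates a Filippov solution; it is not the authors' simulation procedure. The names `simulate_cgnn`, `sign`, `act` and `amp` are hypothetical, and `act` is an assumed stand-in activation with a jump at zero; the remaining parameter values are taken from Example 1.

```python
import math


def sign(u):
    """Sign function with sign(0) = 0."""
    return (u > 0) - (u < 0)


def simulate_cgnn(d, a, f, B, C, W, J, tau, delta, phi, t_end, dt):
    """Forward-Euler sketch of system (1) with constant delays tau_j and delta_j.

    d, a, f: lists of callables d_i(u), a_i(u), f_j(u).
    B, C, W: n x n nested lists holding the weights b_ij, c_ij, w_ij.
    phi: list of callables phi_j(s) giving the initial history on [-varsigma, 0].
    Returns the states at the grid times 0, dt, 2*dt, ..., t_end.
    """
    n = len(J)
    m_tau = [round(s / dt) for s in tau]      # discrete delays, in steps
    m_del = [round(s / dt) for s in delta]    # distributed-delay windows, in steps
    hist = max(m_tau + m_del)                 # varsigma / dt
    # x[k] is the state at time (k - hist) * dt; rows 0..hist hold the history phi.
    x = [[phi[j]((k - hist) * dt) for j in range(n)] for k in range(hist + 1)]
    for _ in range(round(t_end / dt)):
        k = len(x) - 1
        now = x[k]
        new = []
        for i in range(n):
            bracket = a[i](now[i]) - J[i]
            for j in range(n):
                bracket -= B[i][j] * f[j](now[j])
                bracket -= C[i][j] * f[j](x[k - m_tau[j]][j])
                # Riemann sum for the integral of f_j(x_j(s)) over [t - delta_j, t]
                integral = dt * sum(f[j](x[k - m][j]) for m in range(1, m_del[j] + 1))
                bracket -= W[i][j] * integral
            new.append(now[i] - dt * d[i](now[i]) * bracket)
        x.append(new)
    return x[hist:]


def act(u):
    # Hypothetical activation: 0.6-Lipschitz part plus a jump of size 0.2 at u = 0.
    return 0.6 * math.tanh(u) - 0.1 * sign(u)


def amp(u):
    # Amplification function d_i of Example 1.
    return 0.6 + 0.1 / (1 + u * u)


traj = simulate_cgnn(
    d=[amp, amp], a=[lambda u: 1.9 * u, lambda u: 2.0 * u], f=[act, act],
    B=[[2.0, -0.2], [-1.0, 0.5]], C=[[-1.5, -0.5], [-0.5, -3.0]],
    W=[[-0.01, 0.0], [0.0, -0.01]], J=[0.0, 0.0],
    tau=[1.0, 1.0], delta=[1.0, 1.0],
    phi=[lambda s: 2.5, lambda s: -1.2], t_end=5.0, dt=0.01)
print(len(traj), traj[-1])
```

A fixed step size keeps the history buffer aligned with the delays; an adaptive or event-detecting integrator would be needed for accurate behaviour at the jump of the activation.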

Throughout this paper, we always make the following fundamental assumptions:

-

(\(\mathscr {H}\)1) For each \(i\in \mathbb {N}\), the amplification function \(d_{i}(u)\) is continuous and there exist positive numbers \(d_{i}^{*}\) and \(d_{i}^{**}\) such that \(0<d_{i}^{*}\le d_{i}(u)\le d_{i}^{**}\) for \(u\in \mathbb {R}\).

-

(\(\mathscr {H}\)2) The neuron activation \(f(x)=(f_{1}(x_{1}),f_{2}(x_{2}),\ldots ,f_{n}(x_{n}))^\mathrm{T}\) is allowed to be discontinuous and satisfies the following conditions:

-

(i)

For each \(i\in \mathbb {N}\), \(f_{i}:\mathbb {R}\rightarrow \mathbb {R}\) is piecewise continuous, i.e., \(f_{i}\) is continuous except on a countable set of isolated points \(\{\rho _{k}^{i}\}\), where there exist finite right and left limits, \(f_{i}^{+}(\rho _{k}^{i})\) and \(f_{i}^{-}(\rho _{k}^{i})\), respectively. Moreover, \(f_{i}\) has at most a finite number of discontinuities on any compact interval of \(\mathbb {R}\).

-

(ii)

For each \(i\in \mathbb {N}\), there exist nonnegative constants \(L_{i}\) and \(p_{i}\) such that for any \(u,v\in \mathbb {R}\),

$$\begin{aligned} \sup \limits _{\xi _{i}\in \overline{\mathrm{co}}[f_{i}(u)],\eta _{i}\in \overline{\mathrm{co}}[f_{i}(v)]}|\xi _{i}-\eta _{i}|\le L_{i}|u-v|+p_{i}, \end{aligned}$$(2)

where, for \(\theta \in \mathbb {R},\)

$$\begin{aligned} \overline{\mathrm{co}}[f_{i}(\theta )]=\left[ \min \{f_{i}^{-}(\theta ) ,f_{i}^{+}(\theta )\},\max \{f_{i}^{-}(\theta ) ,f_{i}^{+}(\theta )\}\right] . \end{aligned}$$


-

(\(\mathscr {H}\)3) For each \(i\in \mathbb {N}\), \(a_{i}(0)=0\), \(a_{i}\) is differentiable, and there exists a positive constant \(\beta _{i}\) such that \(\dot{a}_{i}(u)\ge \beta _{i}\) for all \(u\in \mathbb {R}\), where \(\dot{a}_{i}(u)\) denotes the derivative of \(a_{i}(u)\).
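Assumption (\(\mathscr {H}\)2)(ii) can be spot-checked numerically for a candidate activation. The sketch below uses hypothetical helper names and an assumed activation \(0.6\tanh (u)-0.1\,\mathrm{sign}(u)\); it approximates \(\overline{\mathrm{co}}[f_{i}(\theta )]\) from evaluations just left and right of \(\theta\) and tests inequality (2) on a finite grid. Such a check can expose a violation of (2) but cannot prove that (2) holds on all of \(\mathbb {R}\).

```python
import math


def sign(u):
    """Sign function with sign(0) = 0."""
    return (u > 0) - (u < 0)


def f(u):
    # Hypothetical activation: 0.6-Lipschitz part plus a jump of size 0.2 at u = 0.
    return 0.6 * math.tanh(u) - 0.1 * sign(u)


def co_interval(g, theta, eps=1e-9):
    """Approximate co[g(theta)] = [min(g^-, g^+), max(g^-, g^+)] by one-sided evaluations."""
    left, right = g(theta - eps), g(theta + eps)
    return min(left, right), max(left, right)


def satisfies_h2(g, L, p, grid, tol=1e-9):
    """Test inequality (2) for every pair (u, v) of grid points."""
    for u in grid:
        lo_u, hi_u = co_interval(g, u)
        for v in grid:
            lo_v, hi_v = co_interval(g, v)
            # sup |xi - eta| over xi in co[g(u)], eta in co[g(v)] is attained at endpoints
            worst = max(hi_u - lo_v, hi_v - lo_u)
            if worst > L * abs(u - v) + p + tol:
                return False
    return True


grid = [k / 10 for k in range(-30, 31)]
print(co_interval(f, 0.0))                # approximately (-0.1, 0.1)
print(satisfies_h2(f, 0.6, 0.2, grid))    # True
print(satisfies_h2(f, 0.6, 0.1, grid))    # False: the jump at 0 alone has size 0.2
```

For this activation the check passes with \(L_{i}=0.6\), \(p_{i}=0.2\) and fails with \(p_{i}=0.1\), which illustrates that \(p_{i}\) must at least cover the jump size of \(f_{i}\).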

The structure of this paper is organized as follows. Section 2 presents some preliminary knowledge. Our main results and their proofs are contained in Sect. 3. Section 4 gives two numerical examples to verify the theoretical results. Finally, we conclude this paper in Sect. 5.

Notations Let \(\varsigma =\max \{\tau ^{M},\delta ^{M}\}\). \(\mathbb {R}\) denotes the set of real numbers and \(\mathbb {R}^{n}\) represents the n-dimensional Euclidean space. Given column vectors \(x=(x_{1},x_{2},\ldots ,x_{n})^{\mathrm{T}}\in \mathbb {R}^{n}\) and \(y=(y_{1},y_{2},\ldots ,y_{n})^{\mathrm{T}}\in \mathbb {R}^{n}\), \(\langle x,y\rangle =x^{\mathrm{T}}y=\sum \nolimits _{i=1}^{n}x_{i}y_{i}\) denotes the scalar product of x and y, where the superscript \(\mathrm{T}\) represents the transpose operator. Given \(x=(x_{1},x_{2},\ldots ,x_{n})^{\mathrm{T}}\in \mathbb {R}^{n}\), let \(\parallel x\parallel\) denote any vector norm of x. Finally, let \(\mathrm {sign}(\cdot )\) be the sign function and \(2^{\mathbb {R}^{n}}\) denote the family of all nonempty subsets of \(\mathbb {R}^{n}\).

2 Preliminaries

In this section, we give some preliminary knowledge about set-valued analysis, differential inclusion theory, non-smooth Lyapunov approach and an important inequality. The readers may refer to [17–25] for more details.

Definition 1

(see [17, 18]) Suppose \(X\subseteq \mathbb {R}^{n}\), then \(x\mapsto F(x)\) is said to be a set-valued map from \(X\rightarrow 2^{\mathbb {R}^{n}},\) if for every point x of the set \(X\subset \mathbb {R}^{n},\) there corresponds a nonempty set \(F(x)\subset \mathbb {R}^{n}.\) A set-valued map F with nonempty values is said to be upper semi-continuous (USC) at \(x_{0}\in X,\) if for any open set N containing \(F(x_{0}),\) there exists a neighborhood M of \(x_{0}\) such that \(F(M)\subset N.\)

Now let us introduce the extended Filippov framework (see [18, 19]). Let \(\varsigma\) be a given nonnegative real number and \(C=C([-\varsigma ,0],\mathbb {R}^{n})\) denote the Banach space of continuous functions \(\phi\) mapping the interval \([-\varsigma ,0]\) into \(\mathbb {R}^{n}\) with the norm \(\Vert \phi \Vert _{C}=\sup \limits _{-\varsigma \le s\le 0}\Vert \phi (s)\Vert .\) If for \(\mathcal {T}\in (0,+\infty ],\) \(x(t):[-\varsigma ,\mathcal {T})\rightarrow \mathbb {R}^{n}\) is continuous, then \(x_{t}\in C\) is defined by \(x_{t}(\theta )=x(t+\theta ),\) \(-\varsigma \le \theta \le 0\) for any \(t\in [0,\mathcal {T}).\) Consider the following time-delayed differential equation:

$$\begin{aligned} \frac{\mathrm{d}x}{\mathrm{d}t}=f(t,x_{t}), \end{aligned}$$(4)

where \(x_{t}(\cdot )\) represents the history of the state from time \(t-\varsigma\) up to the present time t; \(\mathrm{d}x/\mathrm{d}t\) is the time derivative of x and \(f:\mathbb {R}\times C\rightarrow \mathbb {R}^{n}\) is a measurable and essentially locally bounded function. In this case, \(f(t,x_{t})\) is allowed to be discontinuous in \(x_{t}\).

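Let us construct the following Filippov set-valued map \(F:\mathbb {R}\times C\rightarrow 2^{\mathbb {R}^{n}}\):

$$\begin{aligned} F(t,x_{t})=\bigcap \limits _{\rho>0}\bigcap \limits _{\mathrm{meas}(\mathcal {N})=0}\overline{\mathrm{co}}\left[ f(t,\mathcal {B}(x_{t},\rho )\setminus \mathcal {N})\right] . \end{aligned}$$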

Here \(\mathrm{meas}(\mathcal {N})\) denotes the Lebesgue measure of set \(\mathcal {N}\); intersection is taken over all sets \(\mathcal {N}\) of Lebesgue measure zero and over all \(\rho>0\); \(\mathcal {B}(x_{t},\rho )=\{x^{*}_{t}\in C\mid \Vert x^{*}_{t}-x_{t}\Vert _{C}<\rho \}\); \(\overline{\mathrm{co}}[\mathbb {E}]\) represents the closure of the convex hull of some set \(\mathbb {E}\).

Definition 2

We say that a vector-valued function x(t) defined on a non-degenerate interval \(\mathbb {I}\subseteq \mathbb {R}\) is a Filippov solution of the time-delayed differential equation (4) if it is absolutely continuous on any compact subinterval \([t_{1},t_{2}]\) of \(\mathbb {I}\) and, for a.e. \(t\in \mathbb {I}\), x(t) satisfies the following time-delayed differential inclusion:

$$\begin{aligned} \frac{\mathrm{d}x}{\mathrm{d}t}\in F(t,x_{t}). \end{aligned}$$

In the following, we apply the extended Filippov differential inclusion framework in discussing the solution of CGNNs with mixed time-delays and discontinuous activations.

Definition 3

A function \(x(t)=(x_{1}(t),x_{2}(t),\ldots ,x_{n}(t))^{\mathrm{T}}:[-\varsigma ,\mathcal {T})\rightarrow \mathbb {R}^{n},\mathcal {T}\in (0,+\infty ],\) is a state solution of the delayed and discontinuous system (1) on \([-\varsigma ,\mathcal {T})\) if

-

(i)

x is continuous on \([-\varsigma ,\mathcal {T})\) and absolutely continuous on any compact subinterval of \([0,\mathcal {T})\);

-

(ii)

there exists a measurable function \(\gamma =(\gamma _{1},\gamma _{2},\ldots ,\gamma _{n})^\mathrm{T}:[-\varsigma ,\mathcal {T})\rightarrow \mathbb {R}^{n}\) such that \(\gamma _{j}(t)\in \overline{\mathrm{co}}[f_{j}(x_{j}(t))]\) for a.e. \(t\in [-\varsigma ,\mathcal {T})\) and for a.e. \(t\in [0,\mathcal {T}),~i\in \mathbb {N}\),

$$\begin{aligned} \frac{\mathrm{d}x_{i}(t)}{\mathrm{d}t}& =-d_{i}(x_{i}(t))[a_{i}(x_{i}(t))-\sum \limits _{j=1}^{n}b_{ij}\gamma _{j}(t)\nonumber \\& \quad -\sum \limits _{j=1}^{n}c_{ij}\gamma _{j}(t-\tau _{j}(t))-\sum \limits _{j=1}^{n}w_{ij}\int _{t-\delta _{j}(t)}^{t}\gamma _{j}(s)\mathrm{d}s-J_{i}]. \end{aligned}$$(6)

Any function \(\gamma =(\gamma _{1},\gamma _{2},\ldots ,\gamma _{n})^\mathrm{T}\) satisfying (6) is called an output solution associated with the state x. With this definition it turns out that the state \(x(t)=(x_{1}(t),x_{2}(t),\ldots ,x_{n}(t))^\mathrm{T}\) is a solution of (1) in the sense of Filippov since for a.e. \(t\in [0,\mathcal {T})\) and \(i\in \mathbb {N}\), it satisfies

The next definition concerns the initial value problem associated with CGNNs (1).

Definition 4

Let \(\phi =(\phi _{1},\phi _{2},\ldots ,\phi _{n})^\mathrm{T}:[-\varsigma ,0]\rightarrow \mathbb {R}^{n}\) be any continuous function and \(\psi =(\psi _{1},\psi _{2},\ldots ,\psi _{n})^\mathrm{T}:[-\varsigma ,0]\rightarrow \mathbb {R}^{n}\) be any measurable selection such that \(\psi _{j}(s)\in \overline{\mathrm{co}}[f_{j}(\phi _{j}(s))]\) \((j\in \mathbb {N})\) for a.e. \(s\in [-\varsigma ,0]\). An absolutely continuous function \(x(t)=x(t,\phi ,\psi )\) associated with a measurable function \(\gamma\) is said to be a solution of the Cauchy problem for system (1) on \([-\varsigma ,\mathcal {T})\) (\(\mathcal {T}\) might be \(+\infty\)) with initial value \((\phi (s),\psi (s)), s\in [-\varsigma ,0]\), if

Consider the neural network model (1) as the drive system, the controlled response system is given as follows:

where \(i\in \mathbb {N}\) and \(u_{i}(t)\) is the controller to be designed to realize synchronization of the drive-response system. The other parameters are the same as those defined in system (1).

Similar to Definition 4, we can give the initial value problem (IVP) of response system (8) as follows:

Lemma 1

(Chain Rule [20, 21]) Assume that \(V(z):\mathbb {R}^{n}\rightarrow \mathbb {R}\) is C-regular, and \(z(t):[0,+\infty )\rightarrow \mathbb {R}^{n}\) is absolutely continuous on any compact subinterval of \([0,+\infty )\). Then, z(t) and \(V(z(t)):[0,+\infty )\rightarrow \mathbb {R}\) are differentiable for a.e. \(t\in [0,+\infty )\) and

$$\begin{aligned} \frac{\mathrm{d}}{\mathrm{d}t}V(z(t))=\langle \zeta (t),\dot{z}(t)\rangle ,\quad \forall \zeta (t)\in \partial V(z(t)), \end{aligned}$$

where \(\partial V(z)\) denotes the Clarke’s generalized gradient of V at point \(z\in \mathbb {R}^{n}\).

Lemma 2

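(see [21]) Assume that \(V(z):\mathbb {R}^{n}\rightarrow \mathbb {R}\) is C-regular, and that \(z(t):[0,+\infty )\rightarrow \mathbb {R}^{n}\) is absolutely continuous on any compact subinterval of \([0,+\infty )\). Write \(V(t)=V(z(t))\). If there exists a continuous function \(\Upsilon :(0,+\infty )\rightarrow \mathbb {R}\), with \(\Upsilon (\varrho )>0\) for \(\varrho \in (0,+\infty )\), such that

$$\begin{aligned} \frac{\mathrm{d}V(t)}{\mathrm{d}t}\le -\Upsilon (V(t))\quad \text{for a.e. } t\ge 0 \text{ with } V(t)>0 \end{aligned}$$

and

$$\begin{aligned} \int _{0}^{V(0)}\frac{\mathrm{d}\varrho }{\Upsilon (\varrho )}=t^{*}<+\infty , \end{aligned}$$

then we have \(V(t)=0\) for \(t\ge t^{*}\). In particular, we have the following conclusions.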

-

(i)

If \(\Upsilon (\varrho )=K_{1}\varrho +K_{2}\varrho ^{\mu }\), for all \(\varrho \in (0,+\infty )\), where \(\mu \in (0,1)\) and \(K_{1},K_{2}>0\), then the settling time can be estimated by

$$\begin{aligned} t^{*}=\frac{1}{K_{1}(1-\mu )}\ln \frac{K_{1}V^{1-\mu }(0)+K_{2}}{K_{2}}. \end{aligned}$$(11)

(ii)

If \(\Upsilon (\varrho )=K\varrho ^{\mu }\), where \(\mu \in (0,1)\) and \(K>0\), then the settling time can be estimated by

$$\begin{aligned} t^{*}=\frac{V^{1-\mu }(0)}{K(1-\mu )}. \end{aligned}$$(12)
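Both estimates are closed forms of \(t^{*}=\int _{0}^{V(0)}\mathrm{d}\varrho /\Upsilon (\varrho )\). As a sanity check, the sketch below integrates the comparison equation \(\dot{V}=-\Upsilon (V)\), i.e. the case where the differential inequality of Lemma 2 holds with equality, by forward Euler and records when V first reaches zero; the result should be close to (11) or (12). The function names and test values are illustrative assumptions.

```python
import math


def settling_time_i(K1, K2, mu, V0):
    """Estimate (11) for Upsilon(rho) = K1*rho + K2*rho**mu with 0 < mu < 1."""
    return math.log((K1 * V0 ** (1 - mu) + K2) / K2) / (K1 * (1 - mu))


def settling_time_ii(K, mu, V0):
    """Estimate (12) for Upsilon(rho) = K*rho**mu with 0 < mu < 1."""
    return V0 ** (1 - mu) / (K * (1 - mu))


def euler_hitting_time(upsilon, V0, dt=1e-5, t_max=100.0):
    """Integrate dV/dt = -upsilon(V) by forward Euler until V reaches zero."""
    V, t = V0, 0.0
    while V > 0.0 and t < t_max:
        V = max(V - dt * upsilon(V), 0.0)   # clamp: V cannot become negative
        t += dt
    return t


K1, K2, mu, V0 = 1.0, 1.0, 0.5, 1.0
print(settling_time_i(K1, K2, mu, V0),
      euler_hitting_time(lambda r: K1 * r + K2 * r ** mu, V0))
print(settling_time_ii(K2, mu, V0),
      euler_hitting_time(lambda r: K2 * r ** mu, V0))
```

With \(K_{1}=K_{2}=1\), \(\mu =0.5\) and \(V(0)=1\), (11) gives \(2\ln 2\approx 1.386\) and (12) gives 2, and the Euler hitting times agree with these up to a small discretization error. The sublinear term \(\varrho ^{\mu }\) is what makes the hitting time finite: with \(\Upsilon (\varrho )=K_{1}\varrho\) alone, V would only decay exponentially.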

Definition 5

Discontinuous drive-response systems (1) and (8) are said to be finite-time synchronized if, for a suitable controller, there exists a time \(t^{*}\) such that \(\lim \limits _{t\rightarrow t^{*}}\Vert e(t)\Vert =0,\) and \(\Vert e(t)\Vert =\Vert y(t)-x(t)\Vert \equiv 0\) for \(t>t^{*}\), where x(t) and y(t) are the solutions of drive system (1) and response system (8) with initial conditions \(\phi\) and \(\varphi\), respectively.

Lemma 3

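(Jensen Inequality [25]) If \(a_{1},a_{2},\ldots ,a_{n}\) are positive numbers and \(0<r<p\), then

$$\begin{aligned} \left( \sum \limits _{i=1}^{n}a_{i}^{p}\right) ^{\frac{1}{p}}\le \left( \sum \limits _{i=1}^{n}a_{i}^{r}\right) ^{\frac{1}{r}}. \end{aligned}$$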

3 Main results

In this section, we consider the possibility of implementing finite-time synchronization control of time-delayed neural networks with discontinuous activations. Different from previous works, we design two classes of novel switching state-feedback controllers that involve time-delays and discontinuous factors. Based on the extended Filippov differential inclusion framework and the well-known finite-time stability theory, we provide some new results on finite-time synchronization of drive-response neural networks with time-delays and discontinuous activations. We define the synchronization error between the drive system (1) and the response system (8) as follows:

$$\begin{aligned} e_{i}(t)=y_{i}(t)-x_{i}(t),\quad i\in \mathbb {N}. \end{aligned}$$

To realize the finite-time synchronization goal, we design the following two classes of switching state-feedback controllers:

Case (1) The switching state-feedback controller \(u(t)=(u_{1}(t),u_{2}(t),\ldots ,u_{n}(t))^\mathrm{T}\) is given by

where \(i\in \mathbb {N}\), the constants \(k_{i}, r_{i},\pi _{ij},\varpi _{ij}\) are the gain coefficients to be determined, and the real number \(\sigma\) satisfies \(0<\sigma <1\).

Case (2) The switching state-feedback controller \(u(t)=(u_{1}(t),u_{2}(t),\ldots ,u_{n}(t))^\mathrm{T}\) is given by

Here \(i\in \mathbb {N}\), the constants \(\ell _{i},\eta _{i},\zeta _{i},\vartheta _{i}\) are the gain coefficients to be determined, \(\hbar>0\) and \(q>0\) are tunable constants, and the real number \(\sigma\) satisfies \(0<\sigma <1\).

Remark 1

Unlike conventional continuous linear controllers and adaptive controllers, the switching state-feedback controllers (13) and (14) include the discontinuous term \(\mathrm{sign}(e_{i}(t))\), which switches with the sign of the error state and drives the error to zero in finite time. Such switching state-feedback controllers have two merits. First, they can handle the uncertain differences between the Filippov solutions of neural networks with discontinuous activations. Second, they can eliminate the influence of time-delays on the states of neuron systems with special state-dependent nonlinear discontinuous properties.

3.1 State-feedback control design in Case (1)

Theorem 1

Suppose that conditions (\(\mathscr {H}\)1)-(\(\mathscr {H}\)3) are satisfied, and assume further that

-

(\(\mathscr {H}\)4) \(k_{i}\ge \sum \limits _{j=1}^{n}\left( |b_{ij}|+|c_{ij}|+\delta ^{M}|w_{ij}|\right) p_{j}\) for each \(i\in \mathbb {N}\), \(\mathscr {B}>\mathscr {A}\), \(\min \limits _{1\le i,j\le n}\left\{ \pi _{ij}\right\} \ge \max \limits _{1\le i,j\le n}\left\{ |c_{ij}|L_{j}\right\}\) and \(\min \limits _{1\le i,j\le n}\left\{ \varpi _{ij}\right\} \ge \max \limits _{1\le i,j\le n}\left\{ |w_{ij}|L_{j}\right\}\), where

$$\begin{aligned} \mathscr {B}=\min \limits _{1\le i\le n}\left\{ \beta _{i}\right\} ,~\mathscr {A}=\max \limits _{1\le j\le n}\left\{ \sum \limits _{i=1}^{n}|b_{ij}|L_{j}\right\} . \end{aligned}$$

If the drive-response system is controlled with the control law (13), then the response system (8) can be synchronized with the drive system (1) in a finite time, and the settling time for finite-time synchronization is given by

Proof

Consider the following Lyapunov functional for the drive-response system with switching state-feedback controller (13):

Obviously, V(t) is C-regular and the following inequality holds:

where \(d_{\min }^{*}=\min \nolimits _{i\in \mathbb {N}}\{d_{i}^{*}\}\) and \(d_{\max }^{**}=\max \nolimits _{i\in \mathbb {N}}\{d_{i}^{**}\}\). By the chain rule in Lemma 1, calculating the time derivative of V(t) along the trajectories of the drive-response system, we obtain

Recalling the assumptions (\(\mathscr {H}\)1) and (\(\mathscr {H}\)3), we can get from (18) that

Multiplying both sides of switching state-feedback controller (13) by \(\mathrm{sign}(e_{i}(t))\), we have

Substituting the formula (20) into Eq. (19) and using the assumption (\(\mathscr {H}\)4), we can deduce that

where \(\mathscr {B}=\min \limits _{1\le i\le n}\left\{ \beta _{i}\right\}\), \(\mathscr {A}=\max \limits _{1\le j\le n}\left\{ \sum \limits _{i=1}^{n}|b_{ij}|L_{j}\right\}\) and \(\mathscr {D}=\min \limits _{1\le i\le n}\left\{ r_{i}\right\}\). From \(0<\sigma <1\) and Lemma 3, we can get

which implies

Combining (17) and (23), it follows from (21) that

According to special case (i) of Lemma 2, the response system (8) and the drive system (1) achieve finite-time synchronization under the switching state-feedback controller (13). Indeed, \(\Upsilon (\varrho )=K_{1}\varrho +K_{2}\varrho ^{\sigma }\), i.e. \(\mu =\sigma\), where \(K_{1}=(\mathscr {B}-\mathscr {A})d_{\min }^{*}>0\) and \(K_{2}=\mathscr {D}(d_{\min }^{*})^{\sigma }>0\). Therefore, the settling time is given by

The proof is complete. \(\square\)
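The last steps of the proof of Theorem 1 combine Lemma 3 with \(r=\sigma\), \(p=1\), i.e. \((\sum _{i}a_{i})^{\sigma }\le \sum _{i}a_{i}^{\sigma }\), with case (i) of Lemma 2 for \(K_{1}=(\mathscr {B}-\mathscr {A})d_{\min }^{*}\) and \(K_{2}=\mathscr {D}(d_{\min }^{*})^{\sigma }\). The sketch below evaluates the resulting estimate (11) with \(\mu =\sigma\) and spot-checks the Jensen step on random vectors. Since \(V(0)\) depends on the initial errors through the Lyapunov functional, it is treated as a free input here; the helper names are hypothetical.

```python
import math
import random


def theorem1_settling_time(B, A, D, d_min, sigma, V0):
    """Estimate (11) with mu = sigma, K1 = (B - A) * d_min and K2 = D * d_min**sigma."""
    if not (B > A and D > 0 and d_min > 0 and 0 < sigma < 1):
        raise ValueError("need B > A, D > 0, d_min > 0 and 0 < sigma < 1")
    K1 = (B - A) * d_min
    K2 = D * d_min ** sigma
    return math.log((K1 * V0 ** (1 - sigma) + K2) / K2) / (K1 * (1 - sigma))


def jensen_holds(a, sigma, tol=1e-12):
    """Lemma 3 with r = sigma and p = 1: (sum a_i)**sigma <= sum a_i**sigma."""
    return sum(a) ** sigma <= sum(x ** sigma for x in a) + tol


# Example 1 constants: B = 1.9, A = 1.8, D = min r_i = 6, d_min = 0.6, sigma = 0.5.
# V0 (the initial value of the Lyapunov functional) is a hypothetical input.
for V0 in (1.0, 5.0, 10.0):
    print(V0, theorem1_settling_time(1.9, 1.8, 6.0, 0.6, 0.5, V0))

random.seed(0)
samples = ([random.uniform(0.0, 5.0) for _ in range(4)] for _ in range(1000))
print(all(jensen_holds(a, 0.5) for a in samples))   # True
```

Dropping the linear term \(K_{1}\varrho\) gives the cruder bound \(V^{1-\sigma }(0)/(K_{2}(1-\sigma ))\) of form (12), which the estimate (11) never exceeds because \(\ln (1+x)\le x\).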

3.2 State-feedback control design in Case (2)

Theorem 2

Under the assumptions (\(\mathscr {H}\)1)-(\(\mathscr {H}\)3) and \(0<\sigma <1\), assume further that the following inequalities hold.

-

(\(\mathscr {H}\)5) For each \(i\in \mathbb {N}\), \(\beta _{i}-\sum \limits _{j=1}^{n}|b_{ji}|L_{i} -\frac{\zeta _{i}}{1-\tau _{i}^{D}}-\frac{\vartheta _{i}\delta ^{M}}{1-\delta _{i}^{D}}\ge 0\), \(\ell _{i}\ge \sum \limits _{j=1}^{n}\left( |b_{ij}|+|c_{ij}|+\delta ^{M}|w_{ij}|\right) p_{j}\), \(\zeta _{i}\ge \sum \limits _{j=1}^{n}|c_{ji}|L_{i}\) and \(\vartheta _{i}\ge \sum \limits _{j=1}^{n}|w_{ji}|L_{i}\).

If the drive-response system is controlled with the control law (14), then the response system (8) can be synchronized with the drive system (1) in a finite time, and the settling time for finite-time synchronization is given by

Proof

Consider the following Lyapunov functional for the drive-response system with switching state-feedback controller (14):

Obviously, V(t) is C-regular. By the chain rule in Lemma 1, calculating the time derivative of V(t) along the trajectories of the drive-response system, we have

Multiplying both sides of controller (14) by \(\mathrm{sign}(e_{i}(t))\), we have

Recalling the assumption (\(\mathscr {H}\)3), we can obtain that

By using the assumption (\(\mathscr {H}\)2), we can get

Similarly, we have

and

It follows from (27)–(32) that

According to the assumption (\(\mathscr {H}\)5), we can obtain from (33) that

By using assumption (\(\mathscr {H}\)1), we have

It follows from Eqs. (34) and (35) that

where

Since \(0<\sigma <1\), based on Lemma 3, we have

which yields

From (36) and (38), we derive that

By special case (ii) of Lemma 2, the response system (8) and the drive system (1) realize finite-time synchronization under the switching state-feedback controller (14). Indeed, \(\Upsilon (\varrho )=K\varrho ^{\mu }\), where \(K=\mathscr {G}>0\). Hence, the settling time is given by

The proof is complete. \(\square\)

Remark 2

By using the finite-time stability theorem given by Forti et al., this paper has studied the finite-time synchronization problem of CGNNs with discontinuous activation functions. In [33], Wang and Zhu dealt with the problem of finite-time stabilization for a class of high-order stochastic nonlinear systems in strict-feedback form. However, the finite-time stability theorem of [33] cannot handle discontinuous dynamical systems.

Remark 3

The existing literature [34–38] contains some results on synchronization of neural networks. However, the neural network models of [34–38] do not consider discontinuities of the neuron activations. Moreover, the finite-time synchronization results of this paper are stronger than the synchronization results of [34–38], because finite-time synchronization converges faster than asymptotic or exponential synchronization.

Remark 4

Different from classical state-feedback methods, our state-feedback controllers (13) and (14) include a switching term, a time-delayed term and an integral term. Theorems 1 and 2 show that the two state-feedback control methods are effective in realizing finite-time synchronization of CGNNs with discontinuous activations and mixed time-delays, whereas classical controllers have difficulty dealing with the uncertain differences of the Filippov solutions of differential equations with discontinuous right-hand sides. In [14], the authors used an adaptive control method to study exponential synchronization of time-delayed neural networks with discontinuous activations. Nevertheless, the adaptive control method of [14] would have difficulty realizing finite-time synchronization of neural network model (1) because of the mixed time-delays and the discontinuities of the activations.

4 Numerical simulations

In this section, two numerical examples are given to illustrate the effectiveness of the main results.

Example 1

Consider the following 2-dimensional CGNNs (1) with \(d_{i}(x_{i}(t))=0.6+\frac{0.1}{1+x_{i}^{2}(t)}(i=1,2)\), \(a_{1}(x_{1}(t))=1.9x_{1}(t)\), \(a_{2}(x_{2}(t))=2x_{2}(t)\), \(b_{11}=2\), \(b_{12}=-0.2\), \(b_{21}=-1\), \(b_{22}=0.5\), \(c_{11}=-1.5\), \(c_{12}=c_{21}=-0.5\), \(c_{22}=-3,\) \(w_{11}=w_{22}=-0.01\), \(w_{21}=w_{12}=0\), \(J_{1}=J_{2}=0\) and \(\tau _{j}(t)=\delta _{j}(t)=1(j=1,2).\) The discontinuous activation functions are described by

It is obvious that the discontinuous activation function \(f_{i}(\theta )\) is non-monotonic and satisfies assumption (\(\mathscr {H}\)2). Indeed, \(f_{i}(\theta )\) has a discontinuity at \(\theta =0\), and \(\overline{\mathrm{co}}[f_{i}(0)]=[f_{i}^{+}(0),f_{i}^{-}(0)]=[-0.1,0.1].\) We can choose \(L_{1}=L_{2}=0.6\) and \(p_{1}=p_{2}=0.2\) such that inequality (2) holds. Under the switching state-feedback controller (13), we select the control gains \(k_{1}=k_{2}=12,\) \(r_{1}=r_{2}=6,\) \(\pi _{ij}=2\), \(\varpi _{ij}=0.02\) and \(\sigma =0.5\). By simple computation, we can get

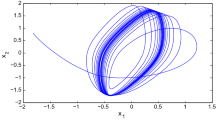

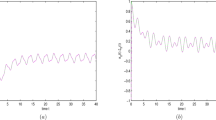

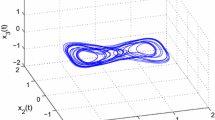

This shows that the conditions of Theorem 1 are satisfied. Thus, the discontinuous and time-delayed CGNNs can achieve finite-time synchronization with the corresponding response system under the switching state-feedback controller (13). Consider the drive-response neural network system with initial conditions \([\phi (t),\psi (t)]=[(2.5,-1.2)^\mathrm{T},(f_{1}(2.5),f_{2}(-1.2))^\mathrm{T}]\) for \(t\in [-1,0]\) and \([\varphi (t),\omega (t)]=[(-1,2)^\mathrm{T},(f_{1}(-1),f_{2}(2))^\mathrm{T}]\) for \(t\in [-1,0]\). Figure 1 presents the trajectory of each error state, which approaches zero in finite time. Figure 2 shows the state trajectories of the drive system and its corresponding response system. The numerical simulations agree well with the theoretical results.
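The claim that the conditions of Theorem 1 hold for Example 1 can be rechecked mechanically. The sketch below (hypothetical function `check_h4`) computes \(\mathscr {B}\), \(\mathscr {A}\) and the inequalities of (\(\mathscr {H}\)4) from the data of Example 1, assuming \(\beta _{1}=1.9\), \(\beta _{2}=2\) (the slopes of \(a_{1}\), \(a_{2}\)) and \(\delta ^{M}=1\) (since \(\delta _{j}(t)=1\)).

```python
def check_h4(beta, b, c, w, L, p, delta_M, k, pi, varpi):
    """Return (B, A, ok) where ok says whether assumption (H4) holds."""
    n = len(beta)
    idx = range(n)
    B = min(beta)
    # A = max over j of (sum over i of |b_ij|) * L_j
    A = max(sum(abs(b[i][j]) for i in idx) * L[j] for j in idx)
    gains_ok = all(
        k[i] >= sum((abs(b[i][j]) + abs(c[i][j]) + delta_M * abs(w[i][j])) * p[j]
                    for j in idx)
        for i in idx)
    pi_ok = min(min(row) for row in pi) >= max(abs(c[i][j]) * L[j] for i in idx for j in idx)
    varpi_ok = min(min(row) for row in varpi) >= max(abs(w[i][j]) * L[j] for i in idx for j in idx)
    return B, A, gains_ok and B > A and pi_ok and varpi_ok


B, A, ok = check_h4(
    beta=[1.9, 2.0],                      # a_1'(u) = 1.9, a_2'(u) = 2
    b=[[2.0, -0.2], [-1.0, 0.5]],
    c=[[-1.5, -0.5], [-0.5, -3.0]],
    w=[[-0.01, 0.0], [0.0, -0.01]],
    L=[0.6, 0.6], p=[0.2, 0.2],
    delta_M=1.0,                          # delta_j(t) = 1
    k=[12.0, 12.0],
    pi=[[2.0, 2.0], [2.0, 2.0]],
    varpi=[[0.02, 0.02], [0.02, 0.02]])
print(B, A, ok)
```

It returns \(\mathscr {B}=1.9\) and \(\mathscr {A}=1.8\) (up to floating-point rounding), so \(\mathscr {B}>\mathscr {A}\) holds, but only with a margin of 0.1; this small margin also makes \(K_{1}=(\mathscr {B}-\mathscr {A})d_{\min }^{*}\) small.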

Example 2

Consider the following 2-dimensional CGNNs (1) with \(d_{i}(x_{i}(t))=0.7+\frac{0.2}{1+x_{i}^{2}(t)}(i=1,2)\), \(a_{1}(x_{1}(t))=4x_{1}(t)\), \(a_{2}(x_{2}(t))=3x_{2}(t)\), \(b_{11}=-1\), \(b_{12}=-0.1\), \(b_{21}=-1.5\), \(b_{22}=0.8\), \(c_{11}=-1\), \(c_{12}=-0.2\), \(c_{21}=0.8\), \(c_{22}=-2.5\), \(w_{11}=w_{22}=0.02\), \(w_{21}=w_{12}=0\), \(J_{1}=J_{2}=0\) and \(\tau _{j}(t)=\delta _{j}(t)=1(j=1,2)\). The discontinuous activation functions are given as

It is not difficult to check that the discontinuous activation function \(f_{i}(\theta )\) satisfies assumption (\(\mathscr {H}\)2) with \(L_{1}=L_{2}=0.3\) and \(p_{1}=p_{2}=0.2\). Under the switching state-feedback controller (14), we choose the control gains \(\ell _{1}=\ell _{2}=6\), \(\eta _{1}=\eta _{2}=5\), \(\hbar =q=0.01\), \(\zeta _{i}=\vartheta _{i}=1\) and \(\sigma =0.5\). It is easy to calculate that

Hence, all the conditions of Theorem 2 are satisfied. Then, the discontinuous and time-delayed CGNNs can realize finite-time synchronization with the corresponding response system under the switching state-feedback controller (14). Consider the following initial conditions of the drive-response neural network system: \([\phi (t),\psi (t)]=[(3,-2)^\mathrm{T},(f_{1}(3),f_{2}(-2))^\mathrm{T}]\) for \(t\in [-1,0]\) and \([\varphi (t),\omega (t)]=[(-2,3)^\mathrm{T},(f_{1}(-2),f_{2}(3))^\mathrm{T}]\) for \(t\in [-1,0]\). Figures 3 and 4 confirm the theoretical results.
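Similarly, the inequalities of (\(\mathscr {H}\)5) for Example 2 can be rechecked. Because the delays are constant, \(\tau _{i}^{D}=\delta _{i}^{D}=0\) and \(\delta ^{M}=1\); the slopes of \(a_{1}\), \(a_{2}\) give \(\beta _{1}=4\), \(\beta _{2}=3\). The function name `h5_margins` is hypothetical.

```python
def h5_margins(beta, b, c, w, L, p, tau_D, delta_D, delta_M, ell, zeta, vartheta):
    """Return the left-hand sides of the first inequality in (H5) and whether (H5) holds."""
    n = len(beta)
    idx = range(n)
    # beta_i - sum_j |b_ji| L_i - zeta_i / (1 - tau_i^D) - vartheta_i delta^M / (1 - delta_i^D)
    margins = [
        beta[i] - sum(abs(b[j][i]) for j in idx) * L[i]
        - zeta[i] / (1 - tau_D[i]) - vartheta[i] * delta_M / (1 - delta_D[i])
        for i in idx]
    ell_ok = all(
        ell[i] >= sum((abs(b[i][j]) + abs(c[i][j]) + delta_M * abs(w[i][j])) * p[j]
                      for j in idx)
        for i in idx)
    zeta_ok = all(zeta[i] >= sum(abs(c[j][i]) for j in idx) * L[i] for i in idx)
    vartheta_ok = all(vartheta[i] >= sum(abs(w[j][i]) for j in idx) * L[i] for i in idx)
    return margins, all(m >= 0 for m in margins) and ell_ok and zeta_ok and vartheta_ok


margins, ok = h5_margins(
    beta=[4.0, 3.0],                        # a_1'(u) = 4, a_2'(u) = 3
    b=[[-1.0, -0.1], [-1.5, 0.8]],
    c=[[-1.0, -0.2], [0.8, -2.5]],
    w=[[0.02, 0.0], [0.0, 0.02]],
    L=[0.3, 0.3], p=[0.2, 0.2],
    tau_D=[0.0, 0.0], delta_D=[0.0, 0.0],   # constant delays tau_j = delta_j = 1
    delta_M=1.0,
    ell=[6.0, 6.0], zeta=[1.0, 1.0], vartheta=[1.0, 1.0])
print(margins, ok)   # margins approximately [1.25, 0.73]
```

The margins of the first inequality are about 1.25 and 0.73, and the remaining inequalities also hold (for instance \(\zeta _{2}=1\) against the required \((|c_{12}|+|c_{22}|)L_{2}=0.81\)).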

Remark 5

The control methods of [34–38] can only realize asymptotic or exponential synchronization of neural networks. Examples 1 and 2 show that the finite-time synchronization control method proposed in this paper yields better convergence properties for neural networks. On the other hand, unlike the control methods used to study finite-time synchronization in [16, 43, 47], our switching state-feedback control method can handle more general neural networks with time-delays and discontinuous factors. Examples 1 and 2 also illustrate that our control method is effective and that its conditions are easy to verify.

5 Conclusions

In this paper, we have dealt with the finite-time synchronization issue of a class of CGNNs with discontinuous activations and mixed time-delays. Firstly, we have designed two classes of novel switching state-feedback controllers. Such switching state-feedback controllers play an important role in handling the uncertain differences of the Filippov solutions for discontinuous CGNNs and can eliminate the influence of time-delays on the states of CGNNs. Then, some easily testable conditions have been established that guarantee finite-time synchronization of the drive-response system. The main tools of this paper involve differential inclusion theory, non-smooth analysis theory, the well-known finite-time stability theorem, inequality techniques and the generalized Lyapunov functional method. Finally, the designed control method and theoretical results have been illustrated by numerical examples. We think that it would be interesting to extend the theory and control method of this paper to other classes of discontinuous neural networks such as stochastic neural networks, Markovian jump switched neural networks, reaction-diffusion neural networks, memristor-based neural networks, bidirectional associative memory (BAM) neural networks, and so on. There are some papers [40–45] related to this topic. In addition, it is also expected to explore some other complex dynamical behaviors of discontinuous neural networks such as homoclinic orbits, cluster synchronization, exponential \(H_{\infty }\) filtering problem, finite-time boundedness and passivity [46–49].

References

Cohen M, Grossberg S (1983) Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans Syst Man Cybern 13:815–826

Liao XF, Li CG, Wong KW (2004) Criteria for exponential stability of Cohen–Grossberg neural networks. Neural Netw 17:1401–1414

Wang L, Zou XF (2002) Exponential stability of Cohen–Grossberg neural networks. Neural Netw 15:415–422

Zheng CD, Shan QH, Zhang HG, Wang ZS (2013) On stabilization of stochastic Cohen–Grossberg neural networks with mode-dependent mixed time-delays and Markovian switching. IEEE Trans Neural Netw Learn Syst 24:800–811

Zhu QX, Li XD (2012) Exponential and almost sure exponential stability of stochastic fuzzy delayed Cohen–Grossberg neural networks. Fuzzy Sets Syst 203:74–94

Chong EKP, Hui S, Zak SH (1999) An analysis of a class of neural networks for solving linear programming problems. IEEE Trans Autom Control 44:1995–2006

Forti M, Nistri P (2003) Global convergence of neural networks with discontinuous neuron activations. IEEE Trans Circuits Syst I. Fundam Theory Appl 50:1421–1435

Kennedy MP, Chua LO (1988) Neural networks for nonlinear programming. IEEE Trans Circuits Syst I. Fundam Theory Appl 35:554–562

Wang DS, Huang LH, Cai ZW (2013) On the periodic dynamics of a general Cohen–Grossberg BAM neural networks via differential inclusions. Neurocomputing 118:203–214

Wang DS, Huang LH (2014) Periodicity and global exponential stability of generalized Cohen–Grossberg neural networks with discontinuous activations and mixed delays. Neural Netw 51:80–95

Wang DS, Huang LH (2016) Periodicity and multi-periodicity of generalized Cohen–Grossberg neural networks via functional differential inclusions. Nonlinear Dyn 85:67–86

Liu XY, Park JH, Jiang N, Cao JD (2014) Nonsmooth finite-time stabilization of neural networks with discontinuous activations. Neural Netw 52:25–32

Liu XY, Chen TP, Cao JD, Lu WL (2011) Dissipativity and quasi-synchronization for neural networks with discontinuous activations and parameter mismatches. Neural Netw 24:1013–1021

Yang XS, Cao JD (2013) Exponential synchronization of delayed neural networks with discontinuous activations. IEEE Trans Circuits Syst I. Fundam Theory Appl 60:2413–2439

Cai ZW, Huang LH, Guo ZY, Zhang LL, Wan XT (2015) Periodic synchronization control of discontinuous delayed networks by using extended Filippov-framework. Neural Netw 68:96–110

Liu XY, Yu WW, Cao JD, Alsaadi F (2015) Finite-time synchronisation control of complex networks via non-smooth analysis. IET Control Theory Appl 9:1245–1253

Filippov AF (1988) Differential equations with discontinuous right-hand side. Mathematics and its applications (Soviet series). Kluwer Academic, Boston

Aubin JP, Cellina A (1984) Differential inclusions, set-valued functions and viability theory. Springer, Berlin

Wang KN, Michel AN (1996) Stability analysis of differential inclusions in Banach space with application to nonlinear systems with time delays. IEEE Trans Circuits Syst I. Fundam Theory Appl 43(8):617–626

Clarke FH (1983) Optimization and nonsmooth analysis. Wiley, New York

Forti M, Grazzini M, Nistri P, Pancioni L (2006) Generalized Lyapunov approach for convergence of neural networks with discontinuous or non-Lipschitz activations. Phys D Nonlinear Phenom 214(1):88–99

Cortés J (2008) Discontinuous dynamical systems. IEEE Control Syst 28(3):36–73

Aubin JP, Frankowska H (1990) Set-valued analysis. Birkhäuser, Boston

Clarke FH, Ledyaev Y, Stern RJ, Wolenski PR (1998) Nonsmooth analysis and control theory. Springer, New York

Hardy GH, Littlewood JE, Pólya G (1988) Inequalities. Cambridge University Press, London

Zhu QX, Cao JD (2010) Robust exponential stability of Markovian jump impulsive stochastic Cohen–Grossberg neural networks with mixed time delays. IEEE Trans Neural Netw 21:1314–1325

Zhu QX, Cao JD (2011) Exponential stability analysis of stochastic reaction–diffusion Cohen–Grossberg neural networks with mixed delays. Neurocomputing 74:3084–3091

Zhu QX, Li XD, Yang XS (2011) Exponential stability for stochastic reaction–diffusion BAM neural networks with time-varying and distributed delays. Appl Math Comput 217:6078–6091

Wu HQ (2009) Global stability analysis of a general class of discontinuous neural networks with linear growth activation functions. Inf Sci 179:3432–3441

Wu HQ, Shan CH (2009) Stability analysis for periodic solution of BAM neural networks with discontinuous neuron activations and impulses. Appl Math Model 33:2564–2574

Wu HQ, Wang LF, Wang Y, Niu PF, Fang BL (2016) Exponential state estimation for Markovian jumping neural networks with mixed time-varying delays and discontinuous activation functions. Int J Mach Learn Cybern 7:641–652

Wu HQ, Zhang XW, Li RX, Yao R (2015) Adaptive exponential synchronization of delayed Cohen–Grossberg neural networks with discontinuous activations. Int J Mach Learn Cybern 6:253–263

Wang H, Zhu QX (2015) Finite-time stabilization of high-order stochastic nonlinear systems in strict-feedback form. Automatica 54:284–291

Rakkiyappan R, Dharani S, Zhu QX (2015) Synchronization of reaction–diffusion neural networks with time-varying delays via stochastic sampled-data controller. Nonlinear Dyn 79:485–500

Zhu QX, Cao JD (2012) pth moment exponential synchronization for stochastic delayed Cohen–Grossberg neural networks with Markovian switching. Nonlinear Dyn 67:829–845

Zhu QX, Cao JD (2011) Adaptive synchronization under almost every initial data for stochastic neural networks with time-varying delays and distributed delays. Commun Nonlinear Sci Numer Simul 16:2139–2159

Zhu QX, Cao JD (2010) Adaptive synchronization of chaotic Cohen–Grossberg neural networks with mixed time delays. Nonlinear Dyn 61:517–534

Cao JD, Alofi A, Al-Mazrooei A, Elaiw A (2013) Synchronization of switched interval networks and applications to chaotic neural networks. Abstr Appl Anal, Article ID 940573

Yang XS, Cao JD, Ho DWC (2015) Exponential synchronization of discontinuous neural networks with time-varying mixed delays via state feedback and impulsive control. Cognit Neurodyn 9:113–128

Syed Ali M, Saravanan S, Cao JD (2017) Finite-time boundedness, \(L_{2}\)-gain analysis and control of Markovian jump switched neural networks with additive time-varying delays. Nonlinear Anal Hybrid Syst 23:27–43

Rakkiyappan R, Dharani S, Zhu QX (2015) Stochastic sampled-data \(H_{\infty }\) synchronization of coupled neutral-type delay partial differential systems. J Franklin Inst 352:4480–4502

Yang XS, Cao JD, Yang ZC (2013) Synchronization of coupled reaction–diffusion neural networks with time-varying delays via pinning-impulsive controller. SIAM J Control Optim 51:3486–3510

Velmurugan G, Rakkiyappan R, Cao JD (2016) Finite-time synchronization of fractional-order memristor-based neural networks with time delays. Neural Netw 73:36–46

Cao JD, Song QK (2006) Stability in Cohen–Grossberg-type bidirectional associative memory neural networks with time-varying delays. Nonlinearity 19:1601–1617

Cao JD, Ho DWC, Huang X (2007) LMI-based criteria for global robust stability of bidirectional associative memory networks with time delay. Nonlinear Anal Theory Methods Appl 66:1558–1572

Cao JD, Li LL (2009) Cluster synchronization in an array of hybrid coupled neural networks with delay. Neural Netw 22:335–342

Wu YY, Cao JD, Alofi A, Al-Mazrooei A, Elaiw A (2015) Finite-time boundedness and stabilization of uncertain switched neural networks with time-varying delay. Neural Netw 69:135–143

Cao JD, Rakkiyappan R, Maheswari K, Chandrasekar A (2016) Exponential \(H_{\infty }\) filtering analysis for discrete-time switched neural networks with random delays using sojourn probabilities. Sci China Technol Sci 59:387–402

Wu AL, Zeng ZG (2014) Passivity analysis of memristive neural networks with different memductance functions. Commun Nonlinear Sci Numer Simul 19:274–285

Acknowledgements

This work was supported by the National Natural Science Foundation of China (11626100, 11371127), the Natural Science Foundation of Hunan Province (2016JJ3078) and the Scientific Research Youth Project of Hunan Provincial Education Department (16B133).


Cite this article

Cai, ZW., Huang, LH. Finite-time synchronization by switching state-feedback control for discontinuous Cohen–Grossberg neural networks with mixed delays. Int. J. Mach. Learn. & Cyber. 9, 1683–1695 (2018). https://doi.org/10.1007/s13042-017-0673-9
