Abstract

This paper discusses the synchronization problem for a class of reaction–diffusion neural networks with Dirichlet boundary conditions. Unlike other studies, a sampled-data controller with stochastic sampling is designed to synchronize the considered neural networks with reaction–diffusion terms and time-varying delays, where \(m\) sampling periods are considered whose occurrence probabilities are given constants and follow a Bernoulli distribution. A novel discontinuous Lyapunov–Krasovskii functional with triple integral terms is introduced based on the extended Wirtinger’s inequality. Using Jensen’s inequality and the reciprocally convex technique to bound the derivative of the Lyapunov–Krasovskii functional, new synchronization criteria are obtained in terms of linear matrix inequalities (LMIs). Numerical examples are provided to show the effectiveness of the proposed theoretical results.

1 Introduction

During the past decades, different types of neural network models have been investigated extensively and implemented widely in areas such as combinatorial optimization, signal processing, pattern recognition, speed detection of moving objects and associative memories, see [1–4]. Such applications depend strongly on the dynamical behaviors of neural networks. Therefore, the study of these dynamical behaviors is an essential step in the practical design of neural networks. Since neural networks can exhibit complicated dynamics and even chaotic behavior, the synchronization of neural networks has also become an important area of study, and there have been many investigations, see [5–10], for instance.

It is well known that time delays are unavoidable in neural networks. They are an inherent phenomenon caused by the finite processing speed of information, for example, the finite axonal propagation speed from soma to synapses, the diffusion of chemicals across the synapses, and the postsynaptic potential integration of membrane potential at the neuronal cell body and dendrites. Moreover, in the electronic implementation of analog neural networks, time delays inevitably occur in the communication and response of neurons due to the finite switching speed of amplifiers [11] and the transmission of signals in hardware. In addition, the processing of moving images requires the introduction of delays in signals transmitted among the cells [12]. Time delays may lead to undesirable dynamical behaviors such as oscillation, divergence or instability; thus, in order to manufacture high-quality neural networks, it is necessary to study the dynamics of neural networks with delays. However, there are few results in the literature on the synchronization of neural networks with time-varying delays, see [13–16] and references therein. Moreover, none of the aforementioned works takes diffusion effects into consideration.

It is common to describe neural network models by ordinary differential equations. In the real world, however, diffusion effects cannot be ignored in the neural network model when electrons are moving in an uneven electromagnetic field. For instance, it is well known that multilayer cellular neural networks, which are arrays of simple nonlinear computing elements characterized by local interactions between cells, are well suited to describing locally interconnected simple dynamical systems with a lattice-like structure. In other words, the whole structure and dynamic behavior of multilayer cellular neural networks depend not only on the evolution time of each variable and its position but also on the interactions deriving from the space-distributed structure of the whole network. Therefore, it is essential to consider state variables that vary with both time and space. Furthermore, there are a large number of reaction–diffusion phenomena in nature and in many disciplines, particularly in chemistry and biology. When such phenomena exist in chemical reactions, the interaction between chemicals and spatial diffusion on the chemical media can be seen until a steady-state spatial concentration pattern has completely developed. Thus, it is of great importance to model both biological and man-made neural networks with reaction–diffusion effects. In [17–21], the authors have investigated the global exponential stability and periodicity of reaction–diffusion recurrent neural networks with Dirichlet boundary conditions. The global exponential stability and synchronization of delayed reaction–diffusion neural networks with Dirichlet boundary conditions under an impulsive controller have been studied in [22]. In [23], the authors have formulated and solved the feedback stabilization problem for unstable nonuniform spatial patterns in reaction–diffusion systems. 
Boundary control to stabilize a system of coupled linear plant and reaction–diffusion process has been considered in [24], where backstepping transformations with a kernel function and a vector-valued function were introduced to design control laws. Adaptive synchronization in an array of linearly coupled neural networks with reaction–diffusion terms and time delays has been discussed in [25]. Very recently, the authors in [26] have studied the synchronization problem for coupled reaction–diffusion neural networks with time-varying delays via a pinning-impulsive controller, and some novel synchronization criteria have been proposed. Both theoretically and practically, models with time delays and reaction–diffusion terms provide a good approximation of neural networks, but these effects can also lead to poor network performance. Therefore, it is a challenging problem to design a controller that makes full use of the advantages of such models while overcoming their disadvantages.

In recent years, the sampled-data-based discrete control approach has found a wider range of applications than other control approaches such as state feedback control, sliding mode control, fuzzy logic control and intermittent control. Since real-world signals, such as our voices, are analog, they must be converted into digital signals, which are discrete in both time and amplitude, before they can be processed by computers. In the sampling process, the values of a continuous signal are measured at certain time intervals, and the resulting discrete sequence is referred to as the sampled signal. These samples are fed into the digital controller, which processes them, and the result is finally transformed back into a continuous signal by a zero-order hold device. An application of this technique is the radio broadcast of live musical programs. Owing to this wide range of real-world applications, the controller design problem using sampled data has attracted much attention, and corresponding results have been published in the literature, see [27–34].
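The sample-and-hold step described above can be illustrated with a short Python sketch. It is only an illustration under assumed inputs: the function name `sample_and_hold`, the test signal `np.sin` and the sampling instants are hypothetical and do not come from the paper.

```python
import numpy as np

def sample_and_hold(signal, t_grid, sample_times):
    """Zero-order hold: for each time in t_grid, return the value of
    `signal` taken at the most recent sampling instant."""
    sample_times = np.asarray(sample_times, dtype=float)
    samples = signal(sample_times)  # values measured at the sampling instants
    # Index of the latest sampling instant that is <= t, for every t in t_grid.
    idx = np.searchsorted(sample_times, t_grid, side="right") - 1
    if np.any(idx < 0):
        raise ValueError("t_grid starts before the first sampling instant")
    return samples[idx]

# Hypothetical example: hold a sine wave sampled every 0.25 time units.
t_grid = np.linspace(0.0, 1.0, 11)
sample_times = np.array([0.0, 0.25, 0.5, 0.75])
held = sample_and_hold(np.sin, t_grid, sample_times)
```

Between two sampling instants the held signal is constant, which is why the control input seen by the continuous-time system is piecewise constant.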

Selecting a proper sampling period is the most important task in designing suitable controllers for sampled-data control systems. In the last decades, considerable attention has been paid to constant sampling, but in recent years variable sampling periods have been applied to various practical systems [35] because they deal effectively with problems such as changes in the network situation and the limited calculating speed of hardware. The stability problem of feedback control systems with time-varying sampling periods was discussed in [36, 37]. A further extension of time-varying sampling periods is stochastically varying sampling periods, which have been used in the control of sampled-data systems in the most recent works. In [38], the problem of robust \(H_\infty \) control for sampled-data systems with uncertainties and probabilistic sampling has been investigated. More recently, robust synchronization of uncertain nonlinear chaotic systems has been investigated in [39] using stochastic sampled-data control, where \(m\) sampling periods are taken into consideration. To the best of the authors’ knowledge, no results have been reported in the literature on the synchronization problem for reaction–diffusion neural networks with time-varying delays via a stochastic sampled-data controller.

Motivated by the above discussions, in the present work, we study the synchronization problem for reaction–diffusion neural networks with time-varying delays by designing a suitable sampled-data controller with stochastic sampling. We consider \(m\) sampling periods, whose occurrence probabilities are given constants and follow a Bernoulli distribution. By constructing a new discontinuous Lyapunov–Krasovskii functional with triple integral terms and by employing Jensen’s inequality and the reciprocally convex technique, some novel and easily verified synchronization criteria are derived in terms of LMIs, which can be solved using any standard LMI software. Numerical simulations are provided to show the efficiency of our theoretical results.

The rest of the paper is organized as follows: The notations used throughout this paper and some necessary lemmas are given in Sect. 2. In Sect. 3, the considered model of reaction–diffusion neural networks with time-varying delays is presented, and asymptotic synchronization of the proposed model in the mean-square sense is studied by designing a sampled-data controller with stochastic sampling. Numerical simulations are presented in Sect. 4, and conclusions are drawn in Sect. 5.

2 Notations and preliminaries

Let \(R^n\) denote the \(n\)-dimensional Euclidean space and \(R^{n\times m}\) the set of all real \(n\times m\) matrices. \(P>0\) (\(P\ge 0\)) means that \(P\) is symmetric and positive definite (positive semi-definite). In symmetric matrices, the notation \((\star )\) represents a term that is induced by symmetry. \(\text{Prob}\{\alpha \}\) denotes the occurrence probability of an event \(\alpha \). The conditional probability of \(\alpha \) given \(\beta \) is denoted by \(\text{Prob}\{\alpha | \beta \}\). \(\mathbb {E}\{x\}\) and \(\mathbb {E}\{x| y\}\) are the expectation of a stochastic variable \(x\) and the expectation of \(x\) conditional on the stochastic variable \(y,\) respectively. \(\varXi (i,j)\) denotes the element in the \(i\)th row and \(j\)th column of a matrix \(\varXi \). \(A=(a_{ij})_{N\times N}\) denotes an \(N\times N\) matrix. The superscript \(T\) denotes the transpose of a matrix. \(C=\mathrm{diag}(c_1,c_2,\ldots ,c_n)\) means that \(C\) is a diagonal matrix with diagonal entries \(c_1,c_2,\ldots ,c_n\).

Before proceeding further, we introduce the following lemmas.

Lemma 1

[40, 41] For any constant matrix \(X\in R^{n\times n}, X=X^T>0,\) two scalars \(h_1>0,h_2\ge 0\) such that the integrations concerned are well defined, then

Lemma 2

[42] For any vectors \(\delta _{1}, \delta _{2}\), constant matrices \(R,S\) and real scalars \(\alpha \ge 0, \beta \ge 0\) satisfying that \(\left[ \begin{array}{ll} R &{} S \\ \star &{} R\end{array} \right] \ge 0\), and \(\alpha +\beta =1\), then the following inequality holds:
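In its commonly cited form, the reciprocally convex bound with the hypotheses of Lemma 2 reads \(\frac{1}{\alpha }\delta _1^TR\delta _1+\frac{1}{\beta }\delta _2^TR\delta _2\ge \left[ \begin{array}{l} \delta _1 \\ \delta _2\end{array} \right] ^T\left[ \begin{array}{ll} R &{} S \\ \star &{} R\end{array} \right] \left[ \begin{array}{l} \delta _1 \\ \delta _2\end{array} \right] \); the exact presentation in [42] may differ. The following Python sketch checks this form numerically on random data. The matrices, the choice \(S=0.5R\) and the restriction to \(\alpha \in (0,1)\) (to avoid division by zero) are assumptions made only for the illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 3

# Hypothetical data: R symmetric positive definite, S chosen so that
# the block matrix [[R, S], [S^T, R]] is positive semi-definite.
M = rng.standard_normal((n, n))
R = M @ M.T + n * np.eye(n)
S = 0.5 * R
block = np.block([[R, S], [S.T, R]])
assert np.linalg.eigvalsh(block).min() >= -1e-9

def gap(alpha, d1, d2):
    """Left-hand side minus right-hand side of the bound; should be >= 0."""
    beta = 1.0 - alpha
    lhs = d1 @ R @ d1 / alpha + d2 @ R @ d2 / beta
    d = np.concatenate([d1, d2])
    rhs = d @ block @ d
    return lhs - rhs

# Evaluate the gap for several weights alpha and random vectors delta_1, delta_2.
gaps = [
    gap(a, rng.standard_normal(n), rng.standard_normal(n))
    for a in np.linspace(0.05, 0.95, 19)
]
```

A nonnegative gap for every sample is consistent with the bound; a numerical check of this kind is of course not a proof.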

3 Problem formulation

Generally, neural network models are described by ordinary differential equations. In the real world, however, diffusion effects cannot be avoided in the neural network model when electrons are moving in an asymmetric electromagnetic field. To account for this phenomenon, we introduce a single neural network with reaction–diffusion terms and time-varying delays as follows:

where \(x=(x_1,x_2,\ldots ,x_q)^T\in \varOmega \subset R^q\), with \(\varOmega =\{x \mid |x_k|\le l_k, k=1,2,\ldots ,q\}\) and \(l_k\) a positive constant. \(d_{mk}\ge 0\) is the transmission diffusion coefficient along the \(m\)th neuron, \(y_m(t,x)\ (m=1,2,\ldots ,n)\) is the state of the \(m\)th unit at time \(t\) and in space \(x\), and \(n\) is the number of neurons. \(f_j(y_j(t,x))\) denotes the neuron activation function of the \(j\)th unit at time \(t\) and in space \(x\). \(c_m>0\ (m=1,2,\ldots ,n)\) represents the rate with which the \(m\)th unit resets its potential to the resting state in isolation when disconnected from the network and external inputs. \(a_{mj}\) and \(b_{mj}\) denote the strength of the \(j\)th unit on the \(m\)th unit at time \(t\) and in space \(x\) and the strength of the \(j\)th unit on the \(m\)th unit at time \(t-d(t)\) and in space \(x\), respectively. \(d(t)\) denotes the time-varying transmission delay along the axon of the \(j\)th unit from the \(m\)th unit and satisfies \(d_1\le d(t)\le d_2, \dot{d}(t)\le \mu ,\) where \(d_2>d_1>0\) and \(\mu \) are real constants. \(J_m\) is the external input.
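To make the roles of these parameters concrete, the following Python sketch performs one explicit finite-difference time step for a model of this type in one space dimension (\(q=1\)). It assumes the usual form \(\partial y_m/\partial t=\partial /\partial x\,(d_{m}\,\partial y_m/\partial x)-c_my_m+\sum _j a_{mj}f_j(y_j(t,x))+\sum _j b_{mj}f_j(y_j(t-d(t),x))+J_m\) with constant diffusion coefficients and zero Dirichlet boundary values. The function `rd_step`, the grid, the step sizes and the matrices `C`, `A`, `B` are hypothetical illustration values, not the paper's data.

```python
import numpy as np

def rd_step(y, y_delayed, dt, dx, D, C, A, B, J, f=np.tanh):
    """One explicit Euler step of a delayed reaction-diffusion network.

    y, y_delayed: arrays of shape (n, M) holding the current and delayed
    states of n neurons on M grid points; the first and last columns are
    boundary points and are kept at zero (Dirichlet condition).
    """
    lap = np.zeros_like(y)
    # Second-order central difference for the spatial second derivative.
    lap[:, 1:-1] = (y[:, 2:] - 2.0 * y[:, 1:-1] + y[:, :-2]) / dx**2
    rhs = (D[:, None] * lap - C[:, None] * y
           + A @ f(y) + B @ f(y_delayed) + J[:, None])
    y_next = y + dt * rhs
    y_next[:, 0] = 0.0   # enforce the boundary condition
    y_next[:, -1] = 0.0
    return y_next

# Hypothetical two-neuron setup on the interval |x| <= 1.
x = np.linspace(-1.0, 1.0, 21)
dx = x[1] - x[0]
D = np.array([0.1, 0.1])
C = np.array([1.0, 1.0])
A = np.array([[2.0, -0.1], [-5.0, 3.0]])
B = np.array([[-1.5, -0.1], [-0.2, -2.5]])
J = np.zeros(2)

y0 = np.vstack([np.cos(np.pi * x / 2), 0.5 * np.cos(np.pi * x / 2)])
y0[:, [0, -1]] = 0.0
y1 = rd_step(y0, y0, dt=1e-3, dx=dx, D=D, C=C, A=A, B=B, J=J)
```

The explicit scheme is chosen only for brevity; it requires a small time step (roughly \(d\,\Delta t/\Delta x^2\le 1/2\)), and a full simulation would also need a history buffer for the delayed state.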

Assumption 1

For any \(u,v \in R,\) the neuron activation function \(g_i(\cdot )\) is continuous and bounded and satisfies

where \(F_i^-\) and \(F_i^+\) are some real constants and may be positive, zero or negative.

System (4) is supplemented with the following Dirichlet boundary condition

Also, system (4) has a unique continuous solution for any initial condition of the form

where \(\phi (s,x)=(\phi _1(s,x),\phi _2(s,x),\ldots ,\phi _n(s,x))^T\in C([-d_2,0]\times \varOmega ,R^n),\) in which \(C([-d_2,0]\times \varOmega ,R^n)\) stands for the Banach space of all continuous functions from \([-d_2,0] \times \varOmega \) to \(R^n\) with the norm

Rewriting system (4) in a compact form, we obtain

where \(y(t,x)=(y_1(t,x),y_2(t,x),\ldots ,y_n(t,x))^T\), \(D_k=\mathrm{diag}(d_{1k},d_{2k},\ldots ,d_{nk})\), \(f(y(t,x))=(f_1(y_1(t,x)),f_2(y_2(t,x)),\ldots ,f_n(y_n(t,x)))^T\), \(C=\mathrm{diag}(c_1,c_2,\ldots ,c_n)\), \(A=(a_{mj})_{n\times n}\), \(B=(b_{mj})_{n\times n}\) and \(J=(J_1,J_2,\ldots ,J_n)^T.\) It is well known that neural networks may exhibit bifurcation, oscillation, divergence or instability for certain choices of the network parameters and time delays. Thus, in order to control the dynamic behavior of system (9), we introduce the controlled model corresponding to system (9) as

where \(u_i(t,x)=(u_{i1}(t,x),u_{i2}(t,x),\ldots ,u_{in}(t,x))^T\in R^n\) is the state vector of the \(i\)th node at time \(t\) and space \(x, U_i(t,x)\) is the controller of the \(i\)th node at time \(t\) and space \(x.\) Define the error vector as \(e_i(t,x)=u_i(t,x)-y(t,x), i=1,2,\ldots ,N.\) Thus, we get the error dynamical system from (9) and (10) as

where \(g(e_i(t,x))=f(u_i(t,x))-f(y(t,x)).\) It is obvious that (11) satisfies the Dirichlet boundary condition and its initial condition is given by

Most controllers in use today are digital or are connected to the plant through a network. Such control systems can be modeled as sampled-data control systems. Thus, the sampled-data control approach has received much attention, and in this paper, controller design using a sampled-data signal with stochastic sampling is investigated. For this purpose, assume the control input to be of the form

where \(K_i\) is the gain matrix, \(t_k\) is the updating instant time and the sampling interval is defined as \(h=t_{k+1}-t_k.\) Under the controller (12), system (11) can be represented as

The sampling period is denoted by \(h\) and is assumed to take \(m\) values such that \(t_{k+1}-t_k = h_p,\) where the integer \(p\) takes values randomly in the set \(\{1,2,\ldots ,m\}.\) The occurrence probability of each sampling period is given by

where \(\beta _p\in [0,1],\ p=1,2,\ldots ,m,\) are known constants such that \(\sum _{p=1}^m \beta _p=1.\) Also, \(0=h_0<h_1<\cdots <h_m.\)
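A sequence of sampling instants with stochastically chosen periods can be generated as in the following Python sketch. The function name `draw_sampling_instants`, the seed and the horizon are hypothetical; the values \(h_1=0.2\), \(h_2=0.4\) and \(\beta _1=0.25\) are those used later in Example 1, with \(\beta _2=0.75\) following from \(\sum _p\beta _p=1\).

```python
import numpy as np

def draw_sampling_instants(periods, probs, t_end, rng):
    """Generate t_0 = 0 < t_1 < ... where each gap t_{k+1} - t_k is drawn
    from `periods` with the given occurrence probabilities `probs`."""
    periods = np.asarray(periods, dtype=float)
    probs = np.asarray(probs, dtype=float)
    if not np.isclose(probs.sum(), 1.0):
        raise ValueError("occurrence probabilities must sum to 1")
    instants = [0.0]
    chosen = []  # zero-based index of the period used for each interval
    while instants[-1] < t_end:
        p = rng.choice(len(periods), p=probs)
        chosen.append(p)
        instants.append(instants[-1] + periods[p])
    return np.array(instants), np.array(chosen)

rng = np.random.default_rng(0)
t_k, p_idx = draw_sampling_instants([0.2, 0.4], [0.25, 0.75], 50.0, rng)
```

Each sampling period is drawn independently of the previous ones here, which is one simple way to realize the stated occurrence probabilities.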

Further, time delays in the control input are often encountered in many real-world problems, which may cause poor performance or instability of the system, and hence, the presence of time delays should be considered in control input. Moreover, it should be mentioned that time delays in the control input may be variable due to the complex disturbance or other conditions. Motivated by this fact, in this paper, we introduce the time-varying delay in the control input, and thus, the controller (12) takes the form

where we write \(t_k=t-(t-t_k)=t-\tau (t).\) Here, \(\tau (t)=t-t_k\) is a piecewise-linear time-varying delay that satisfies \(\dot{\tau }(t)=1\) for \(t_k\le t<t_{k+1}.\) The probability of the time-varying delay is defined as follows:

The stochastic variables \(\alpha _p(t)\,\text{ and }\, \beta _p(t)\) are defined as

The probabilities of these stochastic variables are given by

where \(p=1,2,\ldots ,m\) and \(\sum _{p=1}^m \alpha _p=1.\) Since \(\alpha _p(t)\) satisfies the Bernoulli distribution as reported in [38], we have

Therefore, system (11) with \(m\) sampling intervals can be expressed as

where \(i=1,2,\ldots ,N\) and \(h_{p-1}\le \tau _p(t)<h_p.\)
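The sawtooth delay \(\tau (t)=t-t_k\) and the index \(p\) of the band \(h_{p-1}\le \tau (t)<h_p\) that contains it can be computed as in the following Python sketch. The helper names `sawtooth_delay` and `delay_band` and the sampling instants are hypothetical illustration values.

```python
import numpy as np

def sawtooth_delay(t, sampling_instants):
    """Return tau(t) = t - t_k, where t_k is the latest sampling instant <= t."""
    t = np.asarray(t, dtype=float)
    idx = np.searchsorted(sampling_instants, t, side="right") - 1
    return t - sampling_instants[idx]

def delay_band(tau, h):
    """Return p in {1, ..., m} such that h[p-1] <= tau < h[p],
    where h = [h_0, h_1, ..., h_m] with h_0 = 0."""
    return np.searchsorted(h, tau, side="right")

# Hypothetical sampling instants with periods 0.2, 0.4, 0.2.
t_k = np.array([0.0, 0.2, 0.6, 0.8])
t = np.array([0.1, 0.3, 0.5, 0.7])
tau = sawtooth_delay(t, t_k)
band = delay_band(tau, np.array([0.0, 0.2, 0.4]))
```

At \(t=0.5\) the last update was at \(t_k=0.2\), so \(\tau =0.3\) falls in the second band, while the other three times fall in the first band.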

Definition 1

[38] The error system (17) is said to be mean-square stable if for any \(\epsilon >0\) there exists \(\sigma (\epsilon ) >0\) such that \(\mathbb {E}\{\Vert e_i(t,x)\Vert ^2\}<\epsilon \) for all \(t>0\) whenever \(\mathbb {E}\{\Vert e_i(0,x)\Vert ^2\} < \sigma (\epsilon ).\) In addition, if \(\lim _{t\rightarrow \infty } \mathbb {E}\{\Vert e_i(t,x)\Vert ^2\}=0\) for any initial condition, the system (17) is said to be globally mean-square asymptotically stable.

In the following theorem, asymptotic stability of the error system (17) in the sense of mean square is investigated based on a novel Lyapunov–Krasovskii functional, and sufficient conditions that ensure the system stability are derived by employing some inequality techniques. We establish our main result based on the LMI approach.

For representation convenience, the following notations are introduced:

Theorem 1

For given positive constants \(\alpha _p, \lambda _p, h_p\ (p=1,2,\ldots ,m)\) and \(\mu \), system (17) is globally mean-square asymptotically stable if there exist matrices \(P>0, Q>0, G>0, W>0, H>0, S>0, X>0, \tilde{T}>0, G_1>0, G_2>0, Z_p>0, U_p>0, X_p>0, Y_p>0, M_p>0, S_p>0\ (p=1,2,\ldots ,m),\) symmetric matrices \(T_p>0, W_p>0\ (p=1,2,\ldots ,m),\) diagonal matrices \(\varLambda _{1}>0, \varLambda _{2}>0, \varLambda _{3}>0, \varLambda _{4}>0,\) and any matrices \(\tilde{H}, V_p\ (p=1,2,\ldots ,m)\) with appropriate dimensions satisfying the following LMIs:

where

and other entries of \(\varXi _1, \varXi _2, \varXi _3\) are zero, \(h_0=0\) and \(\alpha _p,Z_p,U_p,\bar{S}_p=0,\,\text{ for }\,p>m.\) Then, the desired gain is given by \(K=G^{-1}\tilde{H}.\)
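Once an LMI solver has returned feasible \(G\) and \(\tilde{H}\), the gain follows from \(K=G^{-1}\tilde{H}\). The Python sketch below shows this recovery step with hypothetical matrices (they are not solutions of the LMIs in Theorem 1); a linear solve is used instead of forming the inverse explicitly, which is generally the better numerical practice.

```python
import numpy as np

# Hypothetical solver output: G symmetric positive definite, H_tilde arbitrary.
G = np.array([[2.0, 0.5],
              [0.5, 1.0]])
H_tilde = np.array([[-4.0, 1.0],
                    [0.0, -3.0]])

# G > 0 guarantees that G is invertible, so the gain is well defined.
assert np.all(np.linalg.eigvalsh(G) > 0)

# Solve G K = H_tilde, i.e. K = G^{-1} H_tilde.
K = np.linalg.solve(G, H_tilde)
```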

Proof

Consider the following discontinuous Lyapunov functional for the error system (17):

where

Obviously, \(V_{10p}(t)\) can be easily computed by Wirtinger’s inequality. Also, since \(V_{10p}(t)\) will disappear at \(t=t_k,\) we obtain \(\lim _{t\rightarrow t_k^-}V(t)\ge V(t_k).\)

Define the infinitesimal operator \(L\) of \(V(t)\) as follows:

It can be derived that

where

Applying Lemma 1 to the last integration term, it follows that

where \(\delta _{1p}(t)=\int _{t-\tau _p(t)}^{t-h_{p-1}} \dot{e}_i(s,x)\mathrm {d}s\) and \(\delta _{2p}(t)=\int _{t-h_p}^{t-\tau _p(t)}\dot{e}_i(s,x) \mathrm {d}s.\) Combining (19) and Lemma 2, we obtain

Substituting (45) into (44) yields

Furthermore,

Consider the following two zero equalities,

where \(T_p\,\text{ and }\,W_p\) are any symmetric matrices. By adding the above zero equalities to the left-hand side of \(LV_8(t),\) we get

Also, we have

In order to obtain \(LV_{10p}(t)\), we do the following to the last integration term of \(V_{10p}(t):\)

The definition of \(\tau _p(t)=t-t_k\) shows that the last integration term of \(V_{10p}(t)\) is

To make full use of the information on the stochastically varying interval delay \(\tau (t)\), the stochastic variables \(\rho _{pr}(t)\,(r\le p=1,2,\ldots ,m)\) are introduced such that

with the probability,

where \(\sum \nolimits _{p=1}^{m} \sum _{r=1}^p \rho _{pr}=1.\) Then, \(V_{10p}(t)\) can be rewritten as the following:

By (51), we can easily get

According to the error system (17), we can have

with \(\tilde{H}=GK_i.\)

For positive diagonal matrices \(\varLambda _{1},\varLambda _{2},\varLambda _{3}\,\text{ and }\,\varLambda _{4}\), we can get from Assumption 1 that

From Eqs. (37)–(48) and adding (53)–(57) to \(LV(t)\), we obtain

where the matrix \(\varPhi \) is defined in Theorem 1 and \(\zeta _i(t,x)=[e_i^T(t,x) e_{im}^T(t,x) e_i^T(t-d_1,x) e_i^T(t-d(t),x) e_i^T(t-d_2,x) \dot{e}_i^T(t,x) g^T(e_i(t,x)) g^T(e_i(t-d_1,x)) g^T(e_i(t-d(t),x)) g^T(e_i(t-d_2,x)) \dot{e}_i^T(t-d_1,x) \int _{t-d_1}^t e_i^T(s,x)\mathrm {d}s \int _{t-d_2}^{t-d_1}e_i^T(s,x)\mathrm {d}s]^T\) with \(e_{im}(t,x) = [e_i^T(t-\tau _1(t),x) e_i^T(t-h_1,x) \int _{t-h_1}^{t-h_0}e_i^T(s,x)\mathrm {d}s \cdots e_i^T(t-\tau _m(t),x) e_i^T(t-h_m,x) \int _{t-h_m}^{t-h_{m-1}}e_i^T(s,x)\mathrm {d}s]^T.\)

By (58) and (18)–(21), we obtain

which together with Definition 1 implies that the error system (17) is globally mean-square asymptotically stable. This completes the proof.

Remark 1

In Theorem 1 taking \(m=2,\) we obtain the matrix \(\varPhi \) as

where

The Lyapunov functional (23) is discontinuous due to the presence of the Lyapunov functional \(V_{10}(t)\). If \(S_p=0\) in (23), the Lyapunov functional becomes continuous and the following can be obtained as a consequence of the above theorem.

Corollary 1

For given positive constants \(\alpha _p, \lambda _p, h_p\ (p=1,2,\ldots ,m)\) and \(\mu \), the system (17) is globally mean-square asymptotically stable if there exist matrices \(P>0, Q>0, G>0, W>0, H>0, S>0, X>0, \tilde{T}>0, G_1>0, G_2>0, Z_p>0, U_p>0, X_p>0, Y_p>0, M_p>0\ (p=1,2,\ldots ,m),\) symmetric matrices \(T_p>0, W_p>0\ (p=1,2,\ldots ,m),\) diagonal matrices \(\varLambda _1>0, \varLambda _2>0, \varLambda _3>0, \varLambda _4>0,\) and any matrices \(\tilde{H}, V_p\ (p=1,2,\ldots ,m)\) with appropriate dimensions such that the LMI (18) with \(S_p=0\ (p=1,2,\ldots ,m)\) and the LMIs (19)–(21) hold. Moreover, the desired control gain matrix is given by \(K_i=G^{-1}\tilde{H}.\)

Proof

The proof of this corollary is similar to that of Theorem 1 without considering the discontinuous Lyapunov functional.

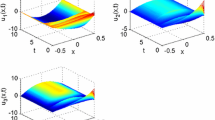

Fig. 1 Chaotic behavior of the states \(y_1(t,x)\) and \(y_2(t,x)\) in system (60)

Remark 2

In this paper, the discontinuous Lyapunov functional approach, which makes full use of the sawtooth characteristic of the sampling input delay, has been employed, and for this purpose, the stochastic variables \(\beta _p(t)\) and \(\rho _{pr}(t)\) are introduced. This is the main significance of the present work. To the best of the authors’ knowledge, this procedure has not yet been applied in the literature to the synchronization of reaction–diffusion neural networks with time-varying delays.

Remark 3

The effects of time delays and diffusion in the real world cannot be avoided in modeling neural networks, and thus, the neural networks with reaction–diffusion terms and time-varying delays have been considered rigorously, and numerous results have been proposed in the existing literature, see for example [17, 22, 25, 26, 43, 44]. In [43], the authors have discussed the synchronization scheme for a class of delayed neural networks with reaction–diffusion terms using inequality techniques and Lyapunov method.

In [44], the problem of asymptotic synchronization for a class of neural networks with reaction–diffusion terms and time-varying delays has been investigated. Very recently, in [26], the synchronization problem for coupled reaction–diffusion neural networks with time-varying delays has been studied and a pinning-impulsive control strategy has been developed. However, the problem of synchronization for reaction–diffusion neural networks with time-varying delays through a sampled-data controller with stochastic sampling has not yet been investigated in the literature. In this paper, a discontinuous Lyapunov approach that uses the sawtooth structure of the sampling input delay has been employed, and a synchronization criterion depending on the lower and upper delay bounds has been derived in terms of LMIs using some inequality techniques. Numerical simulations are provided to illustrate the effectiveness of the proposed synchronization criteria.

Remark 4

The results and the research method of this paper can be readily extended to many other types of neural networks with reaction–diffusion effects and Dirichlet boundary conditions, for example, chaotic continuous-time neural networks [45], recurrent neural networks [46] and fuzzy cellular neural networks [47].

4 Numerical examples

In this section, two numerical examples are provided to demonstrate the effectiveness of the proposed results. For convenience, let us choose the number of sampling periods to be two, i.e., \(m=2\).

Example 1

Consider the following reaction–diffusion neural network with the time-varying delay

where \(y(t,x) = (y_1(t,x),y_2(t,x))^T, \tanh (y(t,x))=(\tanh (y_1(t,x)), \tanh (y_2(t,x)))^T, D\!=\!\mathrm{diag}(0.1,0.1), J=0\) and with

Also the activation function satisfies Assumption 1 with \(F_1^-=F_2^-=0\) and \(F_1^+=F_2^+=0.5.\) Thus,

The neural network (60) exhibits chaotic behavior as shown in Fig. 1. Under the stochastic sampled-data controller \(U\), the system (60) can be rewritten as

Then, the error system can be obtained as

Let \(h_0=0, h_1=0.2, h_2=0.4, \beta _1=0.25\) and the time-varying delay \(d(t)=e^t/(1+e^t).\) With these parameters, using the Matlab LMI toolbox, sufficient conditions in terms of LMIs in Theorem 1 are found to be feasible for the given values of \(d_1=0.5, d_2=1\), and the control gain matrix is obtained as

The simulation results are shown in Fig. 2. Under the stochastic sampled-data controller (14), \(e_1(t,x)\) and \(e_2(t,x)\) are already very close to zero by about \(t=0.5\) and remain there as \(t\) increases, which indicates that the error system (62) is asymptotically stable under the stochastic sampled-data controller (14).
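The delay bounds used in Example 1 can be checked numerically for \(d(t)=e^t/(1+e^t)\), \(t\ge 0\), as in the following Python sketch. The time grid is an arbitrary choice, and the value of \(\mu \) is not stated in the example, so the derivative bound computed here is only an admissible candidate.

```python
import numpy as np

def d(t):
    """Time-varying delay of Example 1: d(t) = e^t / (1 + e^t)."""
    return np.exp(t) / (1.0 + np.exp(t))

def d_dot(t):
    """Derivative of d(t); for this logistic form it equals d(t) * (1 - d(t))."""
    s = d(t)
    return s * (1.0 - s)

t = np.linspace(0.0, 50.0, 5001)
d_min = d(t).min()     # attained at t = 0
d_max = d(t).max()     # approaches 1 as t grows
mu = d_dot(t).max()    # largest slope, attained at t = 0
```

On \(t\ge 0\) the delay increases from \(0.5\) towards \(1\), in agreement with \(d_1=0.5\) and \(d_2=1\), and its derivative never exceeds \(0.25\), so any \(\mu \ge 0.25\) satisfies \(\dot{d}(t)\le \mu \).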

Example 2

Consider the same reaction–diffusion neural network (60) with the time-varying delay and with the parameters

and correspondingly the same error system (62). Obviously, Assumption 1 holds with \(F_1^-=F_2^-=0\) and \(F_1^+=F_2^+=0.5.\) Thus, we have

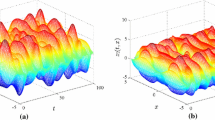

For the aforementioned parameters, system (60) behaves chaotically and it is shown in Fig. 3.

Fig. 3 Chaotic behavior of the states \(y_1(t,x)\) and \(y_2(t,x)\) of system (60)

Let \(h_0=0, h_1=0.2, h_2=0.4\,\text{ and }\,\beta _1=0.8.\) Using the Matlab LMI toolbox, sufficient conditions of Theorem 1 are verified and found to be feasible for the given values of \(d_1=0.7, d_2=1.3.\) In this case, the controller gain is obtained as

Thus, we conclude that the error system (62) is asymptotically stable in the mean-square sense for the aforementioned parameters. The chaotic behavior of system (60) is shown in Fig. 3, and Fig. 4 shows that the error system with the aforementioned gain of the stochastic sampled-data controller (14) is asymptotically stable as time increases.

The numerical simulations verify the effectiveness of the developed stochastic sampled-data controller with \(m\) sampling intervals for the asymptotic synchronization of neural networks with time-varying delays and reaction–diffusion effects.

5 Conclusions

In contrast to existing studies on controller design for the synchronization of reaction–diffusion neural networks with time-varying delays, in this paper, we have used a sampled-data controller with stochastic sampling for the synchronization process. The sampling periods are assumed to be \(m\) in number, and their occurrence probabilities are given constants that follow a Bernoulli distribution. A discontinuous Lyapunov functional with triple integral terms, which captures the information on the upper and lower bounds of the time-varying delays, has been constructed based on the extended Wirtinger’s inequality to make full use of the sawtooth characteristic of the sampling delay. Sufficient conditions have been derived in terms of LMIs and are less conservative due to the use of the discontinuous Lyapunov functional. The feasibility of the obtained LMIs was verified using the Matlab LMI toolbox, and the corresponding results are presented in two numerical examples.

References

Young, S., Scott, P., Nasrabadi, N.: Object recognition using multilayer Hopfield neural network. IEEE Trans. Image Process. 6, 357–372 (1997)

Atencia, M., Joya, G., Sandoval, F.: Dynamical analysis of continuous higher order Hopfield neural networks for combinatorial optimization. Neural Comput. 17, 1802–1819 (2005)

Hopfield, J.: Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Natl. Acad. Sci. USA 81, 3088–3092 (1984)

van den Driessche, P., Zou, X.: Global attractivity in delayed Hopfield neural network models. SIAM J. Appl. Math. 58, 1878–1890 (1998)

Grassi, G., Mascolo, S.: Synchronizing high dimensional chaotic systems via eigenvalue placement in application to cellular neural networks. Int. J. Bifur. Chaos 9, 705–711 (1999)

Ortin, S., Gutierrez, J.M., Pesquera, L., Vasquez, H.: Nonlinear dynamic extraction for time-delay systems using modular neural networks synchronization and prediction. Phys. A 351, 133–141 (2005)

Sanchez, E.N., Ricalde, L.J.: Chaos control and synchronization with input saturation via recurrent neural networks. Neural Netw. 16, 711–717 (2003)

Zhu, Q., Cao, J.: pth moment exponential synchronization for stochastic delayed Cohen–Grossberg neural networks with Markovian switching. Nonlinear Dyn. 67, 829–845 (2012)

Zhu, Q., Cao, J.: Adaptive synchronization under almost every initial data for stochastic neural networks with time-varying delays and distributed delays. Commun. Nonlinear Sci. Numer. Simulat. 16, 2139–2159 (2011)

Cheng, C., Liao, T., Hwang, C.: Exponential synchronization of a class of chaotic neural networks. Chaos Solitons Fract. 24, 197–206 (2005)

Marcus, C.M., Westervelt, R.M.: Stability of analog neural networks with delay. Phys. Rev. A 39, 347–359 (1989)

Civalleri, P., Gilli, M., Pandolfi, L.: On stability of cellular neural networks with delay. IEEE Trans. Circuits Syst. I 40(40), 157–165 (1993)

Cheng, C.J., Liao, T.L., Yang, J.J., Hwang, C.C.: Exponential synchronization of a class of neural networks with time-varying delays. IEEE Trans. Syst. Man Cybern. Part-B 36, 209–215 (2006)

Cui, B.T., Lou, X.Y.: Synchronization of chaotic recurrent neural networks with time-varying delays using nonlinear feedback control. Chaos Solitons Fract. 39, 288–294 (2009)

Gao, X., Zhong, S., Gao, F.: Exponential synchronization of neural networks with time-varying delays. Nonlinear Anal. 71, 2003–2011 (2009)

Wang, L., Ding, W., Chen, D.: Synchronization schemes of a class of fuzzy cellular neural networks based on adaptive control. Phys. Lett. A 374, 1440–1449 (2010)

Lu, J.: Robust global exponential stability for interval reaction–diffusion Hopfield neural networks with distributed delays. IEEE Trans. Circuits Syst. II Expr. Briefs 54, 1115–1119 (2007)

Lu, J., Lu, L.: Global exponential stability and periodicity of reaction–diffusion recurrent neural networks with distributed delays and Dirichlet boundary conditions. Chaos Solitons Fract. 39, 1538–1549 (2009)

Li, X., Cao, J.: Delay-independent exponential stability of stochastic Cohen–Grossberg neural networks with time-varying delays and reaction–diffusion terms. Nonlinear Dyn. 50, 363–371 (2007)

Lu, J.: Global exponential stability and periodicity of reaction–diffusion delayed recurrent neural networks with Dirichlet boundary conditions. Chaos Solitons Fract. 35, 116–125 (2008)

Wang, J., Lu, J.: Global exponential stability of fuzzy cellular neural networks with delays and reaction–diffusion terms. Chaos Solitons Fract. 38, 878–885 (2008)

Hu, C., Jiang, H., Teng, Z.: Impulsive control and synchronization for delayed neural networks with reaction–diffusion terms. IEEE Trans. Neural Netw. 21, 67–81 (2010)

Kashima, K., Ogawa, T., Sakurai, T.: Feedback stabilization of non-uniform spatial pattern in reaction-diffusion systems. In: American Control Conference (ACC), Washington, DC, USA, June 17–19 (2013)

Zhao, A., Xie, C.: Stabilization of coupled linear plant and reaction–diffusion process. J. Franklin Inst. 351, 857–877 (2014)

Wang, K., Teng, Z., Jiang, H.: Adaptive synchronization in an array of linearly coupled neural networks with reaction–diffusion terms and time-delays. Commun. Nonlinear Sci. Numer. Simul. 17, 3866–3875 (2012)

Yang, X., Cao, J., Yang, Z.: Synchronization of coupled reaction–diffusion neural networks with time-varying delays via pinning-impulsive controller. SIAM J. Control Optim. 51, 3486–3510 (2013)

Wu, Z.-G., Park, J.H., Su, H., Chu, J.: Discontinuous Lyapunov functional approach to synchronization of time-delay neural networks using sampled-data. Nonlinear Dyn. 69, 2021–2030 (2012)

Lam, H.: Stabilization of nonlinear systems using sampled-data output-feedback fuzzy controller based on polynomial-fuzzy-model-based control approach. IEEE Trans. Syst. Man Cybern. Part B Cybern. 42, 258–267 (2012)

Zhu, X., Wang, Y.: Stabilization for sampled-data neural-network-based control systems. IEEE Trans. Syst. Man Cybern. Part B Cybern. 41, 210–221 (2011)

Gan, Q., Liang, Y.: Synchronization of chaotic neural networks with time-delay in the leakage term and parametric uncertainties based on sampled-data control. J. Franklin Inst. 349, 1955–1971 (2012)

Jeeva Sathya Theesar, S., Santo, B., Balasubramaniam, P.: Synchronization of chaotic systems under sampled-data control. Nonlinear Dyn. 70, 1977–1987 (2012)

Krishnasamy, R., Balasubramaniam, P.: Stabilisation analysis for switched neutral systems based on sampled-data control. Int. J. Syst. Sci. doi:10.1080/00207721.2013.871368

Wu, Z., Shi, P., Su, H., Chu, J.: Stochastic synchronization of Markovian jump neural networks with time-varying delay using sampled-data. IEEE Trans. Cybern. 43, 1796–1806 (2013)

Lakshmanan, S., Park, J.H., Rakkiyappan, R., Jung, Y.: State estimator for neural networks with sampled data using discontinuous Lyapunov functional approach. Nonlinear Dyn. 73, 509–520 (2013)

Tahara, S., Fujii, T., Yokoyama, T.: Variable sampling quasi multirate deadbeat control method for single phase PWM inverter in low carrier frequency. In: Power Conversion Conference (PCC), pp. 804–809 (2007)

Hu, B., Michel, A.N.: Stability analysis of digital feedback control systems with time-varying sampling periods. Automatica 36, 897–905 (2000)

Sala, A.: Computer control under time-varying sampling period: an LMI gridding approach. Automatica 41, 2077–2082 (2005)

Gao, H., Wu, J., Shi, P.: Robust sampled-data \(H_{\infty }\) control with stochastic sampling. Automatica 45, 1729–1736 (2009)

Lee, T., Park, J., Lee, S., Kwon, O.: Robust synchronization of chaotic systems with randomly occurring uncertainties via stochastic sampled-data control. Int. J. Control 86, 107–119 (2013)

Kwon, O., Lee, S., Park, J., Cha, E.: New approaches on stability criteria for neural networks with interval time-varying delays. Appl. Math. Comput. 218, 9953–9964 (2012)

Tian, J., Zhong, S.: Improved delay-dependent stability criterion for neural networks with time-varying delay. Appl. Math. Comput. 217, 10278–10288 (2011)

Park, P., Ko, J., Jeong, C.: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47, 235–238 (2011)

Wang, Y., Cao, J.: Synchronization of a class of delayed neural networks with reaction–diffusion terms. Phys. Lett. A 369, 201–211 (2007)

Lou, X., Cui, B.: Asymptotic synchronization of a class of neural networks with reaction–diffusion terms and time-varying delays. Comput. Math. Appl. 52, 897–904 (2006)

Balasubramaniam, P., Chandran, R., Jeeva Sathya Theesar, S.: Synchronization of chaotic nonlinear continuous neural networks with time-varying delay. Cogn. Neurodyn. 5, 361–371 (2011)

Balasubramaniam, P., Vembarasan, V.: Synchronization of recurrent neural networks with mixed time-delays via output coupling with delayed feedback. Nonlinear Dyn. 70, 677–691 (2012)

Kalpana, M., Balasubramaniam, P.: Stochastic asymptotical synchronization of chaotic Markovian jumping fuzzy cellular neural networks with mixed delays and the Wiener process based on sampled-data control. Chin. Phys. B 22, 078401 (2013)

Acknowledgments

The work of the first author was supported by NBHM Research Project; Quanxin Zhu’s work was jointly supported by the National Natural Science Foundation of China (61374080), the Natural Science Foundation of Zhejiang Province (LY12F03010), the Natural Science Foundation of Ningbo (2012A610032), and a Project Funded by the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Cite this article

Rakkiyappan, R., Dharani, S. & Zhu, Q. Synchronization of reaction–diffusion neural networks with time-varying delays via stochastic sampled-data controller. Nonlinear Dyn 79, 485–500 (2015). https://doi.org/10.1007/s11071-014-1681-x

DOI: https://doi.org/10.1007/s11071-014-1681-x