Abstract

The main focus of the present study is to develop GEP, ELM, LSSVM, and GMDH soft computing models predicting the scour depth of GCSs enhanced by an energy-based approach and to compare the uncertainty of these developed models. A new definition of energy parameter was employed to achieve more accurate predictions along with a scenario-based approach for input vector selection. The Monte Carlo framework was developed for the uncertainty, reliability, and resiliency analysis of the results. The results confirm the enhancement of the energy-based GEP method for the scour depth prediction in comparison with the other models. The results of robustness evaluations show the lowest uncertainty value of GEP with reliability 52.63% and resiliency 67.57% in comparison with LSSVM (reliability = 35.53%, resiliency = 40%), ELM (reliability = 34.2%, resiliency = 29.4%), and GMDH (reliability = 26.3%, resiliency = 24.6%) in the testing phase. In addition to the simplicity of the extracted equation and adaptability to a wide range of laboratory and field data, the uncertainty and robustness analysis indicate that the proposed model is more efficient than the existing equations and artificial intelligence models such as ELM, GMDH, and LSSVM in predicting the scour depth. It can be concluded that the GEP is more reliable and resilient than the other methods. The developed uncertainty analysis framework in this paper is a new approach reliably predicting the scour depth and extracting an equation that can be combined with mathematical modeling of sediment transport and scour hole geometry predictions or real-case modeling of scour around hydraulic structures.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

The high velocity jet over dam spillways can create deep scour holes and riverbed degradation downstream of the spillways in alluvial beds (Roushangar et al. 2016; Sattar et al. 2017). Scouring is the removal of sediments around the hydraulic structure in an alluvial stream. Often, it is the structure that changes the flow pattern around it in a way that it reinforces sediment transport and thereby initiates scouring (Aghaee-Shalmani and Hakimzadeh 2015) where it can eventually cause damage and destroy hydraulic structures (Ebtehaj et al. 2018; Regazzoni and Marot 2011). Grade-control structures are structures made of sand, stone, wood, concrete, or other materials with the purpose of limiting erosion in riverbeds and downstream of dam spillways (Guven and Gunal 2008). These structures are commonly used to manage flood streams and prevent bed erosion and sediment transport (Galia et al. 2016). Therefore, local scour modeling is an important topic in river hydrodynamics, preventing river bed degradation and protecting the integrity of grade-control structures (Laucelli and Giustolisi 2011; Riahi-Madvar et al. 2019a, b).

During the past five decades, multiple traditional and statistical equations have been developed based on experimental and field data to predict the scour depth (Hooshyaripor et al. 2014; Riahi-Madvar et al. 2019a). Although empirical equations can be used easily, they often overestimate or underestimate the scour depth in validation phase (Roushangar et al. 2016). Hence, several soft computing models were developed using effective parameters including upstream water head, weir height, tail water level, bed particle grain-size distribution, and particle density (Roushangar et al. 2016; Sattar et al. 2017; Eghbalzadeh et al. 2018; Sharafati et al. 2019). Ebtehaj et al. (2015) applied group method of data handling (GMDH) to predict the discharge coefficient of spillways. Mehri et al. (2019) also used GMDH to predict the discharge coefficient of a piano key weir. Najafzadeh et al. (2015) used this method to estimate the scour depth around bridge abutments. In these studies, the superiority of GMDH over artificial neural networks (ANNs) and nonlinear regression equations was confirmed.

Gene expression programming (GEP) is another type of soft computing models frequently applied in hydraulic engineering (Guven and Gunal 2008; Najafzadeh and Barani 2011; Azamathulla 2012; Alavi et al. 2011; Moussa 2013; Mesbahi et al. 2016; Riahi-Madvar et al. 2019b and 2021b).

Least-squares support vector machine (LSSVM) is another form of expert system models that have been extensively used for predicting the scour depth of various hydraulic structures (Etemad-Shahidi and Ghaemi 2011; Pal et al. 2011; Chou and Pham 2014, 2017; Najafzadeh et al. 2016; Pourzangbar et al. 2017; Goel and Pal 2009; Barzegar et al. 2019; Chaucharda et al. 2004; Samui and Kothari 2011; Lu et al. 2016; Wang et al. 2017; Han et al. 2019; Hoang 2019; Seifi and Riahi 2020).

Among ANN-based models, the extreme learning machine (ELM) is a novel model (Nourani et al. 2017) which has been employed in different sciences due to high performance and a creative design (Huang et al. 2012; Sahoo et al. 2013; Abdullah et al. 2015; Yaseen et al. 2016; Ebtehaj et al. 2018). Applying ELM model can reduce the training time using the single-layer feed-forward neural networks and random selection of parameters (Huang et al. 2004; Liang et al. 2006; Zhao et al. 2018; Imani et al. 2018; Yousif et al. 2019).

Reviewing the recent studies on predicting the scour depth states a focus on artificial intelligence (AI) evaluations on training and testing datasets. The rigorous study by Scurlock et al. (2012) declared that in most AI approaches, the data used in model developments were not satisfactory to provide reliable predictions of the scour depth, and model errors could reach 300%. Limited training and test datasets can restrict the cross-validation of GCS scour depth prediction model results during the model development process causing a failure to capture the complex relationship between the key factors.

Another major disadvantage of these models originates from the selection of input parameters. The scour depth downstream of GCSs can be predicted based on different parameters including the height of the structure, depth of flow, grain size, tailwater depth and etc. (D’Agostino and Ferro 2004). Pan et al. (2013) showed the importance of the flow energy in the scouring process and its applicability for the scour depth prediction. Regazzoni and Marot (2011) formulated a new erosion resistance index using energy exchange between fluid and sediment. Pagliara et al. (2015) reported that the energy method is reasonably accurate for predicting the state of scour. Based on previous studies survey, the energy-based approach has not yet been integrated with AI models to estimate scour depth downstream of GCSs. Also, the reliability and resilience analysis of developed models over validation data has not been performed.

Another novel contribution in scour modeling downstream of the GCSs is the uncertainty analysis of AI model results. All engineering design processes have uncertainty, and it is not possible to accurately estimate the values of model parameters (Seifi et al. 2020a; Riahi-Madvar and Seifi 2018). Hence, the uncertainty is considered as a part of the design in reliability analysis (Johnson 1992; Alimohammadi et al., 2019 and 2020). The scale differences and lack of data measurement in real-scale analysis can cause uncertainty (D’Agostino and Ferro 2004; Azmathullah et al. 2005; Seifi et al. 2020b). The scour equations formulated in previous studies were simple, and they did not investigate the uncertainty of parameters (Khalid et al. 2019; Muzzammil and Siddiqui 2009). It was assumed that all model parameters were known as certain quantities, while the hydraulic parameters such as depth and flow are stochastic in nature, and equations based on these parameters are also stochastic and uncertain. Therefore, the scour phenomenon and models have uncertainty, and a probabilistic method is required to analyze the uncertainty of model results. So far, the scouring process has not been accurately modeled due to the existing uncertainty of the parameters, unknown physics of the phenomenon, and measurement errors (Yanmaz and Cicekdag 2001; Galia et al. 2016; Lenzi and Comiti 2003). The Monte Carlo method has been extensively used in different study areas to investigate the uncertainty of different AI models (Seifi et al. 2020a, b a,b; Riahi-Madvar et al. 2011; Brandimarte et al. 2006; Sharafati et al. 2020; Gholami et al. 2018).

The present study aims to apply a new approach to improve major shortcomings of scour modeling at GCSs including biased input variables, explicit AI-based predictive equations, and lack of quantification of uncertainty, reliability, and resilience of models developed in previous studies. The current study discusses the effect of energy as a reasonable input variable, a parameter rarely considered in the prediction of the scour state downstream of GCSs. Furthermore, the uncertainty, reliability, and resilience analysis of developed models for GCSs scour prediction are other major drawbacks of the traditional models that are addressed here. To achieve these goals, the performance of GEP, ELM, LSSVM, and GMDH models developed to predict the scour depth downstream of GCSs is compared using the existing field and laboratory data with an energy-based approach, and validation data are used to evaluate the results.

Materials and methods

Datasets

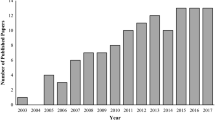

Development of expert system models was performed using 318 datasets, including 276 laboratory datasets (Veronese 1937; Bormann and Julien 1991; D’Agostino 1994; Mossa 1998; Lenzi et al. 2000; Ben Meftah and Mossa 2020) and 42 the Missiga stream field data (data pertaining to (Falciai and Giacomin 1978; D’Agostino and Ferro 2004)) (Table 1). The 13 datasets of Lenzi et al. (2000) were used for cross-validating, reliability, and resilience analysis of the developed models over validation data. From the first dataset (318 datasets), 75% (229 datasets; randomly chosen) were used to train the models and 25% (76 datasets) were used to test the models.

Identification of influencing parameters on the scour depth

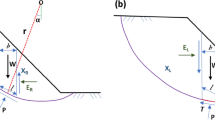

Reviewing previous studies implies that the maximum scour depth of a grade-control structure caused by the erosive power of flow is a function of geometry, hydraulic, and bed properties as expressed in the following functional form.

where s denotes the scour depth, Z is the height of the grade control structure, B is the width of the structure, b is the width of the spillway, H is the difference between the depth of water in the upstream and downstream, h is the depth at the downstream, Q is the water discharge, ρs is the sediment density, ρ is the water density, g is the gravitational acceleration, d90 is the diameter of the particle larger than 90% of the weight of all particles, and d50 is the diameter of the particle larger than 50% of the weight of all particles (Zheng et al. 2021). The box plots of the effective parameters on scour depth, based on the collected datasets in this study, are presented in Fig. 1. The box plot summarizes the distribution of the measured parameters and indicates the skewness, center, spread, and the presence of extreme outlier values in the used datasets.

Previous studies have shown that using dimensionless parameters produces better results than the dimensional parameters (Azamathulla et al. 2005; Guven 2011; Najafzadeh et al. 2014; Mesbahi et al. 2016). Therefore, the Buckingham π theorem is used to obtain dimensionless parameters (Zadehmohamad and Bolouri Bazaz 2017) affecting the scour depth. Using Z, Q, and ρ as main repetitious parameters, the dimensionless parameters of the phenomena can be derived as follows:

By combining the above parameters, and rearranging the dimensionless numbers, new parameters can be obtained as follows:

D’Agostino and Ferro (2004), Najafzadeh (2015) used incomplete self-similarity theory and reported that the dimensionless parameter A50 had a significant effect on scour process. This parameter is defined as follows:

and the functional form of scour depth is rewritten as:

As discussed before, Pagliara et al. (2015) and Pan et al. (2013) used the energy parameter in scour analysis. Therefore, here, the Buckingham π theorem is used to extract the parameter. \({\pi }_{10}=\frac{E}{Z}\) The flow energy (E) at GCS is defined by Eq. (6):

In which, the \({h}_{0}=H+h-Z\), and u is the flow velocity.

The scenario-based approach for the selection of input vectors

In order to investigate the effects of different input parameter configurations and evaluate the sensitivity of results of the developed expert system models to these configurations, a scenario-based approach is applied for the selection of input vectors (Seyedian et al. 2014). The seven scenarios listed in Fig. 2 are created, and a parameter is eliminated in each scenario in order to investigate the effect of various input parameters on the model output. In the first scenario, all parameters in Eq. 5 except energy, including \(\frac{b}{B}, \frac{h}{H}, \frac{{d}_{90}}{{d}_{50}}, {A}_{50}, \frac{b}{Z}\), are used as input vector to the models for estimating the scour depth, and \(\frac{s}{Z}\) is the output. In the second scenario, the parameter \(\frac{b}{B}\) is eliminated from the first scenario, and this procedure is repeated for all of the scenarios. As discussed, energy is a significant parameter in scour studies. Given the importance of this parameter, scenario 7 is developed combining the first scenario and the energy parameter as a new dimensionless parameter. To this end, four expert system models of GEP, GMDH, ELM, and LSSVM were trained, tested, and validated using these seven scenarios.

The soft computing models

GEP

Genetic programming (GP) was first introduced by Cramer (1985), expanded by Koza (1992), and developed by Ferreira (Ferreira 2001). Gene Expression Programming (GEP) is a variant of the genetic algorithm (GA) and belongs to the family of evolutionary algorithms based on Darwin theory. The main difference between these algorithms is the nature of the population members. In GA, the population members are strings (chromosomes) of a fixed length. In GP, entities with different shapes and sizes are non-linear individuals for parse trees, and in GEP, individuals are encoded as a linear string of fixed length expressed as non-linear entities of different shapes and sizes (Guven and Gunal 2008). In general, GEP is an automated technique for finding the solutions to a problem through computer programming. Unlike other methods, it can automatically select the inputs having the greatest impact on the model.

GMDH

The multi-objective neural network form of group method of data handling (GMDH) was developed as a multivariate analysis method for capturing, modeling, and predicting the behavior of complex systems without detailed expert knowledge about their mechanisms (Ivakhnenko 1971). The main purpose of this algorithm is to predict the structure of a complex model based on inherent knowledge of the phenomena represented in the data, rather than the trial–error and priority of the user (Farlow 1981). The relationship of input and output data can be estimated using the Volterra-Kolmogorov-Gabor series (Ivakhnenko 1971) as follows:

where Yi represents the output, xi, xj, …, xk are the inputs, a, bi, cij, dij…k are the polynomial coefficients, and M is the number of independent variables (Najafzadeh et al. 2015; Dargahi-Zarandi et al. 2017).

ELM

Extreme learning machine (ELM) is a fast-learning algorithm with a high-level generalization function belonging to the family of single-layer feed-forward neural networks (SLFN), which was first introduced by Huang et al. (2006; 2012). The learning process in ELM is faster, more generalizable, robust, and accurate than the traditional algorithms such as back-propagation–based artificial neural network (BPANN) (Huang et al. 2004; Imani et al. 2018; Mahmoud et al. 2018). The SLFN function defines as (Huang et al. 2006; Liang et al. 2006):

where ai and bi are ELM learning parameters,\(G\left({a}_{i},{b}_{i},x\right)\) is the output of the ith node based on the input x, and \({\beta }_{i}\) is the weight matrix relating the ith hidden node to the output node. The additive hidden node with G (x): R → R as the activation function (e.g., radial basis) is presented as follows (Huang et al. 2006):

For N optional samples, xi = n × 1 and ti = m × 1 respectively show the input and output vectors, where \(\left({x}_{i},{t}_{i}\right) \epsilon {R}^{n}\times {R}^{m}\). An SLFN with L hidden nodes estimates the N samples with a negligible error as follows:

LSSVM

The least-squares support vector machine (LSSVM) is a variant of support vector machine (SVM) proposed by Vapnik (1995), which was developed based on the statistical learning theory and the structural risk minimization in conjunction with the least-squares error minimization (Suykens et al. 2002). As xi and yi respectively show the input and output datasets with \(i=\mathrm{1,2},3,\dots n, {x}_{I},{ y}_{i}\in {R}^{N}\), the non-linear LSSVM function is expressed as follows:

where W represents the weight vector, \(\varnothing \left(X\right)\) is a function mapping X to an infinite dimensional feature vector, and b shows the bias term. The regression function in LSSVM can be expressed as an optimization problem:

With the constraints as

where \(\gamma\) is a regularization parameter used for adjusting the penalties for errors, and ei shows the regression error (Chaucharda et al. 2004; Barzegar et al. 2019; Cimen 2008; Misra et al. 2009; Kumar and Kar 2009; Kakaei Lafdani et al. 2013; Nourani et al. 2015; Zounemat-Kermani et al. 2016). The parameters used in the LSSVM model are presented in Table 2.

Existing equations predicting the scour depth

The most well-known existing equations for scour depth prediction are presented in Table 3. Six equations are regression-based, and the other two are AI-based. D’Agostino and Ferro (2004) proposed an equation for predicting the scour depth using experimental and field data using regression analysis. Laucelli and Giustolisi (2011) developed 14 equations using 312 experimental and field data applying multi-objective evolutionary paradigms where the two most accurate equations are provided in Table 3. Guven (2011) proposed two equations using the multi-output descriptive neural network (DNN) and regression analysis. He used Bormann and Julien (1991) 82 datasets derived from large-scale experiments to train and test his model. In these equations, \(\mathrm{F}\_\mathrm{rd}\) is defined as \({F}_{rd}=\frac{q}{{\left[bZ\times \frac{\left({\rho }_{s}-\rho \right)}{{\rho }_{s}}\times g{d}_{50}\right]}^{0.5}}\).

Sattar et al. (2017) developed three GEP models using 265 large-scale datasets, and their best equations are used for comparison in this study. Ben Meftah and Mossa (2020) performed 32 experiments and proposed Eq. (21) for predicting the scour depth, where λ represents the downstream face angle of the GCS, and \({Fr}_{sd}=\frac{q}{{h}_{0}\sqrt{\left(\frac{{\rho }_{s}-\rho }{\rho }\right)g{d}_{50}}}\).

The developed Monte-Carlo framework for uncertainty, reliability, and resiliency analysis

In this study, to evaluate the uncertainty in the AI model results, the models are hybridized with the non-parametric Monte Carlo simulation (MCS). Based on the MCS results, the probability distribution of scour dimension is determined and applied for uncertainty quantification considering the Hsc as a structure of the simulator model for scour hole of GCS, the gs as the input vector of parameters with the initial value of gso, and Uq as the main source of uncertainty generation of AI models. The scour depth (S) can be derived in the following functional form (Riahi-Madvar et al. 2021a):

where \(\varepsilon\) is the error defined as the difference between the observed scour depth (S) and the AI model predicted scour depth (\(\widehat{S}\)). In this way, the AI model uncertainties resulting from AI model regulatory parameters, architecture, and data clustering in the training phase can be written as

where \(U{q}_{i}\) is the adaptive parameters of AI model, \({\widehat{S}}_{i}\) is the calculated scour depth by AI model in the ith run of MCS, and n is the number of MCS in AI model training. The results of AI model over the MCS are quantified by the prediction interval (PI) as:

where \(\widehat{h}\left(p\right)\) is the pth quantile in the ith MCS, wi is the likelihood weight of the results at ith training trial in MCS. The upper and lower limits are calculated by:

where \(\overline{{S }_{j}}\) is the mean of AI model results over the MCS and \(\sigma\) j is the variance, v is the degree of freedom, and tv is the threshold of empirical cumulative distribution function of AI model outputs from MCS runs. The prediction intervals are determined by:

where the PIu and PIl are the upper and lower limits of AI model results, and \({{\widehat{S}}_{i}}^{opt}\) is the prediction of optimal AI model. The confidence interval of the AI results for uncertainty quantification is determined using the 2.5th and 97.5th percentiles of 1000 MCS run:

The reliable model is the one resulted from 100% of data bracketed by 95PPU. The desirable value of 95PPU is greater than 80%. The desirable d-factor value is less than one. Furthermore, two metrics are used to quantify the reliability and resilience of AI models for the prediction of sour depth downstream of GCS. The first metric is the reliability analysis evaluating the overall consistency calculated by the value of random error from the simulation model. High reliability occurs when a model produces similar results under consistent conditions.

where the \({k}_{i}\) is calculated based on the relative average error (RAE) values of predictions and the threshold value of RAE, if RAEi ≤ α then ki = 1; otherwise, ki = 0. The RAE is calculated as

In which the \({S}_{ro,i}\) is the measured value, \({S}_{rp,i}\) is the estimated model output, and n is the number of data. The second index is the resilience evaluation index regarding predicted S values over observed values to withstand stressors, adapt, and rapidly recover from disruptions of the estimations. The higher value of resiliency confirmed the higher levels of the robustness of the predicted values to noise and is calculated as:

In which, the ri is the total cases in which the simulation had the possibility of recovering from an inaccurate prediction to an accurate forecast. Also, four criteria are used in order to evaluate the expert systems and identify and select the most critical parameters for the scour depth prediction. The coefficient of determination (R2), root mean square error (RMSE), mean bias error (MBE) (Seyedian and Rouhani 2015), and Akaike information criterion (AIC) are used as presented in Eqs. (32–35). The R2 values closer to one represent better performance and more accurate predictions. The MBE index shows the extent of skewness (bias) in predictions, and ideally, its value should be zero. Positive and negative MBE values show that the model tends to overestimate or underestimate, respectively. Both RMSE and MBE represent the predictive error of the models. The closer the RMSE and MBE values are to zero, the higher the accuracy of the model and the closer predictions are to observations. In the ideal case where all predicted and observed data are equal, the above indicators will be MBE = 0, RMSE = 0, and R2 = 1:

In these equations, P denotes predicted values, O denotes observed values, \(\overline{O }\) is the mean of observed values, n is the number of data, and K represents the total number of model parameters including all constant and variable parameters.

Results and discussion

Scenario-based evaluation of expert systems

MBE, RMSE, and R2 values of the expert model results of the testing and training phases of seven scenarios are presented in Table 4. The scatter plots of consistency between predicted and observed scour depths of different scenarios of the testing phase are displayed in Fig. 3. As the first scenario, some points pertaining to the GEP model are below the 1:1 line, but the points of this model are generally less dispersed than the predictions of ELM, GMDH, and LSSVM models (Fig. 3a). The results in Table 4 show that the GEP model has the highest coefficient of determination (R2 = 0.89) and the lowest error (RMSE = 0.95) among the other expert models in the testing phase. There is no difference between the coefficient of determination and error of the ELM and GEP models (RMSE = 1.02, R2 = 0.86). For the GMDH model, most of the points seem to be close to the 1:1 line, but according to the coefficient of determination, the points have a slightly higher scattering than those of GEP and ELM models.

As the second scenario is shown in Fig. 3b, a small number of points of the GEP model lay outside the ± 25% range, and the model has mainly underestimated the scour depth (MBE = − 0.14). In this scenario, the ELM model has a lower bias than other models (MBE = − 0.01) and has almost the same coefficient of determination and error as the GEP model. According to Fig. 3b and Table 4, the GMDH model underestimates the scour depth (MBE = − 0.16) with about 15% higher error than the ELM model.

As the third scenario, GEP and the ELM models perform almost the same in terms of error and scattering. Compared to GEP and ELM, GMDH model predictions show a higher scattering, and its coefficient of determination is 20% lower than the others while its error is 30% higher.

As the fourth scenario, most of the GMDH model predictions are located below the 1:1 line, which reflects the fact that the model significantly underestimates the scour depth. As the fifth scenario, the GMDH model fairly underestimates and the LSSVM model significantly overestimates the scour depth.

As the sixth scenario, the GEP and ELM models perform with a similar rate of error (RMSE = 1.03), and in comparison, the GMDH and LSSVM models show a higher error and lower coefficient of determination. The scour depth predictions by the GEP and ELM models are almost the same and lay within the ± 25% range. The errors of the two models are mostly in the form of underestimation.

As the seventh scenario using the energy parameter as a new effective parameter, the GEP model shows the lowest error (RMSE = 0.88, MBE = − 0.12) and highest coefficient of determination (R2 = 0.92), and most of its points lay within the ± 25% range. The ELM model shows 10% higher error than the GEP model. Moreover, all the models generally underestimate the scour depth.

In all scenarios, as the results are shown in Table 4 and Fig. 3, the LSSVM model shows the lowest prediction accuracy, and most of the scour depth predictions of the GEP model perform reasonably, as compared to the other three models.

The GEP model estimates better results based on scenarios 1 (GEP-1) and 7 (GEP-7) among the evaluated models. The GEP equations of these best scenarios as the final predictive equations of scour depth are derived as follows:

Input parameter significance

The importance of input parameters in each model was assessed by removing them one at a time from the first scenario. The results of the sensitivity analysis of the evaluated expert system models are presented in Table 4. Figure 4 displays the results of the model’s performance based on the scenario-based evaluations.

Based on the results in Fig. 4, in the first scenario, where all five parameters were included, the GEP model performance results a lower error and higher coefficient of determination than the other scenarios. In the second scenario, with the removal of b/B from the first scenario, the error somewhat increases, and the coefficient of determination decreases. In the third scenario, the error of the GEP model is higher and its coefficient of determination is lower than the first scenario. In the fourth scenario, with the removal of d90/d50, the error increases, but the coefficient of determination remains similar to the first scenario. In the fifth scenario, the error is significantly higher than the first scenario \(\left(\frac{RMSE 5}{RMSE 1}=\frac{1.29}{0.95}\right)\), and the coefficient of determination is significantly lower (\(\frac{{R}^{2} 5}{{R}^{2} 1}=\frac{0.75}{0.89}\)). The results of this scenario show that removal of A50 in the fifth scenario has a great impact on the accuracy of scour depth predictions of GCS and proves its significance. In the sixth scenario, with the removal of b/z, the error increases, but the coefficient of determination also increases by about 0.1 (all compared to the first scenario). In the seventh scenario, the addition of the parameter Z/E decreases the error from 0.95 (in the first scenario) to 0.88 and decreases the MBE from − 0.16 to − 0.12. In conclusion, these results show that using the energy parameter in the GEP model can improve both RMSE and MBE.

As the GMDH, LSSVM, and ELM models, the highest accuracy of scour depth prediction results from the first scenario, and the highest error and lowest coefficient of determination are seen for the fifth scenario, where the parameter A50 is removed. The addition of the energy parameter for the seventh scenario decreases the RMSE of the GMDH and ELM models from respectively 1.06 and 1.02 (the first scenario) to 0.99 and 0.97 and increases their coefficient of determination from 0.84 and 0.86 (the first scenario) to 0.86 and 0.88, respectively. The LSSVM model shows almost the same error and coefficient of determination for the first and fifth scenarios (R2 = 0.71).

In general, all of the developed expert system models exhibit the highest error and the lowest correlation for scenario 5, where A50 is removed from calculations. Therefore, it can be concluded that A50 is the most significant parameter for predicting the scour depth. This conclusion is consistent with the results of Sattar et al. (2017) and Guven (2011). A sensitivity analysis performed by Guven (2011) showed that removing the parameter d90/d50 significantly decreases the accuracy of the scour prediction. This is inconsistent with the finding of the present study that the A50 is the most significant parameter. A study by Tavakolizadeh and Kashefipour (2008) reported that all of the b/B, A50, h/H, b/z, and d90/d50 parameters were significant for scour depth prediction, which partially supports the results of the present study. Najafzadeh (2015) also found the b/B to be the most effective parameter in the prediction of scour depth.

In all scenarios, the lowest error and the highest coefficient of determination values are provided by the GEP as the most accurate model followed by the ELM and GMDH models. The highest error and the lowest coefficient of determination result from the LSSVM model predictions. Based on the results of the present study, the GEP model produces more accurate predictions of scour depth than other models. This is consistent with the findings of Mesbahi et al. (2016) which shows that the GEP model is the best approach for predicting scour downstream of structures, as well as the results of Moussa (2013) which reported reasonable performance of the GEP model predicting the scour depth downstream of hydraulic structures. Furthermore, based on the findings of this study, the ELM model performs better than the LSSVM model which is consistent with the results of Nourani et al. (2017). The results of Huang et al. (2012) also showed the superiority of the ELM in comparison to LSSVM in regression and classification applications. Ebtehaj et al. (2018) also reported that ELM provides significantly better results than the regression-based models predicting the scour depth around bridge piers.

Uncertainty, reliability, and resiliency analysis results

A three-aspect comparison is established for each model while being compared with the observed dataset (indicated by the actual label in the horizontal axis) and is depicted in Fig. 5 as a Taylor diagram. Taylor diagram summarizes evaluation indices of several model results. In the Taylor diagram, the performance of the models is visually displayed on the polar diagram by comparing the predicted values with the actual ones (Riahi-Madvar et al. 2019b; zhu et al. 2019). The reference point denotes the observation values located on the horizontal axis (standard deviation). Also, the azimuth angle of the correlation coefficient diagram indicates the actual and predicted values. Besides, the radial distance from the reference point describes the normalized standard deviation of the predicted values from the actual ones. Each point in this diagram shows the accuracy of each model, and the closer the model is to the reference point, the more accurate it is. The results of this study show the minimum RMSD value of 0.88 in the testing phase, which is related to the GEP model. The GEP also displays the highest correlation coefficient compared to the other three models. As shown by Fig. 5, GEP is the best and closest model to the reference point.

Monte Carlo simulation is employed to determine the uncertainty of the modeling process. In this method, the input parameters are described using a probability distribution, and based on this distribution, a single set of input data is randomly generated. Multiple simulations (typically 1000) must be performed so that the results would not influence the probability distribution of the output variable. Here, the uncertainty and robustness of the proposed model (GEP-7) are investigated. Moreover, the quality of fitted models is evaluated using three indices of 95PPU, reliability, and resiliency. The evaluation results are reported in Table 5.

According to Table 5, the uncertainty of the models at the training phase does not significantly differ from each other. However, in the testing phase, the GEP model with 95PPU = 62 is slightly better than LSSVM (95PPU = 64), ELM (95PPU = 65), and GMDH (95PPU = 65). The results indicate that the scour depths predicted by GEP are more reliable (56.96%) and resilient (57.58%) than LSSVM (46.29 and 45.53), ELM (26.96 and 25.60), and GMDH (28.70 and 27.44) models. Furthermore, the results associated with the testing phase are similar to the training, and GEP with reliability of 52.63 and resiliency of 67.57 has a better rating than the other three. In order to perform a more comprehensive assessment of the GEP-7 model, two criteria of confidence bounds (95PPU) and d-factor are used in the training and testing phases. The 95PPU criterion represents the percentage of data fitting in 95% confidence bounds. In this section, the uncertainty analysis of the GEP-7 model is quantified in the training and testing phases using confidence intervals. 95% confidence intervals (95PPU) are determined by calculating the 2.5th and 97.5th percentiles of the cumulative probability distribution function. The d-factor indicates the confidence bounds width, and theoretically the best value for this criterion is zero, and the higher the d-factor is, the greater the uncertainty produced. As the d-factor value is higher, a large amount of data will fall into confidence bounds, and this shows the two above-mentioned criteria complement each other. However, the proposed model would not be useful due to the high uncertainty, and the best result could be achieved when 100% of the predictions were within 95PPU. As mentioned before, to calculate the uncertainty of the GEP model, 1000 simulation runs are performed with Monte Carlo method. The model uncertainty would be lower with narrower confidence bounds and larger percentage of observation data within the 95PPU range. The best model is the one with minimum difference between the lower and upper bands and the highest percentage of observation data within 95PPU.

The values of d-factor and 95% confidence intervals associated with scour depth predictions of the best model (GEP-7) in the training and testing phases are presented in Fig. 6, and the values of 95PPU are 69% and 62%, respectively. The proposed model is acceptable if more than 80% of the observation data would fall within the 95PPU bound. However, a value of 50% can be acceptable as the measurement data endured an imperfect quality (Abbaspour et al. 2007). The values of d-factor are obtained as 0.25 and 0.4 for the training and testing phases, respectively. Xue et al (2014) and Abbaspour et al. (2007) reported that the d-factor value of less than 1 would be desirable. Lastly, due to the narrowness of confidence bounds of the training and testing phases (d-factor = 0.25, 0.40) as well as the 95PPU values, the GEP-7 model achieves an acceptable uncertainty in both phases.

Comparison of GEP with existing equations

In order to compare the results of best scenarios (1, 7) with the existing equations shown in Table 3, the dataset of Lenzi et al. (2000) is applied as validation data where this data is not used in model training and testing phases. The existing equations are classified into regression-based equations (Eqs. (14–18, 21)) and AI-based equations (Eqs. (19 and 20)). The results of these comparisons are presented in Table 6. The RMSE values of Yen (1987), Guven (2011), and D’Agostino and Ferro (2004) were 3.77, 9.81, and 2.32, respectively, and their MBE values were 3.33, 2.85, and 1.99 respectively. Comparison of these values with the developed GEP model results indicates very low accuracy of these equations and significant overestimation of the scour depth.

The AIC index is used for a fair comparison of different equations with different complexities. AIC evaluates different equations using the model parameters. The AIC value increases when increasing the number of parameters. Therefore, AIC guarantees a fair comparison between simple equations with few parameters and complex equations with a large number of parameters. In general, a low AIC value indicates the higher efficiency of a model. For instance, RMSE and MBE of Laucelli and Giustolisi (2011) were slightly smaller than those of the Laucelli and Giustolisi (2011). Based on these criteria, Eq. (26) would achieve the better equation title. However, AIC indicates a better estimation of the scour depth by the Laucelli and Giustolisi (2011) because of its lower level of complexity and smaller number of parameters.

GEP-7 and GEP-1 models with an AIC value of − 31.65 and − 13.10, respectively, confirm the best performance predicting the scour depth, followed by those proposed by Sattar et al. (2017) and Guven (2011). Guven (2011) showed the highest AIC value. The equations proposed by Yen (1987) and D’Agostino and Ferro (2004) show the lowest performance predicting the scour depth.

Figure 7 displays the s/z values estimated by various equations in validation dataset. As evidently seen, the Guven (2011) overestimates some of the scour depth significantly. The equation proposed by Yen (1987) overestimates mostly and predicts the scour depth 3–5 times higher than the observed scour depth. Given the large errors of the equations proposed by Yen (1987), Guven (2011), and D’Agostino and Ferro (2004), their results are not displayed in the scatter plot (Fig. 7). The scour depths estimated by Guven (2011) are more scattered than other equations, and the range of predicted s/z is greater than 1. For instance, the observed values of 1.75 and 1.78 for s/z are predicted as 1.97 and 2.61, respectively. The corresponding values for 1.22 and 1.29 are 1.18 and 0.78, respectively.

Based on the results in Fig. 7, all values predicted by Ben Meftah and Mossa (2020) are equal or below the − 25% line, indicating a significant underestimation. The error of this equation increases in higher s/z values. Unlike Ben Meftah and Mossa (2020), Laucelli and Giustolisi (2011) overestimate the scour depth two times higher than the observed depth in most points with the MBE value of 0.93. Nearly all points predicted by Laucelli and Giustolisi (2011) are equal or beyond the ± 25% line. This equation overestimates the lower s/z values and underestimates the higher s/z values.

The equation proposed by Sattar et al. (2017) underestimates the scour depth as s/z increases, but all points are in the range of ± 25% at low s/z values as presented in Fig. 7. All scour depth predictions in GEP-1 and nearly all points in GEP-7 are in the range of ± 25%. GEP-7 confirms that GEP-based models have succeeded to predict accurately the scour depth at lower and higher s/z values. Hence, the proposed model shows better results in prediction of the scour depth downstream of GCSs compared to the previously empirical and AI models.

Conclusion

Given the importance of the scouring downstream of hydraulic structures, which could destabilize, damage, or even destroy the structure, this study investigates the most important parameters influencing the scour depth downstream of grade-control structures using a scenario-based approach applying expert system models. To assess the modeling results, some error indices (e.g., R2, RMSE, MBE, AIC) and graphical evaluations (scatter plots, boxplots, column plots) are used in different phases (training, testing, and validation). The results indicate the superiority of energy and GEP-based approach (R2 = 0.86, RMSE = 0.16, MBE = 0.05, AIC = − 31.65) compared to the ELM, LSSVM, and GMDH models. The scenario-based results of developed expert system models reveal that eliminating the b/B, h/H, d90/d50, A50, and b/Z parameters from GEP-1 model would increase the RMSE error 3, 6, 3, 30, and 4%, respectively. By adding the energy parameter to GEP-1, the error decreases 8%. Comparison of results shows that A50 is the most significant parameter predicting the scour depth. When A50 parameter is eliminated, the error in GEP, ELM, GMDH, and LSSVM models increases to reach 30, 34, 42, and 63%, respectively. The results of this study confirm that the energy-based approach increases the accuracy of scour depth predictions. In this study, GEP-based equations are reasonably more accurate and persistent than the previous equations and in good agreement with observed field data over validation phase. Since using empirical equations for predicting the maximum scour depth is only applicable in a specific range of data and laboratory conditions, soft computing methods can be recommended for the scour depth prediction. Due to the importance of uncertainty in the proposed relationship, the Monte Carlo simulation method was employed to assess this parameter. Three methods of 95PPU, reliability, and resiliency were employed to analyze the results. The results indicate that the proposed relationship shows the least uncertainty, and an acceptable percentage of the data would fall within 95% confidence intervals in testing phase. The proposed model exhibits several advantages over the conventional relations and artificial intelligence models, which include providing an explicit relationship to predict the scour depth and using flow energy parameter in order to improve the prediction accuracy. Further to these, GEP-based model can predict the scour depth with higher accuracy, reliability, and resiliency compared to other models.

The developed uncertainty analysis framework presented in this study is a new approach in reliable scour depth prediction, and the extracted equation can be combined with mathematical models of sediment transport and scour hole geometry predictions or real-case modeling of scour around hydraulic structures. Finally, the authors of this study would like to acknowledge that different data measurement and collection methods used in the previous studies would constitute a major limitation of this study and a potential source of error while compiling the data set for the machine learning.

Data availability

Not applicable.

Code availability

Not applicable.

Change history

04 June 2022

The original version of this paper was updated to correct authors insitution to "Gonbad Kavous University" and "Vali-e-Asr University of Rafsanjan".

References

Abbaspour KC, Yang J, Maximov I, Siber R, Bogner K, Mieleitner J, Zobrist J, Srinivasan R (2007) Modelling hydrology and water quality in the pre-alpine/alpine Thur watershed using SWAT. J Hydrol 333(2–4):413–430. https://doi.org/10.1016/j.jhydrol.2006.09.014

Abdullah SS, Malek MA, Abdullah NS, Kisi O, Yap KS (2015) Extreme learning machines: a new approach for prediction of reference evapotranspiration. J Hydrol 527:184–195. https://doi.org/10.1016/j.jhydrol.2015.04.073

Aghaee-Shalmani Y, Hakimzadeh H (2015) Experimental investigation of scour around semi-conical piers under steady current action. J Environ Civ Eng 19(6):717–732. https://doi.org/10.1080/19648189.2014.968742

Alavi AH, Ameri M, Gandomi AH, Mirzahosseini MR (2011) Formulation of flow number of asphalt mixes using a hybrid computational method. Constr Build Mater 25(3):1338–1355. https://doi.org/10.1016/j.conbuildmat.2010.09.010

Alimohammadi H, Esfahani MD, Yaghin ML (2019) Effects of openings on the seismic behavior and performance level of concrete shear walls. Int J Eng Appl Sci 6(10):34–39

Azamathulla HM, Deo MC, Deolalikar PB (2005) Neural networks for estimation of scour downstream of a ski-jump bucket. J Hydraul Eng (ASCE) 131(10):898–908. https://doi.org/10.1061/(ASCE)0733-9429(2005)131:10(898)

Azamathulla HM (2012) Gene expression programming for prediction of scour depth downstream of sills. J Hydrol 460:156–159. https://doi.org/10.1016/j.jhydrol.2012.06.034

Barzegar R, Ghasri M, Qi Z, Quilty J, Adamowski J (2019) Using bootstrap ELM and LSSVM models to estimate river ice thickness in the Mackenzie River Basin in the Northwest Territories. Canada J Hydrol 577:123903. https://doi.org/10.1016/j.jhydrol.2019.06.075

Ben Meftah M, Mossa M (2020) New approach to predicting local scour downstream of grade-control structure. J Hydraul Eng 146(2):1–13. https://doi.org/10.1061/%28ASCE%29HY.1943-7900.0001649

Bormann NE, Julien PY (1991) Scour downstream of grade-control structures. J Hydraul Eng 117(5):579–594. https://doi.org/10.1061/(ASCE)0733-9429(1991)117:5(579)

Brandimarte L, Montanari A, Briaud JL, D’Odorico P (2006) Stochastic flow analysis for predicting river scour of cohesive soils. J Hydraul Eng 132(5):493–500. https://doi.org/10.1061/(ASCE)0733-9429(2006)132:5(493)

Chaucharda F, Cogdillb R, Rousselc S, Rogera JM, Bellon-Maurel V (2004) Application of LS-SVM to non-linear phenomena in NIR spectroscopy: development of a robust and portable sensor for acidity prediction in grapes. Chemom Intell Lab Syst 71:141–150. https://doi.org/10.1016/j.chemolab.2004.01.003

Chou JS, Pham AD (2014) Hybrid computational model for predicting bridge scour depth near piers and abutments. Autom Constr 48:88–96. https://doi.org/10.1016/j.autcon.2014.08.006

Chou JS, Pham AD (2017) Nature-inspired metaheuristic optimization in least squares support vector regression for obtaining bridge scour information. Inf Sci 399:64–80. https://doi.org/10.1016/j.ins.2017.02.051

Cimen M (2008) Estimation of daily suspended sediment using support vector machines. Hydrol Sci J 53(3):656–666. https://doi.org/10.1623/hysj.53.3.656

Cramer NL (1985) A representation for the adaptive generation of simple sequential programs. In proceedings of an International Conference on Genetic Algorithms and the Applications 183–187

D’Agostino V, Ferro V (2004) Scour on alluvial bed downstream of grade control structures. J Hydraul Eng 130(1):24–37. https://doi.org/10.1061/(ASCE)0733-9429(2004)130:1(24)

D’Agostino V (1994) Indagine sullo scavo a valle di opere trasversali mediante modello fisico a fondo mobile. Energia Elettrica 71(2):37–51 ((in Italian))

Dargahi-Zarandi A, Hemmati-Sarapardeh A, Hajirezaie S, Dabir B, Atashrouz S (2017) Modeling gas/vapor viscosity of hydrocarbon fluids using a hybrid GMDH-type neural network system. J Mol Liq 236:162–171. https://doi.org/10.1016/j.molliq.2017.03.066

Ebtehaj I, Bonakdari H, Moradi F, Gharabaghi B, Khozani ZS (2018) An integrated framework of Extreme Learning Machines for predicting scour at pile groups in clear water condition. Coastal Eng 135:1–15. https://doi.org/10.1016/j.coastaleng.2017.12.012

Ebtehaj I, Bonakdari H, Zaji AH, Azimi H, Khoshbin F (2015) GMDH-type neural network approach for modeling the discharge coefficient of rectangular sharp-crested side weirs. Eng Sci Technol Int J 18(4):746–757. https://doi.org/10.1016/j.jestch.2015.04.012

Eghbalzadeh A, Hayati M, Rezaei A, Javan M (2018) Prediction of equilibrium scour depth in uniform non-cohesive sediments downstream of an apron using computational intelligence. Eur J Environ Civ Eng 22(1):28–41. https://doi.org/10.1080/19648189.2016.1179677

Etemad-Shahidi A, Ghaemi N (2011) Model tree approach for prediction of pile groups scour due to waves. Ocean Eng 38(13):1522–1527. https://doi.org/10.1016/j.oceaneng.2011.07.012

Falciai M, Giacomin A (1978) Indagine sui gorghi che si formano a valle delle traverse torrentizie. Ital for Mont 23(3):111–123 ((in Italian))

Farlow SJ (1981) The gmdh algorithm of Ivakhnenko. Am Stat 35:210–215. https://doi.org/10.1080/00031305.1981.10479358

Ferreira C (2001) Gene expression programming: a new adaptive algorithm for solving problems. Complex Syst 13(2):87–129

Galia T, Škarpich V, Hradecký J, Přibyla Z (2016) Effect of grade-control structures at various stages of their destruction on bed sediments and local channel parameters. Geomorphol 253:305–317. https://doi.org/10.1016/j.geomorph.2015.10.033

Gholami A, Bonakdari H, Ebtehaj I, Mohammadian M, Gharabaghi B, Khodashenas SR (2018) Uncertainty analysis of intelligent model of hybrid genetic algorithm and particle swarm optimization with ANFIS to predict threshold bank profile shape based on digital laser approach sensing. Meas 121:294–303. https://doi.org/10.1016/j.measurement.2018.02.070

Goel A, Pal M (2009) Application of support vector machines in scour prediction on grade-control structures. Eng Appl Artif Intell 22(2):216–223. https://doi.org/10.1016/j.engappai.2008.05.008

Guven A, Gunal M (2008) Genetic programming approach for prediction of local scour downstream of hydraulic structures. J Irrig Drain Eng 134(2):241–249. https://doi.org/10.1061/(ASCE)0733-9437(2008)134:2(241)

Guven A (2011) A multi-output descriptive neural network for estimation of scour geometry downstream from hydraulic structures. Adv Eng Software 42(3):85–93. https://doi.org/10.1016/j.advengsoft.2010.12.005

Han H, Cui X, Fan Y, Qing H (2019) Least squares support vector machine (LS-SVM)-based chiller fault diagnosis using fault indicative features. Appl Thermal Eng 154:540–547. https://doi.org/10.1016/j.applthermaleng.2019.03.111

Hoang ND (2019) Estimation of scour depth around bridge piers using a least squares support vector machine program developed in Visual C#.NET. DTU J Sci Technol 05(36):03–09

Hooshyaripor F, Tahershamsi A, Golian S (2014) Application of copula method and neural networks for predicting peak outflow from breached embankments. J Hydro-Environ Res 8(3):292–303. https://doi.org/10.1016/j.jher.2013.11.004

Huang GB, Zhu QY, Siew CK (2004) Extreme learning machine: a new learning scheme of feedforward neural networks. In: IEEE Int Conf neural networks, Budapest (Hungary) 2:985–90. https://doi.org/10.1109/IJCNN.2004.1380068

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70(1–3):489–501. https://doi.org/10.1016/j.neucom.2005.12.126

Huang G, Member S, Zhou H, Ding X, Zhang R (2012) Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybern, Part B Cybern 42(2):513–529. https://doi.org/10.1109/TSMCB.2011.2168604

Imani M, Kao HC, Lan WH, Kuo CY (2018) Daily sea level prediction at Chiayi coast, Taiwan using extreme learning machine and relevance vector machine. Global Planet Change 161:211–221. https://doi.org/10.1016/j.gloplacha.2017.12.018

Ivakhnenko AG (1971) Polynomial theory of complex systems. Trans Syst Man Cybern SMC-1(4):364–378. https://doi.org/10.1109/TSMC.1971.4308320

Johnson PA (1992) Reliability-based pier scour engineering. J Hydraul Eng 118(10):1344–1358. https://doi.org/10.1061/(ASCE)0733-9429(1992)118:10(1344)

Kakaei Lafdani E, Moghaddam Nia A, Ahmadi A (2013) Daily suspended sediment load prediction using artificial neural networks and support vector machines machine. J Hydrol 478:50–62. https://doi.org/10.1016/j.jhydrol.2012.11.048

Khalid M, Muzzammil M, Alam J (2019) A reliability-based assessment of live bed scour at bridge piers. ISH J Hydraul Eng 1–8. https://doi.org/10.1080/09715010.2019.1584543

Koza JR (1992) Genetic programming: on the programming of computers by means of natural selection, vol 1. MIT Press, Cambridge, MA

Kumar M, Kar IN (2009) Non-linear HVAC computations using least square support vector machines. Energy Convers Manage 50(6):1411–1418. https://doi.org/10.1016/j.enconman.2009.03.009

Laucelli D, Giustolisi O (2011) Scour depth modelling by a multi-objective evolutionary paradigm. Environ Modell Software 26(4):498–509. https://doi.org/10.1016/j.envsoft.2010.10.013

Lenzi MA, Comiti F (2003) Local scouring and morphological adjustments in steep channels with check-dam sequences. Geomorphol 55(1–4):97–109. https://doi.org/10.1016/S0169-555X(03)00134-X

Lenzi MA, Marion A, Comiti F, Gaudio R (2000) Riduzione dello scavo a valle di soglie di fondo per effetto dell’interferenza tra le opere. 27th Convegno di Idraulica e Costruzioni Idrauliche, Genova, Italy 271–278 (in Italian)

Liang NY, Huang GB, Rong HJ, Saratchandran P, Sundararajan N (2006) A fast and accurate on-line sequential learning algorithm for feedforward networks. IEEE Trans Neural Networks 17:1411–1423. https://doi.org/10.1109/TNN.2006.880583

Lu C, Chen J, Hong R, Feng Y, Li Y (2016) Degradation trend estimation of slewing bearing based on LSSVM model. Mech Syst Sig Process 76:353–366. https://doi.org/10.1016/j.ymssp.2016.02.031

Mahmoud T, Dong ZY, Ma J (2018) An advanced approach for optimal wind power generation prediction intervals by using self-adaptive evolutionary extreme learning machine. Renewable Energy 126:254–269. https://doi.org/10.1016/j.renene.2018.03.035

Mehri Y, Soltani J, Khashehchi M (2019) Predicting the coefficient of discharge for piano key side weirs using GMDH and DGMDH techniques. Flow Meas Instrum 65:1–6. https://doi.org/10.1016/j.flowmeasinst.2018.11.002

Mesbahi M, Talebbeydokhti N, Hosseini SA, Afzali SH (2016) Gene-expression programming to predict the local scour depth at downstream of stilling basins. Sci Iran 23(1):102–113. https://doi.org/10.24200/SCI.2016.2101

Misra D, Oommen T, Agarwal A, Mishra SK, Thompson AM (2009) Application and analysis of support vector machine based simulation for runoff and sediment yield. Biosyst Eng 103(4):527–535. https://doi.org/10.1016/j.biosystemseng.2009.04.017

Mossa M (1998) Experimental study on the scour downstream of grade-control structures. 26th Convegno di Idraulica e Costruzioni Idrauliche. Catania, Italy 3:581–594

Moussa YAM (2013) Modeling of local scour depth downstream hydraulic structures in trapezoidal channel using GEP and ANNs. Ain Shams Eng J 4(4):717–722. https://doi.org/10.1016/j.asej.2013.04.005

Muzzammil M, Siddiqui NA (2009) A reliability-based assessment of bridge pier scour in non-uniform sediments. J Hydraul Res 47(3):372–380. https://doi.org/10.1080/00221686.2009.9522008

Najafzadeh M, Barani GA, Hessami-Kermani MR (2015) Evaluation of GMDH networks for prediction of local scour depth at bridge abutments in coarse sediments with thinly armored beds. Ocean Eng 104:387–396. https://doi.org/10.1016/j.oceaneng.2015.05.016

Najafzadeh M, Barani GA, Hessami-Kermani MR (2014) Group method of data handling to predict scour at downstream of a ski-jump bucket spillway. Earth Sci Inf 7(4):231–248. https://doi.org/10.1007/s12145-013-0140-4

Najafzadeh M, Barani GA (2011) Comparison of group method of data handling based genetic programming and back propagation systems to predict scour depth around bridge piers. Sci Iran 18(6):1207–1213. https://doi.org/10.1016/j.scient.2011.11.017

Najafzadeh M, Etemad-Shahidi A, Lim SY (2016) Scour prediction in long contractions using ANFIS and SVM. Ocean Eng 111:128–135. https://doi.org/10.1016/j.oceaneng.2015.10.053

Najafzadeh M (2015) Neuro-fuzzy GMDH based particle swarm optimization for prediction of scour depth at downstream of grade control structures. Eng Sci Technol Int J 18(1):42–51. https://doi.org/10.1016/j.jestch.2014.09.002

Nourani V, Alizadeh F, Roushangar K (2015) Evaluation of a two-Stage SVM and spatial statistics methods for modeling monthly river suspended sediment load. Water Resour Manage 30(1):393–407. https://doi.org/10.1007/s11269-015-1168-7

Nourani V, Andalib Gh, Sadikoglu F (2017) Multi-station streamflow forecasting using wavelet denoising and artificial intelligence models. Procedia Comput Sci 120:617–624. https://doi.org/10.1016/j.procs.2017.11.287

Pagliara S, Palermo M, Kurdistani SM, Sagvand Hassanabadi L (2015) Erosive and hydrodynamic processes downstream of low-head control structures. J Appl Water Eng Res 3(2):122–131. https://doi.org/10.1080/23249676.2014.1001880

Pal M, Singh NK, Tiwari NK (2011) Support vector regression based modeling of pier scour using field data. Eng Appl Artif Intell 24(5):911–916. https://doi.org/10.1016/j.engappai.2010.11.002

Pan H, Wang R, Huang J, Ou G (2013) Study on the ultimate depth of scour pit downstream of debris flow Sabo dam based on the energy method. Eng Geol 160:103–109. https://doi.org/10.1016/j.enggeo.2013.03.026

Pourzangbar A, Brocchini M, Saber A, Mahjoobi J, Mirzaaghasi M, Barzegar M (2017) Prediction of scour depth at breakwaters due to non-breaking waves using machine learning approaches. Appl Ocean Res 63:120–128. https://doi.org/10.1016/j.apor.2017.01.012

Regazzoni PL, Marot D (2011) Investigation of interface erosion rate by jet erosion test and statistical analysis. Eur J Environ Civ Eng 15(8):1167–1185. https://doi.org/10.1080/19648189.2011.9714847

Riahi-Madvar H, Ayyoubzadeh SA, Namin MM, Seifi A (2011) Uncertainty analysis of quasi-two-dimensional flow simulation in compound channels with overbank flows. J Hydrol Hydromech 59(3):171–183. https://doi.org/10.1061/(ASCE)0733-9429(2006)132:5(493)

Riahi-Madvar H, Dehghani M, Memarzadeh R, Gharabaghi B (2021a) Short to long-term forecasting of river flows by heuristic optimization algorithms hybridized with ANFIS. Water Resour Manage 35(4):1149–1166. https://doi.org/10.1007/s11269-020-02756-5

Riahi-Madvar H, Dehghani M, Seifi A, Salwana E, Shamshirband Sh, Mosavi A, Chau KW (2019a) Comparative analysis of soft computing techniques RBF, MLP, and ANFIS with MLR and MNLR for predicting grade-control scour hole geometry. Eng Appl Comput Fluid Mech 13(1):529–550. https://doi.org/10.1080/19942060.2019.1618396

Riahi-Madvar H, Seifi A (2018) Uncertainty analysis in bed load transport prediction of gravel bed rivers by ANN and ANFIS. Arabian J Geosci 11(21):1–20. https://doi.org/10.1007/s12517-018-3968-6

Riahi-Madvar H, Gholami M, Gharabaghi B, Seyedian SM (2021b) A predictive equation for residual strength using a hybrid of subset selection of maximum dissimilarity method with Pareto optimal multi-gene genetic programming. Geosci Front 12(5):101222. https://doi.org/10.1016/j.gsf.2021.101222

Riahi-Madvar H, Dehghani M, Seifi A, Singh VP (2019b) Pareto optimal multigene genetic programming for prediction of longitudinal dispersion coefficient. Water Resour Manage 33(3):905–921. https://doi.org/10.1007/s11269-018-2139-6

Roushangar K, Akhgar S, Erfan A, Shiri J (2016) Modeling scour depth downstream of grade-control structures using data driven and empirical approaches. J Hydroinf 18(6):946–960. https://doi.org/10.2166/hydro.2016.242

Sahoo S, Mohapatra SK, Panda B (2013) Classification using extreme learning machine. Compusoft, Int J Adv Comput Technol 2(12):415–421

Samui P, Kothari DP (2011) Utilization of a least square support vector machine (LSSVM) for slope stability analysis. Sci Iran 18:53–55. https://doi.org/10.1016/j.scient.2011.03.007

Sattar AMA, Plesinski k, Radecki-Pawlik A, Gharabaghi, B, (2017) Scour depth model for grade-control structures. J Hydroinf 20(1):117–133. https://doi.org/10.2166/hydro.2017.149

Scurlock SM, Thornton CI, Abt SR (2012) Equilibrium scour downstream of three-dimensional grade-control structures. J Hydraul Eng 138(2):167–176. https://doi.org/10.1061/(ASCE)HY.1943-7900.0000493

Seifi A, Dehghani M, Singh VP (2020a) Uncertainty analysis of water quality index (WQI) for groundwater quality evaluation: application of Monte-Carlo method for weight allocation. Ecol Indic 117(106653):1–15. https://doi.org/10.1016/j.ecolind.2020.106653

Seifi A, Ehteram M, Soroush F (2020b) Uncertainties of instantaneous influent flow predictions by intelligence models hybridized with multi-objective shark smell optimization algorithm. J Hydrol 587:124977. https://doi.org/10.1016/j.jhydrol.2020.124977

Seifi A, Riahi H (2020) Estimating daily reference evapotranspiration using hybrid gamma test-least square support vector machine, gamma test-ANN, and gamma test-ANFIS models in an arid area of Iran. J Water Clim Change 11(1):217–240. https://doi.org/10.2166/wcc.2018.003

Seyedian SM, Ghazizadeh MJ, Tareghian R (2014) Determining side-weir discharge coefficient using ANFIS. Proc Inst Civ Eng Water Manag 167(4):230–237. https://doi.org/10.1680/WAMA.12.00102

Seyedian SM, Rouhani H (2015) Assessing ANFIS accuracy in estimation of suspended sediments. Gradevinar 67(12):1165–1176. https://doi.org/10.14256/JCE.1210.2015

Sharafati A, Haghbin M, Motta D, Yaseen ZM (2019) The application of soft computing models and empirical formulations for hydraulic structure scouring depth simulation: a comprehensive review, assessment and possible future research direction. Arch Comput Methods Eng 1–25. https://doi.org/10.1007/s11831-019-09382-4

Sharafati A, Tafarojnoruz A, Motta D, Yaseen ZM (2020) Application of nature-inspired optimization algorithms to ANFIS model to predict wave-induced scour depth around pipelines. J Hydroinf 22(6):1425–1451. https://doi.org/10.2166/hydro.2020.184

Suykens JAK, Van Gestel T, De Brabanter J, De Moor B, Vandewalle J (2002) Least squares support vector machines. World Sci Publ, Singapore. https://doi.org/10.1142/5089

Tavakolizadeh AA, Kashefipour SM (2008) Modeling local scour on loose bed downstream of grade control structures using artificial neural network. J Appl Sci 8(11):2067–2074. https://doi.org/10.3923/jas.2008.2067.2074

Veronese A (1937) Erosioni di fondo a valle di uno scarico. Annal Lavori Pubbl 75(9):717–726 ((in Italian))

Wang L, Kisi O, Zounemat-Kermani M, Li H (2017) Pan evaporation modeling using six different heuristic computing methods in different climates of China. J Hydrol 544:407–427. https://doi.org/10.1016/j.jhydrol.2016.11.059

Xue C, Chen B, Wu H (2014) Parameter uncertainty analysis of surface flow and sediment yield in the Huolin Basin. China J Hydrol Eng 19(6):1224–1236. https://doi.org/10.1061/(ASCE)HE.1943-5584.0000909

Yanmaz AM, Cicekdag O (2001) Composite reliability model for local scour around cylindrical bridge piers. Can J Civ Eng 28(3):520–535. https://doi.org/10.1139/l01-009

Yaseen ZM, Jaafar O, Deo RC, Kisi O, Adamowski J, Quilty J, El-Shafie A (2016) Stream-flow forecasting using extreme learning machines: a case study in a semi-arid region in Iraq. J Hydrol 542:603–614. https://doi.org/10.1016/j.jhydrol.2016.09.035

Yen C (1987) Discussion of “Free Jet Scour Below Dams and Flip Buckets” by Peter J Mason and Kanapathypilly Arumugam (February, 1985, Vol. 111, No. 2). J Hydraul Eng 113:1200–1202. https://doi.org/10.1061/(asce)0733-9429(1987)113:9(1200)

Yousif AA, Sulaiman SO, Diop L, Ehteram M, Shahid S, Al-Ansari N, Yaseen ZM (2019) Open channel sluice gate scouring parameters prediction: different scenarios of dimensional and non-dimensional input parameters. Water 11(2):353. https://doi.org/10.3390/w11020353

Zadehmohamad M, Bolouri Bazaz J (2017) Cyclic behaviour of geocell-reinforced backfill behind integral bridge abutment. Int J Geotech Eng 133(5):438–450. https://doi.org/10.1080/19386362.2017.1364882

Zhu S, Heddam S, Wu S, Dai J, Jia B (2019) Extreme learning machine-based prediction of daily water temperature for rivers. Environ Earth Sci 78(6):1–17. https://doi.org/10.1007/S12665-019-8202-7

Zheng J, He H, Alimohammadi H (2021) Three-dimensional Wadell roundness for particle angularity characterization of granular soils. Acta Geotech 16:133–149. https://doi.org/10.1007/s11440-020-01004-9

Zhao YP, Hu QK, Xu JG, Li B, Huang G, Pan YT (2018) A robust extreme learning machine for modeling a small-scale turbojet engine. Appl Energy 218:22–35. https://doi.org/10.1016/j.apenergy.2018.02.175

Zounemat-Kermani M, Kisi O, Adamowski J, Ramezani A (2016) Evaluation of data driven models for river suspended sediment concentration modeling. J Hydrol 535:457–472. https://doi.org/10.1016/j.jhydrol.2016.02.012

Author information

Authors and Affiliations

Contributions

Not applicable.

Corresponding author

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Responsible Editor: Zeynal Abiddin Erguler

Rights and permissions

About this article

Cite this article

Seyedian, S.M., Riahi-Madvar, H., Fatabadi, A. et al. Comparative uncertainty analysis of soft computing models predicting scour depth downstream of grade-control structures. Arab J Geosci 15, 418 (2022). https://doi.org/10.1007/s12517-022-09704-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12517-022-09704-0