Abstract

Slope stability is the main attribute of geotechnical engineering systems which can be established by calculating factor of safety, FoS. In this context, there are various existing conventional methods which can be used for slope stability analysis. However, in the era of Artificial Intelligence (AI), the slope stability analysis can be performed using soft computing, SoCom, models which have superior predictive capability in comparison to other methods. SoCom is capable of addressing uncertainty and imprecision and which can be quantified using statistical parameters (viz., R2, RMSE, MAPE, t-stat, etc.). In this context, this review paper mainly focuses on conventional methods viz., Bishop method, Taylor method, Janbu method, Hoek–Brown method, apart from SoCom models viz., SVM, Model Tree, CA, ELM, GRNN, GPR, MARS, MCS, GP, etc. Also, quality assessment parameter like data preprocessing techniques and performance measures have been covered in this paper. Furthermore, merits and limitations of SoCom techniques in comparison to conventional approaches has also been discussed elaborately in this review.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Slope stability is a major concern for geotechnical structures due to involvement of monetary as well as loss of flora and fauna due to slope failures (Liu et al. 2014; Gao et. al 2019). Anthropogenic activities and natural conditions leads in climate change and global warming which ends up affecting natural as well as engineered slopes, also results in first-time and reactivated failures in some cases (Dijkstra and Dixon 2010; Liu et al. 2010; Zhang et al. 2020; Mehta et al. 2021; Wong et al. 2022; Peethambaran et al. 2022). The designing and construction of optimum slopes not only includes stability and safety but also consists of vast economic factors (Verma et al. 2013; Chen et al. 2020), which needs to be decided on the basis of various parameters (viz., Factor of safety (FoS), reliability index (β), sensitivity analysis). Researchers have mentioned various methods for conducting slope failure analysis such as empirical equations (Bieniawski 1989; Janbu 1973; Taheri and Tani 2010; Wang et al. 2021) and computational intelligence approaches (Bui et al. 2020; Singh et al. 2019; Taheri and Tani, 2010; Chen et al. 2020). Thus, the analysis and detailed construction of slopes should be conducted properly, survey and monitoring of prior and post construction should be conducted using effective techniques to ensure the stability of slopes and thus structures (viz., roads and railways embankments, hydraulic structures, etc.) and also long-term sustainability (Kainthola et al. 2013; Singh et al. 2020; Zhang and Tang 2021). Slope behaviour eventually described and mainly discussed focussing on the factor of safety (FoS) values which evaluates the stability and failure of slopes (Kainthola et al. 2013; Liu et al. 2014). Unfortunately, many failures due to slope instability issues has been occurred in past due to: (i) generation of pore-water pressure, (ii) erosion, (iii) earthquakes, (iv) geological characteristics, and (v) external loading.

Incidentally, there are many methods (conventional and soft computing) available for conducting slope analysis. However, the suitability and applicability of methods changes with change in slope conditions wherein, factor of safety (FoS) of natural as well as man-made slopes plays a very crucial role and thus, needs to be considered elaborately. Furthermore, new utility tools and approaches in the form of SoCom models and numerical modelling has been explored by researchers (Tinoco et al. 2018; Singh et al. 2020; Hassan et al. 2022), apart from conventional methods.

Unfortunately, there is lack of extensive review studies on methods which have been used for performing the slope stability analysis. Furthermore, with increase in soft computing models-based slope stability analysis, the suitability of these methods on various slope conditions followed their performance accuracy needs to be understood. Therefore, the studies available on rock slopes as well as soil slopes using conventional and soft computing methods have been reviewed and discussed critically in this review. The models have also been compared in terms of FoS and their performance statistics. Thereafter, the suitability and limitations of the methods have also been considered and discussed briefly in this review.

Methods of slope stability used in literature

Researchers have used several methods for doing slope stability analysis of rocks (Taheri and Tani 2010; Pinheiro et al. 2015; Singh et al. 2020) and soils (Javankhoshdel and Bathurst 2014; Liu et al. 2014; Tinoco et al. 2018). The methods involve conventional (viz., Mohr–Coulomb method, Bishop method, Janbu method, Taylor method, Hoek and Bray) as well as SoCom methods (viz., M5 Model Tree, Cubist, MARS, ELM, GPR, GRNN, MCS, SVM, etc.) which have been keep evolving with time (Samui 2008; Zhang and Li 2019). Summary of the various work conducted by researchers are elaborately compiled in Tables 1 and 2 for conventional methods and SoCom methods, respectively. Some of the methods used by researchers have been briefly discussed in the following sections.

Conventional methods

Bishop method

The failure of the soil mass is assumed to be by rotation on a circular slip circle which is centred at a point (Bishop 1955; Zhang and Li 2019). The normal force assumed to be act at the centre of each slice’s base and shear stress acting between the slices is neglected as forces on slices sides are assumed to be horizontal. The simplified Bishop’s method provides relatively accurate values for the FoS, however, the method does not satisfy the complete static equilibrium (Verma et al. 2013). The FoS as per Bishop’s method can be obtained using Eq. (1)

where c’ = cohesion, l = width of slice, α = angle at the base of sliding slice, \({\phi }^{^{\prime}}\) = angle of internal friction, u = Pore water pressure, W = effective weight of the slice, P = Normal force acting at the base of the slice, and FoS = Factor of safety.

Verma et al. (2013) used Bishop’s method for analysing stability of an open cut slope at Wardha valley coal field near Chandrapur, India. The simplified schematic diagrams of circular failure of slopes using Bishop’s method are presented in Fig. 1a (Verma et al. 2013). The average value of geotechnical properties used by researchers have been presented in graphical form (refer Fig. 2). From Fig. 2, it can be noticed that the highest geological strength index (GSI) rating is 43, which was assigned to yellow sandstone, while the material was assigned a GSI of 10. Estimation of reduction in strength parameters for different geological conditions of rock mass is represented in terms of GSI rating (Hoek et al. 1998). Researchers took mi values in their study as suggested by Hoek and Brown (1997). FoS values obtained are presented in Fig. 3. From the FoS values, it is clear that the cut slope at that location was in very critical state which may lead to failure without warning and thus, recommendation for proper attention was suggested by the researchers. In this context, FoS plays a vital role while performing stability analysis of slopes.

Janbu method

Janbu’s method is applicable to non-circular failure of the soil mass (Janbu 1973). As like Bishop’s method, the normal force assumed to be act at the centre of each slice’s base and shear stress acting between the slices is neglected as forces on slices sides are assumed to be horizontal. Verma et al. (2013) used Janbu’s corrected method where interslices shear force has been taken into consideration. The expression for FoS as per given by Janbu (1973) is presented using Eq. (2).

where c’ = cohesion, l = width of slice, α = angle at the base of sliding slice, \({\phi }^{^{\prime}}\) = angle of internal friction, u = Pore water pressure, W = effective weight of the slice, P = Normal force acting at the base of the slice and FoS = Factor of safety.

The method of slices for analysis of non-circular failures in slopes using Janbu’s method is presented in Fig. 1b. Researchers have used non-circular failure analysis and also used corrected Janbu’s method for circular failure in slopes. The average value of geotechnical properties used by researchers is presented in Fig. 2, while the FoS values obtained for Janbu’s method are presented in Fig. 3.

Taylor method

Taylor’s method is applicable for circular failure of the soil mass (Taylor 1937). The assumption that soils are homogeneous and isotropic does not applicable in reality (Sakellariou and Ferentinou 2005). Taylor’s slope stability chart was earlier used by researchers for estimating factor of safety of slopes having simple geometry with homogeneous and isotropic soil properties in clays under single-value undrained shear strength (Javankhoshdel and Bathurst 2014; Liu et al. 2022). Taylor (1937) also derived charts for computing factor of safety for simple slopes of cohesive-frictional (c-ϕ) shear strength soils. The drawbacks associated with Taylor’s chart are the necessity associated in form of iterative procedures to calculate the FoS. Taylor’s slope stability chart is presented in Fig. 4. The FoS can be calculated using Eq. (3)

where stability number (Ns) depends on slope angle (α) and depth factor (D). In Fig. 4, DH is depth of firm stratum from slope crest and ‘H’ is height of slope. ‘H’ and ‘α’ are considered to be deterministic. Also, the probability of failure can be calculated using Su as lognormally distributed or random variable and γ as a constant or both can be considered for lognormal distributions having uncorrelated random variables.

Schematic representing Taylor’s slope stability chart for cohesive soils (Taylor 1937)

Steward et al. (2011) proposed modified version of Taylor’s chart for slope stability design of dry slopes having no tension cracks and the same can be seen in Fig. 5. The modified chart has advantage associated with it as the critical slope FoS can be computed without iteration.

Schematic representing Stability chart of c’-ϕ’ soil (Steward et al. 2011)

Hoek–Brown method

Hoek–Brown strength criteria is an non-linear strength criteria and can be expressed in terms of shear and normal stresses, can also be represented in terms of major and minor principal stresses (Li and Yang 2019). For strength analysis of soils, power law criteria in terms of σn—τ stress space can be used, which are presented by Eq. (4).

where Pa = atmospheric pressure (standard, 100 kPa), n (degree of curvature), A (shear strength coefficient), and T are dimensionless experimental parameters (using triaxial). Product Pa.T is tensile strength and has been denoted as tNL (refer Fig. 6). The ranges of mentioned dimensionless parameters are T ≥ 0, A > 0 and 1 ≥ n ≥ 0.5.

Nonlinear failure envelope and stress circle (strength reduction method) (Li and Yang 2019)

In the case of pore-water pressure, effective stress and strength parameters should be taken into consideration for analysis (Baker 2004). Slope stability analysis can be performed by calculating FoS using strength reduction method. In Fig. 6, solid line represents original strength curve, while dotted one represents strength curve after reduction in the plane of normal and shear stresses. Modified strength criterion can be represented using Eq. (5).

where τF represents shear strength after reduction.

Soft computing (SoCom) models used in literature

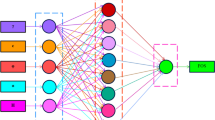

Artificial intelligence-based soft computing consists of several models which have been extensively used for solving civil engineering problems (viz., slope stability). Prior to training and validation of data, it is required to normalize the data in a range of appropriate values of lower and upper limits (generally between 0 and 1) (Bardhan et al. 2021). Furthermore, dataset is divided into training (generally 70/80/90%) and testing (generally 30/20/10%) of total database. A schematic of flowchart representing various steps of training and validation is presented in Fig. 7. Subsequently, after developing the model, performance of the same is accessed using various statistical parameters (Zhang et al. 2021; Kardani et al. 2021; Wu et al. 2022; Zhang et al. 2022a; Phoon and Zhang 2022; Zhang and Phoon 2022).

Support vector machine (SVM)

Support vector machine (SVM) are algorithms which is being used for classification and regression analysis of a dataset and a better alternative to artificial neural networks (Boser et al. 1992; Samui 2008; Zhang et al. 2020). Suppose, T set of training vectors belongs to two separate classes, then for classification analysis, can be represented using Eq. (6).

such that, y € {− 1, + 1}, x € Rn

where l is the number of training dataset and n-dimensional data vector, x€ Rn, belonging to either of two classes labelled as y € {− 1, + 1}. Samui (2008) considered angle of internal friction (ϕ), cohesion (c), unit weight of soil (γ) and pore-water pressure coefficient (ru) for slope’s strength determination while for geometry of slope, height (H) and slope angle (β) was considered. Thus, in this case, x = [ϕ, c, γ, ru, H, β]. Linear hyperplane for distinguishing the two classes is presented using Eq. (7).

Graphs representing margin widths of hyperplanes and maximum margins are presented in Fig. 8a, b. Further details can be found in Samui (2008) and Boser et al. (1992). Thus, authors conclude that SVM is a powerful and effective computational which can be used extensively for problems related to slope stability.

Schematic representing a Hyperplanes margin width, and b Maximum margins and support vectors (Samui 2008)

Model tree

M5 algorithm used in model tree was first proposed by Quinlan (1993). Model tree works on the concept of divide and rule. Multiple linear regression models applied on input parameter space after dividing them into smaller sub-spaces. Furthermore, model tree approach involves: (i) building the tree, and (ii) inferring knowledge from the tree created. Also, the linear regression model is used for tree building by partitioning the input space into mutually exclusive regions as presented in Fig. 9a, b. A splitting technique is adopted in the inference procedure to fed new instance at the leaves of the tree using one of the models and thus, using linear model at the leaf, predicted output is obtained (Jung et al. 2010; Zhang et al. 2020). Wang and Witten (1997) modified the M5 algorithm and introduced M5’, which was able to deal effectively with missing values. M5’ model tree considers set of piecewise linear models as final solution of the problem after dividing the input space into sub-spaces and works in three stages: (i) building the tree, (ii) pruning the tree, and (iii) smoothening the tree (Jung et al. 2010; Etemad-Shahidi and Bali 2012; Bui et al. 2020). Suppose a model tree of training cases for ‘T’ sets, while ‘Ti’ represents the subset of ‘i’ outcomes with standard deviation sd(Ti), then the formula for calculating the standard reduction factor (SDR) can be given as:

where T = set of data point before spitting, sd = standard deviation, Ti = data point which as per chosen splitting parameter falls into one subspace and is result of splitting the space. Different splitting points is tested by M5’ model tree after dividing the space and thus, using sd for sub-spaces. SDR also represents the change in error value, i.e., Δerror. As the test proceeds, model tree picks the outcome with maximum reduction in the value of errors.

Schematic representing: a Splitting of input space by M5 model tree algorithm such that every model follows linear regression, and b New instance prediction by model tree (Jung et al. 2010)

Cubist algorithm (CA)

Cubist, a rule-based model, is an extension of Quinlan’s M5 model tree (Kuhn et al. 2016). CA is based on recursive partitioning, and also it splits training cases as like growing a decision tree. Regression problems having large number of input attributes (even thousands of variables) can be effectively solved using CA. It should be noted that, CA generally works in four steps (refer Fig. 10) to predict a regression problem and also while analysing FoS for slope stability. Luo et al. (2021) applied CA for slope stability and obtained R2 as 0.983. Pouladi et al. (2019) used CA and obtained R2 as 0.88 with RMSE value of 3.26%.Also, Singh et al. (2020) used CA, while performing reliability analysis on rock slopes and obtained R2 as 0.999.

Extreme learning machines (ELM)

Extreme learning machines, ELM, consists of three layers in the form of the: (i) input layer, (ii) hidden layer, and (iii) output layer as presented in Fig. 11. Weight connections been exposed between the input layer and the hidden layers and also between the hidden layer and the output layer (Liu et al. 2014; Wang et al. 2020). The input layer consists of n neurons which corresponds to 6 dimensions of n slope cases. The hidden layers have l hidden neurons while the output layer consists of m neurons which represents m factor of safety (refer Fig. 11). From Fig. 11, it can be noted that the weight w connecting the input layer with the hidden layer while the weight β is connecting the hidden layer with the output layer. It has been observed that for used dataset by Liu et al. (2014), ELM performs better as compared to GRNN which have been realised from values of statistical parameters like R2 and MAPE. Unfortunately, the researchers have not considered the seismic parameters in their study, which needs to be considered to get more generalised outputs. Singh et al. (2020) used ELM model on 80 datasets for slope stability of rock mass and used statistical parameters for analysis, and observed reliability index (β) values as 1.772 and 2.163 for training and testing cases, respectively.

Slope stability analysis using Extreme learning machine model (modified from Liu et al. (2014))

Generalized regression neural networks (GRNN)

Generalized regression neural network (GRNN), a memory based model proposed by Specht (1991), can be used for fitting non-linear relationships (Liu et al. 2018; Zhang et al. 2021). GRNN structure consists of (i) input layer, (ii) pattern layer, (iii) summation layer, and (iv) output layer (Polat and Yildirim 2008). GRNN draws the function using the data and approximating the arbitrary functions among the input and output vectors in the place of iterative training procedure as in back-propagation. The estimate error becomes zero only with mild restrictions on the function, as the training set size increases. A typical GRNN architecture is presented in Fig. 12. First layer consists of input units, the second layer has the pattern units whose outputs are passed on to the summation units in the third layer, and output units cover the final layer as can be seen in Fig. 12. GRNN uses below algorithm while calculating yi’, Eq. (9) can be used.

where xi = training dataset input, yi = associated output, n = number of training dataset, yi’ = corresponding output to the input xi, α = spread.

The expression of D(x, xi) can be given using Eq. (10).

Gaussian process regression (GPR)

Gaussian process consists of a collection of random variables (Williams 1998; Rasmussen 2004; Ray et al. 2022). The random variables have finite subset of the variables having Gaussian distribution. Suppose a random variables collection G as \({G}_{y1}, {G}_{y2}, {G}_{y3},\dots \dots ..{G}_{yn}\). Variables have been indexed using elements y of a set Ƴ. The corresponding vector of the variables containing normal or multivariate Gaussian distribution \({G}_{y}= {\left[{G}_{y1}, {G}_{y2}, {G}_{y3},\dots \dots ..{G}_{yn}\right]}^{T}\) for any finite length of vector which have indices as \(= {\left[{y}_{1}, {y}_{2}, \dots \dots .., {y}_{n}\right]}^{T}\),

where k is kernel function and μ(y) is prior mean function of μ(yi). Kernel gives covariance between variables Gyj and Gyi by taking two indices yj and yi correspondingly. Each Gyi is marginally Gaussian with variance k(yi, yi) and mean μ(yi). k returns the matrix of covariances among all pairs of variables vectors of indices yj and yi, where the first and second in pair comes from Gyj and Gyi respectively.

Suppose a function g(y) needs to be optimized. Also assume function g cannot be observed directly but can be observed as a random variable Gy which is indexed by the similar domain as g(y), and whose expected value is g, i.e. \(\forall y \in \Upsilon , E\left[{G}_{y}\right] = g\left(y\right)\). Furthermore, the function g has prior mean as μ and kernel k conforms to a Gaussian process. As Gy is an observation of g(y) which has been corrupted by zero mean, i.e. \({G}_{y}= g\left(y\right) + \epsilon \), where \(\epsilon \sim {\rm N}\left(0, {{\sigma }_{\epsilon }}^{2}\right)\). Thus, the resulting inference is called Gaussian process regression (GPR).

Assume Gy as the resulting real-valued observation of y set of observation points. For calculating the posterior distribution of a new point ŷ ϵ Ƴ, the mean and the corresponding variance of the Gaussian distribution can be given using Eqs. (12) and (13).

Furthermore, optimum of the unknown function can be calculated in the final step using the optimum of resulting posterior mean yR = argmaxŷϵƳ μ(ŷ|y). In general, the same cannot be computed in closed form and thus, some technique is required for the optimization of the function. The remaining challenge is to decide the points yi for which the observations have to be generalized.

Optimal selection

Model can be computed theoretically by considering all the possible points in the domain. Furthermore, it can be done by considering: (i) all possible points in the domain, (ii) possible outcomes (real-valued), and (iii) expectations and maximizations for all possible future sequences out to the horizon. Thus, next observation is made at the point which maximizes the posterior maximum considering future optimal decisions.

Most probable improvement

For finding the domain with largest observed value, let Gy+ is the maximum observed value and take ympi to maximize the posterior probability P (Gxmpi > Gx+), which is known as the most probable improvement (MPI) criterion.

Incidentally, the mean and the posterior standard deviation in this ratio are continuous functions and differentiable, and thus the function optimization techniques can be applied in the model to find ympi (Mockus 2005).

Multivariate adaptive regression splines (MARS)

MARS, developed by Friedman (1991), uses adaptive regression methods for achieving flexible modelling of even large dimensional dataset. It uses non-linear, non-parametric regression approach and works on the strategy of divide and conquer, and thus partitions training data into distinct domains having their own regression string. Basis function (BF) formation initiated by the model, defines the number of parameters (location of knots and degree of product) and number of BF, automatically by operations performed on given data. It should be noted that the knots are the connection between two BF (LeBlanc and Tibshirani 1994; Zhang et al. 2020). Furthermore, knots are located at the best search possible locations. Therefore, best possible location of the search is selected with lowest sum of square error with only one knot. Unfortunately, use of two or more knots for searching locations is challenging as it requires more computational effort as compared to earlier (single knot). Locations of knots are determined by MARS model in two steps: (i) collection of BF using possible knot’s locations, and (ii) decision on number of BF in final model (backward step). FB, generally known as splines, forms flexible model after combining and as mentioned earlier, connection points of two consecutive splines are knots. Schematic representing typical MARS model having splines with knots and BF can be seen in Fig. 13a, b, respectively. Suman et al. (2016) used MARS for stability analysis of soil slopes and obtained R2 values of 0.923 and 0.922 for training and testing cases, respectively, which represents superior performance of MARS model. Incidentally, Singh et al. (2020) used MARS model while performing reliability analysis of rock slopes and observed R2 values of 0.999 for training as well as testing cases. Further information about MARS can be found in Yuvaraj et al. (2013), Zhang and Goh (2013), Suman et al. (2016), Zhang and Goh (2016), Goh et al. (2017, 2018), Zhang et al. (2017, 2019), and Bardhan et al. (2021).

Schematic representing a MARS model having splines and knots, and b basis function (BF) (Bardhan et al. 2021)

Monte Carlo Simulation (MCS)

Monte Carlo Simulation (MCS) uses SoCom techniques considering not only impact of uncertainty but also risk associated (Mahdiyar et al. 2017). When the failure domain cannot be presented in analytical form and the analytical solution is not attainable, MCS is employed in slope stability problems (Tobutt 1982). MCS can also be applied in situations of complex nature of problems, having large number of basic variables, and also in some cases, where other methods are not applicable or not able to perform. MCS mathematical formulation is comparatively simple and has the capability to handle every practical possible case regardless of its complexity level. Excessive computational effort is involved in conventional MCS. Due to this, by reducing the statistical error inherent in MCS, a lot of variance reduction technique (sampling technique), have been developed to improve the computational efficiency. Importance sampling and conditional expectation are of special interest due to their potential of being very efficient (Dyson and Tolooiyan 2019; Kong et al. 2022).

Suppose, a limit state function be F(Y), having vector of basic random variable Y = (Y1, Y2, Y3,……., Yn), then the probability of failure can be expressed using Eq. (15):

where fy(Y) is the joint probability and F(Y) is an irregular domain (non-linear boundaries). Also, impartial estimator of probability of failure can be given by Eq. (16)

where I(Yi) is an indicator which can be defined using Eq. (17).

Probability of failure (pf) in terms of sample mean using MCS can be expressed as presented in Eq. (18).

where n = total number of simulations and ni = number of successful simulations.

Using values of mean and coefficient of variation (ASTM International 1966; Kadar 2017), standard deviation can be calculated and thus generation of data can be processed through MCS using command formula “randn”. Different datasets of 50, 100, 500, 1000, 5000, 10,000 etc., from a limited number of datasets can be generated using MATLAB and the equation can be represented by Eq. (19).

where μ = mean dataset values, σ = standard deviation of dataset values, and N = number of datasets needed to be generated.

Genetic programming (GP)

Genetic programming (GP) is an extension of John Holland’s genetic algorithm (Holland 1975). GP creates a population of fixed length character string based on the Darwinian operation of ‘Survival of fittest’, each representing possible solution of the problem. While obtaining iterations solution, GP algorithm uses an evolutionary function which uses genetic manipulation (chromosomes) in the form of mutation, crossover, and reproduction. Random population contains terminals and functions hierarchically as branches of a tree. Some of the functions used for computations like: (i) Boolean (NOR, OR, AND, NAND, NOT, etc.), (ii) Integers (1, 3, 7, 44, 69, 98, etc.), (iii) Arithmetic (+ , -, ÷ , × , etc.), and (iv) Trigonometric (sinh, cos, sech, cot, etc.). A blind random exploration of maiden population is created in the space of approx. solutions. Optimized fitness function is obtained by GP by direct searches in the space of chromosomes. Thus, fitness function is created for each chromosome. However, each chromosome act as a separate case, the search remains parallel and tests proceeds on individual possible solution. Fitness value, linked to each individual, monitors search. Structure and the framework of the genetic algorithm (GA) can be found in Fig. 14. More details of GP can be found in Koza (1992, 1994), Manouchehrian et al. (2014) and Bardhan et al. (2021). Singh et al. (2019) applied GP while performing reliability analysis of rock slope and proposed an equation of FoS as given below.

where ϕ = angle of internal friction, c = cohesion and σt = residual tensile stress.

Other soft computing methods adopted include gated recurrent unit (GRU) networks (Zhang et al. 2022b), ensemble learning (Zhang et al. 2022c), Long Short-Term Memory Neural Network and Prophet Algorithm (Tang et al. 2021).

Quality assessment

Data preprocessing techniques

Data analysis using SoCom models deals with huge data having various (varying) patterns. The raw data has complexities associated with it in the form of: (i) noisy data, (ii) missing values, (iii) inconsistent data, (iv) incomplete data, and (v) outlier data, etc. Thus, in order to enhance the efficiency of data, various advanced Data-preprocessing techniques needs to be applied as an essential step. A typical flowchart representing data-preprocessing technique is presented in Fig. 15. Therefore, in this context, several techniques like (i) cleaning, (ii) transformation, (iii) integration, and (iv) reduction have been elaborately discussed in the below sections.

Data integration

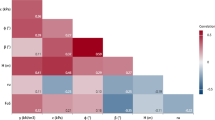

Data from various resources viz., data warehouse combines in data integration technique and can be having multiple database, data cubes and files. Issues like redundancy, object matching and scheme integration are issues associated with data integration. Some redundancies can be detected using correlation analysis. Attributes of one associated on the other can be measured from correlation between two variables.

Aggregation

It is the process of summarizing the data using mean, median and variance. The aggregated data thus obtained are used in data mining algorithms.

Data transformation

It is the process of transforming the data suitable for mining process.

Smoothing

The process of removal of noise from the data is known as smoothing of the data. Techniques generally used for smoothing the data are regression, clustering and binning.

Normalization

Normalization is the process of adjusting the data into specific range values, viz., − 1 to 1 and 0 to 1. Normalization is useful in mining techniques including clustering algorithms, artificial neural network and classification.

Data reduction

Data reduction technique is used to reduce representation of dataset in smaller volumes maintaining the integrity of the original dataset.

Binning

Binning is splitting top to down technique having dependency on bins number. Hierarchy generation and discretization also being conducted by data smoothing techniques like binning. For discretizing the values of attribute, equal-frequency and equal-width binning can be performed by replacing the bin value by median or mean similar to smoothing. Binning does not use class label and thus unsupervised technique, and also depends on bin numbers specified by the user.

Performance measures

Factor of safety (FoS) plays vital role in stability analysis of slopes and is a key factor for ensuring the performance of conventional methods. However, performance of SoCom models is used to be analysed based on different statistical parameters as mentioned in Table 3. More details of Table 3 can be found in Singh et al. (2020) and Kumar et al. (2021).

Discussion

The challenges associated with the slope stability analysis are the limited knowledge, data and site specificity of the problems and thus, SoCom models seems to be a promising tool to solve problems which can even perform in cases where no prior relationship between the predictors and predicted variables are available, which makes SoCom methods advantageous over conventional statistical and empirical relationships. It is prudent to mention that, many researchers have worked extensively and developed several SoCom models, performance of whose depends on a number of factors which have been discussed elaborately in this review. However, suitability of SoCom models generally decided on the basis of several statistical parameters (viz., R2, RMSE, MABE, and RMSE etc.), which depends on (i) input parameters, (ii) slope conditions, (iii) training and testing of dataset, (iv) number of data points and many other factors. Thus, in the era of AI, SoCom models seems to be an emerging and efficient methods for doing analysis of different types of problems which are not easy to solve using conventional methods.

However, there are some challenges in using SoCom in slope stability problems due to the (i) unavailability of data in some studies which are location specific and varies from place to place, (ii) underfitting and overfitting of models, (iii) as SoCom models are case and site-specific, there are chances of mis-fitting of data which may result in falsified output. Thus, more extensive and comprehensive research needs to be continued on SoCom models to enhance the efficiency of these methodologies.

Conclusion and way-forward

Applications and suitability of SoCom models and recent developments in the context of slope stability of geotechnical structures have been elaborately discussed in this paper. Furthermore, the analysis of conventional methods which have been continuously used since ages have also been considered in this article. The authors believe that most of the SoCom models are user-friendly and time-saving and are good substitution to conventional methods. Incidentally, the problems associated in the field of geotechnical engineering, especially slope stability, evolve with number of variables which make it difficult for numerical modelling and also solving using conventional methods. Thus, in the era of big data and time-efficient requirements, SoCom models are acting like a boon to solve difficult and complex problems more efficiently and in least amount of time as compared to other methods. Authors believe that in future, application of SoCom models forecasting and analysis would be brighter as more and more research efforts are devoted in this area.

Abbreviations

- SoCom :

-

Soft computing

- FoS :

-

Factor of safety

- β :

-

Reliability index

- CA:

-

Cubist Algorithm

- MARS:

-

Multivariate adaptive regression splines

- ELM:

-

Extreme learning machines

- GPR:

-

Gaussian process regression

- GRNN:

-

Generalized regression neural networks

- MCS:

-

Monte-Carlo simulation

- SVM:

-

Support vector machine

- GP:

-

Genetic Programming

- FN:

-

Functional network

- sd :

-

Standard deviation

- AAE :

-

Average absolute error

- R 2 :

-

Coefficient of determination

- Adj R 2 :

-

Adjusted coefficient determination

- RMSE:

-

Root mean square error

- NS:

-

Nash–Sutcliffe efficiency

- VAF:

-

Variance account factor

- MAE:

-

Maximum absolute error

- MBE:

-

Mean bias error

- WMAE:

-

Weighted mean absolute error

- MAPE:

-

Mean absolute percentage error

- NMBE:

-

Normalized mean bias error

- RPD:

-

Relative percent difference

- LMI:

-

Legate and McCabe’s Index

- U 95 :

-

Uncertainty at 95% confidence level

- α, β :

-

Slope angle

- c :

-

Cohesion

- ϕ :

-

Angle of internal friction

- γ :

-

Unit weight

- τ :

-

Shear stress

References

Al-karni AA, Al-shamrani MA (2000) Study of the effect of soil anisotropy on slope stability using method of slices. Comput Geotech 26:83–103

Armstrong JS, Collopy F (1992) Error measures for generalizing about forecasting methods: empirical comparisons. Int J Forecast 8:69–80. https://doi.org/10.1016/0169-2070(92)90008-W

ASTM International (1966) Testing Techniques For Rock Mechanics - American Society for Testing and Materials—Google Books. The Society, 1966

Baker R (2004) Nonlinear mohr envelopes based on triaxial data. J Geotech Geoenvironmental Eng 130:498–506. https://doi.org/10.1061/(asce)1090-0241(2004)130:5(498)

Bardhan A, Gokceoglu C, Burman A et al (2021) Efficient computational techniques for predicting the California bearing ratio of soil in soaked conditions. Eng Geol 291:106239. https://doi.org/10.1016/j.enggeo.2021.106239

Behar O, Khellaf A, Mohammedi K (2015) Comparison of solar radiation models and their validation under Algerian climate—the case of direct irradiance. Energy Convers Manag 98:236–251. https://doi.org/10.1016/j.enconman.2015.03.067

Bieniawski ZT (1989) Engineering rock mass classifications: a complete manual for engineers and geologists in mining, civil, and petroleum engineering. John Wiley & Sons, Hoboken

Bishop AW (1955) The use of the slip circle in the stability analysis of slopes. Geotechnique 5:7–17. https://doi.org/10.1680/geot.1955.5.1.7

Boser BE, Vapnik VN, Guyon IM (1992) Training Algorithm Margin for Optimal Classifiers. Perception 144–152

Bui XN, Nguyen H, Choi Y et al (2020) Prediction of slope failure in open-pit mines using a novel hybrid artificial intelligence model based on decision tree and evolution algorithm. Sci Rep 10:1–17. https://doi.org/10.1038/s41598-020-66904-y

Chen L, Zhang W, Gao X, Wang L, Li Z, Böhlke T, Perego U (2020) Design charts for reliability assessment of rock bedding slopes stability against bi-planar sliding: SRLEM and BPNN approaches. Georisk. https://doi.org/10.1080/17499518.2020.1815215

Dijkstra TA, Dixon N (2010) Climate change and slope stability in the UK: challenges and approaches. Q J Eng Geol Hydrogeol 43:371–385. https://doi.org/10.1144/1470-9236/09-036

Dyson AP, Tolooiyan A (2019) Prediction and classification for finite element slope stability analysis by random field comparison. Comput Geotech 109:117–129. https://doi.org/10.1016/j.compgeo.2019.01.026

Etemad-Shahidi A, Bali M (2012) Stability of rubble-mound breakwater using H50 wave height parameter. Coast Eng 59:38–45. https://doi.org/10.1016/j.coastaleng.2011.07.002

Friedman JH (1991) Multivariate adaptive regression splines. Ann Stat. https://doi.org/10.1214/aos/1176347963

Goh ATC, Zhang Y, Zhang R, Zhang W, Xiao Y (2017) Evaluating stability of underground entry-type excavations using multivariate adaptive regression splines and logistic regression. Tunn Undergr Space Technol 70:148–154

Goh ATC, Zhang WG, Zhang YM, Xiao Y, Xiang YZ (2018) Determination of EPB tunnel-related maximum surface settlement: a multivariate adaptive regression splines approach. Bull Eng Geol Environ 77:489–500

Gueymard C (2014) A review of validation methodologies and statistical performance indicators for modeled solar radiation data: towards a better bankability of solar projects. Renew Sustain Energy Rev 39:1024–1034

Hassan MA, Ismail MAM, Shaalan HH (2022) Numerical modeling for the effect of soil type on stability of embankment. Civ Eng J 7:41–57. https://doi.org/10.28991/CEJ-SP2021-07-04

Hoek E, Brown ET (1997) Practical estimates of rock mass strength. Int J Rock Mech Min Sci 34:1165–1186. https://doi.org/10.1016/S1365-1609(97)80069-X

Hoek E, Marinos P, Benissi M (1998) Applicability of the geological strength index (GSI) classification for very weak and sheared rock masses. The case of the Athens Schist Formation. Bull Eng Geol Environ 57:151–160. https://doi.org/10.1007/s100640050031

Holland JH (1975) Adaptation in Natural and Artificial Systems. Univ Michigan Press Ann Arbor Mich. https://doi.org/10.1007/s11096-006-9026-6

Janbu N (1973) Slope stability computations. John Wiley Sons, Hoboken

Javankhoshdel S, Bathurst RJ (2014) Simplified probabilistic slope stability design charts for cohesive and cohesive-frictional (c-ø) soils. Can Geotech J 1045:1033–1045

Jung NC, Popescu I, Kelderman P et al (2010) Application of model trees and other machine learning techniques for algal growth prediction in yongdam reservoir, Republic of Korea. J Hydroinformatics 12:262–274. https://doi.org/10.2166/hydro.2009.004

Kadar I (2017) The examination of different soil parameters’ coefficient of variation values and types of distributions. issmge.org

Kainthola A, Verma D, Thareja R, Singh TN (2013) A review on numerical slope stability analysis. Int J Sci Eng Technol Res 2:1315–1320

Kardani N, Bardhan A, Kim D et al (2021) Modelling the energy performance of residential buildings using advanced computational frameworks based on RVM, GMDH, ANFIS-BBO and ANFIS-IPSO. J Build Eng 35:102105. https://doi.org/10.1016/j.jobe.2020.102105

Keshtegar B, Kisi O (2017) M5 model tree and Monte Carlo simulation for efficient structural reliability analysis. Appl Math Model 48:899–910. https://doi.org/10.1016/j.apm.2017.02.047

Kong D, Luo Q, Zhang W et al (2022) Reliability analysis approach for railway embankment slopes using response surface method based Monte Carlo simulation. Geotech Geol Eng. https://doi.org/10.1007/s10706-022-02168-9

Koza JR (1994) Genetic programming as a means for programming computers by natural selection. Stat Comput 4:87–112. https://doi.org/10.1007/BF00175355

Koza J (1992) Genetic programming: on the programming of computers by means of natural selection

Kuhn M, Weston S, Keefer C, Coulter N (2016) Cubist Models For Regression. R Packag Vignette R Packag version 00 18

Kumar M, Bardhan A, Samui P et al (2021) Reliability analysis of pile foundation using soft computing techniques: a comparative study. Processes 9:486. https://doi.org/10.3390/pr9030486

Kung GT, Juang CH, Hsiao EC, Hashash YM (2007) Simplified model for wall deflection and ground-surface settlement caused by braced excavation in clays. J Geotech Geoenvironmental Eng 133:731–747. https://doi.org/10.1061/(ASCE)1090-0241(2007)133:6(731)

LeBlanc M, Tibshirani R (1994) Adaptive principal surfaces. J Am Stat Assoc 89:53–64. https://doi.org/10.1080/01621459.1994.10476445

Legates DR, Mccabe GJ (2013) A refined index of model performance: a rejoinder. Int J Climatol 33:1053–1056. https://doi.org/10.1002/joc.3487

Li YX, Yang XL (2019) Soil-slope stability considering effect of soil-strength nonlinearity. Int J Geomech 19:04018201. https://doi.org/10.1061/(asce)gm.1943-5622.0001355

Lin Y, Zhou K, Li J (2018) Prediction of slope stability using four supervised learning methods. IEEE Access 6:31169–31179. https://doi.org/10.1109/ACCESS.2018.2843787

Liu Z, Shao J, Xu W et al (2014) An extreme learning machine approach for slope stability evaluation and prediction. Nat Hazards 73:787–804. https://doi.org/10.1007/s11069-014-1106-7

Liu Q, Liu Y, Peng J et al (2018) Linking GRNN and neighborhood selection algorithm to assess land suitability in low-slope hilly areas. Ecol Indic 93:581–590. https://doi.org/10.1016/j.ecolind.2018.05.008

Liu LL, Zhang P, Zhang SH et al (2022) Efficient evaluation of run-out distance of slope failure under excavation. Eng Geol 306:106751. https://doi.org/10.1016/J.ENGGEO.2022.106751

Liu ZB, Xu WY, Jin HY, Liu DW (2010) Study on warning criterion for rock slope on left bank of Jinping No.1 Hydropower Station. Shuili Xuebao/Journal Hydraul Eng 41:

Luo Z, Bui XN, Nguyen H, Moayedi H (2021) A novel artificial intelligence technique for analyzing slope stability using PSO-CA model. Eng Comput 37:533–544. https://doi.org/10.1007/s00366-019-00839-5

Mahdiyar A, Hasanipanah M, Armaghani DJ et al (2017) A Monte Carlo technique in safety assessment of slope under seismic condition. Eng Comput 33:807–817. https://doi.org/10.1007/s00366-016-0499-1

Manouchehrian A, Gholamnejad J, Sharifzadeh M (2014) Development of a model for analysis of slope stability for circular mode failure using genetic algorithm. Environ Earth Sci 71:1267–1277. https://doi.org/10.1007/s12665-013-2531-8

Mehta AK, Kumar D, Singh P, Samui P (2021) Modelling of Seismic Liquefaction Using Classification Techniques. Int J Geotech Earthq Eng 12:12–21. https://doi.org/10.4018/IJGEE.2021010102

Mockus J (2005) The Bayesian approach to global optimization. System modeling and optimization. Kluwer academic publishers, New York, pp 473–481

Nash JE, Sutcliffe JV (1970) River flow forecasting through conceptual models part I—a discussion of principles. J Hydrol 10:282–290. https://doi.org/10.1016/0022-1694(70)90255-6

Peethambaran B, Kanungo DP, Anbalagan R (2022) Insights to pre- and post-event stability analysis of rainfall-cum-anthropogenically induced recent Laxmanpuri landslide, Uttarakhand, India. Environ Earth Sci 81:1–11. https://doi.org/10.1007/S12665-021-10143-5

Phoon K-K, Zhang W (2022) Future of machine learning in geotechnics. Georisk Assess Manag Risk Eng Syst Geohazards. https://doi.org/10.1080/17499518.2022.2087884

Pinheiro M, Sanches S, Miranda T et al (2015) A new empirical system for rock slope stability analysis in exploitation stage. Int J Rock Mech Min Sci 76:182–191. https://doi.org/10.1016/j.ijrmms.2015.03.015

Polat Ö, Yildirim T (2008) Genetic optimization of GRNN for pattern recognition without feature extraction. Expert Syst Appl 34:2444–2448. https://doi.org/10.1016/j.eswa.2007.04.006

Pouladi N, Møller AB, Tabatabai S, Greve MH (2019) Mapping soil organic matter contents at field level with Cubist, Random Forest and kriging. Geoderma 342:85–92. https://doi.org/10.1016/j.geoderma.2019.02.019

Prasomphan S, Machine SM (2013) Generating prediction map for geostatistical data based on an adaptive neural network using only nearest neighbors. Int J Mach Learn Comput 3:98

Quinlan JR (1993) A case study in machine learning. Proc 16th Aust Comput Sci Conf 2:731–737

Rafiei Renani H, Martin CD (2020) Slope stability analysis using equivalent mohr-coulomb and hoek-brown criteria. Rock Mech Rock Eng 53:13–21. https://doi.org/10.1007/s00603-019-01889-3

Rasmussen CE (2004) Gaussian processes in machine learning. Springer, Berlin, Heidelberg, pp 63–71

Ray R, Choudhary SS, Roy LB (2022) Reliability analysis of soil slope stability using MARS, GPR and FN soft computing techniques. Model Earth Syst Environ 8:2347–2357. https://doi.org/10.1007/S40808-021-01238-W

Sakellariou MG, Ferentinou MD (2005) A study of slope stability prediction using neural networks. Geotech Geol Eng 23:419–445. https://doi.org/10.1007/s10706-004-8680-5

Samui P (2008) Slope stability analysis: a support vector machine approach. Environ Geol 56:255–267. https://doi.org/10.1007/s00254-007-1161-4

Singh P, Kumar D, Samui P (2020) Reliability analysis of rock slope using soft computing techniques. Jordan J Civ Eng 14:27–42

Singh P, Mehta A, Kumar D, Samui P (2019) Rock slope reliability analysis using genetic programming. In: ICGGE. MNNIT Allahabad, ICGGE- 2019, Allahabad, pp 1–7

Specht DF (1991) A general regression neural network. IEEE Trans Neural Netw 6:568–576. https://doi.org/10.1109/72.97934

Srinivasulu S, Jain A (2006) A comparative analysis of training methods for artificial neural network rainfall-runoff models. Appl Soft Comput J 6:295–306. https://doi.org/10.1016/j.asoc.2005.02.002

Steward T, Sivakugan N, Shukla SK, Das BM (2011) Taylor’s slope stability charts revisited. Int J Geomech 11:348–352. https://doi.org/10.1061/(asce)gm.1943-5622.0000093

Stone RJ (1993) Improved statistical procedure for the evaluation of solar radiation estimation models. Sol Energy 51:289–291. https://doi.org/10.1016/0038-092X(93)90124-7

Suman S, Khan SZ, Das SK, Chand SK (2016) Slope stability analysis using artificial intelligence techniques. Nat Hazards 84:727–748. https://doi.org/10.1007/s11069-016-2454-2

Taheri A, Tani K (2010) Assessment of the stability of Rock slopes by the slope stability rating classification system. Rock Mech Rock Eng 43:321–333. https://doi.org/10.1007/s00603-009-0050-4

Tang L, Ma Y, Wang L, Zhang W, Zheng L, Wen H (2021) Application of long short-term memory neural network and prophet algorithm in slope displacement prediction. Int J Geoeng Case Hist 6(4):48–66

Taylor D (1937) Stability of earth slopes. J Bost Soc Civ

Tinoco J, Gomes Correia A, Cortez P, Toll DG (2018) Stability condition identification of rock and soil cutting slopes based on soft computing. J Comput Civ Eng 32:04017088. https://doi.org/10.1061/(asce)cp.1943-5487.0000739

Tobutt DC (1982) Monte Carlo simulation methods for slope stability. Comput Geosci 8:199–208. https://doi.org/10.1016/0098-3004(82)90021-8

Verma D, Kainthola A, Thareja R, Singh TN (2013) Stability analysis of an open cut slope in Wardha valley coal field. J Geol Soc India 81:804–812. https://doi.org/10.1007/s12594-013-0105-8

Viscarra Rossel RA, McGlynn RN, McBratney AB (2006) Determining the composition of mineral-organic mixes using UV-vis-NIR diffuse reflectance spectroscopy. Geoderma 137:70–82. https://doi.org/10.1016/j.geoderma.2006.07.004

Wang Y, Witten IH (1997) Induction of model trees for predicting continuous classes. In: Proc. 9th Eur. Conf. Mach. Learn. Poster Pap. 128–137

Wang Z, Liu H, Gao X, Böhlke T, Zhang W (2020) Stability analysis of soil slopes based on strain information. Acta Geotech 15(11):3121–3134

Wang Z, Gu D, Zhang W (2021) A DEM study on influence of excavation schemes on slope stability. J Mt Sci 17:1509–1522

Williams CKI (1998) Prediction with Gaussian processes: from linear regression to linear prediction and beyond. Learning in graphical models. Springer, Netherlands, Dordrecht, pp 599–621

Wong JL, Lee ML, Teo FY, Liew KW (2022) A review of impacts of climate change on slope stability. Lect Notes Civ Eng 178:157–178. https://doi.org/10.1007/978-981-16-5501-2_13

Wu C, Hong L, Wang L et al (2022) Prediction of wall deflection induced by braced excavation in spatially variable soils via convolutional neural network, Gondwana Research, https://doi.org/10.1016/j.g.

Xiang X, Zi-Hang D (2017) Numerical implementation of a modified Mohr-Coulomb model and its application in slope stability analysis. J Mod Transp 25:40–51. https://doi.org/10.1007/s40534-017-0123-0

Yuan C, Moayedi H (2020) The performance of six neural-evolutionary classification techniques combined with multi-layer perception in two-layered cohesive slope stability analysis and failure recognition. Eng Comput 36:1705–1714. https://doi.org/10.1007/s00366-019-00791-4

Yuvaraj P, Ramachandra Murthy A, Iyer NR et al (2013) Multivariate adaptive regression splines model to predict fracture characteristics of high strength and ultra high strength concrete beams. Comput Mater Contin 36:73–97

Zhang WG, Goh ATC (2013) Multivariate adaptive regression splines for analysis of geotechnical engineering systems. Comput Geotech 48:82–95

Zhang WG, Goh ATC (2016) Multivariate adaptive regression splines and neural network models for prediction of pile drivability. Geosci Frontiers 7:45–52

Zhang WG, Phoon KK (2022) Editorial for Advances and applications of deep learning and soft computing in geotechnical underground engineering. J Rock Mech Geotech Eng 4:671–673

Zhang T, Cai Q, Han L et al (2017) 3D stability analysis method of concave slope based on the Bishop method. Int J Min Sci Technol 27:365–370. https://doi.org/10.1016/j.ijmst.2017.01.020

Zhang W, Zhang R, Wu C et al (2020) State-of-the-art review of soft computing applications in underground excavations. Geosci Front 11:1095–1106. https://doi.org/10.1016/j.gsf.2019.12.003

Zhang W, Li H, Li Y, Liu H, Chen Y, Ding X (2021) Application of deep learning algorithms in geotechnical engineering: a short critical review. Artif Intell Rev 54(8):5633–5673

Zhang WG, Li HR, Tang LB, Gu X, Wang LQ, Wang L (2022) Displacement prediction of Jiuxianping landslide using gated recurrent unit (GRU) networks. Acta Geotech. https://doi.org/10.1007/s11440-022-01495-8

Zhang W, Li H, Han L, Chen L, Wang L (2022a) Prediction of slope stability using ensemble learning techniques: a case study in Yunyang County, Chongqing, China. J Rock Mech Geotech Eng. https://doi.org/10.1016/j.jrmge.2021.12.011

Zhang W, Gu X, Tang L, Yin Y, Liu D, Zhang Y (2022b) Application of machine learning, deep learning and optimization algorithms in geoengineering and geoscience: comprehensive review and future challenge. Gondwana Res 109:1–17

Zhang J, Li J (2019) A comparative study between infinite slope model and Bishop’s method for the shallow slope stability evaluation. 25:1503–1520. https://doi.org/10.1080/19648189.2019.1584768

Zhou J, Li E, Yang S et al (2019) Slope stability prediction for circular mode failure using gradient boosting machine approach based on an updated database of case histories. Saf Sci 118:505–518. https://doi.org/10.1016/j.ssci.2019.05.046

Funding

High-end Foreign Experts Recruitment Plan of China, DL2021165001L, Zhang, G20200022005, Wengang Zhang, Chongqing Science and Technology Commission, 2019-0045, Wengang Zhang.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors wish to confirm that there are no known conflicts of interest associated with this publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Singh, P., Bardhan, A., Han, F. et al. A critical review of conventional and soft computing methods for slope stability analysis. Model. Earth Syst. Environ. 9, 1–17 (2023). https://doi.org/10.1007/s40808-022-01489-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40808-022-01489-1