Abstract

We present a linear-time approximation scheme for solving the trust region subproblem (TRS). It employs Nesterov’s accelerated gradient descent algorithm to solve a convex programming reformulation of (TRS). The total time complexity is less than that of the recent linear-time algorithm. The algorithm is further extended to the two-sided trust region subproblem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the classical trust region subproblem

where \(A\in {\mathbb {R}}^{n\times n}\) is symmetric and possibly indefinite and \(b\in {\mathbb {R}}^{n}\). (TRS) plays a great role in trust region methods [2, 14] for nonlinear programming. Efficient numerical algorithms for (TRS) can be found in [5, 8]. When \(b = 0\), (TRS) reduces to the maximal eigenvector problem, which can be approximated in linear time [4]. Recently, the first linear-time approximation algorithm for (TRS) is proposed [6] based on strong duality, approximate eigenvector oracle and the bisection scheme.

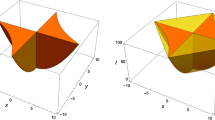

In this paper, we present a new and simple linear-time approximation scheme for solving (TRS). Actually, our algorithm works for a more general version of (TRS), the two-sided trust region subproblem:

where \(0\le \alpha \le 1\). (TTRS) was first introduced in [11]. For a recent survey on (TTRS), we refer to [10].

In the following, we study (TTRS) and keep in mind that (TRS) is a special case of (TTRS) when \(\alpha = 0\). Our approximation scheme contains two main steps. First we establish a new convex programming reformulation for (TTRS), which is different from the existing convex reformulations [1, 13]. Secondly, we employ Nesterov’s accelerated gradient descent algorithm [9] to solve the convexified version of (TTRS). The total worst-case time complexity is even less than that of the recent linear-time algorithm [6], which only works for (TRS).

The remainder of this paper is as follows. Section 2 presents a new hidden convexity for (TTRS). Section 3 applies Nesterov’s fast gradient method to solve the convexified formulation of (TTRS) and then analyzes the worst-case time complexity. The complexity is even less than the recent linear-time algorithm for (TRS). Conclusions are made in Sect. 4.

2 Hidden convexity

In this section, we present a new convex quadratic constrained quadratic programming reformulation for (TTRS).

Let \(v(\cdot )\) be the optimal value of \((\cdot )\). Denote by \({\lambda _1}\) the minimal eigenvalue of A. Let \(v\ne 0\) be the corresponding eigenvector. Without loss of generality, we assume \(b^Tv\le 0\).

Theorem 1

(TTRS) is equivalent to the following convex programming relaxation

in the sense that v(TTRS) \(= v\)( C). Moreover, for any optimal solution of \((\mathrm{C})\), denoted by \((x^*,t^*)\),

globally solves \((\mathrm{TTRS})\).

Proof

Let \({\widetilde{x}}\) be an optimal solution of \((\mathrm{TTRS})\). Then, \(({\widetilde{x}},\widetilde{t}:={\widetilde{x}}^T{\widetilde{x}})\) is a feasible solution of (C) whose objective value is equal to \(v(\mathrm{TTRS})\). Therefore, we have \(v(\mathrm{TTRS})\ge v(\mathrm{C})\). Now it is sufficient to show that the lower bound \(v(\mathrm{C})\) is attained for (TTRS).

Let \((x^*,t^*)\) be an optimal solution of \((\mathrm{C})\). We assume \(x^{*T}x^*<t^*\), otherwise, we are done. Define

Then, \((x(\gamma ),t^*)\) is a feasible solution of (C) for any \(\gamma \) such that \(x(\gamma )^Tx(\gamma )\le t^*\). Moreover, the objective value of \((x(\gamma ),t^*)\) is decreasing when \(\gamma \ge 0\) increases. Consequently, \((x(\gamma ),t^*)\) remains optimal for \(\gamma \ge 0\) and \(x(\gamma )^Tx(\gamma )\le t^*\). Notice that the equation \(x(\gamma )^Tx(\gamma )= t^*\) in terms of \(\gamma \) has a positive root \(\frac{\sqrt{(x^{*T}v)^2-v^Tv(x^{*T}x^*-t^*)}-x^{*T}v}{v^Tv}\). The proof is complete. \(\square \)

When \(\alpha =0\), Theorem 1 reduces to the following result for (TRS).

Corollary 1

([3], Theorem 1) Let \(\mu =\min \{\lambda _1,0\}\). \((\mathrm{TRS})\) is equivalent to the convex programming relaxation

in the sense that \(v(\mathrm{TRS})=v(\mathrm{C_0})\). Moreover, when \(\lambda _1<0\), for any optimal solution of \((\mathrm{C_0})\), denoted by \(x^*\),

globally solves \((\mathrm{TRS})\).

To approximately calculate \({\lambda _1}\), we need the following estimation [6] on the maximal eigenvalue of A, denoted by \(\lambda _{\max }(A)\), which is based on the analysis by Kuczynski and Wozniakowski [7].

Theorem 2

[6, 7] Given a symmetric matrix \(A \in {\mathbb {R}}^{n\times n}\) with \(\left\| A \right\| \le \rho \), parameters \(\varepsilon , \delta >0\) and let \(N(\ge n)\) is an upper bound of the number of non-zero entries in A, the Lanczos method [4] runs in time \(O(\frac{{N\sqrt{\rho }}}{{\sqrt{\varepsilon }}}\log \frac{n}{\delta })\) and returns a unit vector \(x\in {\mathbb {R}}^n\) for which

with probability at least \(1 - \delta \).

Notice that \(\left\| \rho I-A \right\| \le 2\rho \) and the maximal eigenvalue of \(\rho I-A\) is equal to \(\rho -\lambda _1\). Thus, applying Theorem 2 to \(\rho I-A\) yields the following estimation on \(\lambda _1\).

Corollary 2

Given a symmetric matrix \(A \in {\mathbb {R}}^{n\times n}\) with \(\left\| A \right\| \le \rho \) , parameters \(\varepsilon , \delta >0\) and let \(N(\ge \) n) is an upper bound of the number of non-zero entries in A, the Lanczos method [4] runs in time \(O(\frac{{N\sqrt{\rho }}}{{\sqrt{\varepsilon }}}\log \frac{n}{\delta })\) and returns a unit vector \({\widetilde{v}}\in {\mathbb {R}}^n\) for which

with probability at least \(1 - \delta \).

Define \({\widetilde{\lambda }}_1={\widetilde{v}}^TA{\widetilde{v}}-\epsilon \). Then,

As an approximation of (C), we turn to solve the following convex programming problem

It is not difficult to verify that

3 Nesterov’s accelerated gradient method and complexity

In this section, we employ Nesterov’s accelerated gradient descent method [9] to solve (AC) (7), (8) and then study the worst-case time complexity.

Notice that g(x, t) defined in (7) is a convex quadratic function. Then, the gradient of g, denoted by \(\nabla g\), is Lipschitz continuous:

where \(\rho \) is an upper bound of \(\left\| A \right\| \).

To solve (AC) (7), (8), we use the following algorithm in [12], which is a slightly generalized version of Nesterov’s accelerated gradient method [9].

Algorithm 1

-

1.

Choose \({\theta _0} = {\theta _{ - 1}} \in (0,1]\), \((x_0,t_0)=(x_{ - 1}, t_{ - 1})\in X\). Let \(k:=0\).

-

2.

Let

$$\begin{aligned} (y_k,s_k) = (x_k,t_k) + {\theta _k}(\theta _{k - 1}^{ - 1} - 1)((x_k,t_k)- (x_{k-1},t_{k-1})) \end{aligned}$$and \(\nabla g_k=\nabla g(y_k,s_k)\). Update

$$\begin{aligned} (x_{k+1},t_{k+1})= \arg \min _{(x,t) \in X}\nabla g_k^T(x,t)+\rho \Vert (x,t)-(y_k,s_k)\Vert ^2. \end{aligned}$$(10) -

3.

Choose \({\theta _{k + 1}} \in (0,1]\) satisfying

$$\begin{aligned} \frac{{1 - {\theta _k}}}{{\theta _{k + 1}^2}} \le \frac{1}{{\theta _k^2}}. \end{aligned}$$If the stopping criterion does not hold, update \(k:=k + 1\), and go to step 2.

Theorem 3

[9, 12] Let \(\left\{ {({x_k},{t_k})} \right\} \) be generated by Algorithm 1, \((x^*,t^*)\) be the global minimizer of (AC) (7), (8), then

That is, Algorithm 1 returns an \(\epsilon \)-approximation solution of (AC) in \(O(\sqrt{\rho /\varepsilon })\) iterations.

In each iteration of Algorithm 1, the main computational cost is to solve (10). In the following, we show the solution details of (10). We first rewrite the objective function of (10) as follows

where \(c=(A - {\widetilde{\lambda }}_1 I - 2\rho I){y_k} + b\), \(d={\widetilde{\lambda }}_1/2 - 2\rho s_k\) and \(e=\rho \Vert (y_k,s_k)\Vert ^2\).

Now, (10) is equivalent to the following convex program:

It is sufficient to solve the KKT system of (12)–(14), which reads as follows:

where \(\mu _1\) and \(\mu _2\) are the Lagrangian multipliers corresponding to (13) and (14), respectively.

Notice that \(\rho >0\), \(\mu _1\ge 0\) and \(\mu _2\ge 0\). It follows from (15) and (16) that

Consider the following different cases.

-

(a)

Suppose \(\mu _1=0\) and \(\mu _2=0\). If \(x(0)^Tx(0)\le t(0,0)\) and \(\alpha \le t(0,0)\le 1\), then (x(0), t(0, 0)) is the optimal solution of (10).

-

(b)

Suppose \(\mu _1=0\) and \(\mu _2>0\). According to (18), \(t(0,\mu _2)=\alpha \) or 1. Hence, \(\mu _2\) is solved. If \(x(0)^Tx(0)\le t(0,\mu _2)\), then \((x(0),t(0,\mu _2))\) is the optimal solution of (10).

-

(c)

Suppose \(\mu _1>0\) and \(\mu _2=0\). According to (17), we have \(x(\mu _1)^Tx(\mu _1)= t(\mu _1,0)\). Consequently, \(\mu _1\) is obtained by solving this cubic equation. If \(\alpha \le t(\mu _1,0)\le 1\), then \((x(\mu _1),t(\mu _1,0))\) is the optimal solution of (10).

-

(d)

Suppose \(\mu _1>0\) and \(\mu _2>0\). It follows from (17) to (18) that \(x(\mu _1)^Tx(\mu _1)= t(\mu _1,\mu _2)\), and \(t(\mu _1,\mu _2)=\alpha \) or 1. The first equation is cubic and the second is linear. Therefore, combining both yields a cubic function of \((\mu _1)\), which also has an explicit solution. For the obtained \((\mu _1,\mu _2)\), if \(x(\mu _1)^Tx(\mu _1)\le t(\mu _1,\mu _2)\) and \(\alpha \le t(\mu _1,\mu _2)\le 1\), then \((x(\mu _1),t(\mu _1,\mu _2))\) is the optimal solution of (10).

From above, we can see that (10) is modeled in O(N) time and then solved in O(n) time, where \(N(\ge n)\) is an upper bound of the number of non-zero entries in A. According to Theorem 3, we have the following estimation.

Lemma 1

The time complexity of approximately solving (AC) is

Furthermore, combining (9), Theorem 1, Corollary 2, and Lemma 1 yields the following main result.

Theorem 4

Given the parameters \(\epsilon >0\), \(\delta >0\), with probability at least \(1-\delta \) over the randomization of an approximate eigenvector oracle, we can find an \(\epsilon \)-approximation solution of (TTRS), \({\widetilde{x}}\in {\mathbb {R}}^n\), satisfying \(\alpha \le {\widetilde{x}}^T{\widetilde{x}}\le 1\) and

in total time

We notice that Hazan and Koren’s approximation scheme [6] is particular to (TRS). To the best of our knowledge, whether it can be extended to solve (TTRS) remains open. Nevertheless, even for (TRS), our complexity (23) is clearly less than Hazan and Koren’s [6]:

where \(\beta = \max ( \left\| A \right\| + \left\| b \right\| , 1)\).

4 Conclusions

Recently, the first linear-time algorithm for the trust region subproblem (TRS) is proposed based on strong duality, approximate eigenvector oracle and the bisection scheme. In this paper, we present a new linear-time approximation scheme for solving the trust region subproblem (TRS). It trivially employs Nesterov’s accelerated gradient descent algorithm to solve a convex quadratic constrained quadratic programming reformulation of (TRS). However, the total time complexity of our scheme is less than that of the recent one for (TRS). Our approach is further extended to the two-sided trust region subproblem. The future work will include more extensions to the other nonconvex quadratic programming problems.

References

Ben-Tal, A., Teboulle, M.: Hidden convexity in some nonconvex quadratically constrained quadratic programming. Math. Program. 72, 51–63 (1996)

Conn, A.R., Gould, N.I., Toint, P.L.: Trust Region Methods. Society for Industrial and Applied Mathematics, Philadelphia (2000)

Flippo, O.E., Jansen, B.: Duality and sensitivity in nonconvex quadratic optimization over an ellipsoid. Eur. J. Oper. Res. 94, 167–178 (1996)

Golub, G.H., Van Loan, C.F.: Matrix Computations. Johns Hopkins University Press, Baltimore (1989)

Gould, N.I.M., Lucidi, S., Roma, M., Toint, P.L.: Solving the trust-region subproblem using the Lanczos method. SIAM J. Optim. 9(2), 504–525 (1999)

Hazan, E., Koren, T.: A linear-time algorithm for trust region problems. Math. Program. 158(1), 363–381 (2016)

Kuczynski, J., Wozniakowski, H.: Estimating the largest eigenvalue by the power and Lanczos algorithms with a random start. SIAM J. Matrix Anal. Appl. 13(4), 1094–1122 (1992)

Moré, J.J., Sorensen, D.C.: SIAM J. Sci. Statist. Comput. Computing a trust region step 4(3), 553–572 (1983)

Nesterov, YuE: A method for unconstrained convex minimization problem with the rate of convergence \( O(1/k^2)\). Soviet Math. Dokl. 27(2), 372–376 (1983)

Pong, T.K., Wolkowicz, H.: Generalizations of the trust region subproblem. Comput. Optim. Appl. 58(2), 273–322 (2014)

Stern, R., Wolkowicz, H.: Indefinite trust region subproblems and nonsymmetric eigenvalue perturbations. SIAM J. Optim. 5(2), 286–313 (1995)

Tseng, P.: On accelerated proximal gradient methods for convex–concave optimization (unpublished manuscript) (2008)

Wang, S., Xia, Y.: Strong duality for generalized trust region subproblem: S-lemma with interval bounds. Optim. Lett. 9(6), 1063–1073 (2015)

Yuan, Y.: Recent advances in trust region algorithms. Math. Program. 151(1), 249–281 (2015)

Acknowledgments

The authors are grateful to the two anonymous referees for valuable comments. This research was supported by National Natural Science Foundation of China under Grants 11471325 and 11571029, and by fundamental research funds for the Central Universities under Grant YWF-15-SXXY.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Wang, J., Xia, Y. A linear-time algorithm for the trust region subproblem based on hidden convexity. Optim Lett 11, 1639–1646 (2017). https://doi.org/10.1007/s11590-016-1070-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-016-1070-0