Abstract

The interval bounded generalized trust region subproblem (GTRS) consists in minimizing a general quadratic objective, q 0(x)→min, subject to an upper and lower bounded general quadratic constraint, ℓ≤q 1(x)≤u. This means that there are no definiteness assumptions on either quadratic function. We first study characterizations of optimality for this implicitly convex problem under a constraint qualification and show that it can be assumed without loss of generality. We next classify the GTRS into easy case and hard case instances, and demonstrate that the upper and lower bounded general problem can be reduced to an equivalent equality constrained problem after identifying suitable generalized eigenvalues and possibly solving a sparse system. We then discuss how the Rendl-Wolkowicz algorithm proposed in Fortin and Wolkowicz (Optim. Methods Softw. 19(1):41–67, 2004) and Rendl and Wolkowicz (Math. Program. 77(2, Ser. B):273–299, 1997) can be extended to solve the resulting equality constrained problem, highlighting the connection between the GTRS and the problem of finding minimum generalized eigenvalues of a parameterized matrix pencil. Finally, we present numerical results to illustrate this algorithm at the end of the paper.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider the following quadratic minimization problem, which we call the interval bounded generalized trust region subproblem (GTRS):

Here A, \(B \in { \mathcal{S}^{n}}\), the space of real n×n symmetric matrices, a, \(b\in {\mathbb{R}}^{n}\), and −∞<l≤u<∞. To avoid trivialities, we assume that B≠0 and the GTRS is feasible.Footnote 1 We emphasize that the quadratic constraint is two-sided, and both the objective and constraint functions are possibly nonconvex. Thus we are essentially considering a general nonlinear program with a quadratic objective and two quadratic constraints.

Problem (1.1) is most studied in the one-sided ball-constrained case, i.e., the special case when B=I, b=0, and ℓ=0<u, known as the trust region subproblem (TRS):

These problems arise in regularization or trust region methods for unconstrained and constrained nonlinear programming; see, e.g., [7] for a comprehensive discussion. The TRS provides a quadratic model in a trust region around the current point. The use of scaled trust regions with a general B≻0 in the constraint (see, e.g. [15]) motivates our consideration of GTRS. In our general consideration of (1.1), our scaled trust region is defined with a possibly indefinite B; hence, x T Bx could be a quadratic form induced from the indefinite inner product 〈x,y〉:=x T By; see, e.g. [13]. This together with the two-sided constraints model annular/hyperbolic type regions that allow minimum as well as maximum steplengths. In addition, the possibly nonzero b accounts for a shift of the center of the trust region, which allows trust regions to be built around previous iterates.

Problem (1.2) is explicitly nonconvex, since A is not necessarily positive semidefinite. Nevertheless, it is implicitly convex in that necessary and sufficient optimality conditions have been derived, see Gay [12] and Moré and Sorensen [25]. The conditions are rephrased in a modern primal-dual paradigm in, for example, [7, 10, 11, 15, 29, 34]. The optimality conditions of the TRS can also be derived using the S-lemma; see, e.g. [28]. The S-lemma, developed by Yakubovich [36] in 1971, states whether a quadratic (in)equality is a consequence of other quadratic (in)equalities. The earliest results of this kind date back to Finsler [9] and Hestenes and McShane [19] and we refer the readers to [28] for a detailed account of its history and its applications. Turning to the more general problem (1.1), necessary and sufficient optimality conditions have been studied in [24, 34], under certain constraint qualifications in the special cases when b=0 or u=ℓ. The general case (1.1) was considered in [37, Sect. 2.1] under a dual strict feasibility assumption; see also [3, 8, 20]. Further references are available in the online bibliography [17].

The necessary and sufficient optimality conditions for (1.2) have been the basis for developing efficient algorithms for solving TRS. The classical algorithm [25] by Moré and Sorensen (MS algorithm) applies Newton’s method with backtracking to the so-called secular function, and takes a primal step to the boundary for near hard case instances. This algorithm can be expensive since each iteration requires a full Cholesky factorization. The MS algorithm was later incorporated into the generalized Lanczos trust-region (GLTR) method [15, 16]. The GLTR algorithm uses the Lanczos procedure to obtain a sequence of TRS on low dimensional subspaces with tridiagonal matrices as objectives. The resulting sequence of TRS is then solved efficiently by a variant of the MS algorithm; see [15, Sect. 5.2]. Another related algorithm is the sequential subspace method (SSM) [18]. This algorithm constructs very low dimensional subspaces that include a point obtained from a sequential quadratic programming subproblem. The resulting sequence of problems are diagonalized and solved by a Newton-secant type method. Another line of algorithms involves reformulating the TRS into a parameterized eigenvalue problem. This includes, e.g., the Rendl-Wolkowicz (RW) algorithm [11, 29], the large-scale trust-region subproblem (LSTRS) by Rojas et al. [31, 32] and its variant [21]. These algorithms are factorization free, and can take advantage of well developed eigensolvers for large, sparse matrices. Other algorithms for TRS can be found in, e.g. [33, 35]. Despite the many algorithms developed for TRS, there are currently no algorithms specifically designed for the general problem (1.1); though the special case when B≻0, b=0 and ℓ=0 can be solved by the GLTR method.

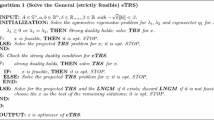

In this paper we present characterizations of optimality and propose an algorithm for solving large-scale instances of GTRS (1.1). Specifically, we obtain optimality conditions under a constraint qualification and show that it can be assumed without loss of generality. Our results show that even though the GTRS is a problem consisting of general, possibly nonconvex, quadratic functions, it has both necessary and sufficient optimality conditions as in convex programming, and it has strong duality results as in linear programming, in the sense that when the constraint qualification fails, the problem can be explicitly solved. Thus, the GTRS is implicitly convex like the classical TRS. It sits on the boundary between linear and nonlinear programming, and on the boundary between convex, and general nonconvex programming. We also discuss in detail the so-called easy and hard cases corresponding to (non)singularity of the Hessian of the Lagrangian, which was previously only studied for some special cases of (1.1) in [34]. Moreover, as in [11], we include a shift and deflate operation that finds an explicit solution in the hard case (case 2). We then demonstrate that the GTRS can be reduced to an equality constrained problem (i.e., an instance of GTRS with u=ℓ) after finding some suitable generalized eigenvalues and possibly solving a sparse system. To solve this equality constrained problem, we generalize the ideas in [11, 29] and transform the problem into a parameterized generalized eigenvalue problem. The latter problem can then take advantage of specialized solvers for finding generalized eigenvalues/eigenvectors, e.g., the eigifp developed in [14]. We compare this approach with the GLTR algorithm (when B≻0) and a simple implementation of Newton’s method with the Armijo line search rule as applied to solve the dual problem of (1.1). Our computational results on large-scale instances show that our approach is competitive with the Newton method and usually outperforms the GLTR algorithm for random sparse positive definite B in both runtime and solution accuracy. In the case when B is indefinite, our approach requires additional inputs for initialization; see Sect. 3.3 for details. Granting such inputs, our computational results show that our approach is competitive with the Newton method.

1.1 Outline

We complete this section with some preliminary notations in Sect. 1.2. We then present the characterizations of optimality in Sect. 2.1. This includes a constraint qualification that can be assumed without loss of generality in the sense that if it fails, then an explicit solution of the GTRS (1.1) can be obtained. In Sect. 2.2, we discuss the so-called easy and hard cases, the shift and deflation procedure to obtain an explicit solution in the hard case, and the reduction to an equality constrained problem. The algorithm for this equality constrained problem and its implementation details are discussed in Sect. 3. We present numerical tests in Sect. 3.4 and finally some concluding remarks in Sect. 4.

1.2 Notation

In this paper, the symbol \({ {\mathbb{R}}^{n}}\) denotes the n-dimensional vector space. For \(v\in { {\mathbb{R}}^{n}}\), ∥v∥ denotes the Euclidean norm of v. The space of n×n symmetric matrices equipped with the trace inner-product is denoted by \({ \mathcal{S}^{n}}\). For \(C\in { \mathcal{S}^{n}}\), λ max(C) and λ min(C) denote the largest and smallest eigenvalue of C, respectively. For \(C,D\in { \mathcal{S}^{n}}\), C⪰D,C≻D denote C−D is positive semidefinite, and positive definite, respectively. For a (not necessarily symmetric) square matrix M, tr(M) denotes the trace of M, M † denotes the Moore-Penrose generalized inverse of M, and \(\operatorname{{Null}}(M)\) and \(\operatorname{{Range}}(M)\) denote its null and range spaces, respectively. The identity matrix is denoted by I, whose dimension should be clear from the context.

For an interval J on the real line, the symbol cl(J) denotes its closure, and ri(J) denotes its relative interior. Finally, for a subspace V of \({ {\mathbb{R}}^{n}}\), the orthogonal complement of V is denoted by V ⊥, and if a vector \(v\in { {\mathbb{R}}^{n}}\) is orthogonal to V, we write v⊥V.

We use a number of acronyms in this paper. TRS refers to the standard trust region subproblem in (1.2); while GTRS refers to the generalized trust region subproblem in (1.1). GTRS= refers to the special equality constrained GTRS in (3.1). The acronyms D-GTRS, D≻-GTRS, SDP-GTRS and DSDP-GTRS correspond to various reformulations of the GTRS and are defined in the beginning of Sect. 2.1. RICQ stands for the relative interior constraint qualification defined in (2.6). Finally, RW algorithm stands for the Rendl-Wolkowicz algorithm studied extensively in [11, 29] and Sect. 3, while the GLTR algorithm stands for the generalized Lanczos trust-region method in [15, 16].

2 Properties of GTRS

2.1 Duality and optimality conditions

In this section, we present the characterizations of optimality and duality properties for GTRS (1.1). In particular, we include assumptions that can be made without loss of generality. These assumptions include a constraint qualification, whose failure means that an explicit solution for GTRS can be obtained.

We start by writing down the Lagrangian dual of (1.1). The Lagrangian for (1.1) can be written with one free Lagrange multiplier λ, or with two nonnegative Lagrange multipliersFootnote 2

The Lagrangian dual problem of (1.1) can then be reduced to the following problem:

where the dual functional

Note that the objective function in D-GTRS is concave. In this paper, we will also look at the following closely related problem formed by enforcing positive definiteness in (2.2) and thus reducing the size of the feasible set:

where

It is useful to study this problem since positive definiteness as in (2.3) is maintained in the algorithm presented below. Finally, we write down the semidefinite programming (SDP) relaxation of GTRS

By a Schur complement argument, the final inequality is equivalent to the linear constraint  .

.

Proposition 2.1

The dual of SDP-GTRS is

where the multipliers are defined in (2.1). Moreover, (2.5) is equivalent to (2.2) with \(d_{DSDP}^{*} =d^{*}\).

Proof

It is routine to show that the dual of (2.4) is given by (2.5). Furthermore, by considering the Schur complement, for any γ, \(\lambda\in {\mathbb{R}}\), we have

Thus, (2.5) is equivalent to (2.2). □

2.1.1 Assumptions and properties

We consider the following assumptions on the GTRS (1.1).

Assumption 2.1

-

1.

B≠0.

-

2.

GTRS (1.1) is feasible.

-

3.

The following relative interior constraint qualification holds

$$ (\mathit{RICQ}) \qquad \mathrm{tr}(B \hat{X}) - 2b^T\hat{x} \in\mathrm{ri}\bigl([\ell,u]\bigr), \quad \text{ for some } \hat{X}\succ\hat{x}{\hat{x}}^T. $$(2.6) -

4.

GTRS is bounded below.

-

5.

D-GTRS (2.2) is feasible.

Note that Assumption 2.1 together with weak duality yields

We show in the next theorem that Assumption 2.1 is reasonable in the sense that it can be made without loss of generality, i.e., if the assumption fails, then an explicit solution or a simplification can easily be obtained.

Theorem 2.1

The following holds for the Items in Assumption 2.1.

-

(i)

The Items 1, 2, 3 in Assumption 2.1 can be made in the order given, without loss of generality, i.e., if an assumption fails then an explicit solution can easily be obtained.

-

(ii)

If Items 1, 2, 3 in Assumption 2.1 hold and b=0, then Item 4 implies Item 5.

-

(iii)

If Item 4 in Assumption 2.1 fails, then Item 5 fails.

Proof

Let x ∗:=B † b, \(q_{1}^{*}:=q_{1}(x^{*})\). We now provide the details about how the assumptions hold in the order given.

-

(i)

-

Suppose that B=0, i.e., the constraint is linear. If \(A\succeq0, a\in \operatorname{{Range}}(A)\) and the unconstrained minimum \(\bar{x}=A^{\dagger}a\) satisfies ℓ≤−2b T(A † a)≤u, then \(\bar{x}\) solves GTRS. Otherwise, the optimum, if it exists, is on one of the two boundaries. Therefore, we can change the linear inequality constraint to an equality, and we can again check for an unconstrained minimum after the appropriate substitution using the linear constraint. Therefore, the assumption that B≠0 can be made without loss of generality.

-

First, GTRS is infeasible if, and only if, the following three conditions hold:

$$ \begin{array}{l} B \text{ is semidefinite};\\ b\in \operatorname{{Range}}(B) \quad\text{(equivalently $b=Bx^{*}$)};\\ q^*_1 < \ell\text{ if } B \preceq0; \quad\text{or} \quad q^*_1 > u \text{ if } B \succeq0. \end{array} $$More precisely, the characterization for infeasibility follows from the fact that q 1 is bounded below with minimum at x ∗ (resp. bounded above with maximum at x ∗) if, and only if, \(b\in \operatorname{{Range}}(B)\) and B⪰0 (resp. B⪯0). We can verify the semidefiniteness of B by finding the largest and smallest eigenvalues. The range condition follows from finding the best least squares solution of Bx=b. If the range condition holds, then the final inequalities can be checked by evaluating q 1 at x ∗. Thus, we conclude that feasibility can be verified by finding λ max(B) and λ min(B) and solving a system of equations, and hence, Assumption 2.1, Item 2, can be made without loss of generality.

-

Suppose that Assumption 2.1, Items 1–2 hold, but the RICQ Assumption 2.1, Item 3 fails. Then we can find an explicit solution for GTRS. More precisely, since the RICQ fails, we have \(b\in \operatorname{{Range}}(B)\), and either B⪯0 with ℓ=supx T Bx−2b T x or B⪰0 with u=infx T Bx−2b T x. In either case, the conditions imply that the feasible set is \(x^{*}+\operatorname{{Null}}(B)\). We can then use a nullspace representation and substitute into the objective function to obtain an explicit solution or realize that the problem is unbounded below. In conclusion, if RICQ fails, we can obtain an explicit solution or realize that the problem is unbounded.

-

-

(ii)

This conclusion is proved as Proposition A.1 in the Appendix.

-

(iii)

If Assumption 2.1, Item 5 holds, then d ∗>−∞. Combining with weak duality, we see that p ∗≥d ∗>−∞, i.e., Item 4 holds.

□

Remark 2.1

From the proof of Theorem 2.1(2.1) above, it is not hard to see that if we assume Items 1 and 2 of Assumption 2.1, then the RICQ (2.6) fails if and only if the constraint is convex and no Slater point (strict feasibility) exists, or equivalently, when the range of values of the quadratic q 1(x) in the constraint only meets the interval [ℓ,u] at one point. Thus, in the case of equality constraint, i.e., when u=ℓ, the RICQ holds if and only if inf x q 1(x)<ℓ=u<sup x q 1(x), which is precisely condition (3.2) in [24].

For the rest of the paper, we assume that Assumption 2.1 holds.

2.1.2 Weak duality

We have the following weak duality result describing the relationship between the optimal values of the above optimization problems.

Proposition 2.2

The optimal values satisfy

Moreover, if \(d^{*}_{\succ}> -\infty\), then \(d^{*}_{\succ}= d^{*}\).

Proof

The proof of (2.8) follows from the feasibility Assumption 2.1, weak duality, Proposition 2.1 and the definitions.

Now suppose that \(d^{*}_{\succ}\) is finite and suppose to the contrary that \(d^{*}_{\succ}< d^{*}\). Then there exists λ 1 and λ 2 feasible for (2.2) and (2.3), respectively, such that \(f(\lambda_{1}) > d^{*} - \frac{d^{*} - d^{*}_{\succ}}{2}\) and \(f(\lambda_{2}) > d^{*}_{\succ}- \frac{d^{*} - d^{*}_{\succ}}{2}\), where f(λ) is the common objective function for both problems. Since f(λ) is a concave function and \(\frac{\lambda_{1}+\lambda_{2}}{2}\) is feasible for (2.3), we obtain that

a contradiction. This completes the proof. □

2.1.3 Strong duality and characterization of optimality

We show below that equality holds for four of the five finite optimal values in (2.8); and, moreover, two of them are attained. We start with the following technical lemma where the quadratic forms in (1.1) are linearized. Recall that a Schur complement argument implies that the quadratic constraint X⪰xx

T in (2.9) is equivalent to the linear constraint  .

.

Lemma 2.1

Let C≻A and let

Then, the optimal value \(p_{C,SDP}^{*}\) is finite and attained.

Proof

The programs (D-GTRS) and (SDP-GTRS) are dual to each other. Assumption 2.1 implies that (2.9) is feasible and that there exists \(\hat{\lambda}\) satisfying the Slater condition \(C-\hat{\lambda}B \succ A-\hat{\lambda}B \succeq 0\), i.e., a Slater point exists for (D-GTRS) when A is replaced by C. This means that the dual program (2.9) is feasible and attained. □

We next prove strong duality between (1.1) and (2.2). This technical result is used repeatedly throughout this paper. Similarly as in [6], we make use of bounds on the rank of the extreme points of SDP representable sets [1, 2, 27] to prove the exactness of the SDP relaxations.

Theorem 2.2

Recall that Assumption 2.1 holds. Then the following holds for GTRS:

-

(i)

The optimal values of GTRS and its SDP relaxation are equal,

$$p_{SDP}^* = p^*. $$ -

(ii)

Strong duality holds for GTRS, i.e., p ∗=d ∗ and the dual optimal value d ∗ is attained. Moreover, equality holds for four of the five optimal values in (2.8),

$$ d^*_\succ\le d^* = d^*_{DSDP} = p^*_{SDP} = p^*. $$(2.10)Furthermore, if \(d^{*}_{\succ}> -\infty\), then all quantities in (2.10) are equal.

Proof

(i) For each ϵ≥0, let A ϵ :=A+ϵI. Consider the following perturbation of (1.1)

and its SDP relaxation

Then \(p_{\epsilon}^{*}\ge v_{\epsilon}^{*}\), for all ϵ≥0.

We proceed by first showing that \(p_{\epsilon}^{*} = v_{\epsilon}^{*}\) for each fixed ϵ>0. We start with the case when ℓ=u. In this case, the SDP relaxation can be written as

The optimal set of the above SDP is nonempty by Lemma 2.1. Since the cone of positive semidefinite matrices does not contain lines, the optimal value must be attained at an extreme point U ∗ of the feasible set. Since there are two equality constraints, by [27, Theorem 2.2], the rank r U of the extreme point satisfies

Since U

∗≠0, we must have r

U

=1 and hence  , for some x

∗. Then x

∗ is feasible for (1.1) and we conclude that \(p_{\epsilon}^{*} = v_{\epsilon}^{*}\).

, for some x

∗. Then x

∗ is feasible for (1.1) and we conclude that \(p_{\epsilon}^{*} = v_{\epsilon}^{*}\).

Next, we consider the case when ℓ<u. In this case, the SDP relaxation can be written as

The optimal set of the above SDP is nonempty, again by Lemma 2.1. Hence, the optimal value must be attained at an extreme point (U ∗,α ∗,β ∗) of the feasible set. Since there are three equality constraints, by [27, Theorem 2.2], the ranks r U , r α and r β of this extreme point have to satisfy

Notice that at optimality, α

∗ and β

∗ cannot both be zero. This fact together with (2.11) and the fact U

∗≠0 shows that r

U

=1 and hence  , for some x

∗. Then x

∗ is feasible for (1.1) and we again conclude that \(p_{\epsilon}^{*} = v_{\epsilon}^{*}\).

, for some x

∗. Then x

∗ is feasible for (1.1) and we again conclude that \(p_{\epsilon}^{*} = v_{\epsilon}^{*}\).

Hence, we have shown that \(p_{\epsilon}^{*} = v_{\epsilon}^{*}\), for all ϵ>0. Now, let (x,X) be feasible for the SDP relaxation (2.4). Then we have

Taking the infimum over the feasible set of the SDP relaxation gives the desired equality \(v_{0}^{*} = p_{0}^{*}\). This completes the proof.

(ii) Recall that the Lagrangian dual of the SDP relaxation (2.4) is given by (2.5). Moreover, from RICQ (2.6), the generalized Slater condition for (2.4) holds with the Slater point  . Hence \(p_{0}^{*}=v_{0}^{*} = d^{*}_{DSDP} = d^{*}\) and the dual optimal values are attained. This proves (2.10). The rest of the claim follows from Proposition 2.2. □

. Hence \(p_{0}^{*}=v_{0}^{*} = d^{*}_{DSDP} = d^{*}\) and the dual optimal values are attained. This proves (2.10). The rest of the claim follows from Proposition 2.2. □

We remark that, when ℓ<u in the above proof, the equality \(p^{*}_{\epsilon}= v^{*}_{\epsilon}\), for all ϵ>0, can also be obtained as a consequence of [37, Theorem 2.3]. We are now ready to characterize optimality for GTRS. We note that our constraint qualification (2.6) is different from that of [34, Theorem 2.1]. In particular, we do not require ℓ<u when B is indefinite with the optimal solution in its kernel and b=0. Moreover, as seen in Theorem 2.1, Assumption 2.1 can be made without loss of generality.

Theorem 2.3

Recall that Assumption 2.1 holds. A point x ∗ is a solution to GTRS (1.1) if, and only if, for some (Lagrange multiplier) \(\lambda^{*} \in {\mathbb{R}}\), we have

Proof

Suppose first that x ∗ is a solution to (1.1). Then the third relation in (2.12) holds. Furthermore, by Theorem 2.2, strong duality holds. Hence, there exists λ ∗ such that

The first two relations in (2.12) follow immediately from the optimality condition of the unconstrained optimization problem (2.14). Moreover, from (2.14) and primal feasibility of x ∗, we have

Comparing this last relation with (2.13), we obtain the complementary slackness expressions in the last two relations of (2.12).

Next, assume that x ∗ is primal feasible and that there exists λ ∗ so that (2.12) holds. Then we have the following chain of inequalities.

where the first equality follows from the definition of the Lagrangian dual problem, the second equality follows from the first two relations of (2.12), the third equality follows from the last two relations of (2.12), while the last inequality follows from the primal feasibility of x ∗. Thus, in particular, we have x ∗ T Ax ∗−2a T x ∗=p ∗, and so x ∗ solves (1.1). □

Remark 2.2

We note that in proving the optimality conditions (i.e., version of Theorem 2.3) in [24, 34] for the special cases of GTRS (1.1), the authors did not assume Items 4 and 5 of Assumption 2.1. Indeed, suppose we only assume Items 1 through 3 of Assumption 2.1. When b=0, if x ∗ is known to be a solution of the GTRS (1.1), then the problem is bounded below and thus by Theorem 2.1(ii), we conclude that Assumption 2.1 holds. On the other hand, if there exists λ ∗ and x ∗ such that (2.12) holds, then we conclude from Theorem 2.1(iii) that Assumption 2.1 holds. Thus, our result is a strict improvement of [34, Theorem 2.1], which assumes in addition b=0, since our RICQ (2.6) is weaker than condition (2.5) in [34, Theorem 2.1]. However, since it is not known to us whether Item 4 implies Item 5 when b≠0, we cannot assert that our Theorem 2.3 reduces to [24, Theorem 3.2], even though our RICQ is equivalent to their constraint qualifications.

Example 2.1

We illustrate that (2.10) can fail if Assumption 2.1 is violated. Moreover, attainment for GTRS can fail. Consider

Then clearly, the optimal value of the above optimization problem is zero. Moreover, the optimal value of its SDP relaxation, which is given below, is also zero:

However, it is not hard to observe that the dual problem is infeasible and thus the dual optimal value d ∗=−∞. Hence, we have \(p^{*} = p^{*}_{SDP} > d^{*}\). Indeed, Assumption 2.1 Item 3 and Item 5 are violated and thus Theorem 2.2 does not apply.

In the unconstrained case, boundedness below of q 0(x) yields attainment. This is not the case for GTRS. Consider

The optimal value 0 is unattained. Note that λ=0 is the unique dual feasible point. Further results on attainment can be found in, e.g., [23].

2.2 Easy and hard cases and regular pencils

From the first two relations in (2.12), it is obvious that we have to work with A−λI and the eigenvalues of A for TRS. Similarly, for GTRS, we have to deal with generalized eigenvalues and the matrix pencil A−λB corresponding to the matrix pair (A,B). In the latter case, due to the arbitrariness of B, several singular cases may arise, see e.g., [26]. Below are some prototypical examples.

-

1.

Consider the case when

, where

, where  is a common nullspace vector. Then the corresponding generalized eigenvalues include all of \({\mathbb{R}}\), i.e. the determinant det(A−tB)=0 is identically 0 in t. This case is problematic since a small perturbation in the matrices can cause a big change in the solution set.

is a common nullspace vector. Then the corresponding generalized eigenvalues include all of \({\mathbb{R}}\), i.e. the determinant det(A−tB)=0 is identically 0 in t. This case is problematic since a small perturbation in the matrices can cause a big change in the solution set. -

2.

Let

,

,  . The eigenpairs include complex eigenvalues. This case is problematic as the dual problem is infeasible.

. The eigenpairs include complex eigenvalues. This case is problematic as the dual problem is infeasible.

2.2.1 Regular case

To avoid the aforementioned singular matrix cases, we assume from now on that the matrix pencil is definite. This corresponds to the so-called (positively) regular case in [34].

Condition (2.15) covers most of the interesting cases. Indeed, it is shown in [34, Lemma 2.3] that if (2.15) fails and det(A−tB) is not an identically zero polynomial in t, then the feasible set of (2.2) is a singleton set. Condition (2.15) also guarantees the existence of a solution to (1.1); the proof of this fact follows similarly to that of Lemma 2.1. For convenience in the discussions below, we state this result as a proposition.

Proposition 2.3

If condition (2.15) holds, then the GTRS problem (1.1) has an optimal solution.

Proof

See the proofs of Lemma 2.1 and Theorem 2.2(i). □

Under the additional regularity assumption (2.15), we would like to classify the GTRS into the so-called easy case and hard cases analogously to the classical trust region subproblem. To this end, we follow a similar line of arguments as in [34, Sect. 3], which analyzes the case when ℓ=u=1 and b=0. We have to make use of the fact that under (2.15), the matrix pencil A−λB is diagonalizable by a congruence, see e.g., [22, Theorem 10.1]. We include a proof in Theorem 2.4 below for the convenience of the readers.

Theorem 2.4

Recall that the pencil is (positively) regular. Then, there exists an invertible matrix S and diagonal matrices D 1 and D 2 such that

Furthermore, A−λB⪰0 if, and only if,

where α i and β i are the ith diagonal entry of D 1 and D 2, respectively. Moreover, \((\underline{\lambda},\overline{\lambda})\) is a nonempty open interval; at least one of the end points of the interval has to be finite; and,

Proof

Let \(\widehat{C}\) be as in (2.15). Without loss of generality, we can assume \(\widehat{\lambda}\neq0\). Let \(\widehat{C} =PDP^{T}\) denote the spectral decomposition (orthogonal diagonalization) of \(\widehat{C}\). Therefore the diagonal D satisfies \(0\prec D=P^{T}(A-\widehat{\lambda}B)P\) and, \(I=D^{-1/2} P^{T}(A-\widehat{\lambda}B)PD^{-1/2}\). Let Q give the orthogonal diagonalization of D −1/2 P T APD −1/2, i.e. Q T D −1/2 P T APD −1/2 Q=D 1. Define S=PD 1/2 Q. Then it follows that A=SD 1 S T and \(B = -\frac{1}{\widehat{\lambda}}S(I - D_{1})S^{T}\).

Since positive semidefiniteness is preserved under congruence, we see immediately that A−λB⪰0 if, and only if,

Since D 1 and D 2 are diagonal, we obtain further that A−λB⪰0 is equivalent to

where α i and β i are the ith diagonal entry of D 1 and D 2, respectively. Next, note that \(\lambda\in(\underline{\lambda},\overline{\lambda})\) if, and only if, A−λB≻0. Thus, by (2.15), \((\underline{\lambda},\overline{\lambda})\) is a nonempty open interval. Furthermore, since B≠0, it follows that at least one of the end points of the interval has to be finite. Finally, we note that α i and β i cannot both be zero, because of (2.15). □

We remark that the equivalence in (2.16) was established earlier in [24, Theorem 5.3]; see also [34, Lemma 3.1]. Next, for each real \(\lambda\in\mathrm{cl}{(\underline{\lambda},\overline {\lambda})}\), the closure of the interval, we define the first order stationary point

and the constraint evaluated at x(λ)

Recall that in the TRS case where B=I and b=0, we can orthogonally diagonalize A and get \(\underline{\lambda} = -\infty< \lambda^{*} \leq\overline{\lambda}=\lambda_{\min}(A)\). In addition, if we also have a=0 (homogeneous case), then both x(λ) and ψ(λ) are constant functions, identically 0. This property extends to the general case as follows, whose proof can be found in [24, p. 202].

Lemma 2.2

Let \(\lambda\in(\underline{\lambda},\overline{\lambda})\). Then the functions x(λ) and ψ(λ) in (2.17) and (2.18), respectively, are differentiable with derivatives given by

Moreover, on \((\underline{\lambda},\overline{\lambda})\), ψ(λ) is either constant or strictly increasing with

Remark 2.3

Notice that if (2.19) holds, then (a−λb)=(A−λB)v, for some v. Therefore, the objective in (2.2) becomes −v T(A−λB)v+ℓλ +−uλ −. Thus (2.2) reduces to a linear programming problem.

We now define the easy case and the hard cases. Unlike the case for TRS, we have to consider separately the cases when \(\overline{\lambda}\) or \(\underline {\lambda}\) is infinite.

Definition 2.1

The easy case occurs if one of the following three cases holds:

-

1.

both \(\overline{\lambda}\) and \(\underline{\lambda}\) are finite, and \(-\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda) = \lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) = +\infty\);

-

2.

only \(\overline{\lambda}\) is finite, and \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) = +\infty\);

-

3.

only \(\underline{\lambda}\) is finite, and \(\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda) = -\infty\).

Otherwise, we have the hard case.

From the above definition and Lemma 2.2, we see immediately that in the easy case, the function ψ has to be strictly increasing on \((\underline {\lambda},\overline{\lambda})\). Sample shapes of the function ψ in the easy and hard cases are shown in Figs. 1 and 2. The next lemma and the discussion following it justify the terminology easy case.

Lemma 2.3

If \(\overline{\lambda}=+\infty\), then \(\lim_{\lambda\uparrow \overline{\lambda}}\psi(\lambda) = \sup x^{T}Bx-2b^{T}x > \ell\). Similarly, if \(\underline{\lambda}=-\infty\), then \(\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda) = \inf x^{T}Bx-2b^{T}x < u\).

Proof

Suppose first that \(\overline{\lambda} = +\infty\). Then, from the definition of \(\overline{\lambda}\), we see that B⪯0, is negative semidefinite. We consider two cases: \(b\in \operatorname{{Range}}(B)\) and \(b\notin \operatorname{{Range}}(B)\).

Suppose that \(b\in \operatorname{{Range}}(B)\). Notice first from the definition of \(\underline{\lambda}\) and \(\overline{\lambda}\) that A−λB≻0, and so also \(B - \frac{1}{\lambda}A\prec0\), for all sufficiently large λ. From this and the definition of x(λ), for all sufficiently large λ and for any \(x\in {\mathbb{R}}^{n}\), we see that

Furthermore,

where α i and β i are defined as above, while ξ=S −1 a and γ=S −1 b. Since \(b\in \operatorname{{Range}}(B)\) means that γ i =0 whenever β i =0, we see immediately that

Taking limits in λ on both sides of (2.20) and then taking the supremum over all \(x\in {\mathbb{R}}^{n}\), we obtain that \(\lim_{\lambda\uparrow\overline{\lambda}}\psi (\lambda) \ge\sup x^{T}Bx -2b^{T}x > \ell\), where the last strict inequality is a consequence of RICQ (2.6). Hence, the first conclusion follows when \(b\in \operatorname{{Range}}(B)\).

Next, suppose \(b\notin \operatorname{{Range}}(B)\). Then by the definition of ψ(λ)=q 1(x(λ)), we have

The assumption on b implies that there exists i 0 such that \(\beta _{i_{0}}=0\) but \(\gamma_{i_{0}}\neq0\) (notice that by (2.15), necessarily the corresponding \(\alpha_{i_{0}} > 0\)). Thus, \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) = +\infty \) and the first conclusion holds trivially in this case.

The case when \(\underline{\lambda} = -\infty\) can be proved similarly. □

From Lemmas 2.2 and 2.3, we see that, in the easy case, ψ(λ)=s has a unique solution in the open interval \((\underline{\lambda},\overline{\lambda})\) for any s∈(infψ,supψ). We next show that we can alternatively characterize the easy case and hard cases via the null space of \(A - \overline{\lambda} B\) or \(A - \underline {\lambda} B\); see also [34, Sect. 3.1] for a similar analysis on the special case of GTRS (1.1) with u=ℓ=1 and b=0.

Lemma 2.4

If \(\underline{\lambda}\) is finite, then \(\lim_{\lambda\downarrow \underline{\lambda}}\psi(\lambda) = -\infty\) if, and only if, \(a-\underline{\lambda}b\notin \operatorname{{Range}}(A - \underline{\lambda}B)\). Similarly, if \(\overline{\lambda}\) is finite, then \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) = +\infty \) if, and only if, \(a-\overline{\lambda}b \notin \operatorname{{Range}}(A - \overline{\lambda}B)\).

Proof

Suppose first that \(\underline{\lambda}\) is finite. Then the set of indices \(\underline{I}\) such that \(\alpha_{i} - \underline {\lambda}\beta_{i} = 0\) is nonempty. Moreover, from the definition of \(\underline{\lambda}\) and the fact that \(\underline{\lambda} < \overline{\lambda}\), we obtain that β i <0 for all \(i\in\underline{I}\). Next, notice that for any \(\lambda\in(\underline{\lambda},\overline{\lambda})\), we have from (2.18) that

with α i , β i defined as above and ξ=S −1 a, γ=S −1 b. Since \(a-\underline{\lambda}b \notin \operatorname{{Range}}(A - \underline{\lambda}B)\) if, and only if, there exists \(i_{0}\in\underline{I}\) with \(\xi_{i_{0}} - \underline{\lambda}\gamma_{i_{0}}\neq0\), the first conclusion now follows immediately. The second statement can be proved similarly. □

Using Lemma 2.4, we see that the hard and easy cases can be alternatively characterized as in Table 1.

Before describing how the easy case and hard cases can be tackled, we need the following technical lemma concerning the smallest and largest generalized eigenvalues \(\underline{\lambda}\) and \(\overline{\lambda}\). This result was briefly discussed in [24, Sect. 5]. We include a proof here for the convenience of the readers.

Lemma 2.5

If \(\overline{\lambda}\) is finite, then v T Bv>0 for all \(v\in \operatorname{{Null}}(A - \overline{\lambda} B)\backslash\{0\}\). Similarly, if \(\underline{\lambda}\) is finite, then v T Bv<0 for all \(v\in \operatorname{{Null}}(A - \underline{\lambda} B)\backslash\{0\}\).

Proof

Suppose first that \(\overline{\lambda}\) is finite and let \(v\in \operatorname{{Null}}(A - \overline{\lambda} B)\backslash\{0\}\). Take any \(\widetilde{\lambda}\in(\underline{\lambda},\overline {\lambda })\). Then \(A - \widetilde{\lambda}B \succ0\) and hence we have

Since \(\overline{\lambda} > \widetilde{\lambda}\), we conclude that v T Bv>0. This proves the first part. The second conclusion can be proved similarly. □

2.2.2 Three intervals for λ

We are now ready to describe how GTRS can be tackled. Our discussion is different from that in the concluding remarks of [24] in several aspects. First, our approach does not require solving two GTRS with equality constraints. Second, we include detailed discussion about the easy case and the hard cases, with hard case, case 2, solved explicitly. In what follows, we consider three cases dependent on where 0 is located relative to the interval \((\underline{\lambda}, \overline{\lambda})\).

Case 1: \(\underline{\lambda}< 0< \overline{\lambda}\)

-

Easy case: If ℓ≤ψ(0)≤u, then it is easy to check that the optimality conditions of GTRS are satisfied with λ ∗=0 and x ∗=A −1 a. We have an interior solution in this case.

Otherwise, suppose we have ψ(0)<ℓ instead. Since ψ is strictly increasing in \((\underline{\lambda},\overline{\lambda})\), we observe that the optimal Lagrange multiplier λ ∗>0, i.e., it has to be positive. From the complementary slackness condition in (2.12), we observe further that such a multiplier is the unique solution of ψ(λ)=ℓ. The optimal solution is then given by x(λ ∗).

The case when ψ(0)>u can be considered similarly, where the optimal Lagrange multiplier λ ∗<0 solves ψ(λ)=u, and the optimal solution is x(λ ∗).

-

Hard case: If ℓ≤ψ(0)≤u, then x(0)=x ∗=A −1 a and we again obtain an interior solution.

Otherwise, we take a primal step to the boundary. First, suppose we have ψ(0)<ℓ. Since ψ is increasing, we necessarily have λ ∗>0. If \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) > \ell \), then the equation ψ(λ)=ℓ is solvable and gives the optimal Lagrange multiplier \(\lambda^{*} < \overline{\lambda}\). The optimal solution is given by x(λ ∗). On the other hand, consider \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) \le \ell\). Then \(\overline{\lambda}\) is finite by Lemma 2.3 and thus \(q_{1}(x(\overline{\lambda}))=\lim_{\lambda\uparrow \overline{\lambda}}\psi(\lambda)\). Hence Lemma 2.5 implies that there exists \(v\in \operatorname{{Null}}(A - \overline{\lambda}B)\) satisfying v T Bv>0. Scale such v so that \(q_{1}(x(\overline{\lambda}) + v) = \ell\). Then an optimal solution is given explicitly by \(x(\overline{\lambda}) + v\), with optimal Lagrange multiplier equal to \(\overline{\lambda}\).

Finally, suppose we have ψ(0)>u instead. Then we necessarily have λ ∗<0. If \(\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda) < u\), then the equation ψ(λ)=u is solvable and gives the optimal Lagrange multiplier \(\lambda^{*} > \underline{\lambda}\). The optimal solution is given by x(λ ∗). On the other hand, if \(\lim_{\lambda\downarrow\underline{\lambda }}\psi (\lambda) \ge u\), then \(\underline{\lambda}\) is finite by Lemma 2.3 and thus \(q_{1}(x(\underline{\lambda}))=\lim_{\lambda\downarrow \underline{\lambda}}\psi(\lambda)\). From Lemma 2.5, there exists \(v\in \operatorname{{Null}}(A - \underline {\lambda}B)\) satisfying v T Bv<0. Scale such v so that \(q_{1}(x(\underline{\lambda}) + v) = u\). Then an optimal solution is given explicitly by \(x(\underline{\lambda}) + v\), with the corresponding Lagrange multiplier being \(\underline {\lambda}\).

Case 2: \(\overline{\lambda}\le0\)

Notice that the optimal Lagrange multiplier has to be nonpositive and \(\overline{\lambda}\) has to be finite.

-

Easy case: We see that the optimal Lagrange multiplier λ ∗ has to be negative, and thus, a solution of ψ(λ)=u. The optimal solution is then given by x(λ ∗).

-

Hard case: If \(\lim_{\lambda\downarrow \underline{\lambda}}\psi(\lambda) < u < \lim_{\lambda\uparrow \overline{\lambda}}\psi(\lambda)\), then ψ(λ)=u is solvable with the optimal Lagrange multiplier λ ∗ as the unique solution. The optimal solution is again given by x(λ ∗).

On the other hand, if \(\lim_{\lambda\downarrow\underline{\lambda }}\psi (\lambda)\ge u\), then by Lemma 2.3, \(\underline{\lambda}\) is finite and thus \(q_{1}(x(\underline{\lambda})) =\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda)\). Hence, by Lemma 2.5 there exists \(v\in \operatorname{{Null}}(A - \underline{\lambda}B)\) satisfying v T Bv<0. Scale such v so that \(q_{1}(x(\underline{\lambda}) + v) = u\). Then an optimal solution is given explicitly by \(x(\underline{\lambda})+v\), and \(\lambda^{*} = \underline{\lambda}<0\). Finally, if \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda )\le u\), then \(q_{1}(x(\overline{\lambda})) =\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda)\). Thus, by Lemma 2.5 there exists \(v\in \operatorname{{Null}}(A - \overline{\lambda}B)\) satisfying v T Bv>0. Scale such v so that \(q_{1}(x(\overline{\lambda}) + v) = u\). Then an optimal solution is given explicitly by \(x(\overline{\lambda}) + v\), and \(\lambda^{*} = \overline{\lambda}\le0\).

Case 3: \(\underline{\lambda}\ge0\)

Notice that the optimal Lagrange multiplier has to be nonnegative and \(\underline{\lambda}\) has to be finite.

-

Easy case: Arguing similarly as in Case 2, we conclude that in the easy case, the optimal Lagrange multiplier λ ∗ is a solution of ψ(λ)=ℓ and the optimal solution is given by x(λ ∗).

-

Hard case: If \(\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda) < \ell< \lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda)\), then the optimal Lagrange multiplier λ ∗>0 solves ψ(λ)=ℓ and the optimal solution is given by x(λ ∗). Furthermore, if \(\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda)\ge \ell\), then an optimal solution is \(x(\underline{\lambda}) + v\), where \(v\in \operatorname{{Null}}(A - \underline{\lambda}B)\) satisfies v T Bv<0 and \(q_{1}(x(\underline{\lambda}) + v) = \ell\), and \(\lambda^{*} = \underline{\lambda}\). Finally, if \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda )\le \ell\), then an optimal solution is \(x(\overline{\lambda}) + v\), where \(v\in \operatorname{{Null}}(A - \overline{\lambda}B)\) satisfies v T Bv>0 and \(q_{1}(x(\overline{\lambda}) + v) = \ell\), and \(\lambda^{*} = \overline {\lambda}\).

From the above discussion, we see immediately that, unless A≻0 and A −1 a is an interior solution, the GTRS always has a solution on the boundary of the feasible set. Following the above procedures, we either end up solving an equality constrained problem, or obtain a closed form solution by moving a point to the suitable boundary along a suitable generalized eigenvector. In the next section, we will adapt the Rendl-Wolkowicz (RW) algorithm for TRS in [29] to tackle the equality constrained problem.

Before closing this section, we discuss how to compute \(\lim_{\lambda \uparrow\overline{\lambda}}\psi(\lambda)\) and \(\lim_{\lambda\downarrow\underline{\lambda}}\psi(\lambda)\) efficiently, which are important for the above case analysis. We only outline the procedure for computing \(\lim_{\lambda\uparrow \overline{\lambda}}\psi(\lambda)\). The one for computing \(\lim_{\lambda\downarrow\underline{\lambda}}\psi (\lambda )\) is similar.

Recall that \(A - \overline{\lambda}B\) is singular and positive semidefinite. Thus, a nullspace vector of \(A - \overline {\lambda}B\) can be found by finding an eigenvector corresponding to the smallest eigenvalue, i.e., 0.

Procedure for computing \(\lim_{\lambda\uparrow\overline {\lambda }}\psi(\lambda)\) .

-

Step 1.

Take \(v\in \operatorname{{Null}}(A - \overline{\lambda}B)\backslash\{0\}, \| v\|=1\).

If \(v^{T}(a - \overline{\lambda}b)\neq0\), this means \((a - \overline{\lambda}b)\notin \operatorname{{Range}}(A - \overline {\lambda}B)\) and thus \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) = +\infty\); quit. Else, update/deflate A←A+αvv T for some α>0. Repeat Step 1 if \(\operatorname{{Null}}(A - \overline{\lambda}B)\neq\{0\}\).

-

Step 2.

Solve for \(\bar{x} = (A-\overline{\lambda}B)^{-1}(a - \overline {\lambda}b)\). Then \(\lim_{\lambda\uparrow\overline{\lambda}}\psi(\lambda) = q_{1}(\bar{x})\).

3 A method for solving GTRS: the extended Rendl-Wolkowicz algorithm

In this section, we discuss how the Rendl-Wolkowicz (RW) algorithm proposed in [29] can be adapted to solve the following equality constrained problem:

where \(s\in {\mathbb{R}}\). Recall that we assume Assumption 2.1 holds and that the regularity in (2.15) holds. Thus, by Proposition 2.3, (3.1) has an optimal solution. Following [29], we consider the following chain of inequalities.

Let Ω denote the set of all real numbers r such that A+rB⪰0 and \(a+rb\in \operatorname{{Range}}(A+rB)\). This set is nonempty by Assumption 2.1, Item 5. Furthermore, if r∉Ω, then it is easy to see that

regardless of τ. Thus, continuing from (3.2) we have

where: the first equality follows from the observation in (3.3); while the second equality follows from Theorem 2.2, since for any r∈Ω, a Schur complement argument implies that there exists sufficiently large τ such that

the third equality follows from the homogenization; the fourth equality follows from an observation similar to (3.3) for the inner optimization problem; while the last equality follows from Theorem 2.2. Thus, equality holds throughout in (3.2). In particular, from the third line in (3.2), we have

where

The RW algorithm adapted to our context amounts to solving (3.1) via maximizing k(t).

3.1 Properties of k 0(t)

In this subsection, we discuss differentiability of the function k 0(t) and show that a maximizer of k(t):=k 0(t)−t necessarily exists. In addition to Assumption 2.1 and the regularity in (2.15), we also assume that s+1≠0, which can always be satisfied by scaling B and b. Furthermore, to avoid triviality, we assume that (2.19) fails so that ψ is strictly increasing. Indeed, in view of Remark 2.3, if (2.19) holds, (2.2) is a simple linear programming problem and can be readily solved.

Our analysis is based on the following function:

This function reduces to the d(λ) considered in [29] for problem (1.2). Since d′(λ)=1+ψ(λ) on \((\underline{\lambda},\overline{\lambda})\) and (2.19) fails, we see that this function is strictly convex with strictly increasing derivative on \((\underline{\lambda },\overline {\lambda})\). With a Schur complement argument, we get the following result.

Lemma 3.1

The following four properties hold.

-

(i)

Suppose \(\lambda\in(\underline{\lambda},\overline{\lambda })\). Then

$$\begin{aligned} \left[ \begin{array}{c@{\quad }c} t & -a^T\\ -a& A \end{array}\right] - \lambda \left[ \begin{array}{c@{\quad }c} 1&-b^T\\ -b&B \end{array}\right] \succ0 \quad \Leftrightarrow\quad t > d(\lambda), \\ \left[ \begin{array}{c@{\quad }c} t & -a^T\\ -a& A \end{array}\right] - \lambda \left[ \begin{array}{c@{\quad }c} 1&-b^T\\ -b&B \end{array} \right] \succeq0 \quad \Leftrightarrow\quad t \ge d(\lambda). \end{aligned}$$ -

(ii)

Suppose \(\lim_{\lambda\downarrow\underline{\lambda }}d(\lambda)\) is finite and \(\underline{\lambda}\) is finite. Then

$$ \left[ \begin{array}{c@{\quad }c} t & -a^T\\ -a& A \end{array} \right] - \underline{\lambda} \left[ \begin{array}{c@{\quad }c} 1&-b^T\\ -b&B \end{array}\right] \succeq0 \quad \Leftrightarrow\quad t \ge d(\underline{\lambda}). $$ -

(iii)

Suppose \(\lim_{\lambda\uparrow\overline{\lambda}}d(\lambda )\) is finite and \(\overline{\lambda}\) is finite. Then

$$ \left[ \begin{array}{c@{\quad }c} t & -a^T\\ -a& A \end{array}\right] - \overline{\lambda} \left[ \begin{array}{c@{\quad }c} 1&-b^T\\ -b&B \end{array}\right] \succeq0 \quad \Leftrightarrow\quad t \ge d(\overline{\lambda}). $$ -

(iv)

For any \(s\in {\mathbb{R}}\) and any \(t > \inf_{\underline{\lambda }<\lambda <\overline{\lambda}}d(\lambda)\), we have

$$\begin{aligned} &\sup \biggl\{ (s + 1)\lambda:\; \left[ \begin{array}{c@{\quad }c} t & -a^T\\ -a& A \end{array}\right] - \lambda \left[ \begin{array}{c@{\quad }c} 1&-b^T\\ -b&B \end{array} \right] \succeq0 \biggr\} \\ &\quad =\sup \bigl\{ (s + 1)\lambda:\; \lambda\in(\underline{\lambda },\overline{\lambda}), t\ge d(\lambda) \bigr\} . \end{aligned}$$

Proof

For part (i), notice that \(\lambda\in(\underline{\lambda},\overline {\lambda})\) if, and only if, A−λB≻0. Hence the conclusion follows immediately from an application of the Schur complement. For part (ii), notice first that \(\lim_{\lambda\downarrow\underline{\lambda}}d(\lambda)\) being finite implies \(d(\underline{\lambda})=\lim_{\lambda\downarrow\underline{\lambda }}d(\lambda)\) and hence d is continuous and convex on \([\underline{\lambda },\overline{\lambda})\). Next, by taking \(\lambda\in(\underline{\lambda},\overline{\lambda})\) and r>d(λ), we have from part (i) that

Hence, the convexity of d(⋅) and the continuity of d(⋅) at \(\underline{\lambda}\) yields

Since \((1-\epsilon)\underline{\lambda} + \epsilon\lambda\in (\underline{\lambda},\overline{\lambda})\) for all 0<ϵ<1, the conclusion of part (ii) now follows immediately from the above two relations and part (i). Part (iii) can be proved similarly. Finally, for part (iv), since \(t > \inf_{\underline{\lambda}<\lambda<\overline{\lambda }}d(\lambda)\), the optimal value on the left hand side is unchanged if we intersect the feasible region further with \((\underline{\lambda},\overline {\lambda})\). The conclusion now follows from this observation and part (i). □

We next consider three cases: B is indefinite, positive semidefinite and negative semidefinite. We only discuss the first two cases in detail since the third case is analogous to the second case. For this purpose, we will need to consider the following quantities:

Intuition behind the definitions of \(\overline{t}\) and \(\underline{t}\) will be described briefly after Corollary 3.3. We remark that these four quantities are not necessarily finite. Furthermore, it follows from weak duality that

Hence, t 0 is finite when s 0≤−1.

Case 1: B is indefinite

Notice that in this case, the quantities t 0, \(\underline{\lambda}\) and \(\overline{\lambda}\) are finite. In the next theorem, we show that k 0(t) is well-defined on a closed interval, and is differentiable in the interior of that interval, except possibly at one point.

Theorem 3.1

The function k 0(t) is well-defined and continuous on t≥t 0. Moreover:

-

(i)

when s+1>0, k 0(t) is differentiable on \((t_{0},\overline {t})\cup(\overline{t},\infty)\), and \(k_{0}(t) = (s+1)\overline{\lambda}\) on \(t> \overline{t}\);

-

(ii)

when s+1<0, k 0(t) is differentiable on \((t_{0},\underline {t})\cup(\underline{t},\infty)\), and \(k_{0}(t) = (s+1)\underline{\lambda}\) on \(t> \underline{t}\).

Proof

Suppose first that t>t 0. Then from the definition of t 0, there exists λ so that

It follows from this, Lemma 3.1(i), (iv) and Theorem 2.2 that

Thus, k 0(t) is finite. Next, suppose t=t 0. Notice that the infimum value t 0 in (3.4) has to be attained at some \(\lambda_{0}\in[\underline{\lambda },\overline {\lambda}]\). Thus, we have from Lemma 3.1 that

Hence, from Theorem 2.2, we conclude that (3.5) holds with t=t 0. Finally, since k 0 is a one variable concave function, it is continuous in the interior of its domain [30, Theorem 10.1] and is lower semicontinuous up to the boundary [30, Theorem 10.2]. Furthermore, since k 0 is the infimum of a family of continuous functions

it also follows that k 0 has to be upper semicontinuous. Thus, k 0 is continuous on t≥t 0.

We now turn to part (i). Since d(λ) is a strictly convex function, we deduce that

has at most 2 solutions in \((\underline{\lambda},\overline{\lambda})\) for any t>t 0, at which d has opposite slopes.

We first consider the case when \(\overline{t}\) is infinite. In this case, there must be a unique solution \(\mu\in (\underline{\lambda},\overline{\lambda})\) to (3.6) with d′(μ)>0. Since s+1>0, we see from Lemmas 3.1(iv) and (3.5) that k 0(t)=(s+1)μ. Moreover, since d′(μ)>0, by the inverse function theorem, μ is differentiable at t and \(\mu'(t)=\frac{1}{d'(\mu)}\). This shows that k 0 is differentiable at t.

We next assume that \(\overline{t}\) is finite. When \(t_{0}<t<\overline {t}\), it still holds true that (3.6) has a unique solution \(\mu\in(\underline{\lambda},\overline{\lambda })\) satisfying d′(μ)>0 and k 0(t)=(s+1)μ as above. Similarly, it can be shown that k 0(t) is differentiable on \(t_{0}<t<\overline{t}\).

On the other hand, when \(t> \overline{t}=d(\overline{\lambda})\), we see from Lemma 3.1(iii) that

Hence, from Lemmas 3.1(iv) and (3.5), we conclude that \(k_{0}(t) = (s+1)\overline{\lambda}\). This completes the proof of part (i).

The cases when s+1<0 in part (ii) can be proved similarly, by noting that k 0(t)=(s+1)ν for \(t_{0} < t < \underline{t}\), where ν=ν(t) is the unique root of (3.6) in \((\underline{\lambda},\overline{\lambda})\) with d′(ν)<0. □

Before proceeding, we collect several facts that are readily obtained from the proof of Theorem 3.1.

Corollary 3.1

-

(i)

If s+1>0 and \(t_{0}<t<\overline{t}\), then k 0(t)=(s+1)μ, where μ=μ(t) is the unique root of (3.6) on \((\underline {\lambda},\overline{\lambda})\) with d′(μ)>0; moreover, \(\mu '(t) = \frac{1}{d'(\mu)} > 0\).

-

(ii)

If s+1<0 and \(t_{0}<t<\underline{t}\), then k 0(t)=(s+1)ν, where ν=ν(t) is the unique root of (3.6) on \((\underline {\lambda},\overline{\lambda})\) with d′(ν)<0; moreover, \(\nu '(t) = \frac{1}{d'(\nu)} < 0\).

In the next proposition, we confirm that the domain of k 0(t) is actually t≥t 0, i.e., k 0(t)=−∞ whenever t<t 0. This is a consequence of the more general result from Proposition A.1.

Corollary 3.2

When t<t 0, k 0(t)=−∞.

Notice that \(k'(t) = k_{0}'(t)-1\) whenever t>t 0 and k 0 is differentiable at t. Moreover, \(\lim_{t\uparrow\infty}k_{0}'(t) = 0\), in view of Corollary 3.1 and Theorem 3.1. Thus, it holds true that a maximizer t ∗ of k(t) must exist.

A plot of d(λ) is shown in Fig. 3.

Case 2: B is positive semidefinite

In this case, we have \(\overline{\lambda}\) being finite and \(\underline {\lambda} = -\infty\). Moreover, we have the following result concerning the behavior of d(λ) as λ goes to −∞. See Figs. 4, 5 for plots of d(λ) in this case.

Proposition 3.1

It holds that lim λ→−∞ d′(λ)=1+s 0. Hence

-

(i)

If s 0<−1, then lim λ→−∞ d(λ)=∞;

-

(ii)

If s 0=−1, then lim λ→−∞ d(λ)=t 0>−∞;

-

(iii)

If s 0>−1, then lim λ→−∞ d(λ)=−∞.

Proof

The fact that lim λ→−∞ d′(λ)=1+s 0 follows from d′(λ)=1+ψ(λ) and Lemma 2.3. The rest of the conclusion then follows immediately. □

In view of the definition of s 0 and Assumption 2.1, Item 3, we only need to consider s>s 0 in subsequent analysis. We discuss the differentiability property of k 0(t) in the next theorem.

Theorem 3.2

-

(i)

Suppose that s 0<−1. Then k 0(t) is continuous and well-defined on t≥t 0. Furthermore, when s+1>0, k 0(t) is differentiable on \((t_{0},\overline{t})\cup (\overline{t},\infty)\) and \(k_{0}(t) = (s+1)\overline{\lambda}\) on \(t> \overline{t}\); when s+1<0, k 0(t) is differentiable on (t 0,∞).

-

(ii)

Suppose that s 0=−1. Then k 0(t) is continuous and well-defined on t>t 0. Furthermore, when s+1>0, k 0(t) is differentiable on \((t_{0},\overline{t})\cup (\overline{t},\infty)\) and \(k_{0}(t) = (s+1)\overline{\lambda}\) on \(t> \overline{t}\).

-

(iii)

Suppose that s 0>−1. Then k 0(t) is continuous and well-defined everywhere. Furthermore, when s+1>0, k 0(t) is differentiable on \((-\infty,\overline {t})\cup (\overline{t},\infty)\) and \(k_{0}(t) = (s+1)\overline{\lambda}\) on \(t> \overline{t}\).

Proof

The theorem can be proved similarly as Theorem 3.1 once we show that (3.5) holds for each case. To prove this, it suffices to show that Assumption 2.1, Item 3 is satisfied for the corresponding GTRS in (3.5) in each case. Suppose first that s

0+1<0. Then it is easy to see that  has to be indefinite and so Assumption 2.1, Item 3 is satisfied. On the other hand, if s

0+1≥0, then

has to be indefinite and so Assumption 2.1, Item 3 is satisfied. On the other hand, if s

0+1≥0, then

showing that  is positive semidefinite. Since s+1>s

0+1, we see that Assumption 2.1, Item 3 is also satisfied in this case. □

is positive semidefinite. Since s+1>s

0+1, we see that Assumption 2.1, Item 3 is also satisfied in this case. □

As in Corollary 3.1, we have the following relationship between k 0(t) and the roots of (3.6), which can be obtained from a detailed proof of Theorem 3.2.

Corollary 3.3

-

(i)

If s+1>0 and \(t_{0}<t<\overline{t}\), then k 0(t)=(s+1)μ, where μ=μ(t) is the unique root of (3.6) on \((\underline {\lambda},\overline{\lambda})\) with d′(μ)>0; moreover, \(\mu '(t) = \frac{1}{d'(\mu)} > 0\);

-

(ii)

If s+1<0 and t 0<t, then k 0(t)=(s+1)ν, where ν=ν(t) is the unique root of (3.6) on \((\underline{\lambda},\overline{\lambda})\) with d′(ν)<0; moreover, \(\nu'(t) = \frac{1}{d'(\nu)} < 0\).

Combining with Corollary 3.1, we have k 0(t)=(s+1)μ(t) for \(t_{0}<t<\overline{t}\) and s+1>0 in both Case 1 (indefinite B) and Case 2 (positive semidefinite B). Also, using the definition of \(\underline{t}\) and Proposition 3.1, we have a symmetric statement for s+1<0 in these two cases: k 0(t)=(s+1)ν(t) for \(t_{0}<t<\underline{t}\). The definitions of \(\overline{t}\) and \(\underline{t}\) were indeed introduced so that the above representations of k 0(t) hold for any nonzero symmetric matrix B.

The existence of a maximizer t ∗ of k(t) in this case is less trivial and is obtained as a consequence of the next theorem.

Theorem 3.3

-

(i)

Suppose that s 0<−1. When s+1>0, we have lim t→∞ k′(t)=−1. When s+1<0, we have \(\lim_{t\rightarrow\infty} k'(t) = \frac {s+1}{s_{0}+1}-1<0\). Furthermore, in either case, k(t)=−∞ for t<t 0.

-

(ii)

Suppose that s 0=−1. When s+1>0, we have lim t→∞ k′(t)=−1 and \(\lim_{t\downarrow t_{0}}k'(t) = \infty\). Furthermore, k(t)=−∞ for t≤t 0.

-

(iii)

Suppose that s 0>−1. When s+1>0, we have lim t→∞ k′(t)=−1 and \(\lim_{t\rightarrow-\infty}k'(t) = \frac{s+1}{s_{0}+1}-1>0\).

Proof

First of all, in all three cases, when s+1>0, the limit of k′(t) as t goes to ∞ can be found similarly as in the case when B is indefinite.

We now consider part (i). When s+1<0, by Corollary 3.3, we have \(k'(t) = \frac{s+1}{d'(\nu)}-1\). Moreover, from Proposition 3.1, we see that lim λ→−∞ d(λ)=∞. Thus, ν is defined for all sufficiently large t and ν→−∞ as t→∞. We conclude from these results and Proposition 3.1 that \(\lim_{t\rightarrow\infty} k'(t) = \frac{s+1}{s_{0}+1}-1<0\). The conclusion that k(t)=−∞ for t<t 0 follows from Proposition A.1.

We next turn to part (ii). Since d is strictly increasing, it follows from Proposition 3.1 that

By Corollary 3.3, we have \(k'(t) = \frac{s+1}{d'(\mu)}-1\) for \(\bar{t}> t> t_{0}\). Furthermore, from Proposition 3.1, we see that lim λ→−∞ d(λ)=t 0>−∞ and hence μ→−∞ as t→t 0. We conclude from this, Proposition 3.1 and the fact that s 0+1=0 that \(\lim_{t\downarrow t_{0}} k'(t) = \infty\). Furthermore, since k(t)=k 0(t)−t by definition, we have by Corollary 3.3 that

It then follows from concavity of k that k(t)=−∞ when t≤t 0.

Finally, for part (iii), note that from Proposition 3.1, we have lim λ→−∞ d(λ)=−∞ and hence μ→−∞ as t→−∞. Thus, we conclude further from Proposition 3.1 that \(\lim _{t\rightarrow-\infty} k'(t) = \frac{s+1}{s_{0}+1}-1>0\). □

3.2 Recovering solution of GTRS from maximizing k(t)

In this subsection, we discuss how one can obtain a solution to (3.1) after obtaining a maximizer t ∗ of k(t). In addition, we will argue that such a maximizer has to be unique.

We focus on the case when s+1>0. We will briefly comment on the case when s+1<0 at the end of this subsection. Due to the definition of \(\overline{t}\), we have k 0(t)=(s+1)μ(t) for \(t_{0}<t <\overline{t}\), where μ is the unique root of (3.6) in \((\underline {\lambda },\overline{\lambda})\) with d′(μ)>0. Furthermore, any maximizer of k(t) has to lie in \(\mathrm{cl}(t_{0},\overline{t})\). Moreover, for any \(t\in(t_{0},\overline{t})\), we see from the definition of t 0 that the maximization problem in (3.5) is strictly feasible, and hence the infimum in (3.5) is attained. By Theorem 2.3, the infimum is attained at some generalized eigenvector of the matrix pair

with corresponding eigenvalue μ. Let  be a generalized eigenvector attaining the infimum in (3.5). Since \(\mu\in(\underline{\lambda},\overline{\lambda})\), it follows that the first coordinate y

0(t) of y(t) must be nonzero. Thus, we may further scale the vector so that y

0(t)>0. Moreover, since s+1>0, we can scale the vector again so that

be a generalized eigenvector attaining the infimum in (3.5). Since \(\mu\in(\underline{\lambda},\overline{\lambda})\), it follows that the first coordinate y

0(t) of y(t) must be nonzero. Thus, we may further scale the vector so that y

0(t)>0. Moreover, since s+1>0, we can scale the vector again so that  .

.

We claim that such a vector is unique. To see this, notice first that for any \(\lambda\in(\underline{\lambda},\overline{\lambda})\), we have

It thus follows from this, det(A−μB)>0 and d′(μ)>0 that μ is a root of multiplicity one of the polynomial

Hence, the dimension of the nullspace of  is one; i.e., any generalized eigenvector corresponding to μ differs only by a scaling. Since the scaling is uniquely determined by the constraints

is one; i.e., any generalized eigenvector corresponding to μ differs only by a scaling. Since the scaling is uniquely determined by the constraints  and y

0>0, the generalized eigenvector constructed under these two restrictions is unique. Using standard arguments and the implicit function theorem, one can show in addition that y(t) is differentiable for \(t_{0}<t<\overline{t}\).

and y

0>0, the generalized eigenvector constructed under these two restrictions is unique. Using standard arguments and the implicit function theorem, one can show in addition that y(t) is differentiable for \(t_{0}<t<\overline{t}\).

By [5, Theorem 4.13] and using the above notations, the derivative of k(t) at any \(t\in(t_{0},\overline{t})\) is given by

We next analyze the following cases. Recall that we currently assume s+1>0 and that t ∗ is a maximizer of k(t).

Case 1: \(t^{*}\in(t_{0},\overline{t})\)

In this case, k is differentiable at t ∗. Hence, necessarily

It follows, after a simple calculation, that \(x^{*}:=\frac{z(t^{*})}{y_{0}(t^{*})}\) satisfies x ∗ T Bx ∗−2b T x ∗=s, from which it follows by checking optimality conditions that x ∗ is an optimal solution to (3.1), with the Lagrange multiplier given by \(\mu(t^{*})\in(\underline{\lambda},\overline {\lambda})\).

Case 2: \(t^{*} = t_{0} < \overline{t}\)

In this case, k′(t)≤0 for all t>t 0 sufficiently close to t 0. Hence

Furthermore, since t ∗∈dom(k) by definition of maximizer, the assumption implies that t 0∈dom(k) and thus k 0 is (right) continuous at t 0. Then, since k 0(t)=(s+1)μ(t) for \(t_{0}<t <\overline{t}\), we see that \(\lim_{t\downarrow t_{0}}\mu(t)\) exists (and is finite). We claim that \(\lim_{t\downarrow t_{0}}\mu(t) = \underline{\lambda}\). To see this, note that if \(\lim_{t\downarrow t_{0}}\mu(t) \in(\underline{\lambda },\overline {\lambda})\), then \(d'(\lim_{t\downarrow t_{0}}\mu(t)) = 0\), which implies that \(\mu'(t)=\frac{1}{d'(\mu(t))}>0\) is arbitrarily large as t↓t 0. In view of (3.8), this contradicts the fact that k′(t)≤0 for t close to t 0. On the other hand, if \(\lim_{t\downarrow t_{0}}\mu (t)=\overline{\lambda}\), then \(\overline{\lambda}\) is finite. But by definition of μ(t), it is the unique root of d(λ)=t on \((\underline{\lambda},\overline{\lambda})\) with positive slope and thus \(\mu(t) > \lim_{t\downarrow t_{0}}\mu(t) = \overline{\lambda}\) for all \(\overline{t} > t > t_{0}\). But \(\mu(t)<\overline{\lambda}\) by definition, and we arrive at a contradiction. Thus, we must have \(\lim_{t\downarrow t_{0}}\mu(t) = \underline{\lambda}\). In particular, \(\underline{\lambda}\) is finite.

We next claim that there exists a generalized eigenvector \(y^{*} = \begin{bmatrix} y_{0}^{*}\\z^{*} \end{bmatrix} \) of the matrix pair

corresponding to \(\underline{\lambda}\) such that \(y_{0}^{*}\neq0\) and  .

.

To prove this, fix \(t_{1}\in(t_{0},\overline{t})\) and consider {y(t): t 0<t<t 1}. Since t 1>t 0, by the definition of t 0, there exists \(\hat{\lambda}>\underline{\lambda}\) such that

for some ϵ>0. Then, for any t 0<t<t 1, we have that

where the last inequality follows from (3.10). Thus,

This together with (3.9) shows that {y(t): t

0<t<t

1} is bounded. Consider any cluster point y

∗ of {y(t)} as t↓t

0. Then  , and hence in particular y

∗≠0. Moreover, since \(\mu(t)-\hat{\lambda}\rightarrow\underline{\lambda} -\hat{\lambda}<0\), we see further from (3.11) that \(y_{0}^{*} > 0\). Finally, it is easy to check that y

∗ is a generalized eigenvector of the matrix pair

, and hence in particular y

∗≠0. Moreover, since \(\mu(t)-\hat{\lambda}\rightarrow\underline{\lambda} -\hat{\lambda}<0\), we see further from (3.11) that \(y_{0}^{*} > 0\). Finally, it is easy to check that y

∗ is a generalized eigenvector of the matrix pair

corresponding to the eigenvalue \(\underline{\lambda}\).

Next, define \(x^{*} := \frac{1}{y_{0}^{*}}z^{*}\). Then it follows from (3.9) that x ∗ T Bx ∗−2b T x ∗≥s and from the definition of y(t) that \((A-\underline{\lambda}B)x^{*}= a-\underline{\lambda}b\). By Lemma 2.5, there exists \(v\in \operatorname{{Null}}(A-\underline{\lambda}B)\) with v T Bv<0, and thus x ∗+αv would solve (3.1), for some suitable α>0.

Case 3: \(t^{*}=\overline{t} > t_{0}\)

In this case, we must have k′(t)≥0 for all \(t_{0}<t<\overline{t}\). Hence

Furthermore, since \(\overline{t}\) is finite by assumption, we must have \(\overline{\lambda}\) finite from the definition of \(\overline{t}\) and thus \(d(\overline{\lambda}) = \lim_{\lambda\uparrow\overline{\lambda}}d(\lambda) = \overline{t}\). This, together with the definition of μ(t) and the fact that d(λ) is strictly increasing when \(\lambda>\lim_{t\downarrow t_{0}}\mu(t)\), implies that \(\lim_{t\uparrow\overline{t}}\mu(t) = \overline {\lambda}\). We claim that there exists a generalized eigenvector  of the matrix pair

of the matrix pair

corresponding to \(\overline{\lambda}\) such that \(y_{0}^{*}\neq0\) and  .

.

We first show that the parameterized set of eigenvectors \(\{y(t):\; t_{1}\le t<\overline{t}\}\) is bounded for any fixed \(t_{1}\in(t_{0},\overline{t})\). Since \(t_{1}\in (t_{0},\overline {t})\), by definition of t 0 and Lemma 3.1(i), there exists \(\hat{\lambda}<\overline{\lambda}\) such that

for some ϵ>0. Using this and the definition of generalized eigenvector and eigenvalue, we have, for any \(t_{1}\le t<\overline{t}\), that

where the last inequality follows from (3.13). This shows that \(\{y(t):\; t_{1}\le t<\overline{t}\}\) is bounded. Consider any cluster point y

∗ of {y(t)} as \(t\uparrow\overline{t}\). Due to (3.12), the first coordinate \(y_{0}^{*}\) of y

∗ is nonzero. It is easy to check that  and that y

∗ is a generalized eigenvector of the matrix pair

and that y

∗ is a generalized eigenvector of the matrix pair

corresponding to the eigenvalue \(\overline{\lambda}\).

Next, define \(x^{*} := \frac{1}{y_{0}^{*}}z^{*}\). Then it follows from (3.12) that x ∗ T Bx ∗−2b T x ∗≤s and from the definition of y(t) that \((A-\overline{\lambda}B)x^{*}= a - \overline{\lambda}b\). By Lemma 2.5, there exists \(v\in \operatorname{{Null}}(A-\overline{\lambda}B)\) with v T Bv>0, and thus x ∗+αv would solve (3.1), for some suitable α>0.

Case 4: \(t^{*}=t_{0}=\overline{t}\)

In this case, from the definitions of t 0 and \(\overline{t}\), we have \(\overline{\lambda}\) is finite and the infimum of d is attained at the right end point of the interval \((\underline{\lambda},\overline{\lambda})\), i.e., at \(\overline {\lambda }\). From the definition of d, this implies in particular that \(a - \overline{\lambda}b\in \operatorname{{Range}}(A - \overline{\lambda}B)\). Furthermore,

Hence, using the fact that s+1>0, we see that

where \(x^{*} := x(\overline{\lambda})\). Finally, recall from Lemma 2.5 that there exists \(v\in \operatorname{{Null}}(A-\overline{\lambda}B)\) with v T Bv>0. Combining these few facts, we conclude that x ∗+αv solves (3.1), for some suitable α>0.

Remark 3.1

(Uniqueness of maximizer of k)

In passing, we remark that k(t) must have a unique maximizer. First of all, it is easy to see that the four cases analyzed above are mutually exclusive for a maximizer \(t^{*}\in\mathrm{cl}(t_{0},\overline{t})\) of k(t). If case 4 happens, then clearly the maximizer is unique and equals \(t_{0} = \overline{t}\). Otherwise, in all other three cases, we see that for each maximizer t ∗, there corresponds at least one dual solution λ ∗ of the GTRS= (3.1). Such λ ∗ constructed in those cases are different for different t ∗, thanks to the strict monotonicity of μ(t) on \((t_{0},\overline {t})\). Since the GTRS= (3.1) must have a unique dual optimal solution in view of the analysis in Sect. 2.2.2 and our assumption that ψ is strictly increasing on \(\mathrm{cl}(\underline{\lambda},\overline{\lambda})\), we conclude that k(t) must have a unique maximizer.

The above arguments for s+1<0 are completely analogous. Note that we need to scale by \(\sqrt{-(s+1)}\) instead of \(\sqrt{s+1}\) in the constraint of the minimization problem in (3.5). Hence  and moreover \(k'(t) = -(s+1)y_{0}^{2}(t)-1\).

and moreover \(k'(t) = -(s+1)y_{0}^{2}(t)-1\).

In this section, we just gave a complete analysis on how a solution of (3.1) can be recovered after maximizing k(t). Comparing this with the procedures outlined in Sect. 2.2, we see that if the problem (3.1) is obtained from those procedures, then \(\lambda^{*}\in(\underline{\lambda},\overline{\lambda})\). Thus, we must be in Case 1 and hence k(t) has to be differentiable at t ∗. On the other hand, it is easy to see that closed form solutions are obtained for the other cases using the procedures in Sect. 2.2.

3.3 Implementation details

In this subsection, we discuss implementation details of our algorithm. For simplicity, we only consider the case when B is nonsingular. In this case, by translating the optimization variable by B −1 b if necessary, we may further assume without loss of generality that b=0. Moreover, we only need to consider the cases when B is positive definite or indefinite. Furthermore, we still assume Assumption 2.1, the regularity (2.15) and s+1≠0. To explicitly guarantee that (2.19) never holds, we also assume a≠0.

When B is positive definite, \(\underline{\lambda}\) is negative infinity and \(\overline{\lambda}\) is the minimum generalized eigenvalue of the matrix pair (A,B), which can be computed efficiently. On the other hand, when B is indefinite, the interval \((\underline{\lambda},\overline{\lambda})\) cannot easily be determined in general. Thus, in this case, we consider the further subcase that A is positive definite. Then we know that \(0\in (\underline{\lambda},\overline{\lambda})\). Observe that for any \(\lambda\in[\underline{\lambda},0)\), we have

Since \(\lambda\in[\underline{\lambda},0)\) if, and only if, \(\frac {1}{\lambda} \in(-\infty,\frac{1}{\underline{\lambda}}]\), we conclude from (3.14) that \(\underline{\lambda} = \frac {1}{\overline{\rho}_{1}}\), where \(\overline{\rho}_{1}\) is the minimum generalized eigenvalue of the matrix pair (B,A). Similarly, one can show that \(\overline{\lambda} = -\frac {1}{\overline {\rho}_{2}}\), with \(\overline{\rho}_{2}\) being the minimum generalized eigenvalue of the matrix pair (−B,A). The quantities \(\overline{\rho}_{1}\) and \(\overline{\rho}_{2}\) can both be computed efficiently.

Case check

We first carry out the case check as described in Sect. 2.2.2. We obtain an explicit solution when the GTRS (1.1) has an interior solution A −1 a or when the GTRS instance is a hard case, case 2 instance.

Shift and deflation for the hard case, case 1, when B is positive definite

After the case check, the bound side has been determined and so we focus on the equality constrained case x T Bx=s. In the next proposition, we describe a shift and deflation technique that transforms a GTRS= instance from hard case, case 1, to the easy case, when B is positive definite.

Proposition 3.2

Suppose that we consider the GTRS = with the equality constraint x T Bx=s with B≻0. Let \(\overline{\lambda}\) be the smallest generalized eigenvalue of the matrix pair (A,B) and suppose that a corresponding nonzero eigenvector v satisfies a T v=0. Furthermore, let α>0. Then

Proof

First, since x T Bx=s, it is clear that (x ∗,λ ∗) solves GTRS= if, and only if, (x ∗,λ ∗) solves GTRS= with A replaced by \(A-\overline {\lambda}B\). Next, consider the GTRS= with A replaced by \(A-\overline{\lambda}B\). Let \(y=B^{\frac{1}{2}} x\) and use the substitution \(x=B^{-\frac{1}{2}}y\). This results in the following TRS:

where \(\bar{A}= B^{-\frac{1}{2}}(A-\overline{\lambda}B)B^{-\frac{1}{2}}\) and \(\bar{a} = B^{-\frac{1}{2}}a\).

We now apply the shift and deflate lemma in [11] stated for the above TRS and obtain that

where \(w = B^{\frac{1}{2}} v\) is the corresponding eigenvector and hence satisfies \(w^{T} \bar{a}=0\) by assumption and the definition of \(\bar{a}\). After substituting again using \(y = B^{\frac{1}{2}}x\), we see that

The linear term and constraint follow similarly. □

Initialization

After the case check and the possible shift and deflation, we proceed to solve the equality constrained case by the RW algorithm. We discuss initialization in this subsection.

We first consider the case when B is positive definite. In this case, k(t) is well-defined for all t by Theorem 3.2. Let t ∗ be the optimal solution of maxk(t). As in [29], we derive an interval that contains t ∗ in the next proposition for initializing the RW algorithm.

Proposition 3.3

Suppose that \(\lambda^{*} < \overline{\lambda}\). Then

Proof

The assumption \(\lambda^{*} < \overline{\lambda}\) implies that we can recover a solution of GTRS= from the maximizer of k(t). Let x

∗ be the solution of GTRS= thus recovered. Then  is a generalized eigenvector of the matrix pair

is a generalized eigenvector of the matrix pair

with generalized eigenvalue λ ∗. Hence, in particular,

This proves the upper bound in (3.15). We next derive the lower bound. In this case, we define \(\delta= \overline{\lambda} - \lambda^{*} > 0\). Then we have

and hence

In addition, from the definition of d(λ), λ ∗ and (3.16), we have

Combining (3.17) and (3.18), we see that

Finally, we observe from (3.18) that t ∗≥λ ∗ and hence \(\delta\ge\overline{\lambda} - t^{*}\). The lower bound in (3.15) now immediately follows. □

On the other hand, when B is indefinite, according to Theorem 3.1 and Corollary 3.2, we conclude that t 0 is finite and the function k(t) would have t 0 as a left end point of its domain. We currently do not know of any efficient way for computing/estimating t 0. However, if t 0 and the corresponding λ 0 such that d(λ 0)=t 0 are known, then the function value and the derivative of k at any t>t 0 can be efficiently computed; see the paragraph below Proposition 3.4, with μ=λ 0 in (3.23). Furthermore, for initialization, one can find an interval containing t ∗ as follows:

Procedure for finding an interval containing t ∗ when B is indefinite.

-

Step 1.

Take ϵ>0 and compute k′(t 0+ϵ).

-

Step 2.

If k′(t 0+ϵ)>0, set t +=t 0+ϵ. Otherwise, set t −=t 0+ϵ and i=1.

-

Step 3.

While k′(t −)<0, update t +←t − and t −←t 0+ζ i ϵ for some fixed ζ∈(0,1). Update i←i+1. Repeat this step.

-

Step 4.

While k′(t +)>0, update t −←t + and t +←κ(t +−λ 0)+λ 0 for some fixed κ>1. Repeat this step.

-

Step 5.

By construction, k′(t −)>0 and k′(t +)<0. Thus, t ∗∈(t −,t +).

Computing k 0(t) and \(k'_{0}(t)\)

We next discuss how k 0(t) and \(k'_{0}(t)\) for t>t 0, when they exist, can be computed efficiently. Recall from the definition of k 0(t) and (3.8) that

where u 0(t)≥0 is the first coordinate of a vector u(t) attaining the infimum defining k 0(t). In the case when B is positive definite, k 0(t) can be computed from the minimum generalized eigenvalue of the matrix pair

and u 0(t) is obtained from the corresponding eigenvector. However, when B is not positive definite, k 0(t) and its derivative cannot be obtained directly from an extremal generalized eigenpair. We now discuss how to compute k 0 and its derivative in this case.

To this end, we first consider a closely related problem. Let C and D be symmetric matrices. Consider the following program.

Then we have the following result.

Proposition 3.4

Suppose that (3.19) is feasible and that there exists λ so that C−λD⪰0. Suppose further that val≠0.

-

(i)

If val>0 and if x ∗ is a solution to (3.19), then \(\frac{1}{\sqrt{\mathrm{val}}}x^{*}\) is a solution to the following optimization problem, whose optimal value is \(-\frac{1}{\mathrm{val}}\):

$$ \begin{array}{r@{\quad }l} \inf& -x^T D x\\ \mathrm{s.t.} & x^T C x = 1. \end{array} $$(3.20) -

(ii)