Abstract

To assess the status of hotspots and research trends on geographic information system (GIS)–based landslide susceptibility (LS), we analysed 1142 articles from the Thomas Reuters Web of Science Core Collection database published during 2001–2020 by combining bibliometric and content analysis. The paper number, authors, institutions, corporations, publication sources, citations, and keywords are noted as sub/categories for the bibliometric analysis. Thematic LS data, including the study site, landslide inventory, conditioning factors, mapping unit, susceptibility models, and mode fit/prediction performance evaluation, are presented in the content analysis. Then, we reveal the advantages and limitations of the common approaches used in thematic LS data and summarise the development trends. The results indicate that the distribution of articles shows clear clusters of authors, institutions, and countries with high academic activity. The application of remote sensing technology for interpreting landslides provides a more convenient and efficient landslide inventory. In the landslide inventory, most of the sample strategies representing the landslides are point and polygon, and the most frequently used sample subdividing strategy is random sampling. The scale effects, lack of geographic consistency, and no standard are key problems in landslide conditioning factors. Feature selection is used to choose the factors that can improve the model’s accuracy. With advances in computing technology and artificial intelligence, LS models are changing from simple qualitative and statistical models to complex machine learning and hybrid models. Finally, five future research opportunities are revealed. This study will help investigators clarify the status of LS research and provide guidance for future research.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Landslides are the most common natural hazards and cause damage to infrastructure and natural ecosystems; they result in serious casualties and tremendous property losses and affect the sustainable development of the human living environment in mountainous regions (Lee et al. 2007; Hungr et al. 2014; Sassa, 2019; Ling et al. 2021). Therefore, the effective prediction and susceptibility mapping of landslides are regarded as urgent tasks to reduce the related detrimental impacts, which is of great significance for promoting the sustainable development of society and the environment (Zhao et al. 2019). Landslide susceptibility (LS) assessments have been performed since the 1970s to solve practical problems at the small-catchment, regional, national, and global scales (Neuland 1976; Lin et al. 2017; Tang et al. 2020). Essentially, LS is defined as the likelihood of landslide occurrence in a given study area on the basis of the local environmental conditions by predicting where landslides are likely to occur (Brabb 1984). All LS approaches and methods are generally based on the following assumptions: (1) The deformation and failure signs of landslides can be identified through field investigation or remote sensing images; (2) conditions that affect landslide occurrence are directly or indirectly linked, and thus, they can be used to build predictive models (Reichenbach et al. 2018); and (3) future slope failures will be more likely to occur under the conditions that led to previous instabilities (Kanungo 2015).

In recent years, geographic information system (GIS) technology has been extensively used to produce LS mapping because of the strong capability of GIS for spatial data collection, storage, processing, and visualisation (Bui et al. 2012; Ling et al. 2022). Generally, LS calculations based on GIS environment are performed in four complex steps, including (i) landslide inventory preparation, (ii) conditioning factor selection, (iii) model construction, and (iv) model validation and evaluation (Pradhan 2011; Huang et al. 2022a). LS approaches can be broadly categorised into empirically based, process-based, statistically based, and machine learning methods. The empirically based method (e.g. heuristic approach (HA), fuzzy logic (FL), and multicriteria decision analysis (MCDA)) is an indirect and qualitative approach that relies on expert opinions and judgements to rank and weight the instability factors. The process-based method (e.g. stability index mapping (SINMAP)) is based on a physically based modelling scheme to construct the physical process for landslide occurrence, which can compute the safety factor using a range of geotechnical, topographic, and hydrological parameters (Kim et al. 2014). The statistical models (e.g. information value (IV), statistical index (SI), certainty factor (CF), logistic regression (LR), and frequency ratio (FR)) rely on statistical analysis theory to reveal the spatial relationship between variables and landslides. Finally, machine learning attempts to build LS models by learning from complex data without banking on rules-based functions (Merghadi et al. 2020). Generally, machine learning algorithms can be divided into supervised learning (e.g. artificial neural network (ANN), decision tree (DT), random forest (RF), support vector machine (SVM), and naive Bayesian (NB)) and unsupervised learning models. Supervised learning can handle classification problems that rely on learning from labelled training data, whereas unsupervised learning attempts to predict landslides in unlabelled data (Chang et al. 2020).

In the past two decades, numerous investigators have developed models and techniques to construct maps of LS worldwide and have formed an abundant literature base. The literature, which is composed of several hundred papers, has provided a valuable overview of LS for scholars. In these studies, only a few investigators have attempted to conduct scientific reviews. In general, these reviews can be classified into the following four categories: (1) a summary of the methods of generating landslide inventory maps (Guzzetti et al. 2012; Jaboyedoff et al. 2012; Scaioni et al. 2014) and discussion of parameters derived from and/or used with digital elevation models for LS (Saleem et al. 2019; Kakavas & Nikolakopoulos 2021); (2) systematic and critical reviews of LS assessment systems that discuss landslide inventory mapping, mapping units, conditioning factors, and the different models used while also exploring the intrinsic and/or specific advantages and disadvantages of these approaches (Guzzetti et al. 1999; Wang et al. 2005; Lee & Pradhan 2007; van Westen et al. 2008; Pardeshi et al. 2013; Kanungo 2015; Saleem et al. 2019; Shano et al. 2020); (3) an overview of the availability of machine and deep learning techniques for landslide detection and/or LS assessment (Huang & Zhao 2018; Ma et al. 2021; Mohan et al. 2021); and (4) a discussion of the methods for LS model fitting and for the evaluation of the models’ prediction performances (Brenning 2005; Begueria 2006; Chacon et al. 2006).

Although the above reviews provide valuable insights for the LS field, most of them may tend to be qualitative and subjective analyses. With a growing body of literature, the knowledge structure of the domain is not completely provided in these reviews. For example, it is difficult to answer the following questions using traditional review techniques: (i) What is the trend in terms of the number of publications in this domain? (ii) How are the most-productive and influential stakeholders (authors, institutions, countries, and journals) interconnected in this field? (iii) What are the evolutionary patterns of research hotspots? and (iv) How can the dynamic development of research frontiers be tracked? However, this information is very useful for investigators to understand the structure of this domain. Bibliometric analysis, as a modern technique in computer engineering, database management, and statistics, has become a prominent method for analysing published literature (Qin et al. 2022). It utilises a scientific and structured method to quantitatively analyse the distribution structure and internal relationship among numerous publications to determine the research hotspots and assess the development trends in a certain field (Chen 2017; Zhou & Song 2021). It has been applied to identify current statuses and development trends and assess advanced topics in the landslide (Wu et al. 2015; Yang et al. 2019), marine geohazard (Camargo et al. 2019), natural hazard (Fan et al. 2020), and deep learning (Li et al. 2020) domains. The relevant conclusions are significant for researchers to determine the key areas, explore future research directions, and pursue cooperation with other institutions or countries in a particular research domain. Previous literature reviews in the LS field, such as Pourghasemi et al. (2018), Reichenbach et al. (2018), and Lee (2019), reviewed the status of LS according to the authors, journals, study areas, landslide inventories, conditioning factors, models, model evaluations, and number of publications based on articles published from 2005–2016, 1983–2016, and 1999–2018, respectively. These reviews spend considerable time manually collecting information about LS, which may cause the loss of certain key information. Furthermore, these three reviews do not analyse the advantages and disadvantages of certain methods used in LS (e.g. modelling methods and model fit/prediction performance evaluation methods). In addition, Budimir et al. (2015) and Merghadi et al. (2020) reviewed the application of LR and machine learning in LS based on articles published from 2001–2013 to 2000–2019, respectively. Both studies mostly focused on a particular model or method. Significantly, these five reviews covered an inadequate number of articles and lacked recent research findings. Moreover, cooperation network, co-citation network, and keyword co-occurrence analyses have not previously been adopted to investigate GIS-based LS research, which results in an incomplete understanding of this domain. Therefore, further systematic literature reviews that explore research expert contributions, evolution, themes, and future scholarly opportunities in the LS field are needed.

To enrich the study of GIS-based LS, a systematic and objective bibliometric analysis is conducted on a sample of 1142 publications obtained from the Thomas Reuters Web of Science Core Collection (WoSCC) database from 2001 to 2020. In addition, we also perform a content analysis on the key information (e.g. study area, landslide inventory, conditioning factors, mapping units, susceptibility models, and validation methods) from these 1142 publications to further enhance the objectiveness and comprehensiveness of the bibliometric analysis, thus identifying the research frontiers and trends in the GIS-based LS field. The contributions of this paper include combining bibliometric and content analyses to (i) present publication trends in GIS-based LS from 2001 to 2020; (ii) identify the influential authors, institutions, countries and journals; (iii) analyse the collaboration relationship of authors, institutions, and countries; (iv) reveal the main research hot themes and dynamic developments; (v) discuss the advantages and limitations for key information of LS research; and (vi) address the challenges ahead and research directions. Accordingly, it will help professional and nonprofessional investigators elucidate the status and development trends, the core author groups, and the study hotspots of LS research in the last two decades, providing guidance for future research.

Materials and methodology

Data sources and filtering strategies

Peer-reviewed publications between 2001 and 2020 were retrieved online through the WoSCC database until January 29, 2021. The search and analysis statistical flow charts are presented in Fig. 1. The search keywords were entered into the database as follows: Topic: (landslide susceptibility) AND Topic: (GIS) AND Language: (English) AND Document types: (article, review). Through this step, a “large database” of 1837 publications was acquired. Then, we refined the search results through the following three filtering steps. First, according to the final type of publication, we excluded publications with early online access (41 publications). Second, we checked the titles, abstracts, and keywords and excluded articles from the flood (47 publications), land subsidence (17 publications), snow avalanche (5 publications), gully or soil erosion (47 publications), and groundwater (35 publications) susceptibility domains, which identified 1645 valid records. Finally, the data were downloaded directly from the literature database, and we carefully read every publication and excluded those about landslide vulnerability and risk assessment. A total of 1142 publications in which GIS-based LS generally included the abovementioned four main steps were selected (Appendix Table S1).

Analysis methods

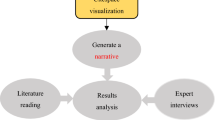

A bibliometric analysis was conducted using HistCite (Garfield et al. 2003) and VOSviewer (version 1.6.15, van Eck & Waltman 2010) software. HistCite software can analyse the trends in the field of GIS-based LS and calculate the total local citation score (TLCS). The TLCS is the number of times that the papers by an author in the GIS-based LS database were cited by other papers in the database (Zhang & Chen 2020). The collaboration of authors, institutions, and countries and keyword co-occurrences were analysed using a social network in VOSviewer (Tao et al. 2020). A unified approach to mapping and clustering techniques is used to study the structure of a network. VOS (visualisation of similarities) is a distance-based map, and the idea of the mapping technique is to minimise a weighted sum of the squared Euclidean distances between all pairs of items (van Eck & Waltman, 2010). VOSviewer uses the association strength algorithm to measure the similarity between nodes and determine the thickness of the connection between nodes. In social network maps, the node size indicates the number of items (e.g. counties, institutions, authors, and keywords), and the links that connect the nodes denote cooperative relationships (Xiao et al. 2022). The thicker the line is, the greater the relationship between the nodes is (Yang et al. 2020). The clustering technique proposed in VOSviewer is a kind of weighted variant of modularity-based clustering, which contains a resolution parameter γ (Waltman et al. 2010). This clustering operates based on the same principles as node positioning (Leydesdorff & Rafols 2012). The parameter γ can be changed interactively to overcome the problem that a small cluster cannot be identified. The cluster results are automatically coloured into groups to facilitate the interpretation of relationships. The clustering analysis reveals research themes.

To identify the research content and explore the research trends, systematic content analysis was adopted for the literature data. First, six critical datasets related to GIS-based LS mapping were extracted and included in the studied database, including the (1) study area, (2) landslide inventory, (3) conditioning factors, (4) mapping units, (5) susceptibility model, and (6) model fit/prediction performance evaluation data. We then counted the number of these parameters by year and divided them into three time periods (2001–2010, 2011–2015, and 2016–2020) for a comprehensive analysis based on the number of articles.

Results

Publication trends

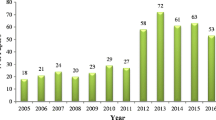

Figure 2 describes the annual number, total citations, and average citations of the research publications related to GIS-based LS mapping over the past 20 years. It is evident that an increasing interest in LS research and the relationship between the publication year and the cumulative number of publications are exponential. The number of publications slowly increased from 2001 to 2009, with fewer than 40 publications each year. A steady increase in the number of publications between 2010 and 2015 can be observed, but there were fewer than 80 publications per year. Subsequently, the number of publications dramatically increased, reaching 151 in 2020. In the 20-year period, a total number of citations of publications in 2010 were the highest (5610), but the average number of citations of publications reached a maximum (225) in 2005. One factor that contributed to the development of the field may be that governments and decision-makers need LS maps as valuable decision-support tools in land use infrastructural planning and management (Ciampalini et al. 2016). Second, the “Guidelines for Landslide Susceptibility, Hazard and Risk Zoning for Land Use Planning”, published by the Joint ISSMGE, ISRM and IAEG Technical Committee on Landslides and Engineered Slopes in 2008 (Fell et al. 2008), unified and standardised this field. Third, the rapid development of GIS, digital photogrammetry, global positioning systems, digital image processing, and artificial intelligence reduces the technical threshold and increases the availability of data (e.g. digital elevation data and landslide inventories), which drives more researchers to conduct more in-depth research (van Westen et al. 2008). GIS technology with a strong capability to visualise spatial data and 3D spatial analysis has made great contributions to the development of this field. The application of GIS involves any stage of LS assessment. For example, GIS can obtain terrain parameters, conduct overlay analysis between landslide and terrain parameters, and implement bivariate statistical modelling. Furthermore, with a general increase in the number of academic publications and journals related to geoscience and the environment over time, such as Land, the number of articles in this field has also increased. We believe that if the number of journals and/or researchers decreases, the number of publications may also decrease.

Bibliometric analysis

Influential authors

Significantly, the analyses of cooperation networks of authors, institutions, and countries in VOSviewer are not confined to the first authors and their institutions and countries, but all signed authors, institutions, and countries are included. According to the 1142 publications, a total of 2570 authors have contributed to GIS-based LS research. However, 72.41% of all authors published only one paper, 29 authors (1.13%) published more than 10 papers, and 12 authors (1.05%) published more than 20 papers. The top 10 most-productive authors together published 457 articles (Table 1), which accounted for 40.02% of all articles. This finding suggests that although many investigators are involved in LS work, there are very few productive authors. Perhaps these investigators have switched research fields or focused on other topics, and only a few scholars have focused on one field. The most-productive and cited author is Biswajeet Pradhan from the University of Technology Sydney, with 91 publications and 10,898 citations, followed by Saro Lee from the Korea Institute of Geoscience and Mineral Resources (KIGAM), with 73 publications and 7037 citations, and Dieu Tien Bui from Duy Tan University, with 64 publications. Of them, Saro Lee, Wei Chen, Binh Thai Pham, Biswajeet Pradhan, and Dieu Tien Bui are the most-productive first authors with 31, 26, 20, 19, and 17 publications, respectively. This may be because with the development of their labs, researchers manage more graduate students, and more articles may change first authors to corresponding authors or other signed authors. Candan Gokceoglu from Hacettepe University is the most-cited author on average, with 134.67 citations per paper. Notably, six of the top ten authors are from China, Iran, and Vietnam. The research interests of Biswajeet Pradhan focus on the field of GIS, remote sensing and image processing, machine learning and soft-computing applications, and natural hazards and environmental modelling. Based on average citations, Biswajeet Pradhan’s articles rank second, indicating that the overall quality of the articles published by Pradhan is high. In his earliest research as the first author (Pradhan et al. 2006), he used remote sensing data to obtain the stress orientation and terrain variables, and then the SI model was used to predict landslides. Since then, as first author, he has published more relevant articles from 2008 to 2014, and the study area was Malaysia. Of the 10 most-cited publications in the field of GIS-based LS (Appendix Table S2), Pradhan participated in four, two as first author, indicating his significant influence in this domain. In his most-cited publication (Pradhan 2013), he compared the predication ability of different factors in the DT, SVM and adaptive neuro-fuzzy inference system (ANFIS) models. Saro Lee contributed the three most-cited publications, two of which were published by the first author. For example, he discovered that the FR model has better prediction accuracy than the LR model for predicting landslides in Malaysia (Lee & Pradhan 2007). Dieu Tien Bui also contributed the most-cited article (Bui et al. 2016), in which he introduced a framework for the training and validation of shallow LS models using the latest statistical methods.

The threshold for the number of publications was at least 5, with a total of 125 authors meeting this criterion; this is illustrated in Fig. 3. Figure 3 indicates that authors can be grouped into 6 categories based on cluster analysis with different colours for international cooperation, total number of links (TNLs) and total link strength (TLS). Generally, clusters of the same and different colours show domestic and international cooperation, respectively. TLS indicates the total strength of the links of an item to other items. When the TLS is larger, there is more co-authorship between a given author and other authors (Yang et al. 2020). The first key groups (green) can be identified around Biswajeet Pradhan in Fig. 3, where the largest TNLs of 45 and the second TLS value of 195 are displayed. This group mainly comprises Malaysian scholars. Their articles were mainly published from 2010 to 2015, and the modelling methods are primarily traditional machine learning and statistical methods (Pradhan & Lee 2010a). A second key group (blue) mainly includes scholars from Vietnam and India, such as Dieu Tien Bui (TNLs = 30, TLS = 222, Table 1), Binh Thai Pham (TNLs = 27, TLS = 160) and Indra Prakash (TNLs = 8, TLS = 49). Their research is mainly concentrated in the last 5 years, and the models adopted are mostly machine learning, deep learning, and ensemble models. The third key group (red) mainly includes Wei Chen (TNLs = 38, TLS = 154) and Haoyuan Hong (TNLs = 8, TLS = 49) from China. They proposed an LS modelling and optimisation system based on the optimisation of conditioning factors, mapping units, and model parameters under multisource and heterogeneous conditions (Hong et al. 2018). In addition, the cluster centred on Saro Lee (TNLs = 30, TLS = 126) is another key group in purple, comprising Korean scholars. They generally used statistical models and machine learning to predict shallow landslides caused by rainfall (Lee et al. 2006). The top 10 authors have exhibited direct or indirect cooperation, and co-authorship is common. For example, the groups led by Biswajeet Pradhan and Saro Lee have continuously focused on GIS-based LS, and they have collaborated with one another on 13 papers.

Influential research institutions

The 1142 retrieved publications involve 948 institutions. Table 2 shows the top 10 most-productive institutions, which together account for 451 publications and 39.49% of all publications. China and Iran have more research institutions (6 institutions) in this group than other countries, which suggests that a small number of institutions dominate this field. KIGAM is the most-productive and most-cited institution, with 77 publications and 7511 citations, which has promoted the development of this field. The institution has focused on the verification of landslide susceptibility mapping since 2001 (Lee & Min 2001), and the most commonly used verification methods are the receiver operating characteristic (ROC) curve and field surveys at an early stage. University Putra Malaysia ranks second in total publications and citations but first in average citations (124.11); it usually uses heuristic models, statistical models and machine learning models to predict landslides (Pradhan et al. 2008). The Chinese Academy of Science has published the third most publications (54), with 2292 citations, but the average number of citations ranks almost the lowest. This indicates that the overall quality of articles published by China needs to be improved. Heuristic, statistical, and machine learning models are frequently used by this institution to predict landslides (Yi et al. 2019). The co-authorship network for institutions that have published GIS-based LS studies with a > 10 publication threshold is shown in Fig. 4. KIGAM is the core of the yellow cluster with 29 TNLs and 133 TLSs. The purple clusters are the centre of University Putra Malaysia for the main geographical co-institutions in Malaysia. The red clusters represent co-institutions from China, such as the Chinese Academy of Science, Xi’an University of Science & Technology, and China University of Geosciences. The institutions that form the core of the green cluster are from Middle East and Southeast Asian countries, such as Duy Tan University, which has 32 TNLs and 150 TLSs. The blue clusters are mainly collaborations among Sejong University, Shiraz University, and Islamic Azad University. The dominant co-institutions occur not only at the national level but also at the international level (Fig. 4).

Geographical distribution and international cooperation analysis

The geographical distribution of the number of articles published in the 79 countries is shown in Fig. 5. The map clearly shows that there are clear geographical clusters of high academic activity related to GIS-based LS. The most-productive countries are mainly in Asia, Europe, and North America, and most published papers are concentrated in a few countries with frequent geological disasters. China is the most-productive country, with 282 publications, accounting for 24.69% of all articles. The next most-productive countries are India, Iran, and South Korea, with 154, 148, and 139 publications, respectively. The remaining countries among the top 10 most-productive countries are Malaysia (8.32%), Turkey (8.23%), Italy (7.88%), Vietnam (7.18%), the USA (7.09%), and Norway (4.38%). The top 10 countries accounted for 66.72% of the global total document volume with the development of the economy, which means there was an increasing focus on geohazards in these countries. The number of articles of these countries or territories is not equal to the number of LS study areas in a country or territory. This is because the country or territory frequency statistics are based on all the signed authors’ affiliations in the articles. Some articles have similar contents, such as the same prediction models being used in different study areas or different models being used to evaluate the LS of the same study areas. Therefore, the information of the study area, landslide inventory, conditioning factors, LS model, and evaluation method for each publication are extracted to reveal the differences in the field, and the results are shown in Section Content analysis.

The network of country collaboration for GIS-based LS studies for countries that meet the publication threshold of > 5 articles is illustrated in Fig. 6, which can be divided into six clusters. The largest cluster (red) is led by China, which occupies the central position on the map and forms an international collaborative network with 27 countries (TNLs = 27), including Australia, the UK, and Saudi Arabia. The China cluster maintains co-authorship ties with the other five international collaborative networks (Fig. 6). The blue cluster reflects an international collaboration network with three main nodes with the centres of India, Italy, and Germany. In the bottom-right part of the map, two clusters (green and yellow) include a variety of countries that are mostly located in Asia and Europe, with abundant connections between these clusters. Iran and Malaysia have the highest number of collaborations, with 41 joint papers in the yellow clusters. The cluster at the top of the diagram (purple) includes countries such as the USA, Mexico, and Poland. However, the remaining cluster (cyan) only includes Norway and Vietnam, which cooperated on 38 publications. It is apparent that the main collaborations between countries in this field include visiting scholars, visiting Ph.D. students, postdoctoral exchanges, and affiliation adjustments, such as Biswajeet Pradhan, who changed his affiliation at least 4 times at the Dresden University of Technology, University Putra Malaysia, Sejong University (South Korea), and the University of Technology Sydney (Australia).

Analysis of published sources and highly cited publications

To identify the journals that are most influential and highly published in this domain and to help investigators find suitable journals for their articles, the number of documents from different publication sources and the corresponding citation parameters were analysed. From 2001 to 2020, 183 journals published 1142 articles related to GIS-based LS, which included 92 journals that published only one article in this field.

The top 15 source publications in terms of the number of publications are listed in Table 3, and these journals published 63.92% of all articles in the last two decades. Environmental Earth Sciences (impact factor = 2.748) published 128 publications, which accounted for 11.21% of the total publications and made it the most predominant journal, as reflected by the maximum TLCS (3516) and average citations (183.65). The next most popular journals were Natural Hazards (92 publications) and Geomorphology (77 publications). Geomorphology (impact factor = 4.139) had the highest number of citations (8291) and the third-highest number of average citations (107.68) among the top 15 journals. Landslides (64 publications) had the third-highest number of citations (4715), and Computers & Geosciences (22 publications) had the second-highest number of average citations (142.59). Obviously, studies of GIS-based LS meet the requirements of these journals that involve earth science or algorithms.

The top 10 most-cited publications related to GIS-based LS are displayed in Appendix Table S2, indicating that these publications received 4993 citations and 2201 TLCSs. Of the top 10 most-cited articles, three were published in Geomorphology. The most-cited article was published in Geomorphology by Ayalew and Yamagishi (2005), with 890 citations and 435 TLCSs. It attempts to extend the application of LR combined with bivariate statistical analyses for LS mapping, which has a notable effect on the LS field and provides an integrated method for LS. We found that highly cited journals seem to prefer to publish new algorithms or integrated models in this research field. Therefore, investigators can easily publish articles in high-quality journals to promote this field for rapid development and obtain high citations if their results provide ground-breaking findings.

Co-occurrence network analysis of the main keywords

In this analysis, a total of 2051 terms were identified by VOSviewer from the keywords, titles, and abstracts of the 1142 articles. To eliminate the interference caused by some research topics, we removed the terms GIS and LS mapping. To assess the temporal evolution in the field, Fig. 7 shows a temporal overlay of the keyword co-occurrence map, and Table 4 lists the top 20 terms used in this research domain during three periods (2001–2010, 2011–2015, and 2016–2020) from 2001 to 2020.

The primary terms before 2010 included certain study areas, LS models, zonation, validations, and predictions. A validation and zonation of the results were considered in this period (Lee 2005). The LS models included LR, FR, ANN, and IV. The main study areas were Lantau Island (China) (Dai et al. 2001), Turkey (Yalcin & Bulut, 2007), Apennines (Italy) (Clerici et al. 2010), Malaysia (Zulhaidi et al. 2010), and Boun (South Korea) (Lee et al. 2003). We note that these sites are located in urban areas adjacent to mountains and by the sea. Because of the rapid expansion of cities, slope instability is often triggered by rainfall or extreme climate. For example, over 800 landslides occurred on Lantau Island, China, after the 1993 Ira typhoon (Dai & Lee 2001). Therefore, there have been many related studies to manage urban construction. Some study-scale terms, such as area, basin, island, region, and mountains, were also common keywords before 2010.

From 2011 to 2015, conventional models, such as LR, FR, ANN, and IV, were still popular methods to predict shallow landslides, rainfall-induced landslides, and debris flows. Only a few new or more intensely studied research topics emerged in this period, which include conditional probability, AHP, FL, and SVM. The study areas were the Wenchuan earthquake-impacted areas in China (Xu et al. 2012), the lesser Himalayas and Himalayas in India and Nepal (Das et al. 2011), and the south coast of the Black Sea in Turkey (Ercanoglu & Temiz 2011). These sites are in mostly tectonically active zones and alpine gorge areas that are prone to landslides. In addition, different sampling strategies were considered for LS mapping (Sujatha et al. 2012).

In the third period (2016 to 2020), the research topics were similar to those in the previous two periods. Thus, this period signifies a continuation of the research topics. Notably, novel LS models that use machine learning, DT, RF, RoF, ensemble models, hybrid or integrated models, neural networks, and ANFIS received increasing attention in GIS-based LS studies during this period. The study sites are mainly at the province scale and are located in the Three Gorges Reservoir in China (Zhou et al. 2018), Hoa Binh/Yen Bai Province in Vietnam (Pham et al. 2018), and some neighbouring provinces of the Alborz Mountains in Iran (Aghdam et al. 2016).

Content analysis

Study area

Information on the study areas, such as the country, location (latitude and longitude), number of study areas, and spatial extent, was extracted from 1142 publications. However, some articles fail to provide the location and extent of the research areas directly. The latitude and longitude are determined in Google Earth based on the location names of the research areas described in the articles or the location names marked in the location map of the research areas. These spatial extents are calculated by the polygon drawn by the unknown extent map in GIS and the scale provided in the article. A total of 1198 study areas were extracted from 1142 publications, and the numbers of articles with 1, 2, 3, and 4 study areas were 1105, 20, 15, and 2, respectively. The 1142 articles in the database include 4 country group-level, 1 continent-scale (Africa) (Broeckx et al. 2018) and 5 global-level study areas (Hong et al. 2007a, b; Hong & Adler 2008; Lin et al. 2017; Stanley & Kirschbaum, 2017). The remaining 1188 study areas in the remaining 1132 publications were distributed in 72 countries. Based on the longitudinal and latitudinal coordinates given in the publications, the spatial distribution of the study sites is presented in Fig. 8. We find that the distribution of study sites has a significant geographical bias. The study areas are mainly distributed in China (257 sites), India (126 sites), Iran (97 sites), South Korea (89 sites), and Italy (81 sites), which are all located in Asia and Europe. In the first decade of the twenty-first century, the hotspots in the study areas were in Italy and France in Europe and South Korea and Japan in Asia. The study area hotspots shifted to China, India, and Iran from 2011 to 2015. In the last 5 years, i.e. from 2016 to 2020, the hotspots in the study areas were basically the same as those in the previous stage, but some African and South American countries (e.g. Ethiopia and Brazil) were given increasing attention in this field.

After excluding the continental- and global-scale assessments, the distribution size of the remaining 1192 study areas is illustrated in Fig. 9, with a total coverage area of 30.77 million km2. The extent of the study areas varies from 0.05 km2 to 9.6 million km2. The size of the study areas was largest in Asia, particularly from 2011 to 2015. The median size of the study areas showed an increasing trend. Based on the size of the study area, we divided the study area scale for LS assessment into the detailed scale (0 ~ 10 km2), medium scale (10 ~ 100 km2), large scale (100 ~ 1000 km2), regional scale (1000 ~ 100,000 km2), and national scale (> 100,000 km2). The 57 detailed-scale assessments in the database mainly focused on small watersheds and specific areas in South Korea (11), Italy (9) and China (5). The medium-scale LS assessments encompassed 275 study areas, including small watersheds, basins, valleys, towns, and cities in South Korea (62), India (40), Italy (34), Turkey (23), and China (21). Large-scale assessments were the most common, spanning 461 study areas, including large watersheds and cities in China (67), India (63), Turkey (44), Iran (43), Malaysia (33), and Italy (23). Regional-scale assessments were performed in 371 areas and mainly included large watersheds, multiple watersheds, large cities, and provinces in China (150), Iran (40), Vietnam (21) and India (20). Finally, national-scale studies focused on 28 areas, including country groups, countries, and provinces.

Landslide inventory

A study area in an article might have one or more landslide inventories. Most studies (92.74%, 1111 out of 1198 study areas) used a single inventory for a given area, whereas the other 7.26% either used > 2 landslide inventories or did not describe the detailed inventory. Figure 10 shows the geographic distribution of the number of landslides in the 1101 single-inventory cases, whereas the remaining 10 landslide inventories were excluded due to the lack of specific longitudinal and latitudinal coordinates for the national groups, Africa, and global scales. A total of 2,048,882 landslides occurred at 1111 study sites, and an average of 1843 landslides were used in each study area to evaluate susceptibility. Huang et al. (2013) and Dumperth et al. (2016) included the maximum (634,265) and minimum (1) numbers of landslides, respectively, in the evaluations of LS in their study areas. Of the 1111 single inventories, 614 (55.27%) included 1 to 250 landslides, 227 (20.43%) included 251 to 500 landslides, and only 16 contained more than 10,000 landslides.

In addition, the different sampling strategies were applied to prepare landslide inventory maps in the database. Of the 1142 articles, 1116 articles (97.72%) had one sampling strategy, 13 articles (1.14%) used two or more sampling strategies, and the remaining articles did not describe the sampling strategy. Through the understanding of different terms and principles of various sampling strategies, we identified four sampling strategies to determine the landslide boundary, namely, point, polygon, seed cell, and circle. A point-based sampling strategy was used in 55.43% of the articles, which uses the initiation point (5 times), the highest point (5 times), and the mass (587 times) or scarp (34 times) centroid as the landslide location (a single pixel). The polygon-based sampling strategy is the second most used, accounting for 41.77% of the articles. This strategy draws the main scarp zone (38 times), the depletion zone (5 times), or the whole area composed of accumulation and depletion zones (428 times) as the landslide boundary. The other sampling strategies were less frequent, with seed cells used in 1.93% of articles and circles used in 0.61% of articles. A seed cell-based sampling strategy was used to represent the possible prefailure conditions by adding a buffer around the crown and flanks of the present landslides (Suzen & Doyuran 2004). A similar approach is that the landslide representative element is extracted on and around the landslide crown, which basically is the upper edge of the landslide scarp area, the so-called the main scarp upper edge (MSUE) (Clerici et al. 2006). Finally, the circle-based sampling strategy uses a buffer zone around the highest point or the centroid of the scarp/polygon of the landslide as the landslide boundary.

Conditioning factors

A total of 10,980 conditioning factors were involved in 1142 publications. Overall, 1 to 34 factors were used per article, with an average of 9.61 factors. Of the 10,980 conditioning factors, we identified 430 different factors based on the following two main criteria (Reichenbach et al. 2018). First, synonymous terms are grouped together, such as “precipitation” and “rainfall”. Second, variables that have different descriptions but similar meanings are grouped together. For example, “slope position” can be quantified by the “topographic position index”, so “slope position” and “topographic position index” were grouped into the class “topographic position index”. Ultimately, we distinguished 430 different conditioning factors involved in 1142 publications. These factors can be categorised into six main groups, namely, topographical factors, geological factors, land cover (e.g. soil and forest), hydrological factors, anthropogenic factors, and environmental factors (Fig. 11). Topographical factors were used most frequently and reached 4322 usage counters. Geological, land cover, and hydrological factors were used 1861, 1849, and 1752 times, respectively. The numbers of anthropogenic factors sharply increased and were used from 59 to 153 times and then to 387 times in the three periods. Likewise, environmental factors sharply increased from 66 to 136 to 395 times in the three periods. The doughnut analysis reveals that the proportions of the topographical, hydrological, anthropogenic, and environmental factors present increasing tendencies in the three periods, whereas the proportions of geological factors and land cover have degressive tendencies.

Conditioning factors. The horizontal bar chart shows the count of six factor classes used in the 2001–2010, 2011–2015, and 2016–2020 periods. The doughnut shows the percentage of the clusters of variables used in these three periods. The vertical bar chart shows the count of the top 17 factors used per year. TWI, topographic wetness index; NDVI, normalised difference vegetation index

Slope was used most frequently, with 1104 uses in 96.67% of all articles, followed by lithology (926, 81.09%), aspect (911, 79.77%), elevation (702, 61.47%), land use/land cover (699, 61.21%), distance to roads (491, 42.99%), distance to faults (472, 41.33%), distance to rivers (429, 37.57%), rainfall (384, 33.63%), and curvature (368, 32.22%) (Fig. 11). These factors were steadily applied over time in LS assessments. In addition, the soil type, distance to drainages and stream power index (SPI) were used 40, 80, and 148 times in the 2001–2010, 2010–2015, and 2016–2020 periods, respectively.

In addition, the conditioning factors used in different study scales were extracted from 1192 study areas, and the results are shown in Fig. 12. We found that the commonly used factors are differences for the five study scales. Topographical factors are the most applied at all study scales. For the detailed scale (0 ~ 10 km2) and medium scale (10 ~ 100 km2), the second most frequently used factor is land cover (e.g. land use/land cover, soil type, soil depth, and soil cohesion). However, geological factors are the second most applied at the large scale (100 ~ 1000 km2), regional scale (1000 ~ 100,000 km2), and national scale (> 100,000 km2).

Mapping unit

The selection of the mapping unit, as the smallest indivisible unit, is crucial to the accuracy and practicality of LS mapping (Qin et al. 2019). Mapping units were extracted from 1142 articles. Most of the articles (98.69%) used a single mapping unit, the remaining used two or more mapping units, and a total of 1157 mapping units in the database (Fig. 13). These mapping units can be divided into five units: grid, slope, unique condition, watershed, and other types. Grid units were widely adopted in the database, accounting for 95.53% of all articles (1091 out of 1142). The range of the grid size is from 0.25 m ~ 2 km. The grid sizes of 30 m, 10 m, 20 m, 25 m, and 5 m were the most commonly used, accounting for 26.12%, 21.36%, 13.29%, 11.09%, and 9.90% of the 1091 articles, respectively. However, the other mapping units were used less frequently, with slope units, unique condition units, and watershed units used in 2.80%, 1.66%, and 0.61% of the articles, respectively.

Mapping units were produced from digital elevation data with different spatial resolutions. We extracted the spatial resolution of the digital elevation data used in 1142 articles for further analysis. Of the 1142 articles, 1096 articles used a single spatial resolution, 12 articles used two or three spatial resolutions, and the remaining did not describe the spatial resolution. The range of the resolution is from 0.25 m × 0.25 m to 1 km × 1 km, and the resolution data of < 30 m × 30 m are used 1007 times. Thirty-metre digital elevation data are most frequently applied, with 26.62% of the 1142 articles, followed by resolutions of 10 m (19.70%), 20 m (12.35%), 25 m (10.51%), and 5 m (10.51%). These resolutions are basically consistent with the grid units.

Susceptibility models

Based on the literature statistics, a total of 2220 models were used in 1142 articles. A total of 27.88%, 20.36%, 20.81%, and 16.04% of the publications used one, two, three, and four model types, respectively. Based on the 2220 model names as given by the authors and their algorithm principles, we identified the repeated or combined use of 429 different model types. We reclassified these model types into six groups based on an algorithm or model principles as follows: qualitative models (28 types), bivariate statistical models (39 types), multivariate statistical models (29 types), machine learning models (96 types), deterministic models (19 types), and hybrid models (218 types). A hybrid model is defined as the combination of two or more methods (e.g. the combination of machine learning and ensemble learning) (Huang et al. 2022b). Figure 14 shows the six groups of model types and the top 13 model types used from 2001 to 2020. The results show that bivariate statistical models were used most often (562 times), accounting for 25.32% of the model use from 2001 to 2020. Machine learning models (553 times, 24.91%), multivariate statistical models (389 times, 17.52%), hybrid models (331 times, 14.91%), and qualitative models (312 times, 14.05%) were the next most common, and deterministic models were only used 73 times (3.29%). The most frequently used models in the three periods were multivariate statistical models, bivariate statistical models, and machine learning models, respectively. Increasing attention has been given to hybrid models, which increased from 6 times in the first period to 32 times in the second period and 293 times in the third period. Similarly, machine learning models increased from 42 to 75 times and then to 436 times during the 2001–2010, 2011–2015, and 2016–2020 periods, respectively.

Among the top 13 model types in the 1142 publications, LR, one type of multivariate statistical model, was used most frequently, specifically 301 times (26.36%). Bivariate statistical models such as FR (174 times, 15.24%), WoE (109 times, 9.54%), and IV/SI (96 times, 8.41%) were frequently used from 2011 to 2020. Machine learning models such as SVM (121 times, 10.60%) and ANN (94 times, 8.23%) were more common from 2001 to 2020, and RF and DT became increasingly popular in the third period of 2016–2020. ANFIS, as a hybrid model, was used frequently in the 2010–2020 period.

Furthermore, the proportion of different factors and the top 10 most-used factors in the five study scales are shown in Fig. 15. The results indicated that deterministic models (e.g. TRIGRS (Transient Rainfall Infiltration and Grid-based Regional Slope-stability) and SINMAP) are widely applied, and hybrid models are the least used in the detail scale (0 ~ 10 km2). Bivariate statistical models (e.g. FR, WoE, and IV) and multivariate statistical (e.g. LR) models are commonly used at the medium scale (10 ~ 100 km2). Machine learning and bivariate statistical models are used more often in dealing with large (100 ~ 1000 km2) and regional (1000 ~ 10,000 km2) scales. At the national scale, machine learning and hybrid models are frequently applied.

Model performance evaluation

To verify the robustness of the constructed LS models, the geospatial data (landslide inventories and conditioning factors) are usually divided into training and testing datasets or training, validation and testing datasets using holdout split, k-fold cross-validation, and bootstrap sampling. These three datasets have different functions; for example, the training dataset is used to model development and model fit performance, the validation dataset is used to optimise the parameters of the learning algorithms, and the testing dataset is used to obtain the model prediction performance. An analysis of the literature database revealed that 57.97% of all articles divided the input data using a holdout strategy, 1.93% a k-fold cross-validation, 0.44% a bootstrap sampling, 0.18% a combination of the three sample subdividing strategies, and the remaining no divided the data. For the popular holdout split, temporal, spatial, and random sampling techniques have been commonly applied. Temporal validation denotes that the landslide events are divided into training and testing datasets based on time information. When adopting a spatial validation, the landslide dataset was geographically divided into two region groups. In the random sampling, the landslide dataset is segmented based on the proportion between the training and testing samplings. Of the 662 articles that described the model performance validation using the holdout strategy, 565, 52, 31, and 14 articles adopted the random selection, temporal selection, spatial selection, and other techniques (e.g. the combination of split techniques, or Mahalanobis distance), respectively. We found that 563 articles separated the landslide inventory into two groups as training and testing datasets based on a certain ratio by means of a random selection technique and only 9 articles randomly divided the landslide inventory into training, validation, and testing datasets. Regarding the ratio between the training and testing samples, a total of 550 publications used one ratio, and 11 publications used two or more ratios, with the maximum number being 11 ratios. We rearranged the training and testing sample ratios and found that the most common training–testing ratio was 70/30 (287 times), followed by 80/20 (75 times), 50/50 (67 times), 75/25 (58 times), 60/40 (20 times), and 90/10 (19 times).

In addition, different metrics were employed in the articles to evaluate the model fit and the model prediction performance. We found that 33.71% of all articles used one metric to measure the model fit and prediction performance, 56.48% used two or more metrics, and 9.81% did not use a metric. To assess the model fit performance, approximately 40.37% (461 publications) of the 1142 publications used only one metric, 10.16% (116 publications) used two metrics, and 15.50% (177 publications) used more than two metrics, with a maximum of 14 metrics (Guzzetti et al. 2006). The remaining 388 publications (33.98%) did not perform any assessment of the model fit performance. A total of 1603 metrics were used for model fit in 754 publications, which can distinguish 93 unique metrics based on the author’s description. According to the purpose and previous studies (Reichenbach et al. 2018), these unique metrics were arranged into six groups: probability, class label, regression, landslide density, signification test, and others (Fig. 16a). We found that the metrics of probability performance were commonly used in the articles, with 37.87% of 1603 metrics. The output result of the model is a format of probabilities ranging between 0 and 1, and the probabilistic outputs can be used as class predictions. The success rate curve (35.73% of 1142 articles) is the most widely applied metric in classification prediction, followed by ROC (17.16%). The model output category value is discrete, which is represented as a class label (landslide or nonlandslide). The metrics of class label performance are the second most used, which include accuracy (9.81%), sensitivity (8.67%), specificity (6.04%), precision (5.60%), and kappa index (5.17%). Root mean square error (4.47% of 1142 articles), − 2Log likelihood (2.19%), Nagelkerke R2 (1.93%), Cox and Snell R2 (1.58%), mean square error (1.14%), and mean absolute error (0.79%) were commonly used regression problem metrics. For the groups of landslide density, landslide density/percentage is the most used in 10.77% of articles, followed by frequency ratio plots (1.40%). In addition, significance test metrics such as the chi-squared test (2.98% of 1142 articles) can compare the significance difference of the proposed models using the training data, which also reveals the model performance.

Similarly, the results of the metrics adopted to assess the model prediction performance revealed that 738 articles (64.62%) used 1534 metrics to validate model prediction performance, and the remaining (404, 35.38% of all articles) did not perform any model verification. We identified 74 unique metrics, which were also reclassified into six classes (Fig. 16b). The results indicated that the most common were the predication rate curve (36.69% of 1142 articles), ROC (18.48%), accuracy (10.68%), landslide density/percentage (7.88%), specificity (7.79%), sensitivity (7.44%), kappa index (5.69%), precision (5.60), and root mean square error (4.82%).

Discussion

Popular research topics and development trends

Trend of the study sites

Both the keywords and study site statistical analysis indicated that the variation in the study sites and study scale were basically similar from 2001 to 2020; however, the focus of the research site has shifted from developed countries (South Korea, Italy, and Japan) in the period 2001–2010 to a few developing and mountainous countries (China, Iran, India, and Malaysia) in the period 2011–2020. One potential reason for the shift in the study sites may be that landslides occur frequently in mountainous countries. Infrastructure construction in developed countries is relatively mature, which indicates that engineering construction is basically completed. However, infrastructure construction is ongoing in many developing countries. Therefore, LS is needed to guide engineering construction and management in developing countries. Moreover, these developing countries are increasingly focusing on environmental protection and have sufficient funding to research and manage the LS field. For example, the National Natural Science Foundation of China supported the highest number of articles (149), which accounted for 13.05% of all 1142 articles. China is at the centre of this research field with the greatest number of publications and research sites and has launched international cooperation with most countries (Figs. 5, 6, and 8). Many institutions (Figs. 3 and 4), such as the Chinese Academy of Sciences, China University of Geosciences and Chengdu University of Technology, have specialised departments to carry out research on landslide prevention and mitigation and have trained many engineering geological experts, which has promoted the development of this field. Certainly, India, and Iran have also carried out much research in this field. In addition, the number of LS assessments remains low in Africa, South America, and Oceania, which reveals a significant geographical bias. Therefore, we recommend that researchers devote more attention to Africa, South America, and Oceania.

Landslide inventory

Landslide inventory is the basic data used to determine landslide susceptibility, hazard, vulnerability, and risk (Jimenez-Peralvarez et al. 2017). Generally, a complete landslide inventory map contains the location, type, volume, activity, main causes, and occurrence data of landslides. Landslide inventory can fall into archives or geomorphological (e.g. historical, event, seasonal, or multitemporal) inventories according to the mapping type (Guzzetti et al. 2012). A detailed definition of these inventories can be found in Guzzetti et al. (2012). Analysis of the literature database reveals that 1111 out of 1198 study areas used one inventory, which are mainly historical inventories with landslide age not differentiated (e.g. Jaafari et al. (2015); Chen et al. (2018b)) or event inventories with landslides induced by a single event such as an earthquake, rainfall, and snowmelt (e.g. Reichenbach et al. (2014); Dou et al. (2020); Ling & Chigira (2020)). However, seasonal and multitemporal landslide inventories in which landslides are triggered by multiple events over longer periods have rarely been analysed in the literature. This is because analysing seasonal and multitemporal inventories is difficult and time-consuming, requiring abundant resources (Guzzetti et al. 2006; Reichenbach et al. 2018).

(1) Source of landslide inventory

In the literature database, landslide inventories are produced by the following techniques: historical reports, field surveys, and remote sensing analysis. This finding is basically consistent with previous studies (Reichenbach et al. 2018; Zou & Zheng 2022). Landslide information from historical records and literature can be used to prepare an archive inventory, but it lacks sufficient data to support regional landslide mapping. Therefore, it is more suitable for small-scale inventories (< 1:200,000). Through the field investigation of landslide characteristics with the assistance of a global positioning system and drilling technology, detailed landslide information (e.g. type, volume, scarp, accumulation of landslides) was delineated on the topographic map. Field surveys are the best method for preparing large-scale inventories (> 1:25,000). However, they have limitations in mapping a large number of landslides or old landslides, especially in mountainous areas where access is difficult or even impossible (Choi et al. 2012). For instance, landslides are partially or totally covered by forests, which makes it difficult to determine the landslide boundary in the field (Guzzetti et al. 2012). Emerging technology based on remote sensing can quickly and contactlessly obtain surface displacement information in the area covered by remote sensing images, which improves the efficiency and quality of landslide identification. In the literature database, the data sources of remote sensing technology mainly include aerial photographs, satellite images, unmanned aerial vehicles (UAVs), interferometric synthetic aperture radar (InSAR), light detection and ranging (LiDAR), and high-resolution digital elevation models (DEMs). The manual interpretation of aerial photographs and high-resolution satellite images is used to identify landslides according to the shape, size, colour, tone, texture, and site topography of geomorphic surfaces, which is the most used landslide detection technology (Suzen & Doyuran 2004; Lee et al. 2018). The visual interpretation of aerial photography is intuitive and low cost but is usually based on expert experience with uncertainty. A new generation of high-resolution remote sensing images, such as IKONOS (Youssef et al. 2015), Quickbird (Sharma et al. 2011), SPOT 5 (Pradhan & Buchroithner 2010), and World View, offers resolutions ranging from 0.5 to 2 m, which provides a very powerful tool for the quick mapping of regional landslide distributions (Rosi et al. 2018). Recently, Google Earth images have been used to draw landslides due to their easy availability and high resolution (Pourghasemi & Rahmati 2018; Nhu et al. 2020; Pandey et al. 2020). UAV technology is not controlled by the terrain and can flexibly and conveniently obtain high-precision surface information, which can compensate for the limitation of shadows generated by satellite images in alpine gorges. The application of InSAR provides a high precision and allows the coverage of large areas, which contributes to the creation and updating of landslide inventory maps (Rosi et al. 2018). We found that differential InSAR (Pareek et al. 2013), small baseline subset InSAR (Xie et al. 2017), and persistent scatter interferometry (Huang et al. 2020b) have produced a few successful case studies dealing with landslide detection, but these technologies were less common in the literature databases. The limitations of InSAR are that the quality of the results depends on optimal conditions concerning slope orientation, only slow deformation can be detected, and the temporal resolution of historical SAR images is limited (Jimenez-Peralvarez et al. 2017). LiDAR can penetrate through vegetative cover to capture the surface terrain changes and generate a high-resolution DEM, although it is expensive; furthermore, it is difficult to manipulate the point cloud. More LiDAR-derived products, such as shaded relief, slope, and contour line maps, can be calculated by a high-resolution DEM in a GIS environment, which improves the interpretation of landslides (Hess et al. 2017). Therefore, many studies have used LiDAR to generate landslide inventories (e.g. Chen et al. (2013); Jebur et al. (2014); Dou et al. (2019)).

With the rapid development of remote sensing and GIS technologies, remote sensing data are becoming increasingly abundant. The application of remote sensing technology to interpret landslides has the following two trends:

-

(i)

The information source is from a single remote sensing image to multitemporal and multisource images. A single image can prepare a historical or an event inventory, whereas seasonal and multitemporal inventories can be obtained using multiple sets of images on different dates (Guzzetti et al. 2012). In the literature database, we found that some studies have used multisource satellite images to map landslides (e.g. Bui et al. (2018); Wu et al. (2020)), but new technologies, such as spatial analysis of DEM and 3D visualisation of images, are less applied in this process.

-

(ii)

The method to extract landslide information is changed from visual interpretation to semiautomatic/automatic interpretation (Zou & Zheng 2022). Visual interpretation of landslides is still popular in the literature database (e.g. Tanoli et al. (2017); Yi et al. (2020)), but it is time-consuming and subjective. The automatic method is based on the change in morphometric data (e.g. slope angle, surface roughness, semi-variance, and fractal dimension) and spectral parameters (e.g. normalised difference vegetation index (NDVI), spectral angle, principal or independent components) using index thresholding (Shou & Yang 2015), change detection methods (Xu et al. 2013), and machine or deep learning algorithms (Gorsevski et al. 2016) to provide rapid landslide inventory. The automatic method has the advantages of objectivity and high efficiency. Therefore, we recommend the application of an automatic method to regularly update the landslide inventory, which can provide unique information on the spatial and temporal evolution of landslides.

(2) Sampling strategies

An inspection of the literature database reveals that most of the sample strategies representing landslides are points (55.43%) and polygons (41.77%), followed by seed cells (1.93%) and circles (0.61%). This indicates that the sample strategies are not consistent in the landslide inventory. The first reason is that multiple sources provide very heterogenic information about landslides (Canavesi et al. 2020). Second, a standard and recognised operating procedure is not introduced to prepare landslide maps (Guzzetti et al. 2012). Last, identifying the location on the hillslope where the factor triggering the landslides exceeds the stability threshold and makes the hillslope unstable is a difficult problem (Regmi et al. 2014). Landslides may occur anywhere on hillslopes (e.g. hillslope toe, landslide centroid) and then can extend downslope, sidewise, and upslope from the initial location. If a large region has abundant landslides, it would be more difficult to determine the locations of initiations through remote sensing images or field surveys (Wang et al. 2014). However, while the locations of landslide initiations are not accurately determined, a significant uncertainty can be introduced (Regmi et al. 2014). Therefore, adopting an appropriate sampling technique is necessary to minimise the uncertainty and generate a reliable result (Bordoni et al. 2020).

In the point-based sampling strategy, there seems to be a consensus on representing the area of landslides by the pixel unit at the centroid locations of landslides. This location is easy to obtain in the GIS environment, which only needs to convert landslides mapped as polygons into centroids. In addition, some studies used data from centroids of the landslide scarps or detachment zones and the highest locations of landslides (Park & Chi 2008). For polygon sampling, inventories of complete landslide areas (from scarps to accumulation zones) are far more extensive. This may be due to the difficulty in dividing the accumulation zones and the depletion zones. In some studies, only the scarps, the depletion zones, the detachment zones (Das et al. 2012), or the deposit areas (Mahalingam et al. 2016) are analysed to determine LS zones. What is the best sampling strategy? Although there is no agreement on the sampling strategy, we can develop insights from previous studies. For instance, some studies (Simon et al. 2017; Zezere et al. 2017; Pourghasemi et al. 2020) show that the centroid of the landslide rupture zone performs best, followed by the landslide centroid and landslide polygon in sampling strategies. Under the condition that landslides are small in size, a single point per landslide located in the centroid of the landslide rupture zone or in the landslide centroid can minimise possible heterogeneity of inducing factors within the landslide boundary, which is sufficient to produce a better prediction result (Zezere et al. 2017). According to Regmi et al. (2014), when the sizes of landslides are large, landslide scarps perform better than point sampling in generating reliable results. Similarly, the comparative result of the effect of different sampling strategies from Yilmaz (2010) showed that the scarp is better than the seed cell and point sampling strategies. In addition, Conoscenti et al. (2008) found that LS assessment using scarps instead of landslide areas for rotational slides generates better prediction results, whereas using areas uphill from crown instead of scarps for flow slide landslides can obtain a better result. In conclusion, the sampling strategies of landslide scarps or rupture zones may obtain a better prediction result because they are the most diagnostically unstable landforms.

Therefore, we recommend that landslide scarps or rupture zones as far away as possible be used to sample landslide data. The selection of sampling strategies can be guided by the following conditions: (i) the landslide types, (ii) the positional accuracy of landslides; (iii) the scale of the study area; and (iv) the limitations of software and hardware.

(3) Sample subdividing strategy

The number of landslides in a landslide inventory usually ranges from 1 to 250, with the areas of the study sites being the nonlinear correlation in the 1142 publications. A total of 60.51% of all articles used a sample subdividing strategy to divide the landslide dataset into training and testing sets. The different samples input into the model have different results, and the quantity of landslide samples available and sample subdividing strategy determine the accuracy of the LS mapping. For example, Maxwell et al. (2020) summarised that increasing the training sample size tended to improve the model accuracy, with the largest improvement at lower sample sizes (1 ~ 250); however, this benefit diminishes as the sample size increases. Random selection is the most frequently used sample subdividing strategy. It allows different ratios to be easily calculated using Hawth’s analysis tool for ArcGIS. A random sample cannot guarantee selecting the best subset to train a model. Compared to the whole samples, the distribution of the training samples should not change much. The inconsistent results are mainly due to the differences in the training samples created by a random process, without investigating the attributes of the created samples (Sameen et al. 2020a). To improve this method, a stratified random sample is applied to each data layer. Landslide/nonlandslide training and testing datasets were constructed by this approach to allow all classes of variables to be represented with the same proportion in these constructed datasets (Eker et al. 2015). This can alleviate the problem that excessive amounts of samples would lead to overfitting of the model. When the sizes of the available original samples are limited, stratified random sampling can augment an existing sample to reduce the standard errors of the regional estimates, thus improving the accuracy of the results (Stehman et al. 2011). However, stratified random sampling is rarely studied in the literature database. Some studies attempt to search the best representative dataset by adopting other methods, such as cross-validation, bootstrap, and Mahalanobis distance (Wan 2009; Goetz et al. 2011; Kornejady et al. 2017; Chen et al. 2018a). For instance, when samples come from the same slide event, multiple points within the same polygon could be grouped into the same partition so as not to bias the assessment. Cross-validation was used to randomly partition the landslide dataset into k subsets. One of the subsets is used as testing data, whereas the remaining subsets are used to train the model and optimise the parameters. It is considered the gold standard for machine learning with many advantages, such as effectively reducing the randomness of the train-test split, better evaluating the modelling methods with limited data, and generating a less biased model.

In the literature database, the commonly used training–testing ratios are ordered from more to less: 70/30, 80/20, 50/50, 75/25, 60/40, and 90/10. What is the best ratio? Many studies directly choose a ratio without any proper explanation to predict landslides and show that the results are satisfactory (Luo et al. 2019; Akinci et al. 2020). However, there is no evidence in these studies that the results can be attributed to the subdividing strategy, or the results would not be optimised under alternative strategies (Pourghasemi et al. 2020). Some studies considered different training/testing ratios for landslide modelling to obtain a better division (Mancini et al. 2010; Paulin et al. 2010). For instance, Jaafari et al. (2019) and Sahin et al. (2020) compared the effect of nine sampling ratios (from 10/90 to 90/10, with intervals of 10%) on the accuracy of prediction models and found that the best results were achieved with a ratio of 70/30, and the lowest performance was achieved with a 90/10 ratio. However, Shirzadi et al. (2018) investigated four different sample sizes and different raster resolutions for preparing LS mapping, and the results revealed that the random subspace algorithm obtained the highest prediction accuracy with sample ratios of 60/40 and 70/30 and a raster resolution of 10 m, whereas the multiboost ensemble algorithm achieved the highest prediction accuracy with ratios of 80/20 and 90/10 and a raster resolution of 20 m. Moreover, Vakhshoori & Zare (2018) considered three ratios (50/50, 60/40, 70/30) for landslide prediction and found that none of the ratios meaningfully reduces/increases the validity of the models. Therefore, there is no agreement on which ratio produces better results. However, we noticed that a small training dataset may not cover the spatial variability of the conditioning factors. In contrast, a large training dataset will more likely violate the independent observation assumptions because of spatial autocorrelation (Heckmann et al. 2014).

Therefore, the selection of the sample subdividing strategy must consider (i) the availability of landslide data, (ii) the model type, (iii) the raster resolution, and (iv) the characteristics of the study area. We recommend that when the training dataset is limited, cross-validation and bootstrap subdividing strategies should be used; and when the training dataset is large, stratified random sampling should be considered.

Conditioning factors and their trends

Of the 476 conditioning factors, the use trends of slope, lithology, aspect, elevation, land use/land cover, distance to rivers, distance to roads, distance to faults, and rainfall in the three periods steadily increased with the number of articles (Fig. 11). These findings are basically consistent with Pourghasemi et al. (2018), Reichenbach et al. (2018), and Lee (2019). Topographical factors are the most frequently used in the literature database because these parameters describing the geometric characteristics of hillslope landforms have an important impact on the occurrence of landslides and are easily extracted from DEMs in GIS. Maxwell et al. (2020) revealed that incorporating measures of lithology, soils, and distance to roads and streams did not improve the model performance in comparison to just using 14 topographical factors, which highlighted the value of topographical factors. Therefore, in remote mountainous areas, if geological factors are limited, DEM-derived terrain variables should be used to predict landslides in the preliminary study. In the literature, the range of the resolution of the DEM is from 0.25 m × 0.25 m to 1 km × 1 km, and the commonly used resolutions are 10–30 m. Digital elevation data with a spatial resolution of 30 m are most frequently applied in the literature and are generally available over large spatial extents, such as Advanced Spaceborne Thermal Emission and Reflection Radiometer Global Digital Elevation Model version 3, NASA Shuttle Radar Topography Mission version 3, and Advanced Land Observing Satellite World 3D-30 m. The resolution of DEMs used for LS modelling has increased significantly in recent years, with most of the articles that use DEM resolutions better than 30 m × 30 m. Very high resolution DEMs (< 5 m) derived from the LiDAR, InSAR, and UAV techniques are becoming popular because they are easy to acquire and provide a high level of detail. The source and spatial resolution of DEM files directly affect the quality of the final predictive maps. For instance, Kaminski (2020) found that a 20-m LiDAR DEM obtained a better model accuracy than a 20-m Land Parcel Identification System DEM. Some studies (Jaafari 2018; Arabameri et al. 2019) demonstrated improved precision of results when high-resolution DEMs were utilised, while other studies (Mahalingam & Olsen 2016; Chang et al. 2019) reported that 30 m DEMs provided better LS mapping than their finer counterparts. Due to the scale effects of DEM-derived topographic variables, it remains a challenging task to select an optimal DEM resolution. A coarser DEM has a lower accuracy in describing the terrain, and the secondary derivatives of DEM (such as slope, aspect, and curvature) are highly dependent on resolution. However, very high-resolution DEMs describe the terrain variations at the microscale, which are unlikely to be related to mesoscale processes that induce landslides (Merghadi et al. 2020). Therefore, the appropriate choice of DEM is dependent on the availability of data, the study region area, and the topographic characteristics of the study region.

Geological factors, such as lithology and distance to faults and lineaments, are frequently used in the literature. Generally, these geological data were produced from geological maps with different scales. If the geological boundaries determined by the mesoscale and small-scale geological maps are inaccurate, it would lead to error in the result. Therefore, field verification of geological boundaries is an essential step. The lithology of bedrock is usually described in the geological map, while the surface sediments are rarely mapped into the lithology. However, some rainfall- or earthquake-inducing landslides often occur in these surface sediments. Therefore, LS assessment does not consider the surface sediments, resulting in the uncertainty for the result.

Land cover mainly includes vegetation-, soil-, and land use-related factors. Among them, land use/land cover is the most used, which can be obtained from the visual or automatic interpretation of satellite images. Land use/land cover is a static variable in the short term and a dynamic variable in the long term. Reichenbach et al. (2014) evaluated and quantified the effect of land use change from 1954 to 2009 on LS zoning and found that an increase in unstable zones over a period of 56 years due to the expansion of bare soil and forested areas was destroyed. NDVI quantitatively describes the physical characteristics of the vegetation. We found that the application of NDVI rapidly increased from 2016 to 2020. This specific trend is due to the increased availability of remote sensing-derived products (e.g. NASA’s Landsat satellites and ESA’s Sentinel-2 satellites) (Pourghasemi et al. 2018). Many soil-related factors (soil type, soil depth, soil cohesion, and internal friction angle) are used in the detail scale (0 ~ 10 km2) and medium scale (10 ~ 100 km2) because these parameters need to be input into the deterministic models. The consistency of soil data also affects the use of soil at different study scales. For example, in the USA, detailed soil data were generated at the county level and, therefore, were not consistent between countries.

For hydrological factors, the application of distance to rivers, topographic wetness index (TWI), and SPI increased rapidly from 2016–2020. These three factors were also calculated by adopting the DEMs and certain algorithms in GIS. The river erodes and softens the hillslope toe for a long time, thus disturbing the stability of the slopes. When using distance to rivers, the distance that the river affects the instability of hillslope should be determined.