Abstract

Debris flows are among the most hazardous phenomena in nature, requiring the preparation of susceptibility models in order to cope with this severe threat. The aim of this research was to verify whether a grid cell-based susceptibility model was capable of predicting the debris-flow initiation sites in the Giampilieri catchment (10 km2), which was hit by a storm on the 1st October 2009, resulting in more than one thousand landslides. This kind of event is to be considered as recurrent in the area as attested by historical data. Therefore, predictive models have been prepared by using forward stepwise binary logistic regression (BLR), a landslide inventory and a set of geo-environmental attributes as predictors. In particular, the effects produced in the quality of the predictive models by changing the grid cell size (2, 4, 16 and 32 m) have been explored in terms of predictive performance, robustness, importance and role of the selected predictors. The results generally attested for high predictive performances of the 2, 8 and 16 m model sets (AUROC > 0.8), with the latter producing slightly better predictions and the 32 m showing the worst yet still acceptable performance and the lowest robustness. As regards the predictors, although all the 4 sets of models share a common group (topographic attributes, outcropping lithology and land use), the similarity resulted higher between the 8 and 16 m sets. The research demonstrates that no meaningful loss in the predictive performance arises by adopting a coarser cell size for the mapping unit. However, the largest adopted cell size resulted in marginally worse model performance, with AUROC slightly below 0.8 and error rates above 0.3.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Landslide susceptibility is the likelihood of a landslide occurring in an area on the basis of its local terrain conditions. It estimates “where” landslides are likely to occur without considering either the magnitude of the expected landslides or its temporal probability or time recurrence (Guzzetti et al. 1999). Therefore, a susceptibility model does not only reflect the present instability conditions but also provides a predictive image of the future instability conditions.

Landslide susceptibility modelling based on stochastic methods requires a calibration landslide inventory and the grid layers of a set of geo-environmental attributes, the latter being assumed to directly or indirectly (as proxies) represent those factors that control the slope failures in the study area. By crossing the landslide inventory (the outcome) and the layers of the geo-environmental variables (the predictors), quantitative relationships can be estimated, allowing us to predict and map the future stable/unstable areas, on the basis of the principle stating that “the past is the key to the future” (Carrara et al. 1995).

One of the main characterizing components of a susceptibility model is the appropriate selection of the mapping units. Mapping units are the basic functional spatial elements in which a study area is partitioned and for which a susceptibility assessment method is able to produce a prediction (stable/unstable). Mapping units should be selected on the basis of a trade-off between spatial resolution of input data, adequacy and needs of the final map users (e.g. the scale of the susceptibility map). Among the large set of mapping units has been proposed in scientific literature (e.g. Carrara et al. 1995; Guzzetti et al. 1999; Rotigliano et al. 2011), two main groups of mapping units are mostly used: terrain units (morphological or hydro-morphological units) and regular grid-arranged polygons (typically square cells). Terrain units partitioning, which aims at subdividing the study area into its morphodynamically independent sub-systems (e.g. sub-basins or slope units; Guzzetti et al. 1999; Van Den Eeckhaut et al. 2009; Frattini et al. 2010; Rotigliano et al. 2012), can be performed through expert subjective but time-consuming mapping, unless automatic extraction procedures from a Digital Elevation Model (DEM) are adopted; in that case we have to accept the need to arbitrary blur or dissolve some unavoidable aberrations. Moreover, the use of hydro-morphological units normally conducts to under-exploit high-resolution data such as those which can be locally calculated from a DEM (Guzzetti 2005; Conoscenti et al. 2014). On the contrary, the partitioning of a study area into regular square cells constitutes a simple objective and totally automatic procedure, allowing the model to match the parent spatial structure of a DEM, so that the maximum exploitation of the resolution of all the derived primary and secondary topographic attributes can be achieved. At the same time, even in the case of very shallow landslides, some causes of the failure initiation could be properly defined in a larger and/or not-regularly shaped neighbourhood of a single small cell, so that a model could not recognize the role of some important predictors, as these could be significant only on a larger spatial scale. Moreover, the morphodynamic meaning of a grid cell, especially for large sizes, is somewhat difficult to be directly linked to the morphodynamic of the failure phenomena. However, it is also a matter of processing time costs: high-resolution grid cell partitioning typically produces a number of mapping units which is two–three order of magnitude more (typically, millions) than terrain partitioning (e.g. Van Den Eeckhaut et al. 2009; Rotigliano et al. 2012), which weighs down some of the spatial processing steps in a GIS (Geographic Information System), such us the multiple random spatial extraction of subsets.

The role of the grid cell size in hydro-morphological analysis has been largely faced by several authors (e.g. Dietrich et al. 1995; Wilson et al. 2000; Kienzle 2004; Goméz Gutiérrez 2015). In the framework of landslide susceptibility stochastic models, indeed the topic can be faced from a multi-perspective view. In fact, the grid cell size can control: the cell value of the geo–environmental attributes which are used as predictors (the independent variables); the precision of the spatial coupling between landslides (the dependent variable), mapping units and predictors; and, when exploiting a post-event DEM, the extent to which this is modified by the landslides or, on the contrary, the landslides can be recognized from the morphological features of the earth surface.

In the last 10 years, many studies have been focused on the effects of grid cell in landslide modelling. Classens et al. (2005) explored the effect of using different grid cells (10, 25, 50 and 100 m) in computing some topographic attributes to be included in landslide hazard models, exploiting the approach from Montgomery and Dietrich (1994). Legorreta-Paulin et al. (2010) investigated the effect of the grid cell variation (1, 5, 10 and 30 m) on the cartographic representation of shallow and deep landslides, exploiting a synthetic landslide inventory and two different techniques for modelling (SINMAP: Stability Index MAPpin, and MLR: Multiple Logistic Regression). Lee et al. (2004) compared landslide susceptibility calculated by means of frequency ratio models, using 5, 10, 30, 100 and 200 m resolution data. Tian et al. (2008) applied information model to determine the optimal grid cell size for landslide (slide type) susceptibility assessment depending on differently sized study areas; in particular, they used eleven groups of different grid cell resolutions (5–190 m) in nine study areas having extensions ranging from 62 to 300 km2. Tarolli and Tarboton (2006) evaluated the effects of grid cell size variations on landslide initiation susceptibility modelling by means of SINMAP, and using five different DEM resolutions (2, 5, 10, 20 and 50 m) in a 10 km2 affected by shallow landslides. Penna et al. (2014) evaluated the predictive power of Quasi-Dynamic Shallow Landslide Model (QD-SLaM) to simulate shallow landslide locations in a small part of the Giampilieri catchment, testing four DEM resolutions (2, 4, 10 and 20 m). Palamakumbure et al. (2015) analysed the role of the grid cell size (2, 5, 10, 15, 20, 25, 30 and 40 m) in landslide susceptibility assessment by means of decision trees methods of a 94 km2 area affected by 777 slides.

Although the topic has been widely explored, there are some points which still need further investigations: for example, the previously mentioned studies, only partially focused on the variations of the selected predisposing factors led by the changes in grid cell size, mainly focusing on the global performance of the models. At the same time, differently from the above-mentioned studies, the present research has been focused on the effects for stochastic susceptibility modelling for multiple debris-flows prediction scenarios. Debris flows are worldwide diffused in mountainous areas. They are defined as rapid shallow landslides, triggered on steep slopes by any hydrological phenomena capable to rapidly increase the pore pressure in the weathered mantle (e.g. Igwe et al. 2014; Vilimek et al. 2015).

In the present paper, the relationships between grid cell size and predictive performances for debris-flows susceptibility models are analysed. To cope with this topic, a study was carried out for the Giampilieri catchment (Sicily, southern Italy), which was hit on the 1st of October 2009 by a storm resulting in a Multiple Occurrence of Regional Landslide Events (MORLE; Crozier 2005). For this area, susceptibility models prepared exploiting different grid cell sizes were compared in terms of inner structure (ranking and coefficients of the selected independent variables) and predictive performances (accuracy and robustness). The models were derived by applying binary logistic regression (BLR), based on a before-event DEM and calibrated with the 2009 debris-flows inventory. The investigation is focused in predicting debris-flow source areas, neglecting the runout of the phenomena.

General framework

Setting of the study area

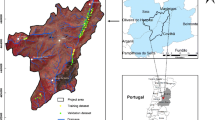

The study area (Fig. 1a) is located in the southernmost part of the Messina Municipality territory, and corresponds to the catchment of the Giampilieri stream. For the spatial continuity, the small secondary hydrographic units, which border the catchment mouth sectors on the coastal area, were also included in the study area and referred to the main hydrographic unit of the Giampilieri stream. The whole study area extends for 10 km2 and includes inhabited areas which are highly exposed to flood and debris-flow hazard being located at the base of very steep slopes (frequently crossing the secondary drainage lines). The main part of the territory is occupied by terraced slopes no longer cultivated and pasture, with wide natural areas with chestnut woods limited to the central part of the catchment.

a Location of the study area; b Geological setting of the Giampilieri catchment (modified from Lentini et al. 2007)

From a geological point of view, the area is located on the Peloritani Mountain Belt (Fig. 1b), which is characterized by the presence of Aquitanian S–SW verging thrusts. The allochthonous pile is formed by several tectono-stratigraphic units (Messina et al. 2004), three of which constitute the geologic structure of the study area. In particular, in the Giampilieri catchment, the outcropping lithology mainly corresponds to paragneiss and mica schists of the Aspromonte and Mela Units, and phyllites and metarenites of the Mandanici Unit. These Units are part of the eo-Varisician basement formed during the Hercynian orogenesis, including also a Meso-Cenozoic cover, which shows Alpine overprint resulting in cataclastic to mylonitic shear zones both in the basement and, locally, in the cover layer (Messina et al. 2004). Starting from the Pliocene, an NNE–SSW extensional tectonics fragmented the Hercynian orogen (Ghisetti and Vezzani 2002; Fiannacca et al. 2008); this active phase is responsible for the recent intense seismicity and uplifting in northeastern Sicily (Lentini et al. 2000).

Both tectonics and regional uplifting are important for the current geomorphological setting of the study area. Indeed, favoured by the tectonically fracturing, the bedrock is very effectively subjected to intense weathering processes, whilst the high uplift rate and the proximity to the coast determine a landscape which is marked by high ridges, limited by steep slopes connecting to deeply incised main and secondary stream valleys at the base. Very steep and small catchments with a highly torrential hydrologic regime have developed both on the eastern and western slopes of the Peloritani Mountain Belt. Although these torrents are usually dry, under strong raining events, due to the high mean steepness of the slopes, the water flow rapidly increases determining frequently floods at the coastal plain sector and causing damage to the infrastructure (especially roads) that are located in the proximities of the river banks.

The climate in the region is a typically Mediterranean Csa (Köppen 1923), with a dry season from April to August and a wet season from September to March with an average yearly rainfall of nearly 900 mm. Locally the weather is influenced by the physiography of the area, the Messina Strait dividing into two parts the Calabria-Peloritani Arc: the Aspromonte and the Peloritani chains situated in Calabria and Sicily, respectively. In fact, both the Ionian and the Tyrrhenian sides of Sicily are exposed to the formation of cumulonimbi because of the complicated orography and the proximity to the warm water of Mediterranean sea (Melani et al. 2013).

The 2009 multiple debris-flows event

The Giampilieri area became sadly known on the 1st October 2009 when thousands of landslides occurred, causing 36 fatalities and hundreds of million euros of damage (Protezione Civile Nazionale 2009). Seven-hundred people were displaced from their territory and still now the poor economy of the villages involved is strongly affected by the event. In particular, on the 1st October 2009, an area of around 50 km2 was hit by a cumulative rainfall event of approximately 160 mm in 6 h which followed two previous rainfalls events on 16th September (76 mm) and 23rd–24th September (190 mm). These last two rains resulted in the complete saturation of the soil (Aronica et al. 2012), so that in the 1st October 2009, thousands of debris flows were triggered in the whole 50 km2 area within less than five hours resulting in a Mediterranean Multiple Occurrence Regional Landslide Event (MORLE; Crozier 2005).

Due to the high water content, as well as the steepness of the slopes and drainage network, a huge number of failures, which mainly activated as shallow landslides, propagated downslope in form of debris flows or avalanches (Varnes 1978; Hutchinson 1988; Hungr et al. 2001, 2014). Once the drainage network was reached, the debris moved downstream onto the main Giampilieri valley, in some cases destroying the roads and the houses of those urbanized areas whose limits intersected the drainage axes.

It is worth to notice that the 2009 storm-triggered landslide event has to be considered as one of the strongest in a time series of similar events which have been recorded during the last century (see Cama et al. 2015 and references therein) in the Giampilieri area. Therefore, given the high recurrence of such events, debris flow susceptibility maps are among the mandatory tools in this sector of Sicily.

Materials

In light of the main topic of the research, high-resolution source data were required for the controlling factors. In particular, a source DEM with 2 m cell size and 0.17 m of vertical accuracy released by ARTA from a LIDAR pre-event coverage dated to 2007 was exploited for calculating all the topographic variables. At the same time, the available 1:50.000 geological map (Fig. 1; Lentini et al. 2007) and the Corine land use map were detailed up to a 1:10.000 scale by means of both field surveys and orthophotos analysis.

The landslide inventory (Fig. 2) was prepared by integrating field and remote survey in the framework of previous researches carried out in the same area (Lombardo et al. 2014, 2015). Soon after the event (5–6 October 2009), a field survey (Agnesi et al. 2009) was carried out for immediate and detailed landslide recognition, aimed at classifying the phenomena and deriving geomorphological landslide models. The systematic debris-flow event inventory (Guzzetti et al. 2012) was then obtained by comparing pre- and post-event high-resolution images.

Debris flow is a specific type of landslide, whose definition (Hungr et al. 2014) is: “Very rapid to extremely rapid surging flow of saturated debris in a steep channel. Strong entrainment of material and water from the flow path”. In a debris flow, it is possible to distinguish initiation (source area), transport and deposition zone. Debris-flow phenomena have to be more clearly distinguished depending on the dynamic that drives the failures, as well as the kinematic of the propagation phase. In particular, on a morphodynamic basis, two types of debris flow can be recognized: channelized debris flows and debris avalanches (Varnes 1978; Hutchinson 1988; Hungr et al. 2001, 2014). However, in the present research, we did not distinguish between the two types under the hypothesis that the relationships between the predictors and the two types of phenomena do not meaningfully differ in the determination of the source mechanisms. In fact, the main task of this research was to produce a map which indicates where a future shallow landslide capable of propagating onto the slope, potentially channelling and reaching the drainage axes, was more likely to activate. For this reason, our inventory is quite different, both in terms of classification and mapping criteria, from similar archives, which have been recently delivered for the same area (Mondini et al. 2011; Ardizzone et al. 2012; Del Ventisette et al. 2012; De Guidi and Scudero 2013). In particular, as shown in Fig. 2a, we decided to separately map each of the source areas even in the case of multiple converging phenomena obtaining an inventory which consists of 1118 single source areas for the Giampilieri basin (Fig. 2; Lombardo et al. 2014). As presented in the Fig. 3, the dimensions of the source areas are characterized by a prevalence of small phenomena, with an average perimeter and area respectively of 87 m and 0.383 km2, with a very elongated shape (length/width ratio centred approximately on 6.60). For each of the source areas, a landslide identification point (LIP) was derived as the highest point along the border of the landslide polygon (Costanzo et al. 2012a, 2014), assuming that it individuates the sector of the slope where the geo-environmental conditions which lead to the past slope failure could be detected.

Methods

Applying a stochastic approach to assess landslide susceptibility requires the adoption of a statistical technique to analyse the relationships between a set of predictors, corresponding to the geo-environmental attributes which control the landslide phenomena, and a dependent variable which is represented by the presence/absence of slope failures. In particular, expert choices are to be taken regarding the selection of suitable statistical techniques, potential predictors, diagnostic areas, mapping units and validation procedures.

In the wide range of stochastic methods which are proposed in the scientific literature (Carrara et al. 1995), BLR results as one of the most adopted (e.g. Guzzetti et al. 2005; Lombardo et al. 2014, 2015), in light also of its suitability in handling nominal and continuous predictors with no need of any variable transformation, so that the final models can be easily interpreted in terms of geomorphology.

As regards the selection of the predictors (e.g. Costanzo et al. 2012a, 2014), geomorphological criteria must be adopted in defining a set of spatial geo-environmental attributes, which can directly or indirectly (as proxies) express the potential landslide controlling factors. Once a set of potential predictors is prepared, some statistical methods assess the importance of each of the variables and restrict the selection to a parsimonious subset of performing ones (e.g. forward/backward stepwise selection or principal components analysis).

The diagnostic areas (Süzen and Doyuran 2004; Rotigliano et al. 2011; Costanzo et al. 2012a, 2014) are landslide or landslide-related areas on a slope, showing the same geo-environmental conditions of those portions where the known landslides activated. Under the assumption that “the past is the key to the future”, a predictive model capable of identifying the spatial distribution of the diagnostic areas is also skilled in depicting a prediction image (the susceptibility map) of the sites where the future landslides will activate. Therefore, the diagnostic areas are the core of the whole stochastic approach as they furnish the constraints for the multivariate relationship between the predictors and the unstable status of the outcome which will drive the regression algorithm in the calibration of the model.

The mapping units (Carrara et al. 1991, 1995, 1999; Guzzetti et al. 1999) are the focus of this research, being introduced in “Introduction” section and further discussed in the following.

Finally, validation procedures (Carrara et al. 2003; Chung and Fabbri 2003; Guzzetti et al. 2006; Frattini et al. 2010; Rossi et al. 2010; Petschko et al. 2014) constitute a mandatory component of a susceptibility assessment method. In particular, the quality of the models has to be assessed in terms of accuracy and precision in the predicted probabilities for both the known and unknown positive and negative cases, as well as of the geomorphological adequacy of the inner structure of the model itself, which is given by the importance and role of the selected predictors.

Binary logistic regression

BLR analysis is a multivariate statistical technique, based on a frequentist approach that is used to investigate and model the response of a binary outcome in relation to changes in a set of independent variables (Hosmer and Lemeshow 2000). BLR proved to be an extremely useful technique in modelling landslide susceptibility (Atkinson and Massari 1998; Dai and Lee 2002, 2003; Ohlmacher and Davis 2003; Süzen and Doyuran 2004; Bai et al. 2010; Yalcin et al. 2011; Costanzo et al. 2014; Lombardo et al. 2014, 2015; Cama et al. 2015) also in comparative studies, in which its model performance has been evaluated with respect to those of other statistical methods (Guzzetti et al. 2005; Mathew et al. 2008; Rossi et al. 2010; Akgün 2012; Vorpahl et al. 2012; Felicísimo et al. 2012; Conoscenti et al. 2015; Lombardo et al. 2014, 2015).

BLR linearizes the relationship between p predictors and the probability π of an outcome x, by defining the latter in terms of a logit function g, which corresponds to the following transformation:

where π(x) is the conditional probability of the outcome (i.e. the event occurs) given the x condition, α is the constant term or intercept, the x’s are the input predictors and the β’s their coefficients. The fitting of the logistic regression model, which is performed by adopting maximum likelihood (L) estimators, allows us to estimate the best intercept and β p coefficients.

Once a BLR model is regressed to match the calibration dataset, its prediction image (Chung and Fabbri 2003) can be directly calculated by combining all the layers of the independent variables, assigning their regressed coefficients to compute the logit and finally exponentiating and rearranging for π(x), obtaining

Based on the maximum likelihood function, the global fitting of the regressed model on the data domain is measured and tested for significance, exploiting the statistic −2LL (negative log-likelihood), which has a χ 2 distribution. The model fitting is also evaluated by exploiting two pseudo-R 2 statistics: the McFadden R 2 and the Nagelkerke R 2. The first is defined as 1 − (L MODEL/L INTERCEPT) being confined between 0 and 1. As a rule of thumb (McFadden 1978), values between 0.2 and 0.4 attest for excellent fit. Nagelkerke R 2 is a corrected pseudo-R 2 statistics, ranging from 0 to 1 (1991).

One of the main advantages in adopting BLR is that this method can accept among the predictors entries of all types (either continuous, dichotomous or polychotomous), simply requiring the designing (Hosmer and Lemeshow 2000) for each nominal variable of a group of binary-derived variables, one for each class. At the same time, the interpretation of binary probabilities for the outcome and linear coefficients for the predictors is very straightforward also in geomorphological terms: the odds ratios (OR), which are calculated by exponentiating the β’s, express the association between each predictors and the outcome, indicating how much more likely (or unlikely) it is for the outcome to be positive (unstable cell) when a unit change of a predictor occurs. Negatively correlated variables will produce negative β’s and ORs limited between 0 and 1; positively correlated variables will result in positive β’s and ORs greater than 1 (Hosmer and Lemeshow 2000). Moreover, by applying stepwise forward selection procedure, only the predictors resulting in a significant increasing of the negative log-likelihood function will be included in the final model. The result is the list of restricted variables that can be submitted to the final BLR, each having its order of importance (i.e. ranking).

The question of whether BLR should be performed either on balanced or on unbalanced datasets of positive (landslides) and negative (not landslides) cases (Atkinson and Massari 1998; Süzen and Doyuran 2004; Nefeslioglu et al. 2008; Van Den Eeckhaut et al. 2009; Bai et al. 2010; Frattini et al. 2010) has recently been debated (e.g. Goméz Gutiérrez et al. 2009; Heckmann et al. 2014). In this research, balanced datasets were prepared, including an equal number of positives (inside the diagnostic area) and negatives (outside the landslide polygons).

An open source software was used (TANAGRA: Rakotomalala 2005) to perform the forward stepwise BLR.

Controlling factors and diagnostic areas

The potential controlling factors were defined in light also of their availability and quality, based on the expected failure mechanisms that were inferred from field and remote landslide surveys and bi-variate analysis of the landslide inventory and the factor maps. Due to the limited spatial extension of the catchment, as well as to the low density of the rain gauges network in this area (Fig. 1), it was not possible to include any rainfall-related predictors among the tested ones. In fact, the spatial variation of such a predictor would be very low and almost heavily controlled by the adopted interpolation algorithm (Minder et al. 2009). In particular, we chose eleven potential controlling factors to apply the BLR multivariate analysis: nine topographic variables, the outcropping lithology (GEO) and the land use (USE). The nine selected topographic variables are height (HGT), slope (SLO), plan (PLC) and profile (PRC) curvatures, aspect (ASP), topographic wetness index (TWI), stream power index (SPI), flow accumulation (FLA) and landform classification (LCL). The Figs. 4 and 5 show the continuous and the discrete variables.

Maps of the discrete variables in the Giampilieri catchment: a aspect; b landform classification; c outcropping lithology; d land use. See Table 1 for legend abbreviations

The elevation of the topographic surface is commonly considered as a good proxy variable for rainfall (Coe et al. 2004; Lombardo et al. 2014). Moreover, it was selected among the most important variable in previous debris-flow susceptibility studies in the same Giampilieri catchment (e.g. Lombardo et al. 2014, 2015; Cama et al. 2015). The steepness of a cell is the maximum first derivative of the height and was calculated applying the spatial analyst tool in ArcGis 9.3. Slope is expected to be among the most important predictor for gravitational process, as it is directly linked to the shear strength acting onto the potential shallow failure surface, being very important in determining the static equilibrium of each cell. In fact, the topographic surface slope is a proxy for the real steepness of the failure surface, which is buried and frequently not-parallel to it. However, particularly for shallow slide/flow failures, the two surfaces (topographic and rupture) do not diverge so much and the slope angle can be considered as a highly reliable proxy. Slope steepness also controls the overland and subsurface flow velocity and runoff rate. The topographic curvatures control the divergence and convergence, both of surface runoff and shallow gravitational stresses (Ohlmacher 2007). In this study, the profile curvature and the plan curvature were used, which were calculated in the parallel and perpendicular directions of the maximum slope, respectively. The curvatures were calculated using the spatial analyst tool on a cell-by-cell basis for the four cell sizes. The aspect is an important factor which controls solar insolation, evapotranspiration, flora and fauna distribution and abundance. Being the erosional processes related with the chemical physical weathering operated by water, temperature and vegetation, it is very important to consider this factor for the determination of landslide susceptibility. Besides, the aspect frequently assumes a role of proxy variable for the attitude of the rock layers. Wetness and stream power indexes are widely used in landslide susceptibility modelling to express the potential water infiltration volumes and erosion power on slopes, respectively (Wilson and Gallant 2000). TWI is defined as ln(As/tanβ), where As is the local upslope area draining through a certain point per unit contour length and β is the local slope angle. It describes the extension and distribution of the saturation zones assuming steady-state conditions and uniform soil properties. SPI, which is calculated as (As*tanβ), expresses on a topographic basis the expected runoff energy, predicting net erosion in areas of profile convexity and tangential concavity (flow acceleration and convergence zones) and net deposition in areas of profile concavity (zones of decreasing flow velocity). Both the two attributes were calculated using the terrain analysis tool in Arcview 3.2. FLA directly furnishes for each cell the number of cells feeding its runoff, so that it plays a role in all the hydrological process and related geomorphic consequences. Landform classification was automatically calculated using the Topography tool (Tagil and Jenness 2008) in ArcGis 9.3. This tool allows us to calculate the Topographic Position Index (TPI), which is necessary to classify the landforms of a landscape. In particular, TPI compares the elevation of each cell in a DEM to the mean elevation of a specified neighbourhood. Negative TPI values represent locations that are lower than their surroundings (valleys) and vice versa. TPI values near zero are either flat areas (where the slope is near zero) or areas of constant slope (where the slope of the point is significantly greater than zero). The landform classification is derived for each cell of a DEM by combining the TPIs computed at two different scales (Weiss 2001). In the Giampilieri catchment, considering the valley/ridge main geometric features, the best classification was calculated with a small area TPI of 50 m and a large area TPI of 250 m.

The outcropping lithology is here exploited as a proxy for the type of regolithic cover and, as a consequence, for its lithotechnical properties. Weathering processes (type and extent) are in fact heavily controlled by the parent rock and its fracturing condition, particularly in an area where variable grade metamorphic rocks outcrop. At the same time, possible anthropogenic effects on the site stability conditions are linked to the variable USE.

With the conversion of the nominal predictors (ASP, LCL, LIT and USE) into binary variables, a total number of 39 predictors for each of the four pixel size models were obtained (Table 1).

Starting from the source layers of the predictors, four different grid cell multivariate layers were prepared, having cell size of 2, 8, 16 and 32 m, corresponding to an interval ranging from the finest DEM resolution to three times the mean width of the mapped landslides (Fig. 3). Each cell was assigned the values from the source layers of the predictors: the majority class inside the cell, for nominal attributes, and the new value obtained by applying a bi-linear interpolation resampling method, for the continuous topographic attributes. We hereafter refer to these layers and the related models with the codes: 2, 8, 16 and 32 m.

In this study, in defining the diagnostic areas, we considered the debris slides and debris flows as local phenomena, whose initiation has to be linked to the geo-environmental conditions in the small neighbourhood of the initiation area, rather than to the general conditions of the whole affected slope units. For this reason, we adopted the LIP presence/absence within the cell as the spatial criterion to set its stable/unstable status.

Model validation

In order to compare the quality of the four models obtained by using the four cell sizes, quantitative and rigorous validation procedures were applied. In fact, any evaluation of the skill and the reliability of a predictive model should consider both its accuracy and robustness (see Carrara et al. 2003; Guzzetti et al. 2006; Frattini et al. 2010; Rossi et al. 2010; Petschko et al. 2014; Lombardo et al. 2014, 2015; Cama et al. 2015). The accuracy is the degree to which the result of the model conforms with the observed cases and is evaluated by comparing the prediction image to the status (stable/unstable) of each mapping unit. To evaluate the accuracy, at least two datasets are needed: a calibration dataset is used to constrain the maximum likelihood estimator when regressing the model through BLR; a validation dataset constitutes the target we want to match (i.e. the future debris-flows source areas) and is made by positive and negative cases which are unknown to the model during the calibration step. Probabilities below and above the threshold values of 0.5 identify negative and positive predictions, respectively, which for a perfect model would match stable and unstable cells. In particular, we refer to goodness of fit and prediction skill, when considering the accuracy of the model in predicting the known (calibration) and the unknown (validation) cases, respectively. In the first case, the statistical procedure consists in a classification of known cases, while in the second case, a real prediction of unknown cases is performed. Therefore, the goodness of fit gives an overestimation of the real predictive performance of the model; in fact, it gives a measurement of how the model fits the same known cases that have been adopted to optimize its coefficients. For this reason, the availability of an independent validation set of positive and negative cases is mandatory for assessing the prediction skill in a validation test.

Among the different methods which can be followed to prepare calibration and validation datasets, in this study, a random partition procedure (Chung and Fabbri 2003; Conoscenti et al. 2008) was adopted dividing each dataset in balanced stable and unstable cases subsets: 75 % was used to calibrate the models, whilst the 25 % left was used to validate them.

Two different measures of the accuracy were then considered to estimate goodness of fit and prediction skill.

A first method is based on classic contingency tables which compare classified/predicted to known/unknown stable and unstable cases, by considering a 0.5 cut-off value for π(x). A partition in true positive (TP) and negatives (TN), and false positive (FP: Error Type I) and negatives (FN: Type II error) arises in this way and, together with the model error rate (TP + TN)/(FP + FN), single estimates of sensitivity or hit rate (TP/(TP + FN)) and 1—specificity (FP/(TP + FN)), it is possible to compute a large number of other metrics which can attest for the accuracy of the model. According to Frattini et al. (2010), we can define this accuracy assessment as a cut-off dependent accuracy estimation. Conversely, a cut-off independent metric for accuracy is based on the ROC (Receiver operating characteristic) plots, showing the trade-off between sensitivity and 1-specificity. These curves allow the interpreter to have an overall estimate of the goodness of fit and prediction skill of the models by using the Area Under the ROC curve (AUROC) as a metrics of its accuracy. ROC curves analysis gives a more complete estimate of the accuracy of the model, as it condenses an infinite number of contingency tables, enabling an estimation of the auto-consistency and linearity of the classification/prediction function in the domain of the two types of errors. Threshold AUROC values of 0.7, 0.8 and 0.9 identify acceptable, excellent and outstanding predictions (Hosmer and Lemeshow 2000).

Since the results of a susceptibility assessment can be strongly sample dependent, it is also necessary to evaluate the robustness of the model (Guzzetti et al. 2006; Guns and Vanacker 2012; Heckmann et al. 2014, Lombardo et al. 2014, 2015; Cama et al. 2015), referring to the stability of the model outputs (both for accuracy and predictors importance) with respect to changes in the dataset exploited for its calibration. In particular, two main sources of variation are identified: the extraction of the negative cases which balance the positives in a dataset; the splitting into calibration and validation subsets of the dataset. The robustness of the predictive results was evaluated on a total number of 80 repetitions for each of the four adopted grid cell size. Eight replicates were first obtained, each including the same positives and a different set of equal number of negative cells, the latter randomly selected outside the landslide polygons. Besides, each replicate was randomly split for 10 times into calibration and validation subsets, respectively, containing 75 and 25 % of balanced (positive/negative) cases.

As larger cells can include more than one LIP, the number of positive cases changed depending on the cell size. In particular, the unstable cells were 1121, 1092, 982 and 786 cells for the 2, 8, 16 and 32 m model sets, respectively. At the same time, the fraction of the area which was included in a single dataset (landslide and no-landslide samples) increases with the cell size, being 0.40 % of the total catchment area for the model set 2 m, 6.29 % for 8 m, 20.01 % for 16 m and 72.4 % for 32 m.

Thanks to the availability of 80 estimations of the predicted probability of each cell, an analysis of the precision of the method was also performed in the spatial domain. Therefore, to complete the comparison of the four different model sets, assuming the 80 replicates as being repetitions of the logit regressions, four susceptibility and error maps were prepared by plotting for each pixel the mean probability and its dispersion, the latter being expressed by a two standard deviations interval (Guzzetti et al. 2006; Rossi et al. 2010).

The whole validation procedure allowed us to estimate the quality of the predictive models according to the four-level validation scheme proposed by Guzzetti et al. (2006): (1) investigating the role of the thematic information in the production of the susceptibility model; (2) determining the model sensitivity and robustness to variations in the input data; (3) determining the error associated with the susceptibility prediction obtained for each mapping unit; (4) testing the model prediction against independent landslide information.

Results

In order to compare the effects of the different grid cell sizes, summary representations of their predictive results as obtained from their 80 replicates have been arranged by considering both the performance of the models (accuracy, errors and robustness) and their inner structure (selected predictors, frequency of selection ν, ranking R and β coefficients). Figure 6 shows the goodness of fit that was achieved for the four model sets. Among the possible indexes (Guzzetti et al. 2006; Frattini et al. 2010), the calibration model error rate, the AUC of the calibration ROC curves and two pseudo-R 2 (McFadden 1978; Nagelkerke 1991) were adopted. A general view of the results attests for a good performance of all the model sets, with low error rates, high pseudo R 2 and excellent AUROCs.

Figure 6 allows us to easily compare the quality of the different model sets in terms of mean and dispersion of the performance indexes. A coherent loss of performance affects the set 32 m, whilst the 2, 8 and 16 m sets showed the same lower error rates and highest AUROCs and pseudo-R 2. The standard deviations of all the indexes of performance highlighted that the robustness decreases for models sets with coarser resolution, the 32 m models having standard deviations for all the four indexes that are twice that of 2 m.

As regards the prediction skill, Table 2 summarizes the AUCs of the calibration ROC curves, together with the difference between calibration and validation AUROCs, whose amplitude or negative sign is an indicator of potential overfitting, and the validation model error rate. Coherently with what observed for the model fitting, all the predictive models performed with high accuracy and robustness, with the 32 m model showing the lowest but acceptable prediction skill (AUROC = 0.794) and higher (0.32) total error rate. Among the three best performing models (AUROC > 0.83), the 16 m showed the lowest total error rate (0.23).

To compare also in the spatial domain the results through the 80 model runs performed for each of the four different model sets, the final susceptibility (Fig. 7) and model error maps (Fig. 8) were prepared. At a low-scale analysis, the four susceptibility maps showed a general coherence, producing in the catchment a very susceptible lower south-eastern sector, an intermediate susceptibility middle sector and an almost unsusceptible uphill north-western zone. At the same time, in the framework of a general valley-symmetry of the susceptibility distribution, in the high susceptible sector centred around the Giampilieri village, an asymmetric distribution can be observed with the steeper and shorter slopes in the external left flank resulting as more susceptible than the longest less steep ones, in the right flank. The asymmetry becomes more evident if increasing the cell size from 2 to 16 m, whilst, passing from 16 to 32 m, the map is symmetrical again.

Focusing on the highly susceptible sector, 8 and 16 m maps highlight a more discriminated distribution of the susceptibility function, whilst 2 and 32 m tend to give prevalence to the highest and the middle–high susceptibility classes, respectively. Taking also into consideration the error maps, a high precision in the susceptibility estimates arose for all the four model sets, with some limited coastal sectors where the error is slightly higher. A loss in precision was also observed, with changing degree depending on the cell size, along the deeply incised valleys. Two enclaves of high model errors are located for the 2 m map in the south-eastern high susceptible sector, where very poorly represented lithologies outcrop (see Fig. 1): clays with sandy levels of the Spadafora Unit (LIT_spdb) and Quaternary deposits (LIT_PMAd).

More details can be explored from the analysis of the inner structure of the models, which was carried out in terms of the selected predictors (Table 3). A first set of predictors (red labels), including elevation (HEIGHT), grassland land use (USE_322), paragneiss to micashists lithology (LIT_mlea) and recent alluvial deposits (LIT_bb), was selected more than 60 times (ν > 60), with very high and stable ranks, in all the model sets. The coefficients are coherent, negative and stable for (HEIGHT), (LIT_mlea) and (LIT_bb), positive for (USE_322). A second set (navy blue labels) included high rank predictors very frequently selected (ν > 60 in at least two model sets), but with some differences for one of the model sets. Among these, steepness (SLO) was systematically selected with positive coefficients and high and stable ranks, for all the 80 repetitions in the 2, 8 and 16 m model sets and 50 times in the 32 m. The urban fabric land use (USE_111) was selected with negative coefficients in the model sets 2, 8 and 16 m, with quite stable medium ranks, but never selected in the 32 m set. A third set (green labels) included predictors which were quite frequently selected (20 < ν < 60) with medium–low ranks, at least in three model sets: South, South-West and North–East slope aspect (ASP_s/sw/ne), silicate marbles lithology (LIT_pmad) and olive grove land use (USE_223). South and South-West aspects increase the probability for a new debris flow and, coherently, North-East aspect, for 2, 8 and 16 m, decreases it. Both silicate marbles lithology and olive grove land use showed negative coefficients.

It is worth to note that the FDNb class is not reported in Table 3, as it was set as the reference category in the conversion of the nominal LIT variable. Therefore, as all the other LIT-classes were included in the model with negative coefficients, FDNb has to be considered as a factor positively correlated with debris flows.

Among the selected predictors, the plan concavity (PLAN_CONC) was selected 40 times in the 16 m and 45 in the 32 m sets, while the profile concavity (PROF_CONC) was selected 40 times in the 8 m and 76 times in the 16 m sets. The types of topographic curvatures which were included in the models change depending on the size of the mapping unit: no curvature for 2 m, concavity of profile curvature (PROF_CONC) for 8 m, concavity of profile and plan curvatures (PROF_CONC and PLAN_CONC) for 16 m, concavity of plan curvature (PLAN_CONC) for 32 m. Both profile and planar convexities are responsible for higher stability of the mapped cells, whilst concavities always result in an increase of susceptibility.

The topographic wetness index, quite surprisingly, is selected for 24 times only in the 32 m model set, with high ranks and negative coefficients. The model sets 2 and 16 m commonly share the class U-shaped valley (LCL_ushp) of the landform classification among the predictors which were selected at least 20 out of the 80 repetitions.

In order to depict in a summarized way the coherence between the four model sets, taking also into account the stability in the regressed coefficients, box plots were prepared of the odd ratios values obtained for each selected predictor (Fig. 9) through the replicates. The general coherence between the model sets is largely evident. The odds ratios of the same predictor but selected in different model sets resulted as being very similar, except for the poorly selected factors.

Discussion

The results which were achieved in this research generally attested for a high suitability of any of the grid cell-based susceptibility models. These results confirm that the causative factors for debris-flow initiations can be defined on the basis of the local cell conditions, so that, once a landslide inventory has been prepared correctly, a simple and automatic derivation of mapping units and diagnostic areas (LIPs and square cells, respectively) leads to high-quality predictive models (Costanzo et al. 2012b; Lombardo et al. 2014, 2015; Cama et al. 2015). In this research, all the indexes of predictive performance showed high scores and good robustness, with smoothed variations through 80 repetitions. Besides, the difference between the calibration and validation AUROCs was systematically positive and negligible, indicating no signs of overfitting.

From a geomorphological perspective, taking into consideration the commonly shared and more frequently selected predictors, debris flows in the Giampilieri catchment are connected to highly steep slopes, characterized by phyllites (FDNb) outcropping, grassland land use and South and South-West aspect (Fig. 7). In fact, phyllites resulted as deeply weathered on the field, while grassland use actually corresponds to old abandoned crops distributed over the head sectors of the slopes, where no more maintained terraces potentially act as areas of high infiltration; slope aspect could be connected with the attitude of the strata or to the combination of the FDNb outcropping in the shorter and steeper slopes of the left flank in the middle-coastal sector of the catchment. The role of the altitude, which resulted in a negative correlation with susceptibility, is to be interpreted as a combined effect of the main characteristics of the 2009 calibration event and of the characteristics of the weathered mantle. In fact, on the one hand, the 1st October 2009 the storm hit with higher intensity the middle–coastal sector of the catchment and debris flows triggered from the head of its low–middle altitude slopes (Lombardo et al. 2014). On the other hand, negatively correlated lithologies such as the paragneiss and mica shists of the Mela Unit (MLEa) largely outcrop in the inner high altitude sector of the catchment.

On a comparative basis, small but measurable differences in the predictive performance of the models were detected, depending on the adopted grid cell size. In fact, the three finer cell-based models resulted in better performances than 32 m, with the 16 m showing the best coupling between AUROC and error rate. This could be related to the typical dimensions of the debris flows in the Giampilieri catchment and to the optimal selection of the diagnostic areas. In particular, a 16 m cell resulted as more suitable in encompassing the whole source sector of the landslide area, where instability conditions arise, triggering the detachment phase of the debris flows. With a near 10 m mean width of the mapped phenomena (Fig. 3), 2 and 8 m model seem to pick little more local conditions, whilst a 32 m cell reflects a too large slope spatial domain.

The best performance of the 16 m model set is evident if looking at the validation model error rate, which is based on a 0.5 cut-off for positive/negative discrimination, suggesting that the optimization of the cell size resulted in an increase of the prediction skill for the unknown cases.

If the focus of the comparison between the results is set on what is here called the “inner structure” of the models (in this referring to the set of selected predictors, each characterized by its rank, regression coefficient and odd ratio), larger differences between the model sets are highlighted. In particular, together with a group of main predictors which have been selected with similar coefficients and high to medium ranks in all the four model sets, a secondary group of predictors has been identified which was selectively extracted by the different model sets. In general, the closest the cell sizes, the more similar the selected secondary predictors. For example, it is very worth to notice how the type of the selected curvatures changed with the grid size: the 16 m model set is the one which mostly exploited these predictors, jointly including in the model the plan and the profile concavities in 40 and 76 out of 80 repetitions, respectively; at the opposite, any of the topographic curvatures was selected for more than five times by the 2 m model sets, suggesting that in the study area, debris flows are evidently controlled by topographic curvatures defined in a space larger than 2 m.

By overlooking the general pattern of the controlling factors (Figs. 4, 5) and the prediction images that have been obtained from the four model sets, clear differences arise both for the susceptibility and the error spatial distributions (Figs. 7, 8). In fact, while the general pattern of the susceptibility depends on the main predictors (red labels, in Table 3), the small scale effects are the results of the secondary predictors: navy blue and green labelled and topographic curvatures in Table 3. On the other hand, the error maps generally highlighted very small standard deviations, but with different spatial distribution for the four model sets.

The comparison between the findings of this research to those obtained by other authors has to be limited case by case to the specific common parts of the experiment design. In fact, many elements could affect the relationships between grid resolution and performance of the susceptibility models: the adopted approach (stochastic/deterministic), the diagnostic areas (whole landslide polygon/source areas/LIP), landslide typology and geomorphological features of the study area. Besides, the adopted methods for the quantitative estimation of the model performance are unfortunately not standardized, so that different metrics are to be compared. In particular, the results of this research are comparable with the findings of Lee et al. (2004), which having tested 5, 10, 30, 100 and 200 m cell sizes, concluded that 5, 10 and 30 m spatial resolutions showed similar best accuracies in the success rate curves, interpreting this results as the effect of the different scales of the input data (1:5000–1:50,000). Unfortunately, no data on prediction skill and robustness of the models, as well as on the role of the predictors, are discussed by the authors. In the present research, the tested grid cell sizes were limited to 16 times the resolution of the source DEM (2 m), whilst the scale of the source data for outcropping lithology and land use was 1:10,000. Moreover, Lee et al. (2004) did not describe their landslide inventory which makes it more difficult to compare the two researches.

More similarly to our research, Tian et al. (2008) based their modelling on a 5 m resolution source DEM and a set of 1:10,000 thematic maps conclude that the spatial resolution of the models affects their accuracy and that “the tendency to use smaller and smaller grid cells appears unjustified”. These authors investigated also the influence of the resolution on the predictor importance, concluding that the DEM-derived factors are those which produce differences in the predictive performance. In particular, they deduced that the optimal size for the grid cell resolution depends on the dimension of the study area, obtaining their optimal model with 90 m grid cell resolution in an area of 135 km2. However, no information is given on the type of landslides which have been studied and no validation of prediction skill and robustness performed. Similarly to what is here obtained, Palamakumbure et al. (2015) found that the topographic predictors are differently exploited by the model, if using different cell sizes; in particular, their best performing model resulted the one based on 10 m cells, although the authors recognize that no standard size for optimal resolution can generally be defined. It is worth to notice that Palamakure et al. (2015) worked with larger landslides (>20,000 m2) and a different typology (slides).

By taking into consideration the results of tests carried out in deterministic or process based susceptibility assessments, it is interesting to highlight that also in these cases, very similar conclusions are derived: the finer available resolution for the grid cell sizes does not corresponds to the higher performing models. Tarolli and Tarborton (2006) conclude that a 10 m pixel resolution is optimal for applying SINMAP to modelling shallow landslides. Penna et al. (2014) demonstrated that the optimal grid cell resolution for the identification of potential instability in terrain stability mapping is not the finer one (2 m) and investigated the direct modifications of the parameters of the QD-SLaM model induced by the grid size changings. In particular, these authors found better performances for 4 and 10 m cell sizes, concluding, in accordance with our findings that “higher DTM resolution does not necessarily mean better model performance”. Unfortunately, although they worked on the same Giampilieri catchment, we could qualitatively verify the general agreement with our susceptibility pattern only for the very limited square sectors for which a susceptibility map is there presented: for the head of the slopes in the left side of the valley it is possible to verify, the prevalence of the high classes and of the middle–high classes if using the 2 m or the 20 m cell size, respectively.

On the basis of our results, it is worth to notice that the higher resolution model (2 m) did not produce a significantly higher predictive performance. This suggests the use of coarser DEMs is not limiting the quality of the susceptibility models, unless a threshold value for the grid cell size is reached. In this case, even the 32 m model set showed good predictive performances. However, attention must be paid to the circumstance that in this research, the topographic variables were derived for all the tested cell sizes from the same high-resolution (2 m) source DEM, by applying a bi-linear interpolation resampling method. For example, a 16 m DEM could have been derived also by digitizing the contour lines of a 1:10,000 topographic map and we cannot exclude that the combined effect of a coarser cell size and a less precise DEM would have driven to different results.

Conclusions

Debris flows are among the most hazardous phenomena in nature, which typically take the form of widespread or regional multiple events triggered by intense driving inputs such as a storm. In order to face this natural threat, landslide susceptibility assessment by means of stochastic models can provide useful tools for risk mitigation, civil defence and land use planning. The shallowness of the failure zone of the debris flows, together with the high controlling role which topography plays in their propagation phase, suggests the use of high-resolution DEM to cope with their modelling. However, being aware that no general rules can be achieved as each study area could give a different response, efforts are required to explore the relationships between the accuracy and the robustness of these susceptibility models and the adopted grid cell sizes. In line with other studies our research demonstrated, for a multiple debris-flow study case, that using the smallest grid cell size as mapping units does not always ensure the best performance in terms of prediction skill. This suggests the possibility to obtain high performing models even in the case of lack of high-resolution data, provided that the source data are adequate to the adopted cell size.

In this research, in particular, the inner structure of the models and its influence on the accuracy and precision of the susceptibility map have been explored for differently sized grid cells stochastic models. Although only small differences in the accuracy and precision of the models were detected, the role of some of the predictors, which were included in the susceptibility models, changed depending on the grid cell size. This effect was clearly detectable for the topographic variables, as these are the ones which more rapidly vary in space. On a geomorphological perspective, the geo-environmental attributes which control the slope failures demonstrated to change their role with the spatial scale to which the landslide phenomena were stochastically modelled. In this sense, from our test, it also comes as recommendable the opportunity to consider in the same models predictors defined at different cell sizes, in the perspective of finding the richer best performing model rather than the single optimal cell size.

In conclusion, the relationships between the grid cell size and the quality of a landslide susceptibility model strictly depend on the type of landslides, the selected diagnostic areas, the geomorphological and topographical features of the study area and the quality of the source data. For this reason, the optimization of the cell size must be evaluated case by case by performing tests such as the ones proposed in this research, without expecting any decrease in the predictive performance of coarser grid-based models.

References

Agnesi V, Rasà R, Puglisi C et al (2009) La franosità diffusa dell’1 Ottobre 2009 nel territorio ionico-peloritano della Provincia di Messina: stato delle indagini e prime considerazioni sulle dinamiche geomorfiche attivate. Geologi di Sicilia 4:23–30

Akgün A (2012) A comparison of landslide susceptibility maps produced by logistic regression, multi-criteria decision, and likelihood ratio methods: a case study at İzmir, Turkey. Landslides 9:93–106. doi:10.1007/s10346-011-0283-7

Ardizzone F, Basile G, Cardinali M et al (2012) Landslide inventory map for the Briga and the Giampilieri catchments, NE Sicily, Italy. J Maps 8:176–180. doi:10.1080/17445647.2012.694271

Aronica GT, Brigandí G, Morey N (2012) Flash floods and debris flow in the city area of Messina, north-east part of Sicily, Italy in October 2009: the case of the Giampilieri catchment. Nat Hazards Earth Syst Sci 12:1295–1309. doi:10.5194/nhess-12-1295-2012

Atkinson PM, Massari R (1998) Generalised linear modelling of susceptibility to landsliding in the central Apennines, Italy. Comput Geosci 24(4):373–385. doi:10.1016/S0098-3004(97)00117-9

Bai SB, Wang J, Lü GN et al (2010) GIS-based logistic regression for landslide susceptibility mapping of the Zhongxian segment in the three Gorges area, China. Geomorphology 115:23–31. doi:10.1016/j.geomorph.2009.09.025

Cama M, Lombardo L, Conoscenti C et al (2015) Predicting storm-triggered debris flow events: application to the 2009 Ionian Peloritan disaster (Sicily, Italy). Nat Hazards Earth Syst Sci 15:1785–1806. doi:10.5194/nhess-15-1785-2015

Carrara A, Cardinali M, Detti R et al (1991) GIS techniques and statistical models in evaluating landslide hazard. Earth Surf Process Landf 16:427–445. doi:10.1002/esp.3290160505

Carrara A, Cardinali M, Guzzetti F, Reichenbach P (1995) GIS technology in mapping landslide hazard. In: Carrara A, Guzzetti F (eds) Geographical information systems in assessing natural hazards. Kluwer Academic Publisher, Dordrecht, pp 135–175

Carrara A, Guzzetti F, Cardinali M, Reichenbach P (1999) Use of GIS technology in the prediction and monitoring of landslide hazard. Nat Hazards 20:117–135. doi:10.1023/A:1008097111310

Carrara A, Crosta G, Frattini P (2003) Geomorphological and historical data in assessing landslide hazard. Earth Surf Process Landforms 28:1125–1142. doi:10.1002/esp.545

Chung C-JF, Fabbri AG (2003) Validation of spatial prediction models for landslide hazard mapping. Nat Hazards 30:451–472. doi:10.1023/B:NHAZ.0000007172.62651.2b

Claessens L, Heuvelink GBM, Schoorl JM, Veldkamp A (2005) DEM resolution effects on shallow landslide hazard and soil redistribution modelling. Earth Surf Process Landf 30:461–477. doi:10.1002/esp.1155

Coe JA, Godt JW, Baum RL et al (2004) Landslide susceptibility from topography in Guatemala. Landslides Eval Stab 1:69–78

Conoscenti C, Di Maggio C, Rotigliano E (2008) GIS analysis to assess landslide susceptibility in a fluvial basin of NW Sicily (Italy). Geomorphology 94(3):325–339. doi:10.1016/j.geomorph.2006.10.039

Conoscenti C, Angileri S, Cappadonia C et al (2014) Gully erosion susceptibility assessment by means of GIS-based logistic regression: a case of Sicily (Italy). Geomorphology 204:399–411. doi:10.1016/j.geomorph.2013.08.021

Conoscenti C, Ciaccio M, Caraballo-Arias NA et al (2015) Assessment of susceptibility to earth-flow landslide using logistic regression and multivariate adaptive regression splines: a case of the Belice River basin (western Sicily, Italy). Geomorphology 242:49–64. doi:10.1016/j.geomorph.2014.09.020

Costanzo D, Rotigliano E, Irigaray C et al (2012a) Factors selection in landslide susceptibility modelling on large scale following the gis matrix method: application to the river Beiro basin (Spain). Nat Hazards Earth Syst Sci 12:327–340. doi:10.5194/nhess-12-327-2012

Costanzo D, Cappadonia C, Conoscenti C, Rotigliano E (2012b) Exporting a Google Earth™ aided earth-flow susceptibility model: a test in central Sicily. Nat Hazards 61:103–114. doi:10.1007/s11069-011-9870-0

Costanzo D, Chacón J, Conoscenti C et al (2014) Forward logistic regression for earth-flow landslide susceptibility assessment in the Platani river basin (southern Sicily, Italy). Landslides 11:639–653. doi:10.1007/s10346-013-0415-3

Crozier MJ (2005) Multiple-occurrence regional landslide events in New Zealand: hazard management issues. Landslides 2:247–256. doi:10.1007/s10346-005-0019-7

Dai FC, Lee CF (2002) Landslide characteristics and slope instability modeling using GIS, Lantau Island, Hong Kong. Geomorphology 42:213–228. doi:10.1016/S0169-555X(01)00087-3

Dai FC, Lee CF (2003) A spatiotemporal probabilistic modelling of storm-induced shallow landsliding using aerial photographs and logistic regression. Earth Surf Process Landf 28:527–545. doi:10.1002/esp.456

De Guidi G, Scudero S (2013) Landslide susceptibility assessment in the Peloritani Mts. (Sicily, Italy) and clues for tectonic control of relief processes. Nat Hazards Earth Syst Sci 13:949–963. doi:10.5194/nhess-13-949-2013

Del Ventisette C, Garfagnoli F, Ciampalini A et al (2012) An integrated approach to the study of catastrophic debris-flows: geological hazard and human influence. Nat Hazards Earth Syst Sci 12:2907–2922. doi:10.5194/nhess-12-2907-2012

Dietrich WE, Reiss R, Hsu ML, Montgomery DR (1995) A process-based model for colluvial soil depth and shallow landsliding using digital elevation data. Hydrol Process 9:383–400. doi:10.1002/hyp.3360090311

Felicísimo ÁM, Cuartero A, Remondo J, Quirós E (2012) Mapping landslide susceptibility with logistic regression, multiple adaptive regression splines, classification and regression trees, and maximum entropy methods: a comparative study. Landslides 10:175–189. doi:10.1007/s10346-012-0320-1

Fiannacca P, Williams IS, Cirrincione R, Pezzino A (2008) Crustal contributions to late hercynian peraluminous magmatism in the Southern Calabria-Peloritani Orogen, Southern Italy: petrogenetic inferences and the gondwana connection. J Petrol 49:1497–1514. doi:10.1093/petrology/egn035

Frattini P, Crosta G, Carrara A (2010) Techniques for evaluating the performance of landslide susceptibility models. Eng Geol 111:62–72. doi:10.1016/j.enggeo.2009.12.004

Ghisetti F, Vezzani L (2002) Normal faulting, transcrustal permeability and seismogenesis in the Apennines (Italy). Tectonophysics 348:155–168. doi:10.1016/S0040-1951(01)00254-2

Gómez Gutiérrez A, Schnabel S, Lavado Contador F (2009) Using and comparing two nonparametric methods (CART and MARS) to model the potential distribution of gullies. Ecol Model 220:3630–3637. doi:10.1016/j.ecolmodel.2009.06.020

Gómez Gutiérrez A, Conoscenti C, Angileri SE et al (2015) Using Topographical attributes to model the spatial distribution of gullying from two Mediterranean basins: advantages and limitations. Nat Hazards. doi:10.1007/s11069-015-1703-0

Guns M, Vanacker V (2012) Logistic regression applied to natural hazards: rare event logistic regression with replications. Nat Hazards Earth Syst Sci 12:1937–1947. doi:10.5194/nhess-12-1937-2012

Guzzetti F, Carrara A, Cardinali M, Reichenbach P (1999) Landslide hazard evaluation: a review of current techniques and their application in a multi-scale study, Central Italy. Geomorphology 31:181–216. doi:10.1016/S0169-555X(99)00078-1

Guzzetti F, Reichenbach P, Cardinali M et al (2005) Probabilistic landslide hazard assessment at the basin scale. Geomorphology 72:272–299. doi:10.1016/j.geomorph.2005.06.00

Guzzetti F, Reichenbach P, Ardizzone F et al (2006) Estimating the quality of landslide susceptibility models. Geomorphology 81:166–184. doi:10.1016/j.geomorph.2006.04.007

Guzzetti F, Mondini AC, Cardinali M et al (2012) Landslide inventory maps: new tools for an old problem. Earth-Sci Rev 112:42–66. doi:10.1016/j.earscirev.2012.02.001

Heckmann T, Gegg K, GeggBecht M A, Becht M (2014) Sample size matters: investigating the effect of sample size on a logistic regression susceptibility model for debris flows. Nat Hazards Earth Syst Sci 14:259–278. doi:10.5194/nhess-14-259-2014

Hosmer DW, Lemeshow S (2000) Applied logistic regression. Ser Probab Stat 2:375. doi:10.1198/tech.2002.s650

Hungr O, Evans SG, Bovis MJ, Hutchinson JN (2001) A review of the classification of landslides of the flow type. Environ Eng Geosci 7:221–238. doi:10.2113/gseegeosci.7.3.221

Hungr O, McDougall S, Bovis M (2005) Entrainment of material by debris flows. Debris-flow Hazards and Related Phenomena. Springer, Berlin, pp 135–158

Hungr O, Leroueil S, Picarelli L (2014) The Varnes classification of landslide types, an update. Landslides 11:167–194. doi:10.1007/s10346-013-0436-y

Hutchinson JN (1988) General report: morphological and geotechnical parameters of landslides in relation to geology and hydrogeology. Landslides 26:3–35

Igwe O, Mode W, Nnebedum O et al (2014) The analysis of rainfall-induced slope failures at Iva Valley area of Enugu State, Nigeria. Environ Earth Sci 71:2465–2480. doi:10.1007/s12665-014-4009-8

Kienzle S (2004) The effect of DEM raster resolution on first order, second order and compound terrain derivatives. Trans GIS 8:83–111. doi:10.1111/j.1467-9671.2004.00169.x

Köppen W (1923) Die klimate der Erde. Walter de Gruyter, Berlin

Lee S, Choi J, Woo I (2004) The effect of spatial resolution on the accuracy of landslide susceptibility mapping: a case study in Boun, Korea. Geosci J 8:51–60. doi:10.1007/BF02910278

Legorreta Paulin G, Bursik M, Lugo-Hubp J, Zamorano Orozco JJ (2010) Effect of pixel size on cartographic representation of shallow and deep-seated landslide, and its collateral effects on the forecasting of landslides by SINMAP and Multiple Logistic Regression landslide models. Phys Chem Earth 35:137–148. doi:10.1016/j.pce.2010.04.008

Lentini F, Catalano S, Carbone S (2000) Note illustrative della Carta Geologica della Provincia di Messina, scala 1: 50.000. Provincia Regionale di Messina, Assessorato Servizio Territorio—Servizio Geologico d’Italia

Lentini F, Carbone S, Messina A et al (2007) Carta Geologica d’Italia scala 1:50.000 Foglio 601 “Messina-Reggio di Calabria”, con note illustrative. APAT (Agenzia per la protezione dell’ambiente e per i servizi tecnici), Dipartimento Difesa del Suolo—Servizio geologico d’Italia

Lombardo L, Cama M, Maerker M, Rotigliano E (2014) A test of transferability for landslides susceptibility models under extreme climatic events: application to the Messina 2009 disaster. Nat Hazards 74:1951–1989. doi:10.1007/s11069-014-1285-2

Lombardo L, Cama M, Conoscenti C et al (2015) Binary logistic regression versus stochastic gradient boosted decision trees in assessing landslide susceptibility for multiple-occurring landslide events: application to the 2009 storm event in Messina (Sicily, southern Italy). Nat Hazards. doi:10.1007/s11069-015-1915-3

Mathew J, Jha VK, Rawat GS (2008) Landslide susceptibility zonation mapping and its validation in part of Garhwal Lesser Himalaya, India, using binary logistic regression analysis and receiver operating characteristic curve method. Landslides 6:17–26. doi:10.1007/s10346-008-0138-z

McFadden D (1978) Quantitative methods for analyzing travel behaviour of individuals: some recent developments. In: Hensher DA, Stopher PR (eds) Behav Travel Model. Croom Helm, London, pp 279–318

Melani S, Pasi F, Gozzini B, Ortolani A (2013) A four year (2007-2010) analysis of long-lasting deep convective systems in the Mediterranean basin. Atmos Res 123:151–166. doi:10.1016/j.atmosres.2012.09.006

Messina A, Somma R, Careri G et al (2004) Peloritani continental crust composition (southern Italy): geological and petrochemical evidences. Boll Soc Geol Ital 123:405–444

Minder JR, Roe GH, Montgomery DR (2009) Spatial patterns of rainfall and shallow landslide susceptibility. Water Resour Res 45:1–11. doi:10.1029/2008WR007027

Mondini AC, Guzzetti F, Reichenbach P et al. (2011) Semi-automatic recognition and mapping of rainfall induced shallow landslides using optical satellite images. Remote Sens Environ 115:1743–1757. doi:10.1016/j.rse.2011.03.006

Montgomery DR, Dietrich WE (1994) A physically based model for the topographic control on shallow landsliding. Water Resour Res 30:1153–1171. doi:10.1029/93WR02979

Nagelkerke NJD (1991) A note on a general definition of the coefficient of determination. Biometrika 78:691–692. doi:10.1093/biomet/78.3.691

Nefeslioglu HA, Gokceoglu C, Sonmez H (2008) An assessment on the use of logistic regression and artificial neural networks with different sampling strategies for the preparation of landslide susceptibility maps. Eng Geol 97:171–191. doi:10.1016/j.enggeo.2008.01.004

Ohlmacher GC (2007) Plan curvature and landslide probability in regions dominated by earth flows and earth slides. Eng Geol 91:117–134. doi:10.1016/j.enggeo.2007.01.005

Ohlmacher GC, Davis JC (2003) Using multiple logistic regression and GIS technology to predict landslide hazard in northeast Kansas, USA. Eng Geol 69:331–343. doi:10.1016/S0013-7952(03)00069-3

Palamakumbure D, Flentje P, Stirling D (2015) Consideration of optimal pixel resolution in deriving landslide susceptibility zoning within the Sydney Basin, New South Wales, Australia. Comput Geosci 82:13–22. doi:10.1016/j.cageo.2015.05.002

Penna D, Borga M, Aronica GT et al (2014) The influence of grid resolution on the prediction of natural and road-related shallow landslides. Hydrol Earth Syst Sci 18:2127–2139. doi:10.5194/hess-18-2127-2014

Petschko H, Brenning A, Bell R et al (2014) Assessing the quality of landslide susceptibility maps—case study lower Austria. Nat Hazards Earth Syst Sci 14:95–118. doi:10.5194/nhess-14-95-2014

Protezione Civile Nazionale (2009) Landslide and mud flood emergency Messina Province, Italy. Application for assistance from the European Union Solidarity Fund (EUSF), Council Regulation (EC), 2012/2002, Article 4

Rakotomalala R (2005) Tanagra: un logiciel gratuit pour l’enseignement et la recherche. Actes De EGC 697–702

Rossi M, Guzzetti F, Reichenbach P et al (2010) Optimal landslide susceptibility zonation based on multiple forecasts. Geomorphology 114:129–142. doi:10.1016/j.geomorph.2009.06.020

Rotigliano E, Agnesi V, Cappadonia C, Conoscenti C (2011) The role of the diagnostic areas in the assessment of landslide susceptibility models: a test in the sicilian chain. Nat Hazards 58:981–999. doi:10.1007/s11069-010-9708-1

Rotigliano E, Cappadonia C, Conoscenti C et al (2012) Slope units-based flow susceptibility model: using validation tests to select controlling factors. Nat Hazards 61:143–153. doi:10.1007/s11069-011-9846-0

Süzen ML, Doyuran V (2004) A comparison of the GIS based landslide susceptibility assessment methods: multivariate versus bivariate. Environ Geol 45:665–679. doi:10.1007/s00254-003-0917-8

Tagil S, Jenness J (2008) GIS-based automated landform classification and topographic, landcover and geologic attributes of landforms around the Yazoren Polje, Turkey. J Appl Sci 8:910–921. doi:10.3923/jas.2008.910.921

Tarolli P, Tarboton DG (2006) A new method for determination of most likely landslide initiation points and the evaluation of digital terrain model scale in terrain stability mapping. Hydrol Earth Syst Sci 10:663–677. doi:10.5194/hess-10-663-2006

Tian Y, Xiao C, Liu Y, Wu L (2008) Effects of raster resolution on landslide susceptibility mapping: a case study of Shenzhen. Sci China Ser E: Technol Sci 51:188–198. doi:10.1007/s11431-008-6009-y

Van Den Eeckhaut M, Reichenbach P, Guzzetti F et al (2009) Combined landslide inventory and susceptibility assessment based on different mapping units: an example from the Flemish Ardennes, Belgium. Nat Hazards Earth Syst Sci 9:507–521. doi:10.5194/nhess-9-507-2009

Varnes DJ (1978) Slope movement: types and process. In: Schuster RL, Krizek RJ (eds) Landslides: analyses and control, transportation research board, vol 176. Natural Academy of Science, Washington, pp 11–33

Vilimek V, Klimes J, Emmer A et al (2015) Geomorphologic impacts of the glacial lake outburst flood from Lake No. 513 (Peru). Environ Earth Sci 73:5233–5244. doi:10.1007/s12665-014-3768-6

Vorpahl P, Elsenbeer H, Märker M, Schröder B (2012) How can statistical models help to determine driving factors of landslides? Ecol Modell 239:27–39. doi:10.1016/j.ecolmodel.2011.12.007

Weiss A (2001) Topographic position and landforms analysis. Poster Present ESRI User Conf San Diego, CA 64:227–245

Wilson JP, Gallant JC (2000) Secondary topographic attributes. Terrain Anal Princ Appl 87–132

Wilson JP, Reppeto PL, Snyder D (2000) Effect of data source, grid resolution, and flow-routing method on computed topographic attributes. Terrain Anal Princ Appl 133–161

Yalcin A, Reis S, Aydinoglu AC, Yomralioglu T (2011) A GIS-based comparative study of frequency ratio, analytical hierarchy process, bivariate statistics and logistics regression methods for landslide susceptibility mapping in Trabzon, NE Turkey. Catena 85:274–287. doi:10.1016/j.catena.2011.01.014

Acknowledgments

The findings and discussion of this research are the results of the activity which was carried out in the framework of the Ph.D. research projects of Mariaelena Cama and Luigi Lombardo at the “Dipartimento di Scienze della Terra e del Mare” of the University of Palermo (XXV cycle), supervisor Prof. E. Rotigliano. Luigi Lombardo Ph.D. thesis was internationally co-tutored with the Department of Geography of the University of Tübingen (Deutschland). This research was supported by the project SUFRA_SICILIA, funded by the ARTA-Regione Sicilia, and the FFR 2012/2013 Project, funded by the University of Palermo. Authors have commonly shared all the part of the research as well as of the manuscript preparation. Clare Hampton has linguistically edited the final version of this text. Authors wish to thank two anonymous reviewers which allowed to improve the quality of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Cama, M., Conoscenti, C., Lombardo, L. et al. Exploring relationships between grid cell size and accuracy for debris-flow susceptibility models: a test in the Giampilieri catchment (Sicily, Italy). Environ Earth Sci 75, 238 (2016). https://doi.org/10.1007/s12665-015-5047-6

Received:

Accepted:

Published: