Abstract

An increasing problem throughout the world, plagiarism and related dishonest behaviors have been affecting Indian science for quite some time. To curb this problem, the Indian government has initiated a number of measures, such as providing plagiarism detection software to all universities free of charge. Still, many unfair or incorrect papers are published. For some time, publishers have used an efficient tool to deal with such situations: retractions. A published paper that is later found not to deserve publication, for any of a number of reasons, can be withdrawn by the publisher (and is often removed from the journal's online contents). This study aims (1) to identify retracted publications authored or co-authored by researchers affiliated with Indian institutions and (2) to analyze the reasons for the retractions. To meet these aims, we searched the SCOPUS database for retraction notices of articles authored or co-authored by Indian authors. The first retraction notice was issued in 1996, an exceptionally early retraction, as the next one was not published until 2005. Thus, we analyzed 239 retractions (195 from journals and 44 from conference proceedings) published between 2005 and 3 August 2018 (most of them after 2010) in terms of the following qualitative retraction-wise parameters: the main reason for retraction, authorship, collaboration level, collaborating countries, source of retraction (journal or conference proceedings), and funding sources of the research. We also identified journals with high retraction frequencies. Two phrases, “Retraction notice to” and “Retracted Article,” were mainly used to retract publications. The most frequent reason for retraction was plagiarism.

Introduction

The retraction of a scientific publication indicates that the original publication’s data and conclusions should not be used in future research (https://en.wikipedia.org/wiki/Retraction). Retractions thus alert readers to publications that are unreliable or that violate publication ethics (e.g., through plagiarism or redundant publication), so that they neither use their results nor cite them (COPE: Committee on Publication Ethics, 2009). A retraction usually consists of two published items: (1) the original article and (2) the announcement of the retraction, often accompanied by the reasons for it (Hesselmann et al. 2017). Traditional (print) journals have no means of removing a retracted article, whereas online journals can remove it from the repositories in which it is stored. Often, however, it is impossible to remove all copies of the article, for instance, those posted on personal web pages. Thus, there is always a risk that some readers will fail to recognize a retracted article.

According to the Office of Research Integrity of the U.S. Department of Health and Human Services, “research misconduct means fabrication, falsification or plagiarism in proposing, performing or reviewing research, or in reporting research results” (https://ori.hhs.gov/definition-research-misconduct). More generally, publication ethics can be violated because of plagiarism, duplicate publication, data manipulation, gift authorship, Machiavellianism, self-promotion, and the like (Sharma 2015).

Retractions, a rapidly growing phenomenon, have been receiving more and more attention in the scientific and scholarly publishing worlds (Marcus and Oransky 2014). Researchers analyze associations between retractions and various bibliometric factors, such as institution, country, and other characteristics of authors and journals. He (2013) reported a roughly ten-fold increase in the number of retracted publications between 2001 and 2010, a worrying figure given that the corresponding global number of publications grew by only 47% (a rate of increase of 0.47).

Eighty-five percent of the retracted articles reported in Retraction Watch (https://retractionwatch.com/) between 2013 and 2015 were affiliated with 15 countries (Ribeiro and Vasconcelos 2018), which suggests that the problem is unevenly distributed among countries. Interestingly, it is the U.S. that leads the list. [The U.S. was previously reported to be the top country in terms of the number of retractions in cancer research (Bozzo et al. 2017).] The list also includes such scientifically strong countries as Germany, Italy, the Netherlands, the U.K., Australia, Canada, France, and Spain.

Analyzing such data, however, requires some standardization, for example, by the total number of papers affiliated with each country. Ribeiro and Vasconcelos (2018) reported not only the numbers of retractions but also country-wise numbers of publications, according to the Scimago Country Ranking 2015. Normalizing the numbers of retractions (2013–2015) by the numbers of publications (in the same period) gives a different view of the phenomenon. The U.S. no longer led the list, with only 0.059% of its articles retracted. For India, this rate was 0.071%, but for Taiwan it was 0.218%, meaning that over two articles affiliated with Taiwan were retracted per 1000 articles! For Iran, the rate was around 1.5 retracted articles per 1000 published articles, and for Germany only 0.3. For the Netherlands, however, it was almost one per 1000, a surprising result.
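
The normalization described above amounts to dividing each country's retraction count by its publication count. A minimal Python sketch follows; the two countries and their counts are hypothetical placeholders, not values from Ribeiro and Vasconcelos (2018), chosen only to show how normalization can reverse a ranking based on raw counts.

```python
from typing import Dict, List, Tuple


def retraction_rate(retractions: int, publications: int, per: int = 1000) -> float:
    """Retracted articles per `per` published articles (default: per 1000)."""
    if publications <= 0:
        raise ValueError("publications must be positive")
    return per * retractions / publications


def rank_by_rate(counts: Dict[str, Tuple[int, int]]) -> List[Tuple[str, float]]:
    """Rank countries by normalized retraction rate, highest first.

    `counts` maps a country name to (retractions, publications).
    """
    rates = {country: retraction_rate(r, p) for country, (r, p) in counts.items()}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Hypothetical counts for two made-up countries (not real data).
example = {
    "Country A": (300, 600_000),  # more retractions, much larger output
    "Country B": (80, 40_000),    # fewer retractions, smaller output
}

for country, rate in rank_by_rate(example):
    print(f"{country}: {rate:.2f} retractions per 1000 articles")
```

Here Country A has almost four times as many retractions as Country B, yet its rate (0.50 per 1000) is four times lower than Country B's (2.00 per 1000), which is the kind of reversal the raw counts hide.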

India ranks eleventh in terms of the misconduct ratio, defined as the number of retracted articles divided by the number of published documents from 2011 to 15 March 2017 (Ataie-Ashtiani 2018). The number of retracted papers affiliated with Indian institutions in the Web of Science database increased fourfold between 2001–2005 and 2006–2010 (Noorden 2011).

Indian researchers are insufficiently aware of what a scientific retraction is. For example, the Department of Mathematics of the University of Delhi included a retraction notice (Arora and Kalucha 2008) in its list of recent publications by faculty members, thus treating the retraction as one of their publications. Clearly, the community needs more information about retractions in general, and also about their scale, their possible reasons, and publication ethics. Likely most crucial, especially for young researchers, is knowing why articles can be retracted and how to avoid scientific misconduct, the most common reason for retractions.

The scientific community has shown growing interest in this topic. Related studies have dealt with retractions of papers by Chinese researchers (Lei and Zhang 2018); papers indexed in MEDLINE and authored or co-authored by Indian researchers (Misra et al. 2017); papers from Korean medical journals (Huh et al. 2016); papers affiliated with Malaysian institutions (Aspura et al. 2018); and papers in dentistry (Nogueira et al. 2017), cancer research (Bozzo et al. 2017), and the biomedical literature (Wang et al. 2018).

This study analyzes India’s retractions. To this end, we examine various aspects of retracted publications affiliated with Indian institutions: the words and phrases used to retract a publication, collaboration among the authors of retracted publications, the sources of retractions, the number of retracted publications, their funding sources, and the reasons for retraction.

Data and methods

We searched the SCOPUS database for retractions issued in journals and conference proceedings indexed in this database. Similar studies on retractions have used various search strategies to retrieve bibliographic records of retracted publications. For instance, He (2013) searched for the keywords “retraction AND vol” and “retracted AND article” in the titles of articles indexed in the Web of Science. Li et al. (2018) searched for the keyword “retract*” in the titles of articles indexed in PubMed, limiting the article type to “retracted publications,” while Aspura et al. (2018) searched for the same keyword in Web of Science and SCOPUS. Bar-Ilan and Halevi (2018) searched ScienceDirect for the keyword “retracted” in article titles.

In this study, we searched for the keyword “retract*” in article titles, limiting the affiliation country to “India.” On 3 August 2018, we downloaded the data according to the strategy shown in Fig. 1. The search returned 457 records, of which 217 were not retraction notices and were discarded. The further analysis used the full texts of the remaining 240 retraction notices. One of them was issued in 1996 and the rest much later, from 2005 onward, so we analyzed this single 1996 retraction separately.
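
The retrieval and screening steps above can be sketched in Python as follows. The query string is an assumption about how the search in Fig. 1 might be expressed with Scopus advanced-search field codes (TITLE, AFFILCOUNTRY); the `Record` type and sample titles are hypothetical. Deciding whether a record is a retraction notice was a manual step in the study, represented here by a boolean flag.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed form of the Scopus advanced search; Fig. 1 shows the actual strategy.
QUERY = "TITLE(retract*) AND AFFILCOUNTRY(india)"


@dataclass
class Record:
    title: str
    year: int
    is_retraction_notice: bool  # decided by reading the full text, not automatically


def screen(records: List[Record], cutoff_year: int = 2005) -> Tuple[List[Record], List[Record]]:
    """Discard records that are not retraction notices, then split the notices
    into early outliers (before cutoff_year) and the main analysis set."""
    notices = [r for r in records if r.is_retraction_notice]
    early = [r for r in notices if r.year < cutoff_year]
    main = [r for r in notices if r.year >= cutoff_year]
    return early, main


# Hypothetical records: "retract*" also matches papers that merely discuss retractions.
sample = [
    Record("Retraction notice to: A hypothetical study", 2012, True),
    Record("Why do journals retract papers? A hypothetical review", 2016, False),
    Record("RETRACTED ARTICLE: Another hypothetical paper", 2015, True),
    Record("Retraction of a hypothetical early paper", 1996, True),
]

early, main = screen(sample)
print(len(sample), "records ->", len(early) + len(main), "notices;",
      len(early), "early,", len(main), "in the main set")
```

The manual flag matters because a title search for “retract*” inevitably returns papers about retractions as well as the notices themselves.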

To visualize the data, we used the lattice package (Sarkar 2008) of R (R Core Team 2018). When creating graphs, we omitted the 2018 data, since they did not cover the whole year and would thus be visually misleading. The analysis itself, however, used all 239 retractions.

Results and discussion

Yearly trend

The earliest retraction notice for an article indexed in SCOPUS and authored or co-authored by an Indian author was published in 1996, in Il Nuovo Cimento B. The publisher retracted the paper because it repeated results published seven years earlier by other, non-Indian authors. Nine years passed before the next retraction. Hence, we analyzed 239 retraction notices issued between 2005 and 3 August 2018.

This gives a mean of 17 retractions per year, which is quite a number. Misra et al. (2017) reported only 46 retraction notices (around six per year) for Indian authors in MEDLINE between 1 January 2010 and 4 July 2017, while Liu and Chen (2018) reported 169 retractions (about 22 per year) for Chinese authors between 1 January 2010 and 31 July 2017. Since 2005, the fewest publications (only one) were retracted in 2005 and the most (45) in 2010 (Fig. 2). On the one hand, there is an increasing trend, with 80 retractions issued in 2005–2010 and 159 in 2011–2018. On the other hand, it is difficult to find any clear trend since 2008: the yearly numbers of retractions have fluctuated since then, with no consistent direction behind the fluctuations.
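
Because the two periods compared above differ in length (six full years versus seven years plus part of 2018), annual means give a fairer comparison than raw totals. The Python sketch below uses only the totals given in the text; the helper `years_covered` is a hypothetical name, and counting 2018 only up to 3 August is our assumption, whereas the reported mean of 17 divides by 14 calendar years.

```python
from datetime import date


def years_covered(first_year: int, last_day: date) -> float:
    """Calendar years from 1 January of first_year to last_day (inclusive),
    counting the final year only up to last_day."""
    start_of_last = date(last_day.year, 1, 1)
    days_in_last = (date(last_day.year, 12, 31) - start_of_last).days + 1
    days_covered = (last_day - start_of_last).days + 1
    return (last_day.year - first_year) + days_covered / days_in_last


# Totals reported in the text.
TOTAL, EARLY, LATE = 239, 80, 159

mean_calendar = TOTAL / 14  # 2005-2018 counted as 14 calendar years
early_rate = EARLY / years_covered(2005, date(2010, 12, 31))  # exactly 6 years
late_rate = LATE / years_covered(2011, date(2018, 8, 3))      # about 7.59 years

print(f"mean over 14 calendar years: {mean_calendar:.1f}")  # 17.1
print(f"2005-2010: {early_rate:.1f} per year")              # 13.3
print(f"2011-2018 (to 3 Aug): {late_rate:.1f} per year")    # 21.0
```

The annual rate rises from about 13 to about 21 retractions per year, which supports the increasing trend even though the year-to-year numbers fluctuate.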

Words and phrases

Table 1 lists the words and phrases used to retract a publication, that is, those that accompanied the titles of the original publications in the titles of the retraction notices. Various notations were used. Almost three-fourths of the retractions used either “Retraction notice to” or “Retracted Article.” Only nine retractions used the single word “Retracted,” and four used three other phrases (“this article has been retracted,” “notice of retraction,” and “letter of retraction”).
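
A tally like the one in Table 1 can be sketched in Python by matching the phrases named above against notice titles. The sample titles are hypothetical, and simple case-insensitive substring matching is our assumption, not necessarily how the table was compiled; the one design point that matters is trying longer phrases first.

```python
from collections import Counter
from typing import Iterable

# Phrases named in the text (lower-cased). Longer phrases are tried first so
# that, e.g., "Retracted Article" is not counted under the bare word "Retracted".
PHRASES = sorted(
    [
        "retraction notice to",
        "retracted article",
        "retracted",
        "this article has been retracted",
        "notice of retraction",
        "letter of retraction",
    ],
    key=len,
    reverse=True,
)


def classify_title(title: str) -> str:
    """Return the first (longest) retraction phrase found in a notice title."""
    lowered = title.lower()
    for phrase in PHRASES:
        if phrase in lowered:
            return phrase
    return "other"


def tally(titles: Iterable[str]) -> Counter:
    """Count notice titles by the retraction phrase they contain."""
    return Counter(classify_title(t) for t in titles)


# Hypothetical notice titles, for illustration only.
titles = [
    "Retraction notice to: A hypothetical paper on catalysis",
    "RETRACTED ARTICLE: A hypothetical plant study",
    "A hypothetical surgical case report (Retracted)",
    "Notice of Retraction: A hypothetical conference paper",
]
print(tally(titles))
```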

General characteristics of retractions

Table 2 classifies the retractions on the basis of the following criteria:

- authorship,
- document type,
- institutional (or country) collaboration,
- sources of retractions, and
- funding sources of retracted publications.

Ten percent of the retracted publications were written by single authors. Over 40% were written by authors from more than one institution, and only about 16% involved collaboration with authors from countries other than India.

In SCOPUS, most retractions were indexed as “Erratum” (though this word was not used in the retraction notices themselves) and only two retractions as “Retracted.” This is worrying because an erratum does not suggest a retraction. Most errata report mistakes and have nothing to do with scientific misconduct. Wang et al. (2018) recommended that retraction notices be indexed as a separate document type, as is done in PubMed.

Collaborative countries

The Indian authors of the retracted articles cooperated with researchers from various countries (Table 3). The United States tops the list (12 retractions), followed by Malaysia (5) and Iran (4); these three countries account for over half of the retracted publications that resulted from international cooperation. That the U.S. heads the list is not surprising, as it is the main collaborative partner of Indian science (Rajendran et al. 2014; Singh et al. 2016; Elango and Ho 2017). Almost 60% of the internationally co-authored retractions resulted from collaborations with researchers from the G7 countries, which dominate most research fields (Elango et al. 2013; Ho 2014; Elango and Ho 2018).
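
A share such as the G7 figure above is best computed per paper, so that a retraction with two G7 partners is counted once rather than once per country as in a country-wise table. The Python sketch below assumes each internationally co-authored retraction is stored as the set of its non-Indian partner countries; the partner lists are hypothetical.

```python
from typing import Iterable, Set

# The G7 member countries.
G7 = frozenset({"Canada", "France", "Germany", "Italy", "Japan",
                "United Kingdom", "United States"})


def share_with_g7(partner_sets: Iterable[Set[str]]) -> float:
    """Share of internationally co-authored retractions with at least one G7 partner.

    Each element lists the non-Indian countries on one retracted paper; an empty
    set marks a purely domestic paper, which is excluded from the denominator.
    Each paper is counted once, however many G7 partners it has.
    """
    international = [set(p) for p in partner_sets if p]
    if not international:
        return 0.0
    with_g7 = sum(1 for p in international if p & G7)
    return with_g7 / len(international)


# Hypothetical partner lists for five retracted papers.
papers = [
    {"United States"},
    {"Iran"},
    {"Malaysia", "United Kingdom"},
    {"Saudi Arabia"},
    set(),  # domestic collaboration only
]
print(f"{share_with_g7(papers):.0%}")  # 50%
```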

Journals

Most retractions were issued by journals: 195 out of 239 (82%). They appeared in 147 journals, and most of these notices (174, about 90%) were published in journals indexed in the Journal Citation Reports, with impact factors ranging from 0.509 to 79.258. Most journals (121) issued only one retraction notice. The journals were spread over twenty broad subject areas, with the most journals (26) and retraction notices (36) in Biochemistry, Genetics and Molecular Biology.

Of the 147 journals, only twelve were published in India; they issued 22 retraction notices. Analyzing Chinese retractions, Liu and Chen (2018) observed a different pattern: they found not a single retraction notice for a paper by Chinese authors in a Chinese publication.

Table 4 lists the journals with at least three retracted publications. The top eight journals issued about 20% of the retraction notices. Three of these eight were Indian journals (Physiology and Molecular Biology of Plants, Indian Journal of Surgery, and Journal of Parasitic Diseases), which together issued thirteen retraction notices. The top journal, Journal of Hazardous Materials, is published by Elsevier and covers environmental science. Note that this journal publishes many articles every year, which may be one reason for its many retractions.
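
The concentration figures above (the share of notices issued by the top journals and the number of journals with a single notice) can be computed from per-journal counts. The Python sketch below uses hypothetical journal names and counts, not the data behind Table 4.

```python
from collections import Counter


def top_k_share(notices_per_journal: Counter, k: int) -> float:
    """Fraction of all retraction notices issued by the k most prolific journals."""
    total = sum(notices_per_journal.values())
    if total == 0:
        return 0.0
    top = sum(count for _, count in notices_per_journal.most_common(k))
    return top / total


def single_notice_journals(notices_per_journal: Counter) -> int:
    """Number of journals that issued exactly one retraction notice."""
    return sum(1 for count in notices_per_journal.values() if count == 1)


# Hypothetical per-journal counts (20 notices across 10 made-up journals).
counts = Counter({
    "Journal A": 6, "Journal B": 4, "Journal C": 3,
    "Journal D": 1, "Journal E": 1, "Journal F": 1, "Journal G": 1,
    "Journal H": 1, "Journal I": 1, "Journal J": 1,
})
print(f"top-3 share: {top_k_share(counts, 3):.0%}")              # 65%
print("single-notice journals:", single_notice_journals(counts))  # 7
```

Ties at the k-th position do not change the result, because `most_common` returns the same counts whichever tied journal it lists first.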

Funding agencies

We checked all the original articles to determine whether they were supported by any funding agency. Table 5 lists the top funding agencies, that is, all those that funded or co-funded at least two retracted publications. Few studies were funded by more than one agency. Sixty percent of the funded retractions were (co)funded by the Department of Science and Technology, the Council of Scientific & Industrial Research, or the University Grants Commission.
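
When articles can be co-funded, summing per-agency counts overstates how many articles a group of agencies supported, because a co-funded article appears once under each agency. The Python sketch below contrasts the two counts; the agency abbreviations DST, CSIR, and UGC stand for the three agencies named above, and the funding data are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set

# Abbreviations for the three agencies named in the text.
MAJOR = frozenset({"DST", "CSIR", "UGC"})


def per_agency_counts(funders_per_article: Iterable[Set[str]]) -> Counter:
    """How many retracted articles each agency (co)funded; co-funded articles
    are counted once for every agency involved."""
    return Counter(agency for funders in funders_per_article for agency in set(funders))


def share_funded_by_any(funders_per_article: Iterable[Set[str]], agencies: frozenset) -> float:
    """Share of funded articles supported by at least one of the given agencies."""
    funded = [set(f) for f in funders_per_article if f]
    if not funded:
        return 0.0
    return sum(1 for f in funded if f & agencies) / len(funded)


# Hypothetical funding data for five retracted articles (the last is unfunded).
articles = [{"DST"}, {"DST", "UGC"}, {"CSIR"}, {"Other agency"}, set()]

agency_counts = per_agency_counts(articles)
print(sum(agency_counts[a] for a in MAJOR))    # 4 agency-article pairs
print(share_funded_by_any(articles, MAJOR))    # 0.75: 3 of 4 funded articles
```

Since few retracted studies had more than one funder, the two approaches should differ only slightly here, but the per-article count is the one that answers "what share of funded retractions".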

Reasons for retraction

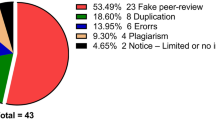

Based on the retraction notices, we classified the reasons for retraction into ten categories (Table 6). For nearly 20% of the retractions, no reason was provided. Aspura et al. (2018) placed such articles in the category “violation of publication principle.” Unlike them, we placed these articles in the category “unknown,” because all the other reasons could also be classified as “violation of publication principle,” and we did not want to mix stated reasons with unstated ones. The “unknown” category thus covers all notices that did not disclose the reason for the retraction.
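
The coding rule described above, in particular reserving “unknown” for notices that state no reason and “other” for stated reasons outside the named categories, can be made explicit in a small Python sketch. The category names come from the text; the keyword cues are hypothetical and far cruder than the manual reading the study relied on, and the `reason_stated` flag stands in for the coder's judgment.

```python
from typing import List, Tuple

# Hypothetical keyword cues per category; the study coded reasons manually.
# Order matters: the first matching category wins.
CUES: List[Tuple[str, Tuple[str, ...]]] = [
    ("duplicate publication", ("previously published", "duplicate")),
    ("plagiarism", ("plagiari", "copied", "without attribution")),
    ("fake review process", ("fake review", "compromised peer review")),
    ("fake data", ("fabricat", "falsif")),
    ("author dispute", ("authorship dispute", "without the consent")),
    ("copyright issue", ("copyright",)),
    ("inappropriate citation", ("inappropriate citation",)),
    ("error", ("error", "mistake")),
]


def code_reason(notice_text: str, reason_stated: bool) -> str:
    """Assign one of ten categories to a retraction notice.

    Notices that give no reason go to "unknown" rather than to a catch-all
    such as "violation of publication principle"; stated reasons that match
    no cue go to "other".
    """
    if not reason_stated:
        return "unknown"
    text = notice_text.lower()
    for category, cues in CUES:
        if any(cue in text for cue in cues):
            return category
    return "other"


print(code_reason("This article has been retracted at the request of the Editor.", False))
print(code_reason("Substantial portions of the text were copied from an earlier paper.", True))
print(code_reason("The experiments described were not conducted.", True))
```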

Retraction reasons were clearly stated in 81% of the notices. This is a high share compared with other studies, such as those by Aspura et al. (2018), with 27% of notices, and by Tripathi et al. (2018), with less than 5% of notices explaining the retractions. The most common reason was, unsurprisingly, plagiarism (including self-plagiarism). Surprisingly, the number of retraction notices due to plagiarism for Indian authors was lower than the 385 articles that Chauhan (2018) found in the SCOPUS database for the period 2002–2016 in his research on plagiarism by Indian authors. Figure 3 shows the yearly numbers of retractions due to plagiarism; they fluctuated, with ups and downs and an average of 14 retractions due to plagiarism per year. Most retractions due to plagiarism were issued in 2008 and 2016, but 2018 will likely surpass them, with 14 retractions up to 3 August 2018 (data not shown in Fig. 3). Ironically, one of the articles retracted for plagiarism was itself about plagiarism: it was retracted because a portion of the questionnaire used in its expert study had been copied, although the study presented a guideline on plagiarism, with definitions and strategies to prevent and detect it (“Development of a guideline to approach plagiarism in Indian scenario: Retraction,” Indian Journal of Dermatology, 60(2), 2015, 210).

Almost one-fourth of the retractions were due to fake data, error, or duplicate publication. The share of duplicate publications (slightly over 5%) was much smaller than that reported for Korean medical journals (58%) (Huh et al. 2016). Author disputes, fake review processes, and copyright issues together accounted for 5% of the retractions. Only four retractions (1.7%) were due to a fake review process, a small number given that Tumor Biology used this reason to retract as many as 107 articles in a single retraction notice (Stigbrand 2017), all of them written by Chinese authors (Shan 2017). Similarly, one publisher retracted 58 articles by Iranian scientists due to a fake review process in a single day (Callaway 2016).

In our study, one publication was retracted due to inappropriate citation. Other reasons—including misinterpretation of data, experiments not conducted, and a different experiment conducted instead of the one mentioned in the manuscript—accounted for 5% of retractions.

Chaddah (2014) argued that not all plagiarism requires retraction. He referred to a specific type of plagiarism, which he called “results plagiarism,” in which scientists repeat an already published experiment and obtain valid data. Chaddah argued that such reproduction is a useful and common feature of science. While we agree with this statement, we object to using the term “plagiarism” to describe repeating an experiment (even in the form “results plagiarism”). Such repeated experimentation is a normal practice in science that serves to confirm results. It is thus not only accepted but also expected scientific behavior, one that reflects the repeatability of science. In fact, science would not exist without researchers trying to repeat and then extend already published experiments. Should we call such researchers “results plagiarists”?

To avoid plagiarism of any kind, authors should cite and reference properly, rewrite in their own words, acknowledge sources, and obtain written permissions (Nikumbh 2016). Both plagiarism and the resulting retractions can be prevented if journal staff use plagiarism detection software, either commercial (e.g., iThenticate or Turnitin) or free (e.g., www.duplichecker.com or www.smallseotools.com) (Misra et al. 2017). Plagiarism has become a serious issue in India, and the Indian government, through the University Grants Commission (UGC), which oversees higher education across the country, has adopted its first regulation on academic plagiarism. This regulation creates a four-tier system of penalties for plagiarism (http://www.sciencemag.org/news/2018/04/india-creates-unique-tiered-system-punish-plagiarism) in order to promote research integrity in academia and to curb plagiarism by developing systems to detect it. Another national body, the All India Council for Technical Education, has also moved to curb plagiarism: its chairman declared that faculty members and researchers involved in plagiarism would be punished according to the new UGC norms (https://peerscientist.com/news/faculty-researchers-indulging-in-plagiarism-will-be-punished-aicte-chair/). To facilitate this, the Minister of Human Resource Development of the Government of India announced at a conference of Vice Chancellors and Directors of Indian universities and higher education institutions that Turnitin-like software would be made available at no cost to all such institutions in the country (http://pib.nic.in/PressReleaseIframePage.aspx?PRID=1540488).

Any such software can be fooled, however. For instance, Ison (2018) claimed that Turnitin and similar software do not detect all types of plagiarism.

We have to remember that plagiarism and other unfair behavior in science did not appear without reason. Some time ago, ambition was likely the most important motive. But plagiarism was not as big a problem half a decade ago as it is now. Necker (2014) found that economists’ perception of pressure correlated with their admission of various kinds of scientific misbehavior. Thus, it is quite likely the publish-or-perish pressure that draws scientists to misconduct.

Conclusion

The analysis revealed several interesting phenomena related to a growing problem in the scholarly publishing world: retractions. Considering SCOPUS-indexed sources, the first retraction of a paper by an Indian researcher occurred in 1996, followed by eight years without any retraction. A second retraction occurred in 2005, and since then retractions have tended to increase, with an atypical peak of 45 retractions in 2010. The number of retractions of papers authored or co-authored by Indian researchers is nevertheless relatively small. We say “relatively” because every single retraction is a bad thing that should never happen. Still, given the number of researchers in India and the growing problem of retractions worldwide, the 240 retractions issued in SCOPUS-indexed sources until 3 August 2018 amount to little.

Most of the retracted publications were written with co-authors, sometimes foreign ones, most often from the U.S. Over half of the retracted publications involved institutional collaboration, and 31% were financially supported by funding agencies. The most common reason for retraction was plagiarism. This is consistent with earlier findings (Fang et al. 2012; Lei and Zhang 2018; Moradi and Janavi 2018) and unsurprising, given that the whole scientific world has been struggling with the growing problem of plagiarism and self-plagiarism.

More and more instances of plagiarism are due to insufficient awareness of publication ethics and research integrity among researchers, particularly regarding plagiarism (Dhingra and Mishra 2014). This lack of awareness may have been a small problem three or four decades ago, but these days, under the rat race and publish-or-perish pressure, it has become a serious one. Such pressure has not bypassed India: for academic promotion, most Indian higher education institutions expect their academic and research staff to publish at least one paper in a journal indexed in SCOPUS or Web of Science. This reflects the publish-or-perish phenomenon: what counts is quantity, not quality. It does not matter whether you spend years on an experiment ending with a long and exhaustive article or publish a short and dull article that adds nothing new or interesting to current knowledge, if both articles appear in the same journal. Both count as “one article in an indexed journal,” so the requirement for promotion is fulfilled. To overcome this, research evaluation should ask “what did you publish” rather than “where did you publish” (Chaddah and Lakhotia 2018). What’s more, far too many academics seem to lack the interest, time, and capability to care enough about research ethics, which, combined with no fear of punishment, can lead to bad behavior (Sharma 2016).

The analysis of the words and phrases used to denote retractions suggests some confusion in this terminology. Bibliometric databases like SCOPUS should therefore consider a dedicated document type for retracted publications, irrespective of the publication type of the original article, and another for retraction notices themselves. This is important for two reasons. First, it will help readers recognize retracted articles when using the databases (such articles must be clearly marked as retracted). Second, it will help organize bibliographic databases, which currently use various terms and document types to describe retracted articles and retraction notices.

The study has some limitations. First, we did not analyze the time that elapsed between the articles’ publication and their retraction, an interesting phenomenon. Second, we analyzed just one database, SCOPUS. Although it is one of the most important databases in scholarly publishing, and most of the retraction notices considered in this study were also indexed in the Thomson Reuters (now Clarivate Analytics) databases, its coverage is limited. Thus, a combined study of retraction notices collected from other indexing databases, enriched with information from Retraction Watch (www.retractionwatch.com), might provide more insight into retractions in Indian science. Third, we analyzed retractions from the point of view of journals, funding institutions, and individual articles, but not from the point of view of individual scientists or institutions. Such an analysis might be useful for several reasons. It would be interesting to analyze the share of recidivists among the authors of retracted articles. Has retraction taught them something? Or has it not, because unfair publication is their philosophy, their way of life? In the same context, it might be interesting to study the experience of such authors: are articles by young researchers (in terms of their experience in science, not their biological age) more likely to be retracted than those by experienced researchers? Fourth, we assumed that retracted publications are marked as “retracted” (in one way or another), but we did not verify this, and this question also deserves consideration.

References

Arora, S. C., & Kalucha, G. (2008). Retraction of “quasihyponormal toeplitz operators”. Journal of Operator Theory, 60(2), 445.

Aspura, M. Y. I., Noorhidawati, A., & Abrizah, A. (2018). An analysis of Malaysian retracted papers: Misconduct or mistakes? Scientometrics, 115(3), 1315–1328.

Ataie-Ashtiani, B. (2018). World map of scientific misconduct. Science and Engineering Ethics, 24(5), 1653–1656. https://doi.org/10.1007/s11948-017-9939-6.

Bar-Ilan, J., & Halevi, G. (2018). Temporal characteristics of retracted articles. Scientometrics, 116(3), 1771–1783.

Bozzo, A., Bali, K., Evaniew, N., & Ghert, M. (2017). Retractions in cancer research: A systematic survey. Research Integrity and Peer Review, 2(1), 5.

Callaway, E. (2016). Publisher pulls 58 articles by Iranian scientists over authorship manipulation. Nature. https://doi.org/10.1038/nature.2016.20916.

Chaddah, P. (2014). Not all plagiarism requires a retraction. Nature News, 511(7508), 127.

Chaddah, P., & Lakhotia, S. C. (2018). A policy statement on “Dissemination and Evaluation of Research Output in India” by the Indian National Science Academy (New Delhi). Proceedings of the Indian National Science Academy, 84(2), 319–329.

Chauhan, S. K. (2018). Research on plagiarism in India during 2002–2016: A bibliometric analysis. DESIDOC Journal of Library & Information Technology, 38(2), 69–74.

Dhingra, D., & Mishra, D. (2014). Publication misconduct among medical professionals in India. Indian Journal of Medical Ethics, 11(2), 104–107.

Elango, B., & Ho, Y. S. (2017). A bibliometric analysis of highly cited papers from India in Science Citation Index Expanded. Current Science, 112(8), 1653–1658.

Elango, B., & Ho, Y. S. (2018). Top-cited articles in the field of tribology: A bibliometric analysis. COLLNET Journal of Scientometrics and Information Management, 12(2), 289–307.

Elango, B., Rajendran, P., & Bornmann, L. (2013). Global nanotribology research output (1996–2010): A scientometric analysis. PLoS ONE, 8(12), e81094.

Fang, F. C., Steen, R. G., & Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proceedings of the National Academy of Sciences, 109(42), 17028–17033.

He, T. (2013). Retraction of global scientific publications from 2001 to 2010. Scientometrics, 96(2), 555–561.

Hesselmann, F., Graf, V., Schmidt, M., & Reinhart, M. (2017). The visibility of scientific misconduct: A review of the literature on retracted journal articles. Current Sociology, 65(6), 814–845.

Ho, Y. S. (2014). A bibliometric analysis of highly cited articles in materials science. Current Science, 107(9), 1565–1572.

Huh, S., Kim, S. Y., & Cho, H. M. (2016). Characteristics of retractions from Korean medical journals in the KoreaMed database: A bibliometric analysis. PLoS ONE, 11(10), e0163588.

Ison, D. C. (2018). An empirical analysis of differences in plagiarism among world cultures. Journal of Higher Education Policy and Management, 40(4), 291–304.

Lei, L., & Zhang, Y. (2018). Lack of improvement in scientific integrity: An analysis of WoS retractions by Chinese researchers (1997–2016). Science and Engineering Ethics, 24(5), 1409–1420.

Li, G., et al. (2018). Exploring the characteristics, global distribution and reasons for retraction of published articles involving human research participants: a literature survey. Journal of Multidisciplinary Healthcare, 11, 39–47.

Liu, X., & Chen, X. (2018). Journal retractions: Some unique features of research misconduct in China. Journal of Scholarly Publishing, 49(3), 305–319.

Marcus, A., & Oransky, I. (2014). What studies of retractions tell us. Journal of Microbiology & Biology Education, 15(2), 151–154.

Misra, D. P., Ravindran, V., Wakhlu, A., Sharma, A., Agarwal, V., & Negi, V. S. (2017). Plagiarism: A viewpoint from India. Journal of Korean Medical Science, 32(11), 1734–1735.

Moradi, S., & Janavi, E. (2018). A scientometrics study of Iranian retracted papers. Iranian Journal of Information Processing and Management, 33(4), 1805–1824.

Necker, S. (2014). Scientific misbehavior in economics. Research Policy, 43(10), 1747–1759.

Nikumbh, D. B. (2016). Research vs plagiarism in medical science (cytohistopathology). Archives of Cytology and Histopathology Research, 1(1), 1–3.

Nogueira, T. E., Gonçalves, A. S., Leles, C. R., Batista, A. C., & Costa, L. R. (2017). A survey of retracted articles in dentistry. BMC Research Notes, 10(1), 253.

Noorden, R. V. (2011). The reasons for retractions. http://blogs.nature.com/news/2011/10/the_reasons_for_retraction.html. Accessed August 21, 2018.

R Core Team. (2018). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/. Accessed December 19, 2019.

Rajendran, P., Elango, B., & Manickaraj, J. (2014). Publication trends and citation impact of tribology research in India: A scientometric study. Journal of Information Science Theory and Practice, 2(1), 22–34.

Ribeiro, M. D., & Vasconcelos, S. M. R. (2018). Retractions covered by Retraction Watch in the 2013–2015 period: Prevalence for the most productive countries. Scientometrics, 114(2), 719–734.

Sarkar, D. (2008). Lattice: Multivariate data visualization with R. New York: Springer.

Shan, J. (2017). Journal publisher removes Chinese articles. Global Times, April 21, 2017. http://www.globaltimes.cn/content/1043584.shtml.

Sharma, O. P. (2015). Ethics in science. Indian Journal of Microbiology, 55(3), 341–344.

Sharma, G. L. (2016). Academic plagiarism: an Indian scenario. Paripex – Indian Journal of Research, 5(4), 23–24.

Singh, N., Handa, T. S., Kumar, D., & Singh, G. (2016). Mapping of breast cancer research in India: A bibliometric analysis. Current Science, 110(7), 1178–1183.

Stigbrand, T. (2017). Retraction note to multiple articles in Tumor Biology. Tumor Biology. https://doi.org/10.1007/s13277-017-5487-6.

Tripathi, M., Dwivedi, G., Sonkar, S. K., & Kumar, S. (2018). Analysing retraction notices of scholarly journals: A study. DESIDOC Journal of Library & Information Technology, 38(5), 305–311.

Wang, T., Xing, Q. R., Wang, H., & Chen, W. (2018). Retracted publications in the biomedical literature from open access journals. Science and Engineering Ethics. https://doi.org/10.1007/s11948-018-0040-6.

Acknowledgements

We would like to thank the anonymous referees for their valuable comments on the first version of the manuscript.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

About this article

Cite this article

Elango, B., Kozak, M. & Rajendran, P. Analysis of retractions in Indian science. Scientometrics 119, 1081–1094 (2019). https://doi.org/10.1007/s11192-019-03079-y

DOI: https://doi.org/10.1007/s11192-019-03079-y