Abstract

Retraction is a self-cleaning activity of the global scientific community. In this study, the retraction of global scientific publications from 2001 to 2010 was quantitatively analyzed by using the Science Citation Index Expanded. The results indicated that the number of retractions increased faster than the number of global scientific publications. Retraction patterns differed markedly across fields. In the multidisciplinary category and in the life sciences, retraction was relatively active. The impact factor correlated strongly with the number of retractions, but did not correlate significantly with the rate of retraction. Although the number of publications in China, India, and South Korea increased faster than the global total, the retraction activities of these countries were higher than the worldwide average level.

Introduction

Scientific journals, as the medium for publishing scientific information, play an important role in knowledge dissemination and academic communication. Scientists stake their claim over scientific discoveries when they publish in these journals. Through publishing, scientists establish their reputations in the scientific community. Presently, the peer-review system is the gatekeeping mechanism that regulates the publication of articles in scientific journals. This system is the product of quality self-control mechanisms within the scientific community. However, the current reviewing system cannot completely control the quality of publications; hence, some articles are retracted for various reasons. The reasons for retracting articles fall into three categories, namely, errors, scientific fraud, and political or ethical reasons.

According to Van Noorden (2011), the number of retractions in scientific publications increased rapidly in the last 20 years. Among them, misconduct (44 %) was the main reason. Undoubtedly, this development posed a new challenge for publishers. Budd et al. (1998) analyzed 235 retractions from 1966 to 1997 based on the MEDLINE database. They found that the reasons for these retractions were errors (38.7 %), unreplicated results (16.2 %), academic misconduct (36.5 %), and no clear reasons (8.5 %). The findings indicated that the main reasons for retractions in the international biomedical literature were result errors and academic misconduct. Similarly, Wager and Williams (2011) performed a statistical analysis of the retractions in the global biomedical literature. The study covered the period from 1980 to 2009. Their results indicated that the number of retractions increased rapidly in the 1980s. Currently, retractions account for about 0.02 % of total publications. Based on a sample analysis of 312 retractions from 1988 to 2008, they found that authors directly retracted 63 % of the articles. Editors (21 %), journals (6 %), publishers (2 %), and institutions (1 %) also retracted articles. The reasons for the retractions were mainly erroneous or unreplicated results (40 %) and academic misconduct (28 %). Other reasons, namely, repeated publication (17 %) or no clear reasons (5 %), were cited as well. Based on the PubMed database, Steen (2011) analyzed 788 retracted papers from 2000 to 2010. The five countries with the most retracted papers were the USA (260), China (89), Japan (60), India (50), and the UK (45). Although the USA had the most retractions, its fraction of fraud was not the highest. Among the countries with the highest rates of retraction for fraud, India and Italy ranked first and second, respectively. Many of the authors of retracted papers were repeat offenders.

Using meta-analysis, Fanelli (2009) examined scientific misconduct in a systematic review of 21 surveys, of which 18 were included in the meta-analysis. On average, 1.97 % of scientists admitted to having fabricated, falsified, or modified data or results at least once, and up to 33.7 % admitted to other questionable research practices. Misconduct was reported more frequently by clinical, medical, and pharmacological researchers than by researchers in other fields. Samp et al. (2012) found that the most important reason for retractions in drug therapy studies was scientific misconduct (over 72 %). Misconduct and fraud were more frequent in drug therapy studies than in the broader biomedical literature. Based on data from PubMed, Fang et al. (2012) thoroughly analyzed 2,047 retractions, of which 67.4 % were attributable to misconduct. The rate of retraction due to fraud or suspected fraud had increased 10-fold since 1975. In recent years, the retraction rate increased rapidly due to plagiarism and duplicate publication. The impact factors of the journals that had retractions for plagiarism and duplicate publication were lower than those of the journals that had retractions for errors and scientific fraud. Journals with higher impact factors were also found to have a shorter time to retraction.

Existing research on retraction has focused on literature in the biomedical fields. In this study, retraction in global scientific publications from 2001 to 2010 was analyzed by using bibliometrics, based on a quantitative analysis of the Science Citation Index Expanded (SCIE) database.

Data and method

The data used in this study were obtained from the SCIE of Web of Science (WOS). Published by Thomson Reuters, SCIE is a general citation index that covers the full range of scientific and technical fields. Data collection consisted of two parts. First, “retraction AND vol” was used as the search strategy in searching titles within the publishing years of 2001 to 2010. The search results were verified and 1,278 retractions were identified. This data set was the main subject of the present study. Second, “retracted AND article” was used as the search strategy in searching titles within the publishing years of 2001 to 2010. This search only included reviews, articles, and letters. The search results were verified and 1,455 retracted articles were obtained. This data set was mainly used to analyze the distribution of retracted articles across countries. Data collection lasted until 5 February 2012.

Based on the subfields of the journals and the field classification method by Glänzel and Schubert (2003), the retractions were classified into 12 fields. These fields are agriculture and environment (AGRI), biology (BIOL), biosciences (BIOS), biomedical research (BIOM), clinical and experimental medicine (CLIN), neuroscience and behavior (NEUR), chemistry (CHEM), physics (PHYS), geosciences and space sciences (GEOS), engineering (ENGN), mathematics (MATH), and multidisciplinary sciences (MULT). In analyzing country distribution, the integer scheme method was used to assign the papers to each country. The Journal Impact Factors were obtained from the Journal Citation Reports Science Edition (2009).
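The country assignment can be illustrated with a minimal sketch. It assumes that the "integer scheme" means whole (integer) counting, in which each country appearing on a paper receives one full credit for that paper regardless of how many of its addresses are listed. The function name and the sample address lists are hypothetical and are not taken from the study's data.

```python
from collections import Counter

def integer_count(papers):
    """Give each country one full credit per paper it appears on.

    `papers` is an iterable of country lists, one list per paper.
    A country listed several times on the same paper is counted once.
    """
    counts = Counter()
    for countries in papers:
        counts.update(set(countries))  # de-duplicate within a single paper
    return counts

# Hypothetical address lists for three retracted papers
papers = [
    ["USA", "China", "USA"],  # two USA addresses, still one credit
    ["Germany"],
    ["China", "Japan"],
]
counts = integer_count(papers)
print(counts)
```

Under this scheme, internationally co-authored papers are credited to every participating country, so country totals can add up to more than the number of papers.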

Results

Trend of retraction

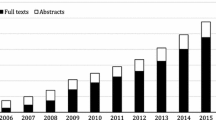

From 2001 to 2010, the increase in the retraction of global scientific articles was astonishing. Figure 1 shows the increasing trend in retractions and in total publications during this period. Although total publications increased, the rise in retractions was faster during these 10 years. In 2001, only 18 retractions were recorded among global scientific papers. The retractions increased to 300 in 2010, which was a 15.67-fold increase. In the same period, global publications increased from 782,042 in 2001 to 1,148,253 in 2010, which was only a 0.47-fold increase. The retraction rate relative to total publications increased from 0.0023 % in 2001 to 0.026 % in 2010, which was a 10.35-fold increase.
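The fold increases quoted above can be checked with a few lines of arithmetic. The sketch below assumes that "n-fold increase" means (end / start) - 1 and that the 18 retractions belong to 2001, which is what the 0.0023 % rate implies. The function and variable names are illustrative only.

```python
def fold_increase(start, end):
    """Relative increase from start to end, expressed as a multiple of start."""
    return end / start - 1

# Figures quoted in the text
retractions_start, retractions_end = 18, 300
publications_start, publications_end = 782_042, 1_148_253

# Retraction rates as percentages of total publications
rate_start = retractions_start / publications_start * 100
rate_end = retractions_end / publications_end * 100

retraction_growth = fold_increase(retractions_start, retractions_end)
publication_growth = fold_increase(publications_start, publications_end)
rate_growth = fold_increase(rate_start, rate_end)

print(f"retractions:  {retraction_growth:.2f}-fold")
print(f"publications: {publication_growth:.2f}-fold")
print(f"rate:         {rate_growth:.2f}-fold")
```

Under these assumptions the three values round to 15.67, 0.47, and 10.35, matching the figures in the paragraph.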

The cause of the rapid increase is unclear, but it could be due to the following reasons. First, research in modern science is comprehensive and complicated. Any subtle negligence can cause an “honest error.” Hence, to avoid the dissemination of these errors, articles are withdrawn from publication. Retraction is the self-cleaning action of the scientific community. Second, with the increasing activity in academic communication and the emergence of online journals and professional databases, the discovery of plagiarism, duplicated submissions, and academic misconduct became easier. Consequently, some publishers adopted the CrossCheck system to screen for academic misconduct. Third, the “publish or perish” culture in the scientific world increased the pressure on some authors to commit academic misconduct.

Retraction profile

Owing to differences among scientific disciplines, publication patterns differ across research fields. To compare the retraction profiles across the various fields of science, the Activity Index (AI) was used. AI is a relative indicator for analyzing publication profiles. In its original form, AI is the ratio of a given field’s share in a country’s publications to that field’s share in the world’s publications (Schubert and Braun 1986). In this study, AI is applied to retractions and is defined by the formula:

$$\mathrm{AI} = \frac{\text{the given field's (country's) share of the world's retractions}}{\text{the given field's (country's) share of the world's publications}}$$

When AI = 1, the retraction activity of the given field (country) was the same as that of all the science fields (countries). When AI > 1, the retraction activity of the given field (country) was higher than that of all the science fields (countries). Finally, when AI < 1, the retraction activity of the given field (country) was lower than that of all the science fields (countries).
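A small sketch may make the index concrete. It assumes that the retraction AI is a unit's share of the world's retractions divided by its share of the world's publications, a reading consistent with the interpretation of AI = 1, AI > 1, and AI < 1 given above. The function name and all counts are hypothetical.

```python
def activity_index(unit_retractions, world_retractions,
                   unit_publications, world_publications):
    """Retraction activity of a field or country relative to the world.

    Assumed definition: (share of world retractions) / (share of world
    publications). A value of 1 means retraction activity equals the
    world average.
    """
    retraction_share = unit_retractions / world_retractions
    publication_share = unit_publications / world_publications
    return retraction_share / publication_share

# Hypothetical field: 5 % of world retractions, 2.5 % of world publications
ai = activity_index(
    unit_retractions=50, world_retractions=1_000,
    unit_publications=100_000, world_publications=4_000_000,
)
print(f"AI = {ai:.2f}")
```

In this made-up case the field retracts at twice the world average, so its AI is about 2.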

Figure 2 shows the retraction profiles of the different science fields. The highest activity was in MULT. Besides MULT, the retraction activities in BIOS, BIOM, and CLIN were higher than the global average. The lowest retraction activities were in GEOS and MATH. From 2001 to 2010, 97 retractions were found in MULT. Among these retractions, 93 were from three high-impact journals, namely, Nature, Science, and Proceedings of the National Academy of Sciences of the United States of America. This figure comprised 96 % of the total retractions in MULT; that is, the retractions in MULT occurred almost entirely in these three high-impact journals. During this period, the retraction rate was 0.00134 % on average for the global journals. However, the retraction rate of these three journals was 0.01648 %, which was 12.33 times the average. In an analysis of retractions in biomedical fields, Nath et al. (2006) also found that the highest numbers of retractions were from three high-impact journals. They offered three explanations. First, the high level of post-publication scrutiny of the articles in these journals could raise the rate at which errors are detected, whereas errors in less widely read and cited journals could easily go undetected. Second, the complexity and rigour associated with studies published in these journals may lead to a higher risk of error in implementing and replicating the research. Third, the large volume of publications in these journals could also increase the rate of error among them.

Retractions and impact factors

Garfield (1972) introduced the impact factor (IF), which is considered the most important indicator for evaluating the influence of journals. IF is related to the average citation rate of publications. A high IF indicates that a journal has great influence in its field. Fang and Casadevall (2011) developed a retraction index based on the retraction rate in publications. They obtained samples from 17 journals in PubMed (IF > 2), and found a surprisingly robust correlation between a journal's retraction index and its IF. Because citation practices differ among scientific disciplines, and because the coverage of each discipline in SCI also differs, IFs cannot be compared directly across disciplines. For this reason, some researchers proposed a normalized impact factor (NIF). Sen (1992) developed an NIF by establishing a coefficient based on the highest IF in the specific journal category, and then multiplying it by the Garfield IF. Pudovkin and Garfield (2004) developed their NIF based on the rank of a journal within its specialty category. The rank-normalized IF is expressed as:

$$\mathrm{rnIF}_j = \frac{K - R_j + 1}{K}$$

where $R_j$ is the JCR rank of journal $j$, and $K$ is the number of journals in its specialty category.
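As an illustration, the rank-normalized impact factor of Pudovkin and Garfield (2004), commonly written as (K - R_j + 1) / K, can be computed as follows. The function name and the example ranks are hypothetical.

```python
def rank_normalized_if(rank, category_size):
    """Rank-normalized impact factor: (K - R_j + 1) / K.

    rank          -- R_j, the journal's rank by IF in its category (1 = highest)
    category_size -- K, the number of journals in that category
    """
    if not 1 <= rank <= category_size:
        raise ValueError("rank must lie between 1 and category_size")
    return (category_size - rank + 1) / category_size

# Hypothetical journals in a category of 40 titles
top = rank_normalized_if(1, 40)      # highest-ranked journal
tenth = rank_normalized_if(10, 40)
last = rank_normalized_if(40, 40)    # lowest-ranked journal
print(top, tenth, last)
```

The value runs from 1 for the top journal of a category down to 1/K for the last one, which puts journals from categories of different sizes and citation habits on a common scale.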

In this study, the method of Pudovkin and Garfield was used to normalize the IFs within the designated journal categories. Then, the journals were ranked according to their NIFs and divided into 20 equal-width sections. Table 1 shows the correlation analysis between the NIF and the retractions. The correlation between the number of retractions and the NIF was significant (p < 0.01). This finding indicated that journals with high IFs had more retractions. To clarify whether the publishing volume of each journal had an effect, the correlation between the retraction rate and the NIF was also analyzed. The results showed no significant correlation, which indicated that the higher publishing volume of high-impact journals was the main reason for their having more retractions than low-impact journals.
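The analysis described above can be sketched roughly as follows. The source does not state which correlation coefficient or significance test was used, so the Pearson coefficient here is an assumption, and no p-value is computed. The journal data are invented, and four sections are used instead of 20 to keep the example small.

```python
import math

def section_index(nif, sections=20):
    """Map an NIF in (0, 1] to one of `sections` equal-width bins (0-based)."""
    return min(int(nif * sections), sections - 1)

def aggregate(journals, sections=20):
    """Sum retractions and publications per NIF section.

    `journals` is an iterable of (nif, retractions, publications) tuples.
    """
    retractions = [0] * sections
    publications = [0] * sections
    for nif, n_retracted, n_published in journals:
        i = section_index(nif, sections)
        retractions[i] += n_retracted
        publications[i] += n_published
    return retractions, publications

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)

# Hypothetical journals: (NIF, retractions, publications)
journals = [(0.10, 1, 1000), (0.35, 4, 2000), (0.60, 3, 3000), (0.90, 8, 4000)]
retractions, publications = aggregate(journals, sections=4)
rates = [r / p for r, p in zip(retractions, publications)]
section_ids = list(range(4))

count_r = pearson(section_ids, retractions)
rate_r = pearson(section_ids, rates)
print(f"counts vs NIF section: r = {count_r:.3f}")
print(f"rates  vs NIF section: r = {rate_r:.3f}")
```

Dividing by publications before correlating is what separates the effect of journal size from the effect of impact: in this made-up data the correlation for raw counts is stronger than the one for rates.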

Country of retraction

From 2001 to 2010, 1,455 publications were retracted. Among these cases, 66 countries accounted for 1,387 retractions. Figure 3 shows the country distribution of the retracted publications. The nodes represent the countries/regions, and the area of each node corresponds to the number of retractions. The thickness of the links represents the frequency of collaboration. The color represents the retraction activity, with dark colors indicating a high incidence of retractions. Developing countries had high activity indexes. Seven countries were found to have over 50 retractions each. These countries were the USA (451), China (327), Germany (123), Japan (123), India (103), the UK (91), and South Korea (60). The USA had the largest number of publications and retractions. Nonetheless, the retraction activity index for the USA was 1.09, which is close to the worldwide average level. The scientific production of the USA was huge, and comprised 29 % of the global production from 2001 to 2010. Thus, the worldwide average was, to a large extent, determined by the USA data. The retraction activity indexes of China, India, and South Korea were 2.73, 2.35, and 1.46, respectively. The retraction activity indexes of Germany, Japan, and the UK were 1.09, 1.07, and 0.80, respectively. From 2001 to 2010, publications in the USA, Germany, Japan, and the UK increased 0.29-, 0.28-, 0.06-, and 0.24-fold, respectively. At the same time, global publications increased 0.47-fold. In contrast, publications in China, India, and South Korea increased 2.91-, 1.36-, and 1.53-fold, respectively. This result indicated the need for these three countries to raise the quality of their publications, given the rapid growth in their publishing output.

Conclusion

Although global scientific publications increased in recent years, the number of retractions increased even faster. The growing complexity of scientific research and the pressure to publish might lead to more retractions. The retraction patterns varied across disciplines. The relatively high retraction rates in the multidisciplinary sciences and in the life sciences require further study. Developing countries, especially those showing a rapid increase in publishing, need to continuously improve their science research policies. These countries also need to strictly control the quality of their scientific production in order to compete at the international level. Although the number of retractions in high-impact journals was higher than in low-impact journals, the correlation between the IFs and the retraction rates was not significant. Peer review in high-impact journals is strict, and other researchers devote more attention and effort to replicating the results published there; by comparison, defective publications in low-impact journals can be overlooked. Hence, the retractions observed could be just the tip of the iceberg. Eliminating the effects of these defective publications on the scientific community is an important challenge for publishers of scientific journals.

References

Budd, J. M., Sievert, M. E., & Schultz, T. R. (1998). Phenomena of retraction: Reasons for retraction and citations to the publications. JAMA, 280(3), 296–297.

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS One, 4(5), e5738. doi:10.1371/journal.pone.0005738.

Fang, F. C., & Casadevall, A. (2011). Retracted science and the Retraction Index. Infection and Immunity, 79(10), 3855–3859.

Fang, F. C., Steen, R. G., & Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proceedings of the National Academy of Sciences of the United States of America, 109(42), 17028–17033.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178(4060), 471–479.

Glänzel, W., & Schubert, A. (2003). A new classification scheme of science fields and subfields designed for scientometric evaluation purposes. Scientometrics, 56(3), 357–367.

Nath, S. B., Marcus, S. C., & Druss, B. G. (2006). Retractions in the research literature: misconduct or mistake. Medical Journal of Australia, 185(3), 152–154.

Pudovkin, A.I., & Garfield, E. (2004). Rank-normalized impact factor: A way to compare journal performance across subject categories. Presented at the American Society for Information Science and Technology Annual Meeting, Providence. http://garfield.library.upenn.edu/papers/asistranknormalization2004.pdf.

Samp, J. C., Schumock, G. T., & Pickard, A. S. (2012). Retracted publications in the drug literature. Pharmacotherapy, 32(7), 586–595.

Schubert, A., & Braun, T. (1986). Relative indicators and relational charts for comparative assessment of publication output and citation impact. Scientometrics, 9(5–6), 281–291.

Sen, B. K. (1992). Normalised impact factor. Journal of Documentation, 48(3), 318–325.

Steen, R. G. (2011). Retractions in the scientific literature: do authors deliberately commit research fraud? Journal of Medical Ethics, 37(2), 113–117.

Van Noorden, R. (2011). The trouble with retractions. Nature, 478(7367), 26–28.

Wager, E., & Williams, P. (2011). Why and how do journals retract articles? An analysis of Medline retractions 1988–2008. Journal of Medical Ethics, 37(9), 567–570.

Cite this article

He, T. Retraction of global scientific publications from 2001 to 2010. Scientometrics 96, 555–561 (2013). https://doi.org/10.1007/s11192-012-0906-3
