Abstract

In most empirical studies, once the best model has been selected according to a certain criterion, subsequent analysis is conducted conditionally on the chosen model. In other words, the uncertainty of model selection is ignored once the best model has been chosen. However, the true data-generating process is in general unknown and may not be consistent with the chosen model. In the analysis of productivity and technical efficiency in stochastic frontier settings, if the estimated parameters or the predicted efficiencies differ across competing models, then it is risky to base predictions on the selected model alone. Buckland et al. (Biometrics 53:603–618, 1997) have shown that if model selection uncertainty is ignored, the precision of the estimates is likely to be overestimated, the coverage of the estimated confidence intervals is often below the nominal level, and, consequently, predictions may be less accurate than expected. In this paper, we suggest using a model-averaged estimator based on multimodel inference to estimate stochastic frontier models. The potential advantages of the proposed approach are twofold: it incorporates model selection uncertainty into statistical inference, and it reduces the model selection bias and variance of the frontier and technical efficiency estimators. The approach is demonstrated empirically with an Indian farm data set.


1 Introduction

Model selection is commonly used in empirical studies as a tool for choosing the best model among competing ones. Once a model has been chosen, subsequent analyses are conducted conditionally on the selected model and ignore the uncertainty associated with model selection. However, model selection can be highly variable, and the selected model is not, by definition, the true one (Footnote 1). Even if the data-generating process (DGP) is fixed, different model selection criteria may choose different models. It is often the case that no single model is clearly superior to the others. Therefore, it may be risky to base empirical analysis or prediction on a single model. In this paper, we propose using model averaging to analyze stochastic frontier (SF) models as an alternative to single-model inference.

Since the pioneering work of Aigner et al. (1977), SF analysis has been widely used in productivity and efficiency studies to describe and estimate production frontiers. However, the existing SF literature offers only limited systematic treatment of tests or model selection criteria. Most empirical applications of SF analysis rely on an off-the-shelf model selection scheme, such as “goodness-of-fit” checks of residuals or “significance tests” of coefficients, to arrive at a “best model” that is thought to approximate the true model adequately. In studying household maize production in Kenya, Liu and Myers (2009) propose an R²-type measure to assess a model’s overall explanatory power in the SF regression setting. For model selection, Schmidt and Lin (1984) and Alvarez et al. (2006) propose the likelihood ratio (LR) test, the Lagrange multiplier (LM) test, and the Wald test of zero-coefficient restrictions. Lai and Huang (2010), on the other hand, apply the Akaike (1973, 1974) and Takeuchi (1976) information criteria. However, regardless of the method used, model selection uncertainty is always present, because the same data are used both for model selection and for the associated parameter estimation and inference. If model selection uncertainty is ignored, the subsequent productivity and efficiency analyses of the selected “best model” can be invalid. In the estimation of the labor input coefficient \( \hat{\beta } \), for example, the sampling variance of the estimator given the selected best model should have two components: the conditional variance given the selected model, \( \text{var} (\hat{\beta }|{\text{selected model}}) \), and the variance arising from model selection itself, because the selected model is itself an estimate of the true model. Pötscher (1991) has shown that the distributions of estimators and test statistics are dramatically affected by the act of model selection. 
Leeb and Pötscher (2005) also point out that ignoring the variance of the model selection component may lead to invalid inference. In most empirical studies, the reported confidence intervals tend to be too short, and a hypothesis rejected at a nominal 5% significance level may actually have been tested at a considerably higher level. It is therefore sometimes advantageous, as proposed in this paper, for SF analysis to average estimators across several competing models rather than rely on a single “best model”.

A model-averaged estimator is a weighted average of the estimators obtained from competing models. Model averaging has several practical and theoretical advantages. It incorporates model selection uncertainty into statistical inference. It reduces model selection bias in parameter estimation. It also lowers the estimation variance while controlling for omitted-variable bias (see Hansen 2005). Subsequent statistical inference on the model-averaged estimator is based on the entire set of competing models.

In this paper, we propose using the model-averaged estimator to analyze SF models. The basic framework of SF model selection and the model-averaged estimator is described in Sect. 2. Three model selection criteria are used to construct the weights of the competing models: the Akaike information criterion (AIC, Akaike 1973), Takeuchi’s information criterion (TIC, Takeuchi 1976), and the Bayesian information criterion (BIC, Schwarz 1978). The model-averaged estimators based on the AIC, BIC, and TIC weights are compared using the bootstrap. The implementation of the model-averaged estimator for the frontier regression and the technical efficiencies is discussed in Sect. 3. Sect. 4 gives an application to the Indian farm data set used by Battese and Coelli (1992, 1995), Wang and Schmidt (2002), Alvarez et al. (2006), and Lai and Huang (2010). Sect. 5 concludes.

2 Model-averaged estimator

Let \( \{ y_{i} ,x_{i} ,z_{i} \}_{i = 1}^{n} \) be a sample of independent observations on n firms. A general form of the SF model can be written as
$$ y_{i} = g(x_{i} ;\beta ) + v_{i} - u_{i} ,\quad i = 1, \ldots ,n, \tag{1} $$

where \( y_{i} \) denotes the logarithm of output, and the production frontier \( g(x_{i} ;\beta ) \) is a function of the logged input vector \( x_{i} \in R^{P} \) with parameter vector β. Following standard SF modeling, the random error \( v_{i} \) represents statistical noise and is assumed to be independent of the non-negative random error \( u_{i} \), which represents production inefficiency. The variables \( z_{i} \) denote exogenous factors that may affect \( u_{i} \). For a Cobb-Douglas production frontier, \( g(x_{i} ;\beta ) \) is linear in \( x_{i} \). For a translog production frontier, \( g(x_{i} ;\beta ) \) contains both linear and quadratic terms in \( x_{i} \). Following Battese and Coelli (1988), the technical efficiency (TE) of the ith firm can be defined as the conditional expectation
$$ {\text{TE}}_{i} = {\text{E}}\left( {e^{{ - u_{i} }} |\varepsilon_{i} } \right), \tag{2} $$

where \( \varepsilon_{i} = v_{i} - u_{i} \) is the composite error.

Let \( f(y_{i} |x_{i} ,z_{i} ;\theta ) \) be the conditional probability density function (PDF) of \( y_{i} |(x_{i} ,z_{i} ) \). Here \( \theta = \left( {\beta^{\text{T}} ,\theta_{v}^{\text{T}} ,\theta_{u}^{\text{T}} } \right)^{\text{T}} \) denotes the vector of parameters in model (1), and \( \theta_{v} \) and \( \theta_{u} \) are the parameter vectors of the conditional PDFs of v and u, respectively. The maximum likelihood (ML) estimator of θ is then defined as
$$ \hat{\theta } = \mathop {\arg \max }\limits_{\theta } \sum\limits_{i = 1}^{n} {\ln f(y_{i} |x_{i} ,z_{i} ;\theta )} . \tag{3} $$

Once the ML estimator \( \hat{\theta } = \left( {\hat{\beta }^{\text{T}} ,\hat{\theta }_{v}^{\text{T}} ,\hat{\theta }_{u}^{\text{T}} } \right)^{\text{T}} \) is obtained, the production frontier \( g(x_{i} ;\beta ) \) of the ith firm is predicted by
$$ \hat{g}(x_{i} ;\beta ) = g(x_{i} ;\hat{\beta }), \tag{4} $$

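and the TE is estimated by
$$ \widehat{\text{TE}}_{i} = {\text{E}}\left( {e^{{ - u_{i} }} |\hat{\varepsilon }_{i} ,\hat{\theta }} \right), \tag{5} $$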

where \( \hat{\varepsilon }_{i} = y_{i} - x_{i}^{\text{T}} \hat{\beta } \) is the predicted value of the composite error \( \varepsilon_{i} \).

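Now, suppose there are J competing SF models under consideration,
$$ M_{j} :\;y_{i} = g^{j} (x_{i} ;\beta^{j} ) + v_{i}^{j} - u_{i}^{j} ,\quad j = 1, \ldots ,J. \tag{6} $$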

For example, \( g^{1} (x_{1i} ,x_{2i} ;\beta^{1} ) \) is a Cobb-Douglas function with two inputs, labor and capital, while \( g^{2} (x_{1i} ,x_{2i} ;\beta^{2} ) \) is a translog function and \( g^{3} (x_{1i} ,x_{2i} ;\beta^{3} ) \) is a Fourier flexible function. The distributions of the noise component \( v_{i}^{j} \) and the inefficiency component \( u_{i}^{j} \) may differ from model to model. Their associated parameter vectors are denoted \( \theta_{v}^{j} \) and \( \theta_{u}^{j} \), respectively (Footnote 2). The parameter vector of the Cobb-Douglas specification, \( \beta^{1} = \left( {\beta_{0}^{1} ,\beta_{1}^{1} ,\beta_{2}^{1} } \right) \), consists of an intercept and the two coefficients associated with \( x_{1i} \) and \( x_{2i} \). For the translog specification, the parameter vector \( \beta^{2} = \left( {\beta_{0}^{2} ,\beta_{1}^{2} ,\beta_{2}^{2} ,\beta_{11}^{2} ,\beta_{22}^{2} ,\beta_{12}^{2} } \right) \) contains three additional coefficients associated with the squares and the cross-product of \( x_{1i} \) and \( x_{2i} \). For the Fourier flexible specification, \( \beta^{3} = \left( {\beta_{0}^{3} ,\beta_{1}^{3} ,\beta_{2}^{3} ,\beta_{11}^{3} ,\beta_{22}^{3} ,\beta_{12}^{3} ,{\text{others}}} \right) \) includes further coefficients associated with the Fourier series in \( x_{1i} \) and \( x_{2i} \) (Footnote 3). In the following analysis, \( M_{j} \) denotes the jth competing model, and \( \hat{\theta }^{j} = \left( {\hat{\beta }^{j\text{T}} ,\hat{\theta }_{v}^{j\text{T}} ,\hat{\theta }_{u}^{j\text{T}} } \right)^{\text{T}} \) denotes the ML estimator of \( \theta^{j} \) defined in (3) under model \( M_{j} \). Given the predicted composite error \( \hat{\varepsilon }_{i}^{j} = y_{i} - x_{i}^{\text{T}} \hat{\beta }^{j} \), the estimated TE of the ith firm under \( M_{j} \) is \( \widehat{\text{TE}}_{i}^{j} = {\text{E}}(e^{{ - u_{i} }} |\hat{\varepsilon }_{i}^{j} ,\hat{\theta }^{j} ) \), and the predicted frontier is \( \hat{g}(x_{i} ;\beta^{j} ) = g(x_{i} ;\hat{\beta }^{j} ) \).

Suppose that we are interested in a certain parameter of the SF model in (1), for example, the coefficient \( \beta_{1} \) of \( x_{1i} \), the labor input. In a typical model selection procedure, once the jth model has been selected, the estimator \( \hat{\beta }_{1}^{j} \) of the coefficient on \( x_{1i} \) in the selected model is taken as the “best” estimator of \( \beta_{1} \). The estimator \( \hat{\beta }_{1}^{j} \) is called the estimator-post-selection. An alternative to selecting one “best” model and its estimator is to form a weighted average of the J estimators \( \hat{\beta }_{1}^{j} \) from all competing models \( M_{j} \) (j = 1, 2, … , J), i.e., \( \hat{\beta }_{1} = \sum\nolimits_{j = 1}^{J} {\pi_{j} \hat{\beta }_{1}^{j} } \). Here each model \( M_{j} \) receives a scaled weight \( \pi_{j} \), with \( \sum\nolimits_{j} \pi_{j} = 1 \). The estimator \( \hat{\beta }_{1} \) is called the model-averaged estimator. Beyond parameter estimation, model averaging also applies to other predictions of the SF model, such as the firm-specific frontier \( g(x_{i} ;\beta ) \) or the firm-specific technical efficiency \( {\text{TE}}_{i} \). The estimator-post-selection of \( g(x_{i} ;\beta ) \) is \( g(x_{i} ;\hat{\beta }^{j} ) \), while the model-averaged estimator is \( \hat{g}(x_{i} ;\beta ) = \sum\nolimits_{j = 1}^{J} {\pi_{j} g(x_{i} ;\hat{\beta }^{j} )} \). Similarly, the estimator-post-selection of \( {\text{TE}}_{i} \) is \( \widehat{\text{TE}}_{i}^{j} \), while the model-averaged estimator is \( \widehat{\text{TE}}_{i} = \sum\nolimits_{j = 1}^{J} {\pi_{j} \widehat{\text{TE}}_{i}^{j} } \).

In general, let \( \phi_{i} = \phi (x_{i} ,z_{i} ;\theta ) \) be a parameter of interest in an SF model. Here “parameter” is defined broadly. It may be a constant coefficient β or a firm-specific frontier \( g(x_{i} ;\beta ) \). It may also be a firm-specific TE \( {\text{E}}(e^{{ - u_{i} }} |\varepsilon_{i} ) \), which is a function of the exogenous variables \( z_{i} \) that affect the inefficiency component \( u_{i} \). Following Buckland et al. (1997), the model-averaged estimator of \( \phi_{i} \) is
$$ \hat{\phi }_{i} = \sum\limits_{j = 1}^{J} {\pi_{j} \hat{\phi }_{i}^{j} } , \tag{7} $$

where \( \hat{\phi }_{i}^{j} \) is the estimator of \( \phi_{i} \) under model \( M_{j} \), with assigned weight \( \pi_{j} \) such that \( \sum\nolimits_{j} \pi_{j} = 1 \). The weight \( \pi_{j} \) is interpreted as the weight of evidence in favor of model \( M_{j} \).

We emphasize that the proposed model-averaged estimator can only be constructed when the parameter of interest \( \phi_{i} \) is comparable across all J competing models. Suppose the J competing models are nested and \( \phi_{i} \) is absent from model j, for example because the variable associated with the coefficient \( \phi_{i}^{j} \) is excluded from that model. Comparability then implies the restriction \( \phi_{i}^{j} = 0 \), so we set \( \hat{\phi }_{i}^{j} = 0 \) in (7) when computing the averaged estimator (Footnote 4). In general, the J competing models may be non-nested in the coefficient vectors \( \theta^{j} \). Alternatively, the parameter of interest may be a function of a model’s coefficients, say, \( \phi_{i}^{j} = \phi (x_{i} ,z_{i} ;\theta^{j} ) \). In this case, the model-averaged estimator applies to the comparable parameters \( \phi_{i}^{j} \) but not to the non-comparable coefficient vectors \( \theta^{j} \). One example is the model-averaged estimator of the firm-specific TE (Footnote 5), \( \widehat{\text{TE}}_{i} = \sum\nolimits_{j = 1}^{J} {\pi_{j} {\text{E}}(e^{{ - u_{i} }} |\hat{\varepsilon }_{i}^{j} ,\hat{\theta }^{j} )} \).
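The zero-restriction rule for nested models can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes each model's estimates are stored in a dictionary keyed by hypothetical coefficient names, so that a coefficient excluded from a nested model enters (7) as zero. The estimates and weights are made-up numbers.

```python
def model_average(estimates, weights, name):
    """Model-averaged estimate of one coefficient, following (7).

    estimates: one dict per model, mapping coefficient name -> ML estimate
    weights:   model weights pi_j, assumed to sum to one
    A coefficient missing from a nested model is treated as restricted to 0.
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("model weights must sum to one")
    return sum(w * est.get(name, 0.0) for est, w in zip(estimates, weights))


# Hypothetical estimates: the squared-input term "x1_sq" is absent from the
# Cobb-Douglas model, so that model contributes 0 for it.
cobb_douglas = {"const": 1.0, "x1": 0.6, "x2": 0.3}
translog = {"const": 0.9, "x1": 0.5, "x2": 0.35, "x1_sq": 0.04}
pi = [0.25, 0.75]

print(model_average([cobb_douglas, translog], pi, "x1"))     # 0.25*0.6 + 0.75*0.5
print(model_average([cobb_douglas, translog], pi, "x1_sq"))  # 0.25*0.0 + 0.75*0.04
```

Only comparable parameters should be passed to such a function. Averaging raw coefficient vectors from non-nested models would not be meaningful.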

Some commonly used weights are based on the Kullback–Leibler (K–L) information criterion of model selection. The Akaike information criterion (Akaike 1973) for model \( M_{j} \) is defined as
$$ {\text{AIC}}_{j} = - 2\ln L_{n} (\hat{\theta }^{j} ) + 2k_{j} , \tag{8} $$

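where \( L_{n} (\hat{\theta }^{j} ) \) is the maximized likelihood of \( M_{j} \) and \( k_{j} \) is the number of parameters in \( M_{j} \). The corresponding AIC model-averaged weights are
$$ \pi_{j}^{\text{AIC}} = \frac{{\exp \left( { - \frac{1}{2}\Updelta_{j}^{\text{AIC}} } \right)}}{{\sum\nolimits_{k = 1}^{J} {\exp \left( { - \frac{1}{2}\Updelta_{k}^{\text{AIC}} } \right)} }}, \tag{9} $$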

where \( \Updelta_{j}^{\text{AIC}} = {\text{AIC}}_{j} - {\text{AIC}}_{\min } \) measures the AIC difference between model \( M_{j} \) and the best of the J competing models (Footnote 6). A smaller value of \( \Updelta_{j}^{\text{AIC}} \) corresponds to a better model, and the best competing model has \( \Updelta_{j}^{\text{AIC}} = 0 \). Therefore, \( \Updelta_{j}^{\text{AIC}} \) serves as a measure for ranking the competing models. Furthermore, \( \exp \left( { - \frac{1}{2}\Updelta_{j} } \right) \) is proportional to the likelihood of model \( M_{j} \) given the data. Akaike (1983) therefore called this term the relative likelihood of the model, and the Akaike weight \( \pi_{j}^{\text{AIC}} \) measures the relative importance of model \( M_{j} \) among all J competing models.
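The Akaike weights in (9) depend only on the AIC differences. The following is a minimal sketch using made-up maximized log-likelihoods and parameter counts for three hypothetical models. Subtracting the minimum criterion value before exponentiating fixes the best model's term at exp(0) = 1, which also avoids numerical underflow when the AIC values are large.

```python
import math


def aic(loglik, k):
    """AIC_j = -2 ln L_n(theta_hat_j) + 2 k_j, as in (8)."""
    return -2.0 * loglik + 2.0 * k


def akaike_weights(aic_values):
    """Weights (9): exp(-Delta_j / 2) / sum_k exp(-Delta_k / 2)."""
    aic_min = min(aic_values)
    rel_lik = [math.exp(-0.5 * (a - aic_min)) for a in aic_values]  # relative likelihoods
    total = sum(rel_lik)
    return [r / total for r in rel_lik]


# Hypothetical maximized log-likelihoods and parameter counts.
logliks = [-120.0, -118.5, -117.9]
ks = [5, 7, 9]
aics = [aic(ll, k) for ll, k in zip(logliks, ks)]
w = akaike_weights(aics)
print([round(x, 3) for x in w])
```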

Alternatively, the Bayesian information criterion (BIC) of Schwarz (1978) for model \( M_{j} \) is defined as
$$ {\text{BIC}}_{j} = - 2\ln L_{n} (\hat{\theta }^{j} ) + k_{j} \ln n. \tag{10} $$

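Let \( \Updelta_{j}^{\text{BIC}} = {\text{BIC}}_{j} - {\text{BIC}}_{\min } \). The corresponding BIC model-averaged weights are
$$ \pi_{j}^{\text{BIC}} = \frac{{\exp \left( { - \frac{1}{2}\Updelta_{j}^{\text{BIC}} } \right)}}{{\sum\nolimits_{k = 1}^{J} {\exp \left( { - \frac{1}{2}\Updelta_{k}^{\text{BIC}} } \right)} }}. \tag{11} $$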

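The third set of model-averaged weights is defined in terms of the Takeuchi information criterion (TIC) of Takeuchi (1976),
$$ {\text{TIC}}_{j} = - 2\ln L_{n} (\hat{\theta }^{j} ) + 2\,tr\left[ {H(\hat{\theta }^{j} )I(\hat{\theta }^{j} )^{ - 1} } \right], \tag{12} $$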

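where \( I(\hat{\theta }^{j} ) \) is the Fisher information matrix and \( H(\hat{\theta }^{j} ) \) is the Hessian matrix, both evaluated at the ML estimate \( \hat{\theta }^{j} \). The TIC model-averaged weights are
$$ \pi_{j}^{\text{TIC}} = \frac{{\exp \left( { - \frac{1}{2}\Updelta_{j}^{\text{TIC}} } \right)}}{{\sum\nolimits_{k = 1}^{J} {\exp \left( { - \frac{1}{2}\Updelta_{k}^{\text{TIC}} } \right)} }}, \tag{13} $$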

and \( \Updelta_{j}^{\text{TIC}} = {\text{TIC}}_{j} - {\text{TIC}}_{\min } . \)
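A minimal NumPy sketch of the TIC in (12) follows. It assumes that \( H(\hat{\theta }^{j} ) \) and \( I(\hat{\theta }^{j} ) \) have already been computed at the ML estimate, with sign conventions under which the two matrices coincide when the information equality holds. How they are formed is not shown here. Because the trace is invariant under cyclic permutation, \( tr[HI^{ - 1} ] = tr[I^{ - 1} H] \), so the penalty can be computed with `numpy.linalg.solve` instead of an explicit inverse.

```python
import numpy as np


def tic(loglik, H, I):
    """TIC_j = -2 ln L_n(theta_hat_j) + 2 tr[H I^{-1}], as in (12).

    loglik: maximized log-likelihood ln L_n(theta_hat_j)
    H, I:   k x k matrices evaluated at theta_hat_j (assumed precomputed)
    """
    H = np.asarray(H, dtype=float)
    I = np.asarray(I, dtype=float)
    penalty = np.trace(np.linalg.solve(I, H))  # tr(I^{-1} H) = tr(H I^{-1})
    return -2.0 * loglik + 2.0 * penalty


def aic(loglik, k):
    return -2.0 * loglik + 2.0 * k


# Hypothetical 3-parameter model with log-likelihood -50.
I_mat = np.array([[2.0, 0.3, 0.0],
                  [0.3, 1.5, 0.2],
                  [0.0, 0.2, 1.0]])
# If H equals I (information equality), the penalty equals k and TIC = AIC.
print(tic(-50.0, I_mat, I_mat), aic(-50.0, 3))
# If H differs from I (here H = 1.5 I), the penalty departs from k.
print(tic(-50.0, 1.5 * I_mat, I_mat))
```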

How AIC, BIC, and TIC compare depends on several factors: the nature of the DGP, whether the competing models contain the truth, the sample size, and the researcher’s objective (selecting the true model or the K–L best approximating model). AIC, TIC, and BIC all take the form of a penalized log-likelihood with different penalty functions. A comparison of the penalty terms in (8), (10), and (12) suggests that AIC and TIC may perform poorly in model selection if the competing models have too many parameters relative to the sample size. The BIC in (10) resembles the AIC in (8) but imposes a stronger penalty on more complex models: ln n per parameter instead of 2, which is larger whenever n ≥ 8. Furthermore, if the candidate models contain the truth, BIC selection is consistent, in the sense that it detects “the true model” with probability tending to 1 as the sample size increases. AIC and TIC are not consistent. On the other hand, AIC and TIC are efficient, in the sense that they asymptotically attain the minimum mean squared prediction error. Accordingly, when the true model exists and is one of the candidate models under consideration, BIC is suggested. However, if the true model is complicated or is not among the candidate models, one can only approximate the truth with a set of candidate models. In this case, one may estimate the K–L distance by AIC or TIC and determine which approximating model is best. Both AIC and BIC are more suitable for selection among nested models, whereas TIC is not restricted to the nested case. More specifically, AIC can be treated as an approximation to TIC, since \( tr[H(\hat{\theta }^{j} )I(\hat{\theta }^{j} )^{ - 1} ] = k_{j} \) if the “information equality” holds; see Takeuchi (1976) for further details. Consequently, the choice of criterion may depend on the empirical economist’s beliefs or objectives. 
Applications of AIC and TIC on selection and test of SF models are examined in Lai and Huang (2010).
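The following sketch illustrates the stronger BIC penalty discussed above. It compares AIC and BIC weights for two hypothetical models with 11 and 17 parameters, where the larger model fits better. The log-likelihoods and the sample size n are invented for illustration and are not the values behind Table 3.

```python
import math


def weights_from_criterion(values):
    """exp(-Delta_j / 2) / sum_k exp(-Delta_k / 2), with Delta_j = value_j - min."""
    best = min(values)
    rel = [math.exp(-0.5 * (v - best)) for v in values]
    total = sum(rel)
    return [r / total for r in rel]


def aic(loglik, k):
    return -2.0 * loglik + 2.0 * k          # penalty of 2 per parameter


def bic(loglik, k, n):
    return -2.0 * loglik + k * math.log(n)  # penalty of ln(n) per parameter


logliks = [-100.0, -93.0]   # hypothetical: the 17-parameter model fits better
ks = [11, 17]
n = 500                     # hypothetical sample size

w_aic = weights_from_criterion([aic(ll, k) for ll, k in zip(logliks, ks)])
w_bic = weights_from_criterion([bic(ll, k, n) for ll, k in zip(logliks, ks)])
print("AIC weights:", [round(w, 3) for w in w_aic])  # favors the larger model
print("BIC weights:", [round(w, 3) for w in w_bic])  # favors the smaller model
```

With the same fit, the choice of penalty alone flips which model receives most of the weight.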

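Once the model-averaged estimator defined in (7) is obtained, it remains to estimate its variance. Suppose that the weights \( \pi_{j} \) are given by \( \pi_{j}^{\text{AIC}} \), \( \pi_{j}^{\text{BIC}} \), or \( \pi_{j}^{\text{TIC}} \). Suppose further that the parameter estimators \( \hat{\phi }_{i}^{j} \) and \( \hat{\phi }_{i}^{k} \) under models \( M_{j} \) and \( M_{k} \) are correlated with correlation coefficient \( \rho_{jk} \). By decomposing the mean squared error of the model-averaged estimator \( \hat{\phi }_{i} \), the variance of \( \hat{\phi }_{i} \) can be shown to be (Footnote 7)
$$ \text{var} (\hat{\phi }_{i} ) = \sum\limits_{j = 1}^{J} {\sum\limits_{k = 1}^{J} {\pi_{j} \pi_{k} \rho_{jk} \sqrt {\text{var} \left( {\hat{\phi }_{i}^{j} |M_{j} } \right) + \left( {\phi_{i}^{j} - \phi_{i} } \right)^{2} } \sqrt {\text{var} \left( {\hat{\phi }_{i}^{k} |M_{k} } \right) + \left( {\phi_{i}^{k} - \phi_{i} } \right)^{2} } } } , \tag{14} $$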

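where \( \text{var} \left( {\hat{\phi }_{i}^{j} |M_{j} } \right) \) is the variance under \( M_{j} \) and \( \left( {\phi_{i}^{j} - \phi_{i} } \right) \) denotes the model misspecification bias. In practice, both \( \rho_{jk} \) and \( \text{var} \left( {\hat{\phi }_{i}^{j} |M_{j} } \right) \) are unknown and must be estimated. Let \( \widehat{\text{var}}\left( {\hat{\phi }_{i}^{j} |M_{j} } \right) \) and \( \hat{\rho }_{jk} \) denote the sample estimates of \( \text{var} \left( {\hat{\phi }_{i}^{j} |M_{j} } \right) \) and of the correlation coefficient between \( \hat{\phi }_{i}^{j} \) and \( \hat{\phi }_{i}^{k} \), respectively. The corresponding estimate of the variance of the averaged estimator is then
$$ \widehat{\text{var}}(\hat{\phi }_{i} ) = \sum\limits_{j = 1}^{J} {\sum\limits_{k = 1}^{J} {\pi_{j} \pi_{k} \hat{\rho }_{jk} \sqrt {\widehat{\text{var}}\left( {\hat{\phi }_{i}^{j} |M_{j} } \right) + \left( {\hat{\phi }_{i}^{j} - \hat{\phi }_{i} } \right)^{2} } \sqrt {\widehat{\text{var}}\left( {\hat{\phi }_{i}^{k} |M_{k} } \right) + \left( {\hat{\phi }_{i}^{k} - \hat{\phi }_{i} } \right)^{2} } } } . \tag{15} $$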

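Empirically, the estimates \( \hat{\phi }_{i}^{j} \) and \( \hat{\phi }_{i}^{k} \) under models \( M_{j} \) and \( M_{k} \) are likely to be highly correlated, because the same data are used in their estimation. Setting \( \hat{\rho }_{jk} = 1 \) gives an upper bound on \( \widehat{\text{var}}(\hat{\phi }_{i} ) \),
$$ \widehat{\text{var}}(\hat{\phi }_{i} ) \le \left[ {\sum\limits_{j = 1}^{J} {\pi_{j} \sqrt {\widehat{\text{var}}\left( {\hat{\phi }_{i}^{j} |M_{j} } \right) + \left( {\hat{\phi }_{i}^{j} - \hat{\phi }_{i} } \right)^{2} } } } \right]^{2} . \tag{16} $$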
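The following is a minimal sketch of the variance estimate (15) and its upper bound (16) for one parameter, using plain Python lists. The per-model estimates, conditional variances, weights, and correlation matrix are hypothetical inputs. In practice the correlations would come from a procedure such as the bootstrap described in Sect. 3.

```python
import math


def _root_terms(weights, estimates, variances):
    """sqrt(var_hat(phi_j | M_j) + (phi_j - phi_bar)^2) for each model j."""
    phi_bar = sum(w * e for w, e in zip(weights, estimates))  # averaged estimate (7)
    return [math.sqrt(v + (e - phi_bar) ** 2) for v, e in zip(variances, estimates)]


def averaged_variance(weights, estimates, variances, corr):
    """Estimated variance (15) given a J x J correlation matrix corr[j][k]."""
    s = _root_terms(weights, estimates, variances)
    J = len(weights)
    return sum(weights[j] * weights[k] * corr[j][k] * s[j] * s[k]
               for j in range(J) for k in range(J))


def averaged_variance_upper(weights, estimates, variances):
    """Upper bound (16): with all correlations set to 1, the double sum is a square."""
    s = _root_terms(weights, estimates, variances)
    return sum(w * sj for w, sj in zip(weights, s)) ** 2


# Hypothetical inputs for three models.
pi = [0.5, 0.3, 0.2]
est = [0.40, 0.45, 0.38]
var = [0.010, 0.012, 0.009]
rho = [[1.0, 0.8, 0.7],
       [0.8, 1.0, 0.9],
       [0.7, 0.9, 1.0]]

print(math.sqrt(averaged_variance(pi, est, var, rho)))   # standard error
print(math.sqrt(averaged_variance_upper(pi, est, var)))  # upper bound on it
```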

3 Stochastic frontier model-averaged estimator

Two sets of parameters are important in estimating the SF model in (1). One is the frontier \( g(x_{i} ;\beta ) \), which requires estimation of β. The other is the TE, \( {\text{TE}}_{i} = {\text{E}}(e^{{ - u_{i} }} |\varepsilon_{i} ) \), which requires estimation of \( \theta = \left( {\beta^{\text{T}} ,\theta_{v}^{\text{T}} ,\theta_{u}^{\text{T}} } \right)^{\text{T}} \). In this section, we propose model-averaged estimators for β and \( {\text{TE}}_{i} \) separately.

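Suppose \( \beta_{t} \in \beta \) is the tth parameter of the frontier function \( g(x_{i} ;\beta ) \). By (7), the model-averaged estimator is
$$ \hat{\beta }_{t} = \sum\limits_{j = 1}^{J} {\pi_{j} \hat{\beta }_{t}^{j} } , \tag{17} $$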

where \( \hat{\beta }_{t}^{j} \) is the estimator under model \( M_{j} \). However, computing the variance of \( \hat{\beta }_{t} \) requires an estimate of the correlation coefficient \( \hat{\rho }_{jk} (\beta_{t} ) \) between \( \hat{\beta }_{t}^{j} \) and \( \hat{\beta }_{t}^{k} \) under models \( M_{j} \) and \( M_{k} \).

Buckland et al. (1997) and Burnham and Anderson (1998) suggest two ways to simplify (15). One is to assume \( \hat{\rho }_{jk} (\beta_{t} ) = 1 \), which gives the upper bound in (16); the other is to set \( \hat{\rho }_{jk} (\beta_{t} ) = \rho \) for all t, j, and k. Estimating \( \hat{\rho }_{jk} (\beta_{t} ) \) directly is clearly infeasible in the ML approach, because each data set yields only one ML estimate \( \hat{\beta }_{t}^{j} \) per model. Burnham and Anderson (1998) suggest the bootstrap as an alternative way to obtain the distribution of the averaged estimator \( \hat{\beta }_{t} \) and its variance \( \widehat{\text{var}}(\hat{\beta }_{t} ) \). This approach requires estimating the J competing models on each bootstrap sample and then averaging the bootstrap estimates to obtain the averaged estimator and its distribution. A large number of bootstrap samples is needed to obtain a good approximation (Footnote 8), so the approach is computationally intensive.

To reduce computation time, we propose an alternative bootstrap approach to estimating the standard error of the averaged estimator. Computing \( \widehat{\text{var}}(\hat{\beta }_{t} ) \) in (15) requires only estimates of the correlation coefficients \( \hat{\rho }_{jk} (\beta_{t} ) \) between the competing models. We therefore propose bootstrapping only the correlation coefficients between the frontier coefficients. The main difference from the approach of Burnham and Anderson (1998) is that our procedure does not require model averaging, because we do not aim to obtain the distribution of the averaged estimator. The question of how many bootstrap samples are required for a good result is examined later by Monte Carlo simulation.

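The proposed bootstrap procedure is as follows. A bootstrap sample is generated by drawing independently, with replacement, from the original data, and the bootstrapped data are used to estimate the J competing models. Denote by \( \hat{\beta }_{t}^{j} (b) \) the estimate of \( \beta_{t}^{j} \) from the bth bootstrap sample. After B repetitions of this procedure, the sample correlation coefficient of \( \hat{\beta }_{t}^{j} \) and \( \hat{\beta }_{t}^{k} \) can be computed as
$$ \hat{\rho }_{jk} (\beta_{t} ) = \frac{{\sum\nolimits_{b = 1}^{B} {\left( {\hat{\beta }_{t}^{j} (b) - \bar{\hat{\beta }}_{t}^{j} } \right)\left( {\hat{\beta }_{t}^{k} (b) - \bar{\hat{\beta }}_{t}^{k} } \right)} }}{{\sqrt {\sum\nolimits_{b = 1}^{B} {\left( {\hat{\beta }_{t}^{j} (b) - \bar{\hat{\beta }}_{t}^{j} } \right)^{2} } \sum\nolimits_{b = 1}^{B} {\left( {\hat{\beta }_{t}^{k} (b) - \bar{\hat{\beta }}_{t}^{k} } \right)^{2} } } }}, \tag{18} $$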

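where \( \bar{\hat{\beta }}_{t}^{j} \) is the sample mean of \( \hat{\beta }_{t}^{j} (b) \), b = 1, … , B. Equations (15) and (18) together imply that the variance of the averaged estimator of \( \beta_{t} \) can be estimated by
$$ \widehat{\text{var}}(\hat{\beta }_{t} ) = \sum\limits_{j = 1}^{J} {\sum\limits_{k = 1}^{J} {\pi_{j} \pi_{k} \hat{\rho }_{jk} (\beta_{t} )\sqrt {\widehat{\text{var}}\left( {\hat{\beta }_{t}^{j} |M_{j} } \right) + \left( {\hat{\beta }_{t}^{j} - \hat{\beta }_{t} } \right)^{2} } \sqrt {\widehat{\text{var}}\left( {\hat{\beta }_{t}^{k} |M_{k} } \right) + \left( {\hat{\beta }_{t}^{k} - \hat{\beta }_{t} } \right)^{2} } } } . \tag{19} $$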

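Similarly, we may define the model-averaged TE estimator of the ith firm as the weighted average of the J competing estimators,
$$ \widehat{\text{TE}}_{i} = \sum\limits_{j = 1}^{J} {\pi_{j} \widehat{\text{TE}}_{i}^{j} } , \tag{20} $$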

where \( \widehat{\text{TE}}_{i}^{j} \), defined in (5), is the estimator of firm i’s TE under model \( M_{j} \). Following standard SF modeling, the symmetric error \( v_{i}^{j} \) is assumed to follow \( N(0,\sigma_{v}^{j2} ) \) and to be independent of the nonnegative error \( u_{i}^{j} \). The latter follows the truncated-normal distribution \( N^{ + } (\mu^{j} ,\sigma_{u}^{j2} ) \), i.e., a \( N(\mu^{j} ,\sigma_{u}^{j2} ) \) distribution truncated from below at zero. Given the ML estimators \( \hat{\theta }^{j} = \left( {\hat{\beta }^{j} ,\hat{\sigma }_{v}^{j2} ,\hat{\mu }^{j} ,\hat{\sigma }_{u}^{j2} } \right) \), we have
$$ \widehat{\text{TE}}_{i}^{j} = {\text{E}}\left( {e^{{ - u_{i} }} |\hat{\varepsilon }_{i}^{j} ,\hat{\theta }^{j} } \right) = \frac{{\Phi \left( {\hat{\mu }_{*i}^{j} /\hat{\sigma }_{*}^{j} - \hat{\sigma }_{*}^{j} } \right)}}{{\Phi \left( {\hat{\mu }_{*i}^{j} /\hat{\sigma }_{*}^{j} } \right)}}\exp \left( { - \hat{\mu }_{*i}^{j} + \frac{1}{2}\hat{\sigma }_{*}^{j2} } \right), \tag{21} $$

where \( \hat{\mu }_{*i}^{j} = \left( { - \hat{\sigma }_{u}^{j2} \hat{\varepsilon }_{i}^{j} + \hat{\mu }^{j} \hat{\sigma }_{v}^{j2} } \right)/\hat{\sigma }^{j2} \), \( \hat{\sigma }_{ * }^{j2} = \hat{\sigma }_{v}^{j2} \hat{\sigma }_{u}^{j2} /\hat{\sigma }^{j2} \), and \( \hat{\sigma }^{j2} = \hat{\sigma }_{v}^{j2} + \hat{\sigma }_{u}^{j2} \). In addition, \( \hat{\varepsilon }_{i}^{j} = y_{i} - x_{i}^{\text{T}} \hat{\beta }^{j} \) is the predicted value of the composite error \( \varepsilon_{i}^{j} \), and \( \Phi ( \cdot ) \) is the standard normal cumulative distribution function.
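The closed form in (21) and the average in (20) can be sketched in a few lines of Python. This is a minimal illustration that assumes scalar inputs per firm and per model. \( \Phi \) is computed with `math.erf`, and the parameter values are hypothetical. For very negative \( \hat{\mu }_{*i}^{j} /\hat{\sigma }_{*}^{j} \), the ratio of normal CDFs can become numerically unstable, which a production implementation would need to handle.

```python
import math


def std_normal_cdf(x):
    """Phi(x) via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def te_truncated_normal(eps, sigma_v2, sigma_u2, mu):
    """E(exp(-u) | eps) as in (21), for eps = v - u, v ~ N(0, sigma_v2), u ~ N+(mu, sigma_u2)."""
    sigma2 = sigma_v2 + sigma_u2
    mu_star = (-sigma_u2 * eps + mu * sigma_v2) / sigma2
    sigma_star = math.sqrt(sigma_v2 * sigma_u2 / sigma2)
    ratio = (std_normal_cdf(mu_star / sigma_star - sigma_star)
             / std_normal_cdf(mu_star / sigma_star))
    return ratio * math.exp(-mu_star + 0.5 * sigma_star ** 2)


def averaged_te(eps_by_model, params_by_model, weights):
    """Model-averaged TE (20) for one firm: sum_j pi_j * TE_hat_i^j.

    eps_by_model:    predicted composite error under each model
    params_by_model: (sigma_v2, sigma_u2, mu) under each model
    """
    return sum(w * te_truncated_normal(e, *p)
               for w, e, p in zip(weights, eps_by_model, params_by_model))


# Hypothetical firm under two models.
eps_hat = [-0.30, -0.25]
params = [(0.04, 0.09, 0.10), (0.05, 0.08, 0.05)]
print(averaged_te(eps_hat, params, weights=[0.6, 0.4]))
```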

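Following (15), the variance of the model-averaged TE estimator is
$$ \widehat{\text{var}}\left( {\widehat{\text{TE}}_{i} } \right) = \sum\limits_{j = 1}^{J} {\sum\limits_{k = 1}^{J} {\pi_{j} \pi_{k} \hat{\rho }_{jk} \left( {{\text{TE}}_{i} } \right)\sqrt {\widehat{\text{var}}\left( {\widehat{\text{TE}}_{i}^{j} |M_{j} } \right) + \left( {\widehat{\text{TE}}_{i}^{j} - \widehat{\text{TE}}_{i} } \right)^{2} } \sqrt {\widehat{\text{var}}\left( {\widehat{\text{TE}}_{i}^{k} |M_{k} } \right) + \left( {\widehat{\text{TE}}_{i}^{k} - \widehat{\text{TE}}_{i} } \right)^{2} } } } . \tag{22} $$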

Estimating the variance of \( \widehat{\text{TE}}_{i} \) in (22) requires the conditional variances \( \widehat{\text{var}}\left( {\widehat{\text{TE}}_{i}^{j} |M_{j} } \right) \). It also requires the cross-model correlation coefficients \( \hat{\rho }_{jk} \left( {{\text{TE}}_{i} } \right) \) between \( \widehat{\text{TE}}_{i}^{j} \) and \( \widehat{\text{TE}}_{i}^{k} \). Since the TE is highly nonlinear in \( \hat{\theta }^{j} = \left( {\hat{\beta }^{j} ,\hat{\sigma }_{v}^{j2} ,\hat{\mu }^{j} ,\hat{\sigma }_{u}^{j2} } \right) \), computing the conditional variance \( \widehat{\text{var}}\left( {\widehat{\text{TE}}_{i}^{j} |M_{j} } \right) \) may require numerical approximation. Moreover, there is no basis for estimating the cross-model correlation coefficient \( \hat{\rho }_{jk} \left( {{\text{TE}}_{i} } \right) \) other than the bootstrap. Because computing the firm-specific variances \( \widehat{\text{var}}\left( {\widehat{\text{TE}}_{i} } \right) \) is computationally intensive, we report only the sample estimates of the model-averaged TE, without their variances (Footnote 9).

4 An empirical illustration

In this section, the proposed model-averaged estimation is applied to an SF production function using Indian farm data. The same data have been used by Battese and Coelli (1992, 1995), Wang (2002), Alvarez et al. (2006), and Lai and Huang (2010). The farm’s output variable, Y, is the total value of output. The other variables (Land, PILand, Labor, Bullock, Cost, Age, School, and Year) are either input variables or exogenous variables that might affect the TE. They are defined as follows:

- Land is the total area of irrigated and unirrigated land under operation.
- PILand is the proportion of operated land that is irrigated.
- Labor is the total hours of family and hired labor.
- Bullock is the hours of bullock labor.
- Cost is the value of other inputs, including fertilizer, manure, pesticides, machinery, etc.
- Age is the age of the primary decision-maker in the farming operation.
- School is the years of formal schooling of the primary decision-maker.
- Year is the year of the observation.

The empirical analysis below was programmed in Stata 10.0.

Studies of SF models with truncated-normal inefficiency impose various assumptions on the distribution of the one-sided error. Lai and Huang (2010) consider the following eight specifications of \( u_{i} \):

- \( M_{1} \): The generalized exponential mean (GEM) model (Alvarez et al. 2006):
  $$ u_{i} \sim N^{ + } (e^{{\delta_{0} + \delta^{\text{T}} z_{i} }} , \, e^{{2(\gamma_{0} + \gamma^{\text{T}} z_{i} )}} ). $$
- \( M_{2} \): The Stevenson model (Stevenson 1980):
  $$ u_{i} \sim N^{ + } \left( {\mu ,\sigma_{u}^{2} } \right). $$
- \( M_{3} \): The scaled Stevenson model (Wang and Schmidt 2002):
  $$ u_{i} = e^{{z_{i}^{\text{T}} \delta }} \cdot \omega_{i} \quad {\text{with}}\quad \omega_{i} \mathop \sim \limits^{i.i.d.} N^{ + } \left( {\mu ,\sigma_{u}^{2} } \right). $$
- \( M_{4} \): The KGMHLBC model (Kumbhakar et al. 1991; Huang and Liu 1994; Battese and Coelli 1995):
  $$ u_{i} \sim N^{ + } \left( {\mu e^{{\delta^{\text{T}} z_{i} }} ,\sigma_{u}^{2} } \right). $$
- \( M_{5} \): The RSCFG-μ model:
  $$ u_{i} \sim N^{ + } \left( {\delta_{0} ,\sigma_{u}^{2} e^{{2\gamma^{\text{T}} z_{i} }} } \right). $$
- \( M_{6} \): The RSCFG model (Reifschneider and Stevenson 1991; Caudill and Ford 1993; Caudill et al. 1995):
  $$ u_{i} \sim N^{ + } \left( {0,e^{{2(\gamma_{0} + \gamma^{\text{T}} z_{i} )}} } \right). $$
- \( M_{7} \): The HL model (Huang and Liu 1994):
  $$ u_{i} \sim N^{ + } \left( {\delta_{0} + \delta^{\text{T}} z_{i} ,e^{{2\gamma_{0} }} } \right). $$
- \( M_{8} \): The generalized linear mean (GLM) model (Lai and Huang 2010):
  $$ u_{i} \sim N^{ + } (\delta_{0} + \delta^{\text{T}} z_{i} , \, e^{{2(\gamma_{0} + \gamma^{\text{T}} z_{i} )}} ). $$
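The following sketch makes these parameterizations concrete by drawing inefficiency terms under the GLM specification \( M_{8} \), in which both the pre-truncation mean and the scale depend on z. It is an illustration only. The parameter values and the single scalar z variable are hypothetical. The simple rejection sampler is adequate only when the pre-truncation mean is not far below zero.

```python
import math
import random


def draw_truncated_normal(mean, sd, rng):
    """Draw from N+(mean, sd^2): a normal truncated from below at zero (rejection)."""
    while True:
        u = rng.gauss(mean, sd)
        if u >= 0.0:
            return u


def glm_params(z, delta0, delta, gamma0, gamma):
    """M_8 (GLM): mean delta0 + delta*z, variance exp(2*(gamma0 + gamma*z)).

    Returns the (mean, standard deviation) of the pre-truncation normal for scalar z.
    """
    return delta0 + delta * z, math.exp(gamma0 + gamma * z)


rng = random.Random(42)
zs = [0.0, 1.0, 2.0]  # hypothetical exogenous values z_i
draws = [draw_truncated_normal(*glm_params(z, 0.1, 0.05, -1.5, 0.2), rng) for z in zs]
print(draws)
```

The other specifications differ only in how the mean and scale are linked to \( z_{i} \). The scaled Stevenson model \( M_{3} \) is the exception: it multiplies a common \( N^{ + } \) draw by \( e^{{z_{i}^{\text{T}} \delta }} \).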

The specification of the frontier function \( g^{j} (x_{i} ;\beta^{j} ) \) is identical across all eight models. Only the distribution of \( u_{i}^{j} \) differs, as indicated for \( M_{j} \), j = 1, … , 8.

The input variables x in our empirical model are ln(Land), PILand, ln(Labor), ln(Bullock), ln(Cost), Year, ln(Labor) × Year, and School. The exogenous variables z in the inefficiency term are Age, School, and Year. Lai and Huang (2010) selected this set of variables using the AIC and TIC model selection criteria and Vuong’s LR test. We therefore concentrate only on SF models with different distributional assumptions on the one-sided error u, while keeping the input variables fixed. The estimated parameters of models \( M_{1} \)–\( M_{8} \) are summarized in Table 1. The bottom of Table 1 also reports the descriptive statistics of the technical efficiencies. It also gives the sample averages of the marginal effects of the inefficiency determinants on the TE, \( \partial {\text{TE}}_{i}^{j} /\partial z_{i} \). Since \( {\text{TE}}_{i}^{j} \) is a complicated function of the parameters, a closed form for \( \partial {\text{TE}}_{i}^{j} /\partial z_{i} \) is almost intractable. We therefore compute these derivatives numerically.
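The numerical derivative mentioned above can be sketched with a central difference. In this illustration, TE is evaluated while holding the predicted composite error fixed, for an HL-type specification (\( M_{7} \)) with a single z variable. The parameter values, the firm data, and the step size h are hypothetical choices, not the settings used for Table 1.

```python
import math


def central_difference(f, z, h=1e-5):
    """Numerical derivative f'(z) ~ (f(z + h) - f(z - h)) / (2h)."""
    return (f(z + h) - f(z - h)) / (2.0 * h)


def std_normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def te_hl(z, eps, delta0, delta1, gamma0, sigma_v2):
    """TE = E(exp(-u) | eps) when u ~ N+(delta0 + delta1*z, exp(2*gamma0)), v ~ N(0, sigma_v2)."""
    mu = delta0 + delta1 * z
    sigma_u2 = math.exp(2.0 * gamma0)
    sigma2 = sigma_v2 + sigma_u2
    mu_star = (-sigma_u2 * eps + mu * sigma_v2) / sigma2
    sigma_star = math.sqrt(sigma_v2 * sigma_u2 / sigma2)
    ratio = (std_normal_cdf(mu_star / sigma_star - sigma_star)
             / std_normal_cdf(mu_star / sigma_star))
    return ratio * math.exp(-mu_star + 0.5 * sigma_star ** 2)


params = dict(delta0=0.2, delta1=0.05, gamma0=-1.0, sigma_v2=0.04)
firms = [(-0.2, 1.0), (0.1, 2.0), (0.0, 3.0)]  # hypothetical (eps_hat_i, z_i) pairs

effects = [central_difference(lambda z, e=eps: te_hl(z, e, **params), z)
           for eps, z in firms]
print(sum(effects) / len(effects))  # sample average of dTE_i/dz_i
```

Here the positive delta1 raises the mean of u, so every firm-level effect is negative.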

The correlation coefficients of the estimated technical efficiencies under models \( M_{1} \)–\( M_{8} \) are summarized in Table 2. All values are close to one, indicating that the predicted technical efficiencies vary little across the eight models.

Three different weights, based on AIC, BIC, and TIC, are considered in the model-averaged estimators. Table 3 shows the values of \( {\text{AIC}}_{j} \), \( {\text{BIC}}_{j} \), and \( {\text{TIC}}_{j} \) under models \( M_{1} \)–\( M_{8} \) and their respective weights, \( \pi_{j}^{\text{AIC}} \), \( \pi_{j}^{\text{BIC}} \), and \( \pi_{j}^{\text{TIC}} \). Both AIC and TIC select \( M_{8} \) as the best model, while BIC selects \( M_{2} \). If \( M_{8} \) is dropped, AIC chooses \( M_{6} \) as the best model, while TIC chooses \( M_{1} \). Different model selection criteria may thus suggest different models and rank the competing models in different orders. In other words, different criteria may select different best empirical models for inference and thus lead to inconsistent conclusions. Even though AIC and TIC choose the same best model, they evaluate the relative importance of the models differently. For instance, the rankings of the top four models, from highest to lowest relative importance, are:

- AIC ranking: (\( M_{8} \), \( M_{6} \), \( M_{5} \), \( M_{1} \));
- BIC ranking: (\( M_{2} \), \( M_{6} \), \( M_{5} \), \( M_{3} \));
- TIC ranking: (\( M_{8} \), \( M_{1} \), \( M_{5} \), \( M_{3} \)).

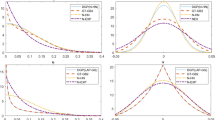

Table 4 gives the relative frequencies with which AIC, BIC, and TIC select each model across bootstrap replications. Each bootstrap sample is obtained by sampling from the original data with replacement until it has the same size as the original sample. The number of replications, denoted by B, is 150, 300, 500, or 1,000. Several findings emerge. First, the order of the models is quite stable across values of B for all three criteria. Second, the rankings of the top four models are:

- AIC ranking: (\( M_{8} \), \( M_{1} \), \( M_{6} \), \( M_{4} \));
- BIC ranking: (\( M_{2} \), \( M_{6} \), \( M_{4} \), \( M_{5} \));
- TIC ranking: (\( M_{8} \), \( M_{1} \), \( M_{5} \), \( M_{3} \)).

Among the three criteria, the order of the eight models obtained by TIC in Table 4 is virtually identical to the order of the TIC weights in Table 3. However, the orders of the models based on AIC and BIC differ between Tables 3 and 4. For example, the top two models by AIC weight are \( M_{8} \) and \( M_{6} \), whereas the top two in the bootstrapped data are \( M_{8} \) and \( M_{1} \). TIC therefore appears to be relatively stable compared with the other two criteria. Third, BIC tends to select models with fewer parameters, such as \( M_{2} \) with 11 parameters. It ranks lower the models with more parameters, such as \( M_{1} \) and \( M_{8} \), which have 17 parameters each.
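Relative selection frequencies like those in Table 4 can be tabulated as follows, once the criterion values for each bootstrap replication are available. This sketch assumes the values are stored as one list of J criterion values per replication. The numbers in the usage example are invented.

```python
from collections import Counter


def selection_frequencies(ic_by_replication, model_names):
    """Share of bootstrap replications in which each model has the smallest criterion.

    ic_by_replication: B lists, each holding the J criterion values (AIC, BIC, or TIC)
                       computed on one bootstrap sample; ties go to the first model.
    """
    wins = Counter()
    for ic_values in ic_by_replication:
        best = min(range(len(ic_values)), key=ic_values.__getitem__)
        wins[model_names[best]] += 1
    B = len(ic_by_replication)
    return {name: wins[name] / B for name in model_names}


def ranking(freqs):
    """Model names ordered from most to least frequently selected."""
    return sorted(freqs, key=freqs.get, reverse=True)


# Invented criterion values for B = 4 replications of three models.
ic = [[10.0, 12.0, 11.0],
      [9.0, 8.0, 12.0],
      [7.0, 9.0, 10.0],
      [11.0, 12.0, 10.0]]
freqs = selection_frequencies(ic, ["M1", "M2", "M3"])
print(freqs, ranking(freqs))
```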

The model-averaged estimates of the coefficients in the frontier equation from models M1–M8, and the descriptive statistics of the model-averaged technical efficiencies computed via (17) and (20), are given in Table 5, where the standard errors of the model-averaged estimates in the frontier function are estimated on the basis of 1,000 bootstrapped data sets. Upper bounds on the standard errors of these averaged estimates are also obtained from (16) by setting \( \hat{\rho}_{jk}(\beta_{t}) = 1 \) for all j, k, and t.
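
Equation (16) is not reproduced in this section, so the sketch below assumes it follows the Buckland et al. (1997) form \( \widehat{\text{var}}(\bar{\beta}_{t}) = \sum_{j}\sum_{k} \pi_{j}\pi_{k}\hat{\rho}_{jk}(\beta_{t}) \sqrt{[\widehat{\text{var}}(\hat{\beta}_{t}^{j}) + (\hat{\beta}_{t}^{j} - \bar{\beta}_{t})^{2}][\widehat{\text{var}}(\hat{\beta}_{t}^{k}) + (\hat{\beta}_{t}^{k} - \bar{\beta}_{t})^{2}]} \), with \( \bar{\beta}_{t} = \sum_{j} \pi_{j}\hat{\beta}_{t}^{j} \). Setting every \( \hat{\rho}_{jk} \) to 1 collapses the double sum to a perfect square, which gives the upper bound. The estimates, variances, weights, and correlations are hypothetical numbers for a single coefficient.

```python
import math


def model_averaged_estimate(estimates, weights):
    """Model-averaged estimate: sum_j pi_j * beta_hat_j."""
    return sum(w * b for w, b in zip(weights, estimates))


def model_averaged_se(estimates, variances, weights, rho=None):
    """Buckland-type standard error of the model-averaged estimate (assumed form).

    var = sum_j sum_k pi_j pi_k rho_jk sqrt((v_j + d_j^2) (v_k + d_k^2)),
    with d_j = beta_hat_j - beta_bar. Passing rho=None sets every rho_jk = 1,
    which yields the upper bound (sum_j pi_j sqrt(v_j + d_j^2))^2.
    """
    beta_bar = model_averaged_estimate(estimates, weights)
    # Within-model variance plus squared deviation from the averaged estimate.
    terms = [math.sqrt(v + (b - beta_bar) ** 2) for b, v in zip(estimates, variances)]
    m = len(estimates)
    if rho is None:
        rho = [[1.0] * m for _ in range(m)]
    var = sum(weights[j] * weights[k] * rho[j][k] * terms[j] * terms[k]
              for j in range(m) for k in range(m))
    return math.sqrt(var)


# Hypothetical inputs for one frontier coefficient under three models.
beta_hat = [0.42, 0.38, 0.45]
var_hat = [0.05 ** 2, 0.06 ** 2, 0.04 ** 2]
pi = [0.5, 0.3, 0.2]
rho_hat = [[1.0, 0.8, 0.6],
           [0.8, 1.0, 0.7],
           [0.6, 0.7, 1.0]]

print(model_averaged_estimate(beta_hat, pi))              # averaged coefficient
print(model_averaged_se(beta_hat, var_hat, pi, rho_hat))  # with estimated correlations
print(model_averaged_se(beta_hat, var_hat, pi))           # upper bound (all rho = 1)
```

Since every term under the square root is non-negative and each correlation is at most 1, the all-ones choice can only increase the variance, which is why it serves as a conservative bound when reliable correlation estimates are unavailable.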

If the number of competing models is large, the bootstrap procedure can be computationally demanding. To examine whether the number of bootstrapped data sets materially changes the estimated standard errors, we calculated the standard errors with B = 150, 300, 500, and 1,000 bootstrapped data sets. The results are summarized in Table 6. With B = 300 the results are already very close to those obtained with B = 1,000. Because the bootstrapped data are used not to approximate a sampling distribution but only to compute the correlation coefficients between the coefficient estimates, computing (18) does not require a large number of bootstrap replications.
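
The bootstrap correlation can be sketched by re-estimating two competing models on each bootstrapped data set and correlating the resulting estimates of a shared coefficient. The exact form of (18) is not shown here, so this is an assumption-laden illustration: the two models are hypothetical linear regressions (with and without an intercept) sharing a slope, not SF models, and all names are illustrative. Printing the estimate for several values of B gives a rough sense of how quickly it settles, in the spirit of Table 6.

```python
import math
import random


def slope_no_intercept(pairs):
    # Hypothetical model M_a: y = b * x + e (OLS through the origin).
    return sum(x * y for x, y in pairs) / sum(x * x for x, _ in pairs)


def slope_with_intercept(pairs):
    # Hypothetical model M_b: y = a + b * x + e (ordinary OLS slope).
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    return sxy / sxx


def pearson(a, b):
    """Sample Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    sa = math.sqrt(sum((u - ma) ** 2 for u in a))
    sb = math.sqrt(sum((v - mb) ** 2 for v in b))
    return cov / (sa * sb)


def bootstrap_rho(pairs, estimator_j, estimator_k, B, seed=0):
    """Correlation between two models' estimates of the same coefficient
    across B bootstrapped data sets (observations resampled with replacement)."""
    rng = random.Random(seed)
    n = len(pairs)
    est_j, est_k = [], []
    for _ in range(B):
        sample = [pairs[rng.randrange(n)] for _ in range(n)]
        est_j.append(estimator_j(sample))
        est_k.append(estimator_k(sample))
    return pearson(est_j, est_k)


data_rng = random.Random(1)
xs = [data_rng.uniform(0.0, 10.0) for _ in range(80)]
pairs = [(x, 1.0 + 0.5 * x + data_rng.gauss(0.0, 1.0)) for x in xs]
for B in (150, 300, 500, 1000):
    print(B, round(bootstrap_rho(pairs, slope_no_intercept, slope_with_intercept, B), 3))
```

Only a single summary statistic per pair of models is extracted from the replications, which is consistent with the observation that a few hundred replications suffice here.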

5 Conclusion

In this paper, we suggest using the model-averaged estimator based on multimodel inference to estimate the SF model. The potential advantages of the proposed approach are twofold: (1) it incorporates model selection uncertainty into statistical inference; and (2) averaging the frontier coefficients and the predicted technical efficiencies across models can reduce model selection bias and the variance of the estimators.

We demonstrate our approach using Indian farm data. Three model selection criteria, AIC, BIC, and TIC, are considered in our empirical example. Our results show that, among these criteria, TIC is relatively stable in ranking the competing models. Moreover, the main advantage of TIC is that, unlike AIC and BIC, it does not require the true model to be among the competing models. BIC tends to favor models with fewer parameters. Finally, we suggest using bootstrapped data to estimate the correlation coefficients between the estimated coefficients of the competing models, and show that this estimation does not require a large number of bootstrap replications.
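
The difference between TIC and AIC lies in the penalty. TIC (Takeuchi 1976) replaces AIC's parameter count k with \( \operatorname{tr}(\hat{J}^{-1}\hat{K}) \), where \( \hat{J} \) is the average negative Hessian and \( \hat{K} \) the average outer product of the per-observation scores at the maximum likelihood estimate; under correct specification \( J = K \) and the penalty reduces to k. The sketch below uses a two-parameter Gaussian model rather than an SF likelihood, and the data-generating choices (normal versus Laplace draws) are illustrative assumptions. It shows the penalty staying near k = 2 for normal data and growing when heavy tails make the Gaussian model misspecified.

```python
import math
import random


def tic_gaussian(y):
    """Return (TIC, penalty) for the Gaussian model N(mu, s2) fitted by ML.

    TIC = -2 log L(theta_hat) + 2 tr(J^{-1} K), with J the average negative
    Hessian and K the average outer product of per-observation scores.
    """
    n = len(y)
    mu = sum(y) / n
    s2 = sum((v - mu) ** 2 for v in y) / n
    loglik = -0.5 * n * (math.log(2.0 * math.pi * s2) + 1.0)
    # Per-observation scores with respect to theta = (mu, s2).
    scores = [((v - mu) / s2, -0.5 / s2 + (v - mu) ** 2 / (2.0 * s2 ** 2)) for v in y]
    K = [[sum(s[a] * s[b] for s in scores) / n for b in range(2)] for a in range(2)]
    # Average negative Hessian entries (j12 is ~0 at the MLE).
    j11 = 1.0 / s2
    j12 = sum(v - mu for v in y) / (n * s2 ** 2)
    j22 = -0.5 / s2 ** 2 + sum((v - mu) ** 2 for v in y) / (n * s2 ** 3)
    det = j11 * j22 - j12 * j12
    j_inv = [[j22 / det, -j12 / det], [-j12 / det, j11 / det]]
    penalty = sum(j_inv[a][b] * K[b][a] for a in range(2) for b in range(2))
    return -2.0 * loglik + 2.0 * penalty, penalty


norm_rng = random.Random(7)
normal_data = [norm_rng.gauss(0.0, 1.0) for _ in range(20000)]
lap_rng = random.Random(8)
# Difference of two Exp(1) draws is Laplace(0, 1): heavier tails than the normal.
laplace_data = [lap_rng.expovariate(1.0) - lap_rng.expovariate(1.0) for _ in range(20000)]

print("normal penalty:", round(tic_gaussian(normal_data)[1], 3))
print("laplace penalty:", round(tic_gaussian(laplace_data)[1], 3))
```

Because the penalty is estimated from the data rather than fixed at k, TIC adjusts for misspecification instead of assuming it away, which is the property referred to above.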

Notes

Box (1976) is quick to point out that “All models are wrong, but some are useful.”

For example, among the relevant discussions on SF models, the distribution of the inefficiency component is specified either as \( N^{+}(\delta_{0} + \delta^{\text{T}} z_{i}, \, e^{2(\gamma_{0} + \gamma^{\text{T}} z_{i})}) \) or as \( N^{+}(e^{\delta_{0} + \delta^{\text{T}} z_{i}}, \, e^{2(\gamma_{0} + \gamma^{\text{T}} z_{i})}) \). The former specification is called the generalized linear mean (GLM) model and the latter the generalized exponential mean (GEM) model (Lai and Huang 2010). As detailed in Sect. 4, various settings of the distributional parameters generate most commonly specified SF models.

See Gallant (1982) for the specification of the Fourier flexible function.

This is exactly the case we confront when we estimate the marginal effect of the inefficiency determinants on the mean of the technical efficiency in our empirical example in Sect. 4. Model M2 does not specify any inefficiency determinants, and thus all of its marginal effects are set to zero. See Table 1.

In our empirical example, eight models are estimated, but model 1 (M1) and model 8 (M8) are not nested within each other.

The minimum value \( \text{AIC}_{\min} \) is subtracted purely for computational purposes, to avoid numerical problems with very large or very small arguments inside the exponential function.

See Buckland et al. (1997) for the derivation.

Burnham and Anderson (1998) recommend 10,000 bootstrap samples, with at least 1,000 required.

Alternatively, we could assume that the correlation between two models' predictions of efficiency does not vary across firms, i.e., \( \rho_{jk}(\text{TE}_{i}) = \rho_{jk}(\text{TE}_{t}) = \rho_{jk}(\text{TE}) \) for all firms \( i, t = 1, \ldots, n \). We then suggest estimating \( \rho_{jk}(\text{TE}) \) from the n firm-specific technical efficiency estimates: \( \hat{\rho}_{jk}(\text{TE}) = \frac{\sum_{i=1}^{n} (\widehat{\text{TE}}_{i}^{j} - \overline{\widehat{\text{TE}}^{j}})(\widehat{\text{TE}}_{i}^{k} - \overline{\widehat{\text{TE}}^{k}})}{\sqrt{\sum_{i=1}^{n} (\widehat{\text{TE}}_{i}^{j} - \overline{\widehat{\text{TE}}^{j}})^{2}} \sqrt{\sum_{i=1}^{n} (\widehat{\text{TE}}_{i}^{k} - \overline{\widehat{\text{TE}}^{k}})^{2}}} \), where \( \overline{\widehat{\text{TE}}^{j}} \) is the sample mean of \( \widehat{\text{TE}}_{i}^{j} \), \( i = 1, \ldots, n \).

References

Aigner DJ, Lovell CAK, Schmidt P (1977) Formulation and estimation of stochastic frontier production function models. J Econom 6:21–37

Akaike H (1973) Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Csaki F (eds) 2nd international symposium on information theory, Akadémiai Kiadó, Budapest, pp 267–281

Akaike H (1974) A new look at the statistical model identification. IEEE Trans Autom Control 19:716–723

Akaike H (1983) Information measures and model selection. Int Stat Inst 44:277–291

Alvarez A, Amsler C, Orea L, Schmidt P (2006) Interpreting and testing the scaling property in models where inefficiency depends on firm characteristics. J Prod Anal 25:201–212

Battese GE, Coelli TJ (1988) Prediction of firm-level technical efficiencies with a generalized frontier production function and panel data. J Econom 38:387–399

Battese GE, Coelli TJ (1992) Frontier production functions, technical efficiency and panel data: with applications to paddy farmers in India. J Prod Anal 3:153–169

Battese GE, Coelli TJ (1995) A model for technical inefficiency effects in a stochastic frontier production function for panel data. Empir Econ 20(2):325–332

Box GEP (1976) Science and statistics. J Am Stat Assoc 71:791–799

Buckland ST, Burnham KP, Augustin NH (1997) Model selection: an integral part of inference. Biometrics 53:603–618

Burnham KP, Anderson DR (1998) Model selection and multimodel inference. Springer, New York

Caudill SB, Ford JM (1993) Biases in frontier estimation due to heteroskedasticity. Econ Lett 41:17–20

Caudill SB, Ford JM, Gropper DM (1995) Frontier estimation and firm-specific inefficiency measures in the presence of heteroskedasticity. J Bus Econ Stat 13:105–111

Gallant AR (1982) Unbiased determination of production technologies. J Econom 20:285–323

Hansen BE (2005) Challenges for econometric model selection. Econom Theory 21:60–68

Huang C, Liu JT (1994) Estimation of a non-neutral stochastic frontier production function. J Prod Anal 5:171–180

Kumbhakar SC, Ghosh S, McGuckin JT (1991) A generalized production frontier approach for estimating determinants of inefficiency in US dairy farms. J Bus Econ Stat 9:279–286

Lai HP, Huang CJ (2010) Likelihood ratio test for model selection of stochastic frontier models. J Prod Anal 34:3–13

Lee H, Pötscher BM (2005) Model selection and inference: facts and fiction. Econom Theory 21:21–59

Liu Y, Myers R (2009) Model selection in stochastic frontier analysis with an application to maize production in Kenya. J Prod Anal 31:33–46

Pötscher BM (1991) The effect of model selection on inference. Econom Theory 7:163–185

Reifschneider D, Stevenson R (1991) Systematic departures from the frontier: a framework for the analysis of firm inefficiency. Int Econ Rev 32:715–723

Schmidt P, Lin TF (1984) Simple tests of alternative specifications in stochastic frontier models. J Econom 24(3):349–361

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Stevenson RE (1980) Likelihood functions for generalized stochastic frontier estimation. J Econom 13:57–66

Takeuchi K (1976) Distribution of information statistics and a criterion of model fitting. Suri-Kagaku (Math Sci) 153:12–18 (in Japanese)

Wang HJ (2002) Heteroscedasticity and non-monotonic efficiency effects of a stochastic frontier model. J Prod Anal 18:241–253

Wang HJ, Schmidt P (2002) One-step and two-step estimation of the effects of exogenous variables on technical efficiency levels. J Prod Anal 18:129–144

Acknowledgments

We thank Robin Sickles for his helpful comments. We also thank a referee for carefully reading the original version of this manuscript and for preparing helpful and constructive critiques which served to greatly improve the exposition and content. Huang gratefully acknowledges the Institute for Advanced Studies in Humanities and Social Sciences, National Taiwan University, for the research support. Lai gratefully acknowledges the National Science Council of Taiwan (NSC-99-2410-H-194-020) for the research support.

Cite this article

Huang, C.J., Lai, Hp. Estimation of stochastic frontier models based on multimodel inference. J Prod Anal 38, 273–284 (2012). https://doi.org/10.1007/s11123-011-0260-0
