Abstract

We present a new, single-parameter distributional specification for the one-sided error components in single-tier and two-tier stochastic frontier models. The distribution has its mode away from zero, and can represent cases where the most likely outcome is non-zero inefficiency. We present the necessary formulas for estimating production, cost and two-tier stochastic frontier models in logarithmic form. We pay particular attention to the use of the conditional mode as a predictor of individual inefficiency. We use simulations to assess the performance of existing models when the data include an inefficiency term with non-zero mode, and we also contrast the conditional mode to the conditional expectation as measures of individual (in)efficiency.

1 Introduction and motivation

In the founding papers of stochastic frontier analysis (SFA), Aigner et al. (1977) and Meeusen and van den Broeck (1977), the authors considered two distributions for the inefficiency term, the Half Normal and the Exponential, organically creating the Normal-Half Normal (NHN) and the Normal-Exponential (NE) specifications for the composite error term. The first would go on to have a spectacular future outside SFA as well, after Azzalini (1985) baptized it the Skew Normal distribution and presented it to the statistical community. The second already had a well-established past, since a variant of it under the name “Exponentially modified Gaussian” had been in use in chromatography since the 1960s (for a review see Grushka, 1972).

But no one is a prophet in their own land: Stevenson (1980) noted that in both specifications the inefficiency distribution had its mode at zero, imposing a specific economic/structural assumption: that the most likely occurrence was near-zero inefficiency. While such an assumption could be justified to a degree by invoking purposeful optimizing behavior as well as the forces of competition, Stevenson linked inefficiency to managerial competence and argued that the latter is not distributed monotonically in the population (of managers at least). Based on this argument, the author proposed the use of the Truncated Normal distribution for the inefficiency term as an alternative, as well as the Gamma distribution, restricting the values of its shape parameter so as to obtain a closed-form density for the composite error term (Footnote 1). To this day, these are the main specifications that allow for a non-zero mode of the inefficiency component (Footnote 2). But both these distributions have issues: the maximum likelihood estimator (MLE) often has difficulty converging under a Truncated Normal specification, while the Gamma specification in its general formulation has no closed-form density, which makes it less appealing for empirical implementations. Moreover, Ritter and Simar (1997) found that the shape parameter of the Gamma distribution is weakly identified and imprecisely estimated unless the sample size is very large. But this is the very parameter that allows us to have a non-zero mode.

In this paper we present production and cost stochastic frontier models as well as the two-tier extension (2TSF), where we specify the one-sided component(s) to follow the Generalized Exponential distribution, a single-parameter distribution that always has a non-zero mode. Assuming in addition that the noise term follows a zero-mean Normal distribution, we obtain the Normal-Generalized Exponential specification (NGE).

Since concerns about the mode of the inefficiency distribution motivated this research, it is fitting that we also pay particular attention to the conditional mode as a measure of individual inefficiency. These measures are sometimes called “JLMS” measures from the paper of Jondrow et al. (1982), where both the conditional expected value and the conditional mode were considered as predictors of individual inefficiency (Footnote 3). In fact that paper dealt with predicting the error component of the logarithmic specification. It was Battese and Coelli (1988) who presented the conditional expectation expressions for the exponentiated error, i.e. for a prediction at the original measurement scale. We extend the approach by examining the conditional modes for the exponentiated case. In practice, the conditional expectation, being an optimal predictor under the Mean-Squared Error criterion, appears to have prevailed as the inefficiency measure of choice. But by considering the mode of the inefficiency distribution we essentially propose a more elaborate investigation of the inefficiency terms, since by having available their (marginal and conditional) distributions, we can go beyond obtaining some predictor of their value and instead form a more complete picture of their stochastic behavior. In addition, the mode always exists and it may also be easier to derive.

In most cases the regression specification used in empirical SFA papers has the dependent variable in logarithmic form, and it is for this equation that distributional assumptions are made. This implies that inefficiency measures ultimately relate to the exponentiated variables. We will focus on this model and present JLMS measures only for the exponentiated inefficiency terms. This is also a way to contain to some degree the sprawling mass of mathematical expressions, since we will develop in detail three different models.

In Section 2 we present the distribution for the one-sided error components that we will use, and provide its main properties. In Section 3, we address certain concerns that are often raised when new specifications for SF models are proposed. In Sections 4 and 5 we present the production and cost SF models respectively, and in Section 6 the two-tier stochastic frontier model. Section 7 concludes with simulations that explore how the familiar NHN and NE specifications perform when the data come from an NGE process and vice-versa, but also, how the conditional expectation and the conditional mode fare as measures of individual inefficiency.

2 The Generalized Exponential distribution

We consider the distribution that has the following density:

Note that the density is two times an Exponential density times the Exponential distribution function with the same scale parameter. Let hE(u; θu) denote the Exponential density with scale parameter θu. Then we can write equivalently,

This additive form will prove convenient in calculating expressions that involve integration, exploiting the linearity of integrals and the already known results from the NE specification. The distribution function is

There are at least three ways to obtain the above distribution. First, as a general consequence of the Probability Integral Transform that states that for every continuous random variable X with support SX, distribution function FX(x) and density fX(x), we have that \({F}_{X}(X) \sim U\left(0,1\right)\). This then implies that

So the function \(2{f}_{X}\left(x\right){F}_{X}\left(x\right)\) is non-negative in the support of X and integrates to unity over it, therefore it is a density. We take this general result and apply it to the Exponential distribution.

Second, \(2{f}_{X}\left(x\right){F}_{X}\left(x\right)\) is the density of the maximum of two i.i.d. random variables with common distribution function \({F}_{X}\), since the distribution function of that maximum is \({\left[{F}_{X}\left(x\right)\right]}^{2}\). This representation provides a straightforward way of generating draws from the distribution for simulation purposes.
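As a minimal sketch of that simulation recipe (the function name `draw_ge2`, the seed and the parameter values are illustrative assumptions, not taken from the paper), each draw is the larger of two independent Exponential variates with the same scale; the empirical distribution function can then be compared with \({\left[{F}_{X}\left(x\right)\right]}^{2}\):

```python
import math
import random


def draw_ge2(theta, n, rng):
    """Draw n variates as the maximum of two i.i.d. Exponentials with scale theta."""
    rate = 1.0 / theta  # random.expovariate takes the rate, i.e. 1/scale
    return [max(rng.expovariate(rate), rng.expovariate(rate)) for _ in range(n)]


rng = random.Random(12345)   # fixed seed, illustrative only
theta = 0.5                  # hypothetical scale value
draws = draw_ge2(theta, 100_000, rng)

# Empirical distribution function at an arbitrary point, against [F_X(x)]^2
x0 = 0.4
empirical = sum(d <= x0 for d in draws) / len(draws)
theoretical = (1.0 - math.exp(-x0 / theta)) ** 2
print(round(empirical, 3), round(theoretical, 3))
```

The two printed numbers should agree up to simulation noise.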

Third, it can be seen as a special case of the “Generalized Exponential” distribution introduced by Gupta and Kundu (1999), with shape parameter equal to 2 (their α), scale parameter equal to θu (their λ) and location parameter equal to zero (their μ). We will write \(u \sim GE\left(2,{\theta }_{u},0\right)\), and use this name to identify our distribution.
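Written out, the three expressions described at the start of this section take the following form. This is a reconstruction from the verbal description (twice an Exponential density times the Exponential distribution function, both with scale \({\theta }_{u}\)), so the arrangement may differ from the authors' own display:

\[
\begin{aligned}
f_u(u) &= \frac{2}{\theta_u}\, e^{-u/\theta_u}\left(1 - e^{-u/\theta_u}\right), \qquad u \ge 0,\ \theta_u > 0, \\
f_u(u) &= 2\,h_E(u;\theta_u) - h_E(u;\theta_u/2), \qquad h_E(u;\theta) = \frac{1}{\theta}\,e^{-u/\theta}, \\
F_u(u) &= \left(1 - e^{-u/\theta_u}\right)^{2}.
\end{aligned}
\]

Expanding the product in the first line gives the second, and the third is the square of the Exponential distribution function, in line with the maximum-of-two interpretation.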

2.1 Moments and other properties

Yet another representation of this distribution, based on results from Gupta and Kundu (1999) and for the specific values of the parameters, is the following: let ei, i = 1, 2 be two i.i.d. Exponentials with scale parameter equal to 1. Then

This makes the derivation of the basic moments of u very simple by using cumulants κ, for which we have \({\kappa }_{r}(cz)={c}^{r}{\kappa }_{r}(z)\), and under independence, \({\kappa }_{r}({e}_{1}+{e}_{2})={\kappa }_{r}({e}_{1})+{\kappa }_{r}({e}_{2})\). It follows that

Then

We see that the distribution has lower skewness and excess kurtosis than the Exponential distribution (for which they are 2 and 6 respectively), but exceeds the Half Normal in both (0.995 and 0.869 respectively). Paired with a Normal error component, these values represent the maximum skewness and kurtosis the composed SF error can accommodate under an NGE specification. Papadopoulos and Parmeter (2021) presented the empirical skewness and kurtosis of the OLS residuals for eight representative SF empirical studies, and in none of them did they exceed the values of the GE distribution. This is an indication that, although lower than the corresponding values for the Exponential distribution, they do not restrict in practice the applicability of the GE distribution.
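For concreteness, the moments can be worked out as follows. This is a reconstruction that uses one form of the representation consistent with the maximum-of-two construction, together with \({\kappa }_{r}({e}_{i})=(r-1)!\) for a unit-scale Exponential; the authors' displays may be arranged differently:

\[
\begin{aligned}
u &\overset{d}{=} \theta_u\left(e_1 + \tfrac{1}{2}e_2\right), \qquad
\kappa_r(u) = \theta_u^{\,r}\,(r-1)!\left(1 + 2^{-r}\right), \\
E(u) &= \tfrac{3}{2}\theta_u, \quad
\mathrm{Var}(u) = \tfrac{5}{4}\theta_u^{2}, \quad
\kappa_3(u) = \tfrac{9}{4}\theta_u^{3}, \quad
\kappa_4(u) = \tfrac{51}{8}\theta_u^{4}, \\
\text{skewness} &= \frac{\kappa_3}{\kappa_2^{3/2}} = \frac{18}{5\sqrt{5}} \approx 1.61, \qquad
\text{excess kurtosis} = \frac{\kappa_4}{\kappa_2^{2}} = \frac{102}{25} = 4.08 .
\end{aligned}
\]

Both shape measures are free of \({\theta }_{u}\) and lie between the Half Normal and the Exponential values quoted above.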

Further, it is a simple exercise to find the argmax of the density,

The mode of this distribution is equal to the median of an Exponential distribution with the same scale parameter, and it locates the point where 0.25 of probability mass lies to the left of it. In other words, it is equal to the 1st quartile. We also see that the mode cannot be zero, and in that sense the labeling of the distribution as “Generalized Exponential” could be considered as a misnomer, since it does not nest the Exponential.

The quantile function is

from which we can obtain the median and other quantiles.
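The mode and the quantile function follow directly from the density and distribution function of the GE(2, θu, 0) law; the expressions below are a reconstruction under that density, not a copy of the authors' displays:

\[
\begin{aligned}
\frac{d}{du}\left(e^{-u/\theta_u} - e^{-2u/\theta_u}\right) = 0
\;&\Longrightarrow\; e^{-u/\theta_u} = \tfrac{1}{2}
\;\Longrightarrow\; u_{*} = \theta_u \ln 2, \qquad F_u(u_{*}) = \left(1-\tfrac{1}{2}\right)^{2} = \tfrac{1}{4}, \\
\left(1 - e^{-u/\theta_u}\right)^{2} = p
\;&\Longrightarrow\; Q_u(p) = -\theta_u \ln\left(1 - \sqrt{p}\right), \qquad p \in (0,1).
\end{aligned}
\]

For example, the median is \(Q_u(0.5) = -\theta_u \ln (1 - 1/\sqrt{2}) \approx 1.228\,\theta_u\).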

Finally, by using the additive expression eq. (2) for the density, it is easy to determine that it is log-concave (and therefore so is its distribution function). Since we will combine this distribution with a Normal random-noise component, the resulting composite error density will also be log-concave, since the Normal density is log-concave, and convolutions of log-concave functions retain the property.

3 Why use yet another distributional specification?

Now that we have familiarized ourselves with the aspiring newcomer, it is time to confront certain issues that are routinely raised in relation to distributional specifications like the one we propose here: a fully parametric specification with a log-concave density.

The first issue is the risk that, in case of distributional mis-specification, inference would be unreliable. But this is an argument in favor of abandoning parametric inference altogether. For those scholars that find a net benefit in using it though, increasing the number of available specifications mitigates this problem since it increases the diversity of available models and so our ability to get within tolerable distance from the true data generating process (DGP).

Still under the spectre of distributional misspecification, we can avoid this particular risk when “determinants of inefficiency” are available. Then we can model the distribution parameters of the inefficiency components as functions of observed data (as long as a valid economic argument supports it). Moreover, one can exploit the “scaling property” (see Wang and Schmidt, 2002, for the single-tier and Parmeter, 2018, for the two-tier SF model respectively), that always holds for single-parameter distributions, and, instead of using maximum likelihood estimation, implement non-linear least squares (NLLS) that does not require distributional assumptions. An issue in this approach is the finite-sample reliability of the NLLS estimates: in his simulations, Parmeter (2018) has found a persistent upward bias in the estimates of the coefficients for the determinants of inefficiency for small and medium samples, which would lead to an under-estimation of technical efficiency, if we were to calculate the corresponding exponentiated measure: firms appear less efficient than they actually are (Footnote 4).

A third issue relates to all SF models that assume a log-concave density for the random noise component: in standard notation, in an SF production model we would have a composite error term ε = v − u, E(v) = 0, u ≥ 0. Ondrich and Ruggiero (2001) have proven that, if the random noise component has a log-concave density, then the expected value of the inefficiency term conditional on the composite error, E(u∣ε), is a monotonic function of the conditioning variable (Footnote 5). This has the implication that as regards ranking of the observations in the sample with respect to inefficiency, we might as well use the estimation residuals instead of E(u∣ε), and anticipate negligible differences (under correct specification). Moreover, the OLS residuals are more robust in ranking observations according to inefficiency, since they are free of possible misspecification from distributional assumptions.

We respond here by noting that ranking may be important and safer as an inferential result, but there is more to efficiency analysis than a beauty contest to congratulate the more efficient firms and frown at the less efficient ones. The actual quantitative measurement of inefficiency is what matters as regards the efficient use of scarce resources, and for this we need to go beyond the estimation residuals.

In any case, the result of Ondrich and Ruggiero (2001) relates to the conditional expectation. We will provide proof that it holds also for the conditional mode of the production NGE frontier, and we will obtain simulation evidence as regards differences in ranking.

From a technical point of view, the NGE specification responds to the Stevenson (1980) critique of the NHN and NE specifications by offering a more convenient and, let’s say a more “user-friendly” solution to the non-zero mode desideratum, compared to the specifications involving the Truncated Normal or the Gamma distributions: in single-tier SF models the unknown parameters of the NGE specification are only two and not three, while in the 2TSF NGE model they are only three and not five. Moreover, the fixed investment cost in time and intellectual energy that the NGE specification requires (as any new tool does) is modest.

A weakness of the GE distribution is that it does not nest the zero-mode case. This in theory is an undesirable inflexibility, but the existence of a non-zero mode for the one-sided component is mostly an issue for economic debate rather than a statistical matter. We may not “let the data decide”, but knowledge of the particular industry/market under study in each case should allow for a convincing argument and a safe choice on this matter.

From a statistical point of view, the NGE specification, in addition to having a non-zero mode, is characterized by higher values of skewness and excess kurtosis in the composed error term compared to the NHN specification, and so it can accommodate a larger set of real-world data samples.

Finally, from an economics point of view, by representing the maximum of two i.i.d. underlying random variables it allows us to picture the economic mechanism as operating at high intensity: if notionally there are two possible inefficiency values, the GE specification “selects” the strongest one to affect the outcome. To some, this may appear as unjustified negative bias; to others it would be classified as prudential, connected informally with the concept of entropy and the tendency of things to get to a lower state of energy, which in business terms translates into disorganization and loss of efficiency.

4 The production frontier

In a production frontier setting, the original model is

Typically, y is some measure of output and the regressors are production inputs. We focus on its estimation in logarithms, so the composite error term is ε = v − u and we assume v ~ N(0, σv), u ~ GE(2, θu, 0).

The additive representation of the density of u, eq. (2), means that the density of ε can be obtained as the difference of two convolutions of the Normal density with the Exponential, each being nothing else than the familiar Normal-Exponential SF specification, (see e.g. Kumbhakar and Lovell 2000, for the density formula). So we can easily obtain

where Φ is the standard Normal distribution function, and

This formula is nested in the formulas for the 2TSF model presented in Section 6, by setting there θw = 0. The same holds for the distribution function, which in the production frontier case is

The distribution function will be needed in modeling sample selection bias, and also in models where regressor endogeneity is handled using Copulas rather than instrumental variables (see Tran and Tsionas 2015; Papadopoulos 2020a).
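The construction of the composite density as a difference of two Normal-Exponential densities can be sketched in a few lines. The sketch assumes the standard NE density for ε = v − u with Exponential scale θ, namely \((1/\theta )\exp \{\varepsilon /\theta +{\sigma }_{v}^{2}/(2{\theta }^{2})\}\,\Phi (-\varepsilon /{\sigma }_{v}-{\sigma }_{v}/\theta )\), and treats σv as a standard deviation; all function names and parameter values are hypothetical:

```python
import math


def std_normal_cdf(x):
    """Standard Normal distribution function, via the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def ne_density(eps, theta, sigma_v):
    """Normal-Exponential density of eps = v - u, with u ~ Exponential(scale theta)."""
    expo = eps / theta + sigma_v ** 2 / (2.0 * theta ** 2)
    return math.exp(expo) * std_normal_cdf(-eps / sigma_v - sigma_v / theta) / theta


def nge_density(eps, theta_u, sigma_v):
    """NGE production density from the additive form 2*h_E(theta) - h_E(theta/2)."""
    return 2.0 * ne_density(eps, theta_u, sigma_v) - ne_density(eps, theta_u / 2.0, sigma_v)


def nge_loglik(residuals, theta_u, sigma_v):
    """Sum of log densities over a list of composite-error values."""
    return sum(math.log(nge_density(e, theta_u, sigma_v)) for e in residuals)


# Sanity check: the density should integrate to about one (trapezoid rule).
theta_u, sigma_v = 0.5, 1.0                      # illustrative values
grid = [-20.0 + 0.01 * i for i in range(3001)]   # from -20 to 10
vals = [nge_density(e, theta_u, sigma_v) for e in grid]
area = 0.01 * (sum(vals) - 0.5 * (vals[0] + vals[-1]))
mean = 0.01 * sum(e * f for e, f in zip(grid, vals))
print(round(area, 4), round(mean, 4))
```

The integral of the density and its mean (which should be close to −E(u) = −1.5θu) give a quick check before the function is handed to an optimizer; for extreme arguments a log-scale implementation would be numerically safer than this direct one.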

Due to the independence of the error components, the mean and variance of the composed error term are immediately obtained. Regarding skewness and excess kurtosis, Papadopoulos and Parmeter (2021) have shown that they can be expressed as follows:

where the symbol s represents the standard deviation. The skewness expression depends on the assumption that the distribution of v is symmetric, while the excess kurtosis expression on the assumption that it is Normal. Their maximum values (in absolute terms) are the skewness and excess kurtosis of the one-sided component. The authors present a powerful specification test using only sample moments of OLS residuals that is suitable for distributions with constant skewness and excess kurtosis.

Past this stage, the density can be used to implement maximum likelihood estimation. Alternatively, one can apply the Corrected Least Squares estimator (COLS), equating in the first stage the sample means of the 2nd and 3rd power of the OLS residuals with the 2nd and 3rd cumulant of the composite error term respectively, which are,

We note that the COLS estimator is vulnerable to the “wrong skew” issue: the case where the OLS residuals exhibit skew of the opposite sign to the one assumed. Here if \({\widehat{\kappa }}_{3}(\varepsilon )\,>\,{0}\), we cannot compute θu, which is constrained to be strictly positive. On the other hand, the simulations presented later showed that the MLE under the NGE specification does not break down when the skew is wrong: in such cases, the value of θu is estimated by MLE as really small but away from zero, around the values 0.03–0.04. But these are not reliable estimates, and so if the sample skew is wrong and the researcher decides to treat it as a sample problem, special treatment is required (for different such methods see Cai et al. 2020; Hafner et al. 2018; Simar and Wilson 2009).
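The moment-matching step of COLS can be sketched as follows. The sketch assumes the cumulants implied by independence and by the GE(2, θu, 0) moments, \({\kappa }_{2}(\varepsilon )={\sigma }_{v}^{2}+\tfrac{5}{4}{\theta }_{u}^{2}\) and \({\kappa }_{3}(\varepsilon )=-\tfrac{9}{4}{\theta }_{u}^{3}\) (a reconstruction, with σv read as a standard deviation); the function name and the simulated values are hypothetical:

```python
import random


def cols_nge_production(ols_residuals):
    """Method-of-moments step of COLS for the NGE production frontier.

    Assumes kappa_2 = sigma_v^2 + (5/4) theta_u^2 and kappa_3 = -(9/4) theta_u^3.
    Returns (theta_u_hat, sigma_v2_hat, intercept_shift).
    """
    n = len(ols_residuals)
    mean = sum(ols_residuals) / n
    m2 = sum((e - mean) ** 2 for e in ols_residuals) / n
    m3 = sum((e - mean) ** 3 for e in ols_residuals) / n
    if m3 >= 0.0:
        raise ValueError("wrong skew: third sample moment is non-negative")
    theta_u = (-4.0 * m3 / 9.0) ** (1.0 / 3.0)
    sigma_v2 = m2 - 1.25 * theta_u ** 2   # may turn out negative in small samples
    intercept_shift = 1.5 * theta_u       # E(u), added back to the OLS intercept
    return theta_u, sigma_v2, intercept_shift


# Illustration on simulated composite errors (all values hypothetical).
rng = random.Random(2024)
theta_true, sigma_true = 1.5, 1.0
eps = [rng.gauss(0.0, sigma_true)
       - max(rng.expovariate(1.0 / theta_true), rng.expovariate(1.0 / theta_true))
       for _ in range(200_000)]
theta_hat, sigma_v2_hat, shift = cols_nge_production(eps)
print(round(theta_hat, 2), round(sigma_v2_hat, 2))
```

The explicit error on a non-negative third moment mirrors the wrong-skew failure described above; how to proceed in that case is left to the methods cited.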

4.1 Assessing and measuring technical (in)efficiency

For the single-output production frontier model estimated in logarithms, the standard measure of technical efficiency is Shephard’s output distance function that here equals \(\exp \{-u\}\equiv {q}_{u}\), qu ∈ (0, 1] (see Sickles and Zelenyuk 2019, pp. 20–24), which is the ratio of actual to maximum output. This is a random variable on its own. To obtain its marginal distribution, we apply the distribution method and we have

while the density is

This is the distribution of the minimum of two i.i.d. Beta random variables, with parameters α = 1/θu, β = 1. Unlike what happens to the Beta distribution when the parameter β is equal to unity, here the distribution does not always have a monotonic density. This will depend on the actual value of θu. Specifically we have

We note that except when θu = 1 where the distribution becomes the minimum of two i.i.d U(0, 1) random variables, for θu > 1 the density has an asymptote at zero. Also, note that the zero-mode result for θu ≥ 1 is for the variable \(\exp \{-u\}\), so with stronger inefficiency parameter (higher θu) comes lower technical efficiency.
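The marginal results for \({q}_{u}=\exp \{-u\}\) can be written out as follows. This is a reconstruction obtained by applying the distribution method to the GE(2, θu, 0) distribution function, and it is consistent with the minimum-of-two-Betas interpretation and with the zero-mode statement for θu ≥ 1:

\[
\begin{aligned}
F_{q_u}(q) &= \Pr\left(u \ge -\ln q\right) = 1 - \left(1 - q^{1/\theta_u}\right)^{2}, \qquad q \in (0,1], \\
f_{q_u}(q) &= \frac{2}{\theta_u}\, q^{1/\theta_u - 1}\left(1 - q^{1/\theta_u}\right), \\
\operatorname{mode}(q_u) &=
\begin{cases}
\left(\dfrac{1-\theta_u}{2-\theta_u}\right)^{\theta_u}, & \theta_u < 1, \\[2ex]
0, & \theta_u \ge 1 .
\end{cases}
\end{aligned}
\]

For θu = 1 the density reduces to 2(1 − q), the density of the minimum of two U(0, 1) variables, while for θu > 1 the factor \(q^{1/\theta_u - 1}\) produces the asymptote at zero.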

Having the distribution function available allows us also to compute a full representative spectrum of quantiles (not just the median), through the quantile function

Turning to moments, by using the additive expression for the fu(u) density, the unconditional expected value can be calculated through the moment generating function of the Exponential distribution and it is

We can obtain its variance from

The mirror property of the Beta distribution is inherited here, so if one wants to study the relative technical inefficiency \(1-\exp \{-u\}\), the distribution will be that of the maximum of two i.i.d. Betas with parameters α = 1, β = 1/θu, since \(1-\min \{{B}_{1},{B}_{2}\}=\max \{1-{B}_{1},1-{B}_{2}\}\).
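The quantile function and the first two moments of \(\exp \{-u\}\) can be checked numerically. The sketch below assumes the closed forms that follow from the additive density and the Exponential moment generating function, \(E(\exp \{-tu\})=2/(1+t{\theta }_{u})-1/(1+t{\theta }_{u}/2)\); these are a reconstruction, and the function names are hypothetical:

```python
import math
import random


def te_quantile(p, theta_u):
    """Quantile function of q_u = exp(-u): solves 1 - (1 - q**(1/theta))**2 = p."""
    return (1.0 - math.sqrt(1.0 - p)) ** theta_u


def te_mean(theta_u):
    """E[exp(-u)] from the Exponential MGF applied to the additive density form."""
    return 2.0 / ((1.0 + theta_u) * (2.0 + theta_u))


def te_variance(theta_u):
    """Var[exp(-u)] = E[exp(-2u)] - (E[exp(-u)])**2."""
    second_moment = 1.0 / ((1.0 + theta_u) * (1.0 + 2.0 * theta_u))
    return second_moment - te_mean(theta_u) ** 2


# Monte Carlo comparison for an illustrative scale value.
rng = random.Random(7)
theta_u = 0.5
q_draws = sorted(
    math.exp(-max(rng.expovariate(1.0 / theta_u), rng.expovariate(1.0 / theta_u)))
    for _ in range(100_000)
)
mc_mean = sum(q_draws) / len(q_draws)
mc_median = q_draws[len(q_draws) // 2]
print(round(mc_mean, 3), round(te_mean(theta_u), 3))
print(round(mc_median, 3), round(te_quantile(0.5, theta_u), 3))
```

Each printed pair should agree up to simulation noise.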

Closing this section, we mention that the ratio of maximum output over actual, \(\exp \{u\}\), is the “output-oriented Farrell measure of technical efficiency”, and for businesses it is a meaningful measure and perhaps more meaningful to their management, although not as a measure of efficiency but as a guidance for efficiency improvement: a value, say, of \(E(\exp \{u\})=1.15\) would tell us that on average, firms in the sample need to increase their output by 15%, in order to be fully efficient, holding inputs constant. At the level of an individual firm, such a measure would be more relatable to the business mindset, and more directly translatable to specific actions in the pursuit of efficiency (“with given inputs, find new ways to combine and coordinate them better so as to increase output, and you have a 15% increase to chase”). To our knowledge it has not been explored in the efficiency literature and we will not examine it further here. We just note that the results on the marginal distribution of the variable “k” in Section 5.1 apply also for the \(\exp \{u\}\) variable (but not the individual measures of Section 5.2).

4.2 Individual measures

We can obtain information on \({q}_{u,i}=\exp \{-{u}_{i}\},\ i=1,...,n\) through εi. By standard techniques, the conditional density \({f}_{u| \varepsilon }({u}_{i}| {\varepsilon }_{i})\) is

where ϕ is the standard Normal density. We show in the Appendix that

We will use this expected value in our simulations alongside the conditional mode as predictors of inefficiency (we won’t present formulas for conditional expectations for the other models that we deal with here).

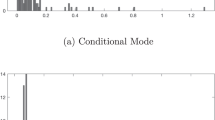

Turning to the conditional mode, in order to compute it we need the conditional density \({f}_{{q}_{u}| \varepsilon }(q_u| {\varepsilon }_{i})\), which we can readily obtain by applying a change of variables to \({f}_{u| \varepsilon }({u}_{i}| {\varepsilon }_{i})\) to arrive at

This can be maximized numerically with respect to qu (for each estimated value \({\hat{\varepsilon }}_{i}\)), to get the conditional mode as an alternative predictor of individual efficiency. Note that this requires maximizing only the numerator in eq. (9), since the denominator does not include the qu variable.
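A minimal sketch of this numerical step is given below. It assumes that the numerator of the conditional density is the product of the GE(2, θu, 0) density at u = −ln qu, the Normal density of v = ε + u, and the Jacobian 1/qu of the change of variables; a plain grid search stands in for whatever optimizer one prefers, and all names and values are hypothetical:

```python
import math


def cond_density_numerator(q, eps, theta_u, sigma_v):
    """Numerator of f(q | eps): f_u(-ln q) * Normal density of v = eps - ln q, times 1/q."""
    u = -math.log(q)
    f_u = (2.0 / theta_u) * math.exp(-u / theta_u) * (1.0 - math.exp(-u / theta_u))
    z = (eps + u) / sigma_v
    f_v = math.exp(-0.5 * z * z) / (sigma_v * math.sqrt(2.0 * math.pi))
    return f_u * f_v / q


def conditional_mode_te(eps, theta_u, sigma_v, grid_size=20_000):
    """Grid-search maximizer of the numerator over q in (0, 1)."""
    best_q, best_val = None, -1.0
    for i in range(1, grid_size):
        q = i / grid_size
        val = cond_density_numerator(q, eps, theta_u, sigma_v)
        if val > best_val:
            best_q, best_val = q, val
    return best_q


# Conditional modes for a few illustrative residual values.
modes = [conditional_mode_te(e, theta_u=1.0, sigma_v=1.0) for e in (-2.0, -1.0, 0.0, 1.0)]
print([round(m, 3) for m in modes])
```

In this illustration the modes rise with the residual, so observations with larger residuals are predicted to be more efficient.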

4.2.1 Monotonicity of the conditional mode in the conditioning variable

We show in the Appendix that the conditional mode qu,* in the NGE production frontier satisfies the following implicit equation:

The variable qu ranges in (0, 1]. This implies that the term in parentheses is always positive, meaning that the LHS expression is increasing in εi. Also, it is evident that both the term in parentheses and the term in brackets are decreasing in qu,*. So if we increase the value of εi the LHS will tend to increase, and in order to restore equality with zero we must decrease the LHS and so increase qu,*. This proves that the conditional mode is monotonically increasing in the conditioning variable, and shows that the result of Ondrich and Ruggiero (2001) mentioned earlier for conditional expectations extends to the conditional mode of the NGE production specification.

5 The cost frontier

In a cost frontier setting, the original equation is of the form

Here the dependent variable represents production costs, and the regressors are typically input prices and output, and again we focus on its estimation in logarithms. So the composite error term is ε = v + w and we assume v ~ N(0, σv), w ~ GE(2, θw, 0).

The density and distribution function of the composite error term are respectively

and

with

As before these can be used in maximum likelihood estimation, while we can also implement the COLS estimator (only, κ3(ε) would be positive here).

5.1 Assessing and measuring cost (in)efficiency

The standard measure of cost efficiency is the ratio of minimum cost to actual, in our setting \(CE\equiv {q}_{w}=\exp \{-w\}\in (0,1]\) (see Sickles and Zelenyuk 2019 pp. 80–81). The measure enjoys certain desirable theoretical properties, not least being confined in the (0, 1] interval (Footnote 6). For CE all the formulas from the marginal analysis of the section on the production frontier apply as is (Section 4.1), inserting everywhere w in place of u.

Somewhat more intuitive but less well-behaved would be the ratio of actual cost to minimum, which we could think of as a measure of cost inefficiency. This would give us a “gross inefficiency mark up”: a value, say, 1.25 of this ratio would tell us that actual costs are 25% higher than minimum. In our setting this measure is represented by \(k=\exp \{w\},\ k\in [1,\infty )\). Here we have

This is the distribution of the maximum of two i.i.d Pareto random variables (of “Type I”), with minimum value/scale parameter equal to 1, and shape parameter 1/θw. Its density is

The mode of this distribution is

while its quantile function is

Here, the existence of moments depends on the value of θw: as it is the case for the Pareto distribution itself, for the r-th moment of k to exist and be finite, we must have θw < 1/r. So if θw ≥ 1 not even the mean will exist, while to have a variance we would need θw < 1/2.

In cases where θw < 1, the mean of this distribution is given by

and if moreover θw < 1/2, the variance can be obtained from

The net mark-up on costs due to inefficiency is \(\exp \{w\}\)−1, and this is the maximum of two i.i.d random variables following the Lomax distribution with scale parameter equal to unity (the Lomax distribution is essentially a Pareto law shifted so as its support starts at zero).
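The expressions for \(k=\exp \{w\}\) discussed in this subsection can be written out as follows. This is a reconstruction from the GE(2, θw, 0) distribution function and the Exponential moment generating function, consistent with the maximum-of-two-Paretos interpretation and with the moment-existence conditions stated above:

\[
\begin{aligned}
F_k(k) &= \left(1 - k^{-1/\theta_w}\right)^{2}, \qquad
f_k(k) = \frac{2}{\theta_w}\, k^{-1/\theta_w - 1}\left(1 - k^{-1/\theta_w}\right), \qquad k \ge 1, \\
\operatorname{mode}(k) &= \left(\frac{2+\theta_w}{1+\theta_w}\right)^{\theta_w}, \qquad
Q_k(p) = \left(1 - \sqrt{p}\right)^{-\theta_w}, \\
E(k) &= \frac{2}{(1-\theta_w)(2-\theta_w)} \quad (\theta_w < 1), \qquad
E(k^{2}) = \frac{1}{(1-\theta_w)(1-2\theta_w)} \quad (\theta_w < 1/2),
\end{aligned}
\]

with \(\mathrm{Var}(k) = E(k^{2}) - [E(k)]^{2}\). As a small numerical illustration, θw = 0.2 gives \(E(k) = 2/(0.8 \times 1.8) \approx 1.39\), an average gross mark-up of about 39%.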

5.2 Individual measures

For the cost efficiency measure \(CE=\exp \{-w\}\) the conditional density is

Turning to the cost inefficiency measure \(k=\exp \{w\}\), we note that if θw ≥ 1, so that the marginal distribution does not possess moments, we are left only with the conditional mode as an individual measure. This is because the conditional expectation is fundamentally defined only for variables that are absolutely integrable and have finite expected value (see e.g. Williams 1991, Theorem 9.2, p. 84). The conditional density here is

Numerical maximization of the numerator in both expressions, with respect to qw and k respectively, gives the conditional mode for given εi.

6 The two-tier frontier

The mathematical derivations of this section can be found in the Technical Appendix of Papadopoulos (2018, pp. 409–444).

The two-tier stochastic frontier (2TSF) model was introduced by Polachek and Yoon (1987) in order to measure informational inefficiency of both employers and employees related to the determination of the wage. Since then it has been applied to many other markets apart from the labor market, notably the housing market and the health services market, but also as a method to measure bargaining power in a bilateral bargaining setting (see Papadopoulos 2020c, for a comprehensive survey).

The model is represented by the equation

and as before we assume that it will be estimated in logarithmic form. The error components follow v ~ N(0, σv), w ~ GE(2, θw, 0), u ~ GE(2, θu, 0), and are jointly independent. Then we have,

The distribution function is

It is straightforward to obtain the moments of ε using the cumulant expressions, since the three components are independent. We have

Implementing the COLS estimator here requires also the use of the sample mean of the 4th power of the OLS residuals that estimates consistently the 4th central moment of the composed error term, and it must be set equal to \({\kappa }_{4}(\varepsilon )+3{\kappa }_{2}^{2}(\varepsilon )\).
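For reference, the cumulants entering these moment conditions can be written as follows, assuming the composite error ε = v + w − u implied by the net effect w − u, and using the GE cumulants \({\kappa }_{r}=\theta ^{r}(r-1)!\,(1+{2}^{-r})\) together with \({\kappa }_{r}(-u)={(-1)}^{r}{\kappa }_{r}(u)\); this is a reconstruction, with σv read as a standard deviation:

\[
\begin{aligned}
\kappa_1(\varepsilon) &= \tfrac{3}{2}\left(\theta_w - \theta_u\right), &
\kappa_2(\varepsilon) &= \sigma_v^{2} + \tfrac{5}{4}\left(\theta_w^{2} + \theta_u^{2}\right), \\
\kappa_3(\varepsilon) &= \tfrac{9}{4}\left(\theta_w^{3} - \theta_u^{3}\right), &
\kappa_4(\varepsilon) &= \tfrac{51}{8}\left(\theta_w^{4} + \theta_u^{4}\right).
\end{aligned}
\]

The Normal component contributes only to \({\kappa }_{2}\), and the sign of \({\kappa }_{3}\) depends on which of θw and θu is larger.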

The two-tier frontier specification faces no problems with “wrong skew” samples, because its theoretical skewness can be positive or negative. Papadopoulos (2020b) develops a 2TSF model for production in order to measure the effects that unobservable management has on output (management being represented by the error component w).

6.1 Assessing and measuring the net effect

To analyze each one-sided component individually, we can use the results from the single-tier frontiers as regards the marginal distributions, but not the results on individual measures, since these depend on the composite error term which here is different.

But specific to the 2TSF model is the joint presence of opposing forces w and u on the outcome, and so of special interest is their net effect z = w − u, and, for the logarithmic model we are considering, the exponentiated one, \(\xi =\exp \{w-u\}\). After deriving the density and distribution function of z, which we do not present here, we can obtain the density and distribution function of ξ with the same techniques as before,

From this, one can obtain probabilistic events of interest like for example,

The marginal density of ξ is

The mode of this density is

with

and where \(I\left\{\cdot \right\}\) is the indicator function. One can determine that

The proper predictor of the net effect of the two opposing one-sided components is the mode of the difference, not the difference of the marginal modes, since, even if they are independent, the two error components happen concurrently and so we must consider their variation jointly. But the marginal modes are also useful since they predict the value of each component, if it operated without the presence of the other.

As regards expected values, due to independence we have,

and it will exist if θw < 1.
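Under independence the expectation factorizes into the two single-tier means, each obtained from the Exponential moment generating function and the additive density form. As a reconstruction under those assumptions:

\[
E(\xi) = E\left(e^{w}\right)E\left(e^{-u}\right)
= \frac{2}{(1-\theta_w)(2-\theta_w)} \cdot \frac{2}{(1+\theta_u)(2+\theta_u)}, \qquad \theta_w < 1 .
\]

Only the first factor can fail to exist, which is why the condition involves θw alone.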

6.2 Individual measures

In the 2TSF setting, of main interest are the variables

Closed-form expressions for the one-sided conditional expectations for the 2TSF NE and NHN specifications have been collected in Papadopoulos (2018, ch.3). Up to now no attention has been given to the conditional mode as a predictor at the individual level. For the NGE specification, closed-form expressions for conditional expectations can be derived, but they are much more involved and lengthy than for the single-tier models. We also note that even though w and u are assumed independent, they stop being so conditional on ε, so

To obtain predictors based on the mode we need four conditional densities. These are

where \({f}_{{q}_{w}}({q}_{w})\) is \({f}_{{q}_{u}}({q}_{u})\) (eq. (7)) with the symbol w in place of the symbol u. Also,

and finally,

As before, obtaining the conditional mode series requires numerical maximization of these expressions with respect to their variable, for each estimated value of εi.

7 Simulation studies

In this section we provide simulation results related to the NGE production frontier model, using maximum likelihood estimation.

In all simulations the regression equation is

with \({x}_{1} \sim {\chi }_{1}^{2},\ {x}_{2} \sim \,\text{Bern}\,(0.65),v \sim N(0,1).\)

In each simulation we run 1000 replications and we report sample averages over them. We consider two sample sizes n = 200, 1000 and two different values of θu = 0.5, 1.5. For each value we report also the “signal-to-noise” ratio (SNR), the ratio of the standard deviation of the inefficiency error component over that of the random component. This is a model-free measure that summarizes well how strong is the signal that interests us (the inefficiency), relative to noise. Note that it equals the familiar λ = σu/σv only for the NE specification.
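A minimal sketch of one replication of this design is given below. It assumes a regression that is linear in x1 and x2 with composite error v − u; the coefficient vector `beta` is purely hypothetical, and the function names are illustrative. The SNR uses Var(u) = (5/4)θu² for the GE(2, θu, 0) distribution:

```python
import math
import random


def snr_nge(theta_u, sigma_v=1.0):
    """Signal-to-noise ratio sd(u)/sd(v), using Var(u) = (5/4) theta_u^2."""
    return math.sqrt(1.25) * theta_u / sigma_v


def simulate_sample(n, theta_u, beta, rng):
    """One sample from the design; `beta` = (b0, b1, b2) is a hypothetical coefficient vector."""
    rows = []
    for _ in range(n):
        x1 = rng.gauss(0.0, 1.0) ** 2                 # chi-square with 1 d.o.f.
        x2 = 1.0 if rng.random() < 0.65 else 0.0      # Bernoulli(0.65)
        v = rng.gauss(0.0, 1.0)                       # noise, N(0, 1)
        u = max(rng.expovariate(1.0 / theta_u), rng.expovariate(1.0 / theta_u))
        y = beta[0] + beta[1] * x1 + beta[2] * x2 + v - u
        rows.append((y, x1, x2))
    return rows


rng = random.Random(99)
sample = simulate_sample(200, theta_u=0.5, beta=(1.0, 0.5, 0.5), rng=rng)
print(round(snr_nge(0.5), 3), round(snr_nge(1.5), 3))
```

Under these assumptions the two values of θu correspond to one design where noise dominates (SNR below one) and one where the inefficiency signal dominates (SNR above one).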

We observed that when the data generating process was NGE, the correctly specified MLE failed to converge in 2–5% of the replications, while when the sample size was small and the SNR large, this percentage rose to ≈15%. We noted earlier that this is not related to the “wrong skew” issue. Rather, it indicates a sensitivity of the NGE likelihood to starting values, and in empirical studies many different sets of initial values for the parameters should be tried.

7.1 Performance when the DGP is NGE

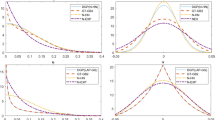

In the first part of our simulation study, the DGP has an NGE composite error term. We also report OLS, NHN, and NE estimates, to see how these fare when the true specification is NGE. Essentially we attempt to answer the question “suppose that the DGP is indeed NGE. Does it matter for inference?” We certainly expect the correctly specified MLE to fare better than a misspecified likelihood, but how much better?

In order to have comparable results between specifications, we present not estimates of the scale parameters of the inefficiency component, but calculated moments based on these estimates and on the assumed specification in each case.

The results are presented in Table 1. They validate the robustness/consistency property for the Skew Normal quasi-MLE (the NHN specification) as regards the regression slope coefficients, proven in Papadopoulos and Parmeter (2020). The same robustness property appears to characterize the NE specification. Regarding the performance of the misspecified likelihoods, we see that the MLE under the NHN specification does remarkably well, perhaps against expectations, with rather small bias in the estimation of average Technical Efficiency. On the other hand, the NE specification leads to high overestimation of TE.

Probing deeper, we also computed JLMS conditional expectations for the NHN and NGE models. In each replication we obtained the conditional expectation series and computed its 0.2, 0.4, 0.6 and 0.8 quantiles (the quintile cut points).
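The summary just described can be sketched in a few lines of Python. This is only an illustration: the interpolation rule used for the sample quantiles (`method="inclusive"`) is an assumption, since the text does not say which rule was applied, and the function names are hypothetical.

```python
import statistics


def quintile_cut_points(series):
    """Return the 0.2, 0.4, 0.6 and 0.8 sample quantiles of one series."""
    return statistics.quantiles(series, n=5, method="inclusive")


def median_cut_points(replications):
    """Median of each cut point across replications (one series per replication)."""
    cuts = [quintile_cut_points(series) for series in replications]
    return [statistics.median(column) for column in zip(*cuts)]
```

Here `replications` would hold one conditional expectation series per replication, and the returned list has four entries, one per cut point.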

Table 2 presents the median estimate of each quintile over all replications. We observe that sample size does not appear to affect behavior. When the SNR is low, the predictor coming from the NHN misspecified model tends to underestimate Technical Efficiency: for example, it places 40% of firms at a Technical Efficiency level below 0.48–0.49 (1st column 2nd row), while the correctly specified NGE predictor does that for only 20% of firms (2nd column 1st row). When the SNR is large, the two predictors allocate probability mass essentially in the same way up to cumulative probability 0.6, but then the NHN tends to overestimate Technical Efficiency since its 0.8-quantile value is 0.46 while the corresponding quantile value of the NGE predictor is only 0.38.

7.2 Performance when the DGP is NHN

Here the DGP includes an NHN composite error term, and we are interested in how misleading estimation could be if it is carried out with a misspecified NGE likelihood.

The results from the NHN DGP are in Table 3. When the SNR is low, the now misspecified NGE model leads to an overestimation of Technical Efficiency, but not as much as the misspecified NE model. With high SNR, the NGE model performs comparably with the NHN model in the previous simulation, while the NE model continues to be highly biased.

7.3 Performance when the DGP is NE

Here the DGP includes an NE error term, and the goal is the same as in the previous subsection.

From Table 4 we see that the now misspecified NHN and NGE models perform comparably, and both underestimate the average Technical Efficiency measure.

Overall, there appears to be a certain degree of congruence and “mutual robustness” between the NGE and the NHN specifications, and exploring whether this has some deeper theoretical justification could be an interesting topic of study. When we use one in place of the other, results are not that different, although of course it is always preferable to use the correct specification, especially when one is interested not just in sample averages but in individual estimates of inefficiency. On the other hand, the misspecified NE likelihood visibly overestimates Technical Efficiency against both an NGE and an NHN data generating process, and these two return the favor by underestimating TE when the true model is NE.

7.4 Rankings and correlation

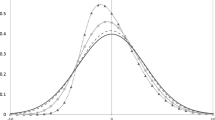

In this subsection we examine how close the firm rankings obtained from the NGE predictor are to the rankings based on OLS residuals. We included an additional sample size, n = 5000, and two additional values of θu, 1.0 and 2.0. We computed individual efficiency measures for the NGE specification, both conditional expectations and conditional modes (for the exponentiated variable). In Table 5, we compare the observation rankings obtained from them to those based on OLS residuals. We report the proportion of observations that changed rank, and also the proportion of observations that moved by a ventile, namely those that moved at least five percentiles in the ranking distribution.Footnote 8 We consider this a realistic criterion for concluding that the rankings differ substantially from an economic point of view, since moving a few positions among hundreds of observations does not signal that the two measures rank an observation really differently.
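The two proportions can be computed as in the following Python sketch. It is an illustration under stated assumptions: a move "by a ventile" is read as an absolute rank shift of at least 5% of the sample size, ties are broken by position, and the function names are hypothetical.

```python
def ranks(values):
    """Rank observations from 1 (lowest value) to n; ties broken by position."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0] * len(values)
    for position, i in enumerate(order, start=1):
        out[i] = position
    return out


def rank_change_shares(measure_a, measure_b, min_shift=0.05):
    """Share of observations whose rank differs, and share moving >= min_shift of n."""
    n = len(measure_a)
    ra, rb = ranks(measure_a), ranks(measure_b)
    shifts = [abs(a - b) for a, b in zip(ra, rb)]
    changed = sum(s > 0 for s in shifts) / n
    moved_ventile = sum(s >= min_shift * n for s in shifts) / n
    return changed, moved_ventile
```

For instance, `measure_a` could hold the OLS residuals and `measure_b` the conditional expectations or conditional modes of the same observations.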

What we observe in Table 5 is that while the large majority, and sometimes almost all, of the observations change rank under the JLMS measures from the NGE specification compared to the OLS ranking, very few or even none move by a ventile. This does not invalidate the Ondrich and Ruggiero (2001) result, it just clarifies it appropriately: rankings coming from log-concave densities in SF models do not differ from OLS rankings in an economically important way. We also see that the conditional mode behaves much like the conditional expectation in that respect, as anticipated from the related theoretical result obtained earlier.

Finally, we examined how close the two JLMS measures of inefficiency are to each other, and also how they correlate with the true value \(\exp \{-{u}_{i}\}\). The results are presented in Table 6.

The first two rows of each block of Table 6 are analogous to the first two rows of Table 5: they tell us what percentage of observations changed rank, and what percentage moved by at least a ventile, between the ranking by the conditional expectation and the ranking by the conditional mode. Except when the sample size is small and the inefficiency parameter is the lowest simulated, these proportions are both negligible or even zero, indicating that the two JLMS measures result in almost identical rankings, from both a statistical and an economic point of view.

The 3rd row gives the average absolute difference between the two measures, in efficiency percentage points. This difference is not negligible: on average, the two measures differ in their assessment of individual efficiency by 5 to 10 points (on the 1–100 scale on which \(100\times \exp \{-u\}\) lives), which in relative terms may translate to an efficiency score that differs by more than 10–15%, depending on which measure is used.

The 4th and 5th rows provide association measures of the JLMS scores with the true variable they predict. The differences are minor to none, with the conditional expectation showing a slightly higher linear correlation with \(\exp \{-u\}\). We also note that the sample size appears not to matter for how well these measures correlate with the true variable, but the strength of inefficiency (the value of θu) does. Overall, the two measures behave very similarly in terms of association, which does not help us choose between them, given that they differ in the actual quantitative estimate of the efficiency score.
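As an illustration of the comparison measures discussed for Table 6, the Python sketch below computes the average absolute difference in efficiency points and the linear correlation. The function names are hypothetical, and only the Pearson coefficient is shown because the text names linear correlation explicitly; the second association measure in the table is not specified here.

```python
import math


def mean_abs_diff_points(score_a, score_b):
    """Average absolute difference between two efficiency scores, in 0-100 points."""
    return 100.0 * sum(abs(a - b) for a, b in zip(score_a, score_b)) / len(score_a)


def pearson(x, y):
    """Linear (Pearson) correlation coefficient between two series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

A gap of 6 points around a score of 50, for example, is a 12% difference in relative terms, which is the order of magnitude the text refers to.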

But perhaps this is as it should be, since each measure may be appropriate for different situations: mid- and long-term analysis of repeated actions may be more secure using the conditional expectation and the approach of “averaging” that it represents, while short-term one-off decisions may be served better by the “most likely” prediction, and hence by the conditional mode.

Notes

This approach was first presented in Materov (1981), which is written in Russian and is a good example of the universality of the language of mathematics.

The under-estimation of technical efficiency here depends on the determinants of inefficiency being positive variables, and they usually are.

The authors proved this for the variable in levels. Using intuition but also the formal results in Egozcue (2015), it also holds for \(E(\exp \{-u\}| \varepsilon )\).

But it is not immediate to translate it in intuitive terms, because, here, improving cost efficiency means reducing the denominator of the ratio. In fact the value \(1-CE=1-\exp \{-w\}\) is more naturally communicated, being the proportional reduction in costs required to attain full cost efficiency. So if, say, CE = 0.8, we have to reduce costs by 1 − 0.8 = 20% to reach the frontier, holding output constant.

This is not the same as “changing ventile” which could happen if a firm moves from, say, the 5th percentile to the 6th.

References

Aigner DJ, Lovell CAK, Schmidt P (1977) Formulation and estimation of stochastic frontier production function models. J Econom 6:21–37

Azzalini A (1985) A class of distributions which includes the normal ones. Scand J Stat 12:171–178

Battese GE, Coelli TJ (1988) Prediction of firm-level technical efficiencies with a generalized frontier production function and panel data. J Econom 38:387–399

Cai J, Feng Q, Horrace WC, Wu GL (2020) Wrong skewness and finite sample correction in parametric stochastic frontier models. Empir Econ Forthcom

Egozcue M (2015) Some covariance inequalities for non-monotonic functions with applications to mean-variance indifference curves and bank hedging. Cogent Math 2:991082

Erdelyi A, Magnus W, Oberhettinger F, Tricomi FG (eds) (1954) Tables of Integral Transforms: Vol.1. New York, McGraw-Hill

Gradshteyn IS, Ryzhik IM (2007) Table of integrals, series, and products (7th ed). Burlington MA USA, Academic Press

Greene W (1980) On the estimation of a flexible frontier production model. J Econom 13:101–115

Greene W (1990) A gamma-distributed stochastic frontier model. J Econom 46:141–163

Grushka E (1972) Characterization of exponentially modified gaussian peaks in chromatography. Anal Chem 44:1733–1738

Gupta RD, Kundu D (1999) Generalized Exponential distributions. Aust N Z J Stat 41:173–188

Hafner CM, Manner H, Simar L (2018) The wrong skewness problem in stochastic frontier models: a new approach. Econom Rev 37:380–400

Jondrow J, Lovell CK, Materov I, Schmidt P (1982) On the estimation of technical inefficiency in the stochastic frontier production function model. J Econom 19:233–238

Kumbhakar SC, Lovell C (2000) Stochastic Frontier Analysis. Cambridge, Cambridge University Press

Materov I (1981) On full identification of the stochastic production frontier model. Ekonomika i matematicheskie metody 17:784–788

Meeusen W, van den Broeck J (1977) Efficiency estimation from Cobb-Douglas production functions with composed error. Int Econ Rev 18:435–444

Ondrich J, Ruggiero J (2001) Efficiency measurement in the stochastic frontier model. Eur J Oper Res 129:434–442

Papadopoulos A (2018) The two-tier stochastic frontier framework: Theory and applications, models and tools. PhD Thesis. Athens Greece, Athens University of Economics and Business

Papadopoulos A (2020a) Accounting for endogeneity in regression models using Copulas: a step-by-step guide for empirical studies. Manuscript. Athens University of Economics and Business

Papadopoulos A (2020b) Measuring the effect of management on production: a two-tier stochastic frontier approach. Empir Econ. https://doi.org/10.1007/s00181-020-01946-9

Papadopoulos A, Parmeter CF (2020) Quasi-maximum likelihood estimation for the stochastic frontier model. Manuscript. Athens University of Economics and Business

Papadopoulos A, Parmeter CF (2021) Type II failure and specification testing for the stochastic frontier model. Eur J Oper Res. https://doi.org/10.1016/j.ejor.2020.12.065

Papadopoulos A (2020c) The two-tier stochastic frontier framework (2TSF): measuring frontiers wherever they may exist. In: Parmeter CF, Sickles R (eds) Advances in Efficiency and Productivity Analysis, Cham Switzerland, Springer

Parmeter CF, Kumbhakar SC (2014) Efficiency analysis: a primer on recent advances. Found Trends Econom 7:191–385

Parmeter CF (2018) Estimation of the two-tiered stochastic frontier model with the scaling property. J Product Anal 49:37–47

Polachek SW, Yoon BJ (1987) A two-tiered earnings frontier estimation of employer and employee information in the labor market. Rev Econ Stat 69:296–302

Ritter C, Simar L (1997) Pitfalls of normal-gamma stochastic frontier models. J Product Anal 8:167–182

Sickles R, Zelenyuk V (2019) Measurement of productivity and efficiency. Cambridge, Cambridge University Press

Simar L, Wilson PW (2009) Inferences from cross-sectional, stochastic frontier models. Econom Rev 29:62–98

Stead AD, Wheat P, Greene WH (2019) Distributional forms in stochastic frontier analysis. In: Ten Raa T, Greene WH (eds) The Palgrave Handbook of Economic Performance Analysis, Cham, Switzerland, Palgrave Macmillan

Stevenson R (1980) Likelihood functions for generalized stochastic frontier estimation. J Econom 13:57–66

Tran KC, Tsionas EG (2015) Endogeneity in stochastic frontier models: copula approach without external instruments. Econ Lett 133:85–88

Wang JH, Schmidt P (2002) One-step and two-step estimation of the effects of exogenous variables on technical efficiency levels. J Product Anal 18:129–144

Williams D (1991) Probability with martingales. Cambridge, Cambridge University Press

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Appendix

1.1 The conditional expectation in the NGE production frontier

In the production SF model we want to compute

where we have used the additive form of the GE density, eq. (2). Decomposing the integrands,

Combining formulas from Gradshteyn and Ryzhik (2007) and Erdelyi et al. (1954), we have (for a > 0),

For both integrals, we have the common mapping

Moreover we have, using indices 1 and 2 for the two integrands,

Exploiting these results to make some initial simplifications we can write

Now,

Also,

Combining all, we arrive at

1.2 The conditional mode in the NGE production frontier

We want to maximize the conditional density in eq. (9) with respect to q. Ignoring the terms that do not include q, the derivative we want to set equal to zero is

Using the property of the standard Normal density \(\phi ^{\prime} (z)=-z\phi (z)\) we obtain

Setting this expression equal to zero is equivalent to setting the expression in curly brackets equal to zero since the term outside is always positive. Manipulating further,

Taking \({q}^{-1+2/{\theta }_{u}}\) out as common factor we arrive at the expression used in the main text.
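Since the first-order condition is implicit in q, the conditional mode has to be found numerically. The Python sketch below maximizes the conditional log-density directly. It is a sketch under assumptions not restated in this appendix: the composite error is ε = v − u with v ~ N(0, σv²), and the GE density has shape parameter 2, so that for q = exp{−u} the kernel is proportional to \({q}^{1/{\theta }_{u}-1}-{q}^{2/{\theta }_{u}-1}\). The function names, the grid size and the tolerance are illustrative choices.

```python
import math


def cond_log_density_q(q, eps, theta_u, sigma_v):
    """Log of f(q | eps) up to an additive constant, for q = exp(-u) in (0, 1)."""
    a = 1.0 / theta_u
    # log of q**(a - 1) - q**(2a - 1), written in a numerically stable form
    ge_part = (a - 1.0) * math.log(q) + math.log1p(-(q ** a))
    z = (eps - math.log(q)) / sigma_v  # implied noise: v = eps + u = eps - ln q
    return ge_part - 0.5 * z * z


def conditional_mode_q(eps, theta_u, sigma_v, grid=2000, tol=1e-9):
    """Maximize the conditional density on (0, 1): coarse grid, then golden section."""
    def f(q):
        return cond_log_density_q(q, eps, theta_u, sigma_v)

    pts = [(k + 0.5) / grid for k in range(grid)]
    best = max(range(grid), key=lambda k: f(pts[k]))
    lo = pts[max(best - 1, 0)]
    hi = pts[min(best + 1, grid - 1)]
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    while hi - lo > tol:
        c = hi - invphi * (hi - lo)
        d = lo + invphi * (hi - lo)
        if f(c) > f(d):
            hi = d  # maximum lies in [lo, d]
        else:
            lo = c  # maximum lies in [c, hi]
    return 0.5 * (lo + hi)
```

The conditional mode series would then be obtained by applying `conditional_mode_q` to each estimated residual, with the estimated θu and σv plugged in.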

Papadopoulos, A. Stochastic frontier models using the Generalized Exponential distribution. J Prod Anal 55, 15–29 (2021). https://doi.org/10.1007/s11123-020-00591-9

DOI: https://doi.org/10.1007/s11123-020-00591-9