Abstract

This paper puts forward an improved synchronization problem for neural networks with Markov jump parameters. The traditional Markov jump neural network (MJNN) considers only basic external time-varying delays and ignores both the distributed and leakage delays in the internal transmission of the neural network and the small time-varying errors in the Markov transition rates that govern mode switching. In this paper, we focus on the synchronization of MJNN with mixed time-varying delay. An improved Lyapunov–Krasovskii functional (LKF) is constructed, and the resulting integral terms are bounded by using affine Bessel–Legendre inequalities and Wirtinger double integral inequalities. At the same time, a new method is used to shrink the two-dimensional geometric region of admissible time-varying delays and delay derivatives, which reduces the conservativeness of the result. Finally, a sample point controller is constructed to synchronize the drive system and the response system.


1 Introduction

Neural networks (NNs) entered their formative stage in the mid-to-late 1980s. After decades of development, they have matured and have been applied in many areas of real life. This special class of nonlinear networks, which imitates the structure of the human brain and its way of processing information, has made remarkable achievements and can solve many problems that are difficult for conventional digital computers. In the past few decades, different kinds of NN have attracted attention. However, artificial NNs still imitate the structure of the human brain only to a low degree, and scholars have been looking for more accurate theories to imitate brain intelligence. Through continuous practice and theoretical research, chaos and time delay have been found in the nervous system, both in microscopic neurons and in macroscopic brain waves. Therefore, researchers focus on chaotic NNs with time-varying delay [1, 10, 12, 33]. A chaotic NN combines the advantages of NNs with those of chaotic systems so that each compensates for the other's shortcomings, and it offers great potential for intelligent information processing. However, traditional NNs consider only the external time-varying delay and neglect the distributed delay and leakage delay of information transmission within neurons. Therefore, the first problem that this paper focuses on is the chaotic perturbation of NNs with mixed time-varying delay.

In recent years, the stability of NNs with Markov jumps has become a research hot spot. This model allows an NN to have multiple modes and to switch between them under the drive of a Markov chain. Therefore, the study of the stability of Markov jump models has considerable potential application value [13, 14, 18, 23, 25, 26, 29, 30]. In [25, 29], by constructing a suitable Lyapunov–Krasovskii functional (LKF) and using linear matrix inequalities (LMIs), the mean-square global exponential stability of a class of reaction-diffusion Hopfield MJNN and the global robust exponential stability of a class of time-varying delay MJNN are studied, respectively. However, the traditional transition rate matrix of the Markov jump parameters often neglects the small time-varying errors in the transition rates, which may make the switching process unstable and, in severe cases, cause the system to collapse. Therefore, the second problem that this paper focuses on is the time-varying transition rates in MJNN.

Synchronization, as a nonlinear phenomenon, appears in many practical problems in fields such as physics, ecology and physiology. Therefore, the application of synchronization theory has been widely studied in different scientific fields. In particular, in the 1990s Pecora and Carroll drew attention to the importance of the control and synchronization of chaotic systems and put forward the drive-response concept for synchronizing chaotic systems. In this method, the response system is controlled through an external input derived from the drive system so that the two systems synchronize. Since then, the theory of chaos synchronization and chaos control has been widely studied. In order to achieve synchronization, many control methods have been proposed, such as the drive-response synchronization method [19], the active–passive synchronization method [20], synchronization based on mutual coupling [36], adaptive synchronization [9], feedback control synchronization [15], projection synchronization control [11] and impulsive control [7]. Therefore, the third problem that this paper focuses on is how to construct a suitable sample point controller to synchronize the MJNN drive system (MJNN-DS) and the MJNN response system (MJNN-RS).

On the other hand, the synchronization analysis of MJNN usually constructs a suitable LKF and then bounds its derivative by means of integral inequalities. In recent years, scholars have proposed many useful inequalities, such as the Jensen inequality [37], the Wirtinger integral inequality [22], the free-matrix-based inequality [32], the reciprocally convex inequality [34] and the Bessel–Legendre inequalities [21]. These methods have effectively tightened the resulting bounds, but there is still room for improvement. Wirtinger double integral inequalities and affine Bessel–Legendre inequalities improve on the Wirtinger integral inequality and the Bessel–Legendre inequality, respectively. Therefore, the fourth problem that this paper focuses on is how to use Wirtinger double integral inequalities and affine Bessel–Legendre inequalities to obtain tighter bounds.

In addition, when discussing the range of time-varying delays, the default assumptions are \(h_{1}\le h\le h_{2}\) and \(d_{1}\le \dot{h}\le d_{2}\), which are conservative and can be tightened in the two-dimensional \((h,\dot{h})\) plane. Therefore, the fifth problem that this paper focuses on is the optimization of the admissible time-varying delay region in this two-dimensional plane.

In summary, the contributions of this paper and the difficulties to be solved are as follows. Firstly, how to unify the mixed time-varying delay and the time-varying transition rates in one MJNN. Secondly, how to apply Wirtinger double integral inequalities and affine Bessel–Legendre inequalities to the Lyapunov functional. Thirdly, how to synchronize MJNN-DS and MJNN-RS by means of a sample point controller. Fourthly, how to optimize the two-dimensional geometric area of the time delay. In addition, these methods have the following advantages. The affine Bessel–Legendre inequalities improve on the traditional Bessel–Legendre inequality, and the bound becomes tighter as the Legendre order N increases. Compared with the traditional state feedback controller, the sample point controller can better transmit the effective information of the system and achieve a better control effect. The traditional two-dimensional geometric area of the time delay is a rectangle; we reduce the conservativeness of the result by shrinking this area to a parallelogram.

The rest of this paper is organized in four parts. The first part introduces MJNN-DS and MJNN-RS, the sample point controller and some useful lemmas. In the second part, the synchronization analysis of the MJNN error system with mixed time-varying delay is carried out, and the bound on the LKF derivative is tightened by using Wirtinger double integral inequalities and affine Bessel–Legendre inequalities. In the third part, the range of the time-varying delay in two-dimensional space is discussed, and the conservativeness is reduced by shrinking the two-dimensional geometric area. In the fourth part, numerical examples are given. The parameters of the sample point controller, the chaotic curve of the MJNN system, the Markov jump response curve, the synchronization response curves and the error response curves are obtained through simulation.

In this paper, “0” represents a zero matrix of suitable dimension. \(\mathbf {R}^{n}\) and \(\mathbf {R}^{n\times m}\) represent the n-dimensional Euclidean space and the set of \(n\times m\) real matrices, respectively. “T” represents matrix transposition. \(\{\varOmega , \digamma , \mathcal {P} \}\) represents the probability space.

2 Preliminaries

Consider the following MJNN-DS with mixed time-varying delay:

where \(x(t)=(x_{1}(t),x_{2}(t),\cdots ,x_{n}(t))^\mathrm{T}\in \mathbf {R}^{n}\) is the neuron state vector. \(A(\cdot )\), \(B(\cdot )\), \(C(\cdot )\) and \(D(\cdot )\) are matrices of suitable dimensions with uncertainties, which are expressed as follows:

where \(\varDelta A\), \(\varDelta B\), \(\varDelta C\) and \(\varDelta D\) are uncertain parameter terms, such as:

where G and \(E_{i}(i=1,2,3)\) are real matrices of suitable dimensions, F(t) satisfies: \(F^\mathrm{T}(t)F(t)\le I\).

\(f(\cdot )\) is the neuron excitation function. \(\mathcal {J}\) denotes the external disturbance. r(t) is a Markov process taking values in the finite state space \(S=\{1,\cdots ,M\}\) and defined on the probability space \(\{\varOmega , \digamma , \mathcal {P} \}\). The transition rate matrix \(\varPi (t)=(\mu _{ij})_{N \times N}\) is defined as follows:

where \(\mu _{ij} \ge 0\), if \(j\ne i\), \(\mu _{ii}=-\sum ^{N}_{j=1,j\ne i}\mu _{ij}\). \(\sigma \), \(d_{1}(t)\) and \(d_{2}(t)\) represent the leakage delay, the external time-varying delay and the distributed delay, respectively, and the time-varying delay ranges are as follows: \(0\le d_{1}(t)\le d_{1}\), \(h_{1}\le \dot{d}_{1}(t)\le h_{2}\), \(0\le d_{2}(t)\le d_{2}\).
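To make the role of the transition rates concrete, the following is a minimal Python sketch of how a mode trajectory r(t) could be sampled from a constant rate matrix with the sign and row-sum properties stated above. The matrix `RATES`, the helper names `is_rate_matrix` and `simulate_modes`, and the fixed random seed are illustrative assumptions and are not taken from this paper; modes are indexed from 0 in the code.

```python
import random

# Hypothetical 2-mode transition rate matrix (rows sum to zero,
# off-diagonal entries are non-negative). Not taken from the paper.
RATES = [[-7.0, 7.0],
         [3.0, -3.0]]


def is_rate_matrix(rates, tol=1e-9):
    """Check mu_ij >= 0 for j != i and mu_ii = -sum_{j != i} mu_ij."""
    for i, row in enumerate(rates):
        off_diag = [v for j, v in enumerate(row) if j != i]
        if any(v < 0 for v in off_diag):
            return False
        if abs(row[i] + sum(off_diag)) > tol:
            return False
    return True


def simulate_modes(rates, mode0, t_end, rng):
    """Return a list of (jump_time, mode) pairs on [0, t_end)."""
    t, mode = 0.0, mode0
    path = [(t, mode)]
    while True:
        exit_rate = -rates[mode][mode]
        if exit_rate <= 0:               # absorbing mode: no further jumps
            break
        t += rng.expovariate(exit_rate)  # exponential holding time
        if t >= t_end:
            break
        # Choose the next mode j != i with probability mu_ij / exit_rate.
        targets = [j for j in range(len(rates)) if j != mode]
        weights = [rates[mode][j] for j in targets]
        mode = rng.choices(targets, weights=weights)[0]
        path.append((t, mode))
    return path


path = simulate_modes(RATES, 0, 5.0, random.Random(0))
```

The sketch covers only the constant-rate case; the time-varying rates considered in this paper would require the exit rate to be re-evaluated along the trajectory.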

Remark 1

The first term on the right side of the equation is the stabilizing negative feedback of the system, which is often referred to as the “leakage” term. Since the self-decay process of neurons is not instantaneous, a neuron that is cut off from the neural network and external inputs takes time to reset to its isolated resting state. In order to describe this phenomenon, it is necessary to introduce a “leakage” delay. In this paper, \(\sigma \) is called the leakage delay.

Consider the following MJNN-RS with mixed time-varying delay:

where \(y(t)=(y_{1}(t),y_{2}(t),\cdots ,y_{n}(t))^\mathrm{T}\in \mathbf {R}^{n}\) is the neuron state vector. The other symbols have the same meanings as in MJNN-DS (1). u(t) represents the sample point controller, which is defined as follows:

where \(K(\cdot )\) is the feedback gain matrix of the sample point controller, \(e(t_{k})\) is the error sampled at time \(t_{k}\), and \(t_{k}\) is the k-th sample point, which satisfies:

Assume that the sampling intervals are bounded, that is, there exists a positive constant \(d_{3}\) such that \(t_{k+1}-t_{k}\le d_{3}\) for all \(k\ge 0\).

Remark 2

Due to the introduction of the discrete term \(e(t_{k})\), the synchronization analysis of the system becomes more difficult. In this paper, the input delay method is used to deal with the discrete term. Define a piecewise-continuous delay function:

It follows that \(0\le d_{3}(t)\le d_{3}\). In summary, the sample point controller is converted as follows:
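As a minimal sketch of the input delay idea, assume the usual construction \(d_{3}(t)=t-t_{k}\) for \(t_{k}\le t<t_{k+1}\), so that \(e(t_{k})=e(t-d_{3}(t))\). This form is an assumption made here for illustration, and the sampling instants `SAMPLE_TIMES`, the bound `D3` and the function name `input_delay` are hypothetical. The code evaluates the sawtooth delay on a time grid so that the bound \(0\le d_{3}(t)\le d_{3}\) can be checked numerically.

```python
from bisect import bisect_right


def input_delay(t, sample_times):
    """Return d3(t) = t - t_k, where t_k is the latest sample time <= t."""
    k = bisect_right(sample_times, t) - 1
    if k < 0:
        raise ValueError("t precedes the first sample time")
    return t - sample_times[k]


# Hypothetical aperiodic sampling instants with t_{k+1} - t_k <= D3.
SAMPLE_TIMES = [0.0, 0.4, 0.9, 1.2, 1.7]
D3 = 0.5

# Sawtooth delay on a grid over [0, 1.7): it resets to 0 at each sample point.
delays = [input_delay(0.01 * i, SAMPLE_TIMES) for i in range(170)]
```

The delay grows with slope one between sample points and jumps back to zero at each \(t_{k}\), which is why only the upper bound \(d_{3}\) of the sampling interval enters the analysis.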

Let \(e(t)=y(t)-x(t)\), \(g(e(\cdot ))=f(y(\cdot ))-f(x(\cdot ))\). The MJNN error system with mixed time-varying delay is defined as follows:

Some lemmas are given below, which play a key role in the calculation of this paper.

Lemma 1

(Affine Bessel–Legendre inequalities)[8] If the function \(x(\cdot )\) satisfies \(x(\cdot ):[a,b]\rightarrow \mathbf {R}^{n}\) and \(N\in \mathbf {N}\), given any positive definite matrix \(R=R^\mathrm{T}\), there exists a matrix X such that the following relation holds

where

Remark 3

Unlike the traditional Bessel–Legendre inequalities [21], the right side of the inequality in Lemma 1 is affine in the length of the integral interval, so it can be easily handled by convexity arguments. In addition, Lemma 1 reduces to existing inequalities in the literature under special conditions, such as the affine Jensen inequality [2] and the affine Wirtinger integral inequality [6], which shows that the inequality of Lemma 1 is more general.

Remark 4

Compared with the traditional Bessel–Legendre inequalities [21], Lemma 1 introduces \((N+1)(N+2)n^{2}\) additional scalar decision variables because of the additional matrix X. For example, \(N=2\) and \(n=3\) give \(3\cdot 4\cdot 9=108\) additional variables.

Lemma 2

(Wirtinger double integral inequalities) [17] If constants m and n satisfy \(m<n\), then for any positive definite matrix \(\mathbb {H}\) and any function \(x:[m,n] \rightarrow \mathbf {R}^{n}\), the following inequalities hold

where

Remark 5

Lemma 2 adds a double integral term to the Wirtinger integral inequality [22]. At the same time, \(\varTheta _{d1}\) and \(\varTheta _{d2}\) on the right side of the inequality contain more sub-terms. Therefore, Lemma 2 captures more of the internal information of the system when the derivative of the Lyapunov functional is bounded, and so it is less conservative.

Lemma 3

[3] Suppose that the time-varying transition rate matrix \(\varPi (t)\) lies in a polytope \(\mathcal {D}\) with M vertices. Then the domain \(\mathcal {D}_{1}\) is described by the following expression:

where \(\varPi ^{(l)}\ (l=1,2,\cdots ,M)\) are the vertices and r(t) is the parameter vector, whose rate of change is assumed to have known bounds. Accordingly, \(\dot{r}(t)\) satisfies the following:

Remark 6

Since \(\sum ^{M}_{l=1}r_{l}(t)=1\), differentiating gives \(\sum ^{M-1}_{l=1}\dot{r}_{l}(t)+\dot{r}_{M}(t)=0\). Hence \(\dot{r}_{M}(t)\) satisfies \(\mid \dot{r}_{M}(t)\mid \le \sum ^{M-1}_{l=1}v_{l}\).
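As a worked illustration, take the two-vertex polytope \(\varPi (t)=\sin ^{2}(t)\varPi ^{(1)}+\cos ^{2}(t)\varPi ^{(2)}\) used in the numerical examples of this paper, so that \(M=2\), \(r_{1}(t)=\sin ^{2}(t)\) and \(r_{2}(t)=\cos ^{2}(t)\). The bound \(v_{1}=1\) below is one admissible choice derived here, under the reading that \(v_{l}\) bounds \(\mid \dot{r}_{l}(t)\mid \); it is not a value quoted from the source.

\[
r_{1}(t)+r_{2}(t)=1,\qquad \dot{r}_{1}(t)=2\sin (t)\cos (t)=\sin (2t),\qquad \dot{r}_{2}(t)=-\sin (2t),
\]

\[
\dot{r}_{1}(t)+\dot{r}_{2}(t)=0,\qquad \mid \dot{r}_{1}(t)\mid \le 1=:v_{1},\qquad \mid \dot{r}_{2}(t)\mid =\mid \dot{r}_{1}(t)\mid \le v_{1}.
\]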

Lemma 4

[4] If the vector function x satisfies \(x:[0,\varrho ]\rightarrow \mathbf {R}^{n}\), given any positive definite matrix \(\mathcal {U}\) and positive scalar \(\varrho \), the following relation holds

Lemma 5

[28] For any real matrices D, E, F and scalar \(\varepsilon >0\), when \(F^\mathrm{T}F\le I\) is satisfied, the following inequalities hold
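For orientation, a bound of this type is commonly stated in the robust control literature in the form below. The displayed form is assumed here for illustration, and the scalar case shows why such a bound holds.

\[
DFE+E^\mathrm{T}F^\mathrm{T}D^\mathrm{T}\le \varepsilon ^{-1}DD^\mathrm{T}+\varepsilon E^\mathrm{T}E .
\]

For scalars d, e and f with \(f^{2}\le 1\), this reduces to

\[
2def\le 2\mid d\mid \mid e\mid \le \varepsilon ^{-1}d^{2}+\varepsilon e^{2},\qquad \text{since}\qquad \left( \varepsilon ^{-1/2}\mid d\mid -\varepsilon ^{1/2}\mid e\mid \right) ^{2}\ge 0 .
\]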

Assumption (A1) The neuron excitation function \(f(\cdot )\) satisfies the following conditions:

where \(u_{i}\) and \(v_{i}\) are arbitrary real numbers, and \(u\ne v\). \(l_{i}\) are known constants.
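The following minimal Python sketch reads Assumption (A1) as a Lipschitz-type bound \(\mid f_{i}(u)-f_{i}(v)\mid \le l_{i}\mid u-v\mid \), which is an assumption about its form made here for illustration. It checks numerically that \(f=\tanh \), the excitation function used later in Example 4, is compatible with \(l_{i}=1\). The sampling range, the seed and the helper name `lipschitz_ratio` are hypothetical choices.

```python
import math
import random


def lipschitz_ratio(f, u, v):
    """Return |f(u) - f(v)| / |u - v| for u != v."""
    return abs(f(u) - f(v)) / abs(u - v)


rng = random.Random(1)
ratios = []
for _ in range(10_000):
    u, v = rng.uniform(-5, 5), rng.uniform(-5, 5)
    if u != v:
        ratios.append(lipschitz_ratio(math.tanh, u, v))

# Largest observed slope between two sample points; for tanh it should
# not exceed 1 (up to rounding), consistent with l_i = 1.
worst = max(ratios)
```

A random check of this kind is not a proof; for tanh the bound follows from the mean value theorem because its derivative never exceeds 1.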

3 Main results

Theorem 1

Given scalars \(d_{i}>0\ (i=1,2,3)\), \(h_{1}\) and \(h_{2}\) such that \(d_{i}(t)\in [0,d_{i}]\ (i=1,2,3)\) and \(\dot{d}_{1}(t)\in [h_{1},h_{2}]\), MJNN-DS (1) and MJNN-RS (2) achieve complete synchronization if there exist symmetric matrices \(P_{P}^{(l)}>0\in \mathbf {R}^{7n\times 7n}\), \(Q_{i}>0\in \mathbf {R}^{n\times n}\ (i=1,2,3,4)\), \(R_{i}>0\in \mathbf {R}^{n\times n}\ (i=1,2)\), \(Z_{i}>0\in \mathbf {R}^{n\times n}\ (i=1,2)\), \(S>0\in \mathbf {R}^{n\times n}\), matrices \(X_{i}\in \mathbf {R}^{4n\times 3n}\ (i=1,2,3,4)\), and matrices \(M_{1}\), \(M_{2}\) and \(\chi _{i}\) of suitable dimensions such that the following hold:

where

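\(e_{i}\ (i=1,\cdots ,24)\in \mathbf {R}^{n\times 24n}\) are block entry matrices, that is, \(e_{i}\) has the identity matrix in its i-th \(n\times n\) block and zeros elsewhere. The sample point controller gains are then obtained as \(K_{i}=M_{2}^{-1}\chi _{i}\).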
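Once an LMI solver has returned \(M_{2}\) and \(\chi _{i}\), the gain recovery step \(K_{i}=M_{2}^{-1}\chi _{i}\) is a linear solve. The following is a minimal Python sketch of that step only. The numerical values of `M2` and `CHI` are hypothetical and are not solver output from this paper, and the helper name `solve_left` is introduced here for illustration.

```python
def solve_left(m, x):
    """Return m^{-1} x by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    cols = len(x[0])
    # Augmented matrix [m | x], copied so the inputs are not modified.
    aug = [list(map(float, m[i])) + list(map(float, x[i])) for i in range(n)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[pivot][c]) < 1e-12:
            raise ValueError("M2 is singular; the gain cannot be recovered")
        aug[c], aug[pivot] = aug[pivot], aug[c]
        p = aug[c][c]
        aug[c] = [v / p for v in aug[c]]
        for r in range(n):
            if r != c:
                factor = aug[r][c]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:n + cols] for row in aug]


# Hypothetical LMI solution values, chosen only for illustration.
M2 = [[2.0, 1.0],
      [0.0, 4.0]]
CHI = [[-4.0, 1.0],
       [0.0, -8.0]]

K = solve_left(M2, CHI)  # gain satisfying M2 * K = CHI
```

Solving the linear system directly avoids forming \(M_{2}^{-1}\) explicitly, which is usually preferable numerically.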

Proof

An improved LKF is defined as \(V(x(t),t,r(t))=V_{1}(t)+V_{2}(t)+V_{3}(t)+V_{4}(t)+V_{5}(t)\), where

where

Differentiating V(x(t), t, r(t)) and applying Lemma 1 and Lemma 2 to \(\dot{V}_{3}(t)\) and \(\dot{V}_{4}(t)\), respectively, we get the following results

where

The definitions of \(\varPhi _{1}\), \(\varPhi _{2}\), M, \(\varPi _{1}\), \(\varPi _{2}\), \(\varPi _{3}\) and \(\varPi _{4}\) are given in (10)–(13).

The following inequalities are defined according to Assumption (A1)

where \(L=\mathrm {diag}\{l_{1},l_{2},\cdots ,l_{n}\}\). Meanwhile, for any positive constants \(\delta _{1}\), \(\delta _{2}\) and \(\delta _{3}\), the following inequalities can be obtained

From Lemma 4, the following can be obtained

For any matrices \(M_{1}\) and \(M_{2}\) of suitable dimensions, the following equation holds

Adding (15)–(19) to \(\dot{V}_{1}(t)\)–\(\dot{V}_{5}(t)\), collecting terms, and separating the deterministic terms from the uncertain terms in \(A(\cdot )\), \(B(\cdot )\), \(C(\cdot )\) and \(D(\cdot )\), the following result is obtained:

where \(\bar{\varPhi }_{3}\) is a matrix consisting of uncertain terms and \(\varPhi _{3}\) is a matrix consisting of deterministic terms, as shown in (14). Next, Lemma 5 is applied to the matrix \(\bar{\varPhi }_{3}\), which yields:

For convenience, let \(M_{1}=\varepsilon _{1}M_{2}\) and \(\chi _{i}=M_{2}K_{i}\), where \(\varepsilon _{1}\) is an arbitrary real number. Combining this with (20), we get:

where

Therefore, as long as (22) is negative definite, \(\dot{V}(x(t),t,r(t))\) is strictly negative definite for \(d_{1}(t)\in [0,d_{1}]\) and \(\dot{d}_{1}(t)\in [h_{1},h_{2}]\). According to Lyapunov stability theory, under the control of the sample point controller, MJNN-DS (1) and MJNN-RS (2) are completely synchronized. The sample point controller gains are obtained as \(K_{i}=M_{2}^{-1}\chi _{i}\).

Remark 7

In (24), because of the term \(\frac{dP_{P}(r(t))}{dt}\), the conditions cannot be solved directly in MATLAB, so we use Lemma 3 to transform \(P_{P}(r(t))\). The result is as follows:

where \(P_{P}^{(l)}\) denotes the matrix at the l-th vertex of the polytope. The time-varying transition rates in \(P_{P}(r(t))\) are then transformed as follows:

According to (22), we have

where \(\bar{\varUpsilon }_{P}^{(ls)}(d_{i}(t),\dot{d}_{1}(t))\) is identical to \(\varUpsilon (d_{i}(t),\dot{d}_{1}(t))\) except for the block \(\bar{\varOmega }_{P}^{(ls)}(d_{i}(t),\dot{d}_{1}(t))\), which is expressed as follows:

We can obtain (6)–(9) by treating \(\sum ^{M-1}_{n=1}\dot{r}_{n}(t)(P_{P}^{(n)}-P_{P}^{(M)})\) with the method in [3]. Therefore, as long as (6) is satisfied, (22) is strictly negative definite. This completes the proof. \(\square \)

Remark 8

When using Lemma 1 to deal with \(\dot{V}(t)\), we set the Legendre order \(N=2\). If we take \(N=1\), we only need to replace \(\eta _{1}(t)\) with \(\bar{\eta }_{1}(t)=[e^\mathrm{T}(t)\quad e^\mathrm{T}(t-d_{1}(t))\quad e^\mathrm{T}(t-d_{1})\quad \int ^{t}_{t-d_{1}(t)}e^\mathrm{T}(s)ds\quad \int ^{t-d_{1}(t)}_{t-d_{1}}e^\mathrm{T}(s)ds]^\mathrm{T}\), and the rest of the derivation is essentially the same as in Theorem 1.

Remark 9

If we increase the Legendre order N, we can get a tighter bound for the integral term in \(\dot{V}_{3}(t)\). In this case, the Lyapunov functional, especially \(\eta _{1}(t)\) in \(V_{1}\), should be augmented accordingly as N increases in order to obtain a less conservative stability condition. \(N=1\) and \(N=2\) correspond to \(\int ^{b}_{a}e(s)ds\) and \(\frac{1}{(b-a)}\int ^{b}_{a}\int ^{b}_{\theta }e(s)dsd\theta \) in \(\eta _{1}(t)\), respectively. When \(N>2\), the corresponding term is \(\frac{1}{(b-a)^{N-1}}\int ^{b}_{a}\int ^{b}_{\alpha _{1}}\cdots \int ^{b}_{\alpha _{N-1}}e(\alpha _{N})d\alpha _{N}\cdots d\alpha _{2}d\alpha _{1}\).
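As a small worked example of the two augmented terms, take the scalar test function \(e(s)=s\) on \([a,b]=[0,1]\); this function is chosen here purely for illustration and does not come from the source.

\[
N=1:\quad \int ^{1}_{0}s\,ds=\frac{1}{2},
\]

\[
N=2:\quad \frac{1}{1-0}\int ^{1}_{0}\int ^{1}_{\theta }s\,ds\,d\theta =\int ^{1}_{0}\frac{1-\theta ^{2}}{2}\,d\theta =\frac{1}{2}\left( 1-\frac{1}{3}\right) =\frac{1}{3}.
\]

The second quantity weights \(e(s)\) differently along the interval, which is the additional information that a higher-order term contributes to \(\eta _{1}(t)\).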

Remark 10

When considering the range of time-varying delays, the usual assumption is \(h_{1}\le h\le h_{2}\), \(d_{1}\le \dot{h}\le d_{2}\). For the convenience of the following discussion, we present the delay and its derivative in a two-dimensional plane, as shown in Fig. 1.

In the plane, this region is a rectangle whose four vertices are \((h_{1},d_{1})\), \((h_{1},d_{2})\), \((h_{2},d_{1})\) and \((h_{2},d_{2})\); the area of the rectangle represents the admissible range of the time-varying delay and its derivative. Following [21], this region can be tightened by changing the four vertices to (0, 0), \((h_{1},d_{2})\), \((h_{2},0)\) and \((h_{2},d_{1})\). The resulting shape is shown in Fig. 2. For the same delay interval, the region in Fig. 2 has a smaller area than that in Fig. 1, which indicates that the resulting condition is less conservative. Therefore, Theorem 1 can be sharpened in this way, and the result is as follows.

Theorem 2

Under the same conditions as in Theorem 1, MJNN-DS (1) and MJNN-RS (2) achieve complete synchronization if the following hold:

where \(\varUpsilon _{P}^{(ls)}(\cdot )+\varUpsilon _{P}^{(sl)}(\cdot )<0\) is defined in (6).

4 Numerical examples

Firstly, Examples 1 and 2 illustrate the validity of affine Bessel–Legendre inequalities and Wirtinger double integral inequalities. Secondly, Example 3 illustrates the effectiveness of optimizing two-dimensional space of time delay. Finally, Example 4 shows that under the control of sample point controller, MJNN-DS (1) and MJNN-RS (2) can achieve synchronization.

Example 1

Consider the following two modes and matrix parameters [16]:

with transition rate matrix

Let \(h_{2}=0\), \(\mu _{11}=-7\). We compare the upper bounds of the time-varying delay. The results are shown in Table 1. Table 1 shows that the affine Bessel–Legendre inequalities become more effective as N increases.

Example 2

Consider the following two modes and matrix parameters [16]:

with transition rate matrix

By setting different upper bounds \(h_{2}\) on the delay derivative, we obtain different upper bounds on the delay, as shown in Table 2. Table 2 shows that the affine Bessel–Legendre inequalities become more effective as N increases.

Example 3

Consider MJNN-DS (1) and MJNN-RS (2) with two modes and the matrix parameters:

Assume that the transition rate matrix is time-varying and lies in the polytope \(\varPi (t)=\sin ^{2}(t)\varPi ^{(1)}+\cos ^{2}(t)\varPi ^{(2)}\) with the following vertices:

Let \(\delta _{1}=\delta _{2}=\delta _{3}=\varepsilon =\varepsilon _{1}=0.1\). When \(N=2\), the time-varying delay range obtained from Theorem 1 is \(0\le d_{1}(t)\le 1.63\). Similarly, the time-varying delay range obtained from Theorem 2 is \(0\le d_{1}(t)\le 1.70\). This shows that Theorem 2 is effective in optimizing the two-dimensional geometric region of the time delay.
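The time-varying transition rate matrix used in this example is a convex combination of two vertices with weights \(\sin ^{2}(t)\) and \(\cos ^{2}(t)\). The following minimal Python sketch evaluates such a combination and lets one check that it remains a valid rate matrix at every time. The vertex values `PI_1` and `PI_2` are hypothetical placeholders and not the vertices used in the paper, and `rate_matrix` and `row_sums` are illustrative helper names.

```python
import math

# Hypothetical polytope vertices (valid rate matrices); not the paper's values.
PI_1 = [[-2.0, 2.0], [1.0, -1.0]]
PI_2 = [[-5.0, 5.0], [4.0, -4.0]]


def rate_matrix(t):
    """Return Pi(t) = sin^2(t) * PI_1 + cos^2(t) * PI_2."""
    r1, r2 = math.sin(t) ** 2, math.cos(t) ** 2
    return [[r1 * a + r2 * b for a, b in zip(row1, row2)]
            for row1, row2 in zip(PI_1, PI_2)]


def row_sums(matrix):
    """Return the sum of each row; all should be zero for a rate matrix."""
    return [sum(row) for row in matrix]


# Evaluate the combination on a time grid over [0, 10).
samples = [rate_matrix(0.1 * k) for k in range(100)]
```

Because the weights are non-negative and sum to one, zero row sums and non-negative off-diagonal entries at the vertices carry over to every \(\varPi (t)\).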

Example 4

Consider MJNN-DS (1) and MJNN-RS (2) with two modes and the matrix parameters:

Assume that the transition rate matrix is time-varying and lies in the polytope \(\varPi (t)=\sin ^{2}(t)\varPi ^{(1)}+\cos ^{2}(t)\varPi ^{(2)}\) with the following vertices:

Let \(\delta _{1}=\delta _{2}=\delta _{3}=\varepsilon =\varepsilon _{1}=0.1\), and let the ranges of the mixed time-varying delays be \(0\le d_{1}(t)\le 1.7\), \(0\le d_{2}(t)\le 0.3\), \(0\le d_{3}(t)\le 1.9\) and \(0.1\le \dot{d}_{1}(t)\le 0.6\). Substituting the above data into Theorem 2, we obtain the parameters of the sample point controller as follows:

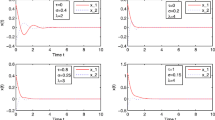

The neuron excitation function is \(f(x)=\tanh (x)\). The initial condition is \(x_{0}(\theta )=[-0.3,2,1.2]\). As shown in Fig. 3, when MJNN-DS (1) takes the above parameters, it shows obvious chaotic characteristics. Figure 4 shows a Markov jump response curve with time-varying perturbations of the transition rates. Figures 5 and 6 show the state trajectories of MJNN-DS (1) and MJNN-RS (2). Figures 7 and 8 show the convergence of the errors between MJNN-DS (1) and MJNN-RS (2). The numerical simulation shows the validity of the sample point controller for the complete synchronization of MJNN-DS (1) and MJNN-RS (2) with mixed time-varying delay and parameter uncertainties.
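To illustrate the mechanism of sample point control in the simplest possible setting, the following Python sketch simulates a scalar drive-response pair with a zero-order-hold controller \(u(t)=Ke(t_{k})\). It is a toy model under strong assumptions: no Markov jumps, no delays, no uncertainties, and hypothetical values for the parameters `a` and `b`, the gain, the sampling period and the initial states. It does not reproduce the system or the figures of this example.

```python
import math


def simulate_sync(k_gain, period, t_end=5.0, dt=0.001):
    """Euler simulation of a scalar drive/response pair with a sampled controller.

    Drive:    x' = -a x + b tanh(x)
    Response: y' = -a y + b tanh(y) + u,  u = k_gain * e(t_k),  e = y - x
    Returns the list of |e| values after each Euler step.
    """
    a, b = 1.0, 2.0            # hypothetical scalar parameters (bistable drive)
    x, y = -0.3, 2.0           # hypothetical initial states
    steps_per_sample = round(period / dt)
    u = 0.0
    errors = []
    for step in range(round(t_end / dt)):
        if step % steps_per_sample == 0:
            u = k_gain * (y - x)   # control is refreshed only at sample points
        dx = -a * x + b * math.tanh(x)
        dy = -a * y + b * math.tanh(y) + u
        x, y = x + dt * dx, y + dt * dy
        errors.append(abs(y - x))
    return errors


errors = simulate_sync(k_gain=-4.0, period=0.1)   # with sample point control
free = simulate_sync(k_gain=0.0, period=0.1)      # without control
```

With these values the uncontrolled pair settles at two different equilibria, so the error does not vanish, whereas the sampled feedback drives the error toward zero. The gain here is picked by hand, not computed from Theorem 2.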

5 Conclusions

In this paper, a sample point controller is used to synchronize the drive and response systems of an MJNN with mixed time-varying delay and uncertain parameters. When dealing with the error system, Wirtinger double integral inequalities and affine Bessel–Legendre inequalities are applied to the Lyapunov functional to reduce conservativeness. In addition, when discussing the two-dimensional geometric area of the time-varying delay, the conservativeness is reduced by changing the vertices of the region without changing the range of the delay. Finally, numerical simulation verifies that MJNN-DS and MJNN-RS are completely synchronized under the control of the sample point controller, and the parameters of the controller are obtained.

References

Ahn, C.K., Shi, P., Wu, L.: Receding horizon stabilization and disturbance attenuation for neural networks with time-varying delay. IEEE Trans. Cybern. 45(12), 2680–2692 (2017)

Briat, C.: Convergence and equivalence results for the Jensen’s inequality—application to time-delay and sampled-data systems. IEEE Trans. Autom. Control 56(7), 1660–1665 (2012)

Ding, Y., Liu, H.: Stability analysis of continuous-time Markovian jump time-delay systems with time-varying transition rates. J. Frankl. Inst. 353(11), 2418–2430 (2016)

Gu, K., Kharitonov, V.L., Chen, J.: Stability of Time-Delay Systems. Birkhäuser, Boston (2003)

Guan, H., Gao, L.: Delay-dependent robust stability and \(H_{\infty }\) control for jump linear systems with interval time-varying delay. In: Proceedings of the 26th Chinese Control Conference, pp. 609–614. IEEE, Zhangjiajie (2007)

Gyurkovics, E.: A note on Wirtinger-type integral inequalities for time-delay systems. Pergamon Press, Inc, Oxford (2015)

Kasemsuk, C., Oyama, G., Hattori, N.: Management of impulse control disorders with deep brain stimulation: a double-edged sword. J. Neurol. Sci. 374, 63–68 (2017)

Lee, W.I., Lee, S.Y., Park, P.G.: Affine Bessel–Legendre inequality: application to stability analysis for systems with time-varying delays. Automatica 93, S0005109818301687 (2018)

Li, R.G., Wu, H.N.: Adaptive synchronization control based on QPSO algorithm with interval estimation for fractional-order chaotic systems and its application in secret communication. Nonlinear Dyn. 92(3), 1–25 (2018)

Lin, F.F., Zeng, Z.Z.: Synchronization of uncertain fractional-order chaotic systems with time delay based on adaptive neural network control. Acta Phys. Sin. 66, 9 (2017)

Mayer, J., Schuster, H.G., Claussen, J.C., et al.: Corticothalamic projections control synchronization in locally coupled bistable thalamic oscillators. Phys. Rev. Lett. 99(6), 068102 (2007)

Mohammadzadeh, A., Ghaemi, S.: Robust synchronization of uncertain fractional-order chaotic systems with time-varying delay. Nonlinear Dyn. 93(4), 1809–1821 (2018)

Nagamani, G., Joo, Y.H., Radhika, T.: Delay-dependent dissipativity criteria for Markovian jump neural networks with random delays and incomplete transition probabilities. Nonlinear Dyn. 91(4), 2503–2522 (2018)

Nagamani, G., Joo, Y.H., Radhika, T.: Delay-dependent dissipativity criteria for Markovian jump neural networks with random delays and incomplete transition probabilities. Nonlinear Dyn. 91(56), 2503–2522 (2018)

Novienko, V., Ratas, I.: In-phase synchronization in complex oscillator networks by adaptive delayed feedback control. Phys. Rev. E 98(4), 042302 (2018)

Xu, N., Sun, L.: An improved delay-dependent stability analysis for Markovian jump systems with interval time-varying-delays. IEEE Access 6, 33055–33061 (2018)

Park, M., Kwon, O., Park, J.H., Lee, S., Cha, E.: Stability of time-delay systems via Wirtinger-based double integral inequality. Automatica 55, 204–208 (2015)

Park, I.S., Kwon, N.K., Park, P.G.: Dynamic output-feedback control for singular Markovian jump systems with partly unknown transition rates. Nonlinear Dyn. 95(4), 1–12 (2019)

Rong, Z., Yang, Y., Xu, Z., et al.: Function projective synchronization in drive—response dynamical network. Phys. Lett. A 374(30), 3025–3028 (2010)

Schibli, T.R., Kim, J., Kuzucu, O., et al.: Attosecond active synchronization of passively mode-locked lasers by balanced cross correlation. Opt. Lett. 28(11), 947–9 (2003)

Seuret, A., Gouaisbaut, F.: Stability of linear systems with time-varying delays using Bessel–Legendre inequalities. IEEE Trans. Autom. Control 63(1), 225–232 (2017)

Seuret, A., Gouaisbaut, F.: Wirtinger-based integral inequality: application to time-delay systems. Automatica 49(9), 2860–2866 (2013)

Shu, Y., Liu, X.G., Qiu, S., et al.: Dissipativity analysis for generalized neural networks with Markovian jump parameters and time-varying delay. Nonlinear Dyn. 89(3), 2125–2140 (2017)

Sun, L., Xu, N.: Stability analysis of Markovian jump system with multi-time-varying disturbances based on improved interactive convex inequality and positive definite condition. IEEE Access 7, 54910–54917 (2019)

Syed, A.M., Marudai, M.: Stochastic stability of discrete-time uncertain recurrent neural networks with Markovian jumping and time-varying delays. Math. Comput. Model. 54(9–10), 1979–1988 (2011)

Tao, J., Wu, Z.G., Su, H., et al.: Asynchronous and resilient filtering for Markovian jump neural networks subject to extended dissipativity. IEEE Trans. Cybern. 99, 1–10 (2018)

Wang, J., Luo, Y.: Further improvement of delay-dependent stability for Markov jump systems with time-varying delay. In: Proceedings of the 7th World Congress on Intelligent Control and Automation, pp. 6319–6324. IEEE, Chongqing (2008)

Wang, Y., Xie, L., de Souza, C.E.: Robust control of a class of uncertain nonlinear system. Syst. Control Lett. 19(2), 139–149 (1992)

Wang, Y.F., Lin, P., Wang, L.S.: Exponential stability of reaction-diffusion high-order Markovian jump Hopfield neural networks with time-varying delays. Nonlinear Anal. Real World Appl. 13(3), 1353–1361 (2012)

Wang, J., Chen, X., Feng, J., et al.: Synchronization of networked harmonic oscillators subject to Markovian jumping coupling strengths. Nonlinear Dyn. 91(1), 1–13 (2018)

Xu, S., Lam, J., Mao, X.: Delay-dependent \(H_{\infty }\) control and filtering for uncertain Markovian jump systems with time-varying delays. IEEE Trans. Circuits Syst. I Regul. P. 54(9), 2070–2077 (2007)

Zeng, H.B., He, Y., Wu, M., et al.: Free-matrix-based integral inequality for stability analysis of systems with time-varying delay. IEEE Trans. Autom. Control 60(10), 2768–2772 (2015)

Zhang, X., Lv, X., Li, X.: Sampled-data-based lag synchronization of chaotic delayed neural networks with impulsive control. Nonlinear Dyn. 90(3), 2199–2207 (2017)

Zhang, X.M., Han, Q.L., Seuret, A., et al.: An improved reciprocally convex inequality and an augmented Lyapunov–Krasovskii functional for stability of linear systems with time-varying delay. Automatica 84, 221–226 (2017)

Zhao, X., Zeng, Q.: Delay-dependent stability analysis for Markovian jump systems with interval time-varying-delay. Int. J. Autom. Comput. 7(2), 224–229 (2010)

Zhi, Z., Liu, K., Wang, W.Q., et al.: Robust adaptive beamforming against mutual coupling based on mutual coupling coefficients estimation. IEEE Trans. Veh. Technol. 99, 1–1 (2017)

Zhu, X.L., Yang, G.H.: Jensen integral inequality approach to stability analysis of continuous-time systems with time-varying delay. IET Control Theory Appl. 2(6), 524–534 (2008)

Acknowledgements

Project supported by the National Natural Science Foundation of China (Grant Nos. 61403278, 61503280). The authors are very indebted to the Editor and the anonymous reviewers for their insightful comments and valuable suggestions that have helped improve the academic research.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Cite this article

Xu, N., Sun, L. Synchronization control of Markov jump neural networks with mixed time-varying delay and parameter uncertain based on sample point controller. Nonlinear Dyn 98, 1877–1890 (2019). https://doi.org/10.1007/s11071-019-05293-y
