Abstract

This paper addresses the design of state estimators for fractional-order memristive neural networks (FMNNs) with time delays. A dedicated state estimator for FMNNs is needed for two reasons: (1) different initial conditions of memristive neural networks (MNNs) may cause parameter mismatch; (2) state estimation approaches and theories for integer-order neural networks cannot be directly extended to fractional-order neural networks. For the first time, the state estimation problem for FMNNs is investigated. By means of Lyapunov functionals and fractional-order Lyapunov methods, sufficient conditions ensuring that the estimation error system is asymptotically stable are established as linear matrix inequalities (LMIs), which are readily solved with the MATLAB LMI Toolbox. Finally, two examples are presented to show the effectiveness of the proposed theorems.


1 Introduction

It is well known that fractional derivatives generalize integer-order derivatives [1, 2]. Because fractional derivatives have the special feature of infinite memory, fractional-order differential equations describe memory and hereditary properties more effectively and precisely than classical integer-order ones [3, 4]. Research evidence shows that fractional-order electric conductance exists in the cell membrane [5]. Both biological neurons and fractional-order derivatives have memory. Therefore, fractional-order neural networks (FNNs) can describe and simulate neurons in the human brain more accurately than classical integer-order neural networks. Researchers have become interested in the dynamics of FNNs, and their dynamical behaviors have been studied in recent years [6,7,8,9,10,11,12,13,14,15,16].

The word memristor is a contraction of memory and resistor; the device was first postulated as the fourth fundamental circuit element by Chua [17]. The first memristor device was realized at the Hewlett-Packard laboratory [18, 19]. Owing to their long-term memory property, memristors have been used to simulate synapses. Replacing the resistors in classical neural networks with memristors yields a special class of neural networks called memristive neural networks. The first memristor-based neural network was proposed by Pavlov [20]. The network contains only three neurons connected by two synapses simulated by memristors, but it can realize the basic function of brain associative memory. An associative memory function was realized in [21], where the neural network contains three electronic neurons and the synapses were also simulated by memristors. Guo et al. [22] pointed out that the number of equilibria in an n-neuron memristor-based cellular neural network increases significantly, up to \(2^{2n^2+n}\), compared with \(2^n\) in a classical cellular neural network. Using memristor-based cellular neural networks for associative memories will therefore enhance the storage capacity. At the same time, some studies have shown that memristor-based neural networks also have great potential for application in nonlinear circuits [23,24,25] and in the new generation of powerful brain-like neural computers [26,27,28]. Memristors have a varying, history-dependent resistance, which gives memristor-based neural networks more complex and richer dynamics than classical neural networks. Recently, important papers have been published on the dynamics of MNNs, covering stability [29], synchronization [30,31,32,33,34], attractivity [22], and passivity [35].

It should be pointed out that fractional-order systems have an advantage in describing the hereditary pinched hysteresis property of memristors. Therefore, fractional derivatives are naturally combined with memristors to build a new kind of neural network called FMNNs. Mittag-Leffler stability and synchronization criteria for FMNNs were derived in [36]. By means of a new fractional derivative inequality, global Mittag-Leffler stabilization for a class of FMNNs was investigated in [37]. Time delay is unavoidable in neural networks and is regarded as a source of poor system behavior, such as instability, bifurcation, and chaotic attractors [38,39,40]. It is therefore important to investigate FMNNs with time delays [41,42,43,44,45]. Using a fractional-order comparison theorem and linear feedback control, Chen et al. studied stability and synchronization conditions for FMNNs with time delay [41]. Adaptive synchronization criteria for FMNNs with time delay were given in [42].

In applications of MNNs, the neuron state information is often needed by control schemes such as pinning control or state feedback control [46,47,48,49]. For MNNs, however, it is often difficult to obtain the state information of all neurons because of network congestion, packet dropouts, or missing measurements. It is therefore important to estimate the neuron states from the available network outputs. So far, there are some results on state estimation for integer-order MNNs [50,51,52]. To the best of our knowledge, there are few results on state estimation of FMNNs with or without time delay. Our goal is to estimate the system states in order to better understand the true system information, which will benefit important applications in control.

Motivated by the reasons above, we focus on designing a proper state estimator for a class of FMNNs with time delay. The novelty of this paper lies in three aspects. (1) For the first time, state estimators for a class of FMNNs with and without time delays are designed. (2) By means of Lyapunov approaches for fractional-order systems, the state estimation theorems are expressed in terms of LMIs. Furthermore, numerical simulation examples are given to illustrate the validity and applicability of the obtained theorems. (3) The methods used in this paper are also valid for the state estimation problem for FMNNs with discontinuous activation functions.

The rest of this paper is organized as follows. In Sect. 2, we formulate the models under investigation and recall some fundamental definitions and lemmas. In Sect. 3, state estimation criteria are established. In Sect. 4, two examples are used to demonstrate the effectiveness of the proposed theorems. Finally, concluding remarks are given in Sect. 5.

2 Preliminaries

We introduce the mathematical models of FMNNs with time delay, and recall the Caputo fractional derivative and some lemmas.

Notation Throughout this paper, let \({\mathbb {R}},\) \({\mathbb {R}}^{m\times n}\) and \({\mathbb {R}}^n\) represent the sets of real numbers, \(m\times n\) real matrices and n-dimensional real vectors, respectively. \(v^{T}\) (respectively, \(A^{T}\)) denotes the transpose of a vector v (respectively, a matrix A). \(I_n\) is the \(n\times n\) identity matrix. \(|\cdot |\) is the Euclidean norm in \({\mathbb {R}}^n\). The notation \(P>0\) means that P is a symmetric positive definite matrix. \(\lambda _\mathrm{max}(A)\) (\(\lambda _\mathrm{min}(A)\)) is the maximum (minimum) eigenvalue of the matrix A. The symbol \(*\) denotes a symmetric term in a matrix.

Definition 1

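[1, 2] The fractional integral of order q of a function \(\xi \) is defined as follows:

$$\begin{aligned} I^{q}\xi (t)=\frac{1}{\varGamma (q)}\int _{t_0}^t(t-s)^{q-1}\xi (s)\mathrm{d}s, \end{aligned}$$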

where \(t\ge t_0\) and \(q>0.\)

Definition 2

[1, 2] Suppose \(\xi \in {\mathcal {C}}^m([t_0,+\infty ), {\mathbb {R}}).\) Its Caputo derivative of order q is defined as follows:

$$\begin{aligned} D^{q}\xi (t)=\frac{1}{\varGamma (m-q)}\int _{t_0}^t(t-s)^{m-q-1}\xi ^{(m)}(s)\mathrm{d}s, \end{aligned}$$

where \(t\ge t_0\) and m is a positive integer satisfying \(m-1<q<m\). In particular, when \(0<q<1,\) \(D^{q}\xi (t)=\frac{1}{\varGamma (1-q)}\int _{t_0}^t(t-s)^{-q}\xi '(s)\mathrm{d}s.\)
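The special case \(0<q<1\) of Definition 2 can be evaluated numerically. The following is a minimal sketch, assuming the widely used L1 discretization (piecewise-linear interpolation of \(\xi \) on a uniform grid); the function name `caputo_l1` and the grid size `n` are illustrative choices and are not part of the paper.

```python
import math


def caputo_l1(xi, t0, t, q, n=200):
    """Approximate the Caputo derivative of order 0 < q < 1 of xi at time t.

    Uses the L1 scheme: xi' is replaced by a finite difference on each of the
    n sub-intervals of [t0, t], and the kernel (t - s)^(-q) is integrated exactly.
    """
    if not 0.0 < q < 1.0:
        raise ValueError("this sketch only covers 0 < q < 1")
    h = (t - t0) / n
    samples = [xi(t0 + k * h) for k in range(n + 1)]
    total = 0.0
    for k in range(n):
        j = n - 1 - k  # distance (in steps) of sub-interval k from the end point t
        weight = (j + 1) ** (1.0 - q) - j ** (1.0 - q)
        total += weight * (samples[k + 1] - samples[k])
    return total * h ** (-q) / math.gamma(2.0 - q)


if __name__ == "__main__":
    # For xi(s) = s^2 the exact Caputo derivative is 2 t^(2-q) / Gamma(3-q).
    approx = caputo_l1(lambda s: s * s, 0.0, 1.0, 0.5)
    exact = 2.0 / math.gamma(2.5)
    print(approx, exact)
```

The scheme is exact for linear functions and, for smooth \(\xi \), its error shrinks as the grid is refined, so it is a convenient sanity check for closed-form fractional derivatives.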

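Consider the following FMNNs with a time delay:

$$\begin{aligned} D^{\alpha }v(t)=-Cv(t)+A(v(t)){\tilde{f}}(v(t))+B(v(t)){\tilde{f}}(v(t-\sigma ))+J, \end{aligned}\qquad (1)$$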

where \(0<\alpha <1;\) \(v(t)=(v_1(t), v_2(t),\ldots , v_n(t))^{T}\in {\mathbb {R}}^n\) is the neuron state vector; \(C=\mathrm {diag}\{c_1,c_2,\ldots ,c_n\}>0;\) \({\tilde{f}}(v(t))=({\tilde{f}}_1(v_1(t)),{\tilde{f}}_2(v_2(t)),\ldots , {\tilde{f}}_n(v_n(t)))^{T}\) and \({\tilde{f}}(v(t-\sigma ))=({\tilde{f}}_1(v_1(t-\sigma )),{\tilde{f}}_2(v_2(t-\sigma )),\ldots , {\tilde{f}}_n(v_n(t-\sigma )))^{T}\) are the neuron activation functions without and with time delay, respectively; \(\sigma \) denotes the time delay; \(J=(J_1(t), J_2(t),\ldots , J_n(t))^{T}\in {\mathbb {R}}^n\) denotes an external input vector; and \(A(v(t))=[a_{ij}(v_i(t))]_{n\times n}\), \(B(v(t))=[b_{ij}(v_i(t))]_{n\times n}\) are the memristive connection weight matrices at times \(t\) and \(t-\sigma ,\) respectively. Each entry \(a_{ij}(v_i(t))\) (respectively, \(b_{ij}(v_i(t))\)) takes one of the two values \(a^{*}_{ij},\) \(a^{**}_{ij}\) (respectively, \(b^{*}_{ij},\) \(b^{**}_{ij}\)) depending on the state \(v_i(t).\)

\(T_i>0\) are switching jumps; weights \(a^{*}_{ij},\) \(a^{**}_{ij},\) \(b^{*}_{ij}\) and \(b^{**}_{ij}\) are all constants for \(1\le i,~j\le n\).

In this paper, we consider Filippov solution for all systems because of the discontinuity of \(a_{ij}(v_i(t))\) and \(b_{ij}(v_i(t)).\)
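A minimal sketch of the state-dependent switching, assuming the two-valued threshold rule that is common in the memristive neural network literature: a weight takes its starred value while \(|v_i(t)|\le T_i\) and its double-starred value otherwise. Which constant belongs to which side of the threshold, and the treatment of the boundary \(|v_i(t)|=T_i,\) are assumptions here, and the function names are hypothetical.

```python
def memristive_weight(v_i, threshold, w_star, w_2star):
    """Return the weight selected by neuron state v_i (assumed switching rule)."""
    return w_star if abs(v_i) <= threshold else w_2star


def memristive_matrix(v, thresholds, w_star, w_2star):
    """Build the n x n state-dependent weight matrix [w_ij(v_i)].

    Row i switches as a whole because every entry in that row depends on v_i.
    """
    n = len(v)
    return [
        [memristive_weight(v[i], thresholds[i], w_star[i][j], w_2star[i][j])
         for j in range(n)]
        for i in range(n)
    ]


if __name__ == "__main__":
    a_star = [[1.0, -2.0], [0.5, 3.0]]
    a_2star = [[2.0, -1.0], [0.5, 1.0]]
    print(memristive_matrix([0.2, 1.7], [1.0, 1.0], a_star, a_2star))
```

Because the right-hand side jumps when a state crosses its threshold, classical solutions need not exist, which is why solutions are understood in the Filippov sense.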

- (\(\mathrm {H_{1}}\)): For all \(i\in \{1,\ldots ,n\},\) \({\tilde{f}}_i(0)=0,\) and \({\tilde{f}}_i\) satisfies the Lipschitz condition

$$\begin{aligned} |{\tilde{f}}_i(u)-{\tilde{f}}_i(v)|\le l_i|u-v|,~ \forall u, v\in {\mathbb {R}}, \end{aligned}$$

where \(l_i>0,\) \(L=\mathrm {diag}\{l_1,l_2,\ldots ,l_n\}.\)

Denote \({\hat{a}}_{ij}=\mathrm {max}\{a^{*}_{ij},a^{**}_{ij}\},\) \({\check{a}}_{ij}=\mathrm {min}\{a^{*}_{ij},a^{**}_{ij}\},\) \({\hat{b}}_{ij}=\mathrm {max}\{b^{*}_{ij},b^{**}_{ij}\},\) \({\check{b}}_{ij}=\mathrm {min}\{b^{*}_{ij},b^{**}_{ij}\},\) \(a_{ij}=\frac{1}{2}({\hat{a}}_{ij}+{\check{a}}_{ij}),\) \({\tilde{a}}_{ij}=\frac{1}{2}({\hat{a}}_{ij}-{\check{a}}_{ij}),\) \(b_{ij}=\frac{1}{2}({\hat{b}}_{ij}+{\check{b}}_{ij}),\) \({\tilde{b}}_{ij}=\frac{1}{2}({\hat{b}}_{ij}-{\check{b}}_{ij}).\)
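The quantities just defined are the midpoint and the radius of the interval spanned by the two memristive weight values. A short sketch of the computation follows; the helper name `interval_split` and the sample numbers are illustrative only.

```python
def interval_split(w_star, w_2star):
    """Return (midpoint, radius) matrices for two n x n weight matrices.

    midpoint[i][j] = (max + min) / 2 and radius[i][j] = (max - min) / 2,
    so every admissible weight lies in [midpoint - radius, midpoint + radius].
    """
    n = len(w_star)
    mid = [[0.0] * n for _ in range(n)]
    rad = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            hi = max(w_star[i][j], w_2star[i][j])
            lo = min(w_star[i][j], w_2star[i][j])
            mid[i][j] = 0.5 * (hi + lo)
            rad[i][j] = 0.5 * (hi - lo)
    return mid, rad


if __name__ == "__main__":
    a_mid, a_rad = interval_split([[1.0, -2.0], [0.5, 3.0]],
                                  [[2.0, -1.0], [0.5, 1.0]])
    print(a_mid)  # nominal matrix A
    print(a_rad)  # radius matrix with non-negative entries
```

The radius is non-negative by construction, which is what makes the square roots in the matrices \(E_k\) and \(F_k\) well defined.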

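Based on the theory of fractional differential inclusions [53] and some transformations [54, 55], the FMNNs (1) are equivalent to the following system:

$$\begin{aligned} D^{\alpha }v(t)=-Cv(t)+(A+E_1\varSigma _1(t)F_1){\tilde{f}}(v(t))+(B+E_2\varSigma _2(t)F_2){\tilde{f}}(v(t-\sigma ))+J, \end{aligned}\qquad (2)$$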

where \({\tilde{A}}=({\tilde{a}}_{ij})_{n\times n},\) \({\tilde{B}}=({\tilde{b}}_{ij})_{n\times n},\) \(A=(a_{ij})_{n\times n}\) and \(B=(b_{ij})_{n\times n};\)

\(E_1=(\sqrt{{\tilde{a}}_{11}}\epsilon _1,\ldots ,\sqrt{{\tilde{a}}_{1n}}\epsilon _1,\ldots ,\sqrt{{\tilde{a}}_{n1}}\epsilon _n,\ldots , \sqrt{{\tilde{a}}_{nn}}\epsilon _n)_{n\times n^2},\) \(F_1=(\sqrt{{\tilde{a}}_{11}}\epsilon _1,\ldots ,\sqrt{{\tilde{a}}_{1n}}\epsilon _n,\ldots ,\sqrt{{\tilde{a}}_{n1}}\epsilon _1,\ldots ,\sqrt{{\tilde{a}}_{nn}}\epsilon _n)^T_{n^2\times n},\) \(E_2=(\sqrt{{\tilde{b}}_{11}}\epsilon _1,\ldots , \sqrt{{\tilde{b}}_{1n}}\epsilon _1,\ldots ,\sqrt{{\tilde{b}}_{n1}}\epsilon _n,\ldots ,\sqrt{{\tilde{b}}_{nn}}\epsilon _n)_{n\times n^2},\) \(F_2=(\sqrt{{\tilde{b}}_{11}}\epsilon _1,\ldots ,\sqrt{{\tilde{b}}_{1n}}\epsilon _n,\ldots ,\sqrt{{\tilde{b}}_{n1}}\epsilon _1, \ldots , \sqrt{{\tilde{b}}_{nn}}\epsilon _n)^T_{n^2\times n},\) and \(\varSigma _k(t)\in \{\mathrm {diag}\{\theta _{11}^k(t),\ldots , \theta _{1n}^k(t),\ldots ,\theta _{n1}^k(t),\ldots ,\theta _{nn}^k(t)\}:|\theta _{ij}^k(t)|\le 1,~1\le i,j \le n\}\subset {\mathbb {R}}^{n^2\times n^2},\) \(k=1,2.\) Here \(\epsilon _i\in {\mathbb {R}}^n\) is the vector whose ith element is 1 and whose other elements are 0.

We can prove \(\varSigma _k^T(t)\varSigma _k(t)\le I, k=1,2.\)
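To see what the factorization encodes, the sketch below builds \(E_1\) and \(F_1\) from a radius matrix and checks numerically that the \((i,j)\) entry of \(E_1\varSigma _1F_1\) equals \({\tilde{a}}_{ij}\theta _{ij}^1.\) The column ordering \((1,1),\ldots ,(1,n),\ldots ,(n,n)\) follows the definitions above; the function names and the sample values are illustrative assumptions.

```python
import math


def build_E_F(rad):
    """Build E (n x n^2) and F (n^2 x n) from the radius matrix rad."""
    n = len(rad)
    E = [[0.0] * (n * n) for _ in range(n)]
    F = [[0.0] * n for _ in range(n * n)]
    for i in range(n):
        for j in range(n):
            col = i * n + j  # position of the pair (i, j) in the ordering
            root = math.sqrt(rad[i][j])
            E[i][col] = root  # column col of E is sqrt(rad_ij) * eps_i
            F[col][j] = root  # row col of F is sqrt(rad_ij) * eps_j^T
    return E, F


def perturbation(rad, theta):
    """Return E * diag(theta_11, ..., theta_nn) * F as an n x n matrix."""
    n = len(rad)
    E, F = build_E_F(rad)
    return [
        [sum(E[r][k] * theta[k // n][k % n] * F[k][c] for k in range(n * n))
         for c in range(n)]
        for r in range(n)
    ]


if __name__ == "__main__":
    rad = [[0.5, 0.5], [0.0, 1.0]]
    theta = [[1.0, -0.3], [0.7, 0.2]]
    print(perturbation(rad, theta))  # entrywise rad[i][j] * theta[i][j]
```

With \(|\theta _{ij}^k|\le 1\) the perturbation therefore sweeps exactly the interval \([-{\tilde{a}}_{ij},{\tilde{a}}_{ij}]\) around the nominal weight \(a_{ij}.\)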

In relatively large-scale memristor-based neural networks, which are state-dependent systems, it is not easy to obtain the state information of all neurons. Instead, the neuron states can be estimated from the available network output.

The network measurement \(u(t)\in {\mathbb {R}}^m\) is assumed to be of the form

$$\begin{aligned} u(t)=Dv(t)+h(t,v(t)), \end{aligned}\qquad (3)$$

where \(D\in {\mathbb {R}}^{m\times n}\) is a known constant matrix, and \(h:{\mathbb {R}}^+\times {\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) denotes the neuron-dependent nonlinear disturbance satisfying the Lipschitz condition

$$\begin{aligned} |h(t,x)-h(t,y)|\le |H(x-y)|,~\forall x,y\in {\mathbb {R}}^n, \end{aligned}\qquad (4)$$

with \(H\in {\mathbb {R}}^{m\times n}\) and \(h(t,0)=0.\)

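The estimator \({\hat{v}}(t)\in {\mathbb {R}}^n\) is taken as follows:

$$\begin{aligned} D^{\alpha }{\hat{v}}(t)=-C{\hat{v}}(t)+A{\tilde{f}}({\hat{v}}(t))+B{\tilde{f}}({\hat{v}}(t-\sigma ))+J+K[u(t)-D{\hat{v}}(t)-h(t,{\hat{v}}(t))], \end{aligned}\qquad (5)$$

where the estimator gain \( K\in {\mathbb {R}}^{n\times m}\) will be determined later.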

Remark 1

Different initial conditions might cause a parameter mismatch between the system state and the estimator state if the estimator also had memristive weights. To deal with this problem, a proper estimator (5) is designed by transforming system (1) into the equivalent model (2).

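For simplicity, let \(\varDelta A=E_1\varSigma _1(t)F_1,\) \(\varDelta B=E_2\varSigma _2(t)F_2,\) and denote the estimation error by \({\tilde{v}}(t)=v(t)-{\hat{v}}(t).\) The error dynamical system is derived from (2) and (5):

$$\begin{aligned} D^{\alpha }{\tilde{v}}(t)=-(C+KD){\tilde{v}}(t)+Af({\tilde{v}}(t))+Bf({\tilde{v}}(t-\sigma ))+\varDelta A{\tilde{f}}(v(t))+\varDelta B{\tilde{f}}(v(t-\sigma ))-K{\tilde{h}}(t,{\tilde{v}}(t)), \end{aligned}\qquad (6)$$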

where \(f({\tilde{v}}(t))={\tilde{f}}(v(t))-{\tilde{f}}({\hat{v}}(t)),\) \({\tilde{h}}(t,{\tilde{v}}(t))=h(t,v(t))-h(t,{\hat{v}}(t)).\)

The initial condition of (6) is \({\tilde{v}}(s)=\psi (s),~s\in [t_0-\sigma ,t_0]\), where \(\psi (s)\in {\mathcal {C}}([t_0-\sigma ,t_0],{\mathbb {R}}^n).\)

Let \({\bar{v}}(t)=[v^{T}(t),{\tilde{v}}^{T}(t)]^{T}\) be the augmented vector, and the augmented system can be obtained from (2) and (6):

where

\(\xi _1(t)=[{\tilde{f}}^{T}(v(t)),f^{T}({\tilde{v}}(t))]^{T},\) \(\xi _2(t)=[{\tilde{f}}^{T}(v(t-\sigma )),\) \(f^{T}({\tilde{v}}(t-\sigma ))]^{T},\) \(\xi _3(t)=[h^{T}(v(t)),{\tilde{h}}^{T}({\tilde{v}}(t))]^{T}.\)

Definition 3

System (5) is called an asymptotical state estimator of system (1) if, for every \(\psi \in {\mathcal {C}}([t_0-\sigma ,t_0],{\mathbb {R}}^n),\) \(\lim _{t\rightarrow \infty }{\tilde{v}}(t)=0.\)

Lemmas 1–5 play an important role in proving the main theorems.

Lemma 1

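[56] Suppose \(u(t)\in {\mathbb {R}}^n\) is continuously differentiable, \(Q\in {\mathbb {R}}^{n\times n}\) and \(Q>0\). Then, for \(0<\alpha <1\) and \(t\ge t_0,\) we have

$$\begin{aligned} \frac{1}{2}D^{\alpha }(u^{T}(t)Qu(t))\le u^{T}(t)QD^{\alpha }u(t). \end{aligned}$$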

Lemma 2

[57] Let \(X,~Y\in {\mathbb {R}}^n\) and \(\epsilon >0\). Then we have

$$\begin{aligned} X^{T}Y+Y^{T}X\le \epsilon X^{T}X+\epsilon ^{-1}Y^{T}Y. \end{aligned}$$

Lemma 3

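(Schur Complement) [58] The symmetric matrix

$$\begin{aligned} Q=\left[ \begin{array}{cc} Q_{11} &amp; Q_{12}\\ Q_{12}^{T} &amp; Q_{22} \end{array} \right] <0 \end{aligned}$$

if and only if condition ① or ② holds: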

① \(Q_{22}< 0, Q_{11}-Q_{12}Q_{22}^{-1}Q_{12}^{T}< 0;\)

② \(Q_{11}< 0,Q_{22}-Q_{12}^{T}Q_{11}^{-1}Q_{12}< 0.\)
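As an illustration of condition ①, the sketch below compares the Schur-complement test with a direct eigenvalue test in the simplest possible setting, where \(Q_{11},\) \(Q_{12}\) and \(Q_{22}\) are scalars. This scalar restriction and the function names are assumptions made only to keep the example dependency-free.

```python
import math


def negdef_by_eigenvalues(q11, q12, q22):
    """Check Q = [[q11, q12], [q12, q22]] < 0 via its largest eigenvalue."""
    mean = 0.5 * (q11 + q22)
    radius = math.hypot(0.5 * (q11 - q22), q12)
    return mean + radius < 0.0  # largest eigenvalue of a symmetric 2 x 2 matrix


def negdef_by_schur(q11, q12, q22):
    """Check Q < 0 via condition 1: q22 < 0 and q11 - q12 * q22^{-1} * q12 < 0."""
    return q22 < 0.0 and q11 - q12 * q12 / q22 < 0.0


if __name__ == "__main__":
    for q in [(-2.0, 1.0, -3.0), (-1.0, 2.0, -1.0), (1.0, 0.0, -1.0)]:
        print(q, negdef_by_eigenvalues(*q), negdef_by_schur(*q))
```

In the proofs, the lemma is used in the opposite direction: a condition that is nonlinear in the unknowns is converted into an equivalent block matrix inequality that is linear in them.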

Lemma 4

[59] The Caputo fractional-order functional differential equation

$$\begin{aligned} D^{\alpha }u(t)=g(t,u_t),~t\ge t_0, \end{aligned}$$

where \(u_t=u(t+\theta )\in {\mathcal {C}}([t_0-\sigma ,t_0],{\mathbb {R}}^n),-\sigma \le \theta \le 0,\) is globally uniformly asymptotically stable, if conditions (i)–(iii) are satisfied:

- (i) \(g:[t_0,\infty )\times {\mathcal {C}}([t_0-\sigma ,t_0],{\mathbb {R}}^n)\rightarrow {\mathbb {R}}^n\) is piecewise continuous and locally Lipschitz.

- (ii) \(V(t,u):{\mathbb {R}}\times {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is continuously differentiable, and there exist two functions \(q_1(s)\) and \(q_2(s),\) positive for \(s>0,\) satisfying \(q_1(||u||)\le V(t,u)\le q_2(||u||),\) where \(q_1(0)=q_2(0)=0\) and \(q_2(s)\) is strictly increasing.

- (iii) There exist two constants \(\epsilon>\mu >0\) such that \(D^{\alpha }V(t,u(t))\le -\epsilon V(t,u(t))+\mu \sup _{-\sigma \le \theta \le 0}V(t+\theta ,u(t+\theta ))\) for \(t\ge t_0.\)

Lemma 5

[60] The equilibrium point \(u=0\) of the fractional-order differential equation \(D^{\alpha }u(t)=g(t,u(t))\) with initial condition \(u(t_0)=u_{t_0}\) is Mittag-Leffler stable if the following conditions are satisfied:

- (i) V(t, u(t)) is a continuously differentiable function satisfying

$$\begin{aligned}&\alpha _1\Vert u\Vert ^a\le V(t,u(t))\le \alpha _2\Vert u\Vert ^{ab},\\&\quad D^{\beta }V(t,u(t))\le -\alpha _3\Vert u\Vert ^{ab}, \end{aligned}$$

where \(t\ge 0,\) \(0<\beta <1,\) and \(\alpha _1,\) \(\alpha _2,\) \(\alpha _3,\) a, b are positive constants.

- (ii) \(0\in {\mathcal {D}}\subset {\mathbb {R}}^n,\) and V(t, u(t)) is locally Lipschitz for \(u\in {\mathcal {D}}.\) If \({\mathcal {D}}={\mathbb {R}}^n,\) then \(u=0\) is globally Mittag-Leffler stable.

Remark 2

Mittag-Leffler stability implies asymptotical stability.
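A brief sketch of why this holds, using the definition of Mittag-Leffler stability as it is usually stated in the literature cited as [60]. The symbols \(m,\) \(\lambda \) and \(E_{\alpha }\) below come from that standard statement and are not otherwise used in this paper. The solution is required to satisfy

$$\begin{aligned} \Vert u(t)\Vert \le \{m(u(t_0))E_{\alpha }(-\lambda (t-t_0)^{\alpha })\}^{b},\quad E_{\alpha }(z)=\sum _{k=0}^{\infty }\frac{z^k}{\varGamma (\alpha k+1)}, \end{aligned}$$

with \(0<\alpha <1,\) \(\lambda >0,\) \(b>0,\) \(m(0)=0\) and \(m(u)\ge 0.\) Since \(E_{\alpha }(-\lambda (t-t_0)^{\alpha })\rightarrow 0\) as \(t\rightarrow \infty ,\) the bound forces \(\Vert u(t)\Vert \rightarrow 0.\) The decay is algebraic rather than exponential, which is typical of fractional-order systems.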

Remark 3

The common integer-order Lyapunov methods used in the state estimation of neural networks are not suitable to deal with fractional-order ones. For this reason, Lemmas 4 and 5 are adopted to investigate the state estimation of FNNs.

3 Main results

We will present state estimation theorems for FMNNs in this section.

Theorem 1

Suppose that \((\mathrm H_1)\) holds. For given constants \(\epsilon>\mu >0,\) system (5) is an asymptotical state estimator of the delayed FMNN (1) if there exist two real matrices \(P_1>0,\) \(P_2>0,\) a real matrix X, and constants \(\beta >0,\) \(\rho _1>0,\) \(\rho _2>0\) satisfying the following LMIs:

where

Furthermore, the gain matrix is designed by

Proof

Construct the following Lyapunov functional as

where \(P=\mathrm {diag}\{P_1,P_2\}.\)

According to \((\mathrm {H_1})\), (4) and Lemmas 1, 2, we get

where \({\bar{L}}=\text{ diag }\{L,L\}.\)

By Lemma 3, \(\varPhi <0\) is equivalent to

where

\({\tilde{N}}_1=[F_1L,0_{n^2\times 3n}],~~{\tilde{N}}_2=[F_2L,0_{n^2\times 3n}].\)

Let

It follows from (10) and (11),

where

Based on Lemma 3, \(\varPsi <0\) implies that

Using (9), (10) and (13), we have

Combining this with the condition \(\epsilon>\mu >0,\) all the conditions in Lemma 4 are satisfied; therefore, system (7) is globally asymptotically stable. Hence, system (5) is an asymptotical state estimator of system (1). This completes the proof. \(\square \)

Remark 4

The state estimation criteria in Theorem 1 are expressed in terms of LMIs, which involve less conservatism and can be solved easily with MATLAB. The conservatism can be reduced further by using the delay-partitioning technique. It is known that the results obtained by the delay-partitioning technique depend on the partitioning size. If this technique is adopted, the size of the LMIs grows and the computational complexity increases. How to balance the partitioning size against the computational burden is therefore also an interesting problem.

When \(B(v(t))=0,\) there is no delay in system (1). In this case, we have the following systems and results:

The estimator is of the form

and the error dynamical system and the augmented system are as follows.

Suppose that the activation functions satisfy the following condition:

- (\(\mathrm H_2\)): [61] For \(i\in \{1,2,\ldots ,n\}\), the neuron activation functions \({\tilde{f}}_i(\cdot )\) are continuous and bounded, and satisfy the following conditions:

$$\begin{aligned} \begin{array}{l} l_i^-\le \frac{{\tilde{f}}_i(s_1)-{\tilde{f}}_i(s_2)}{s_1-s_2}\le l_i^+,\forall s_1,s_2\in {\mathbb {R}}(s_1\ne s_2)\\ {\tilde{f}}_i(0)=0,i=1,2,\ldots ,n. \end{array} \end{aligned}$$

Remark 5

The conditions in \((H_2)\) are weaker than the usual Lipschitz conditions because the constants \(l_i^-,\) \(l_i^+\) can be positive, negative, or zero. The lower and upper bounds of the activation functions can be accurately determined. Hence, the conservatism can be effectively reduced by means of LMIs.
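Setting \(s_2=0\) in \((\mathrm H_2)\) and using \({\tilde{f}}_i(0)=0\) gives the sector inequality \(({\tilde{f}}_i(s)-l_i^-s)({\tilde{f}}_i(s)-l_i^+s)\le 0,\) whose expansion produces the terms \(l_i^-l_i^+\) and \(-\frac{l_i^-+l_i^+}{2}\) that appear in the proof of Theorem 2. The following sketch checks this inequality on a grid; the choice of \(\tanh \) with \(l^-=0,\) \(l^+=1\) and the function name are illustrative assumptions, not the paper's example.

```python
import math


def sector_holds(f, l_minus, l_plus, grid, tol=1e-12):
    """Check (f(s) - l_minus * s) * (f(s) - l_plus * s) <= 0 at every grid point."""
    return all((f(s) - l_minus * s) * (f(s) - l_plus * s) <= tol for s in grid)


if __name__ == "__main__":
    grid = [k / 10.0 for k in range(-50, 51)]
    # tanh has slopes between 0 and 1, so it lies in the sector [0, 1].
    print(sector_holds(math.tanh, 0.0, 1.0, grid))
    # A sector that is too narrow is rejected.
    print(sector_holds(math.tanh, 0.0, 0.5, grid))
```

A grid check of this kind can only refute a candidate pair \((l^-,l^+)\); it does not prove the bound for all real arguments.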

Theorem 2

Suppose that \((\mathrm H_2)\) holds. System (15) is an asymptotical state estimator of FMNN (14) if there exist matrices \(P_1>0,\) \(P_2>0,\) a diagonal matrix \(\varLambda =\mathrm {diag}\{\lambda _1,\lambda _2,\ldots ,\lambda _n\}>0,\) and constants \(\rho >0,\) \(\gamma >0\) such that

where

Moreover, the state estimator gain matrix can be designed as

Proof

We construct the following Lyapunov functional

where \(P=\mathrm {diag}\{P_1,P_2\}.\)

Calculating the fractional derivative of V(t) along the solution of (17), we obtain that

From \((\mathrm H_2)\), there exist \({\varLambda }=\text{ diag }\{\lambda _1,\lambda _2,\ldots ,\lambda _n\}>0,\) such that

where \({{\varvec{\Lambda }}}=\text{ diag }\{\varLambda ,\varLambda \},\) \(\mathcal {C}_1=\text{ diag }\{C_1,C_1\},\) \(\mathcal {C}_2=\text{ diag }\{C_2,C_2\},\) \(C_1=\text{ diag }\{l_1^-l_1^+,\ldots ,l_n^-l_n^+\},\) \(C_2=\text{ diag }\{-\frac{l_1^-+l_1^+}{2},\ldots ,\) \(-\frac{l_n^-+l_n^+}{2}\}.\)

From (4), one gets for a positive constant \(\rho >0,\)

where \({\mathcal {H}}=\text{ diag }\{H,H\}.\)

Using (19)–(21), we obtain that

where

\({\tilde{\varPi }}_{11}=-P{\bar{C}}-{\bar{C}}^{T}P+\rho {\mathcal {H}}^{T}{\mathcal {H}}-{{\varvec{\Lambda }}}{{\mathcal {C}}}_1,\) \(\zeta (t)=[{\bar{v}}^{T}(t),\xi _1^{T}(t),\) \(\xi _3^{T}(t)]^{T}.\)

Noticing \({\tilde{\varPi }}=\varOmega _1+\varOmega _1^{'},\) where

\( {\tilde{E}}_1=\left[ \begin{array}{c} P_1E_1\\ P_2E_1\\ 0_{4n\times n^2}\\ \end{array} \right] , \) \({\tilde{N}}_1=[0_{n^2\times 2n},F_1,0_{n^2\times 3n}].\)

Letting \({\tilde{\varOmega }}_1\triangleq \varOmega _1+\gamma {\tilde{N}}_1^{T}{\tilde{N}}_1\) and \( {\tilde{\varOmega }}\triangleq {\tilde{\varOmega }}_1+\gamma ^{-1}{\tilde{E}}_1{\tilde{E}}_1^{T}, \) by Lemma 3, \({\tilde{\varOmega }}<0\) is equivalent to (18).

Therefore, \({\tilde{\varPi }}\le {\tilde{\varOmega }}<0,\) and

From Lemma 5, \({\bar{v}}(t)=0\) is globally Mittag-Leffler stable. So system (15) is an asymptotical state estimator of system (14), and the proof of Theorem 2 is completed. \(\square \)

Remark 6

The sufficient conditions in Theorems 1 and 2 are simple and are expressed in terms of LMIs instead of algebraic conditions, which makes them easy to check with the MATLAB LMI Toolbox.

4 Numerical examples

In this section, numerical simulations are given to illustrate the effectiveness of the obtained results.
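The numerical scheme behind the simulations is not stated. As a hedged illustration of how a delayed Caputo system can be integrated, the sketch below uses an explicit Grünwald-Letnikov discretization on a scalar toy equation. The scheme, the function name `simulate_caputo_dde`, and the toy right-hand side are all assumptions and do not reproduce the examples of this section.

```python
import math


def simulate_caputo_dde(rhs, history, alpha, sigma, t_end, h):
    """Integrate D^alpha x(t) = rhs(x(t), x(t - sigma)), 0 < alpha < 1, from t = 0.

    history(t) supplies x(t) for t <= 0. Returns the samples x(0), x(h), ...
    """
    steps = int(round(t_end / h))
    lag = int(round(sigma / h))
    # Grunwald-Letnikov weights c_j = (-1)^j * binom(alpha, j), by recurrence.
    c = [1.0]
    for j in range(1, steps + 1):
        c.append(c[-1] * (1.0 - (1.0 + alpha) / j))
    x0 = history(0.0)
    x = [x0]
    for k in range(1, steps + 1):
        prev = k - 1
        # Delayed state: taken from the history while t - sigma is still negative.
        delayed = x[prev - lag] if prev >= lag else history((prev - lag) * h)
        # Memory term: the whole past of x - x0 enters every step.
        memory = sum(c[j] * (x[k - j] - x0) for j in range(1, k + 1))
        x.append(x0 + h ** alpha * rhs(x[prev], delayed) - memory)
    return x


if __name__ == "__main__":
    # Toy example: D^0.9 x = -2 x(t) + 0.5 tanh(x(t - 1)), with x = 1 on [-1, 0].
    traj = simulate_caputo_dde(lambda x, xd: -2.0 * x + 0.5 * math.tanh(xd),
                               lambda t: 1.0, 0.9, 1.0, 10.0, 0.02)
    print(traj[0], traj[-1])
```

Each step costs time proportional to the number of past samples, so the total cost grows quadratically with the horizon; this is the price of the infinite memory of the fractional derivative.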

Example 4.1

Consider the FMNNs (1) with the following parameters:

where

The activation functions are taken as \(f_1(v_1)=-0.2\tanh (|v_1|-1)+0.3\sin (v_1),\) \(f_2(v_2)=\tanh (0.5v_2),\) \(f_3(v_3)=0.5\tanh (v_3),\) and \(h_j(v_j)=0.2\sin (v_j)+0.1\) \((j=1,2,3).\) The remaining parameters are \(J=[1,0.8,-1]^{T},\) \(\sigma =2,\) \(\alpha =0.9.\)

It is easy to verify that

Taking \(\epsilon =1,~\mu =0.8,\) the MATLAB LMI Toolbox shows that LMIs (8), (9) are feasible, and a feasible solution is given below:

\(\beta =13.6123,\) \(\rho _1=14.0888,\) \(\rho _2=14.0872.\)

According to Theorem 1, system (5) is an asymptotical state estimator of system (1), which is further verified by Figs. 1 and 2. Figure 1 shows the state trajectories of \(v_i(t)\) and \({\hat{v}}_i(t)\) \((i=1,2,3),\) with the initial conditions \(v(t)=[1.5,1.8,-1.8]^{T},\) \({\hat{v}}(t)=[-1.2,-0.5,2.3]^{T},\) \(\forall t\in [-2,0].\) It can be seen from Fig. 1 that the actual states are well tracked by their estimates. Figure 2 shows that the error states \({\tilde{v}}_i(t)\) \((i=1,2,3)\) converge asymptotically to zero, which confirms the effectiveness of the proposed approach to the design of the state estimator for the FMNNs.

Example 4.2

Consider the FMNNs with time delay (1):

where

\(\alpha =0.98,\) \(\sigma =1.\)

The activation functions are taken as \(f_j(v_j)=0.5\tanh (|v_j|),\) \(h_j(v_j)=0.2|v_j|\) \((j=1,2).\)

It is easy to verify that

Letting \(\epsilon =1,\) \(\mu =0.8,\) we use the MATLAB LMI Control Toolbox to solve the LMIs (8), (9) and obtain the following feasible solution with \(tmin=-0.0247\) (a negative “tmin” indicates that the LMIs are feasible): \(\beta = 0.7368,\) \(\rho _1=0.8626,\) \(\rho _2=0.8730,\)

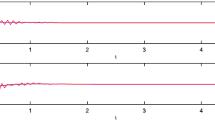

According to Theorem 1, system (5) is an asymptotical state estimator of system (1), which is verified by Figs. 3 and 4. Figure 3 depicts the time evolution of \(v_i(t),\) \({\hat{v}}_i(t),\) \((i=1,2)\) with the initial conditions \(v(t)=[1.5,1.8]^{T},\) \({\hat{v}}(t)=[-1.2,-0.5]^{T},\) \(\forall t\in [-1,0].\) Figure 4 depicts the estimation errors of \({\tilde{v}}_i(t)\) \((i=1,2),\) which tend to zero as \(t\rightarrow \infty \).

5 Conclusions

The dynamics of fractional-order memristor-based neural networks have drawn considerable research attention. However, the problem of state estimation of FMNNs has not been studied. This paper fills this gap. Based on the fractional-order Lyapunov direct method, several new sufficient conditions are given to ensure the existence of the state estimator. Furthermore, within the same LMI framework, it is not difficult to extend our main results to the state estimation problem for fractional-order memristor-based neural networks with discontinuous activation functions; this will be considered in future work.

References

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Kilbas, A.A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, New York (2006)

Dokoumetzidis, A., Macheras, P.: Fractional kinetics in drug absorption and disposition processes. J. Pharmacokinet. Pharmacodyn. 36(2), 165–178 (2009)

Chung, W.S., Jung, M.: Fractional damped oscillators and fractional forced oscillators. J. Korean Phys. Soc. 64(2), 186–191 (2014)

Cole, K.S.: Electric conductance of biological systems. In: Cold Spring Harbor symposia on quantitative biology, vol. 1, pp. 107–116. Cold Spring Harbor Laboratory Press (1933)

Kaslik, E., Sivasundaram, S.: Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw. 32, 245–256 (2012)

Ding, Z., Zeng, Z., Wang, L.: Robust finite-time stabilization of fractional-order neural networks with discontinuous and continuous activation functions under uncertainty. IEEE Trans. Neural Netw. Learn. Syst. 29(5), 1477–1490 (2018)

Liu, P., Zeng, Z., Wang, J.: Multiple Mittag-Leffler stability of fractional-order recurrent neural networks. IEEE Trans. Syst. Man Cybern. Syst. 47(8), 2279–2288 (2017)

Xiao, M., Zheng, W.X., Jiang, G., Cao, J.: Undamped oscillations generated by Hopf bifurcations in fractional-order recurrent neural networks with Caputo derivative. IEEE Trans. Neural Netw. Learn. Syst. 26(12), 3201–3214 (2015)

Huang, C., Cao, J., Xiao, M., Alsaedi, A., Hayat, T.: Bifurcations in a delayed fractional complex-valued neural network. Appl. Math. Comput. 292, 210–227 (2017)

Wang, H., Yu, Y., Wen, G.: Stability analysis of fractional-order Hopfield neural networks with time delays. Neural Netw. 55, 98–109 (2014)

Wang, F., Yang, Y., Hu, M.: Asymptotic stability of delayed fractional-order neural networks with impulsive effects. Neurocomputing 154, 239–244 (2015)

Stamova, I.: Global Mittag-Leffler stability and synchronization of impulsive fractional-order neural networks with time-varying delays. Nonlinear Dyn. 77(4), 1–10 (2014)

Yu, J., Hu, C., Jiang, H., Fan, X.: Projective synchronization for fractional neural networks. Neural Netw. 49, 87–95 (2014)

Huang, X., Zhao, Z., Wang, Z., Li, Y.: Chaos and hyperchaos in fractional-order cellular neural networks. Neurocomputing 94, 13–21 (2012)

Song, Q., Yang, X., Li, C., Huang, T., Chen, X.: Stability analysis of nonlinear fractional-order systems with variable-time impulses. J. Frankl. Inst. 354(7), 2959–2978 (2017)

Chua, L.O.: Memristor-the missing circuit element. IEEE Trans. Circuit Theory 18(5), 507–519 (1971)

Strukov, D.B., Snider, G.S., Stewart, D.R., Williams, R.S.: The missing memristor found. Nature 453(7191), 80–83 (2008)

Tour, J.M., He, T.: Electronics: the fourth element. Nature 453(7191), 42–43 (2008)

Pavlov, I.P., Anrep, G.V.: Conditioned reflexes: an investigation of the physiological activity of the cerebral cortex. Oxford University Press, London (1928)

Pershin, Y.V., Di Ventra, M.: Experimental demonstration of associative memory with memristive neural networks. Neural Netw. 23(7), 881–886 (2010)

Guo, Z., Wang, J., Yan, Z.: Attractivity analysis of memristor-based cellular neural networks with time-varying delays. IEEE Trans. Neural Netw. Learn. Syst. 25(4), 704–717 (2014)

Itoh, M., Chua, L.O.: Memristor oscillators. Int. J. Bifurc. Chaos 18(11), 3183–3206 (2008)

Kim, H., Sah, M.P., Yang, C., Chua, L.O.: Memristor-based multilevel memory. In: 12th International Workshop on Cellular Nanoscale Networks and Their Applications (CNNA), 2010, pp. 1–6. IEEE (2010)

Petras, I.: Fractional-order memristor-based Chua’s circuit. IEEE Trans. Circuits Syst. II Express Briefs 57(12), 975–979 (2010)

Duan, S., Hu, X., Dong, Z., Wang, L., Mazumder, P.: Memristor-based cellular nonlinear/neural network: design, analysis, and applications. IEEE Trans. Neural Netw. Learn. Syst. 26(6), 1202–1213 (2015)

Jo, S.H., Chang, T., Ebong, I., Bhadviya, B.B., Mazumder, P., Lu, W.: Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 10(4), 1297–1301 (2010)

Thomas, A.: Memristor-based neural networks. J. Phys. D Appl. Phys. 46(9), 093001 (2013)

Hu, J., Wang, J.: Global uniform asymptotic stability of memristor-based recurrent neural networks with time delays. In: The 2010 International Joint Conference on Neural Networks (IJCNN), pp. 1–8. IEEE, Barcelona (2010)

Wen, S., Bao, G., Zeng, Z., Chen, Y., Huang, T.: Global exponential synchronization of memristor-based recurrent neural networks with time-varying delays. Neural Netw. 48, 195–203 (2013)

Wu, A., Wen, S., Zeng, Z.: Synchronization control of a class of memristor-based recurrent neural networks. Inf. Sci. 183(1), 106–116 (2012)

Zhang, G., Shen, Y.: New algebraic criteria for synchronization stability of chaotic memristive neural networks with time-varying delays. IEEE Trans. Neural Netw. Learn. Syst. 24(10), 1701–1707 (2013)

Mathiyalagan, K., Anbuvithya, R., Sakthivel, R., Park, J.H., Prakash, P.: Non-fragile \(H_{\infty }\) synchronization of memristor-based neural networks using passivity theory. Neural Netw. 74, 85–100 (2016)

Sakthivel, R., Anbuvithya, R., Mathiyalagan, K., Ma, Y.K., Prakash, P.: Reliable anti-synchronization conditions for bam memristive neural networks with different memductance functions. Appl. Math. Comput. 275, 213–228 (2016)

Anbuvithya, R., Mathiyalagan, K., Sakthivel, R., Prakash, P.: Passivity of memristor-based BAM neural networks with different memductance and uncertain delays. Cognit. Neurodyn. 10(4), 339–351 (2016)

Chen, J., Zeng, Z., Jiang, P.: Global Mittag-Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw. 51, 1–8 (2014)

Wu, A., Zeng, Z.: Global Mittag-Leffler stabilization of fractional-order memristive neural networks. IEEE Trans. Neural Netw. Learn. Syst. 28(1), 206–217 (2017)

Cao, J., Xiao, M.: Stability and Hopf bifurcation in a simplified BAM neural network with two time delays. IEEE Trans. Neural Netw. 18(2), 416–430 (2007)

Lu, H.: Chaotic attractors in delayed neural networks. Phys. Lett. A 298(2), 109–116 (2002)

Wei, J., Ruan, S.: Stability and bifurcation in a neural network model with two delays. Physica D Nonlinear Phenomena 130(3), 255–272 (1999)

Chen, L., Wu, R., Cao, J., Liu, J.B.: Stability and synchronization of memristor-based fractional-order delayed neural networks. Neural Netw. 71, 37–44 (2015)

Bao, H., Park, J.H., Cao, J.: Adaptive synchronization of fractional-order memristor-based neural networks with time delay. Nonlinear Dyn. 82(3), 1343–1354 (2015)

Velmurugan, G., Rakkiyappan, R., Cao, J.: Finite-time synchronization of fractional-order memristor-based neural networks with time delays. Neural Netw. 73, 36–46 (2016)

Velmurugan, G., Rakkiyappan, R.: Hybrid projective synchronization of fractional-order memristor-based neural networks with time delays. Nonlinear Dyn. 83(1–2), 419–432 (2016)

Rakkiyappan, R., Velmurugan, G., Cao, J.: Stability analysis of memristor-based fractional-order neural networks with different memductance functions. Cognit. Neurodyn. 9(2), 145–177 (2015)

Wang, Z., Ho, D.W., Liu, X.: State estimation for delayed neural networks. IEEE Trans. Neural Netw. 16(1), 279–284 (2005)

He, Y., Wang, Q.G., Wu, M., Lin, C.: Delay-dependent state estimation for delayed neural networks. IEEE Trans. Neural Netw. 17(4), 1077–1081 (2006)

Liu, X., Cao, J.: Robust state estimation for neural networks with discontinuous activations. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 40(6), 1425–1437 (2010)

Huang, H., Feng, G., Cao, J.: Robust state estimation for uncertain neural networks with time-varying delay. IEEE Trans. Neural Netw. 19(8), 1329–1339 (2008)

Ding, S., Wang, Z., Wang, J., Zhang, H.: \(H_{\infty }\) state estimation for memristive neural networks with time-varying delays: the discrete-time case. Neural Netw. 84, 47–56 (2016)

Li, R., Cao, J., Alsaedi, A., Hayat, T.: Non-fragile state observation for delayed memristive neural networks: continuous-time case and discrete-time case. Neurocomputing 245, 102–113 (2017)

Liu, H., Wang, Z., Shen, B., Alsaadi, F.E.: State estimation for discrete-time memristive recurrent neural networks with stochastic time-delays. Int. J. Gen. Syst. 45(5), 633–647 (2016)

Henderson, J., Ouahab, A.: Fractional functional differential inclusions with finite delay. Nonlinear Anal. Theory Methods Appl. 70(5), 2091–2105 (2009)

Yang, X., Ho, D.W.: Synchronization of delayed memristive neural networks: robust analysis approach. IEEE Trans. Cybern. 46(12), 3377–3387 (2016)

Liu, L., Han, Z., Li, W.: Global stability analysis of interval neural networks with discrete and distributed delays of neutral type. Expert Syst. Appl. 36(3), 7328–7331 (2009)

Aguila-Camacho, N., Duarte-Mermoud, M.A., Gallegos, J.A.: Lyapunov functions for fractional order systems. Commun. Nonlinear Sci. Numer. Simul. 19(9), 2951–2957 (2014)

Sanchez, E.N., Perez, J.P.: Input-to-state stability (ISS) analysis for dynamic neural networks. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 46(11), 1395–1398 (1999)

Boyd, S., Ghaoui, L.E., Feron, E., Balakrishnan, V.: Linear Matrix Inequalities in System and Control Theory. Studies in Applied and Numerical Mathematics. SIAM, Philadelphia (1994)

Chen, B., Chen, J.: Razumikhin-type stability theorems for functional fractional-order differential systems and applications. Appl. Math. Comput. 254, 63–69 (2015)

Li, Y., Chen, Y., Podlubny, I.: Stability of fractional-order nonlinear dynamic systems: Lyapunov direct method and generalized Mittag-Leffler stability. Comput. Math. Appl. 59(5), 1810–1821 (2010)

Liu, Y., Wang, Z., Liu, X.: Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw. 19(5), 667–675 (2006)

Acknowledgements

This work was jointly supported by the National Natural Science Foundation of China under Grant Nos. 61573291 and 61573096, the Grant of China Scholarship Council 201408505020, the Fundamental Research Funds for Central Universities XDJK2016B036, the Jiangsu Provincial Key Laboratory of Networked Collective Intelligence under Grant No. BM2017002.

Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest.

Cite this article

Bao, H., Cao, J. & Kurths, J. State estimation of fractional-order delayed memristive neural networks. Nonlinear Dyn 94, 1215–1225 (2018). https://doi.org/10.1007/s11071-018-4419-3

DOI: https://doi.org/10.1007/s11071-018-4419-3