Abstract

This paper provides finite-time and fixed-time stabilization control strategies for delayed memristive neural networks. Because the parameters of the memristive model are state-dependent, they may exhibit unexpected parameter mismatch when different initial conditions are chosen; in this case, traditional robust control and analytical methods cannot be applied directly. To overcome this problem, a new robust control strategy is designed under the framework of Filippov solutions. Based on the designed discontinuous controllers, numerically testable conditions are proposed to stabilize the states of the target system in finite time and in fixed time. Moreover, the upper bound of the settling time for stabilization is estimated. Finally, numerical examples are presented to illustrate the findings.

1 Introduction

To reveal the nonlinear relationship between charge and flux, the memristor (a contraction of memory and resistor) was first theoretically predicted by Chua [1] and was regarded as the missing fourth circuit element. Subsequently, in a seminal paper published in Nature [2], a team from HP unveiled the fabrication of a two-terminal nanoscale device exhibiting a pinched hysteresis characteristic [3]. This was seen as the starting point for the design of a new class of high-density processors, and it attracted unprecedented interest from the research community for its fundamental role in the design of next-generation high-density nonvolatile memories.

The behavior of the memristor can be summarized by the fact that its resistance depends on the current that has passed through the device; equivalently, the amount of charge that has flowed through the device determines its resistance. Moreover, the typical \(i-v\) memristor fingerprint is a hysteresis loop passing through the origin. Because of this feature, a number of promising applications can be found in various fields. One immediate application is the possibility that future computers would turn on instantly without the usual “booting time”. Another important application lies in the construction of artificial neural networks [4].

It is well known that a neural network can be implemented by circuits; for example, a Hopfield neural network can be implemented when the self-feedback connection weights are realized by resistors. By the same logic, replacing the conventional resistors with memristors in an artificial neural network yields a memristive model. It is worth noting that this kind of system possesses more computational power and storage capacity: it was revealed in [5] that the number of equilibrium points in an n-neuron memristive system can be significantly increased to \(2^{2n^2+n}\), i.e., the information capacity of a memristive system is much larger than that of some other models. Thus, memristive neural networks admit more attractive applications.

Among these topics, stabilization control is one of the most active because of its successful use in many science and engineering fields. In such practical applications, severe time-response constraints are essential for security reasons or simply to enhance productivity, which has ignited renewed interest in stability and finite-time stability problems [6,7,8,9,10,11,12]. Finite-time stability, first raised in [13], is a stability concept quite different from classical Lyapunov asymptotic stability. It requires that the control system be Lyapunov stable and, in addition, that its trajectories stay within a prescribed bound over a finite time interval under the designed controller.

A drawback, however, is that the settling time depends heavily on the initial conditions of the system, which may limit its widespread application, since the initial conditions may not be known in advance. To enlarge the scope of application, another notion named fixed-time stability was proposed in [14], which demands that the settling time be bounded for any initial values. Fixed-time stability is promising when a controller (observer) has to be designed to provide some required control (observation) precision in a given time, independently of the initial conditions. This idea effectively prevents the convergence time from depending on the initial state, and for this reason many promising works have been published [15,16,17,18]; among them, the fixed-time synchronization of delayed memristive neural networks was addressed in [17], which examined the characteristics of the fixed-time problem in detail.

Recent research has showcased a number of promising applications of memristive neural networks [19,20,21,22,23,24,25,26,27]. The stability analysis of memristive models was considered in [19, 21]; the authors in [20] addressed a class of reaction–diffusion uncertain memristive neural networks; with a nonlinear discontinuous controller, the finite-time stabilizability and instabilizability of delayed memristive systems were considered in [27]. Moreover, via a sampled-data control technique, the authors in [23] investigated synchronization for delayed memristive neural networks with Markovian jumps and reaction–diffusion terms. However, the above protocols only consider the synchronization or stability behavior of a memristive model and say nothing about the time interval within which synchronization or stability is reached. This constitutes another main motivation of this note.

Motivated by the aforementioned literature, the aim of this technical note is to extend the stability analysis to the finite-time and fixed-time stabilization control of memristive systems. In the framework of differential inclusion theory, some novel discontinuous controllers are designed to achieve these two stabilization goals, and the settling time can be estimated a priori from the control parameters. Moreover, the delayed memristive neural network is translated into a system with unmatched uncertain parameters, and these unmatched parameters are treated as an external disturbance of the target model. All this constitutes the main contribution of this technical note.

The remainder of this paper is organized as follows. In Sect. 2, the problem to be studied is formulated, and some necessary definitions, lemmas, and assumptions are given. Finite-time and fixed-time stabilization criteria, together with the design of the discontinuous controllers, are presented in Sect. 3. Two illustrative simulations are carried out in Sect. 4. Section 5 concludes the paper.

Notations: Throughout this paper, solutions of all systems are intended in Filippov’s sense. \(\mathbb {R}^n\) and \(\mathbb {R}^{n\times m}\) denote, respectively, the n-dimensional Euclidean space and the set of all \(n\times m\) real matrices. For \(r>0\), \(\mathcal {C}([-r,0];\mathbb {R}^{n})\) denotes the family of continuous functions \(\varphi \) from \([-r,0]\) to \(\mathbb {R}^{n}\).

2 Model Description and Preliminaries

2.1 Model Description

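In this paper, we consider a class of memristive models described by the following form:

$$\begin{aligned} \dot{y}_i(t)=-d_i y_i(t)+\sum _{j=1}^{n}a_{ij}(y_i(t))g_j(y_j(t))+\sum _{j=1}^{n}b_{ij}(y_i(t))g_j(y_j(t-\tau (t)))+I_i, \qquad (1) \end{aligned}$$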

for \( i=1,2,\ldots ,n\), where \(y(t)=(y_1(t),y_2(t),\ldots ,y_n(t))^T\in \mathbb {R}^n\) represents the neuron state vector of the system, \(D=\text {diag}(d_{ 1},d_{ 2},\ldots ,d_{ n})>0\) denotes the self-feedback connection weights, \(A(y(t))=(a_{ij}(y_i(t)))_{n \times n}\) and \(B(y(t))=(b_{ij}( y_i(t)))_{n \times n}\) are the feedback connection weights and the delayed feedback connection weights, respectively, \(I=(I_1, I_2, \ldots , I_n)^T\) is the input vector, \(\tau (t) \) is the transmission delay and \(g(y(t))=(g_1(y_1(t)), g_2(y_2(t)), \ldots , g_n(y_n(t)))^T\) denotes the neuronal activation functions, which are subject to the following restriction:

\((A_1)\): For \(i=1,2,\ldots ,n\), \(\forall x_1, x_2 \in \mathbb {R}\), \(x_1\ne x_2\), the neural activation function \(g_i\) is bounded and satisfies a Lipschitz condition, i.e., there exist \(l_i>0\) and \(M_i>0\) such that

$$\begin{aligned} |g_i(x_1)-g_i(x_2)|\le l_i|x_1-x_2|,\quad |g_i(x_1)|\le M_i. \end{aligned}$$

The initial value associated with system (1) is \(y_i(t)=\phi _i(t)\in \mathcal {C}([-\tau ,0];\mathbb {R})\), for \(i=1,2, \ldots , n\).
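As a quick sanity check of \((A_1)\), the sketch below tests the two inequalities (Lipschitz constant \(l_i\) and bound \(M_i\)) on a finite grid for \(\tanh \), the activation adopted later in Example 1. The helper name `satisfies_a1` and the sampling grid are hypothetical choices made for illustration; a grid check is only numerical evidence, not a proof.

```python
import math


def satisfies_a1(g, lipschitz, bound, samples, tol=1e-12):
    """Check (A_1) on a finite grid: |g(a)-g(b)| <= l*|a-b| and |g(a)| <= M."""
    for a in samples:
        if abs(g(a)) > bound + tol:
            return False
        for b in samples:
            if abs(g(a) - g(b)) > lipschitz * abs(a - b) + tol:
                return False
    return True


# Hypothetical sampling grid on [-5, 5] (assumption, not from the paper).
grid = [k / 10.0 for k in range(-50, 51)]

print(satisfies_a1(math.tanh, 1.0, 1.0, grid))  # candidate l_i = 1, M_i = 1
print(satisfies_a1(math.tanh, 0.5, 1.0, grid))  # candidate l_i = 0.5 is too small
```

The second call illustrates that the Lipschitz constant cannot be taken smaller than the maximal slope of the activation, which for \(\tanh \) is attained at the origin.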

Taking the feature of the memristor and its typical current–voltage characteristic into account, the state-dependent parameters in (1) obey the following conditions:

where

and \(a_{ij}^\prime , a_{ij}^{\prime \prime }, b_{ij}^\prime , b_{ij}^{\prime \prime }\) are known constants with respect to memristances, and “unchanged” means that the memristance keeps its current value.

Definition 2.1

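[28] For the system \(\dot{x}(t)=f(t,x),x\in \mathbb {R}^n\), with discontinuous right-hand side, a set-valued map is defined as

$$\begin{aligned} F(t,x)=\bigcap _{\delta >0}\bigcap _{\mu (N)=0}co[f(t,B(x,\delta )\backslash N)], \end{aligned}$$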

where co[E] is the closure of the convex hull of set E, \(B(x,\delta )=\{y:\Vert y-x\Vert \le \delta \}\), and \(\mu (N)\) is Lebesgue measure of set N.
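To illustrate what the set-valued map does at a point of discontinuity, the sketch below evaluates the Filippov regularization of the scalar sign function, a typical ingredient of discontinuous controllers. The function name `filippov_sign` and the interval representation `(lo, hi)` are hypothetical; using the sign function as the example is an assumption made here, since the controller expressions are not reproduced in this text.

```python
def filippov_sign(x):
    """Filippov set-valued extension of sign at x, returned as an interval (lo, hi).

    Away from 0 the map is single-valued; at 0 it is the closed convex hull
    of the one-sided limits -1 and +1, i.e. the interval [-1, 1].
    """
    if x > 0:
        return (1.0, 1.0)
    if x < 0:
        return (-1.0, -1.0)
    return (-1.0, 1.0)


for point in (-2.0, 0.0, 3.5):
    print(point, filippov_sign(point))
```

Values on a set of measure zero (here the single point 0) do not affect the result, which is why the definition intersects over all null sets \(N\).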

Set \(\overline{a}_{ij}=\max \{ a_{ij}^{\prime }, a_{ij}^{\prime \prime } \}\), \(\underline{a}_{ij}=\min \{ a_{ij}^{\prime }, a_{ij}^{\prime \prime } \}\), \(\hat{a}_{ij}=\frac{1}{2}(\overline{a}_{ij}+\underline{a}_{ij})\), \(\check{a}_{ij}=\frac{1}{2}(\overline{a}_{ij}-\underline{a}_{ij})\), \(\tilde{a}_{ij}=\max \{ |a_{ij}^{\prime }|,|a_{ij}^{\prime \prime }| \}\), \(\overline{b}_{ij}=\max \{ b_{ij}^{\prime }, b_{ij}^{\prime \prime } \}\), \(\underline{b}_{ij}=\min \{ b_{ij}^{\prime }, b_{ij}^{\prime \prime } \}\), \(\hat{b}_{ij}=\frac{1}{2}(\overline{b}_{ij}+\underline{b}_{ij})\), \(\check{b}_{ij}=\frac{1}{2}(\overline{b}_{ij}-\underline{b}_{ij})\), \(\tilde{b}_{ij}=\max \{ |b_{ij}^{\prime }|,|b_{ij}^{\prime \prime }| \}\), for \(i,j=1,2,\ldots ,n\). Then, system (1) can be equivalently rewritten in the following form:
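The quantities just defined can be computed mechanically from the two memristive levels of a weight. The sketch below does so and also recovers the scalar \(\lambda \in [-1,1]\) for which a value equals \(\hat{a}_{ij}+\check{a}_{ij}\lambda \), which is the midpoint-radius form used in the sequel. The function names and the sample levels \(2\) and \(-1\) are hypothetical and are not taken from the examples of this paper.

```python
def interval_parameters(w1, w2):
    """Return upper, lower, midpoint (hat), radius (check) and magnitude (tilde)."""
    upper = max(w1, w2)
    lower = min(w1, w2)
    return {
        "upper": upper,
        "lower": lower,
        "hat": 0.5 * (upper + lower),
        "check": 0.5 * (upper - lower),
        "tilde": max(abs(w1), abs(w2)),
    }


def lambda_for(value, params):
    """Scalar lam in [-1, 1] with value = hat + check * lam (0 if the radius is 0)."""
    if params["check"] == 0.0:
        return 0.0
    return (value - params["hat"]) / params["check"]


# Hypothetical memristive levels a' = 2 and a'' = -1 (assumption for illustration).
p = interval_parameters(2.0, -1.0)
print(p)
print(lambda_for(2.0, p), lambda_for(-1.0, p))
```

Every value between the two levels is reached by some \(\lambda \) in \([-1,1]\), so the state-dependent weight can be treated as a nominal value \(\hat{a}_{ij}\) plus a bounded uncertainty of radius \(\check{a}_{ij}\).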

where

By the theory of differential inclusions and Definition 2.1, one obtains

or equivalently, by the measurable selection theorem, there exist measurable functions \(\lambda _{ij}^1(t), \lambda _{ij}^2(t)\in co [-1,1]\) such that,

Remark 2.1

Recalling the definition of the parameters given in (2), we cannot guarantee that \(a_{ij}^{\prime }>(<) a_{ij}^{\prime \prime }\) and \(b_{ij}^{\prime }> (<) b_{ij}^{\prime \prime }\) always hold simultaneously; as a result, the variable \(\lambda _{ij}^1(t)\) may not be equal to \(\lambda _{ij}^2(t)\).

The main contribution of this paper is to study the stabilization control of the target model; for this purpose, the existence of an equilibrium point is a prerequisite. In view of the conditions in assumption \((A_1)\), the existence of an equilibrium point, denoted by \(y^*\), can be guaranteed; then, one has

where \(\lambda _{ij}^3\in [-1,1] \) and \(\lambda _{ij}^4\in [-1,1] \).

Remark 2.2

To discuss the stabilization control of the memristive system (6), its corresponding equilibrium point model (7) is also presented. Because of the different initial conditions of these two systems, it cannot be guaranteed that the measurable functions \(\lambda _{ij}^1(t)\) and \(\lambda _{ij}^3 \), or \(\lambda _{ij}^2(t)\) and \(\lambda _{ij}^4 \), take the same values.

In view of the above, the uncertain parameters of the target system do not obey a matching condition, i.e., they do not share a common time-varying matrix; thus the traditional robust control design method cannot be applied to the memristive model directly.

By the coordinate transformation \(x(t)=y(t)-y^*\), the following system is obtained:

where \(f_j(x_j(t))=g_j(y_j(t))-g_j(y_j^*)\) and \(f_j(x_j(t-\tau (t)))=g_j(y_j(t-\tau (t)))-g_j(y_j^*)\).

Remark 2.3

Based on Assumption \((A_1)\), we can conclude that \(f_j(\cdot )\) is bounded and satisfies

In order to achieve finite-time and fixed-time stabilization, appropriate controllers are necessary. By adding the discontinuous controller \(u_i(t)\) to the right-hand side of system (8), the following controlled memristive system is derived, described in the form of a differential equation:

where \(u_i(t)\) stands for the controller that will be appropriately designed for a certain stabilization objective.

Remark 2.4

Through the construction of system (9), one can see that the term \(\sum _{j=1}^{n} \Big ( \check{a}_{ij} \lambda _{ij}^1(t)-\check{a}_{ij} \lambda _{ij}^3 + \check{b}_{ij} \lambda _{ij}^2(t)- \check{b}_{ij}\lambda _{ij}^4 \Big )g_j(y_j^*)\) can be treated as an external perturbation of the controlled system.
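To make the size of this perturbation concrete, the following crude estimate shows that it is uniformly bounded. It is a sketch that uses only \(\check{a}_{ij},\check{b}_{ij}\ge 0\), the fact that all \(\lambda \)'s take values in \([-1,1]\), and the bound \(|g_j(\cdot )|\le M_j\) from \((A_1)\); it is not necessarily the estimate used in the proofs below.

$$\begin{aligned} \Big | \sum _{j=1}^{n} \Big ( \check{a}_{ij} \lambda _{ij}^1(t)-\check{a}_{ij} \lambda _{ij}^3 + \check{b}_{ij} \lambda _{ij}^2(t)- \check{b}_{ij}\lambda _{ij}^4 \Big )g_j(y_j^*) \Big |&\le \sum _{j=1}^{n} \Big ( \check{a}_{ij} |\lambda _{ij}^1(t)-\lambda _{ij}^3| + \check{b}_{ij} |\lambda _{ij}^2(t)-\lambda _{ij}^4| \Big )|g_j(y_j^*)| \\&\le 2\sum _{j=1}^{n} ( \check{a}_{ij} + \check{b}_{ij} )M_j. \end{aligned}$$

A bound of this type is what allows a discontinuous term with a sufficiently large constant gain to dominate the mismatch, regardless of the actual values of the \(\lambda \)'s.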

2.2 Definitions and Lemmas

Definition 2.2

The memristive neural network (9) is said to be stabilized in finite time by an appropriate controller if, for any initial state, there exists a constant \(t^*>0\) (depending on the initial state vector \(x(0)=(x_1^T(0),x_2^T(0),\ldots ,x_n^T(0))^T\)) such that

Definition 2.3

The memristive system (9) is said to reach fixed-time stability if, for any initial condition, there exist \(T_{\max }\) and \(T(x_0(\theta ))\) with \(T(x_0(\theta ))\le T_{\max }\), such that

holds, in which \(T_{\max }\) is a fixed time and \(T(x_0(\theta ))\) denotes the settling time function.

Definition 2.4

[29] Function \(V(x):\mathbb {R}^n\longrightarrow \mathbb {R}\) is C-regular, if V(x) is:

(1) regular in \(\mathbb {R}^n\);

(2) positive definite, i.e., \(V(x)>0\) for \(x\ne 0\) and \(V(0)=0\);

(3) radially unbounded, i.e., \(V(x)\longrightarrow +\infty \) as \(\Vert x\Vert \longrightarrow +\infty \).

Note that a C-regular Lyapunov function V(x) is not necessarily differentiable.

Lemma 2.1

[30] Let x(t) be a solution of the control system (9), defined on the interval \([0,T_0)\), \(T_0\in (0, +\infty ]\). Then, the function |x(t)| is absolutely continuous and

where

Lemma 2.2

[31] For any constant vector \(z\in \mathbb {R}^{n}\) and \(0<r<l\), the following norm equivalence property holds:

and

Lemma 2.3

[32] Suppose that function \(V(x):\mathbb {R}^n\longrightarrow \mathbb {R}\) is C-regular, and that \(x(t):[0,+\infty )\longrightarrow \mathbb {R}^n\) is absolutely continuous on any compact interval of \([0,+\infty )\). Let \(v(t)=V(x(t))\), if there exists a continuous function \(\gamma :[0,+\infty )\longrightarrow \mathbb {R}\) with \(\gamma (\sigma )>0\) for \(\sigma \in (0,+\infty )\), such that

for all \(t>0\) at which v(t) is differentiable, and \(\gamma (\cdot )\) satisfies the condition

then we have \(v(t)=0\) for \(t\ge t_1\). In particular,

- if \(\gamma (\sigma )=K_1\sigma +K_2\sigma ^\mu \) for all \(\sigma \in (0,+\infty )\), where \(\mu \in (0,1)\) and \(K_1, K_2>0\), then the settling time is estimated by

$$\begin{aligned} t_1= \frac{1}{K_1(1-\mu )}\ln \frac{K_1 v^{1-\mu }(0)+K_2}{K_2} \end{aligned}$$

- if \(\gamma (\sigma )=K\sigma ^\mu \) for all \(\sigma \in (0,+\infty )\), where \(\mu \in (0,1)\) and \(K>0\), then the settling time is estimated by

$$\begin{aligned} t_1=\frac{v^{1-\mu }(0)}{K(1-\mu )}. \end{aligned}$$
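The two settling-time estimates of Lemma 2.3 are easy to evaluate numerically. The sketch below implements both formulas exactly as stated in the lemma; the function names and the sample values \(v(0)=4\), \(K=K_1=K_2=1\), \(\mu =0.5\) are hypothetical and chosen only for illustration.

```python
import math


def settling_time_power(v0, K, mu):
    """t_1 = v(0)^(1-mu) / (K (1-mu)) for gamma(s) = K s^mu, 0 < mu < 1."""
    if not (0.0 < mu < 1.0) or K <= 0.0 or v0 < 0.0:
        raise ValueError("need 0 < mu < 1, K > 0 and v0 >= 0")
    return v0 ** (1.0 - mu) / (K * (1.0 - mu))


def settling_time_linear_power(v0, K1, K2, mu):
    """t_1 = ln((K1 v(0)^(1-mu) + K2) / K2) / (K1 (1-mu)) for gamma(s) = K1 s + K2 s^mu."""
    if not (0.0 < mu < 1.0) or K1 <= 0.0 or K2 <= 0.0 or v0 < 0.0:
        raise ValueError("need 0 < mu < 1, K1 > 0, K2 > 0 and v0 >= 0")
    return math.log((K1 * v0 ** (1.0 - mu) + K2) / K2) / (K1 * (1.0 - mu))


# Hypothetical values (assumption): v(0) = 4, all gains equal to 1, mu = 0.5.
print(settling_time_power(4.0, 1.0, 0.5))
print(settling_time_linear_power(4.0, 1.0, 1.0, 0.5))
```

With the same coefficient on the power term, the estimate with the additional linear term is never larger, because \(\ln (1+s)\le s\) for \(s\ge 0\). Both estimates grow without bound as \(v(0)\rightarrow +\infty \), which is the dependence on initial data that fixed-time stability is designed to remove.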

Lemma 2.4

[14] If there exists a continuous radially unbounded function \(V: \mathbb {R}^n\rightarrow \mathbb {R}_+\bigcup \{0\}\) such that

(1) \(V(z)=0 \Longleftrightarrow z=0 \);

(2) for some \(\alpha , \beta >0\), \(0<p<1\), \(q>1\), any solution z(t) satisfies the inequality

then, the origin is globally fixed-time stable and the following estimate holds:

with the settling time bounded by

Lemma 2.5

[33] If there exists a continuous radially unbounded function \(V: \mathbb {R}^n\rightarrow \mathbb {R}_+\bigcup \{0\}\) such that

(1) \(V(z)=0 \Longleftrightarrow z=0 \);

(2) for some \(\alpha , \beta >0\), \(p=1-\frac{1}{2\mu }\), \(q=1+\frac{1}{2\mu }\), \(\mu >1\), any solution z(t) satisfies the inequality

then the origin is globally fixed-time stable and the following estimate of the settling time function holds:

Remark 2.5

The settling time function is upper bounded by an a priori value that relies on the design parameters rather than on the initial states of the system; this implies that the convergence time can be guaranteed in a prescribed manner.
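The independence from initial data can be checked numerically on a scalar comparison system. The sketch below assumes the inequality of Lemma 2.4 has the standard form \(\dot{V}\le -\alpha V^p-\beta V^q\) and compares against the standard bound \(\frac{1}{\alpha (1-p)}+\frac{1}{\beta (q-1)}\) from the fixed-time literature; both are assumptions here, since the corresponding displays are not reproduced in this text. In the worst case (equality), separation of variables gives \(T(v_0)=\int _0^{v_0}\frac{d\sigma }{\alpha \sigma ^p+\beta \sigma ^q}\), which is evaluated with a midpoint rule in the variable \(s=\ln \sigma \). The function names, step count, and parameter values are hypothetical.

```python
import math


def settling_time(v0, alpha, beta, p, q, steps=20000, s_min=-60.0):
    """Approximate T(v0) = int_0^{v0} dsigma / (alpha sigma^p + beta sigma^q).

    Uses the substitution sigma = exp(s) and a midpoint rule on [s_min, ln v0];
    the neglected part below exp(s_min) is negligible for 0 < p < 1.
    """
    s_max = math.log(v0)
    h = (s_max - s_min) / steps
    total = 0.0
    for k in range(steps):
        s = s_min + (k + 0.5) * h
        total += math.exp(s) / (alpha * math.exp(p * s) + beta * math.exp(q * s))
    return total * h


def fixed_time_bound(alpha, beta, p, q):
    """Assumed standard bound 1/(alpha(1-p)) + 1/(beta(q-1))."""
    return 1.0 / (alpha * (1.0 - p)) + 1.0 / (beta * (q - 1.0))


# Hypothetical parameters (assumption): alpha = beta = 1, p = 0.5, q = 2.
bound = fixed_time_bound(1.0, 1.0, 0.5, 2.0)
for v0 in (1.0, 1e2, 1e6):
    print(v0, settling_time(v0, 1.0, 1.0, 0.5, 2.0), bound)
```

Under these assumptions the computed settling time increases with \(v_0\) but saturates below the bound, whereas the finite-time estimates of Lemma 2.3 keep growing with \(v(0)\).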

3 Main Results

In this section, we first establish some sufficient criteria for the finite-time stabilization of the target model; subsequently, similar conditions for fixed-time stabilization are derived, together with the corresponding controller designs.

3.1 Finite-Time Stabilization

Theorem 3.1

Under Assumption \((A_1)\), the controlled memristive model (9) is stabilized in finite time by the following discontinuous controller

where \(0<\varepsilon <1\) and

Moreover, the settling time for stabilization can be estimated by

Proof

Let V(t) be a candidate Lyapunov function defined by:

Evidently, V(t) is absolutely continuous; taking the time derivative of V(t) along the trajectories of (9) gives

Referring to the definitions of the measurable functions \(\lambda _{ij}^1(t)\), \(\lambda _{ij}^2(t)\), \(\lambda _{ij}^3\), \(\lambda _{ij}^4\), one has

Young's inequality is applied to estimate the second term on the right-hand side of inequality (14), which gives

Thus, combining (14)–(16), a further estimate of the time derivative of V(t) is obtained:

Before completing the proof, the last term of inequality (17) requires further treatment. Based on the inequalities defined in (11) and the norm equivalence in Lemma 2.2, a sharper upper bound can be established:

The claim then follows from Lemma 2.3, i.e., finite-time stabilization of the memristive neural network (9) is achieved and the settling time is estimated by

This concludes the proof. \(\square \)

Next, we readjust the parameter choice, and a new settling time is obtained correspondingly, which follows directly from Theorem 3.1.

Corollary 3.1

Suppose that assumption \((A_1)\) holds; then the controlled memristive system (9) can be stabilized in finite time by the discontinuous controller (10) with the control gains \(k_{1i}\) given by:

the other gains \(k_{2i}\) and \(k_{3i}\) have the same expressions as given above. In addition, the upper bound of the settling time for stabilization can be estimated by

Proof

Arguing as in the proof of Theorem 3.1, along the trajectories of system (9), one arrives at

where

This implies that finite-time stabilization of system (9) is achieved, and it follows from Lemma 2.3 that the settling time is

In the previous results, sufficient conditions were given to ensure that the controlled memristive model achieves finite-time stabilization. In what follows, sufficient conditions are presented for fixed-time stabilization. \(\square \)

3.2 Fixed-Time Stabilization

Theorem 3.2

Consider the system (9) under assumption \((A_1)\) together with the following controller:

where \(0<\alpha <1\), \(\beta >1\), and the other given admissible values are required as below

then, fixed-time stabilization is achieved with the following settling time

Proof

The reasoning is very similar to the above procedure. Consider the same Lyapunov functional; the rest of the proof follows, mutatis mutandis, the proof of Theorem 3.1, and one arrives at:

It follows from the definitions in (22) and the assertion in Lemma 2.4 that, after some computations, the time derivative of V(t) satisfies

Thus, by Lemma 2.4, fixed-time stabilization is realized and, correspondingly, the fixed settling time T is bounded by

As mentioned in the previous section, different values of the parameters in Theorem 3.2 may lead to a very different bound of the fixed time. In particular, selecting \(\alpha \) and \(\beta \) in Theorem 3.2 as \(\alpha =1-\frac{1}{2\mu }\), \(\beta =1+\frac{1}{2\mu }\) with \(\mu >1\), a new upper bound of the settling time can be developed under the framework of Lemma 2.5. \(\square \)

Corollary 3.2

Suppose that all the conditions of Theorem 3.2 hold; then system (9) can be stabilized in fixed time under the controller (21), and the fixed settling time T satisfies

Remark 3.1

In finite-time and fixed-time stabilization control, the settling time is expected to be as short as possible in order to guarantee a fast response. Examining the expression of the settling time in Theorem 3.1, one can see that it is determined by the gain parameters \(k_3\), whereas in Corollary 3.1 it is determined by the gains \(k_1\) and \(k_3\); hence the upper bound of the finite-time settling time is unrelated to the control gain \(k_2\). By the same analysis, the fixed-time settling time only requires the values of \(\zeta _3\) and \(\zeta _4\). This means that the control gains can be selected to meet the maximum allowable settling time, which helps in choosing suitable conditions and parameters to shorten the settling time and guarantee a fast response. By doing so, the control cost can be reduced at the same time.

Remark 3.2

Comparing the above two stabilization schemes, a noteworthy fact is that the settling time in Theorem 3.1 and Corollary 3.1 depends on a priori knowledge of the initial condition V(0); when the initial condition is very large, the settling time estimate becomes impractical. To avoid this shortcoming, another algorithm was proposed in Theorem 3.2 and Corollary 3.2, whose results are independent of the initial states. Thus, a pre-specified settling time can be obtained by properly adjusting the control parameters.

Remark 3.3

Comparing the finite-time and fixed-time stabilization algorithms, one can see that a single term of the form \(-V^p(t)\), \(0<p<1\), suffices for finite-time stabilization, whereas to achieve fixed-time stabilization an extra term \(-V^q(t)\), \(q>1\), is also essential; it can be interpreted as pulling the system into the region with norm less than 1 within a fixed time.
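This two-phase picture can be made explicit with a short computation. It is a sketch that assumes a differential inequality of the form \(\dot{V}(t)\le -\alpha V^p(t)-\beta V^q(t)\) with \(\alpha ,\beta >0\), \(0<p<1\), \(q>1\), in the notation of Lemma 2.4. While \(V\ge 1\), only the term with exponent \(q\) is used; once \(V\le 1\), only the term with exponent \(p\) is used:

$$\begin{aligned} T_{V(0)\rightarrow 1}&\le \int _{1}^{V(0)}\frac{d\sigma }{\beta \sigma ^{q}}=\frac{1-V^{1-q}(0)}{\beta (q-1)}\le \frac{1}{\beta (q-1)},\\ T_{1\rightarrow 0}&\le \int _{0}^{1}\frac{d\sigma }{\alpha \sigma ^{p}}=\frac{1}{\alpha (1-p)}. \end{aligned}$$

The first bound does not depend on \(V(0)\) because \(q>1\) makes the integral converge at infinity, and the second is finite because \(p<1\) makes the integral converge at zero; their sum is therefore an upper bound of the settling time that is uniform in the initial state.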

4 Numerical Example

In this section, we present two examples to demonstrate the validity and effectiveness of the theoretical results derived above. To show the role of the control strategy, the controllers in (10) and (21) are simulated to examine their performance.

Example 1

To illustrate the performance of the given controller, in our first experiment we consider the following two-dimensional memristive neural network:

with

Thus, it is obvious that

Moreover, the activation function is taken as \(f(s)=\tanh (s)\); it is obvious that it satisfies condition \((A_1)\) with \(l_1=l_2=1\) and \(M_1=M_2=1\). To obtain the detailed expression of the controller, we set \(p=2\); then, according to the design rule in (11), one arrives at

as a result, we can choose \(k_{11}=3\), \(k_{12}=5.4\), \(k_{21}=3\), \(k_{22}=3\). In addition, let the other control gains be \(k_{31}=k_{32}=0.2\) and \(\varepsilon =0.5\); then the desired controller can be designed as

By now, all the conditions in Theorem 3.1 hold, so we can conclude that system (27) can be finite-time stabilized via controller (28), and the settling time can be estimated as

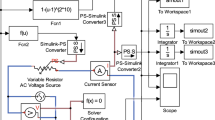

To better illustrate the findings, Figs. 1 and 2 show the transient behavior of system (9) without any control strategy under the initial condition \(x(0)=(-1,2)^T\). Under the controller (28), the corresponding simulation results are depicted in Fig. 3. From these figures, one can see that the controller performs as expected, i.e., the state variables of the controlled system (9) converge to zero in finite time, which implies that system (27) can be finite-time stabilized via controller (28).

Fig. 1 Phase plane behavior of system (9) without any controller under the initial condition \(x(t)=(-1,2)^T\)

Fig. 2 Time evolutions of the states \(x_1(t)\) and \(x_2(t)\) in system (9) without any controller under the initial condition \(x(t)=(-1,2)^T\)

Fig. 3 Time-domain behavior of the state variables \(x_1(t)\) and \(x_2(t)\) with initial condition \(x(t)=(-1,2)^T\) under controller (28)

Moreover, it is obvious that the above parameters satisfy all the conditions of Corollary 3.1; then, by a simple calculation along with (19), one obtains

in which

as a result, one can get that \(\lambda _1=0.5\), \(\lambda _2=0.4\).

It is readily seen that the settling time associated with Corollary 3.1 provides a more accurate estimate than that of Theorem 3.1.

Example 2

To illustrate the fixed-time stabilization control of memristive neural networks, we consider the following example:

where

Then, standard manipulations lead to

in addition, the time-varying delay is chosen as \(\tau (t)=0.5+0.2 \sin (3 t)\), and the activation functions and other initial parameters are the same as in Example 1. To obtain the control gains, the corresponding computations are carried out in the framework of (22):

Calculations show that the control gains in (21) can be chosen as \(\zeta _{11}=1\), \(\zeta _{12}=1\), \(\zeta _{21}=3\), \(\zeta _{22}=4\), and the others are chosen as \(\zeta _{31}=\zeta _{32}=0.5\), \(\zeta _{41}=\zeta _{42}=0.7\). Here, by setting \(\alpha =0.5\), \(\beta =2\) and employing the obtained control gains, the controller becomes

Fig. 4 The chaotic attractor of system (9) without any control strategy under initial condition \(x(t)=(-0.6\sin 2t -0.4, -0.1\cos t+1.1)^T\)

Fig. 5 Time-domain behaviors of the states \(x_1(t)\) and \(x_2(t)\) in system (9) without any control strategy under initial condition \(x(t)=(-0.6\sin 2t -0.4, -0.1\cos t+1.1)^T\)

Now, all the conditions in Theorem 3.2 hold; thus, we may conclude that the target system (9) can be stabilized in fixed time through the designed controller (30), and the upper bound of the settling time can be determined as

Moreover, simulations have been carried out in MATLAB with the initial condition \(x(t)=(-0.6\sin 2t -0.4, -0.1\cos t+1.1)^T\), and the results are plotted in Figs. 4, 5 and 6. The chaotic behavior and the state trajectories of system (9) without any controller are depicted in Figs. 4 and 5, respectively, and Fig. 6 shows the dynamic behavior of the controlled system. These simulations again confirm that the controlled system reaches stability within the fixed time \(t \le 6.0202\) s.

5 Conclusion

In this technical note, by using robust analytical techniques and Lyapunov functionals, numerically testable finite-time and fixed-time stabilization criteria for delayed memristive neural networks have been developed. Two discontinuous controllers are presented and analyzed, which drive the target system to the finite-time and fixed-time stabilization goals by properly tuning the control gains. To guarantee a fast response, it is often required that the trajectories of the network states converge to an equilibrium point within a given time interval. Thus, upper bounds of the settling time for stabilization have also been constructed, which depend on the memristive system parameters and the control gains. The simulation results confirm the theoretical statements and the superiority of the proposed controllers.

References

1. Chua L (1971) Memristor-the missing circuit element. IEEE Trans Circuit Theory 18:507–519

2. Strukov D, Snider G, Stewart D, Williams R (2008) The missing memristor found. Nature 453:80–83

3. Wang F (2008) Commentary: memristor and memristive switch mechanism. J Nanophotonics 2:020304

4. Snider G (2007) Self-organized computation with unreliable, memristive nanodevices. Nanotechnology 18:365202

5. Guo Z, Wang J, Yan Z (2013) Attractivity analysis of memristor-based cellular neural networks with time-varying delays. IEEE Trans Neural Netw Learn Syst 25:704–717

6. Wang L, Shen Y, Sheng Y (2016) Finite-time robust stabilization of uncertain delayed neural networks with discontinuous activations via delayed feedback control. Neural Netw 76:46–54

7. Muthukumar P, Subramanian K, Lakshmanan S (2016) Robust finite time stabilization analysis for uncertain neural networks with leakage delay and probabilistic time-varying delays. J Franklin Inst 353:4091–4113

8. Ren F, Cao J (2006) LMI-based criteria for stability of high-order neural networks with time-varying delay. Nonlinear Anal Real World Appl 7:967–979

9. Yan Z, Zhang G, Zhang W (2013) Finite time stability and stabilization of linear Itô stochastic systems with state and control dependent noise. Asian J Control 15:270–281

10. Liu X, Ho DWC, Yu W, Cao J (2014) A new switching design to finite-time stabilization of nonlinear systems with applications to neural networks. Neural Netw 57:94–102

11. Bao H, Cao J (2012) Exponential stability for stochastic BAM networks with discrete and distributed delays. Appl Math Comput 218:6188–6199

12. Li X, Cao J (2010) Delay-dependent stability of neural networks of neutral type with time delay in the leakage term. Nonlinearity 23:1709–1726

13. Dorato P (1961) Short time stability in linear time-varying systems. In: Proceedings of the IRE international convention record part 4, New York, USA, pp 83–87

14. Polyakov A (2012) Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans Autom Control 57:2106–2110

15. Levant A (2013) On fixed and finite time stability in sliding mode control. In: Proceedings of 52nd IEEE conference on decision and control, Florence, Italy, pp 4260–4265

16. Parsegov S, Polyakov A, Shcherbakov P (2013) Nonlinear fixed-time control protocol for uniform allocation of agents on a segment. In: Proceedings of 51st IEEE conference on decision and control. IEEE, Maui, USA, pp 7732–7737

17. Cao J, Li R (2017) Fixed-time synchronization of delayed memristor-based recurrent neural networks. Sci China Inf Sci 60:032201

18. Wan Y, Cao J, Wen G, Yu W (2016) Robust fixed-time synchronization of delayed Cohen-Grossberg neural networks. Neural Netw 73:86–94

19. Zhang G, Shen Y, Xu C (2015) Global exponential stability in a Lagrange sense for memristive recurrent neural networks with time-varying delays. Neurocomputing 149:1330–1336

20. Li R, Cao J (2016) Stability analysis of reaction-diffusion uncertain memristive neural networks with time-varying delays and leakage term. Appl Math Comput 278:54–69

21. Wang Z, Ding S, Huang Z, Zhang H (2015) Exponential stability and stabilization of delayed memristive neural networks based on quadratic convex combination method. IEEE Trans Neural Netw Learn Syst 129:2029–2035

22. Yang X, Cao J, Liang J (2017) Exponential synchronization of memristive neural networks with delays: interval matrix method. IEEE Trans Neural Netw Learn Syst 28:1878–1888

23. Li R, Wei H (2016) Synchronization of delayed Markovian jump memristive neural networks with reaction-diffusion terms via sampled data control. Int J Mach Learn Cybern 7:157–169

24. Rakkiyappan R, Premalatha S, Chandrasekar A, Cao J (2016) Stability and synchronization analysis of inertial memristive neural networks with time delays. Cogn Neurodynamics 10:437–451

25. Li R, Cao J (2016) Finite-time stability analysis for Markovian jump memristive neural networks with partly unknown transition probabilities. IEEE Trans Neural Netw Learn Syst. doi:10.1109/TNNLS.2016.2609148

26. Ding S, Wang Z (2015) Stochastic exponential synchronization control of memristive neural networks with multiple time-varying delays. Neurocomputing 162:16–25

27. Wang L, Shen Y (2015) Finite-time stabilizability and instabilizability of delayed memristive neural networks with nonlinear discontinuous controller. IEEE Trans Neural Netw Learn Syst 26:2914–2924

28. Forti M, Grazzini M, Nistri P, Pancioni L (2006) Generalized Lyapunov approach for convergence of neural networks with discontinuous or non-Lipschitz activations. Phys D Nonlinear Phenomena 214:88–99

29. Clarke F (1987) Optimization and nonsmooth analysis. SIAM, Philadelphia

30. Forti M, Nistri P, Papini D (2005) Global exponential stability and global convergence in finite time of delayed neural networks with infinite gain. IEEE Trans Neural Netw Learn Syst 16:1449–1463

31. Parsegov S, Polyakov A, Shcherbakov P (2013) On fixed and finite time stability in sliding mode control. In: Proceedings of 4th IFAC workshop on distributed estimation and control in networked systems, Koblenz, Germany, pp 110–115

32. Aubin JP, Cellina A (1984) Differential inclusions. Springer, Berlin

33. Hardy G, Littlewood J, Polya G (1952) Inequalities, 2nd edn. Cambridge University Press, Cambridge

Additional information

This work was jointly supported by the National Natural Science Foundation of China under Grant Nos. 61573096 and 61272530, the Natural Science Foundation of Jiangsu Province of China under Grant No. BK2012741, the “333 Engineering” Foundation of Jiangsu Province of China under Grant No. BRA2015286, the “Fundamental Research Funds for the Central Universities”, the JSPS Innovation Program under Grant KYZZ16_0115, and Scientific Research Foundation of Graduate School of Southeast University under Grant No. YBJJ1663.

Cite this article

Li, R., Cao, J. Finite-Time and Fixed-Time Stabilization Control of Delayed Memristive Neural Networks: Robust Analysis Technique. Neural Process Lett 47, 1077–1096 (2018). https://doi.org/10.1007/s11063-017-9689-0
