Abstract

In this paper, we study the hybrid projective synchronization problem for a class of fractional-order memristor-based neural networks with time delays. First, we introduce the basic ideas of fractional-order memristor-based neural networks (FMNNs) with hub structure and time delays. We then show that the response system can be synchronized with the projection of the drive system generated through a designed scaling matrix, which is known as hybrid projective synchronization. By applying Filippov's solutions, differential inclusion theory, the stability theorem of linear fractional-order systems with multiple time delays and a suitable linear feedback control law, some new sufficient conditions are derived to guarantee the projective synchronization of the addressed FMNNs with hub structure and time delays. The analysis in this paper is based on the theory of fractional-order differential equations with discontinuous right-hand sides. Finally, a numerical example is presented to show the usefulness of our theoretical results.


1 Introduction

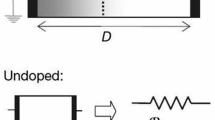

In recent years, the dynamical analysis of memristor-based neural networks has become an active field of research because of their anticipated applications in areas such as high-speed low-power processors, filters, biological models of associative memory and boot-free computers [1, 2]. In [3], the author gave a mathematical argument for the missing relation between the circuit variables charge (q) and flux \(({\varPhi })\). The corresponding device is known as the fourth basic two-terminal passive circuit element in circuit theory and is called the memristor (short for memory resistor). The memristor has unique circuit properties and behaves differently from the other circuit elements, namely resistors, capacitors and inductors. In [4, 5], researchers at HP Labs built the first working model of a memristor. The major advantage of the memristor is that its resistance depends on the magnitude and polarity of the applied voltage, and that it can remember its most recent resistance when the applied voltage is turned off. Because of these features, many researchers have paid increasing attention to analyzing the properties of memristors. Some researchers have introduced the memristor into the integrated circuit design of neural networks: when the resistors in the circuit are replaced by memristors, the resulting network is called a memristor-based neural network. Recently, several authors have extensively investigated the properties of memristor-based neural networks and reported some interesting results in the literature [6–18].
In [6–12], the authors investigated various stability problems for memristor-based neural networks with time-varying delays and provided sufficient conditions to ensure the stability of the considered networks. The authors of [6] studied the dynamic behaviors of memristor-based neural networks with time-varying delays using local inhibition. In [14], sufficient conditions were obtained to ensure the exponential synchronization of the considered networks based on the drive-response concept, differential inclusion theory and the Lyapunov functional method.

Fractional calculus is the generalization of ordinary differentiation and integration to an arbitrary (non-integer) order, and it originated at about the same time as classical calculus. Because of the lack of solution methods and physical interpretation, fractional calculus did not attract researchers for a long time. Nowadays, it is receiving more and more attention because of its promising development in both theory and applications. Fractional calculus plays an important role in modeling real-world problems in various fields of science and engineering [19–21]. Moreover, it provides an excellent tool for describing the memory and hereditary properties of various materials and processes [22]. That is, fractional-order systems have infinite memory and can give more accurate descriptions than integer-order systems. Recently, many researchers have analyzed fractional-order dynamical systems, and many important results have been reported in the literature [23–25]. Some authors have introduced fractional calculus into neural networks because it incorporates infinite memory. Therefore, it is necessary to investigate the dynamics of fractional-order neural networks. As is well known, time delay is unavoidable in practical applications. Consequently, many authors have extensively analyzed fractional-order neural networks with time delays, and some remarkable results have been proposed in the literature [26–32]. Moreover, some special network structures, such as the hub structure and the ring structure, have been considered to characterize the dynamic behavior of scale-free networks and complex recurrent networks. A network is said to have a hub structure when some of its nodes have many more connections (a higher degree) than the other nodes.
These simplified connectivity structures are studied to gain insight into the mechanisms underlying the behavior of complex networks. In [30], the authors investigated the stability of fractional-order Hopfield neural networks with both ring and hub structures. By using Laplace transforms, the stability theorem for fractional-order systems and properties of circulant matrices, some new sufficient conditions were derived in [31] for the stability of fractional-order neural networks of Hopfield type with hub and ring structures.

In science and engineering, many applications depend strongly on the dynamic behaviors of the designed networks. Therefore, the study of the dynamic behaviors of both integer-order and fractional-order neural networks is very important. In [34], the authors discussed the boundary stabilization of a nonlinear viscoelastic equation with interior time-varying delay and nonlinear dissipative boundary feedback, and obtained the global existence of weak solutions and the asymptotic behavior of the energy by using the Faedo–Galerkin method and the perturbed energy method. By using a variable norm technique and a modified Lyapunov functional approach, some sufficient conditions were derived in [35] to ensure the exponential stability of heat flow with a boundary time-varying delay effect. On the other hand, the synchronization problem of chaotic systems was established and extensively investigated in [36]. A number of synchronization problems have been studied in the literature, such as complete synchronization, anti-phase synchronization, projective synchronization and robust synchronization [36–41]. Many control techniques have been used to achieve synchronization, such as linear feedback control, adaptive control, sliding mode control and active control. Recently, the synchronization of fractional-order neural networks has received much attention in nonlinear science, and it has been applied in many fields such as image processing, secure communication and ecological systems. Projective synchronization was first proposed in [42], and its proportional feature allows faster communication. This feature can be used to extend binary digital communication to M-nary digital communication for achieving fast communication [43]. Thus, projective synchronization is important from both the theoretical and the application points of view.
Recently, several important results have been derived for projective synchronization in the literature [44–50]. Conditions for the global Mittag–Leffler stability and synchronization of memristor-based fractional-order neural networks were obtained by using the Lyapunov method in [49]. In [44], the authors studied the modified projective synchronization of time-delayed fractional-order chaotic systems. Some new sufficient conditions were obtained in [45] to realize projective synchronization of fractional-order neural networks with open loop control and adaptive control.

To the best of our knowledge, few results have been established for projective synchronization of fractional-order memristor-based neural networks. In [16], the authors studied the synchronization problem for memristor-based neural networks with time-varying delays via adaptive and feedback controllers. Projective synchronization of fractional-order memristor-based neural networks was investigated in [50]. In [17], the authors discussed weak, modified and function projective synchronization for chaotic memristive neural networks with time delays. By using the generalized Halanay inequality and a state feedback control technique, they established some new sufficient conditions to ensure the weak, modified and function projective synchronization of the considered networks. Motivated by the above discussion, this paper investigates the hybrid projective synchronization of fractional-order memristor-based neural networks with hub structure and time delays. Some new sufficient conditions are obtained to guarantee this synchronization by using Filippov's solution, differential inclusion theory, the stability theorem of linear fractional-order systems with multiple time delays and a linear feedback control technique. The addressed system is solved numerically by using a predictor–corrector scheme [51].

This paper is organized as follows. In Sect. 2, some basic definitions of fractional calculus and descriptions of the drive and response systems are presented. Some new sufficient conditions for hybrid projective synchronization of fractional-order memristor-based neural networks with hub structure and time delays are obtained in Sect. 3. In Sect. 4, a numerical example is provided to show the effectiveness of our main results. The conclusion of this paper is given in Sect. 5.

2 Preliminaries

In this section, we provide some basic definitions of fractional calculus and the description of the drive and response systems. Throughout this paper, we use the Caputo fractional-order derivative.

Definition 1

[19] The fractional integral of order \(\alpha \) for a function h is defined as

where \(t\ge 0\), \(\alpha >0\) and \({\varGamma }(\cdot )\) is the gamma function defined as \({\varGamma }(\alpha )=\int _{0}^{\infty }t^{\alpha -1}e^{-t}\hbox {d}t. \)
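For concreteness, the integral in Definition 1 can be written in the standard Riemann–Liouville form below. The operator symbol \(I^{\alpha }\) and the lower terminal 0 are notational assumptions made here.

\[
I^{\alpha }h(t)=\frac{1}{{\varGamma }(\alpha )}\int _{0}^{t}(t-s)^{\alpha -1}h(s)\,\hbox {d}s.
\]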

Definition 2

[19] The Caputo fractional derivative of order \(\alpha \) for a function h(t) is

where \(t>0\) and n is a positive integer such that \(n-1<\alpha <n\in Z^+\).
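In the same assumed notation (operator symbol \(D^{\alpha }\), lower terminal 0), the Caputo derivative of Definition 2 takes the standard form

\[
D^{\alpha }h(t)=\frac{1}{{\varGamma }(n-\alpha )}\int _{0}^{t}(t-s)^{n-\alpha -1}h^{(n)}(s)\,\hbox {d}s.
\]

For \(0<\alpha <1\), which is the case considered throughout, \(n=1\) and only the first derivative of h enters the integral.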

Definition 3

[30] The Laplace transform of the Caputo fractional-order derivative is

where \( n-1<\alpha \le n\), H(s) is the Laplace transform of h(t) and \(h^k(0)=0,\ k=1,2,\ldots ,n,\) are the initial conditions.
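The standard statement of this transform, written with the assumed symbols \(\mathcal {L}\) and \(D^{\alpha }\), is

\[
\mathcal {L}\{D^{\alpha }h(t);s\}=s^{\alpha }H(s)-\sum _{k=0}^{n-1}s^{\alpha -k-1}h^{(k)}(0).
\]

For \(0<\alpha <1\) this reduces to \(s^{\alpha }H(s)-s^{\alpha -1}h(0)\), which is the form relevant when the transform is applied to an error system of order \(\alpha \in (0,1)\).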

In this paper, we consider a fractional-order memristor-based neural network with time delays as the drive system, which is described by the following equations:

for \(i=1,2,\ldots ,n,\) where \(0<\alpha <1\) and n is the number of units in the network. \(u_i(t)\) is the state of the ith neuron. \(a_i>0\) is the self-feedback connection weight. \(f_j(u_j(t))\) and \(f_j(u_j(t-\tau ))\) denote the nonlinear activation functions without and with time delay, respectively. \(\tau \) denotes the constant time delay of the network. \(\beta _{ij}(u_j(t))\) and \(\gamma _{ij}(u_j(t))\) are the memristor-based connection weights without and with delay, respectively, which are defined as follows

for \(i,j=1,2,\ldots ,n,\) where the switching jumps \(T_j>0,\ \beta _{ij}^{*},\ \beta _{ij}^{**},\ \gamma _{ij}^{*}\) and \(\gamma _{ij}^{**}\) are all constants.
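The state-dependent switching of the weights can be illustrated with a short Python sketch. It assumes the common convention that a weight takes its starred value when \(|u_j(t)|\le T_j\) and its double-starred value otherwise; the function name `memristive_weight` and the sample numbers are hypothetical.

```python
def memristive_weight(u_j, T_j, w_star, w_2star):
    """Return a state-dependent memristive connection weight.

    Assumed convention (not confirmed by the text): the weight equals
    w_star while |u_j| <= T_j and switches to w_2star once |u_j| > T_j.
    """
    return w_star if abs(u_j) <= T_j else w_2star


# Illustrative values only: threshold T_j = 1, weights 2.0 and -1.0.
inside = memristive_weight(0.5, 1.0, 2.0, -1.0)    # |u_j| below the threshold
outside = memristive_weight(-1.5, 1.0, 2.0, -1.0)  # |u_j| above the threshold
```

Because the weight jumps at \(|u_j|=T_j\), the right-hand side of the network is discontinuous in the state, which is why solutions are understood in Filippov's sense.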

The initial conditions associated with system (3) are of the form

where \(\phi (s)=(\phi _1(s),\phi _2(s),\ldots ,\phi _n(s))^T\in \mathcal {C}([-\tau ,0],\mathcal {R}^n)\).

Remark 1

The detailed construction of memristor-based neural networks from circuit analysis and the physical properties of memristors was shown in [2, 6, 14, 15, 17]. Memristor-based neural networks form a remarkable class of neural network models: they are state-dependent switching nonlinear systems. Thus, memristor-based neural networks may exhibit undesirable dynamical behaviors. The dynamical behaviors of memristor-based neural networks have been studied by many authors; see [6–18].

Remark 2

In [33], the authors studied the hybrid projective synchronization of fractional-order neural networks with time delays. In this paper, we consider the hybrid projective synchronization of fractional-order memristor-based neural networks with time delays and derive some sufficient conditions to ensure it. Moreover, the connection weights \(\beta _{ij}(u_j(t))\) and \(\gamma _{ij}(u_j(t))\) of (3) are state dependent and switch between the values \(\beta _{ij}^*, \ \beta _{ij}^{**}\) and \(\gamma _{ij}^*, \ \gamma _{ij}^{**}\) for \(i,j=1,2,\ldots ,n,\) respectively, based on the state of each subsystem. If \(\beta _{ij}^*=\beta _{ij}^{**}\) and \(\gamma _{ij}^*= \gamma _{ij}^{**}\) for \(i,j=1,2,\ldots ,n\), then system (3) reduces to the system in [33]. Our results therefore extend some existing works in the literature.

Definition 4

[52] Let \(E\subseteq \mathcal {R}^n\). A map \(x\mapsto F(x)\) is called a set-valued map \(E\hookrightarrow \mathcal {R}^n\) if, for each point x of the set E, there corresponds a nonempty set \(F(x)\subseteq \mathcal {R}^n\). A set-valued map F with nonempty values is said to be upper semi-continuous at \(x_0\in E\) if, for any open set N containing \(F(x_0)\), there exists a neighborhood M of \(x_0\) such that \(F(M)\subseteq N\). F(x) is said to have a closed (convex, compact) image if, for each \(x\in E,\ F(x) \) is closed (convex, compact).

Definition 5

[53] Consider the differential system \(\frac{\hbox {d}x}{\hbox {d}t}=f(t,x),\) where f(t, x) is discontinuous in x. The set-valued map of f(t, x) is defined as

where \(B(x,\delta )=\{y:\Vert y-x\Vert \le \delta \}\) is the ball with center x and radius \(\delta \), the intersection is taken over all sets N of measure zero and over all \(\delta >0\), and \(\mu (N)\) is the Lebesgue measure of the set N.
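In its usual form, the Filippov set-valued map described in Definition 5 reads as follows. Two things are assumed here: the symbol \(F(t,x)\) for the map, and \(\overline{\hbox {co}}\) for the closed convex hull.

\[
F(t,x)=\bigcap _{\delta >0}\ \bigcap _{\mu (N)=0}\overline{\hbox {co}}\big [f\big (t,B(x,\delta )\setminus N\big )\big ].
\]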

By using the theory of differential inclusion, the FMNNs (3) can be written as

for \(i=1,2,\ldots ,n,\) where the set-valued maps are defined as

where \(\underline{\beta }_{ij}=\min \{\beta _{ij}^{*},\beta _{ij}^{**}\},\ \bar{\beta }_{ij}=\max \{\beta _{ij}^{*},\beta _{ij}^{**}\}\), \(\underline{\gamma }_{ij}=\min \{\gamma _{ij}^{*},\gamma _{ij}^{**}\}\) and \(\bar{\gamma }_{ij}=\max \{\gamma _{ij}^{*},\gamma _{ij}^{**}\}\), or equivalently there exist \(b_{ij}(t)\in \hbox {co}\{\underline{\beta }_{ij},\bar{\beta }_{ij}\}\), \(c_{ij}(t)\in \hbox {co}\{\underline{\gamma }_{ij},\bar{\gamma }_{ij}\}\), such that

For convenience, this can be rewritten as

where \(b_{ij}^*=\sup _{t\ge 0}\Vert b_{ij}(t)\Vert \), \(c_{ij}^*=\sup _{t\ge 0}\Vert c_{ij}(t)\Vert \).

Equation (9) can be rewritten in vector form as follows

where \(u(t)=(u_1(t),u_2(t),\ldots ,u_n(t))^T\in \mathcal {R}^n,\) \(A=\hbox {diag}(a_1,a_2,\ldots ,a_n)\in \mathcal {R}^{n\times n}\), \(\widehat{\beta }=(b_{ij}^*)_{n\times n}\in \mathcal {R}^{n\times n}\), \(\widehat{\gamma }=(c_{ij}^*)_{n\times n}\in \mathcal {R}^{n\times n}\), \(f(u(t))=(f_1(u_1(t)),f_2(u_2(t)),\ldots ,f_n(u_n(t)))^T\) and \(f(u(t-\tau ))=(f_1(u_1(t-\tau )),\ldots ,f_n(u_n(t-\tau )))^T\).

System (10) can be linearized as follows

where R is the Jacobian matrix of f(u(t)) and \(\bar{u}(t-\tau )=(\sum _{j=1}^{n}c^*_{1j}p_{1j}u_j(t-\tau ),\ldots ,\sum _{j=1}^{n}c^*_{nj}p_{nj}u_j(t-\tau ))^T\) is the linearization vector of \(\widehat{\gamma } f(u(t-\tau ))\) at the equilibrium point. Denoting \(\tilde{\beta }=\widehat{\beta }R\) and \(\tilde{{\varTheta }}=(c^*_{ij}p_{ij})_{n\times n}\), we can rewrite (11) as

In this paper, we consider the drive-response synchronization problem. The response system corresponding to (3) is described by the following equation:

for \(i=1,2,\ldots ,n,\) where \(\sigma (t)=(\sigma _1(t),\sigma _2(t),\ldots ,\sigma _n(t))^T\) is the control input to be designed to ensure the synchronization of the drive-response system. Similarly, the parameters of the response system (13) are defined as

for \(i,j=1,2,\ldots ,n,\) where the switching jumps \(T_j>0,\ \beta _{ij}^{*},\ \beta _{ij}^{**},\ \gamma _{ij}^{*}\) and \(\gamma _{ij}^{**}\) are all constants.

The initial conditions associated with system (13) are of the form

where \(\pi (s)=(\pi _1(s),\pi _2(s),\ldots ,\pi _n(s))^T\in \mathcal {C}([-\tau ,0],\mathcal {R}^n)\). By using the theory of differential inclusion, the response system (13) can be written as

for \(i=1,2,\ldots ,n,\) where the set-valued maps are defined as

where \(\underline{\beta }_{ij}=\min \{\beta _{ij}^{*},\beta _{ij}^{**}\},\ \bar{\beta }_{ij}=\max \{\beta _{ij}^{*},\beta _{ij}^{**}\}\), \(\underline{\gamma }_{ij}=\min \{\gamma _{ij}^{*},\gamma _{ij}^{**}\}\) and \(\bar{\gamma }_{ij}=\max \{\gamma _{ij}^{*},\gamma _{ij}^{**}\}\), or equivalently there exist \(\bar{b}_{ij}(t)\in \hbox {co}\{\underline{\beta }_{ij},\bar{\beta }_{ij}\}\), \(\bar{c}_{ij}(t)\in \hbox {co}\{\underline{\gamma }_{ij},\bar{\gamma }_{ij}\}\), such that

For convenience, this can be rewritten as

for \(i=1,2,\ldots ,n,\) where \(\bar{b}_{ij}^*=\sup _{t\ge 0}\Vert \bar{b}_{ij}(t)\Vert \), \(\bar{c}_{ij}^*=\sup _{t\ge 0}\Vert \bar{c}_{ij}(t)\Vert \). Equation (18) can be rewritten in vector form as follows

where \(v(t)=(v_1(t),v_2(t),\ldots ,v_n(t))^T\in \mathcal {R}^n,\) \(A=\hbox {diag}(a_1,a_2,\ldots ,a_n)\in \mathcal {R}^{n\times n}\), \({\beta ^*}=(\bar{b}_{ij}^*)_{n\times n}\in \mathcal {R}^{n\times n}\), \({\gamma ^*}=(\bar{c}_{ij}^*)_{n\times n}\in \mathcal {R}^{n\times n}\), \(\sigma (t)=(\sigma _1(t),\sigma _2(t),\ldots ,\sigma _n(t))^T\), \(f(v(t))=(f_1(v_1(t)),f_2(v_2(t)),\ldots ,f_n(v_n(t)))^T\) and \(f(v(t-\tau ))=(f_1(v_1(t-\tau )),\ldots ,f_n(v_n(t-\tau )))^T\).

Following the same linearization technique as for the drive system, we obtain the linearization of the response system as follows

where \(\tilde{\beta }^*={\beta }^*R^*\) and \(\tilde{{\varTheta }}^*=(\bar{c}^*_{ij}p^*_{ij})_{n\times n}\), \(R^*\) is the Jacobian matrix of f(v(t)) and \(\bar{v}(t-\tau )=(\sum _{j=1}^{n}\bar{c}^*_{1j}p^*_{1j}v_j(t-\tau ),\ldots ,\sum _{j=1}^{n}\bar{c}^*_{nj}p^*_{nj}v_j(t-\tau ))^T\) is the linearization vector of \({\gamma }^* f(v(t-\tau ))\) at the equilibrium point.

Definition 6

If there exists a real scaling matrix \(B\in \mathcal {R}^{n\times n}\), such that for any two solutions u(t) and v(t) of drive system (12) and response system (20) with different initial values denoted by \(\phi (0)\) and \(\pi (0)\), one has

then, drive system (12) and response system (20) are said to be globally hybrid projectively synchronized.
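In terms of the error \(v(t)-Bu(t)\), the limit condition in Definition 6 is usually stated in the following standard form, which we take here as an assumption, with \(\Vert \cdot \Vert \) denoting a vector norm on \(\mathcal {R}^n\):

\[
\lim _{t\rightarrow \infty }\Vert v(t)-Bu(t)\Vert =0.
\]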

In this paper, we use linear feedback control to realize synchronization between the drive system (12) and the response system (20). That is, the controller \(\sigma (t)\) is assumed to be

where \(K=\hbox {diag}(k_1,k_2,\ldots ,k_n)\in \mathcal {R}^{n\times n}\) is a feedback gain matrix.
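A minimal Python sketch of such a controller is given below. It assumes the control law has the form \(\sigma (t)=K(v(t)-Bu(t))\), which is consistent with the coefficients \(c_i=a_i-k_i-\bar{\beta }_{ii}\) appearing in Sect. 3. The function names are hypothetical, and the sample values are the initial states and gain matrix quoted in the numerical example of Sect. 4.

```python
import numpy as np


def sync_error(u, v, B):
    """Hybrid projective synchronization error e = v - B u."""
    return v - B @ u


def linear_feedback(u, v, B, K):
    """Assumed linear feedback law sigma = K (v - B u)."""
    return K @ sync_error(u, v, B)


# Initial states and gain quoted in the numerical example.
u0 = np.array([0.5, -0.2, -0.3, 0.1])   # drive state phi(0)
v0 = np.array([0.1, -0.2, -0.5, 0.3])   # response state pi(0)
K = np.diag([-90.0, -90.0, -92.3, -92.3])

# Complete (B = I), anti-phase (B = -I) and scaled (B = 2I) cases.
e_complete = sync_error(u0, v0, np.eye(4))
e_anti = sync_error(u0, v0, -np.eye(4))
e_scaled = sync_error(u0, v0, 2.0 * np.eye(4))
sigma0 = linear_feedback(u0, v0, np.eye(4), K)
```

The three error vectors differ only through the scaling matrix B, which is what distinguishes complete, anti-phase and general projective synchronization.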

3 Main results

In this section, some new sufficient conditions are derived to ensure that systems (12) and (20) are projectively synchronized under linear feedback control with an appropriate scaling matrix and gain matrix.

Let \(e(t)=v(t)-Bu(t)\) be the synchronization error. From (12) and (20), the error system can be obtained as

where \(\bar{\beta }=\max \{\tilde{\beta },\tilde{\beta }^*\}\) and \(\bar{{\varTheta }}=\max \{\tilde{{\varTheta }},\tilde{{\varTheta }}^*\}\).

The initial conditions associated with the error system (23) are defined as \(e(s)=\delta (s),\ s\in [-\tau ,0],\) where \(\delta (s)=(\delta _1(s),\delta _2(s),\ldots ,\delta _n(s))^T\in \mathcal {C}([-\tau ,0],\mathcal {R}^n)\).

From Definition 3, taking the Laplace transform of Eq. (23), we have

where E(s) is the Laplace transform of e(t) with \(E(s)=L(e(t))\). Moreover, the above equations can be rewritten as follows

where \({\varDelta }(s)\) is the characteristic matrix of system (23) and d(s) is the nonlinear part of system (24), given by

with \(c_i=a_i-k_i-\bar{\beta }_{ii}, \ (i=1,2,\ldots ,n)\) and

Now, assuming that \(\tau =0\), system (23) can be rewritten as

where,

If \(A=0, \bar{\beta }=0\) and \(K=0\), then (23) reduces to the system in [24], and we have the following conclusions.

Theorem 1

[24] If all the roots of the characteristic equation det\(({\varDelta }(s))=0\) have negative real parts, then the zero solution of (23) is Lyapunov asymptotically stable.

Theorem 2

[24] If \(\alpha \in (0,1),\ A=0, \bar{\beta }=0, \ K=0,\) all the eigenvalues of \(\mathcal {M}\) satisfy \(|\hbox {arg}(\lambda )|>\frac{\alpha \pi }{2}\) and the characteristic equation det\(({\varDelta }(s))=0\) has no pure imaginary roots for \(\tau >0\), then the zero solution of (23) is Lyapunov asymptotically stable.

If \(\alpha \in (0,1),\ A\ne 0, \bar{\beta }\ne 0,\ K\ne 0\), then Theorem 2 cannot be used to investigate the stability of (23). Thus, we have the following conclusion.

Theorem 3

[30] If \(\alpha \in (0,1),\) all the eigenvalues of \(\mathcal {M}\) satisfy \(|\hbox {arg}(\lambda )|>\frac{ \pi }{2}\) and the characteristic equation det\(({\varDelta }(s))=0\) has no pure imaginary roots for \(\tau >0\), then the zero solution of (23) is Lyapunov asymptotically stable.

3.1 The FMNNs with hub structure and time delays

It is well known that the hub structure is a common feature in neural networks, and it helps us to understand the mechanisms at work within complex recurrent networks. Let us consider the FMNNs with hub structure and time delays

where \(a_i>0.\) In system (29), the first neuron is the center of the hub and all the other \(n-1\) neurons are connected directly only to the central neuron and to themselves.

The corresponding response system is defined as follows

The linear forms of Eqs. (29) and (30) are as follows

From (31) and (32), the error system can be obtained as

where \(A=\hbox {diag}(a_1,\ldots ,a_n)\), \(\bar{\beta }=\left( \begin{array}{ccccc} \bar{\beta }_{11} &{} \bar{\beta }_{12} &{} \bar{\beta }_{13} &{} \cdots &{} \bar{\beta }_{1n}\\ \bar{\beta }_{21} &{} \bar{\beta }_{22} &{} 0 &{} \cdots &{} 0\\ \cdots &{} \cdots &{} \cdots &{} \cdots &{} \cdots \\ \bar{\beta }_{n1} &{} 0 &{} 0 &{} \cdots &{} \bar{\beta }_{nn} \end{array}\right) \), \({{\bar{{\varTheta }}=\left( \begin{array}{ccccc} \bar{\theta }_{1} &{} 0 &{} 0 &{} \cdots &{} 0\\ 0 &{} \bar{\theta } &{} 0 &{} \cdots &{} 0\\ \cdots &{} \cdots &{} \cdots &{} \cdots &{} \cdots \\ 0 &{} 0 &{} 0 &{} \cdots &{} \bar{\theta } \end{array}\right) }}\),

Theorem 4

Suppose that \(\alpha \in (0,1),\ \bar{a}_2-\bar{\theta }>0,\ \bar{a}_1+\bar{a}_2-\bar{\theta }_1-\bar{\theta }>0,\ (\bar{a}_1-\bar{\theta }_1)(\bar{a}_2-\bar{\theta })-\chi >0,\) where \(\bar{a}_1=a_1-k_1-\bar{\beta }_{11},\ \bar{a}_2=a_i-k_i-\bar{\beta }_{ii},\ (i=2,\ldots ,n),\ \chi =\sum _{i=2}^{n}(\bar{\beta }_{i1}\bar{\beta }_{1i}).\) Then the following statements hold.

(i) If \(\chi =0\), \(\bar{\theta }^2-\bar{a}_2^2\sin ^2\frac{\alpha \pi }{2}<0\) and \(\bar{\theta }_1^2-\bar{a}_1^2\sin ^2\frac{\alpha \pi }{2}<0,\) then the zero solution of (33) is Lyapunov asymptotically stable.

(ii) If \(\chi \ne 0\) and \(\bar{\theta }^2-\bar{a}_2^2\sin ^2\frac{\alpha \pi }{2}<0,\) then the zero solution of (33) is Lyapunov asymptotically stable.
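The conditions of Theorem 4 are simple scalar inequalities, so they can be checked mechanically. The following Python sketch does this; the function name `theorem4_holds` and the sample numbers are hypothetical and are not the parameter values of the numerical example.

```python
import math


def theorem4_holds(alpha, a1_bar, a2_bar, theta1_bar, theta_bar, chi):
    """Check the sufficient conditions of Theorem 4 for given scalars."""
    if not 0.0 < alpha < 1.0:
        return False
    # Conditions required in both cases (i) and (ii).
    common = (
        a2_bar - theta_bar > 0
        and a1_bar + a2_bar - theta1_bar - theta_bar > 0
        and (a1_bar - theta1_bar) * (a2_bar - theta_bar) - chi > 0
    )
    s2 = math.sin(alpha * math.pi / 2.0) ** 2
    leaf_ok = theta_bar ** 2 - a2_bar ** 2 * s2 < 0     # non-central neurons
    hub_ok = theta1_bar ** 2 - a1_bar ** 2 * s2 < 0     # central neuron
    if chi == 0:
        return common and leaf_ok and hub_ok            # case (i)
    return common and leaf_ok                           # case (ii)


# Illustrative values only.
ok_case_i = theorem4_holds(0.9, 5.0, 4.0, 1.0, 1.0, 0.0)
ok_case_ii = theorem4_holds(0.9, 5.0, 4.0, 1.0, 1.0, 0.4)
fails = theorem4_holds(0.9, 5.0, 4.0, 1.0, 5.0, 0.0)   # a2_bar - theta_bar < 0
```

Since \(\bar{a}_1\) and \(\bar{a}_2\) contain the gains \(k_1\) and \(k_i\), such a check can also be used to screen candidate gain matrices K before running a simulation.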

Proof

Taking the Laplace transform of Eq. (33) and using the same method as for \({\varDelta }(s)\) in (25), we have

It follows that \({\varDelta }(s)\) is an \(n\times n\) matrix (\(n\ge 3\) in the hub structure). We now compute det(\({\varDelta }(s)\)). In general, the characteristic equation det\(({\varDelta }(s))=0\) satisfies

From (35), if \(\chi =0\), then \(s^\alpha +\bar{a}_2-\bar{\theta }e^{-s\tau }=0\) or \(s^\alpha +\bar{a}_1-\bar{\theta }_1e^{-s\tau }=0,\) where \(\chi =\sum _{i=2}^{n}\bar{\beta }_{i1}\bar{\beta }_{1i}.\)

Now, we prove that det\(({\varDelta }(s))=0\) has no pure imaginary roots for any \(\tau >0.\) We will prove that by contradiction.

Suppose that there exists a pure imaginary root \(s=\zeta i=|\zeta |(\cos \frac{\pi }{2}+i \sin (\pm \frac{\pi }{2}))\) of \(s^\alpha +\bar{a}_1-\bar{\theta }_1e^{-s\tau }=0\), where \(\zeta \) is a real number. If \(\zeta >0,\) then \(s=\zeta i=|\zeta |(\cos \frac{\pi }{2}+i \sin (\frac{\pi }{2}))\), and if \(\zeta <0,\) then \(s=\zeta i=|\zeta |(\cos \frac{\pi }{2}-i \sin (\frac{\pi }{2}))\). Substituting \(s=\zeta i=|\zeta |(\cos \frac{\pi }{2}+i \sin (\pm \frac{\pi }{2}))\) into \(s^\alpha +\bar{a}_1-\bar{\theta }_1e^{-s\tau }=0\) gives

From (36), we separate the real and imaginary parts

and

Squaring and adding Eqs. (37) and (38), one can obtain

Obviously, when \(0<\alpha <1\) and \(\bar{\theta }_1^2-\bar{a}_1^2\sin ^2\frac{\alpha \pi }{2}<0,\) Eq. (39) has no real solutions, i.e., the corresponding factor of det\(({\varDelta }(s))=0\) has no pure imaginary roots for any \(\tau >0.\) Similarly, for the factor \(s^\alpha +\bar{a}_2-\bar{\theta }e^{-s\tau }=0,\) we obtain the condition \(\bar{\theta }^2-\bar{a}_2^2\sin ^2\frac{\alpha \pi }{2}<0.\) If \(\chi \ne 0\), then from (35) we have \(s^\alpha +\bar{a}_2-\bar{\theta }e^{-s\tau }=0\) and \((s^\alpha +\bar{a}_1-\bar{\theta }_1e^{-s\tau }) (s^\alpha +\bar{a}_2-\bar{\theta }e^{-s\tau })-\chi \ne 0.\) In this case, we again obtain \(\bar{\theta }^2-\bar{a}_2^2\sin ^2\frac{\alpha \pi }{2}<0.\) Therefore, conditions (i) and (ii) of Theorem 4 follow from the above.
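The computation for the factor \(s^\alpha +\bar{a}_1-\bar{\theta }_1e^{-s\tau }=0\) can be traced as follows. This is a sketch reconstructed from the surrounding text, with the shorthand \(x=|\zeta |^{\alpha }\) introduced here. It uses \(s^{\alpha }=|\zeta |^{\alpha }(\cos \frac{\alpha \pi }{2}\pm i\sin \frac{\alpha \pi }{2})\) and \(e^{-i\zeta \tau }=\cos \zeta \tau -i\sin \zeta \tau \); the three lines give the substituted equation, its real and imaginary parts, and the sum of their squares.

\[
\begin{aligned}
&|\zeta |^{\alpha }\Big (\cos \tfrac{\alpha \pi }{2}\pm i\sin \tfrac{\alpha \pi }{2}\Big )+\bar{a}_1-\bar{\theta }_1\big (\cos \zeta \tau -i\sin \zeta \tau \big )=0,\\
&|\zeta |^{\alpha }\cos \tfrac{\alpha \pi }{2}+\bar{a}_1=\bar{\theta }_1\cos \zeta \tau ,\qquad \pm |\zeta |^{\alpha }\sin \tfrac{\alpha \pi }{2}=-\bar{\theta }_1\sin \zeta \tau ,\\
&x^{2}+2\bar{a}_1\cos \tfrac{\alpha \pi }{2}\,x+\bar{a}_1^{2}-\bar{\theta }_1^{2}=0,\qquad x=|\zeta |^{\alpha }.
\end{aligned}
\]

The discriminant of this quadratic in x is \(4(\bar{\theta }_1^2-\bar{a}_1^2\sin ^2\frac{\alpha \pi }{2})\), so a negative value of \(\bar{\theta }_1^2-\bar{a}_1^2\sin ^2\frac{\alpha \pi }{2}\) rules out a real x and hence a real \(\zeta \).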

Further, we prove that all the eigenvalues of \(\mathcal {M}\) satisfy \(|\hbox {arg}(\lambda )|>\frac{\pi }{2}.\) The coefficient matrix \(\mathcal {M}\) of system (33) satisfies

By choosing \(\bar{a}_2-\bar{\theta }>0,\ \bar{a}_1+\bar{a}_2-\bar{\theta }_1-\bar{\theta }>0,\ (\bar{a}_1-\bar{\theta }_1)(\bar{a}_2-\bar{\theta })-\chi >0,\) one can see that the eigenvalues of \(\mathcal {M}\) have negative real parts, i.e., all the eigenvalues of \(\mathcal {M}\) satisfy \(|\hbox {arg}(\lambda )|>\frac{\pi }{2}\). Therefore, the proof of Theorem 4 is completed. \(\square \)
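The eigenvalue claim can be checked numerically. The sketch below assumes that \(\mathcal {M}\) has the arrow (hub) form with diagonal entries \(-(\bar{a}_1-\bar{\theta }_1)\) and \(-(\bar{a}_2-\bar{\theta })\), first row \(\bar{\beta }_{1i}\) and first column \(\bar{\beta }_{i1}\). This is the form for which the three inequalities above are exactly the Hurwitz conditions of \((\lambda +p_2)^{n-2}[(\lambda +p_1)(\lambda +p_2)-\chi ]=0\), where \(p_1=\bar{a}_1-\bar{\theta }_1\) and \(p_2=\bar{a}_2-\bar{\theta }\) are shorthand introduced here. The function name and all numbers are hypothetical.

```python
import numpy as np


def hub_matrix(p1, p2, first_row, first_col):
    """Arrow matrix for a hub network (assumed form of M).

    Diagonal: -p1 for the hub, -p2 for every other neuron; the
    off-diagonal couplings sit only in the first row and first column.
    """
    first_row = np.asarray(first_row, dtype=float)
    first_col = np.asarray(first_col, dtype=float)
    n = first_row.size + 1
    M = -p2 * np.eye(n)
    M[0, 0] = -p1
    M[0, 1:] = first_row
    M[1:, 0] = first_col
    return M


# Illustrative values only (n = 4 neurons).
p1, p2 = 4.0, 3.0
row = [0.5, 0.2, 0.3]          # couplings from the outer neurons to the hub
col = [0.4, 0.1, 0.6]          # couplings from the hub to the outer neurons
chi = float(np.dot(row, col))  # chi = sum of the products, here 0.4

M = hub_matrix(p1, p2, row, col)
eigs = np.linalg.eigvals(M)

# Roots predicted by the factorized characteristic polynomial.
quad_roots = np.roots([1.0, p1 + p2, p1 * p2 - chi])
expected = np.concatenate([quad_roots, [-p2] * (len(row) - 1)])
```

With these values \(p_2>0\), \(p_1+p_2>0\) and \(p_1p_2-\chi >0\), and the computed eigenvalues all lie in the open left half-plane, in agreement with the argument of the proof.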

Remark 3

The dynamical analysis of networks with a high-degree distribution, such as scale-free networks and complex networks, is generally complicated. In such networks, some nodes have many more connections than the other nodes; these nodes are called the hubs of the network. The existence of a hub structure is a common feature, and it plays an important role in defining the connectivity of the considered networks and in characterizing their dynamic behaviors. Hence, the analysis of the hub structure of a network is necessary and important. Recently, the dynamics of fractional-order neural networks with hub structure and ring structure were studied in [30–32]. In this paper, we study the hybrid projective synchronization of fractional-order memristor-based neural networks with hub structure and time delays.

Remark 4

The projection rate of the response system relative to the corresponding drive system depends on the scaling matrix. Moreover, projective synchronization generalizes both complete synchronization and anti-phase synchronization. In particular, if the scaling matrix is \(B=I\) or \(B=-I\), the hybrid projective synchronization of fractional-order memristor-based neural networks with hub structure and time delays reduces to complete synchronization or anti-phase synchronization of these networks, respectively.

Remark 5

In [44], the authors studied the hybrid projective synchronization of time-delayed fractional-order chaotic systems by using the stability theorem of linear fractional-order systems with multiple time delays and a nonlinear controller. In [17], some novel conditions ensuring the weak, modified and function projective synchronization of chaotic memristive neural networks with time delays were obtained by using the generalized Halanay inequality and a state feedback controller. In [50], the projective synchronization of fractional-order memristor-based neural networks was investigated, and some new sufficient conditions were derived by using a fractional-order differential inequality and an adaptive controller. The hybrid projective synchronization of fractional-order memristor-based neural networks with hub structure and time delays has not yet been investigated in the literature. In this paper, we discuss this problem by using the stability theorem of linear fractional-order systems with multiple time delays and a linear feedback controller.

4 Numerical example

In this section, a numerical example is given to show the effectiveness and feasibility of our main results. The Adams–Bashforth–Moulton predictor–corrector scheme [51] is used to obtain numerical solutions of the FMNNs with hub structure and time delays.
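To indicate how such a scheme operates, the following Python sketch implements a generic fractional Adams predictor–corrector step for a Caputo system with one constant delay. It is not the code used for the example below: it assumes that the delay is a positive integer multiple of the step size, and the function name `abm_fractional_dde`, the scalar test model and all numbers are hypothetical.

```python
import math
import numpy as np


def abm_fractional_dde(f, phi, alpha, tau, h, n_steps):
    """Predictor-corrector Adams scheme for the Caputo system
    D^alpha y(t) = f(t, y(t), y(t - tau)), 0 < alpha <= 1,
    with history y(t) = phi(t) on [-tau, 0].

    Assumes tau is a positive integer multiple of h, so every delayed
    value is either a grid value or a value of the history function.
    Returns the time grid and an array of shape (n_steps + 1, dim).
    """
    m = int(round(tau / h))
    if m < 1 or abs(m * h - tau) > 1e-12:
        raise ValueError("tau must be a positive integer multiple of h")

    y0 = np.atleast_1d(np.asarray(phi(0.0), dtype=float))
    y = np.zeros((n_steps + 1, y0.size))
    F = np.zeros_like(y)              # stored right-hand sides
    y[0] = y0

    def delayed(j):
        # y(t_j - tau): a computed grid value, or the history phi.
        if j >= m:
            return y[j - m]
        return np.atleast_1d(np.asarray(phi(j * h - tau), dtype=float))

    F[0] = f(0.0, y[0], delayed(0))
    c_pred = h ** alpha / math.gamma(alpha + 1.0)
    c_corr = h ** alpha / math.gamma(alpha + 2.0)

    for n in range(n_steps):
        k = n - np.arange(n + 1)      # k = n - j for j = 0, ..., n
        b = (k + 1.0) ** alpha - k ** alpha                    # predictor weights
        a = ((k + 2.0) ** (alpha + 1.0) + k ** (alpha + 1.0)
             - 2.0 * (k + 1.0) ** (alpha + 1.0))               # corrector weights
        a[0] = n ** (alpha + 1.0) - (n - alpha) * (n + 1.0) ** alpha  # j = 0
        t_next = (n + 1) * h
        y_delay = delayed(n + 1)      # already known because m >= 1
        y_pred = y0 + c_pred * (b @ F[: n + 1])
        f_pred = f(t_next, y_pred, y_delay)
        y[n + 1] = y0 + c_corr * (f_pred + a @ F[: n + 1])
        F[n + 1] = f(t_next, y[n + 1], y_delay)

    return h * np.arange(n_steps + 1), y


# Scalar delayed test model D^alpha e = -c e(t) + theta e(t - tau).
c, theta = 3.0, 1.0
t, e = abm_fractional_dde(lambda t, y, yd: -c * y + theta * yd,
                          lambda s: 0.5, alpha=0.9, tau=0.15, h=0.01,
                          n_steps=1000)
```

For \(\alpha =1\) the weights reduce to those of the rectangle rule (predictor) and the trapezoidal rule (corrector), which gives a quick sanity check of the implementation. The scalar test model satisfies \(c-\theta >0\) and \(\theta ^2-c^2\sin ^2\frac{\alpha \pi }{2}<0\), so its solution is expected to decay.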

Example 1

Consider the following fractional-order memristor-based neural network with hub structure and time delays as the drive system:

where \(\alpha =0.9,\ \tau =0.15, \ f(u(t))=\tanh u(t),\ a_1=3,\ a_2=2,\ a_3=2,\ a_4=2,\)

The corresponding response system is defined as follows

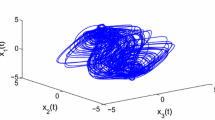

We use the same parameter values as in (40). Condition (i) of Theorem 4 is satisfied for the given parameter values. Thus, hybrid projective synchronization between the drive system (40) and the response system (41) can be achieved with the initial conditions \(\phi (0)=(0.5,-0.2,-0.3,0.1)^T\) and \( \pi (0)=(0.1,-0.2,-0.5,0.3)^T\) and the linear feedback control gain \(K=\hbox {diag}(-90,-90,-92.3,-92.3)\). The chaotic behavior of the drive FMNNs (40) and the response FMNNs (41), the convergence of the error state and the state trajectories of the drive system (40) and the response system (41) are shown in Figs. 1, 2 and 3 for the scaling matrices \(B=\hbox {diag}(1,1,1,1)\), \(B=\hbox {diag}(-1,-1,-1,-1)\) and \(B=\hbox {diag}(2,2,2,2)\), respectively.

Fig. 1 First, the chaotic attractors of the FMNNs (40) and FMNNs (41). Second, the error convergence of hybrid projective synchronization between FMNNs (40) and FMNNs (41). Third and fourth, the state trajectories of FMNNs (40) and FMNNs (41) with \(\alpha =0.9\), \(K=\hbox {diag}(-90,-90,-92.3,-92.3)\), \(\tau =0.15\) and scaling matrix \(B=\hbox {diag}(1,1,1,1)\)

Fig. 2 First, the chaotic attractors of the FMNNs (40) and FMNNs (41). Second, the error convergence of hybrid projective synchronization between FMNNs (40) and FMNNs (41). Third and fourth, the state trajectories of FMNNs (40) and FMNNs (41) with \(\alpha =0.9\), \(K=\hbox {diag}(-90,-90,-92.3,-92.3)\), \(\tau =0.15\) and scaling matrix \(B=\hbox {diag}(-1,-1,-1,-1)\)

Fig. 3 First, the chaotic attractors of the FMNNs (40) and FMNNs (41). Second, the error convergence of hybrid projective synchronization between FMNNs (40) and FMNNs (41). Third and fourth, the state trajectories of FMNNs (40) and FMNNs (41) with \(\alpha =0.9\), \(K=\hbox {diag}(-90,-90,-92.3,-92.3)\), \(\tau =0.15\) and scaling matrix \(B=\hbox {diag}(2,2,2,2)\)

5 Conclusion

In this paper, the problem of hybrid projective synchronization of fractional-order memristor-based neural networks with hub structure and time delays has been discussed. Some new sufficient conditions for projective synchronization of the addressed networks have been obtained within the framework of Filippov solutions, differential inclusion theory, the stability theorem of linear fractional-order systems and a linear feedback control technique. Moreover, when the scaling matrix is chosen as \(B=I\) (\(B=-I\)), where I is the identity matrix, projective synchronization reduces to complete synchronization (anti-synchronization) of the considered FMNNs with hub structure and time delays. Finally, a numerical example is given to demonstrate how the synchronization behavior of the considered systems depends on the choice of scaling matrix.

References

Driscoll, T., Quinn, J., Klein, S., Kim, H.T., Kim, B.J., Pershin, Y.V., Di Ventra, M., Basov, D.N.: Memristive adaptive filters. Appl. Phys. Lett. 97, 093502 (2010)

Pershin, Y.V., Di Ventra, M.: Experimental demonstration of associative memory with memristive neural networks. Neural Netw. 23, 881–886 (2010)

Chua, L.O.: Memristor—the missing circuit element. IEEE Trans. Circuit Theory 18, 507–519 (1971)

Strukov, D.B., Snider, G.S., Stewart, D.R., Williams, R.S.: The missing memristor found. Nature 453, 80–83 (2008)

Tour, J.M., He, T.: The fourth element. Nature 453, 42–43 (2008)

Wu, A., Zeng, Z.: Dynamic behaviors of memristor-based recurrent neural networks with time-varying delays. Neural Netw. 36, 1–10 (2012)

Wu, A., Zhang, J., Zeng, Z.: Dynamic behaviors of a class of memristor-based Hopfield networks. Phys. Lett. A 375, 1661–1665 (2011)

Rakkiyappan, R., Velmurugan, G., Cao, J.: Stability analysis of memristor-based fractional-order neural networks with different memductance functions. Cogn. Neurodyn. 9, 145–177 (2015)

Qi, J., Li, C., Huang, T.: Stability of delayed memristive neural networks with time-varying impulses. Cogn. Neurodyn. 8, 429–436 (2014)

Li, X., Rakkiyappan, R., Velmurugan, G.: Dissipativity analysis of memristor-based complex-valued neural networks with time-varying delays. Inf. Sci. 294, 645–665 (2015)

Rakkiyappan, R., Velmurugan, G., Cao, J.: Finite-time stability analysis of fractional-order complex-valued memristor-based neural networks with time delays. Nonlinear Dyn. 78, 2823–2836 (2014)

Rakkiyappan, R., Cao, J., Velmurugan, G.: Existence and uniform stability analysis of fractional-order complex-valued neural networks with time delays. IEEE Trans. Neural Netw. Learn. Syst. 26, 84–97 (2015)

Yang, X., Cao, J., Yu, W.: Exponential synchronization of memristive Cohen–Grossberg neural networks with mixed delays. Cogn. Neurodyn. 8, 239–249 (2014)

Wu, A., Wen, S., Zeng, Z.: Synchronization control of a class of memristor-based recurrent neural networks. Inf. Sci. 183, 106–116 (2012)

Wu, A., Zeng, Z.: Anti-synchronization control of a class of memristive recurrent neural networks. Commun. Nonlinear Sci. Numer. Simul. 18, 373–385 (2013)

Li, N., Cao, J.: New synchronization criteria for memristor-based networks: adaptive control and feedback control schemes. Neural Netw. 61, 1–9 (2015)

Wu, H., Li, R., Yao, R., Zhang, X.: Weak, modified and function projective synchronization of chaotic memristive neural networks with time delays. Neurocomputing 149, 667–676 (2015)

Wang, L., Shen, Y., Yin, Q., Zhang, G.: Adaptive synchronization of memristor-based neural networks with time-varying delays. IEEE Trans. Neural Netw. Learn. Syst. 26, 2033–2042 (2015)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Koeller, R.C.: Application of fractional calculus to the theory of viscoelasticity. J. Appl. Mech. 51, 294–298 (1984)

Heaviside, O.: Electromagnetic Theory. Chelsea, New York (1971)

Petras, I.: A note on the fractional-order cellular neural networks. In: International joint conference on neural networks, pp. 1021–1024 (2006)

Li, Y., Chen, Y., Podlubny, I.: Mittag–Leffler stability of fractional order nonlinear dynamic systems. Automatica 45, 1965–1969 (2009)

Deng, W., Li, C., Lu, J.: Stability analysis of linear fractional differential system with multiple time delays. Nonlinear Dyn. 48, 409–416 (2007)

Shen, J., Lam, J.: Non-existence of finite-time stable equilibria in fractional-order nonlinear systems. Automatica 50, 547–551 (2014)

Lundstrom, B., Higgs, M., Spain, W., Fairhall, A.: Fractional differentiation by neocortical pyramidal neurons. Nat. Neurosci. 11, 1335–1342 (2008)

Boroomand, A., Menhaj, M.: Fractional-order Hopfield neural networks. Lect. Notes Comput. Sci. 5506, 883–890 (2009)

Chen, L., Chai, Y., Wu, R., Ma, T., Zhai, H.: Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 111, 190–194 (2013)

Wu, R.C., Hei, X.D., Chen, L.P.: Finite-time stability of fractional-order neural networks with delay. Commun. Theor. Phys. 60, 189–193 (2013)

Wang, H., Yu, Y., Wen, G.: Stability analysis of fractional-order Hopfield neural networks with time delays. Neural Netw. 55, 98–109 (2014)

Kaslik, E., Sivasundaram, S.: Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw. 32, 245–256 (2012)

Wang, H., Yu, Y., Wen, G., Zhang, S.: Stability analysis of fractional-order neural networks with time delay. Neural Process. Lett. (2014). doi:10.1007/s11063-014-9368-3

Velmurugan, G., Rakkiyappan, R.: Hybrid projective synchronization of fractional-order neural networks with time delays. Mathematical Analysis and its Applications. In: Proceedings in Mathematics & Statistics, Springer, p. 143. doi:10.1007/978-81-322-2485-3

Zhang, Z., Huang, J., Liu, Z., Sun, M.: Boundary stabilization of a nonlinear viscoelastic equation with interior time-varying delay and nonlinear dissipative boundary feedback. Abstr. Appl. Anal. 2014, Article ID: 102594, pp. 1–14 (2014)

Zhang, Z., Liu, Z., Miao, X., Chen, Y.: Stability analysis of heat flow with boundary time-varying delay effect. Nonlinear Anal. Theor. 73, 1878–1889 (2010)

Pecora, L.M., Carroll, T.L.: Synchronization in chaotic systems. Phys. Rev. Lett. 64, 821–824 (1990)

Zhu, H., He, Z.S., Zhou, S.B.: Lag synchronization of the fractional-order system via nonlinear observer. Int. J. Mod. Phys. B 25, 3951–3964 (2011)

Taghvafard, H., Erjaee, G.H.: Phase and anti-phase synchronization of fractional order chaotic systems via active control. Commun. Nonlinear Sci. Numer. Simul. 16, 4079–4088 (2011)

Wang, B., Jian, J., Yu, H.: Adaptive synchronization of fractional-order memristor-based Chua’s system. Syst. Sci. Control Eng. 2, 291–296 (2014)

Wang, X.Y., He, Y.J.: Projective synchronization of fractional order chaotic system based on linear separation. Phys. Lett. A 372, 435–441 (2008)

Kuntanapreeda, S.: Robust synchronization of fractional-order unified chaotic systems via linear control. Comput. Math. Appl. 63, 183–190 (2012)

Mainieri, R., Rehacek, J.: Projective synchronization in three-dimensional chaotic systems. Phys. Rev. Lett. 82, 3042–3045 (1999)

Chee, C.Y., Xu, D.: Chaos-based M-nary digital communication technique using controller projective synchronization. In: IEE Proceedings G (Circuits, Devices and Systems) 153, pp. 357–360 (2006)

Wang, S., Yu, Y., Wen, G.: Hybrid projective synchronization of time-delayed fractional-order chaotic systems. Nonlinear Anal. Hybrid Syst. 11, 129–138 (2014)

Yu, J., Hu, C., Jiang, H., Fan, X.: Projective synchronization for fractional neural networks. Neural Netw. 49, 87–95 (2014)

Wang, S., Yu, Y.G., Diao, M.: Hybrid projective synchronization of chaotic fractional order systems with different dimensions. Phys. A 389, 4981–4988 (2010)

Zhou, P., Zhu, W.: Function projective synchronization for fractional-order chaotic systems. Nonlinear Anal. Real World Appl. 12, 811–816 (2011)

Wang, X.Y., Zhang, X.P., Ma, C.: Modified projective synchronization of fractional-order chaotic systems via active sliding mode control. Nonlinear Dyn. 69, 511–517 (2012)

Chen, J., Zeng, Z., Jiang, P.: Global Mittag–Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw. 51, 1–8 (2014)

Bao, H.B., Cao, J.: Projective synchronization of fractional-order memristor-based neural networks. Neural Netw. 63, 1–9 (2015)

Bhalekar, S., Daftardar-Gejji, V.: A predictor-corrector scheme for solving nonlinear delay differential equations of fractional order. J. Fract. Calc. Appl. 1, 1–8 (2011)

Aubin, J., Frankowska, H.: Set-Valued Analysis. Springer, New York (2009)

Filippov, A.F.: Differential equations with discontinuous right-hand side. Mat. Sb. 93, 99–128 (1960)

Additional information

The work was supported by CSIR Research Project No. 25(0237)/14/EMR-II.

Cite this article

Velmurugan, G., Rakkiyappan, R. Hybrid projective synchronization of fractional-order memristor-based neural networks with time delays. Nonlinear Dyn 83, 419–432 (2016). https://doi.org/10.1007/s11071-015-2337-1

DOI: https://doi.org/10.1007/s11071-015-2337-1