Abstract

This article studies direct numerical approaches for fractional advection–diffusion equations (ADEs). First, using a set of cubic trigonometric B-splines as test functions, a differential quadrature (DQ) method is proposed for the 1D and 2D time-fractional ADEs of order (0, 1]. The weighted coefficients are determined, and with them the original equation is transformed into a system of generalized ordinary differential equations (ODEs), which are discretized by an effective difference scheme or a Runge–Kutta method. The stability is investigated under a mild theoretical condition. Second, based on a set of cubic B-splines, we develop a new Crank–Nicolson type DQ method for the 2D space-fractional ADEs without advection. DQ approximations to fractional derivatives are introduced, and the values of the fractional derivatives of B-splines are computed from explicitly derived formulas. The presented DQ methods are evaluated on five benchmark problems and on simulations of the unsteady propagation of solitons and a Gaussian pulse. Compared with algorithms in the open literature, the numerical results confirm the validity and accuracy of the methods.


1 Introduction

Differential equations with fractional derivatives serve as superior models in subjects as diverse as astrophysics, chaotic dynamics, fractal networks, signal processing, continuum mechanics, turbulent flow and wave propagation [29, 34, 40, 51]. Such equations capture non-local memory effects, thereby filling a major gap left by classical models, which fail to describe some natural phenomena such as anomalous transport. In general, their exact solutions can seldom be expressed in closed form using elementary functions, which makes it hard to derive sufficiently valid analytic approximations; hence, there is keen interest in designing robust algorithms to study them numerically.

In this article, we aim to construct an efficient method to numerically solve the general problems:

-

(I)

1D time-fractional ADEs

$$\begin{aligned} \frac{\partial ^\alpha u(x,t)}{\partial t^\alpha }+\kappa \frac{\partial u(x,t)}{\partial x}-\varepsilon \frac{\partial ^2 u(x,t)}{\partial x^2} =f(x,t), \end{aligned}\tag{1}$$

with \(0<\alpha \le 1\), \(\kappa , \varepsilon \ge 0\), \(a\le x\le b\), \(t>0\), and the initial and boundary conditions

$$\begin{aligned}&u(x,0)=\psi (x),\quad a\le x\le b, \end{aligned}\tag{2}$$

$$\begin{aligned}&u(a,t)=g_1(t), \quad u(b,t)=g_2(t), \quad t>0; \end{aligned}\tag{3}$$

-

(II)

2D time-fractional ADEs

$$\begin{aligned}&\frac{\partial ^\alpha u(x,y,t)}{\partial t^\alpha }+\kappa _x\frac{\partial u(x,y,t)}{\partial x}+\kappa _y\frac{\partial u(x,y,t)}{\partial y}\\&\quad -\varepsilon _x\frac{\partial ^2 u(x,y,t)}{\partial x^2}-\varepsilon _y\frac{\partial ^2 u(x,y,t)}{\partial y^2} =f(x,y,t), \end{aligned}\tag{4}$$

with \(0<\alpha \le 1\), \(\kappa _x, \kappa _y, \varepsilon _x,\varepsilon _y \ge 0\), \((x,y)\in \Omega \), \(t>0\), and the initial and boundary conditions

$$\begin{aligned}&u(x,y,0)=\psi (x,y),\quad (x,y)\in \Omega , \end{aligned}\tag{5}$$

$$\begin{aligned}&u(x,y,t)=g(x,y,t), \quad (x,y)\in \partial \Omega ,\quad t>0, \end{aligned}\tag{6}$$

where \(\Omega =\{(x,y):a\le x\le b, c\le y\le d\}\) and \(\partial \Omega \) denotes its boundary;

-

(III)

2D space-fractional ADEs without advection

$$\begin{aligned}&\frac{\partial u(x,y,t)}{\partial t}-\varepsilon _x\frac{\partial ^{\beta _1} u(x,y,t)}{\partial x^{\beta _1}}\\&\quad -\varepsilon _y\frac{\partial ^{\beta _2} u(x,y,t)}{\partial y^{\beta _2}} =f(x,y,t), \end{aligned}\tag{7}$$

with \(1<\beta _1, \beta _2<2\), \(\varepsilon _x,\varepsilon _y \ge 0\), \((x,y)\in \Omega \), \(t>0\), and the initial and boundary conditions

$$\begin{aligned} u(x,y,0)&=\psi (x,y),\quad (x,y)\in \Omega , \end{aligned}\tag{8}$$

$$\begin{aligned} u(x,y,t)&=0, \quad (x,y)\in \partial \Omega ,\quad t>0, \end{aligned}\tag{9}$$

where \(\Omega \) and \(\partial \Omega \) are as defined above.

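In Eqs. (1) and (4), the time-fractional derivatives are defined in the Caputo sense, i.e.,

$$\frac{\partial ^\alpha u(x,t)}{\partial t^\alpha }=\begin{cases} \dfrac{1}{\Gamma (1-\alpha )}\displaystyle \int _0^t(t-s)^{-\alpha }\frac{\partial u(x,s)}{\partial s}\,\mathrm{d}s, & 0<\alpha <1,\\ \dfrac{\partial u(x,t)}{\partial t}, & \alpha =1, \end{cases}$$

while in Eq. (7), the space-fractional derivatives are defined in the Riemann–Liouville sense, i.e.,

$$\frac{\partial ^{\beta _1} u(x,y,t)}{\partial x^{\beta _1}}=\frac{1}{\Gamma (2-\beta _1)}\frac{\partial ^2}{\partial x^2}\int _a^x(x-\xi )^{1-\beta _1}u(\xi ,y,t)\,\mathrm{d}\xi ,\qquad \frac{\partial ^{\beta _2} u(x,y,t)}{\partial y^{\beta _2}}=\frac{1}{\Gamma (2-\beta _2)}\frac{\partial ^2}{\partial y^2}\int _c^y(y-\eta )^{1-\beta _2}u(x,\eta ,t)\,\mathrm{d}\eta ,$$

and \(\frac{\partial ^\alpha u(x,y,t)}{\partial t^\alpha }\) is defined analogously to \(\frac{\partial ^\alpha u(x,t)}{\partial t^\alpha }\), where \(\Gamma (\cdot )\) is the Gamma function. Note that Eqs. (1)–(3) and (4)–(6) reduce to the classical 1D and 2D ADEs when \(\alpha =1\), and Eqs. (7)–(9) reduce to the classical 2D diffusion equation when \(\beta _1=\beta _2=2\).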

In recent decades, fractional ADEs have been the subject of intense research. Apart from a few analytic solutions, various numerical methods have been developed for Eqs. (1)–(3) without advection, including the implicit difference method [57], the high-order finite element method (FEM) [17], Legendre wavelets and spectral Galerkin methods [11, 23], the direct discontinuous Galerkin method [13], the quadratic spline collocation method [25], the cubic B-spline collocation method (CBCM) [38], the orthogonal spline collocation method [50], the pseudo-spectral method [9], the high-order compact difference method [16], the implicit radial basis function (RBF) meshless method [24], and nonpolynomial and polynomial spline methods [12]. In [3, 5, 37, 56], algorithms based on shifted fractional Jacobi polynomials, Sinc functions and shifted Legendre polynomials, Haar wavelets, and third-kind Chebyshev wavelets were developed via integral operational matrices or collocation for Eqs. (1)–(3) with variable coefficients. A Gegenbauer polynomial spectral collocation method was proposed in [14] for the same type of equations, and a Sinc–Haar collocation method can be found in [33]. Uddin and Haq considered a radial basis interpolation approach [48]. Cui established a high-order compact exponential difference scheme [6]. Razminia et al. proposed a DQ method for time-fractional diffusion equations using Lagrange interpolation polynomials as test functions [35]. Shirzadi et al. solved 2D time-fractional ADEs with a reaction term via a local Petrov–Galerkin meshless method [39]. Gao and Sun derived two three-point combined compact alternating direction implicit (ADI) schemes for Eqs. (4)–(6) [10], both of which are highly accurate. High-dimensional space-fractional ADEs are challenging from both analytic and numerical perspectives because of their complexity and heavy computational cost, and applications of numerical methods to Eqs. (7)–(9) remain limited; for conventional algorithms, we refer the reader to [15, 28, 36, 44, 52, 54] and the references therein.

Trigonometric B-splines are a class of piecewise-defined functions constructed from algebraic trigonometric spaces and have been recognized since 1964. They are often preferred to the familiar polynomial B-splines because they tend to yield smaller errors when used as basis functions in interpolation. Nevertheless, numerical algorithms built on these basis splines are still in their infancy, and related works are limited [1, 30]. In this study, a DQ method for the general ADEs is developed, with its weighted coefficients computed from cubic trigonometric B-spline (CTB) functions. The basis splines are slightly modified for simplicity, which brings a few advantages. Difference schemes and the Runge–Kutta Gill method are introduced to discretize the resulting ODEs. The condition ensuring the stability of the time-stepping DQ method is discussed and found to be rather mild. We also propose a new cubic B-spline-based DQ method for the 2D space-fractional diffusion equations by introducing DQ approximations to fractional derivatives. The weights are determined by deriving explicit formulas for the fractional derivatives of B-splines through repeated integration by parts. The present approaches are straightforward to apply and simple to implement, and the numerical results show their superiority over some previous algorithms.

The remainder of the article is organized as follows. In Sect. 2, we outline some basic definitions and the cubic spline functions used hereinafter. In Sect. 3, we show how to determine the weighted coefficients from the CTB functions and construct a time-stepping DQ method for Eqs. (1)–(3) and Eqs. (4)–(6). Section 4 presents the stability analysis. In Sect. 5, we propose a DQ method for Eqs. (7)–(9) based on a set of cubic B-splines by explicitly computing the values of their fractional derivatives at the sampling points. Several numerical examples in Sect. 6 demonstrate the effectiveness of our methods. The last section is devoted to conclusions.

2 Preliminaries

Let \(M, N\in {\mathbb {Z}}^+\) and define the time–space lattice

$$\{(t_n,x_i):\ t_n=n\tau ,\ 0\le n\le N;\ x_i\in \Omega _h\},\qquad \Omega _h=\{x_i=a+ih:\ 0\le i\le M\},$$

with \(\tau =T/N \), \(h=(b-a)/M\) on \([0,T]\times [a,b]\). We now introduce some auxiliary results.

2.1 Fractional derivatives and their discretizations

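For a sufficiently smooth function f(x, t), the formulas

$$\begin{aligned} {^{\mathrm{C}}_0}D^\alpha _t f(x,t)&=\frac{1}{\Gamma (m-\alpha )}\int _0^t(t-s)^{m-\alpha -1}\frac{\partial ^m f(x,s)}{\partial s^m}\,\mathrm{d}s,\\ {^{\mathrm{RL}}_0}D^\alpha _t f(x,t)&=\frac{1}{\Gamma (m-\alpha )}\frac{\partial ^m}{\partial t^m}\int _0^t(t-s)^{m-\alpha -1}f(x,s)\,\mathrm{d}s \end{aligned}$$

define the \(\alpha \)-th Caputo and Riemann–Liouville derivatives, respectively, where \(m-1<\alpha <m\), \(m\in \mathbb {Z}^+\); in particular, when \(\alpha =m\), both reduce to the m-th integer-order derivative.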

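These two frequently used fractional derivatives differ only by an additive term, i.e.,

$${^{\mathrm{C}}_0}D^\alpha _t f(x,t)={^{\mathrm{RL}}_0}D^\alpha _t f(x,t)-\sum _{l=0}^{m-1}\frac{t^{l-\alpha }}{\Gamma (l+1-\alpha )}\frac{\partial ^l f(x,0)}{\partial t^l}, \tag{10}$$

see [21, 34]. Using \({^{\mathrm{RL}}_0}D^\alpha _t t^l=\frac{\Gamma (l+1)t^{l-\alpha }}{\Gamma (l+1-\alpha )}\) and a suitable scheme to discretize the Riemann–Liouville derivatives on the right side of Eq. (10), a difference scheme for the Caputo derivative can be written as

$${^{\mathrm{C}}_0}D^\alpha _t f(x,t_n)=\tau ^{-\alpha }\sum _{k=0}^{n}\omega ^\alpha _k\Big [f(x,t_{n-k})-\sum _{l=0}^{m-1}\frac{t_{n-k}^l}{l!}\frac{\partial ^l f(x,0)}{\partial t^l}\Big ]+{\mathscr {R}}_\tau , \tag{11}$$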

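with several valid choices of the discrete coefficients \(\{\omega ^\alpha _k\}_{k=0}^{n}\) [4]. Typically, we have

$$\omega ^\alpha _k=(-1)^k\binom{\alpha }{k},\qquad k=0,1,\ldots ,n, \tag{12}$$

whose truncation error is \({\mathscr {R}}_\tau ={\mathscr {O}}(\tau )\), and

$$\omega ^\alpha _k=\Big (\frac{11}{6}\Big )^\alpha \sum _{l=0}^{k}\sum _{j=0}^{l}\mu ^j\,\overline{\mu }^{\,l-j}g^\alpha _j\,g^\alpha _{l-j}\,g^\alpha _{k-l},\qquad k=0,1,\ldots ,n, \tag{13}$$

i.e., the Taylor coefficients of \(\big (\frac{11}{6}-3z+\frac{3}{2}z^2-\frac{1}{3}z^3\big )^\alpha \), with \(\mu =\frac{4}{7+\sqrt{39}\text {i}}\), \(\overline{\mu }=\frac{4}{7-\sqrt{39}\text {i}}\), \(\text {i}=\sqrt{-1}\), and

$$g^\alpha _0=1,\qquad g^\alpha _k=\Big (1-\frac{\alpha +1}{k}\Big )g^\alpha _{k-1},\quad k\ge 1, \tag{14}$$

in which case the truncation error satisfies \({\mathscr {R}}_\tau ={\mathscr {O}}(\tau ^3)\). In fact, Eq. (14) is simply the recursive form of Eq. (12), i.e., the \(g^\alpha _k\) coincide with the coefficients in Eq. (12). Moreover, the coefficients \(\{\omega ^\alpha _k\}_{k=0}^{n}\) in Eq. (12) satisfy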

-

(i)

\(\omega ^\alpha _0=1, \quad \omega ^\alpha _k< 0\), \(\forall k\ge 1\),

-

(ii)

\(\sum _{k=0}^{\infty }\omega ^\alpha _k=0, \quad \sum _{k=0}^{n-1}\omega ^\alpha _k>0\).

These properties are easily obtained from [34].
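Properties (i) and (ii) are easy to check numerically. The minimal sketch below (in Python with NumPy, our choice; the paper's computations were done in MATLAB) generates the coefficients of Eq. (12) through the recursion \(\omega ^\alpha _k=(1-\frac{\alpha +1}{k})\omega ^\alpha _{k-1}\) and inspects their signs and partial sums; `gl_coefficients` is a hypothetical helper name, not one from the paper.

```python
import numpy as np


def gl_coefficients(alpha: float, n: int) -> np.ndarray:
    """Return omega_k^alpha = (-1)^k * binom(alpha, k) for k = 0..n.

    Uses the recursion omega_0 = 1, omega_k = (1 - (alpha + 1) / k) * omega_{k-1}.
    """
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = (1.0 - (alpha + 1.0) / k) * w[k - 1]
    return w


if __name__ == "__main__":
    alpha = 0.5
    w = gl_coefficients(alpha, 2000)
    partial = np.cumsum(w)                 # sum_{k=0}^{n} omega_k for n = 0..2000
    print(w[:4])                           # leading coefficients 1, -0.5, -0.125, -0.0625
    print(bool(np.all(w[1:] < 0)))         # property (i): omega_k < 0 for k >= 1
    print(bool(np.all(partial > 0)))       # property (ii): partial sums stay positive
    print(partial[-1])                     # ... and decay slowly towards 0
```

The partial sums decay only like \(n^{-\alpha }\), so \(\sum _{k=0}^{\infty }\omega ^\alpha _k=0\) is approached slowly; it is the positivity of the finite partial sums that the stability argument in Sect. 4 relies on.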

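Setting \(0<\alpha <1\) (so that \(m=1\)), Eq. (11) reduces to

$${^{\mathrm{C}}_0}D^\alpha _t f(x,t_n)=\tau ^{-\alpha }\sum _{k=0}^{n}\omega ^\alpha _k\big [f(x,t_{n-k})-f(x,t_0)\big ]+{\mathscr {R}}_\tau . \tag{15}$$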

Note that Eq. (15) provides a smooth transition to the classical schemes when \(\alpha =1\). For instance, with \(\alpha =1\) and the coefficients \(\{\omega ^\alpha _k\}_{k=0}^{n}\) of Eq. (13), Eq. (15) becomes the four-point backward difference scheme, because these coefficients then also satisfy \(\sum _{k=0}^{\infty }\omega ^\alpha _k=0\) and all vanish except \(\omega ^\alpha _0\), \(\omega ^\alpha _1\), \(\omega ^\alpha _2\) and \(\omega ^\alpha _3\).
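The sketch below illustrates Eq. (15) together with the two coefficient families. It assumes that Eq. (13) produces the Taylor coefficients of \((\frac{11}{6}-3z+\frac{3}{2}z^2-\frac{1}{3}z^3)^\alpha \), which factor as \((\frac{11}{6})^\alpha (1-z)^\alpha (1-\mu z)^\alpha (1-\overline{\mu }z)^\alpha \) with the \(\mu \) given above. It checks that \(\alpha =1\) recovers the four-point backward difference weights, and it compares both coefficient sets on the Caputo derivative of \(t^2\), whose exact value at \(t=1\) is \(2/\Gamma (3-\alpha )\). All function names are illustrative.

```python
import numpy as np
from math import gamma


def gl_coefficients(alpha, n):
    """Coefficients of Eq. (12) via the recursion of Eq. (14)."""
    g = np.empty(n + 1)
    g[0] = 1.0
    for k in range(1, n + 1):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    return g


def bdf3_coefficients(alpha, n):
    """Taylor coefficients of (11/6 - 3z + 3z^2/2 - z^3/3)^alpha, k = 0..n.

    ASSUMED form of Eq. (13): product of the series of (1 - z)^alpha,
    (1 - mu z)^alpha and (1 - conj(mu) z)^alpha, scaled by (11/6)^alpha.
    """
    mu = 4.0 / (7.0 + np.sqrt(39.0) * 1j)
    g = gl_coefficients(alpha, n)
    k = np.arange(n + 1)
    series_mu = g * mu**k               # coefficients of (1 - mu z)^alpha
    series_mubar = g * np.conj(mu)**k   # coefficients of (1 - conj(mu) z)^alpha
    w = np.convolve(np.convolve(g, series_mu)[: n + 1], series_mubar)[: n + 1]
    return (11.0 / 6.0) ** alpha * w.real  # imaginary parts cancel


def caputo_eq15(fvals, w, tau, alpha):
    """Eq. (15) at t_n: tau^-alpha * sum_{k=0}^{n} w_k (f(t_{n-k}) - f(t_0))."""
    n = len(fvals) - 1
    diffs = fvals[::-1] - fvals[0]      # entry k holds f(t_{n-k}) - f(t_0)
    return tau ** (-alpha) * np.dot(w[: n + 1], diffs)


if __name__ == "__main__":
    print(bdf3_coefficients(1.0, 5))    # 11/6, -3, 3/2, -1/3, then zeros
    alpha, N = 0.5, 100
    t = np.linspace(0.0, 1.0, N + 1)
    exact = 2.0 / gamma(3.0 - alpha)    # Caputo derivative of t^2 at t = 1
    for name, w in (("Eq. (12)", gl_coefficients(alpha, N)),
                    ("Eq. (13)", bdf3_coefficients(alpha, N))):
        print(name, abs(caputo_eq15(t**2, w, 1.0 / N, alpha) - exact))
```

On this smooth test function one should see a far smaller error with the coefficients (13) than with (12), in line with the stated \({\mathscr {O}}(\tau ^3)\) and \({\mathscr {O}}(\tau )\) truncation errors.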

2.2 Cubic spline functions

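Let \(x_{-i}=a-ih\), \(x_{M+i}=b+ih\), \(i=1,2,3\), be six ghost knots outside [a, b]. Then the CTB basis functions \(\{\mathrm{CTB}_m(x)\}_{m=-1}^{M+1}\) are defined as [30, 49]

$$\mathrm{CTB}_m(x)=\frac{1}{w}T_m(x),$$

where

$$T_m(x)=\begin{cases} p^3(x_{m-2}), & x\in [x_{m-2},x_{m-1}),\\ p(x_{m-2})\big [p(x_{m-2})q(x_m)+q(x_{m+1})p(x_{m-1})\big ]+q(x_{m+2})p^2(x_{m-1}), & x\in [x_{m-1},x_m),\\ q(x_{m+2})\big [p(x_{m-1})q(x_{m+1})+q(x_{m+2})p(x_m)\big ]+p(x_{m-2})q^2(x_{m+1}), & x\in [x_m,x_{m+1}),\\ q^3(x_{m+2}), & x\in [x_{m+1},x_{m+2}),\\ 0, & \text{otherwise}, \end{cases}$$

with the notations

$$p(x_j)=\sin \Big (\frac{x-x_j}{2}\Big ),\qquad q(x_j)=\sin \Big (\frac{x_j-x}{2}\Big ),\qquad w=\sin \Big (\frac{h}{2}\Big )\sin (h)\sin \Big (\frac{3h}{2}\Big ).$$

The values of \(\mathrm{CTB}_m(x)\) at the knots are given by

$$\mathrm{CTB}_m(x_k)=\begin{cases} A_0, & k=m,\\ A_1, & k=m\pm 1,\\ 0, & \text{otherwise}, \end{cases}\qquad A_0=\frac{2}{1+2\cos (h)},\quad A_1=\sin ^2\Big (\frac{h}{2}\Big )\csc (h)\csc \Big (\frac{3h}{2}\Big ), \tag{16}$$

and the values of \(\mathrm{CTB}'_m(x)\) at the knots are given by

$$\mathrm{CTB}'_m(x_k)=\begin{cases} \frac{3}{4}\csc \big (\frac{3h}{2}\big ), & k=m-1,\\ -\frac{3}{4}\csc \big (\frac{3h}{2}\big ), & k=m+1,\\ 0, & \text{otherwise}. \end{cases} \tag{17}$$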

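Using the same grid, the cubic B-spline basis functions \(\{B_m(x)\}_{m=-1}^{M+1}\) are defined by

$$B_m(x)=\frac{1}{h^3}\begin{cases} \varphi _1(x), & x\in [x_{m-2},x_{m-1}),\\ \varphi _2(x), & x\in [x_{m-1},x_m),\\ \varphi _3(x), & x\in [x_m,x_{m+1}),\\ \varphi _4(x), & x\in [x_{m+1},x_{m+2}),\\ 0, & \text{otherwise}, \end{cases}$$

with the piecewise functions

$$\begin{aligned} \varphi _1(x)&=(x-x_{m-2})^3, & \varphi _2(x)&=(x-x_{m-2})^3-4(x-x_{m-1})^3,\\ \varphi _3(x)&=(x_{m+2}-x)^3-4(x_{m+1}-x)^3, & \varphi _4(x)&=(x_{m+2}-x)^3. \end{aligned}$$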

Both \(\{\mathrm{CTB}_m(x)\}_{m=-1}^{M+1}\) and \(\{B_m(x)\}_{m=-1}^{M+1}\) are compactly supported and twice continuously differentiable. Since the knots \(x_{-1}\), \(x_{M+1}\) lie outside [a, b] and the weights associated with the basis splines centered at these knots do not enter the actual computation, we follow [26], where this was done for cubic B-splines, and modify the CTBs by

for simplicity; this results in a strictly tri-diagonal algebraic system after discretization on the uniform grid. The functions \(\{\mathrm{MTB}_m(x)\}_{m=0}^{M}\) are also linearly independent and form a basis of a spline space.
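To make the knot values concrete, the sketch below evaluates a single CTB piecewise and compares it with the closed forms. It assumes the standard form used in the trigonometric B-spline literature, with \(p(x_j)=\sin ((x-x_j)/2)\), \(q(x_j)=\sin ((x_j-x)/2)\) and \(w=\sin (h/2)\sin (h)\sin (3h/2)\), and the closed forms \(A_0=2/(1+2\cos h)\), \(A_1=\sin ^2(h/2)\csc (h)\csc (3h/2)\) and \(|\mathrm{CTB}'_m(x_{m\pm 1})|=z=\frac{3}{4}\csc (3h/2)\). The helper names `ctb` and `knot_values` are hypothetical.

```python
import numpy as np


def ctb(x, m, x0, h):
    """Evaluate CTB_m at a scalar x; knots x_j = x0 + j*h (standard form, assumed)."""
    knot = lambda j: x0 + j * h
    p = lambda j: np.sin((x - knot(j)) / 2.0)
    q = lambda j: np.sin((knot(j) - x) / 2.0)
    w = np.sin(h / 2.0) * np.sin(h) * np.sin(1.5 * h)
    if knot(m - 2) <= x < knot(m - 1):
        val = p(m - 2) ** 3
    elif knot(m - 1) <= x < knot(m):
        val = p(m - 2) * (p(m - 2) * q(m) + q(m + 1) * p(m - 1)) + q(m + 2) * p(m - 1) ** 2
    elif knot(m) <= x < knot(m + 1):
        val = q(m + 2) * (p(m - 1) * q(m + 1) + q(m + 2) * p(m)) + p(m - 2) * q(m + 1) ** 2
    elif knot(m + 1) <= x < knot(m + 2):
        val = q(m + 2) ** 3
    else:
        val = 0.0  # outside the support [x_{m-2}, x_{m+2})
    return val / w


def knot_values(h):
    """Closed forms: A0 = CTB_m(x_m), A1 = CTB_m(x_{m+-1}), z = |CTB_m'(x_{m+-1})|."""
    A0 = 2.0 / (1.0 + 2.0 * np.cos(h))
    A1 = np.sin(h / 2.0) ** 2 / (np.sin(h) * np.sin(1.5 * h))
    z = 0.75 / np.sin(1.5 * h)
    return A0, A1, z


if __name__ == "__main__":
    h, x0, m = 0.1, 0.0, 5
    print(knot_values(h))
    print([ctb(x0 + j * h, m, x0, h) for j in (m - 1, m, m + 1)])
```

The derivative values can be checked the same way with a central difference at \(x_{m\pm 1}\); the spline is rising at \(x_{m-1}\) and falling at \(x_{m+1}\), which fixes the signs \(\pm z\).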

3 Description of CTB-based DQ method

On a 2D domain \([a,b]\times [c,d]\), let \(M_x, M_y\in {\mathbb {Z}}^+\); we introduce a uniform spatial lattice with spacing \(h_x=(b-a)/M_x\) in the x-direction and \(h_y=(d-c)/M_y\) in the y-direction, i.e.,

$$\Omega _x=\{x_i=a+ih_x:\ 0\le i\le M_x\},\qquad \Omega _y=\{y_j=c+jh_y:\ 0\le j\le M_y\}.$$

The DQ method is a numerical technique that reduces a differential equation to a system of algebraic or ordinary differential equations by replacing the spatial partial derivatives with weighted combinations of the function values at certain grid points over the whole domain [2]. Its key steps are the selection of test functions whose derivative values are explicitly available at the prescribed grid points and the determination of the weights. Accordingly, for 1D problems we let

$$\frac{\mathrm{d}^s u(x_i)}{\mathrm{d}x^s}\approx \sum _{j=0}^{M}a_{ij}^{(s)}u(x_j), \tag{19}$$

while for 2D problems, we let

$$\frac{\partial ^s u(x_i,y_j)}{\partial x^s}\approx \sum _{m=0}^{M_x}a_{im}^{(s)}u(x_m,y_j), \tag{20}$$

$$\frac{\partial ^s u(x_i,y_j)}{\partial y^s}\approx \sum _{m=0}^{M_y}b_{jm}^{(s)}u(x_i,y_m), \tag{21}$$

where \(s\in {\mathbb {Z}}^+\), \(0\le i\le M_x\), \(0\le j\le M_y\), and \(a_{ij}^{(s)}\), \(a_{im}^{(s)}\), \(b_{jm}^{(s)}\) are the weighted coefficients used to approximate the s-th derivatives or partial derivatives at the given grid points.

3.1 The calculation of weighted coefficients

In the sequel, we use \(\{\mathrm{MTB}_m(x)\}_{m=0}^{M}\) to compute the unknown weights in 1D and 2D. Setting \(s=1\) and substituting these basis splines into Eq. (19), we get

$$\sum _{j=0}^{M}a_{ij}^{(1)}\mathrm{MTB}_m(x_j)=\mathrm{MTB}'_m(x_i),\qquad m=0,1,\ldots ,M,$$

with the weighted coefficients of the first-order derivative \(a_{ij}^{(1)}\), \(0\le i,j\le M\), yet to be determined. In view of (18) and the properties (16)–(17), these equations can be written in the matrix–vector forms

$$\mathbf A \mathbf a ^{(1)}_k=\mathbf Z _k,\qquad 0\le k\le M, \tag{22}$$

where \(\mathbf A \) is the \((M+1)\times (M+1)\) coefficient matrix

\(\mathbf a ^{(1)}_k\), \(0\le k\le M\), are the weighted coefficient vectors at \(x_k\), i.e., \(\mathbf a ^{(1)}_k=[a_{k0}^{(1)},a_{k1}^{(1)},\ldots ,a_{kM}^{(1)}]^\mathrm{{T}}\), and the right-side vectors \(\mathbf Z _k\) at \(x_k\), \(0\le k\le M\), are as follows

with \(z=\frac{3}{4}\csc \left( \frac{3h}{2}\right) \). Thus, \(a_{ij}^{(1)}\) are obtained by solving Eqs. (22) for each point \(x_i\). There are two ways to derive the weighted coefficients \(a_{ij}^{(2)}\) of the second-order derivative: (i) proceed as above with \(s=2\) in Eq. (19) and solve an algebraic system for each grid point; (ii) compute the weighted coefficients \(a_{ij}^{(s)}\), \(s\ge 2\), of the higher-order derivatives recursively [41], i.e.,

which includes \(s=2\) as a special case. The former is less efficient because additional algebraic systems must be solved first, so the latter is adopted throughout our computations. Proceeding as before with \(\Omega _h\) replaced by \(\Omega _x\) and \(\Omega _y\) yields the 2D weights \(a^{(1)}_{im}\), \(b^{(1)}_{jm}\) of the first-order partial derivatives with respect to x and y in Eqs. (20)–(21); from these, the relations

are further applied to calculate \(a^{(s)}_{im}\), \(b^{(s)}_{jm}\) with \(s\ge 2\).
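The computation of \(a_{ij}^{(1)}\) from Eq. (22) can be sketched as follows. Only knot values enter, so the code needs just \(A_0\), \(A_1\) and \(z\). The boundary modification is an assumption on our part: we take it to mirror the modification commonly used for modified cubic B-splines, i.e., \(\mathrm{MTB}_0=\mathrm{CTB}_0+2\mathrm{CTB}_{-1}\), \(\mathrm{MTB}_1=\mathrm{CTB}_1-\mathrm{CTB}_{-1}\), mirrored at \(x_M\), and \(\mathrm{MTB}_m=\mathrm{CTB}_m\) otherwise, which yields a strictly tri-diagonal matrix. For brevity, a dense solver is called; the Thomas algorithm would be used in practice. `mctb_dq_weights` is a hypothetical name.

```python
import numpy as np


def mctb_dq_weights(M, a=0.0, b=1.0):
    """First-order DQ weights a_ij^(1) on a uniform grid of M + 1 points.

    Row i of the returned matrix holds a_i0, ..., a_iM. The boundary
    modification of the CTBs is an ASSUMPTION (see the lead-in).
    """
    assert M >= 3
    h = (b - a) / M
    A0 = 2.0 / (1.0 + 2.0 * np.cos(h))                       # CTB_m(x_m)
    A1 = np.sin(h / 2) ** 2 / (np.sin(h) * np.sin(1.5 * h))  # CTB_m(x_{m+-1})
    z = 0.75 / np.sin(1.5 * h)                               # |CTB_m'(x_{m+-1})|
    # V[m + 1, j] = CTB_m(x_j) and D[m + 1, j] = CTB_m'(x_j), m = -1..M+1, j = 0..M
    V = np.zeros((M + 3, M + 1))
    D = np.zeros((M + 3, M + 1))
    for m in range(-1, M + 2):
        for j, val, der in ((m - 1, A1, z), (m, A0, 0.0), (m + 1, A1, -z)):
            if 0 <= j <= M:
                V[m + 1, j] = val
                D[m + 1, j] = der
    # T maps CTB_{-1..M+1} to MTB_{0..M} (assumed modification)
    T = np.zeros((M + 1, M + 3))
    T[np.arange(M + 1), np.arange(1, M + 2)] = 1.0
    T[0, 0], T[1, 0] = 2.0, -1.0              # MTB_0 += 2 CTB_-1, MTB_1 -= CTB_-1
    T[M, M + 2], T[M - 1, M + 2] = 2.0, -1.0  # mirrored at x_M
    A = T @ V  # A[m, j] = MTB_m(x_j), strictly tri-diagonal
    Z = T @ D  # Z[m, i] = MTB_m'(x_i), so column i is the right-hand side Z_i
    # Eq. (22): A a_i = Z_i for every grid point x_i (all columns at once)
    return np.linalg.solve(A, Z).T


if __name__ == "__main__":
    for M in (10, 20, 40):
        x = np.linspace(0.0, 1.0, M + 1)
        W = mctb_dq_weights(M)
        print(M, np.max(np.abs(W @ np.sin(x) - np.cos(x))))
```

Applying the weights to samples of \(\sin x\) is a quick sanity check: the maximum error against \(\cos x\) should shrink as M grows.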

Note that \(A_0,\ A_1>0\) when \(0<h<1\) (and likewise for \(0<h_x,\ h_y<1\)). Regarding \(A_0,\ A_1\) as functions of h, we obtain their derivatives

For \(0<h<1\), both derivatives are positive, i.e., \(A_0,\ A_1\) are increasing functions of h. Moreover, \(A_0(0)=2/3\approx 0.6667\) (understood as the limit \(h\rightarrow 0^+\)) and \(A_1(1)\approx 0.2738\). It then suffices to note that

$$A_0(h)>A_0(0)\approx 0.6667>0.5477\approx 2A_1(1)>2A_1(h),\qquad 0<h<1,$$

which implies \(A_0>2A_1\); thus \(\mathbf A \) is a strictly diagonally dominant tri-diagonal matrix. Hence, the Thomas algorithm can be applied to solve the algebraic systems (22); it requires only \({\mathscr {O}}(M+1)\) arithmetic operations and greatly saves memory and computing time in practice.
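Both the diagonal-dominance argument and the Thomas algorithm are easy to check numerically. The sketch below evaluates \(A_0(h)\) and \(A_1(h)\) on a grid of \(h\in (0,1)\) and confirms \(A_0>2A_1\) there (a numerical check, not a proof), then solves a tri-diagonal system with the Thomas algorithm; `thomas` is a generic textbook implementation, not code from the paper.

```python
import numpy as np


def thomas(lower, diag, upper, rhs):
    """Solve a tri-diagonal system in O(n) operations without pivoting.

    lower[i] multiplies x[i-1] and upper[i] multiplies x[i+1]; lower[0] and
    upper[-1] are ignored. Pivoting is unnecessary for strictly diagonally
    dominant matrices.
    """
    n = len(diag)
    c = np.zeros(n)
    d = np.zeros(n)
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):  # forward elimination
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = np.empty(n)
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def ctb_knot_values(h):
    """A0(h) = CTB_m(x_m) and A1(h) = CTB_m(x_{m+-1}); works on arrays of h."""
    A0 = 2.0 / (1.0 + 2.0 * np.cos(h))
    A1 = np.sin(h / 2.0) ** 2 / (np.sin(h) * np.sin(1.5 * h))
    return A0, A1


if __name__ == "__main__":
    hs = np.linspace(1e-3, 0.999, 500)
    A0, A1 = ctb_knot_values(hs)
    print(bool(np.all(A0 > 2.0 * A1)))   # diagonal dominance on the sampled h
    n = 12
    a0, a1 = ctb_knot_values(0.1)
    print(thomas(np.full(n, a1), np.full(n, a0), np.full(n, a1), np.ones(n)))
```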

3.2 Construction of CTB-based DQ method

In this subsection, a DQ method based on \(\{\mathrm{MTB}_m(x)\}_{m=0}^{M}\) (MCTB-DQM) is constructed for Eqs. (1)–(3) and Eqs. (4)–(6). Let \(s=1\), 2. Substituting the weighted sums (19) and (20)–(21) into the governing equations gives

with \(i=0,1,\ldots ,M\), and

with \(i=0,1,\ldots ,M_x\), \(j=0,1,\ldots ,M_y\). These are systems of \(\alpha \)-th order generalized ODEs subject to the boundary constraints (3), (6), and we treat the cases \(\alpha \in (0,1)\) and \(\alpha =1\) separately. In what follows, we employ the notations

for ease of exposition, where \(n=0,1,\ldots ,N\).

3.2.1 The case of fractional order

Discretizing the ODEs above by the difference scheme (15) and imposing boundary constraints, we have

with \(i=1,2,\ldots ,M-1\) and

for Eqs. (1)–(3), and the following scheme

with \(i=1,2,\ldots ,M_x-1\), \(j=1,2,\ldots ,M_y-1\), and

for Eqs. (4)–(6). Eqs. (23)–(24) can further be rewritten in matrix–vector form. For instance, letting

for Eqs. (24), we have

where

with \(\mathbf I _x\), \(\mathbf I _y\) denoting the identity matrices associated with the x- and y-directions, “\(\otimes \)” the Kronecker product, and

in which \(z=x\) if \(w=a\), and \(z=y\) if \(w=b\). The initial states are obtained from Eqs. (2), (5). The approximate solutions are then computed by writing Eqs. (23)–(24) in matrix–vector form and marching them up to the last time level.
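The time marching can be sketched as below. We assume the fully discrete 1D scheme takes the form \((\mathbf I +\tau ^\alpha \mathbf K )\mathbf U ^n=-\sum _{k=1}^{n-1}\omega ^\alpha _k\mathbf U ^{n-k}+(\sum _{k=0}^{n-1}\omega ^\alpha _k)\mathbf U ^0+\tau ^\alpha \mathbf F ^n\) with the coefficients (12), which is our reading of Eq. (15) with \(\omega ^\alpha _0=1\). To keep the block short and self-contained, second-order central differences stand in for the MCTB-DQ weight matrices, and the manufactured solution \(u=e^x(1+t^2)\) (not one of the paper's examples) supplies the forcing term via \({^{\mathrm{C}}_0}D^\alpha _t t^2=2t^{2-\alpha }/\Gamma (3-\alpha )\). All names are hypothetical.

```python
import numpy as np
from math import gamma


def gl_coefficients(alpha, n):
    """Coefficients of Eq. (12) via their recursion."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = (1.0 - (alpha + 1.0) / k) * w[k - 1]
    return w


def solve_1d_tfade(alpha, kappa, eps, M, N, T, exact, forcing):
    """March (I + tau^a K) U^n = -sum_{k=1}^{n-1} w_k U^{n-k} + (sum_{k<n} w_k) U^0 + tau^a F^n."""
    x = np.linspace(0.0, 1.0, M + 1)
    h, tau = x[1] - x[0], T / N
    # Stand-in weight matrices (central differences), NOT the MCTB-DQ weights.
    off = np.ones(M)
    D1 = (np.diag(off, 1) - np.diag(off, -1)) / (2.0 * h)
    D2 = (np.diag(off, 1) - 2.0 * np.eye(M + 1) + np.diag(off, -1)) / h**2
    K = kappa * D1 - eps * D2
    A = np.eye(M + 1) + tau**alpha * K
    A[[0, -1], :] = 0.0                 # Dirichlet rows: U_0^n = g1, U_M^n = g2
    A[0, 0] = A[-1, -1] = 1.0
    w = gl_coefficients(alpha, N)
    U = np.zeros((N + 1, M + 1))
    U[0] = exact(x, 0.0)                # initial state from the initial condition
    for n in range(1, N + 1):
        t = n * tau
        # history term: rows U^{n-1}, ..., U^1 pair with w_1, ..., w_{n-1}
        rhs = tau**alpha * forcing(x, t) + w[:n].sum() * U[0] - w[1:n] @ U[n - 1:0:-1]
        rhs[0], rhs[-1] = exact(x[0], t), exact(x[-1], t)
        U[n] = np.linalg.solve(A, rhs)
    return x, U[-1]


if __name__ == "__main__":
    alpha, kappa, eps = 0.5, 1.0, 2.0
    exact = lambda x, t: np.exp(x) * (1.0 + t**2)
    forcing = lambda x, t: np.exp(x) * (2.0 * t**(2.0 - alpha) / gamma(3.0 - alpha)
                                        + (kappa - eps) * (1.0 + t**2))
    x, U = solve_1d_tfade(alpha, kappa, eps, 20, 500, 0.5, exact, forcing)
    print(np.max(np.abs(U - exact(x, 0.5))))
```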

3.2.2 The case of integer order

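When \(\alpha =1\) and the coefficients (13) are used, \(\omega ^1_0=11/6\approx 1.8333\), \(\omega ^1_1=-3\), \(\omega ^1_2=1.5\), \(\omega ^1_3=-1/3\approx -0.3333\) are the coefficients of the four-point backward difference scheme; since this is a multistep scheme, starting values whose errors have the same convergence rate are generally needed to start Eqs. (23)–(24). This situation does not arise if the coefficients \(\{\omega _k^\alpha \}_{k=0}^n\) in Eq. (12) are used. In the case \(\alpha =1\), to make the algorithm more cost-effective, we instead use the Runge–Kutta Gill method, which is explicit and fourth-order accurate, to handle the ODEs. Rearranging the ODEs in the unified form

$$\frac{\mathrm{d}\mathbf u }{\mathrm{d}t}=\mathbf F (\mathbf u ), \tag{26}$$

the DQ method is then constructed as follows:

$$\begin{aligned} \mathbf k _1&=\mathbf F (\mathbf U ^{n-1}),\\ \mathbf k _2&=\mathbf F \Big (\mathbf U ^{n-1}+\frac{\tau }{2}\mathbf k _1\Big ),\\ \mathbf k _3&=\mathbf F \Big (\mathbf U ^{n-1}+\frac{\sqrt{2}-1}{2}\tau \mathbf k _1+\frac{2-\sqrt{2}}{2}\tau \mathbf k _2\Big ),\\ \mathbf k _4&=\mathbf F \Big (\mathbf U ^{n-1}-\frac{\sqrt{2}}{2}\tau \mathbf k _2+\frac{2+\sqrt{2}}{2}\tau \mathbf k _3\Big ),\\ \mathbf U ^{n}&=\mathbf U ^{n-1}+\frac{\tau }{6}\Big [\mathbf k _1+(2-\sqrt{2})\mathbf k _2+(2+\sqrt{2})\mathbf k _3+\mathbf k _4\Big ], \end{aligned}\tag{27}$$

where \(\mathbf u \) and \(\mathbf U ^{n}\), \(n=1,2,\ldots ,N\), are the unknown vectors, and \(\mathbf F (\cdot )\) denotes the matrix–vector system corresponding to the weighted sums in the ODEs, with \(a^{(s)}_{ij}\) or \(a^{(s)}_{im}\), \(b^{(s)}_{jm}\), \(s=1\), 2, as its entries. The boundary constraints (3), (6) must be imposed on \(\mathbf F (\cdot )\) in the same way as in the fractional case before the procedure (27) can be run.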
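For reference, one step of the Runge–Kutta Gill method is sketched below with the classical Gill coefficients, which involve \(\sqrt{2}\). `F` is any callable returning the right-hand side; in the DQ setting it would apply the weighted matrices and boundary constraints, which are not reproduced here. The names `rk_gill_step` and `integrate` are hypothetical.

```python
import numpy as np

SQ2 = np.sqrt(2.0)


def rk_gill_step(F, u, tau):
    """One Runge-Kutta-Gill step for the autonomous system du/dt = F(u)."""
    k1 = F(u)
    k2 = F(u + 0.5 * tau * k1)
    k3 = F(u + tau * ((SQ2 - 1.0) / 2.0 * k1 + (2.0 - SQ2) / 2.0 * k2))
    k4 = F(u + tau * (-SQ2 / 2.0 * k2 + (2.0 + SQ2) / 2.0 * k3))
    return u + tau / 6.0 * (k1 + (2.0 - SQ2) * k2 + (2.0 + SQ2) * k3 + k4)


def integrate(F, u0, T, N):
    """Advance u0 from t = 0 to t = T with N equal Gill steps."""
    u = np.asarray(u0, dtype=float)
    for _ in range(N):
        u = rk_gill_step(F, u, T / N)
    return u


if __name__ == "__main__":
    F = lambda u: -u                    # test problem with solution exp(-t)
    for N in (10, 20, 40):
        print(N, abs(integrate(F, [1.0], 1.0, N)[0] - np.exp(-1.0)))
```

On this linear test problem, halving the step should reduce the error by roughly a factor of 16, reflecting fourth-order accuracy.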

4 Stability analysis

This section studies the matrix stability of Eqs. (26) and the numerical stability of Eqs. (23)–(24). When \(\alpha =1\), we rewrite Eqs. (26) as

$$\frac{\mathrm{d}\mathbf u }{\mathrm{d}t}=-\mathbf K \mathbf u +\mathbf Q , \tag{28}$$

where \(\mathbf Q \) is a vector containing the right-hand side and the boundary conditions, and \(\mathbf K \) is the weighted matrix mentioned before. We discuss the homogeneous case. The numerical stability of an algorithm for the ODEs generated by a DQ method relies on the stability of the ODEs themselves: only when their solutions are stable can a well-known method such as the Runge–Kutta Gill method yield convergent solutions. For their stability, it suffices that the real parts of all eigenvalues of the weighted matrix \(-\mathbf K \) be non-positive. Denote by \({\varvec{\lambda }}_z^c\) the row vector of the eigenvalues of \(\mathbf W ^{c}_z\), with \(z=x,y\) and \(c=1,2\). By the properties of the Kronecker product, the eigenvalues of \(\mathbf W ^{c}_y\otimes \mathbf I _x\) and \(\mathbf I _y\otimes \mathbf W ^{c}_x\) are \({\varvec{\lambda }}^{c}_y\otimes \mathbf e _x\) and \(\mathbf e _y\otimes {\varvec{\lambda }}^{c}_x\) (see [22]), respectively; therefore, the eigenvalues of \(-\mathbf K \) in Eq. (28) are

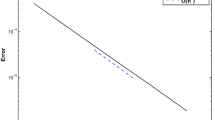

where \(\mathbf e _x\), \(\mathbf e _y\) are row vectors of sizes \(M_x+1\) and \(M_y+1\), respectively, with all components equal to 1. The exact solution of the ODEs is governed by \(\varvec{\lambda }\), and the condition \(\text {Re}\{\varvec{\lambda }\}\le 0\) is easy to meet because \({\varvec{\lambda }}_z^2\) are always found to be real and negative, while \({\varvec{\lambda }}_z^1\) are complex with real parts very close to zero; see Fig. 1 for an example. Moreover, the foregoing analysis is also valid for the 1D case, and the behavior shown in Fig. 1 becomes more pronounced as the grid numbers increase. Hence, we conclude that the ODEs are stable in most cases.
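The Kronecker-product step can be verified on small matrices. The sketch below uses symmetric second-difference matrices as hypothetical stand-ins for \(\mathbf W ^{2}_x\) and \(\mathbf W ^{2}_y\) and checks that the spectrum of \(\mathbf W _y\otimes \mathbf I _x+\mathbf I _y\otimes \mathbf W _x\) consists of all sums \(\lambda _y+\lambda _x\). The additivity holds here because the two Kronecker terms commute; for sums that also mix different weight matrices in the same direction, it additionally requires those matrices to commute.

```python
import numpy as np


def second_diff(n, h):
    """Symmetric stand-in for W^2 (interior central differences): real, negative eigenvalues."""
    return (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
            + np.diag(np.ones(n - 1), -1)) / h**2


def predicted_eigs(Wx, Wy):
    """All sums lambda_y[i] + lambda_x[j], ordered like kron(Wy, I_x) + kron(I_y, Wx)."""
    lx, ly = np.linalg.eigvals(Wx), np.linalg.eigvals(Wy)
    return (ly[:, None] + lx[None, :]).ravel()


if __name__ == "__main__":
    Wx, Wy = second_diff(5, 0.2), second_diff(4, 0.25)
    big = np.kron(Wy, np.eye(5)) + np.kron(np.eye(4), Wx)
    print(np.sort(np.linalg.eigvalsh(big)))
    print(np.sort(predicted_eigs(Wx, Wy).real))
```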

The numerical stability of fully discrete DQ methods is difficult to analyze, and results on it remain sparse [42, 43]. In the sequel, we show the conditional stability of Eqs. (23)–(24) in the \(L_2\)-norm \(||\cdot ||\); the analysis is not restricted to the fractional case. Without loss of generality, we consider the 2D case and the discrete coefficients \(\{\omega ^\alpha _k\}_{k=0}^{n}\) in Eq. (12). Let \(\tilde{\mathbf{U }}^0\) be an approximation of the initial values \(\mathbf U ^0\). Then

On subtracting Eq. (29) from (25) and letting \(\mathbf e ^n=\mathbf U ^n-\tilde{\mathbf{U }}^n\), we have the perturbation equation

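$$(\mathbf I +\tau ^\alpha \mathbf K )\mathbf e ^n=-\sum _{k=1}^{n-1}\omega ^\alpha _k\mathbf e ^{n-k}+\Big (\sum _{k=0}^{n-1}\omega ^\alpha _k\Big )\mathbf e ^0,\qquad n\ge 1, \tag{30}$$

where \(\mathbf I \) is the identity matrix of the same size as \(\mathbf K \). To prove \(||\mathbf e ^n||\le ||\mathbf e ^0||\), we make the assumption

$$||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\le 1. \tag{31}$$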

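When \(n=1\), taking \(||\cdot ||\) on both sides of Eq. (30) gives \(||\mathbf e ^1||\le ||\mathbf e ^0||\) directly, by (31) and \(\omega ^\alpha _0=1\). Now suppose that

$$||\mathbf e ^k||\le ||\mathbf e ^0||,\qquad k=1,2,\ldots ,n-1.$$

By mathematical induction, it then follows from (31) and the properties of \(\{\omega ^\alpha _k\}_{k=0}^{n}\) stated in Sect. 2 that

$$||\mathbf e ^n||\le \sum _{k=1}^{n-1}(-\omega ^\alpha _k)||\mathbf e ^{n-k}||+\Big (\sum _{k=0}^{n-1}\omega ^\alpha _k\Big )||\mathbf e ^0||\le \omega ^\alpha _0||\mathbf e ^0||=||\mathbf e ^0||.$$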

We now investigate assumption (31) numerically to identify the factors that may lead to \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||> 1\). First, as \(\tau ^\alpha \) decreases continuously from 1 to 0, \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\rightarrow 1\). However, this limit also affects the maximal ratio of the advection coefficients to the diffusivities for which (31) holds; we return to this point at the end of the discussion. For illustration, we take \(\tau =1.0\times 10^{-3}\), \(\alpha =0.5\), and \(M_x=M_y=5\), unless otherwise stated. The procedure consists of three steps: (i) fixing \(\varepsilon _x,\varepsilon _y\), and \(\Omega \), we vary \(\kappa _x,\kappa _y\) and plot \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\) as a function of \(\kappa _x,\kappa _y\) in Fig. 2a, b; (ii) fixing \(\kappa _x,\kappa _y\), and \(\Omega \), we vary \(M_x\), \(M_y\) and plot the results in Fig. 2c, where \(\kappa _x=\kappa _y=500\); (iii) fixing \(\kappa _x,\kappa _y,\varepsilon _x,\varepsilon _y\), we let \(a=c=0\) and vary b, d, with the corresponding results presented in Fig. 2d. Note that \(\Omega \) is the unit square except in case (iii), and the corresponding parameters in the x- and y-directions are taken to be equal, e.g., \(\varepsilon _x=\varepsilon _y\). Finally, we consider the influence of \(\tau \). Resetting \(\tau =1.0\times 10^{-10}\), we let \(\varepsilon _x=\varepsilon _y=1\) and vary \(\kappa _x, \kappa _y\). The resulting norm is plotted in Fig. 2e, from which we see that the critical ratio of \(\kappa _x, \kappa _y\) to \(\varepsilon _x, \varepsilon _y\) for which (31) holds is about 40, far less than in case (i), and that it can be improved by increasing \(M_x, M_y\).

From the foregoing discussion and figures, we summarize the conclusions as follows: (i) if \(\varepsilon _x\), \(\varepsilon _y\) are not small, the admissible ranges of \(\kappa _x\), \(\kappa _y\) that guarantee (31) are quite wide, and as \(\varepsilon _x\), \(\varepsilon _y\rightarrow \infty \), \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\) can be very close to zero; (ii) if \(\kappa _x\), \(\kappa _y\) are larger than \(\varepsilon _x\), \(\varepsilon _y\) and \(\varepsilon _x\), \(\varepsilon _y\) themselves are small, \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\) can exceed 1; however, this can be remedied by increasing the grid numbers; (iii) in general, the larger \(M_x\), \(M_y\), the smaller \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\); (iv) as the computational domain expands, \(||(\mathbf I +\tau ^\alpha \mathbf K )^{-1}||\) grows, which may invalidate (31) if \(\varepsilon _x\), \(\varepsilon _y\) and \(M_x, M_y\) remain unchanged; (v) as \(\tau \rightarrow 0\), the critical ratio of \(\kappa _x, \kappa _y\) to \(\varepsilon _x, \varepsilon _y\) for which the assumption holds appears to decrease, but it increases again as the grid is refined.

Consequently, the assumption is meaningful and amounts to a mild theoretical restriction in practice.
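The induction argument can also be replayed numerically. The sketch below propagates the perturbation recursion, assuming it has the form \((\mathbf I +\tau ^\alpha \mathbf K )\mathbf e ^n=-\sum _{k=1}^{n-1}\omega ^\alpha _k\mathbf e ^{n-k}+(\sum _{k=0}^{n-1}\omega ^\alpha _k)\mathbf e ^0\) with the coefficients (12). It uses a hypothetical symmetric positive definite diffusion matrix \(\mathbf K \) (central differences, not the DQ weights), for which (31) holds automatically. Replacing `K` with a non-symmetric advection–diffusion matrix lets one explore cases in which the norm in (31) exceeds 1.

```python
import numpy as np


def gl_coefficients(alpha, n):
    """Coefficients of Eq. (12) via their recursion."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = (1.0 - (alpha + 1.0) / k) * w[k - 1]
    return w


def perturbation_norms(K, tau, alpha, e0, N):
    """Return ||(I + tau^alpha K)^{-1}||_2 and the norms ||e^n||_2, n = 0..N."""
    B = np.linalg.inv(np.eye(len(e0)) + tau**alpha * K)
    w = gl_coefficients(alpha, N)
    E = np.zeros((N + 1, len(e0)))
    E[0] = e0
    for n in range(1, N + 1):
        # (I + tau^a K) e^n = -sum_{k=1}^{n-1} w_k e^{n-k} + (sum_{k=0}^{n-1} w_k) e^0
        E[n] = B @ (w[:n].sum() * E[0] - w[1:n] @ E[n - 1:0:-1])
    return np.linalg.norm(B, 2), np.linalg.norm(E, axis=1)


if __name__ == "__main__":
    n, h, eps = 9, 0.1, 1.0
    D2 = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
          + np.diag(np.ones(n - 1), -1)) / h**2
    K = -eps * D2  # hypothetical SPD diffusion matrix
    e0 = np.random.default_rng(0).standard_normal(n)
    norm_B, norms = perturbation_norms(K, 1e-3, 0.5, e0, 200)
    print(norm_B, norms.max() / norms[0])  # expect norm_B <= 1 and ratio <= 1
```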

5 Description of cubic B-spline DQ method

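In this section, a robust DQ method (MCB-DQM) based on the modified cubic B-splines \(\{\mathrm{MB}_m(x)\}_{m=0}^{M}\) is established for Eqs. (7)–(9) by introducing DQ approximations to fractional derivatives. Following the idea of traditional DQ methods, we use

$$\frac{\partial ^{\beta _1} u(x_i,y_j,t)}{\partial x^{\beta _1}}\approx \sum _{m=0}^{M_x}a_{im}^{(\beta _1)}u(x_m,y_j,t), \tag{32}$$

$$\frac{\partial ^{\beta _2} u(x_i,y_j,t)}{\partial y^{\beta _2}}\approx \sum _{m=0}^{M_y}b_{jm}^{(\beta _2)}u(x_i,y_m,t), \tag{33}$$

for the fractional derivatives in constructing the DQ algorithm, where \(0\le i\le M_x\), \(0\le j\le M_y\), and the weighted coefficients \(a_{im}^{(\beta _1)}\), \(b_{jm}^{(\beta _2)}\) satisfy

$$\sum _{m=0}^{M_x}a_{im}^{(\beta _1)}\mathrm{MB}_k(x_m)={^{\mathrm{RL}}_{x_0}}D^{\beta _1}_x\mathrm{MB}_k(x)\Big |_{x=x_i},\quad 0\le k\le M_x,\qquad \sum _{m=0}^{M_y}b_{jm}^{(\beta _2)}\mathrm{MB}_k(y_m)={^{\mathrm{RL}}_{y_0}}D^{\beta _2}_y\mathrm{MB}_k(y)\Big |_{y=y_j},\quad 0\le k\le M_y.$$

Eqs. (32)–(33) are justified by the linearity of fractional derivatives. The weights \(a_{im}^{(\beta _1)}\), \(b_{jm}^{(\beta _2)}\) are then determined by solving the resulting algebraic systems along each axis, provided that the values of the fractional derivatives of the B-splines \(\{\mathrm{MB}_m(x)\}_{m=0}^{M}\) at all sampling points are known.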

5.1 The explicit formulas of fractional derivatives

The weakly singular integral makes it difficult to evaluate the fractional derivative of a function such as a B-spline at a sampling point. In what follows, we derive explicit expressions for the \(\beta \)-th (\(1<\beta <2\)) Riemann–Liouville derivatives of the B-splines \(\{B_m(x)\}_{m=-1}^{M+1}\) by repeated integration by parts. Since these basis splines are piecewise defined and supported on four consecutive subintervals, we have

with \(2\le m\le M-2\). The supports of \(B_{M-1}(x)\), \(B_{M}(x)\), and \(B_{M+1}(x)\) extend partly beyond \([x_0,x_M]\), as do those of \(B_{-1}(x)\), \(B_{0}(x)\), and \(B_{1}(x)\); however, \(B_{M-1}(x)\), \(B_{M}(x)\), and \(B_{M+1}(x)\) can be treated as special cases of the above argument and are therefore omitted here. Further, we have

On the other hand, the integrands in the fractional derivatives involve the cubic polynomials \(\varphi _i(x)\), \(i=1,2,3,4\), whose degree drops by one each time integration by parts is applied. Hence, the weakly singular integrals can be eliminated by applying integration by parts four times to each \(\varphi _i(x)\), which yields fully explicit formulas. Since the derivations are lengthy and tedious, we only list the resulting expressions of \({^{\mathrm{RL}}_{x_0}}D^\beta _xB_m(x)\), \(-1\le m \le M+1\), in the “Appendix”.
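As an independent cross-check on explicit formulas of this kind, note that a cubic B-spline with knots \(x_j=x_0+jh\) can be written with truncated powers, \(B_m(x)=h^{-3}\sum _{k=0}^{4}(-1)^k\binom{4}{k}(x-x_{m-2+k})_+^3\), and that \({^{\mathrm{RL}}_{x_0}}D^\beta _x(x-x_j)_+^3=\frac{\Gamma (4)}{\Gamma (4-\beta )}(x-x_j)_+^{3-\beta }\) whenever \(x_j\ge x_0\). This gives a closed form for \(m\ge 2\) (supports starting at or after \(x_0\)) that should agree with the Appendix formulas; it is a different route, not the paper's integration-by-parts derivation. The sketch compares it with a first-order Grünwald–Letnikov approximation and, at \(\beta =2\), with the classical second derivative. All names are hypothetical.

```python
import numpy as np
from math import comb, gamma


def bspline(x, m, x0, h):
    """Cubic B-spline B_m with knots x_j = x0 + j*h (B_m(x_m) = 4), via truncated powers."""
    x = np.asarray(x, dtype=float)
    total = np.zeros_like(x)
    for k in range(5):
        knot = x0 + (m - 2 + k) * h
        total = total + (-1) ** k * comb(4, k) * np.maximum(x - knot, 0.0) ** 3
    return total / h**3


def rl_bspline(x, m, x0, h, beta):
    """Closed-form left RL derivative (lower limit x0) of B_m at a scalar x.

    Valid when the support starts at or after x0, i.e. m >= 2.
    """
    coef = gamma(4.0) / gamma(4.0 - beta) / h**3
    total = 0.0
    for k in range(5):
        s = x - (x0 + (m - 2 + k) * h)
        if s > 0.0:
            total += (-1) ** k * comb(4, k) * s ** (3.0 - beta)
    return coef * total


def gl_rl(f, x, x0, beta, delta):
    """First-order Grunwald-Letnikov approximation of the same derivative (for checking)."""
    K = int(round((x - x0) / delta))
    g = np.cumprod(np.r_[1.0, 1.0 - (beta + 1.0) / np.arange(1, K + 1)])
    return delta ** (-beta) * np.dot(g, f(x - delta * np.arange(K + 1)))


if __name__ == "__main__":
    h, x0, m, beta = 0.1, 0.0, 3, 1.5
    spline = lambda s: bspline(s, m, x0, h)
    for j in (m - 1, m):
        xj = x0 + j * h
        print(j, rl_bspline(xj, m, x0, h, beta), gl_rl(spline, xj, x0, beta, 1e-5))
```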

5.2 Construction of cubic B-spline DQ method

We reuse the earlier notation for brevity. Using the DQ approximations (32)–(33) for the fractional derivatives, Eq. (7) is transformed into a set of first-order ODEs

with \(i=0,1,\ldots ,M_x\), \(j=0,1,\ldots ,M_y\). Imposing the boundary constraint (9) on Eq. (36) and applying the Crank–Nicolson scheme in time, we obtain the following spline-based DQ scheme

where \(i=1,2,\ldots ,M_x-1\), \(j=1,2,\ldots ,M_y-1\). It is evident that DQ methods are truly meshless and convenient to implement. Owing to their insensitivity to the problem dimension, (37) can easily be generalized to higher-dimensional space-fractional problems without a rapid increase in the computational burden.
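The structure of a Crank–Nicolson step of this type can be sketched as follows. For illustration only, the fractional weight matrices are replaced by integer-order central differences (the limit \(\beta _1=\beta _2=2\)), so that the exact solution \(e^{-(\varepsilon _x+\varepsilon _y)\pi ^2t}\sin \pi x\sin \pi y\) of the zero-forcing problem on the unit square is available for checking. The 2D operator is assembled with Kronecker products on the interior nodes, mirroring the construction in Sect. 3. Function names are hypothetical.

```python
import numpy as np


def second_diff(n, h):
    """Interior central-difference matrix, a stand-in for the fractional DQ weights."""
    return (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
            + np.diag(np.ones(n - 1), -1)) / h**2


def crank_nicolson_2d(M, N, T, eps_x=1.0, eps_y=1.0):
    """CN for dU/dt = L U on the interior nodes of the unit square, with U = 0 on the boundary."""
    n, h, tau = M - 1, 1.0 / M, T / N
    W = second_diff(n, h)
    I = np.eye(n)
    L = eps_x * np.kron(I, W) + eps_y * np.kron(W, I)  # I_y (x) W_x + W_y (x) I_x
    # one CN step: (I - tau/2 L) U^{n+1} = (I + tau/2 L) U^n
    step = np.linalg.solve(np.eye(n * n) - 0.5 * tau * L, np.eye(n * n) + 0.5 * tau * L)
    x = np.linspace(h, 1.0 - h, n)
    X, Y = np.meshgrid(x, x)  # rows follow y, columns follow x (x varies fastest)
    U = (np.sin(np.pi * X) * np.sin(np.pi * Y)).ravel()
    for _ in range(N):
        U = step @ U
    return X, Y, U.reshape(n, n)


if __name__ == "__main__":
    T = 0.05
    X, Y, U = crank_nicolson_2d(20, 50, T)
    exact = np.exp(-2.0 * np.pi**2 * T) * np.sin(np.pi * X) * np.sin(np.pi * Y)
    print(np.max(np.abs(U - exact)))
```

In the actual scheme (37), `W` would be replaced by the weight matrices built from \(a_{im}^{(\beta _1)}\) and \(b_{jm}^{(\beta _2)}\), and a forcing term would enter the right-hand side.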

6 Illustrative examples

In this section, several numerical examples are presented to assess the practical performance of MCTB-DQM and the new MCB-DQM. To check their accuracy, we compute the errors using the norms

where \(e_N(M)\) is referred to as a normalized \(L_2\)-norm. For the coefficients \(\{\omega ^\alpha _k\}_{k=0}^{n}\) in schemes (23)–(24), we use (12) in the first and fifth examples, and (13) in the remaining examples except the last two. Except for the fourth example, our algorithms are implemented in MATLAB on a Lenovo PC with an Intel(R) Pentium(R) G2030 3.00 GHz CPU and 4 GB RAM. The results are compared with earlier works available in the open literature.

Example 6.1

Let \(\kappa =1\), \(\varepsilon =2\); we consider Eqs. (1)–(3) on [0, 1] with \(\psi (x)=\exp (x)\), \(g_1(t)=E_\alpha (t^\alpha )\), \(g_2(t)=\exp (1)E_\alpha (t^\alpha )\), and a homogeneous forcing term, where \(E_\alpha (\cdot )\) is the Mittag–Leffler function

$$E_\alpha (z)=\sum _{k=0}^{\infty }\frac{z^k}{\Gamma (\alpha k+1)}.$$

It can be verified that the solution is \(u(x,t)=\exp (x)E_\alpha (t^\alpha )\). To show the convergence of MCTB-DQM, we fix \(\tau =1.0\times 10^{-5}\) so that the temporal errors are negligible compared with the spatial errors. The numerical results at \(t=0.1\) for various \(\alpha \) are displayed in Table 1, where the convergence rate is abbreviated as “Cov. rate”. As can be seen, our method is stable and convergent, with nearly second-order spatial accuracy for this problem.
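Reproducing Example 6.1 requires evaluating \(E_\alpha \). For the small arguments \(t^\alpha \le 1\) used here, a truncated power series suffices; the sketch below (function names hypothetical) also checks the special cases \(E_1(z)=e^z\), \(E_2(z^2)=\cosh z\) and \(E_{1/2}(z)=e^{z^2}\mathrm{erfc}(-z)\). For large \(|z|\), the series loses accuracy and dedicated algorithms are preferable.

```python
import math


def mittag_leffler(alpha, z, terms=80):
    """Truncated series E_alpha(z) = sum_{k<terms} z^k / Gamma(alpha k + 1); for moderate |z| only."""
    return sum(z**k / math.gamma(alpha * k + 1.0) for k in range(terms))


def exact_example_61(x, t, alpha):
    """Exact solution of Example 6.1: u(x, t) = exp(x) * E_alpha(t^alpha)."""
    return math.exp(x) * mittag_leffler(alpha, t**alpha)


if __name__ == "__main__":
    print(mittag_leffler(1.0, 1.0), math.e)
    print(mittag_leffler(2.0, 4.0), math.cosh(2.0))
    print(exact_example_61(1.0, 0.1, 0.5))
```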

Example 6.2

In this test, we solve a diffusion equation on [0, 1] with \(\varepsilon =1\), \(\psi (x)=4x(1-x)\), and zero boundary conditions and right-hand side. Its exact solution has the form

For comparison with the numerical results of FDS-D I, FDS-D II [53] and the semi-discrete FEM [18], we use the same time step \(\tau =1.0\times 10^{-4}\). For \(\alpha =0.1\), 0.5 and 0.95, the results of these four methods at \(t=1\) are listed side by side in Table 2, from which we conclude that MCTB-DQM is accurate and, like the other three methods, produces very small errors as the grid number M increases.

Example 6.3

Let \(\kappa =0\), \(\varepsilon =1\); we solve Eqs. (1)–(3) with homogeneous initial and boundary values, and

on [0, 1]. The exact solution is \(u(x,t)=t^2\sin (2\pi x)\). The algorithm is first run with \(\alpha =0.8\), \(\tau =2.0\times 10^{-2}\) and \(M=50\). In Fig. 3, we plot the approximate solution and the pointwise error distribution at \(t=1\), which show good accuracy. In Table 3, we then compare \(e_2(M)\) and \(e_\infty (M)\) at \(t=1\) for MCTB-DQM and CBCM [38] with \(\alpha =0.3\). Here, MCTB-DQM uses \(\tau =5.0\times 10^{-3}\), while CBCM uses \(\tau =1.25\times 10^{-3}\). As expected, our approach yields more accurate approximations than CBCM.

Example 6.4

We consider a 2D diffusion equation on \([-1,1]\times [-1,1]\) with \(\varepsilon _x=\varepsilon _y=1\), which Zhai and Feng used to test a block-centered finite difference method (BCFDM) on nonuniform grids [55]. The forcing function is chosen to enforce

With \(\tau =1.0\times 10^{-2}\), \(M_x=M_y=60\) and \(\alpha =0.5\), we first plot the approximate solution and the pointwise error distribution at \(t=0.5\) in Fig. 4. Then, we compare MCTB-DQM and BCFDM in terms of \(e_\infty (M_x,M_y)\) at \(t=0.5\) in Table 4. MCTB-DQM clearly produces much smaller errors than BCFDM as the grid number increases, even though BCFDM uses a smaller time step \(\tau =2.5\times 10^{-3}\) and nonuniform grids; moreover, MCTB-DQM exhibits a better than second-order convergence rate for this problem.

Example 6.5

In this test, we simulate soliton propagation and collision governed by the following time-fractional nonlinear Schrödinger (NLS) equation:

with \(\text {i}=\sqrt{-1}\) and \(\beta \) a real constant, subject to the following two types of initial values:

(i) Mobile soliton:

$$\begin{aligned} \psi (x)=\text {sech}(x)\exp (2\text {i}x); \end{aligned}$$(38)

(ii) Collision of two solitons:

$$\begin{aligned} \psi (x)=\sum _{j=1}^2\text {sech}(x-x_j)\exp (\text {i}p(x-x_j)). \end{aligned}$$(39)

When \(\alpha =1\) and \(\beta =2\), the NLS equation with the initial value (38) has the soliton solution \(u(x,t)=\text {sech}(x-4t)\exp (\text {i}(2x-3t))\). Since the solutions generally decay to zero as \(|x|\rightarrow \infty \), we truncate the problem to a bounded interval \(\Omega =[a,b]\) with \(a\ll 0\) and \(b\gg 0\), and impose periodic or homogeneous Dirichlet boundary conditions. Writing \(u(x,t)=U(x,t)+\text {i}V(x,t)\), we can recast the original equation as a coupled diffusion system
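Assume the NLS takes the standard form \(\text {i}D_t^\alpha u+u_{xx}+\beta |u|^2u=0\), with \(D_t^\alpha \) the Caputo derivative. This form is an assumption, chosen because it is consistent with the soliton stated above for \(\alpha =1\), \(\beta =2\). Splitting \(u=U+\text {i}V\) then gives \(D_t^\alpha U=-V_{xx}-\beta (U^2+V^2)V\) and \(D_t^\alpha V=U_{xx}+\beta (U^2+V^2)U\). The sketch below uses central differences to check that the \(\alpha =1\) soliton satisfies both real equations. The step `h` and the helper names are illustrative.

```python
import numpy as np

BETA = 2.0

def soliton(x, t):
    """Exact alpha = 1, beta = 2 soliton u = sech(x - 4t) exp(i(2x - 3t))."""
    return np.exp(1j * (2.0 * x - 3.0 * t)) / np.cosh(x - 4.0 * t)

def coupled_residuals(x, t, h=1e-4):
    """Residuals of U_t + V_xx + beta(U^2+V^2)V = 0 and V_t - U_xx - beta(U^2+V^2)U = 0,
    with derivatives approximated by second-order central differences."""
    u = soliton(x, t)
    u_t = (soliton(x, t + h) - soliton(x, t - h)) / (2.0 * h)
    u_xx = (soliton(x + h, t) - 2.0 * u + soliton(x - h, t)) / h ** 2
    U, V = u.real, u.imag
    s = U ** 2 + V ** 2
    res_U = u_t.real + u_xx.imag + BETA * s * V
    res_V = u_t.imag - u_xx.real - BETA * s * U
    return res_U, res_V

x = np.linspace(-10.0, 10.0, 401)
res_U, res_V = coupled_residuals(x, t=0.5)
print(np.max(np.abs(res_U)), np.max(np.abs(res_V)))  # only truncation and round-off remain
```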

Applying the scheme (23), however, leads to a nonlinear system that must be solved at each time step. For \(\alpha =1\), we solve it by Newton's iteration, which is terminated once the error falls below the tolerance \(1.0\times 10^{-12}\); the Jacobian matrix is
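The Jacobian of the scheme (23) is not reproduced in this section. The sketch below illustrates the Newton iteration for \(\alpha =1\) under stated substitutions. A backward Euler step in time and second-order central differences on a periodic grid replace the DQ scheme (23). The NLS is assumed to take the form \(\text {i}u_t+u_{xx}+\beta |u|^2u=0\), split into \(U_t=-V_{xx}-\beta (U^2+V^2)V\) and \(V_t=U_{xx}+\beta (U^2+V^2)U\). The Jacobian blocks are written out analytically, and the iteration stops once the Newton update falls below \(1.0\times 10^{-12}\). All function names are hypothetical.

```python
import numpy as np

def periodic_laplacian(n, h):
    """Second-order central-difference matrix for u_xx on a periodic grid."""
    L = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    L[0, -1] = L[-1, 0] = 1.0
    return L / h ** 2

def newton_backward_euler_step(U0, V0, L, tau, beta=2.0, tol=1.0e-12, max_iter=20):
    """Solve F(w) = w - w0 - tau * R(w) = 0 for w = (U, V) by Newton's method, where
    R(w) = (-L V - beta s V, L U + beta s U) and s = U^2 + V^2."""
    n = U0.size
    U, V = U0.copy(), V0.copy()
    for it in range(1, max_iter + 1):
        s = U ** 2 + V ** 2
        F = np.concatenate([U - U0 - tau * (-L @ V - beta * s * V),
                            V - V0 - tau * (L @ U + beta * s * U)])
        # Analytic Jacobian of R, block by block
        J_R = np.block([
            [np.diag(-2.0 * beta * U * V), -L - np.diag(beta * (U ** 2 + 3.0 * V ** 2))],
            [L + np.diag(beta * (3.0 * U ** 2 + V ** 2)), np.diag(2.0 * beta * U * V)],
        ])
        delta = np.linalg.solve(np.eye(2 * n) - tau * J_R, -F)
        U += delta[:n]
        V += delta[n:]
        if np.max(np.abs(delta)) < tol:   # stop once the Newton update is below tol
            return U, V, it
    raise RuntimeError("Newton iteration did not converge")

# Usage with the initial condition (38) on Omega = [-10, 10], M = 100
a, b, M = -10.0, 10.0, 100
h = (b - a) / M
x = a + h * np.arange(M)            # periodic grid: x = b is identified with x = a
u0 = np.exp(2j * x) / np.cosh(x)
L = periodic_laplacian(M, h)
U1, V1, iters = newton_backward_euler_step(u0.real, u0.imag, L, tau=2.0e-3)
print(iters)
```

Dense matrices keep the sketch short. For grids with hundreds of nodes per direction, a sparse factorization would be the natural choice.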

When \(\alpha \ne 1\), the analytic solutions are unknown and Newton's iteration depends heavily on its initial guess. We therefore employ the trust-region-dogleg algorithm built into MATLAB to improve the convergence of the iteration. First, with \(\tau =2.0\times 10^{-3}\), \(M=100\), \(\beta =2\), and \(\Omega =[-10,10]\), Table 5 reports the mean square errors at \(t=0.1\) for the initial condition (38) and various \(\alpha \). For \(\alpha \ne 1\), the reference solutions are computed with the coefficients (13) on a very fine time–space lattice, i.e., \(\tau =2.5\times 10^{-4}\), \(M=400\). Table 5 shows that our methods converge and are applicable to nonlinear coupled problems. Moreover, the scheme (26) is clearly more efficient than (23), since it avoids an extra outer Newton loop. Next, with \(M=200\) and \(\Omega =[-20,20]\), Fig. 5 displays the evolution of the amplitude of the mobile soliton computed by (23) for \(\alpha =0.98\) and 1.0. With the same discretization parameters, Fig. 6 shows the collision of two solitons for \(\alpha =0.96\) and 1.0 with \(x_1=-6\), \(x_2=6\), and \(p=\pm 2\). These figures show that the fractional derivative significantly changes the width and height of the solitons. In particular, when \(\alpha =1\), the two solitons collide without any reflection, transmission, trapping, or creation of new solitary waves, i.e., the collision is elastic. In the fractional cases, by contrast, the solitons may not retain their shapes after they pass through each other.
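Table 5 measures errors against a reference solution computed on a finer lattice (\(M=400\) versus \(M=100\)). On uniform grids that share both endpoints, every fourth fine node coincides with a coarse node, so the reference can be sampled there directly. The sketch below does this and averages the squared modulus of the complex error. Both the sampling approach and this definition of the mean square error are assumptions. The arrays in the usage example are synthetic and are not the paper's data.

```python
import numpy as np

def mean_square_error(u_coarse, u_fine):
    """Mean of |u_coarse - u_ref|^2 over the coarse nodes, where u_ref samples the
    fine-grid reference at the coincident nodes. Assumes uniform grids sharing both
    endpoints, with len(u_fine) - 1 an integer multiple of len(u_coarse) - 1."""
    m_coarse, m_fine = len(u_coarse) - 1, len(u_fine) - 1
    if m_fine % m_coarse != 0:
        raise ValueError("the fine grid must refine the coarse grid by an integer factor")
    u_ref = np.asarray(u_fine)[:: m_fine // m_coarse]
    return float(np.mean(np.abs(np.asarray(u_coarse) - u_ref) ** 2))

# Synthetic data on Omega = [-10, 10]: M = 100 (coarse) against M = 400 (reference)
x_coarse = np.linspace(-10.0, 10.0, 101)
x_fine = np.linspace(-10.0, 10.0, 401)
u_ref_fine = np.exp(2j * x_fine) / np.cosh(x_fine)
u_approx = np.exp(2j * x_coarse) / np.cosh(x_coarse) + 1.0e-3  # artificial offset
mse = mean_square_error(u_approx, u_ref_fine)
print(mse)  # close to (1.0e-3)**2 by construction
```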

Example 6.6

In this test, we use the scheme (26) to simulate the unsteady propagation of a Gaussian pulse governed by a classical 2D advection-dominated diffusion equation on the square domain \([0,2]\times [0,2]\). This problem has been studied extensively [19, 27, 31, 45]. The Gaussian pulse solution is expressed as

and the initial Gaussian pulse and the boundary values are taken from the pulse solution. With \(\kappa _x=\kappa _y=0.8\) and \(\varepsilon _x=\varepsilon _y=0.01\), Fig. 7 displays the true solution at \(t=1.25\) with \(M_x=M_y=50\), together with the lattice points used on the problem domain. The solution is a pulse centered at (1.5, 1.5) with height 1/6. Using the same grid numbers and \(\tau =5.0\times 10^{-3}\), Fig. 8 presents contour plots of the approximate solutions at \(t=0\), 0.25, 0.75, and 1.25 computed by MCTB-DQM. As Fig. 8 shows, the pulse is initially centered at (0.5, 0.5) with height 1 and moves toward (1.5, 1.5), while its width and height vary continuously in time. Moreover, the last contour plot in Fig. 8 agrees with the true solution plotted in Fig. 7. With \(\tau =6.25\times 10^{-3}\) and \(M_x=M_y=80\), Table 6 compares our results at \(t=1.25\) with those of several previous algorithms: the nine-point high-order compact (HOC) schemes [19, 31], the Peaceman–Rachford ADI scheme (PR-ADI) [32], the HOC-ADI scheme [20], the exponential HOC-ADI scheme (EHOC-ADI) [46], the HOC boundary value method (HOC-BVM) [7], the compact integrated RBF ADI method (CIRBF-ADI) [45], the coupled compact integrated RBF ADI method (CCIRBF-ADI) [47], and the Galerkin FEM combined with the method of characteristics (CGFEM) [8]. We implement CGFEM on a quasi-uniform triangular mesh with mesh size \(2.5\times 10^{-2}\), using both Lagrangian P1 and P2 elements. Average absolute errors are also included to evaluate and compare accuracy. Table 6 shows that all of these methods except the PR-ADI scheme capture the Gaussian pulse very accurately. Our method is more accurate than the others, which suggests that it is promising for advection–diffusion equations in the high Péclet number regime.
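The pulse formula itself is not reproduced in this section. The sketch below assumes the standard Gaussian-pulse solution used for this benchmark,

\(u=\frac{1}{4t+1}\exp \big (-\frac{(x-\kappa _xt-0.5)^2}{\varepsilon _x(4t+1)}-\frac{(y-\kappa _yt-0.5)^2}{\varepsilon _y(4t+1)}\big )\),

of \(u_t+\kappa _xu_x+\kappa _yu_y=\varepsilon _xu_{xx}+\varepsilon _yu_{yy}\). With \(\kappa =0.8\) and \(\varepsilon =0.01\), this formula reproduces the center (1.5, 1.5) and height 1/6 at \(t=1.25\) described above. The code checks those two properties and the PDE residual. It also prints the cell Péclet number \(\kappa h/\varepsilon \) for the two grids used, which quantifies the "high Péclet number" remark. The constant and function names are hypothetical.

```python
import numpy as np

KX = KY = 0.8      # advection coefficients kappa_x, kappa_y
EX = EY = 0.01     # diffusion coefficients epsilon_x, epsilon_y

def gaussian_pulse(x, y, t):
    """Assumed pulse solution: centre (0.5 + KX t, 0.5 + KY t), height 1 / (4t + 1)."""
    s = 4.0 * t + 1.0
    return np.exp(-(x - KX * t - 0.5) ** 2 / (EX * s) - (y - KY * t - 0.5) ** 2 / (EY * s)) / s

def residual(x, y, t, d=1e-4):
    """Central-difference residual of u_t + KX u_x + KY u_y - EX u_xx - EY u_yy."""
    u = gaussian_pulse(x, y, t)
    u_t = (gaussian_pulse(x, y, t + d) - gaussian_pulse(x, y, t - d)) / (2 * d)
    u_x = (gaussian_pulse(x + d, y, t) - gaussian_pulse(x - d, y, t)) / (2 * d)
    u_y = (gaussian_pulse(x, y + d, t) - gaussian_pulse(x, y - d, t)) / (2 * d)
    u_xx = (gaussian_pulse(x + d, y, t) - 2 * u + gaussian_pulse(x - d, y, t)) / d ** 2
    u_yy = (gaussian_pulse(x, y + d, t) - 2 * u + gaussian_pulse(x, y - d, t)) / d ** 2
    return u_t + KX * u_x + KY * u_y - EX * u_xx - EY * u_yy

print(gaussian_pulse(1.5, 1.5, 1.25))           # peak height 1/6 at the centre (1.5, 1.5)
xs, ys = np.meshgrid(np.linspace(0.0, 2.0, 81), np.linspace(0.0, 2.0, 81))
print(np.max(np.abs(residual(xs, ys, 1.25))))   # small: finite-difference error only

for M in (50, 80):                               # cell Peclet number KX * h / EX
    h = 2.0 / M
    print(M, KX * h / EX)                        # approximately 3.2 and 2.0
```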

Example 6.7

In the last test, we consider the 2D space-fractional Eqs. (7)–(9) on \([0,1]\times [0,1]\) with \(\varepsilon _x=\varepsilon _y=1\), \(\psi (x,y)=x^2(1-x)^2y^2(1-y)^2\), and homogeneous boundary values. The source term is manufactured as

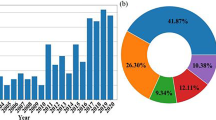

to enforce the analytic solution \(u(x,y,t)=e^{-t}x^2(1-x)^2y^2(1-y)^2\). With \(\beta _1=1.1\), \(\beta _2=1.3\), and \(\tau =2.5\times 10^{-4}\), we solve the problem with the FEM proposed in [52] and with MCB-DQM, and compare their numerical results at \(t=0.2\) in Table 7. P1 elements and structured meshes are adopted for the FEM. The data indicate that the DQ method converges to the analytic solution as the grid numbers increase and gives slightly better results than the FEM. More importantly, the CPU times of MCB-DQM are far smaller than those of the FEM, which confirms its high computational efficiency.
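The manufactured source term requires fractional derivatives of the spatial factor \(x^2(1-x)^2\). The sketch below assumes the left-sided Riemann–Liouville derivative with lower limit 0. This is an assumption: Eqs. (7)–(9) are not reproduced here and may also involve right-sided or Riesz derivatives. Under that assumption, the power rule \(D^\beta x^p=\Gamma (p+1)/\Gamma (p+1-\beta )\,x^{p-\beta }\) gives the exact value. The code cross-checks it against a shifted Grünwald–Letnikov sum of the kind used in [28], which is first-order accurate for \(1<\beta <2\). The function names are hypothetical.

```python
import math
import numpy as np

def rl_derivative_poly(coeffs, beta, x):
    """Left RL derivative (lower limit 0) of sum_p coeffs[p] * x**p via
    D^beta x^p = Gamma(p + 1) / Gamma(p + 1 - beta) * x**(p - beta)."""
    return sum(c * math.gamma(p + 1) / math.gamma(p + 1 - beta) * x ** (p - beta)
               for p, c in coeffs.items())

def shifted_grunwald(f, beta, x, n):
    """Shifted Grunwald-Letnikov sum h^(-beta) * sum_{k=0}^{n+1} g_k f(x - (k - 1) h)
    with h = x / n, so the last sample sits at the lower limit 0."""
    h = x / n
    g = np.empty(n + 2)
    g[0] = 1.0
    for k in range(1, n + 2):
        g[k] = g[k - 1] * (1.0 - (beta + 1.0) / k)   # g_k = (-1)^k binom(beta, k)
    k = np.arange(n + 2)
    return h ** (-beta) * np.sum(g * f(x - (k - 1) * h))

def spatial_factor(s):
    """x^2 (1 - x)^2 = x^2 - 2 x^3 + x^4, the spatial factor of the exact solution."""
    return s ** 2 * (1.0 - s) ** 2

beta, x = 1.1, 0.5   # beta_1 from Example 6.7, evaluated at the midpoint
exact = rl_derivative_poly({2: 1.0, 3: -2.0, 4: 1.0}, beta, x)
errs = [abs(shifted_grunwald(spatial_factor, beta, x, n) - exact) for n in (500, 1000, 2000)]
print(exact, errs)   # errors shrink roughly in proportion to h
```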

7 Conclusion

ADEs are subjects of active interest in mathematical physics and related areas of research. In this work, we have proposed an effective DQ method for such equations involving derivatives of fractional order in time and space. Its weighting coefficients are calculated by using modified CTBs and cubic B-splines as test functions. The stability of the DQ method for the time-fractional ADEs is analyzed in the \(L_2\)-norm, and the theoretical condition required by the stability analysis is examined numerically at length. We have tested the codes on several benchmark problems. The results demonstrate that the method outperforms some previously reported algorithms, such as BCFDM and FEM, in terms of overall accuracy and efficiency.

In a linear space spanned by a set of suitable basis functions such as B-splines, any function in the space can be represented as a weighted combination of these basis functions. Even when all basis functions are defined, the function itself remains unknown, because the coefficients in front of the basis functions are unknown. However, if every basis function satisfies Eqs. (34)–(35), then by linearity the unknown function satisfies Eqs. (34)–(35) as well. This is the essence of DQ methods, and it underlies their convergence.
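The exactness argument above can be made concrete. The minimal sketch below computes first-derivative DQ weights \(A\) by requiring exactness on every test function \(\phi _k\), that is, by solving \(\Phi A^{\mathrm T}=\Phi '\) with \(\Phi _{ki}=\phi _k(x_i)\). It assumes uniform nodes, cubic B-splines with the standard nodal values 1, 4, 1, and the modified boundary splines of the type introduced by Mittal and Jain [26]. It illustrates the principle and is not the authors' implementation. Because constants and linear functions lie in the span of these test functions, they are differentiated exactly.

```python
import numpy as np

def bspline_nodal_tables(M, h):
    """Nodal values and first derivatives of the cubic B-splines B_{-1}, ..., B_{M+1}
    (1-4-1 normalization) at x_0, ..., x_M; row j + 1 holds B_j."""
    vals = np.zeros((M + 3, M + 1))
    ders = np.zeros((M + 3, M + 1))
    for j in range(-1, M + 2):
        # B_j is nonzero at x_{j-1}, x_j, x_{j+1} only
        for i, v, d in ((j - 1, 1.0, 3.0 / h), (j, 4.0, 0.0), (j + 1, 1.0, -3.0 / h)):
            if 0 <= i <= M:
                vals[j + 1, i] = v
                ders[j + 1, i] = d
    return vals, ders

def modified_basis(M):
    """Rows write phi_0, ..., phi_M as combinations of B_{-1}, ..., B_{M+1}."""
    T = np.zeros((M + 1, M + 3))
    for k in range(M + 1):
        T[k, k + 1] = 1.0   # phi_k contains B_k
    T[0, 0] = 2.0           # phi_0 = B_0 + 2 B_{-1}
    T[1, 0] = -1.0          # phi_1 = B_1 - B_{-1}
    T[M - 1, M + 2] = -1.0  # phi_{M-1} = B_{M-1} - B_{M+1}
    T[M, M + 2] = 2.0       # phi_M = B_M + 2 B_{M+1}
    return T

def dq_first_derivative_weights(a, b, M):
    """Weights A with u'(x_i) ~ sum_j A[i, j] u(x_j), exact for every phi_k."""
    h = (b - a) / M
    vals, ders = bspline_nodal_tables(M, h)
    T = modified_basis(M)
    Phi = T @ vals    # Phi[k, i] = phi_k(x_i)
    dPhi = T @ ders   # dPhi[k, i] = phi_k'(x_i)
    # Exactness: sum_j A[i, j] phi_k(x_j) = phi_k'(x_i), i.e. Phi @ A.T = dPhi
    A = np.linalg.solve(Phi, dPhi).T
    return np.linspace(a, b, M + 1), A

x, A = dq_first_derivative_weights(0.0, 1.0, 40)
print(np.max(np.abs(A @ np.sin(np.pi * x) - np.pi * np.cos(np.pi * x))))
```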

Although error bounds are difficult to determine, the numerical results show that the spline-based DQ method yields convergent results for the fractional ADEs. The presented approach can be generalized to higher-dimensional and other complex model problems arising in materials science, structural and fluid mechanics, heat conduction, biomedicine, differential dynamics, and so forth. Its main advantages are high computational efficiency, low memory requirements, and ease of programming.

References

Abbas, M., Majid, A.A., Ismail, A.I.M., Rashid, A.: The application of cubic trigonometric B-spline to the numerical solution of the hyperbolic problems. Appl. Math. Comput. 239, 74–88 (2014)

Bellman, R., Casti, J.: Differential quadrature and long-term integration. J. Math. Anal. Appl. 34(2), 235–238 (1971)

Bhrawy, A., Zaky, M.: A fractional-order Jacobi Tau method for a class of time-fractional PDEs with variable coefficients. Math. Methods Appl. Sci. 39(7), 1765–1779 (2016)

Chen, M.H., Deng, W.H.: Fourth order difference approximations for space Riemann–Liouville derivatives based on weighted and shifted Lubich difference operators. Commun. Comput. Phys. 16(2), 516–540 (2014)

Chen, Y.M., Wu, Y.B., Cui, Y.H., Wang, Z.Z., Jin, D.M.: Wavelet method for a class of fractional convection–diffusion equation with variable coefficients. J. Comput. Sci. 1(3), 146–149 (2010)

Cui, M.R.: A high-order compact exponential scheme for the fractional convection–diffusion equation. J. Comput. Appl. Math. 255, 404–416 (2014)

Dehghan, M., Mohebbi, A.: High-order compact boundary value method for the solution of unsteady convection–diffusion problems. Math. Comput. Simulat. 79(3), 683–699 (2008)

Douglas Jr., J., Russell, T.F.: Numerical methods for convection-dominated diffusion problems based on combining the method of characteristics with finite element or finite difference procedures. SIAM J. Numer. Anal. 19(5), 871–885 (1982)

Esmaeili, S., Garrappa, R.: A pseudo-spectral scheme for the approximate solution of a time-fractional diffusion equation. Int. J. Comput. Math. 92(5), 980–994 (2015)

Gao, G.H., Sun, H.W.: Three-point combined compact alternating direction implicit difference schemes for two-dimensional time-fractional advection–diffusion equations. Commun. Comput. Phys. 17(2), 487–509 (2015)

Heydari, M.H.: Wavelets Galerkin method for the fractional subdiffusion equation. J. Comput. Nonlinear Dyn. 11(6), 061014 (2016)

Hosseini, S.M., Ghaffari, R.: Polynomial and nonpolynomial spline methods for fractional sub-diffusion equations. Appl. Math. Model. 38(14), 3554–3566 (2014)

Huang, C.B., Yu, X.J., Wang, C., Li, Z.Z., An, N.: A numerical method based on fully discrete direct discontinuous Galerkin method for the time fractional diffusion equation. Appl. Math. Comput. 264, 483–492 (2015)

Izadkhah, M.M., Saberi-Nadjafi, J.: Gegenbauer spectral method for time-fractional convection–diffusion equations with variable coefficients. Math. Methods Appl. Sci. 38(15), 3183–3194 (2015)

Jia, J.H., Wang, H.: A fast finite volume method for conservative space-fractional diffusion equations in convex domains. J. Comput. Phys. 310, 63–84 (2016)

Ji, C.C., Sun, Z.Z.: A high-order compact finite difference scheme for the fractional sub-diffusion equation. J. Sci. Comput. 64(3), 959–985 (2015)

Jiang, Y.J., Ma, J.T.: High-order finite element methods for time-fractional partial differential equations. J. Comput. Appl. Math. 235(11), 3285–3290 (2011)

Jin, B.T., Lazarov, R., Zhou, Z.: Error estimates for a semidiscrete finite element method for fractional order parabolic equations. SIAM J. Numer. Anal. 51(1), 445–466 (2013)

Kalita, J.C., Dalal, D.C., Dass, A.K.: A class of higher order compact schemes for the unsteady two-dimensional convection–diffusion equation with variable convection coefficients. Int. J. Numer. Methods Fluids 38(12), 1111–1131 (2002)

Karaa, S., Zhang, J.: High order ADI method for solving unsteady convection–diffusion problems. J. Comput. Phys. 198(1), 1–9 (2004)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Laub, A.J.: Matrix Analysis for Scientists and Engineers. Society for Industrial and Applied Mathematics, Philadelphia (2005)

Lin, Y.M., Xu, C.J.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225(2), 1533–1552 (2007)

Liu, Q., Gu, Y.T., Zhuang, P., Liu, F., Nie, Y.F.: An implicit RBF meshless approach for time fractional diffusion equations. Comput. Mech. 48(1), 1–12 (2011)

Luo, W.H., Huang, T.Z., Wu, G.C., Gu, X.M.: Quadratic spline collocation method for the time fractional subdiffusion equation. Appl. Math. Comput. 276, 252–265 (2016)

Mittal, R.C., Jain, R.K.: Numerical solutions of nonlinear Burgers’ equation with modified cubic B-splines collocation method. Appl. Math. Comput. 218(15), 7839–7855 (2012)

Ma, Y.B., Sun, C.P., Haake, D.A., Churchill, B.M., Ho, C.M.: A high-order alternating direction implicit method for the unsteady convection-dominated diffusion problem. Int. J. Numer. Methods Fluids 70(6), 703–712 (2012)

Meerschaert, M.M., Scheffler, H.P., Tadjeran, C.: Finite difference methods for two-dimensional fractional dispersion equation. J. Comput. Phys. 211(1), 249–261 (2006)

Mainardi, F.: Fractals and Fractional Calculus in Continuum Mechanics. Springer, Berlin (1997)

Nazir, T., Abbas, M., Ismail, A.I.M., Majid, A.A., Rashid, A.: The numerical solution of advection–diffusion problems using new cubic trigonometric B-splines approach. Appl. Math. Model. 40(7–8), 4586–4611 (2016)

Noye, B.J., Tan, H.H.: Finite difference methods for solving the two-dimensional advection–diffusion equation. Int. J. Numer. Methods Fluids 9(1), 75–98 (1989)

Peaceman, D.W., Rachford Jr., H.H.: The numerical solution of parabolic and elliptic differential equations. J. Soc. Ind. Appl. Math. 3(1), 28–41 (1955)

Pirkhedri, A., Javadi, H.H.S.: Solving the time-fractional diffusion equation via Sinc–Haar collocation method. Appl. Math. Comput. 257, 317–326 (2015)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Razminia, K., Razminia, A., Baleanu, D.: Investigation of the fractional diffusion equation based on generalized integral quadrature technique. Appl. Math. Model. 39(1), 86–98 (2015)

Roop, J.P.: Computational aspects of FEM approximation of fractional advection dispersion equations on bounded domains in \({\mathbb{R}}^2\). J. Comput. Appl. Math. 193(1), 243–268 (2006)

Saadatmandi, A., Dehghan, M., Azizi, M.R.: The Sinc–Legendre collocation method for a class of fractional convection–diffusion equations with variable coefficients. Commun. Nonlinear Sci. Numer. Simul. 17(11), 4125–4136 (2012)

Sayevand, K., Yazdani, A., Arjang, F.: Cubic B-spline collocation method and its application for anomalous fractional diffusion equations in transport dynamic systems. J. Vib. Control 22(9), 2173–2186 (2016)

Shirzadi, A., Ling, L., Abbasbandy, S.: Meshless simulations of the two-dimensional fractional-time convection–diffusion–reaction equations. Eng. Anal. Bound. Elem. 36(11), 1522–1527 (2012)

Shlesinger, M.F., West, B.J., Klafter, J.: Lévy dynamics of enhanced diffusion: application to turbulence. Phys. Rev. Lett. 58(11), 1100–1103 (1987)

Shu, C., Richards, B.E.: Application of generalized differential quadrature to solve two-dimensional incompressible Navier–Stokes equations. Int. J. Numer. Methods Fluids 15(7), 791–798 (1992)

Tomasiello, S.: Stability and accuracy of the iterative differential quadrature method. Int. J. Numer. Methods Eng. 58(9), 1277–1296 (2003)

Tomasiello, S.: Numerical stability of DQ solutions of wave problems. Numer. Algor. 57(3), 289–312 (2011)

Tian, W.Y., Zhou, H., Deng, W.H.: A class of second order difference approximations for solving space fractional diffusion equations. Math. Comput. 84(294), 1703–1727 (2015)

Thai-Quang, N., Mai-Duy, N., Tran, C.-D., Tran-Cong, T.: High-order alternating direction implicit method based on compact integrated-RBF approximations for unsteady/steady convection–diffusion equations. CMES Comput. Model. Eng. Sci. 89(3), 189–220 (2012)

Tian, Z.F., Ge, Y.B.: A fourth-order compact ADI method for solving two-dimensional unsteady convection–diffusion problems. J. Comput. Appl. Math. 198(1), 268–286 (2007)

Tien, C.M.T., Thai-Quang, N., Mai-Duy, N., Tran, C.-D., Tran-Cong, T.: A three-point coupled compact integrated RBF scheme for second-order differential problems. CMES Comput. Model. Eng. Sci. 104(6), 425–469 (2015)

Uddin, M., Haq, S.: RBFs approximation method for time fractional partial differential equations. Commun. Nonlinear Sci. Numer. Simul. 16(11), 4208–4214 (2011)

Walz, G.: Identities for trigonometric B-splines with an application to curve design. BIT Numer. Math. 37(1), 189–201 (1997)

Yang, X.H., Zhang, H.X., Xu, D.: Orthogonal spline collocation method for the two-dimensional fractional sub-diffusion equation. J. Comput. Phys. 256, 824–837 (2014)

Zaslavsky, G.M., Stevens, D., Weitzner, H.: Self-similar transport in incomplete chaos. Phys. Rev. E 48(3), 1683–1694 (1993)

Zhu, X.G., Nie, Y.F., Wang, J.G., Yuan, Z.B.: A numerical approach for the Riesz space-fractional Fisher’ equation in two-dimensions. Int. J. Comput. Math. 94(2), 296–315 (2017)

Zeng, F.H., Li, C.P., Liu, F.W., Turner, I.: The use of finite difference/element approaches for solving the time-fractional subdiffusion equation. SIAM J. Sci. Comput. 35(6), A2976–A3000 (2013)

Zeng, F.H., Liu, F.W., Li, C.P., Burrage, K., Turner, I., Anh, V.: A Crank–Nicolson ADI spectral method for a two-dimensional Riesz space fractional nonlinear reaction–diffusion equation. SIAM J. Numer. Anal. 52(6), 2599–2622 (2014)

Zhai, S.Y., Feng, X.L.: A block-centered finite-difference method for the time-fractional diffusion equation on nonuniform grids. Numer. Heat Transf. Part B Fundam. 69(3), 217–233 (2016)

Zhou, F.Y., Xu, X.Y.: The third kind Chebyshev wavelets collocation method for solving the time-fractional convection diffusion equations with variable coefficients. Appl. Math. Comput. 280, 11–29 (2016)

Zhuang, P.H., Liu, F.W.: Implicit difference approximation for the time fractional diffusion equation. J. Appl. Math. Comput. 22(3), 87–99 (2006)

Acknowledgements

The authors are very grateful to the reviewers for their valuable comments and suggestions. This research was supported by the National Natural Science Foundation of China (Nos. 11471262 and 11501450).


Appendix: The explicit formulas of the fractional derivatives of cubic B-splines


The fractional derivatives of the splines centered at \(x_{-1}\), \(x_0\), and \(x_1\):

The fractional derivatives of the splines centered at \(x_{m}\) with \(2\le m\le M+1\):

which contain \({^{\mathrm{RL}}_{x_0}}D^\beta _xB_{M-1}(x)\), \({^{\mathrm{RL}}_{x_0}}D^\beta _xB_M(x)\), and \({^{\mathrm{RL}}_{x_0}}D^\beta _xB_{M+1}(x)\) as special cases:
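Consider the splines centered at \(x_m\) with \(2\le m\le M+1\), whose support \([x_{m-2},x_{m+2}]\) starts at or to the right of \(x_0\). One standard route to an explicit formula for them combines two facts. First, the truncated-power form of the cubic B-spline with nodal values 1, 4, 1 is \(B_m(x)=h^{-3}\sum _{k=0}^4(-1)^k\binom{4}{k}(x-x_{m-2+k})_+^3\). Second, \({^{\mathrm{RL}}_{x_0}}D^\beta _x(x-c)_+^3=\frac{\Gamma (4)}{\Gamma (4-\beta )}(x-c)_+^{3-\beta }\) for \(c\ge x_0\). The sketch below implements this; it is not necessarily the authors' formula. It does not cover the splines centered at \(x_{-1}\), \(x_0\), and \(x_1\), whose supports extend to the left of \(x_0\). As a sanity check, \(\beta =1\) and \(\beta =2\) reproduce the classical nodal derivatives \(3/h,0,-3/h\) and \(6/h^2,-12/h^2,6/h^2\). The function name is hypothetical.

```python
import math

def rl_bspline_derivative(x, m, beta, x0=0.0, h=1.0):
    """Left Riemann-Liouville derivative of order beta (lower limit x0) of the
    cubic B-spline centred at x_m = x0 + m h, for m >= 2."""
    if m < 2:
        raise ValueError("requires m >= 2 so that the support starts at or after x0")
    scale = math.gamma(4.0) / (math.gamma(4.0 - beta) * h ** 3)
    total = 0.0
    for k in range(5):
        c = x0 + (m - 2 + k) * h   # knot x_{m-2+k}
        if x > c:                  # truncated power (x - c)_+^(3 - beta)
            total += (-1) ** k * math.comb(4, k) * (x - c) ** (3.0 - beta)
    return scale * total

h = 0.1
# beta = 1 recovers B_3' at x_2, x_3, x_4: approximately 30, 0, -30 for h = 0.1
print([rl_bspline_derivative(j * h, 3, 1.0, 0.0, h) for j in (2, 3, 4)])
print(rl_bspline_derivative(0.35, 3, 1.5, 0.0, h))  # a fractional order
```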


Cite this article

Zhu, X.G., Nie, Y.F. & Zhang, W. An efficient differential quadrature method for fractional advection–diffusion equation. Nonlinear Dyn 90, 1807–1827 (2017). https://doi.org/10.1007/s11071-017-3765-x
