Abstract

We consider an identification (inverse) problem, where the state \({\mathsf {u}}\) is governed by a fractional elliptic equation and the unknown variable corresponds to the order \(s \in (0,1)\) of the underlying operator. We study the existence of an optimal pair \(({\bar{s}}, {{\bar{{\mathsf {u}}}}})\) and provide sufficient conditions for its local uniqueness. We develop semi-discrete and fully discrete algorithms to approximate the solutions to our identification problem and provide a convergence analysis. We present numerical illustrations that confirm and extend our theory.


1 Introduction

Supported by the claim that they seem to better describe many processes, nonlocal models have recently become of great interest in the applied sciences and engineering. This is especially the case when long range (i.e., nonlocal) interactions are to be taken into consideration; we refer the reader to [2] for a far from exhaustive list of examples where such phenomena take place. However, the actual range and scaling laws of these interactions, which determine the order of the model, cannot always be directly determined from physical considerations. This is in stark contrast with models governed by partial differential equations (PDEs), which usually arise from a conservation law. This justifies the need to identify, on the basis of physical observations, the order of a fractional model.

This problem was first addressed in [12]. The authors studied the optimization with respect to the order of the spatial operator in a nonlocal evolution equation; existence of solutions as well as first and second order optimality conditions were addressed. The present work, in the stationary regime, continues these studies and seeks to make them tractable from an implementation viewpoint. The novelty of our work can be summarized as follows: we address the local uniqueness of minimizers and propose a gradient based numerical algorithm to approximate them. To realize the gradient we approximate the sensitivity of the state using a finite difference scheme. In addition, we study the convergence rates of our method.

To make matters precise, let \(\varOmega \) be an open and bounded domain in \({\mathbb {R}}^n\) (\(n \ge 1\)) with Lipschitz boundary \(\partial \varOmega \). Given a desired state \({\mathsf {u}}_d : \varOmega \rightarrow {\mathbb {R}}\) (the observations), we define the cost functional

\[ J(s,{\mathsf {u}}) = \frac{1}{2} \Vert {\mathsf {u}}- {\mathsf {u}}_d \Vert _{L^2(\varOmega )}^2 + \varphi (s), \tag{1} \]

where, for some a and b satisfying \(0 \le a<b \le 1\), we have \(s \in (a,b)\), and \(\varphi \in C^2(a,b)\) denotes a nonnegative convex function that satisfies

\[ \lim _{s \downarrow a} \varphi (s) = +\infty = \lim _{s \uparrow b} \varphi (s). \tag{2} \]

Examples of functions with these properties are

We shall thus be interested in the following identification (inverse) problem: Find \(({\bar{s}},{{\bar{{\mathsf {u}}}}})\) such that

\[ J({\bar{s}},{{\bar{{\mathsf {u}}}}}) = \min J(s,{\mathsf {u}}) \tag{3} \]

subject to the fractional state equation

\[ (-\varDelta )^s {\mathsf {u}}= {\mathsf {f}}\quad \text {in } \varOmega , \tag{4} \]

where \((-\varDelta )^s\) denotes a fractional power of the Dirichlet Laplace operator \(-\varDelta \). We immediately remark that, with no modification, our approach can be extended to problems where the state equation is \(L^s {\mathsf {u}}= {\mathsf {f}}\), where \(L {\mathsf {w}}=-\text {div}(A\nabla {\mathsf {w}})\), supplemented with homogeneous Dirichlet boundary conditions, as long as the diffusion coefficient A is fixed, bounded, symmetric and positive definite. In principle, one could also consider optimization with respect to both the order s and the diffusion coefficient A, as this could account for anisotropies in the diffusion process. We refer the reader to [8], and the references therein, for the case when \(s=1\) is fixed and the optimization is carried out with respect to A.

We now comment on the choice of a and b. The practical situation can be envisioned as the following: from measurements or physical considerations we have an expected range for the order of the operator, and we want to optimize within that range to best fit the observations. From the existence and optimality conditions point of view, there is no limitation on their values, as long as \(0 \le a < b \le 1\). However, when we discuss the convergence of numerical algorithms, many of the estimates and arguments that we shall make blow up as \(s \downarrow 0\) or \(s \uparrow 1\) so we shall assume that \(a > 0\) and \(b < 1\). How to treat numerically the full range of s is currently under investigation.

Our presentation is organized as follows. The notation and functional setting are introduced in Sect. 2, where we also briefly describe, in Sect. 2.1, the definition of the fractional Laplacian. In Sect. 3, we study the fractional identification (inverse) problem (3)–(4). We analyze the differentiability properties of the associated control-to-state map (Sect. 3.1) and derive existence results as well as first and second order optimality conditions and a local uniqueness result (Sect. 3.2). Section 4 is dedicated to the design and analysis of a numerical algorithm to approximate the solution to (3)–(4). Finally, in Sect. 5 we illustrate the performance of our algorithm on several examples.

2 Notation and Preliminaries

Throughout this work \(\varOmega \) is an open, bounded and convex polytopal subset of \({\mathbb {R}}^{n}\) \((n \ge 1)\) with boundary \(\partial \varOmega \). The relation \(X \lesssim Y\) indicates that \(X \le CY\), with a nonessential constant C that might change at each occurrence.

2.1 The Fractional Laplacian

Spectral theory for the operator \(-\varDelta \) yields the existence of a countable collection of eigenpairs \(\{ \lambda _k, \varphi _k \}_{k \in {\mathbb {N}}} \subset {\mathbb {R}}^{+} \times H_0^1(\varOmega )\) such that \(\{ \varphi _k \}_{k \in {\mathbb {N}}}\) is an orthonormal basis of \(L^2(\varOmega )\) and an orthogonal basis of \(H_0^1(\varOmega )\) and

\[ -\varDelta \varphi _k = \lambda _k \varphi _k \quad \text {in } \varOmega , \qquad \varphi _k = 0 \quad \text {on } \partial \varOmega , \qquad k \in {\mathbb {N}}. \tag{5} \]

With this spectral decomposition at hand, we define the fractional powers of the Dirichlet Laplace operator, which for convenience we simply call the fractional Laplacian, as follows: For any \(s \in (0,1)\) and \(w \in C_0^{\infty }(\varOmega )\),

\[ (-\varDelta )^s w = \sum _{k \in {\mathbb {N}}} \lambda _k^{s} w_k \varphi _k, \qquad w_k = \int _{\varOmega } w \varphi _k \, \mathrm {d}x. \tag{6} \]

By density, this definition can be extended to the space

\[ {\mathbb {H}}^s(\varOmega ) = \left\{ w = \sum _{k \in {\mathbb {N}}} w_k \varphi _k \in L^2(\varOmega ) : \sum _{k \in {\mathbb {N}}} \lambda _k^{s} w_k^2 < \infty \right\} , \tag{7} \]

which we endow with the norm

\[ \Vert w \Vert _{{\mathbb {H}}^s(\varOmega )} = \left( \sum _{k \in {\mathbb {N}}} \lambda _k^{s} w_k^2 \right) ^{1/2}; \tag{8} \]

see [5, 6, 9] for details. The space \({\mathbb {H}}^s(\varOmega )\) coincides with \([ L^2(\varOmega ), H_0^1(\varOmega ) ]_{s}\), i.e., the interpolation space between \(L^2(\varOmega )\) and \(H_0^1(\varOmega )\); see [1, Chapter 7]. For \(s \in (0,1)\), we denote by \({\mathbb {H}}^{-s}(\varOmega )\) the dual space to \({\mathbb {H}}^s(\varOmega )\) and remark that it admits the following characterization:

where \({\mathcal {D}}'(\varOmega )\) denotes the space of distributions on \(\varOmega \). Finally, we denote by \(\langle \cdot ,\cdot \rangle \) the duality pairing between \({\mathbb {H}}^s(\varOmega )\) and \({\mathbb {H}}^{-s}(\varOmega )\).

3 The Fractional Identification (Inverse) Problem

In this section we study the existence of minimizers for the fractional identification (inverse) problem (3)–(4), as well as optimality conditions. We begin by introducing the so-called control-to-state map associated with problem (3)–(4) and studying its differentiability properties. This will allow us to derive first order necessary and second order sufficient optimality conditions for our problem, as well as existence results.

3.1 The Control-to-State Map

In this subsection we study the differentiability properties of the control-to-state map \({\mathcal {S}}\) associated with (3)–(4), which we define as follows: Given a control \(s \in (0,1)\), the map \({\mathcal {S}}\) associates to it the state \({\mathsf {u}}= {\mathsf {u}}(s)\) that solves problem (4) with the forcing term \({\mathsf {f}}\in {\mathbb {H}}^{-s}(\varOmega )\). In other words,

\[ {\mathcal {S}}(s) = {\mathsf {u}}(s) = \sum _{k \in {\mathbb {N}}} \lambda _k^{-s} {\mathsf {f}}_k \varphi _k, \tag{10} \]

where \({\mathsf {f}}_k = \langle {\mathsf {f}},\varphi _k \rangle \) and \(\{\lambda _k, \varphi _k \}_{k \in {\mathbb {N}}}\) are defined by (5). Since \({\mathsf {f}}\in {\mathbb {H}}^{-s}(\varOmega )\), the characterization of the space \({\mathbb {H}}^{-s}(\varOmega )\), given in (9), allows us to immediately conclude that the map \({\mathcal {S}}\) is well-defined; see also [6, Lemma 2.2].
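To illustrate the spectral representation of the control-to-state map, the following minimal sketch evaluates a truncated series \(\sum _k \lambda _k^{-s} {\mathsf {f}}_k \varphi _k\) in a one-dimensional model setting. Everything specific here is an assumption made for illustration and is not taken from the text: the domain \(\varOmega = (0,1)\), for which \(\lambda _k = (k\pi )^2\) and \(\varphi _k(x) = \sqrt{2} \sin (k \pi x)\), the right-hand side \({\mathsf {f}}= 1\), the truncation level, and the function names.

```python
import numpy as np

def dirichlet_eigenvalues_1d(num_modes):
    """Indices and eigenvalues of -d^2/dx^2 on (0, 1) with zero boundary values."""
    k = np.arange(1, num_modes + 1)
    return k, (k * np.pi) ** 2

def eigenfunctions(k, x):
    """L^2-orthonormal eigenfunctions sqrt(2) sin(k pi x); shape (len(k), len(x))."""
    return np.sqrt(2.0) * np.sin(np.outer(k, np.pi * np.atleast_1d(x)))

def control_to_state(s, f_coeffs, lam):
    """Coefficients of the truncated series u(s) = sum_k lam_k^{-s} f_k phi_k."""
    return lam ** (-s) * f_coeffs

num_modes = 400  # truncation level (assumption)
k, lam = dirichlet_eigenvalues_1d(num_modes)

# Coefficients of f = 1: f_k = int_0^1 sqrt(2) sin(k pi x) dx.
f_coeffs = np.sqrt(2.0) * (1.0 - np.cos(k * np.pi)) / (k * np.pi)

x = np.array([0.25, 0.5, 0.75])
phi_at_x = eigenfunctions(k, x)

# State for a fractional order, and for s = 1 as a sanity check:
# the latter should approximate the solution x (1 - x) / 2 of -u'' = 1.
u_half = control_to_state(0.5, f_coeffs, lam) @ phi_at_x
u_one = control_to_state(1.0, f_coeffs, lam) @ phi_at_x
print(u_half, u_one)
```

The value \(s = 1\) lies outside the range considered in the text; it is used only because the series then has a closed form against which the truncation can be checked.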

Before embarking on the study of the smoothness properties of the map \({\mathcal {S}}\) we define, for \(\lambda > 0\), the function \(E_\lambda : (0,1) \rightarrow {\mathbb {R}}^+\) by

\[ E_\lambda (s) = \lambda ^{-s}. \tag{11} \]

A trivial computation reveals that

\[ D_s^m E_\lambda (s) = (-1)^m \ln ^m(\lambda ) E_\lambda (s), \qquad m \in {\mathbb {N}}, \tag{12} \]

from which it immediately follows that, for \(m \in {\mathbb {N}}\), we have the estimate

\[ |D_s^m E_\lambda (s)| \lesssim s^{-m}, \tag{13} \]

where the hidden constant is independent of s, it remains bounded as \(\lambda \uparrow \infty \), but blows up as \(\lambda \downarrow 0\); compare with [12, Eq. (2.27)].
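The scaling \(s^{-m}\) in this estimate can be traced by an elementary maximization. The following worked computation is a sketch under the assumption that \(E_\lambda (s) = \lambda ^{-s}\), which is the form suggested by the series defining \({\mathcal {S}}\); the substitution \(t = \ln \lambda \) and the auxiliary function g are introduced here only for illustration. For \(\lambda \ge 1\), set \(t = \ln \lambda \ge 0\) and \(g(t) = t^m e^{-st}\). Then

\[ g'(t) = t^{m-1} e^{-st} (m - st) = 0 \iff t = \frac{m}{s}, \qquad \sup _{t \ge 0} g(t) = \left( \frac{m}{e s} \right) ^m, \]

so that \(|\ln \lambda |^m \lambda ^{-s} \le (m/e)^m s^{-m}\) uniformly in \(\lambda \ge 1\). For \(0< \lambda < 1\) and \(s \in (0,1)\) one only has

\[ |\ln \lambda |^m \lambda ^{-s} \le |\ln \lambda |^m \lambda ^{-1} \le |\ln \lambda |^m \lambda ^{-1} s^{-m}, \]

and the factor \(|\ln \lambda |^m \lambda ^{-1}\) is unbounded as \(\lambda \downarrow 0\), in agreement with the stated behavior of the hidden constant.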

With this auxiliary function at hand we proceed, following [12], to study the differentiability properties of the map \({\mathcal {S}}\). To begin we notice the inclusion \({\mathcal {S}}((0,1)) \subset L^2(\varOmega )\) so we consider \({\mathcal {S}}\) as a map with range in \(L^2(\varOmega )\) and we will denote by \(|||\cdot |||\) the norm of \(\mathcal L({\mathbb {R}},L^2(\varOmega ))\).

Theorem 1

(Properties of \({\mathcal {S}}\)) Let \({\mathcal {S}}: (0,1) \rightarrow L^2(\varOmega )\) be the control-to-state map, defined in (10), and assume that \({\mathsf {f}}\in L^2(\varOmega )\). For every \(s \in (0,1)\) we have that

where the hidden constant depends on \(\varOmega \) and \(\Vert {\mathsf {f}}\Vert _{L^2(\varOmega )}\), but not on s. In addition, \({\mathcal {S}}\) is three times Fréchet differentiable; the first and second derivatives of \({\mathcal {S}}\) are characterized as follows: for \(h_1, h_2 \in {\mathbb {R}}\), we have that

where

Finally, for \(m=1,2,3\), we have

where the hidden constants are independent of s.

Proof

Let \(s \in (0,1)\). To shorten notation we set \({\mathsf {u}}= {\mathcal {S}}(s)\). Using (10) we have that

where we used that, for all \(k \in {\mathbb {N}}\), \(0 < \lambda _1 \le \lambda _k\). Since \(\sup _{s \in [0,1]} \lambda _1^{-2s}\) is bounded, we obtain (14).

We now define, for \(N \in {\mathbb {N}}\), the partial sum \(w_N = \sum _{k = 1}^{N} \lambda _k^{-s} {\mathsf {f}}_k \varphi _k\). Evidently, as \(N \rightarrow \infty \), we have that \(w_N \rightarrow {\mathsf {u}}\) in \(L^2(\varOmega )\). Moreover, differentiating with respect to s we immediately obtain, in light of (12), the expression

and, using (12) and (13), that

where we used, again, that the eigenvalues are bounded away from zero. This estimate allows us to conclude that, as \(N \rightarrow \infty \), we have \(D_s w_N \rightarrow D_s {\mathsf {u}}\) in \(L^2(\varOmega )\) and the bound

Let us now prove that \({\mathcal {S}}\) is Fréchet differentiable and that (15) holds. Taylor’s theorem, in conjunction with (12), yields that, for every \(l \in {\mathbb {N}}\) and \(h_1 \in {\mathbb {R}}\), we have

for some \(\theta \in (s -|h_1|,s+|h_1|)\). Now, if \(|h_1| < s/2\), we have that \(\theta ^{-2} < 4 s^{-2}\), and thus, in view of estimate (13), that

This last estimate allows us to write

where the hidden constant is independent of \(h_1\) and s. The previous estimate shows that \({\mathcal {S}}: (0,1) \rightarrow L^2(\varOmega )\) is Fréchet differentiable and that \(D_s {\mathcal {S}}(s)[h_1] = h_1 D_s {\mathsf {u}}(s)\). Finally, using (18), we conclude estimate (16) for \(m=1\).

Similar arguments can be applied to show the higher order Fréchet differentiability of \({\mathcal {S}}\) and to derive estimate (16) for \(m=2,3\). For brevity, we skip the details.\(\square \)

3.2 Existence and Optimality Conditions

We now proceed to study the existence of a solution to problem (3)–(4) as well as to characterize it via first and second order optimality conditions. We begin by defining the reduced cost functional

\[ f(s) = J(s, {\mathcal {S}}(s)), \tag{19} \]

where \({\mathcal {S}}\) denotes the control-to-state map defined in (10) and J is defined as in (1); we recall that \(\varphi \in C^2(a,b)\). Notice that, owing to Theorem 1, \({\mathcal {S}}\) is three times Fréchet differentiable. Consequently, \(f \in C^2(a,b)\) and, moreover, it verifies conditions similar to (2). These properties will allow us to show existence of an optimal control. We begin with a definition.

Definition 1

(Optimal pair) The pair \((\bar{s},{\bar{{\mathsf {u}}}}(\bar{s})) \in (a,b) \times {\mathbb {H}}^{\bar{s}}(\varOmega )\) is called optimal for problem (3)–(4) if \({\bar{{\mathsf {u}}}}(\bar{s}) = {\mathcal {S}}(\bar{s})\) and

for all \((s,{\mathsf {u}}(s)) \in (a,b) \times {\mathbb {H}}^s(\varOmega )\) such that \({\mathsf {u}}(s) = {\mathcal {S}}(s)\).

Theorem 2

(Existence) There is an optimal pair \((\bar{s},{\bar{{\mathsf {u}}}}(\bar{s})) \in (a,b) \times {\mathbb {H}}^{\bar{s}}(\varOmega )\) for problem (3)–(4).

Proof

Let \(\{a_l\}_{l \in {\mathbb {N}}}, \{b_l\}_{l \in {\mathbb {N}}} \subset (a,b)\) be such that, for every \(l \in {\mathbb {N}}\), \(a<a_{l+1}<a_l< b_l<b_{l+1}<b\) and \(a_l \rightarrow a\), \(b_l \rightarrow b\) as \(l \rightarrow \infty \). Denote \(I_l = [a_l,b_l]\) and consider the problem of finding

The properties of f guarantee its existence. Notice that, since the intervals \(I_l\) are nested, we have

We have thus constructed a sequence \(\{s_l\}_{l \in {\mathbb {N}}} \subset (a,b)\) from which we can extract a convergent subsequence, which we still denote by the same \(\{s_l\}_{l \in {\mathbb {N}}}\), such that \(s_l \rightarrow {\bar{s}} \in [a,b]\). We claim that f attains its infimum, over (a, b), at the point \({\bar{s}}\).

Let us begin by showing that, in fact, \({\bar{s}} \in (a,b)\). The decreasing property of \(\{f(s_l)\}_{l \in {\mathbb {N}}}\) shows that

which, if \({\bar{s}} = a\) or \({\bar{s}}=b\), would lead to a contradiction with the fact that \(f(s) \ge \varphi (s)\) and (2).

Let \(s_\star \) be any point of (a, b). The construction of the intervals \(I_l\) guarantees that there is \(L \in {\mathbb {N}}\) for which \(s_\star \in I_l\) whenever \(l > L\). Therefore, we have

This shows that \({\bar{s}}\) is a minimizer.

Since \({\mathcal {S}}\), as a map from (a, b) to \(L^2(\varOmega )\), is continuous—even differentiable—we see that there is \(\bar{\mathsf {u}}\in L^2(\varOmega )\), for which \({\mathcal {S}}(s_l) \rightarrow {{\bar{{\mathsf {u}}}}} \) in \(L^2(\varOmega )\) as \(l \rightarrow \infty \). Let us now show that, indeed, \(\bar{\mathsf {u}}\in {\mathbb {H}}^{{\bar{s}}}(\varOmega )\) and that it satisfies the state equation.

Set \({{\bar{{\mathsf {u}}}}} = \sum _{k\in {\mathbb {N}}} {{\bar{{\mathsf {u}}}}}_k \varphi _k\) and notice that, as \(l \rightarrow \infty \),

Therefore \({{\bar{{\mathsf {u}}}}}_m = \lambda _m^{-{\bar{s}}} {\mathsf {f}}_m\). This shows that \({{\bar{{\mathsf {u}}}}} \in {\mathbb {H}}^{{\bar{s}}}(\varOmega )\) and that \({{\bar{{\mathsf {u}}}}}\) solves (4).

The result is thus proved. \(\square \)

We now provide first order necessary and second order sufficient optimality conditions for the identification (inverse) problem (3)–(4). Their form is standard in constrained minimization, but we record them as they will serve us later to design a numerical method.

Theorem 3

(Optimality conditions) Let \((\bar{s}, {{\bar{{\mathsf {u}}}}})\) be an optimal pair for problem (3)–(4). Then it satisfies the following first order necessary optimality condition

\[ ({\mathcal {S}}({\bar{s}}) - {\mathsf {u}}_d, D_s {\mathcal {S}}({\bar{s}}))_{L^2(\varOmega )} + \varphi '({\bar{s}}) = 0. \tag{20} \]

On the other hand, if \(({\bar{s}}, {{\bar{{\mathsf {u}}}}})\), with \({{\bar{{\mathsf {u}}}}} = {\mathcal {S}}({\bar{s}})\), satisfies (20) and, in addition, the second order optimality condition

holds, then \(({\bar{s}}, {{\bar{{\mathsf {u}}}}})\) is an optimal pair.

Proof

Since, as shown in Theorem 2, \({\bar{s}} \in (a,b)\), the first order optimality condition reads:

The characterization of the first order derivative of \({\mathcal {S}}\), given in Theorem 1, allows us to conclude (20). A similar computation reveals that

Using, again, the characterization for the first and second order derivatives of \({\mathcal {S}}\) given in Theorem 1 we obtain (21). This concludes the proof. \(\square \)

Let us now provide a sufficient condition for local uniqueness of the optimal parameter \({\bar{s}}\). To accomplish this task we assume that the function \(\varphi \), that defines the functional J in (1), is strongly convex with parameter \(\xi \), i.e., for all points \(s_1, s_2\) in (a, b), we have that

We thus present the following result.

Lemma 1

(Second-order sufficient conditions) Let \({\bar{s}}\) be optimal for problem (3)–(4) and f be defined as in (19). If \(\Vert {\mathsf {f}}\Vert _{L^2(\varOmega )}\) and \(\Vert {\mathsf {u}}_d \Vert _{L^2(\varOmega )}\) are sufficiently small, then there exists a constant \(\vartheta > 0\) such that

Proof

On the basis of (23), we invoke the strong convexity of \(\varphi \) to conclude that

It thus suffices to control the term \(({\mathcal {S}}({\bar{s}}) - {\mathsf {u}}_d, D_{s}^2 {\mathcal {S}}( {\bar{s}}))_{L^2(\varOmega )}\); and to do so we use the estimates of Theorem 1. In fact, we have that

where \(C_1\) and \(C_2\) depend on \(\varOmega \) and the operator \(-\varDelta \) but are independent of \({\bar{s}}\), \({\mathsf {f}}\) and \({\mathsf {u}}_d\). Since Theorem 2 guarantees that \({\bar{s}} \in (a,b)\), we conclude that the right hand side of the previous expression is bounded. This, in view of the fact that \(\Vert {\mathsf {f}}\Vert _{L^2(\varOmega )}\) and \(\Vert {\mathsf {u}}_d \Vert _{L^2(\varOmega )}\) are sufficiently small, concludes the proof. \(\square \)

As a consequence of the previous Lemma we derive, for the reduced cost functional f, a convexity property that will be important to analyze the fully discrete scheme of Sect. 4, and a quadratic growth condition that implies the local uniqueness of \({\bar{s}}\).

Corollary 1

(Convexity and quadratic growth) Let \({\bar{s}}\) be optimal for problem (3)–(4) and f be defined as in (19). If \(\Vert {\mathsf {f}}\Vert _{L^2(\varOmega )}\) and \(\Vert {\mathsf {u}}_d \Vert _{L^2(\varOmega )}\) are sufficiently small, then there exists \(\delta > 0\) such that

where \(\vartheta \) is the constant that appears in (25). In addition, we have the quadratic growth condition

In particular, f has a local minimum at \({\bar{s}}\). Moreover, this minimum is unique in \(({\bar{s}}-\delta , {\bar{s}} + \delta ) \cap (a,b)\).

Proof

Estimates (26) and (27) follow immediately from an application of Taylor’s theorem and estimate (25); see [14, Theorem 4.23] for details. The local uniqueness follows immediately from (27). \(\square \)

4 A Numerical Scheme for the Fractional Identification (Inverse) Problem

In this section we propose a numerical method that approximates the solution to the fractional identification (inverse) problem (3)–(4). To be able to provide a convergence analysis of the proposed method we make the following assumption.

Assumption 1

(Compact subinterval) The optimization bounds a and b satisfy

Note that we are, essentially, minimizing the univariate function f, given in (19), over a bounded interval (a, b). However, this is not as simple as it initially seems. The evaluation of f(s) requires knowledge of \({\mathsf {u}}(s)\), i.e., the solution of an infinite dimensional nonlocal problem, and this must be numerically approximated. In addition, trying to use a higher order technique, like a Newton method, is not feasible as it requires the evaluation of \(D_s {\mathsf {u}}(s)\), which also needs to be numerically approximated. For these reasons we opt to devise a method that is convergent independently of the initial guess and for which we can derive error estimates. The scheme that we propose below is based on the discretization of the first order optimality condition (20): we discretize the first derivative \(D_s {\mathsf {u}}(s)\) in (20) using a centered difference and then we approximate the solution to the state Eq. (4) with the finite element techniques introduced in [9].

4.1 Discretization in s

To fix ideas, we first propose a scheme that only discretizes the variable s and analyze its convergence properties. We begin by introducing some terminology. Let \(\sigma > 0\) and \(s \in (a,b)\) be such that \(s \pm \sigma \in (a,b)\). We thus define, for \(\psi : (a,b) \rightarrow {\mathbb {R}}\), the centered difference approximation of \(D_s \psi \) at s by

\[ d_\sigma \psi (s) = \frac{\psi (s+\sigma ) - \psi (s-\sigma )}{2\sigma }. \tag{28} \]

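If \(\psi \in C^3(a,b)\), a basic application of Taylor’s theorem immediately yields the estimate

\[ |D_s \psi (s) - d_\sigma \psi (s)| \le \frac{\sigma ^2}{6} \Vert D_s^3 \psi \Vert _{L^\infty (s-\sigma ,s+\sigma )}. \tag{29} \]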
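The second order accuracy of the centered difference can be observed numerically. The sketch below is only an illustration: the test function \(\psi (s) = \lambda ^{-s}\), the value of \(\lambda \), the evaluation point and the step sizes are all assumptions chosen for convenience. Halving \(\sigma \) should reduce the error by a factor close to four.

```python
import math

def centered_difference(psi, s, sigma):
    """Centered difference quotient (psi(s + sigma) - psi(s - sigma)) / (2 sigma)."""
    return (psi(s + sigma) - psi(s - sigma)) / (2.0 * sigma)

lam = 9.87  # sample positive number playing the role of an eigenvalue (assumption)

def psi(s):
    return lam ** (-s)

def dpsi(s):
    # Exact derivative of lam^{-s} with respect to s.
    return -math.log(lam) * lam ** (-s)

s = 0.5
sigmas = (0.1, 0.05, 0.025)
errors = [abs(centered_difference(psi, s, sig) - dpsi(s)) for sig in sigmas]

# Ratios of consecutive errors; values near 4 indicate an O(sigma^2) error.
ratios = [errors[i] / errors[i + 1] for i in range(len(errors) - 1)]
print(errors, ratios)
```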

We also define the function \(j_{\sigma }: (a,b) \rightarrow {\mathbb {R}}\) by

\[ j_\sigma (s) = ({\mathsf {u}}(s) - {\mathsf {u}}_d, d_\sigma {\mathsf {u}}(s))_{L^2(\varOmega )} + \varphi '(s), \tag{30} \]

where \({\mathsf {u}}(s)\) denotes the solution to (4). Finally, a point \(s_\sigma \in (a,b)\) for which

\[ j_\sigma (s_\sigma ) = 0 \tag{31} \]

will serve as an approximation of the optimal parameter \({\bar{s}}\).

Notice that, in (30), the definition of \(j_\sigma \) coincides with the first order optimality condition (20), when we replace the derivative of the state, i.e., \(D_s {\mathsf {u}}\), by its centered difference approximation, as defined in (28). The existence of \(s_\sigma \) will be shown by proving convergence of Algorithm 1 which, essentially, is a bisection algorithm. In addition, if the algorithm reaches line 14, since \(j_{\sigma } \in C([s_l, s_r])\) and it takes values of different signs at the endpoints, the intermediate value theorem guarantees that the bisection step will produce a sequence of values that we use to approximate the root of \(j_\sigma \). It remains then to show that we can eventually find the requisite interval \([s_l,s_r] \subset (a,b)\). This is the content of the following result.

Lemma 2

(Root isolation) If \(\sigma \) is sufficiently small, there exist \(s_l\) and \(s_r\) in (a, b) such that \(j_{\sigma }(s_l) < 0\) and \(j_{\sigma }(s_r) > 0\), i.e., the root isolation step in Algorithm 1 terminates.

Proof

We begin the proof by noticing that, for \(s \in (\sigma ,1-\sigma ) \subset (a,b)\), the estimates of Theorem 1 immediately yield the existence of a constant \(C>0\) such that

where C depends on \(\varOmega \), \({\mathsf {u}}_d\) and \({\mathsf {f}}\) but not on s or \(\sigma \).

On the other hand, since property (2) implies that \(\varphi '(s) \rightarrow -\infty \) as \(s \downarrow a\), we deduce the existence of \(\epsilon _{l} > 0\) such that, if \(s \in (a, a+\epsilon _{l})\) then \(\varphi '(s) < -C/\sigma \). Assume that \(\sigma < \epsilon _{l}\). Consequently, in view of the bound (32), definition (30) immediately implies that, for every \(s \in (a+\sigma ,a+\epsilon _{l})\), we have the estimate

Similar arguments allow us to conclude the existence of \(\epsilon _r >0\) such that, if \(s \in (b-\epsilon _r, b)\) then \(\varphi '(s) > C/\sigma \). Assume that \(\sigma < \epsilon _r\) . We thus conclude that, for every \(s \in (b-\epsilon _r,b-\sigma )\), we have the bound

In light of the previous estimates we thus conclude that, for \(\sigma < \min \{\epsilon _l,\epsilon _r\}\), we can find \(s_l\) and \(s_r\) in (a, b) such that \(j_{\sigma }(s_l)<0\) and \(j_{\sigma }(s_r)>0\). This concludes the proof. \(\square \)
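The root isolation and bisection steps can be sketched on a one-dimensional model problem. This is a hedged illustration and not a transcription of Algorithm 1: the domain \((0,1)\) with \(\lambda _k = (k\pi )^2\), the data \({\mathsf {f}}= 1\), the synthetic observation \({\mathsf {u}}_d = {\mathsf {u}}(0.6)\), the barrier \(\varphi (s) = \varepsilon / ((s-a)(b-s))\), the bounds a and b, and all function names are assumptions. The function `j_sigma` combines the discrete misfit term, with the state derivative replaced by a centered difference, and \(\varphi '\); inner products in \(L^2(\varOmega )\) are computed from expansion coefficients by orthonormality.

```python
import numpy as np

k = np.arange(1, 201)
lam = (k * np.pi) ** 2
f_coeffs = np.sqrt(2.0) * (1.0 - np.cos(k * np.pi)) / (k * np.pi)  # f = 1

a, b = 0.1, 0.9          # optimization bounds (assumption)
s_true = 0.6             # order used to generate the observations (assumption)
ud_coeffs = lam ** (-s_true) * f_coeffs

def state(s):
    """Coefficients of the truncated series for u(s)."""
    return lam ** (-s) * f_coeffs

def dphi(s, eps=1e-4):
    """Derivative of the assumed barrier eps / ((s - a) (b - s))."""
    g = (s - a) * (b - s)
    return -eps * (a + b - 2.0 * s) / g ** 2

def j_sigma(s, sigma):
    """Misfit term with a centered difference of the state, plus dphi."""
    d_u = (state(s + sigma) - state(s - sigma)) / (2.0 * sigma)
    return float(np.dot(state(s) - ud_coeffs, d_u)) + dphi(s)

def isolate_root(sigma):
    """Shrink the margin to the bounds until j_sigma changes sign."""
    delta = 0.25 * (b - a)
    while delta > sigma:
        s_l, s_r = a + delta, b - delta
        if j_sigma(s_l, sigma) < 0.0 < j_sigma(s_r, sigma):
            return s_l, s_r
        delta *= 0.5
    raise RuntimeError("no sign change found; try a smaller sigma")

def bisect(sigma, tol=1e-10):
    """Bisection on j_sigma over the isolated bracket."""
    s_l, s_r = isolate_root(sigma)
    while s_r - s_l > tol:
        s_m = 0.5 * (s_l + s_r)
        if j_sigma(s_m, sigma) < 0.0:
            s_l = s_m
        else:
            s_r = s_m
    return 0.5 * (s_l + s_r)

sigma = 1e-3
s_sigma = bisect(sigma)
print(s_sigma)
```

In this toy setting the computed root lies slightly below the order used to generate the data, because the barrier term pulls the minimizer toward the midpoint of \((a,b)\).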

From Lemma 2 we immediately conclude that the bisection algorithm can be performed and exhibits the following convergence property.

Lemma 3

(Convergence rate: bisection method) The sequence \(\{ s_k \}_{k \ge 1}\) generated by the bisection algorithm satisfies

In addition, there exist \(s_l\) and \(s_r\) such that \(a<s_l<s_r < b\) and \(s_{\sigma } \in (s_l,s_r)\).
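As an illustration of what such a rate means in practice, we record the standard bound for interval halving; the indexing of the iterates used here is an assumption and may differ from the one in the lemma. If \([s_l,s_r]\) is the initial bracket and \(s_k\) denotes the midpoint of the bracket obtained after k halvings, then

\[ |s_\sigma - s_k| \le 2^{-(k+1)} (s_r - s_l). \]

For instance, with \(s_r - s_l = 0.4\) and a tolerance of \(10^{-6}\), one needs \(k + 1 \ge \log _2 (4 \times 10^{5}) \approx 18.6\), so that \(k = 18\) halvings suffice: \(0.4 \cdot 2^{-19} \approx 7.6 \times 10^{-7}\).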

The results of Lemmas 2 and 3 guarantee that, for a fixed \(\sigma \), the bisection algorithm can be performed and exhibits a convergence rate dictated by (33). Let us now discuss the convergence properties, as \(\sigma \rightarrow 0\), of this semi-discrete method. We begin with two technical lemmas.

Lemma 4

(Convergence of \(j_\sigma \)) Let \(j_\sigma : (a, b ) \rightarrow {\mathbb {R}}\) be defined as in (30). Then \(j_\sigma \rightrightarrows f'\), i.e., \(j_\sigma \) converges to \(f'\) uniformly on (a, b), as \(\sigma \rightarrow 0\).

Proof

From the definitions we obtain that, whenever \(s \in (a,b)\)

where the hidden constant depends on \({\mathsf {u}}_d\) and estimate (14). Since, from Theorem 1, we know that the control-to-state map is three times differentiable, we can conclude that

where we used a formula analogous to (29) and estimate (16). The fact that \(a>0\) (Assumption 1) and the observation that the deduced bound is uniform for \(s \in [a,b]\) allow us to conclude. \(\square \)

With the uniform convergence of \(j_\sigma \) at hand, we can obtain the convergence of its roots to parameters that are optimal.

Lemma 5

(Convergence of \(s_\sigma \)) The family \(\{s_\sigma \}_{\sigma >0}\) contains a convergent subsequence. Moreover, the limit of any convergent subsequence satisfies (20).

Proof

The existence of a convergent subsequence follows from the fact that \(\{s_\sigma \}_{\sigma >0} \subset [a,b]\). Moreover, as in Theorem 2, we conclude that the limit is in (a, b). Let us now show that any limit satisfies (20).

By Lemma 4, for any \(\varepsilon >0\), if \(\sigma \) is sufficiently small, we have that

which implies that \(f'(s_\sigma ) \rightarrow 0\) as \(\sigma \rightarrow 0\). Let now \(\{s_{\sigma _k}\}_{k \in {\mathbb {N}}} \subset \{s_\sigma \}\) be a convergent subsequence. Denote the limit point by \({\underline{s}} \in (a,b)\). By continuity of \(f'\) we have \( f'(s_{\sigma _k}) \rightarrow f'({\underline{s}})\) which implies that

\(\square \)

Remark 1

(Stronger convergence) It is expected that we cannot prove more than convergence up to subsequences, since there might be more than one s that satisfies (20). If there is a unique optimal s, then the previous result implies that the family \(\{s_\sigma \}_{\sigma >0}\) converges to it.

In what follows, to simplify notation, we denote by \(\{s_\sigma \}_{\sigma >0}\) any convergent subfamily. The next result then provides a rate of convergence.

Theorem 4

(Convergence rate in \(\sigma \)) Let \({\bar{s}}\) denote a solution to the identification (inverse) problem (3)–(4) and let \(s_{\sigma }\) be its approximation defined as the solution to Eq. (31). If \(\sigma \) is sufficiently small then we have

where the hidden constant is independent of \({\bar{s}}\), \(s_{\sigma }\), \(\sigma \), \({\mathsf {f}}\) and \({\mathsf {u}}_d\).

Proof

We begin by considering the parameter \(\sigma \) sufficiently small such that \(s_{\sigma } \in ({\bar{s}} - \delta , {\bar{s}} + \delta )\), where \(\delta > 0\) is as in the statement of Corollary 1. Thus, an application of the estimate (26) in conjunction with the fact that \(j_{\sigma }(s_{\sigma }) = 0\) allows us to conclude that

Consequently, following Lemma 4 we obtain that

The theorem is thus proved. \(\square \)

4.2 Space Discretization

The goal of this section is to propose, on the basis of the bisection algorithm of Sect. 4.1, a fully discrete scheme that approximates the solution to problem (3)–(4). To accomplish this task we will utilize the discretization techniques introduced in [9] that provide an approximation to the solution to the fractional diffusion problem (4). In order to make the exposition self-contained and as clear as possible, we briefly review the techniques of [9] in Sect. 4.2.1. In Sect. 4.2.2 we design a fully discrete scheme for our identification problem and present an analysis for it; we emphasize that the results presented in Sect. 4.2.2 are the main novelty of Sect. 4.2.

4.2.1 A Discretization Technique for Fractional Diffusion

Exploiting the cylindrical extension proposed and investigated in [3, 6, 13], which is in turn inspired by the breakthrough of Caffarelli and Silvestre analyzed in [4], the authors of [9] have proposed a numerical technique to approximate the solution to problem (4) that is based on an anisotropic finite element discretization of the following local and nonuniformly elliptic PDE:

Here, \({\mathcal {C}}\) denotes the semi-infinite cylinder with base \(\varOmega \) defined by

and \(\partial _L {\mathcal {C}}= \partial \varOmega \times [0,\infty )\) its lateral boundary. In addition, \(d_s = 2^\alpha \Gamma (1-s)/\Gamma (s)\) and

Finally, \(\alpha = 1-2s \in (-1,1)\). Although degenerate or singular, the variable coefficient \(y^\alpha \) satisfies a key property. Namely, it belongs to the Muckenhoupt class \(A_2({\mathbb {R}}^{n+1})\). This allows for an optimal piecewise polynomial interpolation theory [9].

To state the results of [3, 4, 6, 13], we define the weighted Sobolev space

and the trace operator

where \({{\mathrm{tr_\Omega }}}w\) denotes the trace of w onto \(\varOmega \times \{ 0 \}\).

The results of [3, 4, 6, 13] thus read as follows: Let \({\mathscr {U}}\in {\mathop {H}\limits ^{\circ }}{}_L^1(y^\alpha ,{\mathcal {C}})\) and \({\mathsf {u}}\in {\mathbb {H}}^s(\varOmega )\) be the solutions to (35) and (4), respectively, then

A first step toward a discretization scheme is to truncate, for a given truncation parameter \(\mathcal {Y}>0\), the semi-infinite cylinder \({\mathcal {C}}\) to \({\mathcal {C}}_{\mathcal {Y}}:= \varOmega \times (0,\mathcal {Y})\) and seek solutions in this bounded domain. In fact, let \(v \in {\mathop {H}\limits ^{\circ }}{}_L^1(y^\alpha ,{\mathcal {C}}_{\mathcal {Y}})\) be the solution to

where \({\mathop {H}\limits ^{\circ }}{}_L^1(y^{\alpha },{\mathcal {C}}_{\mathcal {Y}}) = \left\{ w \in H^1(y^\alpha ,{\mathcal {C}}_{\mathcal {Y}}): w = 0\,\, \mathrm{on}\,\, \partial _L {\mathcal {C}}_{\mathcal {Y}} \cup \varOmega \times \{\mathcal {Y}\} \right\} \). Then the exponential decay of \({\mathscr {U}}\) in the extended variable y implies the following error estimate

provided \(\mathcal {Y}\ge 1\), and the hidden constant depends on s, but is bounded on compact subsets of (0, 1). We refer the reader to [9, Sect. 3] for details. With this truncation at hand, we thus recall the finite element discretization techniques of [9, Sect. 4].

To avoid technical difficulties, we assume that \(\varOmega \) is a convex polytopal subset of \({\mathbb {R}}^n\) and refer the reader to [11] for results involving curved domains. Let \({\mathscr {T}}_\varOmega = \{K\}\) be a conforming and shape regular triangulation of \(\varOmega \) into cells K that are isoparametrically equivalent to either a simplex or a cube. Let \({\mathcal {I}}_\mathcal {Y}= \{I\}\) be a partition of the interval \([0,\mathcal {Y}]\) with mesh points

We then construct a mesh of the cylinder \({\mathcal {C}}_\mathcal {Y}\) by \({\mathscr {T}}_\mathcal {Y}= {\mathscr {T}}_\varOmega \otimes {\mathcal {I}}_\mathcal {Y}\), i.e., each cell \(T \in {\mathscr {T}}_\mathcal {Y}\) is of the form \(T = K \times I\) where \(K \in {\mathscr {T}}_\varOmega \) and \(I \in {\mathcal {I}}_\mathcal {Y}\). We note that, by construction, \(\# {\mathscr {T}}_\mathcal {Y}= M \#{\mathscr {T}}_\varOmega \). When \({\mathscr {T}}_\varOmega \) is quasiuniform with \(\# {\mathscr {T}}_\varOmega \approx M^n\) we have \(\# {\mathscr {T}}_\mathcal {Y}\approx M^{n+1}\) and, if \(h_{{\mathscr {T}}_\varOmega } = \max \{ {{\mathrm{diam}}}(K) : K \in {\mathscr {T}}_\varOmega \}\), then \(M \approx h_{{\mathscr {T}}_\varOmega }^{-1}\). Having constructed the mesh \({\mathscr {T}}_\mathcal {Y}\) we define the finite element space

where, \(\Gamma _D = \partial \varOmega \times [0,\mathcal {Y}) \cup \varOmega \times \{ \mathcal {Y}\}\), and if K is isoparametrically equivalent to a simplex, \({\mathcal {P}}(K)={\mathbb {P}}_1(K)\) i.e., the set of polynomials of degree at most one. If K is a cube \({{\mathcal {P}}}(K) = {\mathbb {Q}}_1(K)\), that is, the set of polynomials of degree at most one in each variable. We must immediately comment that, owing to (39), the meshes \({\mathscr {T}}_\mathcal {Y}\) are not shape regular but satisfy: if \(T_1 = K_1 \times I_1\) and \(T_2 = K_2 \times I_2\) are neighbors, then there is \(\kappa >0\) such that

The use of anisotropic meshes in the extended direction y is imperative if one wishes to obtain a quasi-optimal approximation error since \({\mathscr {U}}\), the solution to (35), possesses a singularity as \(y\downarrow 0\); see [9, Theorem 2.7].
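To give a concrete picture of an anisotropic partition of \([0,\mathcal {Y}]\), the following sketch builds a mesh graded toward \(y = 0\). The mesh points of (39) are not reproduced here, so the grading formula \(y_k = (k/M)^\gamma \mathcal {Y}\) with \(\gamma > 3/(1-\alpha ) = 3/(2s)\) is an assumption of this sketch (a grading commonly used for this kind of extension problem), as are the function name and the safety factor.

```python
import numpy as np

def graded_mesh(s, num_intervals, truncation, safety=1.05):
    """Mesh points y_k = (k / M)^gamma * Y, k = 0, ..., M, graded toward y = 0.

    The exponent gamma is taken slightly above 3 / (1 - alpha) = 3 / (2 s),
    with alpha = 1 - 2 s. Both the formula and the safety factor are
    assumptions made for illustration.
    """
    alpha = 1.0 - 2.0 * s
    gamma = safety * 3.0 / (1.0 - alpha)
    k = np.arange(num_intervals + 1)
    return truncation * (k / num_intervals) ** gamma

y = graded_mesh(s=0.5, num_intervals=16, truncation=2.0)
widths = np.diff(y)

# Cells are much thinner near y = 0, where the extended solution is singular.
print(widths[0], widths[-1])
```

Smaller values of s lead to a larger exponent and therefore to a stronger concentration of mesh points near \(y = 0\).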

We thus define a finite element approximation of the solution to the truncated problem (38): Find \(V_{{\mathscr {T}}_{\mathcal {Y}}} \in {\mathbb {V}}({\mathscr {T}}_\mathcal {Y})\) such that

With this discrete function at hand, and on the basis of the localization results of Caffarelli and Silvestre, we define an approximation \(U_{{\mathscr {T}}_{\varOmega }} \in {\mathbb {U}}({\mathscr {T}}_{\varOmega }) = {{\mathrm{tr_\Omega }}}{\mathbb {V}}({\mathscr {T}}_\mathcal {Y})\) of the solution \({\mathsf {u}}\) to problem (4) as follows:

4.2.2 A Fully Discrete Scheme for the Fractional Identification (Inverse) Problem

Following the discussion in [9] one observes that many of the stability and error estimates in this work contain constants that depend on s. While these remain bounded in compact subsets of (0, 1), many of them degenerate or blow up as \(s \downarrow 0\) or \(s \uparrow 1\). In fact, it is not clear if the PDE (35) is well-posed in these limits. Even if this problem made sense, the Caffarelli–Silvestre extension property (37) does not hold as we take the limits mentioned above. For this reason, we continue to work under Assumption 1. We begin by defining the discrete control-to-state map \(S_{{\mathscr {T}}}\) as follows:

where \(U_{{\mathscr {T}}_{\varOmega }}(s)\) is defined as in (41). We also define the function \(j_{\sigma ,{\mathscr {T}}}:(a,b) \rightarrow {\mathbb {R}}\) as

where the centered difference \(d_{\sigma }\) is defined as in (28). With these elements at hand, we thus define a fully discrete approximation of the optimal parameter \({\bar{s}}\) as the solution to the following problem: Find \(s_{\sigma ,{\mathscr {T}}} \in (a,b)\) such that

We notice that, under the assumption that the map \(S_{{\mathscr {T}}}\) is continuous in (a, b), the same arguments developed in the proof of Lemma 2 yield the existence of \(s_{r,{\mathscr {T}}}\) and \(s_{l,{\mathscr {T}}}\) in (a, b) such that \(j_{\sigma ,{\mathscr {T}}}(s_{r,{\mathscr {T}}})<0\) and \(j_{\sigma ,{\mathscr {T}}}(s_{l,{\mathscr {T}}}) > 0\). This implies that, if in the bisection algorithm of Sect. 4.1 we replace \(j_{\sigma }\) by \(j_{\sigma ,{\mathscr {T}}}\), the step Root isolation can be performed. Consequently, we deduce the convergence of the bisection algorithm and thus the existence of a solution \(s_{\sigma ,{\mathscr {T}}} \in (a,b)\) to problem (43).
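The root isolation and bisection steps described above can be illustrated with a short sketch. It is not the implementation of Sect. 4.1: the function `bisect_root`, its arguments and the stand-in function `j_toy` are hypothetical, and evaluating the actual \(j_{\sigma ,{\mathscr {T}}}\) would require discrete solves at \(s\) and \(s \pm \sigma \) for each call.

```python
def bisect_root(j, s_l, s_r, tol=1e-14, max_iter=200):
    """Bisection on [s_l, s_r] for a continuous function j.

    Only a sign change between the endpoints is required, so the sketch
    works whichever of j(s_l), j(s_r) is the negative one.
    Returns the approximate root and the number of iterations used.
    """
    j_l, j_r = j(s_l), j(s_r)
    if j_l * j_r > 0:
        raise ValueError("root isolation failed: no sign change on [s_l, s_r]")
    for n in range(1, max_iter + 1):
        s_k = 0.5 * (s_l + s_r)
        j_k = j(s_k)
        # Stop when the residual or the bracket half-width is below tol.
        if abs(j_k) < tol or 0.5 * (s_r - s_l) < tol:
            return s_k, n
        if j_l * j_k < 0:
            s_r, j_r = s_k, j_k   # the root lies in the left half
        else:
            s_l, j_l = s_k, j_k   # the root lies in the right half
    return 0.5 * (s_l + s_r), max_iter

def j_toy(s):
    """Stand-in for j: derivative of 1/(s(1-s)), which vanishes at s = 1/2."""
    return (2.0 * s - 1.0) / (s * (1.0 - s)) ** 2

s_approx, n_iter = bisect_root(j_toy, 0.3, 0.9)
```

Continuity of the function on the bracket is what guarantees that a sign change encloses a root, which is why the continuity of \(S_{{\mathscr {T}}}\) matters for the discrete problem.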

It is then necessary to study the continuity of \(S_{\mathscr {T}}\), but this can be easily achieved because we are in finite dimensions and the problem is linear.

Proposition 1

(Continuity of \(S_{\mathscr {T}}\)) For every mesh \({\mathscr {T}}_{\mathcal {Y}}\), defined as in Sect. 4.2.1, the map \(S_{\mathscr {T}}\) is continuous on (a, b).

Proof

Let \(\{s_k\}_{k\in {\mathbb {N}}} \subset (a,b)\) be such that \(s_k \rightarrow s \in (a,b)\). Since the operator \({{\mathrm{tr_\Omega }}}\), defined as in (36), is continuous [9, Proposition 2.5], it suffices to show that the application \(s \mapsto V_{{\mathscr {T}}_\mathcal {Y}}(s)\) is continuous. Consider

and

Set \(W_s = V_{{\mathscr {T}}_\mathcal {Y}}(s) - V_{{\mathscr {T}}_\mathcal {Y}}(s_k)\) and \(W_k = V_{{\mathscr {T}}_\mathcal {Y}}(s_k) - V_{{\mathscr {T}}_\mathcal {Y}}(s)\) and add these two identities to obtain

We now proceed to estimate each one of these terms.

For the first term we have

as \(k \rightarrow \infty \). This is the case because \(\Vert {{\mathrm{tr_\Omega }}}(V_{{\mathscr {T}}_\mathcal {Y}}(s) - V_{{\mathscr {T}}_\mathcal {Y}}(s_k)) \Vert _{L^2(\varOmega )}\) is uniformly bounded [9, Proposition 2.5] and, by Assumption 1, we have that \(d_{s_k} \rightarrow d_s\).

We estimate the second term as follows

Using that we are in finite dimensions, the question reduces to the convergence

which follows from the a.e. convergence of \(y^{1-2s_k}\) to \(y^{1-2s}\), the fact that, for \(0<y < 1\), we have \(0<y^{1-2s_k} \le y^{1-2a} \in L^1(0,1)\) and an application of the dominated convergence theorem.

This concludes the proof. \(\square \)

We now proceed to derive an a priori bound for the error between the exact parameter \({\bar{s}}\) and its approximation \(s_{\sigma ,{\mathscr {T}}}\), given as the solution to (43). We begin by noticing that, following the proof of Lemma 4 and using [10, Proposition 28] and Assumption 1, we have

where the hidden constant depends on a and b but is uniform in (a, b). Clearly, for fixed \(\sigma \), this implies the uniform convergence of \(j_{\sigma ,{\mathscr {T}}}\) to \(j_\sigma \) as we refine the mesh. By repeating the arguments of Lemma 5 we conclude the convergence, up to subsequences, of \(\{s_{\sigma ,{\mathscr {T}}}\}_{{\mathscr {T}}}\) to \(s_\sigma \), a root of \(j_\sigma \). Arguing as in Remark 1, we see that we cannot expect convergence of the entire family.

Finally, we denote one of these convergent subsequences by \(\{s_{\sigma ,{\mathscr {T}}}\}_{{\mathscr {T}}}\) and provide an error estimate.

Theorem 5

(Error estimate: discretization in s and space) Let \({\bar{s}}\) be optimal for the identification (inverse) problem (3)–(4) and \(s_{\sigma ,{\mathscr {T}}}\) its approximation defined as the solution to (43). If \(\sigma \) is sufficiently small, \(\#{\mathscr {T}}_\mathcal {Y}\) is sufficiently large and, \({\mathsf {f}}\in {\mathbb {H}}^{1-a}(\varOmega )\), then

where the hidden constant is independent of \({\bar{s}}\), \(s_{\sigma ,{\mathscr {T}}}\), \({\mathsf {f}}\) and the mesh \({\mathscr {T}}_{\mathcal {Y}}\).

Proof

We begin by remarking that, by setting \(\sigma \) sufficiently small and \(\#{\mathscr {T}}_\mathcal {Y}\) sufficiently large, respectively, we can assert that \(s_{\sigma ,{\mathscr {T}}} \in (\bar{s} - \delta , \bar{s} + \delta )\) with \(\delta \) being the parameter of Corollary 1. By invoking the estimate (26) and in view of the fact that \(f'({\bar{s}}) = 0 = j_{\sigma ,{\mathscr {T}}}(s_{\sigma ,{\mathscr {T}}})\), we deduce the following estimate:

We proceed to bound the right hand side of the previous expression. To accomplish this task, we invoke the definition (42) of \(j_{\sigma ,{\mathscr {T}}}\) and, repeating the arguments of Lemma 4, obtain that

We thus examine each term separately. We start with \(\mathrm{II}\): its control relies on the a priori error estimates of [9, 10]. In fact, combining the results of [10, Proposition 28] with the estimate (16) for \(m=1\), we arrive at

where the hidden constant depends on a and b but is independent of \({\bar{s}}\), \(s_{\sigma ,{\mathscr {T}}}\), \({\mathsf {f}}\) and \({\mathscr {T}}_{\mathcal {Y}}\). Notice that here we used Assumption 1 to, for instance, control the term \(s_{\sigma ,{\mathscr {T}}}^{-1}\).

We now proceed to control the term \(\mathrm{I}\) in (46). A basic application of the Cauchy–Schwarz inequality yields

We thus apply the estimate (14) and the triangle inequality to obtain that

We estimate the first term on the right hand side of the previous expression: the definition (28) of \(d_{\sigma }\) and [10, Proposition 28] imply that

we notice that \(\sigma \) is small enough such that \(s_{\sigma ,{\mathscr {T}}} \pm \sigma \in (a,b)\). On the other hand, an estimate similar to (29) yields that

Collecting the previous estimates we arrive at the following bound for the term \(\mathrm{I}\):

On the basis of (46), this bound and the estimate for the term \(\mathrm{II}\) yield

where the hidden constant depends on a and b, but is independent of \(\sigma \) and \(\#{\mathscr {T}}_\mathcal {Y}\). This concludes the proof. \(\square \)

A natural choice of \(\sigma \) comes from equilibrating the terms on the right-hand side of (45): \(\sigma \approx |\log (\#{\mathscr {T}}_\mathcal {Y})|^{2b/3} (\# {\mathscr {T}}_{\mathcal {Y}})^{-(1+a)/(3(n+1))}\). This implies the following error estimate.

Corollary 2

(Error estimate: discretization in s and space) Let \({\bar{s}}\) be optimal for the identification (inverse) problem (3)–(4) and \(s_{\sigma ,{\mathscr {T}}}\) be its approximation defined as the solution to (43). If \(\#{\mathscr {T}}_\mathcal {Y}\) is sufficiently large, the parameter \(\sigma \) is chosen as

and \({\mathsf {f}}\in {\mathbb {H}}^{1-a}(\varOmega )\) then

where the hidden constant depends on a and b but is independent of \({\bar{s}}\), \(s_{\sigma ,{\mathscr {T}}}\), and the mesh \({\mathscr {T}}_{\mathcal {Y}}\).

5 Numerical Examples

In this section, we study the performance of the proposed bisection algorithm of Sect. 4 when applied to the fully discrete parameter identification (inverse) problem of Sect. 4.2.2 with the help of four numerical examples.

The implementation has been carried out within the MATLAB software library iFEM [7]. The stiffness matrices of the discrete system (40) are assembled exactly and the forcing terms are computed by a quadrature rule which is exact for polynomials up to degree 4. Additionally, the first term in (42) is computed by a quadrature formula which is exact for polynomials of degree 7. All the linear systems are solved exactly using MATLAB’s built-in direct solver.

In all examples, \(n=2\), \(\varOmega = (0,1)^2\), and the initial values of \(s_l\) and \(s_r\) are 0.3 and 0.9, respectively. In Algorithm 1, we used as a stopping criterion \(|j_\sigma (s_k)| < \textsf {TOL} = 10^{-14}\), where \(j_\sigma \) is defined as in (30). The truncation parameter for the cylinder \({\mathcal {C}}_{\mathcal {Y}}\) is \(\mathcal {Y}= 1+\frac{1}{3}(\#{\mathscr {T}}_\varOmega )\), which allows balancing the approximation and truncation errors for our state equation; see [9, Remark 5.5]. Moreover,

with \(\epsilon = 10^{-10}\).

Under the above setting, the eigenvalues and eigenvectors of \(-\varDelta \) are:

Consequently, by letting \({\mathsf {f}}= \lambda ^s_{2,2}\varphi _{2,2}\) for any \(s\in (0,1)\) we obtain \(\bar{{\mathsf {u}}}= \varphi _{2,2}\).

In what follows we will consider four examples. In the first one we set \({\bar{s}}=1/2\), \({\mathsf {f}}\) and \(\bar{{\mathsf {u}}}\) as above and we set \({\mathsf {u}}_d = \bar{{\mathsf {u}}}\). The second one differs from the first one in that we set \({\bar{s}} = (3-\sqrt{5})/2\). In our third example, the exact solution is not known. Finally, in our last example we explore the robustness of our algorithm with respect to perturbations in the data. We accomplish this by considering the same setting as in the first example but we add a random perturbation \(r \in (-e,e)\) to the right hand side \({\mathsf {f}}\). We then explore the behavior of the optimal parameter \({\bar{s}}\) as the size of the perturbation e varies.

5.1 Example 1

We recall the definition of the cost function \(J({\mathsf {u}},s)\) from (1) and set \(\varphi (s) = \frac{1}{s(1-s)}\). The latter is strictly convex over the interval (0, 1) and fulfills the conditions in (2). The optimal solution \(\bar{s}\) to (3)–(4) is given by \(\bar{s} = 1/2\).
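For this example the claim \(\bar{s} = 1/2\) can be verified in closed form, without any discretization in space. The sketch below rests on assumptions that are not restated in this section: the cost (1) is taken to be of tracking type, \(J({\mathsf {u}},s) = \frac{1}{2}\Vert {\mathsf {u}}- {\mathsf {u}}_d\Vert ^2_{L^2(\varOmega )} + \varphi (s)\), and the eigenpair is taken to be \(\lambda _{2,2} = 8\pi ^2\), \(\varphi _{2,2} = \sin (2\pi x_1)\sin (2\pi x_2)\), so that \(\Vert \varphi _{2,2}\Vert ^2_{L^2(\varOmega )} = 1/4\). All function names are hypothetical.

```python
import numpy as np

LAM = 8.0 * np.pi ** 2   # assumed eigenvalue associated with phi_{2,2}
S_BAR = 0.5              # exact order used to build f = LAM**S_BAR * phi_{2,2}
NORM2 = 0.25             # squared L^2 norm of phi_{2,2} on the unit square

def coeff(s):
    """Coefficient c(s) such that the state is u(s) = c(s) * phi_{2,2}."""
    return LAM ** (S_BAR - s)

def phi(s):
    return 1.0 / (s * (1.0 - s))

def dphi(s):
    return (2.0 * s - 1.0) / (s * (1.0 - s)) ** 2

def cost(s):
    """Reduced cost under the assumed tracking-type form, with u_d = phi_{2,2}."""
    return 0.5 * NORM2 * (coeff(s) - 1.0) ** 2 + phi(s)

def j_sigma(s, sigma=1e-3):
    """Optimality residual with the sensitivity replaced by a centered difference."""
    d_sigma_c = (coeff(s + sigma) - coeff(s - sigma)) / (2.0 * sigma)
    return dphi(s) + NORM2 * d_sigma_c * (coeff(s) - 1.0)

# Locate the minimizer of the reduced cost on a grid over [0.3, 0.9].
grid = np.linspace(0.3, 0.9, 601)
s_min = float(grid[np.argmin(cost(grid))])

# Sign of the residual at the initial bracket used in the experiments.
j_left, j_right = j_sigma(0.3), j_sigma(0.9)
```

Both the tracking term and \(\varphi \) are minimized at \(s = 1/2\) in this setting, so the residual vanishes there for every \(\sigma \), and it changes sign between 0.3 and 0.9.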

Table 1 illustrates the performance of our optimization solver. The first column indicates the degrees of freedom \(\# {\mathscr {T}}_{\mathcal {Y}}\), the second column shows the value of \(s_{\sigma ,{\mathscr {T}}}\) obtained by solving (43), and the third column shows the corresponding value of \(j_{\sigma ,{\mathscr {T}}}\) at \(s_{\sigma ,{\mathscr {T}}}\). The final column shows the total number of iterations N that the bisection algorithm takes to converge. We notice that the observed values of \(s_{\sigma ,{\mathscr {T}}}\) match \(\bar{s}\) almost perfectly. In addition, the pattern in N, as we refine the mesh, indicates a mesh-independent behavior.

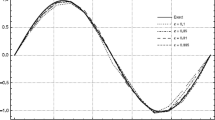

Figure 1 (left panel) shows the computational rate of convergence. We observe that

which is significantly better than the rate of \((\#{\mathscr {T}}_\mathcal {Y})^{-0.22}\) predicted by Corollary 2. This suggests that our theoretical rates are pessimistic and that, in practice, our algorithm performs much better.

Fig. 1 The left panel (dotted curve) shows the convergence rate for Example 1 and the right one for Example 2. The solid line is the reference line. We notice that the computational rates of convergence, in both examples, are much higher than the theoretically predicted rates in Corollary 2.

5.2 Example 2

We set \(\varphi (s) = s^{-1} e^{\frac{1}{(1-s)}}\) which is again strictly convex over the interval (0, 1) and fulfills the conditions in (2). The optimal solution \(\bar{s}\) to (3)–(4) is given by \(\bar{s} = (3-\sqrt{5})/2\).

Table 2 illustrates the performance of our optimization solver. As we noted in Sect. 5.1, the numerically computed solution \(s_{\sigma ,{\mathscr {T}}}\) matches almost perfectly with \(\bar{s}\) and the pattern of N, with mesh refinement, again indicates a mesh independent behavior.

Figure 1 (right panel) shows the computational rate of convergence. We again see that

Thus the observed rate is far superior to the theoretically predicted rate in Corollary 2.

5.3 Example 3

In our third example, we take \(\varphi (s) = s^{-1} e^{\frac{1}{(1-s)}}\), \({\mathsf {f}}= 10\), and \({\mathsf {u}}_d = \max \big \{0.5-\sqrt{|x_1-0.5|^2+|x_2-0.5|^2}, 0 \big \}\). We notice that \({\mathsf {f}}\) is large; thus, the requirements of Theorem 4 are not necessarily fulfilled. In addition, for \(\mu \le 1/2\), \({\mathsf {f}}\not \in {\mathbb {H}}^{1-\mu }(\varOmega )\); thus, the requirements of Corollary 2 are not fulfilled. Nevertheless, as we illustrate in Table 3, we can still solve the problem. We again notice a mesh-independent behavior in the number of iterations (N) taken by the bisection algorithm to converge.

5.4 Example 4

In our final example we consider a similar setup to Sect. 5.1. We modify the right hand side \({\mathsf {f}}= \lambda _{2,2}^{\bar{s}} \sin (2\pi x_1) \sin (2\pi x_2)\), with \(\bar{s}=1/2\), by adding a uniformly distributed random parameter \(r \in (-e,e)\). We fix the spatial mesh to \(\#{\mathscr {T}}_\mathcal {Y}= 85{,}529\).

At first we set \(e = 200\); as a result, r is more than 200 times the actual signal \({\mathsf {f}}\), see the first row in Table 4. Despite such a large noise, the recovery of \(\bar{s}\) is reasonable. Letting \(e \downarrow 0\), we can recover \(\bar{s}\) almost perfectly.

6 Conclusion

We have proposed a gradient based numerical algorithm to identify the fractional order of the spectral Dirichlet fractional Laplace operator. We have also studied the well-posedness of such an identification (inverse) problem and derived second order sufficient conditions that ensure local uniqueness, under the assumption that suitable norms of the data are sufficiently small. As a first step for a solution scheme, we discretize, on the basis of a finite difference method, the sensitivity of the solution to the state with respect to the parameter in the first-order optimality condition (20). This leads to a semi-discrete scheme. As a second step, we discretize the solution to the state equation (4) using the finite element method of [9]; this leads to a fully discrete scheme. Convergence analysis in both cases has been carried out and the proposed fully discrete algorithm has been tested on several numerical examples.

References

1. Adams, R.: Sobolev Spaces, Pure and Applied Mathematics, vol. 65. Academic Press [A Subsidiary of Harcourt Brace Jovanovich, Publishers], New York (1975)

2. Antil, H., Otárola, E.: A FEM for an optimal control problem of fractional powers of elliptic operators. SIAM J. Control Optim. 53(6), 3432–3456 (2015). https://doi.org/10.1137/140975061

3. Cabré, X., Tan, J.: Positive solutions of nonlinear problems involving the square root of the Laplacian. Adv. Math. 224(5), 2052–2093 (2010). https://doi.org/10.1016/j.aim.2010.01.025

4. Caffarelli, L., Silvestre, L.: An extension problem related to the fractional Laplacian. Commun. Partial Differ. Equ. 32(7–9), 1245–1260 (2007). https://doi.org/10.1080/03605300600987306

5. Caffarelli, L., Stinga, P.: Fractional elliptic equations, Caccioppoli estimates and regularity. Ann. Inst. H. Poincaré Anal. Non Linéaire 33(3), 767–807 (2016). https://doi.org/10.1016/j.anihpc.2015.01.004

6. Capella, A., Dávila, J., Dupaigne, L., Sire, Y.: Regularity of radial extremal solutions for some non-local semilinear equations. Commun. Partial Differ. Equ. 36(8), 1353–1384 (2011). https://doi.org/10.1080/03605302.2011.562954

7. Chen, L.: iFEM: an integrated finite element methods package in MATLAB. Technical Report, University of California at Irvine (2009)

8. Deckelnick, K., Hinze, M.: Convergence and error analysis of a numerical method for the identification of matrix parameters in elliptic PDEs. Inverse Probl. 28(11), 115015 (2012). https://doi.org/10.1088/0266-5611/28/11/115015

9. Nochetto, R., Otárola, E., Salgado, A.: A PDE approach to fractional diffusion in general domains: a priori error analysis. Found. Comput. Math. 15(3), 733–791 (2015). https://doi.org/10.1007/s10208-014-9208-x

10. Nochetto, R., Otárola, E., Salgado, A.: A PDE approach to space–time fractional parabolic problems. SIAM J. Numer. Anal. 54(2), 848–873 (2016). https://doi.org/10.1137/14096308X

11. Otárola, E.: A piecewise linear FEM for an optimal control problem of fractional operators: error analysis on curved domains. ESAIM Math. Model. Numer. Anal. 51(4), 1473–1500 (2017). https://doi.org/10.1051/m2an/2016065

12. Sprekels, J., Valdinoci, E.: A new type of identification problems: optimizing the fractional order in a nonlocal evolution equation. SIAM J. Control Optim. 55(1), 70–93 (2017). https://doi.org/10.1137/16M105575X

13. Stinga, P., Torrea, J.: Extension problem and Harnack’s inequality for some fractional operators. Commun. Partial Differ. Equ. 35(11), 2092–2122 (2010). https://doi.org/10.1080/03605301003735680

14. Tröltzsch, F.: Optimal Control of Partial Differential Equations, Graduate Studies in Mathematics, Theory, Methods and Applications, vol. 112. American Mathematical Society, Providence, RI (2010). https://doi.org/10.1090/gsm/112. (Translated from the 2005 German original by Jürgen Sprekels)

Additional information

Harbir Antil has been supported in part by NSF Grant DMS-1521590. Enrique Otárola has been supported in part by CONICYT through FONDECYT Project 3160201. Abner J. Salgado has been supported in part by NSF Grants DMS-1418784 and DMS-1720213.

Cite this article

Antil, H., Otárola, E. & Salgado, A.J. Optimization with Respect to Order in a Fractional Diffusion Model: Analysis, Approximation and Algorithmic Aspects. J Sci Comput 77, 204–224 (2018). https://doi.org/10.1007/s10915-018-0703-0

DOI: https://doi.org/10.1007/s10915-018-0703-0

Keywords

- Optimal control problems

- Identification (inverse) problems

- Fractional diffusion

- Bisection algorithm

- Finite elements

- Stability

- Fully-discrete methods

- Convergence