Abstract

A mixed-type Galerkin variational principle is proposed for a generalized nonlocal elastic model. The solvability and regularity of its solution are derived through the Lax–Milgram lemma, from which a solvability criterion is inferred for a Fredholm integral equation of the first kind. A mixed-type finite element procedure is then developed, and the existence and uniqueness of the discrete solution are proved. This compensates for the lack of a solvability proof for the collocation-finite difference scheme proposed in Du et al. (J Comput Phys 297:72–83, 2015). Numerical error bounds for the unknown and the intermediate variable are proved. By carefully exploiting the structure of the coefficient matrices of the numerical method, we develop a fast conjugate gradient algorithm, which reduces the computational cost to \(\mathcal {O}(N\log N)\) per iteration and the memory to \(\mathcal {O}(N)\). The use of a preconditioner significantly reduces the number of iterations. Numerical results show the utility of the method.

1 Introduction

Nonlocal theory has been used widely in physics and applied science, such as memory-dependent elastic solids, viscous fluids, and electromagnetic solids [12]. Nonlocal theory leads to fractional differential equations which model anomalous diffusion processes occurring in chaotic dynamics [27] and turbulent flow [5]. In solid mechanics nonlocal theory is used to derive a peridynamic model [22], which was used to better model elasticity problems involving fracture and failure of composites, crack instability, the fracture of poly-crystals and nanofiber networks [2, 15, 23, 24].

In recent studies a generalized elastic model was proposed to account for the stochastic motion of several physical systems such as membranes, (semi) flexible polymers and fluctuating interfaces among others [25]. Mathematically, the model is expressed as a composition of a fractional differential equation with a Riesz potential operator. Consequently, many mature numerical techniques, such as finite difference, finite volume, and collocation methods, do not seem to be conveniently applicable to the model. A fast composite collocation-finite difference scheme was developed in [11] to solve the model numerically; it fully exploits the structure of the dense stiffness matrix of the scheme and thereby significantly reduces the memory requirement and computational cost. Numerical results were presented to show the strong potential of the numerical scheme.

However, the development of the numerical scheme in [11] was heuristic, as no rigorous mathematical analysis was presented on the solvability, stability and convergence of the numerical scheme. The goals of this paper can be summarized as follows: (1) By fully analyzing its mathematical structure, we reformulate the generalized model into a mixed Galerkin weak formulation that consists of a Riesz potential operator and a fractional differential operator on a carefully chosen Sobolev product space. We then prove the wellposedness of the mixed formulation. As a consequence, the Fredholm integral operator of the first kind induced by the peridynamic model has a bounded inverse, which may provide a solvability criterion for the Fredholm integral equation of the first kind. (2) Based on the mixed Galerkin weak formulation we develop a mixed Galerkin method and prove its wellposedness, which compensates for the lack of a solvability proof for the collocation-finite difference scheme proposed in [11]. (3) We prove the convergence of the mixed Galerkin method. (4) We develop a fast algorithm by exploiting the Toeplitz structure of the stiffness matrix of the method, which reduces the computational cost and memory requirement from \(\mathcal {O}(N^3)\) and \(\mathcal {O}(N^2)\) for a direct solver to \(\mathcal {O}(N\log N)\) per iteration and \(\mathcal {O}(N)\), respectively. (5) We conduct numerical experiments to substantiate our theoretical results.

2 Model Problem and Auxiliary Lemmas

In this paper, we consider the following generalized nonlocal elasticity model [25]

Here \(d^+ > 0\) and \(d^-> 0\) are the left and right diffusivity coefficients respectively, \(_0 D_x^\beta u(x)\) and \(_x D_1^\beta u(x)\) are the left and right Riemann–Liouville fractional derivatives defined by

where \(_0I_x^{2-\beta }\) and \(_xI_1^{2-\beta }\) stand for the left and right fractional integral operators defined by

with \(\varGamma (x)\) being the Gamma function.
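The display formulas for the fractional integrals are not reproduced in this copy. As a rough illustration only, the sketch below assumes the standard Riemann–Liouville convention \(_0I_x^{\sigma }u(x)=\frac{1}{\varGamma (\sigma )}\int _0^x (x-s)^{\sigma -1}u(s)\,ds\) with \(\sigma =2-\beta \in (0,1)\), and checks a simple quadrature against the known closed form for a power function. The function names and the midpoint rule are illustrative choices, not part of the paper.

```python
import math

def left_frac_integral(u, x, sigma, n=20000):
    """Approximate (1/Gamma(sigma)) * int_0^x (x-s)^(sigma-1) u(s) ds.

    The substitution w = (x-s)^sigma removes the weak singularity at s = x,
    leaving (1/sigma) * int_0^{x^sigma} u(x - w^(1/sigma)) dw, which is
    integrated here with the composite midpoint rule (an assumed choice).
    """
    upper = x ** sigma
    h = upper / n
    total = 0.0
    for k in range(n):
        w = (k + 0.5) * h
        total += u(x - w ** (1.0 / sigma))
    return total * h / (sigma * math.gamma(sigma))

def left_frac_integral_power(p, x, sigma):
    """Closed form for u(s) = s^p: Gamma(p+1)/Gamma(p+1+sigma) * x^(p+sigma)."""
    return math.gamma(p + 1) / math.gamma(p + 1 + sigma) * x ** (p + sigma)

# Hypothetical parameters: beta = 1.5 gives sigma = 2 - beta = 0.5.
approx = left_frac_integral(lambda s: s ** 2, 0.7, 0.5)
exact = left_frac_integral_power(2, 0.7, 0.5)
```

The right fractional integral \(_xI_1^{\sigma }\) can be handled the same way after the reflection \(s\mapsto 1-s\).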

To reformulate (2.1) into a variational framework, in the subsequent development of the paper we shall use the well established relations between the fractional Sobolev spaces \(H^\mu (0,1)\) and the negative fractional derivative spaces \(J_L^{-\mu }(0,1), J_R^{-\mu }(0,1)\) and the fractional derivative spaces \(J_L^\mu (0,1), J_R^\mu (0,1)\). We put the definitions of these spaces in the Appendix.

Lemma 2.1

([7], Theorem 2.6) Let \(\mu >0\), \(\mu \ne n-\frac{1}{2}\), \(n \in \mathbb {N}\). Then, the left negative fractional derivative space \(J_L^{-\mu }(0,1)\), the right negative fractional derivative space \(J_R^{-\mu }(0,1)\) and the negative fractional Sobolev space \(H^{-\mu }(0,1)\) are equal with equivalent norms.

Lemma 2.2

([13], Theorem 2.12) Let \(\mu >0\), \(\mu \ne n-\frac{1}{2}\), \(n \in \mathbb {N}\). Then, the left fractional derivative space \(J^\mu _{L,0}(0,1)\), the right fractional derivative space \(J^\mu _{R,0}(0,1)\) and the fractional Sobolev space \(H^\mu _0(0,1)\) are equal with equivalent semi-norms and norms.

When \(0 \le \mu \le \frac{1}{2}\), the Sobolev space \(H^\mu (0,1)\) can be viewed as the completion of \(C_0^{\infty }(0,1)\), the set of all infinitely differentiable functions that vanish outside a compact subset of (0, 1), with respect to its norm [18].

Lemma 2.3

([18], Theorem 11.1) \(C_0^{\infty }(0,1)\) is dense in \(H^\mu (0,1)\) if and only if \(0\le \mu \le \frac{1}{2}\).

Remark 2.1

For \(0\le \mu < \frac{1}{2}\), the spaces \(J^\mu _{L,0}(0,1),\) \(J^\mu _{R,0}(0,1),\) \(H^\mu _0(0,1)\) and \(H^\mu (0,1)\) are equal with equivalent norms. For notational convenience, we do not differentiate these spaces in this case in subsequent sections, using \(\Vert \cdot \Vert _\mu \) and \(|\cdot |_\mu \) to denote its norm and semi-norm respectively. When \(\mu = 0,\) we understand \(H^0(0,1) = L^2(0,1)\) and simply use \(\Vert \cdot \Vert \) to denote its norm. Moreover, in negative fractional Sobolev space \(H^{-\mu }(0,1)\), we also use \(\Vert \cdot \Vert _{-\mu }\) to denote its norm.

We conclude this section by proving two mapping properties of the integral operators and establishing an equality concerning the relation of the dual pairs and the inner products for later use.

Lemma 2.4

Let \(0\le \mu <{1\over 2}\). Then the following mapping properties hold:

1. \(_0I_x^{2\mu }: H^{-\mu }(0,1)\rightarrow H^{\mu }(0,1)\) is a bounded linear operator.

2. \(_xI_1^{2\mu }: H^{-\mu }(0,1)\rightarrow H^{\mu }(0,1)\) is a bounded linear operator.

Proof

By [19], the operators \(_0I_x^{2\mu }\) and \(_xI_1^{2\mu }\) are linear, so it remains to show that they are continuous. For this purpose, we apply Remark 2.1, the semigroup property of the fractional integral operator (Lemma 9.4 in the Appendix) and Lemma 2.1 to deduce that

where C is a generic constant independent of v. Here we use another mapping property that \(_0I_x^{\mu }\) is bounded from \(L^2(0,1)\) to \(L^2(0,1)\) [13]. This is the first property of the lemma. A similar argument is applied to derive the second property. \(\square \)

Lemma 2.5

Let \(0\le \mu <{1\over 2}\) and \(v,w\in H^{-\mu }(0,1).\) Then, the following relation between the dual pair \(\langle \cdot , \cdot \rangle \) over \(H^{-\mu }(0,1)\times H^{\mu }(0,1)\) and the \(L^2\) inner product \((\cdot ,\cdot )\) holds,

Proof

The density of \(C_0^\infty (0,1)\) in \(H^{-\mu }(0,1)\) under its norm \(\Vert {_0I_x^\mu }\cdot \Vert \) implies that, for \(w\in H^{-\mu }(0,1),\) there exists a sequence \(\{w_n\}_{n=1}^\infty \) in \(C_0^\infty (0,1)\) such that

It follows from the adjoint property of the fractional integral operators (Lemma 9.5 in the Appendix), Lemma 2.4, and the definitions and equivalence of \(J^{-\mu }(0,1)\) and \(H^{-\mu }(0,1)\) that

Noting that

we have

\(\square \)

In subsequent sections, we omit the space interval (0, 1) in any functional spaces whenever no confusion occurs.

3 A Mixed Galerkin Variational Formulation and Its Wellposedness

We start by rewriting the governing Eq. (2.1) as a mixed formulation by introducing an intermediate variable v

We multiply the first equation by any \(w \in H^{-\frac{1-\alpha }{2}}\), integrate the resulting equation over (0, 1) and use Lemma 2.5 to obtain the following

We then multiply the second equation in (3.1) by any \(\varphi \) in \(H_0^{\frac{\beta }{2}}\), integrate the resulting equation over the interval (0, 1) and apply the adjoint property of the fractional differential operator stated in Lemma 9.7 in the Appendix to obtain

To summarize, we formulate the following mixed Galerkin weak formulation for problem (2.1): For any given \(f \in H^{\frac{1-\alpha }{2}}\), find \((v,u) \in H^{-\frac{1-\alpha }{2}} \times H_0^{\frac{\beta }{2}}\) such that

Theorem 3.1

The bilinear form \(a(\cdot ,\cdot )\) is coercive and continuous on \(H^{-\frac{1-\alpha }{2}}\times H^{-\frac{1-\alpha }{2}}\). That is, there exist positive constants \(0< C_1 \le C_2 < +\infty \) such that

Similarly, the bilinear form \(b(\cdot ,\cdot )\) is coercive and continuous on \(H_0^{\frac{\beta }{2}} \times H_0^{\frac{\beta }{2}}\). In other words, there exist positive constants \(0< C_3 \le C_4 < +\infty \) such that

Consequently, for any given \(f \in H^{\frac{1-\alpha }{2}}\), the mixed formulation (3.4) has a unique solution \((v,u) \in H^{-\frac{1-\alpha }{2}} \times H_0^{\frac{\beta }{2}}\) with the stability estimates

Proof

Using the definition of \(a(\cdot ,\cdot )\) and Lemma 9.2 in Appendix, we have

We thus prove the coercivity of \(a(\cdot ,\cdot )\). We now use the equivalence between \(J_L^{-\frac{1-\alpha }{2}}\), \(J_R^{-\frac{1-\alpha }{2}}\) and \(H^{-\frac{1-\alpha }{2}}\) in Lemma 2.1 to obtain that for any \(w \in H^{-\frac{1-\alpha }{2}},\)

We thus prove the continuity of \(a(\cdot ,\cdot )\). For any given \(f \in H^{\frac{1-\alpha }{2}}\), the Lax–Milgram lemma ensures that there exists a unique solution \(v \in H^{-\frac{1-\alpha }{2}}\) to the weak formulation (3.2) which satisfies the last stability estimate in (3.7).

We next turn to the bilinear form \(b(\cdot ,\cdot )\). Noting that \(\cos \frac{\beta \pi }{2}<0\) for \(1<\beta <2,\) we use Lemma 9.3 in the Appendix to conclude that for any \(u\in H_{0}^{\beta \over 2}\),

where at the last inequality we have used the fractional Poincaré inequality. We thus prove the coercivity of \(b(\cdot ,\cdot )\). To prove the continuity of \(b(\cdot ,\cdot )\) we note that

We note that \(H^{-\frac{1-\alpha }{2}}(0,1)\hookrightarrow H^{-\frac{\beta }{2}}(0,1)\) as \(H_0^{\frac{\beta }{2}}(0,1) \hookrightarrow H_0^{\frac{1-\alpha }{2}}(0,1)\) for \( (1-\alpha )/2< 1/2< \beta /2 < 1\) [1]. Hence, we have \(v \in H^{-\frac{\beta }{2}}\). The Lax–Milgram lemma then ensures that the weak formulation (3.3) has a unique solution \(u \in H_0^{\frac{\beta }{2}}\) which satisfies the first inequality in (3.7). \(\square \)

We end this section with some comments. Define an operator \(\mathcal {L}: H^{-\frac{1-\alpha }{2}}(0,1)\rightarrow H^{\frac{1-\alpha }{2}}(0,1)\) as

It is easy to see that \(\mathcal {L}\) is a Fredholm integral operator of the first kind and the peridynamic part in (3.1) can be viewed as

Corollary 1

The Fredholm integral operator of the first kind \(\mathcal {L}\) defined as (3.8) is invertible from \(H^{{1-\alpha }\over 2}(0,1)\) to \(H^{-{{1-\alpha }\over 2}}(0,1).\) Moreover, the inverse operator \(\mathcal {L}^{-1}\) of \(\mathcal {L}\) is also bounded.

Proof

From the first equality of (3.2), the operator can also be expressed equivalently by the bilinear form \(a(\cdot ,\cdot )\)

Then, the coercivity of \(a(\cdot ,\cdot )\) in \(H^{-\frac{1-\alpha }{2}}(0,1)\) suggests that

which implies that the operator \(\mathcal {L}\) is an invertible linear operator with the bound [8, 21]

The proof is completed. \(\square \)

As a consequence, the Fredholm integral operator of the first kind induced by the peridynamic part in (3.1) is invertible from \(H^{\frac{1-\alpha }{2}}(0,1)\) to \(H^{-\frac{1-\alpha }{2}}(0,1), \) which may provide a solvability criterion for the Fredholm integral equation of the first kind with a weakly singular kernel.

4 A Mixed Galerkin Finite Element Method Based on the Variational Formulation

Let N be a positive integer. We define a uniform partition on [0, 1] by \(x_i := ih\) for \(i=0,1,\ldots ,N\) with \(h = 1/N\) and the intervals \(I_i=(x_{i-1},x_{i}) \) for \( i=1,2,\cdots ,N.\)

Let \(P_k\) denote the set of polynomials of degree less than or equal to k. We define two finite element spaces

Since \(M_h\) and \(X_h\) do not need to satisfy any matching constraints, such as the LBB condition required by the Raviart-Thomas mixed finite element space [20], we can use a different partition for \(M_h\): \(I_{\frac{1}{2}}=(0, \frac{1}{2}h),\) \(I_{i+\frac{1}{2}}=((i-\frac{1}{2})h, (i+\frac{1}{2})h)\) for \(i=1,\ldots , N-1\) and \(I_{N+\frac{1}{2}}=((N-\frac{1}{2})h, 1)\), to keep the flexibility of the mixed Galerkin procedure. In this way, the finite element spaces are as follows,

Then the mixed Galerkin method is defined as: find \(v_h \in M_h\) and \(u_h \in X_h\) such that

As the finite element spaces \(M_h\times X_h\subset H^{-\frac{1-\alpha }{2}}\times H_0^{\frac{\beta }{2}}\), Theorem 3.1 ensures the wellposedness of the numerical scheme (4.1) and (4.2).

It is worth pointing out that the two choices of \(M_h\) have no essential differences, except that the latter has \(k+1\) more degrees of freedom than the former. Therefore, we shall use the finite element spaces defined on the latter partition with \(k=0, l=1\) to discuss the numerical behaviour of (4.1) and (4.2) in Sects. 6 and 7. In this case,

Consequently the numerical solution \(v_h\) and \(u_h\) can be expressed as

If we denote

we can rewrite (4.1), (4.2) into the following matrix form,

where \(A=(a_{i,j})_{(N+1)\times (N+1)}\), \(B=(b_{i,j})_{(N-1)\times (N-1)}\), \(\varvec{F}=(F_i)_{(N+1)}\) and \(\varvec{V}=(V_i)_{(N-1)}\) are given by

Remark 4.1

In [11], the authors decomposed the generalized nonlocal elastic model (2.1) into (3.1) and discretized the first equation by the collocation method with N collocation points and the second equation by the finite difference method. The resulting coefficient matrices are of size \(N\times (N+2)\) and \((N+2)\times N\) respectively. Hence, the solvability for \(v_h\) and \(u_h\) cannot be proved, not even for \(u_h\) alone. Theorem 3.1 here, concerning the solvability of the mixed-type variational principle, provides a better understanding of the structure of the exact solution, and the wellposedness of the discrete procedure compensates for the lack of a solvability proof in [11]. In this sense, the mixed Galerkin procedure (4.1) and (4.2) is a meaningful improvement of [11].

5 Convergence Analysis

We shall conduct error estimates of the mixed Galerkin method under the norms \(\Vert \cdot \Vert _{-\frac{1-\alpha }{2}}\) and \(\Vert \cdot \Vert _{\frac{\beta }{2}}\). Let \(\varPi _h: C[0,1] \rightarrow X_h\) be the interpolation operator and \(P_h: L^2(0,1) \rightarrow M_h\) be the orthogonal \(L^2\) projection operator. The following approximation properties are well known [4, 7, 8].

Lemma 5.1

[4, 8] Assume that \(u \in H^s(0,1)\) and \(v \in H^m(0,1)\). Then, there exists a positive constant C such that

Lemma 5.2

[4, 7] Assume that \(v \in H^m(0,1)\) for \(m\ge 0\) and \(0\le r \le m\). Then, there exists a positive constant C such that

We are now in the position to prove the error estimates for v and u.

Theorem 5.1

Let \((v,u) \in H^{-\frac{1-\alpha }{2}} \times H_0^{\frac{\beta }{2}}\) be the solution to the weak formulation (3.4) and \((v_h,u_h) \in M_h \times X_h\) be the solution to the corresponding numerical scheme (4.1) and (4.2). Furthermore, we assume that \(u \in H^s_0\) with \(s > \beta \). Then, there exists a positive constant C such that

Proof

We first show, using the Fourier transform \(\mathscr {F}(\cdot )\) (see Appendix) and its properties [19], that the intermediate variable v defined by the second equation of (3.1) is in \(H^{s-\beta }(0,1) \) with \(|v|_{s-\beta ,(0,1)}\le C|u|_{s,(0,1)}\) whenever the unknown function \(u\in H_0^s(0,1).\)

Let \(\tilde{u}\) be the extension of u by zero outside (0, 1), then \(u\rightarrow \tilde{u}\) is a continuous mapping from \(H_0^s(0,1)\) to \( H^s(\mathbb {R})\) [18]. That is, there exists a constant C independent of u such that \(|\tilde{u}|_{s,\mathbb {R}}\le C|u|_{s,(0,1)}.\)

Define \(\tilde{v}(x):=-\{d^{+}{_{-\infty }D_x^\beta }\tilde{u}(x)+d^{-}{_xD_{+\infty }^\beta }\tilde{u}(x)\}.\) Then we have, for \(x \in (0,1),\)

We apply the definition of \(|\cdot |_{s-\beta ,\mathbb {R}}\) and the properties of the Fourier transform \(\mathscr {F}(\cdot )\) to derive

that is, \(|v|_{s-\beta , (0,1)}\le C |u|_{s,(0,1)}.\)

We then prove (5.3). Since \(M_h \subset H^{-\frac{1-\alpha }{2}}\), we take \(w=w_h \in M_h\) in (3.2) and subtract (4.1) from (3.2) to derive the following error equation,

We use the coercivity and continuity of \(a(\cdot ,\cdot )\) to derive that for \(w_h \in M_h\)

This yields

We combine this with the estimate (5.2) to prove the first estimate in (5.3).
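The displayed inequalities of this step are not reproduced in this copy. For orientation, a sketch of the standard Céa-type argument is given below. It assumes that \(C_1\) and \(C_2\) are the coercivity and continuity constants of \(a(\cdot ,\cdot )\) from Theorem 3.1 and that the error equation states \(a(v-v_h,w_h)=0\) for all \(w_h\in M_h\); the choice \(w_h=P_hv\) in the last step is likewise an assumption about how the estimate (5.2) is applied.

```latex
\[
C_1\Vert v-v_h\Vert_{-\frac{1-\alpha}{2}}^2
\le a(v-v_h,\,v-v_h)
= a(v-v_h,\,v-w_h)
\le C_2\Vert v-v_h\Vert_{-\frac{1-\alpha}{2}}\,
        \Vert v-w_h\Vert_{-\frac{1-\alpha}{2}},
\qquad \forall\, w_h\in M_h,
\]
\[
\Vert v-v_h\Vert_{-\frac{1-\alpha}{2}}
\le \frac{C_2}{C_1}\inf_{w_h\in M_h}\Vert v-w_h\Vert_{-\frac{1-\alpha}{2}}
\le \frac{C_2}{C_1}\Vert v-P_hv\Vert_{-\frac{1-\alpha}{2}} .
\]
```

The middle equality uses only the error equation with the test function \(w_h-v_h\in M_h\), so no symmetry of \(a(\cdot ,\cdot )\) is needed.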

We now turn to the second estimate. As \(X_h \subset H^{\frac{\beta }{2}}_0\), we take \(\varphi =\varphi _h\in X_h\) in (3.3) and subtract (4.2) from (3.3) to derive the error equation

We use the coercivity and the continuity of \(b(\cdot ,\cdot )\) and Young’s inequality to deduce

We absorb the \(\Vert u-u_h\Vert ^2_{\frac{\beta }{2}}-\)term on the right hand side by its analogue on the left, denote \(\frac{2C}{C_3}\) still by a generic constant C, use Lemma 5.1 and the estimate for \(v-v_h\) to obtain

This completes the proof. \(\square \)

Remark 5.1

As the boundary condition states that the solution \(u=0\) for \(x\notin (0,1)\), that is, for \(x\in \mathbb {R}\backslash (0,1),\) the solution u is forced to lie in \( H^s_0(\varOmega )\) if we require u to possess \(H^s(\varOmega )\) regularity. Otherwise the solution u may develop a strong singularity on the boundary, which would destroy the regularity assumption \(u\in H^s(\varOmega ).\) A similar observation was made and proved in [16]. This reflects a major difference between fractional and second order differential equations, due to the non-locality of the fractional operators. Therefore, the regularity assumption \(u\in H^s_0(\varOmega )\) on the solution of (2.1) is natural and reasonable.

Remark 5.2

In the derivation of the last inequality, a sharp estimate for the first term \(\Vert u-\varPi _h u\Vert _{\frac{\beta }{2}}\) can be easily obtained by directly using Lemma 5.1. However, the estimate for the second term may not be sharp since we have to use the bound of \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}\) instead of \(\Vert v-v_h\Vert _{-\frac{\beta }{2}}\) by the embedding inequality

This may cause a loss of order \(\frac{\alpha +\beta -1}{2}\) in the convergence rate.

6 Fast Algorithm and Its Efficient Implementation

When solving the linear system \(M\varvec{x}=\varvec{y}\), where M is an \(N \times N\) dense coefficient matrix, one generally uses the Gauss elimination method to obtain the solution \(\varvec{x}\), which requires \(\mathcal {O}(N^3)\) computations and \(\mathcal {O}(N^2)\) storage. To reduce the computational cost, practical iterative algorithms have been developed. For example, if M is symmetric positive definite, the conjugate gradient (CG) method is an efficient iterative algorithm for this problem [3]. Furthermore, if the coefficient matrix M is nonsymmetric, the CG method can be applied to its equivalent linear system

\[M^{T}M\varvec{x}=M^{T}\varvec{y},\]

which is called the conjugate gradient on the normal equation (CGNR) method [3]. This still needs \(\mathcal {O}(N^2)\) storage, but the major computational cost per iteration is the matrix-vector multiplication \(M\varvec{p}\), which is of order \(\mathcal {O}(N^2)\).
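A minimal sketch of the CGNR iteration may make the cost structure concrete. It assumes the normal equations \(M^{T}M\varvec{x}=M^{T}\varvec{y}\) and accesses M only through two user-supplied products, `matvec` for \(M\varvec{p}\) and `rmatvec` for \(M^{T}\varvec{r}\). These names, the zero initial guess and the stopping rule are illustrative assumptions, not the implementation used in the paper.

```python
import numpy as np

def cgnr(matvec, rmatvec, y, tol=1e-10, max_iter=500):
    """Solve M x = y by CG applied to M^T M x = M^T y (zero initial guess).

    matvec(p) returns M p and rmatvec(r) returns M^T r, so M is never formed
    here; these two products dominate the cost of each iteration.
    """
    y = np.asarray(y, dtype=float)
    x = np.zeros_like(y)
    r = y.copy()                 # residual of the original system
    z = rmatvec(r)               # residual of the normal equations
    p = z.copy()
    zz = float(z @ z)
    stop = (tol ** 2) * zz       # relative test on the normal-equation residual
    iters = 0
    while iters < max_iter and zz > stop:
        w = matvec(p)
        alpha = zz / float(w @ w)
        x += alpha * p
        r -= alpha * w
        z = rmatvec(r)
        zz_new = float(z @ z)
        p = z + (zz_new / zz) * p
        zz = zz_new
        iters += 1
    return x, iters

# Hypothetical small nonsymmetric test system.
M = np.array([[4.0, 1.0, 0.0], [2.0, 5.0, 1.0], [0.0, 1.0, 3.0]])
x_true = np.array([1.0, 2.0, 3.0])
x_num, n_iter = cgnr(M.dot, M.T.dot, M @ x_true)
```

Because the matrix enters only through `matvec` and `rmatvec`, any faster product can be substituted without changing the iteration.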

When \(M=C\) is an \(N\times N\) circulant matrix, each row is the preceding row rotated one element to the right. As a result, we only need to store the first row vector \(\varvec{c}\) of C, which reduces the storage to \(\mathcal {O}(N)\). Moreover, a circulant matrix can be decomposed as follows [9, 14],

where \(F_N\) is the \(N \times N\) discrete Fourier transform matrix whose \((j,l)\)-entry \(F_N(j,l)\) is given by

with \(i^2=-1\). Multiplication of a vector by the Fourier transform matrix can be carried out via the fast Fourier transform (FFT) at a cost of \(\mathcal {O}(N\log N)\), and so can the circulant matrix-vector multiplication.
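The circulant product can be sketched in a few lines. The version below uses NumPy's unnormalized FFT convention and works with the first column of C, which is an assumption about normalization and indexing, since the decomposition formula is not reproduced in this copy. A helper converts a stored first row into the first column.

```python
import numpy as np

def first_column_from_first_row(row):
    """Convert the first row of a circulant matrix into its first column."""
    row = np.asarray(row, dtype=float)
    return np.roll(row[::-1], 1)

def circulant_matvec(c, x):
    """Return C x, where C[i, j] = c[(i - j) mod N], using three FFTs."""
    return np.real(np.fft.ifft(np.fft.fft(c) * np.fft.fft(x)))

# Hypothetical data: each row of the dense matrix is the previous one rotated right.
row = np.array([1.0, 2.0, 3.0, 4.0])
C_dense = np.array([np.roll(row, i) for i in range(len(row))])
x = np.array([1.0, 0.0, -1.0, 2.0])
y_fast = circulant_matvec(first_column_from_first_row(row), x)
```

Only the length-N vector `c` is stored, and the work is dominated by the FFTs, in line with the \(\mathcal {O}(N)\) storage and \(\mathcal {O}(N\log N)\) cost stated above.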

When M is an \(N \times N\) Toeplitz matrix, that is, a matrix that is constant along each descending diagonal from left to right, M can be embedded into a \(2N \times 2N\) circulant matrix. Therefore the storage of a Toeplitz matrix and the computational cost of a Toeplitz matrix-vector multiplication can also be reduced to \(\mathcal {O}(N)\) and \(\mathcal {O}(N\log N)\) respectively [26]. Combining this technique with the CG and CGNR methods yields what we call the fast CG and fast CGNR methods.
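The embedding can be illustrated as follows. The Toeplitz matrix is described by its first column `col` and first row `row` (with `col[0] == row[0]`), and the padding entry placed between the two halves of the circulant's first column is set to zero. The argument names and that padding choice are assumptions of this sketch.

```python
import numpy as np

def toeplitz_matvec(col, row, x):
    """Return T x for the Toeplitz matrix with T[i, j] = col[i - j] if i >= j
    and row[j - i] otherwise, via a 2N x 2N circulant embedding and FFTs."""
    col = np.asarray(col, dtype=float)
    row = np.asarray(row, dtype=float)
    x = np.asarray(x, dtype=float)
    n = len(x)
    # First column of the circulant embedding: [col, 0, row[n-1], ..., row[1]].
    c = np.concatenate([col, [0.0], row[:0:-1]])
    x_padded = np.concatenate([x, np.zeros(n)])
    y = np.fft.ifft(np.fft.fft(c) * np.fft.fft(x_padded))
    return np.real(y[:n])      # the first n entries carry T x

# Hypothetical nonsymmetric Toeplitz data for a dense cross-check.
col = np.array([4.0, 1.0, 0.5, 0.2])
row = np.array([4.0, -1.0, 0.3, 0.1])
n = len(col)
T_dense = np.array([[col[i - j] if i >= j else row[j - i] for j in range(n)]
                    for i in range(n)])
x = np.array([1.0, -2.0, 0.5, 3.0])
y_fast = toeplitz_matvec(col, row, x)
```

For a product with the transpose, as needed in CGNR, the roles of `col` and `row` are simply swapped.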

We find that the coefficient matrices A and B generated by the discretization (4.1) and (4.2) possess a Toeplitz-like structure when the piecewise constant finite element space \(M^0_h\) and the piecewise linear finite element space \(X^1_h\) are used. Hence, we shall carefully exploit this structure and design a fast algorithm based on the CG and CGNR methods.

Theorem 6.1

Assume that the base functions of \(M^0_h\times X^1_h\) are defined as in Sect. 4. The symmetric coefficient matrix A generated by the finite element procedure (4.1) can be expressed in the form

Here \(\varvec{\omega }=(\omega _1,\omega _2,\ldots ,\omega _{N-2},\omega _{N-1})^{T}\) and \(\varvec{\tilde{\omega }}=(\omega _{N-1},\omega _{N-2},\ldots ,\omega _2,\omega _1)^{T}\) are vectors of length \(N-1\), \(\omega _0\) and \(\omega _N\) are real numbers, and \(\tilde{A}\) is a symmetric \((N-1)\times (N-1)\) Toeplitz matrix.

Proof

Noting that A is a symmetric matrix from the symmetry of the bilinear form \(a(\cdot ,\cdot ),\) we only calculate those entries \(a_{i,j},\) \(i\ge j,j=0,1,\ldots ,N.\) Since \(\phi _0\) and \(\phi _N,\) the base functions at the end semi-interval, are of different forms from the others, we first focus on the calculation of the entries \(a_{i,j},\) \(i\ge j, j=1,2,\ldots ,N-1.\)

Using (4.5) and the definition of the base function \(\phi _i\), we have, for \(i=j,\)

with B(p, q) being the Beta function. When \(i-j=m, m=1,2,\ldots ,N-2,\) similar calculation shows that

This indicates that the value of \(a_{i,j}=a(\phi _{j+m},\phi _j)\) for \(i-j=m\) depends only on the index m, which ensures that the entries on each descending diagonal are the same.

We then calculate the remaining entries analogously,

from which we easily find that \(a(\phi _0,\phi _{i})=a(\phi _{N-i},\phi _N),\) that is, \(a_{0,i}=a_{N-i,N}\) for \(i=1,2,\ldots ,N-1.\)

Finally we let

and put them into the matrix A according to their \(i-j\) positions to express A as below,

This shows that \(\tilde{A},\) the centered part of A, is a Toeplitz matrix. We then rewrite A into its block form based on the definitions of \(\varvec{\omega }, \varvec{\tilde{\omega }}\) and \(\tilde{A}\) to complete the proof. \(\square \)

Theorem 6.2

Assume that the base functions of \(M^0_h\times X^1_h\) are defined as in Sect. 4. The coefficient matrix B generated by the finite element procedure (4.2) is a \((N-1)\times (N-1)\) Toeplitz matrix.

Proof

Analogous to the derivation of the matrix A we get

whose entries are given by

As a result, B is a Toeplitz matrix. \(\square \)

For (4.2), Theorem 6.2 indicates that the matrix B is an \((N-1)\times (N-1)\) nonsymmetric Toeplitz matrix. The fast algorithm can be formulated directly on the basis of the CGNR method to solve the linear system, with the storage reduced to \(\mathcal {O}(N)\) and the computational cost reduced to \(\mathcal {O}(N\log N)\) per iteration [26].

For (4.1), Theorem 6.1 shows that the matrix A is an \((N+1)\times (N+1)\) symmetric Toeplitz-like matrix, so some extra work is necessary. It is not difficult to see that the matrix A can be regarded as the sum of a Toeplitz matrix and a sparse matrix, that is, \(A=A_1+A_2\), with

and

On the one hand, \(A_1\) is an \((N+1) \times (N+1)\) Toeplitz matrix requiring \(\mathcal {O}(N)\) storage and \(\mathcal {O}(N\log N)\) operations for a matrix-vector multiplication. On the other hand, \(A_2\) is an \((N+1) \times (N+1)\) sparse matrix requiring \(\mathcal {O}(N)\) memory and only \(\mathcal {O}(N)\) operations for a matrix-vector multiplication. To conclude, when solving (4.1) by the CG method, the storage and the computational cost of the matrix-vector multiplication for the Toeplitz-like matrix can still be reduced to \(\mathcal {O}(N)\) and \(\mathcal {O}(N\log N)\) respectively. This leads to the following conclusion:
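The displayed forms of \(A_1\) and \(A_2\) are not reproduced in this copy, so the sketch below works directly with the block structure described in Theorem 6.1 rather than with that particular splitting. It assumes the layout in which the first row of A is \((\omega _0,\varvec{\omega }^{T},\omega _N)\), the last row is \((\omega _N,\varvec{\tilde{\omega }}^{T},\omega _0)\) and the interior block is \(\tilde{A}\); the placement of \(\omega _0\) and \(\omega _N\) in the corners is an assumption. The interior product is passed in as a callable `inner_matvec`, a hypothetical name, so that a fast Toeplitz product can be plugged in.

```python
import numpy as np

def bordered_toeplitz_matvec(inner_matvec, omega, omega0, omegaN, x):
    """Return A x for the assumed block layout
        [[omega0, omega^T,     omegaN   ],
         [omega,  A_tilde,     omega_rev],
         [omegaN, omega_rev^T, omega0   ]],
    where inner_matvec(z) returns A_tilde z. The border costs O(N) operations."""
    omega = np.asarray(omega, dtype=float)
    x = np.asarray(x, dtype=float)
    omega_rev = omega[::-1]
    x_first, x_mid, x_last = x[0], x[1:-1], x[-1]
    y = np.empty(len(x))
    y[0] = omega0 * x_first + omega @ x_mid + omegaN * x_last
    y[1:-1] = omega * x_first + inner_matvec(x_mid) + omega_rev * x_last
    y[-1] = omegaN * x_first + omega_rev @ x_mid + omega0 * x_last
    return y

# Hypothetical data; a dense interior product is used only for checking.
t = np.array([2.0, 1.0, 0.5, 0.25])
A_tilde = np.array([[t[abs(i - j)] for j in range(4)] for i in range(4)])
omega = np.array([0.9, 0.7, 0.5, 0.3])
omega0, omegaN = 1.5, 0.1
A_dense = np.zeros((6, 6))
A_dense[1:-1, 1:-1] = A_tilde
A_dense[0, 1:-1] = A_dense[1:-1, 0] = omega
A_dense[-1, 1:-1] = A_dense[1:-1, -1] = omega[::-1]
A_dense[0, 0] = A_dense[-1, -1] = omega0
A_dense[0, -1] = A_dense[-1, 0] = omegaN
x = np.array([1.0, -1.0, 2.0, 0.5, 3.0, -2.0])
y_fast = bordered_toeplitz_matvec(A_tilde.dot, omega, omega0, omegaN, x)
```

With an FFT-based product for \(\tilde{A}\), the whole multiplication costs \(\mathcal {O}(N\log N)\), the same order as the \(A_1+A_2\) splitting.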

Compared with the Gauss elimination method, the computational cost for solving (4.1) and (4.2) can be reduced from \(\mathcal {O}(N^3)\) to \(\mathcal {O}(N\log N)\) per iteration, and the storage can be reduced from \(\mathcal {O}(N^2)\) to \(\mathcal {O}(N)\).

According to Theorem 6.1, A is symmetric (and positive definite by the coercivity of \(a(\cdot ,\cdot )\)), and the number of iterations of the iterative algorithm is small. Nevertheless, in some cases the Toeplitz system with matrix B may be ill-conditioned and the iterative method may converge very slowly. To overcome this shortcoming, preconditioners play a crucial role [17]. With a suitable circulant matrix P, the following linear system is equivalent to \(B\varvec{u}=\varvec{f}\):

A proper matrix P can further reduce the number of iterations in the fast CG or fast CGNR method and hence the computing time for \(\varvec{u}\). Here we use T. Chan’s circulant preconditioner [6] in (6.4). Since both the matrix-vector multiplication \(B\varvec{u}\) and the application of the inverse of the circulant preconditioner P require \(\mathcal {O}(N\log N)\) operations, the total computational cost of the fast PCG or fast PCGNR method is also \(\mathcal {O}(N\log N)\) per iteration. The following numerical examples clearly show the decrease in the number of iterations and the computing time.
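As a hedged illustration of the preconditioning step, the sketch below builds the first column of T. Chan's circulant approximation of a Toeplitz matrix from the classical weighted-diagonal formula \(c_k=\frac{(n-k)t_k+k\,t_{k-n}}{n}\) and applies its inverse with FFTs. The argument names and the first-column/first-row description of the Toeplitz matrix are assumptions of this sketch; the paper's own implementation is not shown in this copy.

```python
import numpy as np

def chan_first_column(col, row):
    """First column of T. Chan's circulant approximation of the Toeplitz matrix
    with T[i, j] = col[i - j] for i >= j and row[j - i] for j > i."""
    col = np.asarray(col, dtype=float)
    row = np.asarray(row, dtype=float)
    n = len(col)
    k = np.arange(n)
    # t_{k-n} is the superdiagonal entry row[n - k]; the k = 0 slot has weight 0.
    wrapped = np.concatenate([[0.0], row[:0:-1]])
    return ((n - k) * col + k * wrapped) / n

def apply_circulant_inverse(c, r):
    """Solve P z = r for the circulant P with first column c, using FFTs."""
    return np.real(np.fft.ifft(np.fft.fft(r) / np.fft.fft(c)))

# Hypothetical diagonally dominant Toeplitz data.
col = np.array([4.0, 1.0, 0.5, 0.2])
row = np.array([4.0, -1.0, 0.3, 0.1])
n = len(col)
c = chan_first_column(col, row)
T_dense = np.array([[col[i - j] if i >= j else row[j - i] for j in range(n)]
                    for i in range(n)])
P_dense = np.array([[c[(i - j) % n] for j in range(n)] for i in range(n)])
r = np.array([1.0, 2.0, -1.0, 0.5])
z = apply_circulant_inverse(c, r)
```

Each entry of `c` is the average of the Toeplitz entries along one wrapped diagonal, which is what makes this circulant the closest one to T in the Frobenius norm.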

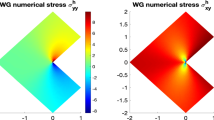

7 Numerical Experiments

In this section, we present two numerical examples, one with a smooth solution and the other with a non-smooth solution, to illustrate the performance of our mixed-type finite element procedure and fast algorithm.

Example 1

Let \(u=x^2(1-x)^2, \alpha =0.5, \beta =1.5, d^+=d^-=\varGamma (1.5)\). Then the right term can be calculated as

Here, \(u \in H_0^{2+\gamma }(0,1)\) and \(\gamma \in [0, \frac{1}{2})\) can be selected as close to \(\frac{1}{2}\) as possible [7, 16].

Since A is a symmetric matrix according to Theorem 6.1 and the parameters in this example lead to a symmetric positive definite matrix B, fast CG method is enough to deal with the linear systems. Matlab software is used in computing and the numerical results are presented in Tables 1, 2, 3, and 4.

Tables 1 and 2 show the errors as well as the convergence orders for \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}\) and \(\Vert u-u_h\Vert _{\frac{\beta }{2}}\) respectively. In Table 3, we compare the CPU time in solving \(v_h\) by means of Gauss elimination method and the fast CG method. In Table 4, fast PCG method is applied in solving \(u_h\) to verify the decrease of iterative number.
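For readers reproducing such tables, observed convergence orders are commonly computed from errors on successively halved meshes as \(\log _2(e_h/e_{h/2})\). The sketch below assumes that N doubles from one row to the next; the error values in the example are synthetic and are not taken from the paper's tables.

```python
import math

def observed_orders(errors):
    """Given errors on meshes h, h/2, h/4, ..., return log2(e_i / e_{i+1})."""
    return [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]

# Synthetic errors behaving like 0.3 * h^1.25 with h = 2^-5, ..., 2^-8.
synthetic_errors = [0.3 * (2.0 ** -k) ** 1.25 for k in range(5, 9)]
orders = observed_orders(synthetic_errors)
```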

The numerical results in Table 1 indicate that the convergence orders of \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}\) are slightly lower than \(0.75+\gamma \approx 1.25\), the convergence order predicted by Theorem 5.1. The reason may be that the computation of the error \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}\) relies on quadrature formulas more heavily than the other calculations, so the errors are probably affected. Theoretically, the convergence order for \(\Vert v-v_h\Vert \) should be \(0.5+\gamma \approx 1.\) Table 1 shows that the numerical convergence orders are greater than 1, slightly higher than the theoretical convergence order.

Table 2 reports that the convergence orders of \(\Vert u-u_h\Vert _{\frac{\beta }{2}}\) are about 1.25, which is consistent with the convergence order \(\Vert u-\varPi _hu\Vert _{\frac{\beta }{2}}\) and the theoretically predicted convergence order by Theorem 5.1. The convergence orders for u in \(L^2\) norm are distributed between 1 and 2 since its errors depend on v which is approximated by piecewise constant functions.

Next we check the performance of the fast algorithm. We repeat each experiment 10 times and take the mean CPU time as the test datum. Table 3 compares the CPU cost and the number of iterations of the Gauss elimination method and the fast CG method for solving (4.3). Importantly, both yield almost the same error \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}\). When \(N=2^{10}\), the Gauss elimination method consumes more than 40 s to finish the calculation, while the fast CG method needs only \({<}1\) s to reach the solution. The fast CG method thus evidently reduces the computation time.

In Table 4, the reduction of the computation time is more apparent. When \(N=2^{10}\), the Gauss elimination method consumes more than an hour. By contrast, the fast algorithm solves the problem within one second. Nevertheless, the number of iterations of the fast CG method increases quickly as the mesh size becomes smaller. Therefore, we use the fast PCG method to make an improvement. In the experiments, the fast PCG method may take more time than the fast CG method when \(N\le 2^7\), since the time saved in the iteration does not offset the time spent on the preconditioner. However, as N grows, the use of the preconditioner is expected to greatly reduce the number of iterations of the fast CG method and also the CPU time.

Example 2

Let \(u=x(1-x), \alpha =0.5, \beta =1.5, d^+=d^-=\varGamma (1.5)\). Then the right term can be calculated as

Here, \(u \in H_0^{1+\gamma }(0,1)\) and \(\gamma \in [0, \frac{1}{2})\) is defined as in Example 1.

Similar to Example 1, Tables 5 and 6 show the convergence results for \(v-v_h\) and \(u-u_h\) respectively. Table 7 compares their CPU time in solving \(v_h\) by Gauss elimination method and fast CG method. In Table 8, fast PCG method is added to explain the advantage of the preconditioner.

Theoretically we cannot obtain any convergence order for \(v-v_h\) since \(-\frac{1}{2}+\gamma <0\) and v only lies in \(H^{-\frac{1}{2}+\gamma }(0,1).\) However, parallel to the case of smooth v, one would formally derive \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}\approx \mathcal {O}(h^{-\frac{1}{2}+\gamma -(-\frac{1-\alpha }{2})})\approx \mathcal {O}(h^{0.25}).\) Table 5 presents the numerical results for \(\Vert v-v_h\Vert _{-\frac{1-\alpha }{2}}.\) Although the errors seem to be large due to the contribution of the factor \({\frac{1}{1-2\gamma }}\) in the norm \(\Vert v\Vert _{-\frac{1}{2}+\gamma },\) the convergence orders are slightly higher than, and approach, 0.25, which agrees with the formally derived estimate. This shows that our mixed procedure can still approximate a non-smooth solution accurately.

Table 6 gives the numerical results for \(u-u_h.\) The convergence orders of \(\Vert u-u_h\Vert _{\frac{\beta }{2}}\) are about \(\gamma +0.25\approx 0.75,\) which is better than the theoretical order given by Theorem 5.1 and agrees with the prediction of Remark 5.2. The convergence orders of \(\Vert u-u_h\Vert \) are almost the same as those of \(\Vert u-u_h\Vert _{\frac{\beta }{2}}\); this loss of convergence order may result from the poor regularity of v.

The last two tables clearly show the good performance of the fast algorithm and of the preconditioning technique, as in Example 1. The clear reductions in computation time and in the number of iterations again confirm their efficiency.

8 Concluding Remarks

We have established a well-defined mixed-type variational principle for a generalized nonlocal elastic model, composed of a fractional differential equation and a peridynamic model, in \(H^{-\frac{1-\alpha }{2}}(0,1)\times H_0^{\frac{\beta }{2}}(0,1)\), and developed a mixed-type finite element procedure that approximates the unknown function u and the intermediate variable v. The main contributions of the paper are: (1) a solvability criterion for the Fredholm integral equation of the first kind, obtained from the solvability of the variational principle; (2) a mixed-type finite element scheme whose existence and uniqueness of solution compensate for the lack of a solvability proof for the collocation-finite difference scheme proposed in [11]; and (3) the induced Toeplitz or Toeplitz-like coefficient matrices, which allow a fast algorithm that reduces the storage to \(\mathcal {O}(N)\) and the computational cost to \(\mathcal {O}(N\log N)\) per iteration.
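The storage and cost figures in item (3) rest on a standard property of Toeplitz matrices: an N-by-N Toeplitz matrix is determined by its first column and first row, and its product with a vector can be computed with FFTs by embedding it in a 2N-by-2N circulant matrix. The Python sketch below illustrates that generic technique. It is not the paper's implementation, and the function name and random test data are assumptions.

```python
import numpy as np


def toeplitz_matvec(first_col, first_row, x):
    """Multiply the Toeplitz matrix T, with T[i, j] = t_{i-j}, by x using FFTs.

    Only the 2N - 1 defining entries are stored, so memory is O(N) and the
    product costs O(N log N) instead of O(N^2).
    """
    first_col = np.asarray(first_col, dtype=float)
    first_row = np.asarray(first_row, dtype=float)
    n = len(x)
    # First column of the 2n-by-2n circulant matrix whose top-left block is T.
    c = np.concatenate([first_col, [0.0], first_row[:0:-1]])
    # A circulant product is a circular convolution, diagonalized by the FFT.
    y = np.fft.ifft(np.fft.fft(c) * np.fft.fft(x, 2 * n))
    return y[:n].real


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 8
    col = rng.standard_normal(n)
    row = rng.standard_normal(n)
    row[0] = col[0]  # both share the diagonal entry t_0
    x = rng.standard_normal(n)
    dense = np.array([[col[i - j] if i >= j else row[j - i] for j in range(n)]
                      for i in range(n)])
    print(np.max(np.abs(dense @ x - toeplitz_matvec(col, row, x))))
```

Inside a Krylov method such as CG, this routine replaces the dense matrix-vector product, so the dense matrix never has to be formed.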

References

Adams, R.A., Fournier, J.F.: Sobolev Spaces. Elsevier, Singapore (2009)

Askari, E., Bobaru, F., Lehoucq, R.B., Parks, M.L., Silling, S.A., Weckner, O.: Peridynamics for multiscale materials modeling. J. Phys. Conf. Ser. 125, 012078 (2008)

Barrett, R., Berry, M., Chan, T., Demmel, J., Donato, J., Dongarra, J., Eijkhout, V., Pozo, R., Romine, C., van der Vorst, H.: Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods. SIAM, Philadelphia (1994)

Brenner, S., Scott, L.R.: The Mathematical Theory of Finite Element Methods. Springer, New York (1998)

Carreras, B.A., Lynch, V.E., Zaslavsky, G.M.: Anomalous diffusion and exit time distribution of particle tracers in plasma turbulence models. Phys. Plasmas 8(12), 5096–5103 (2001)

Chan, R., Jin, X.: An Introduction to Iterative Toeplitz Solvers. SIAM, Philadelphia (2007)

Chen, H.Z., Wang, H.: Numerical simulation for conservative fractional diffusion equation by an expanded mixed formulation. J. Comput. Appl. Math. 296, 480–498 (2016)

Ciarlet, P.G.: The Finite Element Method for Elliptic Problems. North-Holland, Amsterdam (1978)

Davis, P.J.: Circulant Matrices. Wiley, New York (1979)

Deng, W.H., Hesthaven, J.S.: Discontinuous Galerkin methods for fractional diffusion equations. ESAIM Math. Model. Numer. Anal. 47(6), 1845–1864 (2013)

Du, N., Wang, H., Wang, C.: A fast method for a generalized nonlocal elastic model. J. Comput. Phys. 297, 72–83 (2015)

Eringen, A.C.: Nonlocal Continuum Field Theories. Springer, New York (2002)

Ervin, V.J., Roop, J.P.: Variational formulation for the stationary fractional advection dispersion equation. Numer. Methods Partial Differ. Equ. 22, 558–576 (2005)

Gray, R.M.: Toeplitz and circulant matrices: a review. Found. Trends Commun. Inf. Theory 2(3), 155–239 (2006)

Gunzburger, M., Lehoucq, R.B.: A nonlocal vector calculus with application to nonlocal boundary value problems. Multiscale Model. Simul. 8, 1581–1598 (2010)

Jin, B.T., Lazarov, R., Pasciak, J., Rundell, W.: Variational formulation of problems involving fractional order differential operators. Math. Comput. 84, 2665–2700 (2015)

Lei, S.L., Sun, H.W.: A circulant preconditioner for fractional diffusion equations. J. Comput. Phys. 242, 715–725 (2013)

Lions, J.L., Magenes, E.: Non-homogeneous Boundary Value Problems and Applications, vol. I. Springer, New York (1972)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Raviart, P.A., Thomas, J.M.: A mixed finite element method for 2-nd order elliptic problems. Lecture Notes in Mathematics 606, 292–315 (1977)

Royden, H.L., Fitzpatrick, P.M.: Real Analysis. China Machine Press, Beijing (1988)

Silling, S.A.: Reformulation of elasticity theory for discontinuities and long-range forces. J. Mech. Phys. Solids 48, 175–209 (2000)

Silling, S.A., Lehoucq, R.B.: Convergence of peridynamics to classical elasticity theory. J. Elasticity 93, 13–37 (2008)

Silling, S.A., Lehoucq, R.B.: Peridynamic theory of solid mechanics. Adv. Appl. Mech. 44, 73–168 (2010)

Taloni, A., Chechkin, A., Klafter, J.: Generalized elastic model yields a fractional Langevin equation description. Phys. Rev. Lett. 104, 160602 (2010)

Wang, K.X., Wang, H.: A fast characteristic finite difference method for fractional advection-diffusion equations. Adv. Water Resour. 34, 810–816 (2011)

Zaslavsky, G.M., Stevens, D., Weitzner, H.: Self-similar transport in incomplete chaos. Phys. Rev. E 48(3), 1683–1694 (1993)

Acknowledgments

This work is supported in part by the OSD/ARO MURI Grant W911NF-15-1-0562, by the National Natural Science Foundation of China under Grants 10971254, 11301311, 11471196, 91130010, 11471194, 11571115, and 11171193, and by the National Science Foundation under Grants DMS-1216923 and DMS-1620194. The authors would like to express their sincere thanks to the referees for their very helpful comments and suggestions, which greatly improved the quality of this paper.

Appendix

In this appendix, we introduce the definitions and notation for the function spaces and the properties of the fractional operators used in this paper. They can also be found in [1, 7, 10, 13, 18, 19] and the references cited therein.

Definition 9.1

(Negative fractional derivative spaces [7, 10]) Let \(\mu >0\). Define the norm

and let \(J_L^{-\mu }(\mathbb {R})\) denote the closure of \(C_0^\infty (\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{J_L^{-\mu }(\mathbb {R})}\). Analogously, define the norm

and let \(J_R^{-\mu }(\mathbb {R})\) denote the closure of \(C_0^\infty (\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{J_R^{-\mu }(\mathbb {R})}\).

By the Fourier transforms

the negative fractional order Sobolev space \(H^{-\mu }(\mathbb {R})\) is defined to be

Definition 9.2

(Negative Sobolev spaces [1, 18]) Let \(\mu >0\). Define the norm

and let \(H^{-\mu }(\mathbb {R})\) denote the closure of \(C_0^\infty (\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{H^{-\mu }(\mathbb {R})}\).

The following equivalences are proved in [10].

Lemma 9.1

([10], Theorem 2.5) The three spaces \(J_L^{-\mu }(\mathbb {R})\), \(J_R^{-\mu }(\mathbb {R})\) and \(H^{-\mu }(\mathbb {R})\) are equal with equivalent norms, and

For convenience, we restrict the negative fractional derivative spaces to a bounded subinterval of \(\mathbb {R}\), taken in this paper to be (0, 1), and again establish their equivalence with \(H^{-\mu }(0,1)\).

Definition 9.3

(Negative fractional derivative spaces in bounded domain [7]) Define the spaces \(J^{-\mu }_{L}(0,1),J^{-\mu }_{R}(0,1)\) as the closure of \(C_0^{\infty }(0,1)\) under their respective norms.

A conclusion similar to Lemma 9.1 can be derived for the bounded domain (0, 1).

Lemma 9.2

([7], Theorem 2.6) Assume that \(\mu >0.\) Then, the three spaces \(J_L^{-\mu }(0,1)\), \(J_R^{-\mu }(0,1)\) and \(H^{-\mu }(0,1)\) are equal with equivalent norms, and

We then define the fractional derivative spaces introduced in [13].

Definition 9.4

(Fractional derivative spaces [13]) Let \(\mu >0\). Define the semi-norm

and norm

and let \(J^\mu _L(\mathbb {R})\) denote the closure of \(C_0^{\infty }(\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{J^\mu _L(\mathbb {R})}\).

Analogously, we define the right fractional derivative space as follows. Let \(\mu >0\). Define the semi-norm

and norm

and let \(J^\mu _R(\mathbb {R})\) denote the closure of \(C_0^{\infty }(\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{J^\mu _R(\mathbb {R})}\).

We also define the norm for functions in \(H^\mu (\mathbb {R})\) in terms of the Fourier transforms.

Definition 9.5

(Fractional Sobolev spaces [1, 13, 18]) Let \(\mu >0\). Define the semi-norm

and norm

and let \(H^\mu (\mathbb {R})\) denote the closure of \(C_0^\infty (\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{H^\mu (\mathbb {R})}\).
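For orientation, the semi-norms and norms referred to in Definitions 9.4 and 9.5 take the following standard form in [13]. The symbols are assumptions made for this sketch, and the paper's own notation may differ: \(D^\mu \) and \(D^{\mu *}\) stand for the left and right Riemann-Liouville derivatives of order \(\mu \) on \(\mathbb {R}\), and \(\hat{u}(\omega )\) stands for the Fourier transform of u.

\[
|u|_{J_L^\mu (\mathbb {R})}=\Vert D^\mu u\Vert _{L^2(\mathbb {R})},\qquad |u|_{J_R^\mu (\mathbb {R})}=\Vert D^{\mu *}u\Vert _{L^2(\mathbb {R})},\qquad |u|_{H^\mu (\mathbb {R})}=\Vert |\omega |^\mu \hat{u}\Vert _{L^2(\mathbb {R})},
\]

\[
\Vert u\Vert _{X}=\bigl(\Vert u\Vert _{L^2(\mathbb {R})}^2+|u|_{X}^2\bigr)^{1/2},\qquad X\in \{J_L^\mu (\mathbb {R}),\,J_R^\mu (\mathbb {R}),\,H^\mu (\mathbb {R})\}.
\]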

As stated in [13], the product of the left and the right fractional order derivative for the same real valued function u can be related to \(|\cdot |_{J_L^\mu (\mathbb {R})}\).

Lemma 9.3

([13], Lemma 2.4) Assume that \(\mu >0\). Then for u(x) a real valued function

Finally, several useful properties of the fractional operators are stated in the following lemmas.

Lemma 9.4

(Semigroup property for fractional integral operator [19]) Let \(\mu ,\nu >0.\) For any \(u \in L^2(0,1),\) we have

Lemma 9.5

(Adjoint property for fractional integral operator [19]) Let \(\mu >0.\) For any \(u,v \in L^2(0,1),\) we have
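As a reminder of what the semigroup and adjoint properties say in the standard Riemann-Liouville setting of [19], the following sketch writes them out. The notation is assumed here and may differ from the paper's: \({}_0I_x^{\mu }\) and \({}_xI_1^{\mu }\) denote the left and right fractional integrals on (0, 1), and \((\cdot ,\cdot )\) denotes the \(L^2(0,1)\) inner product.

\[
{}_0I_x^{\mu }u(x)=\frac{1}{\varGamma (\mu )}\int _0^x (x-s)^{\mu -1}u(s)\,ds,\qquad {}_xI_1^{\mu }u(x)=\frac{1}{\varGamma (\mu )}\int _x^1 (s-x)^{\mu -1}u(s)\,ds,
\]

\[
{}_0I_x^{\mu }\,{}_0I_x^{\nu }u={}_0I_x^{\mu +\nu }u,\qquad {}_xI_1^{\mu }\,{}_xI_1^{\nu }u={}_xI_1^{\mu +\nu }u,\qquad ({}_0I_x^{\mu }u,v)=(u,{}_xI_1^{\mu }v).
\]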

Lemma 9.6

(Semigroup property for fractional derivative operator [13]) Let \(0<s<\mu .\) For any \(u \in J^\mu _{L,0}(0,1)\), we have

and similarly for any \(u\in J^\mu _{R,0}(0,1)\), we have

Lemma 9.7

(Adjoint property for fractional derivative operator [13]) Let \(1<\beta <2\). For any \(\omega \in H^\beta _0(0,1),v \in H_0^{\frac{\beta }{2}}(0,1)\), we have

Jia, L., Chen, H. & Wang, H. Mixed-Type Galerkin Variational Principle and Numerical Simulation for a Generalized Nonlocal Elastic Model. J Sci Comput 71, 660–681 (2017). https://doi.org/10.1007/s10915-016-0316-4

DOI: https://doi.org/10.1007/s10915-016-0316-4

Keywords

- Generalized nonlocal elastic model

- Fractional derivative equation

- Mixed-type variational principle

- Mixed-type finite element procedure

- Fast algorithm