Abstract

In this paper, we propose a Barzilai–Borwein-like iterative half thresholding algorithm for the \(L_{1/2}\) regularized problem. The algorithm is closely related to the iterative reweighted minimization algorithm and the iterative half thresholding algorithm. Under mild conditions, we verify that any accumulation point of the sequence of iterates generated by the algorithm is a first-order stationary point of the \(L_{1/2}\) regularized problem. We also prove that any accumulation point is a local minimizer of the \(L_{1/2}\) regularized problem when additional conditions are satisfied. Furthermore, we show that the worst-case iteration complexity for finding an \(\varepsilon \) scaled first-order stationary point is \(O(\varepsilon ^{-2})\). Preliminary numerical results show that the proposed algorithm is practically effective.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider the following \(L_{1/2}\) regularized problem

where \(\rho >0\), \(\Vert x\Vert _{1/2}^{1/2}=\sum _{i=1}^n|x_i|^{1/2}\), and f is bounded below and Lipschitz continuously differentiable in \(R^n\), that is, there exist two constants \(L_\mathrm{low}\) and \(L_f> 0\) such that

The \(L_{1/2}\) regularized problem is a nonconvex, nonsmooth and non-Lipschitz optimization problem, which has many applications in variable selection and compressed sensing [18, 23]. When \(f(x)=\Vert Ax-b\Vert _2^2/2\) with \(A\in R^{m\times n}\) and \(b\in R^m\) (\(m<n\) or even \(m\ll n\)), problem (1.1) reduces to the following \(L_2\)–\(L_{1/2}\) minimization problem

which is a penalty version of the following constrained \(L_{1/2}\) minimization problem

Problem (1.3) is a special \(L_2\)–\(L_p\) \((0<p<1)\) minimization problem [5, 6, 15]. The purpose of solving this unconstrained (or constrained) optimization problem is to compute the sparse solution of underdetermined linear systems \(Ax=b\) (see, e.g., [22, 23], for details).

Because of nonconvexity and nonsmoothness, many efficient iterative algorithms for optimization problems cannot be directly applied to solve the \(L_{1/2}\) regularized problem and the general \(L_p\) \((0<p<1)\) regularized problem. Recently, a great deal of effort was made to study iterative algorithms for solving this class of optimization problems (see, e.g., [3–6, 15, 17, 18, 21–23], for details).

Bian et al. [3] proposed first-order and second-order interior point algorithms to solve a class of nonsmooth, nonconvex, and non-Lipschitz minimization problems and proved that the worst-case iteration complexity for finding an \(\varepsilon \) scaled first-order stationary point is \(O(\varepsilon ^{-2})\) for first-order interior point algorithm and \(O(\varepsilon ^{-3/2})\) for second-order interior point algorithm. By the use of the first-order necessary condition of (1.3), Wu et al. [21] proposed a gradient based method to solve it and verified that the sequence of iterates converges to a stationary point of (1.3). Li et al. [17] proposed feasible direction algorithms to solve an equivalent smooth constrained reformulation of (1.1) and showed that the iterate sequence generated by the proposed algorithms converges to a stationary point of (1.1).

Iterative reweighted minimization algorithms are another class of important algorithms for solving the \(L_p\) \((0<p<1)\) regularized problem [4, 6, 15, 18, 23]. This class of algorithms was firstly proposed to solve the \(L_2\)–\(L_p\) minimization problem [4, 6, 15, 16, 23] and it consists of solving a sequence of weighted convex optimization problems. According to the type of unconstrained optimization problems solved at each iteration, they were called the iterative reweighted \(L_1\) minimization algorithm (\(\mathrm{IRL_1}\)) [4, 6, 18] and the iterative reweighted \(L_2\) minimization algorithm (\(\mathrm{IRL_2}\)) [15, 16, 18]. Lu [18] extended \(\mathrm{IRL_1}\) and \(\mathrm{IRL_2}\) algorithms and proposed a more general \(\mathrm{IRL_\alpha }\) algorithm to solve the \(L_p\) regularized problem. For the \(L_{1/2}\) regularized problem (1.1), the \(\mathrm{IRL_\alpha }\) algorithm at each iteration solves a weighted optimization problem of the form:

where

with \(\xi >0\) and \(\alpha \ge 1\). When \(\alpha =1\) and \(\alpha =2\), respectively, the \(\mathrm{IRL_\alpha }\) algorithm reduces to the \(\mathrm{IRL_1}\) algorithm [4, 6, 18] and the \(\mathrm{IRL_2}\) algorithm [15, 16, 18]. It is verified [6, 15, 18] that the sequence \(\{x^k\}\) converges to a stationary point of the approximation of the form

The iterative reweighted minimization algorithms need to solve a sequence of large-scale optimization problems. Moreover, they require \(\xi \) to be dynamically updated and approach zero (we refer the reader to [18] for details). This usually brings in lots of computational effort. In order to improve the computational efficiency, one prox-linear iteration was applied to approximately solve (1.4), which yielded the following variant [18]:

where \(\lambda _k\) is chosen to ensure that the new iterate \(x^{k+1}\) satisfies the following inequality

with \(c>0\). We call the variant the accelerated iterative reweighted \(L_\alpha \) minimization algorithm (abbreviated by \(\mathrm{AIRL_\alpha }\)).

There is also an alternative way to derive (1.6). We first apply the prox-linear algorithm to (1.1) and obtain the iteration

The iteration (1.7) is equivalent to solving n one-dimensional \(L_2\)–\(L_{1/2}\) minimization problems. Applying one iteration of the \(\mathrm{IRL_\alpha }\) algorithm to approximately solve these one-dimensional problems yields (1.6). In this way, the prox-linear algorithm can be regarded as outer iteration and the \(\mathrm{IRL_\alpha }\) algorithm is inner iteration.

The one-dimensional \(L_2\)–\(L_{1/2}\) minimization problem has a closed-form solution [22]. Using the closed-form solution, Xu et al. [22] proposed an iterative half Thresholding algorithm (denoted by IHTA) to solve the \(L_2\)–\(L_{1/2}\) minimization problem of the form (1.3). The IHTA iteration can be described as follows:

where \(\mu _k\) is a steplength parameter and \(H_{\alpha ,\frac{1}{2}}: R^n\rightarrow R^n\) is called the half thresholding operator [22], defined by

with

The IHTA algorithm can be seen as an operator splitting algorithm. Based on the operator splitting technique, the iterative soft thresholding algorithm (denoted by ISTA) was also proposed to solve the \(L_1\) regularized problem [7, 9, 13]. IHTA and ISTA algorithms require the steplength to be sufficiently small to guarantee convergence (see [7, 9, 13, 22] for details). A small steplength usually results in slow convergence in practice (see, e.g., [2, 10, 14, 20, 21]). By combining the Barzilai–Borwein (BB) steplength [1] with the nonmonotone line search [11], accelerated ISTA methods were proposed to solve the \(L_1\) regularized problem [14, 20]. Numerical experiments show that the BB steplength significantly improve the speed of ISTA [14, 20].

Motivated by [14, 20], we shall propose a Barzilai–Borwein-like iterative half Thresholding algorithm (abbreviated by BBIHTA) to solve the \(L_{1/2}\) regularized problem (1.1). We firstly apply the prox-linear algorithm to (1.1) and obtain the iteration (1.7), in which the \(\lambda _k\) is closely related to the BB steplength and is chosen such that the new iterate \(x^{k+1}\) satisfies the inequality

where M is a given nonnegative integer, \(\gamma \) is a positive constant and F is defined by (1.1). Noting that the one-dimensional \(L_2\)–\(L_{1/2}\) minimization problem has a closed-form solution, we then solve these one-dimensional \(L_2\)–\(L_{1/2}\) minimization problems exactly. Under appropriate conditions, we study the convergence of the BBIHTA algorithm and show that the worst-case iteration complexity for finding an \(\varepsilon \) scaled first-order stationary point is \(O(\varepsilon ^{-2})\). Finally, we do some preliminary numerical experiments to show the efficiency of the proposed algorithm.

The remainder of the paper is organized as follows. In Sect. 2, we give some preliminaries and propose the BBIHTA algorithm to solve the \(L_{1/2}\) regularized problem (1.1). In Sect. 3, we study the convergence of the proposed algorithm and verify that any accumulation point of the sequence of iterates is a first-order stationary point of (1.1) under mild conditions and is also a local minimizer when additional conditions are satisfied. In Sect. 4, we show that the worst-case iteration complexity for finding an \(\varepsilon \) scaled first-order stationary point is \(O(\varepsilon ^{-2})\). Finally in Sect. 5, we shall numerically compare the performance of the BBIHTA algorithm with that of the other two algorithms.

2 Preliminaries and the Algorithm

The BBIHTA algorithm is based on the iteration (1.7). It is readily seen that the iteration (1.7) can be equivalently reformulated as

For simplicity, we let, in the latter part of the paper,

Then (2.1) can be decomposed into n one-dimensional \(L_2\)–\(L_{1/2}\) minimization problems

where \(x_i^{k+1}\) and \(y^k_i\) are, respectively, the \(i\hbox {th}\) components of \(x^{k+1}\) and \(y^k\). Since the optimization problem (2.3) is nonconvex, it may have more than one local minimizer. The following lemma shows this.

Lemma 2.1

The optimization problem of the form (2.3) has at most two local minimizers.

Proof

Without loss of generality, let us assume that \(y^k_i>0\). Define \(\varphi : R\rightarrow R\) by

Then \(\varphi (t)\) is a monotonically decreasing function of t on the interval \((-\infty ,0]\). Therefore, the optimization problem (2.3) has no local minimizer on the interval \((-\infty ,0)\). Let \(t^*\) be a local minimizer. Then \(t^*=0\) or it satisfies

From (2.5) we deduce that if \(t^*>0\), \((t^*)^{1/2}\) must be a root of the following equation

Let \(t_i\) \((i=1,2,3)\) be the solutions of the equation above and satisfy \(t_1\le t_2\le t_3\). Then we get \(t_1t_2t_3=-\beta _k/4\) and \(t_1+t_2+t_3=0\), which imply that \(t_1<0\) and \(t_2>0\). Therefore, \(\varphi '((t_2)^2)=0\) and \(\varphi '((t_3)^2)=0\). Note that \(\lim \limits _{t\rightarrow 0+}\varphi '(t)=+\infty \). By continuity of \(\varphi '(t)\), we deduce that \(\varphi '(t)>0\) in the interval \((0,(t_2)^2)\), which implies that the function \(\varphi (t)\) is monotonically increasing in this interval. Hence, \((t_2)^2\) is not a local minimizer of the optimization problem (2.3) and this problem has at most one local minimizer in the interval \((0,+\infty )\). On the other hand, since \(\varphi (t)\) is monotonically decreasing on the interval \((-\infty ,0]\) and is monotonically increasing in the interval \((0,(t_2)^2)\), 0 is a local minimizer of the optimization problem (2.3).

The discussion above shows that the optimization problem of the form (2.3) has at most two local minimizers. The proof is complete. \(\square \)

Generally, it is difficult to find an optimal solution (i.e., a global minimizer) of a nonconvex optimization problem. Xu et al. [22] proved that the optimization problem of the form (2.3) has a closed-form solution and derived its expression. We introduce the following two lemmas to show this. For completeness, we provide a simpler proof for Lemma 2.2.

Lemma 2.2

Let \(x_i^{k+1}\in R\) be an optimal solution of problem (2.3). Then \(x_i^{k+1}\ne 0\) if and only if the following inequality holds

Proof

Necessary. Without loss of generality, let us assume that \(x^{k+1}_i>0\). Then from (2.3) we derive that

Moreover, by the first-order necessary condition of (2.3) we know that \(x_i^{k+1}\) satisfies the following equality

This yields

Substituting (2.7) into (2.6), we get

It is not difficult to verify that the function \(\phi (t)\equiv t+\beta _kt^{-1/2}/4\) is monotonically increasing when \(t\ge (\beta _k/2)^{2/3}\). Therefore, we deduce from (2.7) that

Sufficiency. Without loss of generality, we assume that (2.8) holds. Let

Then it holds

where \(\varphi \) is defined by (2.4). By combining (2.4), (2.8) and (2.9), we obtain

This together with (2.10) yields

Hence, \(x_i^{k+1}\ne 0\). The proof is complete. \(\square \)

The following lemma is from [22], which gives an explicit expression for the optimal solution of problem (2.3).

Lemma 2.3

For any \(i=1,2,\ldots ,n\), define

where \(y^k\) and \(\beta _k\) are defined by (2.2) and \((H_{\beta _k,\frac{1}{2}}(y^k))_i\) is calculated according to (1.9) with \(\alpha =\beta _k\). Then \(x_i^{k+1}\) is an optimal solution of problem (2.3).

Now we give a first-order necessary condition for optimality for problem (1.1). Let \(x^*=(x_1^*,x_2^*,\ldots ,x_n^*)^T\in R^n\) be a local minimizer of (1.1). For any \(i=1,2,\ldots ,n\), if \(x_i^*\ne 0\), then simple calculation shows that

that is,

where \(\nabla _if(x)\) denotes the \(i\hbox {th}\) component of \(\nabla f(x)\) and \(\mathrm{sgn}: R\rightarrow \{-1, 0, 1\}\) is defined by

Define a mapping \(\varPsi (x)=(\varPsi _1(x),\varPsi _2(x),\ldots ,\varPsi _n(x))^T:R^n\rightarrow R^n\) with

where \(\mathrm {mid}\{a,b,c\}\) takes the medium value among three variables a, b, c. With the notions above, it is not difficult to verify the following proposition. The proof is omitted here.

Proposition 2.1

If \(x^*\in R^n\) is a local minimizer of problem (1.1), then \(\varPsi (x^*)=0\).

We call a vector \(x\in R^n\) a first-order stationary point if it satisfies that \(\varPsi (x)=0\). From Proposition 2.1 it follows that every local minimizer of problem (1.1) is a first-order stationary point. In [5, 18], similar concepts and properties were also presented.

We now propose the Barzilai–Borwein-like iterative half thresholding algorithm with a nonmonotone line search strategy [11] to solve problem (1.1).

Algorithm 2.1

(Barzilai–Borwein-Like Iterative Half Thresholding (BBIHTA) Algorithm)

Given \(x^0\in R^n\), \(0<\lambda _\mathrm{min}<\lambda _\mathrm{max}<+\infty \), \(\bar{\lambda }\in [\lambda _\mathrm{min},\lambda _\mathrm{max}]\), \(\gamma \in (0,1)\), \(1<\sigma _1<\sigma _2<+\infty \), and integer \(M\ge 0\). Set \(k:=0\).

-

Step 1. If \(\Vert \varPsi (x^k)\Vert _2=0\) stop.

-

Step 2. Set \(\lambda _k\leftarrow \bar{\lambda }\).

-

Step 3. Compute \(y^k\) and \(\beta _k\) by

$$\begin{aligned} y^k=x^k-\nabla f\left( x^k\right) /\lambda _k\qquad \mathrm{and}\qquad \beta _k=2\rho /\lambda _k. \end{aligned}$$ -

Step 4. For each \(i=1,2,\ldots ,n\), compute \(x_i^{k+1}\) by (2.11) and set

$$\begin{aligned} x^{k+1}=\left( x_1^{k+1},x_2^{k+1},\ldots ,x_n^{k+1}\right) ^T. \end{aligned}$$ -

Step 5. (nonmonotone line search)

If

$$\begin{aligned} F\left( x^{k+1}\right) \le \max \limits _{0\le j\le \min (k,M)}F\left( x^{k-j}\right) -\gamma \Vert x^{k+1}-x^k\Vert _2^2, \end{aligned}$$(2.13)then set \(s^k=x^{k+1}-x^k\), \(d^k=\nabla f(x^{k+1})-\nabla f(x^k)\) and go to Step 7; otherwise, go to Step 6.

-

Step 6. Choose

$$\begin{aligned} \lambda _\mathrm{new}\in [\sigma _1\lambda _k,\sigma _2\lambda _k], \end{aligned}$$set \(\lambda _k\leftarrow \lambda _\mathrm{new}\), and go to Step 3.

-

Step 7. Compute \(p^k=(s^k)^Td^k\) and \(q^k=(s^k)^Ts^k\). Define \(\lambda _k^{BB}=p^k/q^k\) and

$$\begin{aligned} \bar{\lambda }=\min \{\lambda _\mathrm{max},\max \{\lambda _\mathrm{min},\lambda _k^{BB}\}\}. \end{aligned}$$(2.14)Let \(k:=k+1\), and go to Step 1.

Remark 2.1

-

(i)

The \(\lambda _k^{BB}\) in Step 7 is the BB steplength and is introduced by Barzilai and Borwein in [1]. The BB gradient method possesses the R-superlinear convergence for two-dimensional convex quadratics. Raydan [19] extended the method to unconstrained optimization by incorporating the nonmonotone line search [11]. The resulting method is globally convergent and is competitive with some standard conjugate gradient codes. Due to the simplicity and efficiency, the BB-like methods have received much attentions in finding sparse approximation solutions to large underdetermined linear systems of equations from signal/image processing [12, 14, 20]. However, it is difficult to analyze the convergence rate of the BB-like methods even if the objective function is convex [8]. The object of (2.14) is to keep \(\bar{\lambda }\) uniformly bounded and this \(\bar{\lambda }\) is always accepted by the non-monotone line search.

-

(ii)

From the previous discussion, we know that \(x^{k+1}\) generated by (2.11) satisfies

$$\begin{aligned} x^{k+1}=\mathrm{arg}\min \limits _{x\in R^n}f(x^k)+\nabla f\left( x^k\right) ^T\left( x-x^k\right) +\frac{\lambda _k}{2}\Vert x-x^k\Vert _2^2+\rho \Vert x\Vert _{1/2}^{1/2}. \end{aligned}$$ -

(iii)

Denote

$$\begin{aligned} \varOmega _0\triangleq \{x: F(x)\le F(x^0)\}. \end{aligned}$$Since f is bounded below, it is not difficult to verify that \(\varOmega _0\) is a closed and bounded set. That is, there exists a constant \(\beta >0\) such that

$$\begin{aligned} \Vert x\Vert _2<\beta ,\qquad \forall x\in \varOmega _0. \end{aligned}$$Moreover, the sequence \(\{x^k\}\) generated by Algorithm 2.1 is contained in \(\varOmega _0\).

Proposition 2.2

The BBIHTA algorithm cannot cycle indefinitely between Step 3 and Step 6.

Proof

Since f is Lipschitz continuously differentiable in \(R^n\), it holds

From (1.2) it follows

which together with (2.15) implies

Assume that \(\lambda _k\ge L_f+2\gamma \). Then by (2.16) we have

From (ii) of Remark 2.1, it follows

Combining (2.17) and (2.18), we obtain

Therefore, when \(\lambda _k\ge L_f+2\gamma \), the \(x^{k+1}\) generated by (2.11) satisfies (2.13).

At \(k\hbox {th}\) iteration, we get, by Steps 2, 6 and 7, \(\lambda _k=\bar{\lambda }\sigma _1^l\ge \lambda _\mathrm{min}\sigma _1^l\), after l cycles between Step 3 and Step 6. Since \(\sigma _1>1\), it holds \(\lambda _k\ge L_f+2\gamma \) when \(l\ge \frac{\log (L_f+2\gamma )-\log (\lambda _\mathrm{min})}{\log (\sigma _1)}\). Therefore, by the discussion above, we deduce that the BBIHTA algorithm cannot cycle indefinitely between Step 3 and Step 6. The proof is complete. \(\square \)

The following proposition shows that the sequence \(\{\lambda _k\}\) generated by Algorithm 2.1 is bounded. This proposition shall be used to prove Theorem 3.1 and Lemma 4.1.

Proposition 2.3

Let \(\{\lambda _k\}\) be a sequence generated by Algorithm 2.1. Then the following inequalities hold

Proof

For any \(k=0,1,\ldots \), by Algorithm 2.1 we obtain \(\lambda _k\ge \bar{\lambda }\ge \lambda _\mathrm{min}\). If \(\lambda _k=\bar{\lambda }\), by (2.14) we get \(\lambda _k\le \lambda _\mathrm{max}\). Otherwise, from Step 6 of Algorithm 2.1 and the proof of Proposition 2.2 it follows that

This means that \(\lambda _k< \sigma _2(L_f+2\gamma )\). Therefore, for any \(k=0,1,\ldots \), the following inequalities hold

The proof is complete. \(\square \)

3 The Convergence of the BBIHTA Algorithm

In this section, we shall study the convergence of the BBIHTA algorithm. To this end, we first show the following lemma.

Lemma 3.1

Suppose that the sequence \(\{x^k\}\) generated by Algorithm 2.1 is infinite. Then we have

Proof

Let l(k) be an integer such that

Then, we have

This together with (2.13) implies

Hence, the sequence \(\{F(x^{l(k)})\}\) is nonincreasing. Moreover we obtain from (2.13) for any \(k>M\),

Since f is bounded below, the function F is also bounded below. Consequently the sequence \(\{F(x^{l(k)})\}\) admits a limit. This together with (3.2) implies

In what follows, we shall prove that \(\lim _{k\rightarrow \infty }\Vert x^{k+1}-x^k\Vert _2=0\). First we show, by induction, that for any given \(j= 1, 2, \ldots , M+1\),

and

(We assume, for the remainder of the proof, that the iteration index k is large enough to avoid the occurrence of negative subscripts.) If \(j=1\), (3.4) follows from (3.3). Moreover, (3.4) implies that (3.5) holds for \(j=1\) since F(x) is uniformly continuous on \(\varOmega _0\). Assume now that (3.4) and (3.5) hold for a given j. Then by (2.13) we get

Taking limits in both sides of the last inequality as \(k\rightarrow \infty \), we have by (3.5)

which implies \(\left\{ \Vert x^{l(k)-j}-x^{l(k)-(j+1)}\Vert _2\right\} \rightarrow 0\). By (3.5) and the uniform continuity of F on \(\varOmega _0\), we get

By the principle of induction, we have proved (3.4) and (3.5) for any given \(j=1,2,\cdots ,M+1\).

Now for any k, it holds that

By (3.1), we have \(l(k+2+M)-(k+1)\le k+2+M-(k+1)=M+1\) and \(l(k+2+M)-(k+1)\ge k+2+M-M-(k+1)=1\). It then follows from (3.4) and (3.6) that

Since \(\{F(x^{l(k)})\}\) admits a limit, by the uniform continuity of F on \(\varOmega _0\), it holds that

By (2.13), we have

Taking limits in both sides of the last inequality as \(k\rightarrow \infty \), we obtain by (3.7)

The proof is complete. \(\square \)

The convergence of the BBIHTA algorithm is stated in the following theorem. This theorem shows that every accumulation point of the sequence of iterates generated by the BBIHTA algorithm is a first-order stationary point of (1.1).

Theorem 3.1

Suppose that the sequence \(\{x^k\}\) is generated by Algorithm 2.1. Then either \(\varPsi (x^j)=0\) for some finite j, or every accumulation point \(\bar{x}\) of \(\{x^k\}\) satisfies \(\varPsi (\bar{x})=0\).

Proof

When \(\{x^k\}\) is finite, from the termination condition, it is clear that there exists some finite j satisfying \(\varPsi (x^j)=0\). Suppose that \(\{x^k\}\) is infinite. Then by Lemma 3.1 we have

Let \(\bar{x}\) be an arbitrary accumulation point of \(\{x^k\}\). Then there exists a subsequence \(\{x^{k_l}\}\subset \{x^k\}\) such that

For any i with \(\bar{x}_i=0\), by (2.12) we get

For any i with \(\bar{x}_i\ne 0\), by (3.9) we deduce that \(x^{k_l}_i\ne 0\) for sufficiently large l. Without loss of generality, let us assume that \(\bar{x}_i>0\). Then it holds that \(x^{k_l}_i>0\) for sufficiently large l. Note that \(x_i^{k_l}\) is an optimal solution of (2.3). By the first-order necessary condition of (2.3), we know that for sufficiently large l, \(x_i^{k_l}\) satisfies

where \(y^{k_l-1}_i=x_i^{k_l-1}-\nabla _i f(x^{k_l-1})/\lambda _{k_l-1}\) and \(\beta _{k_l-1}=2\rho /\lambda _{k_l-1}\). Therefore, it holds

which implies

By Proposition 2.3 we know that the sequence \(\{\lambda _{k-1}\}\) is bounded. From (3.8) and (3.9) we deduce that

So using these facts together with (3.10) and (3.9), we obtain

This together with (2.12) and the assumption \(\bar{x}_i>0\) implies

The discussion above shows

This completes the proof. \(\square \)

Remark 3.1

Since the \(L_{1/2}\) regularized problem (1.1) is a nonconvex optimization problem, its first-order stationary point may not be a local minimizer. To see this, consider the following one-dimensional \(L_{1/2}\) regularized problem

Simple calculation shows that the point \(-1\) is a first-order stationary point of the problem above but not a minimizer.

The following theorem gives a sufficient condition for an accumulation point \(\bar{x}\) of \(\{x^k\}\) to be a local minimizer of (1.1). Before giving the theorem, we introduce some notations on submatrix and subvector. Given index sets \(I\subset \{1, 2, \ldots , n\}\) and \(J\subset \{1, 2, \ldots , n\}\), \(\nabla _{IJ}^2 f(\bar{x})\) denotes the submatrix of the Hessian matrix \(\nabla ^2 f(\bar{x})\) consisting of rows and columns indexed by I and J respectively, \(\nabla _{I} f(\bar{x})\) denotes the subvector of the gradient \(\nabla f(\bar{x})\) consisting of components indexed by I and \(x_I\) denotes the subvector of x consisting of components indexed by I.

Theorem 3.2

Let f be twice continuously differentiable. Assume that \(\{x^k\}\) is a sequence of iterates generated by Algorithm 2.1 and \(\bar{x}\) is an accumulation point of \(\{x^k\}\). If it holds \(\bar{I}_A=\emptyset \), or \(\bar{I}_A\ne \emptyset \) and

then \(\bar{x}\) is a local minimizer of the \(L_{1/2}\) regularized problem (1.1). Here, \(\bar{I}_A:=\{i\;|\;\bar{x}_i\ne 0\}\), and \(\sigma (\nabla _{\bar{I}_A\bar{I}_A}^2 f(\bar{x}))\) denotes the smallest eigenvalue of \(\nabla _{\bar{I}_A\bar{I}_A}^2 f(\bar{x})\).

Proof

For any \(d\in R^n\) sufficiently small, it holds that

where \(\bar{I}_C:=\{i\;|\;\bar{x}_i=0\}\).

Assuming that \(\bar{I}_A=\emptyset \), by (3.12) we have

which implies that for any d sufficiently small, it holds

Therefore, \(\bar{x}\) is a local minimizer of the \(L_{1/2}\) regularized problem (1.1).

Assume that \(\bar{I}_A\ne \emptyset \). Since \(\bar{x}\) is an accumulation point of \(\{x^k\}\), by Theorem 3.1 and (2.12) we get

This together with (3.12) implies that

Simple calculation shows that

where g is a bounded vector. For any \(i\in \bar{I}_A\), it holds

Combining (3.13), (3.14) and (3.15), we get

where the second equality follows because

From (3.11) and (3.16) we deduce that for any d sufficiently small, it holds

Therefore, \(\bar{x}\) is a local minimizer of the \(L_{1/2}\) regularized problem (1.1). The proof is complete.

Similar to Theorem 2.2 of [5], the following theorem derives a bound on the number of nonzero entries in any accumulation point \(\bar{x}\) of \(\{x^k\}\).

Theorem 3.3

Suppose that the sequence \(\{x^k\}\) is generated by Algorithm 2.1 and that \(x^0\) is the initial iterate. Let \(\bar{x}\) be an accumulation point of \(\{x^k\}\). Then the number of nonzero entries in \(\bar{x}\) is bounded by

Proof

Since \(\bar{x}\) is an accumulation point of \(\{x^k\}\) and \(x^0\) is the initial iterate, from Algorithm 2.1 it follows that \(F(\bar{x})\le F(x^0)\). Moreover, by Theorem 3.1 we know that \(\bar{x}\) is a first-order stationary point. Let \(\bar{x}_i\ne 0\). By Theorem 2.2 of [18], we get

where \(L_f\) and \(L_\mathrm{low}\) is defined by (1.2). Note that

Therefore, simple calculation shows that

The proof is complete.\(\square \)

Theorem 3.3 shows that for any given \(x^0\in R^n\), a smaller parameter \(\rho \) may yield a denser approximation. Hence, both \(x^0\) and \(\rho \) should be chosen appropriately.

4 Complexity of the BBIHTA Algorithm

In this section, we shall study the complexity of the BBIHTA algorithm. We verify that the worst-case complexity of the algorithm for generating an \(\varepsilon \) global minimizer or an \(\varepsilon \) scaled first-order stationary point of (1.1) is \(O(\varepsilon ^{-2})\). To this end, we introduce some notions and an important lemma.

Definition 4.1

-

(i)

For \(\varepsilon >0\), x is called an \(\varepsilon \) global minimizer of (1.1) if

$$\begin{aligned} F(x)-F(x^*)\le \varepsilon , \end{aligned}$$where \(x^*\) is a global minimizer of (1.1);

-

(ii)

x is called an \(\varepsilon \) scaled first-order stationary point of (1.1) if it satisfies

$$\begin{aligned} \Vert \varPsi (x)\Vert _2\le \varepsilon . \end{aligned}$$

Lemma 4.1

Let \(\{x^k\}\) be a sequence of iterates generated by the BBIHTA algorithm. Then it holds

where \(\lambda =\max \{\lambda _\mathrm{max},\sigma _2(L_f+2\gamma )\}\), \(\beta \) and \(L_f\) are given by (iii) of Remark 2.1 and (1.2), respectively.

Proof

Since the sequence \(\{x^k\}\) is generated by the BBIHTA algorithm, from the discussion of Sect. 2 we derive that for any k and i, \(x^{k+1}_i\) is an optimal solution of problem (2.3). Assume that \(x^{k+1}_i\ne 0\). Then by the first-order necessary condition for optimality, we get

This together with (2.2) implies that

Simple calculation shows that

and so by combining with (2.12), we obtain

It is easy to see that (4.1) also holds when \(x^{k+1}_i=0\). Combining (4.1), (2.12), (1.2), (iii) of Remark 2.1 and Proposition 2.3 gives

where \(\lambda =\max \{\lambda _\mathrm{max},\sigma _2(L_f+2\gamma )\}\). Therefore,

The proof is complete. \(\square \)

By Lemma 4.1, we can verify the following theorem.

Theorem 4.1

For any \(\varepsilon \in (0,1)\), the BBIHTA algorithm obtains an \(\varepsilon \) scaled first-order stationary point or an \(\varepsilon \) global minimizer of the \(L_{1/2}\) regularized problem (1.1) in no more than \(O(\varepsilon ^{-2})\) iterations.

Proof

Let \(\{x^k\}\) be a sequence of iterates generated by the BBIHTA algorithm. From (2.13) and Lemma 4.1, we obtain

Let l(k) be an integer satisfying (3.1). Then the following inequalities hold

and

Assume that \(\Vert \varPsi (x^{k+1})\Vert _2>\varepsilon \) for all k. From (4.3) it follows that

By combining (4.2) and (4.4), we deduce that for any k, there exist at least \(J\triangleq \lceil k/(M+1) \rceil \) nonnegative integers (denoted by \(k_1\), \(k_2\), \(\ldots \), \(k_J\)) such that

and

This yields

where \(x^*\) is a global minimizer of the \(L_{1/2}\) regularized problem (1.1). Choose

Then by a simple calculation, we get

Therefore, the conclusion holds. The proof is complete. \(\square \)

5 Numerical Experiments

In this section, we report some preliminary numerical results to demonstrate the performance of the BBIHTA algorithm. We shall compare the performance of the BBIHTA algorithm with the IHTA algorithm proposed by Xu et al. [22] and the \(\mathrm{AIRL_1}\) (i.e., \(\alpha =1\)) algorithm proposed by Lu in Section 3.1 of [18]. All codes are written in MATLAB and all computations are performed on a Lenovo PC (2.53 GHz, 2.00 GB of RAM).

We test these algorithms on the sparse signal recovery problem, where the goal is to reconstruct a length-n sparse signal \(x_s\) from m observations b with \(m<n\) via solving the \(L_2\)–\(L_{1/2}\) minimization problem (1.3). The signal \(x_s\), the sensing matrix A and the observation b in the problem are generated by the following Matlab code.

Obviously, \(\Vert x_s\Vert _0=T\). Moreover, the vectors \(x_s\) and b satisfy: \(b=Ax_s\). In order to enforce that \(Ax\approx b\) in (1.3), we must choose \(\rho \) to be extremely small. Unfortunately, choosing a small value for \(\rho \) makes (1.3) extremely difficult to solve numerically. Xu et al. [22] present three schemes to adjust the parameter \(\rho \). In these schemes, the parameter \(\rho \) is updated at each iteration. Following [10] and [13], we adopt a continuation strategy to decrease \(\rho \) for three algorithms. In particular, the \(\rho \) in (1.3) is set according to the following procedure:

-

(P1): set \(\rho :=3\times 10^{-2}\) and \(\mathrm{TOLA}=10^{-8}\);

-

(P2): compute \(\bar{x}\) via approximately solving (1.3) such that

$$\begin{aligned} \Vert \varPsi (\bar{x})\Vert _\infty <\mathrm{TOLA}, \end{aligned}$$where \(\varPsi \) is defined by (2.12);

-

(P3): if \(\Vert A\bar{x}-b\Vert _\infty <10^{-3}\), stop; otherwise, let \(\rho :=0.1\times \rho \), and go to step (P2).

By (5.1) it is not difficult to verify that the eigenvalues of \(A^TA\) are 0 and 1. This means that \(\Vert A^TA\Vert _2=1\). Hence by [24], we set \(\mu _k=0.99\) in the IHTA iteration (1.8). The parameters in the BBIHTA algorithm are set as follows:

The parameters in the \(\mathrm{AIRL_1}\) algorithm are chosen according to [18], that is,

and

with \(\varDelta x=x^k-x^{k-1}\) and \(\varDelta g=\nabla f(x^k)-\nabla f(x^{k-1})\). We use the nonmonotone line search to improve the convergence of the \(\mathrm{AIRL_1}\) algorithm. Additionally, to ensure that the sequence of iterates generated by the \(\mathrm{AIRL_1}\) algorithm converges to a stationary point of (1.3), we define the sequences of \(\{\varepsilon ^r\}\) and \(\{\delta ^r\}\) by

where \(\varepsilon ^0\) and \(\delta ^0\) are given. For each \(\varepsilon ^r\), the \(\mathrm{AIRL_1}\) algorithm is applied to (1.5) for finding \(x^r\) such that

where \(X^r=\mathrm{Diag}(x^r)\) and \(|X^r|=\mathrm{Diag}(|x^r|)\).

The initial guesses for three algorithms are generated by the following three schemes: (1) \(x^0\) being a solution from the \(L_2\)–\(L_1\) minimization problem

where \(\rho =3\times 10^{-2}\); (2) \(x^0=(0,0,\ldots ,0)^T\); and (3) \(x^0=A^Tb\). The \(L_2\)–\(L_1\) minimization problem is solved by the SpaRSA algorithm [20].

We first test the ability of three algorithms in recovering the sparse solutions. In this experiment, we fix \(m=2^6\) and \(n=2^8\) and vary the value of T from 1 to 27. For each T, we construct 400 random pairs \((A, x_s)\), and run these algorithms to obtain an approximation \(\bar{x}\) for each pair \((A, x_s)\). For the \(\mathrm{AIRL_1}\) algorithm, we choose three parameter pairs \((\varepsilon ^0, \delta ^0)\). That is,

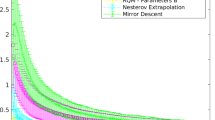

For convenience of presentation, we name the corresponding algorithm as \(\mathrm{AIRL^1_1}\), \(\mathrm{AIRL^2_1}\) and \(\mathrm{AIRL^3_1}\). We consider the recovery a success if \(\Vert x_s-\bar{x}\Vert _\infty \le 10^{-2}\). For each T, Fig. 1 presents the percentages of successfully finding the sparse solutions for these algorithms.

From Fig. 1, we can see that the BBIHTA algorithm gives slightly better success rates than other algorithms. Figure 1 also shows that the success rate of the \(\mathrm{AIRL_1}\) algorithm is dependent on the parameters \(\varepsilon ^0\) and \(\delta ^0\). When \(\varepsilon ^0=10^{-1}\) and \(\delta ^0=10^{-4}\), it gives better success rates.

We then compare the computational efficiency of the BBIHTA algorithm against the IHTA algorithm and the \(\mathrm{AIRL_1^2}\) algorithm, i.e., \(\varepsilon ^0=10^{-1}\) and \(\delta ^0=10^{-4}\). In this experiment, we choose different T, m and n. For each (T, m, n), we construct 400 random pairs \((A, x_s)\), and we run these algorithms to generate an approximation. Table 1 lists average number of iterations and average CPU time. The results in this table show that, the BBIHTA algorithm takes fewer iterations and requires less CPU time than the IHTA algorithm and the \(\mathrm{AIRL^2_1}\) algorithm.

In previous experiments, BBIHTA, IHTA and \(\mathrm{AIRL_1}\) algorithms are used to recover the sparse signal by solving a sequence of \(L_2\)–\(L_{1/2}\) minimization problems and the penalty parameter \(\rho \) is adjusted by a continuous strategy. In the rest of this section, we compare three algorithms by solving the \(L_2\)–\(L_{1/2}\) minimization problem with fixed \(\rho \), i.e., \(\rho =3\times 10^{-2}\). The stopping condition for all the methods is \(\Vert \varPsi (x^k)\Vert _\infty <10^{-8}\) and the initial iterate \(x^0\) is a solution of the corresponding \(L_2\)–\(L_1\) minimization problem. In the experiment, we fix \(m=2^8\) and \(n=2^{10}\), and choose \(T=10, 20, 30, 40\). For each (T, m, n), we construct 400 random pairs \((A, x_s)\), and we run these algorithms to generate an approximation. Table 2 lists average number of iterations and average CPU time. The results show that the BBIHTA algorithm performs better than the other two algorithms.

6 Final Remarks

The \(L_{1/2}\) regularized problem arises in many important applications and is a class of non-Lipschitz, nonconvex optimization problems. This paper proposes a Barzilai–Borwein-like iterative half thresholding algorithm for the \(L_{1/2}\) regularized problem. We verify that any accumulation point of the sequence of iterates generated by the algorithm is a first-order stationary point of this problem under mild conditions and it is also a local minimizer when additional conditions are satisfied. Furthermore, we show that the worst-case iteration complexity for finding an \(\varepsilon \) scaled first-order stationary point is \(O(\varepsilon ^{-2})\). Preliminary numerical results show that the proposed algorithm is practically effective.

References

Barzilai, J., Borwein, J.: Two point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

Bian, W., Chen, X., Ye, Y.: Complexity analysis of interior point algorithms for non-Lipschitz and nonconvex minimization. Math. Program. 149, 301–327 (2015)

Candès, E., Wakin, M., Boyd, S.: Enhancing sparsity by reweighted \(L_1\) minimization. J. Fourier Anal. Appl. 14, 877–905 (2008)

Chen, X., Xu, F., Ye, Y.: Lower bound theory of nonzero entries in solutions of \(\ell _2\)-\(\ell _p\) minimization. SIAM J. Sci. Comput. 32, 2832–2852 (2010)

Chen, X., Zhou, W.: Convergence of the reweighted \(L_1\) minimization algorithm for \(L_2\)-\(L_p\) minimization. Comput. Optim. Appl. 59, 47–61 (2014)

Combettes, P.L., Wajs, V.R.: Signal recovery by proximal forward–backward splitting. SIAM J. Multiscale Model. Simul. 4, 1168–1200 (2005)

Dai, Y.H.: A new analysis on the Barzilai–Borwein gradient method. J. Oper. Res. Soc. China 1, 187–198 (2013)

Daubechies, I., De Friese, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57, 1413–1457 (2004)

Figueiredo, M., Nowak, R., Wright, S.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal. 1, 586–598 (2007)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Hager, W.W., Phan, D.T., Zhang, H.: Gradient-based methods for sparse recovery. SIAM J. Imaging Sci. 4, 146–165 (2011)

Hale, E., Yin, W., Zhang, Y.: Fixed-point continuation for \(\ell _1\)-minimization: methodology and convergence. SIAM J. Optim. 19, 1107–1130 (2008)

Hale, E., Yin, W., Zhang, Y.: Fixed-point continuation applied to compressed sensing: implementation and numerical experiments. J. Comput. Math. 28, 170–194 (2010)

Lai, M., Wang, J.: An unconstrained \(L_q\) minimization with \(0<q\le 1\) for sparse solution of under-determined linear systems. SIAM J. Optim. 21, 82–101 (2011)

Lai, M., Xu, Y., Yin, W.: Improved iteratively reweighted least squares for unconstrained smoothed \(L_q\) minimization. SIAM J. Numer. Anal. 51, 927–957 (2013)

Li, D.H., Wu, L., Sun, Z., Zhang, X.J.: A constrained optimization reformulation and a feasible descent direction method for \(L_{1/2}\) regularization. Comput. Optim. Appl. 59, 263–284 (2014)

Lu, Z.: Iterative reweighted minimization methods for \(L_p\) regularized unconstrained nonlinear programming. Math. Program. 147, 277–307 (2014)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Wright, S.J., Nowak, R., Figueiredo, M.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57, 2479–2493 (2009)

Wu, L., Sun, Z., Li, D.H.: Gradient based method for the \(L_2\)-\(L_{1/2}\) minimization and application to compressive sensing. Pac. J. Optim. 10, 401–414 (2014)

Xu, Z., Chang, X., Xu, F., Zhang, H.: \(L_{1/2}\) regularization: a thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 23, 1013–1027 (2012)

Xu, Z., Zhang, H., Wang, Y., Chang, X.: \(L_{1/2}\) regularizer. Sci. China Ser. F 52, 1–9 (2009)

Zeng, J., Lin, S., Wang, Y., Xu, Z.: \(L_{1/2}\) regularization: convergence of iterative half thresholding algorithm. IEEE Trans. Signal Process. 62, 2317–2329 (2014)

Acknowledgments

The authors would like to thank two anonymous referees for their valuable suggestions and comments.

Author information

Authors and Affiliations

Corresponding author

Additional information

The work was supported by the National Nature Science Foundation of P.R. China (No. 11501265, 11201197 and 11371154), the Nature Science Foundation of Jiangxi (No. 20132BAB211011), and the Foundation of Department of Education Jiangxi Province (No. GJJ13204).

Rights and permissions

About this article

Cite this article

Wu, L., Sun, Z. & Li, DH. A Barzilai–Borwein-Like Iterative Half Thresholding Algorithm for the \(L_{1/2}\) Regularized Problem. J Sci Comput 67, 581–601 (2016). https://doi.org/10.1007/s10915-015-0094-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-015-0094-4

Keywords

- \(L_{1/2}\) regularized problem

- Sparse optimization

- Prox-linear algorithms

- Barzilai–Borwein steplength

- Iterative half thresholding algorithm