Abstract

The present research introduces an optimum performance soft computing model for estimating pile group settlement by comparing deep learning models (multi-layer perceptron neural network, support vector machine, least square support vector machine, support vector regression, Takagi–Sugeno fuzzy model, radial basis function neural network, and feed-forward neural network) with hybrid learning models (relevance vector machine, RVM). Six kernel functions have been used to develop the RVM models. For the first time, single (denoted SRVM) and dual (denoted DRVM) kernel function-based RVM models, optimized by genetic algorithm and particle swarm optimization, have been employed for the reliability analysis of the settlement of a pile group in clay. For that purpose, a database has been collected from a published article. Sixteen performance metrics have been used to record the models' performance. Based on the performance comparison and score analysis, models MS3, MS9, MS17, MS23, and MS25 have been identified as the better-performing models. Furthermore, the regression error characteristic (REC) curve, uncertainty analysis, 10-fold cross-validation, and the Anderson–Darling test reveal that model MS23 is the best architectural model for the reliability analysis of pile group settlement. A comparison with published models shows that model MS23 outperforms them, with a performance index of 1.9997, an a20-index of 100, an index of agreement of 0.9971, and a scatter index of 0.0013. The compression index, void ratio, and density influence the pile group settlement prediction. Also, a problematic multicollinearity level (variance inflation factor > 10) significantly affects the performance and accuracy of the deep learning models.

1 Introduction

Piles are among the most important deep foundation elements; they transfer axial building loads to appropriate bearing strata. Unlike shallow foundations, pile foundations can carry axial loads through low-strength soil layers to competent strata. Pile foundations are widely used, often in preference to shallow foundations, because they provide high efficiency at optimum cost, even where the load-bearing soil layers are shallow (Smoltczyk 2003). In recent decades, the use of piles has increased because they reduce raft settlement without compromising the safety and performance of the foundation (Poulos 2001). One of the essential aspects of pile foundation design is assessing the load-settlement performance of a single pile or a pile group. Many parameters affect a pile's behaviour, including the physical and mechanical behaviour of the soil (nonlinear or linear), the pile properties, and the pile installation method (Berardi and Bovolenta 2005). Elastic settlement is a key component of the final settlement of piles in sandy soil (Murthy 2002). Vesic (1977) computed the immediate settlement of a pile using a semi-empirical method. For saturated clay soils, by contrast, elastic settlement is not the major part of the final settlement, which is dominated by consolidation settlement (Poulos and Davis 1980; Murthy 2002). Determining the final settlement of a single pile or a pile group is time-consuming. Therefore, researchers have used theoretical, experimental, and computational methods to assess pile settlement. The theoretical procedures are traditional, cumbersome, and less accurate, whereas the computational methods are more accurate and less time-consuming. The computational methods include techniques such as artificial neural networks (ANN), support vector machines (SVM), Gaussian process regression (GPR), decision trees (DT), and random forests (RF), spanning conventional machine learning, advanced machine learning, hybrid learning, and deep learning.

Ray et al. (2023) analyzed the settlement of shallow foundations on clayey soil using ANFIS and FN computational techniques, with void ratio, compression index, and unit weight as independent variables. The models were evaluated with several metrics, and the authors concluded that the FN model is more reliable than ANFIS in predicting shallow foundation settlement: it achieved an R2 of 0.9821, an RMSE of 0.0012, and a VAF of 98.44, a better fit than ANFIS. For axially loaded pile groups designed for sand strata, the defective pile failure scenario, pile spacing, and pile group configuration play a significant role (Alhashmi et al. 2023). Zheng et al. (2020) employed a MARS model to assess the liquefaction-induced settlement of shallow foundations, using the finite difference method to create an artificial database of ground motion, structure, and soil properties; the proposed model assessed settlement with a coefficient of determination of 0.884. Kumar and Samui (2020) employed the Ls-SVM, GMDH, and GPR soft computing approaches to analyze the reliability of pile group settlement in clay and concluded that all three approaches are highly capable of analyzing the settlement reliability of pile groups in clay. Armaghani et al. (2020) applied a neuro-swarm approach, i.e., a particle swarm-optimized neural network, to assess pile settlement, with the SPT N-value, Pu, Ls/Lt, Lp/D, and UCS as input variables. The optimized model assessed settlement with a coefficient of determination of 0.892 in the testing phase, higher than the conventional neural network model, and the authors concluded that the optimization algorithm improves the prediction capability of the neural network model.
In recent decades, artificial neural network (ANN) algorithms have increasingly been used to predict the settlement of single piles and pile groups because of their efficiency and accuracy (Teh et al. 1997; Alkroosh and Nikraz 2011a, 2011b; Tarawneh and Imam 2014). A recurrent neural network (RNN) model was used to assess the load-settlement behaviour of axially loaded driven steel piles. The model was developed and validated using 23 in situ, full-scale pile load tests and cone penetration test (CPT) datasets, and its accuracy was evaluated with the root mean square error (RMSE), which was reported as 74.5 (Shahin 2014). Kordjazi et al. (2014) employed a support vector machine (SVM) to predict pile settlement as the target variable using 108 collected datasets; the test RMSE of the SVM model was 318.85. Nevertheless, only a limited number of studies and algorithms have been applied to estimate the load-settlement behaviour of piles (Abu-Kiefa 1998; Pooya Nejad and Jaksa 2017). Table 1 presents the details of the models used in published research for predicting single pile or pile group settlement.

Many researchers have also predicted pile settlement using traditional methods. Afnan et al. (2023) presented a theoretical study of the vertical settlement and horizontal displacement of piles in clayey and silty soils using commercial finite element software. Li and Deng (2023) introduced a method to study the settlement behaviour of pile group foundations in linear viscoelastic soil, based on several parameters, including the Poisson ratio, load ratio, elastic modulus, slenderness ratio, pile spacing, and pile-pile interaction factors. Bao et al. (2023) reported that the stress-bearing ratio and axial stress of corner and side piles decrease as pile spacing increases, whereas the stress-bearing ratio of the center piles increases with pile spacing. Hakro et al. (2022) used two-dimensional finite element analysis to investigate the settlement and structural behaviour of foundations on different soils under various load combinations and geometries. In another study, Oteuil et al. (2022) developed an analytical and numerical framework for the axial and lateral capacity of bored piles based on conventional test and cone penetration test data. Voyagaki et al. (2022) assessed the settlement of axially loaded piles using the DINGO database, which contains results from over 500 test piles. To this end, the authors employed two analytical models, both of which predicted foundation settlement close to the actual values. Model 1 was based on strength design principles, and Model 2 was based on depth-dependent soil stiffness, an elastic-perfectly plastic curve, a bilinear force–displacement relationship, and soil yielding. Finally, the authors concluded that Models 1 and 2 predicted settlement at a factor of safety (FOS) of 2.5 without significant differences.
Settlement prediction has also been found to depend mainly on soil properties. Ponomaryov and Sychkina (2022) determined the impact of compaction around driven piles in clay and estimated pile settlement using a numerical method (modeling in Plaxis 2D) and an analytical method (based on Russian standards). The study covered clay and claystone zones, and both methods gave satisfactory settlement results for both zones. Zhang et al. (2021) estimated pile settlement by introducing a novel soil-pile interaction model based on Biot's poroelastic continuum equations and a soil-pile model, and concluded that the final settlement at pile failure is affected by Young's modulus. Gomes Filho and Moura (2021) proposed modifications to the t-z and q-z curves introduced by Bohn et al. (2017) to analyze the load-settlement ratios of pile groups. The method was applied to eight soil cases studied by Dai et al. (2012), and the authors concluded that, owing to soil heterogeneity, the predicted results agreed with the experimental data of the pile group. Chen et al. (2021a) conducted experimental research on the cyclic settlement of piles in saturated silt, monitoring pore water pressure, soil pressure on the pile surface, and pile settlement; they concluded that pile settlement develops as a function of the Pm, Pu, and Pcyc parameters. Bhartiya et al. (2021) studied the time-dependent settlement of piled raft foundations using 3D FEM with the critical state-based modified Cam-Clay (MCC) constitutive model. Lee et al. (2020) reported that internal frictional resistance is a significant parameter for understanding the behaviour of steel pipe piles. Voyagaki et al. (2019) predicted pile settlement using linear elastic, linear elastic–plastic, and power-law nonlinear soil models.
The authors concluded that the adopted theoretical approaches agree well with the actual database. Sychkina (2019) implemented Koltunov's creep kernel in a rheological deformation model to estimate the long-term settlement of piles. Li and Gong (2019) estimated the nonlinear settlement of pile groups in clay strata using an analytical approach based on the strength and stiffness of the soil, assuming that the soil behaves elastically under loading. The approach also considers the reinforcing effect of adjacent piles and pile-pile interaction, and the authors found that the predicted pile group settlement agrees well with the actual settlement. Cui et al. (2019) studied the long-term, time-dependent load-settlement behaviour of piles in clay. Crispin et al. (2019) evaluated the prediction error of the t-z curve method; the authors also developed a nonlinear model (for the pile shaft and base) and compared it with a linear elastic-perfectly plastic model based on linear elastic stiffness. In addition, several researchers have used the finite element method and numerical programs (including Defpig and Napra) to investigate pile settlement (Katzenbach et al. 2000; Balakumar and Ilamparuthi 2007; Baziar et al. 2009; Sheil 2017). Moreover, small-scale model tests and numerical and analytical approaches have been used to assess the settlement of single piles and pile groups in clayey and sandy soil layers (Balakumar et al. 2005; Yamashita et al. 2013; Saha et al. 2015; Lai et al. 2016; Kumar et al. 2016). Wong (2002) performed numerical analyses and parametric studies of the response of pile-supported pavements and embankments. Randolph (2003) addressed the estimation of pile capacity in clay and siliceous sands using analytical approaches. Mandolini et al. (2005) discussed the effect of the installation method on the bearing capacity and load-settlement response of a single pile.
Poulos (2006) estimated pile group settlements with a focus on pile-soil interaction. Furthermore, Bourgeois et al. (2012) analyzed the settlement of a vertically loaded piled raft using a multiphase model of soil-pile interaction. Masani and Vanza (2018) investigated the effect of vertical load on the lateral load behaviour of pile groups through laboratory experiments on aluminum pipe piles.

Gap Identification in the Literature Survey

The literature survey demonstrates that only a limited number of soft computing approaches have been employed to predict pile settlement. The quality and quantity of the database strongly influence the performance of soft computing models. Most researchers have employed models based on RNN, SVM, ANN, Ls-SVM, GMDH, and GPR to assess pile group settlement. Models based on the Takagi–Sugeno Fuzzy (TSFL), Radial Basis Function Neural Network (RBFNN), Feed-Forward Neural Network (FFNN), and Relevance Vector Machine (RVM) approaches have not been developed and employed for assessing pile group settlement. Also, hypothesis tests, such as ANOVA, Z, and chi-square tests, have not been carried out to select the research hypothesis. Furthermore, the impact of multicollinearity has not been investigated in the reliability analysis of pile group settlement, and the Anderson–Darling test has not been carried out to study the behaviour of the predicted pile group settlement.

Novelty of the Present Research

The present research develops, trains, tests, and analyzes the capabilities of deep and hybrid learning approaches. Considering the gaps identified in the literature survey, the present research makes the following novel contributions:

- The present research evaluates the capabilities of deep learning (multi-layer perceptron, least square support vector machine, support vector machine, support vector regression, Takagi–Sugeno fuzzy, radial basis function neural network, and feed-forward neural network) and hybrid learning (single and dual kernel function-based RVM models optimized by the genetic algorithm and particle swarm optimization) soft computing approaches in the reliability analysis of pile group settlement in clay.
- This study also demonstrates the impact of database multicollinearity on the performance, accuracy, and overfitting of the developed models.
- This work formulates hypothesis statements for the reliability analysis and performs ANOVA and Z tests to select the research hypothesis.
- This research analyzes the influence of the input variables (compression index, void ratio, and density) on the prediction of pile group settlement.
- Score analysis, uncertainty analysis, and the regression error characteristic curve are used to find the optimum performance soft computing model for the reliability analysis of pile group settlement. The Wilcoxon test has been performed to compare the actual and predicted settlements of the best architectural models in terms of confidence intervals.

2 Research Methodology

The present research has been performed to introduce the optimum performance model for the reliability analysis of the settlement of a pile group in clay. For this purpose, a database has been collected from the article by Kumar and Samui (2020). The database has been screened, missing data points have been removed, and the data have been divided into training and testing sets. The min–max normalization function has been used to normalize the data points. The database contains the compression index (Cc), void ratio, density, and pile group settlement. A multicollinearity analysis has been performed to check the multicollinearity level of the data points and to determine its impact on the soft computing models. Also, ANOVA and Z tests have been performed to identify the research hypothesis. In this research, the deep learning models MLPNN, SVM, LS-SVM, SVR, TSFL, RBFNN, and FFNN have been developed, trained, tested, and analyzed. In addition, hybrid learning models have been developed using the RVM approach. The relevance vector machine is an advanced, Bayesian counterpart of the support vector machine, and both are kernel function-based approaches. The Gaussian, Exponential, Linear, Laplacian, Sigmoid, and Polynomial kernel functions have been implemented in the RVM models. Furthermore, the single kernel function-based RVM models (denoted SRVM) have been optimized by both the GA and PSO algorithms. Thus, twelve SRVM models (six GA-optimized and six PSO-optimized) have been employed. The RMSE, MAE, R, MAPE, VAF, WMAPE, NS, PI, BF, NMBE, MBE, LMI, RSR, a20-index, IOA, and IOS performance metrics have been used to measure the performance of the employed models. Based on a performance comparison of the six GA-optimized SRVM models, one kernel function has been selected as the best and denoted K1.

Similarly, one kernel function has been identified as the best by comparing the six PSO-optimized SRVM models. Different kernel function combinations have then been used to develop the dual kernel function-based RVM models (denoted DRVM), which have also been optimized by both GA and PSO. Thus, ten DRVM models (five GA-optimized and five PSO-optimized) have been employed. The five GA-optimized and the five PSO-optimized DRVM models have been compared separately, and one GA-optimized DRVM model and one PSO-optimized DRVM model have been identified as the best architectural models. Thus, one best architectural model has been obtained from each of the five groups: deep learning, GA-optimized SRVM, PSO-optimized SRVM, GA-optimized DRVM, and PSO-optimized DRVM. Finally, the five best architectural models have been compared to identify the optimum performance model. In addition, the results have been analyzed using score analysis, the REC curve, cross-validation, the Anderson–Darling test, and uncertainty analysis. Moreover, the impact of multicollinearity on the overfitting of the best architectural model has been studied. Based on this analysis, the results have been discussed and the optimum performance model has been identified. Figure 1 illustrates the execution of the present research.
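The min–max normalization step described above can be sketched as follows. This is a minimal Python illustration, assuming each variable is rescaled independently to [0, 1]; the function names are hypothetical and the sample values only span the reported density range, they are not taken from the database.

```python
def min_max_normalize(values, x_min=None, x_max=None):
    """Linearly rescale values to [0, 1]: x_norm = (x - x_min) / (x_max - x_min).

    x_min and x_max default to the extremes of `values`; pass the training-set
    extremes explicitly to scale the testing data consistently.
    """
    if x_min is None:
        x_min = min(values)
    if x_max is None:
        x_max = max(values)
    span = x_max - x_min
    if span == 0:
        # A constant variable carries no information; map it to 0.
        return [0.0 for _ in values]
    return [(x - x_min) / span for x in values]


def denormalize(scaled, x_min, x_max):
    """Invert the scaling to return predictions in original units."""
    return [s * (x_max - x_min) + x_min for s in scaled]


# Illustrative values spanning the reported density range (14.8-19 kN/m3)
density = [14.8, 16.9, 19.0]
scaled = min_max_normalize(density)
restored = denormalize(scaled, 14.8, 19.0)
```

Keeping the inverse transform alongside the forward one matters because the models predict normalized settlement, which must be mapped back to physical units before comparison with measured values.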

3 Data Analysis and Computational Methods

This section presents the database source and analysis, followed by the computational methods adopted in this study, each of which is briefly described. Furthermore, the performance metrics, sensitivity analysis, multicollinearity analysis, and hypothesis testing are discussed in this section.

3.1 Data Analysis

A database of pile group settlement has been collected from the article by Kumar and Samui (2020), in which the authors used a group of nine piles embedded in normally consolidated clay with the following parameters: (i) pile length = 5 m, (ii) pile diameter = 0.5 m, (iii) hard stratum at 7 m, (iv) pile spacing = 0.5 m, (v) dispersion angle = 30°, and (vi) vertical load = 500 kN. The authors varied the compression index (Cc), void ratio (e), and density over the ranges 0.26–0.85 (obtained from Ibrahim et al. 2012), 0.5–1.0, and 14.8–19 kN/m³ (obtained from Das 2008), respectively, and generated eighty data points. The database has been divided into training and testing databases by randomly selecting 80% (64) and 20% (16) of the 80 data points, respectively. The frequency distribution of the database variables is shown in Fig. 2.
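The random 80/20 partition of the 80 data points can be sketched as follows. This is a minimal illustration, not the authors' procedure: the function name and the fixed seed are assumptions (the text does not report a seed), and record IDs stand in for the actual data rows.

```python
import random


def train_test_split(records, train_fraction=0.8, seed=42):
    """Randomly partition records into training and testing subsets."""
    rng = random.Random(seed)          # fixed seed so the split is reproducible
    shuffled = list(records)           # copy so the caller's data is untouched
    rng.shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


# 80 placeholder record IDs stand in for the 80 data points
train, test = train_test_split(range(80))
print(len(train), len(test))  # 64 16
```

Fixing the seed makes the partition reproducible, which is useful when several models (here, deep learning, SRVM, and DRVM variants) must be compared on exactly the same training and testing sets.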

Figure 2 shows the frequency distribution of the normalized data. The database consists of (i) 17, 15, 16, 13, and 19 compression index data points, (ii) 20, 17, 18, 14, and 11 void ratio data points, (iii) 18, 18, 19, 19, and 6 density data points, and (iv) 14, 12, 12, 14, and 30 settlement data points in the ranges 0 to 0.2, 0.2 to 0.4, 0.4 to 0.6, 0.6 to 0.8, and 0.8 to 1.0, respectively. Furthermore, the Pearson product-moment correlation coefficient has been used to determine the relationships among the Cc, e, density, and settlement variables. Correlation coefficients of ± 0.0 to ± 0.20, ± 0.21 to ± 0.40, ± 0.41 to ± 0.60, ± 0.61 to ± 0.80, and ± 0.81 to ± 1.0 indicate no, weak, moderate, strong, and very strong relationships, respectively, between pairs of variables (Hair et al. 2013; Khatti and Grover 2023a). Figure 3 shows the relationships between the variables in terms of the correlation coefficient.

Figure 3 illustrates that all variables are very strongly correlated with one another. The correlations of Cc with e (= 0.9832) and settlement (= 0.9846) indicate multicollinearity. The settlement is also multicollinear with Cc (= 0.9846) and density (= 0.9784). The descriptive statistics of the overall, training, and testing databases are given in Table 2.
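The Pearson correlation and its verbal classification can be sketched as below. This is a minimal illustration, assuming the bands run from no relationship (|r| ≤ 0.20) up to very strong (|r| ≥ 0.81); the function names are hypothetical, and values falling between two bands (e.g. 0.205) are assigned to the higher band.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def strength(r):
    """Map |r| to a verbal band (upper bounds 0.20, 0.40, 0.60, 0.80, 1.0)."""
    a = abs(r)
    for upper, label in [(0.20, "no"), (0.40, "weak"),
                         (0.60, "moderate"), (0.80, "strong")]:
        if a <= upper:
            return label
    return "very strong"


# Illustrative only: a perfectly linear pair and the reported Cc-e coefficient
print(strength(pearson_r([1, 2, 3, 4], [2, 4, 6, 8])), strength(0.9832))
```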

3.2 Computation Methods

Eight soft computing approaches have been used to develop models with different configurations in the present study. The theory of the adopted soft computing approaches is discussed in this section.

3.2.1 Multi-layer Perceptron Neural Network (MLPNN)

A multi-layer perceptron neural network (MLPNN) is an artificial neural network composed of artificial neurons, each of which combines its inputs into an output signal that can be passed on to other neurons. Each connection is assigned a weight that modifies the strength of its input. Activation functions such as the threshold, sigmoid, hyperbolic tangent, and ReLU functions are used in the network to convert the signals received at the input layer into the output signal at the output layer (Menhrotra et al. 1990).

The optimum network architecture adopted in this paper is 3-13-1: an input layer with three neurons, one for each input parameter (Cc, e, and density), one hidden layer with thirteen neurons, and an output layer with one neuron that generates the pile group settlement. The structure of the MLPNN is presented in Fig. 4.

Fig. 4 The structure of the ANN based on the determined database (Samadi et al. 2021a)
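A forward pass through the 3-13-1 architecture described above can be sketched as follows. This is a minimal illustration with untrained random weights; the sigmoid hidden activation, identity output activation, weight range, and function names are assumptions, and training (e.g. back-propagation) is omitted.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random weights (n_out rows of n_in values) and zero biases for one dense layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(inputs, weights, biases, activation):
    """Weighted sum of the inputs plus bias, passed through an activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    return z


rng = random.Random(0)
hidden = init_layer(3, 13, rng)   # 3 inputs -> 13 hidden neurons
output = init_layer(13, 1, rng)   # 13 hidden neurons -> 1 output neuron

x = [0.4, 0.6, 0.5]               # normalized Cc, e, density (illustrative)
h = dense(x, *hidden, sigmoid)
y = dense(h, *output, identity)
print(len(h), len(y))  # 13 1
```

A linear output neuron is a common choice for regression targets such as normalized settlement, since it does not restrict the range of the prediction.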

3.2.2 Support Vector Machine (SVM)

The support vector machine (SVM) is an established supervised machine learning algorithm for classification and prediction with small samples and nonlinear data; it constructs a hyperplane or a set of hyperplanes in a high- or infinite-dimensional space. The SVM algorithm can capture complicated nonlinear relationships between the target and input parameters, making it suitable for this analysis. The SVM aims to find the maximum possible margin between the classes (Cortes and Vapnik 1995). The SVM margin and its description are shown in Fig. 5.

Fig. 5 Maximum margin hyperplane (MMH) and margins of an SVM (Samadi et al. 2021a)

3.2.3 Least Square Support Vector Machine (Ls-SVM)

Least-squares support vector machines (Ls-SVM) are used for prediction and statistical modeling. The Ls-SVM is the least-squares version of the support vector machine (SVM), a set of related supervised learning methods that analyze data, recognize patterns, and are used for classification and regression analysis (Khatti and Grover 2023f). Least-squares SVM classifiers, a class of kernel-based learning methods, were proposed by Suykens and Vandewalle (1999). Three commonly used kernel functions are the linear, polynomial, and Gaussian kernels, which differ in the hyperplane decision boundaries they produce between data classes. Linear and polynomial kernels are less time-consuming but less accurate than the Gaussian kernel (Hongwei 2011), as presented in Fig. 6. Therefore, a Gaussian kernel-based Ls-SVM is used in this research.

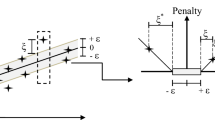
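Gaussian kernel Ls-SVM regression reduces to a single linear system in the bias b and the support values α, which the sketch below solves directly. It is a minimal pure-Python illustration of that standard dual system; the kernel convention exp(−‖x − z‖²/(2σ²)), the hyperparameter values γ = 10 and σ = 1, the function names, and the toy data are all assumptions, not the tuned settings of this study.

```python
import math


def gaussian_kernel(a, b, sigma):
    """Gaussian (RBF) kernel exp(-||a - b||^2 / (2 sigma^2))."""
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lssvm_fit(X, y, gamma, sigma):
    """Solve [[0, 1^T], [1, K + I/gamma]] [b; alpha] = [0; y] for Ls-SVM regression."""
    n = len(X)
    A = [[0.0] + [1.0] * n]                       # constraint row: sum(alpha) = 0
    for i in range(n):
        row = [1.0] + [gaussian_kernel(X[i], X[j], sigma) for j in range(n)]
        row[i + 1] += 1.0 / gamma                 # regularization on the diagonal
        A.append(row)
    solution = solve(A, [0.0] + list(y))
    return solution[0], solution[1:]              # bias b, support values alpha


def lssvm_predict(X_train, b, alpha, sigma, x):
    """y(x) = sum_k alpha_k K(x, x_k) + b."""
    return b + sum(a * gaussian_kernel(x, xk, sigma) for a, xk in zip(alpha, X_train))


# Illustrative 1-D data (y = x^2); not the pile database
X = [[0.0], [0.5], [1.0], [1.5], [2.0]]
y = [x[0] ** 2 for x in X]
b, alpha = lssvm_fit(X, y, gamma=10.0, sigma=1.0)
print(round(lssvm_predict(X, b, alpha, 1.0, [1.25]), 3))
```

Unlike the standard SVM, which solves a quadratic program, the least-squares formulation replaces inequality constraints with equalities, so training is one linear solve; the trade-off is that every training point receives a nonzero support value.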

3.2.4 Support Vector Regression (SVR)

Support vector regression (SVR) is a widely used supervised learning method for estimating continuous variables and is based on the same principle as the support vector machine (SVM). In SVR, the best-fit function is the hyperplane that contains the maximum number of data points within its margin (Smola and Scholkopf 2004).
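The margin idea in SVR is usually expressed through the ε-insensitive loss, under which deviations smaller than ε cost nothing. The sketch below shows that loss and the corresponding primal objective for a linear SVR; the linear kernel, the default values of C and ε, and the function names are illustrative assumptions.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Vapnik's epsilon-insensitive loss: deviations inside the tube cost nothing."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def linear_svr_objective(w, b, X, y, C=1.0, epsilon=0.1):
    """Primal objective 0.5*||w||^2 + C * sum of epsilon-insensitive losses."""
    flatness = 0.5 * sum(wi * wi for wi in w)
    losses = sum(
        epsilon_insensitive_loss(t, sum(wi * xi for wi, xi in zip(w, x)) + b, epsilon)
        for x, t in zip(X, y)
    )
    return flatness + C * losses


# Illustrative: the first point lies inside the tube, the second exceeds it by 0.4
print(linear_svr_objective([1.0], 0.0, [[1.0], [2.0]], [1.0, 2.5]))
```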

3.2.5 Takagi–Sugeno Fuzzy Model (TSFL)

Takagi and Sugeno (1985) introduced the Takagi–Sugeno fuzzy model as a systematic approach to generating fuzzy rules from a given input–output dataset. A typical TS fuzzy rule is defined as follows:

IF X is A AND Y is B, THEN Z = f(X, Y)

where A and B are fuzzy sets in the antecedent, while Z = f(X, Y) is a crisp function in the consequent. Usually, f(X, Y) is a polynomial in the input variables X and Y, but any function that appropriately describes the output within the fuzzy region specified by the rule's antecedent can serve as f(X, Y). In this research, the TSFL model is used because Sugeno fuzzy systems are well established, rely on linear weighted mathematical expressions, and are well suited to adaptive techniques (Takagi and Sugeno 1985), as illustrated in Fig. 7. The output is the weighted mean of the rule outputs:

\[ Z = \frac{\sum_{i=1}^{N} W_i Z_i}{\sum_{i=1}^{N} W_i} \]

where \(W_i\) is the firing strength of the ith rule, \(Z_i\) is the output of the ith rule, and N is the number of rules. Transforming a crisp quantity into a fuzzy set is known as fuzzification; this procedure expresses the crisp input values as linguistic variables.
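First-order Takagi–Sugeno inference with the weighted-mean output can be sketched as below. This is a minimal illustration: the Gaussian membership functions, the product AND operator for the firing strength, the two rules, and the function names are assumptions, since the text does not specify the membership functions or rule base used in the TSFL model.

```python
import math


def gauss_mf(x, centre, width):
    """Gaussian membership degree of a crisp input x (fuzzification)."""
    return math.exp(-0.5 * ((x - centre) / width) ** 2)


def sugeno_output(x, y, rules):
    """First-order Takagi-Sugeno inference for two inputs X and Y.

    Each rule is (A, B, (p, q, r)): A and B are (centre, width) pairs for the
    antecedent fuzzy sets, and the consequent is Z_i = p*x + q*y + r.
    """
    num = den = 0.0
    for (ca, wa), (cb, wb), (p, q, r) in rules:
        w_i = gauss_mf(x, ca, wa) * gauss_mf(y, cb, wb)  # firing strength (product AND)
        num += w_i * (p * x + q * y + r)                 # W_i * Z_i
        den += w_i
    return num / den                                     # weighted mean of rule outputs


# Two hypothetical rules: "low X and low Y -> Z = 0", "high X and high Y -> Z = 1"
rules = [((0.0, 1.0), (0.0, 1.0), (0.0, 0.0, 0.0)),
         ((1.0, 1.0), (1.0, 1.0), (0.0, 0.0, 1.0))]
z = sugeno_output(0.5, 0.5, rules)
```

At (0.5, 0.5) both rules fire equally, so the output is the plain average of their consequents.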

3.2.6 Radial Basis Function Neural Network (RBFNN)

The value of a radial basis function depends only on the distance between the input and a fixed point (centre). The function assigns a real value to each input, and for the Gaussian RBF this value cannot be negative. Broomhead and Lowe (1988) showed that RBFs are traditionally associated with radial functions in a single-hidden-layer network. The structure of this model, which consists of an input layer, a hidden layer, and an output layer, is shown in Fig. 8. To increase the linear separability of the feature vector, its dimension must be increased. Owing to its computational reliability, the SVM can use the radial basis function (RBF), i.e., the Gaussian kernel function, which is defined as follows (Apostolopoulou et al. 2020):

Fig. 8 A view of the radial basis function structure (Samadi and Farrokh 2021b)

In this function, W is the margin between the groups, which the RBF algorithm attempts to maximize.
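The three-layer RBF network described above can be sketched as a forward pass. This is a minimal illustration, assuming Gaussian hidden units with a shared width and a single linear output neuron; the centres, width, weights, and function names are hypothetical, and fitting is omitted (once centres and widths are fixed, the output weights are usually obtained by linear least squares).

```python
import math


def rbf_activation(x, centre, width):
    """Gaussian radial basis value; depends only on ||x - centre|| and is never negative."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, centre))
    return math.exp(-d2 / (2.0 * width ** 2))


def rbf_network(x, centres, width, weights, bias=0.0):
    """Input layer -> Gaussian hidden layer -> single linear output neuron."""
    hidden = [rbf_activation(x, c, width) for c in centres]
    output = bias + sum(w * h for w, h in zip(weights, hidden))
    return output, hidden


# Hypothetical centres and weights for three normalized inputs (Cc, e, density)
centres = [[0.2, 0.3, 0.4], [5.0, 5.0, 5.0]]
out, hidden = rbf_network([0.2, 0.3, 0.4], centres, width=0.5, weights=[2.0, -1.0])
```

The input coincides with the first centre, so that hidden unit outputs exactly 1, while the distant second centre contributes almost nothing.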

3.2.7 Feed-Forward Neural Network (FFNN)

A feed-forward neural network (FFNN) is a neural network in which information moves in only one direction (forward), without any loops in the network structure. Signals pass from the input nodes through the hidden layer to the output nodes, which estimate the output (Andreas 1994).

3.2.8 Relevance Vector Machine (RVM)

The RVM is a machine learning method that employs Bayesian inference to obtain parsimonious solutions for probabilistic classification and regression problems. The RVM has a functional form identical to that of the support vector machine but provides probabilistic outputs (Tipping 2001). In fact, it is equivalent to a Gaussian process model with the covariance function:

\[ k(x, x') = \sum_{m=1}^{N} \frac{1}{\alpha_m}\, \varphi(x, j_m)\, \varphi(x', j_m) \]

where \(\varphi\) is the kernel function (Gaussian by default), \(\alpha_m\) are the precisions (inverse variances) of the prior on the weight vector \(\omega \sim N\left(0, A^{-1}\right)\) with \(A = \mathrm{diag}(\alpha_1,\dots,\alpha_N)\), and \({j}_{1},\dots,{j}_{N}\) are the input vectors of the training dataset (Candela 2004). The Gaussian, Linear, Laplacian, Polynomial, Sigmoid, and Exponential kernel functions are used in the RVM models (Khatti and Grover 2023g). The mathematical formulations of the kernel functions are as follows:

Linear

Polynomial Kernel

Gaussian Kernel

Exponential Kernel

Laplace RBF Kernel

where \({x}_{i},{x}_{j}\) are two input vectors, c is a constant, m is the gradient (slope) of a line, d is the degree, D is the scale factor, l is the length-scale hyperparameter, and \(\sigma\) is the standard deviation. Compared with the SVM, the Bayesian formulation of the RVM avoids the set of free parameters of the SVM, which usually require cross-validation-based tuning. However, because RVMs use an expectation maximization (EM)-like learning strategy, they are susceptible to local minima. In contrast, SVMs are usually trained with sequential minimal optimization (SMO)-based methods, which are guaranteed to find the global optimum of the convex SVM problem.
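The kernel equations themselves are not reproduced in this text, so the sketch below uses common textbook forms of the six kernels, mapped onto the symbols listed above (m, c, d, D, l, σ); the exact parameterization used in this study is an assumption. It also evaluates the RVM covariance k(x, x′) = Σ φ(x, j_m) φ(x′, j_m)/α_m, showing how a very large α_m effectively prunes a basis function. Function names and default values are hypothetical.

```python
import math


def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def _dist(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def linear(a, b, m=1.0, c=0.0):
    return m * _dot(a, b) + c


def polynomial(a, b, c=1.0, d=2):
    return (_dot(a, b) + c) ** d


def gaussian(a, b, length_scale=1.0):
    return math.exp(-_dist(a, b) ** 2 / (2.0 * length_scale ** 2))


def exponential(a, b, sigma=1.0):
    return math.exp(-_dist(a, b) / (2.0 * sigma ** 2))


def laplacian(a, b, sigma=1.0):
    return math.exp(-_dist(a, b) / sigma)


def sigmoid(a, b, D=1.0, c=0.0):
    # Not positive semi-definite for all D and c; use with care.
    return math.tanh(D * _dot(a, b) + c)


def rvm_covariance(x, x_prime, centres, alphas, kernel=gaussian):
    """k(x, x') = sum_m phi(x, j_m) phi(x', j_m) / alpha_m (Gaussian-process view)."""
    return sum(kernel(x, c) * kernel(x_prime, c) / a for c, a in zip(centres, alphas))
```

In the RVM, most α_m grow very large during training, so the corresponding terms vanish from the covariance; the few surviving training inputs are the relevance vectors.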

Several researchers have solved complex geotechnical engineering problems using the RVM approach (Khatti and Grover 2021; Mittal et al. 2021; Chen et al. 2021; Fattahi and Hasanipanah 2021; Li et al. 2021; Yang et al. 2022; Raja et al. 2022). These studies demonstrate the efficiency of the RVM approach in solving geotechnical problems. Therefore, relevance vector machine models have been developed in this study using single (SRVM) and dual (parallel, denoted DRVM) kernel functions, implementing the Gaussian, Exponential, Linear, Laplacian, Sigmoid, and Polynomial kernels. The hyperparameters of the developed RVM models are given in Table 3.

Thus, six single kernel-based SRVM models (Gaussian, Exponential, Linear, Laplacian, Sigmoid, and Polynomial) have been developed and optimized with each of the GA and PSO algorithms.
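How PSO can tune a single kernel hyperparameter is sketched below. This is a minimal one-dimensional illustration, not the optimizer configuration of this study: the swarm size, iteration count, inertia weight (0.7), acceleration coefficients (1.5), search range, and function names are assumptions, and the objective is a hypothetical stand-in for a validation error as a function of kernel width. The GA variant is not shown.

```python
import random


def pso_minimize(objective, lower, upper, n_particles=20, n_iter=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal particle swarm optimisation over a 1-D box [lower, upper]."""
    rng = random.Random(seed)
    pos = [rng.uniform(lower, upper) for _ in range(n_particles)]
    vel = [0.0] * n_particles
    best_pos = pos[:]                                  # personal best positions
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g], best_val[g]            # global best
    for _ in range(n_iter):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            # inertia + pull towards personal best + pull towards global best
            vel[i] = (w * vel[i] + c1 * r1 * (best_pos[i] - pos[i])
                      + c2 * r2 * (g_pos - pos[i]))
            pos[i] = min(max(pos[i] + vel[i], lower), upper)  # clamp to bounds
            val = objective(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i], val
                if val < g_val:
                    g_pos, g_val = pos[i], val
    return g_pos, g_val


def validation_error(width):
    """Hypothetical stand-in for validation RMSE versus kernel width."""
    return (width - 3.0) ** 2


best_width, best_err = pso_minimize(validation_error, 0.01, 10.0)
```

In a real tuning loop, the objective would train an SRVM with the candidate width and return its error on held-out data, so each objective call is far more expensive than here.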

The performances of the GA-optimized and PSO-optimized single kernel-based RVM models have been compared separately to select the primary kernel (kernel 1) for the dual (parallel) kernel-based RVM models. Since six kernel functions have been used in this research, the DRVM models combine the primary kernel with each of the remaining five kernels (kernels 2, 3, 4, 5, and 6). For example, the GA-optimized Gaussian kernel-based SRVM model performed better than the other GA-optimized SRVM models; the kernel combinations for the GA-optimized DRVM models are therefore Gaussian + Linear, Gaussian + Laplacian, Gaussian + Polynomial, Gaussian + Sigmoid, and Gaussian + Exponential. The same procedure has been used to develop the PSO-optimized dual (parallel) kernel-based DRVM models.
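One way to realize a dual (parallel) kernel is a weighted sum of two base kernels, as sketched below for the Gaussian + Laplacian pair. Interpreting "parallel" as a convex combination, the mixing weight of 0.6, the kernel widths, and the function names are assumptions; the text does not state how the two kernels are combined. A convex combination of two positive semi-definite kernels is itself positive semi-definite, so the result remains a valid kernel.

```python
import math


def gaussian(a, b, length_scale=1.0):
    return math.exp(-sum((p - q) ** 2 for p, q in zip(a, b)) / (2.0 * length_scale ** 2))


def laplacian(a, b, sigma=1.0):
    return math.exp(-math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b))) / sigma)


def dual_kernel(k1, k2, weight=0.5):
    """Return a 'parallel' kernel: weight * k1 + (1 - weight) * k2."""
    def k(a, b):
        return weight * k1(a, b) + (1.0 - weight) * k2(a, b)
    return k


def gram(kernel, X):
    """Kernel (Gram) matrix of a list of input vectors."""
    return [[kernel(a, b) for b in X] for a in X]


k12 = dual_kernel(gaussian, laplacian, weight=0.6)   # e.g. Gaussian + Laplacian
X = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.9, 0.8, 0.7]]
G = gram(k12, X)
```

The mixing weight could itself be treated as an extra hyperparameter for the GA or PSO search.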

3.3 Performance Metrics

Statistical parameters used to evaluate the performance of soft computing models are called performance metrics; they include both linear and nonlinear measures. In this study, sixteen performance metrics have been used to measure the performance of the deep and hybrid learning models. The mathematical expressions of the implemented performance metrics are as follows (Kumar and Samui 2020; Asteris et al. 2021a, 2021b; Khatti and Grover 2023b, 2023c):

where α and \(\omega\) are the ith actual and predicted values, n is the total number of data points, β is the mean of the actual values, \(\overline{\omega }\) is the mean of the predicted values, \(k\) is the number of independent variables, m20 is the number of samples for which the ratio of the experimental to the predicted value lies between 0.8 and 1.2, and H is the total number of data samples.

The a20-index has the advantage of a direct engineering meaning: it gives the percentage of samples whose predicted values deviate by no more than ± 20% from the laboratory values. The index of agreement (Eq. 23) is bounded by − 1.0 and 1.0 (Willmott et al. 2012). Moreover, a lower value of the scatter index (Eq. 20) indicates better prediction accuracy (Mentaschi et al. 2013). An R-squared value higher than 0.95 (R = 0.9747) demonstrates that the developed model is highly reliable and accurate. Also, values of R greater than 0.8 (R2 = 0.64), between 0.2 (R2 = 0.04) and 0.8 (R2 = 0.64), and less than 0.2 (R2 = 0.04) indicate strong, good, and weak correlation, respectively, between a pair of data (Smith 1986). A perfect predictive model has performance indicator values equal to the ideal values given in Table 4.
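A subset of the metrics discussed above can be computed as in the sketch below. The paper's own equations are not reproduced in this text, so these are common definitions and are assumptions: RMSE, MAE, Nash–Sutcliffe efficiency (NS), variance accounted for (VAF, %), the a20-index (percentage of samples with experimental/predicted ratio in [0.8, 1.2]), and the scatter index taken as RMSE divided by the mean of the actual values. The function name is hypothetical.

```python
import math


def regression_metrics(actual, predicted):
    """A subset of common performance metrics (definitions assumed, see lead-in)."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mean_a = sum(actual) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    ns = 1.0 - ss_res / ss_tot                      # Nash-Sutcliffe efficiency
    mean_e = sum(errors) / n
    var_e = sum((e - mean_e) ** 2 for e in errors) / n
    vaf = (1.0 - var_e / (ss_tot / n)) * 100.0      # variance accounted for (%)
    m20 = sum(1 for a, p in zip(actual, predicted)
              if p != 0 and 0.8 <= a / p <= 1.2)    # samples within +/-20 %
    a20 = 100.0 * m20 / n                           # a20-index (%)
    si = rmse / mean_a                              # scatter index
    return {"RMSE": rmse, "MAE": mae, "NS": ns, "VAF": vaf, "a20": a20, "SI": si}


# Illustrative values: two of the four ratios fall inside [0.8, 1.2]
print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 2.6, 2.7, 5.8])["a20"])
```

A perfect model returns RMSE = 0, NS = 1, VAF = 100, a20 = 100, and SI = 0, matching the idea of ideal values per metric.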

3.4 Sensitivity Analysis

A sensitivity analysis has been performed to determine the input parameters that most influence the prediction of pile group settlement. The cosine amplitude method (CAM) is utilized in this study to compare the strength of the relationship between each input parameter and the pile group settlement (Ardakani and Kordnaeij 2019). It helps determine the degree of correlation between the input and output dimensions. In CAM, the data array X (n data samples in a common data space) can be expressed as (Hasanzadehshooiili et al. 2012):

Each predictor \({x}_{i}\) of the data array X in Eq. 28 is a vector of length m, defined as:

Eq. 29 then estimates the strength of the relationship between a predictor (\({x}_{i}\)) and the output variable (\({x}_{j}\)) (Ghorbani et al. 2020).

The strength between the predictors and the output ranges from 0 to 1: a CAM value near 1 indicates a strong relationship between the data series, and a value of zero indicates no relationship. The sensitivity analysis has been performed in this research for the 50%, 60%, 70%, 80%, 90%, and 100% training databases. Figure 9 illustrates the strength values for the complete database used in this study.
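In its usual form, the CAM strength between predictor \({x}_{i}\) and output \({x}_{j}\) is \(r_{ij} = \sum_{k=1}^{m} x_{ik}x_{jk} / \sqrt{\sum_{k=1}^{m} x_{ik}^{2} \sum_{k=1}^{m} x_{jk}^{2}}\). A minimal NumPy sketch, assuming that standard form and one column of X per input variable:

```python
import numpy as np


def cam_strength(x_i, x_j):
    """Cosine amplitude strength r_ij between two data vectors of equal length m."""
    x_i = np.asarray(x_i, dtype=float)
    x_j = np.asarray(x_j, dtype=float)
    return float(np.sum(x_i * x_j) / np.sqrt(np.sum(x_i**2) * np.sum(x_j**2)))


def cam_sensitivity(X, y, names):
    """CAM strength of each input column of X with respect to the output y."""
    X = np.asarray(X, dtype=float)
    return {name: cam_strength(X[:, col], y) for col, name in enumerate(names)}
```

Because CAM works on raw (uncentred) values, strictly positive variables always give values above zero, so CAM values are best compared with one another rather than read as correlation coefficients.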

Figure 9 shows that all input variables strongly influence pile group settlement. Soil density (CAM = 0.9991) has the strongest influence on the prediction of pile group settlement, followed by the compression index (CAM = 0.9926) and void ratio (CAM = 0.9921).

3.5 Multicollinearity Analysis

A relationship among predictor variables that makes them correlated is called multicollinearity. Multicollinear data are difficult to analyze because the variables are not independent (Khatti and Grover 2023a). The multicollinearity of a database can be assessed with the Pearson product-moment correlation coefficient or the variance inflation factor. In this research, the variance inflation factor (VIF) method has been adopted to determine the multicollinearity of the database, and the VIF results are presented in Table 5.

Table 5 shows that the compression index, void ratio, and density have VIF values of 31.65, 134.89, and 132.66, respectively. All input variables have VIF values greater than 10, indicating a problematic level of multicollinearity (Khatti and Grover 2023a).
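The VIF of predictor j is VIF_j = 1/(1 − R_j²), where R_j² is the coefficient of determination obtained by regressing that predictor on all the other predictors. A minimal NumPy sketch of this standard definition; the column layout (for example, Cc, e, and density as three columns) is an assumption for illustration.

```python
import numpy as np


def vif(X):
    """Variance inflation factor of each column of X (n samples x k predictors)."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    factors = np.empty(k)
    for j in range(k):
        target = X[:, j]
        # Regress predictor j on an intercept plus all the other predictors.
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        factors[j] = 1.0 / (1.0 - r2)
    return factors
```

Independent predictors give VIF values close to 1, whereas a predictor that is almost a linear combination of the others gives a VIF far above the threshold of 10.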

3.6 Hypothesis Testing

Hypothesis testing is used to determine whether the data support a research hypothesis. Several parametric and nonparametric statistical tests are available for this purpose; in this research, the parametric ANOVA and Z tests have been performed. The research hypotheses (HR) of the present study are as follows:

- Soil compression index, void ratio, and density are essential for assessing pile group settlement.
- Soil compression index, void ratio, and density are highly related.
- Multicollinearity does not affect the performance and accuracy of deep and hybrid learning soft computing models.

3.6.1 ANOVA Analysis

Analysis of variance (ANOVA) is a parametric statistical test used here to evaluate the research hypothesis. In the present research, ANOVA has been performed using the Data Analysis tool of Microsoft Excel 2021, and the results are given in Table 6.

Table 6 shows that the p-values for Cc, e, and density are less than the significance level (p < 0.05). Also, the F statistic is higher than the F critical value (F crit) for each input variable. Therefore, the ANOVA rejects the null hypothesis (H0) of the present research.
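To show what the F statistic in Table 6 measures, the sketch below computes a single-factor ANOVA in plain Python. It assumes each group is one column of values; how the study arranged its columns in Excel is not stated here. The F critical value and p-value additionally need the F distribution (e.g. Excel's F.INV.RT and F.DIST.RT functions), which this sketch leaves out.

```python
def one_way_anova(*groups):
    """Single-factor ANOVA: sums of squares, degrees of freedom, and the F statistic."""
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation between group means and within each group.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)

    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "SS_between": ss_between, "df_between": df_between,
        "SS_within": ss_within, "df_within": df_within,
        "F": ms_between / ms_within,
    }
```

For instance, the groups [1, 2, 3], [4, 5, 6], and [7, 8, 9] give SS_between = 54, SS_within = 6, and F = 27.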

3.6.2 Z-Test

The Z-test is another parametric statistical test used to evaluate the hypothesis. The Z-test has been performed for the complete database used in this study, and Table 7 presents the results. It can be seen that (i) the z statistic is higher than both the one-tail and two-tail critical values, (ii) the one-tail critical z is less than the two-tail critical z, and (iii) the p-value is less than the significance level. Therefore, the Z-test rejects the null hypothesis and supports the research hypothesis of this study.
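A sketch of a two-sample z-test for means, in the form used by Excel's "z-Test: Two Sample for Means" tool, which takes the variances of the two samples as known inputs. Which pairs of variables were compared in the study is not stated here, so the inputs below are placeholders. Only the Python standard library is used.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_test_two_sample(x, y, var_x, var_y, alpha=0.05):
    """Two-sample z-test for means with known variances var_x and var_y."""
    z = (mean(x) - mean(y)) / sqrt(var_x / len(x) + var_y / len(y))
    std_normal = NormalDist()
    p_one_tail = 1.0 - std_normal.cdf(abs(z))
    return {
        "z": z,
        "p_one_tail": p_one_tail,
        "z_crit_one_tail": std_normal.inv_cdf(1.0 - alpha),
        "p_two_tail": 2.0 * p_one_tail,
        "z_crit_two_tail": std_normal.inv_cdf(1.0 - alpha / 2.0),
    }
```

At α = 0.05 the critical values are about 1.645 (one tail) and 1.960 (two tail), which is why observation (ii) above always holds.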

4 Results and Discussion

4.1 Simulation of Soft Computing Models

To carry out the present research, models based on MLPNN, SVM, Ls-SVM, SVR, TSFL, RBF, FFNN, and RVM have been developed, trained, tested, and analyzed for reliability analysis of pile group settlement. Both the GA and PSO algorithms have been used to develop and optimize the single and dual kernel function-based RVM models. Sixteen performance metrics have been used to measure the training and testing performance of the employed models, and the results are reported in the supplementary materials (SPM). The results of the training and testing phases are discussed and analyzed below.
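The GA and PSO settings are not described in this section. As a hedged illustration of the PSO side only, the sketch below is a minimal global-best PSO. The inertia weight, acceleration coefficients, and swarm size are assumed values, and using it to minimize, for example, a validation RMSE over RVM kernel hyperparameters is an assumption about how it could be applied, not the study's configuration.

```python
import numpy as np


def pso_minimize(objective, lower, upper, n_particles=30, n_iter=200,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best particle swarm optimization within box bounds."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    x = rng.uniform(lower, upper, size=(n_particles, lower.size))
    v = np.zeros_like(x)
    pbest = x.copy()
    pbest_f = np.array([objective(p) for p in x])
    best = int(np.argmin(pbest_f))
    gbest, gbest_f = pbest[best].copy(), float(pbest_f[best])

    for _ in range(n_iter):
        r1 = rng.random(x.shape)
        r2 = rng.random(x.shape)
        # Velocity: inertia + pull toward personal best + pull toward global best.
        v = inertia * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, lower, upper)
        f = np.array([objective(p) for p in x])
        improved = f < pbest_f
        pbest[improved] = x[improved]
        pbest_f[improved] = f[improved]
        best = int(np.argmin(pbest_f))
        if pbest_f[best] < gbest_f:
            gbest, gbest_f = pbest[best].copy(), float(pbest_f[best])
    return gbest, gbest_f
```

On a simple test function such as the sphere function Σp², the swarm converges to the origin; in a model-tuning setting, `objective` would wrap a (hypothetical) routine that trains a model with the candidate hyperparameters and returns its validation error.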

4.1.1 Deep Learning Models

The deep learning models have been developed, trained, and tested using MATLAB. Table A (refer SPM) presents the training and testing performance of the deep learning models. The performance comparison of the MLPNN, RBFNN, and FFNN models demonstrates that the FFNN model MS6 has attained over 99% accuracy in the training (R = 0.9971) and testing (R = 0.9973) phases. Model MS6 has also outperformed the RBFNN and MLPNN models with the least prediction error in the training (RMSE = 0.0030 m, MAE = 0.0022 m, MAPE = 1.1563%, WMAPE = 0.0110, NMBE = 0.0000) and testing (RMSE = 0.0037 m, MAE = 0.0032 m, MAPE = 1.6706%, WMAPE = 0.0156, NMBE = 0.0001) phases. The a20, IOA, and IOS values demonstrate the superiority of the FFNN model MS7 over the RBFNN and MLPNN models. On the other hand, the performance comparison of the SVM, SVR, and Ls-SVM models reveals that the Ls-SVM model MS3 has predicted pile group settlement with RMSE = 0.0016 m, MAE = 0.0015 m, R = 0.9995, and MAPE = 0.7644%, outperforming the SVM and SVR models. The TSFL model MS5 has predicted pile group settlement with an RMSE of 0.0080 m, an MAE of 0.0079 m, and an R of 0.9881.

Furthermore, the performance comparison of models MS3, MS5, and MS7 reveals that the least square support vector machine model MS3 has outperformed the other deep learning models, with training (RMSE = 0.0039 m, MAE = 0.0027 m, R = 0.9952, MAPE = 1.4675%, VAF = 98.98, WMAPE = 0.0134, NS = 0.9895, PI = 1.9763, BF = 1.0032, NMBE = 0.0001, MBE = 0.0007, LMI = 0.0814, RSR = 0.1027, a20 = 100.00, IOA = 0.9593, and IOS = 0.0195) and testing (RMSE = 0.0016 m, MAE = 0.0015 m, R = 0.9995, MAPE = 0.7644%, VAF = 99.90, WMAPE = 0.0072, NS = 0.9987, PI = 1.9964, BF = 0.9971, NMBE = 0.0000, MBE = -0.0006, LMI = 0.0378, RSR = 0.0354, a20 = 100.00, IOA = 0.9811, and IOS = 0.0075) performance. Figure 10a depicts the relationship between the actual and predicted settlement for the Ls-SVM model MS3. The following observations have been drawn from the performance comparison of the deep learning models.

- The feed-forward neural network has performed better than the RBFNN and MLPNN models because the problem is complex and requires more neurons, and the computation proceeds in one direction only.
- The Ls-SVM model MS3 has attained higher performance because of its sum-of-squared-errors cost function.
- The Takagi–Sugeno fuzzy logic model did not perform as well because proper membership functions and fuzzy rules could not be obtained from the numerical data (Hong and Lee 1996).
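The Ls-SVM observation can be made concrete: with a squared-error cost and equality constraints, Ls-SVM training reduces to a single linear system instead of a quadratic program. A minimal NumPy sketch with a Gaussian kernel; the kernel width `sigma` and the regularization `gamma` are placeholder values, not those of model MS3.

```python
import numpy as np


def gaussian_kernel(A, B, sigma):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    d2 = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-d2 / (2.0 * sigma**2))


def lssvm_fit(X, y, gamma=1e3, sigma=0.5):
    """Solve [[0, 1^T], [1, K + I/gamma]] [b; alpha] = [0; y]."""
    n = len(y)
    K = gaussian_kernel(X, X, sigma)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = 1.0
    A[1:, 0] = 1.0
    A[1:, 1:] = K + np.eye(n) / gamma
    sol = np.linalg.solve(A, np.concatenate([[0.0], y]))
    return sol[0], sol[1:]  # bias b and support values alpha


def lssvm_predict(X_train, b, alpha, X_new, sigma=0.5):
    """Ls-SVM regression output for new inputs."""
    return gaussian_kernel(X_new, X_train, sigma) @ alpha + b
```

In this formulation the training residual of each sample equals alpha_i / gamma, so a larger `gamma` fits the training data more closely at the cost of less regularization.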

4.1.2 Hybrid Learning Models

For the hybrid learning models, the relevance vector machine (RVM) approach has been selected in this research. The Gaussian, exponential, linear, Laplacian, sigmoid, and polynomial kernel functions have been implemented to develop 22 RVM models (6 GA-optimized SRVM, 6 PSO-optimized SRVM, 5 GA-optimized DRVM, and 5 PSO-optimized DRVM). The training and testing performance of the RVM models is summarized in Table A (refer SPM).

Table A (refer SPM) demonstrates that the GA-optimized exponential kernel function-based SRVM model MS9 has attained high performance in both phases (training R = 1.0000, testing R = 1.0000). Model MS9 has predicted the pile group settlement with the least residuals in training (RMSE = 0.0002 m, MAE = 0.0002 m) and testing (RMSE = 0.0003 m, MAE = 0.0002 m) phase. The performance comparison of PSO-optimized SRVM shows that the PSO-optimized laplacian kernel function-based SRVM model has performed better than other PSO-optimized SRVM models. The PSO-optimized model MS17 has predicted pile group settlement with the RMSE of 0.0004 m, MAE of 0.0003 m, and R of 1.0000 in the testing phase.

Models MS9 and MS17, based on the exponential and Laplacian kernel functions, respectively, have attained the highest testing performance among the GA- and PSO-optimized SRVM models. Therefore, five GA-optimized DRVM models have been developed using the kernel combinations Exponential + Gaussian, Exponential + Linear, Exponential + Laplacian, Exponential + Sigmoid, and Exponential + Polynomial. Similarly, five PSO-optimized DRVM models have been developed using the Laplacian + Gaussian, Laplacian + Exponential, Laplacian + Linear, Laplacian + Sigmoid, and Laplacian + Polynomial combinations of kernel functions.

Table A (refer SPM) demonstrates that the GA-optimized exponential + sigmoid dual kernel function model MS23 has achieved higher training (R = 1.0000) and testing (R = 1.0000) performance than other GA-optimized DRVM models. Also, it has been observed that model MS23 has predicted the pile group settlement with the least prediction error in the testing phase, i.e., RMSE = 0.0003 m, MAE = 0.0002 m. The performance comparison of PSO-optimized DRVM models reveals that the PSO-optimized Laplacian + Gaussian kernel function-based DRVM has outperformed the other PSO-optimized DRVM models with R of 1.0000, RMSE of 0.0003 m, and MAE of 0.0003 m, close to the ideal values.

Based on the performance comparison, the Ls-SVM model MS3, GA-optimized SRVM model MS9, PSO-optimized SRVM model MS17, GA-optimized DRVM model MS23, and PSO-optimized DRVM model MS25 have attained high performance (R > 0.95) in the testing phase and have been recognized as the better-performing models. Relationship plots with error histograms have been drawn for models MS9, MS17, MS23, and MS25, as shown in Fig. 10b–e.

The following observations have been drawn from the performance comparison of the GA-optimized SRVM, PSO-optimized SRVM, GA-optimized DRVM, and PSO-optimized DRVM models.

- The GA-optimized exponential kernel function-based SRVM model MS9 has performed better than the other GA-optimized SRVM models because of the kernel's simplicity and because it decays the prediction error much more quickly.
- The PSO-optimized Laplacian kernel function-based SRVM model MS17 has attained higher performance than the other PSO-optimized SRVM models because it decays the error faster.
- The performance comparison of models MS9 and MS17 shows that the GA-optimized model MS9 has predicted pile group settlement more accurately than the PSO-optimized model MS17. Hence, the genetic algorithm is the more suitable of the two algorithms for single kernel function-based relevance vector machine models.
- Model MS23 combines the exponential and sigmoid kernel functions and is optimized by the genetic algorithm. Model MS23 has performed better than the other GA-optimized DRVM models because the sigmoid kernel makes the model behave like a two-layer perceptron neural network.
- The PSO-optimized Laplacian + Gaussian kernel function-based DRVM model MS25 has outperformed the other PSO-optimized DRVM models in this study. The combination of the two kernels makes model MS25 robust: the Gaussian kernel performs well when no prior information is available, and the Laplacian kernel decays the error faster.
- The performance comparison of models MS23 and MS25 shows that the genetic algorithm is slightly better than the particle swarm optimization algorithm in predicting pile group settlement.

4.2 Analysis of Results

In this section, the performance results are analyzed through different tests and analyses. For this purpose, the score analysis, Anderson–Darling test, uncertainty analysis, Wilcoxon test, overfitting analysis, etc., have been performed and discussed.

4.2.1 Score Analysis

The score analysis compares the effectiveness of the best architectural models through statistical analysis. For each performance indicator, the model with the optimal value is given a score of n (in this study, n = 29, the number of best architectural soft computing models considered in the analysis). Higher scores indicate better training and testing performance, and lower scores indicate poorer performance (Khatti and Grover 2023e). The performance indicator scores are then added within each of the training and testing phases, and a model's overall score is computed by adding its training and testing scores. The results of the score analysis are presented in Table B (refer SPM). Table B demonstrates that model MS23 has gained scores of 417 and 409 in the training and testing phases, respectively, followed by models MS9, MS25, and MS17. Model MS3 has attained the lowest score in the training (= 108) and testing (= 205) phases. The training and testing scores of the better-performing models are compared graphically in Fig. 11 (a) and (b). Figure 11 shows the superiority of model MS23 in predicting pile group settlement, with high scores of 417 (training phase) and 409 (testing phase). The GA-optimized SRVM model MS9 scored higher than the PSO-optimized SRVM model MS17, with 400 (training) and 376 (testing). Furthermore, the grand score has been calculated and presented in Fig. 12. Figure 12 shows that model MS23 has attained an overall score of 826, higher than that of the other models. Based on the overall score, the GA-optimized exponential + sigmoid kernel function-based DRVM model MS23 has been recognized as the best architectural model for predicting the settlement of pile group in clay.
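A minimal sketch of the scoring rule described above: for each performance indicator, the models are ranked by closeness to the indicator's ideal value (Table 4), the best of n models receives n points and the worst receives 1, and the points are summed per phase. Ranking by absolute distance to the ideal value and breaking ties by input order are assumptions; the model names, metrics, and values in the example are hypothetical.

```python
def score_analysis(results, ideal):
    """Rank-based score per model.

    results: {model: {metric: value}}; ideal: {metric: ideal value}.
    For each metric, models are ranked by |value - ideal|; the closest model
    gets n points and the farthest gets 1. Returns the total score per model.
    """
    models = list(results)
    n = len(models)
    totals = {m: 0 for m in models}
    for metric, target in ideal.items():
        ranked = sorted(models, key=lambda m: abs(results[m][metric] - target))
        for position, model in enumerate(ranked):
            totals[model] += n - position
    return totals


# Hypothetical values for two models and two metrics.
phase_scores = score_analysis(
    {"A": {"RMSE": 0.10, "R": 0.99}, "B": {"RMSE": 0.20, "R": 0.95}},
    {"RMSE": 0.0, "R": 1.0},
)
```

Running the function once on training metrics and once on testing metrics and adding the two totals gives the overall score, as in 417 + 409 = 826 for model MS23.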

4.2.2 Regression Error Characteristics (REC) Curve

The regression error characteristic (REC) curve is the regression counterpart of the 2-D receiver operating characteristic (ROC) curve. The x-axis shows the error tolerance, and the y-axis shows the percentage of predicted points that fall within that tolerance. The curve therefore estimates the cumulative distribution function of the difference between the experimental and predicted values. The error can be expressed as either the squared residual or the absolute deviation. The area over the curve (AOC) is a reliable measure of a regression model's effectiveness: a good regression model has an AOC as small as possible, and its curve rises close to the y-axis. Figure 13 (a and b) shows the REC plots, with the error expressed as absolute deviation, for the pile group settlement training and testing databases.
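A minimal NumPy sketch of an REC curve with absolute deviation as the error measure. The tolerances are taken at the observed errors, so the curve is a step function and its AOC (up to the largest error) can be computed exactly.

```python
import numpy as np


def rec_curve(actual, predicted):
    """REC curve with absolute deviation as the error measure.

    Returns tolerances, the fraction of points whose absolute error is within
    each tolerance, and the area over the curve (AOC) up to the largest error.
    """
    err = np.sort(np.abs(np.asarray(predicted, float) - np.asarray(actual, float)))
    tol = np.concatenate([[0.0], err])
    accuracy = np.searchsorted(err, tol, side="right") / err.size
    # The curve is a step function, so the area above it is exact.
    aoc = float(np.sum(np.diff(tol) * (1.0 - accuracy[:-1])))
    return tol, accuracy, aoc
```

For absolute deviation, this AOC equals the mean absolute error of the sample (for example, errors of 0.1, 0.2, 0.3, and 0.0 give an AOC of 0.15), which provides a convenient check on an implementation.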

Figure 13a, b shows that model MS23 has attained the smallest AOC in the training and testing phases. The AOC values of the better-performing models are given in Table 8: model MS23 has the smallest AOC (training = 6.67E-11, testing = 4.02E-06) of all the models. Hence, model MS23 has been recognized as the best architectural model for assessing pile group settlement.

4.2.3 Cross-Validation of Optimum Performance Model

In the present research, five models, MS3, MS9, MS17, MS23, and MS25, have been identified as the better-performing models in predicting pile group settlement in clay. For the cross-validation of these models, the computational cost analysis has been performed using k-fold = 10. The models were originally developed, trained, and tested using k-fold = 5 (Khatti and Grover 2023d). The computational costs of models MS3, MS9, MS17, MS23, and MS25 for both settings are compared in Table 9.

Table 9 demonstrates that model MS23 has attained the desired prediction at a significantly lower computational cost for both k = 5 and k = 10. Therefore, model MS23 can be regarded as the best architectural model for predicting pile group settlement.
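The k-fold procedure can be sketched with the standard library alone. This is a generic illustration, assuming shuffled, near-equal folds; the study's exact fold assignment is not described here. The computational cost of each model could be recorded by timing its training inside the loop (for example, with `time.perf_counter`).

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # near-equal fold sizes
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield sorted(train), sorted(test)
```

With the 80 data points mentioned in Section 4.4 and k = 10, each fold holds out 8 samples, and every sample is used for testing exactly once.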

4.2.4 Anderson–Darling Test

Hypothesis testing can be performed with parametric or nonparametric tests. In this study, the nonparametric Anderson–Darling (AD) test has been performed to investigate the deviation of the outcomes. The AD test determines whether a sample of data comes from a population with a specified distribution. The AD test has been performed on the better-performing models (for the validation phase only) using the Minitab statistical software.

Table 10 and Fig. 14a–f present the AD test results and probability plots. The p-value for the actual data is 0.005, which is less than the significance level of 0.05; therefore, the AD test rejects the null hypothesis of normality (H0). Also, the AD statistic of model MS23 is the closest to that of the actual data, confirming the superiority of the GA-optimized DRVM model MS23 over the other better-performing models.
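A sketch of the Anderson–Darling statistic for normality, with the mean and standard deviation estimated from the sample, using only the Python standard library. It also returns the commonly used small-sample adjusted statistic; whether Minitab reports the adjusted or unadjusted value is not stated here, and the p-value (which needs tabulated or approximate formulas) is not computed.

```python
from math import log
from statistics import NormalDist, mean, stdev


def anderson_darling_normal(data):
    """Anderson-Darling A^2 for normality, with mean and SD estimated from data.

    Returns (A2, A2_star), where A2_star applies the common small-sample
    adjustment A2 * (1 + 0.75/n + 2.25/n**2).
    """
    x = sorted(data)
    n = len(x)
    dist = NormalDist(mean(x), stdev(x))
    cdf = [dist.cdf(v) for v in x]
    s = sum((2 * i - 1) * (log(cdf[i - 1]) + log(1.0 - cdf[n - i]))
            for i in range(1, n + 1))
    a2 = -n - s / n
    return a2, a2 * (1.0 + 0.75 / n + 2.25 / n**2)
```

Larger values of A² indicate a larger departure from normality, so a strongly skewed sample yields a much higher statistic than a sample drawn from normal quantiles.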

4.2.5 Uncertainty Analysis (UA95)

Determining the reliability of a soft computing model is necessary to compute predictive targets accurately. In this research, uncertainty analysis (UA) has been performed to quantify the prediction error of the applied models in predicting pile group settlement. The UA has been performed for the training and testing phases. Comparing the predicted outputs with the actual data points is essential for assessing the reliability of the applied soft computing models, and UA is well suited to this purpose. The UA is performed by computing the absolute error, MOE, StDev, SE, ME (at the 95% confidence level), WBC, UB, and LB, which are presented in Table 11. A good model has a low WBC value (Bardhan et al. 2021).
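A sketch of the UA95 quantities listed above, under one common formulation that is assumed here (the exact equations are not given in this section): errors are taken as predicted minus actual, ME = 1.96 × SE, the bounds are MOE ± ME, and WBC = UB − LB.

```python
from math import sqrt
from statistics import mean, stdev


def uncertainty_analysis(actual, predicted, z=1.96):
    """UA95 summary of prediction errors (assumed formulation, see lead-in)."""
    errors = [p - a for a, p in zip(actual, predicted)]
    moe = mean(errors)                    # mean of error
    sd = stdev(errors)                    # standard deviation of error
    se = sd / sqrt(len(errors))           # standard error
    me = z * se                           # margin of error at ~95% confidence
    lb, ub = moe - me, moe + me           # lower and upper bounds
    return {
        "MAE": mean(abs(e) for e in errors),
        "MOE": moe, "StDev": sd, "SE": se, "ME": me,
        "LB": lb, "UB": ub, "WBC": ub - lb,  # width of the confidence bound
    }
```

Under this formulation, WBC is twice the margin of error, so a model with small, consistent errors yields a narrow confidence bound.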

Table 11 demonstrates that model MS23 has achieved the first rank in the training and testing phase in uncertainty analysis. Therefore, model MS23 has been recognized as the best architectural model for predicting the pile group settlement in clay.

4.2.6 Wilcoxon Test

When a normal data distribution cannot be assumed, the one-sample Wilcoxon signed-rank test is a nonparametric alternative to the one-sample t-test. It tests whether the median of a sample equals a specified reference value. In the present study, the one-sample Wilcoxon test has been performed for models MS3, MS9, MS17, MS23, and MS25, and the results have been compared with those obtained for the actual pile group settlement data. Table 12 presents this comparison.
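A sketch of the one-sample Wilcoxon signed-rank statistic in plain Python: differences from the reference value are ranked by absolute size, tied absolute differences receive average ranks, and zero differences are dropped (a common convention, assumed here). The confidence interval for the median, which Minitab also reports, is not computed.

```python
def wilcoxon_signed_rank(sample, reference):
    """One-sample Wilcoxon signed-rank sums (W+, W-) against a reference median.

    Zero differences are dropped and tied |differences| get average ranks.
    """
    diffs = [x - reference for x in sample if x != reference]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = average_rank
        start = end + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus
```

If every observation lies above the reference value, W+ reaches its maximum of n(n + 1)/2 and W− is zero.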

Table 12 reveals that the model MS23 has predicted the pile group settlement with the upper and lower levels close to the actual data in the training and testing phase. Therefore, model MS23 has been recognized as the best architectural model in predicting pile group settlement.

4.2.7 Overfitting of Soft Computing Models

The ratio of the testing RMSE to the training RMSE is used as the measure of overfitting of a soft computing model (Tenpe and Patel 2020; Khatti and Grover 2023c). Asteris et al. (2019) and Armaghani and Asteris (2021) stated that overfitting is a common problem in computational mechanics: a model may reproduce the data used for training and development well, yet predict unrealistic values for input combinations other than those used in training and testing. For this reason, experimental validation may be carried out using different cases from the literature. In this study, the overfitting of the best architectural models MS3, MS9, MS17, MS23, and MS25 has been computed and is presented graphically in Fig. 15.

Figure 15 shows that model MS23 has the highest overfitting ratio, i.e., 221.281, which is attributed to the problematic multicollinearity of all input variables (VIF > 10). Nevertheless, model MS23 has performed well and predicted pile group settlement with the least residuals in this research. Model MS3 has the lowest overfitting ratio, i.e., 0.404. All models have attained performance above 95%, indicating that they are highly capable of predicting pile group settlement in clay.

4.3 Discussion of Results

In this research, MLPNN, SVM, Ls-SVM, SVR, TSFL, RBFNN, FFNN, and RVM models have been developed, trained, tested, and analyzed to determine the optimum performance model for predicting pile group settlement. Both GA and PSO have been used to develop and optimize the single and dual kernel function-based RVM models. The database has been collected from a published article. The multicollinearity analysis of the database shows that the input variables have a problematic level of multicollinearity. Moreover, the ANOVA and Z tests reject the null hypothesis for the database used in this research. Sixteen performance metrics have been used to compare model performance. Based on this comparison, (i) the FFNN model attains higher performance than the RBFNN and MLPNN models, (ii) the Ls-SVM model performs better than the SVM and SVR models, and (iii) the Ls-SVM model attains higher performance than the FFNN and TSFL models in the presence of multicollinearity. The comparison of the GA- and PSO-optimized SRVM models reveals that the GA-optimized SRVM model has predicted pile group settlement with the least error. Similarly, the GA-optimized DRVM model attains better performance and accuracy than the PSO-optimized DRVM model. The comparison also reveals that the GA-optimized DRVM model MS23 has outperformed the other soft computing models adopted and is recognized as the best architectural model, having attained the highest score and rank in this research work. The analysis of results for model MS23 demonstrates that the combined kernel function-based RVM model, i.e., DRVM, is robust because both kernels strengthen the model's performance.

The database is complex and has problematic multicollinearity. Overfitting can arise (i) when the model is simple and the data are complex, (ii) when the model is complex and the data are simple, or (iii) when both are complex. In the present study, model MS23 is a complex model that combines the exponential and sigmoid kernel functions and is optimized by a genetic algorithm. Nevertheless, model MS23 has performed better than the other models. Furthermore, the performance of model MS23 has been compared with the test performance of the models previously published by Kumar and Samui (2020), as shown in Table 13.

Based on the comparison presented in Table 13, model MS23 has been recognized as the optimum performance model for predicting the pile group settlement in clay.

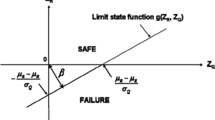

4.4 Reliability Analysis

Reliability analysis is used to study the properties of measurement scales and of the items that make up the scales. In addition to providing information on the correlations between the items of a scale, the reliability analysis technique yields several commonly used scale reliability measures. In this research, 80 data points have been used to perform the reliability analysis of pile group settlement predictions made using soft computing approaches. However, it is questionable whether this number of data points covers the full range of parameter values needed for reliability analysis. Cavaleri et al. (2017), Asteris et al. (2020), Lu et al. (2020), and Huang et al. (2020) reported that what matters is not a large number of data points but data points that cover a wide range of combinations of independent variable values, which helps the models solve the problem. A capable and reliable database is especially crucial in the case of laboratory databases. The reliability analysis has been performed for models MS3, MS9, MS17, MS23, and MS25, and the results are compared with the actual values in Fig. 16.

Figure 16 shows that models MS3, MS9, MS17, MS23, and MS25 can predict the settlement of the pile group. However, model MS23 is the optimum performance model for predicting pile group settlement. Model MS23 has attained exact reliability indices in both phases, i.e., 1.671 (in training) and 2.029 (in testing).

5 Summary and Conclusions

The present study has been carried out to introduce an optimum performance soft computing model for predicting the settlement of pile group in clay. For that purpose, MLPNN, SVM, Ls-SVM, SVR, TSFL, RBFNN, FFNN, and RVM models have been developed, trained, tested, and analyzed using data available in the literature. Twenty-two RVM models (6 GA-optimized SRVM, 6 PSO-optimized SRVM, 5 GA-optimized DRVM, and 5 PSO-optimized DRVM) have been developed and analyzed. Sixteen performance metrics have been implemented in this research to identify the optimum performance model. The following conclusions are mapped through this study.

- Capabilities of models: (i) FFNN is more competent than RBFNN and MLPNN; (ii) Ls-SVM is better than SVM and SVR; (iii) Ls-SVM is significantly better than FFNN and TSFL; (iv) the exponential kernel function is more accurate than the other kernel functions for the GA-optimized SRVM models; (v) the Laplacian kernel function is more potent than the other kernel functions for the PSO-optimized SRVM models; (vi) adding a second kernel function enhances the performance and accuracy of the SRVM model; (vii) the exponential + sigmoid combination is better than the other kernel combinations for the GA-optimized DRVM models; (viii) the Laplacian + Gaussian combination is better than the other kernel combinations for the PSO-optimized DRVM models; (ix) GA-optimized SRVM and DRVM models perform better than PSO-optimized SRVM and DRVM models.
- Impact of multicollinearity: (i) multicollinearity affects the performance of the deep learning models, i.e., MLPNN, SVM, Ls-SVM, SVR, TSFL, RBFNN, and FFNN, more than that of the hybrid learning models; (ii) the hybrid learning (RVM) models attain high overfitting in the testing phase; (iii) the hybrid learning models perform well in the presence of multicollinearity.
- Based on the statistical hypothesis tests, the ANOVA and Z tests support the research hypothesis of the present research.
- The results of the score analysis, REC plot, computational cost, cross-validation, uncertainty analysis, AD test, Wilcoxon test, and comparison with available models identify the GA-optimized DRVM model MS23 as the optimum performance model for reliability analysis of the settlement of pile group in clay.

To sum up, the GA-optimized DRVM model has been successfully employed in this research for reliability analysis of pile group settlement in clay. The study demonstrates the high capability of the GA-optimized DRVM model, which may also be used to solve other geotechnical problems. One limitation of the machine learning models employed in this work is that determining their optimal structure requires several different analyses. Therefore, optimizing the coefficients/weights of the employed models using metaheuristic optimization algorithms is suggested. Metaheuristic algorithms such as the squirrel search algorithm (SSA), improved squirrel search algorithm (ISSA), grey wolf optimizer (GWO), random walk grey wolf optimizer (RW_GWO), and sailfish optimizer (SAO) may be implemented for the models and compared with the present study. Also, a larger database of pile group settlement could be used to develop other hybrid RVM models. The present research will help geotechnical engineers and designers determine the settlement of pile groups without performing experimental procedures, saving time and human resources. To the authors' knowledge, this is the first time hybrid relevance vector machine models have been developed, trained, tested, and analyzed for reliability analysis of pile group settlement in clay.

6 Software Support

MATLAB R2020a: for employing soft computing models, analysis, evaluation, and prediction. OriginLab 2022b: for graphical presentations and analysis. Minitab Statistical Software: for statistical analysis.

Data Availability

All data, models, and code generated or used during the study appear in the submitted article. The database used in this research was collected from the literature.

Abbreviations

- 3D: Three-dimensional
- a20: A20-index
- ACP: Axial capacity for driving piles
- ANFIS: Adaptive neuro-fuzzy inference system
- ANN: Artificial neural network
- ANOVA: Analysis of variance
- BCP: Bearing capacity of pile
- BF: Bias factor
- BPNN: Back propagation neural network
- CAM: Cosine amplitude method
- Cc: Compression index
- CPT: Cone penetration test
- D: Footing depth
- df: Degrees of freedom
- DRVM: Dual kernel function-based RVM
- e: Void ratio
- ED: Embedment depth
- F: F statistic value
- F crit: F critical value
- FAP: Footing net applied pressure
- FE: Finite element
- FEM: Finite element method
- FFNN: Feed-forward neural network
- FN: Functional network
- FOS: Factor of safety
- γ: Soil density
- GA: Genetic algorithm
- GMDH: Group method of data handling
- GP: Genetic programming
- GPR: Gaussian process regression
- H0: Null hypothesis
- HR: Research hypothesis
- IOA: Index of agreement
- IOS: Index of scatter
- L: Footing length
- L/W: Length-to-width ratio of footings
- LB: Lower bound
- LL: Lower level
- LLP: Laterally loaded piles
- LMI: Legate and McCabe's index
- Lp/D: Total length of pile/pile diameter
- Ls/Lt: Length of pile in the soil layer/length of pile in the rock layer
- Ls-SVM: Least square support vector machine
- MAE: Mean absolute error
- MAPE: Mean absolute percentage error
- MBE: Mean bias error
- ME: Margin of error
- MOE: Mean of error
- MS: Model structure
- MS: Mean of squares
- NMBE: Normalized mean bias error
- NS: Nash–Sutcliffe efficiency
- φ: Angle of internal friction
- Pcyc: Half amplitude of the cyclic load
- PDR: Pile driving records
- PI: Performance index
- PLC: Pile load capacity
- PLT: In-situ pile load test
- PLTC: Pile load test using a calibration chamber
- Pm: Mean value of cyclic load
- PSO: Particle swarm optimization algorithm
- Pu: Ultimate bearing capacity of pile
- R: Coefficient of correlation
- R2: Coefficient of determination
- RBFNN: Radial basis function neural network
- RMSE: Root mean square error
- RNN: Recurrent neural network
- ROC: Receiver operating characteristics
- RSR: Root mean square error to observation's standard deviation ratio
- RVM: Relevance vector machine
- SA: Actual settlement
- SE: Standard error
- SPM: Supplementary materials
- SPT: Standard penetration test
- SRVM: Single kernel function-based RVM
- SS: Sum of squares
- StDev: Standard deviation
- SVM: Support vector machine
- SVR: Support vector regression
- TSFL: Takagi–Sugeno fuzzy method
- UB: Upper bound
- UBC: Ultimate bearing capacity
- UCS: Unconfined compressive strength
- UL: Upper level
- VAF: Variance accounted for
- VIF: Variance inflation factor
- W: Footing width
- WBC: Width of confidence bound
- WMAPE: Weighted mean absolute percentage error

References

Abu-Kiefa MA (1998) General regression neural networks for driven piles in cohesionless soils. J Geotech Geoenviron Eng ASCE 124(12):1177–1185. https://doi.org/10.1061/(ASCE)1090-0241(1998)124:12(1177)

Adarsh S, Dhanya R, Krishna G, Merlin R, Tina J (2012) Prediction of ultimate bearing capacity of cohesionless soils using soft computing techniques. Int Schol Res Notices

Afnan HA, Abbas HO, Abd SH, Mohamed SM (2023) Behavior of pile group constructed on clayey soil under El-Centro seismic. Diyala J Eng Sci 16(1):44–54. https://doi.org/10.24237/djes.2023.16105

Alhashmi AE, El Naggar MH, Oudah F (2023) Performance of axially loaded defective pile groups in sand: capacity and serviceability evaluation. Innov Infrastruct Solut. https://doi.org/10.1007/s41062-023-01086-w

Alkroosh I, Nikraz H (2011a) Correlation of pile axial capacity and CPT data using gene expression programming. Geotech Geol Eng 29:725–748. https://doi.org/10.1007/s10706-011-9413-1

Alkroosh I, Nikraz H (2011b) Simulating pile load-settlement behavior from CPT data using intelligent computing. Central Eur J Eng 1(3):295–305. https://doi.org/10.2478/s13531-011-0029-2

Andreas Z (1994) Simulation neuronaler Netze (Simulation of neural networks). Addison-Wesley

Apostolopoulou M, Asteris PG, Armaghani DJ, Douvika MG, Lourenço PB, Cavaleri L, Bakolas A, Moropoulou A (2020) Mapping and holistic design of natural hydraulic lime mortars. Cem Concr Res 136:106167. https://doi.org/10.1016/j.cemconres.2020.106167

Ardakani A, Kordnaeij A (2019) Soil compaction parameters prediction using GMDH-type neural network and genetic algorithm. Eur J Environ Civ Eng 23(4):449–462. https://doi.org/10.1080/19648189.2017.1304269

Armaghani DJ, Asteris PG (2021) A comparative study of ANN and ANFIS models for the prediction of cement-based mortar materials compressive strength. Neural Comput Appl 33(9):4501–4532. https://doi.org/10.1007/s00521-020-05244-4

Armaghani DJ, Asteris PG, Fatemi SA, Hasanipanah M, Tarinejad R, Rashid ASA, Huynh VV (2020) On the use of neuro-swarm system to forecast the pile settlement. Appl Sci 10(6):1904. https://doi.org/10.3390/app10061904

Asteris PG, Armaghani DJ, Hatzigeorgiou GD, Karayannis CG, Pilakoutas K (2019) Predicting the shear strength of reinforced concrete beams using Artificial Neural Networks. Comput Concr 24(5):469–488. https://doi.org/10.12989/cac.2019.24.5.469

Asteris PG, Apostolopoulou M, Armaghani DJ, Cavaleri L, Chountalas AT, Guney D, Hajihassani M, Hasanipanah M, Khandelwal M, Karamani C, Koopialipoor M (2020) On the metaheuristic models for the prediction of cement-metakaolin mortars compressive strength. Metaheuristic Comput Appl. https://doi.org/10.12989/mca.2020.1.1.063

Asteris PG, Koopialipoor M, Armaghani DJ, Kotsonis EA, Lourenço PB (2021a) Prediction of cement-based mortars compressive strength using machine learning techniques. Neural Comput Appl 33(19):13089–13121. https://doi.org/10.1007/s00521-021-06004-8

Asteris PG, Lourenço PB, Hajihassani M, Adami CEN, Lemonis ME, Skentou AD, Marques R, Nguyen H, Rodrigues H, Varum H (2021b) Soft computing-based models for the prediction of masonry compressive strength. Eng Struct 248:113276. https://doi.org/10.1016/j.engstruct.2021.113276

Balakumar V, Kalaiarasi V, Ilamparuthi K (2005) Experimental and analytical study on the behavior of circular piled raft on sand. In: Proceedings of the 16th international conference on soil mechanics and geotechnical engineering. Osaka, Japan. https://doi.org/10.3233/978-1-61499-656-9-1943

Balakumar V, Ilamparuthi K (2007) Performance monitoring of a piled raft foundation of twelve storied building and analytical validation. Indian Geotech J 37(2):94–115

Bao X, Cheng Z, Shen J, Zhang X, Chen X, Cui H (2023) Study on bearing capacity of reinforced composite pipe pile group in reclaimed stratum under vertical load. J Mar Sci Eng. https://doi.org/10.3390/jmse11030597

Baziar MH, Ghorbani A, Katzenbach R (2009) Small-scale model test and three-dimensional analysis of pile-raft foundation on medium-dense sand. Int J Civ Eng 7(3):170–175

Berardi R, Bovolenta R (2005) Pile settlement evaluation using field stiffness nonlinearity. Proc Inst Civ Eng Geotech Eng 158:35–44. https://doi.org/10.1680/geng.2005.158.1.35

Bhartiya P, Chakraborty T, Basu D (2021) Prediction of piled raft settlement using soil subgrade modulus in consolidating clays. Pract Period Struct Des Constr 26(4):04021037. https://doi.org/10.1061/(ASCE)SC.1943-5576.0000608

Bohn C, Lopes dos Santos A, Frank R (2017) Development of axial pile load transfer curves based on instrumented load tests. J Geotech Geoenviron Eng ASCE 143(1):04016081. https://doi.org/10.1061/(ASCE)GT.1943-5606.0001579

Bourgeois E, Buhan P, Hassen G (2012) Settlement analysis of piled-raft foundations by means of a multiphase model accounting for soil-pile interactions. Comput Geotech 46:26–38. https://doi.org/10.1016/j.compgeo.2012.05.015

Broomhead D, Lowe D (1988) Radial basis functions, multi-variable functional interpolation and adaptive networks. Royal Signals and Radar Establishment Malvern, United Kingdom

Candela JQ (2004) Learning with uncertainty: Gaussian processes and relevance vector machines. Technical University of Denmark, Copenhagen, pp 1–152

Cavaleri L, Chatzarakis GE, Di-Trapani F, Douvika MG, Roinos K, Vaxevanidis NM, Asteris PG (2017) Modeling of surface roughness in electro-discharge machining using artificial neural networks. Adv Mater Res 6(2):169. https://doi.org/10.12989/amr.2017.6.2.169

Chen S, Gu C, Lin C, Zhang K, Zhu Y (2021) Multi-kernel optimized relevance vector machine for probabilistic prediction of concrete dam displacement. Eng Comput 37(3):1943–1959. https://doi.org/10.1007/s00366-019-00924-9

Chen Y, Ma S, Ren Y, Chen R, Bian X (2021a) Experimental study on cyclic settlement of piles in silt soil and its application in high-speed railway design. Transp Geotech 27:100496. https://doi.org/10.1016/j.trgeo.2020.100496

Cortes C, Vapnik V (1995) Support vector networks. Mach Learn 20:273–297. https://doi.org/10.1007/BF00994018

Crispin JJ, Vardanega PJ, Mylonakis G (2019) Prediction of pile settlement using simplified models. Proceedings of the XVII ECSMGE, pp 1–9. https://doi.org/10.32075/17ECSMGE-2019-0388

Cui J, Li J, Zhao G (2019) Long-term time-dependent load-settlement characteristics of a driven pile in clay. Comput Geotech 112:41–50. https://doi.org/10.1016/j.compgeo.2019.04.007

Dai G, Salgado R, Gong W, Zhang Y (2012) Load tests on full-scale bored pile groups. Can Geotech J 49(11):1293–1308. https://doi.org/10.1139/t2012-087

Das BM (2008) Advanced soil mechanics. Taylor & Francis

Fattahi H, Hasanipanah M (2021) Prediction of blast-induced ground vibration in a mine using relevance vector regression optimized by metaheuristic algorithms. Nat Resour Res 30(2):1849–1863. https://doi.org/10.1007/s11053-020-09764-7

Ghorbani B, Arulrajah A, Narsilio G, Horpibulsuk S, Bo MW (2020) Development of genetic-based models for predicting the resilient modulus of cohesive pavement subgrade soils. Soils Found 60(2):398–412. https://doi.org/10.1016/j.sandf.2020.02.010

Gomes Filho FVC, Moura AS (2021) Proposal for considering the group effect in the prediction of settlements in pile groups through load transfer methods. Soils Rocks. https://doi.org/10.28927/SR.2021.061320

Hair JF, Ortinau DJ, Harrison DE (2013) Essentials of marketing research. Oxford University Press, New York

Hakro MR, Aneel K, Zaheer A, Mujahid A, Fahid A, Roman F, Sergey K, Alexander K, Linar S (2022) Numerical analysis of piled-raft foundations on multi-layer soil considering settlement and swelling. Buildings 12(3):356. https://doi.org/10.3390/buildings12030356

Hasanzadehshooiili H, Lakirouhani A, Medzvieckas J (2012) Superiority of artificial neural networks over statistical methods in prediction of the optimal length of rock bolts. J Civ Eng Manag 18(5):655–661. https://doi.org/10.3846/13923730.2012.724029

Hong TP, Lee CY (1996) Induction of fuzzy rules and membership functions from training examples. Fuzzy Sets Syst 84(1):33–47. https://doi.org/10.1016/0165-0114(95)00305-3

Hongwei G (2011) A simple algorithm for fitting a Gaussian function. IEEE Signal Process Mag 28(9):134–137. https://doi.org/10.1109/MSP.2011.941846

Huang J, Asteris PG, Manafi-Khajeh-Pasha S, Mohammed AS, Hasanipanah M (2020) A new auto-tuning model for predicting the rock fragmentation: a cat swarm optimization algorithm. Eng Comput 38:1–12. https://doi.org/10.1007/s00366-020-01207-4

Ibrahim NM, Rahim NL, Amat RC, Salehuddin S, Ariffin NA (2012) Determination of plasticity index and compression index of soil at Perlis. APCBEE Proc 4:94–98. https://doi.org/10.1016/j.apcbee.2012.11.016

Katzenbach R, Arslan U, Moormann C (2000) Numerical simulations of combined piled raft foundations for the new high-rise building MAX in Frankfurt am Main. In: Proceedings of the 2nd international conference on soil structure interaction in urban civil engineering, ETH, Zurich, Switzerland

Khatti J, Grover KS (2021) Computation of permeability of soil using artificial intelligence approaches. Int J Eng Adv Technol 11(1):257–266. https://doi.org/10.35940/ijeat.A3220.1011121

Khatti J, Grover KS (2023a) Prediction of compaction parameters for fine-grained soil: critical comparison of the deep learning and standalone models. J Rock Mech Geotech Eng (in press). https://doi.org/10.1016/j.jrmge.2022.12.034

Khatti J, Grover KS (2023b) CBR prediction of pavement materials in unsoaked condition using LSSVM, LSTM-RNN, and ANN approaches. Int J Pavement Res Technol. https://doi.org/10.1007/s42947-022-00268-6

Khatti J, Grover KS (2023c) Assessment of fine-grained soil compaction parameters using advanced soft computing techniques. Arab J Geosci 16(3):208. https://doi.org/10.1007/s12517-023-11268-6

Khatti J, Grover KS (2023d) Prediction of UCS of fine-grained soil based on machine learning part 2: comparison between hybrid relevance vector machine and Gaussian process regression. Multiscale Multidiscip Model Exp Des. https://doi.org/10.1007/s41939-023-00191-8

Khatti J, Grover KS (2023e) Prediction of UCS of fine-grained soil based on machine learning part 1: multivariable regression analysis, Gaussian process regression, and gene expression programming. Multiscale Multidiscip Model Exp Des. https://doi.org/10.1007/s41939-022-00137-6

Khatti J, Grover KS (2023f) Prediction of compaction parameters of compacted soil using LSSVM, LSTM, LSBoostRF, and ANN. Innov Infrastruct Solut 8(2):76. https://doi.org/10.1007/s41062-023-01048-2

Khatti J, Grover KS (2023g) Prediction of soaked CBR of fine-grained soils using soft computing techniques. Multiscale Multidiscip Model Exp Des 6(1):97–121. https://doi.org/10.1007/s41939-022-00131-y

Kordjazi A, Nejad FP, Jaksa MB (2014) Prediction of ultimate axial load-carrying capacity of piles using a support vector machine based on CPT data. Comput Geotech 55:91–102. https://doi.org/10.1016/j.compgeo.2013.08.001

Kumar A, Choudhury D, Katzenbach R (2016) Effect of earthquake on combined pile-raft foundation. Int J Geomech. https://doi.org/10.1061/(ASCE)GM.1943-5622.0000637

Kumar M, Samui P (2020) Reliability analysis of settlement of pile group in clay using LSSVM, GMDH, GPR. Geotech Geol Eng 38:6717–6730. https://doi.org/10.1007/s10706-020-01464-6

Lai JX, Liu HQ, Qiu JL, Chen J (2016) Settlement analysis of saturated tailings dam treated by CFG pile composite foundation. Adv Mater Sci Eng. https://doi.org/10.1155/2016/7383762

Lee IM, Lee JH (1996) Prediction of pile bearing capacity using artificial neural networks. Comput Geotech 18(3):189–200. https://doi.org/10.1016/0266-352X(95)00027-8

Lee K, Shin S, Lee H, Kim D (2020) Analysis of pile behaviors with friction resistance of skin of steel pipe pile in ground where settlement is predicted. J Korean Geotech Soc 36(11):107–117

Li L, Deng Y (2023) Analysis of settlement of group pile foundation in linear viscoelastic soil. Adv Civ Eng. https://doi.org/10.1155/2023/3207304

Li L, Gong W (2019) Prediction of nonlinear vertical settlement of a pile group consisting of new and existing displacement piles in clay strata. Soils Found 59(5):1336–1348. https://doi.org/10.1016/j.sandf.2019.06.001

Li TZ, Pan Q, Dias D (2021) Active learning relevant vector machine for reliability analysis. Appl Math Model 89:381–399. https://doi.org/10.1016/j.apm.2020.07.034

Lu S, Koopialipoor M, Asteris PG, Bahri M, Armaghani DJ (2020) A novel feature selection approach based on tree models for evaluating the punching shear capacity of steel fiber-reinforced concrete flat slabs. Materials 13(17):3902. https://doi.org/10.3390/ma13173902

Mandolini A, Russo G, Viggiani C (2005) Pile foundations: experimental investigations, analysis and design. In: Proceedings of the 16th international conference on soil mechanics and geotechnical engineering, Osaka, vol 1, pp 177–213

Masani AK, Vanza MG (2018) Effect of vertical load on the lateral behavior of group of piles. Int J Adv Res Innovat Ideas Educ 4(2)