Abstract

The NSGA-II algorithm uses a single population single crossover operator, which limits the search performance of the algorithm to a certain extent. This paper presents an improved version of the NSGA-II algorithm, named adaptive multi-population NSGA-II (AMP-NSGA-II) that divides the original population into multiple populations and assigns a different crossover operator to each subspecies. It introduces an excellent set of solutions (EXS), which can make the individuals in the EXS set close to the Pareto front and improve the convergence performance of the algorithm. And based on the analysis of the EXS set, the size of each subpopulation can be dynamically adjusted, which can improve the adaptability for different problems. Finally, the computation results on benchmark multi-objective problems show that the proposed AMP-NSGA-II algorithm is effective and is competitive to some state-of-the-art multi-objective evolutionary algorithms in the literatureis.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In practical engineering applications, multi-objective optimization is a very important research topic [1]. We often need to use the best possible decision-making problem for multiple targets in a given feasible domain, but the improvement of these targets may come into conflict with each other, a set of objectives must be taken to neutral balance. Multi-objective genetic algorithms have good solvability for such problems [17, 18]. Non-dominated Sorting Genetic Algorithm 2 (NSGA-II) is one of the most representative genetic algorithms. NSGA-II was developed by Deb in 2000, which is NSGA improved non-inferior classification genetic algorithm [14], adopts a better accounting strategy and thus reduces the overall running time of the algorithm.

In recent years, many scholars have proposed improved algorithms for the NSGA-II in order to improve the diversity of the population and the convergence of the algorithm. Some researches improved the individual sequencing of NSGA-II by ranking individuals in the population as \(1 \sim m_{r}\), and setting the sequence of repeated individuals in the population to \(m_{r} + 1\) to ensure the uniqueness of individuals in the sequence \(1 \sim m_{r}\), improving the diversity of the population and avoiding the population into local optimum [7]. Some researches improved the crossover operation method of NSGA-II genetic algorithm, such as selecting crossover individuals by using the principle of adjacent maximum, that is, selecting the individuals with the largest distance among adjacent individuals for crossover operation, taking advantage of the hybridization to improve the population Distribution at the forefront of Pareto [16]. In some research, the cross-operation of NSGA-II is replaced by an orthogonal array and Taguchi method to optimize the algorithm performance and improve the convergence of the algorithm [11]. The theory of intermittent equilibrium points out that evolution and generation of new species cannot occur in the core area where a major group of species is located. In the core areas where the population is clustered, there is less pressure for biological survival, simple living environment and poor diversity. Therefore, it is extremely difficult to produce a system that can withstand harsh environments and has a strong adaptability of offspring individuals. Based on this, multi-population genetic algorithms have been proposed and are widely developed [10, 13]. Each subpopulation performs independent genetic operations, effectively improving the diversity of the population.

In order to further improve the population diversity and search performance of NSGA-II, this paper uses the idea of intermittent equilibrium to establish the genetic operation mode of multi-population and multi-crossover operator, to avoid the population from getting into local optimum. According to the contribution of sub-population to EXS solution set, \(Logistic\) model is used to adaptively adjust population. Combined with local search method, the algorithm has strong local optimization ability and improves the individual distribution on the Pareto front, and then proposes an adaptive multi-population non-dominated Sorting Genetic Algorithm II (AMP-NSGA-II). This paper describes the operation process of AMP-NSGA-II by introducing the establishment of multi-population.

2 Adaptive multi-population NSGA-II

2.1 Introducing multi-population and crossover operators

Multi-objective optimization usually does not have a solution that can achieve all the objectives simultaneously. Rather, it needs to obtain a Pareto optimal solution set whose optimal solution is theoretically optimal (also referred to as Pareto front-end of the target space) and should have the best possible approximation and uniformity. NSGA-II is an algorithm for solving this multi-objective optimization problem. It conducts the non-dominated sorting of the initial population, then selects, crosses and mutates to obtain a new population, merges the subpopulation with the parent population, and conducts a non-dominated sorting to obtain a non-dominated solution front. In previous NSGA-II, only one population is maintained during the evolution of the population. The disadvantage of this approach is that population diversity may be poor, because for some multi-objective optimization problems with many local optima, populations tend to converge to local optimal locations. This paper decomposes a single population of NSGA-II into multiple subpopulations, each of which is assigned a unique crossover operator. The blend crossover (denoted as \(\text {BLX} - \alpha \)) [5], the simulated binary crossover (denoted as \({\text {SBX}}\)) [3], the simplex crossover (denoted as \({\text {SPX}}\)) [15], and parent centric crossover (denoted as \({\text {PCX}}\)) [2] operator are proved to be suitable for solving different problems of different ability. Therefore, it is reasonable to adjust the scale of each subpopulation adaptively by assigning different crossover operators to each subpopulation and designing an appropriate management strategy.

In this paper, the population is divided into four parts and assigned with above four different crossover operators, respectively. Different crossover operators have different global search capabilities, different search methods, and different crossover populations. Parents of population I adopt blend crossover (BLX−α) [5] operation. The parent individuals are \({p^{1}} = ({x_{1}^{1}},{x_{2}^{1}}, \cdot \cdot \cdot {x_{n}^{1}})\) and \({p^{2}} = ({x_{1}^{2}},{x_{2}^{2}}, \cdot \cdot \cdot {x_{n}^{2}})\) respectively, and the crossover formula is:

where \({p^{2}} = ({x_{1}^{2}},{x_{2}^{2}}, \cdot \cdot \cdot {x_{n}^{2}})\) and \(\beta = (1 + 2\alpha )U(0,1) - \alpha \). The individuals generated by the crossover operator have better diversity, improve the individual distribution of the population and have good ability to solve the segmentation function. The crossover operator has an advantage of generating diversity offspring, that allows GA to converge, diversity or adapt to changing fitness landscapes without incurring extra parameters or mechanisms. However, there are limitations to the multi-objective problem with strong correlation between variables, such as epistasis problem. Parents of population II adopt binary crossover (SBX) [3] operation, the parents individuals were \({p^{1}} = ({x_{1}^{1}},{x_{2}^{1}}, \cdot \cdot \cdot {x_{n}^{1}})\) and \({p^{2}} = ({x_{1}^{2}},{x_{2}^{2}}, \cdot \cdot \cdot {x_{n}^{2}})\), the formula for the crossover:

where \(\beta (u) = \left \{ {\begin {array}{*{20}{c}} {{{(2u)}^{\frac {1} {{{\eta _{c}} + 1}}}},{\text {u}} \leq 0.5} \\ {{{(2(1 - u))}^{\frac {1} {{{\eta _{c}} + 1}}}},{\text {others}}} \end {array}} \right .\), and u is a uniformly distributed random number in interval [0,1]. The crossover operator can cross two parents to obtain any child individuals and has good solving ability for multi-objective optimization problems with globally optimal upper and lower bounds with narrow global domains. Parents of population III adopt simplex crossover (SPX) [15] operations, and their parents were \({p^{1}} = ({x_{1}^{1}},{x_{2}^{1}}, \cdot \cdot \cdot {x_{n}^{1}})\), \({p^{2}} = ({x_{1}^{2}},{x_{2}^{2}}, \cdot \cdot \cdot {x_{n}^{2}})\) and \({p^{3}} = ({x_{1}^{3}},{x_{2}^{3}}, \cdot \cdot \cdot {x_{n}^{3}})\) respectively. The crossover formula was:

where \(\bar x\) denote the center of the selected individual. The crossover operator can balance the development of the population and explore the performance, so that the formation of the offspring of individuals from the coordinate system independent. Can perform well in multimodal functions and low-dimensional functions with three parents or high-dimensional functions with four parents. However, it is disadvantageous to solve the test function composed of strong correlation. Parents of population IV adopt the parent centric crossover (PCX) [2] operation and the parents are \({p^{1}} = ({x_{1}^{1}},{x_{2}^{1}}, \cdot \cdot \cdot {x_{n}^{1}})\), \({p^{2}} = ({x_{1}^{2}},{x_{2}^{2}}, \cdot \cdot \cdot {x_{n}^{2}})\) and \({p^{3}} = ({x_{1}^{3}},{x_{2}^{3}}, \cdot \cdot \cdot {x_{n}^{3}})\). The crossover formula is:

where \({e^{i}} = {d^{i}}/\left | {{{d}^{i}}} \right |\); \({d^{p}} = {p^{p}} - g\), g is the average vector of \({n_{u}}\) parent individuals; D is the mean of the vertical distance to the vector \({d^{p}}\) for the remaining \({n_{u}} - 1\) individuals. The crossover operator uses an adaptive method to cross-operate and The sub-individuals generated with the crossover operator are not close to the center of the selected parent but close to the parent.

The main process of genetic algorithm shown in Fig. 1, including multi-population genetic manipulation, updating EXS solution set and adjusting the size of the population adaptively and other parts.

In Fig. 1, \({P_{i.t}}\) is the t-generation parent population of i; \({Q_{i.t}}\) is the t-generation sub-population of i. The four sub-populations assigned four different crossover operators. In multi-populations genetic manipulation phase, each subpopulation uses NSGA-II algorithm to perform non-inferior sorting and crowd-distance sorting operations respectively. \({F_{j}}\) is the set of individuals with rank j in population \({R_{i.t}} = {P_{i.t}} \cup {Q_{i.t}}\); and \(P{'_{i.t + 1}}\) is the \(t + 1\)-generation parent population dynamically adjusted by population i. From a global perspective, the application of cross-operation can find some good individuals. In the EXS solution collection update phase, the EXS is updated according to the fitness of individuals, and the dominant individuals in the subpopulations are collected in the EXS solution set. Through the proposed local search method, the crossover operator of the dominant population is used to further increase the search ability of the algorithm and improve the Pareto frontier EXS solution set distribution. In order to get the population closer to the Pareto frontier, individual selection of crossover operations are randomly selected from the population \({P_{i,t}}(i = 1,2,3,4)\), \({Q_{i,t}}(i = 1,2,3,4)\) and the EXS set, respectively, to ensure that new individuals crossing the generation continually approximate the optimal solution set. In adjusting population size phase, the contribution of different populations to the EXS is counted, and the population with a large contribution is considered to be more suitable for solving the target problem. Therefore, the number of the individuals is appropriately increased, otherwise it is decreased. The crossover operator is:

Where k is serial number of four populations. Population \({P_{i.t}}(i = 1,2,3,4)\) were crossed and mutated to obtain population \({Q_{i.t}}\), and population \({R_{i.t}} = {P_{i.t}} \cup {Q_{i.t}}\) was ranked non-inferior and crowded by distance \({P_{1.t + 1}}\), \({P_{2.t + 1}}\), \({P_{3.t + 1}}\), \({P_{4.t + 1}}\). In order to improve the local search ability of the population, this algorithm preserves the mutation operator of the original NSGA-II to adjust some gene values in individual chromosome strings. From a local point of view, the individual is more approximate to the optimal solution set and the genetic algorithm local search Sufficient ability to maintain the diversity of the population, to prevent premature phenomenon. When the algorithm introduces the above crossover operator, the various subpopulation can make up each other and coordinate with each other so that the algorithm can find effective crossover operator for different multi-objective optimization problems, cross better individuals and enhance the adaptation of the algorithm Sexual and population diversity.

The NP individuals are divided into 4 populations \({P_{1.1}}\), \({P_{2.1}}\), \({P_{3.1}}\) and \({P_{4.1}}\), and the initial size of each population is \(^{NP}/_{4}\), respectively. The population was initialized using the method in [8]. From the initial four sub-populations \({P_{i.1}}(i = 1,2,3,4)\), four progeny populations \({Q_{i.1}}\) are generated through the selection, crossover and mutation operation of the binary tournament respectively. By sorting the \({R_{i.1}} = {P_{i.1}} \cup {Q_{i.1}}\) populations non-inferiorly, \(Rank1\) individuals \({b_{i}}\) in \({R_{i.1}}\) populations are added to the EXS solution sets so that the initial EXS solution has a much denser distribution on the Pareto frontier. EXS solution set initialization process is shown in Fig. 2. If \(\left | {EXS} \right | = \sum \limits _{i = 1}^{4} {{b_{i}}} > {N_{EXS}}\), the non-inferior ordering and crowding distance ranking of the EXS solution sets are performed and remove the bad solution from the EXS solution so that \(\left | {EXS} \right | = {N_{EXS}}\). Or if \(\left | {EXS} \right | = \sum \limits _{i = 1}^{4} {{b_{i}}} < {N_{EXS}}\), it will not be processed. When the EXS solution set is updated, the EXS solution sets the number of individual to \({N_{EXS}}\). The process of updating is to replace the inferior solution in

the population of the dominant individual in the population, so that the EXS collection is constantly approaching Pareto optimal solution set. The update steps for the EXS collection are as follows:

-

step 1

If the solution in the population \(i(i = 1,2,3,4)\) is better than the solution in the EXS solution set, the solution in the EXS solution set is removed and the dominant individuals corresponding to the population i are added to the solution set.

-

step 3

If the solution in the population is worse than the solution in the EXS solution, do not deal with it;

-

step 3

If the number of individuals in the EXS solution set exceeds the set value \({N_{EXS}}\), the bad solution is removed according to the non-inferior sorting and crowding distance sorting method of NSGA-II.

2.2 Local search algorithm

To improve the individual distribution of EXS solution sets, the algorithm process is divided into two stages. The first phase is EXS-pop-Update, which updates the EXS solution via populations \({Q_{1.t + 1}}\), \({Q_{2.t + 1}}\), \({Q_{3.t + 1}}\) and \({Q_{4.t + 1}}\). This method can search and exploit the population \({P_{1.t + 1}}\), \({P_{2.t + 1}}\), \({P_{3.t + 1}}\) and \({P_{4.t + 1}}\) well and find out more excellent individuals to improve the distribution of EXS solution sets on the Pareto frontier. The second phase is EXS-self-Update, which is a set of self-updating for the EXS solution. Through the EXS solution of the inter-personal cross-operation, local search the EXS solution, mining EXS solution sets the potential of the optimal solution individual. Based on the analysis of the update process of EXS solution by \({P_{i.t + 1}}(i = 1,2,3,4)\), the contribution of each subpopulation to EXS solution is calculated and the crossover operator in this phase is determined. The specific process shown in Table 1, where j represents the serial number of the four populations, \(N_{EXS}\) represents the number of individuals in the EXS. The individuals with the greatest contribution and the individuals in EXS are randomly selected to cross-mutate and generate sub-individuals, and the disadvantaged individuals in the EXS are replaced by the dominant individuals.

2.3 Adaptive adjustment of population size

The distribution of crossover operators in each subpopulation leads to different distribution of individuals in each population when solving different multi-objective problems. Sub-population \({P_{i.t + 1}}(i = 1,2,3,4)\) differ in their contribution to the EXS solution set during the updating of the EXS solution set. Under the condition of keeping the total number of individuals NP unchanged, by adjusting the size of each population, the density of individuals in front of Pareto is increased, the number of population with fewer density in Pareto frontier is reduced, and each subpopulation can be realized with different adaptive optimization problems, the optimal solution set can be found as soon as possible for different multi-objective optimization problems, which effectively improves the adaptability of the population. Select two populations with large contribution to increase the individual number of the population. With the gradual increase of population size, the individual number of each population can not exceed the maximum population \(p{s_{\max } }\) because of limited resources and the number of increase individuals variable \(n_{inc}^{i}\) should be gradually reduced. Select two small contribution populations to reduce the number of selected individuals, with the gradual reduction of population size, it should ensure that the number of individuals in the sub-population not less than the population minimum \(p{s_{\min } }\). Using the discrete time \(Logistic\) model to adjust the number of individual population, the number of increase individuals and reduce individuals are calculated by (6) and (7) respectively:

Where \(p{s_{g}^{i}}\) denotes the size of the g-th population of population i, \(\left (1 - \frac {{p{s_{g}^{i}}}}{{ps_{\max }^{}}}\right )\) and \(\left (1 - \frac {{p{s_{g}^{i}}}}{{ps_{\min }^{}}}\right )\) are the density dependences, \(ps_{\max } \) and \(ps_{\min } \) are the maximum and minimum number of individuals we have set. The \(Logistic\) models show density dependence, meaning the per capita population growth rates decline as the population density increases. In addition, the negative density dependence \(\left (1 - \frac {p{s_{g}^{i}}}{p{s_{\min } }}\right )\) gradually decreases the decreasing individuals to \(ps_{\min } \). The two selected subpopulations with large (or small) contributions have some differences in updating the number of EXS solutions. Therefore, this paper proposes the following adjustment strategy:

The number of individuals updating the four population-based EXS solution sets is \({c_{1}}^{i}\), \({c_{2}}^{j}\), \({c_{3}}^{k}\), and \({c_{4}}^{l}\), where \(i, j, k, l\) indicates the population label, here \({c_{1}}^{i}\) means the contribution of population i to update the EXS solution set and the subscript 1 means it is the largest among the four populations. The size of sub-population \(i, j, k, l\) are denote by (8)-(11) respectively.

To ensure that the number of individuals in each subpopulation is not less than \(p{s_{\min } }\) and not large than \(p{s_{\max } }\) , constraint the number of individuals \(Su{b_{d}}\) with (12). Properly reduce/increase the individuals of the largest/smallest subpopulations, leaving the total number of individuals unchanged.

After determining the size of each subpopulation \(Su{b_{d}}\left ({d = 1,2,3,4} \right )\), non-destructive sorting of each population, and then proceed as follows:

-

Case 1

If the number of population d\(Su{b_{d}}\) is smaller than the number of original population, delete it from the worst one in the population until the number of population reaches \(Su{b_{d}}\).

-

Case 2

If the number of population d\(Su{b_{d}}\) is greater than the number of original population, do:

-

step 1

Pick an individual randomly from the population d;

-

step 2

Pick an individual (or two individuals randomly from the EXS solution set if the population d is assigned an SPX or PCX crossover operator);

-

step 3

For the two individuals (or three) obtained, new solutions are added to the population d by cross-operation of crossover operators assigned to the population;

-

step 4

If the number of population d reaches \(Su{b_{d}}\), stop. Otherwise, skip to step1.

-

step 1

3 Experimentation

This section is devoted to presenting the experiments performed in this work. The performance of the algorithm is analyzed using 12 standard test problems, including the dual-objective test problems ZDT [19]: ZDT1, ZDT2, ZDT3, ZDT4, ZDT6 and three-target test problems DTLZ [4]: DTLZ1, DTLZ2, DTLZ3, DTLZ4, DTLZ5, DTLZ6, DTLZ7. GD (General Distance) to measure whether the updated EXS solution set converges to the true Pareto optimal solution set. GD represents the distance between the obtained EXS solution set and the true Pareto optimal solution set, the smaller its value is, The optimal solution set converges to the true Pareto optimal solution set. It can be calculated by (13):

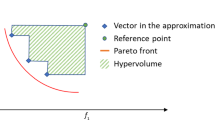

where \(\left | {EXS} \right |\) is the number of individuals in the EXS population; \({d_{i}}\) is the Euclidean distance between the nearest individuals in individuals \(i \in EXS\) and \({P^{*}}\) in the target space. \(I_{h}\) (Hypervolume) index evaluation method is a solution set comprehensive quality evaluation method, which can simultaneously evaluate the convergence, uniformity and universality of the solution set. The larger the value of \(I_{h}\), the comprehensive performance of the optimal solution set obtained The better. In this paper, the objective function is normalized in the range of [0,1]. The standardization formula is:

where \({f_{j}}(x)\) is the j-th objective function value of solution x; \({f_{j}}^{\min } \) (\({f_{j}}^{\max } \) ) is the minimum value (maximum value) of the 6th target value in the solution set obtained by genetic algorithm, and the reference point is set as (1.0,1.0).

According to the flow of the algorithm, it can be seen that the updating process of the EXS set is to replace the more dominant individuals in the four populations into the EXS set. Therefore, the result of EXS(t + 1) (t + 1 means the \(t + 1\) generation of evolution) is definitely better than EXS(t), that is, there is no individual in EXS(t) can dominate any individual in EXS(t + 1), so the algorithm is definitely convergent. Figure 3 shows EXS individuals initial states and its evolution process at generations 50, 200, 500 when the algorithm solves the ZDT1 problem.

Table 2 shows the comparison between the convergence index (GD) and the comprehensive performance index (Ih) of AMP-NSGA-II algorithm and NSGA-II algorithm on 12 test problems and verify whether there is a significant difference between the two algorithms in GD and \(I_{h}\) through independent-samples t-test by SPSS (Statistical Product and Service Solutions) software. The resulting values are the median (xm) and the interquartile range (IRQ) of the results obtained by running 100 times. Better values are in bold style. As is shown, the AMP-NSGA-II algorithm obtained 12 superior GD values out of 12 test problems and obtained 11 superior \(I_{h}\) values out of 12 test problems, except for the ZDT6. This shows that the algorithm has a better adaptability than NSGA-II. Independent-samples t-test showed significant differences (SD) between the two genetic algorithms. Therefore, the proposed AMP-NSGA-II genetic algorithm is superior to the NSGA-II genetic algorithm in terms of convergence and overall performance. A graphical overview of 2 bi-objective problems is given in Fig. 4. As is shown, on the ZDT1 and ZDT3 test problem, a large number of individuals in NSGA-II fall into \({f_{1}} = 0\) and the individuals are unevenly distributed, indicating that the NSGA-II algorithm is easy to fall into local optimum when solving the test problem and individuals in AMP-NSGA-II were uniformly and accurately distributed on the Pareto front. On the ZDT2 problem, AMP-NSGA-II distributed more evenly than NSGA-II, with less deviation from the Pareto front. On the ZDT4 problem, NSGA-II individuals are concentrated in the range of \([0,0.7]\). As \({f_{1}}\) increases, individuals gradually diverge from the Pareto front and is divergent. The Pareto front obtained by NSGA-II and AMP-NSGA-II of the three-objective problem DTLZ4 is shown in Fig. 5. As is shown, in the results of NSGA-II, the distribution of individuals is disorganized, with obvious overlap between individuals. In contrast, the distribution of individuals in AMP-NSGA-II is closer to the Pareto optimal solution and the individual diversity is better. Therefore AMP-NSGA-II distributes more evenly than NSGA-II individuals and has better convergence performance, which proves that the improved method in this paper is effective.

In order to test the competitiveness of proposed AMP-NSGA-II, it was compared with some state-of-the-art multi-objective evolutionary algorithms (MOEAs) such as AbYSS [8], GWASFGA [12], SMPSO [9] and DMOPSO [6]. Source codes of these algorithms are available through the website: https://jmetal.github.io/jMetal/ and their parameters are set as suggested by the original. Tables 3 and 4 show the performance comparison over GD and \(I_{h}\) performance on 12 test problem. As is shown in Table 3, in terms of GD metric in 12 test problems, AMP-NSGA-II obtained 4 best result, including 1 best result of the bi-objective problem and 3 best results of the three-objective problems. It is worth mentioning that DMOPSO obtained 7 best result in GD metric, but in terms of \(I_{h}\) metric, as is shown in Table 4, DMOPSO obtained none best result, and AMP-NSGA-II algorithm obtained 5 best result.

GWASFGA obtained 5 best \(I_{h}\) value, but the convergence of the algorithm is limited and didn’t get a the superior GD value. SMPSO and AbYSS obtained 1 best \(I_{h}\) result and 1 best GD result, respectively, that show a little inferior performance than AMP-NSGA-II, GWASFGA and DMOPSO. Pareto front for each algorithm with the best GD metric for problem DTLZ7 is plotted in Fig. 6. As is shown, though the GD result obtained by AbYSS is the best one, the distribution of individuals are trapped in the first block of the true Pareto front, which is not in line with our expectation. The other five algorithms have similar performance in Fig. 6, but the individuals obtained by DMOPSO have a high overlap in the fourth part of the pareto front and GWASFGA (80 individuals) is more biased toward the boundary of the pareto front. The individuals obtained by AMP-NSGA-II distributes visually more evenly than NSGA-II and SMPSO. In pairwise comparison from Tables 3 and 4 , our AMP-NSGA-II obtained 10 better GD results and 8 not less \(I_{h}\) results than the AbYSS, 11 better GD results and 7 not less \(I_{h}\) results than GWASFGA, 8 better GD results and 11 not less \(I_{h}\) results than SMPSO, 5 better GD results, 11 not less \(I_{h}\) results than DMOPSO. Through the comparison with other four MOEAs, AMP-NSGA-II can be found to have superior results for most of the test problems.

4 Conclusion

In this paper, a multi-objective optimization algorithm for multi-population and multi-crossover operator mode is constructed. Single population in NSGA-II is divided into four subpopulations to improve the diversity of the population. According to the different contribution of sub-population to EXS solution set, \(Logistic\) model is used to realize adaptive adjustment of sub-population. The update of EXS solution set is divided into pop-Update and self-Update two stages, thus the local search program of the algorithm is given. The paper gives a scheme to adjust the size of various groups, so that the population quantity is related to the contribution of EXS solution set. The proposed algorithm is tested by 12 standard test functions, the experimental results show that:

-

1)

The comparison with the original NSGA-II shows that the improvement of the proposed strategy is positive and the t-test shows that the performance of the algorithm is significantly different and has been qualitatively improved.

-

2)

The addition of multiple populations increases the diversity of the population and makes the distribution of individuals more even and overcome local optimality effectively.

-

3)

The adaptive scheme combined with local search algorithm allocate more evolution chances to certain subpopulations with more appropriate crossover operators, making the algorithm guarantee a robust performance for different kinds of multi-objective problems.

-

4)

Under the combination of multiple partial changes, the performance of the proposed algorithm is comparative or superior to some state-of-the-art MOEAs for the ZDT and DTLZ series of problems.

References

Cai Y, Wang J (2015) Differential evolution with hybrid linkage crossover. Inf Sci 320:244–287

Deb K, Anand A, Joshi D (2002a) A computationally efficient evolutionary algorithm for real-parameter optimization. Evol Comput 10(4):371–395

Deb K, Pratap A, Agarwal S, Meyarivan T (2002b) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Deb K, Thiele L, Laumanns M, Zitzler E (2005) Scalable test problems for evolutionary multiobjective optimization. In: Abraham A, Jain L, Goldberg R (eds) Evolutionary multiobjective optimization. Advanced information and knowledge processing. Springer, London, pp 105–145. https://doi.org/10.1007/1-84628-137-76 https://doi.org/10.1007/1-84628-137-76

Eshelman LJ, Schaffer JD (1993) Real-coded genetic algorithms and interval-schemata. In: Foundations of genetic algorithms, vol 2. Elsevier, pp 187–202

Lee KB, Kim JH (2014) DMOPSO: Dual multi-objective particle swarm optimization. In: 2014 IEEE Congress on Evolutionary Computation (CEC). IEEE, pp 3096–3102

Li AD, He Z, Zhang Y (2016) Bi-objective variable selection for key quality characteristics selection based on a modified NSGA-II and the ideal point method. Comput Ind 82:95–103

Nebro AJ, Luna F, Alba E, Dorronsoro B, Durillo JJ, Beham A (2008) AbYSS: Adapting scatter search to multiobjective optimization. IEEE Trans Evol Comput 12(4):439–457

Nebro AJ, Durillo JJ, Garcia-Nieto J, Coello CC, Luna F, Alba E (2009) SMPSO: A new pso-based metaheuristic for multi-objective optimization. In: 2009. mcdm’09. ieee symposium on Computational intelligence in miulti-criteria decision-making. IEEE, pp 66–73

Pourvaziri H, Naderi B (2014) A hybrid multi-population genetic algorithm for the dynamic facility layout problem. Appl Soft Comput 24:457–469

Qiao S, Dai X, Liu Z, Huang J, Zhu G (2012) Improving the optimization performance of NSGA-II algorithm by experiment design methods. In: 2012 IEEE International Conference on Computational intelligence for measurement systems and applications (CIMSA). IEEE, pp 82–85

Saborido R, Ruiz AB, Luque M (2017) Global WASF-GA: an evolutionary algorithm in multiobjective optimization to approximate the whole pareto optimal front. Evol Comput 25(2):309–349

Toledo CFM, Franċa P M, Morabito R, Kimms A (2009) Multi-population genetic algorithm to solve the synchronized and integrated two-level lot sizing and scheduling problem. Int J Prod Res 47(11):3097–3119

Tran KD (2009) An improved non-dominated sorting genetic algorithm-ii (ANSGA-II) with adaptable parameters. Int J Intell Syst Technol Appl 7(4):347–369

Tsutsui S, Yamamura M, Higuchi T (1999) Multi-parent recombination with simplex crossover in real coded genetic algorithms. In: Proceedings of the 1st Annual Conference on Genetic and Evolutionary Computation. Morgan Kaufmann Publishers Inc., vol 1, pp 657–664

Yijie S, Gongzhang S (2008) Improved NSGA-II multi-objective genetic algorithm based on hybridization-encouraged mechanism. Chin J Aeronaut 21(6):540–549

Zhong YB, Xiang Y, Liu HL (2014) A multi-objective artificial bee colony algorithm based on division of the searching space. Appl Intell 41(4):987–1011

Zhou A, Qu BY, Li H, Zhao SZ, Suganthan PN, Zhang Q (2011) Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evol Comput 1(1):32–49

Zitzler E, Deb K, Thiele L (2000) Comparison of multiobjective evolutionary algorithms: Empirical results. Evol Comput 8(2):173–195

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Zhao, Z., Liu, B., Zhang, C. et al. An improved adaptive NSGA-II with multi-population algorithm. Appl Intell 49, 569–580 (2019). https://doi.org/10.1007/s10489-018-1263-6

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-018-1263-6