Abstract

Mitigating the disastrous effects of natural disasters by performing preparation activities is one of the main purposes of relief organizations. However, the high degree of uncertainty associated with disasters impedes the work of aid agencies considerably. In this regard, two-stage stochastic programs are often used in the relevant literature to support decision making in these situations. An accelerated L-shaped method is proposed in this work, which solves realistic large-scale two-stage stochastic problems within a reasonable time-frame, allowing relief organizations to react to short-term forecasts, as e.g. available in case of hurricanes or floods. In particular, computation times needed for solving the resulting sub-problems via a specialized interior-point method are significantly reduced by exploiting the specific structure of second-stage constraints. To show the superiority of this approach with respect to solution times, a realistic large-scale case study is developed for America’s hurricane-prone south-east coast. The accelerated L-shaped method outperforms the standard L-shaped method significantly whereas a commercial solver failed to solve the case study within an acceptable time-frame.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Disasters represent a serious problem for affected communities not only because of their destructive force but also because of their unpredictable nature. To be able to support decision makers like relief organizations, two-stage stochastic programs are often proposed in the disaster management related literature (Grass and Fischer 2016). This modeling approach is popular since decisions made before an uncertain event like a disaster, so-called first-stage decisions, can be improved in terms of, e.g., demand satisfaction by taking possible future developments and interim second-stage decisions into account. For instance, humanitarian organizations often have to purchase and store relief items, e.g. water or medicine, even before a disaster strikes. These first-stage decisions have to be made before information concerning demand as well as with respect to the actual network conditions becomes available. In the aftermath of a disaster, decisions regarding, e.g., the distribution of previously stored relief items to affected or injured people have to be made which belong to the second stage. Note that roads could become impassable and storage decisions have to take such possible circumstances into account in advance.

In order to make the application of the two-stage stochastic models valuable for humanitarian organizations, efficient solution methods are required. However, the relevant literature focuses more on model presentation than on proposing suitable solution algorithms. This constitutes a major obstacle to the use of two-stage stochastic programs in practice. Especially solution times of commercial solvers like CPLEX or Gurobi are much too long for realistic large-scale problems (Zheng et al. 2013; Pay and Song 2017).

A practical example where computation time is crucial is the forecast of hurricanes. In general, hurricanes can be predicted three to five days in advance. Based on these forecasts aid agencies often use existing buildings like hospitals and gyms as temporary warehouses or shelters. However, weather conditions can change the path and strength of a hurricane within few hours as it happened in 2005. Hurricane Wilma strengthened from the lowest category 1 (113 km/h) to the highest 5 (295 km/h) just within 24 h. To ensure fast response also in such cases, efficient solution methods are essential. Therefore, the aim of this paper is to propose an algorithm which solves a whole class of two-stage stochastic programs in disaster management within a reasonable time-frame and with a desired accuracy level. A representative of this class is the model proposed by Rawls and Turnquist (2010) for locating and pre-positioning relief items in preparation for a disaster. Such two-stage programs are commonly solved by the L-Shaped method (Slyke and Wets 1969) which is also known as the stochastic Benders decomposition. Since realistic problems are constantly increasing in size and complexity, decomposition methods are becoming increasingly popular as shown in the recent survey of Rahmaniani et al. (2017). The main idea of decomposition methods is to split up the original problem into smaller sub-problems which are easier to solve. In case of the L-shaped method, the two-stage structure is exploited by separating first and second stage. For the two-stage stochastic programs considered here, the resulting second-stage problems are often very large, requiring an efficient solution algorithm themselves. To solve these sub-problems efficiently, a specialized interior-point method (SIPM) is proposed in this work which exploits the specific structure of second-stage constraints in a new way leading to significant computational time savings.

Interior-point methods are often preferred to solve sub-problems within the L-shaped method in parallel, i.e. simultaneously, see e.g. Nielsen and Zenios (1997) and Fragnière et al. (2000). As the number of iterations remains nearly unaffected by an increased problem size, interior-point methods are particularly suitable for very large linear sub-problems (Gondzio and Sarkissian 2003). In addition, computations within interior-point methods can be executed in parallel, accelerating the solution process further. For instance, De Silva and Abramson (1998) show that their parallel interior-point method outperforms the simplex algorithm despite of its better warm-starting capabilities.Footnote 1 Interior-point methods are also applied by Naoum-Sawaya and Elhedhli (2013) where Benders decomposition is embedded in a Branch-and-Bound algorithm for the multicommodity capacitated fixed charge network design problem and the capacitated facility location problem. The latter is also investigated in Castro et al. (2016) for multiple periods. The approach proposed by these authors is related to the solution method presented in this paper. However, the problem considered there is not a two-stage stochastic program and hence, does not posses the specific structure of second-stage constraints which is discussed in this work.

In the humanitarian literature examples or case studies of only moderate size are usually chosen to ensure the solvability of the proposed model by means of commercial solvers or to evaluate the quality of the applied heuristic. In addition, the lack of adequate data impedes the design of genuine examples. However, large scale problems of pre-positioning relief items become more and more relevant for practical applications and more realistic examples have to be considered to validate methodological developments in realistic settings.

Therefore, a large-scale case study on pre-positioning relief items in the Gulf of Mexico area is developed in this work. Decisions have to be made concerning the location of facilities and the storage quantities of relief items prior to the beginning of the American hurricane season. The case study is based on the one presented by Rawls and Turnquist (2010) which is extended in different ways to make it more realistic. For instance, information from various sources like the National Oceanic and Atmospheric Administration (NOAA) and the International Federation of Red Cross and Red Crescent Societies (IFRC) is integrated. The resulting large-scale case study is solved by the accelerated L-shaped method showing significant advantages compared to commercial solvers and heuristics.

The main contribution of this work is to develop the specialized interior-point method (SIMP) which exploits the specific structure of second-stage constraints inherent in two-stage stochastic programs. Using this algorithm to solve the sub-problems within the standard L-shaped method leads to its accelerated version. Numerical tests show that the newly proposed accelerated L-shaped method can provide fast and near-optimal solutions to realistic large-scale problems. Obtaining solutions fast is especially useful for humanitarian organizations as they have to react as quickly as possible to fast-approaching disasters.

This paper is organized as follows: The two-stage stochastic model for pre-positioning relief items (Rawls and Turnquist 2010) is described in Sect. 2 and used as a reference model for two-stage stochastic programs in disaster management. After highlighting the important second-stage constraint structure of the model, the accelerated L-shaped method is proposed in Sect. 3. For this purpose, the classical L-shaped method is described first in Sect. 3.1. The specialized interior-point method (SIPM) is introduced in Sect. 3.2, leading to the accelerated L-shaped method. This algorithm is then applied to solve the large-scale case study, presented in Sect. 4.1. The numerical results discussed in Sect. 4.2 illustrate the benefits of the newly proposed solution method. Finally, conclusions and future research directions are given.

2 A two-stage stochastic program for pre-positioning relief items

Pre-positioning relief items especially nearby disaster-prone areas can be decisive in mitigating negative impacts of catastrophic events (Turkeš et al. 2017). In addition, storing first-aid items in advance may lead to purchase cost savings.

One of the most influential models in this regard is the one proposed by Rawls and Turnquist in 2010. In addition, it is a classical two-stage stochastic program and therefore used as the reference model in the following. In this model, location and storage decisions are made on the first stage, i.e. prior to a disaster. In the aftermath of a disaster, second-stage decisions are made concerning, e.g. the distribution of these items to areas where they are needed. The network considered in this model contains nodes to represent potential locations where storage facilities can be opened and demand for aid supplies can arise. In case of a disaster, pre-positioned items can be transported to these who are in need on roads, represented as arcs in the respective network. Demand as well as possible damages to storage facilities and roads depend on the location and the magnitude of a disaster which is not known a priori. Such uncertainties are modeled by defining appropriate scenarios with corresponding probabilities of occurrence.

In order to make the description of the newly proposed solution method more self-contained, the model and its notation are given below (Rawls and Turnquist 2010).

Sets:

I: set of demand nodes

K: set of relief items

L: set of facility size categories

N: set of nodes, \(I \subseteq N\)

R: set of arcs in the network

S: set of possible scenarios

Scenario-independent parameters:

\(\mathrm {b}^k\): space requirement per unit for relief item \(k \in \mathrm {K}\)

\(\mathrm {c}_{(i,\,j)}^{k}\): costs for transporting one unit of relief item \(k \in \mathrm {K}\) on link \((i,j) \in R\)

\(\mathrm {F}_{l}\): costs for opening facility of size \(l \in \mathrm {L}\)

\(\mathrm {h}^k\): penalty cost per unit for unused relief item \(k \in \mathrm {K}\), e.g. holding or spoilage costs

\(\mathrm {M}_{l}\): capacity of a facility of size \(l \in \mathrm {L}\)

\(\mathrm {p}^k\): penalty cost per unit for unsatisfied demand of relief item \(k \in \mathrm {K}\)

\(\mathrm {q}^k\): acquisition cost per unit for relief item \(k \in \mathrm {K}\)

\(\mathrm {u}^k\): required transport capacity per unit of relief item \(k \in \mathrm {K}\)

Scenario-dependent parameters:

\(\mathrm {P}_s\): probability of scenario \(s \in \mathrm {S}\)

\(\rho _{i}^{k\,s}\): proportion of relief item \(k\in \mathrm {K}\) at node \(i \in \mathrm {I}\) in scenario \(s \in \mathrm {S}\) which is still usable

\(\mathrm {U}_{(i,\,j)}^{s}\): maximal available arc capacity of link (\(i,\,j) \in \mathrm {R}\) in scenario \(s \in \mathrm {S}\)

\(\mathrm {v}_{i}^{k\,s}\): forecasted demand for relief item \(k\in \mathrm {K}\) at node \(i \in \mathrm {I}\) in scenario \(s\in \mathrm {S}\)

Scenario-independent decision variables (first stage):

\(r_{i}^{k}\): pre-positioned quantity of relief item \(k \in \mathrm {K}\) at node \(i\in \mathrm {I}\)

\(y_{i\,l}\): is 1, if facility of size \(l \in \mathrm {L}\) is opened at node \(i \in \mathrm {I}\); 0 otherwise

Scenario-dependent decision variables (second stage):

\(w_{i}^{k\,s}\): unsatisfied demand for relief item \(k \in \mathrm {K}\) at node \(i \in \mathrm {I}\) in scenario \(s \in \mathrm {S}\)

\(x_{(i,j)}^{k\,s}\): quantity of relief item \(k \in \mathrm {K}\) transported from i to j in scenario \(s \in \mathrm {S}\)

\(z_{i}^{k\,s}\): unused quantity of relief item \(k \in \mathrm {K}\) at node \(i \in \mathrm {I}\) in scenario \(s\in \mathrm {S}\)

The model formulation is as follows:

s.t.

The objective (1) is to minimize costs which are divided into first- and second-stage costs. Opening facilities (\(y_{il}\)) and pre-positioning relief items (\(r^k_i\)) causes costs on the first stage, i.e. before the disaster occurs. Second-stage costs contain transportation costs \(c_{(i,j)}^k\) and penalties for unused items \(h^k\) and unsatisfied demand \(p^k\), respectively. These costs are weighted by the probability of occurrence \(P_s\) for each scenario. Although these second-stage costs are not incurred de facto,Footnote 2 they are useful for supporting decisions on the first stage. Neglecting possible future developments of disasters when deciding where and how much to pre-position in advance (first stage), can have devastating consequences in terms of demand satisfaction later on. Therefore, possible second-stage actions are taken into account already before the catastrophe. In the aftermath of a disaster, actual demand and network conditions become known such that an updated distribution plan can be used in practice to supply affected people.

The first constraint group (2) ensures that not more than one facility is opened at node \(i \in I\). An open facility has a specific capacity \(M_l\), according to the chosen size, which cannot be exceeded by the pre-positioned amount of relief items, see (3). Both constraint groups (2) and (3) belong to the first stage and are therefore scenario independent. However, facility and pre-positioning decisions directly influence second-stage decisions concerning, e.g. transport quantities. According to (4), it is not allowed to transport more items along an arc than the available arc capacity \(U^s_{(i,j)}\). In case of road destructions, where \(U^s_{(i,j)}=0\) in (4) for a specific scenario, delivering relief items is impossible. The possibility of such road network conditions and the related risk has to be considered when location decisions are made. Moreover, facilities can be damaged or even destroyed such that the amount of usable items \(\rho _i^k r_i^k\) can be reduced significantly after a disaster. This has a direct implication for the amount of unsatisfied demand \(w_i^{ks}\) in (5). These flow conservation constraints balance, as usual, the incoming and outgoing flows. In particular, demand \(v_i^{ks}\) at node i is satisfied either by pre-positioned and undamaged items \(\rho _i^k r_i^k\) or by delivered quantities \(x_{(j,i)}^{ks}\). In case of a surplus, relief items remain unused, i.e. \(z_i^{ks}>0\). Constraints (5) are also referred to as linking constraints since the second stage is linked to the first stage through the use of first-stage variables \(r_i^k\).

As stated above, this model can be seen as a representative for two-stage stochastic programs in disaster management, especially in preparation for a disaster. For a unified presentation of the model and the corresponding solution method, a more general notationFootnote 3 of (1)–(8) is used in the following:

For the Rawls and Turnquist (2010) model first-stage vectors and matrix are denoted by \(a\in \mathbb R^{\vert I \vert (\vert K \vert + \vert L \vert )}\), \(\chi =(y ,r) \in \{0,1\}^{\vert I \vert \vert L \vert } \times \mathbb R^{\vert K \vert \vert I \vert }_+\), \(d\in \mathbb R^{2 \vert I \vert }\) and \(G\in \mathbb R^{2 \vert I \vert \times \vert I \vert (\vert K \vert +\vert L \vert )}\), respectively. Constraints (11) and (13) belong to the second stage with \(f,\gamma _s \in \mathbb {R}^{\vert R \vert \vert K \vert + 2 \vert N\vert \vert K\vert +\vert R\vert }_+\), right hand side vector \(t_s \in \mathbb {R}^{\vert R\vert +\vert N\vert \vert K\vert }\) and so-called technology matrix\(T_s \in \mathbb {R}^{\vert R\vert +\vert N\vert \vert K\vert \times \vert I\vert (\vert K\vert +\vert L\vert )}\) for each scenario s. The most important matrix with regard to the design of an efficient solution method is the recourse matrix\(W \in \mathbb {R}^{\vert R\vert +\vert N\vert \vert K\vert \times \vert R\vert \vert K\vert + 2 \vert N\vert \vert K\vert + \vert R\vert }\). If W is independent of s, as it is the case here, the two-stage program is said to have fixed recourse. For the underlying problem (1)–(8), the recourse is even complete, meaning that any first-stage decision \(\chi \) leads to a feasible second-stage decision \(\gamma _s\). This is due to the presence of variables \(w_i^{ks}\) and \(z_i^{ks}\) in (5). For instance, even if no facilities are opened and no relief items are stored, the corresponding second-stage solution is still feasible. In this case, demand remains completely unsatisfied such that the total costs in (9) contain only penalty costs for unsatisfied demand.

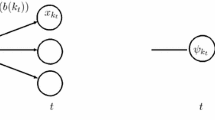

In order to get a better idea of the respective matrix structures, a small example is given below:

Example:

For \(\vert S\vert =3\), \(\vert N\vert =\vert I\vert =3\), \(\vert K\vert =2\) and \(\vert L\vert =2\), the first-stage vector \(\chi \) and matrix G in (10) look as follows:

The first three rows in G belong to constraints (2) and the last three rows to (3).

In case of the Rawls’ and Turnquist’s model, the second-stage decision vector \(\gamma _s\) for each scenario s is:

where slack variables \(g_{(1,1)},\ldots ,g_{\vert R\vert }\) for each arc \((i,j) \in R\) are added to \(\gamma _s\) to convert inequality constraints (4) into equalities. The technology matrix \(T_s\) contains only entries \(\rho _i^{ks}\) which are connected to the first-stage pre-positioning variables \(r_i^k\) in \(\chi \):

As will be shown later, the formulation of the recourse matrix W is decisive for an efficient solution algorithm in terms of fast computation times. The specific form is:

with \(u=[u^1 \cdots u^K 1] \in \mathbb {R}^{1\times (\vert K\vert +1)}\) and \(Q=\left[ \begin{array}{cccccc} -1 &{} &{} &{} 1 &{} &{} \\ &{} \ddots &{} &{} &{} \ddots &{} \\ &{} &{} -1 &{} &{} &{} 1 \end{array} \right] \in \mathbb {R}^{\vert N \vert \vert K\vert \times 2 \vert N\vert \vert K\vert }\). The recourse matrix W is a special lower block triangular matrix, where all entries are zero except on the main diagonal and the last block row. This structure is favorable for the solution method as will be shown in the next section.

Note that 1 in u corresponds to the slack variables \(g_{(1,1)},\ldots ,g_{\vert R\vert }\) as described above.

The last block row of W belongs to the flow conservation constraints (5) and contains block matrices \(H_i\in \mathbb {R}^{\vert N\vert \vert K\vert \times (\vert K\vert +1)}\), \(i=1,\ldots ,\vert R\vert \) such that \(H=[H_1 \;\; H_2 \;\; \cdots H_{\vert R\vert }] \in \mathbb {R}^{\vert N\vert \vert K\vert \times \vert R\vert (\vert K\vert +1)}\). For the example given above, H is as follows:

For instance, the first row in H indicates if relief items of type 1 are supplied to node 1 or transported away from node 1, i.e. \(-x_{(1,2)}^{1\,s}-x_{(1,3)}^{1\,s}+x_{(2,1)}^{1\,s}+x_{(3,1)}^{1\,s}\).

3 The accelerated L-shaped method

3.1 The L-shaped method

The two-stage structure in (9)–(13) is perfectly suited to split up the original problem into smaller sub-problems as it is done by the L-shaped method. Roughly speaking the idea is to solve the first- and second-stage problems separately and then to connect them through a set of optimality cuts; see Birge and Louveaux (2011) for a detailed description. The corresponding pseudo-code is given in Fig. 1.

The L-shaped method [based on Birge and Louveaux (2011, Ch. 5)]

In the initialization step \(\iota =0\), the so-called master problem containing only first-stage constraints (10) and (12) is solved without taking \(\sum _{s \in S} P_s f^T \gamma _s\) in (9) into account. The corresponding first-stage solution \(\overline{\chi }^0\) is included as an input parameter into each of the Ssub-problems in (16), see Fig. 1.

The dual of (16) is

where \(\pi _s\) is the vector of dual variables and is also referred to as the vector of simplex multipliers for each scenario s.Footnote 4 Using slack variables \(z_s\), an equality constrained maximization sub-problem is obtained:

For a given first-stage solution \(\overline{\chi }^0\) and the vector of simplex multipliers \(\overline{\pi }_s^0\), the objective function value of the second-stage sub-problem \(\vartheta ^0= \sum _{s \in S} P_s (t_s-T_s \overline{\chi }^0)^T \overline{\pi }_s^0\) is determined in the second step of the algorithm. If \(\vartheta ^0\) exceeds the current \(\theta ^0\), the counter of iterations \(\iota \) is increased by one and a first optimality cut

is inserted to the master problem, see Step 3 in Fig. 1.

For \(\iota =1\), the master problem in Step 1 of the algorithm is solved resulting in an updated first-stage solution \(\overline{\chi }^\iota \). Afterwards, \(\overline{\chi }^\iota \) is used for solving the sub-problems, leading to an additional optimality cut (15).Footnote 5 Note that \(\chi \) in (15) again is a vector of decision variables whereas \(\overline{\pi }_s^\kappa \) are the simplex multipliers found in previous iterations \(\kappa =0,\ldots ,\iota -1\). As long as the solution is not optimal, i.e. \(\theta ^\iota < \vartheta ^\iota \) for the current \(\overline{\chi }^\iota \), this process has to be repeated.

The main challenge is the efficient solution of the master problem in Step 1 and of the sub-problems in Step 2 of the L-shaped method. Often, integer or binary decision variables occur only on the first stage in the relevant literature, e.g. decisions concerning locations as in Rawls and Turnquist (2010), such that second-stage sub-problems are ’simple’ LP problems. However, these sub-problems can be very large in comparison to the master problem. For instance, assuming the entire American southeast coast with its 900 counties has to be prepared for the upcoming hurricane season, i.e. \(\vert N\vert =\vert I\vert =900\), such that the network contains potentially 810,000 shortest paths between each pair of nodes. While the actual network will likely be sparser, \(\vert R\vert =810{,}000\) arcs may exist in the worst case. If the number of items and possible facility sizes are set to \(\vert K\vert =\vert L\vert =3\), the master problem contains \(\vert I\vert (\vert K\vert +\vert L\vert )=5400\) decision variables (of which 2700 are binary) and \(2\vert I\vert =1800\) constraints. In contrast, each sub-problem has \(\vert R\vert \vert K\vert +2\vert N\vert \vert K\vert +\vert R\vert =3{,}245{,}400\) variables and \(\vert R\vert +\vert N\vert \vert K\vert =812{,}700\) constraints. Note that the respective sub-problem has to be solved S times, i.e. for each scenario, such that solving these problems efficiently is crucial for the overall performance of the L-shaped method. Usually, the linear sub-problems are solved via the simplex algorithm (Rahmaniani et al. 2017). However, as the problem size increases the computational effort of such exact methods becomes prohibitive. Therefore, here an iterative algorithm, namely the specialized interior-point method SIPM, is applied for solving the sub-problems as shown in the flow diagram of the accelerated L-shaped procedure in Fig. 2. In contrast, the master problem is solved via a commercial solver like CPLEX or Gurobi. The motivation behind this approach is that the master problem is of moderate size, e.g. 5400 variables as in the example above, and therefore does not present a significant hurdle for today’s optimization software.

Note that the only difference between the standard L-shaped method and its accelerated counterpart in Fig. 2 is the use of SIPM for the sub-problems.

3.2 A primal–dual interior-point method

In the following, it will be shown how the specific structure of W in (14) is exploited to improve a primal–dual interior-point method for solving sub-problems (16) and (17).Footnote 6

Especially for large scale problems, interior-point methods often outperform the simplex algorithm (Ferris et al. 2007, p. 212). Instead of evaluating vertices of the feasible region until the optimal solution is found, interior-point methods reach the optimal vertex from the interior. As a result, the algorithm can be terminated when the solution is close enough to the boundary, resulting in an approximate solution. This is particularly beneficial for the L-shaped method, since exactness is not required for the construction of valid optimality cuts (15) (Rahmaniani et al. 2017).

From the theory of linear programming it is well-known that a solution of a linear problem as presented in (16) is optimal if the following conditions are satisfied (Ferris et al. 2007, p. 108):

with \(\tilde{t}=t -T \overline{\chi }^\iota \), \(e=(1,\ldots ,1)^T\), \(Z=diag(z_1,\ldots ,z_n)\) and \(\varGamma =diag(\gamma _1,\ldots ,\gamma _n)\). Equation (19) states the primal and (18) the dual feasibility. Note that (20) represents the standard complementarity conditions, i.e. at optimum either \(\gamma _{j}\) or \(z_{j}\) has to be zero (for \(j=1, \ldots ,n\)). In this case the primal–dual solution vector is \((\gamma ^*, \pi ^*, z^*)\) if \(\gamma ^*\) is the solution of the primal problem (16) and \((\pi ^*, z^*)\) the solution of the dual problem (17). The main idea of interior-point methods is to perturb the complementarity condition (20) by introducing the barrier parameter \(\mu >0\):

During the solution process \(\mu \) is successively reduced such that the optimal solution is approached from the interior of the feasible set. In each iteration of interior-point methods, the system (18)–(21) has to be solved, where (20) is replaced by (22). Due to the nonlinearity of (22) the Newton methodFootnote 7 is applied to find the direction \((\triangle \gamma , \triangle \pi , \triangle z)\):

where I is an identity matrix of appropriate size. The right hand side of (23) represents the residuals of the corresponding constraints in (18), (19) and (22), respectively. In Fig. 3, the pseudo code of a general primal–dual interior-point method is given (Ferris et al. 2007).

After choosing an interior starting point \((\gamma ^0,\pi ^0,z^0)\), i.e. \(\gamma ^0,z^0\) are strictly positive, the search direction has to be determined in which the next step is taken. However, the new solution should not be outside the feasible region. This is ensured through a suitable choice of the step length parameter \(\alpha \), see Step 2 in Fig. 3.Footnote 8 If a predefined tolerance level is not reached in the last step of the algorithm (Step 3), the barrier parameter \(\mu ^{\mathbf {k}}\) is reduced and a new search direction is calculated.Footnote 9

3.3 Determining the search direction

The most expensive step within interior-point methods is the determination of search direction \((\triangle \gamma ^{\mathbf {k}},\triangle \pi ^{\mathbf {k}}, \triangle z^{\mathbf {k}})\) by solving (23) in each iteration \(\mathbf {k}\). Now, the idea is to exploit the specific structure of the recourse matrix W in (14) in order to reduce the size of system (23). This leads to the specialized interior-point method (SIPM). To simplify the presentation, the iteration counter \(\mathbf {k}\) is omitted for now.

Eliminating \(\triangle z\) from (23) by setting

leads to the so called reduced KKT-system:

with \(\mathcal {S} \in \mathbb R^{(2 \vert R\vert \vert K\vert +5\vert N\vert \vert K\vert +3\vert R\vert ) \times (2\vert R\vert \vert K\vert +5\vert N\vert \vert K\vert +3\vert R\vert )}\) for the Rawls and Turnquist (2010) model, the diagonal matrix D

with \(n=\vert R\vert \vert K\vert +2\vert N\vert \vert K\vert +\vert R\vert \) and right hand sides

One can even further reduce the KKT-system in (25) by eliminating \(\triangle \gamma \):

such that

and therefore the system of normal equations has to be solved:

with \(rhs=rhs_p+WD^{-1}rhs_d\). Solving (27) instead of (25) has disadvantages, e.g. if dense columns exist in W as it is often the case in practice.Footnote 10 However, due to the specific structure of W in (14), the so-called Schur complement\(WD^{-1}W^T\) of matrix \(\mathcal {S}\) in (25) is a block arrowhead matrix:

where only entries occur on the diagonal, in the last block row and last block column. The pattern of the arrowhead matrix allows the definition of block matrices in the following way:

with diagonal matrix A:

and

Then the system of normal equations (27) can be rewritten as

where \(\triangle \pi \) and the right-hand side rhs in (27) are partitioned accordingly.

Finally, eliminating \(\triangle \pi _1\) from (30)

leads to the equation:

where \(\mathcal {\tilde{S}}=(B-CA^{-1}C^T) \in \mathbb {R}^{\vert N\vert \vert K\vert \times \vert N\vert \vert K\vert }\) represents the Schur complement of \(W D^{-1} W^T\) in (29). As the recourse matrix W has full row rank, \(WD^{-1}W^T\) and thus its Schur complement \(\mathcal {\tilde{S}}\) is positive definite. After obtaining \(\triangle \pi _2\), one can determine the complete search direction \((\triangle \gamma ,\triangle \pi , \triangle z)\) just by back-solving the remaining Eqs. (31), (26) and (24). Note that these equations just require the inverses of diagonal matrices A, D and \(\varGamma \) which are computationally inexpensive. Now, the actual task is to solve (32) efficiently. However, this is not problematic since the major matrix size has been reduced significantly from \(2\vert R\vert \vert K\vert +5\vert N\vert \vert K\vert +3\vert R\vert \times 2\vert R\vert \vert K\vert +5\vert N\vert \vert K\vert +3\vert R\vert \) in (25) to \(\vert N\vert \vert K\vert \times \vert N\vert \vert K\vert \) in (32). In addition, one can exploit the symmetry and positive definiteness of \(\mathcal {\tilde{S}}\) by using the Cholesky factorization (Golub and Van Loan 2013, p. 163). In this way, \(\mathcal {\tilde{S}}\) is factorized such that

where \(\mathcal {L}\) is a lower triangular matrix.

Now, Eq. (32) can be solved through simple forward- and backward-substitutions:

Here, the product \(\vert N\vert \vert K\vert \) (locations multiplied by the number of relief items) and therefore the size of \(\mathcal {\tilde{S}}\) will not be very large in practical applications. Castro (2000) propose a similar solution approach for multi-commodity network problems where \(W D^{-1} W^T\) is also a block arrowhead matrix. However, W does not represent a recourse matrix in his application. In addition, the resulting Schur complement \(\mathcal {\tilde{S}}\) can reach a high degree of density and depends on the number of arcs which is very large in the multi-commodity network problem considered by Castro (2000). Therefore, the author suggests an iterative algorithm, namely the preconditioned conjugate gradient method, instead of Cholesky factorization.

The accelerated L-shaped procedure described in Fig. 2 can be applied to a whole class of two-stage stochastic programs as they are often proposed in disaster management. It is required that the underlying problem fulfills the required conditions for using the standard L-shaped method, i.e. the recourse should be fixed and integer variables occur only on the first stage. This is often the case for models proposed in humanitarian literature where, e.g. location decisions are made on the first stage (binary variables) and transport decisions on the second stage (continuous variables).

The second requirement for applying the accelerated L-shaped method is that the size of the master problem is relatively small. As indicated in Fig. 2, a commercial solver can be used in this case. Indeed, master problems in the humanitarian context are often of manageable size. For instance, first-stage decisions concerning relief locations are most frequently proposed in disaster management (Grass and Fischer 2016). As natural disasters hit usually a specific region with limited coverage, the corresponding locational master problem is therefore of moderate size.

Finally, the block triangular structure of the recourse matrix W in (14) is essential for the applicability of the specialized interior-point method. Various ordering techniques can be found in the literature which can reorder a matrix according to a block triangular form similar to (14). For instance, the reordering approach of Hu et al. (2000) can be applied to any sparse matrix independent of, e.g. symmetry. In this sense, the accelerated L-shaped method can be used to any two-stage stochastic programs fulfilling these conditions even beyond the humanitarian setting.

4 Realistic large-scale case study

In this section a realistic case study is designed to test the efficiency of the solution method proposed in the previous section. For reasons of comparability, this case study is based on the one used in Rawls and Turnquist (2010) considering the hurricane prone Gulf and Atlantic coast, but it is supplemented by more realistic data. For this case study solely hurricanes, i.e. storms with wind speeds of at least 119 km/h, are considered which can be classified into five categories depending on their wind speed, see Table 1.

4.1 Input data

In total, 900 counties at the American southeast coast have been hit by storms or hurricanes in the past. These counties are grouped to 90 nodes where each node represents the geographical center of the respective neighborhood, see Fig. 4. Hence, the number of nodes in the network, i.e. \(\vert I\vert =\vert N\vert \), has been increased from 30 to 90 in contrast to the case study of Rawls and Turnquist (2010). This allows a more detailed analysis since the area covered by each node and the distances between them are reduced. In this way, more precise estimations of affected people and the differentiation between areas which are actually hit by a hurricane and those which are close but less affected, are possible.

4.1.1 Storage facilities

Three different sizes of facilities can be opened with the following costs and capacities which are taken from Rawls and Turnquist (2010) for comparability reasons, see Table 2.

4.1.2 Specifications of relief items

For this case study five common types of relief items are considered with specifications given in Table 3. Water, MREs (meals-ready-to-eat) and tents belong to basic equipment of evacuated persons. Medical sets contain essential medicines for preventing epidemics and treating chronically ill persons whereas first aid kits (FAK) are used for injured people. The specific purchase costs \(q^k\), the consumption share per unit of transport capacity \(u^k\) and space requirement \(b^k\) are based on the South Carolina Emergency Operations Plan (SCEMD 2007) as well as the items catalogue of IFRC (2016).

Based on MSF (2016), costs for transportation \(c_{(i,j)}\) are set to $0.50 per kg and increase linearly with the respective distance.Footnote 11 Relief items can be transported only in case of undamaged arcs, i.e. where arc capacity is large enough. Here, \(U^s_{(i,j)}=100{,}000\) kg should be sufficient and is used for hurricane category 1–3 and for all arcs (i, j). Major hurricanes 4 and 5 cause complete destruction in specific scenarios s such that \(U^s_{(i,j)}=0\) for particular arcs (i, j). According to Rawls and Turnquist (2010), penalty costs for unused items \(h^k\) are one quarter of the purchase costs \(q^k\) and for unsatisfied demand, \(p^k=10\cdot q^k\).

4.1.3 Scenario generation

In order to determine realistic scenarios, the database International Best Track Archive for Climate Stewardship (IBTrACS) of the National Centers for Environmental Information is used, where all storms have been registered since 1900. In this time-frame, the landfall of 203 hurricanes can be identified with a diameter of 161 km on average (URI/GSO 2016). Hence, counties are assumed to be affected if they are located within 80.5 km of a hurricane path. Figure 5 shows areas which were hit by hurricanes of different categories from 1900 to 2014. Especially three regions were hit by hurricanes of the highest category (dark violet areas). One can deduce that these areas were also affected by weaker hurricanes and are therefore under particular threat. In contrast, counties far away from the coast were hit by weaker hurricanes (light pink areas) or by storms which are not classified as hurricanes (green areas).

The database IBTrACS provides information concerning locations, frequencies and strengths of hurricanes of the last 115 years. Hurricanes with similar characteristics, e.g. category and path, are clustered together allowing the definition of 118 scenarios. These scenarios differ in terms of hurricane location, demand for relief items and the destruction level of storage facilities and roads. In contrast, only 15 historical hurricanes are used for the case study proposed by Rawls and Turnquist (2010).

It is assumed that each of the 203 hurricanes which made landfall between 1900 and 2014 had the same probability to occur. As each scenario consists of several hurricanes these probabilities are summed up leading to the corresponding probability of scenario \(P_s\).

4.1.4 Demand

Since demand can be influenced by several factors, like hurricane category, additional floods or the degree of injuries, determining demand is a challenging task. It is assumed that the damage potential of a hurricane, as defined in Table 4, influences the actual demand for each scenario. For instance, 50% of the population in affected counties have to be supplied with pre-positioned relief items in case of a category-5-hurricane.

In addition, aid items should last for two days such that relief organizations have time to determine demand more exactly and can order additional supply. Following the emergency plan of South Carolina, e.g. three liters of water and two meals (MREs) per day and person are required (SCEMD 2007), i.e. one liter of water covers one-third and one meal covers one-half of the recommended daily amount, see Table 5. Hence, six liter of water and 4 MREs for each person have to be pre-positioned for the entire period of two days, as stated in the last column of Table 5.

The resulting case study has a problem size of 4,885,920 decision variables, of which 270 are binary, and over one million constraints (1,009,080). In contrast, a problem with 147,060 (90 binary) variables and 7608 constraints is studied and solved in Rawls and Turnquist (2010).

4.2 Computational results

4.2.1 Implementation details

All numerical experiments in this section were carried out on a Dell Latitude E7470 laptop with a dual 2.60 GHz Intel Core i7 processor and 8 GB of memory.

The commercial optimization solver Gurobi 7.0.0 was used to solve the original problem (1)–(8) directly. In addition, the standard and the accelerated L-shaped method, introduced in the previous section, were implemented in MATLAB 2016b. Note that the algorithm in Fig. 1 is a basic version of the L-shaped method and many acceleration techniques exist in the literature (Rahmaniani et al. 2017). For instance, the one-tree approach allows the implementation of a single search tree where solving the master problem to optimality in each iteration can be avoided (Naoum-Sawaya and Elhedhli 2013). In addition, advanced cut generation techniques, as proposed by, e.g. Saharidis and Ierapetritou (2013) and Sherali and Lunday (2013), can reduce the number of L-shaped iterations. However, the focus of this work is to highlight the superior behavior of SIPM concerning solution times for the sub-problems. As a result, and as will be shown in the following, the L-shaped method can be accelerated significantly. The advantage of the method proposed in Fig. 2 is that acceleration techniques like the one-tree approach and cut improvements can be applied in addition to SIPM. Such further developments of the L-shaped method seem very promising and are left for future research.

The only difference between the algorithm presented in Fig. 1 and the implementation in MATLAB is that a multi-cut approach (Birge and Louveaux 2011, 199) is used. In the classical version of the L-shaped method, a single optimality cut (15) is generated by summing up the multipliers \(\overline{\pi }_s^\kappa \) over all scenarios, potentially loosing valuable information. In the multi-cut approach one cut per scenario s is added to the master problem such that \(\theta \) is replaced by \(\theta _s\):

Hence, optimality cuts (15) are changed to

In this way, several optimality cuts are added to the master problem in every iteration, decreasing the required number of iterations, but also leading to an increased size of the master problem. As stated by Birge and Louveaux (2011), the multi-cut approach is recommended if the number of scenarios does not significantly exceed the number of first-stage constraints, as it is the case here.

According to Fig. 2, the Gurobi interface was used to solve the master problem in every iteration whereas each sub-problem was solved by either MATLAB’s built-in function linprogFootnote 12 (leading to the standard L-shaped method) or by SIPM introduced in Sect. 3.2 (leading to the accelerated L-shaped method). In the latter case a standard primal–dual path-following interior-point method was implemented in MATLAB (Ferris et al. 2007, Ch 8.3) but where the search direction was determined by the procedure described in the previous section. Solving (32) by the Cholesky factorization is the key issue within SIPM. For this purpose MATLAB’s built-in function chol was used to decompose matrix \(\mathcal {\tilde{S}}\) into matrices of lower and upper triangular form.

Both interior-point methods, i.e. linprog and SIPM, were terminated if the sum of relative residuals and the optimality gap

was smaller than 1e−06.Footnote 13

In the following these solution approaches are compared for the realistic large-scale case study.

4.2.2 Solution times for realistic large-scale case study

First, Gurobi 7.0.0 was used to solve the original model (1)–(8), requiring almost 65 h (see column ’Gurobi’ in Table 6).Footnote 14 A time-frame of almost three days is unacceptable especially when new short-term forecasts become available and disaster preparation has to take place in a rather short time-frame. Hence, the standard L-shaped method, i.e. where linprog was used for the solution of the sub-problems, was analyzed (see column ’L-Shaped’ in Table 6). According to Table 6 this approach led to a significant time reduction with an overall solution time of 7541 seconds (about 2 h). Applying the accelerated version of the L-shaped method resulted in further time savings. The solution time of 3968 s (about 1 h) was nearly twice as fast as the standard L-shaped method.

Numerical tests showed that after the relative optimality gap \(\frac{\text {upper bound} -\text {lower bound}}{\text {upper bound}}\) fell below 0.008 it remained almost unchanged in the following L-shaped iterations.Footnote 15 Such a tailing-off effect is a well-known drawback of Benders decomposition methods and several techniques are presented in the literature to overcome this unfavorable behavior (Rahmaniani et al. 2017). However, most of these approaches are problem-dependent and require adjustments for each problem formulation. As already mentioned, the main purpose of this work is to show the efficiency of the specialized interior-point method with respect to computation times and therefore no further acceleration techniques for the L-shaped method were used.

The good performance of the accelerated L-shaped method indicated in Table 6 is due to SIPM. As shown in Table 7, SIPM required on average 0.98 seconds for one sub-problem, i.e. for one scenario. Hence, 115.6 seconds (1.9 min) were needed on average to solve 118 scenarios in each iteration of the accelerated L-shaped method. Almost 80% of the overall time (3121 s of 3968 s) accounted for solving the sub-problems whereas the master problem is computationally inexpensive. In contrast, MATLAB’s built-in function linprog solved each of these 118 sub-problems in 2 seconds on average, i.e. double as much time as the new approach. As a result the standard L-shaped method in Table 6 needed twice the time of its accelerated counterpart.Footnote 16

Although a MATLAB implementation is not competitive compared with codes written in C/C++, it is intuitively easier to understand. Due to MATLAB’s user-friendly interface the accelerated L-shaped method is transparent and can be readily verified. Comparing linprog with the specialized interior-point method is reasonable as both approaches are implemented in MATLAB with the exception that the calculation of the search direction within linprog is coded in FORTRAN.

However, additional tests were carried out where Gurobi was used to solve the sub-problems. With Gurobi a solution time of 1.49 s per sub-problem was achieved on average when the dual simplex was chosen (see ’Dual Simplex’ in Table 7). Using Gurobi’s barrier method instead led to an average time of 0.93 s for each sub-problem. It can be concluded that if a more sophisticated programming language like Fortran or C++ was used, SIPM could outperform Gurobi further. It should be mentioned that calculation times could be reduced even more if the sub-problems were solved in parallel.

4.2.3 Location results for realistic large-scale case study

In this section the results for the specific planning problem are to be presented. Location decisions as determined by the proposed solution method are shown in Fig. 6, where the heuristically found solution of Rawls and Turnquist (2010) is added for comparison.

It is obvious that the solution structures concerning the location of facilities differ significantly. This is mainly due to the way in which scenarios have been defined, namely which areas have been identified as particularly vulnerable. In case of Rawls and Turnquist, two large-sized facilities are opened in areas which were hit by major hurricanes in the past and are therefore under particular threat. One can deduce that these facilities, and relief items stored there, are likely to be destroyed in a future hurricane setting. In this case items have to be supplied from the facility located at Memphis (blue triangle in the North). However, some serious injuries have to be treated with medicine within the first 4 h which cannot be guaranteed in this case. Even if some items are not time-critical, sufficient quantities may be not available due to the small size of this facility. In contrast, the solution of the large-scale case study suggests to locate much more small-sized facilities along the coast and the northern border. The latter can be used as backup storages if coastal facilities are destroyed.

It becomes clear that scenarios which only insufficiently reflect reality can have far-reaching implications with regard to the results and hence to the performance of humanitarian operations. When planning is based on more realistic scenario definitions, as in the proposed case study, storage facilities are located rather away from vulnerable areas, but still close enough to provide first aid. The results can then be used as decision support by humanitarian organizations

Facility locations as suggested by Rawls and Turnquist (2010) (top) and solution for the large-scale case study found by newly proposed method (bottom)

4.2.4 Additional tests

In order to verify the competitiveness of the accelerated L-shaped method additional tests were carried out. In particular, three case studies have been designed with problem sizes shown in Table 8.

The small-scale case study has the same size and data as the one proposed by Rawls and Turnquist (2010). Missing parameter values like distances and arc capacities were completed in the same way as described in Sect. 4.1.

The medium-scale case study is about half the size of the realistic case study with almost 700,000 constraints and two million decision variables.

Finally, the large-scale case study is the same size as the realistic case study but some scenario-dependent parameters were randomly altered. The purpose of this case study is to analyze the performance of the accelerated L-shaped method, and especially of SIPM, in the case of data changes.

According to Table 9 the L-shaped method cannot be recommended for small-sized problems as both versions were much slower than Gurobi, requiring several minutes instead of only seconds. The reason for the poor performance of the L-shaped method is that the master problem has to be solved in each iteration. Overall this is more time-consuming than solving the original problem directly. However, as the problem size increases the computational benefits of the L-shaped method become apparent.

As can be seen in Table 9, Gurobi needed almost 1 h to solve the medium-scale problem whereas the standard L-shaped method could reduce the overall solution time to about 22 min. The accelerated L-shaped method even halved this time again to about 10 min. Note that both versions of the L-shaped method were terminated after 16 iterations to avoid the tailing-off effect. As mentioned before the relative optimality gap showed little change in the following iterations. For reasons of comparability Gurobi was terminated after reaching a relative optimality gap of 0.06. However, as for the realistic case study, an actual gap of 0.02 was achieved.

For the large-scale problem, similar performance results as in Table 6 can be obtained. Moreover, the solution times are similar to the large-scale case study, i.e. almost 1 h for the accelerated method and 2 h for the standard method. Hence, changes in the data do not seem to have a big influence on the performance. In particular, both versions of the L-shaped method were much faster than Gurobi again reducing solution times significantly. Again this is mainly due to the use of SIPM for solving the sub-problems.

In Table 10 different solution approaches for sub-problems are compared.

The table reveals that SIPM is twice as fast as linprog independent of the problem size and input data. In addition, SIPM outperforms Gurobi’s dual simplex for the medium- and large-scale problem. Although written in MATLAB the specialized interior-point method can even compete with the barrier method of Gurobi. Based on these observations it can be recommended to implement SIPM into, e.g. CPLEX’s Benders decomposition to achieve solutions even faster.

As illustrated by the case study, more realistic problems are harder to solve even with state-of-the-art solvers. Here it was shown that exploiting the two-stage structure via the L-shaped method and making use of the special structure of the recourse matrix W in (14) leads to significant time savings. Of course, solution times may be not crucial for strategic location decisions. However, in practice most relief organizations use public buildings like gyms or town halls as provisional warehouses or shelters for evacuees and do not establish new buildings. Therefore, decisions where to store relief items are often made on a short-term basis and as soon as, e.g. a hurricane forecast is available. Hence, fast solution methods are essential to ensure responsiveness.

5 Conclusion and future research directions

Due to their unpredictable and devastating nature, disasters represent a serious threat for those who are affected. One of the main activities to mitigate human suffering is to take appropriate precautions like storing relief items at selected locations before a disaster occurs. Such decisions have to be made under a high degree of uncertainty and two-stage stochastic programming is a preferred approach in this regard. Especially the model proposed by Rawls and Turnquist (2010) is seen as a benchmark in the relevant literature and therefore used here as a representative for two-stage stochastic programs in disaster management. Obtaining solutions of these programs fast, especially in case of short-term forecasts, can be decisive in terms of rapid aid response and live-savings. Unfortunately, commercial solvers may be too memory- and time-consuming for realistic large-scale problems. As a remedy, heuristics are often suggested which have, however, several disadvantages. First, optimality of the resulting solution usually is not guaranteed and the optimality gap is often not measurable. Second, heuristics are usually problem-dependent such that relief organizations need the necessary know-how to use and adjust these heuristics for their applications.

Therefore, the L-shaped method is suggested in this paper which is an exact method but can be terminated when the desired accuracy level is achieved. In order to accelerate the standard L-shaped algorithm, the specialized interior-point method SIPM is used to solve the sub-problems efficiently by exploiting the specific structure of the second-stage constraints. In this way, the most expensive step within interior-point methods, namely the determination of search directions, is facilitated. To illustrate the advantages of this approach, a realistic large-scale case study for the hurricane-prone American south-east coast was designed. Time for solving this problem was reduced significantly in comparison to the Gurobi solver and to the standard L-shaped method. Additional tests confirmed the competitiveness of the newly proposed SIPM against Gurobi’s simplex and barrier method.

An important advantage of the accelerated L-shaped method is that it can be applied to a whole class of two-stage stochastic programs often proposed in the humanitarian literature. In order to extend this class of two-stage stochastic models even more, further developments are needed. For instance, difficult or large-scale master problems can be solved by constructing a single search tree as it is done by, e.g. the Branch-and-Benders-cut algorithm (Naoum-Sawaya and Elhedhli 2013) instead of repeatedly solving the master problem to optimality. Moreover, it would be useful to analyze what kind of cut-generation and branching strategies are particularly suitable for two-stage stochastic programs in disaster management. Extending the accelerated L-shaped method by both approaches represents a valuable research direction. In addition, future research should focus further on parallelized computations of sub-problems to generate several optimality cuts at the same time.

In conclusion, as solution methods which solve the underlying problem within a reasonable time-frame allow the humanitarian organization to react especially to short-term forecasts leading to less supply surplus, reduced costs, higher demand satisfaction and therefore reduced numbers of casualties, they are particularity useful for aid organizations. The L-shaped method and its modifications offer interesting future research opportunities in this regard.

Notes

Warm-starting is to use the solution to the previous problem as a starting point for the next problem. Especially simplex algorithms benefit from such warm-start points and can find the next solution after only few iterations. However, developing efficient warm-start strategies for interior-point methods is an active research area nowadays, see e.g. Cay et al. (2017).

Note that it is very unlikely that a scenario will occur exactly as predefined. Moreover, penalty costs for unsatisfied demand are only fictitious and used to minimize unmet demand.

The reader is referred to Birge and Louveaux (2011) for a thorough description of two-stage stochastic programs in general.

Note that simplex multipliers are also known as shadow prices which are available, e.g. in the final tableau of the simplex algorithm (Diwekar 2008, p. 23). However, the dual sub-problem will be important for the primal–dual interior-point method described in the next section.

For two-stage stochastic models where no complete recourse is given, so-called feasibility cuts have to be included to the master problem in addition to the optimality cuts (Birge and Louveaux 2011, Ch 5).

The approach is presented for one sub-problem such that the subscript s is omitted for now.

See Ferris et al. (2007) for a general description of the Newton method for interior-point algorithms.

An approach for setting the step length \(\alpha \) can be found in Ferris et al. (2007).

In such cases the matrix \(W D^{-1} W^T\) is often dense leading to an increased effort when computing its inverse.

Distances are determined by the ArcGIS software.

The built-in function linprog uses a predictor-corrector primal–dual interior-point method by default.

Such termination criteria are common for interior-point methods (Wright 1997, p. 226).

By default, Gurobi solves the MIP root node via the dual simplex method and run the barrier and simplex method on multiple threads concurrently for LP problems (Gurobi Optimization 2017).

For comparison reasons Gurobi was terminated if the relative optimality gap fell below 0.009. However, the relative optimality gap jumped from 0.173 to 0.004 in the last iteration of Gurobi.

As mentioned above, column ’L-Shaped’ in Table 6 refers to the method where MATLAB’s linprog and ’Accelerated L-Shaped’ where SIPM is used for solving the sub-problems. Since computation times for solving the master problem in each iteration are independent of the sub-problems and insignificant in comparison to the solution times of the sub-problems, the overall time for the L-shaped method consists mainly of computation times for the sub-problems. Therefore, solution times given in Table 7 have a major influence on the overall solution times of the L-shaped method as given in Table 6.

References

Birge, J., & Louveaux, F. (2011). Introduction to stochastic programming. Berlin: Springer.

Castro, J. (2000). A specialized interior-point algorithm for multicommodity network flows. SIAM Journal on Optimization, 10(3), 852–877. https://doi.org/10.1137/S1052623498341879.

Castro, J., Nasini, S., & Saldanha-da Gama, F. (2016). A cutting-plane approach for large-scale capacitated multi-period facility location using a specialized interior-point method. Mathematical Programming,. https://doi.org/10.1007/s10107-016-1067-6.

Cay, S. B, Pólik, I., & Terlaky, T. (2017). Warm-start of interior point methods for second order cone optimization via rounding over optimal Jordan frames. Technical report, ISE technical report 17T-006, Lehigh University, 2017. http://www.optimization-online.org/DB_HTML/2017/05/5998.html.

De Silva, A., & Abramson, D. (1998). A parallel interior point method and its application to facility location problems. Computational Optimization and Applications, 9(3), 249–273.

Diwekar, U. (2008). Introduction to applied optimization. Springer. http://www.ebook.de/de/product/7522360/urmila_diwekar_introduction_to_applied_optimization.html.

Ferris, M., Mangasarian, O., & Wright, S. (2007). Linear programming with MATLAB. Society for Industrial and Applied Mathematics,. https://doi.org/10.1137/1.9780898718775.

Fragnière, E., Gondzio, J., & Vial, J. P. (2000). Building and solving large-scale stochastic programs on an affordable distributed computing system. Annals of Operations Research, 99(1), 167–187. https://doi.org/10.1023/A:1019245101545.

Golub, G. H., & Van Loan, C. F. (2013). Matrix computations (4th ed.). Baltimore: John Hopkins.

Gondzio, J., & Sarkissian, R. (2003). Parallel interior-point solver for structured linear programs. Mathematical Programming, 96(3), 561–584.

Grass, E., & Fischer, K. (2016). Two-stage stochastic programming in disaster management: A literature survey. Surveys in Operations Research and Management Science, 21(2), 85–100. https://doi.org/10.1016/j.sorms.2016.11.002.

Gurobi Optimization. (2017). Parameter documentation. http://www.gurobi.com/documentation/7.5/refman/method.html#parameter:Method. Accessed December 05, 2017.

Hu, Y., Maguire, K., & Blake, R. (2000). A multilevel unsymmetric matrix ordering algorithm for parallel process simulation. Computers & Chemical Engineering, 23(11), 1631–1647. https://doi.org/10.1016/S0098-1354(99)00314-2.

IFRC. (2016). International federation of red cross and red crescent societies—Items catalogue. http://procurement.ifrc.org/catalogue/detail.aspx. Accessed January 01, 2017.

MSF. (2016). Aerzte ohne Grenzen e.V.-Private Communication.

Naoum-Sawaya, J., & Elhedhli, S. (2013). An interior-point Benders based branch-and-cut algorithm for mixed integer programs. Annals of Operations Research, 210(1), 33–55. https://doi.org/10.1007/s10479-010-0806-y.

Nielsen, S. S., & Zenios, S. A. (1997). Scalable parallel Benders decomposition for stochastic linear programming. Parallel Computing, 23(8), 1069–1088. https://doi.org/10.1016/S0167-8191(97)00044-6.

NOAA/AOML. (2016). National oceanic and atmospheric administration/Atlantic oceanographic and meteorological laboratory. http://www.aoml.noaa.gov/hrd/tcfaq/D5.html. Accessed August 18, 2017.

Pay, B. S., & Song, Y. (2017). Partition-based decomposition algorithms for two-stage stochastic integer programs with continuous recourse. Annals of Operations Research,. https://doi.org/10.1007/s10479-017-2689-7.

Rahmaniani, R., Crainic, T. G., Gendreau, M., & Rei, W. (2017). The Benders decomposition algorithm: A literature review. European Journal of Operational Research, 259(3), 801–817. https://doi.org/10.1016/j.ejor.2016.12.005.

Rawls, C. G., & Turnquist, M. A. (2010). Pre-positioning of emergency supplies for disaster response. Transportation Research Part B: Methodological, 44(4), 521–534. https://doi.org/10.1016/j.trb.2009.08.003.

Saharidis, G. K. D., & Ierapetritou, M. G. (2013). Speed-up benders decomposition using maximum density cut (mdc) generation. Annals of Operations Research, 210(1), 101–123. https://doi.org/10.1007/s10479-012-1237-8.

SCEMD. (2007). South Carolina emergency management division-South Carolina logistical operations plan: Appendix 7. http://dc.statelibrary.sc.gov/handle/10827/20614. Accessed August 18, 2017.

Sherali, H. D., & Lunday, B. J. (2013). On generating maximal nondominated benders cuts. Annals of Operations Research, 210(1), 57–72. https://doi.org/10.1007/s10479-011-0883-6.

Slyke, R. M. V., & Wets, R. (1969). L-shaped linear programs with applications to optimal control and stochastic programming. SIAM Journal on Applied Mathematics, 17(4), 638–663. https://doi.org/10.1137/0117061.

Turkeš, R., Cuervo, D. P., & Sörensen, K. (2017). Pre-positioning of emergency supplies: Does putting a price on human life help to save lives? Annals of Operations Research,. https://doi.org/10.1007/s10479-017-2702-1.

URI/GSO. (2016). University of Rhode Island and Graduate School of Oceanography-Hurricanes: Science and Society. http://www.hurricanescience.org/science/science/hurricanestructure/. Accessed August 18, 2017.

Wright, S. J. (1997). Primal–dual interior-point methods. Philadelphia: SIAM. https://doi.org/10.1137/1.9781611971453.bm.

Zheng, Q. P., Wang, J., Pardalos, P. M., & Guan, Y. (2013). A decomposition approach to the two-stage stochastic unit commitment problem. Annals of Operations Research, 210(1), 387–410. https://doi.org/10.1007/s10479-012-1092-7.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Grass, E., Fischer, K. & Rams, A. An accelerated L-shaped method for solving two-stage stochastic programs in disaster management. Ann Oper Res 284, 557–582 (2020). https://doi.org/10.1007/s10479-018-2880-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10479-018-2880-5