Abstract

Everyday, electricity generation companies submit a generation schedule to the grid operator for the coming day; computing an optimal schedule is called the unit-commitment problem. Generation companies can also occasionally submit changes to the schedule, that can be seen as intra-daily incomplete recourse actions. In this paper, we propose a two-stage formulation of unit-commitment, wherein both the first and second stage problems are full unit-commitment problems. We present a primal-dual decomposition approach to tackle large-scale instances of these two-stage problems. The algorithm makes extensive use of warm-started bundle algorithms, and requires no specific knowledge of the underlying technical constraints. We provide an analysis of the theoretical properties of the algorithm, as well as computational experiments showing the interest of the approach for real-life large-scale unit-commitment instances.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In energy management, a key problem known as “unit-commitment” deals with finding a minimal cost production schedule that satisfies the operational constraints of the production units and that meets customer load as closely as possible. (see the recent review Tahanan et al. 2015). Since operational constraints involve delays (start-up delays, etc.), the computed production schedule is often determined quite ahead of real-time. In electrical systems wherein renewable generation has overall high generation capacity, uncertainty is strongly present and has a key impact on both “feasibility” and “optimality” of the executed production schedule. In practice, spinning reserves and intra-daily changes to the schedule allow the operator to partially account for uncertainty. Highly binding operational constraints might give rise to difficult situations, wherein the quest for “feasibility” induces a heavy cost. As such, computing a schedule having seen at least part of the uncertainty might turn out to be less costly eventually. Ideally, two-stage unit-commitment problems would consider uncertainty on customer load, on renewable generation, on inflows for the hydro reservoirs and on unit availability. In this paper, we consider here the first two sources of uncertainty. The third one could also be integrated in our approach, following, e.g., van Ackooij et al. (2014).

Stochastic unit-commitment models are less common in the literature than deterministic ones, and none of them could capture the situation of this paper. Many existing approaches (including Philpott et al. 2000; Takriti et al. 2000, 1996; Nowak and Römisch 2000; Wu et al. 2007) use scenario trees where uncertainty in each node is known when the decision is taken. The robust unit-commitment models (Zhao and Zeng 2012; Bertsimas et al. 2013) decouple commitment decisions (starting/stopping status of each unit) from dispatch decisions (power output), which are taken only when uncertainty is known. In these approaches, it is unclear what schedule (including power output) has to be sent to the grid operator—which is our practical motivation. The two-stage model of Zheng et al. (2013) allows to adapt some commitment decisions and the resulting stochastic unit-commitment problems are amenable to mixed-integer linear programming solvers by using a technique of Sherali and Fraticelli (2002). However the problems tackled (with only five thermal units) is an order of magnitude smaller that the problems we target.

In this paper, we formalize the situation as two-stage models, wherein both the first and second stage are full unit-commitment problems. We see the occasional changes of schedule as intra-daily recourse actions, incomplete because of technical constraints on generation. We allow a rich modelling of these operation constraints (possibly, non-linear, non-convex, with discrete variables) that lead to large-scale mixed-integer problems, out of reach for direct approaches and even for existing decomposition methods. We propose a tractable primal-dual decomposition approach for these large-scale unit-commitment problems, attacking both stages by duality. Our algorithm uses the same ingredients (deterministic subproblems, cutting plane models, bundle methods, primal recovery heuristics) as in the deterministic case (see e.g., Dubost et al. 2005; Zhuang and Galiana 1988; Feltenmark and Kiwiel 2000). We pay a special attention to hot-starting which is a critical issue in view of tackling large-scale problems.

Here is the outline of this paper. First, Sect. 2 introduces unit-commitment problems in the deterministic and the stochastic cases: notation and assumptions are presented. The state-of-the art is sketched. Section 3 presents the decomposition algorithm, relaxing coupling constraints in both the first and second stages. The method is put into perspective in Sect. 4, where the convexification effect, the interpretation of stopping tests, and the convergence properties are analyzed. In the final section, we present numerical illustrations on real-life unit-commitment instances.

2 Stochastic unit-commitment: notation, assumptions, and state of the art

2.1 A structural viewpoint on deterministic unit-commitment: notation and presentation

Unit-commitment problems are already challenging in a deterministic setting: the units are coupled through constraints such as the offer-demand equilibrium constraint, and are subject to many technical constraints, specific to the their type (thermal, hydraulic, contracts). We consider here m units indexed by \(i=1,\ldots ,m\). We denote the decision variables (including production) of unit i by \(x_i\in \mathbb {R}^{n_i}\), its production cost by \(f_i(x_i)\) and its specific production constraints by \(x_i\in X_i\). The decision variable is thus \(x = (x_1,\ldots ,x_m) \in {\mathbb {R}}^n\) where \(\sum _{i=1}^m n_i = n\). Units are linked through the offer-demand equilibrium constraints, that state that deviation between production and customer load has to remain small. These constraints have the typical form

where \(s^d, s^u \in {\mathbb {R}}^T\) are operator chosen bounds, T is the number of time steps in the considered time horizon, \(D\in \mathbb {R}^T\) is the customer load, and A the \(T \times n\) matrix summing up the decision vector \(x = (x_1,\ldots ,x_m) \in {\mathbb {R}}^n\). An abstract formulation of deterministic unit-commitment has then the following form

Using aggregated objects \(f(x) = \sum _{i=1}^m f_i(x_i)\),

we can write the above unit-commitment problem in a short manner as:

Practical formulations of (2) often lead to mixed-integer problems. Since there now exist strong commercial solvers, this has become the major approach for solving unit-commitment (e.g., Carrión and Arroyo 2006; Morales-España et al. 2013a, b).

However the success of this direct approach strongly hinges on the the modelling detail that we decide to integrate in the subproblems. The sets \(X_i\) can indeed require a large number of modelling variables together with nonlinear terms in the objective and constraints. For example, hydraulic “units” are typically entire hydro valleys operating independently. Key constraints are bilateral bounds on volume in each reservoir, flow constraints, and technical constraints on turbining/pumping operation. Moreover, the value of water is typically computed by a mid-term planning tool. Uncertainty on inflows can also be taken into account, for instance by using joint probabilistic constraints as in van Ackooij et al. (2014). In quite a similar way, thermal units are subject to many technical constraints on power variations, starts, ramping rates, minimum up/down times. Most of these constraints imply non-convexities and typical modelling involves binary variables. Therefore, optimizing a single unit with respect to a price signal can already be quite challenging, see, e.g., Finardi and Silva (2006) for hydro valley units and Frangioni and Gentile (2006), Langrene et al. (2011) for thermal units.

In large-scale systems, or in systems requiring substantial modelling detail, decomposition approaches for (2) appear as the only viable solution. The unit-commitment instances for the French system for example are both large scale and require substantial modelling detail. In order to tackle such problems, the coupling constraints are often dualized, using Lagrangian techniques (see Dubost et al. 2005; Frangioni et al. 2011 and references therein). An important feature of this Lagrangian decomposition approach is that it provides marginal prices that are useful for the operator of the system. Though this approach leads to non-feasible primary solutions, it also gives good starting points for primal recovering heuristics (see Wang et al. 1995; Beltran and Heredia 2002; Dubost et al. 2005; Frangioni et al. 2008; Sagastizábal 2012; Zhuang and Galiana 1988).

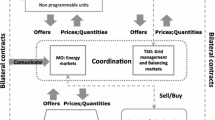

2.2 Recourse in unit-commitment, assumptions

A solution of problem (2) defines a production schedule x (commitment decisions and power output), sent to the grid-operator before being activated and before observing uncertainty. In real time, a new production schedule, redefining both commitment decisions and power output, can be sent to the grid-operator at specific moments in time. This implies that the recourse problem is exactly of the same structure as (2) and has the same complexity, but with a smaller time horizon.

More precisely, we consider an abstract random process \(\xi \in \mathbb {R}^k\) affecting uncertainty on customer load and renewable generation. Observing this process at time step \({\tau }\in \left\{ 1,\ldots ,T\right\} \) results in “observing” the net customer load \(D(\xi ) \in \mathbb {R}^T\). This load consists of \(D(\xi )_1,\ldots ,D(\xi )_{{\tau }}\), the actually observed net customer load of the previous time \(t=1,\ldots ,{\tau }\) and \(D(\xi )_{{\tau }+1},\ldots ,D(\xi )_{T}\), the current best forecast of net customer load after \({\tau }\).

We introduce the appropriate modification of \(X^2\) involving the change in D denoted

and the recourse cost function \(c : \mathbb {R}^n \times \mathbb {R}^k \rightarrow \mathbb {R}\cup \{+\infty \}\) as:

where P is a \(\ell \times n\) matrix having a single non-zero element for each line and column. The equation \(Px=Py\) models the fact that the power output of each unit prior to \({\tau }\) is fixed and that the recourse decision y can only modify power output after \({\tau }\). The segment of y corresponding to decisions taken prior to time \(\tau \) can be seen as duplicated according to the scenarios. The constraint \(Px=Py\) can thus be seen as an non-anticipativity constraint (see, e.g., Carøe and Schultz 1999) since it enforces that the decisions are equal on all scenarios. Note that we make a slight abuse of notation in (3): we use the total cost f(y) instead of the costs restricted to time after \(\tau \); this will simplify the notation in our developments.

To simplify presentation, we consider in this paper that the process \(\xi \) has a discrete distribution: its realizations (called scenarios) are labelled

We refer to Feng et al. (2015) for a recent work on load scenario forecasting. The expected recourse cost function is then naturally defined as

This leads to the following formulation of the two-stage unit-commitment problem, which is the problem we focus on in this paper

The constraints of the problem (5) are the same as the initial unit-commitment problem (2). As explained in the previous section, \(X^1\) can contain many binary variables, implicit constraints, joint probabilistic constraints. In this paper, we do not suppose to know \(X^1\) explicitly; we just make the following assumptions on our problem (5):

-

Practical assumption 1: we can solve (approximatively) the (sub)problems defined as minimizing the sum of \(f_i\) and a linear term over \(X_i\):

$$\begin{aligned} \min _{x_i\in X_i} f_i(x_i) + b_i^{{\mathsf {T}}}x_i. \end{aligned}$$ -

Practical assumption 2: Lagrangian based primal recovery heuristics (e.g., Feltenmark and Kiwiel 2000; Takriti and Birge 2000; Borghetti et al. 2003) are readily available to build a primal feasible solution out of primal iterates and dual information.

-

Theoretical assumption on \(X^1\): each \(X_i\subset \mathbb {R}^{n_i}\) is compact. The compactness of \(X^1\) implies that its convex hull \({{\mathrm{conv}}}(X^1)\) is compact as well [by Hiriart-Urruty and Lemaréchal (1996a, III.1.4.3)]. Thus the sets \(X^1 \cap X^2\) and \({{\mathrm{conv}}}(X^1) \cap X^2\), and \({{\mathrm{conv}}}(X^1 \cap X^2)\), that will appear in our analysis, are also compact.

-

Theoretical assumption on f: each \(f_i:\mathbb {R}^{n_i}\rightarrow \mathbb {R}\) is a closed convex function on \(\mathbb {R}^{n_i}\). In view of the first practical assumption above, \(f_i\) should be simple, as piece-wise linear or quadratic. We also assume the \(f_i\) are bounded from below; without loss of generality, we consider \(f_i \ge 0\).

-

Consistency assumption: observe that (5) has implicit constraints coming from v, whose domain is

$$\begin{aligned} {\mathrm {dom}}(v) := \left\{ x : v(x)<+\infty \right\} = \left\{ x : \text {for all } \xi \in \varXi , \exists \, y\in X^2(\xi ) \text { such that } Px=Py\right\} . \end{aligned}$$Our final assumption is that \({\mathrm {dom}}(v)\) is nonempty, so that there exists a solution to (5).

2.3 Limitations of existing decomposition approaches

Two-stage unit-commitment problems are very difficult in a large-scale setting. In fact, computing \(c(x,\xi )\) for a fixed \((x,\xi )\) is a full unit-commitment problem, which is already difficult when the set \(X^1\) is complex and m large. Solving our problem (5) therefore requires a decomposition approach, that can be either primal (Benders decomposition) or dual (Lagrangian decomposition). Let us sketch these approaches and the existing methods proposed for related stochastic unit-commitment problems.

In a primal approach to (5), the recourse cost function at given x would involve modifications of \(X^1\) with initial condition x and constraints related to time steps after \(\tau \). Making appropriate changes to handle modified constraint sets might involve significant additional modelling, that we want to avoid. Note that, for specific two-stage models Takriti et al. (2000) proposes a Benders decomposition approach plugging the two-stage cost function into the first stage. This primal approach considers a simple form for the second-stage problem (which is fuel requirement problem).

A dual approach to (5), by duplicating variables along scenarios and dualizing the non-anticipativity conditions, would get rid of x, making unclear how to restore feasibility (i.e., \(x\in X^1\) and \(Px=Py\)). The strategy of Carøe and Schultz (1999), that embeds the Lagrangian scheme within a branch and bound method, is not possible in our setting, where the deterministic model is already too large to fit in such a scheme. A dual decomposition is considered in Takriti et al. (1996) for a specific stochastic unit-commitment problem. The commitment decisions are the only binary variables in \(X^1\) and a progressive hedging algorithm (Rockafellar and Roger 1991) is used to average these out. The sets \(X^1\) are still rather simple and one can easily fix the obtained schedule to create a \(X^1\)-feasible solution. This would not be possible when \(X^1\) is defined by many binary variables (e.g., some realistic thermal sub-problems might require up to 100 binary variables per time step). The recent work Cheung et al. (2015) also presents a dedicated progressive hedging algorithm.

Another Lagrangian-based decomposition is proposed by Carpentier et al. (1996) where uncertainty is discretized on a scenario-tree, and an augmented Lagrangian dual is used to relax the coupling constraints of \(X^2(\xi )\). The subproblems are then stochastic 1-unit optimization problems, requiring important modifications of \(X^1\) and special resolution approaches, which we want to avoid here. Similar approaches (Dentcheva and Römisch 1998; Nowak 2000; Nowak and Römisch 2000; Gröwe-Kuska et al. 2002 ) can be viewed as geographical decomposition, following the terminology of Dentcheva and Römisch (2004). These methods all lead to stochastic subproblems requiring special treatments (by dynamic programming, for example as in Nowak and Römisch 2000). Finally, in Nürnberg and Römisch (2003), integer variables remain present in the second stage after decomposition of uncertain system wide constraints by stochastic Lagrange multipliers.

To sum up, all the existing primal or dual approaches

-

either involve significant simplifications of the second stage,

-

or make unclear how to recover feasible first stage solutions \(x \in X^1\),

-

or make significant changes to the set of technical constraints \(X^1\).

None of the existing decomposition approaches could tackle our stochastic unit-commitment problem (5) in our targeted applications. In the next section, we propose a primal-dual decomposition combining good aspects of both primal and dual approaches.

3 Primal-dual decomposition approach to two-stage unit-commitment

This section presents our decomposition algorithm for solving the large-scale stochastic unit-commitment problem (5). Our approach is primal-dual as it uses relaxation for both stages: the second stage dual algorithm constructs linearizations of the objective function in a way that we can store information for later use (Sect. 3.1), and the first stage dual algorithm produces lower bounds on the optimal value (Sect. 3.2). We introduce notation and recall basic properties in the first two sections, then we present our algorithm in Sect. 3.3.

3.1 Dual approach to the 2nd stage: linearizations of the objective

We apply here the standard Lagrangian relaxation mechanism (see e.g. Lemaréchal 2001) to the second stage problems (3) to build a cutting planes model of the recourse function v. For a given \((x,\xi ) \in \mathbb {R}^n \times \mathbb {R}^k\), we relax the linking constraints \(Px=Py\) and the coupling constraints in \(X^2(\xi )\). For any variable \(({\lambda }_1,{\lambda }_2,{\lambda }_3)\) in the dual space \(\varLambda := \mathbb {R}^\ell \times \mathbb {R}_+^T \times \mathbb {R}_+^T\), the dual function has the following structure:

where the function \(\bar{\theta }\), independent from x and \(\xi \), is defined by

The dual function \(\theta _{x,\xi }\) is concave by construction and computing a value and a subgradient amounts to computing \(\bar{\theta }({\lambda }_1,{\lambda }_2,{\lambda }_3)\) and \(g \in \partial \bar{\theta }({\lambda }_1,{\lambda }_2,{\lambda }_3)\). The interest of the Lagrangian approach resides in the fact that this computation decomposes over the production units:

Bundle algorithms [see e.g. Hiriart-Urruty and Lemaréchal (1996b, Chap.XV)] are the methods of choice for maximizing the dual function. We emphasize that all the information on \(\bar{\theta }\) computed during maximizing \(\theta _{x,\xi }\) can be stored and used to warmstart the maximization of \(\theta _{x',\xi '}\) for another \((x',\xi ')\). This will be of importance for the numerical efficiency of the method.

It is well-known that maximizing the dual function provides information on the primal value function c. Specifically, by weak duality, we have for \((x,\xi )\) and any \(({\lambda }_1,{\lambda }_2,{\lambda }_3) \in \varLambda \),

For a fixed \((\bar{x},\xi )\), this yields

We thus have a linearization at \(\bar{x}\) for the mapping \(c(\cdot ,\xi )\) and, obviously, the best linearizations are those given by the optimal dual solutions for \((\bar{x},\xi )\).

By integration over all the scenarios, we directly get a linearization of the recourse function v. Repeating this for several points \(x^1,\ldots ,x^{k-1}\) allows us to define a cutting-plane model of v

with appropriately aggregated \(\bar{g}^i\) and \(\bar{v}^i\). More specifically, by (4), we have

for the dual variables \(\left( {\lambda }_1(x^i, \xi _j),{\lambda }_2(x^i, \xi _j),{\lambda }_3(x^i, \xi _j)\right) \). Note that we aggregate linearizations as above to simplify presentation. As usual in stochastic programming (see e.g. Ruszczyński 2003), the cuts could also be combined in other ways; among them multi-cuts of Birge and Louveaux (1988, 1997) or the partially aggregating cuts of Xiong and Jirutitijaroen (2011). Our algorithm is compatible with any such versions; the effect of multi-cuts is illustrated in Sect. 5.2.

3.2 Dual approach to the first stage problem: lower bound for our problem

We use now the cutting plane model (8) to get a lower bound for our problem (5). For a fixed k, we consider the following approximated first stage optimization problem, wherein v is replaced by \(\check{v}_k\)

We dualize all the coupling constraints: those in \(X^2\) and those provided by \(\check{v}_k\). Gathering the linearizations in \(G_k:=(\bar{g}^i)_{i=1,\ldots ,{k-1}}\in \mathbb {R}^{n\times (k-1)}\) and \(b_k:=(\bar{v}^i)_{i=1,\ldots ,{k-1}}\in \mathbb {R}^k\), the concave dual function of (10) writes

for any dual variables \((\mu ,\nu _1,\nu _2)\in \mathbb {R}_+^{k-1}\times \mathbb {R}_+^T\times \mathbb {R}_+^T\) with \(\sum ^k_{k=1}\mu _k=1\). By weak duality and the fact that \(\check{v}_k(x) \le v(x)\), we have that \(\varTheta _{k}(\mu ,\nu _1,\nu _2)\) is a lower bound on the optimal value of (5). Note that the lower bound thus comes out as the addition of two gaps: the duality gap between (10) and its dual on top of the approximation gap coming from replacing v by \(\check{v}_k\). We need to control these two gaps in the algorithm.

3.3 Description of the algorithm

The material of the previous sections allows us to design the following decomposition approach for the two-stage unit-commitment problem (5).

-

Step 0 (Initialization): Choose the stopping tolerance \({\delta _{\mathtt{tol}}}>0\) and a feasibility detection target \({\theta _{\mathrm{feas}}}>0\) (strictly greater than the optimal value). Set parameters for the first and second stage bundle algorithms. Choose \((\nu ^{0}_1,\nu ^{0}_2)\in \mathbb {R}_+^T\times \mathbb {R}_+^T\), and set \(k=1\).

-

Step 1 (First stage): At iteration \({k}\), use a bundle method to maximize \(\varTheta _{k}\) in (11) starting at the current dual variables \((\mu ^{{k}-1},\nu ^{{k}-1}_1,\nu ^{{k}-1}_2)\). Run this algorithm until convergence or just for a few steps; when it is stopped, it returns new dual variables \((\mu ^{{k}},\nu ^{{k}}_1,\nu ^{{k}}_2)\) such that

$$\begin{aligned} \varTheta _{k}(\mu ^{{k}-1},\nu ^{{k}-1}_1,\nu ^{{k}-1}_2) \le \varTheta _{{k}}(\mu ^{{k}},\nu ^{{k}}_1,\nu ^{{k}}_2) \le \text {optimal value of (5)}. \end{aligned}$$ -

Step 2 (Lagrangian heuristics): Use any heuristic for recovering \(x^{{k}}\in X^1\cap X^2\) a primal feasible solution of (10). Define the observed duality gap by \(\varDelta _G^{k}:= f(x^{{k}}) + \check{v}_{k}(x^{{k}})-\varTheta _{k}(\mu _1^{k},\nu ^{k}_1,\nu ^{k}_2)\).

-

Step 3 (Second stage, model enrichment): For each couple \((x^k,\xi )\) with \(\xi \in \varXi \), maximize \(\theta _{x^k,\xi }\) using a hot-started bundle algorithm, stopped if \(\theta _{x^{{k}},\xi }({\lambda }_1^k,{\lambda }_2^k,{\lambda }_3^k) \ge {\theta _{\mathrm{feas}}}\). Once all second stage problems are processed, add a linearization to the cutting plane model to create \(\check{v}_{k+1}\). Define the approximation error \(\varDelta _A^{k}:= \check{v}_{k+1}(x^{{k}}) - \check{v}_{k}(x^{{k}})\).

-

Step 4 (Stopping Test): If \(\check{v}_{k+1}(x^{{k}}) \le {\theta _{\mathrm{feas}}}\), check if \(\varDelta _A^{k}< {\delta _{\mathtt{tol}}}\) holds, in which case, move to the next step. If not, increment k to \(k+1\) and return to Step 1.

-

Final step (Optional post-treatment): Try to improve \(x^{{k}}\) by using some (costly) heuristic approaches.

This algorithm has the same components as decomposition algorithms for deterministic unit-commitment, namely cutting plane models, bundle methods and primal recovery heuristics (see e.g., the section on Lagrangian decomposition in Tahanan et al. 2015 and references therein). Let us discuss some points.

3.3.1 First stage problem and Lagrangian heuristics

The dual bundle algorithm of Steps 1 provides good lower bounds together with information (primal-dual iterates defining the so-called pseudo-schedule via the optimality conditions) useful for the primal heuristics of Step 2 constructing near optimal solutions of (10), see Feltenmark and Kiwiel (2000), Takriti and Birge (2000), Borghetti et al. (2003). Contrary to the rest of the algorithm, some of these heuristics may use explicit knowledge of \(X^1\). When the problem is large-scale, the duality gap \(\varDelta _G^{k}\) obtained by these heuristics can be lower than 0.5 % (see e.g., Frangioni et al. 2011). Notice that, by definition of \(\varDelta _G^{k}\), we can write for any \(x \in X^1 \cap X^2\)

This shows that, for any k, the iterate \(x^{{k}}\) is a \(\varDelta _G^{k}\)-solution of the k-th approximation problem (10).

3.3.2 Model enrichment

In Step 3, we enrich the model (8) by adding the linearization given by (9) obtained by solving the S dual second stage problems. As the linearization might not be tight, we call it a “suboptimality cut”. In fact, these suboptimality cuts play a double role of being both optimality and feasibility cuts simultaneously. This will be illustrated in the next section and further studied in Sect. 4.1.

Recall that \(\bar{\theta }\) does not depend on \((x^{{k}}, \xi _j)\), so that we can keep the known linearizations of \(\bar{\theta }\) from one point to another, and from one scenario to another, in such a way that solving the S concave problems by bundle methods is not expensive. This will be illustrated numerically in Sect. 5.2.

After adding a row to \(G_k\) and an element to \(b_k\), we increase the size of \(\mu ^{{k}}\) by adding one zero variable. Note that this does not impact the best bounds, since we have \(\varTheta _{{k}+1}((\mu ^{{k}},0),\nu ^{{k}}_1,\nu ^{{k}}_2) = \varTheta _{{k}}(\mu ^{{k}},\nu _1^{{k}},\nu _2^{{k}})\). In practice, we also expand the subgradients stored in the current memory of the bundle method used to maximize \(\varTheta _{k}\). We can then warmstart the maximization of \(\varTheta _{k+1}\) with this new bundle information.

3.3.3 Stopping criteria

When hitting Step 5, the algorithm stops looping over \({k}\) considering the current model \(\check{v}_k\) as sufficiently rich. The test of Step 5 is then a pragmatic rule to stop the algorithm, testing that the model of the recourse function cannot be improved much around the current iterate. Obviously, expecting \(x^{{k}}\) to be the optimal solution of (10) is excessive, in view of the nonconvexities of the problem. The stopping test still has an intrinsic meaning for a convexified version of our problem. This is studied in Sect. 4.

3.3.4 Optional post-treatment

The heuristics of Step 2 are quick procedures that might not be entirely satisfactory. We describe them in Appendix 2. At the end of the algorithm, we might want to employ a more lengthy procedure to improve the solution. For instance we could employ an augmented Lagrangian based heuristic (e.g., Batut and Renaud 1992; Yan et al. 1995). Notice that this aims at decreasing \(\varDelta _G^{k}\) but at the risk of increasing \(\varDelta _A^{k}\). Therefore we quantify the changes to decide to accept the newly generated solution or retain the one that triggered the stopping test. To do so, we run one last additional model enrichment step and compare the sum of gaps \(\varDelta _G + \varDelta _A\).

3.4 Illustration on toy-problem

We illustrate here the behaviour of our algorithm on a toy example. A complete numerical study on large-scale unit-commitment instances will be presented in Sect. 5. The toy problem will also be used as an example in Sect. 4.3.

3.4.1 Description of the problem

The toy generation park has two production units \((i=1,2)\) and two periods (\(t=1,2\) and \(\tau = 1\)). The first unit has a production \(y_1\in \{0,3\}\) with cost \(5y_1\) and the second \(y_2\in [0,1]\) with cost \(10y_2\). For each period, we want a total production matching a load \(D=2\) with bounds \(s^d=-1\) and \(s^u=1\). Moreover, the first unit has the constraint that the production is constant over the two periods. Thus we have for this example

Observe that there are only two feasible solutions as \(X^1\cap X^2 = \{(0,1,0,1), (3,0,3,0)\}\). For the second stage, we generate a 100 load scenarios for \(D(\xi )\) uniformly in [1, 2). Since it does not satisfy the constraint \(1\le D(\xi ) -y^t_1 + y^t_2\), the solution (3, 0, 3, 0) not feasible for the second-stage problems. Therefore the optimal solution of this simple instance of (5) is (0, 1, 0, 1).

3.4.2 Run of the algorithm

We run the algorithm on this example for illustrating its behaviour. Convergence to the optimal solution is obtained in three iterations. At the first iteration, the first stage generates the non-feasible schedule (1, 0, 1, 0) and the primal recovery heuristic finds (3, 0, 3, 0), which is indeed a feasible solution as seen from the first stage. The second stage detects infeasibility of this solution and a suboptimal cut is added. During the second iteration, the bundle method generates (0, 1, 0, 1), which is also the retained primal iterate. The corresponding cut is added to the model. The third (and last) first stage iteration produces (0, 1, 0, 1) as candidate solution, which automatically triggers to the stopping criteria.

4 Putting the method into perspective

In this section, we analyze the theoretical properties of the algorithm: its convexification effect (in Sect. 4.1) and its convergence (in Sect. 4.2). We use standard techniques of convex analysis, and refer frequently to Hiriart-Urruty and Lemaréchal (1996a), but we will end up with subtle properties. In particular, we will see in Sect. 4.3 that our dual approach does not convexify at best the recourse function when the objective function is not linear.

4.1 Convexification effect of the algorithm

We study here the role of a convexified recourse function which sheds light on the behaviour of the algorithm. Our analysis features the convex envelope of the sum of f and the indicator function of \(X^1\), denoted \(f_{X^1}^{**}\). Such “restricted biconjuguates” are a standard tool, tracing back to Falk (1969), studied intensively in Lemaréchal and Renaud (2001), and already used in Dentcheva and Römisch (2004) in the context of stochastic optimization.

We introduce the convexified recourse cost function \(\bar{c} : \mathbb {R}^n \times \mathbb {R}^k \rightarrow \mathbb {R}\cup \{+\infty \}\) as the optimal value of the following (convex) problem with \(f_{X^1}^{**}\) as the objective function and \({{\mathrm{conv}}}X^1\) replacing \(X^1\) in the constraints

In the general convex case, the role of \(\bar{c}(\cdot ,\xi )\) is key in our approach, so we formalize in the next proposition its main properties.

Proposition 1

(Convex recourse cost) For a couple \((x,\xi ) \in \mathbb {R}^n \times \mathbb {R}^k\), \(\bar{c}(x,\xi )\) is the optimal value of the dual problem

Moreover, for any dual variables \(({\lambda }_1,{\lambda }_2,{\lambda }_3)\in \varLambda \), we have

The function \(\bar{c}(\cdot , \xi )\) is closed and convex with respect to x, and if there exists a \(({\lambda }_1(x,\xi ), {\lambda }_2(x,\xi ), {\lambda }_3(x, \xi ))\) attaining the sup in (14), we have \(P^{{\mathsf {T}}} {\lambda }_1(x, \xi ) \in \partial \bar{c}(x, \xi )\).

Proof

By definition of \(\bar{c}\), it follows from Lemaréchal and Renaud (2001, Theorem 2.11/2.12) that \(\theta _{x,\xi }\) defined in (7) is also the dual function of the problem (13). Now the compactness of \(X^1\) allows us to apply a inf/sup theorem as Rockafellar (1970, Cor.37.3.2) to the Lagrangian function associated with (13) to show that there is no duality gap, i.e., the equality (14). The equality also implies (15), by noting that \(\bar{c}(x,\xi ) \le c(x,\xi )\) (as it can been seen from their respective definitions (3) and (13), and the fact that \(f_{X^1}^{**} \le f + \hbox {i}_{X^1}\)). Expressed as a sup in (14), the convexity and closedness of \(\bar{c}(\cdot ,\xi )\) with respect to x is clear from Hiriart-Urruty and Lemaréchal (1996a, IV.2.1.2). To get the value of the subgradient at a fixed \((x,\xi )\), we develop (15) taken with z and \(({\lambda }_1(x,\xi ), {\lambda }_2(x,\xi ), {\lambda }_3(x, \xi ))\)

which ends the proof. \(\square \)

This result has two important consequences for our developments. As a first consequence, we directly get that the “convexified” expected recourse function defined by

is (indeed) convex and that we have a subgradient \(P^{{\mathsf {T}}}{\mathbb {E}}\left( { {\lambda }_1(x, \xi ) } \right) \ \in \ \partial \bar{v}(x)\) at \(x\in {\mathrm {dom}}(\bar{v})\). This yields that the cutting planes model \(\check{v}_k\) of (8)–(9) is not only an inexact cutting plane model for v: it is more precisely an (exact) cutting planes model for \(\bar{v}\) as

and moreover when \(x^i \in {\mathrm {dom}}(\bar{v})\), then \(\bar{v}^i = \bar{v}(x^i)\). Thus, in Step 3 of the algorithm, a linearization of \(\bar{v}\) is computed, and our algorithm sees in fact only \(\bar{v}\) (and not v). This means that our decomposition method, though using only objects defined from data of our initial problem (5), solves implicitly

This will be detailed further in Sect. 4.2. It is nevertheless important to note that \(\bar{v}\) is just a convex surrogate of v, but not its convex hull, as shown in Sect. 4.3.

Before moving to these two points, we emphasize the second important consequence of Proposition 1, about the implicit constraint in (17). When the iterate \(x^i\) does not belong to the domain of \(\bar{v}\), i.e., there exists a scenario \(\xi _{\ell }\) such that \(x^i \not \in {\mathrm {dom}}(c(\cdot ,\xi _{\ell }))\), then Proposition 1 gives that

Let us now argument that the fact that \(\theta _{x^i,\xi _{\ell }}\) tends to \(+\infty \) when \(x^i\) does not belong to the domain of \(\bar{v}\) implies that the (sub)optimality cuts in (16) act like feasibility cuts for \({\mathrm {dom}}(\bar{v})\).

Proposition 2

((Sub)optimality cuts act like feasibility cuts) Assume that we know a bound \(\varDelta >0\) on the duality gap

and a bound \(M>0\) on the objective function

If \(x^i\not \in {\mathrm {dom}}(\bar{v})\), then the (sub)optimality cut (9) allows the algorithm to cut off the point \(x^i\) (i.e., \(x^{{k}}\ne x^i\) for all \(k\ge i\)), provided that \({\theta _{\mathrm{feas}}}\) is large enough, more precisely

Proof

Let \(x^i \in X^1 \cap X^2\) and \(\xi _{\ell } \in \varXi \) be such that \(x^i \not \in {\mathrm {dom}}(c(\cdot ,\xi _{\ell }))\). We have (17) and then the bundle algorithm maximizing \(\theta _{x,\xi }\) in (6) will stop with the test \(\theta _{x_i,\xi _{\ell }}({\lambda }_1^i,{\lambda }_2^i,{\lambda }_3^i) \ge {\theta _{\mathrm{feas}}}\). Let now \(k> i\); we write

By definition of M, this shows that \(x^i\) cannot be an \(\varDelta \)-solution of the approximate problem (10) at iteration k. This implies that \(x^{{k}}\) cannot be \(x^i\), otherwise it would contradict (12). \(\square \)

In practice, we have a reasonable idea of M and \(\varDelta \). Note finally that they could be defined dynamically: in such a situation one would define

where \(\hat{x}^i\) is akin to the current best feasible point. As a consequence of such a setting, points that lack interest (such that \(\theta _{x_i,\xi _{\ell }}({\lambda }_1^i,{\lambda }_2^i,{\lambda }_3^i) \ge {\theta _{\mathrm{feas}}}\)), can no longer be distinguished clearly from points \(x^i \not \in {\mathrm {dom}}(\bar{v})\). This updating rule is similar to the one of the on-demand accuracy bundle of Oliveira and Sagastizábal (2014).

4.2 Convergence analysis

This section provides a convergence analysis of the algorithm. As previously explained, the algorithm uses dual approaches for both first and second stages that cannot distinguish between v and its convex counterpart \(\bar{v}\). This allow us to establish guarantees only for the convexified problem (17). The first result completes (12) about the quality of \(x^{{k}}\) at each iteration.

Proposition 3

(Approximate solution) If \(x^{{k}}\in {\mathrm {dom}}(\bar{v})\), then the approximation error defined in the algorithm satisfies

Consequently, \(x^{{k}}\) is a \((\varDelta _A^{k}+ \varDelta _G^{k})\)-solution of the convexified problem (17).

Proof

Observe that the expression of \(\check{v}_{k}\) in (8) gives that \(\check{v}_{k+1}(x^{{k}}) = \max \{\check{v}_{k}(x^{{k}}), \bar{v}^k\}\), which in turn gives

When \(x^{{k}}\in {\mathrm {dom}}(\bar{v})\), we have \(\bar{v}^k = \bar{v}(x^k)\ge \check{v}_{k}(x^{{k}})\), so that (19) holds. Finally, we deduce from (12) and (19) that for \(x \in X^1 \cap X^2\),

The above inequality means that \(x^{{k}}\) is optimal up to \(\varDelta _G^{k}+\varDelta _A^{k}\) for problem (17). \(\square \)

This gives a better understanding of our stopping test and the following optional improvement step. Roughly speaking, the stopping criteria means that the cutting-plane model is nearly optimal. However it does not give a controllable approximate solution because of the gap error \(\varDelta _G^{k}\) in the above lemma. The gap error is obtained by running heuristics, and, though these heuristics perform well in practice, they have no theoretical guarantee. The optional post-treatment step aims at improving the quality of the solution by decreasing \(\varDelta _G^{k}\).

The second result of this section establishes the convergence of the algorithm, under a technical assumption. The analysis follows usual rationale of standard cutting-plane methods in a convex setting [see e.g., Hiriart-Urruty and Lemaréchal (1996b, XII.4.2) and Ruszczyński (2003, Theorem 7)].

Theorem 1

(Asymptotic convergence) Assume that the algorithm generates a sequence, which after finitely many iterations, is all contained in a compact set C lying in the relative interior of \({{\mathrm {dom}}(\bar{v})}\). Then, the algorithm terminates after finitely many iterations, and the final iterate \(x_{{{\mathrm{final}}}}\) is a \({\delta _{\mathtt{tol}}}+\varDelta _G^{{{\mathrm{final}}}}\)-optimal solution for (17).

Proof

For all \(x \in C\), there exist a \(\varepsilon _x>0\) such that the ball of center x and radius \(\varepsilon _x\) is included in the relative interior of \({\mathrm {dom}}(\bar{v})\). Extracting a finite number of balls recovering the compact set C, we can strengthen the assumption as: there exists a \(\varepsilon >0\) such that the compact set

lies in the relative interior of \({\mathrm {dom}}(\bar{v})\). Together with the convexity of \(\bar{v}\) (consequence of Proposition 1), this gives us that \(\bar{v}\) is Lipschitz-continuous on \(C_\varepsilon \) (Hiriart-Urruty and Lemaréchal (1996b, IV.3.1.2)); let us denote by \(L>0\) the Lipschitz constant.

By assumption, there exists an index \(K \ge 0\) such that \(x^k \in C \subseteq \mathrm{int}{\mathrm {dom}}(\bar{v})\) for all \(k \ge K\). For sake of a contradiction, assume that the method does not terminate, which means that \(\varDelta _A^{k}> {\delta _{\mathtt{tol}}}\) for all \(k>K\). Since \(x^k\in {\mathrm {dom}}(\bar{v})\), (19) yields

Let us use the model \(\check{v}_k\) explicitly, by noting that for all \(K\le i<k\)

which yields, by the Cauchy–Schwarz inequality,

Now note that the subgradient inequality gives in particular that

which, by Lipschitz-continuity on the set \(C_\varepsilon \), implies that \(\textstyle {\Vert } g^i \textstyle {\Vert }\le L\). Finally, using the Lipschitz-continuity of \(\bar{v}\) again, we get from (20)

which contradicts the fact that we can extract a converging subsequence from \((x^k)\) belonging to the compact set C. Thus, the algorithm terminates, and the final approximation of comes from Proposition 3. \(\square \)

Arguably the convergence result is limited since it relies on an ultimately relative recourse like assumption. Looking closely to the proof, we see that the key implication of this assumption is that there exists a bound on the subgradients: \(\left\| \bar{g}_i\right\| \le L\) for all i. Such an assumption is standard in the analysis of cutting-plane method in convex case. For example, it appears explicitly in Ruszczyński (2003, Assumption 6). It also holds in the analysis of generalized Benders decomposition Geoffrion (1972, Theorems 2.4,2.5,Lemma 2.1).

4.3 On the convex envelope of the recourse function

As explained in the previous sections, the algorithm has a convexification effect featuring \(\bar{v}\), which is a “convex surrogate” of v. We show here how \(\bar{v}\) relates to the convex envelope of v. Fix \(\xi \in \varXi \) and introduce \(\tilde{c}: \mathbb {R}^n \times \mathbb {R}^k \rightarrow \mathbb {R}\cup \{+\infty \}\) defined by

as well as its expectation \(\widetilde{v} : \mathbb {R}^n \rightarrow \mathbb {R}\cup \{+\infty \}\) defined by \(\widetilde{v}(x) := {\mathbb {E}}\left( { \widetilde{c}(x,\xi ) } \right) \). These functions also provide convex surrogates of the recourse functions, as established by the next proposition.

Proposition 4

(Convexified functions) For \(x \in \mathbb {R}^n\) and \(\xi \in \varXi \), we have \(c(x,\xi ) \ge \widetilde{c}(x,\xi ) \ge \bar{c}(x,\xi )\), and

Moreover, \(\widetilde{c}(\cdot ,\xi )\) and \(\widetilde{v}\) are closed and convex. When \(X^1\) is convex, we have in fact \(\widetilde{c}(\cdot ,\xi ) = \bar{c}(\cdot ,\xi )\) and \(\widetilde{v} = \bar{v}\).

Proof

The two inequalities come directly by the inclusions of the sets

and the fact that \(f_{X^1}^{**} \le f\) on \(X^1\). Let us argue that \(\widetilde{c}(\cdot ,\xi )\) is a closed convex function, then the result for \(\widetilde{v}\) comes by integration. Write \(\widetilde{c}\) as a “lower-bound function” (see e.g Hiriart-Urruty and Lemaréchal (1996a, IV)):

with \(C =\{(x,r) \in \mathbb {R}^{n+1}: \exists y \in {{\mathrm{conv}}}(X^1 \cap X^2(\xi )) \text { such that } Px = Py \text { and } f_{X^1}^{**}(y)\le r\}\). Together with the properties of \(X^2(\xi )\) and \(f_{X^1}^{**}\), compactness of \(X^1\) (and consequently of \({{\mathrm{conv}}}(X^1 \cap X^2(\xi ))\)) yields that C is a closed convex set. As the lower-bound function of a closed convex set, \(\widetilde{c}\) is a closed convex function (by Hiriart-Urruty and Lemaréchal (1996a, IV.1.3.1)). Finally, in the case when \(X^1\) is convex, the inclusions of (21) are actually equalities, and then the functions are equal by definition. \(\square \)

The above lemma implies that the (closed) convex envelope of v is greater or equal to \(\widetilde{v}\). In fact equality holds in the linear case.

Proposition 5

Assume that f is linear, then the map \(\widetilde{v}\) is the closed convex envelope of v.

Proof

We just have to show that, for an fixed \(\xi \in \varXi \) the map \(x \mapsto \widetilde{c}(x,\xi )\) is the closed convex envelope of \(x \mapsto c(x,\xi )\). For convenience of notation, we drop the dependency on \(\xi \) in the proof. We start with noticing that c can be rewritten with the help of Dentcheva and Römisch (2004, Lem. 1) as

because f is linear and \(X^1 \cap X^2(\xi )\) compact. Let us show now that \(\widetilde{c}\) is equal to the biconjugate \(c^{**}={{\mathrm{conv}}}c\) (see Hiriart-Urruty and Lemaréchal (1996b, X.1.3.6)); the proof consists in establishing the equality between the convex conjugates of the two functions \(-c^* = -(\widetilde{c})^*\) . We start with computing \(-c^*\): for \({\lambda }\in \mathbb {R}^n\)

where \(\sigma _{\{x: Px=Py\}}\) is the support function of the affine space (in x) defined by the equation \(Px=Py\). Thus we get that \(-c^*({\lambda })\) is \(-\infty \) unless \({\lambda }\) lies in the image of \(P^{{\mathsf {T}}}\), and that, in this case,

Since f is affine, the above minimum can be taken on the convex hull of the constraints:

Observe that the right-hand-side is also \(-(\widetilde{c})^*(P^{{\mathsf {T}}}\mu )\), since its expression can be derived in the very same way as we get (22). Thus we have \(-c^* = -(\widetilde{c})^*\), and conjugating a second time gives \(c^{**} = (\widetilde{c})^{**} = \widetilde{c}\) using the fact that \(\widetilde{c}\) is closed and convex (by Proposition 4). \(\square \)

In the general case though, the two functions \(\widetilde{v}\) and \(\bar{v}\) are different: they do not have the same domain and also differ on the intersection of their domains. To see this, let us come back to the toy example of Sect. 3.4 with only one load scenario \(D(\xi )=2\)

Explicit expressions of v, \(\widetilde{v}\) and \(\bar{v}\) can be worked out by elementary considerations (skipped here): we have

and its convexified counterparts are

and, for \(x\in X= \left\{ x\in [0,3]\times [0,1] : 1\le x_1+x_2 \le 3 \right\} \),

As illustrated in Fig. 1, we see that \(\widetilde{v}\) is strictly above \(\bar{v}\). In particular, when restricted to the open segment \(\{(x_1,1-x_1/3), x_1\in (0,3)\}\), we have \( \widetilde{v}(x) = 20 + 10x_1/3 > \bar{v}(x) = \max \{20-10x_1/3,10+20x_1/3\}. \)

5 Numerical Experiments

5.1 Experimental setting

5.1.1 Data set description

We consider a data set coming from the French unit-commitment problems of EDF’s generation assets with 136 thermal units and 22 hydro valleys \((m=158)\) on a time horizon of two days discretized by half-hour \((T=96)\). Subproblems are modeled following classical operational formulations (see e.g., Tahanan et al. 2015): for thermal units, we set up a mixed-integer linear problem similar to the one of Frangioni et al. (2011), and for hydro units, we have a linear problem as in van Ackooij et al. (2014), Merlin et al. (1981). Details on the formulations of subproblems used in the numerical experiments are given in Appendix. Overall, we end up with a deterministic problem with 47,450 continuous variables, 26,112 binary variables and 812,906 constraints.

5.1.2 Load uncertaincy

We generate the uncertain loads as an average load on top of which we add a causal time series model (see, e.g., Bruhns et al. 2005). More precisely, we consider the Gaussian random variable \( D(\xi ) = \bar{D} + \zeta , \) where \(\bar{D}\) is an observed load and \(\zeta \) is an AR(3) model with coefficients \(\varphi := (0.9,0.6,-0.51)\) and Gaussian innovations. Writing the AR(3) process in its causal time series representation (see e.g., Shumway and Stoffer 2005), the covariance matrix of \(D(\xi )\) is \(\varSigma ^D = (C^D S)(S (C^D))^{\mathsf {T}}\), where S is a diagonal matrix with element

and \(C^D\) is the nominal covariance matrix with elements \(C_{ji} = \sum _{k=1}^{j - i + 1}\varphi ^D_k\) defined from

5.1.3 Stochastic unit-commitment instances

In (24), the parameter \(\mathtt{f}\) is a factor reflecting the load dispersion. By setting this parameter to three different values, we create three stochastic unit-commitment: a low-dispersion instance (with \(\mathtt{f}=1.1\)), a medium-dispersion instance (with \(\mathtt{f}=2\)), and a high-dispersion instance (with \(\mathtt{f}=3\)). Figure 2 plots several load scenarios for each level of dispersion. With 50 generated scenarios, each data set gives a global optimization problem (5) with 1,209,975 continuous variables, 665,856 binary variables and 20,729,103 constraints, which is out of reach for mixed-integer linear solvers.

5.1.4 Algorithm

On the three problems, we run the algorithm of Sect. 3.3, with the following setting. We set the stopping threshold to \({\delta _{\mathtt{tol}}}=1\) %. We also set to 300 the size of the limited memory of the second stage bundle [more precisely, this is the size of the storage of the bundle information on \(\bar{\theta }\) of (7)]. We observed that this choice offers a good trade-off for the bundle algorithm between the number of iterations and the cost of its quadratic subprograms. Finally, for the Step 3 of the algorithm, we use the four following Lagrangian heuristics (described in Appendix):

-

CTI: the commitment based heuristic Borghetti et al. (2003) with time independent priority list,

-

CTD: the same as above but with a time dependent list,

-

RH: the recombining heuristic of Takriti and Birge (2000),

-

allH: taking the best of the three above heuristics.

5.2 Numerical results

5.2.1 Results with 50 scenarios

Table 1 presents the computational results on the three stochastic unit-commitment described in the previous section (with \(S=50\) scenarios and with respectively low, medium, and high dispersion of the stochastic load). Let us point out three features in these figures. First we observe that the final gaps are rather small, often lower that 1 %, and quite comparable to those observed when solving deterministic unit-commitment problems. Second, the primal recovery heuristics give different results but without significant changes in the number of calls or in the final gaps. Notice that the result for the hybrid heuristic combining the three others gives the same results as the CTI for the instances with small and large dispersion. More precisely, the heuristic providing the best results is always CTI, except for a single iteration when processing the medium instance. Third, we emphasize that the number of oracle calls remains within reasonable limits. For comparison, using up to 300 oracles calls for solving deterministic unit-commitment problems is common [e.g., Dubost et al. (2005, Table 3.1)]. In our case we have provided a good initial guess for the dual signals and the bundle method converged in 72 iterations.

5.2.2 Effect of hot-starting

Our algorithm solving two-stage unit-commitment problems shows similar results and similar order of numerical complexity as a bundle algorithm solving deterministic unit-commitment problems. Recall though that our algorithm solves \((S+1)\) full unit-commitment problems at each iteration. The main reason for this is the efficiency of the hot-starting procedure for the model enrichment step.

Let us illustrate further this efficiency by showing on Fig. 3a, b the number of iterations of the second-stage for one run of the algorithm on the first instance. We observe that a change of iterates implies that early scenarios require several iterations, but that this effect quickly diminishes and only few additional iterations are needed for other scenarios. The overall computational effort remains rather constant as seen on Fig. 3b: around only 5 bundle iterations are needed in order to process a single scenario. The whole set of 50 scenarios can therefore be processed within approximately 250 bundle iterations. We recall that performing 10 iterations of the algorithm, while using 50 scenarios for representing uncertainty gives a global problem size equivalent to solving 510 full large scale unit-commitment problems.

5.2.3 Larger instances with more scenarios

We now provide some more results with an increased number of scenarios \(S\in \{50,100,250\}\). Let us focus on the high-dispersion instance and the CTI heuristic. Table 2 shows that the average number of oracle calls per iteration and scenario decreases as the number of scenarios increases. It is remarkable that the total number of oracle calls stays reasonable. Notice that we helped the bundle method by increasing the size of the memory of the bundle from 300 to 500 for this experiment. This explains the difference between the first line of Table 2 with the tenth line of Table 1.

5.2.4 Effect on the generation planning

We illustrate the typical effect of our two-stage approach on the final generation plannings, compared to the ones computed by a deterministic model. We see on Fig. 4a–c below that generation is transferred from inflexible but cheap production sources to more expensive but flexible sources. This includes significant changes for several hydro valleys as shown in Fig. 4c. However most hydro valleys retain a similar shaped production profile as illustrated in Fig. 4d.

5.2.5 A variant of the algorithm with multi-cuts

Recall that we use a cutting-plane model for v aggregating the cuts for each scenario in (9). Another option would be to set up a cutting-plane model for each scenario. In this case, the resulting model for v would be tighter, so the convergence possibly faster. On the other hand, the size of the dual multipliers \(\mu \) for problem (10) would be multiplied by S scenarios, and therefore the first-stage dual problems are in larger dimension. In a final experiment (with \(S=50\) scenarios), we investigate the effect of using a multi-cut variant of our algorithm. We take a tolerance \({\delta _{\mathtt{tol}}}=0.25\) %, smaller than before, to have more iterations and then better distinguish the effect of multi-cuts. Table 3 presents the results. The column related to the iteration ratio shows that occasionally the number of iterations increases by 30 % when using the multi-cut version, but the opposite phenomena appears as well. We thus observe that using a multi-cut approach is not always beneficial. The columns related to the first and second stage cost report the ratio of the total number of oracle calls per stage normalized by the total number of iterations. On average the second stage cost is roughly identical, whereas the first stage cost of the multi-cut version is around 12 % less than the mono-cut version. Thus, the increased size of the dual multipliers does not seem to be an issue. Note that the seemingly very poor performance of the multi-cut version on the medium instance with the CTD heuristic is due to a very sudden jump in precision in the mono-cut version. This leads to early termination, whereas in the multi-cut version progression towards the stopping criteria is far more gradual. Note that Table 3 seems to indicate that the increased precision of the multi-cut model is somehow offset by the primal recovery heuristics, even though they perform similarly well in both situations. The fact that disaggregating cutting plane methods does not always lead to a decrease of the number of iterations was also observed in deterministic unit-commitment, in e.g., van Ackooij et al. (2012).

6 Conclusion

In this paper, we have proposed a decomposition algorithm for solving two-stage problems, where both first and second stages are full unit-commitment problems. The algorithm makes no specific assumptions on the set of technical constraints for the units and only uses tools already well in place for deterministic unit-commitment. The working horses of the approach are several hot-started bundle methods with a small overall computational burden. We have analyzed the convergence of the algorithm and studied its convexifying effect. We have shown its efficiency on real life unit-commitment instances, for which a remarkably low number of iterations and oracle calls are needed to reach convergence.

There is room for improvement in the algorithm and its current implementation. It would be interesting to investigate using standard regularization techniques, in particular first stage bundle-like regularizations and second stage augmented Lagrangian regularizations. This would nevertheless add a layer of complexity on the presentation, the theoretical study, and the implementation of the algorithm. This is beyond the scope of this paper, and we differ it to future research. Other points of improvement of the implementation would include the preconditionning of the second-stage bundle Bacaud et al. (2001) and the use of available uncontrolled bundle information Malick et al. (2015).

References

Bacaud, L., Lemaréchal, C., Renaud, A., & Sagastizábal, C. (2001). Bundle methods in stochastic optimal power management: A disaggregate approach using preconditionners. Computation Optimization and Applications, 20(3), 227–244.

Batut, J., & Renaud, A. (1992). Daily scheduling with transmission constraints: A new class of algorithms. IEEE Transactions on Power Systems, 7(3), 982–989.

Beltran, C., & Heredia, F. J. (2002). Unit commitment by augmented lagrangian relaxation: Testing two decomposition approaches. Journal of Optimization Theory and Applications, 112(2), 295–314.

Bertsimas, D., Litvinov, E., Sun, X. A., Zhao, J., & Zheng, T. (2013). Adaptive robust optimization for the security constrained unit commitment problem. IEEE Transactions on Power Systems, 28(1), 52–63.

Birge, J. R., & Louveaux, F. (1988). A multicut algorithm for two-stage stochastic linear programs. European Journal of Operational Research, 34(3), 384–392.

Birge, J. R., & Louveaux, F. (1997). Introduction to stochastic programming. New York: Springer.

Borghetti, A., Frangioni, A., Lacalandra, F., & Nucci, C. A. (2003). Lagrangian heuristics based on disaggregated bundle methods for hydrothermal unit commitment. IEEE Transactions on Power Systems, 18, 313–323.

Borghetti, A., d’Ambrosio, C., Lodi, A., & Martello, S. (2013). Optimal scheduling of a multi-unit hydro power station in a short-term time horizon. Technical report, OR-12-13, University of Bologna.

Bruhns, A., Deurveilher, G., & Roy, J.S. (2005). A non-linear regression model for mid-term load forecasting and improvements in seasonality. In PSCC 2005 Luik.

Carøe, C. C., & Schultz, R. (1999). Dual decomposition in stochastic integer programming. Operation Research Letters, 24, 37–45.

Carpentier, P., Cohen, G., Culioli, J. C., & Renaud, A. (1996). Stochastic optimization of unit commitment: a new decomposition framework. IEEE Transactions on Power Systems, 11(2), 1067–1073.

Carrión, M., & Arroyo, J. M. (2006). A computationally efficient mixed-integer linear formulation for the thermal unit commitment problem. IEEE Transactions on Power Systems, 21(3), 1371–1378.

Cheung, K., Gade, D., Monroy, C. S., Ryan, S. M., Watson, J.-P., Wets, R. J.-B., et al. (2015). Toward scalable stochastic unit commitment—part 2: Solver configuration and performance assessment. Energy Systems, 6(3), 417–438. doi:10.1007/s12667-015-0148-6.

Daniildis, A., & Lemaréchal, C. (2005). On a primal-proximal heuristic in discrete optimization. Mathematical Programming Series A, 104, 105–128.

de Oliveira, W., & Sagastizábal, C. (2014). Level bundle methods for oracles with on demand accuracy. Optimization Methods and Software, 29(6), 1180–1209.

Dentcheva, D., & Römisch, W. (1998). Optimal power generation under uncertainty via stochastic programming. In Marti, K., Kall, P. (Eds.), Stochastic programming methods and technical applications, volume 458 of lecture notes in economics and mathematical systems (pp. 22–56). Springer, Berlin, Heidelberg. ISBN 978-3-540-63924-4.

Dentcheva, D., & Römisch, W. (2004). Duality gaps in nonconvex stochastic optimization. Mathematical Programming, 101(3), 515–535. doi:10.1007/s10107-003-0496-1.

Dubost, L., Gonzalez, R., & Lemaréchal, C. (2005). A primal-proximal heuristic applied to french unitcommitment problem. Mathematical Programming, 104(1), 129–151.

Falk, J. E. (1969). Lagrange multipliers and nonconvex programs. SIAM Journal on Control, 7(4), 534–545. doi:10.1137/0307039.

Feltenmark, S., & Kiwiel, K. C. (2000). Dual applications of proximal bundle methods, including lagrangian relaxation of nonconvex problems. SIAM Journal on Optimization, 10(3), 697–721.

Feng, Y., Rios, I., Ryan, S. M., Spürkel, K., Watson, J.-P., Wets, R. J.-B., et al. (2015). Toward scalable stochastic unit commitment—part 1: Load scenario generation. Energy Systems, 6(3), 309–329.

Finardi, E. C., & Da Silva, E. L. (2006). Solving the hydro unit commitment problem via dual decomposition and sequential quadratic programming. IEEE Transactions on Power Systems, 21(2), 835–844.

Frangioni, A., & Gentile, C. (2006). Solving non-linear single-unit commitment problems with ramping constraints. Operations Research, 54(4), 767–775.

Frangioni, A., Gentile, C., & Lacalandra, F. (2008). Solving unit commitment problems with general ramp contraints. International Journal of Electrical Power and Energy Systems, 30, 316–326.

Frangioni, A., Gentile, C., & Lacalandra, F. (2011). Sequential Lagrangian-MILP approaches for unit commitment problems. International Journal of Electrical Power and Energy Systems, 33, 585–593.

Geoffrion, A. M. (1972). Generalized benders decomposition. Journal of Optimization Theory and Applications, 10(4), 237–260.

Gröwe-Kuska, N., Kiwiel, K.C., Nowak, M.P., Römisch, W., & Wegner, I. (2002). Power management in a hydro-thermal system under uncertainty by lagrangian relaxation. In Greengard, C., Ruszczyński, A. (Eds.), Decision making under uncertainty, volume of 128 The IMA volumes in mathematics and its applications (pp. 39–70). Springer, New York. ISBN 978-1-4419-3014-9.

Hiriart-Urruty, J.B., & Lemaréchal, C. (1996a). Convex analysis and minimization algorithms I. Number 305 in Grundlehren der mathematischen Wissenschaften (2nd ed.). Springer, Berlin.

Hiriart-Urruty, J.B., & Lemaréchal, C. (1996b). Convex analysis and minimization algorithms II. Number 306 in Grundlehren der mathematischen Wissenschaften (2nd ed.). Springer, Berlin.

Jünger, M., Naddef, D. (Eds.). (2001). Computational combinatorial optimization: Optimal or provably near-optimal solutions. Lecture notes in computer science. Springer, Berlin.

Langrene, N., van Ackooij, W., & Bréant, F. (2011). Dynamic constraints for aggregated units: Formulation and application. IEEE Transactions on Power Systems, 26(3), 1349–1356.

Lemaréchal, C. (2001). Lagrangian relaxation. In M. Jünger & D. Naddef (Eds.), Computational combinatorial optimization: Optimal or provably near-optimal solutions (Chapter 4). Berlin: Springer.

Lemaréchal, C., & Renaud, A. (2001). A geometric study of duality gaps, with applications. Mathematical Programming, 90, 399–427.

Malick, J., de Oliveira, W., & Zaourar, S. (2015). Uncontrolled inexact information within bundle methods. EURO Journal of Computational Optimization, http://www.optimization-online.org/DB_HTML/2013/05/3892.html.

Merlin, A., Lauzanne, B., Auge, J., & Ziglioli, J. (1981). Optimization of short-term scheduling of EDF hydraulic valleys with coupling constraints: The OVIDE model. In Proceedings of the PSCC conference 1981 (pp. 345–354).

Morales-España, G., Latorre, J. M., & Ramos, A. (2013a). Tight and compact MILP formulation for the thermal unit commitment problem. IEEE Transactions on Power Systems, 28(4), 4897–4908.

Morales-España, G., Latorre, J. M., & Ramos, A. (2013b). Tight and compact MILP formulation of start-up and shut-down ramping in unit commitment. IEEE Transactions on Power Systems, 28(2), 1288–1296.

Nowak, M.P. (2000). Stochastic Lagrangian relaxation in power scheduling of a hydrothermal system under uncertainty. Ph.D. thesis, Humboldt University, Berlin.

Nowak, M. P., & Römisch, W. (2000). Stochastic lagrangian relaxation applied to power scheduling in a hydro-thermal system under uncertainty. Annals Of Operations Research, 100(1–4), 251–272.

Nürnberg, R., & Römisch, W. (2003). A two-stage planning model for power scheduling in a hydro-thermal system under uncertainty. Optimization and Engineering, 3, 355–378.

Philpott, A. B., Craddock, M., & Waterer, H. (2000). Hydro-electric unit commitment subject to uncertain demand. European Journal of Operational Research, 125, 410–424.

Rockafellar, R. T., & Wets, R. J.-B. (1991). Scenarios and policy aggregation in optimization under uncertainty. Mathematics of Operations Research, 16(1), 119–147.

Rockafellar, R. T. (1970). Convex analysis (1st ed.). Princeton: Princeton University Press.

Ruszczyński, A. (2003). Decomposition methods. In Ruszczyński, A., Shapiro, A. (Eds) Stochastic programming (Chapter 3), Volume 10 of handbooks in operations research and management science. Amsterdam: Elsevier.

Sagastizábal, C. (2012). Divide to conquer: Decomposition methods for energy optimization. Mathematical Programming, 134(1), 187–222.

Sherali, H. D., & Fraticelli, B. M. P. (2002). A modification of benders’ decomposition algorithm for discrete subproblems: An approach for stochastic programs with integer recourse. Journal of Global Optimization, 22, 319–342.

Shumway, R.H., Stoffer, D.S. (2005). Time series analysis and its applications (5th ed.). Springer texts in statistics. Springer, Berlin, http://springerd.bibliotecabuap.elogim.com/book/10.1007%2F978-1-4419-7865-3.

Tahanan, M., van Ackooij, W., Frangioni, A., & Lacalandra, F. (2015). Large-scale unit commitment under uncertainty: A literature survey. 4OR, 13(2), 115–171. doi:10.1007/s10288-014-0279-y.

Takriti, S., & Birge, J. R. (2000). Using integer programming to refine Lagrangian-based unit commitment solutions. IEEE Transactions on Power Systems, 15(1), 151–156.

Takriti, S., Birge, J. R., & Long, E. (1996). A stochastic model for the unit commitment problem. IEEE Transactions on Power Systems, 11, 1497–1508.

Takriti, S., Krasenbrink, B., & Wu, L. S. Y. (2000). Incorporating fuel constraints and electricity spot prices into the stochastic unit commitment problem. Operations Research, 48(2), 268–280.

van Ackooij, W., Doukopoulos, G. (2012). Méthodes de faisceaux pour la gestion de production. Technical report H-R36-2012-02870-FR, EDF R&D.

van Ackooij, W., Henrion, R., Möller, A., & Zorgati, R. (2014). Joint chance constrained programming for hydro reservoir management. Optimization and Engineering, 15, 509–531.

Wang, S. J., Shahidehpour, M., Kirschen, D. S., Mokhtari, S., & Irisarri, G. D. (1995). Short-term generation scheduling with transmission and environmental constraints using an augmented lagrangian relaxation. IEEE Transactions on Power Systems, 10(3), 1294–1301.

Wu, L., Shahidehpour, M., & Li, T. (2007). Stochastic security-constrained unit commitment. IEEE Transactions on Power Systems, 22(2), 800–811.

Xiong, P., & Jirutitijaroen, P. (2011). Stochastic unit commitment using multi-cut decomposition algorithm with partial aggregation. In IEEE power and energy society general meeting.

Yan, H., Luh, P.B., & Zhang, L. (1994). Scheduling of hydrothermal power systems using the augmented Lagrangian decomposition and coordination technique. In American control conference 1994 (Vol. 2, pp. 1558–1562).

Zhao, L., & Zeng, B. (2012). Robust unit commitment problem with demand response and wind energy. In Proceedings of IEEE power and energy society general meeting, 2012.

Zheng, Q., Wang, J., Pardalos, P., & Guan, Y. (2013). A decomposition approach to the two-stage stochastic unit commitment problem. Annals of Operations Research, 210(1), 387–410.

Zhuang, F., & Galiana, F. D. (1988). Towards a more rigorous and practical unit commitment by lagrangian relaxation. IEEE Transactions on Power Systems, 3(2), 763–773.

Acknowledgments

We thank the three referees for their comments and suggestions, that helped us to simplify and clarify the presentation of our work. We acknowledge the support of the Gaspard Monge program for Optimization and Operations Research (PGMO) Project “Consistent Dual Signals and Optimal Primal Solutions”. The second author also acknowledges the support of the CNRS Mastodons project “gargantua/titan” and of the Labex Persyval-Lab project-action “Khronos”.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: Description of the unit-commitment model

This appendix provides more information about the models of subproblems used in the numerical experiments of Sect. 5.

1.1 Hydro valley subproblems

The hydro valley subproblems deal with optimizing the power production of turbines and pumps for a given price signal. The turbines and pumps connect various reservoirs together. For a given topology, one readily establishes the flow equations that deal with updating the reservoirs levels through time. These reservoir levels have to remain between a lower and upper bound for all T time steps. Turbines and pumps are moreover subject to natural bounds on production levels. The most challenging feature to take into account is the turbining efficiency function that associates with each turbined quantity \((\mathrm{m}^3 / \mathrm{h})\) and water head (reservoir level in uphill reservoir, in \(\mathrm{m}^3\)) the amount of produced power (MW). This function can be highly non-linear, non-concave and may even contain forbidden zones of production, see e.g., Finardi and Silva (2006), Borghetti et al. (2013).

A common assumption in the French system (see, e.g., Merlin et al. 1981) is that the water-head effect for large reservoirs can be neglected as the volumetric difference that can be achieved during the T time steps is quite small. For smaller reservoirs the effect caused on the amount of produced power would be quite small. Moreover following the set of assumptions made in Merlin et al. (1981), the power efficiency function becomes concave and is approximated with an a priori piecewise linearization. This makes the hydro valley subproblem a linear program. More details can be found in van Ackooij et al. (2014).

1.2 Thermal subproblems

As the thermal subproblems are concerned, we set up a usual model, similar to the one of Frangioni et al. (2011), that incorporates, minimum generation levels, minimal up and down times, start up costs, fixed generation costs and ramping rates. We provide here a short description for convenience. To simplify notation, we do not include a reference to the specific unit the problem belongs to,

The decision variables are \(p \in \mathbb {R}_+^T\) providing the amount of generated power in MW, \(u \in \left\{ 0,1\right\} ^T\) the on/off status of the unit for each time step and \(z \in \left\{ 0,1\right\} ^T\) an auxiliary variable indicating an effective start of the unit. Problem data describing cost are \(c \in \mathbb {R}_+^T\) in €/MWh, a proportional cost of production, \(c_f \in \mathbb {R}_+^T\) in € / h, a fixed production cost and \(c_s \in \mathbb {R}_+^T\) in €, a start up cost. Bounds on production levels expressed in MW, when producing are given by \(p_{\min } \in \mathbb {R}^T_+\) and \(p_{\max } \in \mathbb {R}^T_+\). Ramping rate related data is \(g_+, g_- > 0\) expressed in MW / h and correspond to the ramping up gradient and ramping down gradient respectively. The numbers \(s_+, s_- > 0\) express similar quantities but for starting and stopping ramping rates. Finally \(\tau _+, \tau _-\) expressed in a number of time steps correspond to the minimum up and down times respectively. We make the assumption that when a unit is online for exactly \(\tau _+\) time steps the minimum up constraint is satisfied [while Frangioni et al. (2011) assume this for \(\tau _+ + 1\) time steps]. The optimization problem can then be stated as follows, where \(\lambda \in \mathbb {R}^T\) is a Lagrangian price multiplier (€/MWh):

Here \(\Delta t\) corresponds to the size of each time step expressed in hours, p(0) to the initial power output and \(t_0\) is defined according the amount of time \(\tau _0\) (in time steps) the unit has spend producing or is offline. More specifically,

Obviously \(u(0) = 1\) in the first case and \(u(0) = 0\) in the second.

Appendix 2: Lagrangian heuristics

As in decomposition approaches for deterministic unit-commitment, heuristics play an important role in our algorithm—more precisely in Step 2. In our numerical experiments, we use three heuristics inspired from Borghetti et al. (2003) and Takriti and Birge (2000). This section describes them briefly.

1.1 Three Lagrangian heuristics

The three heuristic use information returned by the bundle algorithm maximizing (11) used in Step 1. More precisely, denoting by p is the number of iterations of this algorithm and \(x^j\) a primal iterate obtained at iteration \(j\in \{1,\ldots ,p\}\), the heuristics use the following quantities:

-

1.

the dual simplicial multipliers \(\alpha \) of the quadratic program solved at the last iteration of the bundle method;

-

2.

the so-called pseudo schedule \(\hat{x}\) is defined as \(\sum _{j=1}^p \alpha _j x^j\), see Daniildis and Lemaréchal (2005), Dubost et al. (2005);

-

3.

the pseudo costs \((\hat{c}_1,\ldots ,\hat{c}_m)\) defined as \(\hat{c}_i = \sum _{j=1}^p \alpha _j c^j_i\) where \(c^j_i\) the pure production cost of subproblem i at iteration j;

-

4.

the pseudo commitment decisions defined as \(\hat{u}^j_i = \sum _{j=1}^p \alpha _j u^j_i\), where \(u^j_i \in \left\{ 0,1\right\} ^T\) are the commitment decisions of each thermal plant for each iteration \(j=1,\ldots ,p\).

Another common ingredient is the resolution of an economic dispatch problem: for a fixed set of commitment decisions, we let a continuous optimization problem adjust production levels in order to generate a solution in \(X^2\).

We begin by remarking that the pseudo-schedule is a technically feasible solution as hydro valleys are concerned (since these sub-problems have convex feasible sets, see the previous section). Also the pseudo-schedule is directly related to offer-demand equilibrium constraints through bundle stopping criteria. We therefore keep the pseudo-schedule as hydro-valleys are concerned and remove their generation from the load D in order to form \(\tilde{D}\). The production parc is such that the obtained net load is always strictly positive. The heuristics are therefore mostly concerned with thermal plants. For convenience of notation we will still use m to index the number of thermal plants.

1.2 Commitment based heuristic

This heuristic is inspired from Borghetti et al. (2003). We begin with an initial guess for the commitment decisions called \(\tilde{u}\), for instance one of the commitment decisions encountered during the optimization of problem (11). We now build a priority list in two different ways. The first is time-independent and related to sorting the pseudo costs divided by total generated pseudo power in increasing order. A lower value indicates a unit with higher priority (best cost to power ratio). The second is a time-dependent priority list in which we divide the pseudo-commitment decisions by the above pseudo cost over pseudo power ratio. A higher value indicates a unit more likely to be started.

Starting from our initial commitment guess \(\tilde{u}\) we first begin by computing the generation envelope, i.e., the minimum and maximum power the plants can generate over the whole time horizon at these commitment decisions. We now move from the first time step to the last one, if \(\tilde{D}\) is in the generation envelope, nothing more needs to be done. If generation is insufficient, we check if we can start the highest priority unit (if not done so already), we continue in this manner until generation covers load. If generation is in excess, we try to decommit the lowest priority unit (if not already off) and continue in this manner until the minimum generation is below load. The hence generated commitment decision is post-processed with an economic dispatch in order to finely adjust generation to actual load. We also post-process any of the generated commitment decisions \(u^j\), \(j=1,\ldots ,p\) in order to retain the best one.

1.3 Recombining heuristic

This method inspired from Takriti and Birge (2000) recombines the earlier obtained primal iterates in order to find a feasible solution. For additional flexibility we add a slack variable, and therefore solve the following mixed-integer problem:

where \(c^{\mathtt{imb}}\) is a large imbalance related cost. The resulting optimal solution builds an initial commitment decision \(\tilde{u}\), which is post-processed as explained previously. In order to keep the mixed-integer program small, we use the dual variables \(\alpha \) and insert only elements of iteration j if \(\alpha _j\) is sufficiently large, (e.g., \(\alpha _j > 10^{-3}\)).

Rights and permissions

About this article

Cite this article

van Ackooij, W., Malick, J. Decomposition algorithm for large-scale two-stage unit-commitment. Ann Oper Res 238, 587–613 (2016). https://doi.org/10.1007/s10479-015-2029-8

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10479-015-2029-8

Keywords

- Two-stage integer programming

- Stochastic unit-commitment

- Price decomposition

- Convex duality

- Nonsmooth optimization

- Bundle methods