Abstract

The Unit Commitment problem in energy management aims at finding the optimal production schedule of a set of generation units, while meeting various system-wide constraints. It has always been a large-scale, non-convex, difficult problem, especially in view of the fact that, due to operational requirements, it has to be solved in an unreasonably small time for its size. Recently, growing renewable energy shares have strongly increased the level of uncertainty in the system, making the (ideal) Unit Commitment model a large-scale, non-convex and uncertain (stochastic, robust, chance-constrained) program. We provide a survey of the literature on methods for the Uncertain Unit Commitment problem, in all its variants. We start with a review of the main contributions on solution methods for the deterministic versions of the problem, focussing on those based on mathematical programming techniques that are more relevant for the uncertain versions of the problem. We then present and categorize the approaches to the latter, while providing entry points to the relevant literature on optimization under uncertainty. This is an updated version of the paper “Large-scale Unit Commitment under uncertainty: a literature survey” that appeared in 4OR 13(2):115–171 (2015); this version has over 170 more citations, most of which appeared in the last 3 years, proving how fast the literature on uncertain Unit Commitment evolves, and therefore the interest in this subject.

1 Introduction

In electrical energy production and distribution systems, an important problem deals with computing the production schedule of the available generating units, according to their different technologies, in order to meet their technical and operational constraints and to satisfy several system-wide constraints, e.g., global equilibrium between energy production and energy demand or voltage profile bounds at each node of the grid. The constraints of the units are very complex; for instance, some units may require up to 24 h to start. Therefore, such a schedule must be computed (well) in advance of real time. The resulting family of mathematical models is usually referred to as the Unit Commitment problem (UC), and its practical importance is clearly proven by the enormous amount of scientific literature devoted to its solution in the last four decades and more. Besides the very substantial practical and economic impact of UC, this proliferation of research is motivated by at least two independent factors:

1. On the one hand, progress in optimization methods, which provides novel methodological approaches and improves the performance of existing ones, thereby allowing previously unsolvable problems to be tackled;

2. On the other hand, the large variety of different versions of UC corresponding to the disparate characteristics of electrical systems worldwide (free market vs. centralized, vast range of production units due to hydro/thermal/nuclear sources, ...).

Despite all of this research, UC still cannot be considered a “well-solved” problem. This is partly due to the need of continuously adapting to the ever-changing demands of practical operational environments, in turn caused by technological and regulatory changes which significantly alter the characteristics of the problem to be solved. Furthermore, UC is a large-scale, non-convex optimization problem that, due to operational requirements, has to be solved in an “unreasonably” small time. Finally, as methodological and technological advances make previous versions of UC more accessible, practitioners have a chance to challenge the (very significant) simplifications that have traditionally been made, for purely computational reasons, about the actual behaviour of generating units. This leads to the development of models incorporating considerably more detail than in the past, which can significantly stretch the capabilities of solution methods.

A particularly relevant trend in current electrical systems is the ever increasing use of intermittent (renewable) production sources such as wind and solar power. This has significantly increased the underlying uncertainty in the system, previously almost completely due to variation of users’ demand (which could however be forecast quite effectively) and occurrence of faults (which was taken into account by requiring some amount of spinning reserve). Ignoring such a substantial increase in uncertainty levels w.r.t. the common existing models incurs an unacceptable risk that the computed production schedules be significantly more costly than anticipated, or even infeasible (e.g., Keyhani et al. 2010). However, incorporating uncertainty in the models is very challenging, in particular in view of the difficulty of deterministic versions of UC.

Fortunately, optimization methods capable of dealing with uncertainty have been a very active area of research in the last decades, and several of these developments can be applied, and have been applied, to the UC problem. This paper aims at providing a survey of approaches for the Uncertain UC problem (UUC). The literature is rapidly growing: this is an update of our earlier survey Tahanan et al. (2015), that has also appeared as van Ackooij et al. (2018), and counts over 170 more citations, most of them being articles published in the last 3 years. This expansion of the literature is easily explained, besides by the practical significance of UUC, by the combination of two factors: on the one hand the diversity of operational environments that need to be considered, and on the other hand by the fact that the multitude of applicable solution techniques already available to the UC (here and in the following we mean the deterministic version when UUC is not explicitly mentioned) is further compounded by the need of deciding how uncertainty is modeled. Indeed, the literature offers at least three approaches that have substantially different practical and computational requirements: Stochastic Optimization (SO), Robust Optimization (RO), and Chance-Constrained Optimization (CCO). These choices are not even mutually orthogonal, yielding yet further modelling options. In any case, the modelling choice has vast implications on the actual form of UUC, its potential robustness in the face of uncertainty, the (expected) cost of the computed production schedules and the computational cost of determining them. Hence, UUC is even less “well-solved” than UC, and a thriving area of research. Therefore, a survey about it is both timely and appropriate.

We start with a review of the main contributions on solution methods for UC that have an impact on those for the uncertain version. This is necessary, as the last broad UC survey (Padhy 2004) dates back some 10 years, and is essentially an update of Sheble and Fahd (1994); neither of these considers UUC in a separate way as we do. The more recent survey Farhat and El-Hawary (2009) provides some complements to Padhy (2004) but it does not comprehensively cover methods based on mathematical programming techniques, besides not considering the uncertain variants. The very recent survey Saravanan et al. (2013) focusses mainly on nature-inspired or evolutionary computing approaches, most often applied to simple 10-unit systems that can nowadays be solved optimally in split seconds with general-purpose techniques; furthermore these methods do not provide qualified bounds (e.g., optimality gap) that are most often required when applying SO, RO or CCO techniques to the solution of UUC. This, together with the significant improvement of solving capabilities of methods based on mathematical programming techniques (e.g., Lagrangian or Benders’ decomposition methods, MILP approaches, ...), justifies why in the UC-part of our survey we mostly focus on the latter rather than on heuristic approaches. This version also significantly updates Tahanan et al. (2015), which appeared roughly simultaneously with Zheng et al. (2015), upon which we also significantly expand and update. Finally, the recent survey (Alqurashi et al. 2016) discusses uncertainty in energy problems in general; that is, besides UC, it also deals with market-clearing and long-term models. However, it does so by leaving out any methodological discussion of optimization algorithms; furthermore, it is somewhat light on certain approaches such as CCO ones.
In our view, discussing solution approaches for a model is crucial since it closely ties in with the usefulness of its solutions; for instance, stochastic optimization models are only useful as long as they can be run with an appropriate number of scenarios, and the possibility of doing so depends on the employed solution methods. We therefore believe that limiting the presentation to the models leaves out too much important information that is crucial for properly choosing the right form of uncertainty modelling.

Because the paper surveys such a large variety of material, we provide two different reading maps:

1. The first is the standard reading order of the paper, synthesized in the Table of Contents above. In Sect. 2 we describe the varied technical and operational constraints in (U)UC models which give rise to many different variants of UC problems. In Sect. 3 we provide an overview of methods that deal with the deterministic UC, focusing in particular on methods dealing with large-scale systems and/or that can be naturally extended to UUC, at least as subproblems. In particular, in Sect. 3.1 we discuss Dynamic Programming approaches, in Sect. 3.2 we discuss Integer and Mixed Integer Linear Programming approaches, while in Sects. 3.3 and 3.4 we discuss decomposition approaches (Lagrangian, Benders and Augmented Lagrangian), and finally in Sect. 3.5 we (quickly) discuss (Meta-)Heuristics. UUC is then the subject of Sect. 4: in particular, Sect. 4.2 presents Stochastic Optimization (Scenario-Tree) approaches, Sect. 4.3 presents Robust Optimization approaches, and Sect. 4.4 presents Chance-Constrained Optimization approaches. We end the paper with some concluding remarks in Sect. 5, and with a list of the most used acronyms.

2. The second map is centred on the different algorithmic approaches that have been used to solve (U)UC. The main ones considered in this review are:

   - Dynamic programming approaches, that can be found in Sects. 3.1, 3.2.2, 3.3, 3.5.2, 4.1.1.1, 4.2.1, 4.2.3, 4.2.4, and 4.4;
   - Mixed-integer programming approaches, that can be found in Sects. 3.2, 3.3, 4.1.2.2, 4.2, 4.2.1, 4.2.3, 4.2.4, 4.3, and 4.4;
   - Lagrangian relaxation (decomposition) approaches, that can be found in Sects. 3.2.2, 3.3, 3.5.2, 4.2.1, 4.2.2, 4.2.3, 4.2.4, and 4.4;
   - Benders’ decomposition approaches, that can be found in Sects. 3.2.2, 3.3, 4.2, 4.2.1, 4.2.2, 4.2.3, 4.2.4, and 4.3;
   - Augmented Lagrangian approaches, that can be found in Sects. 3.3, 3.4, and 4.4;
   - Other forms of heuristic approaches, that can be found in Sects. 3.1, 3.2.2, 3.3, 3.5, 4.1.2.1, 4.2.2, and 4.2.3.


2 Ingredients of the unit commitment problem

We start our presentation with a very short description of the general structure of electrical systems, presenting the different decision-makers who may find themselves in the need of solving (U)UC problems and their interactions. This discussion will clarify which of the several possible views and needs we will cover; the reader with previous experience in this area can skip to Sect. 2.1 for a more detailed presentation of the various ingredients of the (U)UC model, or even to Sect. 3 for the start of the discussion about algorithmic approaches.

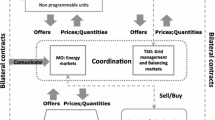

When the first UC models were formulated, the usual setting was that of a Monopolistic Producer (MP). The MP was in charge of the electrical production, transmission and distribution in one given area, often corresponding to a national state, including the regulation of exchanges with neighbouring regions. In the liberalized markets that are nowadays prevalent, the decision chain is instead decentralized and significantly more complex, as shown in the (still somewhat simplified) scheme of Fig. 1. In a typical setting, companies owning generation assets (GENCOs) have to bid their generation capacity over one (or more) Market Operator(s) (MO). Alternatively, or in addition, they can stipulate bilateral contracts (or contracts for differences, CfD) with final users or with wholesalers/traders. Once the bids/offers have been received, the MO clears the (hourly) energy market and defines (equilibrium) clearing prices. A Transmission System Operator (TSO), in possession of the high voltage transmission infrastructure, then has the duty, acting in concert with the Power Exchange Manager (PEM), to ensure safe delivery of the energy, which in turn means different duties such as real-time frequency-power balancing, several types of reserve satisfaction, voltage profile stability, and enforcing real-time network capacity constraints. The TSO typically treats programmable and non-programmable units differently, since for instance only the former can participate in balancing markets. However, very recently the growth of non-programmable renewable sources has required a greater integration also in the real-time balancing market. As a consequence, modifications of network codes and new regulations have emerged.
This is, e.g., the case of the resolution 300/2017/R/eel of the Italian Regulatory Authority for Energy, Networks and Environment (ARERA), which establishes the first guidelines for the active participation of non-programmable renewable sources, of demand and of storage in the balancing market. Notably, storage can be included within traditional production units, giving birth to the concept of Integrated Production Units.

This basic setting, which can be considered sufficient for our discussion, is only a simplification of the actual systems, which also vary depending on their geographical position. For instance, transmission (and distribution) assets may actually be in possession of different companies that have to offer them under highly regulated fair and non-discriminatory conditions, leaving the TSO only a coordination role. Also, the TSO and the MO may or may not be the same entity, the balancing market can actually follow a central dispatch paradigm (as alluded to in Fig. 1) or a self-dispatch one, and so on. We leave aside these other factors, like how many MOs there are and how exactly these are structured; we refer to Conejo and Prieto (2001), Harris (2011), Oren et al. (1997), Shahidehpour et al. (2002) and Conejo et al. (2010, Chapter 1) for a more detailed description. Because of this complexity, standard optimization models may not be entirely appropriate to deal with all the aspects of the problem, since the behaviour of different/competing decision makers needs to be taken into account. This may require the use of other methodologies, such as the computation of equilibria or agent-based simulation. We will not deal with any of these aspects, the interested reader being referred to Ventosa et al. (2005), Harris (2011), Oren et al. (1997), Shahidehpour et al. (2002), Leveque (2002), Gabriel et al. (2013), van Ackooij et al. (2018), van Ackooij and de Oliveira (2017), Dempe et al. (2015), Outrata (1990), Dempe and Dutta (2012), Adam et al. (2017) and Surowiec (2010) for further discussion.

2.1 A global view of UC

In broad terms, the (deterministic or uncertain) Unit Commitment problem (both referred to as UC in this section unless explicitly stated otherwise) requires minimizing the cost, or maximizing the benefit, obtained by the production schedule for the available generating units over a given time horizon. As such, the fundamental ingredients of UC are its objective function and its constraints. Of course, another fundamental ingredient is the time horizon itself; UC being a short-term model, this is most often a day or two of operations, and up to a week. In the following we will denote it by \(\mathcal {T}\), which is typically considered to be a discrete set corresponding to a finite number of time instants \(t \in \mathcal {T}\), usually hours or half-hours (down to 15 or 5 min). Thus, the typical size of \(\mathcal {T}\) varies from 24 to a few hundred.

In mathematical terms, UC has the general structure

\[ \min \big\{ f(x) \,:\, x \in X_1 \cap X_2 \big\} \tag{1} \]

where \(x \in \mathbb {R}^n\) is the decision making vector. Usually (most) elements of x are indexed according to both the generating unit \(i \in U\) and the time instant \(t \in \mathcal {T}\) they refer to. Thus, one often speaks of the subvectors \(x^t\) of all decisions pertaining to time t and/or \(x_i\) of all decisions pertaining to unit i. Also, entries of x are typically split among:

1. Commitment decisions: discrete variables that determine if a particular unit is on or off at any given time (often denoted by \(u^t_i\));

2. Production decisions: continuous variables that provide the amount of active power generated by a specific unit at a given time (often denoted by \(p^t_i\)). This set can include other variables, such as the reactive power or the reserve contribution of a specific unit at a given time (often denoted by \(q^t_i\) and \(r^t_i\), respectively);

3. Network decisions: variables, such as those representing phase angles or voltage magnitudes at each node, describing the state of the transmission or distribution network.

A UC problem not having commitment decisions is often called Economic Dispatch (ED) (e.g. Zhu 2009), or Optimal Power Flow (OPF) when the network is considered (e.g. Jabr 2008). It could be argued that commitment decisions can be easily derived from production decisions (each time a non-zero production output is present the unit has to be on), but for modeling purposes it is useful to deal with the two different concepts separately, cf. Sect. 3.2. Besides, the point is that in ED or OPF the commitment of units has already been fixed and cannot be changed. We remark that network decisions may also include binary variables that provide the open or closed state of a particular branch, as entirely closing a branch is one of the few options that the physics of electrical networks allows for “routing” the electrical current (cf. Sect. 2.8). While ED can be expected to be simpler than UC, and in many cases it is a simple convex program that can nowadays be solved with off-the-shelf techniques, this is not always the case. ED was not only challenging in the past (e.g., Demartini et al. 1998 and the references therein), but can still be so today. Indeed, even when commitment decisions are fixed, the electrical system is highly nonlinear and nonconvex, e.g., due to hydro units’ efficiency curves (cf. Sect. 2.4) or the transmission network characteristics (cf. Sect. 2.7), so that ED can still be a nontrivial problem that may require ad-hoc approaches (e.g. Heredia and Nabona 1995; Oliveira et al. 2005; Jabr 2006, 2008; Lavaei and Low 2012; Molzahn et al. 2013).
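To make the "simple convex program" case concrete, the following is a minimal sketch of ED with fixed commitment, no network, and convex quadratic costs of the form a·p² + b·p, solved by bisection on the common marginal cost. The cost form, the function name `economic_dispatch`, the tuple layout and the numbers are assumptions chosen for illustration; they are not taken from the surveyed references.

```python
def economic_dispatch(units, demand):
    """Dispatch online units with cost a*p**2 + b*p (a > 0) to meet demand.

    units: list of (a, b, p_min, p_max) tuples (hypothetical data layout).
    Returns (outputs, marginal_price).
    """
    total_min = sum(u[2] for u in units)
    total_max = sum(u[3] for u in units)
    if not total_min <= demand <= total_max:
        raise ValueError("demand is outside the total capacity range")

    def outputs_at(lam):
        # Each unit produces where its marginal cost 2*a*p + b equals lam,
        # clipped to its [p_min, p_max] range.
        return [min(max((lam - b) / (2.0 * a), lo), hi) for a, b, lo, hi in units]

    # Bracket the marginal price: at lam_lo every unit sits at p_min,
    # at lam_hi every unit sits at p_max.
    lam_lo = min(2.0 * a * lo + b for a, b, lo, hi in units)
    lam_hi = max(2.0 * a * hi + b for a, b, lo, hi in units)
    for _ in range(100):  # total output is nondecreasing in lam, so bisect
        lam = 0.5 * (lam_lo + lam_hi)
        if sum(outputs_at(lam)) < demand:
            lam_lo = lam
        else:
            lam_hi = lam
    return outputs_at(lam_hi), lam_hi


if __name__ == "__main__":
    outputs, price = economic_dispatch(
        [(0.5, 10.0, 0.0, 100.0), (0.25, 10.0, 0.0, 100.0)], demand=30.0
    )
    print(outputs, price)
```

The sketch only covers the easy case; the nonconvex effects mentioned above (hydro efficiency curves, network characteristics) are precisely what it leaves out.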

In Eq. (1), \(X_1\) is the set modeling all technical/operational constraints of the individual units and \(X_2\) is the set of system-wide constraints. The first set is by definition structured as a Cartesian product of smaller sets, i.e., \(X_1 = \prod _{i \in U} X^1_i\), with \(X^1_i \subseteq \mathbb {R}^{n_i}\) and \(\sum _{i \in U} n_i = n\). Moreover, the objective function f typically also allows for a decomposition along the sets \(X^1_i\), i.e., \(f(x) = \sum _{i \in U} f_i(x_i)\) with \(x_i \in X^1_i\). Each of the sets \(X^1_i\) roughly contains the feasible production schedules for one unit, which can differ very significantly between different units due to the specific aspects related to their technological and operational characteristics. In most models, \(X_1\) is non-convex. However, units sharing the same fundamental operational principles often share a large part of their constraints as well. Because of this, these constraints are best described according to the type of the generating unit, i.e., thermal units (cf. Sect. 2.3), hydro units (cf. Sect. 2.4) and renewable generation units.

While hydro units are arguably a part of renewable generation, in the context of UC it is fundamental to distinguish between those units that are programmable and those that are not. That is, hydroelectric generation systems relying on a flow that cannot be programmed are to be counted among renewable generation ones, together with solar and wind-powered ones. This is unless these so-called run-of-river (ROR) units are part of a hydro valley, preceded by a programmable hydro unit (cf. Sect. 2.4).

The set \(X_2\), which usually models at least the offer-demand equilibrium constraints, is most often, but not always, convex and even polyhedral. This set may also incorporate other system-wide constraints, such as emission constraints, network transmission constraints (cf. Sect. 2.7) or optimal transmission switching constraints (cf. Sect. 2.8).
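As an illustration only, the simplest instance of the offer-demand equilibrium, ignoring the network and reserves, can be written with the production variables \(p^t_i\) introduced above. The demand symbol \(d^t\) is an assumption of this sketch and does not appear in the original text:

\[ \sum_{i \in U} p^t_i = d^t \qquad t \in \mathcal{T} . \]

Each such constraint is linear, which is consistent with this part of \(X_2\) being polyhedral; it is also what couples the otherwise separate sets \(X^1_i\).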

Solving (1) is difficult when n is large (which usually means that |U| is large) or \(X_1\) is a complex set; the latter occurs e.g. when substantial modelling detail on the operations of units is integrated in the model. Finally, (1) contains no reference to uncertainty, but several sources of uncertainty are present in actual operational environments, as summarized in the following table:

| Data | Uncertain for | Severity |
|---|---|---|
| Customer load | GENCOs, TSO | Low |
| Reservoir inflows | GENCOs, TSO | Medium |
| Renewable generation | GENCOs, TSO | High |
| Prices/quantities | GENCOs, traders, loads (users) | Medium/high |
| Units/network failure | GENCOs, TSO | Medium |

Various ways to incorporate uncertainty in (1) are discussed in Sect. 4.1. Obviously, solving (1) becomes more difficult when uncertainty is present, even when n is small and \(X_1\) relatively simple. Thus, properly exploiting the structure of the problem (the function f and the sets \(X_1\) and \(X_2\)) is crucial to obtain efficient schemes for UC, and even more so for UUC. This is why we now provide some detail on different modeling features for each of these components.

2.2 The objective function

The objective function of UC is one of the main factors reflecting the different types of decision-makers described in the previous section. In fact, when the demand needs to be satisfied (as in the case of the MP, or of a TSO in the balancing market) the objective function fundamentally aims at minimizing energy production costs; this is not necessarily obvious (cf. the case of hydro units below), but the principle is clear. However, in the free-market regime the aim of a single GENCO is typically rather to maximize energy production profits. This again requires estimating the costs, so the same objective as in the MP case largely carries over, but it also requires estimating the revenues from energy selling, as it is the difference between the two that has to be maximized. In particular, if the GENCO is a price maker it may theoretically indulge in strategic bidding (David and Wen 2001), whereby the GENCO withdraws power from the market (by bidding it at high cost) in order to push up market prices, resulting in an overall diminished production from its units but higher profit due to the combined effect of decreased production cost and increased unit revenue for the produced energy. Of course, the success of such a strategy depends on the (unknown) behavior of other participants in the market, which thereby introduces significant uncertainty in the problem. The electrical market is also highly regulated to rule out such behavior from market participants; in particular, larger GENCOs, being more easily price makers, are strictly observed by the regulator and bid all their available capacity on the market. Yet, the solution of strategic bidding problems is of interest at least to the regulators themselves, who need to identify the GENCOs who may in principle exercise market power and identify possible patterns of abuse.
Even in the price taker case, i.e., a GENCO with limited assets and little or no capacity to influence market prices, uncertainty is added by the need of accurately predicting the selling price of energy for each unit and each \(t \in \mathcal {T}\) (Gil et al. 2012). This uncertainty must then be managed, e.g. with techniques such as those of Robust Optimization (Baringo and Conejo 2011).

Energy production costs for fuel-burning units are typically modeled (in increasing order of complexity) as linear, piecewise-linear convex, quadratic convex, or nonconvex functions separable for each \(t \in \mathcal {T}\). In fact, while the fuel-consumption-to-generated-power curve can usually be reasonably well approximated with a piecewise-linear function or a low-order polynomial one, other technical characteristics of generating systems introduce nonconvex elements. The simplest form is that of a fixed cost to be paid whenever the unit is producing at some \(t \in \mathcal {T}\), irrespective of the actual amount of generated power. Alternatively, or in addition, start-up costs (and, less frequently, shut-down ones) are incurred when a unit is brought online after a period of inactivity. In their simplest form start-up costs can be considered fixed, but most often they significantly depend on the time the unit has been off before having been restarted, and therefore are not separable for each time instant. The dependency of the start-up cost on time can be rather complex, as it actually depends on the choice between the unit being entirely de-powered (cooling) or being kept at an appropriate temperature, at the cost of burning some amount of fuel during the inactivity period, to make the start-up cheaper (banking). Technically speaking, in the latter case, one incurs a higher boiler cost to offset part of the turbine cost. The choice between these two alternatives can often be optimally made by simple formulae once the amount of idle time is known, but the idle time is typically not known beforehand in UC since the schedule of the unit is precisely the output of the optimization problem. Fortunately, some of the solution methods allow inclusion of the start-up cost at a relatively minor increase of the computational complexity; this is the case e.g. of MILP formulations, cf. Sect. 3.2, exploiting the fact that the optimal start-up cost is nondecreasing as the length of the idle period increases (Nowak and Römisch 2000; Carrión and Arroyo 2006). In other cases start-up costs have basically no additional computational cost, such as in DP approaches, cf. Sect. 3.1. Other relevant sources of nonconvexity in the objective function are valve points (Wood and Wollemberg 1996), corresponding to small regions of the feasible production levels where the actual working of the unit is unstable (e.g., due to transitioning between two different configurations in a Combined Cycle Gas Turbine, CCGT, unit or other technical reasons) and that therefore should be avoided.
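The cooling/banking choice can be sketched as follows, assuming the common textbook shapes for the two alternatives: an exponentially saturating cost when the unit is left to cool and a cost linear in the idle time when it is banked. The function name and every parameter value below are hypothetical and serve only to show that, once the idle time is known, the cheaper option is a one-line formula and the resulting cost is nondecreasing in the idle time.

```python
import math


def startup_cost(hours_off, cold_cost=1000.0, tau=4.0, bank_rate=150.0, fixed=200.0):
    """Cheapest start-up cost after `hours_off` hours of inactivity.

    All parameters are illustrative assumptions:
      cold_cost  cost of a start from a fully cold boiler
      tau        thermal time constant of the unit (hours)
      bank_rate  cost per hour of keeping the boiler warm
      fixed      cost paid at every start, whatever the idle time
    """
    cooling = fixed + cold_cost * (1.0 - math.exp(-hours_off / tau))
    banking = fixed + bank_rate * hours_off
    return min(cooling, banking)


if __name__ == "__main__":
    for h in (0, 1, 2, 4, 8, 24):
        print(h, round(startup_cost(h), 2))
```

With these numbers banking is cheaper for short stops and cooling for long ones; since both branches are nondecreasing in the idle time, so is their minimum.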

Nuclear units are generally considered thermal plants, although they significantly differ in particular for the objective function. Indeed, fuel cost has a different structure and depends on many factors, not only technical but also political (e.g., Cour des Comptes 2012). For convenience, formulae similar to that of conventional thermal plants are often used. However, these units incur additional significant modulation costs whenever variations of power output are required; this cost is therefore again not separable per time instant.

Hydro units are generally assumed to have zero energy production cost, although they may in principle have crew and manning costs. In the self-scheduling case, where profit has to be maximized, this would lead to units systematically depleting all the available water due to the fact that a short-term model such as UC has no “visibility” on what happens after the end of its time horizon \(\mathcal {T}\) (the so-called “border effect”). Because of this, often a “value of water” coefficient is added to the objective function to represent the expected value of reserves left in the reservoirs at the end of \(\mathcal {T}\). These values, as well as the required reservoir levels (cf. Sect. 2.4), are usually computed by means of specific mid-term optimization models. A very standard approach is to value the differential between the initial and end volume of a reservoir against a volume-dependent water value; we refer to van Ackooij et al. (2014) and Cerjan et al. (2011) for details on various other modelling choices. A particular difficulty appears when we wish to integrate the water head effect on turbining efficiency (e.g., Finardi and Silva 2006; Ramos et al. 2012), since this is typically a nonlinear and nonconvex relationship.

In general, the case of profit maximization requires knowledge of the selling and buying price of energy at each \(t \in \mathcal {T}\). Because UC is solved ahead of actual operations, possibly precisely with the aim of computing the bids that will contribute to the setting of these prices (cf. e.g. Borghetti et al. 2003a; Bompard and Ma 2012; Kwon and Frances 2012; Rocha and Das 2012), this requires nontrivial forecast models in order to obtain reasonable estimates of the prices (e.g. Oudjane et al. 2006; Li et al. 2010; Zareipour 2012). Depending on the time horizon and specific application, different price models can be considered. These can be obtained from time series modeling (e.g. Diongue 2005; Muñoz et al. 2010; Pedregal et al. 2012), mathematical finance (e.g. Oudjane et al. 2006; Higgs and Worthington 2008; Benth et al. 2012; Nguyen-Huu 2012; Pepper et al. 2012) or can be based on electricity fundamentals (e.g. van Ackooij and Wirth 2007; Ea 2012). For the case where the producer is a price taker, that is, small enough so that its production can be deemed to have little or no effect on the realized prices, UC can typically be independently solved for each individual unit (thus being styled as the self-scheduling problem), and it is therefore much easier (Arroyo and Conejo 2000), although uncertainty in prices then becomes a critical factor and needs to be included in the models by appropriate techniques (Conejo et al. 2002; Nogales et al. 2002; Baringo and Conejo 2011; Jabr 2005). Things are significantly different in case the producer can exercise market power, that is, influence (increase) the prices by changing (withdrawing) the power it offers to the market; modeling this effect “ties” all the units back again into a unique UUC (Borghetti et al. 2003a; Conejo et al. 2002; de la Torre et al. 2002; Pereira et al. 2005).
Uncertainty in this case is also very relevant, with the behavior of competitors being one obvious primary source (Anderson and Philpott 2002; Wen and David 2001; Vucetic et al. 2001; Pineau and Murto 2003; Wang et al. 2007). The matter is further complicated by the fact that the structure of the Power Exchange (PE) is usually complex, with more than one auction solved in cascade to account for different kinds of generation (energy, reserve, ancillary services, ...) (Baillo et al. 2004; Triki et al. 2005; Wang et al. 2005) and by the fact that tight transmission constraints may create zonal or even nodal prices, thereby allowing producers who may not have market power in the global context to be able to exercise it in a limited region (Li and Shahidehpour 2005; Peng and Tomsovic 2003; Pereira et al. 2005).
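The price-taker case described above, where each unit can be scheduled on its own against given prices, can be sketched as a small dynamic program over the on/off status of one unit. This is a deliberately stripped-down illustration under stated assumptions: prices are known, the production cost is linear, the start-up cost is fixed, and minimum up/down times and ramping are ignored. The function name `self_schedule` and all data are hypothetical.

```python
def self_schedule(prices, p_min, p_max, marginal_cost, fixed_cost,
                  startup_cost, initially_on=False):
    """Profit-maximizing on/off schedule of one price-taking unit.

    Returns (best_profit, schedule) with schedule[t] in {0, 1}.
    """
    neg_inf = float("-inf")

    def on_profit(price):
        # Profit is linear in the output, so the best output is a bound.
        margin = price - marginal_cost
        output = p_max if margin > 0 else p_min
        return margin * output - fixed_cost

    # value[s]: best profit so far with the unit ending in state s (0 off, 1 on)
    value = [neg_inf, 0.0] if initially_on else [0.0, neg_inf]
    back = []  # back[t] = (predecessor if off at t, predecessor if on at t)
    for price in prices:
        off_val, off_prev = (value[0], 0) if value[0] >= value[1] else (value[1], 1)
        start, stay = value[0] - startup_cost, value[1]
        on_val, on_prev = (stay, 1) if stay >= start else (start, 0)
        value = [off_val, on_val + on_profit(price)]
        back.append((off_prev, on_prev))

    # Recover the schedule by walking the predecessors backwards.
    state = 0 if value[0] >= value[1] else 1
    best = value[state]
    schedule = []
    for prev in reversed(back):
        schedule.append(state)
        state = prev[state]
    schedule.reverse()
    return best, schedule


if __name__ == "__main__":
    print(self_schedule([10, 50, 50, 10], p_min=20, p_max=100,
                        marginal_cost=30, fixed_cost=100, startup_cost=500))
```

Once the producer can influence prices, the per-unit problems are no longer independent and a decomposition of this kind is no longer exact.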

2.3 Thermal units

A thermal power station is a power plant in which the prime mover is steam driven. Technical/operational constraints can be classified as either static or dynamic: the former hold on each time step, whereas the latter link different (most often adjacent) time steps. The most typical static constraints are:

1. Offline: when the unit is offline, the power output is less than or equal to zero (negative power output refers to the power used by auxiliary installations, e.g., for nuclear plants).

2. Online: when the unit is online, the power output must be between Minimal Stable Generation (MSG) and maximal power output.

3. Starting: the unit is ramping up to MSG. The ramping profile depends on the number of hours a unit has been offline (e.g. Le et al. 1990); see also the discussion on starting curves below in the section on dynamic constraints. A unit in this state can in principle still be disconnected, but at a cost.

4. Stopping: the unit ramps down from MSG to the offline power output. As for starting, the ramping profile depends on the number of hours a unit has been online; see also the discussion on stopping curves below in the section on dynamic constraints.

5. Generation capacity: the production capacity of each unit. For some units the production output has to be selected among a discrete set of values.

6. Spinning reserve: the extra generating capacity that is available by increasing the power output of generators that are already connected to the power system. For most generators, this increase in power output is achieved by increasing the torque applied to the turbine’s rotor. Spinning reserves can be valued separately from actively generated power as they represent the main mechanism that electrical systems have to cope with real-time variations in demand levels.

7. Crew constraint: the number of operators available to perform the actions in a power plant.

Typical dynamic constraints instead are:

1. Minimum up/down time: a unit has to remain online/offline for at least a specific amount of time.

2. Operating ramp rate (also known as ramp-down and ramp-up rate): the increment and decrement of the generation of a unit from a time step to another, excluding start-up and shut-down periods, must be bounded by a constant (possibly different for ramp-up and ramp-down).

3. Minimum stable state duration: a unit that has attained a specific generation level has to produce at that level for a minimum duration of time.

4. Maximum number of starts: the number of starts can be limited over a specific time horizon (such a constraint is also implicitly imposed by Minimum Up/Down Time ones, and in fact the two are to some extent alternatives).

5. Modulation and stability: these constraints are mainly applied to an online nuclear unit. A unit is in modulation if the output level changes in a time interval, whereas it is stable if the power level remains identical to that of the previous time step. The constraints ensure that the unit is “most often stable”, requiring that the number of modulations does not exceed a predefined limit over a given time span (say, 24 h).

6. Starting (stopping) curve (also referred to in literature as start-up/shut-down ramp rate): in order to start (stop) a unit and move it from the offline (online) state to the online (offline) state, the unit has to follow a specific starting (stopping) curve, which links offline power output (zero, or negative for nuclear plants) to MSG (or vice-versa) over the course of several time steps. Each starting (stopping) curve implies a specific cost, and the chosen curve depends on the number of hours the plant has been offline (online). Starting (stopping) may take anything from several minutes (and therefore be typically irrelevant) up to 24 h (and therefore be pivotal for the schedule).

7. Feasible state transitions for CCGT: these thermal units typically have at least two Gas Turbines and one Steam Turbine, and have specific feasible state transitions that are nontrivial to formulate (e.g., Fan et al. 2016).
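To make two of the dynamic constraints above concrete, the following minimal sketch checks a given schedule against minimum up/down times and operating ramp rates. The function names, the 0/1 encoding of the commitment and the convention of ignoring runs that touch the horizon boundaries are assumptions made here for illustration; starting and stopping curves are not modelled.

```python
def min_up_down_ok(u, min_up, min_down):
    """Check that every completed on/off run in the 0/1 schedule u is long enough.

    Runs touching the start or end of the horizon are not checked, since
    their true length depends on what happens outside the horizon.
    """
    runs = []  # list of (state, first index, length)
    start = 0
    for t in range(1, len(u) + 1):
        if t == len(u) or u[t] != u[start]:
            runs.append((u[start], start, t - start))
            start = t
    for state, begin, length in runs:
        interior = begin > 0 and begin + length < len(u)
        if interior and length < (min_up if state == 1 else min_down):
            return False
    return True


def ramp_ok(u, p, ramp_up, ramp_down):
    """Check operating ramp limits between consecutive online time steps."""
    for t in range(1, len(p)):
        if u[t - 1] == 1 and u[t] == 1:
            delta = p[t] - p[t - 1]
            if delta > ramp_up or -delta > ramp_down:
                return False
    return True
```

For instance, the schedule `[0, 1, 1, 1, 0, 0, 1]` satisfies a minimum up time of 3 and a minimum down time of 2, but not a minimum up time of 4.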

2.4 Hydro units

Hydro units are in fact entire hydro valleys, i.e., a set of connected reservoirs, turbines and pumps that influence each other through flow constraints. When the hydro component is significant UC is often denoted as HUC; this may make the problem significantly more difficult, as the recent survey (Taktak and d’Ambrosio 2016) highlights. Turbines release water from uphill reservoirs to downhill ones generating energy, pumps do the opposite. Note that the power output of ROR units downstream to a reservoir (and up to the following reservoir, if any) must be counted together with that of the turbines at the same reservoir; usually it is possible to do this by manipulating the power-to-discharged-water curve of the unit at the reservoir, and thus ROR units in a hydro valley need not be explicitly modeled. We remark in passing that whether or not a unit is considered ROR depends on the time horizon of the problem: units with small reservoirs can be explicitly modeled in HUC because they do have a degree of modulation over the short term, but they may be considered ROR in longer-term problems since the modulation is irrelevant over long periods of time.

As for thermal units, we distinguish constraints as being either static or dynamic. The typical ones of the first kind are:

1. Reservoir level: the level of water in each reservoir has to remain between a lower and upper bound. Frequently these bounds are used to reflect strategic decisions corresponding to optimal long-term use of water (cf. Sect. 2.2), and do not necessarily reflect physical bounds. An alternative is to use a nonlinear cost of water that reflects the higher risk incurred in substantially depleting the reservoir level, as water in hydro reservoirs represents basically the only known way of efficiently storing energy on a large scale and therefore provides a crucial source of flexibility in the system. Yet, bounds on the level would ultimately be imposed anyway by physical constraints.

2. Bounds: turbines and pumps can operate only within certain bounds on the flowing water. In particular, some turbines might have a minimal production level akin to the MSG of thermal units.

The most common dynamic constraints are:

1. Flow equations: these equations involve the physical balance of the water level in each reservoir and connect the various reservoirs together. The reservoir levels get updated according to natural inflows, what is turbined downhill, what is spilled downhill (i.e., let go from the reservoir to the next without activating the turbines), and what is pumped from downhill to uphill. Spilling might not be allowed for all reservoirs, nor do all reservoirs have pumping equipment.

2. Flow delay: the water flowing (uphill or downhill) from each unit to the next reservoir will reach it after a given delay, which can be of several hours (and occasionally even more; Belloni et al. 2003).

3. Ramp rate: adjacent turbining levels have to remain sufficiently close to each other.

4. Smooth turbining: over a given time span (e.g., 1 h), turbining output should not be in a V-shape, i.e., first increase and immediately afterwards decrease (or vice-versa). This constraint is typically imposed to avoid excessive strain on the components, similarly to several constraints on thermal units such as Minimum Up/Down Time, Maximum Number of Starts, Modulation and Stability.

5. Turbining/pumping incompatibility: some turbines are reversible and therefore pumping and turbining cannot be done simultaneously. Moreover, switching from turbining to pumping requires a certain delay (e.g., 30 min). Some of these constraints actually only refer to a single time instant and therefore they can be considered as static.

6. Forbidden zones: in complex hydro units, effects like mechanical vibrations and cavitation strongly discourage using certain intervals of turbined water, as these would result in low efficiency and/or high output variation (similarly to valve points in thermal units, cf. Sect. 2.2). Therefore, constraints that impose that the turbined water lies outside of these forbidden zones might have to be imposed (Finardi and Scuzziato 2013).
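The flow equations and the flow delay can be illustrated on the smallest possible hydro valley, i.e., two reservoirs in cascade. The sketch below is only an illustration under simplifying assumptions introduced here: the function name `simulate_cascade` and its argument layout are hypothetical, no pumping is modelled, water released before the start of the horizon is ignored, and volumes and flows are expressed in the same unit per time step.

```python
def simulate_cascade(v0, inflow, turbined, spilled, delay):
    """Volumes of an upstream (index 0) and a downstream (index 1) reservoir.

    Water released upstream (turbined + spilled) reaches the downstream
    reservoir `delay` time steps later. Returns one volume trajectory per
    reservoir, including the initial volume.
    """
    horizon = len(inflow[0])
    vol = [[v0[0]], [v0[1]]]
    for t in range(horizon):
        out_up = turbined[0][t] + spilled[0][t]
        out_down = turbined[1][t] + spilled[1][t]
        arriving = 0.0
        if t - delay >= 0:
            arriving = turbined[0][t - delay] + spilled[0][t - delay]
        # Water balance of each reservoir over one time step.
        vol[0].append(vol[0][-1] + inflow[0][t] - out_up)
        vol[1].append(vol[1][-1] + inflow[1][t] + arriving - out_down)
    return vol
```

Reservoir level constraints then amount to checking that each entry of the returned trajectories lies between the lower and upper bound of the corresponding reservoir.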

2.5 Renewable generation units

Renewable generation in UC mostly refers to wind farms, solar generation, stand-alone ROR hydro units, and geothermal production. The fundamental characteristic of all these sources, as far as UC is concerned, is the fact that they cannot be easily modulated: the produced energy, and even whether energy is produced at all (in some wind farms energy is actually consumed to keep the blades secure when wind blows too strongly), is decided by external factors. Some of these sources, most notably solar and wind, are also characterized by their intermittency; that is, it is very difficult to provide accurate forecasts for renewable generation, even for short time horizons (say, day-ahead forecasts). Furthermore, in several cases renewable generation operates in a special regulatory regime implying that it cannot even be modulated by disconnecting it from the grid. This has (not frequently, but increasingly often) led to paradoxical situations where the spot price of energy is actually zero or, where allowed, even negative, i.e., one is paid to consume the energy that renewable sources have the right to produce (and sell at fixed prices) no matter what the demand actually is. All this has led to significant changes in the operational landscape of energy production systems, which can be summarized by the following factors:

1. The total renewable production cannot be predicted accurately in advance;

2. Renewable generation has high variance;

3. The correlation between renewable generation and the load can be negative, which is particularly troublesome when load is already globally low, since significant strain is added to conventional generation assets which may have to quickly ramp down production levels, only to ramp them up (again rapidly) not much later. This goes squarely against most of the standard operational constraints in classical UC (cf. Sects. 2.3, 2.4).

In other words, in UC terms, renewable generation significantly complicates the problem; not so much because it makes its size or structure more difficult, but because it dramatically increases the level of uncertainty of net load (the load after the contribution of renewables is subtracted), forcing existing generation units to serve primarily (or at least much more often than they were designed to) as backup production in case of fluctuations, rather than as primary production systems. This increases the need of flexible (hydro-)thermal units ready to guarantee load satisfaction at a short notice, which however typically have a larger operational cost. We refer to Bouffard and Galiana (2008), Siahkali and Vakilian (2010), Moura and Almeida (2010) and Miranda et al. (2011).

2.6 Demand response and energy storage

With increasing awareness of the effect that electrical consumption may have on the “environment”, and as a result of economic incentives, users are increasingly willing to take an active part in altering their consumption pattern to accommodate system needs. In view of testing such potential, experiments have been carried out on a voluntary basis (e.g., NICEgrid in France and the pilot projects in Italy after the aforementioned ARERA resolution 300/2017/R/eel). These mechanisms can be seen as a particular type of Demand Response (DR). The novelty in itself is not so much the fact that some customers may be asked to not consume, or postpone their consumption, but rather the scale and size of the considered consumption profiles. Indeed, traditionally only large industrial clients were addressed, but this has progressively moved to consider larger sets of households. From a modelling perspective, at least three kinds of phenomena can be looked at:

- A certain amount of load can be shed, but only a limited number of times over a given time horizon (Magnago et al. 2015).

- A certain amount of load can be shifted from one moment in time to another without implying any change in consumption (zero sum).

- A certain amount of load can be shifted from a moment in time to another while implying a global increase in consumption. Such is the case, for instance, of a heating system where, after some period of not warming a household, more energy is required to recover a given comfort temperature.

In a similar way to Demand Response, energy storage allows to adapt electrical consumption (and generation), catering for flexibility needs. Energy storage gained popularity during the 1970’s, when power generation saw a significant shift from oil to nuclear power in North America and in Europe. Pumped hydro storage was used to complement base-load nuclear and coal plant by absorbing excess energy during periods of low demand and generating to meet peak consumption periods. In the subsequent decades, as generation portfolios diversified to include more flexible plant such as gas-fired units, and the power generation sector became deregulated, the economic and operational incentives justifying the installation of further electrical energy storage disappeared.

Energy storage technologies have regained popularity in recent years due to their capability to balance and facilitate the integration of wind and solar power. The flexibility inherent to most energy storage technologies can decrease wind and solar power curtailment and reduce the cycling burden on conventional generation units. Interest in energy storage is also due to its system service provision capability, including (primary) frequency control, secondary reserve and relieving network congestion. Moreover, synchronous storage technologies can address the inertia challenges that may arise due to the non-synchronous nature of wind and solar power, particularly in small or island power systems (Eyer and Corey 2010).

Although, in large part due to advances in the automotive industry, lithium-ion battery energy storage technologies for grid applications have gained the industry spotlight over the recent years, there exists a wide variety of energy storage technologies that may be employed for power system applications. These may be classified within: mechanical storage (i.e. compressed air energy storage and flywheels), electrochemical storage (including secondary and flow batteries), chemical storage (hydrogen and synthetic natural gas), electrical storage (i.e. double-layer capacitors), and thermal storage systems. As a result of the variety of technologies available, non-hydro energy storage models within UC vary widely depending on the technology implemented and its intended applications. The basic implementation of an energy storage unit performing energy arbitrage, which may apply to most storage assets in their simplest form, will contain the following elements:

- Maximum and minimum charging and discharging power constraints;

- Maximum and minimum state of charge constraints;

- Charge and discharge efficiency.
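The three elements listed above can be combined in a few lines. The following is a minimal sketch rather than a reference model: the function name `simulate_storage`, the unit-length time steps, the zero lower bound on charging and discharging power and the way efficiencies enter the balance are all assumptions made here for illustration.

```python
def simulate_storage(soc0, charge, discharge, eta_c, eta_d,
                     soc_min, soc_max, p_charge_max, p_discharge_max):
    """Return the state-of-charge trajectory, or raise ValueError on a violated bound.

    In each (unit-length) time step the stored energy increases by
    eta_c * charge and decreases by discharge / eta_d.
    """
    soc = [soc0]
    for t, (c, d) in enumerate(zip(charge, discharge)):
        if not (0 <= c <= p_charge_max and 0 <= d <= p_discharge_max):
            raise ValueError(f"power bound violated at step {t}")
        nxt = soc[-1] + eta_c * c - d / eta_d
        if not (soc_min <= nxt <= soc_max):
            raise ValueError(f"state-of-charge bound violated at step {t}")
        soc.append(nxt)
    return soc
```

Within a UC model the charge and discharge levels would be decision variables and the checks above would become constraints; the sketch only evaluates a given profile.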

A generic deterministic and stochastic energy storage model is proposed in Pozo et al. (2014). Some battery UC models may also optimise the lifecycle of the asset by including charge and discharge penalties. Certain energy storage technologies may require additions to the basic model, e.g., compressed air energy storage operates in combination with a gas turbine, therefore, the interactions between both technologies must be modelled (Chen et al. 2016). An increasing number of publications addresses the issue of how the representation of uncertainty within UC formulations may impact the operation and the calculated value of energy storage from both system (Pozo et al. 2014; Suazo-Martinez et al. 2014; Kiran and Kumari 2016) and private investor perspectives (Muche 2014).

2.7 System-wide constraints

The most common form of system-wide constraints are the load constraints, guaranteeing that global energy demand is exactly satisfied for each \(t \in \mathcal {T}\). This kind of constraint is not present in the self-scheduling version of UC, where each unit reacts independently to price signals, but global load satisfaction has to be taken into account, sooner or later, even in liberalized market regimes. For instance, in several countries, after the main energy market is cleared, GENCOs can swap demand between different units in order to better adjust the production schedules corresponding to the accepted bids to the operational constraints of their committed units, which are not completely represented in the auctions (Read 2010). Alternatively, or in addition, an adjustment market is run where energy can be bought/sold to attain the same result (Palamarchuk 2012; Sauma et al. 2012). In both these cases the production schedules of all concerned units need to be taken into account, basically leading back to global demand constraints. Also, in UC-based bidding systems the global impact of all the generation capacity of a GENCO on the energy prices needs to be explicitly modeled, and this again leads to constraints linking the production levels of all units (at least, those of the given GENCO) that are very similar to standard demand constraints. Conversely, even demand constraints do not necessarily require the demand to be fully satisfied; often, slacks are added so that small amounts of deviation can be tolerated, albeit at a large cost (e.g., Dubost et al. 2005; Zaourar and Malick 2013).

Another important issue to be mentioned is that the demand constraints need, in general, to take into account the shape and characteristics of the transmission network. These are typically modeled at three different levels of approximation:

- The single bus model: basically the network aspects are entirely disregarded and the demand is considered satisfied as soon as the total production is (approximately) equal to the total consumption, for each time instant, irrespective of where these happen on the network. This corresponds to simple linear constraints and it is the most common choice in UC formulations.

- The DC model: the network structure is taken into account, including the capacity of the transmission links, but a simplified version of Kirchhoff laws is used so that the corresponding constraints are still linear, albeit more complex than in the bus model (Lee et al. 1994; Jabr 2010; Fonoberova 2010). In Ardakani and Bouffard (2013) the concept of umbrella constraints is introduced to define a subset of the network DC constraints that are active in order to significantly reduce the size of these constraints.

- The AC model: the full version of Kirchhoff laws is used, leading to highly nonlinear and nonconvex constraints, so that even the corresponding ED becomes difficult (Murillo-Sanchez and Thomas 1998; Momoh et al. 1999a, b; Sifuentes and Vargas 2007a, b). A recent interesting avenue of research concerns the fact that the non-convex AC constraints can be written as quadratic relations (Jabr 2006, 2008; Lavaei and Low 2012), which paves the way for convex relaxations using semidefinite programming approaches (Molzahn et al. 2013). In particular, in the recent Hijazi et al. (2013) a quadratic relaxation approach is proposed which builds upon the narrow bounds observed on decision variables (e.g. phase angle differences, voltage magnitudes) involved in power systems, providing a formulation of the AC power flow equations that can be better incorporated into UC models with discrete variables, notably the ones of Sect. 2.8. A recount of these recent developments can be found in Bienstock (2013).
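As an illustration of the DC model, the sketch below computes line flows on a three-bus triangular network for given nodal injections. It is a toy example under assumptions introduced here: the function name `dc_flows_3bus`, the choice of bus 0 as reference and the use of Cramer's rule (adequate only for such a tiny system) are not taken from the cited works.

```python
def dc_flows_3bus(b01, b02, b12, p1, p2):
    """DC power flow on a 3-bus triangle with bus 0 as reference (theta_0 = 0).

    b01, b02, b12 are line susceptances; p1, p2 are net injections at
    buses 1 and 2 (generation minus load). Returns flows on lines
    (0,1), (0,2), (1,2), positive in the direction i -> j.
    """
    # Reduced susceptance matrix B' (row/column of the reference bus removed).
    a11, a12 = b01 + b12, -b12
    a21, a22 = -b12, b02 + b12
    det = a11 * a22 - a12 * a21
    # Cramer's rule for the linear system B' * theta = p.
    theta1 = (p1 * a22 - a12 * p2) / det
    theta2 = (a11 * p2 - a21 * p1) / det
    return {
        (0, 1): b01 * (0.0 - theta1),
        (0, 2): b02 * (0.0 - theta2),
        (1, 2): b12 * (theta1 - theta2),
    }
```

With equal susceptances of 10 and loads of 30 and 60 at buses 1 and 2 (all generation at bus 0), the flows are 40, 50 and 10 on lines (0,1), (0,2) and (1,2); transmission capacities then become simple bounds on these linear expressions. Setting `b12` to zero, i.e., removing line (1,2), changes the flows on the two remaining lines to 30 and 60.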

Although market-based electrical systems have in some sense made network constraints less apparent to energy producers, they are nonetheless still very relevant nowadays; not only in the remaining vertically integrated electrical systems, but also for the TSO that handles network security and efficiency. This requires taking into account a fully detailed network model, even considering security issues such as \(N - 1\) fault resilience, together with a reasonably detailed model of GENCOs’ units (comprising e.g. infra-hour power ramps, start-up costs, and start-up/shut-down ramp rate), when solving the Market Balancing problem. The latter is basically a residual demand, bidding-based UC. From a different perspective, network constraints might also be important for GENCOs that are able to exercise market power in case zonal or nodal pricing is induced by the network structure (Price 2007).

Finally, both for vertically integrated systems and in the TSO perspective, other relevant system-wide constraints are spinning reserve ones: the committed units must be able to provide some fraction (at least 3% according to Takriti et al. 1996) of the total load in order to cope with unexpected surges of demand or failures of generating units and/or transmission equipment. Other global constraints linking all units, or some subsets of them, exist: for instance, all (or specific subsets of) fossil-fuel burning units may have a maximum cap on the generation of pollutants (\(\hbox {CO}_2\), \(\hbox {SO}_x\), \(\hbox {NO}_x\), particles,...) within the time horizon (Hsu et al. 1991; Fu et al. 2005; Gjengedal 1996; Kuloor et al. 1992; Wang et al. 1995). Alternatively, a cluster of geographically near units (a plant) burning the same fuel (typically gas) may be served by a unique reservoir, and can therefore share a constraint regarding the maximum amount of fuel that can be withdrawn from the reservoir within the time horizon (Aoki et al. 1987, 1989; Tong and Shahidehpour 1989; Fu et al. 2005; Cohen and Wan 1987). Finally, there may be constraints on the minimum time between two consecutive start-ups in the same plant (Dubost et al. 2005), e.g., due to crew constraints. If a plant comprises a small enough number of units it could alternatively be considered as a single “large” unit, so that these constraints become technical ones of this aggregated generator. The downside is that the problem corresponding to such a meta-unit then becomes considerably more difficult to solve.

In systems with higher degrees of penetration of intermittent generation, such as islands, UC models are sometimes amended with further constraints to help control the frequency in case of a contingency. This is relevant since, generally, intermittent resources such as wind do not provide inertia to the system, although they might through power electronics. In order to evaluate the contribution of installing such additional equipment, the models must become more accurate. Two different ways have been proposed to account for frequency in UC. The first is through a set of indirect constraints that are neither a relaxation nor a restriction of the actually desired ones (e.g., Daly et al. 2015; Ahmadi and Ghasemi 2014; Ela et al. 2014a, b; Restrepo and Galiana 2005). An obvious downside of such an approach is that one can neither ensure satisfaction of the original constraint nor control sub-optimality. The second is to directly account for frequency-related constraints in UC models, which can be done through a very simplified version of the differential equation system governing the loss of frequency following a contingency (e.g., Teng et al. 2016); we refer to Arteaga (2016) and Cardozo et al. (2017) for a thorough account of different approaches and extensive tests. A more precise approach can also be designed, albeit under some assumptions that are theoretically hard to verify (Cardozo et al. 2018); the resulting UC models can be solved by a Benders-like scheme (Cardozo et al. 2016) exploiting the convexifying effect of Lagrangian relaxations (Lemaréchal and Renaud 2001).

2.8 Optimal transmission switching

Traditionally, in UC models the transmission network has been regarded as a “passive” element, whose role was just to allow energy to flow from generating units to demand points. This is also justified by the fact that electrical networks, unlike most other networks (logistic, telecommunications, gas, water,...) are “not routable”: the current can only be influenced by changing nodal power injection, which is however partly fixed (at least as demand is concerned). Indeed, in traditional UC models there were no “network variables”, and the behavior of the transmission system was only modeled by constraints. However, as the previous paragraph has recalled, the transmission network is by far not a trivial element in the system, and separate network variables are required. Recently, the concept has been further extended to the case where the system behavior can be optimized by dynamically changing the topology of the network. This is a somewhat counterintuitive consequence of Kirchhoff laws: opening (interrupting) a line, maybe even a congested one, causes a global re-routing of electrical energy and may reduce the overall cost, e.g. by allowing to increase the power output of some cheaper (say, renewable) units (Fisher et al. 2008). This effect can be especially relevant in those parts of the network with a high fraction of renewables whose production is sometimes cut off because of network constraints.

Thus, a class of problems, called Optimal Transmission Switching (OTS) or System Topology Optimization (STO), has been defined whereby each line of the network has an associated binary decision (for each \(t \in \mathcal {T}\)) corresponding to the possibility of opening it. This makes the problem difficult to solve even with a very simple model of nodal injections and a simple network model such as the DC one (cf. Sect. 2.7); even more so with the AC model and a complete description of the generating units. The so-called UCOTS models (Fisher et al. 2008; Di Lullo 2013; Hedman et al. 2011a, b; Ruiz et al. 2012; Bienstock and Verma 2011; Villumsen and Philpott 2011; Papavasiliou et al. 2013; O’Neill et al. 2010; Ostrowski et al. 2012; Ostrowski and Wang 2012; Liu et al. 2012a, b; Korad and Hedman 2013; Hedman et al. 2009, 2010; Zhang and Wang 2014) extend UC: almost everything that can be said about UC is a fortiori valid for UCOTS, and therefore in the following we will not distinguish between the two unless strictly necessary.
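A common way to write the switching decision on top of the DC model is through “big-M” constraints. The following is only a sketch under notation assumed here, not taken from a specific reference: for a line \(l\) connecting buses \(m\) and \(n\), \(z^l_t \in \{0,1\}\) is 1 if the line is in service at time \(t\), \(f^l_t\) is its flow, \(\theta^m_t\) and \(\theta^n_t\) are the phase angles at its end buses, \(B_l\) is its susceptance, \(F_l\) its capacity, and \(M_l\) a sufficiently large constant.

\[
- F_l \, z^l_t \le f^l_t \le F_l \, z^l_t , \qquad
- M_l \, (1 - z^l_t) \le f^l_t - B_l \, (\theta^m_t - \theta^n_t) \le M_l \, (1 - z^l_t) , \qquad t \in \mathcal {T} .
\]

When \(z^l_t = 1\) the second pair of inequalities enforces the DC flow law \(f^l_t = B_l (\theta^m_t - \theta^n_t)\) and the first pair bounds the flow by the line capacity; when \(z^l_t = 0\) the flow is forced to zero and the angles at the two ends are decoupled, provided \(M_l\) is large enough.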

3 Methods for the deterministic unit commitment

We now proceed with a survey of solution methods for (the deterministic) UC. Our choice to first focus on the case where the several forms of uncertainty arising in UC (cf. Sect. 2.1) are neglected is justified by the following facts:

- UC already being a rather difficult problem in practice, most work has been carried out in the deterministic setting;

- Uncertainty can be taken into account through various “engineering rules”: for instance, spinning reserves allow to account for uncertainty on load, tweaking reservoir volumes might allow to account for uncertainty on inflows, and so on;

- Methods for solving the deterministic UC are bound to provide essential knowledge when dealing with UUC.

As discussed in Sect. 2, UC is not one specific problem but rather a large family of problems exhibiting common features. Since the set of constraints dealt with in the UC literature varies from one source to another, we define what we will call a basic Unit Commitment problem (bUC) which roughly covers the most common problem type; through the use of tables we will then highlight which sources consider additional constraints. A bUC is a model containing the following constraints:

1. Offer-demand equilibrium;

2. Minimum up or down time;

3. Spinning and non spinning reserve;

4. Generation capacities.
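For concreteness, the constraint families above can be written, for instance, as follows. This is a sketch under assumed notation: \(p^i_t\) and \(u^i_t\) denote the production and the commitment of unit \(i\) at time \(t\), whereas the set of units \(\mathcal {I}\), the demand \(d_t\), the reserve requirement \(r_t\), the capacity bounds \(\underline{p}^i\) and \(\bar{p}^i\), and the minimum up and down times \(\tau ^i_+\) and \(\tau ^i_-\) are symbols introduced here for illustration. Only spinning reserve is represented, and the exact form of each constraint varies from one source to another.

\[
\begin{aligned}
& \textstyle \sum _{i \in \mathcal {I}} p^i_t = d_t && t \in \mathcal {T} \\
& u^i_t - u^i_{t-1} \le u^i_s && s = t+1, \dots , \min \{ t + \tau ^i_+ - 1 , |\mathcal {T}| \} \\
& u^i_{t-1} - u^i_t \le 1 - u^i_s && s = t+1, \dots , \min \{ t + \tau ^i_- - 1 , |\mathcal {T}| \} \\
& \textstyle \sum _{i \in \mathcal {I}} \big ( \bar{p}^i u^i_t - p^i_t \big ) \ge r_t && t \in \mathcal {T} \\
& \underline{p}^i u^i_t \le p^i_t \le \bar{p}^i u^i_t , \quad u^i_t \in \{ 0 , 1 \} && i \in \mathcal {I} , \; t \in \mathcal {T}
\end{aligned}
\]

The second and third rows state that a unit started up (shut down) at time \(t\) must remain online (offline) for the following \(\tau ^i_+ - 1\) (\(\tau ^i_- - 1\)) time steps.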

The UC literature review of Sheble and Fahd (1994), of which Padhy (2004) is essentially an update adding heuristic approaches, classifies UC methods into roughly eight classes. We will essentially keep this distinction, but regroup all heuristic approaches under “Meta-Heuristics”, thus leading us to the following classification:

1. Dynamic programming;

2. MILP approaches;

3. Decomposition approaches;

4. (Meta-)Heuristics approaches.

We will also add some of the early UC approaches, such as priority listing, to the Heuristic class. However, we will not delve much into that class of approaches, since the recent surveys (Farhat and El-Hawary 2009; Saravanan et al. 2013) mainly focus on these, while providing little (or no) detail on approaches based on mathematical programming techniques, which are instead crucial for us in view of the extension to the UUC case.

3.1 Dynamic programming

Dynamic Programming (DP, see e.g. Bellman and Dreyfus 1962; Bertsekas 2005, 2012) is one of the classical approaches for UC. As discussed below, it is nowadays mostly used for solving subproblems of UC, often in connection with Lagrangian-based decomposition methods (cf. Sect. 3.3); however, attempts have been made to solve the problem as a whole. There have been several suggestions to overcome the curse of dimensionality that DP is known to suffer from; we can name combinations of DP and Priority Listing (DP-PL) (Snyder et al. 1987; Hobbs et al. 1988), Sequential Combination (DP-SC) (Pang et al. 1981), Truncated Combination (DP-TC) (Pang and Chen 1976), Sequential/Truncated Combination (DP-STC) (the integration of the two aforesaid methods) (Pang et al. 1981), variable window truncated DP (Ouyang and Shahidehpour 1991), approximated DP (de Farias and Van Roy 2003) or even some heuristics such as the use of neural networks (Ouyang and Shahidehpour 1991) or artificial intelligence techniques (Wang and Shahidehpour 1993). The multi-pass DP approach (Yang and Chen 1989; Erkmen and Karatas 1994) consists of applying DP iteratively, wherein in each iteration the discretization of the state space, time space and controls is refined around the previously obtained coarse solution; usually, this is applied to ED, i.e., once commitment decisions have been fixed. In Pang et al. (1981) three of the aforesaid methods, DP-PL, DP-SC, and DP-STC, are compared against a priority list method on a system with 96 thermal units, showing that the DP-related approaches are preferable to the latter in terms of time and performance. The recent Singhal and Sharma (2011) performs a similar study on a bUC with 10 thermal units, but only DP approaches are investigated.

Despite its limited success as a technique for solving UC, DP is important because of its role in dealing with sub-problems in decomposition schemes like Lagrangian relaxation. These typically relax the constraints linking different units together, so that one is left with single-Unit Commitment (1UC) problems, i.e., self-scheduling ones where the unit only reacts to price signals. In the “basic” case of time-independent startup costs 1UC can be solved in linear time on the size of \(\mathcal {T}\). When dealing with time-dependent startup costs instead, this cost becomes quadratic (Bard 1988; Zhuang and Galiana 1988). However, this requires that the optimal production decisions \(p^i_t\) can be independently set for each time instant if the corresponding commitment decision \(u^i_t\) is fixed, which is true in bUC but not if ramp rate constraints are present. It is possible to discretize power variables and keep using DP (Bechert and Kwatny 1972), but the approach is far less efficient and the determined solution is not guaranteed to be feasible. An efficient DP approach for the case of ramp rate constraints and time-dependent startup costs has been developed in Fan et al. (2002) under the assumption that the power production cost is piecewise linear. This has been later extended in Frangioni and Gentile (2006b) for general convex cost functions; under mild conditions (satisfied e.g., in the standard quadratic case), this procedure has cubic cost in the size of \(\mathcal {T}\). DP has also been used to address hydro valley subproblems in Siu et al. (2001) where a three stage procedure is used: first an expert system is used to select desirable solutions, then a DP approach is used on a plant by plant basis, and a final network optimization step resolves the links between the reservoirs. In Salam et al. (1991) expert systems and DP are also coupled in order to solve UC. We also mention the uses of expert systems in Mokhtari et al. (1988).
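The “basic” 1UC case can be sketched as a DP over a small state space. The code below is an illustration under assumptions made here, not the algorithm of any cited work: the function name `solve_1uc` is hypothetical, the production cost is linear (so the best output is at one of the two bounds), the startup cost is time-independent, the unit starts offline and free to be started, and minimum up/down times are not enforced beyond the end of the horizon. Since the number of states is bounded by the sum of the minimum up and down times, the number of DP transitions grows linearly with the number of time steps; for readability the sketch copies partial schedules instead of storing back-pointers, which a careful implementation would avoid.

```python
def solve_1uc(prices, p_min, p_max, marginal_cost, fixed_cost,
              startup_cost, min_up, min_down):
    """Maximise the profit of one unit against price signals by dynamic programming.

    State: (on, k), with k = time steps already spent in the current status,
    capped at min_up (if on) or min_down (if off). Returns (profit, schedule).
    """
    def on_profit(price):
        # With a linear cost the best output is at one of the two bounds.
        margin = price - marginal_cost
        return max(margin * p_min, margin * p_max) - fixed_cost

    # value[state] = (best profit so far, commitment schedule reaching it)
    value = {(0, min_down): (0.0, [])}
    for price in prices:
        nxt = {}

        def relax(state, profit, sched):
            # Keep only the most profitable way of reaching each state.
            if state not in nxt or profit > nxt[state][0]:
                nxt[state] = (profit, sched)

        for (on, k), (profit, sched) in value.items():
            if on:
                relax((1, min(k + 1, min_up)), profit + on_profit(price), sched + [1])
                if k >= min_up:  # minimum up time met: shutting down is allowed
                    relax((0, 1), profit, sched + [0])
            else:
                relax((0, min(k + 1, min_down)), profit, sched + [0])
                if k >= min_down:  # minimum down time met: starting up is allowed
                    relax((1, 1), profit + on_profit(price) - startup_cost, sched + [1])
        value = nxt
    return max(value.values(), key=lambda v: v[0])
```

In a Lagrangian scheme the entries of `prices` would be the multipliers of the relaxed demand constraints, and the function would be called once per unit at each iteration.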

Most often DP approaches are applied to bUC, but other constraints have been considered such as multi-area, fuel constraint, ramp rates, emission constraints, and hydro-thermal systems. We refer to Table 1 for a complete list.

3.2 Integer and mixed integer linear programming

3.2.1 Early use: exhaustive enumeration

As its name implies, this approach performs a complete enumeration of the solution space in order to select the solution with the least cost. bUC is addressed in Kerr et al. (1966) and Hara et al. (1966); in the latter, the cost function includes penalties for loss of load and overproduction. In Kerr et al. (1966) a set of 12 thermal units is scheduled on a 2 h basis. In Hara et al. (1966) a problem with two groups, each of which has 5 thermal units, is analyzed. This traditional approach obviously does not scale to large systems. However, some enumeration may find its way into hybrid approaches such as decomposition methods under specific circumstances, as in Finardi and Silva (2006), where enumeration is used in some of the subproblems of a decomposed hydro valley system.
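To make the scalability issue concrete, the sketch below enumerates all on/off combinations for a single time period. It is an illustration only, not the procedure of the cited papers, and it assumes linear production costs so that dispatch reduces to loading the cheapest committed units first. The function name and the tuple layout `(p_min, p_max, fixed_cost, marginal_cost)` are hypothetical.

```python
from itertools import product

def enumerate_commitment(units, demand):
    """Brute-force single-period commitment.

    units  : list of (p_min, p_max, fixed_cost, marginal_cost)
    demand : load to be met exactly
    Returns (best_cost, best_status); best_status is None if infeasible.
    """
    best_cost, best_status = float("inf"), None
    for status in product((0, 1), repeat=len(units)):  # 2**n candidates
        on = [u for u, s in zip(units, status) if s]
        if not (sum(u[0] for u in on) <= demand <= sum(u[1] for u in on)):
            continue  # the committed units cannot meet the demand
        # Dispatch with linear costs: every unit at p_min, then the
        # remaining demand goes to the cheapest marginal cost first.
        cost = sum(u[2] + u[3] * u[0] for u in on)
        residual = demand - sum(u[0] for u in on)
        for p_min, p_max, _, marginal in sorted(on, key=lambda u: u[3]):
            extra = min(residual, p_max - p_min)
            cost += marginal * extra
            residual -= extra
        if cost < best_cost:
            best_cost, best_status = cost, status
    return best_cost, best_status
```

With n units and a horizon of T periods the number of candidate schedules grows as 2 to the power nT, which is why the approach is limited to very small systems or to small subproblems inside a decomposition scheme.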

3.2.2 Modern use of MILP techniques

With the rise of very efficient MILP solvers, MILP formulations of UC have become common. In general, their efficiency heavily depends on the amount of modelling detail that is integrated in the problem. Early applications of MILP can be found in Garver (1962), Muckstadt and Wilson (1968) and Cohen and Yoshimura (1983); the latter states that the model could be extended to allow for probabilistic reserve constraints. HUC is considered in Dillon et al. (1978), Pereira and Pinto (1983) and Shaw et al. (1985), where constraints regarding hydro units such as flow equations, storage level of reservoirs, pump storage and min/max outflow of each reservoir are incorporated in the model.

Some specific constraints such as the number of starts in a day or particular cost functions with integrated banking costs can be found in Turgeon (1978) and Lauer et al. (1982). In Lauer et al. (1982) the authors combine Lagrangian relaxation (e.g., Muckstadt and Koenig 1977) with a B&B procedure in order to derive valid bounds that improve the branching procedure. Upper bounds are obtained from feasible solutions of UC constructed by means of a dynamic priority list. It is reported that a 250 unit UC was solved to within 1% of optimality in less than half an hour, a significant feat for the time. A similar approach is investigated in Parrilla and García-González (2006), where a heuristic using, among other things, temporal aggregation produces a good quality integer feasible solution to warm-start a B&B procedure.
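The idea of obtaining feasible commitments from a priority list can be sketched as follows. This is a deliberately simplified, static variant (the list in Lauer et al. (1982) is dynamic) that ignores minimum up/down times, startup costs and ramping; units are ranked by an assumed full-load average cost and committed in that order until capacity covers load plus reserve. All names are hypothetical.

```python
def priority_list_commitment(units, demand, reserve=0.0):
    """Commit units in merit order, period by period.

    units   : dict name -> (p_max, full_load_average_cost)
    demand  : list of loads, one per period
    reserve : extra capacity required on top of the load
    Returns a list with the committed unit names for each period.
    """
    order = sorted(units, key=lambda name: units[name][1])
    schedule = []
    for load in demand:
        committed, capacity = [], 0.0
        for name in order:
            if capacity >= load + reserve:
                break  # enough capacity is already online
            committed.append(name)
            capacity += units[name][0]
        if capacity < load + reserve:
            raise ValueError("insufficient capacity for load plus reserve")
        schedule.append(committed)
    return schedule
```

Any schedule produced this way, once dispatched and costed, yields an upper bound on the optimal value, which is the role such heuristics play inside a B&B scheme.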

While MILP is a powerful modelling tool, its main drawback is that it may scale poorly when the number of units increases or when additional modelling detail is integrated. To overcome this problem it has been combined with methods such as DP (Bond and Fox 1986), logic programming (Huang et al. 1998) and Quadratic Programming (QP) (Shafie-Khah and Parsa 2011). In Shafie-Khah and Parsa (2011) a HUC with various constraints is solved; a customized B&B procedure is developed wherein binary variables are branched upon according to their difference from bounds. The approach does not require any decomposition method, and it is reported to reduce solution time significantly in comparison to other methods. The paper builds upon Fu and Shahidehpour (2007), where a six-step solution is proposed to solve large-scale UC; the algorithm is reported to be capable of solving security-constrained problems with 169, 676 and 2709 thermal units in 27 s, 82 s and 8 min, respectively. This so-called Fast-Security Constraint Unit Commitment (F-SCUC) method is based on an ad-hoc way of fixing binary variables and gradually unlocking them if needed, using Benders-type cuts to this effect. However, in Frangioni et al. (2008) it is reported that MILP models where the objective function is piecewise-linearly approximated are much more effective than the direct use of MIQP models, at least for one specific choice and version of the general-purpose MIQP solver. In Frangioni et al. (2011) MILP and Lagrangian methods are combined, solving problems with up to 200 thermal units and 100 hydro units in a few minutes if the desired accuracy is set appropriately. In Sahraoui et al. (2017) the authors consider specific issues related to numerical errors in MILP in a HUC context and suggest some methods to deal with these errors.
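One simple way to approximate a convex quadratic cost by a piecewise-linear function, so that the model stays a MILP, is to take the maximum of a few tangent lines. The sketch below is a generic illustration under assumed notation (cost \(a p^2 + b p + c\) with \(a \ge 0\), evenly spaced tangent points); it is not claimed to be the specific approximation used in Frangioni et al. (2008), and the function names are hypothetical.

```python
def tangent_cuts(a, b, c, p_min, p_max, n_cuts):
    """Tangent lines to f(p) = a*p**2 + b*p + c at evenly spaced points.

    Returns a list of (slope, intercept) pairs; requires n_cuts >= 2.
    """
    cuts = []
    for k in range(n_cuts):
        p0 = p_min + (p_max - p_min) * k / (n_cuts - 1)
        slope = 2 * a * p0 + b          # f'(p0)
        intercept = c - a * p0 ** 2     # f(p0) - f'(p0) * p0
        cuts.append((slope, intercept))
    return cuts

def pwl_cost(cuts, p):
    """Piecewise-linear underestimate of the cost: max over the tangents."""
    return max(slope * p + intercept for slope, intercept in cuts)
```

In a MILP each pair would become a linear constraint bounding an auxiliary cost variable from below. With tangent points spaced h apart, the approximation error of this scheme is at most \(a (h/2)^2\), so a handful of cuts is often enough for typical cost curves.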

Systems with a significant fraction of hydro generation require a specific mention due to a notable characteristic: the relationship between the power that can be generated and the level of the downstream reservoir (head-to-generated-power function), which can be highly nonlinear (Catalão et al. 2006) and, in particular, nonconvex. This can be tackled by trying to find convex formulations for significant special cases (Yu et al. 2000), by developing ad-hoc approximations that make the problem easier to solve (Catalão et al. 2010), or by using the modelling power of MILP to represent this (and other nonconvex) feature(s) of the generating units (Piekutowki et al. 1994; Chang et al. 2001; Dal’Santo and Costa 2016; Chen et al. 2017). However, developing a good approximation of the true behaviour of the function is rather complex because it depends on both the head value of the reservoir and the water flow. MILP models for accurately representing this dependency have been presented in Jia and Guan (2011), and more advanced ones in Borghetti et al. (2008) and Alvarez et al. (2018) using ideas from d’Ambrosio et al. (2010); while they are shown to significantly improve the quality of the generated schedules, this feature makes HUC markedly more complex to solve. Several solution approaches (MILP, MINLP and LR based decomposition) to represent hydro power generation are compared in Finardi et al. (2016). Through a 3-phase MILP strategy, accurate solutions are found for large-scale HUC instances in Marchand et al. (2018).

3.2.3 Recent trends in MILP techniques

Recently, MIP (and in particular MILP) models have attracted renewed attention due to a number of factors. Perhaps the most relevant is the fact that MILP solvers have significantly increased their performance, so that more and more UC formulations can be solved by MILP models with reasonable accuracy in running times compatible with actual operational use (Carrión and Arroyo 2006). Furthermore, selected nonlinear features (in particular convex quadratic objective functions and their generalization, i.e., Second-Order Cone Constraints) are nowadays efficiently integrated in many solvers, making it possible to better represent some of the features of the physical system. This is especially interesting because MIP models are much easier to modify than custom-made solution algorithms, which, in principle, makes it possible to quickly adapt the model to the changing needs of the decision-makers. However, it has to be remarked that each modification to the model incurs a serious risk of making the problem much more difficult to solve. Two somewhat opposite trends have recently shown up. On the one hand, tighter formulations are developed that allow a given UC problem to be solved more efficiently because the continuous relaxation of the model provides better lower bounds. On the other hand, more accurate models are developed which better reflect the real-world behavior of the generating units and all the operational flexibility they possess (cf. e.g. Hobbs et al. 2001; Lu and Shahidehpour 2005; Makkonen and Lahdelma 2006), thereby helping to produce better operational decisions in practice.

On the first stream, the research has focussed on finding better representations of significant fragments of UC formulations. Standard UC formulations either use three binary variables (3-bin: on/off, startup and shutdown) (Garver 1962) or one single binary variable (1-bin) (Carrión and Arroyo 2006). In recent years, effort has gone in particular into improving the tightness of the 3-bin formulation. For instance, Ostrowski et al. (2012) and Morales-España et al. (2013a) develop better representations of the polyhedra describing minimum up- and down-time constraints and ramping constraints. A similar study is carried out in Morales-España et al. (2015), where specific investigations are made to account for generation while starting and stopping a unit (startup/shutdown curves). In a similar vein, Bendotti et al. (2018) provide an extension to many units of the analysis initiated in Rajan and Takriti (2005) as far as min-up/min-down constraints are concerned; in particular, a set of new valid inequalities is introduced. By extension, the computational complexity is looked at in Bendotti et al. (2017a); not surprisingly, UC is found to be NP-Hard even in simple situations. Further such investigations are related specifically to the consideration of start-up costs as in Brandenberg et al. (2017). In Yang et al. (2017) an alternative two binary variable (2-bin) formulation is proposed and widely tested, proving that it can be competitive with 1-bin and 3-bin ones. A different approach is proposed in Fattahi et al. (2017a), where a conic strengthened semidefinite program (SDP) is constructed for the convex relaxation of a classic UC formulation. The valid inequalities are based on the Reformulation-Linearization-Technique (RLT) (Sherali and Adams 1998) and the so-called triangle inequalities; incorporating them in the UC problem, several test cases, including the large-scale IEEE 300 bus one, can be solved to global optimality using the commercial-grade SDP solver Mosek. Recently, new formulations have been developed inspired by DP approaches (cf. Sect. 3.1), i.e., using state transition variables: these can be shown to represent the convex hull of integer solutions for the case of one single unit (Frangioni and Gentile 2015), even in the presence of quadratic costs if perspective reformulation techniques are also employed (Frangioni and Gentile 2006a), and to improve performance in practice (Atakan et al. 2018). Conic programming techniques are also used in Frangioni et al. (2009), Wu (2011) and Jabr (2012), which focus on better piecewise-linear reformulations of the nonlinear (quadratic) power cost function of thermal units. Both approaches, which can be easily combined, have been shown to attain impressive speed-ups in CPU time for a fixed level of modelling detail, thus emphasizing (once more) the importance of carefully investigating the mathematical properties of the underlying optimization problem and its ingredients.
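For reference, the core of a 3-bin formulation can be written, in one commonly used form, as follows. The symbols \(v^i_t\) and \(w^i_t\) (startup and shutdown indicators of unit \(i\) at time \(t\)) and \(\tau^+_i\), \(\tau^-_i\) (minimum up- and down-times) are notation assumed here for illustration; \(u^i_t\) is the commitment variable used above, and initial conditions are ignored for simplicity:

\[
u^i_t - u^i_{t-1} = v^i_t - w^i_t, \qquad
\sum_{s=t-\tau^+_i+1}^{t} v^i_s \;\le\; u^i_t, \qquad
\sum_{s=t-\tau^-_i+1}^{t} w^i_s \;\le\; 1 - u^i_t .
\]

The first relation links the three binary variables; the second states that a unit started within the last \(\tau^+_i\) periods must still be on, and the third that a unit shut down within the last \(\tau^-_i\) periods must still be off. Inequalities of this kind for the min-up/min-down polytope are the subject of Rajan and Takriti (2005); a 1-bin model expresses the same logic using the \(u^i_t\) variables only, typically with a weaker continuous relaxation.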

The second stream rather aims at improving the accuracy of the models in representing the real-world operating constraints of units, which are often rather crudely approximated in standard UC formulations. For hydro units this concerns, for instance, technical constraints (Chang et al. 2001) and the already discussed water-to-produced-energy function, with its dependence on the water head of the downstream reservoir (Piekutowki et al. 1994; Finardi and Silva 2006; Borghetti et al. 2008). For thermal units, improvements comprise the correct evaluation of the power contribution of the start-up and shut-down power trajectories (when a unit is producing but no modulation is possible) (Arroyo and Conejo 2004), which may make the model significantly more difficult unless appropriate techniques are used (Morales-España et al. 2013b), or a clearer distinction between the produced energy and the power trajectory of the units (García-González et al. 2007; Morales-España et al. 2014). Further, recent investigations involve the use of storage devices (Steber et al. 2018).

In the OTS context (cf. Sect. 2.8), special care must be given when modeling the Kirchhoff laws, as this leads to logic constraints that, in MILP models, are typically transformed into “big-M” (hence, weak) linear constraints. Moreover, severe symmetry issues (Ostrowski et al. 2010) must be faced (Di Lullo 2013; Ostrowski et al. 2012), as these can significantly degrade the performance of the B&B approach. Recent symmetry breaking techniques, known as orbital branching (a terminology pinned down in Ostrowski et al. (2011)) or orbitopal fixing (e.g., Kaibel et al. 2011), are applied to UC in Ostrowski et al. (2015) and Bendotti et al. (2017b). All these difficulties, not shared by UC with DC or AC network constraints, require a nontrivial extension of the “classic” MILP UC models. Many approaches use off-the-shelf B&B solvers, while possibly reducing the search space of the OTS binary variables (Ruiz et al. 2012; Ostrowski and Wang 2012; Liu et al. 2012b) and using tight formulations for the thermal units constraints. All these references use classic quadratic cost functions; one exception is Di Lullo (2013), where a direct MILP approach is combined with a perspective cuts approximation (Frangioni et al. 2009) and a special perturbation of the cost function that successfully breaks (part of the) symmetries. Together with heuristic branching priorities that give precedence to the thermal UC status variables, this is shown to be much better than using a classic quadratic function, with or without perturbations, for solving the IEEE 118 test case. In Kocuk et al. (2016) cycle inequalities inspired by Kirchhoff’s Voltage Law are proposed and used to strengthen the MILP OTS formulation. Several types of valid inequalities are proposed in Kocuk et al. (2017) to strengthen a MISOCP formulation including a full AC model for the transmission constraints. In the very recent Fattahi et al. (2017b) it is proven that finding the “best” M for the disjunctive big-M constraints in the OTS problem is NP-hard in general, beyond the fact that the problem itself is NP-hard. Yet, a procedure based on Dijkstra’s algorithm is proposed for OTS with a fixed connected spanning subgraph that finds nontrivial upper bounds on the M constants, which significantly reduces the computational time in several test systems including the real Polish network. In Shi and Oren (2018) a UCOTS model for systems with high renewable production is shown to be capable of reducing total cost via transmission switching even in the absence of congestion; the proposed approach first decomposes the system into zones, and then solves the problem for each zone in parallel (Table 2).
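To make the role of the big-M constants explicit, a typical way of writing the switchable DC power flow law for a line \(\ell = (i,j)\) is sketched below. The notation is assumed here for illustration: \(z_\ell \in \{0,1\}\) is the switching variable, \(f_\ell\) the flow, \(B_\ell\) the susceptance, \(\theta_i\) the phase angle at bus \(i\), and \(\bar{f}_\ell\) the line capacity:

\[
-M_\ell\,(1 - z_\ell) \;\le\; f_\ell - B_\ell\,(\theta_i - \theta_j) \;\le\; M_\ell\,(1 - z_\ell),
\qquad
-\bar{f}_\ell\, z_\ell \;\le\; f_\ell \;\le\; \bar{f}_\ell\, z_\ell .
\]

When \(z_\ell = 1\) the line is in service and the flow obeys the DC law; when \(z_\ell = 0\) the flow is forced to zero and the angle difference is left free, provided that \(M_\ell \ge |B_\ell| \max |\theta_i - \theta_j|\). The smaller a valid \(M_\ell\) is, the tighter the continuous relaxation, which is why bounding these constants matters in practice.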

3.3 Lagrangian and Benders decomposition