Abstract

This paper presents an artificial neural network-based multiscale method for coupling continuum and molecular simulations. Molecular dynamics modelling is employed as a local “high resolution” refinement of computational data required by the continuum computational fluid dynamics solver. The coupling between atomistic and continuum simulations is obtained by an artificial neural network (ANN) methodology. The ANN aims to optimise the transfer of information through minimisation of (1) the computational cost by avoiding repetitive atomistic simulations of nearly identical states, and (2) the fluctuation strength of the atomistic outputs that are fed back to the continuum solver. Results are presented for prototype flows such as the isothermal Couette flow with slip boundary conditions and the slip Couette flow with heat transfer.

1 Introduction

Over the past decade, the study of micro and nanoscale flows has attracted significant scientific and industrial interest due to the increasing number of devices operating on small scales. Applications of micro and nanofluidic devices, for example, have benefited various disciplines spanning from nanomedicine to nanomanufacturing and environmental sciences (Kamholz et al. 1999; McClain and Sims and Ramsey 2003). Due to their large surface-to-volume ratio, the flows in these devices are sensitive to the surface properties. Improving our physical understanding of micro and nanoscale phenomena is thus essential for further exploiting the application of micro and nanofluidics (Nicholls et al. 2012; Gad-El-Hak 2006; Singh et al. 2008; Prasianakis and Ansumali 2011).

In the framework of continuum modelling the microscopic mechanics tends to be neglected and the microscopic effects are usually represented through averaged quantities such as viscosity and thermal conductivity (Sofos et al. 2009; Liu et al. 2007; Priezjev 2007). Therefore, as the operational dimensions are downsized to smaller scales, where the surface properties dominate the flow characteristics, the macroscopic constitutive relations and boundary conditions become inadequate and microscopic models, such as molecular dynamics (MD), have to be employed. Various MD studies have been presented in the literature with respect to effects of surface properties, such as wettability and nanoscale roughness and the slip generated in solid-fluid interfaces (Thompson and Troian 1997; Asproulis and Drikakis 2010; Priezjev et al. 2005; Yang 2006; Asproulis and Drikakis 2010; Niavarani and Priezjev 2010; Asproulis and Drikakis 2011; Nagayama and Cheng 2004; Sofos et al. 2012). However, the applicability of atomistic models to larger temporal and spatial scales is restricted due to their high computational cost (Asproulis et al. 2012; Asproulis and Drikakis 2009; Lorenz et al. 2010; Valentini and Schwartzentruber 2009; Koishi et al. 2005; Plimpton 1995). Aiming to confront the efficiency and accuracy limitations of the molecular and continuum models, respectively, and provide a unified description across the various scales, hybrid continuum-molecular approaches have been developed (Liu et al. 2007; O’connell and Thompson 1995; Hadjiconstantinou and Patera 1997; Delgado-Buscalioni and Coveney 2003; Werder et al. 2005; Ren and Weinan 2005; Delgado-Buscalioni et al. 2008; Lei et al. 2010).

The multiscale methods in the explicit hybrid atomistic continuum context can be broadly classified as geometrical coupling (domain decomposition) and embedded coupling (Karniadakis et al. 2005; Kalweit and Drikakis 2008; Garcia-Cervera et al. 2008). The geometrical coupling methods (O’Connell and Thompson 1995; Delgado-Buscalioni and Coveney 2004; Hadjiconstantinou 1999; Nie et al. 2004; Kalweit and Drikakis 2008; Fedosov and Karniadakis 2009; Delgado-Buscalioni et al. 2009; Lei et al. 2011; Borg et al. 2010) embrace a domain decomposition approach for separating the spatial scales, exploiting the fact that although the continuum equations may fail to describe the phenomena in a particular area, they are still valid in large regions of the domain. Hence, the application of the computationally intensive molecular solver is confined to a small region and the rest of the computational domain is covered by the less computationally intensive continuum solver. In the embedded methods introduced by Ren and Weinan (2005) as an application of the generic heterogeneous multiscale method (HMM) (Weinan et al. 2007), the entire domain is resolved by the macroscopic model and the microscopic solver is used as a local refinement technique to provide data required by the continuum description (Ren and Weinan 2005; Karniadakis et al. 2005; Asproulis et al. 2009). The main difference between the two coupling approaches, geometric and embedded, lies in the treatment of the molecular solver. In the former, atomistic simulations are utilised to model certain regions that have been explicitly defined, whereas in the latter, microscopic simulations are performed locally as high-order resolution to enhance the accuracy of the continuum solver.
In the embedded frameworks the atomistic simulations are employed to provide accurate boundary conditions, such as slip velocity or temperature jumps; to substitute constitutive relations; and to calculate transport properties, such as viscosity, thermal conductivity or accommodation coefficients. The embedded multiscale methods are ideal for cases where the characteristic time scales of the flow phenomena are large compared with the microscopic time scales and, therefore, from the molecular perspective the flow is in a quasi-steady state during the continuum time steps. Additionally, in the embedded framework the employed atomistic regions do not have direct communication; instead, they exchange information with the continuum model and establish an indirect communication with each other through the macroscopic solver. The same concept applies to the equation-free approach (EFA) (Kevrekidis et al. 2004) where the patches of the atomistic simulations communicate through temporal and spatial extrapolated equations. The objective of EFA is to bridge the time and length scales and predict the macroscale dynamics by performing only microscopic simulations. The advantage of EFA is that it circumvents the need for a closed analytical description of the macroscale systems. Recently, a seamless coupling scheme for speeding up the multiscale process by circumventing the need for re-initialising the microscopic solver at every continuum time step has been proposed (Weinan et al. 2009).

One of the most challenging tasks in the development of hybrid atomistic-continuum methods is the transfer of information from the continuum description to the atomistic system (Kalweit and Drikakis 2008; Delgado-Buscalioni 2012; Hadjiconstantinou 2005; Steijl and Barakos 2010). The main difficulty lies in the disparity between the degrees of freedom modelled by the atomistic and continuum models. In the geometrical coupling there are two main approaches regarding the boundary condition transfer (BCT):

- The coupling through fluxes (Delgado-Buscalioni and Coveney 2004; Flekkoy et al. 2005; Wagner and Flekkoy 2004; Ren 2007), where the momentum, mass or energy should be able to flow seamlessly from one description to the other and vice versa.

- The coupling through state (Werder et al. 2005; Hadjiconstantinou 2005; Hadjiconstantinou 1999; Koumoutsakos 2005; Bugel et al. 2011), where the profiles of the primitive variables such as density, temperature and velocity must be consistent between the two descriptions.

The selection of the most suitable coupling method is a non-trivial task and is primarily problem dependent. Compressible and incompressible formulations are associated with different physical and mathematical hydrodynamic limits (Hadjiconstantinou 2005; Ren 2007; Hadjiconstantinou 2006). Compressibility can be a criterion for determining the most suitable coupling approach, with time-explicit coupling through fluxes usually used for compressible formulations and state coupling for incompressible ones (Hadjiconstantinou 2005). In the vast majority of the geometrical coupling techniques the time scales of the macroscopic and microscopic descriptions are fully coupled. Therefore, the overall simulated time is limited to the time scales accessible to the microscale solver. A time decoupling approach, applicable only for steady-state cases under the state coupling scheme, has been proposed by Hadjiconstantinou and Patera (1997) utilising the Schwarz alternating method (Werder et al. 2005; Hadjiconstantinou 1999). Furthermore, in the multiscale frameworks the size of the overlapping region, where the exchange of information between the continuum and molecular descriptions takes place, has a large impact on the convergence of the macroscopic solver (Kalweit and Drikakis 2008; Kotsalis et al. 2007; Yen et al. 2007). Kotsalis et al. (2009) proposed a control algorithm that has been applied to liquid water and monoatomic liquids and eliminates any spurious density fluctuations introduced by the coupling procedure.

In the embedded formulations the information transferred from the continuum description to the molecular solver is the macroscopic state, meaning the continuum density, velocity and temperature (Ren and Weinan 2005; Mohamed and Mohamad 2010; Ren 2007). The embedded coupling developments lack a unified framework able to expand their application envelope and reach larger temporal and spatial scales. In the current implementations the macroscopic quantities of interest, measured from the atomistic solver, are fed back to the CFD solver at every continuum time step. This procedure leads to repetitive MD simulations of nearly identical states and hence constitutes a major burden for the efficiency of the multiscale scheme. Additionally, the information transferred to the continuum description is subject to fluctuations that can significantly affect the stability and convergence of the macroscopic solver. Generally, simulating almost identical states allows the molecular fluctuations to propagate into the continuum description and, consequently, to dictate the convergence of the overall procedure.

In this paper a new artificial neural network-based coupling (ANN-b-C) is proposed. This method inherits characteristics of the embedded framework and aims to further improve the computational efficiency of the embedded modelling approaches by utilising artificial intelligence techniques such as ANN. The aim of this paper is to provide the basic formulation of the ANN-based method by giving a detailed description of the techniques that can be employed for transferring the continuum information to the molecular domain and the numerical optimisation procedures that are utilised for feeding the information back from the microscopic model to the macroscopic one. This paper is organised as follows: Sect. 2 provides a brief overview of the continuum and molecular models that are employed in this study along with a brief introduction to neural networks. In Sect. 3 the ANN-based methodology and the techniques for exchanging information between the macroscopic and microscopic descriptions are presented. Section 4 presents the results of the numerical investigation for the boundary condition problem and, finally, Sect. 5 summarises the conclusions drawn from the present study.

2 Computational models

2.1 Continuum model

For the scope of the present study the incompressible Navier-Stokes numerical model has been employed:

\[\nabla\cdot\mathbf{u}=0, \tag{1}\]

\[\frac{\partial\mathbf{u}}{\partial t}+\left(\mathbf{u}\cdot\nabla\right)\mathbf{u}=-\frac{1}{\rho}\nabla p+\nu\nabla^{2}\mathbf{u}, \tag{2}\]

where \(\mathbf{u}\) is the velocity field, ρ the density, p the pressure and ν is the kinematic viscosity. The CFD simulations have been performed using a high-resolution parallel solver (Shapiro and Drikakis 2005a, b; Drikakis et al. 1994, 2000). The CFD approach is based on the artificial compressibility method (see Drikakis and Rider 2004 and references therein), characteristics-based schemes up to third-order accurate (Drikakis et al. 1994) and third-order in time explicit Runge-Kutta schemes (Drikakis et al. 1994, 2000) (also Drikakis and Rider 2004 and references therein).

2.2 Atomistic model

For the atomistic model MD simulations are employed. The governing equations for MD are Newton’s equations of motion

\[m_{i}\ddot{\mathbf{r}}_{i}=-\frac{\partial V_{i}}{\partial\mathbf{r}_{i}} \tag{3}\]

for each atom i. The atoms are modelled as mass points with position \(\mathbf{r}_i\) and mass \(m_i\). Each atom’s potential energy \(V_i\) is the sum of semi-empirical analytical functions that model the real interatomic forces. The atomic trajectories are calculated by time integration of Eq. 3 for all atoms. The time integration is performed by a finite difference method such as the predictor-corrector method or the Verlet algorithm (Verlet 1967; Allen and Tildesley 1987). Despite the apparent simplicity, the simulations are very computationally demanding due to the huge number of atoms involved, even for small systems. Only with the use of modern parallel computers can MD simulations of several millions of atoms (Sutmann 2002) be performed.
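
The time integration described above can be illustrated with a minimal Python sketch of the velocity form of the Verlet algorithm. It is an illustration only and not the LAMMPS implementation used in this study: the function name `velocity_verlet`, the one-dimensional setting and the harmonic test force are assumptions made for brevity.

```python
def velocity_verlet(x, v, m, force, dt, n_steps):
    """Advance 1D positions x and velocities v (lists) with velocity Verlet.

    force(x) must return the list of forces acting on each particle.
    """
    x, v = list(x), list(v)
    f = force(x)
    for _ in range(n_steps):
        # Half-step velocity update with the current forces.
        v = [vi + 0.5 * dt * fi / mi for vi, fi, mi in zip(v, f, m)]
        # Full-step position update.
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        # Recompute forces at the new positions and finish the velocity update.
        f = force(x)
        v = [vi + 0.5 * dt * fi / mi for vi, fi, mi in zip(v, f, m)]
    return x, v


def harmonic_force(x):
    # Hypothetical test force F = -x (unit spring constant), not an MD potential.
    return [-xi for xi in x]


x_end, v_end = velocity_verlet([1.0], [0.0], [1.0], harmonic_force,
                               dt=0.01, n_steps=1000)
# Total energy of the test oscillator; it should stay close to its initial value 0.5.
energy = 0.5 * v_end[0] ** 2 + 0.5 * x_end[0] ** 2
```

In an MD code the `force` callable would evaluate the gradient of the interatomic potential in Eq. 3 for all atoms; the integrator itself is unchanged.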

In the test cases examined in the current study the interatomic interactions of the particles are modelled by the shifted Lennard-Jones (LJ) 6–12 potential (Hadjiconstantinou 1999). The molecular simulations of the current study have been performed using the LAMMPS software (Plimpton 1995). Additional details of the parametrisation of the atomistic models, such as the interaction parameters, the time step and the number of time steps employed, are case specific and are therefore provided in the description of the test cases.

2.3 Artificial neural networks

The development of artificial neural networks began more than 50 years ago and was motivated by a desire to understand and mimic the human brain and intelligence. Specifically, neural networks were first introduced in 1943 by McCulloch and Pitts (1990; Krose and Smagt 1996). Since then, neural networks have found applications in a number of disciplines across science and engineering such as electrical engineering, signal and speech processing, medicine, pattern recognition, business and applied mathematics. Their main advantage is their ability to model problems where the relationships among certain variables are not explicitly known (Fausett 1994).

The neural networks are information processing systems that have certain performance characteristics in common with the biological neurons. The artificial neural networks have been developed as mathematical models based on the following assumptions:

- The processing of information occurs in many elements called neurons.

- There are connection links for passing the signals between the neurons.

- All the connection links have a corresponding weight.

- The output is determined by an activation function which is applied at each neuron. The activation function adds nonlinearity to the network.

The structure of a typical neuron consists of two parts: the net function and the activation function. The net function determines how the inputs are combined inside the neuron while the activation function determines the output of the neuron. The activation functions are essential parts of the neural network because they introduce nonlinearity to the network (Fig. 1). Without the activation functions the neural networks are not capable of representing nonlinear relationships between inputs and outputs. Another important element of the neural networks is the training algorithm. The term training characterises the entire procedure that determines the values of a neural network’s weights. This procedure is not unique and is crucial for the behaviour of the network. Generally two types of training can be identified, supervised and unsupervised. In the scope of the current study supervised training, and specifically the back-propagation algorithm, is employed.
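
The two parts of a neuron described above can be sketched in a few lines of Python. The weighted-sum net function and the sigmoid activation are common choices assumed here for illustration; the text does not state which functions are used in the study, and all names are hypothetical.

```python
import math


def net_function(inputs, weights, bias):
    # Combine the inputs inside the neuron as a weighted sum plus a bias.
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def sigmoid(z):
    # Nonlinear activation mapping any real number into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    # "No activation": the neuron output stays linear in its inputs.
    return z


def neuron_output(inputs, weights, bias, activation=sigmoid):
    return activation(net_function(inputs, weights, bias))


weights, bias = [0.5, 0.25], 0.0
nonlinear = neuron_output([1.0, 2.0], weights, bias)            # sigmoid(1.0)
linear = neuron_output([1.0, 2.0], weights, bias, identity)     # 1.0
linear_doubled = neuron_output([2.0, 4.0], weights, bias, identity)  # 2.0
```

With the identity in place of the activation, doubling the inputs simply doubles the output, which is the linear behaviour that the activation function is there to break.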

Optimising the architecture of a neural network is a crucial and challenging task. Generally, a neural network’s architecture is directly related to its performance and behaviour, yet there is no theoretical background or systematic methodology for finding this architecture. The traditional methods follow a trial and error process which is time consuming, is based on the developer’s experience and involves a high degree of uncertainty (Benardos and Vosniakos 2007). In the context of hybrid atomistic continuum modelling, an optimisation methodology based on genetic algorithms (GA) that searches over the network’s topology by varying the number of hidden units and layers is employed. A detailed description of the utilised methodology can be found in (Benardos and Vosniakos 2007).

Overfitting is one of the major problems faced during the training procedure of artificial neural networks (Schittenkopf et al. 1997; Huang et al. 2009). In the context of the present study the approach followed to minimise overfitting is twofold: (1) the data available for training are divided into two sets, a training set and a validation set. The training set contains 80% of the data originally available and the validation set the remaining 20%; during training a cross-validation is performed aiming to minimise the error in both sets; (2) during the geometry optimisation procedure, neurons with small variance in the output are penalised and removed, allowing only the most important information to be transmitted.
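
The 80/20 division of the data can be sketched as follows. The random shuffling, the fixed seed and the mean-squared-error measure are assumptions for illustration, since the text does not specify how the sets are drawn or which error measure is monitored.

```python
import random


def split_training_data(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples and split them into training and validation sets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_train = int(round(train_fraction * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]


def mean_squared_error(model, data):
    # Error of a callable model on a list of (input, target) pairs.
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


# Hypothetical data set of 100 (input, target) pairs.
samples = [(0.01 * i, 0.02 * i) for i in range(100)]
train_set, validation_set = split_training_data(samples)

# During training both errors would be monitored; a validation error that
# rises while the training error keeps falling indicates overfitting.
exact_model = lambda x: 2.0 * x
train_error = mean_squared_error(exact_model, train_set)
validation_error = mean_squared_error(exact_model, validation_set)
```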

3 ANN-based coupling

The basic idea of the ANN-based coupling is to perform tailored atomistic simulations that provide the parameters needed to improve the accuracy of the macroscopic solver. In the proposed approach information is transferred in both directions: initially from the continuum to the molecular description and, subsequently, from the atomistic description back to the continuum solver. The atomistic model is constrained by the continuum information to be consistent with the local macroscopic state under the local equilibrium assumption.

In the ANN-b-C the entire domain is covered with the macroscopic solver and the atomistic model enters as a local refinement. Thus, the results from the microscale are embedded in the continuum simulation and in that sense ANN-b-C inherits characteristics of an embedded framework. This scheme naturally decouples the time scale between the atomistic and continuum description.

The types of problems to which ANN-b-C is applied can be classified as follows (Ren and Weinan 2005):

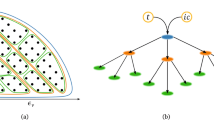

Boundary condition problem: in the majority of the macroscopic simulations the no-slip hypothesis is assumed or, in the case of rarefied gases at high Knudsen numbers, continuum slip models are employed. However, there are cases, such as liquid flow over hydrophobic surfaces, where further molecular-level information is required. For this problem molecular simulations are performed around specific grid points (see Fig. 2) to examine the fluid behaviour in the context of fluid-solid interaction and, consequently, to calculate the appropriate boundary conditions. The MD simulations are constrained through the local continuum state and the slip velocities are calculated by the microscopic simulations and fed back to the continuum solver. The constraints applied to the molecular simulations and the data transferred to the continuum solver may vary depending on the nature of the problem.

Transport coefficient problem: accurate knowledge of transport coefficients such as viscosity, thermal conductivity or accommodation coefficients can significantly improve the quality of the continuum model. When these coefficients are not explicitly known, particle-based methods can be directly applied in order to provide the missing data. For example, in gas slip simulations the values of the accommodation coefficients can significantly affect the amount of slip generated. However, these values can be affected by local conditions and, therefore, there are problems where they have to be evaluated on the fly.

Constitutive relations problem: in the macroscopic simulations the constitutive relations, for example the relation between the stresses and the strain rate, may not be explicitly known. For these cases, molecular simulations can be utilised to calculate the constitutive relations that are needed for the continuum solver. The MD simulations are then performed around specific grid points, constrained through the velocity gradients, and the calculated stresses are fed back to the continuum solver.

3.1 From continuum to molecular

The accuracy and efficiency of multiscale approaches depend to a great extent on the BCT method that constrains the atomistic region to the continuum conditions. The problem of imposing macroscopic conditions on a molecular system is a very challenging task and has not yet been addressed for a general case (Kalweit and Drikakis 2008; Hadjiconstantinou 2005; Drikakis and Asproulis 2010). The main difficulty is the disparity between degrees of freedom modelled by the atomistic and continuum models. The current subsection provides a brief description of the BCT techniques employed under the ANN-based framework. Further investigations regarding the accuracy and efficiency implications of the BCT can be found in (Asproulis et al. 2009).

For the boundary condition problem MD simulations are employed at specific grid points near the walls to model molecular interactions and produce more accurate boundary conditions. The local continuum state, for example velocity, density, pressure and temperature, is applied to the microscopic simulations through the appropriate boundary conditions and then the data calculated from the molecular simulations are fed back to the continuum solver. In general, continuum boundary conditions can be applied not only at the upper boundary but also at the other faces, except the lower one that faces the solid wall. The constrained region plays a twofold role: it ensures that the molecular simulations are consistent with the continuum state and it serves as a particle reservoir for the rest of the molecular domain.

Enforcing the continuum constraints requires altering the properties of the atoms inside the constrained region to match the continuum velocity, \(\mathbf{u}_{\rm{con}},\) and temperature, \(T_{\rm{con}}\). Generally, in the hybrid frameworks two alternative methods are employed for imposing the continuum boundary conditions. The first one is based on periodic re-scaling of the atomic velocities (Liu et al. 2007; Delgado-Buscalioni and Coveney 2003; Delgado-Buscalioni and Coveney 2004; Nie et al. 2004, 2006; De Fabritiis et al. 2006), and the second one on a periodic re-sampling from a velocity distribution function, such as the Maxwell-Boltzmann (Hadjiconstantinou and Patera 1997; Ren and Weinan 2005; Hadjiconstantinou 1999) or the Chapman-Enskog (Garcia and Alder 1998; Schwartzentruber et al. 2007, 2008a, b; Wijesinghe et al. 2004; Wijesinghe and Hadjiconstantinou 2004) distribution. For the scope of the current study re-scaling techniques are employed, and more details on the applicability of each of the two methods can be found in (Drikakis and Asproulis 2010) and (Hadjiconstantinou 2005).
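
A minimal sketch of one possible re-scaling step is given below. It shifts the mean velocity of the atoms in the constrained region to the continuum velocity and scales the thermal (peculiar) velocities to the continuum temperature. The reduced units (\(k_B = 1\)), the use of \(3N\) degrees of freedom and all function names are assumptions for illustration; the exact re-scaling procedure of the study is described in the cited references.

```python
import math
import random


def mean_velocity(velocities):
    n = len(velocities)
    return [sum(v[k] for v in velocities) / n for k in range(3)]


def temperature(velocities, mass=1.0, k_b=1.0):
    # Kinetic temperature from the thermal velocities, assuming 3N degrees of freedom.
    mean = mean_velocity(velocities)
    kinetic = sum(mass * (v[k] - mean[k]) ** 2 for v in velocities for k in range(3))
    return kinetic / (3.0 * len(velocities) * k_b)


def rescale_velocities(velocities, u_con, t_con, mass=1.0, k_b=1.0):
    """Return velocities with mean u_con and kinetic temperature t_con."""
    mean = mean_velocity(velocities)
    t_now = temperature(velocities, mass, k_b)
    scale = math.sqrt(t_con / t_now) if t_now > 0.0 else 1.0
    # Scale the thermal part and add the imposed continuum velocity.
    return [[u_con[k] + scale * (v[k] - mean[k]) for k in range(3)]
            for v in velocities]


# Hypothetical constrained region with 200 atoms and arbitrary initial velocities.
rng = random.Random(1)
atoms = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(200)]
constrained = rescale_velocities(atoms, u_con=[0.5, 0.0, 0.0], t_con=1.1)
```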

3.2 From molecular to continuum

The transfer of information from the molecular to the continuum description, although less complicated compared to the reverse procedure, is crucial for the efficiency and accuracy of the hybrid scheme. In atomistic simulations the calculation of macroscopic variables is performed through averaging the corresponding microscopic properties. Thus, the information transferred to the continuum description is subject to fluctuations in space and time. The fluctuations introduced can affect the stability and convergence of the continuum solver; however, this is a problem that primarily arises in the geometrical decomposition approach. For ANN-b-C the fluctuations can be reduced simply by increasing the number of atoms and/or the number of time steps from which the respective quantity is calculated. This is achieved by increasing the volume of the cell and the overall simulation time for which the calculations are performed.
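
As a rough guide to how strongly averaging suppresses the noise, assume (as an idealisation, not a result of the present study) that the velocity samples are statistically independent and drawn from an equilibrium Maxwell-Boltzmann distribution. The standard deviation of a velocity component averaged over \(N\) atoms and \(M\) uncorrelated time samples is then

\[\sigma_{\bar{u}}=\sqrt{\frac{k_{B}T}{m\,N\,M}},\]

so halving the statistical error requires four times as many atoms or samples. Correlated samples reduce the effective value of \(M\).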

In the current implementation of the embedded frameworks, molecular simulations are performed at every time step of the continuum solver. The macroscopic quantities of interest are measured from the MD simulations and fed back to the CFD solver, where they are used to advance the solution forward in time. This basic procedure leads to repetitive MD simulations of nearly identical states and, thus, a more sophisticated algorithm that utilises already performed MD simulations is employed. In the proposed coupling framework two optimisation procedures have been developed: (1) the linear optimisation and (2) the neural network optimisation.

3.2.1 Linear optimisation

For simplicity, consider an example where the MD simulation of the flow at the boundary has to be performed for a specified density, \(\rho_{\rm{con}}\), and velocity, \(u_{\rm{con}}\). The slip velocity as a function of \(\rho_{\rm{con}}\) and \(u_{\rm{con}}\), i.e. \(u_{\rm{slip}}\left(u_{\rm{con}},\rho_{\rm{con}}\right)\), is fed back to the continuum solver. In the linear optimisation, instead of performing atomistic simulations for every data set required by the continuum solver, the macroscopic variables are discretised based on an initial value, \(u_{\rm{in}}\), \(\rho_{\rm{in}}\), and an interval, \(\delta u\), \(\delta\rho\). Therefore, when a set \(\left(u_{\rm{con}},\rho_{\rm{con}}\right)\) is given as an input, the discrete sets \(\left(u_{\rm{in}}+m\delta u,\ \rho_{\rm{in}}+n\delta\rho\right),\) \(\left(u_{\rm{in}}+\left(m+1\right)\delta u,\ \rho_{\rm{in}}+n\delta\rho\right),\,\left(u_{\rm{in}}+m\delta u,\ \rho_{\rm{in}}+\left(n+1\right)\delta\rho\right),\) and \(\left(u_{\rm{in}}+\left(m+1\right)\delta u,\ \rho_{\rm{in}}+\left(n+1\right)\delta\rho\right)\) are identified, where \(u_{\rm{in}}+m\delta u<u_{\rm{con}}<u_{\rm{in}}+\left(m+1\right)\delta u\) and \(\rho_{\rm{in}}+n\delta\rho<\rho_{\rm{con}}<\rho_{\rm{in}}+\left(n+1\right)\delta\rho\) and \({m,n\ \in {\mathbb{Z}}}\) (see Algorithm 1). Molecular simulations are performed for the four data sets and the outcomes for the input \(\left(u_{\rm{con}},\rho_{\rm{con}}\right)\) are calculated through a bilinear interpolation. The calculated molecular data are stored and reused if a later input falls in the same or an adjacent interval. Therefore, as the simulation evolves, fewer new MD simulations have to be performed. Furthermore, the total number of atomistic simulations can be tuned through the \(\delta u\), \(\delta\rho\) parameters according to the simulation set-up, the accuracy requirements and the available resources; larger values imply fewer MD simulations.
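
The linear optimisation can be sketched in Python as a cached bilinear interpolation. This is an illustration under stated assumptions rather than the paper's Algorithm 1: the class name `LinearOptimiser`, the `run_md` callable standing in for an MD simulation, and the numerical values are all hypothetical.

```python
import math


class LinearOptimiser:
    """Bilinear interpolation of MD outputs on a (u, rho) grid with a library."""

    def __init__(self, run_md, u_in, rho_in, du, drho):
        self.run_md = run_md          # callable (u, rho) -> slip velocity
        self.u_in, self.rho_in = u_in, rho_in
        self.du, self.drho = du, drho
        self.library = {}             # (m, n) -> stored MD output
        self.md_calls = 0

    def _corner(self, m, n):
        # Run an MD simulation only if this grid point is not yet stored.
        if (m, n) not in self.library:
            u = self.u_in + m * self.du
            rho = self.rho_in + n * self.drho
            self.library[(m, n)] = self.run_md(u, rho)
            self.md_calls += 1
        return self.library[(m, n)]

    def slip_velocity(self, u_con, rho_con):
        # Integer indices of the grid cell that contains the continuum input.
        m = math.floor((u_con - self.u_in) / self.du)
        n = math.floor((rho_con - self.rho_in) / self.drho)
        # Normalised coordinates of the input inside the cell, in [0, 1).
        s = (u_con - (self.u_in + m * self.du)) / self.du
        t = (rho_con - (self.rho_in + n * self.drho)) / self.drho
        f00, f10 = self._corner(m, n), self._corner(m + 1, n)
        f01, f11 = self._corner(m, n + 1), self._corner(m + 1, n + 1)
        return ((1 - s) * (1 - t) * f00 + s * (1 - t) * f10
                + (1 - s) * t * f01 + s * t * f11)


# Hypothetical linear surrogate used in place of a real MD simulation.
fake_md = lambda u, rho: 0.1 * u + 0.02 * rho
optimiser = LinearOptimiser(fake_md, u_in=0.0, rho_in=0.8, du=0.1, drho=0.01)

first = optimiser.slip_velocity(0.25, 0.805)    # four new MD runs
calls_after_first = optimiser.md_calls
second = optimiser.slip_velocity(0.27, 0.803)   # same cell, library reused
calls_after_second = optimiser.md_calls
third = optimiser.slip_velocity(0.35, 0.805)    # adjacent cell, two new MD runs
calls_after_third = optimiser.md_calls
```

Keying the library on the integer pair (m, n) rather than on floating-point coordinates avoids spurious cache misses caused by rounding.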

3.2.2 Neural network optimisation

In the NN optimisation, instead of predefining the input sets for the atomistic simulations, the parameters \(x_{\rm{in}}\) and \(\delta x\) are utilised to define a confidence interval around the input data. If any library data are inside this confidence interval then the output is based on the library data; otherwise, atomistic simulations are performed for the exact continuum input set. For example, assume a continuum input \(x_{\rm{in}}\) and a parameter \(\delta x\), based on which a library search is performed for data \(x_{\rm{lib}}\) with \(x_{\rm{in}}-\delta x<x_{\rm{lib}}<x_{\rm{in}}+\delta x\). If data that fulfil this requirement are found, then the atomistic outputs are estimated based on neural networks trained with the library’s information. In the event that the information transferred from the continuum is not within the library’s confidence limits, MD simulations are performed, the outputs are stored in the library, and the neural networks are retrained to accommodate the new information and are then used to provide the atomistic output (see Algorithm 2).

Depending on the efficiency and accuracy requirements, the \(\delta x_i\) parameters that determine the confidence intervals can be adapted. Smaller values imply a higher number of atomistic simulations and, therefore, more data available for obtaining statistical averages and for training and testing the neural networks. On the other hand, larger values of \(\delta x\) mean that fewer MD simulations will be performed and more atomistic simulations will be substituted by neural networks, contributing to the reduction of the computational workload. Another parameter that can be defined based on the problem’s requirements is the acceptable minimum number of library data that should be included in the input’s confidence intervals. As this number increases, more atomistic simulations will be carried out, aiming to enhance the problem’s computational accuracy.
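
The library search described above can be sketched for a single input variable as follows. This is a hedged illustration, not the paper's Algorithm 2: the class `LibraryCoupler`, the `min_hits` parameter name and the `run_md` callable are hypothetical, and a least-squares straight line is swapped in for the trained neural network purely to keep the example self-contained.

```python
def fit_surrogate(library):
    """Stand-in for ANN training: least-squares line through the library data."""
    n = len(library)
    x_mean = sum(x for x, _ in library) / n
    y_mean = sum(y for _, y in library) / n
    var = sum((x - x_mean) ** 2 for x, _ in library)
    if var == 0.0:
        return lambda x: y_mean       # a single state: constant model
    slope = sum((x - x_mean) * (y - y_mean) for x, y in library) / var
    return lambda x: y_mean + slope * (x - x_mean)


class LibraryCoupler:
    def __init__(self, run_md, fit, delta_x, min_hits=1):
        self.run_md, self.fit = run_md, fit
        self.delta_x, self.min_hits = delta_x, min_hits
        self.library = []             # stored (input, MD output) pairs
        self.model = None
        self.md_calls = 0

    def query(self, x_in):
        # Library entries inside the confidence interval around the input.
        hits = [x for x, _ in self.library if abs(x - x_in) < self.delta_x]
        if len(hits) < self.min_hits:
            # Not enough nearby data: run MD, store the result and retrain.
            self.library.append((x_in, self.run_md(x_in)))
            self.md_calls += 1
            self.model = self.fit(self.library)
        # The output always passes through the trained model.
        return self.model(x_in)


# Hypothetical surrogate used in place of a real MD simulation.
fake_md = lambda x: 2.0 * x + 1.0
coupler = LibraryCoupler(fake_md, fit_surrogate, delta_x=0.05)
outputs = [coupler.query(x) for x in (1.0, 1.02, 1.5, 1.48)]
```

Only the first and third queries trigger an MD run; the second and fourth fall inside the confidence interval of a stored state and are answered from the model alone. Raising `min_hits` forces more MD runs before the model is trusted.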

The main advantages of the neural network optimisation procedure are its flexibility to encompass an additional number of continuum input parameters, its simplicity of implementation and its robustness. All data provided from the atomistic description to the continuum solver are transferred through the neural networks, which act as filters that suppress the inherent fluctuations of the molecular data. The aim of the neural network optimisation is twofold: (1) to reuse already performed simulations of similar states and thus minimise the overall computational cost and (2) to minimise any instabilities induced in the macroscopic description by the propagation of atomistic fluctuations towards the continuum solver.

4 Hybrid studies for the boundary condition problem

4.1 Slip Couette flow

The flow of a fluid inside a micro or nanochannel can be significantly influenced by liquid slip conditions at the solid boundary. There are fundamental open questions regarding the applicability of no-slip boundary conditions. The conditions under which the no-slip boundary assumption becomes inaccurate and the relationship of stress and strain rate becomes nonlinear are not known from first principles (Nagayama and Cheng 2004; Sofos et al. 2012; Gad-El-Hak 2005).

In the current example, the number of particles generated in the microscopic domain is defined from the continuum density and their velocities are initialised through a Maxwell-Boltzmann distribution based on the continuum temperature. The macroscopic velocity is imposed through the upper boundary of the molecular domain, in a reservoir region with height h = 4σ, and by utilising velocity rescaling. The simulations are assumed to be isothermal and, therefore, since there is no need to exchange temperature information, the temperature in the entire molecular region is controlled through a Langevin thermostat. The molecular simulations are employed at the beginning of every continuum time step to calculate the slip velocity in the solid–liquid interface, which is transferred to the continuum solver through the velocity boundary conditions.
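
The initialisation step can be sketched as below, using the density \(\rho = 0.81\,m\sigma^{-3}\) and temperature \(T = 1.1\,\epsilon k_B^{-1}\) quoted later in this section and, as an assumption for illustration, a \(10\times10\times10\,\sigma^3\) box. Reduced LJ units (\(m = k_B = 1\)) are assumed, the function name is hypothetical, and the placement of atoms in space is omitted.

```python
import math
import random


def initialise_atoms(number_density, box, temperature, mass=1.0, k_b=1.0, seed=0):
    """Return the atom count and Maxwell-Boltzmann velocities for a box."""
    rng = random.Random(seed)
    lx, ly, lz = box
    # Number of atoms follows from the continuum density and the box volume.
    n_atoms = int(round(number_density * lx * ly * lz))
    # Each velocity component is Gaussian with variance k_B T / m.
    sigma_v = math.sqrt(k_b * temperature / mass)
    velocities = [[rng.gauss(0.0, sigma_v) for _ in range(3)]
                  for _ in range(n_atoms)]
    return n_atoms, velocities


n_atoms, velocities = initialise_atoms(0.81, (10.0, 10.0, 10.0), 1.1)
# Sample temperature of the generated velocities (mass = k_B = 1).
t_sample = sum(c * c for v in velocities for c in v) / (3.0 * n_atoms)
```

The sample temperature only approximates the target because of the finite number of atoms, which is one reason a thermostat or re-scaling step is applied afterwards.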

The solid wall is modelled as two immobile planes of a (111) fcc lattice. The solid surface orientation, along with the orientation of the flow, has a major influence on the total amount of slip (Soong et al. 2007) that is generated due to the nanoscale roughness arising from the arrangement of the wall atoms. For the current test case the (111) fcc plane is employed to minimise the atomic surface roughness and consequently maximise the slip at the boundary.

The shifted Lennard-Jones (LJ) 6–12 potential, with cut-off distance \(r_{c}=2.2\,\sigma\), is employed to model the inter-atomic interactions of the wall and fluid particles. The fluid’s density and temperature are \(\rho=0.81\,m\sigma^{-3}\) and \(T=1.1\,\epsilon k_{B}^{-1},\) respectively. The wall-fluid interactions are also modelled by the LJ potential with energy \(\epsilon_{wf}\) and length scale \(\sigma_{wf}\). The parameters used in the simulations are summarised in Table 1.
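
For reference, a truncated-and-shifted LJ 6–12 potential can be written as in the sketch below. Interpreting "shifted" as subtracting the value at the cut-off, so that the energy is zero at \(r_c\), is an assumption here, as are the function name and the default parameters in reduced units.

```python
def shifted_lj(r, epsilon=1.0, sigma=1.0, r_cut=2.2):
    """LJ 6-12 energy shifted to zero at r_cut (same length units as r)."""
    if r >= r_cut:
        return 0.0

    def lj(d):
        sr6 = (sigma / d) ** 6
        return 4.0 * epsilon * (sr6 * sr6 - sr6)

    # Subtract the cut-off value so the potential is continuous at r_cut.
    return lj(r) - lj(r_cut)


r_min = 2.0 ** (1.0 / 6.0)            # location of the LJ minimum for sigma = 1
well_depth = shifted_lj(r_min)        # slightly shallower than -epsilon
beyond_cut = shifted_lj(3.0)          # 0.0 outside the cut-off
```

For the wall-fluid interaction the same function would be called with \(\epsilon_{wf}\) and \(\sigma_{wf}\) in place of the defaults.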

The first set of parameters is used for creating no-slip boundary conditions and the other two correspond to slip boundary conditions (Thompson and Troian 1997). The heat exchange is controlled by a Langevin thermostat with a random uncorrelated force and a friction term \(\Gamma=1.0\,\tau^{-1},\) where τ is the characteristic time \(\tau=(m\sigma^{2}/\epsilon)^{1/2}\) (Sofos et al. 2009; Thompson and Troian 1997; Sofos et al. 2012; Yen et al. 2007). The thermostat is only applied in the z direction to avoid any undesirable influences in the flow direction.

4.1.1 Hybrid Couette flow

An important parameter for the realistic behaviour of the hybrid methods is the size of the molecular domain, which has to be sufficiently large to capture the physics of the problem. In order to explore the influence of the molecular domain size, a number of MD simulations have been performed with different domain sizes, with the slip length \(L_{s}=\frac{u_{s}}{\left(\frac{\partial u}{\partial n}\right)_{w}}\) used as the validation criterion. MD simulations were performed in four domains with different heights \(\Uplambda=5,\ 10,\ 15\) and \(20\,\sigma\) and dimensions in the x − z plane of \(10\times10\,\sigma^{2}.\) The height \(\Uplambda\) refers to the size of the molecular domain in the direction y normal to the wall. The variation of the slip length, for various shear rates, with the height of the molecular domain is shown in Fig. 3.
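As an illustration of how the slip length might be extracted from a binned MD velocity profile, the sketch below fits a straight line to the profile and extrapolates it to the wall. The least-squares fit and the helper name `slip_length` are assumptions made for this example; the text does not specify the fitting procedure.

```python
def slip_length(y, u, y_wall=0.0, u_wall=0.0):
    """Return (slip velocity, slip length) from a linear fit of u(y)."""
    n = len(y)
    y_mean = sum(y) / n
    u_mean = sum(u) / n
    sxx = sum((yi - y_mean) ** 2 for yi in y)
    sxy = sum((yi - y_mean) * (ui - u_mean) for yi, ui in zip(y, u))
    shear = sxy / sxx  # fitted velocity gradient normal to the wall
    # Fluid velocity extrapolated to the wall, relative to the wall velocity
    u_s = u_mean + shear * (y_wall - y_mean) - u_wall
    return u_s, u_s / shear
```

For a profile `u = 0.2 + 0.1 y` above an immobile wall at `y = 0`, this returns a slip velocity of 0.2 and a slip length of 2.0 (in reduced units).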

For heights less than 10 σ, the slip length is underestimated and for heights larger than 10 σ the mean value of the slip length for different shear rates exhibits small differences. The results are in good agreement with Yen et al. (2007), where for channel heights larger than 10 σ the MD results were consistent with the continuum assumptions. Since the molecular domain should be as small as possible to reduce the overall computational cost, a height of 10 σ has been selected for the atomistic region.

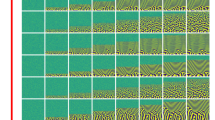

Previous MD studies (Sofos et al. 2009; Thompson and Troian 1997; Sofos et al. 2012; Giannakopoulos et al. 2012) have identified that the degree of slip at the boundary depends on a number of parameters, including the strength of the solid–liquid interaction, the thermal roughness of the interface and the ratio of wall and liquid density. To investigate the effects of the solid–fluid interaction strength, hybrid simulations of Couette flows have been performed. In Fig. 4 the velocity profiles for a channel with height H = 50 σ are presented for three different sets of parameters of the solid–liquid interaction. The time step for the continuum solver was equal to 10τ and the time step of 0.005τ was used in the microscopic solver.

The results obtained from the multiscale procedure are in good agreement with those obtained from other hybrid methods based on domain decomposition (Wang and He 2007) and with those obtained from full MD simulations (Thompson and Troian 1997), where the maximum deviation for the slip velocity ranges from 0 to 24% of the upper wall velocity \(u_{\rm{wall}}\) for the no-slip and the slip boundary conditions, respectively.

4.1.2 Linear optimisation

In the above Couette flow case MD simulations were performed at the start of every continuum time step. As a consequence, molecular simulations have been carried out for almost identical continuum inputs, resulting in an increased computational cost without any accompanying gain in accuracy. In addition to the computational burden, simulating nearly identical continuum states may contribute to the transfer of intrinsic molecular fluctuations to the continuum solver, consequently affecting its stability and convergence. Figure 6 shows the history of the root mean square (RMS) velocity residual. The fluctuations of the velocity values reveal the presence of the molecular solver. These fluctuations occur due to the hybrid boundary condition applied at the lower wall; however, the overall convergence trend in these cases is not greatly affected.

Aiming to minimise the computational cost of the hybrid simulations and reduce the residual oscillations, a numerical optimisation procedure has been employed. The input to the molecular solver, in the current test case, is essentially the continuum velocity of the first cell above the lower wall and the output is the slip velocity at the solid–liquid interface. In the numerical optimisation procedure MD simulations were performed with \({u_{\rm{in}}^{\rm{con}}}=0.0\ \sigma/\tau\) and \(\delta u^{\rm{con}}=0.1\ \sigma/\tau.\) The simulations have been carried out for channel height H = 50 σ.

Figure 5 shows the velocity profiles as calculated with and without the optimisation procedure. The outcomes from both cases are in good agreement. Near the lower wall small deviations are observed, mainly due to the inherent fluctuations of the molecular solver. One of the advantages of the numerical optimisation is the reduction of oscillations in the data transferred to the continuum domain. This can be identified in Fig. 6, where the magnitude and frequency of the fluctuations have been suppressed. The linear optimisation offers a significant enhancement of the stability and convergence of the continuum solver. The optimisation is dependent on the value of δu. Specifically, in cases where δu is very small or \(\delta u \rightarrow 0\) the advantages of the linear optimisation are eliminated. Although the linear optimisation prevents the propagation of instabilities towards the continuum side, it neither takes into account the oscillating nature of the atomistic outputs nor provides statistically averaged data. To circumvent these problems the discretisation parameters should be selected cautiously and, in cases where small values of δu have to be employed, more sophisticated interpolation techniques with smoothing capabilities should be adopted.
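A minimal sketch of such a library-based linear interpolation is given below. It assumes that the continuum input is bracketed by two discretised nodes \(u_{\rm{in}}+m\delta u\) and \(u_{\rm{in}}+(m+1)\delta u\), that MD is run only for nodes not yet stored, and that the output is interpolated linearly between them. The class name `LinearLibrary` and the callable `run_md` are hypothetical stand-ins for the actual molecular solver.

```python
import math

class LinearLibrary:
    def __init__(self, run_md, u_in=0.0, du=0.1):
        self.run_md = run_md   # hypothetical: continuum velocity -> slip velocity
        self.u_in, self.du = u_in, du
        self.library = {}      # node index m -> stored MD output
        self.md_calls = 0

    def _node(self, m):
        # Run MD only if this discretised input has not been simulated before
        if m not in self.library:
            self.library[m] = self.run_md(self.u_in + m * self.du)
            self.md_calls += 1
        return self.library[m]

    def slip_velocity(self, u_con):
        m = math.floor((u_con - self.u_in) / self.du)
        lo, hi = self._node(m), self._node(m + 1)
        w = (u_con - (self.u_in + m * self.du)) / self.du  # weight in [0, 1)
        return (1.0 - w) * lo + w * hi
```

Successive continuum inputs that fall in the same bracket reuse the two stored nodes, so no further MD simulations are triggered until the input leaves that interval.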

4.1.3 ANN optimisation

The ANN optimisation has also been employed to study the slip Couette flow case. In the current example one neural network has been used with one input, the continuum velocity, one output, the slip velocity, and two hidden layers with 3 neurons each. The hybrid simulations have been performed for two confidence intervals \(\delta u= 10^{-3},\ 5\times10^{-3}.\) The confidence intervals determine to some extent the number of atomistic simulations that are performed. In the current case, 75 and 50 MD simulations have been performed for \(\delta u=10^{-3}\) and \(\delta u=5\times10^{-3},\) respectively. The differences in the outputs for the two confidence intervals are less than 1%, showing the consistency of the method along with the predictive abilities of the neural networks, which can produce consistent outputs even when trained with different amounts of data. Table 2 shows the root mean square differences (RMSD) between velocity outputs, for various values of δu, as produced by hybrid simulations with ANN optimisation with \(\delta u=10^{-3}\) and outcomes produced with linear optimisation. The results are generally in good agreement; however, the outcomes produced from the linear optimisation tend to underestimate the slip velocity. The main reason for that behaviour is the lack of statistical smoothing and averaging of the atomistic outputs in the linear optimisation. Linear optimisation takes into account only the outputs for specific inputs and not the averaged output values for adjacent input data. Figure 6 shows the velocity residual for all the cases examined; the spike noticed in the ANN optimisation is primarily due to a change in the architecture of the neural network.
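For reference, a root mean square difference between two sets of velocity outputs can be computed as in the sketch below. The function name `rmsd` is illustrative, and the sketch assumes that both sets are sampled at the same points; any normalisation used for Table 2 is not specified in the text and is therefore not applied.

```python
import math

def rmsd(a, b):
    """Root mean square difference between two equally sized samples."""
    if len(a) != len(b):
        raise ValueError("samples must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))
```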

4.2 Heat transfer in Couette flow

The heat transfer in Couette flow with slip boundary conditions has also been investigated. Coupled simulations are employed near the bottom wall to provide adequate information regarding the slip’s magnitude. Slip is not only dependent on the local shear rate and the interfacial interaction parameters between the solid and the fluid but also on the local temperature. Therefore, in the hybrid set-up the information transferred from the continuum to the molecular description includes the local velocity, density and temperature. Additionally, the hybrid simulations will be able to model and capture the temperature jumps noticed near the thermal walls.

In the continuum description the heat transfer is described through the following equation (Liu et al. 2007)

where \(c_{u}\) is the specific heat, λ is the thermal conductivity and μ is the dynamic viscosity. The CFL number employed in the continuum solver is 0.25.
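For orientation, a standard form of the energy equation for an incompressible Newtonian fluid with constant properties and viscous dissipation, written with the symbols defined above, is sketched below. This is the textbook form and is an assumption here; it is not necessarily the exact expression used in the cited reference.

```latex
\rho c_{u}\left(\frac{\partial T}{\partial t} + \mathbf{u}\cdot\nabla T\right)
  = \lambda \nabla^{2} T
  + \frac{\mu}{2}\sum_{i,j}\left(\frac{\partial u_{i}}{\partial x_{j}}
  + \frac{\partial u_{j}}{\partial x_{i}}\right)^{2}
```

The last term is the viscous dissipation, which for simple shear \(u=u(y)\) reduces to \(\mu\,(du/dy)^{2}\).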

4.2.1 Hybrid simulations

For the current case the size of the molecular domain is 12 σ in the x and y directions and 4 σ in the z direction. The upper region of height 2 σ (10 σ < y < 12 σ) is used as a reservoir for the application of the continuum conditions to the atomistic description. In this region the particles’ velocities are rescaled every 100 molecular time steps to match the continuum temperature and velocity values. A reflective plane is placed parallel to the solid wall, along the x-axis, at the top of the domain to prevent any particles from leaving the simulation box. The solid wall is modelled as two planes of a (111) fcc lattice and its particles are allowed to oscillate around their lattice sites under a harmonic potential with stiffness \(\kappa=400\ \epsilon\sigma^{-2}.\) The particle velocities at each wall plane are rescaled independently through a velocity rescaling thermostat to temperature \(T=1.1\ \epsilon k_{B}^{-1}.\) In the remaining molecular region no other thermostat is applied and the heat generated during the simulations is dissipated through the thermal wall and the buffer region.
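The reservoir rescaling can be sketched as follows. The sketch assumes equal particle masses, reduced units, and a kinetic temperature defined over 3N degrees of freedom from the velocities relative to the mean; the function name `rescale_reservoir` is hypothetical.

```python
import math

def rescale_reservoir(vel, u_target, t_target, mass=1.0, k_b=1.0):
    """Rescale reservoir velocities to a target mean velocity and temperature."""
    n = len(vel)
    mean = [sum(v[d] for v in vel) / n for d in range(3)]
    # Thermal (peculiar) velocities relative to the current mean flow
    pec = [[v[d] - mean[d] for d in range(3)] for v in vel]
    kinetic = sum(mass * sum(c * c for c in p) for p in pec)
    t_now = kinetic / (3.0 * n * k_b)
    if t_now == 0.0:
        raise ValueError("cannot rescale a reservoir with zero temperature")
    scale = math.sqrt(t_target / t_now)
    # Impose the continuum velocity and the rescaled thermal motion
    return [[u_target[d] + scale * p[d] for d in range(3)] for p in pec]
```

In a hybrid loop this would be called on the reservoir particles once every 100 molecular time steps, with `u_target` and `t_target` taken from the continuum solver.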

The fluid’s density is \(\rho=0.81\,m\sigma^{-3},\) resulting in 1,760 fluid particles including the ones in the buffer region. The density employed for the wall is \(\rho=4.0\,m\sigma^{-3},\) corresponding to 470 solid particles. The interaction parameters for the wall/fluid interface are \(\epsilon_{wf}=0.6 \epsilon,\ \sigma_{wf}=0.75 \sigma,\) which correspond (as described in Sect. 4) to apparent slip. The fluid’s viscosity is \(\mu=2.08\ \epsilon \tau \sigma^{-3}, \) the thermal conductivity is \(\lambda=7.7\,k_{B}(\sigma\tau)^{-1}\) and the specific heat is \(c_{u}=2.43\,k_{B}/m\) (Liu et al. 2007).

Hybrid simulations are carried out with molecular modelling being employed near the lower wall, aiming to provide accurate boundary conditions for the slip velocities and the temperature jumps. In particular, the size of the continuum domain is H = 100 σ with the upper wall moving with velocity \(U_{\rm{wall}}=2.0\ \sigma/\tau\) at temperature \(T_{\rm{wall}}=1.3\ \epsilon k_{B}^{-1}.\) MD simulations are performed every continuum time step around the lower grid point for \(10^{6}\) time steps and the temperature jumps and slip velocities are mapped back to the continuum solver.

Figure 7 shows the velocity and temperature profiles across the channel. A linear velocity profile is noticed with apparent slip near the lower wall. The slip’s magnitude, for the current shear rate, surface orientation, wall-fluid interactions and surface stiffness, is in perfect agreement with previous molecular studies (Priezjev 2007). A parabolic profile for the temperature is noticed due to the flow of heat generated by viscous dissipation (Liu et al. 2007).
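The parabolic shape can be made explicit with a short worked derivation. It assumes steady, fully developed flow with constant properties, \(u=u(y)\) and \(T=T(y)\), so that conduction balances viscous dissipation; the shear rate \(\dot{\gamma}\) and the integration constants \(C_{1}, C_{2}\) are notation introduced here for illustration.

```latex
\lambda \frac{d^{2}T}{dy^{2}} = -\mu\left(\frac{du}{dy}\right)^{2} = -\mu\dot{\gamma}^{2}
\quad\Longrightarrow\quad
T(y) = -\frac{\mu\dot{\gamma}^{2}}{2\lambda}\,y^{2} + C_{1}\,y + C_{2}
```

The constants follow from the thermal conditions at the two walls, including any temperature jump supplied by the molecular solver at the lower wall.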

In Fig. 8 the velocity and temperature residuals of the continuum solver are shown. It is observed that as the simulation evolves the residuals fluctuate between \(10^{-2}\) and \(10^{-3}\) for the velocity and around \(10^{-3}\) for the temperature; this is due to the inherent fluctuations of the molecular information transferred. Small changes in the continuum inputs produce atomistic outputs that oscillate around a mean value. The fluctuating nature of the molecular results prevents the continuum solver from achieving acceptable convergence. Therefore, a numerical optimisation procedure is engaged to minimise the fluctuations of the data transferred from the atomistic solver to the continuum. The goal of this optimisation procedure is twofold: (1) to reduce the fluctuation amplitude of the atomistic information transferred; and (2) to optimise the efficiency of the entire simulation procedure by minimising the number of molecular simulations performed.

In the present case the linear optimisation procedure, as described in Sect. 3 and applied in the previous test cases, cannot be applied directly since the number of inputs and outputs has been increased. The aforementioned procedure can be extended for one additional input; however, it is not straightforward to generalise it to accommodate multi-dimensional inputs and outputs. Furthermore, even if the number of input parameters is two, as in the current case, the complexity of implementation for the linear optimisation procedure increases significantly and the computational benefits are not apparent. For example, in the case studied here, after the discretisation of the input variables \(u_{\rm{con}},\ T_{\rm{con}}\) the following four input sets are generated: \(\left(u_{\rm{in}}+m\delta u,\ T_{\rm{in}}+n\delta T\right),\,\left(u_{\rm{in}}+\left(m+1\right)\delta u,\ T_{\rm{in}}+n\delta T\right),\,\left(u_{\rm{in}}+m\delta u,\ T_{\rm{in}}+\left(n+1\right)\delta T\right),\) and \(\left(u_{\rm{in}}+\left(m+1\right)\delta u, \ T_{\rm{in}}+\left(n+1\right)\delta T\right),\) where \(u_{\rm{in}}+m\delta u<u_{\rm{con}}<u_{\rm{in}}+\left(m+1\right)\delta u\) and \(T_{\rm{in}}+n\delta T<T_{\rm{con}}<T_{\rm{in}}+\left(n+1\right)\delta T\) and \({m,n\ \in{\mathbb{Z}}.}\) Through this procedure, if none of the input sets has been previously calculated then 4 MD simulations have to be performed. Additionally, the four input sets lead to a combination of 16 input states, where 0, 1, 2, 3 or 4 atomistic simulations are required. This increases the computational cost and the complexity of the algorithm that searches the library data. Therefore, aiming to overcome these difficulties, the neural network optimisation procedure is engaged.
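The bookkeeping described above can be sketched as follows. The helper names are hypothetical, the library is assumed to be keyed by the integer pair (m, n), and inputs falling exactly on a node are not treated specially.

```python
import math
from itertools import product

def bracket_nodes(u_con, t_con, u_in, t_in, du, dt):
    """Indices (m, n) of the four discretised input sets around (u_con, t_con)."""
    m = math.floor((u_con - u_in) / du)
    n = math.floor((t_con - t_in) / dt)
    return [(m, n), (m + 1, n), (m, n + 1), (m + 1, n + 1)]

def md_runs_needed(nodes, library):
    """Number of the given nodes that are missing from the library."""
    return sum(1 for node in nodes if node not in library)

def enumerate_states():
    """Count the 2**4 stored/missing combinations by MD simulations required."""
    counts = {}
    for stored in product([False, True], repeat=4):
        missing = 4 - sum(stored)
        counts[missing] = counts.get(missing, 0) + 1
    return counts
```

`enumerate_states()` confirms that the four nodes give 16 stored/missing combinations, requiring between 0 and 4 MD simulations.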

4.2.2 ANN optimisation

In the ANN optimisation, instead of predefining the input sets for the atomistic simulations through the parameters \(x_{\rm{in}}\) and δx (where x is any continuum input), we define a confidence interval around every new input. If any library data are inside this confidence interval then the output is based on the library data; otherwise, atomistic simulations are performed for the exact continuum input set. For example, in this case a continuum input \(u_{\rm{in}}\) and a parameter δu are defined. A search is performed in the library for data \(u_{\rm{lib}}\) that belong to the interval \(u_{\rm{in}}-\delta u<u_{\rm{lib}}<u_{\rm{in}}+\delta u.\) If any data fulfil this requirement then the atomistic outputs are estimated based on neural networks trained with the library’s information. In the event that none of the library data belong to the confidence interval of the continuum input, the following steps are performed: (1) an atomistic simulation is executed for the exact continuum inputs; (2) the atomistic outputs are stored in the library; (3) the neural networks are re-trained and updated to accommodate the new data; and (4) the neural nets are utilised to provide the microscopic outputs. The molecular outputs are always estimated through neural networks, aiming to utilise the networks’ smoothing abilities and provide data devoid of large fluctuations that may introduce instabilities in the continuum solver. Figure 8 shows the RMS values of the velocity and temperature residuals as calculated from the hybrid simulations (1) with the atomistic data fed back directly to the continuum solver and (2) with the atomistic data fed back through a neural net.
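The control flow of this procedure can be sketched for a single input as follows. The names `ConfidenceLibrary`, `run_md` and `fit` are hypothetical; in particular, `fit_line` is a simple least-squares line used here only as a stand-in for the neural network training, which is not reproduced.

```python
def fit_line(xs, ys):
    """Stand-in surrogate (NOT a neural network): least-squares straight line."""
    n = len(xs)
    xm, ym = sum(xs) / n, sum(ys) / n
    sxx = sum((x - xm) ** 2 for x in xs)
    sxy = sum((x - xm) * (y - ym) for x, y in zip(xs, ys))
    slope = 0.0 if sxx == 0.0 else sxy / sxx
    return lambda x: ym + slope * (x - xm)

class ConfidenceLibrary:
    def __init__(self, run_md, fit, delta):
        self.run_md = run_md   # hypothetical: continuum input -> atomistic output
        self.fit = fit         # hypothetical: (inputs, outputs) -> predictor
        self.delta = delta     # half-width of the confidence interval
        self.inputs, self.outputs = [], []
        self.model = None
        self.md_calls = 0

    def query(self, u_con):
        covered = any(abs(u_con - u_lib) < self.delta for u_lib in self.inputs)
        if not covered:
            out = self.run_md(u_con)       # (1) MD for the exact continuum input
            self.inputs.append(u_con)      # (2) store the result in the library
            self.outputs.append(out)
            self.md_calls += 1
            self.model = self.fit(self.inputs, self.outputs)  # (3) re-train
        return self.model(u_con)           # (4) output always from the surrogate
```

Because the returned value always comes from the fitted surrogate rather than from the raw MD output, the smoothing role described in the text is preserved in the structure of the loop, even though the surrogate here is much simpler than a neural network.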

The velocity residual for the direct coupling case constantly fluctuates and its minimum value is of the order of \(10^{-3}.\) These fluctuations originate from the molecular solver and represent the fluctuating values of the slip velocity as it is calculated from similar continuum inputs; this is more easily seen when a logarithmic scale is employed on the y axis (see inset in Fig. 8). The application of neural networks suppresses the strength of the fluctuations and permits the continuum solver to achieve residuals of the order of \(10^{-5}.\) Specifically, the residual initially decreases smoothly and afterwards oscillations are noticed, primarily due to the continuous changes in the network’s parameters every time that a molecular output is generated. The same behaviour is also noticed for the temperature residual.

Figure 9 shows the slip velocity data transferred to the continuum solver, as calculated by MD with and without the application of neural networks. This figure shows the smoothing of data achieved with the neural nets, and provides better insight into why neural network optimisation contributes to the elimination of numerical instabilities and artefacts induced in the continuum solver. As the confidence limit increases the neural nets’ outputs are based on fewer data and, therefore, small deviations are observed. Although minimising the number of molecular simulations contributes to the reduction of the computational cost, it implies that fewer data will be utilised for estimating the fluctuating average of the atomistic simulations.

Figure 8 shows the velocity and temperature residuals compared to those obtained from the neural network optimisation with \(\delta u= 5\times10^{-3}.\) In this case, the convergence of the simulation is noticeably faster compared to the extreme case where \(\delta u \rightarrow 0\) and the neural networks are updated every time step.

Hybrid simulations have been performed for a number of different confidence intervals (δu, δT) spanning from \(\delta u=\delta T=10^{-4}\) to \(\delta u=\delta T=0.1.\) Smaller values of the confidence intervals imply that a larger number of MD simulations will be performed, generating a larger amount of data for the training procedure. Therefore, the neural nets would be able to reduce any uncertainties associated with the oscillating nature of the atomistic outputs. The overall computational cost is dictated by the atomistic simulations.

Figure 10 shows the number of MD simulations as a function of δu = δT. As the confidence limit increases, the number of atomistic simulations decreases in a non-linear manner. For the example studied here, for \(\delta u=\delta T=10^{-4}\) a total of 114 MD simulations are performed and for \(\delta u=\delta T=10^{-1}\) the number of molecular simulations is reduced to 8.

Figure 11 shows the root mean square deviation of the atomistic outputs compared with the one obtained with \(\delta u=10^{-4}.\) The atomistic outcomes produced for different confidence limits are generally in good agreement and, primarily for \(\delta u\le10^{-2},\) the differences are less than 5%.

4.2.3 Neural network architectures

In the present example, for every case two neural networks have been used, each with two inputs, one output and two hidden layers. Both neural networks take as inputs the continuum velocity and temperature: the first one calculates the slip velocity and the second one the temperature jump. Potentially, instead of two separate networks we could have engaged one with two inputs and two outputs. However, here the choice of two separate networks performs better in terms of accuracy. Furthermore, this offers flexibility and minimises the risk of generating numerical artefacts due to inappropriate training of the network. For the training of the networks 75% of the data produced from the atomistic solver have been used, while the remaining 25% have been used for validation purposes.
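A 75%/25% partition of the library data could be implemented as in the sketch below. The random shuffling, the fixed seed and the function name `split_library` are assumptions made for illustration; the text does not state how the training and validation samples were selected.

```python
import random

def split_library(samples, train_fraction=0.75, seed=0):
    """Split library samples into training and validation subsets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)  # assumption: samples are assigned at random
    n_train = int(round(train_fraction * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]
```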

Tables 3 and 4 show the neural architectures that have been created in hybrid simulations under different values for the confidence intervals δu. Specifically, Table 3 summarises the neural networks used for estimating slip velocities and Table 4 those used for estimating temperature jumps. In the first column of both tables the various confidence intervals are shown, in the second one the number of neurons in the first hidden layer, and in the third one the number of neurons in the second hidden layer. Although the potential maximum number of neurons at each hidden layer is 31, it is noted that none of the hidden layers of the neural networks has more than 12 neurons. This fact shows the ability of ANNs with fairly simple architectures to model the relationships between the continuum inputs and molecular outputs. The advantages of the ANN will be more apparent in multi-parametric cases, where the molecular outputs depend upon a larger number of continuum inputs.

5 Concluding summary

A new multiscale methodology that aims to accommodate larger temporal and length scales and minimise the impact of the atomistic solver was presented. In the literature, several computational frameworks have been proposed for modelling flows in multiple scales. These frameworks specify the information that has to be exchanged between the continuum and molecular solvers and facilitate the communication process. However, their applicability to complex fluid flow scenarios is limited by the computational complexity of the proposed algorithms, primarily due to the computational cost of the microscale solver, which is still dominant.

Numerical optimisation techniques have been developed to avoid the repetition of computationally demanding atomistic simulations for nearly identical continuum inputs. Linear optimisation effectively avoids performing MD simulations for nearly identical continuum states, resulting in a significant reduction of the computational cost. By tuning the interval parameter of the interpolation scheme, for example δu, the number of MD simulations performed can be regulated to balance accuracy, stability and efficiency. Although the linear optimisation prevents the propagation of instabilities towards the continuum side, it neither takes into account the oscillating nature of the atomistic outputs nor provides statistically averaged data. Moreover, it cannot be directly extended to accommodate multiple inputs and outputs. Therefore, a more generic procedure was developed based on neural networks. Compared with the linear optimisation, the neural network optimisation provides extra flexibility to the framework that facilitates the exchange of information between the continuum and molecular regions. The main advantages of the ANN optimisation can be summarised as follows:

- Generic properties: the ANN optimisation can be extended to accommodate any number of input and output parameters.
- Consistency: as illustrated in the previous test cases, there is small variability in the neural networks’ outcomes even in cases where very different confidence limits were employed.
- Efficiency control: through the ANN optimisation the number of MD simulations can be controlled based on the values of the confidence intervals and can be optimised based on the problem’s accuracy and efficiency requirements. Furthermore, the cost of re-training the ANN is crucial for efficiency; for the cases examined, the re-training time is less than 1% of the time required for one atomistic simulation.
- Smoothing properties: the neural networks act as a smoothing operator for reducing the fluctuations in the atomistic outputs.

References

Allen MP, Tildesley DJ (1987) Computer simulation of liquids. Oxford University Press, Oxford

Asproulis N, Drikakis D (2010) Boundary slip dependency on surface stiffness. Phys Rev E 81(6)

Asproulis N, Drikakis D (2009) Nanoscale materials modelling using neural networks. J Comput Theo Nano Sci 6(3):514–518

Asproulis N, Drikakis D (2010) Surface roughness effects in micro and nanofluidic devices. J Comput Theo Nano Sci 7(9):1825–1830

Asproulis N, Drikakis D (2011) Wall-mass effects on hydrodynamic boundary slip. Phys Rev E 84:031504

Asproulis N, Kalweit M, Shapiro E, Drikakis D (2009) Mesoscale flow and heat transfer modelling and its application to liquid and gas flows. J Nanophotonics 3(1):031960–031975

Asproulis N, Kalweit M, Drikakis D (2012) A hybrid molecular continuum method using point wise coupling. Adv Eng Softw 46(1):85–92

Kröse B, van der Smagt P (1996) An introduction to artificial neural networks. The University of Amsterdam, Amsterdam

Benardos PG, Vosniakos G (2007) Optimizing feedforward artificial neural network architecture. Eng Appl Artif Intell 20(3):365–382

Borg MK, MacPherson GB, Reese JM (2010) Controllers for imposing continuum-to-molecular boundary conditions in arbitrary fluid flow geometries. Mol Simul 36(10):745–757

Bugel M, Galliéro G, Caltagirone J (2011) Hybrid atomistic-continuum simulations of fluid flows involving interfaces. Microfluid Nanofluid 10(3):637–647

De Fabritiis G, Delgado-Buscalioni R, Coveney PV (2006) Modelling the mesoscale with molecular specificity. Phys Rev Lett 97:134501

Delgado-Buscalioni R (2012) Tools for multiscale simulation of liquids using open molecular dynamics. Numerical analysis and multiscale computations, series lecture notes computational science and engineering 82:145–166

Delgado-Buscalioni R, Coveney PV (2003) Continuum-particle hybrid coupling for mass, momentum and energy transfers. Phys Rev E 67:046704

Delgado-Buscalioni R, Coveney PV (2003) Usher: an algorithm for particle insertion in dense fluids. J Chem Phys 119:978–987

Delgado-Buscalioni R, Coveney P (2004) Hybrid molecular-continuum fluid dynamics. Phil Trans R Soc Lond A 362:1639–1654

Delgado-Buscalioni R, Kremer K, Praprotnik M (2008) Concurrent triple-scale simulation of molecular liquids. J Chem Phys 128(11)

Delgado-Buscalioni R, Kremer K, Praprotnik M (2009) Coupling atomistic and continuum hydrodynamics through a mesoscopic model: application to liquid water. J Chem Phys 131(24)

Drikakis D, Asproulis N (2010) Multi-scale computational modelling of flow and heat transfer. Int J Numer Meth Heat Fluid Flow 20(5)

Drikakis D, Rider W (2004) High-resolution methods for incompressible and low-speed flows. Springer, New York

Drikakis D, Govatsos PA, Papantonis DE (1994) A characteristic-based method for incompressible flows. Int J Num Meth Fl 19:667–685

Drikakis D, Iliev OP, Vassileva DP (2000) Acceleration of multigrid flow computations through dynamic adaptation of the smoothing procedure. J Comput Phys 165(2):566–591

Fedosov DA, Karniadakis GE (2009) Triple-decker: interfacing atomistic-mesoscopic-continuum flow regimes. J Comput Phys 228(4):1157–1171

Flekkøy EG, Delgado-Buscalioni R, Coveney PV (2005) Flux boundary conditions in particle simulations. Phys Rev E 72(2):1–9

Gad-El-Hak M (2005) Liquids: The holy grail of microfluidic modeling. Phys Fluids 17:100612

Gad-El-Hak M (2006) Gas and liquid transport at the microscale. Heat Tran Eng 27(4):13–29

Garcia AL, Alder BJ (1998) Generation of the Chapman-Enskog distribution. J Comput Phys 140(1):66–70

Garcia-Cervera CJ, Ren W, Lu J, Weinan E (2008) Sequential multiscale modeling using sparse representation. Comm Comp Phys 4(5):1025–1033

Giannakopoulos AE, Sofos F, Karakasidis TE, Liakopoulos A (2012) Unified description of size effects of transport properties of liquids flowing in nanochannels. Int J Heat Mass Tran 55(19–20):5087–5092

Hadjiconstantinou NG (2006) The limits of Navier-Stokes theory and kinetic extensions for describing small-scale gaseous hydrodynamics. Phys Fluids 18(11)

Hadjiconstantinou NG (1999) Hybrid atomistic-continuum formulations and the moving contact-line problem. J Comput Phys 154(2):245–265

Hadjiconstantinou NG (1999) Combining atomistic and continuum simulations of contact-line motion. Phys Rev E 59:2475

Hadjiconstantinou NG (2005) Discussion of recent developments in hybrid atomistic-continuum methods for multiscale hydrodynamics. Bull Pol Acad Sci: Tech Sci 53(4):335–342

Hadjiconstantinou NG, Patera AT (1997) Heterogeneous atomistic-continuum representations for dense fluid systems. Int J Mod Phys C 8(4):967–976

Huang C, Chen L, Chen Y, Chang FM (2009) Evaluating the process of a genetic algorithm to improve the back-propagation network: a Monte Carlo study. Exp Syst Appl 36(2):1459–1465

Kalweit M, Drikakis D (2008) Coupling strategies for hybrid molecular-continuum simulation methods. Proc I Mech Eng Part C J Mech Eng Sci 222(10):797–806

Kalweit M, Drikakis D (2008) Multiscale methods for micro/nano flows and materials. J Comput Theo Nano Sci 5(9):1923–1938

Kamholz AE, Weigl BH, Finlayson BA, Yager P (1999) Quantitative analysis of molecular interaction in a microfluidic channel: the T-sensor. Anal Chem 71(23):5340–5347

Karniadakis G, Beskok A, Aluru N (2005) Microflows and nanoflows: fundamentals and simulation. Springer, New York

Kevrekidis IG, Gear CW, Hummer G (2004) Equation-free: the computer-aided analysis of complex multiscale systems. AIChE J 50(7):1346–1355

Koishi T, Yasuoka K, Ebisuzaki T, Yoo S, Zeng XC (2005) Large-scale molecular-dynamics simulation of nanoscale hydrophobic interaction and nanobubble formation. J Chem Phys 123(20)

Kotsalis EM, Walther JH, Koumoutsakos P (2007) Control of density fluctuations in atomistic-continuum simulations of dense liquids. Phys Rev E 76(1)

Kotsalis EM, Walther JH, Kaxiras E, Koumoutsakos P (2009) Control algorithm for multiscale flow simulations of water. Phys Rev E 79(4)

Koumoutsakos P (2005) Multiscale flow simulations using particles. Ann Rev Fluid Mech 37:457–487

Fausett LV (1994) Fundamentals of neural networks. Prentice Hall, New York

Lei H, Caswell B, Karniadakis GE (2010) Direct construction of mesoscopic models from microscopic simulations. Phys Rev E 81(2)

Lei H, Fedosov DA, Karniadakis GE (2011) Time-dependent and outflow boundary conditions for dissipative particle dynamics. J Comput Phys 230(10):3765–3779

Liu J, Chen S, Nie X, Robbins MO (2007) A continuum-atomistic simulation of heat transfer in micro- and nano-flows. J Comput Phys 227(1):279–291

Lorenz CD, Chandross M, Grest GS (2010) Large scale molecular dynamics simulations of vapor phase lubrication for MEMS. J Adhes Sci Technol 24(15–16):2453–2469

McClain MA, Culbertson CT, Jacobson SC, Allbritton NL, Sims CE, Ramsey JM (2003) Microfluidic devices for the high-throughput chemical analysis of cells. Anal Chem 75(21):5646–5655

McCulloch WS, Pitts W (1990) A logical calculus of the ideas immanent in nervous activity. Bull Math Biol 52(1–2):99–115

Mohamed KM, Mohamad AA (2010) A review of the development of hybrid atomistic-continuum methods for dense fluids. Microfluid Nanofluid 8(3):283–302

Nagayama G, Cheng P (2004) Effects of interface wettability on microscale flow by molecular dynamics simulation. Int J Heat Mass Tran 47(3):501–513

Niavarani A, Priezjev NV (2010) Modeling the combined effect of surface roughness and shear rate on slip flow of simple fluids. Phys Rev E 81(1)

Nicholls W, Borg M, Lockerby D, Reese J (2012) Water transport through (7,7) carbon nanotubes of different lengths using molecular dynamics. Microfluid Nanofluid 12:257–264. doi:10.1007/s10404-011-0869-3

Nie XB, Chen SY, Weinan E, Robbins MO (2004) A continuum and molecular dynamics hybrid method for micro- and nano-fluid flow. J Fluid Mech (500):55–64

Nie X, Robbins MO, Chen S (2006) Resolving singular forces in cavity flow: multiscale modeling from atomic to millimeter scales. Phys Rev Lett 96(13):1–4

O’Connell ST, Thompson PA (1995) Molecular dynamics-continuum hybrid computations: a tool for studying complex fluid flows. Phys Rev E 52(6):R5792–R5795

Plimpton S (1995) Fast parallel algorithms for short-range molecular dynamics. J Comput Phys 117:1–19

Prasianakis N, Ansumali S (2011) Microflow simulations via the lattice Boltzmann method. Commun Comput Phys 9(5):1128–1136

Priezjev NV (2007) Effect of surface roughness on rate-dependent slip in simple fluids. J Chem Phys 127(14):144708

Priezjev NV, Darhuber AA, Troian SM (2005) Slip behavior in liquid films on surfaces of patterned wettability: comparison between continuum and molecular dynamics simulations. Phys Rev E 71(4)

Ren W (2007) Analytical and numerical study of coupled atomistic-continuum methods for fluids. J Comput Phys 227(2):1353–1371

Ren W (2007) Seamless multiscale modeling of complex fluids using fiber bundle dynamics. Commun Math Sci 5(4):1027–1037

Ren W, Weinan E (2005) Heterogeneous multiscale method for the modeling of complex fluids and micro-fluidics. J Comput Phys 204(1):1–26

Schittenkopf C, Deco G, Brauer W (1997) Two strategies to avoid overfitting in feedforward networks. Neural Networks 10(3):505–516

Schwartzentruber TE, Scalabrin LC, Boyd ID (2007) A modular particle-continuum numerical method for hypersonic non-equilibrium gas flows. J Comput Phys 225(1):1159–1174

Schwartzentruber TE, Scalabrin LC, Boyd ID (2008a) Hybrid particle-continuum simulations of hypersonic flow over a hollow-cylinder-flare geometry. AIAA J 46(8)

Schwartzentruber TE, Scalabrin LC, Boyd ID (2008b) Hybrid particle-continuum simulations of nonequilibrium hypersonic blunt-body flowfields. J Thermophys Heat Transf 22(1):29–37

Shapiro E, Drikakis D (2005a) Artificial compressibility, characteristics-based schemes for variable density, incompressible, multi-species flows. Part I. Derivation of different formulations and constant density limit. J Comput Phys 210(2):584–607

Shapiro E, Drikakis D (2005b) Artificial compressibility, characteristics-based schemes for variable-density, incompressible, multispecies flows: Part II. Multigrid implementation and numerical tests. J Comput Phys 210(2):608–631

Singh SP, Nithiarasu P, Eng PF, Lewis RW, Arnold AK (2008) An implicit-explicit solution method for electro-osmotic flow through three-dimensional micro-channels. Int J Numer Meth Eng 73(8):1137–1152

Sofos F, Karakasidis T, Liakopoulos A (2009) Transport properties of liquid argon in krypton nanochannels: anisotropy and non-homogeneity introduced by the solid walls. Int J Heat Mass Trans 52(3–4):735–743

Sofos F, Karakasidis TE, Liakopoulos A (2012) Surface wettability effects on flow in rough wall nanochannels. Microfluid Nanofluid 12(1–4):25–31

Soong CY, Yen TH, Tzeng PY (2007) Molecular dynamics simulation of nanochannel flows with effects of wall lattice-fluid interactions. Phys Rev E 76(3):036303

Steijl R, Barakos GN (2010) Coupled Navier-Stokes-molecular dynamics simulations using a multi-physics flow simulation framework. Int J Numer Meth Fluids 62(10):1081–1106

Sutmann G (2002) Classical molecular dynamics. In: Quantum simulations of complex many-body systems: from theory to algorithms, lecture notes. John von Neumann Institute for Computing, Juelich, NIC Series, vol 10, pp 211–254

Thompson PA, Troian SM (1997) A general boundary condition for liquid flow at solid surfaces. Nature 389(6649):360–362

Valentini P, Schwartzentruber TE (2009) Large-scale molecular dynamics simulations of normal shock waves in dilute argon. Phys Fluids 21(6)

Verlet L (1967) Computer “experiments” on classical fluids. I. Thermodynamical properties of Lennard-Jones molecules. Phys Rev 159:98–103

Wagner G, Flekkøy EG (2004) Hybrid computations with flux exchange. Philos Trans: Math Phys Eng Sci (Series A) 362(1821):1655–1665

Wang Y, He G (2007) A dynamic coupling model for hybrid atomistic-continuum computations. Chem Eng Sci 62(13):3574–3579

Weinan E, Engquist B, Li X, Ren W, Vanden-Eijnden E (2007) Heterogeneous multiscale methods: a review. Commun Comput Phys 2(3):367–450

Weinan E, Ren W, Vanden-Eijnden E (2009) A general strategy for designing seamless multiscale methods. J Comput Phys 228(15):5437–5453

Werder T, Walther J, Koumoutsakos P (2005) Hybrid atomistic-continuum method for the simulation of dense fluid flows. J Comput Phys 205:373–390

Wijesinghe HS, Hadjiconstantinou NG (2004) A hybrid atomistic-continuum formulation for unsteady, viscous, incompressible flows. CMES 5(6):515–526

Wijesinghe HS, Hornung RD, Garcia AL, Hadjiconstantinou NG (2004) Three-dimensional hybrid continuum-atomistic simulations for multiscale hydrodynamics. J Fluids Eng 126(5):768–777

Yang SC (2006) Effects of surface roughness and interface wettability on nanoscale flow in a nanochannel. Microfluid Nanofluid 2(6):501–511

Yen TH, Soong CY, Tzeng PY (2007) Hybrid molecular dynamics-continuum simulation for nano/mesoscale channel flows. Microfluid Nanofluid 3(6):665–675

Cite this article

Asproulis, N., Drikakis, D. An artificial neural network-based multiscale method for hybrid atomistic-continuum simulations. Microfluid Nanofluid 15, 559–574 (2013). https://doi.org/10.1007/s10404-013-1154-4

DOI: https://doi.org/10.1007/s10404-013-1154-4