Abstract

In this paper, an efficient and accurate computational method based on the Chebyshev wavelets (CWs) together with the spectral Galerkin method is proposed for solving a class of nonlinear multi-order fractional differential equations (NMFDEs). To do this, a new operational matrix of fractional order integration in the Riemann–Liouville sense for the CWs is derived. Hat functions (HFs) and the collocation method are employed to derive a general procedure for forming this matrix. By using the CWs, their operational matrix of fractional order integration and the Galerkin method, the problems under consideration are transformed into corresponding systems of nonlinear algebraic equations, which can be easily solved. Moreover, a new technique for computing nonlinear terms in such problems is presented. Convergence of the CWs expansion in one dimension is investigated. Furthermore, the efficiency and accuracy of the proposed method are shown on some concrete examples. The obtained results reveal that the proposed method is very accurate and efficient. As a useful application, the proposed method is applied to obtain an approximate solution for the fractional order Van der Pol oscillator (VPO) equation.



1 Introduction

In recent years, fractional calculus and fractional differential equations have found numerous applications in mathematics, physics, chemistry and engineering, because fractional calculus makes it possible to model realistically physical phenomena that depend not only on the current time instant but also on the previous time history. The applications of fractional calculus have been demonstrated by many authors. For example, it has been applied to model the nonlinear oscillation of earthquakes [21], fluid-dynamic traffic [22], the frequency-dependent damping behavior of many viscoelastic materials [4], continuum and statistical mechanics [38], colored noise [39], solid mechanics [48], economics [5], signal processing [44] and control theory [7]. During the last decade, fractional calculus has attracted increasing attention from physicists and mathematicians. Owing to these growing applications, several schemes have been proposed for solving fractional differential equations. The most frequently used methods are the Adomian decomposition method (ADM) [16, 41, 42], the homotopy perturbation method [50], the homotopy analysis method [20], the variational iteration method (VIM) [14, 51], the fractional differential transform method (FDTM) [1, 2, 13, 17, 18], the fractional difference method (FDM) [40], the power series method [43], the generalized block pulse operational matrix method [35] and the Laplace transform method [45]. More recently, operational matrices of fractional order integration have been developed for the Haar wavelets [36], the Legendre wavelets [23,24,25, 29, 30, 47] and the Chebyshev wavelets of the first kind [26,27,28, 37] and of the second kind [53] to solve fractional differential equations.

A common way to solve functional equations is to express the solution as a linear combination of so-called basis functions. These basis functions can, for instance, be orthogonal or non-orthogonal. Approximation by orthogonal families of basis functions has found wide application in science and engineering [30]. The main idea of using an orthogonal basis is that the problem under consideration is reduced to a system of linear or nonlinear algebraic equations [30,31,32]. This is done by expanding the solution of the problem in a truncated series of orthogonal basis functions and using the operational matrices [30].

Depending on their structure, orthogonal functions may be broadly classified into four families [49]. The first includes sets of piecewise constant orthogonal functions such as the Walsh functions, block pulse functions, etc. The second consists of sets of orthogonal polynomials such as the Laguerre, Legendre and Chebyshev polynomials. The third is the widely used set of sine–cosine functions in Fourier series. The fourth includes orthogonal wavelets such as the Haar, Legendre, Chebyshev and CAS wavelets. It is well known that any smooth function can be approximated by the eigenfunctions of certain singular Sturm–Liouville problems, such as the Legendre or Chebyshev orthogonal polynomials. In this manner, the truncation error approaches zero faster than any negative power of the number of basis functions used in the approximation [8]. This phenomenon is usually referred to as ‘spectral accuracy’ [8]. It is worth noting that approximating a continuous function with piecewise constant basis functions results in an approximation that is piecewise constant. On the other hand, if a discontinuous function is approximated with continuous basis functions, the resulting approximation is continuous and cannot properly model the discontinuities. In remote sensing, images often have properties that vary continuously in some regions and discontinuously in others. Thus, in order to properly approximate these spatially varying properties, it is necessary to use approximating functions that can accurately model both continuous and discontinuous phenomena. Therefore, neither continuous basis functions nor piecewise constant basis functions taken alone can efficiently or accurately model these spatially varying properties. In such situations, wavelet functions are more effective. Wavelet theory is a relatively new and emerging area of mathematical research, and it has been applied in a wide range of engineering disciplines.
Wavelets are localized functions which form bases for energy-bounded functions and, in particular, for \(L^{2}(\mathbb {R})\), so that localized pulse problems can be easily approached and analyzed [10,11,12]. They are used in system analysis, optimal control, numerical analysis, signal analysis for waveform representation and segmentation, time-frequency analysis and fast algorithms for easy implementation. Wavelets are thus a further class of basis functions that offer considerable advantages over the alternatives and allow us to attack problems that are not accessible with conventional numerical methods. Other advantages of these basis functions are that the basis set can be improved in a systematic way, different resolutions can be used in different regions of space, the coupling between different resolution levels is easy, there are few topological constraints for increased resolution regions, the Laplace operator is diagonally dominant in an appropriate wavelet basis, the matrix elements of the Laplace operator are very easy to calculate and the numerical effort scales linearly with respect to the system size [30]. The CWs, as a specific kind of wavelets, combine spectral accuracy, orthogonality and the other useful properties of wavelets mentioned above. Therefore, in recent years, these orthogonal basis functions have been widely applied to solve different types of differential equations, e.g. [26,27,28, 33, 37, 49]. It is also worth noting that the Chebyshev polynomials form a suitable family of orthogonal basis functions for obtaining approximate solutions of fractional functional equations, because the integrals (3) and (5) can be easily computed for them and the useful property (6) can also be applied.
Therefore, since the CWs share the properties of the Chebyshev polynomials as well as the properties of wavelets mentioned above, we believe that these basis functions are suitable for obtaining approximate solutions of fractional functional equations.

In this paper, we first derive a new operational matrix of fractional integration in the Riemann–Liouville sense for the CWs and present a general procedure, based on the collocation method and the HFs, for constructing this matrix. Then, we prove a useful theorem about the CWs which will be used later in this paper. Next, a new computational method based on these basis functions together with their operational matrix of fractional integration is proposed for solving the following NMFDE:

with the initial conditions:

\[u^{(i)}(0)=d_{i},\qquad i=0,1,\ldots ,q-1,\]

where \(\gamma _{i}\,(i=1,\ldots ,s)\) are real constant coefficients, \(q-1<\alpha \le q\), and \(0<\alpha _{1}<\alpha _{2}<\ldots<\alpha _{s}<\alpha \) are given real constants. Moreover, \(D_{*}^{\alpha }u(x)\equiv u^{(\alpha )}(x)\) denotes the Caputo fractional derivative of order \(\alpha \) of u(x), which will be described in the next section, the values \(d_{i}\,(i=0,\ldots ,q-1)\) describe the initial state of u(x), G is a known analytic function and f(x) is a given source function. The basic idea of the proposed method is as follows: (i) the NMFDE (1) is converted to a fully integrated form via fractional integration in the Riemann–Liouville sense; (ii) the integrated form of the equation is approximated using a linear combination of the CWs; (iii) finally, the integrated form of the equation is converted to a system of nonlinear algebraic equations by introducing the operational matrix of fractional integration of the CWs.

This paper is organized as follows. In Section 2, some necessary definitions and mathematical preliminaries of fractional calculus are reviewed. In Section 3, the CWs and some of their properties are investigated. In Section 4, the proposed method is described for solving NMFDE (1). In Section 5, the proposed method is applied to several numerical examples. In Section 6, the proposed method is applied to obtain an approximate solution of the fractional order VPO equation. Finally, conclusions are drawn in Section 7.

2 Preliminaries and notations

In this section, we give some necessary definitions and mathematical preliminaries of the fractional calculus theory which are required for establishing our results.

DEFINITION 2.1

A real function \(u(x),~x>0\), is said to be in the space \(C_{\mu },~\mu \in \mathbb {R}\), if there exists a real number \(p~(>\mu )\) such that \(u(x)=x^{p}u_{1}(x)\), where \(u_{1}(x)\in C[0,\infty )\), and it is said to be in the space \(C_{\mu }^{n}\) if \(u^{(n)}\in C_{\mu },~n\in \mathbb {N}\).

DEFINITION 2.2

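The Riemann–Liouville fractional integration operator of order \(\alpha \ge 0\) of a function \(u\in C_{\mu },~\mu \ge -1\), is defined as [46]

\[J^{\alpha }u(x)=\begin{cases}\dfrac{1}{\Gamma (\alpha )}\displaystyle \int _{0}^{x}(x-t)^{\alpha -1}u(t)\,dt, & \alpha >0,\ x>0,\\ u(x), & \alpha =0.\end{cases}\]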

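It has the following properties:

\[J^{\alpha }J^{\beta }u(x)=J^{\alpha +\beta }u(x),\qquad J^{\alpha }J^{\beta }u(x)=J^{\beta }J^{\alpha }u(x),\qquad J^{\alpha }x^{\vartheta }=\frac{\Gamma (\vartheta +1)}{\Gamma (\vartheta +\alpha +1)}x^{\vartheta +\alpha },\]

where \(\alpha ,\,\beta \ge 0\) and \(\vartheta >-1\).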
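The power rule \(J^{\alpha }x^{\vartheta }=\frac{\Gamma (\vartheta +1)}{\Gamma (\vartheta +\alpha +1)}x^{\vartheta +\alpha }\) is what makes polynomial bases convenient here. The following Python sketch (our own illustration; the helper names `rl_integral` and `rl_power` are hypothetical) checks it numerically. The substitution \(s=(x-t)^{\alpha }\) removes the weak singularity of the kernel, giving \(J^{\alpha }u(x)=\frac{1}{\Gamma (\alpha +1)}\int _{0}^{x^{\alpha }}u(x-s^{1/\alpha })\,ds\), which is then evaluated with composite Simpson's rule.

```python
import math


def rl_integral(u, x, alpha, n=2000):
    """Numerical Riemann-Liouville integral (J^alpha u)(x) for alpha > 0, x > 0.

    The substitution s = (x - t)^alpha removes the kernel singularity:
        (J^alpha u)(x) = 1/Gamma(alpha + 1) * int_0^{x^alpha} u(x - s^(1/alpha)) ds,
    and the remaining integral is computed with composite Simpson's rule (n even).
    """
    if n % 2:
        raise ValueError("n must be even for Simpson's rule")
    h = x ** alpha / n
    total = 0.0
    for i in range(n + 1):
        t = max(x - (i * h) ** (1.0 / alpha), 0.0)  # clamp tiny negative round-off
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * u(t)
    return total * h / 3.0 / math.gamma(alpha + 1)


def rl_power(theta, alpha, x):
    """Closed form: J^alpha x^theta = Gamma(theta+1)/Gamma(theta+alpha+1) * x^(theta+alpha)."""
    return math.gamma(theta + 1) / math.gamma(theta + alpha + 1) * x ** (theta + alpha)


if __name__ == "__main__":
    for alpha in (0.5, 1.0, 1.5):
        numeric = rl_integral(lambda t: t ** 2, 1.0, alpha)
        print(f"alpha={alpha}: numeric={numeric:.10f}, closed form={rl_power(2, alpha, 1.0):.10f}")
```

The substitution is a design choice for the sketch: a plain quadrature rule applied directly to \((x-t)^{\alpha -1}\) converges poorly when \(\alpha <1\).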

DEFINITION 2.3

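The fractional derivative operator of order \(\alpha >0 \) in the Caputo sense is defined as [46]

\[D_{*}^{\alpha }u(x)=\begin{cases}\dfrac{1}{\Gamma (n-\alpha )}\displaystyle \int _{0}^{x}(x-t)^{n-\alpha -1}u^{(n)}(t)\,dt, & n-1<\alpha <n,\\ u^{(n)}(x), & \alpha =n,\end{cases}\]

where n is an integer, \(x>0\) and \(u\in C_{-1}^{n}\). The useful relation between the Riemann–Liouville operator and the Caputo operator is given by the following expression:

\[J^{\alpha }D_{*}^{\alpha }u(x)=u(x)-\sum _{k=0}^{n-1}u^{(k)}(0^{+})\frac{x^{k}}{k!},\qquad x>0,\]

where \(n-1<\alpha \le n\), \(n\in \mathbb {N}\), and \(u\in C_{-1}^{n}\). For more details about fractional calculus, see [46].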
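The relation \(J^{\alpha }D_{*}^{\alpha }u(x)=u(x)-\sum _{k=0}^{n-1}u^{(k)}(0^{+})x^{k}/k!\) can be checked exactly on polynomials, because both operators act on monomials through ratios of Gamma functions. The Python sketch below (helper names are hypothetical) stores a polynomial as a dict `{power: coefficient}`, applies \(D_{*}^{\alpha }\) and then \(J^{\alpha }\), and compares the result with the right-hand side, which for a polynomial simply drops the powers below \(n=\lceil \alpha \rceil \).

```python
import math


def caputo_monomial(beta, alpha):
    """D_*^alpha x^beta for a non-negative integer power beta.

    Returns (coefficient, new_power); powers beta < n = ceil(alpha) are
    annihilated because their n-th derivative is zero (new_power is None).
    """
    n = math.ceil(alpha)
    if beta < n:
        return 0.0, None
    return math.gamma(beta + 1) / math.gamma(beta + 1 - alpha), beta - alpha


def rl_monomial(beta, alpha):
    """J^alpha x^beta = Gamma(beta+1)/Gamma(beta+alpha+1) x^(beta+alpha)."""
    return math.gamma(beta + 1) / math.gamma(beta + alpha + 1), beta + alpha


def j_of_caputo(poly, alpha):
    """Apply D_*^alpha, then J^alpha, to a polynomial given as {power: coefficient}."""
    out = {}
    for beta, a in poly.items():
        c1, p1 = caputo_monomial(beta, alpha)
        if p1 is None:
            continue
        c2, p2 = rl_monomial(p1, alpha)
        key = round(p2, 9)  # p2 equals beta up to round-off
        out[key] = out.get(key, 0.0) + a * c1 * c2
    return out


def taylor_remainder(poly, alpha):
    """u(x) - sum_{k<n} u^(k)(0) x^k / k!: for a polynomial, drop the powers below n."""
    n = math.ceil(alpha)
    return {beta: a for beta, a in poly.items() if beta >= n}


if __name__ == "__main__":
    u = {0: 1.0, 1: 2.0, 2: 3.0, 3: -1.0}  # u(x) = 1 + 2x + 3x^2 - x^3
    print(j_of_caputo(u, 1.5))
    print(taylor_remainder(u, 1.5))
```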

3 The CWs and their properties

In this section, we briefly review the CWs and their properties which are used further in this paper.

3.1 Wavelets and the CWs

Wavelets constitute a family of functions constructed from dilation and translation of a single function \(\psi (x)\) called the mother wavelet. When the dilation parameter a and the translation parameter b vary continuously, we have the following family of continuous wavelets [26]:

\[\psi _{a,b}(x)=|a|^{-\frac{1}{2}}\psi \left( \frac{x-b}{a}\right) ,\qquad a,b\in \mathbb {R},\ a\ne 0.\]

If we restrict the parameters a and b to the discrete values \(a=a_{0}^{-k}\), \(b=nb_{0}a_{0}^{-k}\), where \(a_{0}>1\) and \(b_{0}>0\), we obtain the following family of discrete wavelets:

\[\psi _{kn}(x)=|a_{0}|^{\frac{k}{2}}\psi \left( a_{0}^{k}x-nb_{0}\right) ,\]

where the functions \(\psi _{kn}(x)\) form a wavelet basis for \(L^{2}(\mathbb {R})\). In particular, for a suitably chosen mother wavelet with \(a_{0}=2\) and \(b_{0}=1\), the functions \(\psi _{kn}(x)\) form an orthonormal basis.

The CWs \(\psi _{nm}(x)=\psi (k,n,m,x)\) have four arguments: \(n=1,2,\ldots ,2^{k}\), where k can be any positive integer; m, the degree of the Chebyshev polynomials; and the independent variable x, defined on [0, 1]. They are defined on the interval [0, 1] by

where

Here, \(T_{m}(x)\) are the well-known Chebyshev polynomials of degree m, which are orthogonal with respect to the weight function \(w(x)=\frac{1}{\sqrt{1-x^{2}}}\) on the interval \([-1,1]\) and satisfy the following recursive relation [8]:

\[T_{0}(x)=1,\qquad T_{1}(x)=x,\qquad T_{m+1}(x)=2xT_{m}(x)-T_{m-1}(x),\quad m=1,2,\ldots .\]

The set of the CWs is an orthogonal set with respect to the weight function \(w_{n}(x)=w(2^{k+1}x-2n+1)\).
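As a sanity check of the recurrence \(T_{m+1}(x)=2xT_{m}(x)-T_{m-1}(x)\) and of the orthogonality of the CWs with respect to \(w_{n}\), the Python sketch below evaluates \(T_{m}\) by the recurrence and computes weighted inner products with Gauss–Chebyshev quadrature after mapping each subinterval onto \([-1,1]\) via \(y=2^{k+1}x-2n+1\). It uses the unnormalised shapes \(T_{m}(2^{k+1}x-2n+1)\) on \([(n-1)/2^{k},n/2^{k})\), since the normalisation constant of \(\psi _{nm}\) does not affect orthogonality; all function names are ours.

```python
import math


def chebyshev_t(m, y):
    """T_m(y) from T_0 = 1, T_1 = y, T_{m+1} = 2 y T_m - T_{m-1}."""
    if m == 0:
        return 1.0
    t_prev, t_cur = 1.0, y
    for _ in range(m - 1):
        t_prev, t_cur = t_cur, 2.0 * y * t_cur - t_prev
    return t_cur


def cw_shape(k, n, m, x):
    """Unnormalised CW shape T_m(2^{k+1} x - 2n + 1) on [(n-1)/2^k, n/2^k), zero elsewhere.

    The normalisation constant of psi_nm is omitted (it does not affect orthogonality);
    the last subinterval is taken closed at x = 1.
    """
    inside = (n - 1) / 2 ** k <= x < n / 2 ** k or (n == 2 ** k and x == 1.0)
    return chebyshev_t(m, 2 ** (k + 1) * x - 2 * n + 1) if inside else 0.0


def weighted_inner(k, n1, m1, n2, m2, N=32):
    """Inner product of two CW shapes with weight w_{n1}, by N-point Gauss-Chebyshev quadrature.

    With y = 2^{k+1} x - 2 n1 + 1 we have dx = dy / 2^{k+1}, and
    int_{-1}^{1} f(y) / sqrt(1 - y^2) dy ~ (pi / N) * sum_l f(cos((2l - 1) pi / (2N))).
    """
    total = 0.0
    for l in range(1, N + 1):
        y = math.cos((2 * l - 1) * math.pi / (2 * N))
        x = (y + 2 * n1 - 1) / 2 ** (k + 1)  # quadrature node mapped into subinterval n1
        total += cw_shape(k, n1, m1, x) * cw_shape(k, n2, m2, x)
    return total * math.pi / (N * 2 ** (k + 1))


if __name__ == "__main__":
    print(weighted_inner(2, 1, 2, 1, 2), math.pi / 2 ** 4)  # same n, same m >= 1
    print(weighted_inner(2, 1, 1, 1, 3))                     # different m -> 0
    print(weighted_inner(2, 1, 1, 2, 1))                     # disjoint supports -> 0
```

The quadrature is exact for polynomial integrands of degree up to \(2N-1\), so with `N=32` the printed values are exact up to rounding for the low degrees used here.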

3.2 Function approximation

A function u(x) defined over [0, 1] may be expanded by the CWs as

where

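in which \((\cdot ,\cdot )\) denotes the inner product in \(L_{w_{n}}^{2}[0,1]\). If the infinite series in (11) is truncated, then it can be written as

\[u(x)\simeq \sum _{n=1}^{2^{k}}\sum _{m=0}^{M-1}c_{nm}\psi _{nm}(x)=C^{T}\Psi (x),\]

where T indicates transposition, and C and \(\Psi (x)\) are column vectors of length \(\hat{m}=2^{k}M\) given by

\[C=[c_{10},\ldots ,c_{1(M-1)},c_{20},\ldots ,c_{2(M-1)},\ldots ,c_{2^{k}0},\ldots ,c_{2^{k}(M-1)}]^{T}\]

and

\[\Psi (x)=[\psi _{10}(x),\ldots ,\psi _{1(M-1)}(x),\psi _{20}(x),\ldots ,\psi _{2(M-1)}(x),\ldots ,\psi _{2^{k}0}(x),\ldots ,\psi _{2^{k}(M-1)}(x)]^{T}.\]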

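By taking the collocation points

\[x_{i}=\frac{i-1}{\hat{m}-1},\qquad i=1,2,\ldots ,\hat{m},\]

we define the wavelet matrix \(\Phi _{\hat{m}\times \hat{m}}\) as

\[\Phi _{\hat{m}\times \hat{m}}=\left[ \Psi (x_{1}),\Psi (x_{2}),\ldots ,\Psi (x_{\hat{m}})\right] .\]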

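For example, for \(k=1,\,M=3\) we have \(\hat{m}=6\), and \(\Phi _{6\times 6}\) collects the values of \(\psi _{10},\psi _{11},\psi _{12},\psi _{20},\psi _{21},\psi _{22}\) at the six collocation points.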
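The following Python sketch tabulates \(\Phi _{6\times 6}\) for \(k=1,\,M=3\). Two ingredients are assumptions for illustration only: the collocation points are taken as the uniform nodes \(x_{i}=(i-1)/(\hat{m}-1)\), and each \(\psi _{nm}\) is scaled by \(2^{(k+1)/2}/\sqrt{\pi }\) for \(m=0\) and by \(2^{(k+1)/2}\sqrt{2/\pi }\) (times \(T_{m}\)) for \(m\ge 1\), which makes the set orthonormal with respect to \(w_{n}\). With another normalisation the rows are rescaled, but the block-diagonal pattern is the same, because each subinterval contains exactly M of the uniform nodes. The names `psi` and `wavelet_matrix` are hypothetical.

```python
import math


def chebyshev_t(m, y):
    """Chebyshev polynomial T_m(y) via the three-term recurrence."""
    if m == 0:
        return 1.0
    t_prev, t_cur = 1.0, y
    for _ in range(m - 1):
        t_prev, t_cur = t_cur, 2.0 * y * t_cur - t_prev
    return t_cur


def psi(k, M, j, x):
    """j-th CW (1-based, j = M(n-1) + m + 1) under an ASSUMED orthonormal scaling."""
    n, m = (j - 1) // M + 1, (j - 1) % M
    inside = (n - 1) / 2 ** k <= x < n / 2 ** k or (n == 2 ** k and x == 1.0)
    if not inside:
        return 0.0
    scale = 2 ** ((k + 1) / 2) / math.sqrt(math.pi) * (math.sqrt(2.0) if m > 0 else 1.0)
    return scale * chebyshev_t(m, 2 ** (k + 1) * x - 2 * n + 1)


def wavelet_matrix(k, M):
    """Phi[j][i] = psi_{j+1}(x_i) at ASSUMED uniform nodes x_i = i/(m_hat - 1), i = 0..m_hat-1."""
    m_hat = 2 ** k * M
    nodes = [i / (m_hat - 1) for i in range(m_hat)]
    return [[psi(k, M, j, x) for x in nodes] for j in range(1, m_hat + 1)]


if __name__ == "__main__":
    for row in wavelet_matrix(1, 3):
        print(" ".join(f"{v:8.4f}" for v in row))
```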

3.3 Convergence of the CWs expansion

The convergence of the CWs expansion in one dimension is investigated in the following theorems:

Theorem 3.1

A function u(x) defined on [0, 1] with bounded first and second derivatives, \(|u^{\prime }(x)|\le M_{1}\) and \(|u^{\prime \prime }(x)|\le M_{2}\), can be expanded as an infinite sum of CWs, and the series converges uniformly to u(x), that is,

\[u(x)=\sum _{n=1}^{\infty }\sum _{m=0}^{\infty }c_{nm}\psi _{nm}(x),\]

where \(c_{nm}\)’s are defined in (12).

Proof

We consider the following cases:

For m=0 and \(n=1,2,\ldots ,2^{k}\), the CWs form an orthonormal system on [0, 1] as

By expanding the function u(x) in terms of these basis functions on [0, 1], we have

where

By truncating the series in (18), it can be written as

Theorem 3.2

Let \(\tilde{u}(x)\) be the truncated expansion of the function u(x) in the above basis functions and \(\tilde{e}(x)=\tilde{u}(x)-u(x)\) the corresponding error. Then the expansion converges in the sense that \(\tilde{e}(x)\) approaches zero at the same rate as \(\frac{1}{2^{k}}\), i.e.,

\[\Vert \tilde{e}(x)\Vert =O\left( \frac{1}{2^{k}}\right) .\]

Proof

By defining the error between u(x) and its expansion over every subinterval as follows:

we have

where we have used the weighted mean value theorem for integrals. From (19) and the weighted mean value theorem, we also have

By substituting (24) into (23), we obtain

Now, since \(|u^{\prime }(x)|\le M_{1}\), u satisfies the Lipschitz condition on each subinterval, i.e.,

\[|u(x)-u(y)|\le M_{1}|x-y|,\]

Then from (25) and (26), we have

which leads to

Now, since these basis functions have disjoint supports, we have

By substituting (27) into (29), we obtain

or, in other words, \(\displaystyle \Vert \tilde{e}(x)\Vert =O\left( \frac{1}{2^{k}}\right) \), which completes the proof. \(\square \)

COROLLARY 3.3

Let \(\tilde{u}(x)\) be the expansion of u(x) by the above basis functions, and \(\tilde{e}(x)\) be the corresponding error. Then we have

Proof

This is an immediate consequence of Theorem 3.2. \(\square \)

Remark 1

Notice that according to the above information, we have

In the case \(m\ge 1\), it has been proved in [49] that

Therefore, in both cases the series \(\sum _{n=1}^{\infty }\sum _{m=0}^{\infty }c_{nm}\) is absolutely convergent, and it follows that \(\sum _{n=1}^{\infty }\sum _{m=0}^{\infty }c_{nm}\psi _{nm}(x)\) converges uniformly to the function u(x). \(\square \)
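The \(O(2^{-k})\) rate of Theorem 3.2 can be observed numerically. The Python sketch below is our own illustration, with \(u(x)=e^{x}\) chosen arbitrarily: it forms the \(m=0\) part of the CW expansion, i.e. on each subinterval the projection of u onto constants in \(L_{w_{n}}^{2}\), which is the Chebyshev-weighted mean of u computed by Gauss–Chebyshev quadrature, and reports the maximum error on a fine grid. Halving the subinterval length should roughly halve the error, and the error should stay below the elementary Lipschitz bound \(M_{1}/2^{k}\).

```python
import math


def cheb_weighted_mean(u, a, b, N=16):
    """Chebyshev-weighted mean of u over [a, b]: equal-weight Gauss-Chebyshev rule."""
    c, r = (a + b) / 2, (b - a) / 2
    return sum(u(c + r * math.cos((2 * l - 1) * math.pi / (2 * N))) for l in range(1, N + 1)) / N


def m0_sup_error(u, k, samples=4096):
    """Max error on [0, 1] of the m = 0 part of the CW expansion (one constant per subinterval).

    On [(n-1)/2^k, n/2^k) the projection of u onto constants in L^2_{w_n} is its
    Chebyshev-weighted mean.
    """
    p = 2 ** k
    means = [cheb_weighted_mean(u, (n - 1) / p, n / p) for n in range(1, p + 1)]
    err = 0.0
    for s in range(samples + 1):
        x = s / samples
        idx = min(int(x * p), p - 1)  # 0-based subinterval index; x = 1 goes to the last one
        err = max(err, abs(u(x) - means[idx]))
    return err


if __name__ == "__main__":
    prev = None
    for k in range(1, 7):
        err = m0_sup_error(math.exp, k)
        ratio = "" if prev is None else f"  ratio = {prev / err:.3f}"
        print(f"k = {k}: max error = {err:.3e}{ratio}")
        prev = err
```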

Theorem 3.4

Let u(x) be a function defined on [0, 1] whose first and second derivatives are bounded by \( M_{1}\) and \(M_{2}\), respectively, and let \(C^{T}\Psi (x)\) be its approximation by the CWs. Then, for the error bound, we have

where

Proof

By the definition of \(\sigma _{\hat{m}}\), we have

Now, by considering (33), (35) and Remark 1, we obtain the desired result. \(\square \)

3.4 Operational matrix of fractional order integration

The fractional integration of order \(\alpha \) of the vector \(\Psi (x)\) defined in (14) can be expressed as

\[J^{\alpha }\Psi (x)\simeq P^{\alpha }\Psi (x),\]

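where \(P^{\alpha }\) is the \(\hat{m}\times \hat{m}\) operational matrix of fractional integration of order \(\alpha \). In [37], Li derived an explicit form of this matrix by employing block pulse functions. In the sequel, we derive a more accurate explicit form of the matrix \(P^{\alpha }\). To this end, we need to introduce another family of basis functions, namely the hat functions (HFs). An \(\hat{m}\)-set of these basis functions is defined on the interval [0, 1] as [52]

\[\phi _{0}(x)=\begin{cases}\dfrac{h-x}{h}, & 0\le x\le h,\\ 0, & \text{otherwise},\end{cases}\]

\[\phi _{i}(x)=\begin{cases}\dfrac{x-(i-1)h}{h}, & (i-1)h\le x\le ih,\\ \dfrac{(i+1)h-x}{h}, & ih\le x\le (i+1)h,\\ 0, & \text{otherwise},\end{cases}\qquad i=1,2,\ldots ,\hat{m}-2,\]

\[\phi _{\hat{m}-1}(x)=\begin{cases}\dfrac{x-(1-h)}{h}, & 1-h\le x\le 1,\\ 0, & \text{otherwise},\end{cases}\]

where \(h=\frac{1}{\hat{m}-1}\). From the definition of the HFs, we have

\[\phi _{i}(jh)=\begin{cases}1, & i=j,\\ 0, & i\ne j,\end{cases}\qquad \sum _{i=0}^{\hat{m}-1}\phi _{i}(x)=1,\quad 0\le x\le 1.\]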

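An arbitrary function u(x) defined on the interval [0, 1] can be expanded by the HFs as

\[u(x)\simeq \sum _{i=0}^{\hat{m}-1}u_{i}\phi _{i}(x)=U^{T}\Phi (x),\]

where

\[U=[u_{0},u_{1},\ldots ,u_{\hat{m}-1}]^{T}\]

and

\[\Phi (x)=[\phi _{0}(x),\phi _{1}(x),\ldots ,\phi _{\hat{m}-1}(x)]^{T}.\]

The important aspect of using the HFs in approximating a function u(x) lies in the fact that the coefficients \(u_{i}\) in (41) are given by

\[u_{i}=u(ih),\qquad i=0,1,\ldots ,\hat{m}-1.\]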

By considering (41) and (44), it can be easily seen that the CWs can be expanded in terms of an \(\hat{m}\)-set of HFs as

\[\Psi (x)\simeq \Phi _{\hat{m}\times \hat{m}}\Phi (x).\]

In [52], the authors gave the HFs operational matrix of fractional integration \(F^{\alpha }\) as

\[J^{\alpha }\Phi (x)\simeq F^{\alpha }\Phi (x),\]

where

and

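Now, by considering (36) and using (45) and (46), we obtain

\[J^{\alpha }\Psi (x)\simeq \Phi _{\hat{m}\times \hat{m}}J^{\alpha }\Phi (x)\simeq \Phi _{\hat{m}\times \hat{m}}F^{\alpha }\Phi (x).\]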

Moreover, from (36) and (49), we have

\[P^{\alpha }\Psi (x)\simeq \Phi _{\hat{m}\times \hat{m}}F^{\alpha }\Phi (x).\]

Thus, by considering (45) and (50), we obtain the CWs operational matrix of fractional integration as

\[P^{\alpha }=\Phi _{\hat{m}\times \hat{m}}F^{\alpha }\Phi _{\hat{m}\times \hat{m}}^{-1}.\]

Therefore, we have found the operational matrix of fractional integration for the CWs. To illustrate the calculation procedure, we choose \(\alpha =\frac{1}{2},\,k=1,\,M=3\), for which \(P^{\alpha }\) is a \(6\times 6\) matrix.
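The construction \(P^{\alpha }=\Phi _{\hat{m}\times \hat{m}}F^{\alpha }\Phi _{\hat{m}\times \hat{m}}^{-1}\) can be reproduced in a few lines. In the Python sketch below, \(F^{\alpha }\) is computed exactly at the nodes from the ramp decomposition of each hat function, using \(J^{\alpha }(x-a)_{+}=(x-a)_{+}^{\alpha +1}/\Gamma (\alpha +2)\), rather than copied from the closed-form entries of [52]. The CW normalisation and the uniform collocation nodes \(x_{i}=(i-1)/(\hat{m}-1)\) are assumptions, so the printed \(6\times 6\) matrix for \(\alpha =\frac{1}{2},\,k=1,\,M=3\) need not coincide entry by entry with the matrix of the original example. All helper names are hypothetical.

```python
import math


def chebyshev_t(m, y):
    """Chebyshev polynomial T_m(y) via the three-term recurrence."""
    if m == 0:
        return 1.0
    t_prev, t_cur = 1.0, y
    for _ in range(m - 1):
        t_prev, t_cur = t_cur, 2.0 * y * t_cur - t_prev
    return t_cur


def psi_vector(k, M, x):
    """Psi(x) with j = M(n-1) + m + 1; ASSUMED orthonormal scaling w.r.t. w_n."""
    p = 2 ** k
    n = min(int(x * p), p - 1) + 1              # active subinterval; x = 1 -> last one
    y = 2 ** (k + 1) * x - 2 * n + 1             # local variable in [-1, 1]
    out = [0.0] * (p * M)
    for m in range(M):
        scale = 2 ** ((k + 1) / 2) / math.sqrt(math.pi) * (math.sqrt(2.0) if m else 1.0)
        out[M * (n - 1) + m] = scale * chebyshev_t(m, y)
    return out


def hat_frac_matrix(m_hat, alpha):
    """F[i][j] = (J^alpha phi_i)(x_j), x_j = j h, h = 1/(m_hat - 1), exact at the nodes."""
    h = 1.0 / (m_hat - 1)
    g1, g2 = math.gamma(alpha + 1), math.gamma(alpha + 2)

    def ramp(x, a):  # J^alpha of (x - a)_+ / h, evaluated at x
        return max(x - a, 0.0) ** (alpha + 1) / (h * g2)

    F = [[0.0] * m_hat for _ in range(m_hat)]
    for j in range(m_hat):
        x = j * h
        F[0][j] = x ** alpha / g1 - ramp(x, 0.0) + ramp(x, h)  # phi_0 = 1 - x/h + (x-h)_+/h
        for i in range(1, m_hat - 1):                           # interior hats
            F[i][j] = ramp(x, (i - 1) * h) - 2 * ramp(x, i * h) + ramp(x, (i + 1) * h)
        F[m_hat - 1][j] = ramp(x, (m_hat - 2) * h)              # last (half) hat
    return F


def mat_mul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def mat_inv(A):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(A)
    aug = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[piv][c]) < 1e-14:
            raise ValueError("matrix is singular")
        aug[c], aug[piv] = aug[piv], aug[c]
        pv = aug[c][c]
        aug[c] = [v / pv for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def cw_operational_matrix(k, M, alpha):
    """P^alpha = Phi F^alpha Phi^{-1} with Phi[j][i] = psi_j(x_i); returns (P, Phi)."""
    m_hat = 2 ** k * M
    nodes = [i / (m_hat - 1) for i in range(m_hat)]
    Phi = [list(r) for r in zip(*(psi_vector(k, M, x) for x in nodes))]
    F = hat_frac_matrix(m_hat, alpha)
    return mat_mul(mat_mul(Phi, F), mat_inv(Phi)), Phi


if __name__ == "__main__":
    P, _ = cw_operational_matrix(1, 3, 0.5)
    for row in P:
        print(" ".join(f"{v:9.5f}" for v in row))
```

Since \(\Phi _{\hat{m}\times \hat{m}}\) is block diagonal with \(M\times M\) Chebyshev blocks at distinct points, it is invertible, and a plain Gauss–Jordan elimination suffices for a sketch of this size.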

3.5 Some useful results for the CWs

In this section, by employing some properties of the HFs, we derive a useful theorem for the CWs, which will be used later in this paper. From now on, for any two constant vectors \(C^{T}=[c_{1},c_{2},\ldots ,c_{\hat{m}}]\) and \(D^{T}=[d_{1},d_{2},\ldots ,d_{\hat{m}}]\), we define \(C^{T}\odot D^{T}=[c_{1}d_{1},c_{2}d_{2},\ldots ,c_{\hat{m}}d_{\hat{m}}]\) and \(G\left( C^{T}\right) =[G(c_{1}),G(c_{2}),\ldots ,G(c_{\hat{m}})]\) for any analytic function G.

Lemma 3.5

Let \( \tilde{C}^{T}\Phi (x)\) and \(\tilde{D}^{T}\Phi (x)\) be the expansions of u(x) and v(x) by the HFs, respectively. Then we have

\[u(x)v(x)\simeq \left( \tilde{C}^{T}\odot \tilde{D}^{T}\right) \Phi (x).\]

Proof

By considering (41) and (44), we have

and

which completes the proof. \(\square \)

COROLLARY 3.6

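Let \( \tilde{C}^{T}\Phi (x)\) be the expansion of u(x) by the HFs. Then, for any integer \(q\ge 2\), we have

\[[u(x)]^{q}\simeq \left( \tilde{C}^{T}\odot \tilde{C}^{T}\odot \cdots \odot \tilde{C}^{T}\right) \Phi (x)\qquad (q\ \text{factors}).\]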

Proof

From Lemma 3.5, we have

and by induction, we obtain

This completes the proof. \(\square \)

Theorem 3.7

Let G be an analytic function and \(\tilde{C}^{T}\Phi (x)\) the expansion of u(x) by the HFs. Then we have

\[G(u(x))\simeq G\left( \tilde{C}^{T}\right) \Phi (x).\]

Proof

Since G is an analytic function, by considering the Maclaurin expansion of G, i.e. \(\displaystyle G(x)=\sum \nolimits _{q=0}^{\infty }\frac{G^{(q)}(0)}{q!}x^{q}\), we have

Now, from Corollary 3.6 and relation (55), we have

Since the series on the left-hand side converges absolutely and uniformly to G(u), each series on the right-hand side also converges absolutely and uniformly to \(G\left( u_{i}\right) \), i.e.,

Thus we have

which completes the proof. \(\square \)
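Theorem 3.7 says that, in the HF basis, a nonlinear term is obtained by applying G entrywise to the nodal coefficients. The Python sketch below is our illustration: `hat_interp` and `nonlinear_term_error` are hypothetical names, and \(u(x)=\sin x\), \(G=\exp \) are arbitrary test choices. It builds \(G(\tilde{C}^{T})\Phi (x)\) and measures its maximum deviation from \(G(u(x))\). Since the result is a piecewise linear interpolant of \(G(u(x))\), the error is expected to shrink by a factor of about four when the node spacing h is halved (21 to 41 HFs).

```python
import math


def hat_interp(values, x):
    """Evaluate sum_i values[i] * phi_i(x) for hat functions on x_i = i h, h = 1/(len(values) - 1)."""
    m_hat = len(values)
    h = 1.0 / (m_hat - 1)
    i = min(int(x / h), m_hat - 2)  # left node of the cell containing x
    t = (x - i * h) / h             # local coordinate in [0, 1]
    return (1.0 - t) * values[i] + t * values[i + 1]


def nonlinear_term_error(u, G, m_hat, samples=2001):
    """Max |G(C~^T) Phi(x) - G(u(x))| on a uniform grid, C~ being the HF coefficients of u."""
    h = 1.0 / (m_hat - 1)
    c_tilde = [u(i * h) for i in range(m_hat)]  # HF coefficients of u are its nodal values
    g_coeffs = [G(c) for c in c_tilde]          # G applied entrywise, i.e. G(C~^T)
    grid = [s / (samples - 1) for s in range(samples)]
    return max(abs(hat_interp(g_coeffs, x) - G(u(x))) for x in grid)


if __name__ == "__main__":
    for m_hat in (11, 21, 41, 81):
        print(m_hat, nonlinear_term_error(math.sin, math.exp, m_hat))
```

No Maclaurin series is needed in practice: the entrywise evaluation is what Theorem 3.7 justifies, which is why this technique handles arbitrary analytic nonlinearities at the cost of one function call per node.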

Theorem 3.8

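Let G be an analytic function and \(C^{T}\Psi (x)\) the expansion of u(x) by the CWs. Then we have

\[G(u(x))\simeq G\left( \tilde{C}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (x),\]

where

\[\tilde{C}^{T}=C^{T}\Phi _{\hat{m}\times \hat{m}}.\]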

Proof

By considering equation (45) and Theorem 3.7, we have

So from (45) and (57), we have

which completes the proof. \(\square \)

COROLLARY 3.9

Let \( C^{T}\Psi (x)\) and \(D^{T}\Psi (x)\) be the expansions of u(x) and v(x) by the CWs, respectively, and let G and H be two analytic functions. Then we have

where

Proof

By Theorem 3.8, equation (45) and Lemma 3.5, the proof is straightforward. \(\square \)

4 Description of the proposed method

In this section, we apply the operational matrix of fractional integration for the CWs together with the series expansion and useful properties of these basis functions for solving NMFDE (1). The main idea of the proposed method is as follows:

(1) The NMFDE (1) is converted to a fully integrated form via fractional integration in the Riemann–Liouville sense.

(2) The integrated form of the equation is approximated using a linear combination of the CWs.

(3) Finally, the integrated form of the equation is converted to a system of nonlinear algebraic equations by introducing the operational matrix of fractional integration for the CWs and employing some useful properties of these basis functions, which were given in Subsection 3.5.

In the following, we describe the proposed method in detail. Applying the Riemann–Liouville fractional integration of order \(\alpha \) to both sides of (1) yields

where \(q_{i}-1<\alpha _{i}\le q_{i}\), \(q_{i}\in \mathbb {N}\). By considering (2) and (60), we obtain the following fractional integral equation:

where

In order to use the Galerkin method with the CWs for solving the integrated problem (61), we approximate u(x) and g(x) by the CWs as follows:

\[u(x)\simeq C^{T}\Psi (x)\]

and

\[g(x)\simeq \tilde{G}^{T}\Psi (x),\]

where the vector \(\tilde{G}=[g_{1},g_{2},\ldots ,g_{\hat{m}}]\) is given, but \(C=[c_{1},c_{2},\ldots ,c_{\hat{m}}]\) is an unknown vector. Also, from Theorem 3.8, we have

\[G(u(x))\simeq G\left( \tilde{C}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (x),\]

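where \(\tilde{C}^{T}=C^{T}\Phi _{\hat{m}\times \hat{m}}\). Now, the Riemann–Liouville fractional integrals of orders \((\alpha -\alpha _{i})\) and \(\alpha \) of the approximations (62) and (64) can be written as

\[J^{\alpha -\alpha _{i}}u(x)\simeq C^{T}P^{\alpha -\alpha _{i}}\Psi (x)\]

and

\[J^{\alpha }G(u(x))\simeq G\left( \tilde{C}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}P^{\alpha }\Psi (x),\]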

respectively, where \(P^{\alpha }\) is the \(\hat{m}\times \hat{m}\) operational matrix of fractional integration of order \(\alpha \). By employing equations (62)–(66), we can write the residual function R(x) for equation (61) as follows:

As in a typical Galerkin method [8], we generate \(\hat{m}\) nonlinear algebraic equations by applying

\[\left( R(x),\psi _{j}(x)\right) =\int _{0}^{1}R(x)\,\psi _{j}(x)\,w_{n}(x)\,dx=0,\qquad j=1,2,\ldots ,\hat{m},\]

where \(\psi _{j}(x)=\psi _{nm}(x)\) and the index j is determined by the relation \(j=M(n-1)+m+1\). This system of nonlinear equations can be solved for the unknown coefficient vector C. Consequently, u(x) given in (62) can be calculated.
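The whole pipeline (integrate, expand, solve algebraic equations) can be seen at work in the short Python sketch below. To keep it compact, it deliberately departs from the method above in two ways. First, it uses a hypothetical model problem \(D_{*}^{\alpha }u+G(u)=f\), \(u^{(i)}(0)=d_{i}\), whose integrated form, by relation (6), is \(u+J^{\alpha }G(u)=g\) with \(g(x)=\sum _{i=0}^{q-1}d_{i}x^{i}/i!+J^{\alpha }f(x)\), rather than the full NMFDE (1). Second, it replaces the CW Galerkin projection by collocation in the HF basis at the nodes \(x_{j}=jh\). Because the HF matrix \(F^{\alpha }\) is triangular, the nodal equations can be solved one node at a time with scalar Newton iterations. The names `hat_frac_matrix` and `solve_integrated` are hypothetical.

```python
import math


def hat_frac_matrix(m_hat, alpha):
    """F[i][j] = (J^alpha phi_i)(x_j) for hat functions on x_j = j h, h = 1/(m_hat - 1)."""
    h = 1.0 / (m_hat - 1)
    g1, g2 = math.gamma(alpha + 1), math.gamma(alpha + 2)

    def ramp(x, a):  # J^alpha of (x - a)_+ / h
        return max(x - a, 0.0) ** (alpha + 1) / (h * g2)

    F = [[0.0] * m_hat for _ in range(m_hat)]
    for j in range(m_hat):
        x = j * h
        F[0][j] = x ** alpha / g1 - ramp(x, 0.0) + ramp(x, h)
        for i in range(1, m_hat - 1):
            F[i][j] = ramp(x, (i - 1) * h) - 2 * ramp(x, i * h) + ramp(x, (i + 1) * h)
        F[m_hat - 1][j] = ramp(x, (m_hat - 2) * h)
    return F


def solve_integrated(G, dG, g, alpha, m_hat, newton_steps=30, tol=1e-14):
    """Nodal values u_j ~ u(j h) for u + J^alpha G(u) = g (HF collocation, forward marching).

    Node j reads u_j + sum_{i<=j} F[i][j] G(u_i) = g(x_j), since F[i][j] = 0 for i > j.
    """
    h = 1.0 / (m_hat - 1)
    F = hat_frac_matrix(m_hat, alpha)
    u = []
    for j in range(m_hat):
        rhs = g(j * h) - sum(F[i][j] * G(u[i]) for i in range(j))
        v = u[-1] if u else rhs  # previous nodal value as the initial guess
        for _ in range(newton_steps):
            r = v + F[j][j] * G(v) - rhs
            if abs(r) < tol:
                break
            v -= r / (1.0 + F[j][j] * dG(v))
        u.append(v)
    return u


if __name__ == "__main__":
    # Model problem: u' + u^2 = 0, u(0) = 1 (alpha = 1), exact solution 1/(1 + x).
    m_hat = 65
    u = solve_integrated(lambda v: v * v, lambda v: 2 * v, lambda x: 1.0, 1.0, m_hat)
    err = max(abs(v - 1.0 / (1.0 + j / (m_hat - 1))) for j, v in enumerate(u))
    print(f"max nodal error = {err:.2e}")
```

For \(\alpha =1\) this scheme reduces to the implicit trapezoidal rule, which is a quick way to sanity-check the HF operational matrix.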

5 Numerical examples

In this section, we demonstrate the efficiency and accuracy of the proposed method on several numerical examples of the form (1). When the exact solution is known, we measure the accuracy by the absolute error at selected points,

\[e(x_{i})=\left| u(x_{i})-\tilde{u}(x_{i})\right| ,\]

where u(x) is the exact solution and \(\tilde{u}(x)\) is the approximate solution obtained by relation (62).

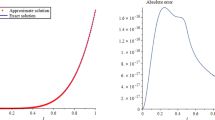

Example 1

First, let us consider the NMFDE:

where

subject to the initial conditions \(u(0)=u^{\prime }(0)=0\). Figure 1 shows the behavior of the numerical solutions for \(\hat{m}=96\,(k=4,\,M=6)\). The exact solution of this problem for \(\alpha =2\) is \(u(x)=-\ln \left( 1+x^{2}\right) \). From this figure, it can be seen that the numerical solution is in very good agreement with the exact solution in the case \(\alpha =2\). This agreement suggests that the solutions obtained for \(\alpha =1.25,\,1.50\) and \(\alpha =1.75\) are also reliable.

Figure 1. Numerical solutions of Example 1 for \(\hat{m}=96\) and some values of \(1<\alpha \le 2\).

Example 2

Consider the NMFDE:

where

subject to the initial conditions \(u(0)=0,\,u^{\prime }(0)=-1\). The exact solution of this problem is \(u(x)=x^{2}-x\). The behavior of the numerical solutions for \(k=2\) and several values of M with \(\alpha =1.75\) and \(\beta =0.5\) is shown in Figure 2. This figure shows that as M, the degree of the Chebyshev polynomials, increases, the approximate solutions approach the exact solution more closely. To assess the accuracy gained by using the new operational matrix of fractional order integration, Table 1 compares the absolute errors of our method with those of the CWs method in [37] for \(\alpha =2\), \(\beta =0.5\), \(M=4\) and several values of k at various points \(x_{i}\). The absolute errors obtained by our method are smaller than those obtained by the CWs method in [37], which shows that our method is more accurate for this problem. Table 1 also shows that the proposed method provides numerical results of high accuracy and that the accuracy improves as more basis functions are used. We therefore conclude that our method is suitable for this problem.

Figure 2. Comparison between the approximate and exact solutions of Example 2 for some different values of M.

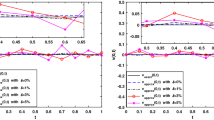

Example 3

Consider the NMFDE:

where

subject to the initial conditions \(u(0)=u^{\prime }(0)=0\). The exact solution of this problem is \(u(x)=\frac{1}{3}x^{3}\). The problem is solved by the proposed method for \(a=b=c=e=1,\,\alpha _{1}=0.333\) and \(\alpha _{2}=1.234\). The behavior of the numerical solutions for \(M=2\) and several values of k is shown in Figure 3. This figure shows that as the number of CWs basis functions increases, the approximate solutions converge rapidly to the exact solution. Table 2 compares the absolute errors of our method with those of the CWs method in [37] for different values of k and M at various points \(x_{i}\). The absolute errors obtained by our method are smaller than those obtained by the CWs method in [37], which shows that our method is more accurate. Table 2 also shows that the proposed method provides approximate solutions of high accuracy for the problem under study, and that the accuracy improves as the number of basis functions increases. This table also indicates the spectral accuracy of the proposed method. Therefore, the proposed method is a suitable tool for this problem.

Figure 3. Comparison between the approximate and exact solutions of Example 3 for some different values of k.

Example 4

Finally, consider the NMFDE [15, 37]:

where

subject to the initial conditions \(u(0)=u^{\prime }(0)=0\). This is a Bagley–Torvik equation in which the nonlinear term \(u^{3}(x)\) has been introduced. This problem has been solved in [15, 37]. The behavior of the approximate solution for this problem with \(\hat{m}=96\) is shown in Figure 4; it is in good agreement with the numerical solutions obtained in [15, 37]. This demonstrates the applicability of our method to nonlinear multi-order fractional differential equations.

Figure 4. The behavior of the approximate solution for Example 4.

6 Application for nonlinear fractional order VPO equation

The VPO represents a nonlinear system with interesting behavior that arises naturally in several applications. In its standard form, it is given by the nonlinear differential equation [9, 34]

\[u^{\prime \prime }(t)-\mu \left( 1-u^{2}(t)\right) u^{\prime }(t)+u(t)=0,\]

where \(\mu >0\) is the control parameter. Equation (69) is commonly referred to as the unforced VPO equation [19]. The VPO with a forcing term f(t) is given by [19]

\[u^{\prime \prime }(t)-\mu \left( 1-u^{2}(t)\right) u^{\prime }(t)+u(t)=f(t).\]

In recent years, the VPO equation and its special properties have been investigated by many authors (see, for instance, [3, 6]). A modified version of the classical VPO was proposed by introducing a fractional derivative of order \(\alpha \) into the state-space formulation of equation (70). It has the following form [34]

with the initial conditions \(u_{i}(0)=d_{i} \,(i=1,2)\). To apply the method proposed in Section 4, we consider the integral form of (71) as

Now, we approximate \(u_{1}(t)\) and \(u_{2}(t)\) by the CWs as

Also from Theorem 3.8 and Corollary 3.9, we have

where

Moreover, we can approximate the function f(t) by the CWs as follows:

where \(\hat{F}\) is a given known vector. Now by substituting (73)–(75) into (72), and using operational matrix of fractional order integration, we obtain

where e is the CW coefficient vector of the unit step function. Now, from (76), we can write the residual functions \(R_{1}(t)\) and \(R_{2}(t)\) for system (72) as follows:

As in a typical Galerkin method [8], we generate \(2\hat{m}\) nonlinear algebraic equations by applying

\[\left( R_{1}(t),\psi _{j}(t)\right) =0,\qquad \left( R_{2}(t),\psi _{j}(t)\right) =0,\qquad j=1,2,\ldots ,\hat{m},\]

where \(\psi _{j}(t)=\psi _{nm}(t)\), and the index j is determined by the relation \(j=M(n-1)+m+1\). This system of nonlinear algebraic equations can be solved for the unknown coefficient vectors \(U_{1}\) and \(U_{2}\). Consequently, \(u_{1}(t)\) and \(u_{2}(t)\) given in (73) can be calculated.

Example 5

Consider the following fractional Van der Pol equation [34]

with the initial conditions

The behavior of the approximate solutions for this problem with \(\hat{m}=48 \) and two different values of \(\alpha \) is shown in Figure 5; it is in good agreement with the numerical solutions in [34]. This demonstrates the applicability of our method to the nonlinear fractional order Van der Pol equation.

Figure 5. The behavior of the approximate solutions for Example 5.

Example 6

Finally, consider the following fractional Van der Pol equation [34]

with the initial conditions

The exact solution of this problem in the case \(\alpha =1\) is \(u(t)=\sin (t)\). The behavior of the approximate solutions for this problem with \(\hat{m}=48 \) and two different values of \(\alpha \) is shown in Figure 6; it is in good agreement with the numerical solutions in [34]. This again demonstrates the applicability of our method to the nonlinear fractional order Van der Pol equation.

Figure 6. The behavior of the approximate solutions for Example 6.

7 Conclusion

In this work, a new operational matrix of fractional order integration for the CWs was derived and applied to solve nonlinear multi-order fractional differential equations. The underlying problems were reduced to systems of nonlinear algebraic equations. Several examples were given to demonstrate the effectiveness of the proposed method. The obtained results were compared with exact solutions and with the solutions obtained by the CWs method in [37]; they were in good agreement with, and more accurate than, those obtained using the CWs method in [37]. The numerical results illustrate the efficiency and accuracy of the presented scheme for solving nonlinear multi-order fractional differential equations. Furthermore, as a useful application, the proposed method was applied to obtain an approximate solution of the fractional order VPO equation.

References

Arikoglu A and Ozkol I, Solution of fractional differential equations by using differential transform method, Chaos Solitons Fractals 34 (2007) 1473–1481

Arikoglu A and Ozkol I, Solution of fractional integro-differential equations by using fractional differential transform method, Chaos Solitons Fractals 40 (2009) 521–529

Atay F M, Van der Pol's oscillator under delayed feedback, J. Sound Vib. 218(2) (1998) 333–339

Bagley R L and Torvik P J, A theoretical basis for the application of fractional calculus to viscoelasticity, J. Rheol. 27(3) (1983) 201–210

Baillie R T, Long memory processes and fractional integration in econometrics, J. Econom. 73 (1996) 5–59

Barari A, Omidvar M and Ganji D D, Application of homotopy perturbation method and variational iteration method to nonlinear oscillator differential equations, Acta Appl. Math. 104 (2008) 161–171

Bohannan G W, Analog fractional order controller in temperature and motor control applications, J. Vib. Control 14 (2008) 1487–1498

Canuto C, Hussaini M, Quarteroni A and Zang T, Spectral methods in fluid dynamics (New York: Springer-Verlag) (1988)

Caponetto R, Dongola G, Fortuna L and Petras I, Fractional order systems: modeling and control applications (Singapore: World Scientific) (2010)

Cattani C, Shannon wavelets for the solution of integro-differential equations, Math. Probl. Eng. 2010 (2010) 408418, https://doi.org/10.1155/2010/408418

Cattani C and Kudreyko A, Harmonic wavelet method towards solution of the Fredholm type integral equations of the second kind, Appl. Math. Comput. 215 (2010) 4164–4171

Cattani C, Shannon wavelets theory, Math. Probl. Eng. 2008 (2008) 164808, https://doi.org/10.1155/2008/164808

Darania P and Ebadian A, A method for the numerical solution of the integro-differential equations, Appl. Math. Comput. 188 (2007) 657–668

Das S, Analytical solution of a fractional diffusion equation by variational iteration method, Comput. Math. Appl. 57 (2009) 483–487

El-Mesiry A E M, El-Sayed A M A and El-Sakaa H A A, Numerical methods for multi-term fractional (arbitrary) orders differential equations, Appl. Math. Comput. 160(3) (2005) 683–699

El-Wakil S A, Elhanbaly A and Abdou M, Adomian decomposition method for solving fractional nonlinear differential equations, Appl. Math. Comput. 182 (2006) 313–324

Erturk V and Momani S, Solving systems of fractional differential equations using differential transform method, J. Comput. Appl. Math. 215 (2008) 142–151

Erturk V S, Momani S and Odibat Z, Application of generalized differential transform method to multi-order fractional differential equations, Commun. Nonlinear Sci. Numer. Simul. 13 (2008) 1642–1654

Guckenheimer J, Hoffman K and Weckesser W, The forced van der Pol equation I: the slow flow and its bifurcations, SIAM J. Appl. Dyn. Syst. 2(1) (2003) 1–35

Hashim I, Abdulaziz O and Momani S, Homotopy analysis method for fractional IVPs, Commun. Nonlinear Sci. Numer. Simul. 14 (2009) 674–684

He J H, Nonlinear oscillation with fractional derivative and its applications, in: International Conference on Vibrating Engineering '98 (1998) (Dalian, China) pp. 288–291

He J H, Some applications of nonlinear fractional differential equations and their approximations, Bull. Sci. Technol. 15(2) (1999) 86–90

Heydari M H, Hooshmandasl M R, Maalek Ghaini F M and Cattani C, Wavelets method for the time fractional diffusion-wave equation, Phys. Lett. A 379 (2015) 71–76

Heydari M H, Hooshmandasl M R and Mohammadi F, Legendre wavelets method for solving fractional partial differential equations with Dirichlet boundary conditions, Appl. Math. Comput. 234 (2014) 267–276

Heydari M H, Hooshmandasl M R and Mohammadi F, Two-dimensional Legendre wavelets for solving time-fractional telegraph equation, Adv. Appl. Math. Mech. 6(2) (2014) 247–260

Heydari M H, Hooshmandasl M R, Maalek Ghaini F M and Mohammadi F, Wavelet collocation method for solving multi order fractional differential equations, J. Appl. Math., vol. 2012, Article ID 542401, 19 pages, https://doi.org/10.1155/2012/542401

Heydari M H, Hooshmandasl M R, Mohammadi F and Cattani C, Wavelets method for solving systems of nonlinear singular fractional Volterra integro-differential equations, Commun. Nonlinear Sci. Numer. Simul. 19 (2014) 37–48

Heydari M H, Hooshmandasl M R and Maalek Ghaini F M, Chebyshev wavelets method for solution of nonlinear fractional integro-differential equations in a large interval, Adv. Math. Phys., vol. 2013, Article ID 482083, 12 pages (2013)

Heydari M H, Hooshmandasl M R, Cattani C and Ling M, Legendre wavelets method for solving fractional population growth model in a closed system, Math. Probl. Eng., vol. 2013, Article ID 161030, 8 pages, https://doi.org/10.1155/2013/161030

Heydari M H, Hooshmandasl M R, Maalek Ghaini F M and Fereidouni F, Two-dimensional Legendre wavelets for solving fractional Poisson equation with Dirichlet boundary conditions, Eng. Anal. Bound. Elem. 37 (2013) 1331–1338

Heydari M H, Hooshmandasl M R, Maalek Ghaini F M, Marji M F, Dehghan R and Memarian M H, A new wavelet method for solving the Helmholtz equation with complex solution, Numer. Methods Partial Differ. Equ. (2015) 1–16

Heydari M H, Hooshmandasl M R, Loghmani G B and Cattani C, Wavelets Galerkin method for solving stochastic heat equation, Int. J. Comput. Math. 93(9) (2016) 1579–1596

Heydari M H, Hooshmandasl M R and Maalek Ghaini F M, A new approach of the Chebyshev wavelets method for partial differential equations with boundary conditions of the telegraph type, Appl. Math. Model. 38 (2014) 1597–1606

Jafari H, Khalique C M and Nazari M, An algorithm for the numerical solution of nonlinear fractional-order van der Pol oscillator equation, Math. Comput. Model. 55 (2012) 1782–1786

Li Y L and Sun N, Numerical solution of fractional differential equation using the generalized block pulse operational matrix, Comput. Math. Appl. 62(3) (2011) 1046–1054

Li Y and Zhao W, Haar wavelet operational matrix of fractional order integration and its applications in solving the fractional order differential equations, Appl. Math. Comput. 216 (2010) 2276–2285

Li Y, Solving a nonlinear fractional differential equation using Chebyshev wavelets, Commun. Nonlinear Sci. Numer. Simul. 15(9) (2009) 2284–2292

Mainardi F, Fractional calculus: some basic problems in continuum and statistical mechanics, edited by Carpinteri A, Mainardi F, Fractals and fractional calculus in continuum mechanics (1997) (New York: Springer) pp. 291–348

Mandelbrot B, Some noises with \(1/f\) spectrum, a bridge between direct current and white noise, IEEE Trans. Inf. Theory 13(2) (1967) 289–298

Meerschaert M and Tadjeran C, Finite difference approximations for two-sided space-fractional partial differential equations, Appl. Numer. Math. 56 (2006) 80–90

Momani S and Odibat Z, Numerical approach to differential equations of fractional order, J. Comput. Appl. Math. 207 (2007) 96–110

Odibat Z and Momani S, Numerical methods for nonlinear partial differential equations of fractional order, Appl. Math. Model. 32 (2008) 28–39

Odibat Z and Shawagfeh N, Generalized Taylor’s formula, Appl. Math. Comput. 186 (2007) 286–293

Panda R and Dash M, Fractional generalized splines and signal processing, Signal Process. 86 (2006) 2340–2350

Podlubny I, The Laplace transform method for linear differential equations of fractional order (1997) eprint arXiv:funct-an/9710005

Podlubny I, Fractional differential equations (1999) (San Diego: Academic Press)

Rehman M and Khan R A, The Legendre wavelet method for solving fractional differential equations, Commun. Nonlinear Sci. Numer. Simul. 16(11) (2011) 4163–4173

Rossikhin Y A and Shitikova M V, Applications of fractional calculus to dynamic problems of linear and nonlinear hereditary mechanics of solids, Appl. Mech. Rev. 50 (1997) 15–67

Sohrabi S, Comparison Chebyshev wavelets method with BPFs method for solving Abel's integral equation, Ain Shams Eng. J. 2 (2011) 249–254

Sweilam N H, Khader M M and Al-Bar R F, Numerical studies for a multi-order fractional differential equation, Phys. Lett. A 371 (2007) 26–33

Sweilam N, Khader M and Al-Bar R, Numerical studies for a multi-order fractional differential equation, Phys. Lett. A 371 (2007) 26–33

Tripathi M P, Baranwal V K, Pandey R K and Singh O P, A new numerical algorithm to solve fractional differential equations based on operational matrix of generalized hat functions, Commun. Nonlinear Sci. Numer. Simul. 18 (2013) 1327–1340

Wang Y and Fan Q, The second kind Chebyshev wavelet method for solving fractional differential equations, Appl. Math. Comput. 218 (2012) 8592–8601

Additional information

Communicating Editor: Neela Nataraj

About this article

Cite this article

Heydari, M.H., Hooshmandasl, M.R. & Cattani, C. A new operational matrix of fractional order integration for the Chebyshev wavelets and its application for nonlinear fractional Van der Pol oscillator equation. Proc Math Sci 128, 26 (2018). https://doi.org/10.1007/s12044-018-0393-4

DOI: https://doi.org/10.1007/s12044-018-0393-4

Keywords

- Chebyshev wavelets (CWs)

- nonlinear multi-order fractional differential equations

- operational matrix

- Galerkin method

- Van der Pol oscillator (VPO)