Abstract

We propose a seeding particle-based color-to-depth calibration methodology for three-dimensional color particle tracking velocimetry (3D color PTV) using a single camera and volumetric rainbow gradient illumination. The use of sheet-color illumination from a liquid crystal display projector enables in situ calibration; that is, the color-to-depth relationships of particles seeded in a fluid are determined without inserting any calibration equipment or adopting a different optical setup. The calibration and the measurements can therefore be performed using the same optical configuration, and only the digital illumination patterns need to be changed. Adopting this calibration allows the actual color-to-depth relationships of the particles in the measurements to be evaluated. The calibration relates the colors of spatially distributed particles to their depth coordinates with the support of an artificial neural network. By combining conventional PTV with the depth estimated from the color, particle trajectories in 3D real space can be reconstructed. The performance of the proposed method was evaluated using a rotating flow in a cylindrical tank by comparing its results with the flow fields measured by conventional particle image velocimetry. Good agreement in the comparison for this highly 3D flow suggests the applicability of the present methodology to various flow configurations.


1 Introduction

The range of applications of volumetric flow field measurement through tomographic particle image velocimetry (PIV) (e.g., Elsinga et al. 2006; Westerweel et al. 2013) is increasing. The shake-the-box method (Schanz et al. 2016) greatly reduced the ghost particle problem arising in tomographic PIV, yielding dense Lagrangian particle tracking. These techniques for measuring three-dimensional, three-component (3D-3C) velocity fields are now regarded as standard in fluid flow measurements. However, they require careful optical arrangements of at least four cameras to acquire reliable particle displacements, and thus the cost of a tomographic system may not be affordable for many applications.

To tackle the complexity arising in such optical settings, as well as their high cost, some alternatives using only a single camera have been proposed in the last few decades. Especially under the limited measurement environments of microfluidics, the necessity of implementations on microscopes has motivated developments of such single-view approaches as advanced methods of micro-PIV. Single-view approaches require recording particle depth positions as information additional to the planar images; astigmatism particle tracking velocimetry (PTV) utilizes particle distortions (e.g., Cierpka et al. 2010a, b), and three-pinhole aperture PIV uses geometric relationships of triplet particle images (e.g., Pereira et al. 2007; Tien et al. 2014). These techniques yielded a particle image density of \(O(10^{-4})\) particles per pixel (ppp) with a measurable depth of \(O(10\text{–}100~\upmu \mathrm {m})\). For larger measurable depth without microscopes, holographic PIV increased the particle image density to \(O(10^{-3}\text{–}10^{-2}~\mathrm {ppp})\), as well as the measurable depth to \(O(1\text{–}10~\mathrm {mm})\) (e.g., Salazar et al. 2008; Sheng et al. 2008). Recently, light-field PIV has been introduced as a new type of technique (e.g., Shi et al. 2018); it allows easier implementation than the techniques described above while yielding a higher particle image density of \(O(10^{-2}~\mathrm {ppp})\) with a measurable depth of \(O(10~\mathrm {mm})\). These various volumetric measurement techniques using a single camera, however, often require additional special optics.

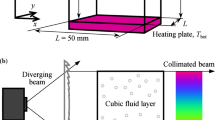

The simplest solution for locating particles seeded in a 3D space without special optics may be the use of color gradient information imposed along the depth (view) direction of a single camera (Matsushita et al. 2004; Prenel and Bailly 2006; Ruck 2011; McGregor et al. 2007; Bendicks et al. 2011; Watamura et al. 2013; Xiong et al. 2017, 2018; Park et al. 2021). This technique is called color PTV or rainbow PTV. Recently, Aguirre-Pablo et al. (2019) proposed a method called intensity PTV using a structured monochromatic illumination. The only required optical equipment is a single camera and a light source that can emit light of multiple wavelengths such as a liquid crystal display (LCD) projector. The optical arrangements are thus substantially less complex than those used in tomographic PIV and other single-view methods. A conceptual image of color PTV is shown in Fig. 1. An LCD projector displays a rainbow gradient, changing the color in the depth direction (the y-direction in the illustration) of the camera. Because the LCD projector is originally designed to illuminate a large space, the light emitted from the LCD projector diverges. Hence, the light is collimated by a collimation lens (a linear Fresnel lens is used in the present study) to form a parallel color beam. This ensures that the relationship between the color and depth coordinate is fixed. The color beam illuminates particles seeded into a test fluid, and a camera captures the particle images. If light with a color gradient is used, as shown in Fig. 1, the particles scatter light, and the color of that light reflects the depth. The particle positions in the in-plane coordinates (x and z) and out-of-plane coordinate (y) thus can be determined from the image coordinates (i and j) and the color, respectively. The concept of color PTV is simple and allows a large measurable depth of \(O(\ge 10~\mathrm {mm})\) and the particle image density of \(O(10^{-2}~\mathrm {ppp})\). 
However, many issues must be addressed to ensure reasonable accuracy in the practical application of this technique.

To practically apply color PTV in actual flow field measurements, a feasible method for calibrating the color-to-depth relationship is required. McGregor et al. (2007) utilized a microscope slide containing low-density chalk dust of the same size as the particles for the calibration, as shown in Fig. 2a. By placing the slide obliquely in the illuminated region in the x-y plane, color changes in the y direction could be tracked in the x direction of the image plane. Aguirre-Pablo et al. (2019) used a 3D laser-engraved calibration cube that contains micro-cracks that mimic spatially distributed particles, as illustrated in Fig. 2b. The known 3D crack positions inside the cube can be used for calibration. Note that they used monochromatic illumination instead of rainbow illumination, while Fig. 2b illustrates rainbow colors for easier comparison with the others. They demonstrated successful results for a rotating flow of index-matched fluid in an open tank. The need for a calibration cube, however, is not user-friendly and hinders wide application, as the cube needs to be index-matched with the test fluid. It is therefore not realistic to produce a unique calibration cube for a variety of flow conditions. Moreover, the light scattering characteristics of seeded particles are not necessarily the same as those of such chalk dust and glass cracks. This is the difficulty in treating the particle color or intensity as information additional to the conventional calibration adopted in stereoscopic and tomographic PIV, which requires only the relationship between the real and image coordinates.

Watamura et al. (2013) tried to calibrate the color-to-depth relationship in a particle-seeded fluid using a rainbow gradient illumination within the image plane, as shown in Fig. 2c. In the calibration step, the particle images were obtained in the x-z plane. The color-to-in-plane relationships obtained in this special optical arrangement, used only for calibration, serve to compute the calibration curves. The curves are then used to convert particle colors to depth positions in the actual measurements, in which the rainbow gradient is imposed along the depth direction. This calibration, however, requires a strong and unreasonable assumption that the colors scattered by the particles do not change between the two different optical arrangements.

These calibration methods proposed earlier cannot guarantee the actual accuracy in detecting the depth positions of particles seeded in the fluids, because the actual color-to-depth relationships of the particles are not directly used. For these reasons, an in situ method for color-to-depth calibration must satisfy two requirements. One is to obtain the actual relationships between particle depth positions and their scattered colors in the flow conditions to be measured. The other is to conduct the calibration in the same optical arrangement as the measurements. That is, the color-to-depth calibration needs to be conducted in the particle-seeded fluid used for the actual measurements to satisfy these requirements. The aim of the present study is to establish such a reliable calibration methodology for color PTV that solves these issues. To do this, we adopt three different illumination measurements: full-color, sheet-color, and dark illumination. The conceptual image of the proposed calibration method is shown in Fig. 2d for comparison with the others. The use of an LCD projector allows such flexible illumination (within the resolution of the LCD projector) without changing the optical arrangement. We then propose a color-to-depth calibration method for particle-seeded fluids, supported by a series of image processing steps and an artificial neural network (ANN) that solves the multi-regression problem in the calibration. The method was tested in a highly 3D rotating flow generated by an impeller and was validated through comparison with conventional PIV to show reasonable accuracy of the proposed method.

2 Methodology

For the calibration and application of color PTV, all measurements need to be performed using an identical experimental setup. Only the illumination light is changed for the calibration step, as detailed in Sect. 2.2. Thus, the measurement target is used in the first step of the measurements (Sect. 2.1). The methodology comprises four parts: three different illumination measurements (Sect. 2.2), a series of image processing operations (Sect. 2.3), color-to-depth calibration supported by ANN (Sect. 2.4), and particle tracking in the 3D real space (Sect. 2.5). A simplified flowchart of the proposed method is shown in Fig. 3 to facilitate understanding of the subsequent discussion. The section numbers are given above the corresponding processes. A complete flowchart showing the details of each process is provided in the supplementary material.

2.1 Experimental design

We employed a rotating flow in a cylindrical fluid layer driven by an impeller as the measurement target, as schematically illustrated in Fig. 4. Water was used as the test fluid, and a rectangular vessel was filled to a height of \(150~\mathrm {mm}\). An annulus with an inner diameter of \(200~\mathrm {mm}\) was inserted into the rectangular vessel to form a cylindrical fluid layer. Both the rectangular vessel and annulus were filled with water to reduce unwanted optical refraction. Tracer particles (HP20, mean diameter \(0.5~\mathrm {mm}\), mean density \(1.01~\mathrm {g/cm^3}\), Mitsubishi Chemical Co.) were seeded in the fluid only inside the annulus. The fluid in the annulus was driven by a propeller-type impeller with a diameter of \(60~\mathrm {mm}\) comprising four small wings with an attack angle of \(30^{\circ }\), which rotated constantly at \(\omega = 30~\mathrm {rpm}\) in a clockwise direction. The rotational axis of the impeller was located at the center of the annulus (\(x=y=0\)) and the bottom of the impeller was placed at half the height of the fluid, \(z=75~\mathrm {mm}\).

A rainbow gradient pattern was projected from an LCD projector (\(3000~\mathrm {lm}\), \(1280 \times 800~\mathrm {pixels}\), EB-W420, Seiko Epson Co.) and collimated by a linear Fresnel lens (focal length \(150~\mathrm {mm}\)) set in front of the projector. The collimated volumetric beam had a thickness of \(\updelta y = 60~\mathrm {mm}\) in the depth (y) direction. A color CMOS camera (DFK33UP5000, The Imaging Source Co.) equipped with a lens (focal length \(35~\mathrm {mm}\), aperture F#8, estimated depth of field \(\sim 80~\mathrm {mm}\), Nikkor, Nikon) was set perpendicular to the collimated beam and acquired particle images in the x-z plane. The original images had Bayer patterns and were processed by an advanced linear interpolation method for demosaicing (Malvar et al. 2004). The illuminated volume was \(200 \times 60 \times 150~\mathrm {mm^3}\) in the x-y-z directions. The measurement volume in the annulus was slightly smaller than this and is shown as the region enclosed by red dashed lines in Fig. 4. The arrangement illustrated in Fig. 4 shows a scheme in which the collimated beam illuminates the fluid layer in the range of \(y=-\,50~\mathrm {mm}\) to \(10~\mathrm {mm}\).

Fig. 5 Details of the projected color pattern for full-color illumination measurements. a Original color pattern projected by the LCD projector, b brightness values of each RGB channel in 8-bit format, and c 3D-Cartesian plots of the illumination colors. The maximum height in pixels of the LCD projector \(\updelta y = 800~\mathrm {pixels}\) corresponds to the thickness of the collimated color beam \(\updelta y = 60~\mathrm {mm}\). The solid line in c represents the used colors; the colored circles are plotted every \(4~\mathrm {mm}\) for reference

Various illumination patterns have been proposed for single-camera 3D PTV based on an LCD projector. For example, Watamura et al. (2013) used an illumination that cycles through multiple hues over time and Aguirre-Pablo et al. (2019) demonstrated the efficiency of a brightness-changing animation illumination. The employment of such animation effects in the illumination patterns may increase the flexibility of the post-processing, improving the accuracy of depth coordinate estimation. In contrast, the quality of an animation projected by an LCD projector may strongly depend on its specifications, such as refresh rate or time required for color stabilization (transition time), and these mechanical restrictions limit the measurement targets to slowly moving fluid flows, as discussed in Aguirre-Pablo et al. (2019). Thus, we attempted to use a fixed rainbow illumination during measurements so that the generalization of the color PTV method does not depend on the LCD projector. This approach also reduces the complexity arising in the post-processing steps. The rainbow gradient pattern used as an input to the LCD projector in the present study is presented in Fig. 5. The color pattern shown in Fig. 5a is projected by the LCD projector to change color in the depth direction, and the corresponding brightness values of three color channels, R, G, and B, each in 8-bit format, are shown in Fig. 5b. The maximum resolution of the projector, \(800~\mathrm {pixels}\), corresponds to the thickness of the collimated beam \(\updelta y = 60~\mathrm {mm}\). The brightness values in the RGB domain are shown in Fig. 5c. The solid lines shown in Fig. 5a, b represent the used color values. It is clear that the employed rainbow gradient can be well separated in the 3D-RGB domain, and the depth coordinates of the seeded particles should be determined using all three brightness values. Details of the color-to-depth calibration method are addressed in Sect. 2.4.

2.2 Measurement procedure

The measurement procedure comprises measurements under three different illuminations: full-color, sheet-color, and dark illumination. These measurements are used for the subsequent image analyses. During these three measurements, only the projection images are changed while the optical arrangements of the experimental equipment are fixed, and all the measurements can be conducted in the particle-seeded fluid.

2.2.1 Full-color illumination measurements

The full-color illumination pattern, as illustrated in Fig. 4, is the main type of measurement for investigating flow fields. Because the LCD projector illuminates particles seeded in water with a full-color pattern having a thickness of \(\updelta y= 60~\mathrm {mm}\), the depth coordinates of the particles y within the thickness are represented by the colors scattered by the particles in the acquired color images. The total depth of the illuminated volume varied less than \(1~\mathrm {mm}\) (\(< 2\%\)) across the field of view. In the present study, rotating flows provoked by the impeller set in the middle of the cylindrical tank were recorded as consecutive color images with 90 fps for 2 minutes (60 rotations of the impeller).

2.2.2 Sheet-color illumination measurements

To obtain the relationships between the particle color and depth information for color-to-depth calibration, it is necessary to predetermine these relationships under known conditions. To do this, we illuminate particle-seeded fluid by sheet-color patterns, as illustrated in Fig. 6. The digitally segmented sheet-color patterns illuminate particles at known depth positions without changing the optical arrangements. This reduces any mechanical error that could arise in the traversal of the calibration plates. In the present study, we segmented the full-color projection pattern used in the actual measurements into 40 sheet-color patterns by placing black digital slits in front of the full-color pattern. Each sheet possessed a thickness of \(20~\mathrm {pixels}\) in the projected pattern resulting in a 1.5-mm-thick sheet beam in the real dimensions, as the thickness of the full-color pattern was \(\updelta y = 800~\mathrm {pixels} = 60~\mathrm {mm}\). Each sheet yielded a slight color change within the thin thickness. The regions outside the sheet in the sheet-color patterns were filled with black (\(R=G=B=0\)), so as not to illuminate particles outside the sheet region. In principle, particles illuminated by a certain sheet-color pattern exist within a thin sheet region at a known depth coordinate. For all the sheet-color patterns, the sheet thickness varied much less than 5% across the field of view.
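The digital slicing described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function names are hypothetical, and it assumes that the depth direction runs along the 800-pixel rows of the projected pattern and that row 0 corresponds to \(y=-\,50~\mathrm {mm}\), neither of which is stated explicitly in the text.

```python
import numpy as np

PATTERN_HEIGHT_PX = 800    # projector rows spanning the beam thickness
BEAM_THICKNESS_MM = 60.0   # thickness of the collimated beam in y
SHEET_THICKNESS_PX = 20    # thickness of one digital sheet
MM_PER_PIXEL = BEAM_THICKNESS_MM / PATTERN_HEIGHT_PX  # 0.075 mm per row

def make_sheet_patterns(full_pattern, sheet_px=SHEET_THICKNESS_PX):
    """Split a full-color pattern (H x W x 3) into sheet-color patterns.

    Each returned pattern keeps one band of `sheet_px` rows of the original
    colors and is black (R = G = B = 0) everywhere else.
    """
    patterns = []
    for start in range(0, full_pattern.shape[0], sheet_px):
        sheet = np.zeros_like(full_pattern)
        sheet[start:start + sheet_px] = full_pattern[start:start + sheet_px]
        patterns.append(sheet)
    return patterns

def sheet_center_mm(index, sheet_px=SHEET_THICKNESS_PX, y_min_mm=-50.0):
    """Depth of the sheet center, assuming row 0 maps to y_min_mm (assumption)."""
    return y_min_mm + (index + 0.5) * sheet_px * MM_PER_PIXEL
```

With an 800-row pattern and 20-row sheets this yields 40 patterns, each 1.5 mm thick in real dimensions, matching the numbers quoted in the text.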

We utilized large particles with a mean diameter of \(0.5~\mathrm {mm}\) for better color scattering. Suppose that the particles scatter colors when a part of the particle enters the sheet illumination. Then, the depth positions of particles are detected with an accuracy of \(\pm \, (0.75 + 0.25)~\mathrm {mm} = \pm \, 1.0~\mathrm {mm}\), which is the sum of half the sheet thickness and the particle radius. By recording particle images illuminated by the 40 sheet-color patterns, particle colors can be identified at 40 different depth levels with at least this accuracy of \(\pm \, 1.0~\mathrm {mm}\). In the color-to-depth calibration step detailed in Sect. 2.4, this original accuracy given by the system is the target value for the optimization. For each illumination, we recorded more than 1,000 images to obtain a sufficient number of colored particles present in the thin sheet regions. Note that these sheet-color illumination measurements using 40 patterns can be fully automated by projecting a slowly changing animation of the sheet-color patterns.

Thinner sheet-color patterns can obtain more accurate relationships between the colors and the known depth coordinates. In principle, the thickness of a sheet-color pattern can be as thin as \(1~\mathrm {pixel}\), corresponding to \(0.075~\mathrm {mm}\) in the present condition, if the brightness of the colored particles can be distinguished from those of the non-illuminated particles. At this time, the diameter of the seeded particles also becomes important when capturing scattered color information from the seeded particles, and this needs to be considered when selecting tracer particles.
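The trade-off between sheet thickness and particle size can be made concrete with a small calculation. The sketch below simply extends the accuracy estimate of this section (half the sheet thickness plus the particle radius) to other sheet thicknesses; the function name is hypothetical, and applying the same estimate to very thin sheets is an assumption rather than a result reported here.

```python
MM_PER_PIXEL = 60.0 / 800  # beam thickness / projector rows = 0.075 mm

def depth_uncertainty_mm(sheet_px, particle_diameter_mm):
    """Half the sheet thickness plus the particle radius, in mm."""
    half_sheet = 0.5 * sheet_px * MM_PER_PIXEL
    radius = 0.5 * particle_diameter_mm
    return half_sheet + radius

# Present configuration: 20-pixel sheets, 0.5-mm particles.
present = depth_uncertainty_mm(20, 0.5)   # about 1.0 mm
# Thinnest possible sheet: the particle radius now dominates the estimate.
thinnest = depth_uncertainty_mm(1, 0.5)   # about 0.29 mm
```

Under this estimate, reducing the sheet to a single pixel improves the accuracy only to roughly the particle radius, which illustrates why the particle diameter matters when thinner sheets are considered.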

2.2.3 Dark illumination measurements

Ideally, the full-color and sheet-color illumination measurements only record particles illuminated by the color beams. However, the LCD projector illuminates the space corresponding to the black pixel regions of the sheet-color patterns with weak light intensity. Particles in the black regions are hence recorded on particle images as spots with low brightness and without strong saturation, as illustrated in Fig. 6. Moreover, such dark particles appear on particle images recorded during the full-color illumination measurements, as the projector leaks weak light at the periphery of the illumination volume. It may not be possible to fully exclude such low-brightness particles during the image acquisition steps. For these reasons, we recorded non-illuminated particles by projecting a fully black pattern of the same size as the full-color image. During this dark illumination measurement, we recorded more than 1,000 images, as in the sheet-color illumination measurements, to obtain a sufficient number of non-illuminated particles.

2.3 Image processing for colored-particle detection

Fig. 7 Series of image processing steps for colored-particle detection, performed for a full-color illumination and b sheet-color illumination measurements. Images in the first column represent the projection color patterns (\(1280 \times 800~\mathrm {pixels}\)) used for the two measurements. Images from the second to seventh columns are magnified views of \(100 \times 100~\mathrm {pixel}\) regions of original color images, grayscale images, background-subtracted images, binarized images after background and foreground subtraction, mask images, and masked color images, respectively. Detected particle positions are indicated by circles with central dots in the far-right column, and each circle is colored by the averaged particle color within a region of \(3 \times 3~\mathrm {pixels}\). Particle images in a show the case of \(\sim 0.007~\mathrm {ppp}\)

In the three measurements using different projection patterns described in Sect. 2.2, color images \(\mathbf{I}_\mathrm {c}\) were originally acquired in 24-bit format (8 bits for each R, G, and B channel). To track particle motions in the 3D real space, the seeded particles must be detected along with their color information while excluding non-illuminated particles. We present a series of image processing steps that detect colored particles by combining the particle images obtained in the three measurements with standard image processing techniques. Examples of these procedures are shown in Fig. 7. The same image processing scheme for the full-color (Sect. 2.2.1) and sheet-color (Sect. 2.2.2) illumination measurements enables the detection of colored particles, as shown in Fig. 7a, b, respectively. Color patterns projected for the full-color and sheet-color illumination measurements are shown in the first column of Fig. 7, and these have resolutions of \(1280 \times 800~\mathrm {pixels}\), which corresponds to the resolution of the LCD projector. When these illumination patterns were adopted, original particle images were recorded as color images \(\mathbf{I}_\mathrm {c}\), as shown in the second column of Fig. 7. Here, the particle images are magnified views of small \(100 \times 100~\mathrm {pixel}\) regions so that the particles are easily visible. The details of these procedures for colored-particle detection are presented in the following.

2.3.1 Pre-processing

First, color images \(\mathbf{I}_\mathrm {c}\) are converted to grayscale images \(\mathbf{I}_\mathrm {g}\), as shown in the third column of Fig. 7. Because the original images \(\mathbf{I}_\mathrm {c}\) or \(\mathbf{I}_\mathrm {g}\) contain static artifacts such as light scattered from the tank, background subtraction is performed for grayscale images \(\mathbf{I}_\mathrm {g}\) to identify only the particles. Background images \(\mathbf{I}_\mathrm {bg}\) are generated by temporally averaging the grayscale images in each measurement, which is formulated as \(\mathbf{I}_\mathrm {bg} = \overline{\mathbf{I}_\mathrm {g}}\). A background-subtracted image is then obtained as \(\mathbf{I}_\mathrm {g} - \mathbf{I}_\mathrm {bg}\), as shown in the fourth column of Fig. 7. Only the seeded particles are now visible in the background-subtracted images; however, some particles with small brightness and hue values also exist. These uncolored particles should not be included in the particle detection because they do not provide informative color values and should be excluded from the subsequent analyses. Therefore, it is necessary to predetermine a threshold brightness value for excluding non-illuminated particles.

In the dark illumination measurement (Sect. 2.2.3), non-illuminated particle images have already been obtained. For these images, the background subtraction is performed in the manner described above. To determine thresholds for excluding non-illuminated particles, a pathline image \(\mathbf{I}_\mathrm {pl}\) of the non-illuminated particles is compiled by taking the pixelwise maxima over a stack of background-subtracted images. This operation is formulated as \(\mathbf{I}_\mathrm {pl} = f_\mathrm {max}(\mathbf{I}_\mathrm {g} - \mathbf{I}_\mathrm {bg})\), where \(f_\mathrm {max}\) represents a pixelwise operation that takes the maximum values over the stack of background-subtracted images. Since the pathline image only contains the non-illuminated particles, it is considered to represent the pixelwise brightness values of the non-illuminated particles in the full-color and sheet-color illumination measurements, which are typically much darker than the colored particles. To ensure a spatially homogeneous distribution of the pathline brightness, a Gaussian blur with a kernel size of \(15\times 15~\mathrm {pixels}\) (\(\mathbf{K}_\mathrm {G}\)) is applied. The blurred pathline image of the non-illuminated particles is called a foreground image \(\mathbf{I}_\mathrm {fg} = \mathbf{I}_\mathrm {pl} *\mathbf{K}_\mathrm {G}\) hereafter, where \(*\) represents a convolution operation.
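The background subtraction and the construction of the foreground image can be sketched with NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' code: the function names are hypothetical, the image stack is assumed to be a (T, H, W) grayscale array, and the Gaussian standard deviation (`sigma=2.5`) and the edge-replicating border handling are assumed values, since only the \(15\times 15\) kernel size is given in the text.

```python
import numpy as np

def subtract_background(gray_stack):
    """Return I_g - I_bg for a (T, H, W) stack, with I_bg the temporal mean."""
    gray_stack = np.asarray(gray_stack, dtype=float)
    return gray_stack - gray_stack.mean(axis=0)

def gaussian_blur(image, size=15, sigma=2.5):
    """Separable Gaussian blur with edge padding (sigma is an assumed value)."""
    r = np.arange(size) - size // 2
    kernel = np.exp(-(r ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()  # normalize so that flat regions keep their level
    padded = np.pad(np.asarray(image, dtype=float), size // 2, mode="edge")
    blur_1d = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(blur_1d, 1, padded)   # filter along each row
    return np.apply_along_axis(blur_1d, 0, rows)     # then along each column

def foreground_image(dark_gray_stack, size=15, sigma=2.5):
    """I_fg = f_max(I_g - I_bg) * K_G for the dark illumination images."""
    residual = subtract_background(dark_gray_stack)
    pathline = residual.max(axis=0)  # f_max: pixelwise maximum over the stack
    return gaussian_blur(pathline, size, sigma)
```

Because the pathline image keeps the brightest value each pixel ever reached, the resulting foreground image acts as a spatially varying upper bound on the brightness of non-illuminated particles.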

The common foreground image \(\mathbf{I}_\mathrm {fg}\) is subtracted from the background-subtracted images of the full-color and sheet-color illumination measurements. The image is then binarized by a global threshold value after the foreground subtraction to filter out the non-illuminated particles. This is written as \(\mathbf{I}_\mathrm {bin} = f_\mathrm {bin}(\mathbf{I}_\mathrm {g} - \mathbf{I}_\mathrm {bg} - \mathbf{I}_\mathrm {fg})\), where \(f_\mathrm {bin}\) is the step function for binarization. The binarized images \(\mathbf{I}_\mathrm {bin}\) are shown in the fifth column of Fig. 7. The regions of the colored particles remain after this binarization because they are typically brighter than those of the non-illuminated particles. Tiny defects in \(\mathbf{I}_\mathrm {bin}\) are padded by a closing process (kernel size \(3 \times 3~\mathrm {pixels}\)), and each particle region is dilated by a kernel of \(5 \times 5~\mathrm {pixels}\) to ensure the regions are large enough for the subsequent particle detection step. After these procedures, we obtain mask images \(\mathbf{I}_\mathrm {m}\) as shown in the sixth column of Fig. 7. The mask images are used for masking the original color image \(\mathbf{I}_\mathrm {c}\) to extract only the colored-particles. Finally, we obtain masked color images \(\mathbf{I}_\mathrm {mc} = \mathbf{I}_\mathrm {m} \odot \mathbf{I}_\mathrm {c}\), where \(\odot \) represents the Hadamard product, as shown in the seventh column of Fig. 7.
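The masking chain above (foreground subtraction, binarization, closing, dilation, and the Hadamard product) might look as follows in NumPy. The sketch is illustrative only: the function names and the `threshold` argument are hypothetical, square structuring elements are assumed for the closing and dilation, and the border handling is a choice made here rather than something specified in the text.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _dilate(mask, size):
    """Binary dilation with a size x size square (False outside the image)."""
    padded = np.pad(mask, size // 2, mode="constant", constant_values=False)
    return sliding_window_view(padded, (size, size)).any(axis=(-2, -1))

def _erode(mask, size):
    """Binary erosion with a size x size square (True outside the image)."""
    padded = np.pad(mask, size // 2, mode="constant", constant_values=True)
    return sliding_window_view(padded, (size, size)).all(axis=(-2, -1))

def colored_particle_mask(gray, background, foreground, threshold):
    """Build the mask image I_m from one grayscale frame."""
    binary = (gray - background - foreground) > threshold  # f_bin
    closed = _erode(_dilate(binary, 3), 3)                 # 3 x 3 closing
    return _dilate(closed, 5)                              # 5 x 5 dilation

def apply_mask(mask, color):
    """I_mc = I_m (Hadamard product) I_c, applied to every color channel."""
    return color * mask[..., None]
```

Subtracting the foreground before thresholding lets a single global threshold separate colored particles from non-illuminated ones even where the leaked light is not spatially uniform.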

Comparing the original color image \(\mathbf{I}_\mathrm {c}\) (the second column) and the masked color image \(\mathbf{I}_\mathrm {mc}\) (the seventh column), only colored particles are successfully identified in both the full-color and the sheet-color illumination measurements through identical image processing steps. Particle colors at the same depth positions obtained in the full-color and the sheet-color illumination measurements were regarded as statistically the same because the masking procedure fully filtered out particles with insignificant colors.

2.3.2 Colored-particle detection

Particle detection is performed on the masked color images \(\mathbf{I}_\mathrm {mc}\) through various methods, as \(\mathbf{I}_\mathrm {mc}\) only contains the colored-particle regions. Here, we utilized the particle mask correlation method (Takehara and Etoh 1998) using a Gaussian brightness distribution as a template. This method can detect particles allowing for slight particle overlaps and determines particle positions P(i, j) at sub-pixel level.
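The idea behind template-based detection can be illustrated with a simplified normalized cross-correlation against a Gaussian template. This is not the particle mask correlation method of Takehara and Etoh (1998) as implemented by the authors: the template size, its standard deviation, and the correlation threshold are assumed values, the function names are hypothetical, and the sketch returns integer pixel positions only, without the sub-pixel refinement mentioned above.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gaussian_template(size=7, sigma=1.5):
    """Gaussian brightness distribution used as the particle template."""
    r = np.arange(size) - size // 2
    return np.exp(-(r[:, None] ** 2 + r[None, :] ** 2) / (2.0 * sigma ** 2))

def mask_correlation(image, template):
    """Zero-mean normalized cross-correlation map, padded to the image size."""
    th, tw = template.shape
    windows = sliding_window_view(np.asarray(image, dtype=float), (th, tw))
    t = template - template.mean()
    w = windows - windows.mean(axis=(-2, -1), keepdims=True)
    num = (w * t).sum(axis=(-2, -1))
    den = np.sqrt((w ** 2).sum(axis=(-2, -1)) * (t ** 2).sum())
    corr = num / np.maximum(den, 1e-12)  # flat windows give zero correlation
    return np.pad(corr, ((th // 2, th // 2), (tw // 2, tw // 2)))

def detect_particles(image, template, threshold=0.7):
    """Return (row, column) positions of local correlation maxima."""
    corr = mask_correlation(image, template)
    padded = np.pad(corr, 1, mode="constant", constant_values=-np.inf)
    local_max = sliding_window_view(padded, (3, 3)).max(axis=(-2, -1))
    peaks = (corr >= local_max) & (corr > threshold)
    return [(int(r), int(c)) for r, c in np.argwhere(peaks)]
```

Because the correlation is normalized, the peak height depends on the shape of the brightness distribution rather than on its absolute brightness, which is what allows dim and bright particles to be detected with the same threshold.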

Color extraction from the detected particles may be performed by a variety of methods. Watamura et al. (2013) proposed seven algorithms for determining the hue values of colored particle images, such as the average hue or saturation-weighted hue of a particle region. Park et al. (2021) attempted to identify representative particle colors from intentionally defocused images to minimize color deviations within the particle regions. The best choice may change with each experimental condition, and it is necessary to select the optimal one through case studies. However, a single representative color value may not fully represent the color information originally recorded in 24-bit format. In the present study, we do not reduce the color information to hue values but instead use all the RGB values at the central pixel (the closest pixel to the sub-pixel center) and its surrounding eight pixels. Thus, we use the RGB values in a region of \(3 \times 3~\mathrm {pixels}\), resulting in 27 brightness values for a single particle, to determine its depth coordinate. For convenience, this \(3 \times 3 \times 3\) matrix is represented as a color matrix \(\mathbf{C}\) hereafter. This systematic color identification is expected to make the proposed method more robust, because it removes the empirical decisions otherwise required to find an optimal color identification scheme unique to the measurement environment. With this, the particle position in the masked color image \(\mathbf{I}_\mathrm {mc}\) is represented as \(P(i,j,\mathbf{C})\), where \(\mathbf{C}\) should correspond to depth coordinate y.
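Extracting the color matrix \(\mathbf{C}\) amounts to slicing a \(3 \times 3\) neighborhood out of the masked color image. The sketch below is illustrative: the function names are hypothetical, it assumes that i indexes image columns and j indexes image rows, and it passes the sub-pixel coordinates themselves to the feature vector, which the text does not specify.

```python
import numpy as np

def color_matrix(masked_color, i_sub, j_sub):
    """Return the 3 x 3 x 3 RGB block around the pixel nearest to (i, j)."""
    i0, j0 = int(round(i_sub)), int(round(j_sub))
    height, width = masked_color.shape[:2]
    if not (1 <= i0 < width - 1 and 1 <= j0 < height - 1):
        raise ValueError("3 x 3 neighborhood falls outside the image")
    return masked_color[j0 - 1:j0 + 2, i0 - 1:i0 + 2, :3]

def particle_features(masked_color, i_sub, j_sub):
    """Flatten (i, j, C) into one vector: 2 coordinates + 27 brightness values."""
    c = color_matrix(masked_color, i_sub, j_sub)
    return np.concatenate(([i_sub, j_sub], c.astype(float).ravel()))
```

Keeping all 27 values defers the choice of a representative color to the regression step instead of fixing it by hand.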

In the far right column of Fig. 7, the positions of the detected colored particles are plotted by circles with central dots. Each circle is colored by the average color of color matrix \(\mathbf{C}\). The colored particles are successfully detected, even those that slightly overlap, by the particle mask correlation. Each color is thus well extracted for both the full-color and the sheet-color illumination measurements.

2.4 Color-to-depth calibration based on artificial neural networks

Using the particle images obtained in the sheet-color illumination measurements (Sect. 2.2.2) and subsequent image processing presented in Sect. 2.3, particle colors \(\mathbf{C}\) at a certain depth coordinate y are obtained. These known color-to-depth relationships are used to obtain the color-to-depth calibration. As the color matrix of a particle \(\mathbf{C}\) possesses 27 values, this calibration procedure becomes a multi-regression problem, and thus we employ an artificial neural network (ANN) to solve this problem. Similar efforts to solve multi-regression problems have been performed recently in the color-to-temperature calibration of thermochromic liquid crystals (Moller et al. 2020; Anders et al. 2020).

2.4.1 Construction and training of an ANN

First, the known relationships between the particle position \(P(i,j,\mathbf{C})\) and the depth position y are summarized for all images obtained in the 40 sheet-color illumination measurements. For each sheet position, 5000 colored particles are randomly selected, and 200,000 (\(=5000 \times 40\)) items of particle information in total are compiled as training data for the ANN calibration. In addition to the training data, the same number of colored-particle data are stored in the same manner as the test data for later evaluation. Here, we consider both the image coordinates (i, j) and the color matrix at that position, \(\mathbf{C}_{i,j}\), as input values for the ANN calibration. This is because the particle color may depend on the position in the image plane, as the color beam from the LCD projector attenuates in the x-direction and diverges in the z-direction in real coordinates, corresponding to the i- and j-directions of the image coordinates. Further, color divergence (unwanted mixing of colors) leading to positional dependence of colors may occur as the color beam from the LCD projector was not fully focused across the field of view. Thus, we have 29 values \((i, j, \mathbf{C}_{i,j})\) in an input of the ANN to determine a single output y, and this depth coordinate estimation based on the ANN is conceptually formulated as \(y = {\mathcal {F}}(i,j,\mathbf{C}_{i,j})\). We examined various ANN structures, e.g., the number of nodes and number of hidden layers, in preliminary trials. We determined that an ANN structure with two hidden layers, containing 800 and 400 nodes, respectively, was empirically optimal with respect to accuracy for estimating the depth coordinate from the input values. The training process (optimization of the weight matrices) of the constructed ANN is conducted using the adaptive moment estimation (Adam) proposed by Kingma and Ba (2017) with mini-batch training. For the activation function, we employed the rectified linear unit (ReLU).
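The network structure described above (29 inputs, hidden layers of 800 and 400 nodes with ReLU, one output) can be written down as a plain NumPy forward pass. This sketch covers only the mapping \(y = {\mathcal {F}}(i,j,\mathbf{C}_{i,j})\); the Adam optimization with mini-batches is not shown. The function names are hypothetical, and the weight initialization and the linear output layer are assumptions, as the text does not specify them.

```python
import numpy as np

LAYER_SIZES = (29, 800, 400, 1)  # (i, j, C) -> 800 -> 400 -> y

def init_params(rng, sizes=LAYER_SIZES):
    """Create (weight, bias) pairs; He-style initialization is an assumption."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        params.append((w, np.zeros(n_out)))
    return params

def predict_depth(params, features):
    """Forward pass for a batch of feature vectors with shape (N, 29)."""
    h = np.asarray(features, dtype=float)
    for w, b in params[:-1]:
        h = np.maximum(h @ w + b, 0.0)   # hidden layer with ReLU
    w, b = params[-1]
    return (h @ w + b)[..., 0]           # linear output (assumed): depth y

def count_parameters(params):
    """Total number of trainable weights and biases."""
    return sum(w.size + b.size for w, b in params)
```

For this structure the parameter count is 344,801, which is of the same order as the 200,000 training samples and is consistent with the need to monitor the test error during training.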

In Fig. 8, the root-mean-squared errors (RMSE) for the training and test data during the learning process are shown. Here, the RMSE value is defined as

\[ \mathrm{RMSE} = \sqrt{\frac{1}{N} \sum^{N} \left( y_\mathrm {pred} - y_\mathrm {true} \right)^2}, \]

where N, \(y_\mathrm {pred}\), and \(y_\mathrm {true}\) represent the number of data (\(N=200,000\)), the depth coordinate predicted by the ANN, and the true depth coordinate (the known y coordinate in the sheet-color illumination measurements), respectively. To construct an optimal ANN system, the performance should be evaluated on the test data. Otherwise, the ANN fits only the training data, which is the so-called over-learning problem. Whereas the RMSE for the training data keeps decreasing over 1,000 epochs, that for the test data stays almost constant, at slightly below \(1~\mathrm {mm}\). This indicates that the ANN may have over-learned the training data. We therefore chose the weight matrices obtained after 100 epochs as the optimal ones for the constructed ANN system. This selection gives \(RMSE < 1~\mathrm {mm}\), which is sufficient because the inherent accuracy of this experimental configuration is \(\pm \, 1~\mathrm {mm}\) (half of the sheet thickness and particle radius), as mentioned in Sect. 2.2.2.
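As a small illustration, the RMSE used to monitor training can be computed as follows, taking it as the usual root of the mean squared difference between predicted and true depths. The function name and the four sample values are hypothetical.

```python
import math

def rmse(y_pred, y_true):
    """Root-mean-squared error between predicted and true depth coordinates."""
    if len(y_pred) != len(y_true) or not y_pred:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - t) ** 2 for p, t in zip(y_pred, y_true))
    return math.sqrt(squared / len(y_pred))

# Hypothetical depths in mm: three predictions are off by 1 mm, one is exact.
y_true = [-50.0, -30.0, -10.0, 0.0]
y_pred = [-49.0, -31.0, -10.0, 1.0]
value = rmse(y_pred, y_true)  # sqrt(3 / 4)
```

Evaluating this quantity separately on the training and test data after each epoch gives the two curves needed to spot over-learning.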

2.4.2 Evaluation of depth coordinate estimation

Fig. 9 Mean deviations at each depth coordinate. Error bars indicate the standard deviations for each plot. The projected full-color image is shown above for reference. The target accuracy \(\pm \, 1~\mathrm {mm}\) is indicated by the red dashed lines, and the thickness of the sheet-color pattern \(\pm \, 0.75~\mathrm {mm}\) is shown as gray-shaded region

The performance of the ANN-supported calibration was evaluated using the test data, for which the relation between the actual depth coordinates and colors is known. The evaluation thus reflects the actual accuracy in determining the depth coordinates of the particles seeded in the fluid. The RMSE values shown in Fig. 8 represent a global trend for the whole measurement volume \(\updelta y = 60~\mathrm {mm}\). In addition, the depth-dependent deviation between the estimated depth and the true depth at each reference point is required to evaluate the depth coordinate estimation. In Fig. 9, the mean deviation \(\langle y_\mathrm {pred} - y_\mathrm {true} \rangle \) for the test data at each reference point is shown with the corresponding one standard deviation range as an error bar. The projected color is shown above for reference. Overall, the mean deviation stays within \(-\,1.0\) to \(1.0~\mathrm {mm}\), meeting the target accuracy \(\pm \, 1~\mathrm {mm}\) indicated by red dashed lines in Fig. 9. Considering this, the mean deviation for each reference point is low enough to ensure sufficient accuracy in depth coordinate estimation. Although the other regions show good agreement with the true values, with deviations of less than \(0.5~\mathrm {mm}\), there are two error-prone regions in the projected color, at \(y\sim -\,45\) to \(-\,38~\mathrm {mm}\) (orange) and \(y\sim -\,20\) to \(-\,7~\mathrm {mm}\) (cyan). Similarly, earlier works by Watamura et al. (2013) and Park et al. (2021) showed error-prone regions in the hue-to-depth calibration curves. This may occur for various reasons, such as the quality of the color illumination projected by the LCD projector, the light receiving characteristics of the camera, or the light scattering characteristics of the particles. In addition, relatively large deviations in the depth estimation are located in the corner regions of the 3D-RGB domain of illumination colors, as shown in Fig. 5c. The changes in colors of the rainbow gradient in these regions may be smaller than for colors in other regions. It might be better to exclude the error-prone regions from the projected pattern. However, the validated accuracy, within \(\pm \, 1~\mathrm {mm}\), is comparable to the inherent deviation \(\pm \, 1~\mathrm {mm}\) of the present system discussed in Sect. 2.2.2. We conclude that the color-to-depth calibration works well for depth coordinate estimation over the whole illuminated volume.
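The depth-wise evaluation can be sketched as a grouping of the test data by true depth, followed by the mean and standard deviation of \(y_\mathrm {pred} - y_\mathrm {true}\) in each group. The function name and the sample values are hypothetical, and the population standard deviation is an assumption, since the text does not state which estimator was used.

```python
from collections import defaultdict
from statistics import mean, pstdev

def deviation_by_depth(y_pred, y_true):
    """Return {true depth: (mean deviation, standard deviation)} per sheet position."""
    groups = defaultdict(list)
    for p, t in zip(y_pred, y_true):
        groups[t].append(p - t)
    return {t: (mean(d), pstdev(d)) for t, d in sorted(groups.items())}

# Hypothetical test data in mm: two particles at each of two sheet positions.
stats = deviation_by_depth(
    y_pred=[-29.5, -30.5, -9.0, -10.0],
    y_true=[-30.0, -30.0, -10.0, -10.0],
)
```

A sheet position would be flagged as error-prone when its mean deviation or its spread stands out from the neighbouring positions.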

2.5 Particle tracking in 3D real space

Tracking particles in 3D real space (x, y, z), as in the tomographic PIV technique (Elsinga et al. 2006; Schanz et al. 2016), is a straightforward approach to 3D velocity field measurements. However, it is necessary to consider the accuracy of particle coordinate estimation before the 3D tracking. The depth coordinates of the particles determined from the color information have an accuracy of around \(\pm \, 1~\mathrm {mm}\) in the present case. This is much coarser than the sub-pixel accuracy \(O(0.01~\mathrm {mm})\) of the in-plane coordinates. Accordingly, direct particle tracking in 3D real space may not be feasible, as the particle displacements in the y direction cannot be treated like those in the x and z directions. To overcome this problem, a slightly more complicated 3D particle tracking procedure must be followed, and an analytic scheme to reconstruct 3D particle trajectories is proposed in this section.

2.5.1 Two-dimensional particle tracking in the image plane

It is possible to track particle trajectories in an image plane onto which the 3D space is projected by applying the conventional 2D PTV technique to the detected particles. In the present study, we adopted an in-house 2D PTV code that simply links the particle displacements by the nearest-neighbor method (Pereira et al. 2006) and detects outlier displacements by the universal outlier detection (Westerweel and Scarano 2005). The outlier displacements are then re-associated with other close particles, and these procedures are iterated until convergence. It is worth computing the 2D trajectories for the purpose of labeling each particle in the image plane prior to 3D tracking. A 2D trajectory for a single particle is composed of temporally continuous image coordinates (i, j) along with the color matrix \(\mathbf{C}_{i,j}\). Conceptually, the particle motion in the 3D real space is smooth, and the recorded trajectory \((i, j, \mathbf{C}_{i,j})\) also changes smoothly over time. Thus, tracking particles in the 2D image plane enables the temporal smoothness of the particle trajectory to be used to correct unreasonable deviations in the depth coordinate estimation.
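The linking step can be illustrated with a generic greedy nearest-neighbor matcher between two frames. This is only a sketch under stated assumptions: the in-house code is not reproduced here, the function name, the search radius `max_disp`, and the sample coordinates are hypothetical, and the universal outlier detection with iterative re-association is omitted.

```python
import math

def link_nearest(prev_pts, next_pts, max_disp):
    """Greedy nearest-neighbor linking; returns sorted (prev_index, next_index) pairs."""
    candidates = []
    for a, (ia, ja) in enumerate(prev_pts):
        for b, (ib, jb) in enumerate(next_pts):
            d = math.hypot(ib - ia, jb - ja)
            if d <= max_disp:  # only consider displacements inside the search radius
                candidates.append((d, a, b))
    candidates.sort()          # shortest displacements are accepted first
    used_prev, used_next, links = set(), set(), []
    for _, a, b in candidates:
        if a not in used_prev and b not in used_next:
            links.append((a, b))
            used_prev.add(a)
            used_next.add(b)
    return sorted(links)

# Hypothetical particle centroids (i, j) in pixels for two successive frames.
prev_pts = [(10.0, 10.0), (50.0, 20.0), (80.0, 80.0)]
next_pts = [(51.0, 21.0), (11.0, 10.5), (200.0, 200.0)]
links = link_nearest(prev_pts, next_pts, max_disp=5.0)
```

In this example the third particle has no partner within the search radius and stays unlinked, which is the case a subsequent outlier check and re-association would have to handle.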

2.5.2 Correction of the depth coordinates

As noted above, the color-to-depth calibration provides a probable estimation of depth coordinates from the color information of the particles. This initial estimation by the ANN does not ensure temporal smoothness of the trajectories in the depth direction. Thus, the trajectories in the depth direction need to be corrected to ensure temporal smoothness. A similar approach was taken by Aguirre-Pablo et al. (2019). In Fig. 10, examples of the depth coordinates estimated by the ANN-based calibration are plotted as uniquely colored squares for each particle. Only 80 to 100 successive frames of eleven trajectories are shown for visibility. As shown in Fig. 10, the depth coordinates estimated by the ANN fluctuate over time, and these fluctuations increase when the particles enter the error-prone regions (\(y\sim -\,45\) to \(-\,38~\mathrm {mm}\) and \(y\sim -\,20\) to \(-\,7~\mathrm {mm}\)) identified in Fig. 9. The fluctuating particle trajectories are fitted by the robust estimation method proposed by Huber (1964), and the fitted curves are drawn as solid lines. For these fluctuating plots, the robust estimation successfully draws smooth curves while excluding the outlier points that may originate from deviations in the colors of the particles. The fitted curves ensure temporally smooth displacements of the particles in the depth direction, and thus these corrected depths can be used as the measured depth coordinates.
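A robust fit of this kind can be sketched with iteratively reweighted least squares using Huber weights. The model fitted to each trajectory is not specified in the text, so the low-order polynomial in time, the threshold `delta`, the iteration count, and the synthetic trajectory below are all assumptions for illustration.

```python
import numpy as np

def huber_polyfit(t, y, degree=2, delta=1.0, n_iter=20):
    """Polynomial fit of y(t) with Huber weights via iteratively reweighted least squares."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    coeffs = np.polyfit(t, y, degree)              # ordinary least squares as a start
    for _ in range(n_iter):
        r = y - np.polyval(coeffs, t)
        abs_r = np.maximum(np.abs(r), 1e-12)       # avoid division by zero
        # Huber weights: 1 inside the threshold, delta / |r| outside it.
        w = np.where(abs_r <= delta, 1.0, delta / abs_r)
        # np.polyfit applies its weights to the residuals before squaring,
        # so the square root of the IRLS weights is passed.
        coeffs = np.polyfit(t, y, degree, w=np.sqrt(w))
    return coeffs

# Hypothetical trajectory: linear drift in depth (mm) with two outlying estimates.
t = np.arange(20, dtype=float)
y_clean = -30.0 + 0.5 * t
y_noisy = y_clean.copy()
y_noisy[[5, 12]] += 8.0
coeffs = huber_polyfit(t, y_noisy, degree=1, delta=1.0)
y_smooth = np.polyval(coeffs, t)
```

Because the influence of each outlier is capped at the threshold, the fitted curve stays close to the underlying trajectory, whereas an ordinary least-squares fit would be pulled toward the two outlying points.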

2.5.3 Mapping image coordinates to real coordinates

Image coordinates (i, j) cannot be directly converted to real coordinates (x, z) because the magnification differs with respect to the depth coordinate y. Thus, after obtaining the corrected depth coordinate, the in-plane real coordinates (x, z) need to be calculated through mapping functions \(x = {\mathcal {G}}(i,j,y)\) and \(z = {\mathcal {H}}(i,j,y)\). The mapping functions are best calculated during the 3D calibration process employed in stereoscopic PIV measurements, which mechanically traverses a calibration plate with dotted or grid patterns on micro-stages (e.g., Soloff et al. 1997; van Doorne and Westerweel 2007). In the present study, we propose a method that creates the mapping functions from particle images alone, assuming that lens aberrations and other optical refraction are negligible. If a sufficient number of tracer particles are seeded in the fluid, the colored particles should appear over the whole illuminated region during long-term observation. Accordingly, long-term pathline images \(\mathbf{I}_\mathrm {pl}\) compiled using the masked color images of the sheet-color illumination measurements provide particle regions in each thin sheet region. Here, \(\mathbf{I}_\mathrm {pl}\) is newly obtained for all the sheet positions through the same procedure described in Sect. 2.3, resulting in a brighter image compared with the foreground image \(\mathbf{I}_\mathrm {fg}\). Examples of the long-term pathline images are shown in Fig. 11. From the long-term pathline images, the boundaries between the fluid and sidewalls or fluid surface become apparent, as the images possess bright pixels only in the fluid region seeded with the tracer particles. Accordingly, the real coordinates (x, y, z) of the four corners are known, and the corresponding image coordinates (i, j) are also identifiable from the long-term pathline image at each cross section. In the present study, we were able to obtain 160 reference points (four corners of 40 cross sections).

Since the reference points at the four corners of different depth positions are aligned along the circumference of the cylindrical vessel, they move in a somewhat complicated manner within the image plane across the different depths. We thus employed a third-degree polynomial fitting to obtain the mapping function \({\mathcal {G}}\) for the x coordinate, and this is described as

Here, \(a_0\) to \(a_{18}\) are constants obtained by the least squares method. In the same manner, the mapping function \({\mathcal {H}}(i,j,y)\) for the z coordinate can be computed. Aguirre-Pablo et al. (2019) employed second-order regression for their cubic geometry; however, the best fitting function may differ depending on the spatial distribution of the reference points. Using these mapping functions, the final in-plane coordinates x and z are obtained, and the particle trajectories in 3D real space P(x, y, z, t) are determined.
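A least-squares fit of such a mapping function can be sketched as follows. The exact set of polynomial terms is not reproduced here; the sketch assumes a 19-term basis of the kind used in stereoscopic PIV calibration (Soloff et al. 1997), cubic in (i, j) and quadratic in y, which matches the count of coefficients \(a_0\) to \(a_{18}\). The function names, the normalised coordinates, and the synthetic reference points are hypothetical.

```python
import numpy as np

def basis(i, j, y):
    """Assumed 19-term polynomial basis: cubic in (i, j), quadratic in y."""
    i, j, y = (np.asarray(v, dtype=float) for v in (i, j, y))
    return np.stack([
        np.ones_like(i), i, j, y,
        i**2, i*j, j**2, i*y, j*y, y**2,
        i**3, i**2*j, i*j**2, j**3,
        i**2*y, i*j*y, j**2*y, i*y**2, j*y**2,
    ], axis=-1)

def fit_mapping(i, j, y, target):
    """Least-squares coefficients a_0..a_18 for one real coordinate (x or z)."""
    coeffs, *_ = np.linalg.lstsq(basis(i, j, y), target, rcond=None)
    return coeffs

def apply_mapping(coeffs, i, j, y):
    return basis(i, j, y) @ coeffs

# Synthetic stand-in for the 160 reference points, in normalised coordinates.
rng = np.random.default_rng(1)
i_ref, j_ref = rng.random(160), rng.random(160)
y_ref = -rng.random(160)
# Hypothetical ground truth: magnification varying linearly with depth.
x_true = 100.0 * (i_ref - 0.5) * (1.0 + 0.1 * y_ref)

a = fit_mapping(i_ref, j_ref, y_ref, x_true)
x_fit = apply_mapping(a, i_ref, j_ref, y_ref)
```

Normalising the image and depth coordinates before fitting, as done here, keeps the cubic terms on a comparable scale and the least-squares problem well conditioned. The same two functions would be reused with z as the target to obtain \({\mathcal {H}}\).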

Fig. 12 Reconstructed 3D flow field in a rotating flow induced by an impeller: a 3D particle trajectories of 2000 particles and b mean velocity field interpolated on a regular grid during \(10~\mathrm {s}\) (five cycles of the impeller). The trajectories in a are colored uniquely to identify each particle. The velocity vectors in b are colored by the velocity magnitude \(\vert \mathbf{u} \vert \), and only 1% of the interpolated vectors are shown for clearer visibility. The measurement volume of the color PTV is enclosed by pink dashed lines

3 Validation of the methodology in a rotating flow

The methodology established in Sect. 2 was validated in a rotating flow experiment in a cylindrical tank, details of which are given in Sect. 2.1. In the present case, the typical particle image density was \(\sim 0.01~\mathrm {ppp}\), resulting in the simultaneous tracking of approximately 20,000 particles. The reconstructed 3D trajectories of 2000 particles are shown in Fig. 12a, colored uniquely to identify each particle. The measurement volume of the color PTV is indicated by the pink dashed lines. A rotating flow structure within the measurement volume is qualitatively shown by the trajectories.

To validate the performance of the color PTV quantitatively, a temporal mean velocity field in 3D space was computed using the tracking result. For this, we took the average of all the particle trajectories measured during \(30~\mathrm {s}\) (fifteen rotations of the impeller), and velocity vectors on a regular grid with \(2~\mathrm {mm}\) spacing in each direction were interpolated by Shepard’s method (Shepard 1968). For this interpolation, only the velocity vectors within \(\pm \, 0.75~\mathrm {mm}\) of each grid point were used, and the weighted average values were interpolated on each grid point using the squares of the inverse distances between the grid point and the particles as the weights. The interpolated velocity field is shown in Fig. 12b with the velocity vectors colored by the magnitudes \(\vert \mathbf{u} \vert \).
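The interpolation onto one grid point can be sketched as an inverse-distance-squared weighted average over the nearby particle velocities. The function name and the sample data are hypothetical, and the \(0.75~\mathrm {mm}\) limit is interpreted here as a Euclidean search radius, which is an assumption.

```python
import math

def shepard(grid_pt, positions, velocities, radius=0.75, power=2):
    """Inverse-distance weighted mean velocity at grid_pt, or None if no data are nearby."""
    num = [0.0, 0.0, 0.0]
    den = 0.0
    for p, u in zip(positions, velocities):
        d = math.dist(grid_pt, p)
        if d > radius:
            continue              # ignore particles outside the search radius
        if d == 0.0:
            return tuple(u)       # a particle exactly on the grid point dominates
        w = 1.0 / d ** power
        den += w
        for k in range(3):
            num[k] += w * u[k]
    if den == 0.0:
        return None
    return tuple(n / den for n in num)

# Hypothetical particle positions (mm) and velocities around the grid point (0, 0, 0).
positions = [(0.5, 0.0, 0.0), (0.0, 0.25, 0.0), (2.0, 0.0, 0.0)]
velocities = [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (100.0, 0.0, 0.0)]
u_grid = shepard((0.0, 0.0, 0.0), positions, velocities)
```

Here the weights are 4 and 16 for the two particles inside the radius, so the interpolated u component is (4 × 1 + 16 × 3) / 20 = 2.6, and the distant third particle has no effect.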

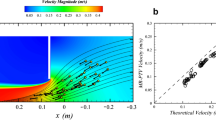

The interpolated velocity field was compared with the flow field measured by conventional PIV. For the PIV measurements, a 1.5-mm-thick red laser sheet (DPRLu-5W, Japan Laser Co.) was used to illuminate arbitrary cross sections. In principle, any cross section can be measured by PIV because the fluid vessel is fully transparent. The direct cross-correlation method was utilized in the PIV measurement. Mean velocity fields at a vertical cross section of \(y = -30~\mathrm {mm}\) measured by conventional PIV and color PTV are shown in Fig. 13a, b. Here, the velocity field measured by conventional PIV (Fig. 13a) was obtained by taking the temporal average for the same duration as that of color PTV, and that by color PTV (Fig. 13b) was extracted from the interpolated velocity fields shown in Fig. 12b. The color contours of Fig. 13a, b represent the magnitude of the in-plane velocity \(\sqrt{u^2 + w^2}\), and the in-plane velocity vectors are shown by white arrows. The mean velocity field measured by color PTV appears more blurred than that measured by conventional PIV. However, the result of color PTV is found to reconstruct the smallest toroidal vortex structures present in the system. This performance may be sufficiently high in terms of practical applications. Velocity profiles at fixed horizontal lines of \(z = 20\), 74, and \(130~\mathrm {mm}\) are shown in Fig. 13c, and the profiles measured by conventional PIV and color PTV are shown by different lines and symbols, respectively. The corresponding horizontal lines are represented by dashed lines in Fig. 13a, b. Overall, the velocity profiles measured by the two methods show the same trend with respect to magnitudes and gradients. The velocity profiles at \(z=74~\mathrm {mm}\), however, show discrepancies, especially in the vicinity of the side wall \(\vert x \vert > 70~\mathrm {mm}\). These discrepancies may originate in the low particle density around the region and in the grid interpolation scheme, which requires spatial averaging adapted to the data obtained by color PTV. Thus, an increase in the particle image density may solve this problem; the density can ideally be increased up to \(\sim 0.02~\mathrm {ppp}\) in the present configuration, as \(7 \times 7~\mathrm {pixels}\) were required to reconstruct the color matrix \(\mathbf{C}\) with \(3 \times 3~\mathrm {pixels}\) through color demosaicing. Apart from these steep changes around the edge regions, color PTV reconstructs the velocity field well.

In the same manner, the mean velocity fields at a horizontal cross section of \(z = 25~\mathrm {mm}\) measured by the two methods are shown in Fig. 14a, b. The color contours of Fig. 14a, b represent the magnitude of the in-plane velocity \(\sqrt{u^2 + v^2}\), and the in-plane velocity vectors are shown by white arrows. Velocity profiles at fixed horizontal lines of \(y = -40\), \(-20\), and \(0~\mathrm {mm}\) are shown in Fig. 14c, and the profiles measured by conventional PIV and color PTV are shown by different lines and symbols, respectively. Similar to the results shown in Fig. 13, the mean velocity field measured by color PTV is blurred, and the steep changes observed by PIV, especially around the center of the cylinder, are not fully reconstructed. However, color PTV represents the circulating fluid motion induced by the impeller well in all three directions, and the order of the velocity magnitude matches that measured by conventional PIV.

Taking all of the above together, we conclude that color PTV can measure the 3D-3C velocity fields through the methodology proposed in Sect. 2 without changing optical arrangements. In the present study, the upper limit of the particle image density was \(0.02~\mathrm {ppp}\). Ideally, this can be increased up to \(0.05~\mathrm {ppp}\) if a 3-CCD camera is employed, because it does not require color demosaicing, which restricts the minimum number of pixels comprising a single particle. This maximum particle image density is equivalent to that of the 2D PTV (Fuchs et al. 2017), while the shake-the-box method yields \(0.125~\mathrm {ppp}\) at the maximum through the multiple camera approach (Schanz et al. 2016). The achievable spatial resolution, however, may depend on the measurement depth since the particles distributed in the 3D domain are projected onto the image plane. In the present setting, the number of detected particles in the full-color illumination measurement indicates a particle number density of \(\sim 0.01~\mathrm {mm}^{-3}\) per frame with the measurement depth of \(\updelta y = 60~\mathrm {mm}\). For calculating the mean velocity field, the accumulated particle number density becomes \(\sim 27~\mathrm {mm}^{-3}\) (90 f.p.s. for 30 s). This was sufficient to resolve the smallest flow structures \(O(10~\mathrm {mm})\) of the toroidal vortices, while the steep velocity gradients inside the vortices shown in Fig. 13a were underestimated.
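As a rough check of two of these figures, and assuming that each particle occupies a non-overlapping \(7 \times 7\) pixel footprint (an interpretation of the demosaicing requirement rather than a statement from the measurements), the density limit and the accumulated number density follow from

\[ \frac{1}{7 \times 7} \approx 0.020~\mathrm {ppp}, \qquad 0.01~\mathrm {mm}^{-3} \times 90~\mathrm {s}^{-1} \times 30~\mathrm {s} = 27~\mathrm {mm}^{-3}. \]

The second expression simply accumulates the per-frame number density over the 2700 frames recorded in \(30~\mathrm {s}\).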

4 Concluding remarks

We have proposed a color-to-depth calibration methodology for 3D color PTV and performed a direct accuracy evaluation. The in situ method does not require calibration plates or cubes for associating color-to-depth relationships and is directly applicable to particle-seeded fluids. The unreasonable assumptions conventionally made to bridge the different optical arrangements between the measurements and the calibration are thus not required. The use of a consumer-grade LCD projector reduces the cost and complexity of the 3D flow field measurements, as the color PTV requires only a single camera and a volumetric rainbow gradient illumination. The proposed method consists of three different illumination measurements and a series of standard image processing steps for enabling conventional 2D PTV. The accuracy of the depth estimation was around \(1~\mathrm {mm}\) out of the full thickness of the volumetric illumination of \(60~\mathrm {mm}\). The performance of the method was evaluated quantitatively by comparing the flow fields measured by the proposed method with those measured by conventional PIV, and good agreement with the PIV with respect to the outline of the rotating flow was confirmed. This result indicates that the method can reconstruct 3D flow fields with the particle-seeded fluid alone, as long as a sufficient number of colored particles are detectable to resolve the target flow fields.

The present study formulated robust procedures from experimental design to 3D-3C flow field measurements. Each process, however, can be further optimized in terms of the selection of the illumination color pattern, color identification scheme, color-to-depth regression scheme, 2D PTV algorithm, and so on. For instance, the selection of the projected illumination patterns and the methods to calibrate the color-to-depth relationships may not be critical to the actual implementation of general color PTV, including this study, as long as a sufficiently high accuracy is ensured to resolve the measurement targets. This is because a variety of procedures have previously been proposed (Matsushita et al. 2004; McGregor et al. 2007; Watamura et al. 2013; Xiong et al. 2018; Aguirre-Pablo et al. 2019; Park et al. 2021), and each has both advantages and disadvantages. Moreover, the best approach may change depending on the conditions in the experimental environment, such as the light source, the camera, and the materials to be illuminated.

To implement the proposed method in 3D flow measurements, one needs to address the following limitations. First, the actual size of the particles should be large enough to scatter informative colors. Also, the size of the tracer particles on the image plane needs to be large enough to extract reliable color information; a diameter of more than 3 pixels is preferable under the present conditions. The measurement volume is therefore determined by the camera resolution and the specification of the mounted lens so that this particle size requirement is satisfied. The illumination intensity of the LCD projector limits the dynamic range of the velocity through the shutter speed of the camera. Under these limitations, the particle number density needs to be as high as possible to reconstruct fine flow structures. As long as these limitations are properly considered, the proposed method can measure various flows as 3D-3C velocity fields in practical use.

References

Aguirre-Pablo A, Aljedaani AB, Xiong J, Idoughi R, Heidrich W, Thoroddsen ST (2019) Single-camera 3D PTV using particle intensities and structured light. Exp Fluids 60(2):25

Anders S, Noto D, Tasaka Y, Eckert S (2020) Simultaneous optical measurement of temperature and velocity fields in solidifying liquids. Exp Fluids 61:1–19

Bendicks C, Tarlet D, Roloff C, Bordás R, Wunderlich B, Michaelis B, Thévenin D et al (2011) Improved 3-D particle tracking velocimetry with colored particles. J Signal Inf Process 2(02):59

Cierpka C, Rossi M, Segura R, Kähler C (2010) On the calibration of astigmatism particle tracking velocimetry for microflows. Meas Sci Technol 22(1):015401

Cierpka C, Segura R, Hain R, Kähler CJ (2010) A simple single camera 3c3d velocity measurement technique without errors due to depth of correlation and spatial averaging for microfluidics. Meas Sci Technol 21(4):045401

Elsinga GE, Scarano F, Wieneke B, van Oudheusden BW (2006) Tomographic particle image velocimetry. Exp Fluids 41(6):933–947

Fuchs T, Hain R, Kähler CJ (2017) Non-iterative double-frame 2d/3d particle tracking velocimetry. Exp Fluids 58(9):1–5

Huber PJ (1964) Robust estimation of a location parameter. Ann Math Stat 35(1):73–101

Kingma DP, Ba J (2017) Adam: a method for stochastic optimization

Malvar HS, He Lw, Cutler R (2004) High-quality linear interpolation for demosaicing of Bayer-patterned color images. In: 2004 IEEE international conference on acoustics, speech, and signal processing. IEEE, vol 3, pp iii–485

Matsushita H, Mochizuki T, Kaji N (2004) Calibration scheme for three-dimensional particle tracking with a prismatic light. Rev Sci Instrum 75(2):541–545

McGregor T, Spence D, Coutts D (2007) Laser-based volumetric colour-coded three-dimensional particle velocimetry. Opt Laser Eng 45(8):882–889

Moller S, Resagk C, Cierpka C (2020) On the application of neural networks for temperature field measurements using thermochromic liquid crystals. Exp Fluids 61:1–21

Park HJ, Yamagishi S, Osuka S, Tasaka Y, Murai Y (2021) Development of multi-cycle rainbow particle tracking velocimetry improved by particle defocusing technique and an example of its application on twisted savonius turbine. Exp Fluids 62(4):1–15

Pereira F, Stüer H, Graff EC, Gharib M (2006) Two-frame 3d particle tracking. Meas Sci Technol 17(7):1680

Pereira F, Lu J, Castano-Graff E, Gharib M (2007) Microscale 3d flow mapping with \(\mu \)ddpiv. Exp Fluids 42(4):589–599

Prenel J, Bailly Y (2006) Recent evolutions of imagery in fluid mechanics: from standard tomographic visualization to 3d volumic velocimetry. Opt Laser Eng 44(3–4):321–334

Ruck B (2011) Colour-coded tomography in fluid mechanics. Opt Laser Technol 43(2):375–380

Salazar JP, De Jong J, Cao L, Woodward SH, Meng H, Collins LR (2008) Experimental and numerical investigation of inertial particle clustering in isotropic turbulence. J Fluid Mech 600:245

Schanz D, Gesemann S, Schröder A (2016) Shake-the-box: Lagrangian particle tracking at high particle image densities. Exp Fluids 57(5):70

Sheng J, Malkiel E, Katz J (2008) Using digital holographic microscopy for simultaneous measurements of 3d near wall velocity and wall shear stress in a turbulent boundary layer. Exp Fluids 45(6):1023–1035

Shepard D (1968) A two-dimensional interpolation function for irregularly-spaced data. In: Proceedings of the 1968 23rd ACM national conference, association for computing machinery, New York, NY, USA, pp 517–524

Shi S, Ding J, Atkinson C, Soria J, New TH (2018) A detailed comparison of single-camera light-field PIV and tomographic PIV. Exp Fluids 59(3):1–13

Soloff SM, Adrian RJ, Liu ZC (1997) Distortion compensation for generalized stereoscopic particle image velocimetry. Meas Sci Technol 8(12):1441

Takehara K, Etoh T (1998) A study on particle identification in PTV particle mask correlation method. J Vis 1(3):313–323

Tien WH, Dabiri D, Hove JR (2014) Color-coded three-dimensional micro particle tracking velocimetry and application to micro backward-facing step flows. Exp Fluids 55(3):1–14

van Doorne CWH, Westerweel J (2007) Measurement of laminar, transitional and turbulent pipe flow using stereoscopic-PIV. Exp Fluids 42(2):259–279

Watamura T, Tasaka Y, Murai Y (2013) LCD-projector-based 3D color PTV. Exp Therm Fluid Sci 47:68–80

Westerweel J, Scarano F (2005) Universal outlier detection for PIV data. Exp Fluids 39(6):1096–1100

Westerweel J, Elsinga GE, Adrian RJ (2013) Particle image velocimetry for complex and turbulent flows. Annu Rev Fluid Mech 45:409–436

Xiong J, Idoughi R, Aguirre-Pablo AA, Aljedaani AB, Dun X, Fu Q, Thoroddsen ST, Heidrich W (2017) Rainbow particle imaging velocimetry for dense 3d fluid velocity imaging. ACM Trans Graph (TOG) 36(4):1–14

Xiong J, Fu Q, Idoughi R, Heidrich W (2018) Reconfigurable rainbow PIV for 3D flow measurement. In: 2018 IEEE international conference on computational photography (ICCP). IEEE, pp 1–9

Acknowledgements

The authors acknowledge financial support by a Grant-in-Aid for JSPS Fellows (Grant No. JP19J20096).

Cite this article

Noto, D., Tasaka, Y. & Murai, Y. In situ color-to-depth calibration: toward practical three-dimensional color particle tracking velocimetry. Exp Fluids 62, 131 (2021). https://doi.org/10.1007/s00348-021-03220-9

DOI: https://doi.org/10.1007/s00348-021-03220-9