Summary

Real world data analysis is often affected by different types of errors, such as measurement errors, computation errors, and imprecision related to the method adopted for estimating the data.

The uncertainty in the data, which is strictly connected to the above errors, may be treated by considering, rather than a single value for each datum, the interval of values in which it may fall: the interval data. Statistical units described by interval data can be regarded as a special case of Symbolic Object (SO). In Symbolic Data Analysis (SDA), these data are represented as boxes. Accordingly, the purpose of the present work is to extend Principal Component Analysis (PCA) so as to obtain a visualisation of such boxes on a lower-dimensional space, pointing out the relationships among the variables, among the units, and between both of them. The aim is to use, when possible, the interval algebra instruments to adapt the mathematical models of classical PCA to the case in which an interval data matrix is given. The proposed method has been tested on a real data set, and the numerical results, which are in agreement with the theory, are reported.



1 Introduction

In most cases, the statistical modelling of a problem must account for "errors" both in the data and in the solution. These errors may be, for example, measurement errors, computation errors, or errors due to uncertainty in estimating parameters. Interval algebra provides a powerful tool for determining the effects of uncertainties or errors and for accounting for them in the final solution.

Interval mathematics deals with numbers which are not single values but sets of numbers ranging between a minimum and a maximum value. These sets contain all possible determinations of the errors. A form of interval algebra appeared in the literature for the first time in (Burkill 1924) and (Young 1931), and then in (Sunaga 1958). Modern developments of such an algebra were started by R.E. Moore (Moore 1966). Main results may be found in (Alefeld & Herzberger 1983), (Kearfott & Kreinovich 1996) and (Neumaier 1990).

The methods which have been proposed for treating errors in the data may also be applied to other kinds of data that in real life are of interval type. For example:

- Financial data (e.g., opening and closing values in a session).
- Customer satisfaction data (expected or perceived characteristics of the quality of a product).
- Tolerance limits in quality control.
- Confidence intervals of estimates from sample surveys.
- Queries on a database.

It is known that statistical methods have been developed primarily for single-valued variables. However, in real life there are many situations in which the adoption of single-valued variables causes a loss of information. This has prompted the development of new methodologies of statistical analysis for treating interval-valued variables, that is, variables that may assume not just a single value on the unit on which they have been measured, but an interval of values. Statistical indexes for interval-valued variables have been defined in (Canal & Pereira 1998) as scalar statistical summaries. These scalar indexes may cause a loss of the information inherent in the interval data. To preserve the information contained in the interval data, many researchers, and in particular Diday and his school of Symbolic Data Analysis (SDA), have developed methodologies for interval data which provide interval index solutions; these sometimes appear oversized, as they include unsuitable elements. An approach typical for handling imprecise data is proposed by (Marino & Palumbo 2003): the centre and the radius of each considered interval, and the relations between these two quantities, are taken into account. An alternative approach for treating interval-valued variables is proposed in (Gioia & Lauro 2005); it uses both interval algebra and optimization theory.

Methods for Factorial Analysis, and in particular for Principal Component Analysis (PCA) on interval data, have been proposed by (Cazes et al. 1997), (Chouakria 1998), (Chouakria et al. 1998), (Gioia F. 2001), (Lauro & Palumbo 2000), (Lauro et al. 2000), (Palumbo & Lauro 2003) and (Rodriguez 2000). Statistical units described by interval data can be regarded as a special case of Symbolic Object (SO). In Symbolic Data Analysis (SDA), these data are represented as boxes. The purpose of the present work is to extend PCA so as to obtain a visualisation of such boxes on a lower-dimensional space, pointing out the relationships among the variables, among the units, and between both of them. Having first analysed the applicability of the interval algebra tools (Alefeld & Herzberger 1983), (Neumaier 1990), (Kearfott & Kreinovich 1996), the approach we propose adapts the mathematical models of classical PCA to the case in which an interval data matrix is given. Unlike other approaches proposed in the literature, which work on scalar recodings of the intervals using classical tools of analysis, we make extensive use of interval algebra tools combined with some optimization techniques. The introduced methodology, named Interval Principal Component Analysis (IPCA), embraces classical PCA as a special case.

Section 2 introduces some definitions, notations and main results of interval algebra. Section 3 presents the IPCA methodology. Sections 4 and 5 present, respectively, the interpretation of the obtained interval solutions and some numerical results on a real data set.

2 Definitions, notations and basic facts

2.1 Interval algebra

An interval [a,b] with a ≤ b is defined as the set of real numbers between a and b:

$$[a,b]=\{x \in \mathbb{R} : a \le x \le b\}$$

Degenerate intervals of the form [a,a], also called thin intervals, are equivalent to real numbers. The symbols ∈, ⊂, ∪, ∩ will be used in the usual set-theoretic sense. For example, by [a,b] ⊂ [c,d] we mean that the interval [a,b] is included as a set in the interval [c,d]. Furthermore, [a,b] = [c,d] ⇔ a = c and b = d.

Let ℑ be the set of intervals; thus, if I ∈ ℑ, then I = [a,b] for some a ≤ b. Let us introduce an arithmetic on the elements of ℑ, which will be an extension of real arithmetic. If ● is one of the symbols +, −, ×, /, we define the arithmetic operations on intervals by:

$$[a,b] \bullet [c,d]=\{x \bullet y : a \le x \le b,\ c \le y \le d\}$$

except that we do not define [a,b]/[c,d] if 0 ∈ [c,d].

The sum, the difference, the product, and the ratio (when defined) of two intervals are, respectively, the sets of the sums, differences, products, and ratios of any two numbers taken from the first and the second interval.

Let us write an equivalent set of definitions in terms of formulas for the endpoints of the resultant intervals.

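Let [a,b], [c,d] ∈ ℑ. Then:

$$[a,b]+[c,d]=[a+c,\ b+d]$$

$$[a,b]-[c,d]=[a-d,\ b-c]$$

$$[a,b]\times[c,d]=[\min(ac,ad,bc,bd),\ \max(ac,ad,bc,bd)]$$

and, if 0 ∉ [c,d], then [a,b]/[c,d] = [a,b] × [1/d, 1/c].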
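The endpoint formulas translate directly into code. The following is a minimal Python sketch; the `Interval` class is a hypothetical helper written for illustration, not part of any library, and it uses ordinary floating point, ignoring the outward rounding that a rigorous interval library would apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi] with lo <= hi (illustrative sketch only)."""
    lo: float
    hi: float

    def __post_init__(self):
        if self.lo > self.hi:
            raise ValueError("lower endpoint must not exceed upper endpoint")

    def __add__(self, other):
        # [a,b] + [c,d] = [a+c, b+d]
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other):
        # [a,b] - [c,d] = [a-d, b-c]
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other):
        # [a,b] x [c,d] = [min(ac,ad,bc,bd), max(ac,ad,bc,bd)]
        p = (self.lo * other.lo, self.lo * other.hi,
             self.hi * other.lo, self.hi * other.hi)
        return Interval(min(p), max(p))

    def __truediv__(self, other):
        # Defined only if 0 is not in [c,d]: [a,b] / [c,d] = [a,b] x [1/d, 1/c]
        if other.lo <= 0 <= other.hi:
            raise ZeroDivisionError("divisor interval contains 0")
        return self * Interval(1 / other.hi, 1 / other.lo)


a = Interval(1, 2)
b = Interval(-3, 4)
print(a + b, a - b, a * b, a / Interval(2, 4))
```

Note that `a / b` raises an error here, because [−3, 4] contains 0.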

It can easily be proved that the addition and the product in (2.1.2) are associative and commutative. The real numbers 0 and 1 (i.e., the thin intervals [0,0] and [1,1]) are the identity elements for addition and for multiplication, respectively. Other properties may be found in (Moore 1966).

Definition 2.1.1

A rational expression F(X1, X2, …, Xn) in the intervals X1, X2, …, Xn is a finite combination, by means of the interval arithmetic operations, of X1, X2, …, Xn and a finite set of constant intervals.

Theorem 2.1.1

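If F(X1, X2, …, Xn) is a rational expression in the intervals X1, X2, …, Xn, then

$$\{F(x_1, x_2, \ldots, x_n) : x_i \in X_i,\ i=1,\ldots,n\} \subseteq F(X_1, X_2, \ldots, X_n)$$

for every set of interval numbers X1, X2, …, Xn for which the interval arithmetic operations in F are defined.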

It follows from Theorem 2.1.1 that, by computing a finite number of interval arithmetic operations, one can bound the range of values of a real rational function when each of its arguments varies over an interval of values.

Proposition 2.1.1

If F(x1, …, xn) is a real rational function in which each variable xi occurs only once and only to the first power, then the corresponding interval expression F(X1, X2, …, Xn) computes the actual range of values of F for xi ∈ Xi: \(F(X_1, X_2, \ldots, X_n)=\{y : y=F(x_1, x_2, \ldots, x_n),\ x_i \in X_i,\ i=1,\ldots,n\}\).
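To see why Proposition 2.1.1 requires each variable to occur only once, the sketch below (plain Python; `i_sub` and `i_mul` are hypothetical helper names) evaluates a few expressions on X = [0,1] and Y = [2,3]. The single-occurrence product x·y is computed exactly, while x − x and x(1 − x), in which x occurs twice, give enclosures that contain the true range, as Theorem 2.1.1 guarantees, but are wider than it.

```python
def i_sub(x, y):
    # [a,b] - [c,d] = [a-d, b-c]
    return (x[0] - y[1], x[1] - y[0])


def i_mul(x, y):
    # [a,b] x [c,d] = [min, max] of the four endpoint products
    p = [x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1]]
    return (min(p), max(p))


X = (0.0, 1.0)
Y = (2.0, 3.0)
ONE = (1.0, 1.0)  # thin interval [1, 1]

# Each variable occurs once: the result is the exact range of x*y.
exact_xy = i_mul(X, Y)

# x occurs twice: the true range of x - x is {0}, but interval arithmetic gives [-1, 1].
dependent_diff = i_sub(X, X)

# x occurs twice: the true range of x*(1 - x) on [0, 1] is [0, 0.25].
dependent_prod = i_mul(X, i_sub(ONE, X))

# Brute-force estimate of the true range of x*(1 - x) on a fine grid.
grid = [k / 1000 for k in range(1001)]
true_prod = (min(x * (1 - x) for x in grid), max(x * (1 - x) for x in grid))
print(exact_xy, dependent_diff, dependent_prod, true_prod)
```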

2.2 Interval matrices

Definition 2.2.1

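An n×n interval matrix is the following set:

$$X^I=[\underline{X},\overline{X}]=\{X \in \mathbb{R}^{n\times n} : \underline{X} \le X \le \overline{X}\} \tag{2.2.1}$$

where \(\underline{X}\) and \(\overline{X}\) are n×n matrices which satisfy:

$$\underline{X} \le \overline{X}$$

The inequalities are understood componentwise.

Introducing the centre matrix and the radius matrix:

$$X^c=\tfrac{1}{2}\left(\underline{X}+\overline{X}\right),\qquad \Delta X=\tfrac{1}{2}\left(\overline{X}-\underline{X}\right)$$

(2.2.1) may be expressed as follows:

$$X^I=[X^c-\Delta X,\ X^c+\Delta X]$$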

Definition 2.2.2

An n×n interval matrix XI is called symmetric if:

where:

From the definition follows the characterisation:

Hence a symmetric interval matrix may contain non-symmetric matrices. Let us denote by Mnp(R) the set of interval matrices of order n × p. In analogy with scalar matrices, an interval matrix XI ∈ Mnp(R) will be represented by its components as follows: XI = (Xij), where each Xij is an interval.

Definition 2.2.3

- Let XI = (Xij), YI = (Yij) ∈ Mnp(R). Then

$$X^{I} \pm Y^{I} :=\left(X_{ij} \pm Y_{ij}\right)$$

defines the sum interval matrix and the difference interval matrix, respectively.

- Let XI = (Xij) ∈ Mnr(R) and YI = (Yij) ∈ Mrp(R). Then

$$X^{I} Y^{I} :=\left(\sum_{v=1}^{r} X_{iv} Y_{vj}\right)$$

defines the product interval matrix. In particular, if XI = (Xij) ∈ Mnr(R) and uI ∈ Mr1(R) is an interval vector with r interval components, then

$$X^{I} \boldsymbol{u}^{I}=\left(\sum_{v=1}^{r} X_{iv} u_{v}\right)$$

- Let XI ∈ Mnp(R) and let K be an interval. Then

$$K X^{I}=X^{I} K :=\left(K X_{ij}\right).$$
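The interval matrix product of Definition 2.2.3 can be sketched with NumPy by representing an interval matrix through its two endpoint matrices. The function name `interval_matmul` and the example values are hypothetical; the sketch uses plain floating point without outward rounding.

```python
import numpy as np


def interval_matmul(A_lo, A_hi, B_lo, B_hi):
    """Interval product A^I B^I following Definition 2.2.3.

    A^I is given by its endpoint matrices A_lo <= A_hi (shape n x r),
    B^I by B_lo <= B_hi (shape r x p). Entry (i, j) of the result is the
    interval sum over v of the interval products A_iv * B_vj.
    """
    # Four endpoint products for every (i, v, j) triple, shape (4, n, r, p).
    prods = np.stack([
        A_lo[:, :, None] * B_lo[None, :, :],
        A_lo[:, :, None] * B_hi[None, :, :],
        A_hi[:, :, None] * B_lo[None, :, :],
        A_hi[:, :, None] * B_hi[None, :, :],
    ])
    # min/max over the four products gives A_iv * B_vj; summing over v gives the entry.
    return prods.min(axis=0).sum(axis=1), prods.max(axis=0).sum(axis=1)


# Hypothetical 1 x 2 interval matrix and 2 x 1 interval vector u^I.
A_lo, A_hi = np.array([[1.0, -1.0]]), np.array([[2.0, 1.0]])
u_lo, u_hi = np.array([[0.0], [2.0]]), np.array([[1.0], [3.0]])
lo, hi = interval_matmul(A_lo, A_hi, u_lo, u_hi)
print(lo, hi)
```

Here [1,2]·[0,1] = [0,2] and [−1,1]·[2,3] = [−3,3], so the single entry is [−3,5]. With thin intervals (lower = upper) the function reduces to the ordinary matrix product.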

2.3 Interval eigenvalues and interval eigenvectors

Given an n×n interval matrix XI, much research has been devoted to characterizing the solutions of the following interval eigenvalue problem:

$$X\boldsymbol{u}=\lambda\boldsymbol{u},\qquad X \in X^I \tag{2.3.1}$$

which has interesting properties (Deif 1991a), (Rohn 1993) and serves a wide range of applications in physics and engineering.

More precisely, the interval eigenvalue problem (2.3.1) is solved by determining the two sets \(\lambda_{\alpha}^{I}\) and \(\boldsymbol{u}_{\alpha}^{I}\) given by:

$$\lambda_{\alpha}^{I}=\{\lambda_{\alpha}(X) : X \in X^I\},\qquad \boldsymbol{u}_{\alpha}^{I}=\{\boldsymbol{u}_{\alpha}(X) : X \in X^I\}$$

where (λα(X),uα(X)) is an eigenpair of X∈XI. The couple \(\left(\lambda_{\alpha}^{I}, \boldsymbol{u}_{\alpha}^{I}\right)\) will be the α-th eigenpair of XI and it represents the set of all α-th eigenvalues and the set of the corresponding eigenvectors of all matrices belonging to the interval matrix XI.
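The sets \(\lambda_{\alpha}^{I}\) can be explored by brute force. The sketch below is not the method of Theorem 2.3.1: it samples random symmetric members X of a symmetric interval matrix, given by its centre Xc and radius ΔX (both assumed symmetric), and records the smallest and largest α-th eigenvalue. Because it visits only finitely many matrices, it yields an inner estimate of each \(\lambda_{\alpha}^{I}\), useful as a sanity check on exact bounds. The function name and the restriction to symmetric members are assumptions made for illustration.

```python
import numpy as np


def sampled_eigen_ranges(Xc, dX, n_samples=2000, seed=0):
    """Inner (sampled) estimate of lambda_alpha^I, alpha = 1..n, over symmetric
    matrices X = Xc + E * dX with E symmetric and |E| <= 1 componentwise."""
    rng = np.random.default_rng(seed)
    n = Xc.shape[0]
    lo = np.full(n, np.inf)
    hi = np.full(n, -np.inf)
    for _ in range(n_samples):
        E = rng.uniform(-1.0, 1.0, size=(n, n))
        E = np.triu(E) + np.triu(E, 1).T     # symmetric, entries in [-1, 1]
        lam = np.linalg.eigvalsh(Xc + E * dX)[::-1]  # decreasing order
        lo = np.minimum(lo, lam)
        hi = np.maximum(hi, lam)
    return lo, hi


# Hypothetical symmetric interval matrix: centre diag(3, 1), radius 0.1 / 0.2.
Xc = np.array([[3.0, 0.0], [0.0, 1.0]])
dX = np.array([[0.1, 0.2], [0.2, 0.1]])
lo, hi = sampled_eigen_ranges(Xc, dX)
print(lo, hi)
```

By Weyl's inequality, every sampled α-th eigenvalue stays within ‖ΔX‖₂ = 0.3 of the corresponding eigenvalue of Xc, which gives a simple outer check on the estimate.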

Definition 2.3.1

For x ∈ Rn the vector z = sgn x is defined componentwise by:

$$z_i=\begin{cases}1 & \text{if } x_i \ge 0,\\ -1 & \text{if } x_i < 0.\end{cases}$$

S = diag(sgn x) will denote the diagonal matrix with sgn x on the principal diagonal.

These definitions are needed to state the following theorem (Deif 1991a), which provides an important instrument for calculating the eigenvalues of an interval matrix.

Let XI be an n×n real interval matrix, Xc and ΔX its centre and radius matrix respectively, and let uα(Xc) α=1,…, n, be the eigenvectors of Xc.

Theorem 2.3.1

If XI is symmetric and if Sα = diag(sgn uα(X)), α = 1, …, n, is constant on XI (and therefore equal to its value calculated at Xc), then the eigenvalue λα of X, X ∈ XI, ranges over the interval:

Theorem 2.3.1 makes it possible to calculate the interval \(\lambda_{\alpha}^{I}\) in which the α-th eigenvalue of the matrix X, X ∈ XI, lies. The theorem gives an interesting interpretation of \(\lambda_{\alpha}^{I}\), which may be regarded as the α-th eigenvalue of the given interval matrix. A further novelty is that, whereas previously the search for the bounds of \(\lambda_{\alpha}^{I}\) was limited to their "estimate", it is now possible to determine the interval \(\lambda_{\alpha}^{I}\) exactly, without resorting to any approximation. The interval eigenvectors may be computed by solving a linear programming problem, as described in (Seif et al. 1992):

Theorem 2.3.2

A necessary and sufficient condition for u α (X) to be an eigenvector of X corresponding to λ α (X) is:

where I is the identity matrix and \(\underline{\lambda}_{\alpha}(X) \leq \lambda_{\alpha}(X) \leq \overline{\lambda}_{\alpha}(X)\).

To obtain bounds for the components of uα(X), we write (2.3.3) as:

where \(\underline{\lambda}_{\alpha}(X) \leq \lambda_{\alpha}(X) \leq \overline{\lambda}_{\alpha}(X)\).

To compute lower and upper bounds for uα(X), we minimize and maximize |uiα| subject to (2.3.3) for i = 1, …, n−1, while keeping |unα| equal to unity. Constrained optimization problems of this type are known as linear parametric programming problems, and their solution is obtained numerically. Bounds for uα(X) are then readily obtained by multiplying those for |uα(X)| by the matrix Sα.
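Theorem 2.3.1 and the eigenvector bounds above rely on the sign pattern Sα being constant over XI. The following sketch checks this hypothesis empirically by sampling symmetric members of XI (centre Xc and radius ΔX assumed symmetric); since eigenvectors are defined only up to sign, each sampled eigenvector is first aligned with its counterpart at Xc. A `True` result does not prove the hypothesis, because only finitely many matrices are visited, but a `False` result shows that it fails. The function names and the sgn(0) = +1 convention are assumptions.

```python
import numpy as np


def sgn(x):
    # Convention assumed here: sgn(0) = +1, so diag(sgn x) is always invertible.
    return np.where(x >= 0, 1.0, -1.0)


def sign_pattern_constant(Xc, dX, n_samples=500, seed=0):
    """Empirically check whether the eigenvector sign patterns S_alpha stay constant
    over sampled symmetric matrices Xc + E * dX, with E symmetric and |E| <= 1."""
    rng = np.random.default_rng(seed)
    n = Xc.shape[0]
    _, Uc = np.linalg.eigh(Xc)
    Uc = Uc[:, ::-1]                      # columns in decreasing eigenvalue order
    ref = sgn(Uc)
    for _ in range(n_samples):
        E = rng.uniform(-1.0, 1.0, size=(n, n))
        E = np.triu(E) + np.triu(E, 1).T  # symmetric, entries in [-1, 1]
        _, U = np.linalg.eigh(Xc + E * dX)
        U = U[:, ::-1]
        # Align each column with its centre counterpart (eigenvectors are defined up to sign).
        U = U * sgn(np.sum(U * Uc, axis=0))
        if not np.array_equal(sgn(U), ref):
            return False
    return True


# Small radius around a centre whose eigenvectors have no near-zero components.
small = sign_pattern_constant(np.array([[3.0, 1.0], [1.0, 2.0]]), np.full((2, 2), 0.01))
# Diagonal centre: the off-diagonal perturbation flips eigenvector component signs.
big = sign_pattern_constant(np.diag([2.0, 1.0]), np.array([[0.0, 0.5], [0.5, 0.0]]))
print(small, big)
```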

2.4 Interval singular values

The interval singular values of an interval matrix XI can be computed directly from the eigenvalue problem for the matrices XᵀX, X ∈ XI (Deif 1991b).

Thus the problem of computing the interval singular values of XI becomes the following: given an interval matrix XI with centre matrix Xc ∈ Rn×p, find a description of the set:

Rather than computing bounds for the whole set Σ, we confine ourselves to the single interval singular values of XI:

for which the following three assumptions must be introduced:

Assumption 1

sign(uα(X)), α=1,…,p, is invariant for each X∈XI, and therefore equals sign (uα(Xc)) evaluated at the centre matrix Xc.

Assumption 2

where ∣δX∣ ≤ ΔX

Assumption 3

sign (Xcuα), α=1,…p, is invariant for each X∈XI and is therefore equal to sign(Xcuα(Xc)), evaluated at the centre matrix Xc.

Conditions for the validity of Assumptions 1,2,3 may be found in (Deif & Rohn 1994). Indicating by:

it can be proved:

Lemma

Values of δX which extremize the singular value σα of the matrix Xc+δX, ∀∣δX∣ ≤ ΔX are given by:

Theorem 2.4.1

Under Assumptions 1, 2 and 3, the squared singular values σ² of Xc + δX, ∀|δX| ≤ ΔX, range over the interval:

where:

Thus, once the singular values of an interval matrix XI have been computed, a description of the set:

is provided and, in particular, a description of the set of the eigenvalues of any matrix of the form XᵀX, with X ∈ XI.

3 Principal component analysis on interval data

Let us consider an interval data matrix of n units on which p interval-valued variables \(X_{1}^{I}, X_{2}^{I}, \ldots, X_{p}^{I}\), with \(X_{j}^{I}=\left(X_{i j}=\left[\underline{x}_{i j}, \overline{x}_{i j}\right]\right)_{i}\),i = 1,…,n, have been observed:

XI may be visualized as a set of n boxes in a p-dimensional space.

The task is to extend Principal Component Analysis to XI so as to obtain a visualisation, on a lower-dimensional space, of the relationships among the variables, among the units, and between both of them.

The aim is to use, when possible, the interval algebra instruments to adapt the mathematical models, on the basis of the classical PCA, to the case in which an interval data matrix is given. Let us suppose that the interval-valued variables have been previously standardized (see Appendix).

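It is known that classical PCA on a real matrix X, in the space spanned by the variables, solves the problem of determining m ≤ p axes uα, α = 1, …, m, such that the sum of the squared projections of the point-units on uα is maximum:

$$\max_{\boldsymbol{u}_{\alpha}}\ \boldsymbol{u}_{\alpha}' X' X \boldsymbol{u}_{\alpha} \tag{3.1}$$

under the constraints:

$$\boldsymbol{u}_{\alpha}'\boldsymbol{u}_{\beta}=\delta_{\alpha\beta},\qquad \alpha, \beta = 1,\ldots,m \tag{3.2}$$

where δαβ is the Kronecker delta. The above optimization problem may be reduced to the eigenvalue problem:

$$X'X\boldsymbol{u}_{\alpha}=\lambda_{\alpha}\boldsymbol{u}_{\alpha} \tag{3.3}$$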

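When the data are of interval type, XI may be substituted into (3.3) and interval algebra may be used for the products; equation (3.3) then becomes an interval eigenvalue problem of the form:

$$(X^I)'X^I\boldsymbol{u}=\lambda\boldsymbol{u} \tag{3.4}$$

which has the following interval solutions:

$$\lambda_{\alpha}^{I}=\{\lambda_{\alpha}(Z) : Z \in (X^I)'X^I\},\qquad \boldsymbol{u}_{\alpha}^{I}=\{\boldsymbol{u}_{\alpha}(Z) : Z \in (X^I)'X^I\} \tag{3.5}$$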

i.e., the set of α-th eigenvalues of any matrix Z contained in the interval product (XI)′ XI, and the set of the corresponding eigenvectors respectively. The intervals in (3.5) may be computed by Theorem 2.3.1.
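For reference, the classical eigenvalue problem (3.3) for a single real matrix X can be sketched with NumPy as below. The standardization used here (zero mean, unit variance, and division by √n, so that X′X is the correlation matrix) is an assumption standing in for the Appendix, which is not reproduced in this section; the data are random and purely illustrative. The check at the end confirms that the sum of squared projections on each axis equals the corresponding eigenvalue.

```python
import numpy as np


def classical_pca(data):
    """Classical PCA on a real n x p data matrix via the eigenvalue problem X'X u = lambda u."""
    n, _ = data.shape
    # Assumed standardization: centre, scale to unit variance, divide by sqrt(n),
    # so that X'X equals the correlation matrix of the data.
    X = (data - data.mean(axis=0)) / (data.std(axis=0) * np.sqrt(n))
    lam, U = np.linalg.eigh(X.T @ X)
    order = np.argsort(lam)[::-1]          # axes in decreasing eigenvalue order
    return X, lam[order], U[:, order]


rng = np.random.default_rng(1)
mixing = np.array([[1.0, 0.5, 0.0], [0.0, 1.0, 0.3], [0.0, 0.0, 1.0]])
data = rng.normal(size=(50, 3)) @ mixing   # hypothetical correlated data
X, lam, U = classical_pca(data)

# Sum of squared projections of the point-units on each axis u_alpha.
proj_sq = np.sum((X @ U) ** 2, axis=0)
print(lam, proj_sq)
```

Since X′X is a correlation matrix here, the eigenvalues sum to p = 3.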

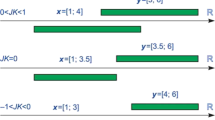

Solving problem (3.4) with interval algebra yields interval solutions but, what is worse, these intervals are oversized with respect to the intervals of solutions we are searching for, as discussed below. For the sake of simplicity, let us consider the case p = 2, i.e., two interval-valued variables:

have been observed on the n considered units.

\(X_{1}^{I}\) and \(X_{2}^{I}\) assume an interval of values on each statistical unit: we do not know the exact values of the components xi1 and xi2, i = 1, …, n, but only the range in which each value falls. In the proposed approach, the task is to contemplate all possible values of the components xi1, xi2, each in its own interval of values \(X_{i1}=[\underline{x}_{i1}, \overline{x}_{i1}]\), \(X_{i2}=[\underline{x}_{i2}, \overline{x}_{i2}]\), i = 1, …, n. Each different choice of values x11, x21, …, xn1 and x12, x22, …, xn2, with \(x_{ij} \in [\underline{x}_{ij}, \overline{x}_{ij}]\), i = 1, …, n, j = 1, 2, uniquely determines a different cloud of points in the plane, on which classical PCA must be computed. Thus, by interval PCA (IPCA) we mean the determination of the set of solutions of classical PCA on every set of point-units obtained by choosing each point-unit within its own rectangle of variation.

Therefore, the intervals of solutions we are looking for are, respectively, the set of the α-th axes, each of which maximizes the sum of squared projections of a set of points in the plane, and the set of the variances of those sets of points. This is equivalent to solving the optimization problem (3.1), and hence the eigenvalue problem (3.3), for each matrix X ∈ XI.

In the light of the above considerations, the drawback of approaching the interval eigenvalue problem (3.4) directly becomes clear by observing that the following inclusion holds:

$$\{X'X : X \in X^I\} \subseteq (X^I)'X^I$$

This means that the interval matrix (XI)′XI also contains matrices which are not of the form X′X. Thus the interval eigenvalues and the interval eigenvectors of (3.4) will be oversized; in particular, they will include the set of all eigenvalues, and the set of the corresponding eigenvectors, of any matrix of the form X′X contained in (XI)′XI.
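The inclusion can be made concrete with a one-column example (hypothetical numbers). The (1,1) entry of (XI)′XI is obtained with the interval products X_i1 · X_i1, which treat the two factors as independent; the true range of Σ x_i1² over X ∈ XI is tighter and, in particular, never negative. The helper names are illustrative.

```python
def i_mul(x, y):
    # [a,b] x [c,d] = [min, max] of the four endpoint products
    p = [x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1]]
    return (min(p), max(p))


def i_sum(intervals):
    # Interval sum: add lower endpoints and upper endpoints separately
    return (sum(iv[0] for iv in intervals), sum(iv[1] for iv in intervals))


def square_range(x):
    # Exact range of t**2 for t in [lo, hi]
    lo, hi = x
    if lo <= 0 <= hi:
        return (0.0, max(lo * lo, hi * hi))
    return (min(lo * lo, hi * hi), max(lo * lo, hi * hi))


column = [(-1.0, 2.0), (1.0, 1.0)]  # hypothetical interval column X_11, X_21

# (1,1) entry of (X^I)'X^I: interval products X_i1 * X_i1, summed over i
interval_entry = i_sum([i_mul(x, x) for x in column])

# Actual range of x_11**2 + x_21**2, i.e. of the (1,1) entry of X'X over X in X^I
actual_entry = i_sum([square_range(x) for x in column])

print(interval_entry, actual_entry)  # (-1.0, 5.0) (1.0, 5.0)
```

The interval product admits a negative "sum of squares", which no matrix of the form X′X can have.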

This drawback may be overcome by solving an interval eigenvalue problem that considers, in place of the product:

$$(X^I)'X^I$$

the following set of matrices:

$$\Theta^I=\{X'X : X \in X^I\}$$

i.e., the set of all matrices given by the product of the transpose of a matrix X ∈ XI with X itself.

For computing the α-th eigenvalue and the corresponding eigenvector of the set ΘI, which will still be denoted by \(\lambda_{\alpha}^{I}\) and \(\boldsymbol{u}_{\alpha}^{I}\), Theorem 2.4.1 may be used.

It is important to remark that Theorem 2.4.1 may be applied only under strong hypotheses on the input matrix (Note 1), as described in §2.4. When these hypotheses are not verified, considering that the variables have been previously standardized, the eigenvalues and eigenvectors of the interval correlation matrix may be computed by Theorem 2.3.1, which requires fewer hypotheses than Theorem 2.4.1. The interval correlation matrix will be denoted by \(\Gamma^{I}=(\operatorname{corr}_{ij}^{I})\), where \(\operatorname{corr}_{ij}^{I}\) is the interval of correlations between \(X_{i}^{I}\) and \(X_{j}^{I}\) (Gioia & Lauro 2005). Notice that while the ij-th component of ΓI is the interval of correlations between \(X_{i}^{I}\) and \(X_{j}^{I}\), the ij-th component of (XI)′XI is an interval which includes that interval of correlations but also contains redundant elements.

Since ΘI ⊂ ΓI, the eigenvalues/eigenvectors of ΓI will also be oversized with respect to those of ΘI.

The α-th interval axis, or interval factor, will be the α-th interval eigenvector associated with the α-th interval eigenvalue in decreasing order (Note 2).

The orthonormality between pairs of interval axes must be interpreted according to:

Thus two interval axes are orthonormal to one another if, for a unit vector taken in the first interval axis, there exists a unit vector in the second one such that their scalar product is zero.

In the classical case, the share of variance explained by the α-th factor is computed by \(\lambda_{\alpha} / \sum_{\beta=1}^{p} \lambda_{\beta}\). In the interval case, the share explained by each interval factor is the interval:

i.e., the set of all ratios of variance explained by each real factor uα belonging to the interval factor\(\boldsymbol{u}_{\alpha}^{I}\). The analytical form of the bounds in (3.7) has been computed by considering the following chain of equalities:

f has been transformed into a real rational function in which each variable occurs only once and to the first power; therefore, according to Proposition 2.1.1, the corresponding interval expression \(f(\lambda_{1}^{I}, \lambda_{2}^{I}, \ldots, \lambda_{p}^{I})\) computes the actual range of values of f for \(\lambda_{\alpha} \in \lambda_{\alpha}^{I}\), ∀α = 1, …, p.
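A minimal sketch of the resulting bounds, assuming (as in the statement above) that each λβ varies independently in its interval and that all eigenvalues are nonnegative with positive denominators. One single-occurrence form is f = 1/(1 + (Σβ≠α λβ)/λα): f increases with λα and decreases with every other λβ, so its range is attained at the endpoint combinations used in the code. The helper name is hypothetical and the inputs are illustrative, not the Oil data.

```python
def explained_variance_interval(eig_intervals, alpha):
    """Range of lambda_alpha / sum_beta lambda_beta over independent eigenvalue intervals.

    eig_intervals: list of (lower, upper) pairs, assumed nonnegative.
    alpha: 0-based index of the factor of interest.
    """
    lo_a, hi_a = eig_intervals[alpha]
    others = [iv for beta, iv in enumerate(eig_intervals) if beta != alpha]
    others_lo = sum(lo for lo, _ in others)
    others_hi = sum(hi for _, hi in others)
    # Smallest share: lambda_alpha at its lower bound, all other eigenvalues at their upper bounds.
    lower = lo_a / (lo_a + others_hi)
    # Largest share: lambda_alpha at its upper bound, all other eigenvalues at their lower bounds.
    upper = hi_a / (hi_a + others_lo)
    return lower, upper


eigs = [(2.0, 3.0), (1.0, 2.0)]  # illustrative interval eigenvalues
print(explained_variance_interval(eigs, 0), explained_variance_interval(eigs, 1))
```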

Analogously to what has been seen in the space Rp, in the space spanned by the units (Rn) the eigenvalues and the eigenvectors of the set:

$$(\Theta')^{I}=\{XX' : X \in X^I\}$$

must be computed; the α-th interval axis will be the α-th interval eigenvector associated with the α-th interval eigenvalue in decreasing order.

Also in this case, Theorem 2.4.1 may be applied to the interval matrix (X′)I if all its hypotheses are satisfied; otherwise the eigenvalues/eigenvectors of the standardized interval matrix (SS′)I:

(see Appendix for details) may be computed.

Since (Θ′)I ⊂ (SS′)I, the eigenvalues/eigenvectors of (SS′)I will be oversized with respect to those of (Θ′)I.

It is known that the matrices X′X and XX′ have the same nonzero eigenvalues and that their corresponding eigenvectors are connected by a particular relationship. Let us denote again by \(\lambda_{1}^{I}, \lambda_{2}^{I}, \ldots, \lambda_{p}^{I}\) the interval eigenvalues of (Θ′)I and by \(\boldsymbol{v}_{1}^{I}, \boldsymbol{v}_{2}^{I}, \ldots, \boldsymbol{v}_{p}^{I}\) the corresponding eigenvectors, and let us see how this relationship also applies to the "interval" case. Consider, for example, the α-th interval eigenvalue \(\lambda_{\alpha}^{I}\), and let \(\boldsymbol{u}_{\alpha}^{I}\) and \(\boldsymbol{v}_{\alpha}^{I}\) be the eigenvectors of ΘI and (Θ′)I, respectively, associated with \(\lambda_{\alpha}^{I}\).

Taking an eigenvector of some \(X^{\prime} X \in \Theta^{I} : \boldsymbol{v}_{\alpha} \in \boldsymbol{v}_{\alpha}^{I}\), then:

where the constant kα is introduced so that the vector Xvα has unit norm.

4 Representation and interpretation

4.1 Units

From classical theory, given an n×p real matrix X we know that the α-th principal component cα is the vector of the coordinates of the n units on the α-th axis. Two different approaches may be used to compute cα:

1) cα may be computed by multiplying the standardized matrix X by the α-th computed axis uα: cα = Xuα.

2) From the relationship (3.8) between the eigenvectors of X′X and XX′, cα may be computed as the product \(\sqrt{\lambda_{\alpha}} \cdot \boldsymbol{v}_{\alpha}\) of the square root of the α-th eigenvalue of XX′ and the corresponding eigenvector.
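The equivalence of the two approaches in the classical case can be checked numerically. This NumPy sketch (random illustrative data, centred but otherwise not standardized) computes c1 = Xu1 and √λ1·v1, where v1 is the leading eigenvector of XX′; because eigenvectors are defined only up to sign, the two vectors are compared after aligning their signs.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 3))
X = X - X.mean(axis=0)                 # hypothetical centred data matrix

lam, U = np.linalg.eigh(X.T @ X)       # axes in R^p
order = np.argsort(lam)[::-1]
lam, U = lam[order], U[:, order]

c1_direct = X @ U[:, 0]                # approach 1: c_1 = X u_1

mu, V = np.linalg.eigh(X @ X.T)        # axes in R^n
v1 = V[:, np.argmax(mu)]
c1_via_v = np.sqrt(lam[0]) * v1        # approach 2: c_1 = sqrt(lambda_1) v_1

# Eigenvectors are only defined up to sign, so align before comparing.
sign = np.sign(c1_direct @ c1_via_v)
print(np.allclose(c1_direct, sign * c1_via_v))
```

The identity holds because XX′(Xu1) = X(X′Xu1) = λ1·Xu1 and ‖Xu1‖² = λ1.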

When an n×p interval-valued matrix XI is given, the interval coordinate of the i-th interval unit on the α-th interval axis is the interval obtained from a linear combination of the original intervals of the i-th unit with p interval weights; the weights are the interval components of the α-th interval eigenvector. In a two-dimensional representation space, a box is a rectangle whose sides are the interval coordinates of the corresponding unit on the pair of computed interval axes. To compute the α-th interval principal component \(\boldsymbol{c}_{\alpha}^{I}=(c_{1\alpha}^{I}, c_{2\alpha}^{I}, \ldots, c_{n\alpha}^{I})\), two different approaches may be used:

1) compute the interval matrix-vector product \(\boldsymbol{c}_{\alpha}^{I}=X^{I} \boldsymbol{u}_{\alpha}^{I}\);

2) compute the product of a constant interval and an interval vector: \(\boldsymbol{c}_{\alpha}^{I}=\sqrt{\lambda_{\alpha}^{I}} \cdot \boldsymbol{v}_{\alpha}^{I}\).

In both cases the interval algebra product is used; thus the i-th component \(c_{i \alpha}^{I}\) of \(\boldsymbol{c}_{\alpha}^{I}\) will include the interval coordinate, as defined above, of the i-th interval unit on the α-th interval axis.
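The inclusion property can be checked by sampling. In this sketch (hypothetical numbers for one interval unit and one interval axis; `interval_dot` is an illustrative helper), the interval coordinate is computed with interval products and sums, and the projections x′u of randomly drawn points x in the unit's box, with weights u drawn from the interval axis, are verified to fall inside it. The sampled weight vectors are not normalized to unit length; the inclusion holds for every choice within the intervals, so this does not affect the check.

```python
import numpy as np


def interval_dot(x_lo, x_hi, u_lo, u_hi):
    """Interval scalar product sum_v X_v * U_v computed with interval arithmetic."""
    prods = np.stack([x_lo * u_lo, x_lo * u_hi, x_hi * u_lo, x_hi * u_hi])
    return prods.min(axis=0).sum(), prods.max(axis=0).sum()


# Hypothetical interval unit (one row of X^I) and interval axis u^I.
x_lo, x_hi = np.array([0.2, -0.5, 1.0]), np.array([0.6, 0.1, 1.4])
u_lo, u_hi = np.array([0.55, -0.1, 0.7]), np.array([0.65, 0.05, 0.8])
c_lo, c_hi = interval_dot(x_lo, x_hi, u_lo, u_hi)

# Random point-units in the box and random weights in the interval axis.
rng = np.random.default_rng(0)
x = rng.uniform(x_lo, x_hi, size=(10_000, 3))
u = rng.uniform(u_lo, u_hi, size=(10_000, 3))
coords = np.sum(x * u, axis=1)
print((c_lo, c_hi), (coords.min(), coords.max()))
```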

We resort to the first approach for computing principal components when the hypotheses of the theorem used to solve the eigenvalue problem (for computing \(\boldsymbol{v}_{\alpha}^{I}\)) are not verified. Classical PCA represents the results by means of graphs, which show the units on projection planes spanned by pairs of factors. The methodology we have introduced (IPCA) makes it possible to visualize on such planes how the coordinates of the units vary when each component of the considered interval-valued variables ranges in its own interval of values or, equivalently, when each point-unit ranges over the box to which it belongs.

Denoting by UI the interval matrix whose α-th column is the interval eigenvector \(\boldsymbol{u}_{\alpha}^{\mathrm{I}}\) (α = 1, …, p), the coordinates of all the interval units on the computed interval axes are given by the interval product XIUI.

4.2 Interval variables

In the classical case, the coordinate of the i-th variable on the α-th axis is the correlation coefficient between the considered variable and the α-th principal component. Thus variables with greater coordinates (in absolute value) are those which best characterize the factor under consideration.

Furthermore, the standardization of each variable makes the variables, represented in the factorial plane, fall inside the correlation circle.

In the interval case, the interval coordinate of the i-th interval-valued variable on the α-th interval axis is the interval correlation coefficient (Gioia & Lauro 2005) between the variable and the α-th interval principal component. In the factorial plane, however, the interval variables are represented not in the circle but in the rectangle of correlations. In fact, when all possible pairs of elements are taken, each in its own interval correlation, pairs whose coordinates are not related to one another may also be represented, i.e., pairs of correlations computed on different realizations of the two single-valued variables whose correlation is being considered.

The interval coordinate of the i-th interval-valued variable on the first two interval axes \(\boldsymbol{u}_{\alpha}^{I}\), \(\boldsymbol{u}_{\beta}^{I}\), namely the interval correlation between the variable and the first and second interval principal components respectively, will be computed according to the procedure in (Gioia & Lauro 2005) and denoted as follows:

Naturally the rectangle of correlations will be restricted, in the representation plane, to its intersection with the circle with centre in the origin and unitary radius.

4.3 Contributions

In the case of single-valued variables, the weight of the i-th unit on the variability of the α-th axis, named absolute contribution, is given by:

where \(c_{i\alpha}^{2}\) is the squared coordinate of the i-th unit and \(\sum_{h=1}^{n} c_{h\alpha}^{2}\) is the variance of the projected units on the α-th axis. In the case of interval-valued variables, (4.3.1) must be considered as a function g of ciα, which may be transformed as follows:

Proposition 2.1.1 applies to the function g; thus the interval:

is the set of all absolute contributions of the i-th unit on the α-th axis obtained as the squared projections \(c_{i \alpha}^{2}\) vary in their intervals of values. Interval (4.3.2) is the interval absolute contribution of the i-th interval unit on the α-th interval axis.
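A minimal sketch of the interval absolute contributions, assuming that g is rewritten, analogously to f in Section 3, as 1/(1 + (Σh≠i c²hα)/c²iα), so that each squared coordinate occurs once. The squared coordinates are computed with the interval square, whose lower bound is 0 when the coordinate interval contains 0, rather than with the product [l,u]·[l,u]. The coordinate intervals are hypothetical, and the helper names are illustrative; at least one squared coordinate is assumed to have a positive upper bound so that no denominator vanishes.

```python
def interval_square(lo, hi):
    # Range of c**2 for c in [lo, hi] (not the product [lo, hi] * [lo, hi]).
    if lo <= 0 <= hi:
        return 0.0, max(lo * lo, hi * hi)
    return min(lo * lo, hi * hi), max(lo * lo, hi * hi)


def interval_absolute_contributions(coords):
    """Interval absolute contributions of each unit on one axis.

    coords: list of (lower, upper) interval coordinates c_i_alpha of the n units.
    """
    sq = [interval_square(lo, hi) for lo, hi in coords]
    total_lo = sum(s[0] for s in sq)
    total_hi = sum(s[1] for s in sq)
    out = []
    for s_lo, s_hi in sq:
        others_hi = total_hi - s_hi   # other squared coordinates at their upper bounds
        others_lo = total_lo - s_lo   # other squared coordinates at their lower bounds
        out.append((s_lo / (s_lo + others_hi), s_hi / (s_hi + others_lo)))
    return out


coords = [(-0.5, 1.0), (0.5, 1.0), (-1.0, -0.5)]  # hypothetical interval coordinates
res = interval_absolute_contributions(coords)
print(res)
```

A unit whose coordinate interval contains 0 receives a contribution interval with lower bound 0.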

The contribution of the j-th variable on the α-th axis may be computed analogously. Interval indexes for the quality of representation on that axis might be calculated by substituting the denominator in (4.3.1) with the sum of squared coordinates of the units or of the variables. However, this procedure would not provide a good measure of the "quality" of the reconstruction of the original data matrix; for this purpose, the singular value decomposition for interval matrices must be introduced.

5 Numerical results

This section shows an application of the proposed methodology to a real data set: the Oil data set (Ichino 1988) (see the table below). The data set describes eight different classes of oils by four quantitative interval-valued variables: "Specific gravity", "Freezing point", "Iodine value" and "Saponification".

The first step of the IPCA consists in calculating the following interval correlation matrix:

The interpretation of the interval correlations must take into account both the location and the width of the intervals. Intervals containing zero are not of interest, because they indicate that "anything may happen". An interval with a smaller radius than another is more interpretable: as the radius of the interval correlations decreases, the stability of the correlations improves and a better interpretation of the results is possible. In the considered example, the interval correlations are well interpretable because none of the intervals contains zero, so each pair of interval-valued variables is either positively or negatively correlated. For example, we observe a strong positive correlation between Iodine value and Specific gravity and a strong negative correlation between Freezing point and Specific gravity. With equal lower bounds, the interval correlation between Iodine value and Freezing point is more stable than that between Iodine value and Saponification.
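The interpretation rules above (does the interval contain zero? how large is its radius?) can be sketched as follows. The paper obtains each interval correlation through optimization (Gioia & Lauro 2005); this sketch instead approximates it from the inside by sampling one value per unit within each interval and computing Pearson correlations, so its bounds can only be narrower than the exact ones. The function names and the data are hypothetical.

```python
import numpy as np


def sampled_correlation_interval(a_lo, a_hi, b_lo, b_hi, n_samples=5000, seed=0):
    """Inner (sampled) estimate of the interval correlation between two interval variables."""
    rng = np.random.default_rng(seed)
    r = np.empty(n_samples)
    for k in range(n_samples):
        a = rng.uniform(a_lo, a_hi)  # one realization per unit, inside its interval
        b = rng.uniform(b_lo, b_hi)
        r[k] = np.corrcoef(a, b)[0, 1]
    return r.min(), r.max()


def describe(lo, hi):
    """Summarize an interval correlation by the two criteria used in the text."""
    return {"contains_zero": bool(lo <= 0.0 <= hi), "radius": float((hi - lo) / 2)}


# Hypothetical interval data for five units on two variables.
a_lo = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
b_lo = np.array([2.0, 4.0, 6.0, 8.0, 10.0])
lo, hi = sampled_correlation_interval(a_lo, a_lo + 0.2, b_lo, b_lo + 0.3)
print(lo, hi, describe(lo, hi))
```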

Eigenvalues and explained variance:

λ1 = [2.45, 3.40], explained variance on the 1st axis: [61%, 86%]

λ2 = [0.68, 1.11], explained variance on the 2nd axis: [15%, 32%]

λ3 = [0.22, 0.33], explained variance on the 3rd axis: [4%, 9%]

λ4 = [0.00, 0.08], explained variance on the 4th axis: [0%, 2%].

The choice of the eigenvalues, and hence of the interval principal components, may be made using the interval eigenvalue-one criterion [1,1]. In the numerical example, only the first principal component is of interest, because the lower bound of the corresponding eigenvalue is greater than 1. The second eigenvalue satisfies the interval eigenvalue-one condition only partially and, moreover, it is not symmetric with respect to 1. Thus, even though the first two eigenvalues reconstruct most of the initial variance, the representation on the second axis is not of great interest, and the second axis is not well interpretable.
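The interval eigenvalue-one criterion can be sketched as a classification of each interval eigenvalue against the thin interval [1,1]. The labels and the "share above 1" measure, used here to quantify the asymmetry mentioned for λ2, are illustrative choices rather than part of the method; the inputs are the eigenvalue intervals reported above.

```python
def eigenvalue_one_criterion(eig_intervals):
    """Classify interval eigenvalues against the thin interval [1, 1]."""
    result = []
    for lo, hi in eig_intervals:
        if lo > 1:
            label = "retain"       # whole interval above 1
        elif hi < 1:
            label = "discard"      # whole interval below 1
        else:
            label = "partial"      # interval contains 1
        # Fraction of the interval lying above 1 (0 if entirely below, 1 if entirely above).
        if hi == lo:
            share_above = 1.0 if lo > 1 else 0.0
        else:
            share_above = min(max((hi - 1) / (hi - lo), 0.0), 1.0)
        result.append((label, share_above))
    return result


eigs = [(2.45, 3.40), (0.68, 1.11), (0.22, 0.33), (0.00, 0.08)]
for (lo, hi), (label, share) in zip(eigs, eigenvalue_one_criterion(eigs)):
    print(f"[{lo:.2f}, {hi:.2f}] -> {label}, share above 1 = {share:.2f}")
```

For λ2 only about a quarter of the interval lies above 1, which reflects its asymmetry with respect to 1.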

Interval variables representation:

The principal components representation is made analysing the correlations among the interval-valued variables and the axes, as illustrated below:

The first axis is well explained by the opposition of the variable Freezing point, on the positive side, to the variables Specific gravity and Iodine value, on the negative side. The second axis is less interpretable because all the correlations range from −0.99 to 0.99 (Note 3).

The graphical results achieved by IPCA on the input data table are shown below. Figure 1 presents the representation of the units; Figure 2 represents only two variables, Specific gravity and Freezing point:

The position of the objects on the first axis (Fig. 1) is strictly connected to the "influence" that the considered variables have on that axis. It can be noticed that Beef and Hog are strongly influenced by Saponification and Freezing point; on the contrary, Linseed and Perilla are strongly influenced by Specific gravity and Iodine value. Camellia and Olive are positioned in the central zone, so they are not particularly characterized by the interval-valued variables.

It is important to remark that the different oils are characterized not only by the positions of the boxes but also by their size and shape. A larger extent of a box along the first axis indicates a greater variability of the characteristics of the oil represented by that axis. The shape and position of a box can also give information on the variability of the characteristics of the oil with respect to the first and second axes.

Computational cost: each optimization problem has the cost of a constrained nonlinear optimization (nonlinear programming) problem. Computing the correlation matrix requires solving p×p such optimization problems. Computing the j-th eigenvector has the cost of a linear parametric programming problem.

Notes

1 The method works with intervals that are small, in the sense of the ratio between the radius and the centre coordinate of each interval. Empirically, it has been observed that this ratio must be approximately 2–3%.

2 Since the α-th eigenvalue of Θ is computed by perturbing the α-th eigenvalue of \((X^{c})'X^{c}\), the interval eigenvalues are ordered according to the natural ordering of the corresponding scalar eigenvalues of \((X^{c})'X^{c}\).

3 The absolute contributions on the first axes range from the interval [0, 0.91] for Linseed to the interval [0, 0.16] for Sesame; this reflects the “size” of the individuals on the first axes.
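The condition in Note 1 on the ratio between radius and centre can be checked directly on the data before running the analysis. This is a minimal sketch, assuming intervals given as (lower, upper) pairs. It treats 3%, the upper end of the empirical 2–3% range, as the cut-off, which is an assumption about how the note should be applied. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical helper names; the 3% cut-off is an assumption.
Interval = Tuple[float, float]


def relative_radius(iv: Interval) -> float:
    """Radius divided by the absolute value of the centre of [lo, hi]."""
    lo, hi = iv
    if lo > hi:
        raise ValueError(f"invalid interval: {iv}")
    radius = (hi - lo) / 2.0
    centre = (lo + hi) / 2.0
    if radius == 0.0:
        return 0.0             # degenerate interval: a single value
    if centre == 0.0:
        return float("inf")    # ratio undefined for an interval centred at 0
    return radius / abs(centre)


def too_wide(intervals: Sequence[Interval], max_ratio: float = 0.03) -> List[int]:
    """Indices of the intervals whose relative radius exceeds max_ratio."""
    return [k for k, iv in enumerate(intervals) if relative_radius(iv) > max_ratio]
```

For instance, [98, 102] has relative radius 2/100 = 2% and passes, while [90, 110] has 10% and would be flagged.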

References

Alefeld, G. & Herzberger, J. (1983), ‘Introduction to Interval Computations’, Academic Press, New York.

Billard, L. & Diday, E. (2002), ‘Symbolic Regression Analysis’, in: Data Analysis, Classification and Clustering Methods (eds. K. Jajuga et al.), Proceedings IFCS, Springer-Verlag, Heidelberg.

Billard, L. & Diday, E. (2000), ‘Regression Analysis for Interval-Valued Data’, in: Data Analysis, Classification and Related Methods (eds. H.-H. Bock and E. Diday), Springer, 103–124.

Burkill, J. C. (1924), ‘Functions of Intervals’, Proceedings of the London Mathematical Society, 22, 375–446.

Canal, L. & Pereira, M. (1998), ‘Towards statistical indices for numeroid data’, in: Proceedings of the NTTS’98 Seminar, Sorrento Italy.

Cazes, P., Chouakria, A., Diday, E. & Schektman, Y. (1997), ‘Extension de l’analyse en composantes principales à des données de type intervalle’, Revue de Statistique Appliquée, XLV, 3, 5–24.

Chouakria, A. (1998), ‘Extension des méthodes d’analyse factorielle à des données de type intervalle’, Ph.D. thesis, Université Paris IX Dauphine.

Chouakria, A., Diday, E. & Cazes, P. (1998), ‘An improved factorial representation of symbolic objects’, in: KESDA’98 April, Luxembourg.

Deif, A. S. (1991a), ‘The Interval Eigenvalue Problem’, ZAMM 71 (1), 61–64, Akademie-Verlag, Berlin.

Deif, A. S. (1991b), ‘Singular Values of an Interval Matrix’, Linear Algebra and its Applications 151, 125–133.

Deif, A. S. & Rohn, J. (1994), ‘On the Invariance of the Sign Pattern of Matrix Eigenvectors Under Perturbation’, Linear Algebra and its Applications 196, 63–70.

Gioia, F. (2001), ‘Statistical Methods for Interval Variables’, Ph.D. thesis, Dip. di Matematica e Statistica-Università di Napoli “Federico II”, in Italian.

Gioia, F. & Lauro, C. (2005), ‘Basic Statistical Methods for Interval Data’, Statistica Applicata, 17 (1). In press.

Kearfott, R. B. & Kreinovich, V. (Eds.) (1996), ‘Applications of Interval Computations’, Kluwer Academic Publishers.

Lauro, C. N. & Palumbo, F. (2000), ‘Principal component analysis of interval data: A symbolic data analysis approach’, Computational Statistics, 15 (1), 73–87.

Lauro, C. N., Verde, R. & Palumbo, F. (2000), ‘Factorial methods with cohesion constraints on symbolic objects’, in: IFCS’00.

Marino, M. & Palumbo, F. (2003), ‘Interval arithmetic for the evaluation of imprecise data effects in least squares linear regression’, Statistica Applicata, 3.

Moore, R. E. (1966), ‘Interval Analysis’, Prentice Hall, Englewood Cliffs, NJ.

Neumaier, A. (1990), ‘Interval methods for systems of equations’, Cambridge University Press, Cambridge.

Palumbo, F. & Lauro, C.N. (2003), ‘A PCA for interval valued data based on midpoints and radii’, in: New developments in Psychometrics, Yanai H. et al. eds., Psychometric Society, Springer-Verlag, Tokyo.

Rodriguez, O. (2000), ‘Classification et Modèles Linéaires en Analyse des Données Symboliques’, Doctoral Thesis, Université Paris IX Dauphine.

Rohn, J. (1993), ‘Interval Matrices: Singularity and Real Eigenvalues’, SIAM J. Matrix Anal. Appl., 14, 82–91.

Seif, N. P., Hashem, S. & Deif, A. S. (1992), ‘Bounding the Eigenvectors for Symmetric Interval Matrices’, ZAMM 72, 233–236.

Sunaga, T. (1958), ‘Theory of an Interval Algebra and its Application to Numerical Analysis’, Gakujutsu Bunken Fukyu-kai, Tokyo.

Young, R. C. (1931), ‘The algebra of many-valued quantities’, Math. Ann. 104, 260–290.


Appendix

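Given two single-valued variables \(X_r = (x_{ir})\) and \(X_s = (x_{is})\), \(i = 1, \dots, n\), the correlation between \(X_r\) and \(X_s\) may be computed as follows:

\[ h(x_{1r},\dots,x_{nr};x_{1s},\dots,x_{ns}) = \frac{\sum_{i=1}^{n}(x_{ir}-\overline{x}_{r})(x_{is}-\overline{x}_{s})}{\sqrt{\sum_{i=1}^{n}(x_{ir}-\overline{x}_{r})^{2}\,\sum_{i=1}^{n}(x_{is}-\overline{x}_{s})^{2}}} \tag{1} \]

Let us now consider the following interval-valued variables:

\[ X_{r}^{I} = \left([\underline{x}_{ir}, \overline{x}_{ir}]\right)_{i=1,\dots,n}, \qquad X_{s}^{I} = \left([\underline{x}_{is}, \overline{x}_{is}]\right)_{i=1,\dots,n}; \]

the interval correlation is computed as follows (Gioia & Lauro 2005):

\[ \operatorname{corr}^{I}(X_{r}^{I}, X_{s}^{I}) = \left[\min h(x_{1r},\dots,x_{nr};x_{1s},\dots,x_{ns}),\ \max h(x_{1r},\dots,x_{nr};x_{1s},\dots,x_{ns})\right], \]

where the minimum and maximum are taken as \(x_{ir}\) and \(x_{is}\) vary in \([\underline{x}_{ir}, \overline{x}_{ir}]\) and \([\underline{x}_{is}, \overline{x}_{is}]\), \(i = 1, \dots, n\), and \(h(x_{1r},\dots,x_{nr};x_{1s},\dots,x_{ns})\) is the function in (1).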
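The interval correlation requires the minimum and maximum of the correlation function h over a box. The paper treats each bound as a constrained nonlinear optimization problem. As a dependency-free sketch, the code below swaps in a simple greedy coordinate search on a grid (`box_range`, a hypothetical helper, not the authors' solver). A local search of this kind cannot certify the global optimum, so the returned interval is an inner approximation: the true interval correlation contains it.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical helpers; a heuristic stand-in for a nonlinear programming solver.
Interval = Tuple[float, float]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """The function h in (1): Pearson correlation of two real vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)  # ZeroDivisionError if a vector is constant


def box_range(f: Callable[[List[float]], float], box: Sequence[Interval],
              grid: int = 21, sweeps: int = 50) -> Interval:
    """Inner approximation of [min f, max f] over a box (greedy coordinate search).

    Each sweep tries `grid` equally spaced values for one coordinate at a time
    and keeps the best, so the result is contained in the true range."""
    def value(x: List[float], sign: float) -> float:
        try:
            return sign * f(x)
        except ZeroDivisionError:  # f undefined here (e.g. a constant vector)
            return -math.inf

    def search(sign: float) -> float:
        x = [(a + b) / 2.0 for a, b in box]  # start at the box centre
        best = value(x, sign)
        for _ in range(sweeps):
            improved = False
            for i, (a, b) in enumerate(box):
                keep = x[i]
                for k in range(grid):
                    x[i] = a + (b - a) * k / (grid - 1)
                    v = value(x, sign)
                    if v > best + 1e-12:
                        best, keep, improved = v, x[i], True
                x[i] = keep  # fix coordinate i at the best value found
            if not improved:
                break
        if best == -math.inf:
            raise ValueError("f is undefined at every point visited")
        return sign * best

    return search(-1.0), search(1.0)


def interval_correlation(xr: Sequence[Interval], xs: Sequence[Interval],
                         **kw) -> Interval:
    """Approximate [min h, max h] as every x_ir and x_is ranges in its interval."""
    n = len(xr)
    return box_range(lambda v: pearson(v[:n], v[n:]), list(xr) + list(xs), **kw)
```

With degenerate intervals (single values) the result collapses to the ordinary correlation; a finer `grid` or more `sweeps` can only widen the approximation towards the exact interval.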

Analogously, given the single-valued variable \(X_r\), the standardized variable \(S_r = (s_{ir})_{i}\) of \(X_r\) is given by:

where \(\overline{x}_{r}\) and \(\sigma_{r}^{2}\) are the mean and the variance of \(X_r\), respectively.

When an interval-valued variable \(X_{r}^{I}\) is given, following the same approach as (Gioia & Lauro 2005), the component \(s_{ir}\) in (2), for each \(i = 1, \dots, n\), becomes the following function:

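as \(x_{ir}\) varies in \([\underline{x}_{ir}, \overline{x}_{ir}]\), \(i = 1, \dots, n\). The standardized interval component \(s_{ir}^{I}\) of \(X_{r}^{I}\) may be computed by minimizing and maximizing function (3), i.e. by calculating the following set:

\[ s_{ir}^{I} = \left\{ s_{ir}(x_{1r},\dots,x_{nr}) : x_{kr} \in [\underline{x}_{kr}, \overline{x}_{kr}],\ k = 1,\dots,n \right\} = \left[\min s_{ir},\ \max s_{ir}\right] \tag{4} \]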

\(s_{ir}^{I}\) in (4) is the interval of values of the standardized component \(s_{ir}\) obtained when each component \(x_{kr}\) ranges in its interval of values. To compute the interval standardized matrix \(S^{I}\) of an \(n \times p\) interval matrix \(X^{I}\), interval (4) is computed for each \(i = 1, \dots, n\) and each \(r = 1, \dots, p\). Given a real matrix \(X\) with standardized matrix \(S\), the product matrix is defined as \(S S^{\prime}=\left(s s^{\prime}_{i j}\right)\). Given an interval matrix \(X^{I}\), the product of \(S^{I}\) by its transpose is not computed by the interval matrix product \(S^{I}(S^{I})'\), which treats the two occurrences of each entry as independent and in general overestimates the range, but by minimizing and maximizing each component of \(SS'\) when each \(x_{kr}\) varies in its interval of values. The interval matrix \(\left(S S^{\prime}\right)^{I}=\left(\left(s s_{i j}^{\prime}\right)^{I}\right)\) is:

\[ (ss'_{ij})^{I} = \left[\min ss'_{ij},\ \max ss'_{ij}\right], \qquad x_{kr} \in [\underline{x}_{kr}, \overline{x}_{kr}],\ k = 1,\dots,n,\ r = 1,\dots,p. \]
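The same min/max idea gives the standardized interval components (4) and the entries of \((SS')^{I}\). This is a minimal sketch, assuming that (2) is the usual standardization \(s_{ir} = (x_{ir} - \overline{x}_r)/\sigma_r\) with the population variance (divisor n). If the paper's (2) uses another scaling, only `standardized` needs to change. `box_range` is a hypothetical greedy coordinate search standing in for a proper nonlinear programming solver, so the results are inner approximations of the exact intervals.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical helpers; the scaling in `standardized` is an assumption.
Interval = Tuple[float, float]


def box_range(f: Callable[[List[float]], float], box: Sequence[Interval],
              grid: int = 21, sweeps: int = 50) -> Interval:
    """Inner approximation of [min f, max f] over a box (greedy coordinate search)."""
    def value(x: List[float], sign: float) -> float:
        try:
            return sign * f(x)
        except ZeroDivisionError:  # f undefined here (zero standard deviation)
            return -math.inf

    def search(sign: float) -> float:
        x = [(a + b) / 2.0 for a, b in box]  # start at the box centre
        best = value(x, sign)
        for _ in range(sweeps):
            improved = False
            for i, (a, b) in enumerate(box):
                keep = x[i]
                for k in range(grid):
                    x[i] = a + (b - a) * k / (grid - 1)
                    v = value(x, sign)
                    if v > best + 1e-12:
                        best, keep, improved = v, x[i], True
                x[i] = keep  # fix coordinate i at the best value found
            if not improved:
                break
        if best == -math.inf:
            raise ValueError("f is undefined at every point visited")
        return sign * best

    return search(-1.0), search(1.0)


def standardized(col: Sequence[float], i: int) -> float:
    """Assumed form of (2): (x_ir - mean) / sd, population variance (divisor n)."""
    n = len(col)
    mean = sum(col) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in col) / n)
    return (col[i] - mean) / sd


def interval_standardized(col_box: Sequence[Interval], i: int) -> Interval:
    """Approximate s_ir^I in (4): range of s_ir as every x_kr ranges in its interval."""
    return box_range(lambda col: standardized(col, i), col_box)


def interval_ss_entry(x_box: Sequence[Sequence[Interval]], i: int, j: int) -> Interval:
    """Approximate (ss'_ij)^I: range of sum_r s_ir * s_jr over the interval matrix."""
    n, p = len(x_box), len(x_box[0])
    flat_box = [x_box[k][r] for k in range(n) for r in range(p)]  # row-major order

    def ss_ij(flat: List[float]) -> float:
        cols = [[flat[k * p + r] for k in range(n)] for r in range(p)]
        return sum(standardized(c, i) * standardized(c, j) for c in cols)

    return box_range(ss_ij, flat_box)
```

Each entry of \((SS')^{I}\) is optimized jointly over all \(n \times p\) entries of \(X^{I}\), which is exactly what distinguishes it from multiplying \(S^{I}\) by its transpose entry by entry.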


Cite this article

Gioia, F., Lauro, C.N. Principal component analysis on interval data. Computational Statistics 21, 343–363 (2006). https://doi.org/10.1007/s00180-006-0267-6
