Data samples with interval uncertainty are analyzed. It is proposed to use the Jaccard measure (index), which is widely used when comparing sets in various problem areas, as a measure (functional) of the consistency of interval values and their samples. Information about interval analysis, classical and complete (Kaucher) interval arithmetic is presented. For interval quantities, the necessary concepts and definitions of operations are introduced, in particular, generalizations of the concepts of intersection and union of sets. The Jaccard measure is generalized to the case of data with interval uncertainty and samples of interval data. Various variants of interval relations are described in detail — from their coincidence to incompatible cases. Various definitions of the Jaccard measure are given, both symmetric and nonsymmetric with respect to the operands. The connections of the proposed measure with the interval mode and the results of calculations with tweens are considered. A practical example of finding the information set of an interval problem using a new measure is given. Two areas of application of both symmetric and asymmetric measures are presented — computational processes (for characterizing iterative computational processes) and data analysis (for characterizing measurement workspaces and classifying data by a set of features).


Introduction. One of the tasks of data analysis is to determine the degree of similarity of the sample elements among themselves or the degree of similarity of different samples. To characterize the degree of similarity, it is necessary to introduce a quantitative measure (similarity coefficient). Let us define the measure of similarity formally. The measure of similarity is a two-place function S(A, B) → [0, 1], that is, a real function on the set of pairs of objects (A, B), where A, B are arbitrary finite sets. Formally, a function belongs to the class of similarity measures if it satisfies the following system of properties:

- boundedness: 0 ≤ S(A, B) ≤ 1;

- symmetry: S(A, B) = S(B, A) ≥ 0;

- reflexivity: S(A, B) = 1 ⇔ A = B;

- transitivity: A ⊆ B ⊆ C ⇒ S(A, B) ≥ S(A, C).

There are other systems of similarity axioms. The listed properties, also called the t-norm, can be implemented in a variety of ways. Measures of inclusion and similarity have been widely used in practice since the second half of the last century. In the publication [1], various similarity measures and their mutual relationships are considered in detail. The first such measure was proposed by Jaccard in 1901 in relation to biology. The Jaccard coefficient J (index) for sets A, B has the form

\[ J(A, B) = \frac{n(A \cap B)}{n(A \cup B)}, \qquad (1) \]

where n is the cardinality (number of elements) of a set.

In computer applications (image processing, machine learning), the measure of similarity of sets is often referred to as IoU (intersection over union).
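As a small illustration of the classical coefficient (1), the following Python sketch computes it for finite sets. The function name `jaccard` and the convention for two empty sets are assumptions made for this example only.

```python
def jaccard(a: set, b: set) -> float:
    """Classical Jaccard index n(A ∩ B) / n(A ∪ B) for finite sets."""
    union = a | b
    if not union:
        # Convention chosen for this sketch: two empty sets coincide.
        return 1.0
    return len(a & b) / len(union)


A = {1, 2, 3, 4}
B = {3, 4, 5}
C = {7, 8}

print(jaccard(A, B))  # two common elements out of five distinct ones
print(jaccard(A, C))  # disjoint sets
print(jaccard(A, A))  # identical sets
```

The three calls illustrate boundedness, the zero value for disjoint sets, and reflexivity from the list of properties above.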

The purpose of this work is to give a mathematical definition of the proposed option for calculating the binary measure of similarity of sets, to consider various options for its calculation and to study its properties. The measure is generalized to work with data samples with interval uncertainty. The article gives a number of examples and presents various directions for further research.

Interval arithmetic. In this publication, similarity measures are applicable to mathematical objects with interval uncertainty. The modern notation system for interval objects used in this article is given in [2]. Here and throughout the article, interval objects are indicated in bold italic type, as is customary in interval arithmetic.

Classical interval arithmetic \({\mathbb{I}}{\mathbb{R}}\) is an algebraic system formed by intervals \(\boldsymbol{x} = [\underline{x}, \overline{x}] \subset {\mathbb{R}}\) such that for any arithmetic operation \(\star\) from the set {+, −, ·, /} the result of the operation between intervals is defined as \(\boldsymbol{x} \star \boldsymbol{y} = \{x \star y \mid x \in \boldsymbol{x},\ y \in \boldsymbol{y}\}\), where x, y are real numbers and \(\boldsymbol{x}\), \(\boldsymbol{y}\) are intervals. The symbol \({\mathbb{I}}\) in the notation \({\mathbb{I}}{\mathbb{R}}\) is used to distinguish interval and real arithmetic, with \({\mathbb{I}}{\mathbb{R}}\) ⊇ \({\mathbb{R}}\).
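The set-theoretic definition above reduces to simple endpoint formulas. The sketch below is a minimal Python illustration of these formulas; the type `Interval` and the function names are hypothetical, and ordinary floating point is used without the outward rounding that a rigorous interval library would apply.

```python
from itertools import product
from typing import NamedTuple


class Interval(NamedTuple):
    lo: float
    hi: float


def i_add(x: Interval, y: Interval) -> Interval:
    return Interval(x.lo + y.lo, x.hi + y.hi)


def i_sub(x: Interval, y: Interval) -> Interval:
    return Interval(x.lo - y.hi, x.hi - y.lo)


def i_mul(x: Interval, y: Interval) -> Interval:
    # The extreme products are always attained at endpoint combinations.
    p = [a * b for a, b in product(x, y)]
    return Interval(min(p), max(p))


def i_div(x: Interval, y: Interval) -> Interval:
    if y.lo <= 0 <= y.hi:
        raise ZeroDivisionError("divisor interval contains zero")
    return i_mul(x, Interval(1 / y.hi, 1 / y.lo))


x = Interval(1, 2)
print(i_add(x, Interval(3, 7)))
print(i_sub(x, x))  # not [0, 0]: subtraction does not cancel addition
```

The last line shows that the difference of an interval with itself is not the zero interval, which is one of the limitations of classical interval arithmetic.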

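We introduce the following characteristic of the interval \(\boldsymbol{a}\), its width:

\[ \operatorname{wid} \boldsymbol{a} = \overline{\boldsymbol{a}} - \underline{\boldsymbol{a}}, \qquad (2) \]

where \(\overline{\boldsymbol{a}}\), \(\underline{\boldsymbol{a}}\) are the right and left (or upper and lower) boundaries of the interval \(\boldsymbol{a}\).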

In a more general setting, the problem of interval analysis can be solved in the so-called complete interval arithmetic, or Kaucher arithmetic, \({\mathbb{K}}{\mathbb{R}}\) [3]. This arithmetic is obtained by adding improper intervals \([\underline{x}, \overline{x}]\), \(\underline{x} > \overline{x}\), to the set \({\mathbb{I}}{\mathbb{R}}\). The introduction of Kaucher's interval arithmetic is motivated by a number of limitations of classical interval arithmetic: the absence of inverse elements with respect to addition and multiplication; the impossibility, in the general case, of taking the minimum by inclusion; the difficulty of formulating minimax problems. The use of improper intervals makes it possible to build original methods for solving problems with interval objects.
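The role of improper intervals can be seen from the additive inverse. In Kaucher arithmetic addition is performed endpoint by endpoint, and the opposite of [a, b] is [−a, −b], which is improper whenever [a, b] has positive width. The Python sketch below illustrates this; the names `kaucher_add`, `opp` and `is_proper` are hypothetical.

```python
def kaucher_add(x, y):
    """Endpoint-wise addition of intervals given as (lower, upper) pairs."""
    return (x[0] + y[0], x[1] + y[1])


def opp(x):
    """Algebraic opposite in Kaucher arithmetic: opp [a, b] = [-a, -b]."""
    return (-x[0], -x[1])


def is_proper(x):
    return x[0] <= x[1]


x = (1.0, 2.0)
print(opp(x), is_proper(opp(x)))  # the opposite element is improper
print(kaucher_add(x, opp(x)))     # the sum is the zero interval
```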

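Generalization of the Jaccard coefficient. To analyze data, it is necessary to compare interval objects in a universal way, regardless of the property of the covering. Let us introduce a numerical characteristic of the degree of consistency of two intervals \(\boldsymbol{x}, \boldsymbol{y} \in {\mathbb{I}}{\mathbb{R}}\) in the form JK(x, y) (K indicates the relation to Kaucher's interval arithmetic):

\[ \operatorname{JK}(\boldsymbol{x}, \boldsymbol{y}) = \frac{\operatorname{wid}(\boldsymbol{x} \wedge \boldsymbol{y})}{\operatorname{wid}(\boldsymbol{x} \vee \boldsymbol{y})}, \qquad (3) \]

where the measure for the Jaccard coefficient, in contrast to (1), is the width of the interval (2), and instead of the operations of intersection and union of sets we have the operations of taking the minimum ∧ and maximum ∨ by inclusion of two quantities in the complete (Kaucher) interval arithmetic, described by

\[ \boldsymbol{x} \wedge \boldsymbol{y} = \left[\max\{\underline{\boldsymbol{x}}, \underline{\boldsymbol{y}}\},\ \min\{\overline{\boldsymbol{x}}, \overline{\boldsymbol{y}}\}\right], \qquad \boldsymbol{x} \vee \boldsymbol{y} = \left[\min\{\underline{\boldsymbol{x}}, \underline{\boldsymbol{y}}\},\ \max\{\overline{\boldsymbol{x}}, \overline{\boldsymbol{y}}\}\right]. \]

In the general case, the inclusion minimum in expression (3) may be an improper interval of negative width. To further generalize measure (3) to sets of intervals, we write its numerical expression

\[ \operatorname{JK}(\boldsymbol{x}, \boldsymbol{y}) = \frac{\min\{\overline{\boldsymbol{x}}, \overline{\boldsymbol{y}}\} - \max\{\underline{\boldsymbol{x}}, \underline{\boldsymbol{y}}\}}{\max\{\overline{\boldsymbol{x}}, \overline{\boldsymbol{y}}\} - \min\{\underline{\boldsymbol{x}}, \underline{\boldsymbol{y}}\}}. \qquad (4) \]

In writing formula (4), instead of interval widths, we use explicit expressions for taking the minimum and maximum by inclusion, which provide the universal character of (4), regardless of whether the result of the operation of taking the minimum by inclusion ∧ is a proper or improper interval.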
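The numerical expression (4) can be sketched in a few lines of Python. The code assumes proper input intervals given as `(lower, upper)` pairs; the function names are hypothetical, and the case of two coinciding point intervals, where the denominator vanishes, is simply rejected.

```python
def inclusion_min(x, y):
    """x ∧ y; the result is improper (lower > upper) for disjoint intervals."""
    return (max(x[0], y[0]), min(x[1], y[1]))


def inclusion_max(x, y):
    """x ∨ y, the smallest interval containing both x and y."""
    return (min(x[0], y[0]), max(x[1], y[1]))


def wid(x):
    return x[1] - x[0]


def jk(x, y):
    """Ratio of the widths of the inclusion minimum and inclusion maximum."""
    denom = wid(inclusion_max(x, y))
    if denom == 0:
        raise ValueError("undefined for two coinciding point intervals")
    return wid(inclusion_min(x, y)) / denom


print(jk((1, 2), (1, 2)))  # coinciding intervals
print(jk((0, 2), (1, 3)))  # partial overlap
print(jk((0, 1), (1, 2)))  # intervals touching at a point
print(jk((0, 0), (1, 1)))  # two distinct points
```

The four calls run from full coincidence through touching intervals to the extreme inconsistent case of two distinct points.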

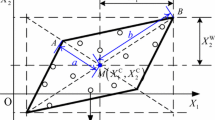

Let us illustrate various measures of binary consistency. Consider different cases of the relative position of intervals x, y and various measures of consistency. Suppose

In Fig. 1 we show the possible relative positions of the intervals x, y and the corresponding values of the measure JK (cf. formula (4)). Summing up the indicated options, we obtain the inequality

\[ -1 \le \operatorname{JK}(\boldsymbol{x}, \boldsymbol{y}) \le 1. \]

For non-empty intersections of intervals, the classical Jaccard measure (1) (not shown in Fig. 1) and the new measure JK(x, y) (3), (4) give the same results. For empty intersections of intervals, the classical Jaccard measure is equal to zero, and JK(x, y) numerically describes the measure of inconsistency from 0 to –1.

Asymmetric variants of the measure JK(x, y). Measure (3) is symmetric with respect to its arguments, which may be inconvenient in case of a large difference in the widths of the arguments. It is desirable that, in this case, the sensitivity of the measure also differ significantly when it is taken according to different arguments. In this case, asymmetric options are useful:

Similar expressions in the literature are called similarity measures (similarity, overlapping ratio [4, 5]). Expressions (5), (6) differ from similar ones given in [4, 5] in that they allow the interval to be improper when taking the minimum by inclusion.

Example. Let x = [1; 2], y = [3; 7]. Calculate the measures (5), (6):

The absolute values of expressions (7), (8) reflect the measure of the "dissimilarity" of the x, y intervals relative to their widths. In this case, the value of expression (8) is significantly less. This fact corresponds to the difference in the widths of the intervals x, y and makes it possible to build meaningful constructions on this basis, for example, in regularization procedures.
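The example can be checked numerically under an explicit assumption: measures (5) and (6) are taken here to be the width of the inclusion minimum x ∧ y divided by the width of x and by the width of y, respectively. This form is inferred from the surrounding description and is not quoted from the formulas themselves; the function names are hypothetical.

```python
def wid_inclusion_min(x, y):
    """Width of x ∧ y; negative when x and y do not intersect."""
    return min(x[1], y[1]) - max(x[0], y[0])


def jk_rel_first(x, y):
    """Assumed form of (5): wid(x ∧ y) / wid(x)."""
    return wid_inclusion_min(x, y) / (x[1] - x[0])


def jk_rel_second(x, y):
    """Assumed form of (6): wid(x ∧ y) / wid(y)."""
    return wid_inclusion_min(x, y) / (y[1] - y[0])


x = (1.0, 2.0)
y = (3.0, 7.0)
print(jk_rel_first(x, y), jk_rel_second(x, y))
```

Under this assumption the value relative to the wider interval y is four times smaller in absolute value, in line with the remark that expression (8) is significantly less.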

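Jaccard measure for samples of interval data. The consistency measure introduced for two intervals in the form (3) admits a natural generalization to the case of interval samples. Let there be an interval sample \(\boldsymbol{X} = \{\boldsymbol{x}_i\}\), i = 1, 2, ..., n. Let us define the measure JK(X) as

\[ \operatorname{JK}(\boldsymbol{X}) = \frac{\operatorname{wid}\left(\bigwedge_{1 \le i \le n} \boldsymbol{x}_i\right)}{\operatorname{wid}\left(\bigvee_{1 \le i \le n} \boldsymbol{x}_i\right)}, \qquad (9) \]

where

\[ \bigwedge_{1 \le i \le n} \boldsymbol{x}_i = \left[\max_{1 \le i \le n} \underline{\boldsymbol{x}}_i,\ \min_{1 \le i \le n} \overline{\boldsymbol{x}}_i\right], \qquad \bigvee_{1 \le i \le n} \boldsymbol{x}_i = \left[\min_{1 \le i \le n} \underline{\boldsymbol{x}}_i,\ \max_{1 \le i \le n} \overline{\boldsymbol{x}}_i\right]. \]

It is important that in the case of an interval sample of two elements, expression (9) becomes expression (3).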

Consistency measures for interval values are considered in publications [4,5,6], but only for consistent samples.

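Example. Let us calculate the Jaccard coefficient (9) for the consistent sample X1 = {[0; 8], [2; 9], [3; 10]} and the inconsistent sample X2 = {[9; 10], [10; 11], [11; 12]}:

\[ \operatorname{JK}(\boldsymbol{X}_1) = \frac{8 - 3}{10 - 0} = 0.5, \qquad \operatorname{JK}(\boldsymbol{X}_2) = \frac{10 - 11}{12 - 9} = -\frac{1}{3}. \]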
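A minimal Python sketch of the sample measure, assuming that (9) is the ratio of the widths of the inclusion minimum and inclusion maximum taken over all sample elements, so that it reduces to (3) for two elements. The function name `jk_sample` is hypothetical.

```python
def jk_sample(sample):
    """JK of a sample of proper intervals given as (lower, upper) pairs."""
    lows = [lo for lo, _ in sample]
    highs = [hi for _, hi in sample]
    numerator = min(highs) - max(lows)    # width of the inclusion minimum
    denominator = max(highs) - min(lows)  # width of the inclusion maximum
    if denominator == 0:
        raise ValueError("undefined when all elements are the same point")
    return numerator / denominator


X1 = [(0, 8), (2, 9), (3, 10)]
X2 = [(9, 10), (10, 11), (11, 12)]

print(jk_sample(X1))  # positive: the intervals share a common part
print(jk_sample(X2))  # negative: no point belongs to all intervals
```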

Jaccard measure and interval mode. Let us consider the connection of the JK measure with other characteristics of interval samples, for example, with the interval mode of the sample. In the book [7], following the ideas of publication [8], this object (interval) is introduced as follows: "The interval sample mode X is the set of intersection intervals of the largest consistent subsamples of the considered sample." The largest size (number of elements) of the consistent subsamples of a given sample is called the mode frequency.

Let us consider the simplest example illustrating the relationship between the Jaccard measure and the interval mode. In Fig. 2 we show options for calculating the interval mode and the mode consistency measure for a sample of two intervals X = {x, y}, from which follows the relationship between the interval mode and the value of the Jaccard measure. In this case, the interval mode is a complex object that provides additional information about the sample. The interval mode is generally a multi-interval [7]. The nature of the Jaccard measure JK is different and has a numerical character. The numerator of expression (5) contains the inclusion minimum, and its position in multimode distributions may not belong to any sampling interval. In this case, additional expressions are required for the numerical description of the interval mode.

Preliminarily, it can be noted that the value of JK(mode X) in the case of a multi-interval mode is nonpositive. In this case, the absolute value of JK(mode X) depends both on the distance between the intervals of the multi-interval and on the width of their inclusion maximum.

Example. For a noncovering sample X = {[1; 4], [5; 9], [1.5; 4.5], [6; 9]}, the interval mode is the union of two intervals (multi-interval) mode X = [1.5; 4] ∪ [6; 9] and the measure of consistency is defined as

In this case, the inclusion minimum \(\bigwedge_{1 \le i \le n} \boldsymbol{x}_i = [6; 4]\) of the elements of the sample X in the numerator of expression (4) is an improper interval, which in the given case is also the inclusion minimum of the elements of the interval mode multi-interval.
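The interval mode of this example can be reproduced with a short Python sketch. It relies on the observation that, for closed proper intervals, every largest consistent subsample is exactly the set of intervals covering one of the lower endpoints. The function name `interval_mode` is hypothetical, and the code is an illustration of the quoted definition rather than the procedure of [7].

```python
def interval_mode(sample):
    """Return (mode frequency, sorted list of mode intervals).

    `sample` is a list of proper closed intervals as (lower, upper) pairs.
    """
    best = 0
    pieces = set()
    for p in {lo for lo, _ in sample}:
        covering = [(lo, hi) for lo, hi in sample if lo <= p <= hi]
        k = len(covering)
        if k < best:
            continue
        # Intersection of all intervals that contain the point p.
        piece = (max(lo for lo, _ in covering), min(hi for _, hi in covering))
        if k > best:
            best, pieces = k, {piece}
        else:
            pieces.add(piece)
    return best, sorted(pieces)


X = [(1, 4), (5, 9), (1.5, 4.5), (6, 9)]
frequency, mode = interval_mode(X)
print(frequency, mode)

# Inclusion minimum of the whole sample: an improper interval.
incl_min = (max(lo for lo, _ in X), min(hi for _, hi in X))
print(incl_min)
```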

Application of JK to tween arithmetic and presentation of measurement data with errors of several kinds. In practice, the ends of the intervals representing the results of measurements may not be known exactly, so that it becomes necessary to work with intervals that have interval ends. Such objects are known in interval analysis and are called tweens (English tween is an abbreviation for twice interval, double interval).

Tween, as an "interval of intervals" or an interval with interval ends, is represented as

An alternative consideration of tweens as objects that give internal and external estimates of interval computations is proposed in [9]:

where Xin and Xout are intervals of internal and external estimates of interval calculations.

In definitions (10), (11), a tween is defined by four values. The functional JK also depends on four values — the ends of two intervals x, y. If we take the internal and external components of the tween as arguments of JK, we obtain a relative value characterizing the relation of the internal and external estimates of the tween X in the form (11):

Example. Given a temperature tween in the form (10):

We write it in the Nesterov form:

In accordance with construction (12), we have the "relative tween width"

The positive value of δT reflects the consistency of the internal and external estimates of the interval object, and the absolute value is the "reliability margin" of the estimate. A negative value of δT corresponds to the nonempty intersection of the intervals of the lower and upper estimates of the interval T.

Analysis of data with interval uncertainty. Analysis of interval data emerged in the last decades of the 20th century as an alternative to traditional "probabilistic statistics" based on the methods of probability theory. Many works are devoted to various aspects of the analysis of interval data, in particular [10,11,12,13,14,15,16,17,18,19]. Analysis of data with interval uncertainty (statistics of interval data, analysis of interval data) is considered in the book [8], where the system of concepts and terms related to the processing of such data is presented. One of the most important concepts of interval statistics is a covering measurement: an interval measurement result that is guaranteed to contain the true value of the measured quantity [7]. A measurement for which it is impossible to assert that it contains the true value of the measured quantity will be called noncovering. A covering measurement is a guaranteed two-sided "fork" of the values of the measured quantity, while for a noncovering measurement nothing of the kind can be asserted. This gives covering measurements a fundamentally higher status and makes it possible to build more meaningful constructions on their basis.

Further, we will call a set of measurements a covering sample if the dominant part (the majority, etc.) of its measurements (observations) are covering. Conversely, a sample is called noncovering if the predominant part of the measurements included in it are noncovering. Possible alternative terms are "inclusive measurement" and "encompassing measurement" (their negations are "noninclusive" and "nonencompassing"). Suggested English equivalents are "enclosing measurement" and "covering measurement". This definition is deliberately not strict, which avoids overly strict and rarely achievable requirements on the sample.

When formulating new definitions, we follow the principle of correspondence in the methodology of science — any new scientific theory should include the old theory and its results as a special limiting case. As applied to the measure of consistency, further more general constructions contain (as special cases) the previously defined constructions. Symbolically, this can be expressed as

It is also important that the measures introduced for more general objects, namely, on samples of interval values and multidimensional interval objects, naturally reduce to constructions for simpler objects.

Thus, the measure JK(X) in the form (3) can be defined both for noncovering samples in Kaucher arithmetic and for covering samples in classical interval arithmetic, as well as for real "point" samples.

Example. We will demonstrate the use of the Jaccard index for data processing. The subject area is the physics of semiconductors: studies of the photoelectric characteristics of the sensor under test, carried out by specialists from the Laboratory of Photoelectric Converters of the A. F. Ioffe Physico-Technical Institute. There are two data samples with interval uncertainty (see Notes). Samples X1, X2 refer to the sensors (photodetectors, PD) PD1 and PD2 (the reference standard), respectively. The number of readings in each sample is 200. The PD1 sensor is calibrated against the PD2 standard. The dependence between the quantum efficiencies of the sensors QEPD1, QEPD2 is assumed constant for each pair of measurement sets:

where IPD1, IPD2 are the measured currents of the detectors.

Let us present the data in such a way as to apply the concepts of data statistics with interval uncertainty. One of the common ways to obtain interval results in primary measurements is the "intervaluing" of point values, when an error interval ε is added to the point (base) value that is read from the indicators of the measuring device:

We set the error interval as a balanced interval

for a specific procedure of measurements \(\varepsilon = 10^{-4}\) mV.
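The "intervaluing" step can be sketched as follows, assuming that a balanced error interval means the interval [−ε, ε] symmetric about zero. The readings below are hypothetical numbers chosen for illustration, not data from the experiment, and the names `EPS` and `to_interval` are likewise assumptions.

```python
EPS = 1e-4  # half-width of the balanced error interval, in mV


def to_interval(value, eps=EPS):
    """Turn a point reading into the interval [value - eps, value + eps]."""
    return (value - eps, value + eps)


readings = [0.4125, 0.4127, 0.4126]  # hypothetical point readings, mV
sample = [to_interval(v) for v in readings]
for lo, hi in sample:
    print(f"[{lo:.4f}; {hi:.4f}]")
```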

Due to a significant drift of the measuring equipment, the data is pre-processed and the drift is subtracted. After calculations, the data have a nonequivariant form, shown in Fig. 3.

According to the terminology of interval analysis, the sample under consideration is a vector of intervals, or an interval vector x = (x1, x2, ..., xn). In the case of estimating a single physical quantity from a sample of interval data, the information set will also be an information interval — an interval containing the values of the estimated quantity that are consistent with the measurements of the sample (consistent with the data of these measurements).

We calculate JK(X) for a set composed of the union of the values of the original samples X = {X1, X2}. Using programs for calculations in interval arithmetic (cf. https://github.com/szhilin/octave-interval-examples), we obtain JK(X) = –0.65. The negative value of JK(X) characterizes the inconsistency of the X sample.

We use the model (13) of the connection between samples for different measurements (R is an unknown factor):

In accordance with the tasks of researching sensors, it is necessary to give point and interval estimates of R. We optimize the parameter R as follows. A point estimate for the ratio of quantum efficiencies is

where

The interval estimate R corresponds to the information set of the problem

Let us carry out calculations using formula (14) and plot JK(R) (Fig. 4), where the area corresponding to condition (15) is highlighted. The optimal value in formulation (14) Ropt = 1.132, the information set of values (15) R = [1.12; 1.14].
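The point estimate can be illustrated by a simple grid search. The sketch below assumes that the optimized quantity is JK of the combined sample in which one sample is multiplied by a positive factor R and merged with the other; which sample is scaled, the grid, and the synthetic data are all assumptions of this example and do not reproduce the measurements or the exact formulation (14). The information set (15) is not computed here.

```python
def jk_sample(sample):
    """Ratio of the widths of the inclusion minimum and inclusion maximum."""
    lows = [lo for lo, _ in sample]
    highs = [hi for _, hi in sample]
    return (min(highs) - max(lows)) / (max(highs) - min(lows))


def scale(sample, r):
    """Multiply every interval by a positive factor r."""
    return [(r * lo, r * hi) for lo, hi in sample]


def best_ratio(x1, x2, grid):
    """Grid value of R that maximizes JK of the combined sample."""
    return max(grid, key=lambda r: jk_sample(scale(x1, r) + x2))


# Synthetic data: the second sample is the first one scaled by 1.13.
x1 = [(0.99, 1.01), (1.00, 1.02), (0.98, 1.00)]
x2 = scale(x1, 1.13)

grid = [1.0 + 0.001 * k for k in range(301)]  # R from 1.0 to 1.3
r_opt = best_ratio(x1, x2, grid)
print(round(r_opt, 3), jk_sample(x1 + x2))
```

On this synthetic input the search recovers the factor used to generate the second sample, while JK of the unscaled union is negative, mirroring the qualitative behavior described in the text.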

The histogram of values of the X′ combined sample at Ropt is shown in Fig. 5. The frequency distribution of histograms is close to symmetrical and unimodal, which positively indicates the correctness of the optimization procedure.

Applications of measure of consistency. For computational processes, two directions of application of the proposed measure can be proposed.

The first direction is the characterization of computational processes. When iteratively solving interval systems of equations, it is necessary to monitor the intersection of the sets obtained at successive iterations. A convenient means for this is an asymmetric measure equal to the ratio of the intersection of the sets of successive iterations to the width of the iterative operator (Newton, Krawczyk, etc.). The second direction is the solution of interval systems of linear algebraic equations (ISLAE). Traditionally, the solvability of ISLAE (in other words, the nonemptiness of solution sets) is studied using the technique of recognizing functionals. Recognizing functionals are constructed in such a way that their sign indicates whether the solution sets are nonempty. For the main types of problem statements for ISLAE, namely finding the united, tolerable, and controllable solution sets, it is possible to build recognizing functionals based on symmetric and nonsymmetric consistency measures.
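The first direction can be illustrated on a one-dimensional interval Newton iteration for f(t) = t² − 2. The monitored quantity is assumed here to be wid(x ∧ N(x)) / wid(N(x)), where N(x) is the Newton operator; this particular form, the test function, and all names are assumptions of the sketch. Ordinary floating point is used without outward rounding, so the enclosure is not rigorous.

```python
def newton_step(x):
    """One interval Newton step for f(t) = t*t - 2 on x = (lo, hi), lo > 0."""
    lo, hi = x
    m = 0.5 * (lo + hi)
    fm = m * m - 2.0
    # f'(x) = [2*lo, 2*hi] is positive, so fm / f'(x) is spanned by these ends.
    q = sorted((fm / (2.0 * lo), fm / (2.0 * hi)))
    return (m - q[1], m - q[0])


def overlap_ratio(x, n):
    """wid(x ∧ n) / wid(n); nonpositive if x and n have no common interior."""
    return (min(x[1], n[1]) - max(x[0], n[0])) / (n[1] - n[0])


x = (1.0, 2.0)
ratios = []
for _ in range(3):
    n = newton_step(x)
    ratios.append(overlap_ratio(x, n))
    x = (max(x[0], n[0]), min(x[1], n[1]))  # intersect with the previous box

print(ratios)
print(x)
```

A ratio equal to one means that the Newton image lies entirely inside the current interval; a nonpositive ratio would signal that the successive sets no longer intersect.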

For data analysis, the proposed measure can be used to characterize the range of a variable. When measuring any value with a set of sensors, it is necessary to allocate operating domains of parameters or measurement time ranges. A measure of consistency is naturally suitable for this. To select a reliable measurement range, it is necessary to set its practically reasonable threshold. A similar situation arises with multiple measurements of any quantity. For example, in the case of forward and reverse motion in the system under study, a hysteresis type of data may occur, including inconsistent ones. For characterization (identification of hysteresis), a measure of consistency can be used.

In clustering (factorization) problems, the working tool is the classification of data according to a set of features. A popular example is histogramming. If the data is essentially interval, one measurement may fall into more than one interval (bin) of the set according to which the classification is carried out. In this case, an asymmetric measure of consistency is well suited: the choice is made according to its maximum value.
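The bin-selection rule can be sketched as follows. The asymmetric measure is assumed here to be the width of the inclusion minimum of the measurement and the bin divided by the width of the measurement; the bins, the measurement, and the function names are hypothetical.

```python
def overlap_with_bin(x, b):
    """wid(x ∧ b) / wid(x); negative when x and b do not intersect."""
    return (min(x[1], b[1]) - max(x[0], b[0])) / (x[1] - x[0])


def assign_bin(x, bins):
    """Index of the bin with the largest asymmetric consistency measure."""
    return max(range(len(bins)), key=lambda i: overlap_with_bin(x, bins[i]))


bins = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0)]
x = (0.8, 1.5)  # interval measurement overlapping the first two bins

print([round(overlap_with_bin(x, b), 3) for b in bins])
print(assign_bin(x, bins))
```

The measurement overlaps two bins and is assigned to the one that contains the larger share of its width.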

Conclusion. The proposed measure made it possible to generalize the concept of similarity of sets to interval objects: pairs of intervals and data samples with interval uncertainty. It is essential that the measure provides meaningful information for inconsistent data samples as well. The above example demonstrated the possibility of obtaining an interval estimate of an unknown model parameter in the problem of dependency recovery. Directions for further research on the application of the proposed measure to characterize computational processes and analyze data with interval uncertainty are outlined.

Acknowledgment. The authors are grateful to the participants of the All-Russian webinar on interval analysis S. I. Zhilin, S. I. Kumkov, A. V. Prolubnikov, E. V. Chausova, and S. P. Shary for creative and constructive cooperation in the field of data analysis with interval uncertainty and also to V. M. Nesterov for discussion of issues related to twin arithmetic.

The work presented in the sections "Jaccard measure and interval mode", "Application JK to twin arithmetic and presentation of measurement data with several types of error," and "Data analysis with interval uncertainty" was supported by the Russian Science Foundation, project No. 21-72-20007. The work presented in the sections "Interval arithmetic", "Generalization of the Jaccard coefficient" and "Asymmetric variants of the JK(x, y) measure" was supported by the Ioffe Institute RAS (within the framework of the RF state task 0034-2019-0001 and 0040-2019-0023).

Change history

04 August 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11018-023-02223-8

Notes

M. Z. Schwartz, working materials. URL: https://github.com/AlexanderBazhenov/Solar-Data (date of access: 11/18/2022).

References

B. I. Semkin, On the Relation Between Mean Values of Two Measures of Inclusion and Measures of Similarity, Byull. Botanicheskogo sada-instituta DVO RAS, No. 3, 91–101 (2009).

R. B. Kearfott, M. T. Nakao, A. Neumaier, S. M. Rump, S. P. Shary, and P. van Hentenryck, Standardized Notation in Interval Analysis, Comput. Technol., 15, No. 1, 7–13 (2010).

S. Shary, Numerical Computation of Formal Solutions to Interval Linear Systems of Equations, arXiv:1903.10272v1 [math.NA], https://doi.org/10.48550/arXiv.1903.10272.

S. Kabir, C. Wagner, T. C. Havens, D. T. Anderson, and U. Aickelin, IEEE Int. Conf. on Fuzzy Systems (FUZZ-IEEE 2017), 2017, https://doi.org/10.1109/FUZZ-IEEE.2017.8015623.

T. Wilkin and G. Beliakov, IEEE Int. Conf. on Fuzzy Systems (FUZZ-IEEE 2019), 1–6 (2019), https://doi.org/10.1109/FUZZ-IEEE.2019.8858850.

S. Kabir, C. Wagner, and Z. Ellerby, Towards Handling Uncertainty-at-Source in AI — A Review and Next Steps for Interval Regression, arXiv:2104.07245 [cs.LG], https://doi.org/10.48550/arXiv.2104.07245.

A. N. Bazhenov, S. I. Zhilin, S. I. Kumkov, and S. P. Sharyj, Processing and Analysis of Data with Interval Uncertainty, 2022, available at: http://www.nsc.ru/interval/Library/ApplBooks/InteData Processing.pdf (accessed: 10.11.2022).

C. Hu and Z. H. Hu, On Statistics, Probability, and Entropy of Interval-Valued Datasets, Information Processing and Management of Uncertainty in Knowledge-Based Systems. IPMU 2020. Communications in Computer and Information Science, M. J. Lesot et al. Eds., Cham, Springer, 1239, 2020, https://doi.org/10.1007/978-3-030-50153-2_31.

V. M. Nesterov, Tween Arithmetics and Their Application in Methods and Algorithms of Two-Sided Interval Estimation, Diss. Doct. Phys. Math. Sci. St. Petersburg (St. Petersburg Institute of Informatics and Automation of the Russian Academy of Sciences), 1999.

S. Shary, Comput. Technol., 2, No. 2, 150–172 (2017), https://doi.org/10.14529/mmph170105.

S. Shary, J. Comput. Syst. Sci. Int., 56, No. 6, 897–913 (2017), https://doi.org/10.7868/S0002338817060014.

S. Shary, Identification of Outliers in the Maximum Matching Method in the Analysis of Interval Data, Proc. All-Russian Conf. on Mathematics Int. Participation "MAC-2018," Barnaul, AltGU Publishing House, 2018, pp. 215–218.

S. Shary, On a Variability Measure for Estimates of Parameters in the Statistics of Interval Data, Comput. Technol., 24, No. 5, 90–108 (2019), https://doi.org/10.25743/ICT.2019.24.5.008.

S. P. Shary, Data Fitting Problem under Interval Uncertainty in Data, Ind. Lab. Diagn. Mater., 86, No. 1, 62–74, (2020), https://doi.org/10.26896/1028-6861-2020-86-1-62-74.

S. I. Zhilin, Reliab. Comput., 11, 433–442 (2005), https://doi.org/10.1007/s11155-005-0050-3.

S. I. Zhilin, Chemometr. Intell. Lab. Syst., 88, No. 1, 60–68 (2007), https://doi.org/10.1016/j.chemolab.2006.10.004.

S. I. Kumkov, Processing of Experimental Data on Ionic Conductivity of Molten Electrolyte by Methods of Interval Analysis, Russian Metallurgy (METALLY), No. 3, 79–89 (2010).

S. I. Kumkov and Yu. V. Mikushina, Reliab. Comput., 19, 197–214 (2013).

H. T. Nguyen, V. Kreinovich, B. Wu, and G. Xiang, Computing Statistics under Interval and Fuzzy Uncertainty. Applications to Computer Science and Engineering, Springer, Berlin-Heidelberg, 2012, https://doi.org/10.1007/978-3-642-24905-1.


Additional information

Translated from Izmeritel'naya Tekhnika, No. 12, pp. 15–22, December, 2022.


About this article

Cite this article

Bazhenov, A.N., Telnova, A.Y. Generalization of Jaccard Index for Interval Data Analysis. Meas Tech 65, 882–890 (2023). https://doi.org/10.1007/s11018-023-02180-2


DOI: https://doi.org/10.1007/s11018-023-02180-2