Summary

The present paper deals with the study of continuous interval data by means of suitable Principal Component Analyses (PCA). Statistical units described by interval data can be assumed as special cases of Symbolic Objects (SO) (Diday, 1987). In Symbolic Data Analysis (SDA), these data are represented as hypercubes. In the present paper, we propose some extensions of the PCA with the aim of representing, in a space of reduced dimensions, images of such hypercubes, pointing out differences and similarities according to their structural features.

1 Introduction

Statistical methods have been mainly developed for the analysis of single valued variables. However, in real life there are many situations in which the use of these variables may cause severe loss of information.

With quantitative variables, more complete information can often be obtained by describing a set of statistical units in terms of interval data.

For example, daily temperatures recorded as minimum and maximum values give a more realistic view of the variation in weather conditions than simple average values. Financial series provide another example: the minimum and maximum transaction prices recorded daily for a set of stocks are more relevant to experts who evaluate the tendency and volatility of the stocks within the same day.

The most widely used approaches to interval data analysis treat intervals as spread ranges around a central value. The spread is generally assumed to be the consequence of a measurement error and is considered as a perturbation in the data. In this paper we do not consider the central value of the interval; we focus only on the minimum and maximum values, which are treated as two different but related aspects of the same phenomenon.

The statistical treatment of interval data has been recently considered in the context of Symbolic Data Analysis (SDA) (Diday, 1996), whose aim is to extend classical statistical methods to the study of data structures more complex than the simple tabular model individuals × variables. The present paper introduces some new Principal Component Analysis (PCA) techniques in order to visualize and compare structures of interval data. Statistical units described by interval variables can be considered as special cases of symbolic data, in which only quantitative variables are considered. Moreover, the SDA approach to the treatment of interval data offers many useful tools that can be helpful in the interpretation of results. For these reasons our approach to interval data representation is presented by adopting the notations and definitions of the SDA domain.

2 Basic concepts and notation

Let Ω be a set of ωi (1 ≤ i ≤ n) Symbolic Objects (SO), that are described by p variables or descriptors: \(Y=\{y_{1},\ldots,y_{j},\ldots,y_{p}\}\).

Nowadays the SDA is based either on numerical treatments of suitably coded SO’s followed by symbolic interpretation of results, or on symbolic methods that directly process the symbolic descriptors.

In the following we adopt the first approach in order to analyze SO’s described only by quantitative interval variables.

Hence, the generic variable yj no longer represents a single-valued variable, as in classical data analysis, but refers to the lower \(\underline{y}_{j}\) and the upper \(\overline{y}_{j}\) bounds of the interval assumed by the j-th variable. The notation O1,…, Oj,…, Op is adopted to define the domains of the p variables. Therefore, the symbolic data matrix Y has dimensions (n × 2p).

A basic kind of SO, denoted as event, is indicated as: \(e_{j}=[y_{j}=V_{j}]\), where Vj ⊆ Oj. The logical conjunction of events defines the so called symbolic assertion object \(a_{i}=\wedge_{j=1}^{p}[y_{j}=V_{j}^{i}]\). In order to process SO’s described by interval variables by numerical methods, they are re-coded by combining min and max values into the so called vertices data matrix. In the simple case of p = 2, the description of the generic SO ωi is associated with the ith row of the interval data matrix Y: \(\left[\underline{y_{i,1}},\ \overline{y_{i,1}},\ \underline{y_{i,2}},\ \overline{y_{i,2}}\right]\).

The related vertices coordinates with respect to the new variables z1 and z2 (having the same domains as y1 and y2, respectively) correspond to the rows of the matrix Zi:

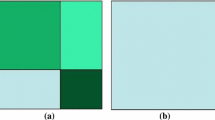

In a geometric view (Figure 1) the generic SO ωi is represented by a rectangle, having \(2^{p}=4\) vertices corresponding to all possible (min, max) combinations.

In the general case of p variables, each coding matrix Zi has \(2^{p}\) rows and p columns. The stacked coding matrix Z is obtained by superposing the n coding matrices Zi (with 1 ≤ i ≤ n). Matrix Z has \(N=n2^{p}\) rows and p columns, and represents the numerical coding of the n SO’s. Without loss of generality we can assume that the zj variables are standardized.
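The vertices coding described above can be sketched in a few lines. This is only an illustration: the function names and the two example objects are hypothetical, and the ordering of the vertices within each Zi is an arbitrary choice.

```python
from itertools import product

def vertices_matrix(intervals):
    """Return the 2**p vertices of one hypercube.

    `intervals` is a list of p (lower, upper) pairs; each vertex picks
    either the lower or the upper bound of every variable.
    """
    return [list(vertex) for vertex in product(*intervals)]

def stacked_vertices(objects):
    """Stack the vertices matrices Z_i of all objects into Z (n * 2**p rows)."""
    Z = []
    for intervals in objects:
        Z.extend(vertices_matrix(intervals))
    return Z

# Two hypothetical symbolic objects described by p = 2 interval variables.
objects = [
    [(1.0, 3.0), (10.0, 20.0)],
    [(2.0, 5.0), (15.0, 18.0)],
]
Z = stacked_vertices(objects)
for row in Z:
    print(row)
```

With n = 2 and p = 2 the stacked matrix has 2 × 2² = 8 rows and 2 columns; the first four rows are the corners of the rectangle of the first object.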

3 Vertices Principal Component Analysis

The extension of the Principal Component Analysis (PCA) to interval data has been proposed by Cazes, Chouakria, Diday and Schektman (1997) and by Chouakria, Diday and Cazes (1998) as “Vertices Principal Component Analysis” (V-PCA).

In the original proposal, the V-PCA consists in performing a classical PCA on the standardized matrix Z. In this way, vertices are elements of the subspace ℝp, whereas the p quantitative descriptors are elements of ℝN. V-PCA looks for a suitable subspace where to represent SO’s and, from a dual point of view, to represent the p variables.

As in classical PCA, the optimal sub-space is here spanned by the axes vm (with 1 ≤ m ≤ p) that maximize the sum of squares of the projected vertices coordinates \(\psi_{m}=\mathbf{Zv}_{m}\):

\[\psi_{m}^{\prime}\psi_{m}=\mathbf{v}_{m}^{\prime}\mathbf{Z}^{\prime}\mathbf{Z}\mathbf{v}_{m} \qquad (1)\]

with \(\mathbf{v}_{m}^{\prime} \mathbf{v}_{m^{\prime}}=0\) for m ≠ m′ and \(\mathbf{v}_{m}^{\prime} \mathbf{v}_{m^{\prime}}=1\) for \(m=m^{\prime}\). Therefore, the characteristic equation of V-PCA in ℝN is given by:

\[\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{Z}\mathbf{v}_{m}=\lambda_{m}\mathbf{v}_{m} \qquad (2)\]

where vm and λm are the generic eigenvector and the generic eigenvalue respectively, associated to the matrix \(\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{Z}\).

Performing the analysis in the ℝp space we define the equation:

\[\frac{1}{N}\mathbf{Z}\mathbf{Z}^{\prime}\mathbf{w}_{m}=\lambda_{m}\mathbf{w}_{m} \qquad (3)\]

which has the same non-zero eigenvalues as (2) but different eigenvectors, linked by the relation \(\mathbf{v}_{m}=\lambda_{m}^{-1 / 2} \mathbf{Z}^{\prime} \mathbf{w}_{m}\). As in classical PCA, the principal axes in V-PCA are interpreted with reference to the variables zj having maximal contributions; see Lebart, Morineau and Piron (1995).
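The link between the two eigenproblems can be verified in two lines. The sketch below assumes that the dual analysis is the eigenproblem of \(\frac{1}{N}\mathbf{Z}\mathbf{Z}^{\prime}\) with eigenvector \(\mathbf{w}_{m}\); pre-multiplying by \(\mathbf{Z}^{\prime}\) shows that \(\mathbf{Z}^{\prime}\mathbf{w}_{m}\) is an eigenvector of \(\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{Z}\) with the same eigenvalue whenever \(\lambda_{m}\neq 0\).

```latex
\begin{aligned}
\tfrac{1}{N}\mathbf{Z}\mathbf{Z}^{\prime}\mathbf{w}_{m}
  &= \lambda_{m}\mathbf{w}_{m}
  && \text{(assumed dual eigen-equation)}\\
\tfrac{1}{N}\mathbf{Z}^{\prime}\mathbf{Z}\,\bigl(\mathbf{Z}^{\prime}\mathbf{w}_{m}\bigr)
  &= \mathbf{Z}^{\prime}\bigl(\tfrac{1}{N}\mathbf{Z}\mathbf{Z}^{\prime}\mathbf{w}_{m}\bigr)
   = \lambda_{m}\,\bigl(\mathbf{Z}^{\prime}\mathbf{w}_{m}\bigr)\\
\bigl\|\mathbf{Z}^{\prime}\mathbf{w}_{m}\bigr\|^{2}
  &= \mathbf{w}_{m}^{\prime}\mathbf{Z}\mathbf{Z}^{\prime}\mathbf{w}_{m}
   = N\lambda_{m}\,\mathbf{w}_{m}^{\prime}\mathbf{w}_{m}
\end{aligned}
```

Rescaling \(\mathbf{Z}^{\prime}\mathbf{w}_{m}\) to unit length therefore gives a vector proportional to \(\lambda_{m}^{-1/2}\mathbf{Z}^{\prime}\mathbf{w}_{m}\); the remaining constant depends only on how \(\mathbf{w}_{m}\) is normalised.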

In case of normalized variables, contributions are calculated as the squared correlation variable/factor: \(\mathrm{CTA}_{j, m}=\left(\lambda_{m}^{1 / 2} v_{j, m}\right)^{2} / \lambda_{m}=v_{j, m}^{2}\).

The vertices coordinates of the SO ωi on the principal axes are given by the vector: \(\psi_{i,m}=Z_{i}\mathbf{v}_{m}\). The representation of ωi on the generic axis m is given by the segment including all vertices projections. Adopting the same criterion in a two dimensional space spanned by the axes m and m′, the extreme vertices projections define a rectangle called maximum covering area rectangle (MCAR).
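The V-PCA steps described so far (classical PCA on the standardized vertices, projection of the vertices, rectangle covering the projections of each SO) can be sketched with NumPy. The data and all function names are hypothetical, and standardization with the population standard deviation is an assumption made here so that the eigenvalues sum to p.

```python
from itertools import product
import numpy as np

def stack_vertices(objects):
    """Return Z (all hypercube vertices) and the owner SO index of each row."""
    rows, owner = [], []
    for i, intervals in enumerate(objects):
        for vertex in product(*intervals):   # every (min, max) combination
            rows.append(vertex)
            owner.append(i)
    return np.array(rows, dtype=float), np.array(owner)

def v_pca(Z):
    """Classical PCA on the standardized vertices matrix."""
    Zs = (Z - Z.mean(axis=0)) / Z.std(axis=0)
    N = Zs.shape[0]
    lam, V = np.linalg.eigh(Zs.T @ Zs / N)   # symmetric p x p matrix
    order = np.argsort(lam)[::-1]            # largest eigenvalue first
    return Zs, lam[order], V[:, order]

def mcar(psi, owner, i, axes=(0, 1)):
    """Smallest axis-parallel rectangle covering the projected vertices of SO i."""
    pts = psi[owner == i][:, list(axes)]
    return pts.min(axis=0), pts.max(axis=0)

# Hypothetical data: n = 3 objects, p = 3 interval variables.
objects = [
    [(1.0, 3.0), (10.0, 20.0), (0.2, 0.4)],
    [(2.0, 5.0), (15.0, 18.0), (0.1, 0.9)],
    [(0.0, 1.0), (11.0, 13.0), (0.5, 0.6)],
]
Z, owner = stack_vertices(objects)
Zs, lam, V = v_pca(Z)
psi = Zs @ V                                 # vertices coordinates on all axes
lower, upper = mcar(psi, owner, 0)
print("explained inertia:", lam / lam.sum())
print("MCAR of object 0:", lower, upper)
```

The pair `(lower, upper)` gives the opposite corners of the rectangle on the chosen plane; because it is built from the extreme projections only, it can cover a region much larger than the projected hypercube itself.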

Although the MCAR representation of SO’s on a plane is coherent with the hypercubes associated to each SO, the MCAR’s are often oversized with respect to the real SO in ℝp.

In order to overcome this drawback, Chouakria et al. (1998) proposed to reduce the dimensions of the MCAR’s by retaining in the representation only the vertices with a high quality of representation. The latter is measured by the squared cosines criterion: \(\mathrm{CRT}_{q, m}=\sum\nolimits_{j=1}^{p}\left(z_{q, j} v_{j, m}\right)^{2} / \sum\nolimits_{j} z_{q, j}^{2}\) with 1 ≤ m ≤ p, where \(z_{q,j}\) is the j-th coordinate of the generic vertex q of the SO ωi.

It seems clear that these drawbacks of the MCAR representations of SO’s depend on the criterion optimized in V-PCA. In fact, V-PCA optimizes the representation of the vertices at the expense of that of the SO’s; vertices are assumed to be independent and any relationship among vertices belonging to the same SO is lost. The SO’s are rebuilt ex post via the MCAR’s, and consequently the interpretation of the principal axes cannot be referred to the main characteristics of the SO’s.

In the next sections, we propose some new approaches that treat vertices belonging to the same SO in a consistent way [§4] and stress the size and shape of SO’s in the graphical representation [§5].

4 Symbolic Objects based PCA

In this section, we introduce a new PCA that takes into account the necessary vertices cohesion constraint. In this direction, our proposal is to maximize the variance among SO’s instead of the total vertices variance, as done in V-PCA. Hereinafter, we call the proposed approach Symbolic Object Principal Component Analysis (SO-PCA). The method represents a more realistic extension of PCA to interval data and it can be extended, without loss of generality, to any data structure that can be handled numerically.

Analogously to classical PCA, SO-PCA is based on the search of axes maximizing the differences among statistical units which, in this context, are SO’s.

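The main idea behind the method consists in maximizing the between SO’s variance matrix:

\[\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{A}\left(\mathbf{A}^{\prime}\mathbf{A}\right)^{-1}\mathbf{A}^{\prime}\mathbf{Z}\]

where we denote by A the boolean matrix (N × n) describing the membership of the N vertices in the n SO’s.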

Looking for the axes that maximize the difference among SO’s, the solution in the space ℝN is obtained by the following characteristic equation:

\[\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{A}\left(\mathbf{A}^{\prime}\mathbf{A}\right)^{-1}\mathbf{A}^{\prime}\mathbf{Z}\,\tilde{\mathbf{v}}_{m}=\tilde{\lambda}_{m}\tilde{\mathbf{v}}_{m}\]

where \(\tilde{\mathbf{v}}_{m}\) is defined under the orthonormality constraints already expressed in (1) and \(\tilde{\lambda}_{m}\) is the associated eigenvalue. Considering that \(\mathbf{P}_{\mathbf{A}}=\mathbf{A}\left(\mathbf{A}^{\prime} \mathbf{A}\right)^{-1} \mathbf{A}^{\prime}\) is an orthogonal projector matrix, this approach represents a special case of the so called PCA with respect to a reference sub-space (D’Ambra and Lauro, 1982), here spanned by the columns of A.

Vertices coordinates, of the generic SO ωi on the axis m, are given by the vector \(\tilde{\psi}_{i, m}=Z_{i} \tilde{\mathbf{v}}_{m}\).
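A minimal NumPy sketch of the SO-PCA computation follows. It assumes that the between-SO variance matrix is \(\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{P}_{\mathbf{A}}\mathbf{Z}\) with the projector \(\mathbf{P}_{\mathbf{A}}\) defined above; the data and variable names are hypothetical.

```python
from itertools import product
import numpy as np

# Hypothetical data: n = 3 objects, p = 2 interval variables.
objects = [
    [(1.0, 3.0), (10.0, 20.0)],
    [(2.0, 5.0), (15.0, 18.0)],
    [(0.0, 1.0), (11.0, 13.0)],
]
n, p = len(objects), len(objects[0])

Z = np.array([v for obj in objects for v in product(*obj)], dtype=float)
N = Z.shape[0]                                   # N = n * 2**p
Zs = (Z - Z.mean(axis=0)) / Z.std(axis=0)        # standardized vertices

# Boolean membership matrix A (N x n): vertices are stacked object by object.
A = np.kron(np.eye(n), np.ones((2 ** p, 1)))
P_A = A @ np.linalg.inv(A.T @ A) @ A.T           # orthogonal projector

B = Zs.T @ P_A @ Zs / N                          # assumed between-SO variance matrix
lam, V = np.linalg.eigh(B)
order = np.argsort(lam)[::-1]                    # largest eigenvalue first
lam, V = lam[order], V[:, order]

psi = Zs @ V                                     # vertices coordinates on the axes
print("between-SO inertia per axis:", lam)
```

Multiplying by `P_A` replaces every vertex with the mean of the vertices of its own SO, which is how the projector ties together the vertices of the same object.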

The analysis can also be exploited in ℝp and leads to the following eigenequation:

Variable contributions are defined as in V-PCA, whereas we propose to evaluate the representation of the SO’s by considering each SO as a whole and representing only the SO’s having high CRT values. In V-PCA, by contrast, vertices contributions are evaluated individually and the MCAR’s are built only with the vertices having a high contribution. The proposed contribution measure \(\mathrm{CRT}_{\omega_{i}}\) is obtained by:

The graphical interpretation of SO’s — as in V-PCA — is made by means of maximum covering area rectangles. Nevertheless, in our approach the adopted constraints on the vertices make the analysis consistent with the aim of PCA on SO’s and offer a more compact SO’s representation.

The previous treatment of interval data remains in the context of the so called multiple PCA (Escofier and Pagés, 1988) where vertices of each SO are referred to the general mean of each variable.

The partial PCA can be alternatively used to better stress the differences among SO’s. The following section shows a partial PCA in which vertices are centered with respect to the min value.

5 A PCA on the range transformation of interval data

In the classical PCA, each statistical unit is represented by a point, while in SDA we have to cope with SO’s shape and size. In order to take into account these SO’s structural elements, we use the range transformation \(V_{j}^{i}=\left[\overline{y_{i, j}}-\underline{y_{i, j}}\right]\), that reveals useful information in studying SO’s size and shape.

In SDA, in fact, an important role is played by the Description Potential (DP) measure, which is the hypervolume of a SO, computed as the Cartesian product \(V_{1}^{i}\times \cdots \times V_{j}^{i} \times \cdots \times V_{p}^{i}\) of the p descriptors associated to the Symbolic Object ωi (1 ≤ i ≤ n) (De Carvalho, 1992; De Carvalho, 1997). When interval descriptors need to be normalized, the DP of the assertion ai is formally defined as:

\[\pi(a_{i})=\prod_{j=1}^{p}\overline{V}_{j}^{i}\]

where \(\overline{V}_{j}^{i}\) indicates the normalised range with respect to the domain Oj: \(\overline{V}_{j}^{i}=V_{j}^{i}/\mathrm{O}_{j}\). As the DP measure tends to zero if at least one \(\overline{V}_{j}^{i}\) is close to zero, we prefer to use the following alternative measure, called Linear Description Potential (LDP). The LDP of ai is defined by De Carvalho (1997) as:

\[\sigma(a_{i})=\sum_{j=1}^{p}\overline{V}_{j}^{i}\]

Let a1,…, ai,…, an be a set of assertion SO’s described by p interval descriptors, and let X denote the (n × p) matrix having as generic term \(x_{ij}=\sqrt{\overline{V}_{j}^{i}}\).
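The difference between the two measures is easy to see numerically. The sketch below reads the DP as the product of the normalised ranges (the hypervolume) and the LDP as their sum; the function names, the example intervals and the domain widths are hypothetical.

```python
import math

def normalised_ranges(intervals, domain_widths):
    """Range of each interval divided by the width of the variable's domain."""
    return [(upper - lower) / width
            for (lower, upper), width in zip(intervals, domain_widths)]

def dp(v_bar):
    """Description Potential: product of the normalised ranges."""
    return math.prod(v_bar)

def ldp(v_bar):
    """Linear Description Potential: sum of the normalised ranges."""
    return sum(v_bar)

# Hypothetical object: the second interval is degenerate (a single value).
intervals = [(2.0, 4.0), (7.0, 7.0), (1.0, 2.0)]
domain_widths = [4.0, 10.0, 4.0]

v_bar = normalised_ranges(intervals, domain_widths)   # [0.5, 0.0, 0.25]
x_row = [math.sqrt(v) for v in v_bar]                 # row of the matrix X

print(dp(v_bar))    # 0.0: one degenerate interval cancels the whole product
print(ldp(v_bar))   # 0.75
```

The squared entries of `x_row` add up to the LDP of the object, which is why a PCA of X can be read as a decomposition of the total LDP.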

The method proposed in this section performs a factorial decomposition of the quantity \(\sum\nolimits_{i=1}^{n} \sigma(a_{i})\), allowing a graphical representation that, unlike V-PCA, shows the influence of the descriptors on the total LDP.

From a geometric point of view, the range transformation implies a translation of each object, so that the vertices \(min=\{\underline{y_{i,1}},\ldots, \underline{y_{i,j}},\ldots,\underline{y_{i,p}}\}\) are moved to the origin (see Figure 2).

It is easy to see that, given the orthogonality between pairs of sides of each hypercube, the search for a suitable sub-space in which to visualize the size and shape of each SO can simply be carried out on the n max vertices \(\overline{y_{i,1}}\),…,\(\overline{y_{i,j}}\),…,\(\overline{y_{i,p}}\) as a PCA with respect to the origin (non-centered PCA). Notice that in this way the curse of dimensionality, which affects V-PCA as well as SO-PCA, is eliminated: the total number of points is reduced from \(n2^{p}\) to n.

We refer to this approach as Principal Component Analysis on the Range Transformation (RT-PCA) of interval variables. As in this PCA the vertices cohesion is automatically respected, hypercubes and their images can be easily visualized by projecting the other vertices on the factorial planes as supplementary points.

The PCA performed on the matrix X decomposes the LDP criterion \(\operatorname{tr}\left(\mathbf{X X}^{\prime}\right)=\operatorname{tr}\left(\mathbf{X}^{\prime} \mathbf{X}\right)=\sum\nolimits_{i} \sigma\left(a_{i}\right)\) according to the following eigenanalysis:

\[\mathbf{X}\mathbf{X}^{\prime}\mathbf{u}_{m}=\mu_{m}\mathbf{u}_{m}\]

or equivalently:

\[\mathbf{X}^{\prime}\mathbf{X}\mathbf{t}_{m}=\mu_{m}\mathbf{t}_{m}\]

where μm is the generic eigenvalue \(\left(\sum\nolimits_{m} \mu_{m}=\sum\nolimits_{i} \sigma\left(a_{i}\right)\right)\) and um and tm are the associated eigenvectors in the space ℝp and ℝn respectively. Both analyses are defined under the usual orthonormality constraints.

The representation of the SO ωi in the optimal sub-space of dimension m* < p can be obtained from the matrix Φ, whose columns are the juxtaposition of the first m* (1 ≤ m* ≤ p) axes:

\[\mathbf{\Phi}=\left[\phi_{1}|\cdots|\phi_{m}|\cdots|\phi_{m^{*}}\right]\]

with \(\phi_{m}=\mathbf{Xt}_{m}\).

In the RT-PCA the amount of information associated to each axis is given by the corresponding eigenvalue μm. The ratio between the squared coordinate and the eigenvalue, both with respect to the axis m:

\[\phi_{i,m}^{2}/\mu_{m} \qquad (11)\]

measures the contribution of the SO ωi to the principal axis m. The relative contribution, indicating the quality of the representation, is measured by \(\mathrm{CTR}_{i, m}=\phi_{i, m}^{2} / \sum\nolimits_{j} x_{i, j}^{2}\), with \(m=\{1,\ldots,m^{*}\}\).

Since the matrix X has all positive entries, the eigenvector u1 and the factor t1 also have all positive values, so that the first axis is easily interpreted as a size factor of the SO’s, while the following ones discriminate SO’s according to their shape features. Their interpretation depends on the contribution (squared coordinates) of the original variables to the axis: \(\mathrm{CTA}_{j,m}=t_{j,m}^{2}\).
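The properties stated for RT-PCA can be checked on a small example. The sketch below runs a non-centered PCA of X through the eigendecomposition of X′X; the normalised ranges are hypothetical, and the sign of the first eigenvector is fixed by hand because eigenvectors are only defined up to sign.

```python
import numpy as np

# Hypothetical normalised ranges (n = 4 objects, p = 3 variables).
V_bar = np.array([
    [0.10, 0.40, 0.90],
    [0.30, 0.20, 0.10],
    [0.50, 0.50, 0.05],
    [0.05, 0.15, 0.20],
])
X = np.sqrt(V_bar)

mu, T = np.linalg.eigh(X.T @ X)          # non-centered PCA: no mean removal
order = np.argsort(mu)[::-1]             # largest eigenvalue first
mu, T = mu[order], T[:, order]
if T[:, 0].sum() < 0:                    # choose the positive orientation
    T[:, 0] = -T[:, 0]

Phi = X @ T                              # coordinates phi_m = X t_m
contrib = Phi ** 2 / mu                  # contribution of each SO to each axis

print("total LDP:", V_bar.sum(), "sum of eigenvalues:", mu.sum())
print("first axis loadings:", T[:, 0])
```

On these data the eigenvalues add up to the total LDP and the first axis has loadings and coordinates of a single sign, in line with its reading as a size factor; each column of `contrib` sums to one.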

It is worth noticing that in this PCA on the range transformation, SO’s can be, more simply, represented by single points (Max vertices coordinates). Therefore, close points refer to SO’s whose LDP’s are mainly influenced by the same variables. In other words, the closeness indicates that SO’s are characterized by hypercubes having similar shape and size.

A direction for future research could be the definition of new SO coding techniques that can directly cope with interval variables. In this framework, we propose to consider, for each SO, the following (p + 2) characteristics: [(\(V_{1}^{i}\),…,\(V_{j}^{i}\),…,\(V_{p}^{i}\)); Li; cos αi], where Li is the length of the main diagonal of the i-th hypercube (i.e. the Euclidean distance between the vertices \(\underline{y_{j}^{i}}\) and \(\overline{y_{j}^{i}}\)) and αi is the angle that the segment Li forms with the variable y1. These variables include all the characteristics of the SO’s but define a data structure that is easier to handle.
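As an illustration of this coding, the (p + 2) characteristics of one hypercube can be computed directly from its intervals. The function name is hypothetical, and the cosine is taken here as the ratio between the first range and the diagonal, which is one natural reading of "the angle that the segment Li forms with the variable y1".

```python
import math

def shape_descriptors(intervals):
    """Ranges, main-diagonal length and cosine of the angle with the first axis."""
    ranges = [upper - lower for lower, upper in intervals]
    diagonal = math.sqrt(sum(r * r for r in ranges))   # distance min vertex -> max vertex
    # Assumed reading: cos(alpha) = V_1 / L; undefined for a degenerate hypercube.
    cos_alpha = ranges[0] / diagonal if diagonal > 0 else float("nan")
    return ranges, diagonal, cos_alpha

# Hypothetical object with p = 2 interval variables.
ranges, diagonal, cos_alpha = shape_descriptors([(1.0, 4.0), (2.0, 6.0)])
print(ranges, diagonal, cos_alpha)   # [3.0, 4.0] 5.0 0.6
```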

6 SO-PCA: a mixed strategy

In the above sections, we have considered two different approaches to the analysis of interval data. The SO-PCA allows SO’s to be evaluated with respect to their positioning in the space of the recoded descriptors. On the other hand, RT-PCA processes interval data by highlighting the size and shape of the SO’s. This section provides a mixed strategy combining the SO-PCA [§4] and the RT-PCA [§5] in order to improve the representation of the SO’s, taking into account their differences in terms of scale and structural (size and shape) characteristics.

With this aim, we consider the following three-step approach:

i) perform the RT-PCA of X in order to extract the principal axes that better represent the size and shape of SO’s;

ii) transform Z as \(\hat{\mathbf{Z}}=\mathbf{P}_{\mathbf{A}}\mathbf{Z}\), that permits to take into account the SO vertices cohesion;

iii) perform a PCA on the projections of the rows of \(\hat{\mathbf{Z}}\) on Φ by PΦ projection matrix (defining \(\mathbf{P}_{\mathbf{\Phi}}=\mathbf{\Phi}\left(\mathbf{\Phi}^{\prime} \mathbf{\Phi}\right)^{-1} \mathbf{\Phi}^{\prime}\)), in order to stress the size and shape information as extracted by X in [§5].

The approach leads to the following eigenanalysis:

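where the diagonal matrix \(\left(\mathbf{A}^{\prime}\mathbf{A}\right)^{-1}\) has been decomposed in the product \(\left(\mathbf{A}^{\prime}\mathbf{A}\right)^{-1/2}\left(\mathbf{A}^{\prime}\mathbf{A}\right)^{-1/2}\) in order to ensure the symmetry, and sm and ρm are the generic eigenvector and the eigenvalue, respectively, calculated under the orthonormality constraints (1).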
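The three steps can be sketched as follows. This is a tentative illustration only: the eigenanalysis is not spelled out in the text available here, so the code assumes that it has the symmetric form \(\frac{1}{N}\mathbf{Z}^{\prime}\mathbf{A}(\mathbf{A}^{\prime}\mathbf{A})^{-1/2}\,\mathbf{P}_{\mathbf{\Phi}}\,(\mathbf{A}^{\prime}\mathbf{A})^{-1/2}\mathbf{A}^{\prime}\mathbf{Z}\), which is one way of combining the two projectors that is dimensionally consistent with the description. The data, the number of retained axes and all variable names are hypothetical.

```python
from itertools import product
import numpy as np

# Hypothetical data: n = 4 objects, p = 3 interval variables.
objects = [
    [(1.0, 3.0), (10.0, 20.0), (0.2, 0.4)],
    [(2.0, 5.0), (15.0, 18.0), (0.1, 0.9)],
    [(0.0, 1.0), (11.0, 13.0), (0.5, 0.6)],
    [(4.0, 4.5), (12.0, 19.0), (0.3, 0.8)],
]
n, p = len(objects), len(objects[0])
bounds = np.array(objects)                         # shape (n, p, 2)

# Step i) RT-PCA on X, the square roots of the normalised ranges.
ranges = bounds[:, :, 1] - bounds[:, :, 0]
domains = bounds[:, :, 1].max(axis=0) - bounds[:, :, 0].min(axis=0)
X = np.sqrt(ranges / domains)
mu, T = np.linalg.eigh(X.T @ X)
T = T[:, np.argsort(mu)[::-1]]
m_star = 2                                         # number of retained axes (a choice)
Phi = X @ T[:, :m_star]                            # n x m_star
P_Phi = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)  # projector onto span(Phi)

# Step ii) vertices cohesion through the membership matrix A.
Z = np.array([v for obj in objects for v in product(*obj)], dtype=float)
N = Z.shape[0]
Zs = (Z - Z.mean(axis=0)) / Z.std(axis=0)
A = np.kron(np.eye(n), np.ones((2 ** p, 1)))
D = np.diag(1.0 / np.sqrt(np.diag(A.T @ A)))       # (A'A)^(-1/2), diagonal

# Step iii) ASSUMED form of the final eigenanalysis (see the lead-in).
G = D @ A.T @ Zs                                   # n x p
M = G.T @ P_Phi @ G / N                            # symmetric p x p matrix
rho, S = np.linalg.eigh(M)
order = np.argsort(rho)[::-1]
rho, S = rho[order], S[:, order]
coords = Zs @ S[:, :2]                             # vertices on the first plane
print("eigenvalues:", rho)
```

Under this assumption the matrix `M` has rank at most `m_star`, so at most `m_star` axes carry information; dropping the first column of `Phi` before building `P_Phi` would emphasise shape rather than size.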

Figure 3 shows an intuitive scheme of the procedure. The upper side represents vertices in the ℝz space; the two ellipses refer to two different SO’s. By means of PΦ, these are projected into the space ℝt, where the V-PCA is performed. The final result of the analysis is represented by the sub-space spanned by s1 and s2, where SO’s are represented by means of MCAR’s.

The interpretation of results depends on the choice of PΦ. In fact, the projection matrix makes it possible to highlight different aspects of the considered set of SO’s, since PΦ acts as a weighting system with respect to the size and shape of the SO’s. The generic diagonal term of PΦ is equal to the quantity in (11): \(\phi_{i}\left(\phi_{i}^{\prime} \phi_{i}\right)^{-1} \phi_{i}^{\prime}=\sum\nolimits_{m} \phi_{i, m}^{2} / \mu_{m}\).

To show the size of the SO’s, we include the first principal component ϕ1 in the definition of PΦ; alternatively, the shape aspects can be highlighted by dropping the first principal component.

7 Example on a real data-set

This section shows an example of the proposed methodology on a real data-set. We use the Oils’ data set (Ichino, 1988) (reproduced in Table 1), which is largely used in SDA applications and whose characteristics are well-known to people working on the topic. The data set presents eight different classes of oils described by five variables; we only refer to the four quantitative interval variables: “Specific gravity”, “Freezing point”, “Iodine value” and “Saponification”.

In the following, we confine the presentation to the graphical results of the different approaches proposed in the paper.

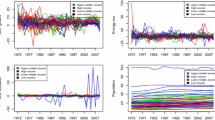

In Figure 4, we show the results achieved by the V-PCA with respect to the first two axes (first plane). Notice that 88.4% of the total inertia is explained by the first two axes. In the figure, closeness among MCAR’s indicates SO’s mainly influenced by the same descriptors, but we cannot give any interpretation of the similarity in size and shape among MCAR’s. The variables are also represented as supplementary points, even though they should be represented in the space ℝN. This simultaneous representation is nevertheless very useful for the interpretation, provided that the variables are evaluated only with respect to their directions.

SO’s associated to MCAR’s on the left side of the plane refer to oils mainly characterized by high values of Saponification and Freezing point, whereas on the opposite side we have oils characterized by high values of Specific gravity and Iodine value.

In Figure 5, we represent the result obtained by the SO-PCA approach. The interpretation follows the same rules as for the V-PCA. In this case, the total inertia associated to the first plane is equal to 90.7%. Comparing the two outputs, we note how the vertices cohesion constraints reduce the MCAR’s and make the representation of the SO’s clearer. In fact, the overlapping of the MCAR’s is considerably reduced, in particular for the SO’s near the origin of the axes.

It is important to pay attention to the interpretation of the MCAR’s dimensions. The Linseed MCAR is represented along the directions of the Iodine value and Specific gravity variables, meaning that high values have been registered for these two variables. Nevertheless, we cannot give any indication of which variables have mainly influenced its size and shape. This can be done by looking at the results of the RT-PCA shown in the rest of this section.

In the two examples above, we gave an interpretation with respect to the position of the MCAR’s but not to their size and shape. Here, we start analyzing these two aspects by adopting the approach discussed in [§5].

First we have to compute the variables domains (defined in [§5]) as the ranges between the lowest minimum value and the highest maximum value for each variable. They are equal to: Specific gravity = 0.08; Freezing point = 65.0; Iodine value = 168.0; Saponification = 84.0.

Sums of normalized ranges define the LDP of the SO’s, as shown in the rightmost column of Table 1.

Looking at the variable contributions to the LDP, we have the following values: Specific gravity = 0.775; Freezing point = 0.723; Iodine value = 0.720; Saponification = 1.619. All variables have almost the same contribution except Saponification, which has a much larger value (1.619). A large amount of this contribution comes from the Linseed oil.
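The computation behind these figures can be sketched on a small example. The intervals below are hypothetical and are not the values of Table 1; the sketch only shows how domains, per-object LDP (row sums) and per-variable contributions (column sums of the normalised ranges) relate to each other.

```python
# Hypothetical interval data: three objects, two variables.
objects = {
    "A": [(0.90, 0.94), (-10.0, -2.0)],
    "B": [(0.86, 0.88), (20.0, 30.0)],
    "C": [(0.91, 0.92), (-5.0, 5.0)],
}
p = 2

# Domain of each variable: highest maximum minus lowest minimum.
domains = [
    max(iv[j][1] for iv in objects.values())
    - min(iv[j][0] for iv in objects.values())
    for j in range(p)
]

# Normalised ranges, one row per object.
v_bar = {
    name: [(iv[j][1] - iv[j][0]) / domains[j] for j in range(p)]
    for name, iv in objects.items()
}

# LDP of each object (row sums) and contribution of each variable (column sums).
ldp = {name: sum(row) for name, row in v_bar.items()}
contribution = [sum(row[j] for row in v_bar.values()) for j in range(p)]

print("domains:", domains)
print("LDP per object:", ldp)
print("contribution per variable:", contribution)
```

Row sums and column sums add up to the same total, so the variable contributions are simply another breakdown of the total LDP.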

Figure 6 shows the descriptors positioning with respect to the first two factors. The total amount of LDP explained by these two factors is equal to 96.7%.

Looking at Figures 6 and 7 at the same time we note that the biggest SO is Linseed Oil (Fig. 7) and that its dimension is mainly determined by the Saponification descriptor (Fig. 6).

Dealing with very few SO’s, these statements can also be easily verified by looking at the original data table (Table 1). Moreover, Linseed, Cotton and Hog oils have their LDP mainly influenced by Saponification and Iodine value, whereas the rest of oils have been mainly influenced by Freezing point and Specific gravity descriptors.

The last approach proposed is the SO-PCA mixed strategy [§6], in which all the information is involved. Figure 8 shows the related representation. In this example, however, due to the small number of variables, we cannot appreciate very relevant changes in the representation with respect to the V-PCA. This approach becomes useful when many variables influence the structure of the SO’s, whereas in the considered example the structures are mainly influenced by the Saponification variable alone.

8 Perspective and conclusion

In the present paper we proposed some new approaches to the PCA for interval data in the SDA definition. From this point of view, we represented the SO’s on the factorial planes, highlighting both their structural aspects (size and shape) and their positioning in the factorial sub-space.

The proposed methodologies represent a first step in the SDA analysis and should be generalized to any type of symbolic descriptor (modal and multinomial variables) and, at the same time, extended to take into account the rules and taxonomies that could be defined in the symbolic data structure (Bock and Diday, 2000).

Another relevant aspect concerns the graphical representation, which could be made with more efficient geometric tools than the MCAR, for example convex hulls and maximum inertia diagonals (Verde and De Angelis, 1997).

As a final point, we indicate the axes interpretation that, in order to ensure the necessary consistency, should be made using the SDA language and tools. Also in this direction there are some contributions (Gettler-Summa, 1997) in literature that could be extended to the proposed factorial analyses.

References

Bock, H. H. and Diday, E. (eds): 2000, Analysis of Symbolic Data, Springer, (in press).

Cazes, P., Chouakria, A., Diday, E. and Schektman, Y.: 1997, Extension de l’analyse en composantes principales à des données de type intervalle, Revue de Statistique Appliquée XIV(3), 5–24.

Chouakria, A., Diday, E. and Cazes, P.: 1998, An improved factorial representation of symbolic objects, KESDA’98 27–28 April, Luxembourg.

D’Ambra, L. and Lauro, C. N.: 1982, Analisi in componenti principali in rapporto a un sottospazio di riferimento, Rivista di Statistica Applicata 15(1), 51–67.

De Carvalho, F. A. T.: 1992, Méthodes Descriptives en Analyse de Données Symboliques, Thèse de doctorat, Université Paris Dauphine, Paris.

De Carvalho, F. A. T.: 1997, Clustering of constrained symbolic objects based on dissimilarity functions, Indo-French Workshop on Symbolic Data Analysis and its Applications, University of Paris IX.

Diday, E.: 1987, Introduction à l’approche symbolique en analyse des données, Journées Symbolique-Numerique, Université Paris Dauphine.

Diday, E.: 1996, Une introduction à l’analyse des données symboliques, SFC, Vannes, France.

Escofier, B. and Pagés, J.: 1988, Analyse factorielles multiples, Dunod, Paris.

Gettler-Summa, M.: 1997, Symbolic marking application on car accident reports, Applied Stochastic Models and Data Analysis, Vol. Invited and Specialised Session Papers, ASMDA, Anacapri, pp. 299–306.

Ichino, M.: 1988, General metrics for mixed features — the cartesian space theory for pattern recognition, International Conference on Systems, Man and Cybernetics.

Lauro, C. and Palumbo, F.: 1998, New approaches to principal components analysis to interval data, International Seminar on New Techniques & Technologies for Statistics, NTTS’98, 4/6 nov. 1998, Sorrento, Italy.

Lebart, L., Morineau, A. and Piron, M.: 1995, Statistique exploratoire multidimensionnelle, Dunod, Paris.

Meccariello, G.: 1999, Analisi in componenti principali per dati ad intervallo, Tesi di Laurea in Statistica, Università di Napoli “Federico II”.

Verde, R. and De Angelis, P.: 1997, Symbolic objects recognition on a factorial plan, NGUS’97, Bilbao Spain.

Acknowledgements

This paper has been developed in the ISO-3D Esprit project at DMS of University of Naples “Federico II”. The output in [§7] has been realised with the collaboration of Dr. G. Meccariello (1999).


Lauro, C.N., Palumbo, F. Principal component analysis of interval data: a symbolic data analysis approach. Computational Statistics 15, 73–87 (2000). https://doi.org/10.1007/s001800050038
