Abstract

Time-dependent reliability (failure probability) measures the probability that a structure or mechanism operates normally (abnormally) within a given time interval. To determine the maximum probable life time under a required time-dependent failure probability (TDFP) constraint, this paper proposes an inverse process of time-dependent reliability analysis that accounts for the randomness of the input variables. The inverse process exploits the monotonicity of the TDFP with respect to the upper boundary of the time interval, which reflects the life time. An adaptive single-loop sampling meta-model of the time-dependent limit state function is presented to estimate the TDFP flexibly over the given time interval. Because the TDFP is generally monotonic in the upper boundary of the time interval, the maximum probable life time at the required TDFP can always be found by the dichotomy (bisection). The lower and upper boundaries of the time interval are adjusted so that their TDFPs bracket the required TDFP. Time is treated as an input at the same level as the random input variables. The adaptive single-loop sampling meta-model is constructed over a longer time interval whose TDFP exceeds the required TDFP. As a result, the TDFP in any subinterval of that time interval can be estimated as a byproduct of the meta-model, without additional evaluations of the actual limit state function. Combining the dichotomy with the unified meta-model of the time-dependent limit state function therefore improves the efficiency of the maximum probable life time analysis. Two examples illustrate the accuracy and efficiency of the proposed approach.



1 Introduction

Time-dependent reliability analysis aims at estimating the time-dependent failure probability (TDFP) of a structural or mechanical system with respect to a prescribed failure criterion during a given time interval \( [0, t_e] \). Material properties may deteriorate over time and loads may vary randomly with time (Hu and Du 2013; Singh et al. 2010), so time-dependent reliability analysis is highly significant in engineering. The time-dependent reliability is a function of the given time interval and of the distribution parameters of the random inputs. Reliability-based design optimization (RBDO) for time-dependent problems trades off higher reliability (lower TDFP) against lower cost over a given time interval \( [0, t_e] \) (Wang and Wang 2012; Bisadi and Padgett 2015). Its aim is to design the distribution parameters of the basic input variables for that interval. From another point of view, suppose the distribution parameters are fixed, i.e., epistemic uncertainty (Krzykacz-Hausmann 2006) is not considered. The variable of main concern is then the time t: given a required TDFP constraint, determine the maximum probable life time. To analyze the maximum probable life time under a required TDFP constraint, this paper proposes an inverse process corresponding to the TDFP. Because the epistemic uncertainty of the random inputs is not considered here, the time-dependent reliability becomes a function of the upper boundary \( t_e \) of the time interval and of the randomness of the inputs. The TDFP is generally monotonic in the upper boundary of the time interval. The dichotomy can therefore be employed efficiently in the inverse process to determine the maximum probable life time under the required TDFP, but its solution requires repeated evaluation of the straightforward (forward) model. An efficient straightforward model analysis is therefore vital for the inverse process.

At present, three mainstream classes of approaches are widely investigated to estimate the TDFP. The first is first-passage based approaches (Rice 1944; Andrieu-Renaud et al. 2004; Lutes and Sarkani 2009; Hu et al. 2013). The second is extreme performance based approaches (Li et al. 2007; Chen and Li 2007; Zhang et al. 2014; Wang and Wang 2015). The third is meta-model based approaches (Wang and Wang 2015). The key to the first class is the computation of the upcrossing rate. The PHI2 approach (Andrieu-Renaud et al. 2004) is a popular first-passage based approach. However, it relies on the first-order reliability method, so for highly nonlinear limit state functions its time-dependent reliability estimate may be notably inaccurate. In addition, when the TDFP is large, the error is often notable because the assumption that all upcrossings are independent does not hold. The extreme performance based approaches define the TDFP equivalently through the extreme performance, converting the time-dependent reliability analysis into a time-independent one. Thus, the approaches of static reliability analysis (Zhou et al. 2015; Du and Sudjianto 2004; Au and Beck 2001; Zhao and Ono 2001) can be introduced into time-dependent reliability analysis. Li and co-workers (Li et al. 2007; Chen and Li 2007) developed the probability density evolution method. Zhang et al. (2014) introduced the maximum entropy approach with fractional moment constraints to approximate the extreme value distribution of the time-dependent limit state function. Integrating the extreme value distribution over the failure domain then readily yields the TDFP.
The maximum entropy approach significantly reduces the sample size of the random inputs. However, obtaining the maximum performance over \( [0, t_e] \) for each input sample remains extremely expensive, because these approaches discretize continuous time into a series of time instants. The composite limit state (CLS) approach (Singh and Mourelatos 2010) likewise discretizes the time interval into subintervals. It builds the limit state by assembling the instantaneous limit states of all subintervals, which is also expensive. To greatly reduce the computational cost of extreme performance based analysis, Wang and Wang (2015) proposed a confidence-based meta-model approach. It uses a nested extreme response surface to surrogate the relationship between the random inputs and the extreme performance of the time-dependent limit state function, with double-loop adaptive sampling (DLAS). To reduce the cost further, Hu and Mahadevan (2016) proposed a single-loop Kriging (SILK) surrogate modeling approach. It removes the optimization loop of DLAS and generates the random variables and the time variable at the same level. However, the learning function of SILK only guarantees an accurate estimate of the TDFP over the whole interval \( [0, t_e] \). SILK regards the surrogate as successfully constructed once two conditions hold. For every failure point \( \boldsymbol{x}^* \), some \( t \in [0, t_e] \) gives \( \hat{g}(\boldsymbol{x}^*, t) < 0 \). For every safe point \( \boldsymbol{x}' \), \( \hat{g}(\boldsymbol{x}', t) \ge 0 \) holds for all \( t \in [0, t_e] \). Finding one \( t \in [0, t_e] \) with \( \hat{g}(\boldsymbol{x}^*, t) < 0 \) does not, in general, identify all failure time instants of \( \boldsymbol{x}^* \). Consequently, the SILK surrogate constructed over \( [0, t_e] \) cannot simultaneously estimate the TDFPs in arbitrary subintervals of \( [0, t_e] \). Following the SILK approach of Hu and Mahadevan, this paper constructs another single-loop adaptive sampling (SLAS) meta-model. In it, time is regarded as a random variable uniformly distributed on \( [0, t_e] \), and the time samples are generated at the same level as the input random variables. The accuracy condition of the proposed SLAS meta-model guarantees the correct classification of all instantaneous failure events against all instantaneous safe events. The SLAS approach therefore covers all instantaneous failure events during \( [0, t_e] \). SILK, by contrast, only identifies whether failure occurs within \( [0, t_e] \) for each realization of the input random variables. Thus, the SLAS meta-model inherits the advantages of SILK. It can also provide the TDFPs in any subintervals of the given time interval without extra model evaluations, which the existing approach cannot. Compared with the DLAS meta-model approach, the proposed approach reduces the computational cost by replacing the double loop with a single loop. Moreover, it covers all instantaneous failure events during the given interval, whereas DLAS covers only the extreme failure events.

The inverse process requires repeated evaluations of the straightforward model, i.e., evaluating the TDFPs within subintervals of the larger given time interval. Based on the SLAS meta-model, these evaluations can be implemented efficiently.

The paper is organized as follows. Section 2 reviews the definition of the time-dependent reliability/TDFP and defines the maximum probable life time under the required TDFP constraint. Section 3 reviews the existing mainstream approaches for TDFP analysis. Section 4 describes the proposed SLAS meta-model approach for time-dependent reliability/TDFP analysis. Section 5 gives the implementation procedure of the maximum probable life time analysis, which integrates the dichotomy with the proposed time-dependent reliability/TDFP analysis approach. Section 6 illustrates the accuracy and efficiency of the proposed time-dependent reliability/TDFP analysis approach. It is compared in particular with the nested extreme response surface based DLAS meta-model and other existing approaches. The examples also demonstrate the accuracy and efficiency of the proposed maximum probable life time analysis. Conclusions are summarized in Section 7.

2 The concept of the maximum probable life time

Let \( \boldsymbol{X} = (X_1, X_2, \dots, X_n)^{\mathrm{T}} \) denote the n-dimensional random input vector, \( t_e \) the designed life time or univariate motion input of interest, and \( g(\boldsymbol{X}, t) \) the time-dependent limit state function. The time-dependent reliability within \( [0, t_e] \) can be described as

\[ R(0, t_e) = \Pr\left\{ g(\boldsymbol{X}, t) \ge 0,\ \forall t \in [0, t_e] \right\} \tag{1} \]

where the time-dependent limit state equation is given by \( g(\boldsymbol{X}, t) = 0 \).

Analogously, the TDFP is defined as

\[ P_f(0, t_e) = \Pr\left\{ \exists t \in [0, t_e],\ g(\boldsymbol{X}, t) < 0 \right\} \tag{2} \]

The lower boundary of the time interval can be any constant in practical applications; for convenience it is fixed at zero. Clearly, \( P_f(0, t_e) + R(0, t_e) = 1 \).

The time-dependent reliability analysis is a straightforward (forward) analysis. In some cases, engineers want to know the maximum probable life time for a required TDFP constraint under the assumed distributions of the input variables. That is, they want to determine the interval \( [0, t^*] \) such that the TDFP within any subinterval of \( [0, t^*] \) does not exceed the limit \( P_f^* \). \( t^* \) is defined as the maximum probable life time and can be determined from the following equation

\[ P_f(0, t^*) = \Pr\left\{ \exists t \in [0, t^*],\ g(\boldsymbol{X}, t) < 0 \right\} = P_f^* \tag{3} \]

Solving (3) is clearly an inverse process of the TDFP analysis, i.e., determining the maximum upper boundary of the time interval under the required TDFP. \( P_f(0, t) \) is generally monotonic in the upper boundary t of the time interval; it cannot decrease as t grows, because the failure event within \( [0, t] \) only enlarges. The dichotomy can therefore be employed to determine efficiently the maximum probable life time \( t^* \) under the given \( P_f^* \). This amounts to finding the root of \( \xi(t^*) = \Pr\{\exists t \in [0, t^*], g(\boldsymbol{X}, t) < 0\} - P_f^* = 0 \). Each step of the dichotomy requires a straightforward analysis, i.e., a time-dependent reliability analysis.
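A minimal Python sketch of this dichotomy follows. It is for illustration only and assumes the closed-form TDFP of the toy limit state \( g(X, t) = 10 - X t^2 \) with \( X \sim N(10, 1) \) from Section 4.2. Because g decreases in t for X > 0, failure within [0, t] occurs exactly when \( X t^2 > 10 \), so \( P_f(0, t) = 1 - \Phi(10/t^2 - 10) \). In the proposed approach, `pf` would instead be the meta-model based estimate. The names `pf_toy`, `bisect_life_time`, `t_low`, `t_up` and `tol` are hypothetical.

```python
import math

def normal_cdf(z):
    # standard normal CDF via the error function
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def pf_toy(t):
    # closed-form TDFP P_f(0, t) of the toy example g(X, t) = 10 - X t^2, X ~ N(10, 1)
    if t <= 0.0:
        return 0.0
    return 1.0 - normal_cdf(10.0 / t**2 - 10.0)

def bisect_life_time(pf, p_target, t_low, t_up, tol=1e-6, max_iter=200):
    """Dichotomy for the largest t with pf(t) <= p_target, assuming pf is non-decreasing."""
    if pf(t_up) <= p_target:
        raise ValueError("t_up too small: P_f(0, t_up) must exceed the target")
    if pf(t_low) > p_target:
        raise ValueError("t_low too large: P_f(0, t_low) must not exceed the target")
    for _ in range(max_iter):
        if t_up - t_low <= tol:
            break
        t_mid = 0.5 * (t_low + t_up)
        if pf(t_mid) <= p_target:
            t_low = t_mid   # constraint still satisfied: raise the lower bound
        else:
            t_up = t_mid    # constraint violated: lower the upper bound
    return t_low

t_star = bisect_life_time(pf_toy, 0.01, 0.0, 2.0)
print(round(t_star, 4))
```

For \( P_f^* = 0.01 \) the sketch returns \( t^* \approx 0.9007 \). This agrees with the closed form \( t^* = \sqrt{10/(10 + z_{0.99})} \), where \( z_{0.99} \approx 2.3263 \). Each step halves the bracket, so roughly \( \log_2((t_u - t_l)/\mathrm{tol}) \) TDFP evaluations are needed. This is why a cheap TDFP estimator matters for the inverse process.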

The next section briefly reviews the existing mainstream TDFP analysis approaches and analyzes their applicability to the inverse process. Building on the advantages of these approaches, a new SLAS meta-model approach is then presented. The meta-model constructed over a larger time interval can be reused repeatedly to estimate the TDFPs within any of its subintervals, which solves the inverse problem efficiently.

3 Review of the time-dependent reliability analysis approaches

At present, the first-passage based approaches, the extreme performance based approaches and the meta-model based approaches are the representative time-dependent reliability analysis approaches. The following subsections therefore briefly review the out-crossing rate-based approach, the nested extreme response surface approach, and the existing SILK meta-model approach.

3.1 Out-crossing rate-based approach

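The out-crossing rate-based approach implicitly assumes that all crossing events are independent. The instantaneous out-crossing rate at time instant t is defined as

\[ \nu^+(t) = \lim_{\Delta t \to 0^+} \frac{\Pr\left\{ g(\boldsymbol{X}, t) > 0 \cap g(\boldsymbol{X}, t + \Delta t) < 0 \right\}}{\Delta t} \tag{4} \]

The TDFP within the time interval \( [0, t_e] \) is then estimated as (Hu and Du 2012)

\[ P_f(0, t_e) \approx 1 - \left[ 1 - P_f(0, 0) \right] \exp\left\{ -\int_0^{t_e} \nu^+(t)\, \mathrm{d}t \right\} \tag{5} \]

where \( P_f(0, 0) \) is the instantaneous failure probability at the initial time instant t = 0.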

The key to the out-crossing rate-based approach is the out-crossing rate, which is inconvenient to estimate. When the time-dependent limit state function is a particular stochastic process, such as a stationary Gaussian process, an analytical out-crossing rate is available (Breitung 1988). In practical applications, however, the type of the stochastic process is generally irregular or unknown. Asymptotic integration is therefore widely used to calculate the out-crossing rate (Breitung and Rackwitz 1998). Moreover, the implicit assumption of independent upcrossings does not hold in some applications, especially when the time interval of interest is long and many upcrossings occur.
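To show how the Poisson-type formula can deviate from the true TDFP, the Python sketch below evaluates (4) and (5) by brute force on a toy problem. It makes four assumptions:

- the limit state is \( g(X, t) = 10 - X t^2 \) with \( X \sim N(10, 1) \), the example used in Section 4.2;
- a finite step `dt` replaces the limit in (4);
- crude MCS estimates the crossing probability;
- the trapezoidal rule computes the integral.

`outcrossing_tdfp` and its parameters are hypothetical names. For this example the exact TDFP over [0, 1] is 0.5, while (5) gives roughly 0.39. This illustrates how the approximation can deviate noticeably when the TDFP is large.

```python
import numpy as np

def g(x, t):
    # toy limit state (assumption, borrowed from Section 4.2): g(X, t) = 10 - X t^2
    return 10.0 - x * t**2

def outcrossing_tdfp(t_e, n_mc=200_000, n_t=101, dt=1e-3, seed=0):
    """Poisson-type estimate (5) with the out-crossing rate (4) obtained by crude MCS."""
    rng = np.random.default_rng(seed)
    x = rng.normal(10.0, 1.0, n_mc)
    times = np.linspace(0.0, t_e, n_t)
    nu = np.empty(n_t)
    for k, t in enumerate(times):
        # finite-difference version of (4): Pr{g(t) > 0 and g(t + dt) < 0} / dt
        crossed = (g(x, t) > 0.0) & (g(x, t + dt) < 0.0)
        nu[k] = crossed.mean() / dt
    pf0 = np.mean(g(x, 0.0) < 0.0)                       # P_f(0, 0)
    # trapezoidal rule for the integral of nu over [0, t_e]
    integral = np.sum(0.5 * (nu[1:] + nu[:-1]) * np.diff(times))
    return 1.0 - (1.0 - pf0) * np.exp(-integral)

pf_poisson = outcrossing_tdfp(1.0)
pf_exact = 0.5   # 1 - Phi(10 / 1**2 - 10) for this toy example
print(pf_poisson, pf_exact)
```

In this example each trajectory crosses at most once, so the expected number of crossings equals the TDFP. The exponential in (5) then turns 0.5 into \( 1 - e^{-0.5} \approx 0.39 \).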

3.2 Nested extreme response surface approach

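In contrast to the out-crossing rate-based approach, the nested extreme response surface approach uses the extreme performance during \( [0, t_e] \) to convert the time-dependent reliability analysis into a time-independent one, i.e.,

\[ P_f(0, t_e) = \Pr\left\{ \min_{t \in [0, t_e]} g(\boldsymbol{X}, t) < 0 \right\} = \Pr\left\{ g\left( \boldsymbol{X}, T(\boldsymbol{X}) \right) < 0 \right\} \tag{6} \]

where \( T(\boldsymbol{X}) \) is the extreme response surface. It maps the input vector X to the time at which \( g(\boldsymbol{X}, t) \) attains its extreme (minimum) value within the time interval. For the given time interval \( [0, t_e] \), \( T(\boldsymbol{X}) \) depends only on the input vector, i.e.,

\[ T(\boldsymbol{X}) = \arg\min_{t \in [0, t_e]} g(\boldsymbol{X}, t) \tag{7} \]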

For this time-independent problem, many time-independent reliability analysis approaches can be integrated into time-dependent reliability analysis. Examples include FOSM (Hu and Du 2015), importance sampling based approaches (Singh et al. 2011), moment methods (Zhang et al. 2014; Xu 2016) and meta-model methods (Sundar and Manohar 2013). The straightforward way to find the extreme time at a given input X is to discretize the time interval of interest into a series of time instants. This is often prohibitively expensive in practical engineering. To identify the extreme time response efficiently, Wang and Wang (2015) constructed a Gaussian process based time meta-model.
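The straightforward discretization mentioned above takes only a few lines of Python. The sketch assumes the same toy limit state \( g(X, t) = 10 - X t^2 \), \( X \sim N(10, 1) \). It approximates the extreme response in (6) by the minimum over a uniform time grid, at a cost of \( N \times N_t \) evaluations of g. `extreme_mcs_tdfp`, `n_mc` and `n_t` are hypothetical names. With an expensive g, this call count is what makes the approach impractical.

```python
import numpy as np

def g(x, t):
    # toy time-dependent limit state (assumption): g(X, t) = 10 - X t^2
    return 10.0 - x * t**2

def extreme_mcs_tdfp(t_e, n_mc=20_000, n_t=101, seed=1):
    """Crude MCS of (6): a sample fails if the minimum of g over a time grid is negative."""
    rng = np.random.default_rng(seed)
    x = rng.normal(10.0, 1.0, n_mc)[:, None]      # shape (N, 1)
    times = np.linspace(0.0, t_e, n_t)[None, :]   # shape (1, N_t)
    responses = g(x, times)                        # N x N_t evaluations of g
    extreme = responses.min(axis=1)                # approximates g(x, T(x)) in (6)
    return np.mean(extreme < 0.0), responses.size

pf, n_calls = extreme_mcs_tdfp(1.0)
print(pf, n_calls)   # pf should be close to the exact 0.5; n_calls = N * N_t
```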

The nested meta-models significantly decrease the computational cost of time-dependent reliability analysis, but they still involve a double-loop adaptive sampling (DLAS) process. Moreover, they provide only the extreme response information and the time-dependent reliability over \( [0, t_e] \). They cannot provide the other instantaneous failure events within \( [0, t_e] \). Nor can they provide the time-dependent reliability in the subintervals of \( [0, t_e] \) covered by the constructed extreme response surface.

3.3 SILK surrogate modeling approach

Hu and Mahadevan (2016) identified two drawbacks of the DLAS meta-model based approach. (1) The accuracy of identifying the extreme response in the inner loop affects the accuracy of the extreme response surface in the outer loop. (2) Identifying the extreme value in the inner loop for stochastic processes over a long time interval is computationally expensive. To overcome them, Hu and Mahadevan (2016) proposed the SILK surrogate modeling approach. It generates the random variables and the time variable at a single level, instead of two separate levels, to construct the Kriging surrogate model \( \hat{g}(\boldsymbol{X}, t) \). SILK overcomes both drawbacks of DLAS and efficiently estimates the TDFP within the given time interval \( [0, t_e] \). However, the Kriging model it constructs over \( [0, t_e] \) cannot be used to estimate the TDFPs in subintervals of \( [0, t_e] \). The cause is the indicator used to refine the surrogate model \( \hat{g}(\boldsymbol{X}, t) \), i.e.,

where \( U\left({\boldsymbol{x}}^{(i)},{t}^{(j)}\right)=\frac{\left|{g}_K\left({\boldsymbol{x}}^{(i)},{t}^{(j)}\right)\right|}{\sigma_{g_K}\left({\boldsymbol{x}}^{(i)},{t}^{(j)}\right)} \). Here \( g_K(\cdot) \) and \( \sigma_{g_K}(\cdot) \) are the mean and standard deviation of the Kriging prediction, \( N_t \) is the number of time instants obtained by discretizing the time interval \( [0, t_e] \), and \( u_e \) is any number greater than 2.

Within the given time interval \( [0, t_e] \), the Kriging model constructed with the adaptive refinement indicator (8) can correctly classify the failure and safe sample points of the model inputs. For a failure sample point \( \boldsymbol{x}^* \), however, (8) only guarantees that at least one failure time instant is correctly identified. There may be many failure instants within \( [0, t_e] \), i.e., different failure time instants at the same realization of the model inputs. In that case the constructed SILK surrogate cannot identify all of these instantaneous failure events. It therefore cannot simultaneously estimate the TDFPs in arbitrary subintervals of \( [0, t_e] \). Consequently, the existing SILK surrogate modeling approach cannot be used efficiently and directly to solve (3).

This paper proposes a revised SILK surrogate modeling approach, the SLAS meta-model approach, with two goals. It inherits SILK's advantage of treating the model input variables and the time variable at the same level. It also makes the meta-model constructed over \( [0, t_e] \) usable for simultaneously estimating the TDFPs in any subintervals of \( [0, t_e] \) without extra model evaluations. It employs the maximum confidence enhancement (MCE) based sequential sampling scheme (Wang and Wang 2014) to identify all instantaneous failure events within the time interval \( [0, t_e] \). The next section describes the SLAS meta-model approach in detail.

4 SLAS meta-model based time-dependent reliability analysis approach

To cover all the instantaneous failure events, a SLAS meta-model based approach is proposed in this section.

In the time-dependent limit state function g(X, t), X denotes the model input variables following their distributions, and t is the time variable within the given time interval. To identify all potential instantaneous failure events, the proposed approach treats time as a uniform random variable \( \overline{t} \) on \( [0, t_e] \). Its samples are generated at the same level as those of X, similarly to the existing SILK surrogate modeling approach. The meta-model between \( g\left(\boldsymbol{X},\overline{t}\right) \) and \( \left\{\boldsymbol{X},\overline{t}\right\} \) is then constructed by the Kriging-based (Kaymaz 2005) meta-model approach. The proposed SLAS meta-model approach differs from SILK in the stopping criterion used to improve the fidelity of the time-dependent limit state surface identification. The stopping criterion of SILK only guarantees the correct identification of the failure samples of the model inputs. It cannot identify when failure occurs or how many failure instants exist within the time interval \( [0, t_e] \) of interest. The revised approach of this paper instead uses the maximum confidence enhancement approach (Wang and Wang 2014) to update the Kriging model. This successively enhances the fidelity of the limit state surface identification and considers all discrete time instants for each realization of the model inputs within \( [0, t_e] \). Thus, the proposed SLAS meta-model based time-dependent reliability analysis approach correctly identifies all instantaneous failure events within the given time interval \( [0, t_e] \).

4.1 Kriging model

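The Kriging approach is a semi-parametric interpolation technique grounded in statistical theory (Sacks et al. 1989). It consists of two parts: a parametric linear regression part and a nonparametric stochastic process. Its mathematical expression is

\[ g_K(\boldsymbol{X}, \overline{t}) = \boldsymbol{f}^{\mathrm{T}}(\boldsymbol{X}, \overline{t})\, \boldsymbol{\beta} + \boldsymbol{Z}(\boldsymbol{X}, \overline{t}) \tag{9} \]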

where \( \boldsymbol{f}\left(\boldsymbol{X},\overline{t}\right)={\left[{f}_1\left(\boldsymbol{X},\overline{t}\right),{f}_2\left(\boldsymbol{X},\overline{t}\right),\dots, {f}_p\left(\boldsymbol{X},\overline{t}\right)\right]}^{\mathrm{T}} \) is the vector of basis functions of \( \left(\boldsymbol{X},\overline{t}\right) \), which provides the global trend. \( \boldsymbol{\beta} = [\beta_1, \beta_2, \dots, \beta_p]^{\mathrm{T}} \) is the regression coefficient vector to be determined, and p is the number of basis functions. \( \boldsymbol{Z}\left(\boldsymbol{X},\overline{t}\right) \) is a stationary Gaussian process with zero mean and covariance

\[ \operatorname{Cov}\left[ Z(\boldsymbol{x}_i, \overline{t}_i), Z(\boldsymbol{x}_j, \overline{t}_j) \right] = \sigma^2 R\left( (\boldsymbol{x}_i, \overline{t}_i), (\boldsymbol{x}_j, \overline{t}_j) \right), \quad i, j = 1, 2, \dots, N \tag{10} \]

where N is the number of experimental points, \( \sigma^2 \) is the process variance and \( R\left(\left({\boldsymbol{x}}_i,{\overline{t}}_i\right),\left({\boldsymbol{x}}_j,{\overline{t}}_j\right)\right) \) is the correlation function. The Gaussian correlation model is commonly selected (Koehler and Owen 1996; Welch et al. 1992).

Define the \( N \times N \) correlation matrix \( \boldsymbol{R} = \left[ R\left( (\boldsymbol{x}_i, \overline{t}_i), (\boldsymbol{x}_j, \overline{t}_j) \right) \right]_{i, j = 1}^{N} \). Let \( \boldsymbol{F} \) be the \( N \times p \) matrix whose i-th row is \( \boldsymbol{f}^{\mathrm{T}}(\boldsymbol{x}_i, \overline{t}_i) \). Let \( \boldsymbol{g} \) be the vector of limit state function values at the experimental points \( (\boldsymbol{x}_i, \overline{t}_i) \) (i = 1, 2, …, N). The unknown \( \boldsymbol{\beta} \) and \( \sigma^2 \) can then be estimated as follows:

\[ \hat{\boldsymbol{\beta}} = \left( \boldsymbol{F}^{\mathrm{T}} \boldsymbol{R}^{-1} \boldsymbol{F} \right)^{-1} \boldsymbol{F}^{\mathrm{T}} \boldsymbol{R}^{-1} \boldsymbol{g} \tag{11} \]

\[ \hat{\sigma}^2 = \frac{1}{N} \left( \boldsymbol{g} - \boldsymbol{F} \hat{\boldsymbol{\beta}} \right)^{\mathrm{T}} \boldsymbol{R}^{-1} \left( \boldsymbol{g} - \boldsymbol{F} \hat{\boldsymbol{\beta}} \right) \tag{12} \]

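Then, for any unknown point \( (\boldsymbol{X}, \overline{t}) \), the best linear unbiased predictor \( g_K(\boldsymbol{X}, \overline{t}) \) is a Gaussian random variable, \( g_K(\boldsymbol{X}, \overline{t}) \sim N\left( \mu_{g_K}(\boldsymbol{X}, \overline{t}), \sigma_{g_K}^2(\boldsymbol{X}, \overline{t}) \right) \). Its mean and variance are

\[ \mu_{g_K}(\boldsymbol{X}, \overline{t}) = \boldsymbol{f}^{\mathrm{T}}(\boldsymbol{X}, \overline{t})\, \hat{\boldsymbol{\beta}} + \boldsymbol{r}^{\mathrm{T}}(\boldsymbol{X}, \overline{t})\, \boldsymbol{R}^{-1} \left( \boldsymbol{g} - \boldsymbol{F} \hat{\boldsymbol{\beta}} \right) \tag{13} \]

\[ \sigma_{g_K}^2(\boldsymbol{X}, \overline{t}) = \hat{\sigma}^2 \left[ 1 + \boldsymbol{u}^{\mathrm{T}} \left( \boldsymbol{F}^{\mathrm{T}} \boldsymbol{R}^{-1} \boldsymbol{F} \right)^{-1} \boldsymbol{u} - \boldsymbol{r}^{\mathrm{T}}(\boldsymbol{X}, \overline{t})\, \boldsymbol{R}^{-1} \boldsymbol{r}(\boldsymbol{X}, \overline{t}) \right] \tag{14} \]

where \( \boldsymbol{r}\left(\boldsymbol{X},\overline{t}\right)={\left[R\left(\left(\boldsymbol{X},\overline{t}\right),\left({\boldsymbol{x}}_1,{\overline{t}}_1\right)\right),\dots, R\left(\left(\boldsymbol{X},\overline{t}\right),\left({\boldsymbol{x}}_N,{\overline{t}}_N\right)\right)\right]}^{\mathrm{T}} \) and \( \boldsymbol{u} = \boldsymbol{F}^{\mathrm{T}} \boldsymbol{R}^{-1} \boldsymbol{r}(\boldsymbol{X}, \overline{t}) - \boldsymbol{f}(\boldsymbol{X}, \overline{t}) \).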
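A minimal numpy sketch of the predictor in (11) to (14) follows. It rests on three assumptions:

- a constant basis \( f = 1 \) (ordinary Kriging);
- the Gaussian correlation \( R(\boldsymbol{a}, \boldsymbol{b}) = \exp(-\sum_k \theta_k (a_k - b_k)^2) \), with \( \theta \) fixed by the user rather than estimated by maximum likelihood;
- a tiny nugget on the diagonal of R for numerical stability.

`fit_kriging`, `predict`, `theta` and `nugget` are hypothetical names and do not reproduce any toolbox API. Each experimental point is a row \( (\boldsymbol{x}, \overline{t}) \).

```python
import numpy as np

def gauss_corr(a, b, theta):
    # Gaussian correlation between the rows of a (m x d) and b (k x d)
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2 * theta).sum(axis=2)
    return np.exp(-d2)

def fit_kriging(points, g, theta, nugget=1e-10):
    """Ordinary Kriging (constant basis) following (11)-(12); theta is fixed, not optimized."""
    n = points.shape[0]
    R = gauss_corr(points, points, theta) + nugget * np.eye(n)
    F = np.ones((n, 1))
    FtRiF = F.T @ np.linalg.solve(R, F)
    beta = np.linalg.solve(FtRiF, F.T @ np.linalg.solve(R, g))   # (11)
    resid = g - F @ beta
    Ri_resid = np.linalg.solve(R, resid)
    sigma2 = float(resid @ Ri_resid) / n                          # (12)
    return dict(points=points, theta=theta, R=R, F=F, FtRiF=FtRiF,
                beta=beta, sigma2=sigma2, Ri_resid=Ri_resid)

def predict(model, new_points):
    """Mean (13) and standard deviation from (14) at the rows of new_points."""
    r = gauss_corr(new_points, model["points"], model["theta"])   # m x N
    f = np.ones((new_points.shape[0], 1))
    mu = f @ model["beta"] + r @ model["Ri_resid"]                 # (13)
    Ri_r = np.linalg.solve(model["R"], r.T)                        # N x m
    u = model["F"].T @ Ri_r - f.T                                  # 1 x m
    var = model["sigma2"] * (1.0
                             + (u * np.linalg.solve(model["FtRiF"], u)).sum(axis=0)
                             - (r.T * Ri_r).sum(axis=0))           # (14)
    return mu, np.sqrt(np.maximum(var, 0.0))

# usage on the toy limit state g = 10 - x t^2 with a 3 x 3 grid of (x, t) points
xs, ts = np.meshgrid([8.0, 10.0, 12.0], [0.0, 0.5, 1.0])
train = np.column_stack([xs.ravel(), ts.ravel()])
g_train = 10.0 - train[:, 0] * train[:, 1] ** 2
model = fit_kriging(train, g_train, theta=np.array([0.5, 5.0]))
mu_tr, sd_tr = predict(model, train)
mu_new, sd_new = predict(model, np.array([[11.0, 0.75]]))
```

At the experimental points the predictor interpolates, with \( \mu_{g_K} \approx g \) and \( \sigma_{g_K} \approx 0 \). Away from them \( \sigma_{g_K} > 0 \), and this is the uncertainty that the adaptive sampling below exploits.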

4.2 Single-loop adaptive sampling approach based on maximum confidence enhancement

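To identify all instantaneous failure events in the time-dependent reliability analysis, the Kriging model \( {g}_K\left(\boldsymbol{X},\overline{t}\right) \) is used as a surrogate for the relationship between \( \left\{\boldsymbol{X},\overline{t}\right\} \) and g. Let \( \varOmega =\left\{\left(\boldsymbol{X},\overline{t}\right)\Big|g\left(\boldsymbol{X},\overline{t}\right)<0\right\} \) denote the instantaneous failure domain. The time-independent instantaneous failure probability (FP) is then estimated by

\[ \Pr\left\{ (\boldsymbol{X}, \overline{t}) \in \varOmega \right\} = \int_{\varOmega} f_{\boldsymbol{X}, \overline{t}}(\boldsymbol{x}, t)\, \mathrm{d}\boldsymbol{x}\, \mathrm{d}t = E\left[ I(\boldsymbol{X}, \overline{t}) \right] \tag{15} \]

where E[⋅] denotes the expectation operator and \( I\left(\boldsymbol{X},\overline{t}\right) \) is the failure domain indicator function defined as

\[ I(\boldsymbol{X}, \overline{t}) = \begin{cases} 1, & g(\boldsymbol{X}, \overline{t}) < 0 \\ 0, & g(\boldsymbol{X}, \overline{t}) \ge 0 \end{cases} \tag{16} \]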
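As a small numerical check of (15) and (16), the sketch below estimates the instantaneous failure probability by crude MCS with \( \overline{t} \sim U(0, t_e) \). It again assumes the toy limit state \( g = 10 - X t^2 \), \( X \sim N(10, 1) \), and `instantaneous_fp` is a hypothetical name. \( E[I] \) averages over time instants, whereas the TDFP asks whether any instant fails. The instantaneous value is therefore much smaller than the TDFP of 0.5 over [0, 1]. This is why the TDFP is computed from the minimum over time for each input realization rather than from (15) directly.

```python
import numpy as np

def instantaneous_fp(t_e, n=200_000, seed=2):
    """Crude MCS of (15): average of the indicator (16) over samples of (X, t_bar)."""
    rng = np.random.default_rng(seed)
    x = rng.normal(10.0, 1.0, n)            # X ~ N(10, 1)
    t_bar = rng.uniform(0.0, t_e, n)        # time treated as U(0, t_e)
    indicator = (10.0 - x * t_bar**2) < 0.0 # I(X, t_bar) in (16)
    return indicator.mean()

p_ins = instantaneous_fp(1.0)
print(p_ins)   # well below the TDFP of 0.5 over [0, 1]
```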

Because only a few samples are used to construct the initial Kriging model, its fidelity may be insufficient. The goal is a Kriging model that identifies all instantaneous failure events, including different failure time instants at the same realization of the model inputs. To improve the fidelity of the current model toward this goal, the maximum confidence enhancement (MCE) based sequential sampling scheme (Wang and Wang 2014) is employed. In MCE, the probability of correct classification (Echard et al. 2011) of the sample point \( \left({\boldsymbol{x}}_i,{\overline{t}}_j\right) \) is

\[ \Pr_c(\boldsymbol{x}_i, \overline{t}_j) = \Phi\left( \frac{\left| \mu_{g_K}(\boldsymbol{x}_i, \overline{t}_j) \right|}{\sigma_{g_K}(\boldsymbol{x}_i, \overline{t}_j)} \right) \tag{17} \]

where Φ(⋅) is the standard normal cumulative distribution function and | ⋅ | denotes the absolute value. The cumulative confidence level of the reliability approximation by the Kriging model is then measured by

\[ C = \frac{1}{N N_t} \sum_{i=1}^{N} \sum_{j=1}^{N_t} \Pr_c(\boldsymbol{x}_i, \overline{t}_j) \tag{18} \]

where N is the number of sample points of the model inputs generated from their probability density functions (PDFs). \( N_t \) is the number of sample points of the time variable, generated uniformly within the given time interval. Generally, the longer the time interval, the larger \( N_t \) needs to be.
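A short sketch of (17) and (18) follows. Any Kriging implementation could supply the means and standard deviations on the \( N \times N_t \) pool, so the arrays below are hypothetical values chosen by hand. The function names `prob_correct` and `cumulative_confidence` are hypothetical. The target 0.999999 is the one used in the example of this section.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def prob_correct(mu, sigma):
    """Pr_c in (17): Phi(|mu| / sigma), the probability that the sign of g_K is right."""
    z = np.abs(mu) / np.maximum(sigma, 1e-300)   # guard against sigma = 0 at training points
    return 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))

def cumulative_confidence(mu, sigma):
    """C in (18): mean of Pr_c over the N x N_t pool (rows: x_i, columns: t_j)."""
    return prob_correct(mu, sigma).mean()

# hypothetical Kriging output on a pool with N = 2 input samples and N_t = 3 time samples
mu = np.array([[3.0, 0.5, -2.0],
               [4.0, 0.0,  1.5]])
sigma = np.full((2, 3), 0.5)
C = cumulative_confidence(mu, sigma)
converged = C > 0.999999   # stopping target used in the example of this section
```

Here C is about 0.89, far from the target, mainly because of the pool points whose predicted mean is close to zero. A point with \( \mu_{g_K} = 0 \) contributes exactly 0.5, the lowest possible value of \( \Pr_c \).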

The measure C quantifies the average identification accuracy over the Monte Carlo simulation (MCS) sample pool \( \{ (\boldsymbol{x}_i, \overline{t}_j) : i = 1, \dots, N;\ j = 1, \dots, N_t \} \). The pool is formed by the N sample points of the model inputs and the \( N_t \) sample points of the time variable. Note that C lies within [0.5, 1]. The larger its value, the more failure instants of each realization of the model inputs are correctly identified, and hence the more accurate the time-dependent reliability approximations in any subinterval are. In contrast, (8) only guarantees the correct identification of each failure point and each safe point of the model inputs within \( [0, t_e] \). For a failure point, it cannot make the Kriging model identify when failure occurs or all failure instants within \( [0, t_e] \). As Fig. 1 shows, at the failure point \( \boldsymbol{x}^* \) the time-dependent structure/mechanism fails when \( t \in [t_1, t_2] \) or \( t \in [t_3, t_4] \). The Kriging model constructed by the SILK approach regards \( \boldsymbol{x}^* \) as correctly identified as soon as one failure time instant in \( [t_1, t_2] \) or \( [t_3, t_4] \) is identified. In the proposed SLAS approach, by contrast, \( \boldsymbol{x}^* \) is regarded as correctly identified only when all failure instants in \( [t_1, t_2] \) and \( [t_3, t_4] \) are correctly identified.
Therefore, the proposed SLAS approach distinguishes all failure instants from all safe instants. It can thus be used to estimate the TDFPs of different subintervals of the time interval covered by the Kriging model.

However, \( \Pr_c \) at a single point does not reflect the global fidelity of the current Kriging model. To address this, an adaptive sampling scheme based on MCE is adopted (Wang and Wang 2014). In MCE, the most contributive sample point in the MCS sample pool \( \{ (\boldsymbol{x}_i, \overline{t}_j) : i = 1, \dots, N;\ j = 1, \dots, N_t \} \) is identified by the following criterion

\[ \psi(\boldsymbol{x}_i, \overline{t}_j) = \left( 1 - \Pr_c(\boldsymbol{x}_i, \overline{t}_j) \right) f_{\boldsymbol{X}, \overline{t}}(\boldsymbol{x}_i, \overline{t}_j)\, \sigma_{g_K}(\boldsymbol{x}_i, \overline{t}_j) \tag{19} \]

The criterion has three factors:

- \( \left(1-{ \Pr}_c\left({\boldsymbol{x}}_i,{\overline{t}}_j\right)\right) \) measures the probability of incorrect classification;
- \( {f}_{\boldsymbol{X},\overline{t}}\left({\boldsymbol{x}}_i,{\overline{t}}_j\right) \) is the joint probability density at \( \left({\boldsymbol{x}}_i,{\overline{t}}_j\right) \), which reflects the importance of that point;
- \( {\sigma}_{g_K}\left({\boldsymbol{x}}_i,{\overline{t}}_j\right) \) is the standard deviation of the Kriging prediction, i.e., the square root of its estimated mean square error.

The most contributive sample point is thus selected by maximizing ψ(⋅) over the MCS sample pool, i.e.,

\[ (\boldsymbol{x}_{\mathrm{new}}, \overline{t}_{\mathrm{new}}) = \arg\max_{1 \le i \le N,\ 1 \le j \le N_t} \psi(\boldsymbol{x}_i, \overline{t}_j) \tag{20} \]

The most contributive sample point is added to the current Kriging model sequentially. This enhances the fidelity of the model until C satisfies a predefined confidence target.
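The selection rule (19) and (20) can be sketched as follows. The sketch assumes that \( \overline{t} \) is uniform on \( [0, t_e] \) and independent of X, so the joint density factorizes as \( f_{\boldsymbol{X}}(\boldsymbol{x}) / t_e \). For brevity it also takes a single standard normal input. `mce_select`, `std_normal_pdf` and the pool arrays are hypothetical. The function returns the pool indices of the point that would next be evaluated with the true g and added to the training set.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def mce_select(mu, sigma, x_pool, t_e, pdf_x):
    """Return (i, j) maximizing psi in (19) over an N x N_t pool."""
    sigma = np.maximum(sigma, 1e-300)
    pr_c = 0.5 * (1.0 + _erf(np.abs(mu) / sigma / math.sqrt(2.0)))   # (17)
    # joint density of (x_i, t_j): f_X(x_i) times the uniform density 1 / t_e
    density = pdf_x(x_pool)[:, None] / t_e
    psi = (1.0 - pr_c) * density * sigma                                # (19)
    i, j = np.unravel_index(np.argmax(psi), psi.shape)                 # (20)
    return int(i), int(j)

def std_normal_pdf(x):
    return np.exp(-0.5 * x**2) / math.sqrt(2.0 * math.pi)

x_pool = np.array([0.0, 2.5])          # N = 2 input samples
mu = np.array([[3.0, 0.5, -2.0],
               [4.0, 0.0,  1.5]])
sigma = np.full((2, 3), 0.5)
choice = mce_select(mu, sigma, x_pool, 1.0, std_normal_pdf)
print(choice)
```

The selected point is (0, 1), not the point with \( \mu_{g_K} = 0 \) at x = 2.5. That point is the most ambiguous, but it lies in a low-density region, and the density factor in (19) down-weights it.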

Time t is transformed into a uniform random variable \( \overline{t} \). The selection of the most contributive sample point (20) is combined with the stopping criterion (18). Together these establish an efficient meta-model for time-dependent reliability analysis that covers all instantaneous failure events within the given time interval \( [0, t_e] \). Direct MCS on this unified meta-model of the time-dependent limit state function can then estimate the time-dependent reliability/TDFP in any subinterval of \( [0, t_e] \). Moreover, for any realization of the input variables the meta-model provides the limit state function as a function of time, so the first time to failure is readily obtained. Neither the nested meta-model extreme performance based approach nor the existing SILK surrogate modeling approach provides this information.

Consider the simple example \( g(X, t) = 10 - X t^2 \). X is a normal variable with mean 10 and standard deviation 1, and t is the time variable varying within [0, 1]. With the target C > 0.999999, only 11 model evaluations are needed to construct the Kriging model of \( g\left(\boldsymbol{X},\overline{t}\right) \). Of these, 8 training points form the initial Kriging model and 3 extra points are subsequently selected by MCE to update it. The nested meta-model extreme performance based approach merely provides the extreme response at any nominal point of X within the time interval \( [0, t_e] \). The proposed approach provides the same information. It also provides the response at any time instant within \( [0, t_e] \) for a nominal point of X, from which quantities such as the first time to failure follow. Figure 2 plots the response versus time at \( x^* = 12.5393 \). Fig. 2 shows the extreme time, \( t_{\min} = 1 \), and also the first failure time at \( x^* = 12.5393 \), i.e., t = 0.8930.
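The first failure time quoted above can be checked against the exact limit state. The sketch below scans \( g(x^*, t) \) on a fine time grid, locates the first sign change and refines it by bisection. As an assumption, the exact g stands in for the Kriging mean \( \mu_{g_K} \), since the constructed Kriging model is not reproduced here. `first_failure_time`, `n_grid` and `tol` are hypothetical names. For this g the first failure time is \( t = \sqrt{10/x^*} \approx 0.8930 \).

```python
def g(x, t):
    # exact toy limit state g(X, t) = 10 - X t^2 (stands in for the Kriging mean)
    return 10.0 - x * t**2

def first_failure_time(func, x, t_e, n_grid=1000, tol=1e-10):
    """First t in [0, t_e] with func(x, t) < 0, or None if no failure on the grid."""
    dt = t_e / n_grid
    t_prev = 0.0
    if func(x, t_prev) < 0.0:
        return 0.0
    for k in range(1, n_grid + 1):
        t_k = k * dt
        if func(x, t_k) < 0.0:
            lo, hi = t_prev, t_k     # func(lo) >= 0 > func(hi): bisect the crossing
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                if func(x, mid) < 0.0:
                    hi = mid
                else:
                    lo = mid
            return hi
        t_prev = t_k
    return None

t_first = first_failure_time(g, 12.5393, 1.0)
print(round(t_first, 4))
```

A grid scan can miss failure windows shorter than the grid step. With a meta-model this is cheap to mitigate, because refining the grid costs only additional predictions rather than additional evaluations of the actual limit state function.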

4.3 Time-dependent reliability analysis

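Based on the Kriging model \( {g}_K\left(\boldsymbol{X},\overline{t}\right) \), direct MCS combined with (6) is used to estimate the TDFP. First, generate an N × n sample matrix A of the random inputs X according to their PDFs, and an \( N_t \times 1 \) sample vector T of time t uniformly distributed in \( [0, t_e] \), i.e.,

\[ \boldsymbol{A} = \begin{bmatrix} \boldsymbol{x}_1 \\ \boldsymbol{x}_2 \\ \vdots \\ \boldsymbol{x}_N \end{bmatrix}, \qquad \boldsymbol{T} = \begin{bmatrix} \overline{t}_1 \\ \overline{t}_2 \\ \vdots \\ \overline{t}_{N_t} \end{bmatrix} \]

Second, define the matrices \( \boldsymbol{B}^{(i)} \) (i = 1, 2, …, N), i.e.,

\[ \boldsymbol{B}^{(i)} = \begin{bmatrix} \boldsymbol{x}_i & \overline{t}_1 \\ \boldsymbol{x}_i & \overline{t}_2 \\ \vdots & \vdots \\ \boldsymbol{x}_i & \overline{t}_{N_t} \end{bmatrix}, \quad i = 1, 2, \dots, N \]

Substitute each row \( \boldsymbol{B}_j^{(i)} \) (j = 1, 2, …, \( N_t \), i = 1, 2, …, N) of \( \boldsymbol{B}^{(i)} \) into \( {g}_K\left(\boldsymbol{X},\overline{t}\right) \), find the minimum value, and check whether \( \underset{1\le j\le {N}_t}{ \min }{g}_K\left({\boldsymbol{B}}_j^{(i)}\right)<0 \). Traverse all the matrices from \( \boldsymbol{B}^{(1)} \) to \( \boldsymbol{B}^{(N)} \), count the number of cases in which \( \underset{1\le j\le {N}_t}{ \min }{g}_K\left({\boldsymbol{B}}_j^{(i)}\right)<0 \), and denote it as \( N_f \). Thus, the TDFP is estimated as

\[ \hat{P}_f(0, t_e) = \frac{N_f}{N} \]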
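The estimator above can be sketched in NumPy as follows. The Kriging predictor is replaced by a hypothetical stand-in, the exact limit state of the simple example \( g(X,t) = 10 - X t^2 \) with X ~ N(10, 1), and T is taken as an evenly spaced grid that includes \( t_e \); a random uniform sample, as described in the text, is handled the same way but may miss the end of the interval. For this example the exact TDFP over [0, 1] is Pr{X > 10} = 0.5. The names `g_stand_in` and `tdfp_direct_mcs` are illustrative.

```python
import numpy as np


def g_stand_in(x, t):
    # Hypothetical stand-in for the Kriging predictor g_K(X, t): the exact limit
    # state of the simple example, g(X, t) = 10 - X t^2, with n = 1 input.
    return 10.0 - x[..., 0] * t**2


def tdfp_direct_mcs(g, A, T):
    """Direct MCS estimate N_f / N of P_f(0, t_e).

    A: (N, n) sample matrix of X; T: (N_t,) time samples in [0, t_e].
    """
    N, n = A.shape
    N_t = T.shape[0]
    # Stack B^(i) for all i: B[i] is N_t x (n + 1) and its j-th row is (x_i, t_j).
    B = np.concatenate(
        [np.repeat(A[:, None, :], N_t, axis=1),
         np.broadcast_to(T[None, :, None], (N, N_t, 1))],
        axis=2,
    )
    # Minimum predicted response over the time samples for each x_i.
    g_min = g(B[..., :n], B[..., n]).min(axis=1)
    N_f = int(np.count_nonzero(g_min < 0.0))
    return N_f / N


rng = np.random.default_rng(0)
N, N_t, t_e = 20_000, 101, 1.0
A = rng.normal(10.0, 1.0, size=(N, 1))  # X ~ N(10, 1)
T = np.linspace(0.0, t_e, N_t)          # evenly spaced grid that includes t_e
pf = tdfp_direct_mcs(g_stand_in, A, T)
```

The estimate `pf` should lie close to the exact value 0.5. Building B explicitly mirrors the description above; in practice the (N, N_t) response matrix can be formed by broadcasting without materializing B.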

5 The implementation of the maximum probable life time analysis

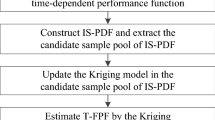

This section summarizes the implementation of the maximum probable life time analysis, in which the meta-model is integrated with the dichotomy for efficiency. The flowchart of the proposed approach is given in Fig. 3. The procedure can be divided into nine steps:

- Step 1: Input the required TDFP constraint \( P_f^* \).

- Step 2: Input the upper boundary \( t_u \) of the time interval for the dichotomy. Its value is problem-dependent; generally, a larger value is preferable, to guarantee that \( P_f(0, t_u) > P_f^* \).

- Step 3: Generate \( N_0 \) sample points of \( \left(\boldsymbol{X},\overline{t}\right) \), in which \( \overline{t} \) is uniformly distributed within \( [0, t_u] \), and evaluate the time-dependent limit state function at these points. In this initial step \( N_0 \) can be small, and the sample points can be generated by space-filling or low-discrepancy sampling schemes such as Latin hypercube sampling or Sobol' sequences (Sobol 1976, 1998).

- Step 4: Construct the Kriging model from the current experimental points. Generate N sample points of X and \( N_t \) sample points of \( \overline{t} \) to form the MCS sample pool \( \left[\left({\boldsymbol{x}}_1,{\overline{t}}_1\right),\left({\boldsymbol{x}}_1,{\overline{t}}_2\right),\dots, \left({\boldsymbol{x}}_1,{\overline{t}}_{N_t}\right),\left({\boldsymbol{x}}_2,{\overline{t}}_1\right),\left({\boldsymbol{x}}_2,{\overline{t}}_2\right),\dots, \left({\boldsymbol{x}}_2,{\overline{t}}_{N_t}\right),\dots, \left({\boldsymbol{x}}_N,{\overline{t}}_1\right),\left({\boldsymbol{x}}_N,{\overline{t}}_2\right),\dots, \left({\boldsymbol{x}}_N,{\overline{t}}_{N_t}\right)\right] \), where N and \( N_t \) are commonly large. Calculate the cumulative confidence level C of these sample points and check whether it exceeds the prescribed threshold \( C_{\mathrm{given}} \). If so, go directly to Step 6.

- Step 5: Select \( {\left(\boldsymbol{x},\overline{t}\right)}^{\prime}=\underset{i,j}{\arg\max}\, \psi \left({\boldsymbol{x}}_i,{\overline{t}}_j\right)\ \left(i=1,2,\dots, N,\ j=1,2,\dots, {N}_t\right) \), evaluate its actual limit state value, add this point to the set of experimental points, and return to Step 4.

- Step 6: Calculate \( P_f(0, t_u) \), denoted \( P_f^u \), and check whether \( P_f^u > P_f^* \). If so, proceed to the next step; otherwise, let \( t_u = t_u + \Delta t_u \) and return to Step 3.

- Step 7: Input the lower boundary \( t_l \) of the dichotomy. Because \( [0, t_l] \) is a subinterval of \( [0, t_u] \), the TDFP \( P_f(0, t_l) \), denoted \( P_f^l \), can be obtained directly from the current meta-model without extra model evaluations.

- Step 8: Check whether \( P_f^l < P_f^* \). If so, proceed to the next step; otherwise, let \( t_l = t_l - \Delta t_l \) and return to Step 7.

- Step 9: Use the dichotomy to determine the root of the equation \( \xi(t^*) = \Pr\{\exists\, t \in [0, t^*],\ g(\boldsymbol{X}, t) < 0\} - P_f^* = 0 \), and output the root \( t^* \) as the maximum probable life time under the required TDFP \( P_f^* \).
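Steps 6 to 9 can be sketched as below. The function `pf` stands for the surrogate-based MCS estimate of \( P_f(0, t) \) (Steps 3 to 5 are not shown); as a hypothetical stand-in we use the closed-form TDFP of the simple example, \( P_f(0,t) = \Pr\{X > 10/t^2\} \) with X ~ N(10, 1), which is monotone in t. The step sizes `dt_u`, `dt_l`, the tolerance `eps` and the cap `max_adjust` are illustrative values, not taken from the paper.

```python
import math


def pf_stand_in(t):
    """Exact P_f(0, t) for g(X, t) = 10 - X t^2, X ~ N(10, 1): failure iff X > 10 / t^2."""
    if t <= 0.0:
        return 0.0
    z = 10.0 / t**2 - 10.0            # standardized threshold (mean 10, std 1)
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def max_probable_life(pf, pf_target, t_u, t_l, dt_u, dt_l, eps, max_adjust=100):
    """Steps 6-9: bracket P_f* by [t_l, t_u], then bisect xi(t) = P_f(0, t) - P_f*."""
    # Step 6: enlarge t_u until P_f(0, t_u) > P_f*.
    for _ in range(max_adjust):
        if pf(t_u) > pf_target:
            break
        t_u += dt_u
    else:
        raise RuntimeError("P_f(0, t_u) never exceeded the target")
    # Steps 7-8: shrink t_l until P_f(0, t_l) < P_f*.
    for _ in range(max_adjust):
        if pf(t_l) < pf_target:
            break
        t_l -= dt_l
    else:
        raise RuntimeError("P_f(0, t_l) never fell below the target")
    a, b = t_l, t_u
    # Step 9: dichotomy, relying on P_f(0, t) being non-decreasing in t.
    while b - a > eps:
        m = 0.5 * (a + b)
        if pf(m) < pf_target:
            a = m
        else:
            b = m
    return 0.5 * (a + b)


t_star = max_probable_life(pf_stand_in, 0.01, t_u=0.5, t_l=0.4,
                           dt_u=0.25, dt_l=0.1, eps=1e-6)
```

Starting from \( t_u = 0.5 \), Step 6 enlarges the upper boundary to 1.0, and the dichotomy returns `t_star` of about 0.9007, the analytical root of \( 10/t^2 - 10 = \Phi^{-1}(0.99) \approx 2.3263 \). With a surrogate, every call to `pf` reuses the same meta-model, which is why the bisection adds no actual limit state evaluations.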

6 Case studies

In this section, two examples, a mathematical problem and a four-bar function generator mechanism, are used to illustrate the accuracy and efficiency of the proposed approach in the time-dependent maximum probable life time analysis.

6.1 Case study I: a mathematical problem

A time-dependent limit state function g(X, t) is given by

where t is the time variable, and \( X_1 \) and \( X_2 \) are normally distributed random inputs, \( X_1 \sim N(3.5, 0.3^2) \) and \( X_2 \sim N(3.5, 0.3^2) \). For comparison with the results in Ref. (Wang and Wang 2015), the time interval [0, 5] is chosen. The SLAS Kriging model \( {g}_K\left(\boldsymbol{X},\overline{t}\right) \) is then constructed until the confidence level C > 0.99999. Table 1 lists the initial samples, the updating samples, and the corresponding responses; the total number of model evaluations is 20. To illustrate the efficiency of the proposed approach, Table 2 gives the TDFP estimates over [0, 5] obtained by the proposed approach, PHI2, CLS, DLAS and MCS; the PHI2, CLS, DLAS and MCS results are taken directly from Ref. (Wang and Wang 2015). That reference demonstrated the superiority of DLAS over PHI2 and CLS; DLAS requires 40 model evaluations in total, whereas the proposed SLAS requires only 20, half as many. Table 2 also shows that SLAS is more accurate than DLAS.

Compared with DLAS, the proposed SLAS provides not only the extreme response of any sample of the random inputs within the given time interval, but also the response at any sample point and any time instant within [0, 5]. SLAS therefore captures all the instantaneous failure events within [0, 5], so it can simultaneously obtain the TDFPs over any subintervals of [0, 5]. Figure 4 plots the TDFP as a function of the upper boundary t of the time interval for t ∈ [0, 3]; because each [0, t] is a subinterval of [0, 5], these estimates require no further evaluations of the actual limit state function. The proposed approach thus provides considerably more information than DLAS.
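Because the meta-model predicts the response at every pool point \( (\boldsymbol{x}_i, \overline{t}_j) \), a whole curve such as the one in Fig. 4 follows from a single response matrix: sample i has failed within \( [0, t_k] \) exactly when its first failing grid index is at most k. The sketch below assumes a sorted time grid and, since the Case study I limit state is not reproduced here, uses the simple example \( g = 10 - X t^2 \), X ~ N(10, 1), as a hypothetical stand-in for \( g_K \). For it, \( P_f(0, 0.9) = \Pr\{X > 10/0.81\} \approx 0.0095 \) and \( P_f(0, 1) = 0.5 \).

```python
import numpy as np


def tdfp_curve(G):
    """P_f(0, t_k) for every k, from G[i, k] = response of sample i at sorted time t_k."""
    N, N_t = G.shape
    failed = G < 0.0
    # Index of the first failing time instant for each sample (N_t if it never fails).
    first = np.where(failed.any(axis=1), failed.argmax(axis=1), N_t)
    counts = np.bincount(first, minlength=N_t + 1)[:N_t]
    # A sample has failed within [0, t_k] iff its first failure index is <= k.
    return np.cumsum(counts) / N


rng = np.random.default_rng(1)
T = np.linspace(0.0, 1.0, 101)
x = rng.normal(10.0, 1.0, size=(20_000, 1))
G = 10.0 - x * T**2          # stand-in for g_K evaluated on the pool
curve = tdfp_curve(G)
pf_09 = curve[90]            # T[90] = 0.9
```

The curve is non-decreasing by construction, which is the monotonicity the dichotomy relies on.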

To verify the efficiency and accuracy of the proposed approach in the inverse process of determining the maximum probable life time, let \( P_f^* = 0.01 \). First, we try \( t_u = 1 \) and construct the Kriging model \( {g}_K\left(\boldsymbol{X},\overline{t}\right) \) with \( \overline{t}\in \left[0,1\right] \). The initial samples, updating samples, and corresponding responses are listed in Table 3, which shows that only 18 model evaluations are needed to reach a confidence level C > 0.9999. Direct MCS on this Kriging model gives a TDFP of 0.0614 over [0, 1]. Here the first trial value of \( t_u \) already satisfies \( P_f^u > P_f^* \); had it not, \( t_u \) would be replaced by \( t_u + \Delta t_u \) and the calculation repeated until \( P_f^u > P_f^* \). Next, a lower boundary \( t_l \) smaller than the current \( t_u \) is chosen; in the first attempt, \( t_l = 0.4 \). Because \( [0, 0.4] \subset [0, 1] \), the TDFP over [0, 0.4] is estimated from the constructed meta-model without any extra evaluation of the actual time-dependent limit state function, giving \( P_f^l = 0.0062 < P_f^* \). The dichotomy interval is therefore [a, b] = [0.4, 1]. With the dichotomy error limit set to \( \varepsilon = 0.5 \times 10^{-2} \), the least number k of bisections is determined from (26).
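Equation (26) is not reproduced in this section. Assuming it takes the common form \( k = \lceil \log_2[(b-a)/\varepsilon] \rceil \), i.e., the least k with \( (b-a)/2^k \le \varepsilon \), the sketch below evaluates k for the dichotomy intervals used in the case studies. It also checks that reported results lie on the corresponding dichotomy grids \( a + (b-a)\,m/2^k \): the value 0.5172 agrees with 0.5171875 = 0.4 + 0.6 × 25/2^7, and 0.5029296875 = 5 × 103/2^10. The helper names are illustrative.

```python
import math


def min_bisections(a, b, eps):
    # Assumed form of (26): the least k such that (b - a) / 2**k <= eps.
    return math.ceil(math.log2((b - a) / eps))


def grid_index(t, a, b, k):
    # Position m of t on the level-k dichotomy grid a + (b - a) * m / 2**k.
    return (t - a) * 2**k / (b - a)


k1 = min_bisections(0.4, 1.0, 0.5e-2)     # Case study I, interval [0.4, 1]
k2 = min_bisections(0.0, 5.0, 0.5e-2)     # Case study I, interval [0, 5]
k3 = min_bisections(95.5, 225.5, 0.5e-2)  # Case study II, interval [95.5°, 225.5°]
m1 = grid_index(0.5171875, 0.4, 1.0, k1)
m2 = grid_index(0.5029296875, 0.0, 5.0, k2)
```

This gives k = 7, 10 and 15, respectively, and integer grid positions m = 25 and m = 103 for the two Case study I results.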

Table 4 gives the dichotomy procedure, from which the solution of the inverse process follows: the maximum probable life time for the TDFP constraint 0.01 is \( t_{\max} = 0.5172 \). To assess the accuracy, the MCS result is used as the reference, and the results are compared in Table 5. In MCS, \( 10^6 \) samples of the input variables are generated and the time interval is discretized evenly into 100 time instants. The results demonstrate that the proposed approach is both accurate and efficient in the inverse process of determining the maximum probable life time.

To avoid the extra model evaluations incurred in selecting the dichotomy interval, i.e., in repeatedly executing Steps 3 to 6, the maximum interval of interest, which is problem-dependent, can generally be chosen directly. In this case, [0, 5] is chosen to construct the Kriging model \( {g}_K\left(\boldsymbol{X},\overline{t}\right) \), which uses 20 training points in total. The same interval [0, 5] is used as the dichotomy interval to determine the maximum probable life time in the inverse process, and the dichotomy procedure is shown in Table 6. The result of the inverse process is 0.5029296875, with a relative error of 1.9 % with respect to MCS, which is also accurate. To improve the accuracy further, the confidence level C of the Kriging model can be set higher, ensuring that the model is faithful enough to identify all the failure events within the given time interval.

6.2 Case study II: a four-bar generator mechanism

Case study I confirms the superiority of the proposed approach. In this case study, the four-bar function generator mechanism from Ref. (Du 2014) is used to demonstrate the engineering application of the proposed approach.

The four-bar mechanism is shown in Fig. 5. The geometric dimension variables are \( \boldsymbol{X} = (R_1, R_2, R_3, R_4) \), which are assumed to be normally distributed with means \( \mu_1 = 53.0 \) mm, \( \mu_2 = 122.0 \) mm, \( \mu_3 = 66.5 \) mm, \( \mu_4 = 100.0 \) mm and standard deviations \( \sigma_1 = \sigma_2 = \sigma_3 = \sigma_4 = 0.1 \) mm. The motion outputs can be derived from the following loop equations:

where θ is the motion input, and φ and δ are the two motion outputs. By solving (27), the two motion outputs can be derived as:

where \( D = -2R_1 R_3 \sin\theta \), \( E = 2R_3(R_4 - R_1\cos\theta) \) and \( F = R_2^2 - R_1^2 - R_3^2 - R_4^2 + 2R_1 R_4 \cos\theta \). The motion output of concern is φ, and the desired motion output function is assumed to be

Thus, by treating the motion input θ as the time variable t in time-dependent reliability analysis, the instantaneous limit state function of the four-bar function generator can be expressed as

where c is the allowable threshold, set to 0.6 in this case. The lower limit of t is 95.5°.
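The motion-output expressions obtained from (27) are not reproduced in this section, so the sketch below is our own derivation under a stated assumption, not the paper's formula. Taking the loop closure \( R_1 e^{i\theta} + R_2 e^{i\delta} = R_4 + R_3 e^{i\varphi} \) (\( R_1 \) input crank, \( R_2 \) coupler, \( R_3 \) output rocker, \( R_4 \) ground link) and eliminating δ gives \( D\sin\varphi + E\cos\varphi = F \) with D, E and F as defined above. Its tangent half-angle solution on one assembly branch (assumed here) is \( \varphi = 2\arctan[(D + \sqrt{D^2+E^2-F^2})/(E+F)] \). The check recomputes the coupler length from the solved angle; the function names are illustrative.

```python
import math


def output_angle(R1, R2, R3, R4, theta):
    """Output angle phi (rad) for input angle theta (rad), '+' assembly branch (assumed)."""
    D = -2.0 * R1 * R3 * math.sin(theta)
    E = 2.0 * R3 * (R4 - R1 * math.cos(theta))
    F = R2**2 - R1**2 - R3**2 - R4**2 + 2.0 * R1 * R4 * math.cos(theta)
    disc = D**2 + E**2 - F**2
    if disc < 0.0:
        raise ValueError("the linkage cannot be assembled at this input angle")
    # Tangent half-angle solution of D*sin(phi) + E*cos(phi) = F.
    return 2.0 * math.atan((D + math.sqrt(disc)) / (E + F))


def coupler_length(R1, R3, R4, theta, phi):
    # |R4 + R3 e^{i phi} - R1 e^{i theta}| should reproduce R2 if the loop closes.
    return math.hypot(R4 + R3 * math.cos(phi) - R1 * math.cos(theta),
                      R3 * math.sin(phi) - R1 * math.sin(theta))


R1, R2, R3, R4 = 53.0, 122.0, 66.5, 100.0  # mean link lengths (mm)
theta = math.radians(95.5)                 # lower limit of the input angle
phi = output_angle(R1, R2, R3, R4, theta)
```

At the mean link lengths and θ = 95.5°, this gives φ of about 75.8°. Evaluating `output_angle` over sampled \( \boldsymbol{X} \) and a grid of θ would supply the motion output needed by the limit state function.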

First, SLAS is used to construct a Kriging model over the time interval [95.5°, 225.5°]. The total number of model evaluations is 182: 32 initial sample points and 150 updating sample points. The confidence level of the resulting Kriging model exceeds 0.99999999. Figure 6 shows the TDFP as a function of the upper boundary of the time interval within [95.5°, 225.5°], and indicates that the proposed approach is satisfactory in both accuracy and efficiency.

To obtain inversely the maximum probable life time for the required TDFP of 0.09, we directly choose [95.5°, 225.5°] as the dichotomy interval. Over this interval the TDFP ranges from 0 to 1, which includes 0.09. The dichotomy error limit is set to \( 0.5 \times 10^{-2} \), and the procedure is shown in Table 7. The inverse results are shown in Table 8: the maximum probable life time under the TDFP constraint 0.09 is 118.544°, with a relative error of only 0.24 % compared with MCS. The proposed approach uses 182 model evaluations in total for both the time-dependent reliability analysis and the maximum probable life time analysis, which shows that it is much more efficient than MCS.

7 Conclusion

In this paper, the maximum probable life time under a required TDFP constraint is proposed and analyzed. Determining the maximum probable life time is an inverse process of time-dependent reliability analysis, and its solution requires repeated solutions of the direct process. The paper therefore first introduces a confidence-based meta-model approach for the direct time-dependent reliability analysis, referred to as SLAS, in which time is treated as a uniform variable within the time interval. The meta-model identifies all the instantaneous failure events, so the proposed approach can estimate the TDFP over any subinterval of the time interval covered by the constructed meta-model; this provides much more information than the DLAS approach and the existing SILK surrogate modeling approach. Because the TDFP is commonly monotonic in the upper boundary of the time interval, the dichotomy and the SLAS meta-model can be integrated efficiently in the inverse process to determine the maximum probable life time under the required TDFP constraint. The results illustrate that the proposed approach is superior to the existing approaches in both accuracy and efficiency for time-dependent reliability and maximum probable life time analysis.

References

Andrieu-Renaud C, Sudret B, Lemaire M (2004) The PHI2 method: a way to compute time-variant reliability. Reliab Eng Syst Saf 84(1):75–86

Au SK, Beck JL (2001) Estimation of small failure probabilities in high dimensions by subset simulation. Probab Eng Mech 16(4):263–277

Bisadi V, Padgett JE (2015) Explicit time-dependent multi-hazard cost analysis based on parameterized demand models for the optimum design of bridge structures. J Comput Aided Civ Infrastruct Eng 30:541–554

Breitung K (1988) Asymptotic crossing rates for stationary Gaussian vector processes. Stoch Process Their Appl 29(2):195–207

Breitung K, Rackwitz R (1998) Outcrossing rates of marked Poisson cluster processes in structural reliability. Appl Math Model 12(5):482–490

Chen JB, Li J (2007) The extreme value distribution and dynamic reliability analysis of nonlinear structures with uncertain parameters. Struct Saf 29:77–93

Du XP (2014) Time-dependent mechanism reliability analysis with envelope functions and first-order approximation. ASME J Mech Des 136(8):081010

Du XP, Sudjianto A (2004) The first order saddle point approximation for reliability analysis. AIAA J 42(6):1199–1207

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining Kriging and Monte Carlo simulation. Struct Saf 33(2):145–154

Hu Z, Du XP (2012) Reliability analysis for hydrokinetic turbine blades. Renew Energy 48(1):251–262

Hu Z, Du XP (2013) Time-dependent reliability analysis with joint upcrossing rates. Struct Multidiscip Optim 48:893–907

Hu Z, Du X (2015) First order reliability method for time-variant problems using series expansions. Struct Multidiscip Optim 51(1):1–21

Hu Z, Mahadevan S (2016) A single-loop kriging surrogate modelling for time-dependent reliability analysis. J Mech Des ASME 138(6):061406

Hu Z, Li HF, Du XP (2013) Simulation-based time-dependent reliability analysis for composite hydrokinetic turbine blades. Struct Multidiscip Optim 47:765–781

Kaymaz I (2005) Application of kriging methods to structural reliability problems. Struct Saf 27(2):133–151

Koehler JK, Owen AB (1996) Computer experiments, in: handbook of statistics. Elsevier Science, New York, pp 261–308

Krzykacz-Hausmann B (2006) An approximate sensitivity analysis of results from complex computer models in the presence of epistemic and aleatory uncertainties. Reliab Eng Syst Saf 91(10–11):1210–1218

Li J, Chen JB, Fan WL (2007) The equivalent extreme-value event and evaluation of the structural system reliability. Struct Saf 29:112–131

Lutes LD, Sarkani S (2009) Reliability analysis of systems subject to first-passage failure. NASA Technical Report NASA/CR-2009-215782

Rice SO (1944) Mathematical analysis of random noise. Bell Syst Tech J 23(2):282–332

Sacks J, Schiller SB, Welch WJ (1989) Design for computer experiment. Technometrics 31(1):41–47

Singh A, Mourelatos ZP (2010) On the time-dependent reliability of non-monotonic, non-repairable systems. SAE Technical Paper 2010-01-0696

Singh A, Mourelatos ZP, Li J (2010) Design for lifecycle cost using time-dependent reliability. J Mech Des ASME 132(9):091008

Singh A, Mourelatos Z, Nikolaidis E (2011) Time-dependent reliability of random dynamic systems using time-series modeling and importance sampling. SAE Technical Paper

Sobol IM (1976) Uniformly distributed sequences with additional uniformity properties. USSR Comput Math Math Phys 16:236–242

Sobol IM (1998) On quasi-Monte Carlo integrations. Math Comput Simul 47:103–112

Sundar VS, Manohar CS (2013) Time variant reliability model updating in instrumented dynamical systems based on Girsanov's transformation. Int J Non Linear Mech 52:32–40

Wang ZQ, Wang PF (2012) A nested extreme response surface approach for time-dependent reliability-based design optimization. J Mech Des ASME 134:121007

Wang ZQ, Wang PF (2014) A maximum confidence enhancement based sequential sampling scheme for simulation-based design. J Mech Des ASME 136(2):021006

Wang ZQ, Wang PF (2015) A double-loop adaptive sampling approach for sensitivity-free dynamic reliability analysis. Reliab Eng Syst Saf 142:346–356

Welch WJ, Buck RJ, Sacks J (1992) Screening, predicting, and computer experiments. Technometrics 34(1):15–25

Xu J (2016) A new method for reliability assessment of structural dynamic systems with random parameters. Struct Saf 60:130–143

Zhang XF, Pandey MD, Zhang YM (2014) Computationally efficient reliability analysis of mechanisms based on a multiplicative dimensional reduction method. J Mech Des ASME 136(6):061006

Zhao YG, Ono T (2001) Moment method for structural reliability. Struct Saf 23(1):47–75

Zhou CC, Lu ZZ, Zhang F, Yue ZF (2015) An adaptive reliability method combining relevance vector machine and importance sampling. Struct Multidiscip Optim 52:945–957

Acknowledgment

This work was supported by the Natural Science Foundation of China (Grant 51475370) and the Fundamental Research Funds for the Central Universities (Grant 3102015 BJ (II) CG009).


About this article

Cite this article

Yun, W., Lu, Z., Jiang, X. et al. Maximum probable life time analysis under the required time-dependent failure probability constraint and its meta-model estimation. Struct Multidisc Optim 55, 1439–1451 (2017). https://doi.org/10.1007/s00158-016-1594-z


DOI: https://doi.org/10.1007/s00158-016-1594-z