Abstract

In time-dependent reliability analysis, an upcrossing is defined as the event when a limit-state function reaches its failure region from its safe region. Upcrossings are commonly assumed to be independent. The assumption may not be valid for some applications and may result in large errors. In this work, we develop a more accurate method that relaxes the assumption by using joint upcrossing rates. The method extends the existing joint upcrossing rate method to general limit-state functions with both random variables and stochastic processes. The First Order Reliability Method (FORM) is employed to derive the single upcrossing rate and joint upcrossing rate. With both rates, the probability density of the first time to failure can be solved numerically. Then the probability density leads to an easy evaluation of the time-dependent probability of failure. The proposed method is applied to the reliability analysis of a beam and a mechanism, and the results demonstrate a significant improvement in accuracy.


1 Introduction

Reliability is the probability that a product performs its intended function over a specified period of time and under specified service conditions (Choi et al. 2007). Depending on whether the performance of the product is time-dependent or not, reliability can be classified into two types: time-variant (time-dependent) reliability and time-invariant reliability.

For a time-invariant performance, its reliability and probability of failure remain constant over time. The time-invariant probability of failure is defined by

\[p_{f} = \Pr\{D = g(\mathbf{X}) > e\} \tag{1}\]

where \(\mathbf{X} = (X_{1}, X_{2}, \cdots, X_{n})\) is a random vector, g(·) is a time-invariant performance function or limit-state function, D is a performance variable, e is a limit state, and Pr{·} stands for a probability. Many reliability methods are available for calculating the time-invariant reliability, including the First Order Second Moment Method (FOSM), FORM, and Second Order Reliability Method (SORM) (Du et al. 2005; Huang and Du 2008; Zhang and Du 2010; Madsen et al. 1986; Banerjee and Smith 2011; Kim et al. 2011; Millwater and Feng 2011).

On the other hand, limit-state functions may vary over time. For instance, over the service life of the Thermal Barrier Coating (TBC) of aircraft engines, the stresses and strains of the TBC are time dependent (Miller 1997). Many mechanisms also experience time varying random motion errors due to random dimensions (tolerances), clearances, and deformations of structural components (Dubowsky et al. 1984; Dupac and Beale 2010; Tsai and Lai 2008; Meng and Li 2005; Szkodny 2001). In the systems of wind turbines, hydrokinetic turbines, and aircraft turbine engines, the turbine blade loading always varies over time. Likewise, the wave loading acting on offshore structures fluctuates randomly with time (Nielsen 2010; Kuschel and Rackwitz 2000; Ditlevsen 2002). Almost all dynamic systems involve time-dependent parameters (Rice and Beer 1965; Richard and Mircea 2006; Song and Der 2006). For all the above problems, reliability is a function of time and typically deteriorates with time.

Therefore, a general limit-state function is a function of time t. In addition to random variables \(\mathbf{X} = (X_{1}, X_{2}, \cdots, X_{n})\), stochastic processes \(\mathbf{Y}(t) = (Y_{1}(t), Y_{2}(t), \cdots, Y_{m}(t))\) may also appear in the limit-state function. A stochastic process can be considered as a random variable that varies over time. Hence a general time-dependent limit-state function is given by

\[D(t) = g(\mathbf{X}, \mathbf{Y}(t), t) \tag{2}\]

If we want to know the likelihood of failure at a particular instant of time t, we can still use the time-invariant probability of failure because t is fixed at that instant. Using (1), we obtain the instantaneous probability of failure

\[p_{f}(t) = \Pr\{g(\mathbf{X}, \mathbf{Y}(t), t) > e(t)\} \tag{3}\]

The aforementioned methods, such as FOSM, FORM, or SORM, are ready to calculate \(p_{f}(t)\).

For time-dependent problems, we are more concerned with the time-dependent probability of failure because it provides us with the likelihood of a product performing its intended function over its service time, or a system fulfilling its task during its mission time. The time-dependent probability of failure over a time interval [\(t_{0}\), \(t_{s}\)] is defined by

\[p_{f}(t_{0}, t_{s}) = \Pr\{g(\mathbf{X}, \mathbf{Y}(\tau), \tau) > e(\tau),\; \exists \tau \in [t_{0}, t_{s}]\} \tag{4}\]

where \(t_{0}\) is the initial time when the product is put into operation, and \(t_{s}\) is the endpoint of the time interval, such as the service time of the product.

Let the first time to failure (FTTF) be \(T_{1}\), which is the time that \(g(\cdot )\) reaches its limit state for the first time. \(T_{1}\) is also the working time before failure and is a random variable. \(p_{f}(t_{0}, t_{s})\) can also be given by

where \(F_{T_{1} } ( \cdot )\) is the cumulative distribution function (CDF) of the FTTF.

Time-dependent reliability methodologies are classified into two categories. The first includes the extreme-value methods, which use the time-invariant reliability analysis methods (FOSM, FORM, SORM, etc.) if one can obtain the distribution of the extreme value of \(g(\mathbf{X}, \mathbf{Y}(\tau), \tau) - e(\tau)\) over [\(t_{0}, t_{s}\)] (Chen and Li 2007, 2008; Li et al. 2007; Wang and Wang 2012). The reason is that the failure event \(\{g(\mathbf{X}, \mathbf{Y}(\tau), \tau) > e(\tau),\; \exists \tau \in [t_{0}, t_{s}]\}\) is equivalent to the event \(\{\max_{\tau \in [t_{0}, t_{s}]} [g(\mathbf{X}, \mathbf{Y}(\tau), \tau) - e(\tau)] > 0\}\). However, it is difficult to obtain the distribution of the extreme value. The extreme distribution may be available for limit-state functions in the form of \(D(t) = g(\mathbf{X}, t)\) (Wang and Wang 2012) or \(D(t) = g(\mathbf{X}, Y(t))\) (Hu and Du 2013). The associated methods, however, are not applicable to the general problems indicated in (2). Therefore, in most cases, the methods in the second category are used.

The second category includes the first-passage methods because they directly use the first-passage time or the first time to failure (FTTF) \(T_{1}\) in (5). The failure event \(\{g(\mathbf{X}, \mathbf{Y}(\tau), \tau) > e(\tau),\; \exists \tau \in [t_{0}, t_{s}]\}\) is equivalent to the event that at least one failure occurs over [\(t_{0}, t_{s}\)], or equivalently to the event \(t_{0} \le T_{1} \le t_{s}\). The most commonly used method is Rice’s formula (Rice 1944), which is based on the concept of upcrossing.

Define \(N(t_{0}, t_{s})\) as the number of upcrossings, that is, the number of times g(·) reaches the limit state e from the safe region g(·) < e over the time period [\(t_{0}, t_{s}\)]. Basic probability theory shows that \(N(t_{0}, t_{s})\) follows a binomial distribution. When the probability of upcrossing is very small, it is equal to the mean number of upcrossings per unit time (the upcrossing rate). Because the binomial distribution converges to the Poisson distribution when the time period is sufficiently long or the dependence between crossings is negligible, the upcrossings are assumed to be statistically independent (Rui 1985). With this assumption, the upcrossing rate becomes the first-time crossing rate or the failure rate. Then the probability of failure can be estimated from the upcrossing rate.

Since the development of Rice’s formula, many improvements have been made (Schall et al. 1991; Engelund et al. 1995; Rackwitz 1998; Lutes and Sarkani 2004, 2009; Sudret 2008; Zhang and Du 2011; Lin 1967; Cramer 1966; Hagen 1992; Li and Melchers 1994; Parkinson 1999; Streicher and Rackwitz 2004). For example, an analytical outcrossing rate (Lutes and Sarkani 2004) has been derived for Gaussian stationary processes. An analytical outcrossing rate has also been given for general Gaussian stochastic processes (Lutes and Sarkani 2009; Sudret 2008) and has been applied to mechanism analysis (Zhang and Du 2011). An importance sampling method has been proposed to approximate the outcrossing rate (Singh et al. 2011), and a lifecycle cost optimization method was developed using the outcrossing rate as the failure rate (Singh et al. 2010). If upcrossing events are rare over the considered time period (Zhang and Du 2011), the Poisson assumption-based approaches (Schall et al. 1991; Engelund et al. 1995; Rackwitz 1998; Lutes and Sarkani 2004, 2009; Sudret 2008; Zhang and Du 2011; Lin 1967; Cramer 1966; Hagen 1992; Li and Melchers 1994; Parkinson 1999; Streicher and Rackwitz 2004) have shown good accuracy.

When upcrossings are strongly dependent, however, the above approaches may lead to large errors. In this case, the memory of failure should be considered to guarantee that the obtained first-passage failure is indeed the first. Even though the Markov process methods have a property of memory, such memory is weak and is only valid for Markov or similar processes (Yang and Shinozuka 1971, 1972). Vanmarcke (1975) and Preumont (1985) have made some empirical modifications to the Poisson assumption-based formulas. These modifications work well for Gaussian processes.

A promising way to improve accuracy is to relax the independence assumption for upcrossing events. In other words, we may consider the dependence between two or more instants of time (Bernard and Shipley 1972; Madsen and Krenk 1984), instead of considering a single upcrossing at one instant. The accuracy improvement has been shown in Madsen and Krenk (1984) for a Gaussian process in vibration problems.

Inspired by the work in Madsen and Krenk (1984), we develop a time-dependent reliability analysis method with joint upcrossing rates, which extends the method in Madsen and Krenk (1984) to more general limit-state functions that involve time, random variables, and stochastic processes. Because the method combines the joint upcrossing rates (JUR) and the First Order Reliability Method (FORM), we call it JUR/FORM.

In Section 2, we review the commonly used time-dependent reliability analysis methods upon which JUR/FORM is built. We then discuss JUR/FORM in Section 3, followed by two case studies in Section 4. Conclusions are drawn in Section 5.

2 Review of time-dependent reliability analysis methods

In this section, we review the integration of the Poisson assumption-based method with the First Order Reliability Method (FORM). By this method, \(p_{f}(t_{0}, t_{s})\) is calculated by (Zhang and Du 2011; Madsen and Krenk 1984; Hu and Du 2012)

\[p_{f}(t_{0}, t_{s}) = 1 - \left[1 - p_{f}(t_{0})\right] \exp\left\{-\int_{t_{0}}^{t_{s}} v^{+}(t)\, dt\right\} \tag{6}\]

where \(p_{f}(t_{0})\) is the instantaneous probability of failure at the initial time point \(t_{0}\), and \(v^{+}(t)\) is the upcrossing rate at t.

\(p_{f}(t_{0})\) can be calculated by any time-invariant reliability method, such as FOSM, FORM, or SORM. If we know \(v^{+}(t)\), then we can calculate \(p_{f}(t_{0}, t_{s})\) by integrating \(v^{+}(t)\) over [\(t_{0}, t_{s}\)] as indicated in (6).
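The integration described above can be sketched in a few lines of Python. This is a minimal illustration, assuming that (6) takes the common Poisson-based form \(p_{f}(t_{0}, t_{s}) = 1 - [1 - p_{f}(t_{0})]\exp\{-\int_{t_{0}}^{t_{s}} v^{+}(t)dt\}\); the function name, the constant upcrossing rate, and the initial probability of failure are hypothetical values chosen only for demonstration.

```python
import math

def poisson_failure_probability(times, rates, pf_initial):
    """Poisson-assumption estimate of p_f(t0, ts).

    times      : increasing time instants covering [t0, ts]
    rates      : upcrossing rate v+(t) evaluated at those instants
    pf_initial : instantaneous probability of failure at t0
    """
    # Composite trapezoidal rule for the integral of v+(t).
    integral = 0.0
    for k in range(1, len(times)):
        integral += 0.5 * (rates[k] + rates[k - 1]) * (times[k] - times[k - 1])
    return 1.0 - (1.0 - pf_initial) * math.exp(-integral)

# Hypothetical inputs: a constant rate of 1e-3 per year over [0, 30] years.
times = [30.0 * k / 80 for k in range(81)]
rates = [1.0e-3] * len(times)
pf = poisson_failure_probability(times, rates, pf_initial=1.0e-4)
print(f"p_f(0, 30) = {pf:.6f}")
```

Any other quadrature rule could replace the trapezoidal loop; the exponential form is what encodes the independence assumption.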

For a general limit-state function \(D(t)=g(\mathbf{X}, \mathbf{Y}(t), t)\), at a given instant t, the stochastic processes \(\mathbf{Y}(t)\) become random variables. If we use FORM, we first transform the random variables \((\mathbf{X}, \mathbf{Y}(t))\) into standard normal variables \(\mathbf{U}(t) = (\mathbf{U}_{\mathbf{X}}, \mathbf{U}_{\mathbf{Y}}(t))\) (Du et al. 2005; Huang and Du 2008; Zhang and Du 2010; Madsen et al. 1986; Banerjee and Smith 2011; Zhang and Du 2011). Then we search for the Most Probable Point (MPP) \(\widetilde{\mathbf{U}}(t) = (\widetilde{\mathbf{U}}_{\mathbf{X}}, \widetilde{\mathbf{U}}_{\mathbf{Y}}(t))\). The MPP is the point on the limit state at which the joint probability density is the highest. After the limit-state function is linearized at the MPP, the failure event \(g(\mathbf{X}, \mathbf{Y}(t), t) > e(t)\) is equivalent to the event given by (Choi et al. 2007)

\[W(\mathbf{U}(t), t) = \boldsymbol{\alpha}(t)\mathbf{U}(t)^{T} > \beta(t) \tag{7}\]

where

\(\beta (t)\) is the reliability index, which is the length of \(\widetilde {{\rm {\bf U}}}(t)\). \(T(\cdot )\) is the operator of transforming non-Gaussian random variables (X, Y(t)) into Gaussian random variables U(t). \(\Vert \cdot \Vert \) stands for the magnitude of a vector.

Then the upcrossing rate \(v^{+}(t)\) is (Andrieu-Renaud et al. 2004)

where \(\dot {\alpha }(t)\) and \(\dot {\beta } (t)\) are the derivatives of \(\mathbf {\alpha }(t)\) and \(\beta (t)\), respectively, with respect to time t, and \(\Psi (\cdot )\) is a function defined by

in which \(\phi (\cdot)\) and \(\Phi (\cdot)\) stand for the probability density function (PDF) and cumulative distribution function (CDF) of the standard normal random variable, respectively.
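As an illustration of how the single upcrossing rate can be evaluated once \(\beta(t)\), \(\dot{\beta}(t)\), and \(\dot{\alpha}(t)\) are known, the sketch below assumes the form commonly reported in the FORM-based literature, \(v^{+}(t) = \Vert \dot{\alpha}(t) \Vert \phi(\beta(t)) \Psi(\dot{\beta}(t) / \Vert \dot{\alpha}(t) \Vert)\) with \(\Psi(x) = \phi(x) - x\Phi(-x)\). Both expressions are assumptions here because the displayed equations are not reproduced in this text; the function names are hypothetical.

```python
import math

def std_normal_pdf(x):
    """phi(x): PDF of the standard normal variable."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def std_normal_cdf(x):
    """Phi(x): CDF of the standard normal variable."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def psi(x):
    """Assumed form Psi(x) = phi(x) - x * Phi(-x)."""
    return std_normal_pdf(x) - x * std_normal_cdf(-x)

def single_upcrossing_rate(alpha_dot, beta, beta_dot):
    """Assumed form v+ = ||alpha_dot|| * phi(beta) * Psi(beta_dot / ||alpha_dot||).

    alpha_dot : sequence with the time derivative of the unit vector alpha(t)
    beta      : reliability index at t
    beta_dot  : time derivative of the reliability index at t
    Requires ||alpha_dot|| > 0.
    """
    norm_alpha_dot = math.sqrt(sum(a * a for a in alpha_dot))
    return norm_alpha_dot * std_normal_pdf(beta) * psi(beta_dot / norm_alpha_dot)

# Hypothetical stationary case: beta_dot = 0 and ||alpha_dot|| = 2.
rate = single_upcrossing_rate([2.0, 0.0], beta=3.0, beta_dot=0.0)
```

For a stationary problem with \(\dot{\beta} = 0\), this reduces to the classical Rice result \(\Vert \dot{\alpha} \Vert \exp(-\beta^{2}/2) / (2\pi)\), which is a convenient sanity check.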

As mentioned previously, the above method may produce large errors if upcrossings are strongly dependent. Next we use the joint upcrossing rate to improve the accuracy of time-dependent reliability analysis.

3 Time-dependent reliability analysis with joint upcrossing rates and FORM

In this section, we first provide the equations given in Madsen and Krenk (1984) for a Gaussian stochastic process. Based on these equations and FORM, we then derive complete equations in the subsequent subsections.

3.1 Time-dependent reliability analysis with joint upcrossing rates

We now summarize the methodology in Madsen and Krenk (1984) where the joint upcrossing rates are used. Based on this methodology, the necessary equations are developed in Sections 3.2 and 3.3.

For a general stochastic process Q(t), suppose its failure event is defined by {Q(t) > e(t)}. \(p_{f}(t_{0}, t_{s}) = \Pr\{Q(\tau) > e(\tau),\; \exists \tau \in [t_{0}, t_{s}]\}\) is then given by

or

where \(f_{T_{1}}(t)\) is the probability density function (PDF) of the first time to failure (FTTF). The first term in the above equation is the probability of failure at the initial time, and the second term is the probability that a failure occurs over [\(t_{0}, t_{s}\)] while no failure occurs at \(t_{0}\).

The upcrossing rate \(v^{+}(t)\) is the probability that an upcrossing occurs at time t per unit of time. It is equal to the sum of two terms. The first is the PDF \(f_{T_{1}}(t)\), which is the rate of an upcrossing occurring for the first time at t. The second is the probability rate that an upcrossing occurs at time t given that the first upcrossing occurred at a time \(\tau \) prior to t. Thus (Madsen and Krenk 1984)

\[v^{+}(t) = f_{T_{1}}(t) + \int_{t_{0}}^{t} v^{+}(t \vert \tau) f_{T_{1}}(\tau)\, d\tau \tag{13}\]

According to the definition of conditional probability for two events A and B, we have P(A|B) = P(A, B) / P(B). Thus, the conditional rate \(v^{+}(t \vert \tau )\) is equal to \(v^{++}(t,\tau )/v^{+}(\tau )\), and (13) is rewritten as

\[v^{+}(t) = f_{T_{1}}(t) + \int_{t_{0}}^{t} \frac{v^{++}(t,\tau) f_{T_{1}}(\tau)}{v^{+}(\tau)}\, d\tau \tag{14}\]

where \(v^{++}(t,\tau )\) is the second order upcrossing rate or the joint outcrossing rate at t and \(\tau \). It indicates the joint probability that there are outcrossings at both t and \(\tau \).

Equation (14) is a Volterra integral equation, for which a closed-form solution may not exist. Numerical methods are therefore necessary (Burchitz and Meinders 2008; Dickmeis et al. 1984; Diethelm 1994; Navascués and Sebastián 2012; Ujević 2008). In this work, we use the compounded trapezoidal rule method (Diethelm 1994). Other integration methods can also be used. How to solve the Volterra integral equation is briefly presented below.

We first discretize the time interval into p time intervals or p + 1 time instants with \(t_{i} = t_{0} + (i-1)h\), where \(h=\frac {t_{s} -t_{0} }{p}\) and \(i = 1, 2, \cdots, p + 1\). With the compounded trapezoidal rule (Diethelm 1994), \(\int_{t_{0}}^{t_{s}} \frac{v^{++}(t_{s},\tau) f_{T_{1}}(\tau)}{v^{+}(\tau)}\, d\tau\) is approximated as follows:

Combining (15) with (14) yields

Applying (16) to every time instant \(t_{i}\), \(i = 1, 2, \cdots, p + 1\), we obtain

Equation (17) can be written in matrix form as

The discretized \(f_{T_{1} } ( t )\) is then solved by the following equation:

After \(f_{T_{1} } ( t )\) is solved numerically, we can obtain \(p_{f}(t_{0}, t_{s})\) with (12).
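The discretization above can be illustrated with a short forward-substitution solver. This is a sketch under stated assumptions: it solves \(v^{+}(t) = f_{T_{1}}(t) + \int_{t_{0}}^{t} v^{++}(t,\tau) f_{T_{1}}(\tau)/v^{+}(\tau)\, d\tau\) on a uniform grid with the trapezoidal rule, and its endpoint weights may differ in detail from the matrix form of (18) and (19). The function name and the test inputs are hypothetical.

```python
import math

def solve_first_passage_pdf(v_single, v_joint, h):
    """Solve the Volterra equation for f_T1 on a uniform grid with step h.

    v_single : list, v+(t_i) at each grid point (all values must be nonzero)
    v_joint  : list of lists, v_joint[i][j] = v++(t_i, t_j); only j <= i is used
    Returns the list of f_T1(t_i).
    """
    n = len(v_single)
    f = [0.0] * n
    f[0] = v_single[0]  # the integral is empty at the first instant
    for i in range(1, n):
        # Kernel K(t_i, t_j) = v++(t_i, t_j) / v+(t_j) for j = 0..i.
        kernel = [v_joint[i][j] / v_single[j] for j in range(i + 1)]
        # Trapezoidal sum over the already known values f[0..i-1].
        known = 0.5 * kernel[0] * f[0]
        known += sum(kernel[j] * f[j] for j in range(1, i))
        # The last trapezoidal node contains the unknown f[i]; solve for it.
        f[i] = (v_single[i] - h * known) / (1.0 + 0.5 * h * kernel[i])
    return f

# Hypothetical check case: unit kernel and unit right-hand side, whose exact
# solution of f(t) + integral_0^t f(tau) dtau = 1 is f(t) = exp(-t).
p = 100
h = 1.0 / p
ones = [1.0] * (p + 1)
f_demo = solve_first_passage_pdf(ones, [ones] * (p + 1), h)
```

Because the system is lower triangular, each \(f_{T_{1}}(t_{i})\) follows from the previous values without forming or inverting the full matrix; solving the matrix equation directly gives the same result.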

The above methodology is applicable to a single stochastic process. We now extend it to a general limit-state function \(D(t) = g(\mathbf{X}, \mathbf{Y}(t), t)\). The extension is possible because D(t) can be converted into a Gaussian process at the MPP. From (19), it can be seen that the single upcrossing rate \(v^{+}(t)\) and the joint upcrossing rate \(v^{++}(t,\tau )\) are the bases for solving \(f_{T_{1}}(\tau )\). We therefore first derive equations for these two rates by using FORM and Rice’s formula. We then discuss how to obtain the time-dependent probability of failure based on these rates.

3.2 Single upcrossing rate \(v^{+}(t)\)

Recall that after the MPP is found, the general limit-state function \(g(\mathbf{X}, \mathbf{Y}(t), t)\) becomes \(W(\mathbf{U}(t), t)\), and the failure event is \(W(\mathbf{U}(t),t) = \boldsymbol{\alpha}(t)\mathbf{U}(t)^{T} > \beta(t)\). According to Rice’s formula (Rice 1944, 1945), the single upcrossing rate \(v^{+}(t)\) is given by

where \(\omega (t)\) is the standard deviation of \(\dot {W}(t)\), which is the time derivative process of W(t). \(\omega^{2}(t)\) is given in terms of the correlation function \(\rho(t_{1}, t_{2})\) of W(t) as follows:

We use the finite difference method to estimate \(\dot {\beta }(t)\). This means that we need to perform the MPP search twice. Andrieu-Renaud et al. (2004) also use the finite difference method but introduce additional random variables for the second MPP search. As will be seen, the method presented here does not introduce any extra random variables.

As mentioned above, \(W(t) = \boldsymbol{\alpha}(t)\mathbf{U}(t)^{T}\), and from (8), we have \(\left \| {\alpha (t)} \right \|=1\). W(t) is therefore a standard normal stochastic process, and its coefficient of correlation is given by

where \(\mathbf{C}(t_{1}, t_{2})\) is the covariance matrix of \(\mathbf{U}(t_{1})\) and \(\mathbf{U}(t_{2})\).

Since \(\mathbf{U}(t) = (\mathbf{U}_{\mathbf{X}}, \mathbf{U}_{\mathbf{Y}}(t))\) is a vector of standard normal random variables and stochastic processes, \(\mathbf{C}(t_{1}, t_{2})\) is given by:

where \(\mathbf{I}_{n\times n}\) is an n × n identity matrix, which is the covariance matrix of the normalized random variables \(\mathbf{U}_{\mathbf{X}}\) from \(\mathbf{X}\). The covariance matrix of the normalized stochastic processes \(\mathbf{U}_{\mathbf{Y}}(t)\) from \(\mathbf{Y}(t)\) is given in terms of its correlation coefficients as

where C(·, ·) stands for the covariance, and \(C^{Y_{i} }\left ( {t_{1} ,t_{2} } \right )\) is the covariance of the normalized stochastic process \(U_{Y_{i} } ( t )\) at time instants \(t_{1}\) and \(t_{2}\). \(\rho ^{Y_{i} }\) is the corresponding correlation function of the normalized stochastic process \(U_{Y_{i} } ( t )\) at these two time instants and is given by

Substituting (22) into (21) yields

Since we perform the MPP search at two instants and (26) also needs two instants (t, t), we now derive equations for two general instants \(t_{1}\) and \(t_{2}\). For time derivatives, such as \(\dot {\beta }(t)\), we let \(t_{2}=t_{1}+ \Delta t\), where \(\Delta t\) is a small step size.

Differentiating (23), we obtain

and

\(\dot {{\rm \textbf {C}}}_{1}^Y (t_{1},t_{2}), \quad \dot {{\rm \textbf {C}}}_{2}^Y (t_{1},t_{2}),\) and \(\ddot {{\rm \textbf {C}}}_{12}^Y (t_{1},t_{2})\) are given by

and

In particular, for a pair of identical time instants (t, t), we have

and

Therefore, (26) is rewritten as

where \(\ddot {{\rm \textbf {C}}}_{12} (t,t)\) is computed by substituting (\(t_{1},t_{2})\) with \((t,t)\) in (29), and \(\dot {\alpha }(t)\) and \(\dot {\beta }(t)\) are calculated by

and

We have obtained all the equations for the single upcrossing rate \(v^{+}(t)\) in (20).
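The building blocks of this subsection can be sketched numerically as follows. The code assumes that \(\mathbf{C}(t_{1}, t_{2})\) is block diagonal, with an identity block for \(\mathbf{U}_{\mathbf{X}}\) and a diagonal block holding \(\rho^{Y_{i}}(t_{1}, t_{2})\) for each process, that the correlation of W is \(\boldsymbol{\alpha}(t_{1})\mathbf{C}(t_{1}, t_{2})\boldsymbol{\alpha}(t_{2})^{T}\), and that the derivatives in (40) and (41) are simple forward differences. The last point in particular is an assumption, and all function names and numbers are hypothetical.

```python
import numpy as np

def covariance_u(n_random, rho_y):
    """C(t1, t2): identity block for U_X, diagonal block of rho^{Y_i}(t1, t2)."""
    rho_y = np.atleast_1d(np.asarray(rho_y, dtype=float))
    cov = np.eye(n_random + rho_y.size)
    cov[n_random:, n_random:] = np.diag(rho_y)
    return cov

def correlation_w(alpha_1, alpha_2, cov_12):
    """rho(t1, t2) = alpha(t1) C(t1, t2) alpha(t2)^T for the linearized W(t)."""
    return float(np.asarray(alpha_1) @ cov_12 @ np.asarray(alpha_2))

def forward_difference(value_t, value_t_plus_dt, dt):
    """Forward-difference estimate of a time derivative (works for alpha or beta)."""
    return (np.asarray(value_t_plus_dt, dtype=float) - np.asarray(value_t, dtype=float)) / dt

# Hypothetical case: two random variables and one process with rho^Y = 0.8.
cov_demo = covariance_u(2, [0.8])
alpha_demo = np.array([0.6, 0.0, 0.8])  # unit vector from an MPP search
rho_demo = correlation_w(alpha_demo, alpha_demo, cov_demo)
beta_dot_demo = forward_difference(2.0, 2.001, 0.001)
```

The two MPP searches at t and \(t + \Delta t\) supply the pairs of values that `forward_difference` consumes, so no extra random variables are needed.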

3.3 Joint upcrossing rate \(v^{++}(t_{1}, t_{2})\)

Now we derive the joint upcrossing rate \(v^{++}(t_{1}, t_{2})\) between two arbitrary time instants \(t_{1}\) and \(t_{2}\). The joint upcrossing rate \(v^{++}(t_{1}, t_{2})\), which indicates the joint probability that outcrossing events occur at both \(t_{1}\) and \(t_{2}\), is defined by Rice’s formula as follows (Rice 1944, 1945):

where \(f_{\dot{\mathbf{W}}\mathbf{W}}(\dot{\mathbf{W}}, \mathbf{W})\) is the joint normal density function of \(\dot{\mathbf{W}} = (\dot{W}(t_{1}), \dot{W}(t_{2}))\) and \(\mathbf{W} = (W(t_{1}), W(t_{2}))\), \(\boldsymbol{\beta} = (\beta_{1}, \beta_{2})\), \(\beta_{1} = \beta(t_{1})\), and \(\beta_{2} = \beta(t_{2})\). The covariance matrix of \(\dot{\mathbf{W}}\) and \(\mathbf{W}\) is given by (Madsen and Krenk 1984)

in which

and

Substituting (22) into (45)–(48) yields

and

in which

and

\(\mathbf{C}(t_{1}, t_{2})\), \(\dot{\mathbf{C}}_{1}(t_{1}, t_{2})\), \(\dot{\mathbf{C}}_{2}(t_{1}, t_{2})\), and \(\ddot{\mathbf{C}}_{12}(t_{1}, t_{2})\) in (49)–(52) are computed using (23) and (27) through (29).

With the above equations derived, we can now use the equations in Madsen and Krenk (1984) directly to calculate \(v^{++}(t_{1},t_{2})\). The equations are summarized below.

in which

\(\mu_{1}\) and \(\mu_{2}\), \(\lambda_{1}\) and \(\lambda_{2}\), and \(\kappa \) are the mean values, standard deviations, and correlation coefficient of \(\dot{W}(t_{1}) \mid \boldsymbol{\beta}\) and \(\dot{W}(t_{2}) \mid \boldsymbol{\beta}\), respectively. They are calculated by substituting the covariance matrix in (43) into the following equations

After the derivation of \(v^{+}(t)\) and \(v^{++}(t_{1}, t_{2})\), \(p_{f}(t_{0}, t_{s})\) is computed with (12), (19), (20), and (55).
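The conditional moments \(\mu_{1}, \mu_{2}, \lambda_{1}, \lambda_{2}, \kappa\) can be obtained with the standard conditioning formulas for zero-mean jointly Gaussian vectors, as sketched below. The block ordering of the 4 × 4 covariance matrix (derivatives first, then the process values) and the function name are assumptions for illustration; the closed-form expression for \(v^{++}(t_{1}, t_{2})\) in (55) is not reproduced here.

```python
import numpy as np

def conditional_rate_moments(cov, beta):
    """Moments of (W_dot(t1), W_dot(t2)) given (W(t1), W(t2)) = beta.

    cov  : 4x4 covariance of (W_dot(t1), W_dot(t2), W(t1), W(t2)),
           assumed ordering: derivative block first, all variables zero-mean
    beta : (beta_1, beta_2), reliability indices at t1 and t2
    Returns (mu, lam, kappa): conditional means, standard deviations,
    and correlation coefficient.
    """
    cov = np.asarray(cov, dtype=float)
    beta = np.asarray(beta, dtype=float)
    c_dd = cov[:2, :2]  # covariance of the derivatives
    c_dw = cov[:2, 2:]  # cross-covariance between derivatives and W
    c_ww = cov[2:, 2:]  # covariance of W(t1), W(t2)
    # Standard Gaussian conditioning: mean and Schur complement.
    mu = c_dw @ np.linalg.solve(c_ww, beta)
    sigma = c_dd - c_dw @ np.linalg.solve(c_ww, c_dw.T)
    lam = np.sqrt(np.diag(sigma))
    kappa = sigma[0, 1] / (lam[0] * lam[1])
    return mu, lam, kappa

# Hypothetical covariance matrix, used only to exercise the function.
cov_demo = np.array([
    [2.0, 0.5, 0.0, 0.3],
    [0.5, 2.0, -0.3, 0.0],
    [0.0, -0.3, 1.0, 0.0],
    [0.3, 0.0, 0.0, 1.0],
])
mu_demo, lam_demo, kappa_demo = conditional_rate_moments(cov_demo, [3.0, 3.0])
```

Using `np.linalg.solve` instead of an explicit inverse keeps the computation stable when \(t_{1}\) and \(t_{2}\) are close and the covariance of W is nearly singular.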

3.4 Numerical implementation

There are many equations involved in JUR/FORM. In this section, we summarize its numerical implementation. From (11) and (12), we know that to obtain \(p_{f}(t_{0}, t_{s})\), we need to integrate the PDF \(f_{T_{1}}(t)\) over [\(t_{0}\), \(t_{s}\)] numerically. At each integration point between \(t_{0}\) and \(t_{s}\), the integral equation in (14) should be solved. To maintain good efficiency, we propose the following numerical procedure.

We start by evaluating the PDF at the last instant \(t_{s}\). To do so, we discretize the time interval [\(t_{0}\), \(t_{s}\)] into p + 1 instants \(t_{i}\) \(( {i=0,1,\;2,\;\cdots ,\;p} )\), at each of which the integral equation in (14) for \(f_{T_{1}}(t_{s})\) will be solved. We will then obtain the PDFs at all these instants. Thus the total number of MPP searches will be 2(p + 1). This procedure is summarized below, and the associated flowchart is given in Fig. 1.

Step 1: Initialize the random variables and stochastic processes, including transforming non-Gaussian variables into Gaussian ones, discretizing the time interval [\(t_{0}\), \(t_{s}\)] into p + 1 time instants \(t_{0} ,t_{1} ,\cdots ,t_{i} ,\cdots ,t_{p+1} =t_{s} \), and setting a time step \(\Delta t\) for the MPP search at \(t_{i} +\Delta t\;\;( {i=1,2,\cdots ,p+1} )\).

Step 2: Perform the MPP search at every discretized point \(t_{i}\), as well as at \(t_{i}+\Delta t\); calculate \(\alpha (t_{i})\), \(\beta (t_{i})\), \(\dot {\alpha } (t_{i} )\), \(\dot {\beta } (t_{i})\), covariance matrix \({\rm {\bf C}}( {t_{i} ,\;t_{j} } )\;\left ( {i,\;j=1,\;2,\;\cdots ,\;p+1} \right )\), and c by using (23), (40), (41) and (43)–(54).

Step 3: Solve for the single upcrossing rate \(v^{+}(t_{i})\) using (20) and the joint upcrossing rate \(v^{++}( {t_{i} ,\;t_{j} } )\) \(\left ( {i,\;j=1,\;2,\;\cdots ,\;p+1} \right )\) using (55), and compute the PDF \(f_{T_{1}}(t_{i})\) at each time instant using (19).

Step 4: Calculate \(p_{f}(t_{0}, t_{s})\).

4 Numerical examples

In this section, two examples are used to demonstrate the developed methodology. The first one is the reliability analysis of a corroded beam under time-variant random loading, and the second one is the reliability analysis of a two-slider crank mechanism. The two examples are selected because they represent two kinds of important applications. Specifically, the first example involves both of a stochastic process and random variables in the input of the limit-state function. The stochastic process is the time-variant random load acting on the beam. In the second example, there are no stochastic processes in the input of the limit-state function. But the limit-state function is still time-dependent because it is an explicit function of time.

To show the accuracy improvement of JUR/FORM, we compare its results with those of the traditional Poisson assumption-based single upcrossing rate method, which has been reviewed in Section 2. Because the exact solutions are not available, we use Monte Carlo Simulation (MCS) as a benchmark.

In order to investigate the effects of parameter settings on the accuracy of JUR/FORM, numerical studies were also performed for Example 1. The effects studied include the effects of number of discretization points for the time interval \([t_{0} ,t_{s} ]\), the time step size \(\Delta t\), the level of probability of failure, and the dependency of the limit-state function between two successive time instants.

Next we briefly review the MCS that we used.

4.1 Monte Carlo simulation

When there are stochastic processes involved in the limit-state function, to generate the samples of the stochastic process \(Y_{i}\), we treat the stochastic process as correlated random variables \(\mathbf{Y}_{i} =(Y_{i} (t_{1} ),\;Y_{i} (t_{2} ),\cdots ,Y_{i} (t_{N} ))^T\) after discretizing the time interval [\(t_{0}\), \(t_{s}\)] into N instants. For a Gaussian stochastic process, the correlated random variables \(\mathbf{Y}_{i}\) are generated after transforming the correlated random variables into uncorrelated ones as follows (Gupta et al. 2000)

where \(\xi =( {\xi _{1} ,\;\xi _{2} ,\cdots ,\;\xi _{N} } )^T\) is the vector of N independent standard normal random variables; \(\mu _{Y_{i} } =\left ({\mu _{Y_{i} } ( {t_{1} } ),\;\mu _{Y_{i} } \left ( {t_{2} } \right ),\cdots ,\;\mu _{Y_{i}} (t_{N})} \right )^T\) is the vector of mean values of \({\rm \textbf {Y}}_{i} =\left ( {Y_{i} \left ( {t_{1} } \right ),\;Y_{i} ( {t_{2} } ),\cdots ,\;Y_{i} ( {t_{N} } )} \right )^T\); and \(\mathbf{L}\) is a lower triangular matrix obtained from the covariance matrix of \(\mathbf{Y}_{i}\).

Let matrix \(\mathbf{A}_{N \times N}\) be the covariance matrix of \(\mathbf{Y}_{i}\). \(\mathbf{L}\) can be obtained by

in which D is a diagonal eigenvalue matrix of the covariance matrix A, and P is the N × N square matrix whose i-th column is the i-th eigenvector of A.
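The sampling scheme can be sketched as follows, assuming the factor is formed as \(\mathbf{L} = \mathbf{P}\mathbf{D}^{1/2}\) so that \(\mathbf{L}\mathbf{L}^{T} = \mathbf{A}\), and that samples are generated as \(\mathbf{Y}_{i} = \mu_{Y_{i}} + \mathbf{L}\xi\). The squared-exponential correlation function and the mean value of 3500 are hypothetical placeholders; the standard deviation of 700 and the correlation length of 1 year follow Example 1.

```python
import numpy as np

def spectral_factor(cov):
    """Return L = P D^{1/2} from the eigendecomposition of a covariance matrix."""
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh: symmetric input, real output
    eigvals = np.clip(eigvals, 0.0, None)   # remove tiny negative round-off values
    return eigvecs * np.sqrt(eigvals)       # scales column i by sqrt(eigenvalue i)

def sample_gaussian_process(mean, cov, n_samples, rng):
    """Draw sample paths Y = mean + L xi; each column is one path."""
    factor = spectral_factor(cov)
    xi = rng.standard_normal((factor.shape[1], n_samples))
    return np.asarray(mean, dtype=float)[:, None] + factor @ xi

# Hypothetical setup: 50 instants over [0, 30] years.
times = np.linspace(0.0, 30.0, 50)
sigma, zeta = 700.0, 1.0
lag = times[:, None] - times[None, :]
cov_demo = sigma**2 * np.exp(-(lag / zeta) ** 2)  # assumed correlation form
mean_demo = np.full(times.size, 3500.0)           # assumed mean value
rng = np.random.default_rng(0)
paths = sample_gaussian_process(mean_demo, cov_demo, n_samples=1000, rng=rng)
```

Note that \(\mathbf{P}\mathbf{D}^{1/2}\) is generally a full matrix rather than a lower triangular one; a Cholesky factor of \(\mathbf{A}\) would be lower triangular and would reproduce the same covariance, but the eigenvalue route tolerates a nearly singular \(\mathbf{A}\) better.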

4.2 Example 1: Corroded beam under time-variant random loading

4.2.1 Problem statement

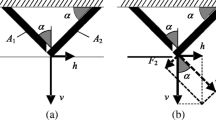

The beam problem in Andrieu-Renaud et al. (2004) is modified as our first example. As shown in Fig. 2, the cross section A-A of the beam is rectangular with its initial width \(a_{0}\) and height \(b_{0}\). Due to corrosion, the width and height of the beam decrease at a rate of r. A random load F acts at the midpoint of the beam. The beam is also subjected to a constant load due to the weight of the steel beam.

A failure occurs as the stress of the beam exceeds the ultimate stress of the material, and the limit-state function is given by

where \(\sigma _{u}\) is the ultimate strength, \(\rho _{st}\) is the density, and L is the length of the beam.

The variables and parameters in (61) are provided in Table 1.

The covariance function of F(t) is given by

where

in which \(\zeta = 1\) year is the correlation length. The auto-correlation becomes weaker with a longer time interval \(t_{2}-t_{1}\), and \(\sigma ^F(t_{1})=\sigma ^F(t_{2})=700\;N\) is the standard deviation of \(F(t)\) at time instants \(t_{1}\) and \(t_{2}\).

Since F(t) is a Gaussian stationary stochastic process, we have

in which \(\rho _{U_{F} } (t_{1} ,\;t_{2} )\) is the auto-correlation function of the underlying Gaussian standard stochastic process \(U_{F}(t)\).

4.2.2 Results

Following the numerical procedure of JUR/FORM in Fig. 1, we computed the time-dependent probabilities of failure over different time intervals up to [0, 30] years. The time intervals were discretized into 80 small intervals, and the time step size for the second MPP search was taken as 0.001 years. To eliminate the accuracy difference caused by different numerical integration methods, for the traditional method, we used the same integration method as the proposed method; namely, we discretized the time interval into 80 small intervals and then used the rectangle integration method to calculate the integral in (6). For MCS, the evaluated time intervals were discretized into 600 time instants with a sample size of \(2 \times 10^{6}\) at each time instant to generate the stochastic loading F(t). The results of the three methods are plotted in Fig. 3 and are given in Table 2. The relative errors, ε, with respect to the MCS solutions, and the confidence intervals (CI) of the MCS solutions, are also given in Table 2.

The results indicate that the proposed JUR/FORM method is much more accurate than the traditional method. The traditional method leads to unacceptable errors while JUR/FORM shows excellent agreement with the MCS solution.

In Table 3, we give the number of function calls, \(N_{func}\), as a measure of efficiency. The number of function calls is defined as the number of times that the limit-state function is evaluated with the inputs \(\mathbf{x}\), \(\mathbf{y}(t_{i})\), and \(t_{i}\). The actual computational cost (time) is also given. The computational times were measured on a Dell computer with an Intel(R) Core(TM) i5-2400 CPU and 8 GB of system memory.

With the same integration method, the results show that the accuracy improvement from JUR/FORM indeed comes from the consideration of the dependencies between upcrossing events. Table 3 also indicates that the numbers of function calls by both methods are almost the same. This is because of the use of the same integration method.

The traditional method, however, may need fewer function calls because other integration methods could be used. We also applied the recursive adaptive Lobatto quadrature method to the traditional method. The probabilities of failure obtained are identical to those given in Table 2, but with fewer function calls and less computational time, as shown in Table 4. This means that the traditional method is more efficient than the proposed method for this example.

The results given in Tables 1, 2, 3 and 4 demonstrate that JUR/FORM produces much higher accuracy at the cost of increased computational effort, but the increase in computational cost is moderate.

4.2.3 Numerical studies

(a) Effect of discretization and time step size

As shown in the numerical procedure, the time interval [\(t_{0}\), \(t_{s}\)] is discretized into p + 1 time instants \(t_{i} ( {i=0,1,2,\cdots ,p} )\) or p small intervals. The number of discretization points may affect the accuracy of the analysis result. If the number is too small, the error will be large. On the contrary, if the number is too large, the error will be small but the efficiency will be low. To study the effect of the number of discretization points, we discretized the time interval [0, 30] years into 20, 30, 40, 50, 60, 70 and 80 small intervals.

Table 5 shows the results from JUR/FORM with different numbers of discretization points. When the time interval is divided into 20 small intervals, as expected, the error is the largest; however, the result is still more accurate than the traditional method. With the higher number of discretization points, the accuracy of JUR/FORM is higher.

In addition to the number of discretization points, there is another parameter that may affect the performance of JUR/FORM. This parameter is the time-step size \(\Delta t\), which is used for numerically evaluating the derivatives \(\dot {\alpha } (t_{i} )\) and \(\dot {\beta } (t_{i} )\) in (40) and (41), respectively. We used \(\Delta t\) = 0.0005, 0.001, 0.005 and 0.01 to study its effect. Table 6 provides the results, which show that the time-step size does affect the accuracy, but the effect is not significant. General discussions regarding the effect of the step size on numerical derivatives can also be found in Madsen and Krenk (1984), Hu and Du (2012), and Andrieu-Renaud et al. (2004).

(b) Effect of larger probability of failure

To investigate the accuracy of JUR/FORM when the probability of failure becomes larger, we compared the results of MCS, JUR/FORM and the traditional method for six cases at different probability levels. Table 7 shows that the larger the probability of failure, the less accurate the traditional method becomes, while JUR/FORM is always much more accurate than the traditional method.

(c) Effect of the auto-covariance of the limit-state function

JUR/FORM is developed to better account for dependent failures over a time period. To demonstrate this, we analyzed the accuracy of JUR/FORM for five cases with different levels of dependency. In the five cases, the coefficient of auto-correlation ρ between two successive time instants \(t_{i}\) and \(t_{i+1}\), i = 1, 2, ..., 99, over [0, 30] years ranges from 0.108 to 0.961. Note that the coefficient of auto-correlation of the limit-state function is almost constant given the auto-correlation function of the stochastic process for the external force in (62).

Table 8 shows that the error of the traditional method decreases as the dependency becomes weaker, while JUR/FORM is always more accurate than the traditional method.

4.3 Example 2: Two-slider crank mechanism

A two-slider crank mechanism is shown in Fig. 4. This type of mechanism is widely used in engines. The crank is rotating at an angular velocity of ω. The motion error is defined as the difference between the desired displacement difference and the actual displacement difference between sliders A and B. The error should not exceed 0.94 mm over one motion cycle.

The limit-state function is given by

in which

The variables and parameters in the limit-state function are given in Table 9.

This mechanism problem differs from the beam problem in the following two aspects. First, this problem does not involve any input stochastic processes, but the limit-state function is still a stochastic process because it is a function of time. Second, the dependence of the limit-state function at any two time instants is strong, and the auto-dependence does not decay over a longer time period. In the first problem, by contrast, the auto-dependence between the performance values at \(t_{1}\) and \(t_{2}\) becomes weaker as \(t_{2} - t_{1}\) becomes larger, as indicated in (62).

The angular velocity of the crank is ω = π rad/s, and the time period of one motion cycle is then [0, 2] seconds. Following the numerical procedure of JUR/FORM, we computed the probabilities of failure over different time intervals. Each of the evaluated time intervals was discretized into 60 smaller intervals. The step size for the second MPP search was \(8\times 10^{-5}\) seconds. The traditional method and MCS with a sample size of \(10^{6}\) were also applied. To eliminate the accuracy difference caused by different numerical integration methods, we used the same integration method for both the traditional method and the proposed method: the time interval was discretized into 60 small intervals, and the rectangle integration method was used to calculate the integral in (6). The results from the three methods are plotted in Fig. 5 and are given in Table 10.
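The rectangle integration used for the traditional method can be sketched in Python as follows. The sketch assumes that (6) takes the usual Poisson form, \(p_{f} (t_{0} ,t_{s} )=1-[1-p_{f} (t_{0} )]\exp \{-\int _{t_{0} }^{t_{s} } v^{+}(t)\,dt\}\), and that a left-rectangle rule is meant; the function name, the argument `pf0` for the probability of failure at the initial time, and the periodic upcrossing rate are hypothetical and serve only as an illustration.

```python
import math


def poisson_failure_probability(rate, t0, ts, p=60, pf0=0.0):
    """Left-rectangle rule for the integral of the upcrossing rate,
    followed by the Poisson-assumption formula."""
    h = (ts - t0) / p
    integral = h * sum(rate(t0 + i * h) for i in range(p))
    return 1.0 - (1.0 - pf0) * math.exp(-integral)


# Hypothetical upcrossing rate over one motion cycle [0, 2] s (not from Table 10)
def rate(t):
    return 0.01 * (1.0 + math.sin(math.pi * t))


for end in (0.5, 1.0, 1.5, 2.0):
    print(end, poisson_failure_probability(rate, 0.0, end))
```

Using the same rule and the same 60 intervals for both methods keeps the quadrature error comparable, so that remaining differences can be attributed to how dependent upcrossings are treated.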

The results indicate that JUR/FORM is significantly more accurate than the traditional method. Because the same integration method was used for both methods, Table 10 indicates that the accuracy improvement is indeed due to the consideration of dependent upcrossings by JUR/FORM.

The numbers of function calls and MPP searches are given in Table 11, which shows that the proposed method is almost as efficient as the traditional method.

As in Example 1, we also solved (6) using the recursive adaptive Lobatto quadrature method. The probabilities of failure obtained are almost identical to those in Table 10. In contrast to Example 1, however, the efficiency of the traditional method varies for different time periods, as shown in Table 12.

The results show that the increased computational cost of JUR/FORM is reasonable given its significantly improved accuracy.

5 Conclusion

Time-dependent reliability analysis is needed in many engineering applications. When multiple dependent upcrossings occur over a time interval, the single upcrossing rate method with the Poisson assumption may produce large errors in estimating the time-dependent probability of failure.

This work demonstrates that the joint upcrossing rates proposed in Madsen and Krenk (1984) can be extended to a general time-dependent limit-state function with much higher accuracy. This work integrates the FORM with the joint upcrossing rates so that high computational efficiency can be maintained. Analytical expressions of the single and joint upcrossing rates are also derived based on FORM.

The proposed method has shown good accuracy when the probability of failure is small and the dependency between failures is strong. When the probability of failure becomes larger or the dependency becomes weaker, the proposed method remains more accurate than the traditional upcrossing rate method. Since the proposed method relies on numerical methods to solve the integral equation and to evaluate the derivatives, its accuracy may be affected by the number of discretization points and the time step size between two consecutive MPP searches.

The proposed method can be used for general stochastic processes, including non-Gaussian non-stationary processes. To do this, we first need to transform a general stochastic process into a standard Gaussian process. The transformation should ensure that both the CDFs and the auto-covariance functions are equal before and after the transformation.

Possible future work includes improving the efficiency and robustness of the method and applying it to time-dependent reliability-based design optimization.

References

Andrieu-Renaud C, Sudret B, Lemaire M (2004) The PHI2 method: a way to compute time-variant reliability. Reliab Eng Syst Saf 84(1):75–86

Banerjee B, Smith BG (2011) Reliability analysis for inserts in sandwich composites. Adv Mater Res 275:234–238

Bernard MC, Shipley JW (1972) The first passage problem for stationary random structural vibration. J Sound Vib 24(1):121–132

Burchitz IA, Meinders T (2008) Adaptive through-thickness integration for accurate springback prediction. Int J Numer Methods Eng 75(5):533–554

Chen JB, Li J (2007) The extreme value distribution and dynamic reliability analysis of nonlinear structures with uncertain parameters. Struct Saf 29(2):77–93

Chen JB, Li J (2008) The inherent correlation of the structural response and reliability evaluation. Jisuan Lixue Xuebao/Chinese J Comput Mech 25(4):521–528

Choi SK, Grandhi RV, Canfield RA (2007) Reliability-based structural design. Springer, New York. (ISBN-10: 1846284449; ISBN-13: 978-1846284441)

Cramer H (1966) On the intersections between the trajectories of a normal stationary stochastic process and a high level. Ark Mat 6:337–349

Dickmeis W, Nessel RJ, van Wickeren E (1984) A general approach to counterexamples in numerical analysis. Numer Math 43(2):249–263

Diethelm K (1994) Modified compound quadrature rules for strongly singular integrals. Comput 52(4):337–354

Ditlevsen O (2002) Stochastic model for joint wave and wind loads on offshore structures. Struct Saf 24(2–4):139–163

Du X, Sudjianto A, Huang B (2005) Reliability-based design with the mixture of random and interval variables. J Mech Des Trans ASME 127(6):1068–1076

Dubowsky S, Norris M, Aloni E, Tamir A (1984) Analytical and experimental study of the prediction of impacts in planar mechanical systems with clearances. J Mech Trans Autom Des 106(4):444–451

Dupac M, Beale DG (2010) Dynamic analysis of a flexible linkage mechanism with cracks and clearance. Mech Mach Theory 45(12):1909–1923

Engelund S, Rackwitz R, Lange C (1995) Approximations of first-passage times for differentiable processes based on higher-order threshold crossings. Probabilistic Eng Mech 10(1):53–60

Gupta AK, Móri TF, Székely GJ (2000) How to transform correlated random variables into uncorrelated ones. Appl Math Lett 13(6):31–33

Hagen O (1992) Conditional and joint failure surface crossing of stochastic processes. J Eng Mech 118(9):1814–1839

Hu Z, Du X (2012) Reliability analysis for hydrokinetic turbine blades. Renew Energy 48:251–262

Hu Z, Du X (2013) A sampling approach to extreme value distribution for time-dependent reliability analysis. doi:10.1115/1.4023925

Huang B, Du X (2008) Probabilistic uncertainty analysis by mean-value first order Saddlepoint approximation. Reliab Eng Syst Saf 93(2):325–336

Kim DW, Jung SS, Sung YH, Kim DH (2011) Optimization of SMES windings utilizing the first-order reliability method. Trans Korean Inst Electr Eng 60(7):1354–1359

Kuschel N, Rackwitz R (2000) Optimal design under time-variant reliability constraints. Struct Saf 22(2):113–127

Li CW, Melchers RE (1994) Structural systems reliability under stochastic loads. Proc Inst Civ Eng Struct Build 104(3):251–256

Li J, Chen JB, Fan WL (2007) The equivalent extreme-value event and evaluation of the structural system reliability. Struct Saf 29(2):112–131

Lin YK (1967) Probabilistic theory of structural dynamics. McGraw-Hill, New York

Lutes LD, Sarkani S (2004) Random vibrations: analysis of structural and mechanical systems. Elsevier, Burlington

Lutes LD, Sarkani S (2009) Reliability analysis of systems subject to first-passage failure

Madsen PH, Krenk S (1984) Integral equation method for the first-passage problem in random vibration. J Appl Mech Trans ASME 51(3):674–679

Madsen HO, Krenk S, Lind NC (1986) Methods of structural safety. Englewood Cliffs, New Jersey

Meng J, Li Z (2005) A general approach for accuracy analysis of parallel manipulators with joint clearance. In: IEEE/RSJ international conference on intelligent robots and systems, IROS 2005, Edmonton, AB, Canada, pp 790–795

Miller RA (1997) Thermal barrier coatings for aircraft engines: history and directions. J Therm Spray Technol 6(1):35–42

Millwater H, Feng Y (2011) Probabilistic sensitivity analysis with respect to bounds of truncated distributions. J Mech Des Trans ASME 133(6)

Navascués MA, Sebastián MV (2012) Numerical integration of affine fractal functions. J Comput Appl Math 252:169–176

Nielsen UD (2010) Calculation of mean outcrossing rates of non-Gaussian processes with stochastic input parameters—reliability of containers stowed on ships in severe sea. Probabilistic Eng Mech 25(2):206–217

Parkinson DB (1999) Second order stochastic simulation with specified correlation. Adv Eng Softw 30(7):489–494

Preumont A (1985) On the peak factor of stationary Gaussian processes. J Sound Vib 100(1):15–34

Rackwitz R (1998) Computational techniques in stationary and non-stationary load combination—a review and some extensions. J Struct Eng (Madras) 25(1):1–20

Rice SO (1944) Mathematical analysis of random noise. Bell Syst Tech J 23:282–332

Rice SO (1945) Mathematical analysis of random noise. Bell Syst Tech J 24:146–156

Rice JR, Beer FP (1965) First-occurrence time of high-level crossings in a continuous random process. J Acoust Soc Am 39(2):323–335

Richard VF, Mircea DG (2006) Reliability of dynamic systems under limited information. Probabilistic Eng Mech 24(1):16–26

Rui VMP (1985) The theory of statistics of extremes and El Nino phenomena-a stochastic approach. Massachusetts Institute of Technology, Boston

Schall G, Faber MH, Rackwitz R (1991) Ergodicity assumption for sea states in the reliability estimation of offshore structures. J Offshore Mech Arctic Eng 113(3):241–246

Singh A, Mourelatos ZP, Li J (2010) Design for lifecycle cost using time-dependent reliability. J Mech Des Trans ASME 132(9):0910081–09100811

Singh A, Mourelatos Z, Nikolaidis E (2011) Time-dependent reliability of random dynamic systems using time-series modeling and importance sampling. SAE Int J Mater Manuf 4(1):929–946

Song J, Der Kiureghian A (2006) Joint first-passage probability and reliability of systems under stochastic excitation. J Eng Mech 132(1):65–77

Streicher H, Rackwitz R (2004) Time-variant reliability-oriented structural optimization and a renewal model for life-cycle costing. Probabilistic Eng Mech 19(1):171–183

Sudret B (2008) Analytical derivation of the outcrossing rate in time-variant reliability problems. Struct Infrastruct Eng 4(5):353–362

Szkodny T (2001) The sensitivities of industrial robot manipulators to errors of motion models’ parameters. Mech Mach Theory 36(6):673–682

Tsai MJ, Lai TH (2008) Accuracy analysis of a multi-loop linkage with joint clearances. Mech Mach Theory 43(9):1141–1157

Ujević N (2008) An application of the Montgomery identity to quadrature rules. Rendiconti del Seminario Matematico 66(2):137–143

Vanmarcke EH (1975) On the distribution of the first-passage time for normal stationary random processes. J Appl Mech Trans ASME 42(1):215–220

Wang Z, Wang P (2012) A nested extreme response surface approach for time-dependent reliability-based design optimization. J Mech Des Trans ASME 134(12):12100701–12100714

Yang JN, Shinozuka M (1971) On the first excursion probability in stationary narrow-band random vibration. J Appl Mech Trans ASME 38(4):1017–1022

Yang JN, Shinozuka M (1972) On the first-excursion probability in stationary narrow-band random vibration. J Appl Mech Trans ASME 39 Ser E(3):733–738

Zhang J, Du X (2010) A second-order reliability method with first-order efficiency. J Mech Des Trans ASME 132(10):101006-1–101006-8

Zhang J, Du X (2011) Time-dependent reliability analysis for function generator mechanisms. J Mech Des Trans ASME 133(3)

Acknowledgments

The authors gratefully acknowledge the support from the Office of Naval Research through contract ONR N000141010923 (Program Manager – Dr. Michele Anderson), the National Science Foundation through grant CMMI 1234855, and the Intelligent Systems Center at the Missouri University of Science and Technology.

Cite this article

Hu, Z., Du, X. Time-dependent reliability analysis with joint upcrossing rates. Struct Multidisc Optim 48, 893–907 (2013). https://doi.org/10.1007/s00158-013-0937-2

DOI: https://doi.org/10.1007/s00158-013-0937-2