Abstract

Modal transducers can be designed by optimizing the polarity of the electrode that covers the piezoelectric layers bonded to the host structure. This paper continues our previous work (Donoso and Bellido J Appl Mech 57:434–441, 2009a) and aims to improve the performance of such piezoelectric devices by simultaneously optimizing the structure layout and the electrode profile. As the host structure is no longer fixed, the typical drawbacks of eigenproblem optimization, such as spurious modes, mode tracking and switching, or repeated eigenvalues, soon appear. Furthermore, our model has the novel feature that both cost and constraints depend explicitly on the mode shapes. Moreover, due to the physics of the problem, the appearance of large gray areas is another pitfall to be solved. Our proposed approach successfully overcomes all these difficulties and yields nearly 0-1 designs that improve on the existing optimal electrode profiles over a homogeneous plate.

1 Introduction

The concept of piezoelectric modal transducers, i.e., transducers that isolate a particular mode of a structure from the rest, dates back to Lee and Moon (1990). In that remarkable work, the authors first derived theoretical expressions for one-dimensional modal transducers and then tested the manufactured devices experimentally. What makes the former possible is precisely the orthogonality of the mode shapes of beam-type structures. Since the eigenmodes are mutually orthogonal with respect to the unit weight function, modal transducers are obtained by tailoring the surface electrode into areas of positive, negative or null polarity according to the curvature of the mode of interest. They also proved that, thanks to the reciprocity of the piezoelectric effect, the electrode patterns of a modal sensor/actuator pair that measures/excites the same mode shape coincide.

Unfortunately, this approach cannot be extended to the 2D case, mainly because the aforementioned orthogonality principle does not hold for plate-type structures with arbitrary boundary conditions. Moreover, even in the cases where it does hold, a polarity distribution with intermediate values is required, which can be very difficult to achieve in practice, as pointed out in Clark and Burke (1996). Many authors have studied the underlying problem in detail, and only some of them are mentioned here. In Kim et al. (2001), although the results obtained with genetic algorithms on coarse meshes are satisfactory, the implementation requires extra interface circuits. Sun et al. (2002) proposed structures composed of many small piezoelectric patches of different, uniform thicknesses. Preumont et al. (2003) introduced a new porous distributed electrode concept. In Jian and Friswell (2007), the shape of a sensor is optimized. More recently, electrode-shaping techniques have been used in Pulskamp et al. (2012) to detect modes, but not to cancel others. Zhang et al. (2014) also address the reduction of sound radiation from shells under harmonic excitation.
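The orthogonality argument of Lee and Moon, and the need for intermediate polarity values, can be made concrete with a one-dimensional sketch. It assumes a simply supported beam of unit length (a hypothetical example, not one of the plate models of this paper) with modes sin(jπx), and compares an electrode weight proportional to the curvature of the target mode, which takes intermediate values, with a binary ±1 polarity that only follows its sign.

```python
import numpy as np

# Hypothetical 1D illustration (not the paper's plate model): a simply supported
# beam of unit length with mode shapes phi_j(x) = sin(j*pi*x), whose curvature is
# phi_j''(x) = -(j*pi)**2 * sin(j*pi*x).
N_PTS = 20000
x = (np.arange(N_PTS) + 0.5) / N_PTS  # midpoint-rule nodes on [0, 1]


def curvature(j):
    """Curvature of the j-th beam mode at the quadrature nodes."""
    return -(j * np.pi) ** 2 * np.sin(j * np.pi * x)


def sensor_coefficients(weight, n_modes):
    """F_j = integral over [0, 1] of weight(x) * phi_j''(x), midpoint rule."""
    return np.array([np.mean(weight * curvature(j)) for j in range(1, n_modes + 1)])


k = 2  # mode to isolate
continuous = np.sin(k * np.pi * x)       # electrode weight with intermediate values
binary = np.sign(np.sin(k * np.pi * x))  # polarity restricted to -1 / +1

F_cont = sensor_coefficients(continuous, 6)
F_bin = sensor_coefficients(binary, 6)
```

With the continuous weight, only F_2 is non-zero (F_2 = −2π²). The binary pattern also responds to j = 6, with F_6 = −24π against F_2 = −8π, because the square wave sign(sin 2πx) contains the harmonic sin 6πx. This is the 1D counterpart of why a pure ±1 electrode cannot isolate a mode exactly by orthogonality alone.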

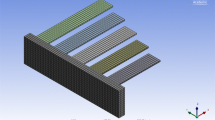

Following the ideas described in Jian and Friswell (2007) and using the topology optimization method (Bendsøe and Sigmund 2003), we highlight the technique described in Donoso and Bellido (2009a). In that work, a systematic procedure to design piezoelectric modal transducers for plates moving out-of-plane was developed. Modal transducers were found as solutions of an optimization problem in which the design variable (polarity) controlling the electrode profile takes only the values −1 and 1. Since the polarity never takes the value 0, the host structure is entirely covered by an electrode in the optimum, as can be seen in Fig. 1 (note that these are truly pure 0-1 designs). This approach was generalized to the in-plane case (Donoso and Bellido 2009b) and to shells (Donoso et al. 2010). Later, micro-transducers designed in this way were manufactured and tested in Sánchez-Rojas et al. (2010), showing quite good performance.

Fig. 1 Electrode profiles (a) and (c) that isolate the sixth mode, shown in (b) and (d), of a plate fixed at its left edge, considering only the first 20 out-of-plane modes and only the first 12 in-plane modes, respectively. Black and white areas denote regions of opposite polarity, which is why it does not matter whether the black areas are disconnected. Both simulations are taken from Donoso and Bellido (2009a) and Donoso and Bellido (2009b), respectively.

Motivated by many applications, particularly in microelectronics, it is interesting to investigate whether treating the layout of the host structure as a design variable, together with the electrode profile, could improve the performance of the designed transducers.

In the last decade, some authors have performed the simultaneous design of both host structure and piezoelectric layers using topology optimization. Kögl and Silva (2005) considered the optimization of the piezoelectric part together with the polarization. Carbonari et al. (2007) and Luo et al. (2010), among others, simultaneously optimized the host structure and the piezoelectric distribution. Other authors have gone further by including, as a third design variable, the spatial distribution of the control voltage, which is in some way connected with the polarity of the piezoelectric layers (Kang et al. 2011, 2012).

In contrast to the simultaneous-optimization works mentioned above, the problem studied in this paper assumes that the piezoelectric material is surface-bonded to the structure wherever structure is present. Hence there are essentially two design variables: the layout of the host structure and the electrode profile (or polarity). This approach was recently explored successfully in Ruiz et al. (2013) for designing in-plane piezoelectric sensors in the static case.

In this paper, we continue our previous investigation of modal filtering by simultaneously optimizing the structure layout and the electrode polarity. The main novelty here is that both the objective function and the constraints depend on the eigenmodes. As their shape and order change during the optimization process, because the host structure is no longer fixed, the classical difficulties of dealing with eigenfrequencies and eigenmodes appear: spurious modes, mode tracking and switching, and repeated eigenfrequencies.

On the other hand, only a few papers dealing with eigenmode optimization appear in the literature, and they are briefly discussed next. In Hansen (2005), a specific (single) mode shape of a fiber laser package is designed to minimize the elongation of the fiber under dynamic excitation. In Maeda et al. (2006), a multi-objective function is formulated to find optimal configurations that simultaneously satisfy (simple) eigenfrequency, eigenmode, and stiffness requirements at certain points of a vibrating structure. A similar problem is treated in Nakasone and Silva (2010), whose novelty is to include the electromechanical coupling coefficient in the objective function so that energy conversion is maximized for a specific mode. In Tsai and Cheng (2013), eigenmodes appear in the constraints only; one of the objectives of that work is to determine the material distribution of a structure that maximizes the fundamental frequency while synthesizing the first two modes. The literature on eigenfrequency optimization, by contrast, is much more extensive; the reader is referred to Ma et al. (1994), Díaz and Kikuchi (1992), Jensen and Pedersen (2006) and Du and Olhoff (2007), among many others.

With all this in mind, we reconsider the problem of designing piezoelectric modal transducers from a new perspective: find the design (both structure and electrode) that maximizes the response of a specific mode shape and suppresses as much as possible the response of the other selected mode shapes in a collection of J modes. Note that we now focus on following a prescribed mode shape rather than a mode number. Although the proposed method is not restricted to a particular transducer size, all designs have been obtained at the micro-scale, given the wide range of MEMS applications such as micro-grippers, surface probes or micro-optical devices.

The paper is organized as follows. Section 2 describes and formulates the problem. Section 3 presents the discrete formulation. Section 4 is devoted to the sensitivity analysis, distinguishing between simple and double eigenvalues. Section 5 explains our numerical approach in pseudocode. Several numerical examples with different boundary conditions are included in Section 6. Finally, some conclusions are discussed in the last section.

2 Continuous formulation of the problem

We consider as our design domain an \(L_x \times L_y\) rectangular plate with arbitrary boundary conditions (see Fig. 2). Two piezoelectric layers, whose stiffness and mass are negligible compared with those of the plate, are bonded to its top and bottom surfaces.

As explained in the introduction, given a set of J mode shapes, the design problem consists of simultaneously determining the structure layout and the electrode profile so that a specific eigenmode is isolated from the rest. Therefore, two design variables, \(\chi_s\) and \(\chi_p\), are used. \(\chi_s\) is a characteristic function representing the structure layout, with \(\chi_s \in \{0,1\}\) meaning void and structure (and piezoelectric material as well), respectively. \(\chi_p\) is another characteristic function representing the polarity of the electrode, that is, \(\chi_p \in \{-1,1\}\) for negative or positive polarity, respectively. It will be more convenient to write the polarity as \(2\chi_p - 1\), now with \(\chi_p \in \{0,1\}\); this form will be used in the discrete problem.

Regarding the physics of the problem, and following Lee and Moon (1990), when the whole structure deforms, an electric signal is induced owing to the piezoelectric effect. Assuming that the piezoelectric constants are the same in both spatial directions x and y, and that the piezoelectric axes coincide with the geometric axes of the plate, the charge q collected by the sensor can be expressed, up to a scaling factor, by

where (u, v, w) is the displacement vector, \(h_s\) is the thickness of the plate and \(h_p\) is that of the piezoelectric layers.

The role of the electrode is crucial here, because only the area of the piezoelectric sensor covered by an electrode is electrically affected. This is the physical reason why null polarity (\(\chi_p = 0\)) is excluded from the outset: otherwise, the charge collected by the device would be lower. There is also a mathematical reason: Donoso and Bellido (2009a) show that, whenever the host structure is fixed, the piezoelectric electrode covers the whole structure in the optimum (with either positive or negative polarity, i.e. \(\chi_p = 1\) or \(\chi_p = -1\)).

By using modal expansion, the displacements u, v, w are written as

where \(\boldsymbol{\Phi}_j(x,y) = (\phi_j(x,y), \psi_j(x,y), \varphi_j(x,y))\) is the j-th mode shape and \(\eta_j(t)\) is the j-th modal coordinate. Inserting (2) into (1), we arrive at

that is, the response of the sensor can be rewritten so that the spatial terms are separated from the temporal ones, and therefore the coefficient

depends on the j-th mode shape only.
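Once the coefficients are known, the truncated sensor output is a weighted sum of the modal coordinates. The sketch below assumes this separated form, q(t) = Σ_j F_j η_j(t), with made-up frequencies and modal coordinates (hypothetical data), and shows that an ideal modal filter (F_j = 0 for j ≠ k) returns exactly η_k(t).

```python
import numpy as np


def sensor_charge(F, eta):
    """Truncated modal response q(t) = sum_j F_j * eta_j(t).

    F   : shape (J,), spatial coefficients of the J retained modes
    eta : shape (J, T), modal coordinates sampled at T time instants
    """
    return np.asarray(F) @ np.asarray(eta)


# Hypothetical data: three modes with arbitrary harmonic modal coordinates.
t = np.linspace(0.0, 1.0, 50)
omega = np.array([3.0, 7.0, 11.0])
eta = np.sin(np.outer(omega, t))

F_ideal = np.array([0.0, 1.0, 0.0])  # ideal filter for the second mode
q = sensor_charge(F_ideal, eta)      # equals eta[1]: only mode 2 is sensed
```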

These coefficients depend explicitly on \(\chi_p\) and implicitly on \(\chi_s\), through the eigenmode equation, which we denote hereafter by

Since we work with a plate-type model without curvature, in-plane modes are decoupled from out-of-plane modes. Hence eq. (4) will not be used as such, and shorter expressions will be used instead. When only in-plane modes are considered (both piezoelectric layers polarized in phase), \(\boldsymbol{\Phi}_j = (\phi_j, \psi_j, 0)\) and

Conversely, when only out-of-plane modes are considered (both piezoelectric layers polarized out of phase), \(\boldsymbol{\Phi}_j = (0, 0, \varphi_j)\) and

where the constant multiplicative factor \(-(h_s + h_p)/2\) has been removed.

Under our assumptions, the piezoelectric properties are not needed in the problem formulation; they appear only as a scaling factor, which we omit for simplicity.

In our model we are interested in mode shapes rather than in their position in the spectrum, so we do not number modes according to the ordering of their frequencies. As is well known, such a numbering may be impractical in this kind of problem because of mode switching (Ma et al. 1994). Instead, for a given structure \(\chi_s\), we select among its modes the one closest to a prescribed mode shape of the homogeneous square plate. The notion of closest is made precise in the next section, where the discrete problem is treated.

Once the physics of the problem is clear, we can state the optimization problem. We start by selecting J mode shapes and, for simplicity, number these eigenmodes and their corresponding eigenfrequencies increasingly from 1 to J, dropping the rest in (2) (i.e., we truncate the sum (2) at j = J). The design problem then aims to find a modal sensor that filters the k-th mode (i.e. maximizes the coefficient \(F_k\)) among the set of J modes. This is formulated mathematically as:

subject to

In principle, the parameter α is a small tolerance of unknown value. Since a poor choice of α could leave the problem without solutions, we reformulate the optimization problem using a bound formulation. This amounts to treating α as an extra non-negative variable rather than an input parameter. We then maximize \(F_k - \alpha\), so that the response to the k-th mode is maximized while α, and with it the response of the remaining modes, is decreased. Taking this into account, and dropping the design variables in the coefficients for clarity, the new (bound) formulation is:

subject to

As we will see below, the variable α takes a very small value in all examples. This variable is introduced to suppress the amplitudes of the remaining modes as much as possible. If it could take larger values, it would be advisable to normalize it like the other design variables.
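Evaluating the bound formulation for given coefficients is straightforward. The sketch below is our own illustration, not the paper's code, and since the constraint equations are not reproduced in this excerpt it assumes they take the form −α ≤ F_j ≤ α for every j ≠ k, split into two smooth inequalities of the kind gradient-based optimizers such as MMA expect.

```python
import numpy as np


def bound_formulation(F, k, alpha):
    """Objective and constraint values of the bound formulation (sketch).

    Assumption: the constraints are -alpha <= F_j <= alpha for every j != k,
    written as two smooth inequalities g <= 0.
    F     : coefficients F_1..F_J (0-based array)
    k     : 0-based index of the mode to isolate
    alpha : non-negative bound variable
    """
    F = np.asarray(F, dtype=float)
    others = np.delete(F, k)
    objective = F[k] - alpha                               # to be maximized
    g = np.concatenate([others - alpha, -others - alpha])  # feasible if all <= 0
    return objective, g


obj, g = bound_formulation([0.01, 2.0, -0.02], k=1, alpha=0.05)
```

Treating α as a variable means the optimizer can shrink it only as far as the largest |F_j| (j ≠ k) allows, which is what drives the unwanted responses down.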

Note also the absence of a volume constraint. This constraint is not mathematically necessary for the problem to be non-trivial, and it does not add anything meaningful to the model from a physical point of view.

3 Discrete formulation of the problem

At this point we proceed in the usual way. First, we replace the design variables \(\chi_s\), \(\chi_p\), taking values in {0,1}, by their continuous versions \(\rho_s\), \(\rho_p\), taking values in [0,1]. The design domain is then discretized into \(N_e\) finite elements, each carrying two density design variables: \({\rho _{s}^{e}}\), the structural density, and \({\rho _{p}^{e}}\), the polarity density. Once the design variables have been relaxed, we include a penalization in the model following the SIMP methodology (Bendsøe and Sigmund 1999). However, the way \({\rho _{s}^{e}}\) enters the stiffness term is a delicate issue in eigenproblem optimization. A straightforward use of the SIMP method to penalize it often leads to so-called localized or spurious modes. The reason is that the stiffness term depends on a power of the element density, \(({\rho _{s}^{e}})^{p}\) (typically p = 3), whereas the mass term depends only linearly on \({\rho _{s}^{e}}\). As \({\rho _{s}^{e}}\) tends to zero, the stiffness-to-mass ratio tends to zero as well, so very low eigenfrequencies may appear in low-density regions and spoil the frequency analysis. To eliminate these artificial modes, tailored interpolation functions for both the stiffness and mass terms are required.
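A few numbers make the spurious-mode mechanism concrete. Assuming unit material constants and no \(E_{min}\) term (both simplifications for illustration only), the local stiffness-to-mass ratio under SIMP stiffness and linear mass is \(\rho^p/\rho = \rho^{p-1}\):

```python
import numpy as np


def simp_stiffness_to_mass(rho, p=3.0):
    """Local stiffness-to-mass ratio with SIMP stiffness rho**p and linear
    mass rho (E_s = m_s = 1, no E_min): equals rho**(p - 1)."""
    rho = np.asarray(rho, dtype=float)
    return rho**p / rho


densities = np.array([1.0, 0.1, 0.01, 0.001])
ratio = simp_stiffness_to_mass(densities)
# A local frequency scales like sqrt(ratio), i.e. like rho**((p - 1) / 2),
# so nearly void regions can host artificially low ("spurious") modes.
local_freq_scale = np.sqrt(ratio)
```

With p = 3, an element at density 0.01 has a local frequency scale one hundred times lower than a solid one, which is why such regions can pollute the low end of the spectrum.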

The first remedy for this problem was proposed in Pedersen (2000). It consists, first, in linearizing the stiffness for low densities (below a threshold value) and, second, in disregarding the nodes surrounded by such elements when computing the eigenvectors. Other options are the approach of Tcherniak (2002), which sets the mass term to 0 in these low-density regions, and the RAMP interpolation (Stolpe and Svanberg 2001), which has a non-zero gradient at zero density. The latter is the approach used here to alleviate spurious modes. Accordingly, the term \(E_s\chi_s\), wherever it appears in (5), is replaced by the interpolation function

where \(E_{min}\) is a very small Young’s modulus assigned to void regions to prevent the stiffness matrix from becoming singular, \(E_s\) is the Young’s modulus of the host structure, and q is a penalization factor. Recall that \(E_s\chi_s\) is the stiffness coefficient of the plate in the eigenproblem (5).

On the other hand, the mass density term \(m_s\chi_s\) in (5) is replaced by the linear interpolation function

where \(m_s\) is the material density.
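The two interpolations can be sketched as follows. Because the interpolation function itself is not reproduced in this excerpt, the code assumes the standard RAMP form \(E(\rho) = E_{min} + (E_s - E_{min})\,\rho/(1 + q(1-\rho))\); the values \(E_s = m_s = 1\), \(E_{min} = 10^{-9}\) and q = 8 are hypothetical. Its derivative, \((E_s - E_{min})(1+q)/(1+q(1-\rho))^2\), is non-zero at ρ = 0, and with linear mass the stiffness-to-mass ratio never drops below \((E_s - E_{min})/((1+q)\,m_s)\), so nearly void elements cannot produce the very low frequencies seen with SIMP.

```python
import numpy as np

# Hypothetical parameter values, for illustration only.
E_S, E_MIN, Q_RAMP, M_S = 1.0, 1e-9, 8.0, 1.0


def ramp_stiffness(rho, E_s=E_S, E_min=E_MIN, q=Q_RAMP):
    """Assumed standard RAMP interpolation of the Young's modulus."""
    rho = np.asarray(rho, dtype=float)
    return E_min + (E_s - E_min) * rho / (1.0 + q * (1.0 - rho))


def ramp_stiffness_derivative(rho, E_s=E_S, E_min=E_MIN, q=Q_RAMP):
    """d/drho of ramp_stiffness; equals (E_s - E_min) / (1 + q) at rho = 0."""
    rho = np.asarray(rho, dtype=float)
    return (E_s - E_min) * (1.0 + q) / (1.0 + q * (1.0 - rho)) ** 2


def linear_mass(rho, m_s=M_S):
    """Linear interpolation of the mass density."""
    return m_s * np.asarray(rho, dtype=float)


rho = np.array([1e-3, 1e-2, 0.1, 0.5, 1.0])
ratio = ramp_stiffness(rho) / linear_mass(rho)  # bounded below by (E_s - E_min) / (1 + q)
```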

No penalization is needed for the polarity density; the polarization term \((2\chi_p - 1)\) is simply replaced by

This is because, in our previous work (Ruiz et al. 2013), we checked that penalizing this design variable has no significant effect on the final solutions. It is also worth mentioning the self-penalization effect of piezoelectric materials pointed out in Wein et al. (2011). Furthermore, as mentioned above, there is a mathematical fact behind this: for any given structure \(\chi_s\), the optimal electrode profile is a classical one, \(\chi_p \in \{0,1\}\) (Donoso and Bellido 2009a).

Another drawback of this problem is the appearance of large gray areas, which is due precisely to the physics of the problem. For this reason, a mesh-independent filter combined with a projection method does not help here (Guest et al. 2004; Sigmund 2007). What actually happens is that strains in these low-density areas are larger than in the rest of the design; they increase the cost and are therefore favored during the optimization process. In our previous work (Ruiz et al. 2013) we found a heuristic way to overcome this problem: premultiplying the coefficients by an interpolation function \(R({\rho _{s}^{e}})\) that progressively penalizes the occurrence of gray areas. Its mathematical expression was

where η and ζ are tuning parameters.

We have observed that the lack of differentiability of R has had a negative effect on the numerical results of this work, causing a (minor) loss of symmetry in some of them. For this reason we have used a smoothed version of it,

where γ is an adjusting parameter. This expression is reminiscent of the erode operator introduced by Sigmund (2007) to control feature sizes in void regions. Using density filtering (Bruns and Tortorelli 2001; Bourdin 2001) together with the penalization function (16), which appears in the cost only as a premultiplying factor, solves the aforementioned symmetry problems.
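For reference, the density filter of Bruns and Tortorelli (2001) and Bourdin (2001) replaces each element density by a weighted average of its neighbours, with weights that decay linearly with distance. The sketch assumes a regular grid of unit square elements and a hypothetical filter radius `rmin`, and builds a dense filter matrix, which is only sensible for small meshes (a sparse matrix would be used in practice). Sensitivities with respect to the raw densities follow from the chain rule with the transpose of the same matrix.

```python
import numpy as np


def density_filter_matrix(nelx, nely, rmin):
    """Hat-weight density filter on an nelx-by-nely grid of unit square elements.

    Returns a dense matrix H (rows sum to one) such that rho_filtered = H @ rho.
    Element e = ix * nely + iy has its centroid at (ix + 0.5, iy + 0.5).
    """
    ix, iy = np.meshgrid(np.arange(nelx), np.arange(nely), indexing="ij")
    centers = np.column_stack([ix.ravel() + 0.5, iy.ravel() + 0.5])
    dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=2)
    weights = np.maximum(0.0, rmin - dist)  # linearly decaying, zero beyond rmin
    return weights / weights.sum(axis=1, keepdims=True)


def filter_sensitivity(H, dc_dfiltered):
    """Chain rule: dc/drho = H^T dc/drho_filtered."""
    return H.T @ dc_dfiltered


H = density_filter_matrix(6, 4, rmin=1.5)
```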

With all this in mind, the discrete expression for the j-th coefficient via FEM is

where

B is the usual FE strain-displacement matrix, whose expression depends on the case (in-plane or out-of-plane). In this study, we have used bilinear rectangular plane elements (8 degrees of freedom per element) for the in-plane case and rectangular Kirchhoff plate elements (12 degrees of freedom per element) for the out-of-plane case. In the former, the element matrix \(\mathbf{B}_e\) is 3 × 8; in the latter, it is 3 × 12. Finally, both global stiffness and mass matrices are obtained by assembling all element contributions, which are expressed as

where \(V_e\) denotes the volume of an element, E is the material property matrix, N is the shape function matrix, and m is the mass density. Again, the expressions of these global matrices depend on the case study; the reader is referred to a FEM textbook such as Cook et al. (1989) for details on how they are obtained.
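For the in-plane case, the 3 × 8 matrix \(\mathbf{B}_e\) of the bilinear rectangular element can be written down explicitly. The sketch assumes counter-clockwise node numbering from the lower-left corner and the DOF ordering (u1, v1, …, u4, v4). The helper `element_charge_vector` is hypothetical: it assumes the in-plane coefficient integrates \(\varepsilon_x + \varepsilon_y = \partial u/\partial x + \partial v/\partial y\) over the element (consistent with the equal piezoelectric constants in x and y assumed in Section 2), and evaluates that integral with 2 × 2 Gauss quadrature.

```python
import numpy as np


def bilinear_B(xi, eta, lx, ly):
    """3x8 strain-displacement matrix of a rectangular bilinear element of size
    lx by ly at natural coordinates (xi, eta) in [-1, 1]^2.

    Nodes are numbered counter-clockwise from the lower-left corner; the element
    DOF vector is (u1, v1, u2, v2, u3, v3, u4, v4); strains are
    (eps_x, eps_y, gamma_xy).
    """
    dN_dxi = 0.25 * np.array([-(1 - eta), (1 - eta), (1 + eta), -(1 + eta)])
    dN_deta = 0.25 * np.array([-(1 - xi), -(1 + xi), (1 + xi), (1 - xi)])
    dN_dx = dN_dxi * 2.0 / lx  # the Jacobian of a rectangle is diagonal
    dN_dy = dN_deta * 2.0 / ly
    B = np.zeros((3, 8))
    B[0, 0::2] = dN_dx  # eps_x = du/dx
    B[1, 1::2] = dN_dy  # eps_y = dv/dy
    B[2, 0::2] = dN_dy  # gamma_xy = du/dy + dv/dx
    B[2, 1::2] = dN_dx
    return B


def element_charge_vector(lx, ly):
    """Hypothetical element vector y_e such that y_e @ u_e equals the integral
    of (eps_x + eps_y) over the element, using 2x2 Gauss quadrature."""
    g = 1.0 / np.sqrt(3.0)
    detJ = lx * ly / 4.0
    y = np.zeros(8)
    for xi in (-g, g):
        for eta in (-g, g):
            B = bilinear_B(xi, eta, lx, ly)
            y += (B[0] + B[1]) * detJ  # unit Gauss weights
    return y
```

Rigid-body motions give zero strain with this B, and a uniform stretch u = x gives \(\varepsilon_x = 1\), which are quick sanity checks for any element implementation.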

\(\boldsymbol{\Phi}_j\) is the j-th global eigenvector obtained from the equations

and

where \(\mu_j\) is the j-th eigenvalue, namely the square of the j-th eigenfrequency, and K and M are the global stiffness and mass matrices, respectively. Both are symmetric and positive definite, so all eigenvalues \(\mu_j\) of our problem are real and positive.
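The eigenproblem itself can be prototyped with a dense solver. The paper uses subspace iteration (Bathe and Wilson 1976); the sketch below swaps in SciPy's `scipy.linalg.eigh`, which solves the symmetric-definite generalized problem and returns M-orthonormal eigenvectors, and keeps only the L lowest pairs via `subset_by_index`. The spring-mass chain is a hypothetical test case with known eigenvalues.

```python
import numpy as np
from scipy.linalg import eigh


def lowest_modes(K, M, L):
    """L lowest eigenpairs of K phi = mu M phi (K, M symmetric positive definite).

    Dense stand-in for subspace iteration: eigenvalues come back in ascending
    order and the eigenvectors are M-orthonormal (Phi.T @ M @ Phi = I).
    """
    mu, Phi = eigh(K, M, subset_by_index=[0, L - 1])
    return mu, Phi


# Example: fixed-fixed chain of 10 unit masses connected by unit springs
n = 10
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
M = np.eye(n)
mu, Phi = lowest_modes(K, M, 3)
# Analytical eigenvalues of this chain: 2 - 2 cos(j pi / (n + 1))
mu_exact = 2.0 - 2.0 * np.cos(np.arange(1, 4) * np.pi / (n + 1))
```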

We start by choosing J desired mode shapes of the homogeneous square plate, numbered increasingly from 1 to J, \(\overline {\boldsymbol {\Phi }}_{1},\dots ,\overline {\boldsymbol {\Phi }}_{J}\). Among these, we wish to isolate one, say the k-th, from the rest, as explained in the previous section. To make sure that we follow these mode shapes during the optimization process, we use the modal assurance criterion, MAC (Kim and Kim 2000; Tsai and Cheng 2013). MAC uses the following correlation criterion for vectors: the quantity

lies between 0 and 1 (by the Cauchy–Schwarz inequality), and MAC(Φ, Ψ) = 1 if and only if Φ and Ψ are parallel, i.e. Φ = ±Ψ for vectors of unit norm. Thus, given a design \(\rho_s\), once we have computed the modes of the structure \(\{ {\boldsymbol {\Phi }}_{l}\}_{l=1}^{L}\) (with L > J large enough), we select \(\boldsymbol{\Phi}_j\), j = 1, …, J, as the solution of the discrete optimization problem

The eigenvectors corresponding to the L lowest eigenvalues are computed with the subspace iteration method (Bathe and Wilson 1976) and, as usual, are orthonormalized with respect to the mass matrix. Unit normalization with respect to the mass matrix is problematic here, because the mass matrix depends on the design and the coefficients \(F_j\) depend linearly on the length of the eigenvectors. Consequently, comparing the cost and constraints from one iteration to the next, as the design, and hence the mass matrix, changes, may cause convergence problems in the numerical algorithm. We have fixed this issue by forcing the eigenvectors to have unit norm, simply dividing each one by its norm, so that all eigenvectors lie on the unit sphere. Applying this to the set of eigenvectors obtained by subspace iteration yields eigenvectors that are unitary with respect to the identity matrix but still orthogonal with respect to the mass matrix. From a mathematical viewpoint, the cost and constraints are then continuous and bounded, so existence of a solution to the discrete problem is guaranteed. Another advantage of this normalization is that, if the MAC is introduced as a constraint in the problem for mode tracking (Kim and Kim 2000), its sensitivity analysis is much simpler and shorter than with normalization with respect to the mass matrix (Tsai and Cheng 2013). With this normalization, MAC(Φ, Ψ) = \((\boldsymbol{\Phi}^T\boldsymbol{\Psi})^2\) for \(\boldsymbol{\Phi}^T\boldsymbol{\Phi} = \boldsymbol{\Psi}^T\boldsymbol{\Psi} = 1\), so its derivatives are simpler to compute.
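The MAC, and one way of using it to track the reference shapes, can be sketched as follows. The standard MAC definition is assumed, and because the selection problem is not reproduced in this excerpt, the assignment step is our own reading of it: each reference shape is matched to a distinct computed mode so that the total MAC is maximal, solved with `scipy.optimize.linear_sum_assignment`. Reference and computed vectors are assumed to live on the same mesh.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def mac(a, b):
    """Modal assurance criterion between two vectors, a value in [0, 1]."""
    return (a @ b) ** 2 / ((a @ a) * (b @ b))


def mac_matrix(Phi_ref, Phi):
    """MAC between every reference column (J of them) and every computed column (L)."""
    num = (Phi_ref.T @ Phi) ** 2
    den = np.outer(np.sum(Phi_ref**2, axis=0), np.sum(Phi**2, axis=0))
    return num / den


def track_modes(Phi_ref, Phi):
    """Index of the computed mode assigned to each reference shape, choosing
    distinct modes that maximize the total MAC (one possible selection rule)."""
    rows, cols = linear_sum_assignment(mac_matrix(Phi_ref, Phi), maximize=True)
    return cols[np.argsort(rows)]
```

Because the MAC ignores sign and scale, the matching is unaffected by the arbitrary sign of each computed eigenvector.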

The discrete problem written in the usual topology optimization format would be

subject to

Working with a set of eigenmodes that are orthogonal and unitary with respect to the mass matrix is also perfectly possible, and it can be carried out with exactly the same approach introduced here (taking this fact into account only in the sensitivity analysis). However, we have verified that our numerical algorithm works better with eigenmodes that are unitary with respect to the identity matrix. Moreover, our model is one way, though perhaps not the only one, of designing filters that isolate a single eigenmode from the others, and our approach achieves this goal, as can be checked in the examples shown in Section 6.
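The rescaling used here takes one line. The sketch below (our illustration) divides each M-orthonormal eigenvector by its Euclidean norm; afterwards \(\boldsymbol{\Phi}^T\mathbf{M}\boldsymbol{\Phi}\) is still diagonal, so M-orthogonality is kept, but its diagonal is no longer 1, and the MAC between two unit vectors reduces to \((\boldsymbol{\Phi}^T\boldsymbol{\Psi})^2\).

```python
import numpy as np


def to_unit_norm(Phi):
    """Rescale each eigenvector (column) to unit Euclidean norm.

    M-orthogonality is preserved, but Phi.T @ M @ Phi becomes a diagonal
    matrix that is no longer the identity.
    """
    return Phi / np.linalg.norm(Phi, axis=0)


def mac_unit(a, b):
    """MAC for vectors of unit Euclidean norm: (a^T b)^2."""
    return float(a @ b) ** 2
```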

4 Sensitivity analysis

In this section we compute the derivatives of the coefficients \(F_j\) with respect to the design variables, which are required by the numerical method used to solve the problem. For a given element e of the discretization, we have to compute the derivative of \(F_j\) with respect to \({\rho _{s}^{e}}\) and \({\rho _{p}^{e}}\). The derivative with respect to \({\rho _{p}^{e}}\) is trivial, since this variable appears only in the coefficients \(F_j\), not in the eigenproblem equations, and it does so linearly. We therefore focus on the derivative with respect to \({\rho _{s}^{e}}\). From now on, a prime denotes differentiation with respect to the material density, \((\;)' = \frac {\partial (\;)}{\partial {\rho _{s}^{e}}}\). We thus have to compute the derivative of the j-th coefficient,

which in turn requires the derivative of the associated eigenvector, \(\boldsymbol {\Phi }_{j}^{\prime }\). Recall that Y is not a constant vector; rather, it depends on \({\rho _{s}^{e}}\) and \({\rho _{p}^{e}}\). Indeed

It is well known that the multiplicity of the corresponding eigenvalue is crucial for computing eigenvector derivatives, so we distinguish two cases according to whether the eigenvalue is simple or multiple.

Recall that, according to the formulation of problem (25), we have J modes \(\boldsymbol{\Phi}_1, \dots, \boldsymbol{\Phi}_J\) that are M-orthogonal

and unitary with respect to the identity matrix

4.1 Simple eigenfrequencies

Whenever the eigenvalue \(\mu_j\) is simple, the derivative of its eigenvector, \(\boldsymbol {\Phi }_{j}^{\prime }\), with respect to an element material density is the solution of the problem

where

System (31) is obtained simply by differentiating (21) and (22). Equation (32) is found by multiplying (31) by \(\boldsymbol {\Phi }_{j}^{T}\) and using the top equation in (31). Equation (31) is a linear system of N unknowns and N+1 equations. The matrix \((\mathbf{K}-\mu_j\mathbf{M})\) is singular, since it has a redundant row that has to be replaced by the last line of (31) in order to compute \({\boldsymbol {\Phi }}_{j}^{\prime }\). The best choice for solving this system seems to be Nelson’s method (see Lee et al. 1996 and the references therein). The drawback of Nelson’s method is its cost: computing \({\boldsymbol {\Phi }}_{j}^{\prime }\) requires solving system (31), and this has to be done for each design variable \({\rho _{s}^{e}}\), that is, \(N_e\) times for a given eigenvector. Looking at our problem carefully, however, we do not need the eigenvector derivative \({\boldsymbol {\Phi }}_{j}^{\prime }\) itself, only the coefficient derivative \(F_{j}^{\prime }\). Tcherniak (2002) noticed this in a similar problem and found a cheaper way of computing \(F_{j}^{\prime }\) without computing the eigenvector derivative. We adopt his approach in the following calculation, which is somewhat more tedious in our case because the vector Y is not constant.
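A compact version of Nelson’s method for a simple eigenvalue is sketched below. It assumes the unit Euclidean normalization adopted in Section 3, so the extra equation is \(\boldsymbol{\Phi}_j^T\boldsymbol{\Phi}_j' = 0\), and it takes the derivative matrices \(\mathbf{K}'\) and \(\mathbf{M}'\) for a single design variable as inputs; repeating it for every element is exactly the cost that the adjoint approach avoids.

```python
import numpy as np


def nelson_eigvec_derivative(K, M, dK, dM, mu, phi):
    """Derivative of a simple eigenpair of K phi = mu M phi (Nelson's method),
    for phi normalized to unit Euclidean norm (phi @ phi = 1), so that the
    extra equation is phi^T phi' = 0.

    dK, dM are the derivatives of K and M with respect to one design variable.
    Returns (dmu, dphi).
    """
    # Eigenvalue derivative from phi^T (K' - mu' M - mu M') phi = 0
    dmu = phi @ (dK - mu * dM) @ phi / (phi @ M @ phi)
    # Right-hand side of (K - mu M) phi' = -(K' - mu' M - mu M') phi
    f = -(dK - dmu * M - mu * dM) @ phi
    # Particular solution v with its i-th entry pinned to zero, where i is the
    # largest-magnitude component of phi
    i = int(np.argmax(np.abs(phi)))
    G = K - mu * M
    G[i, :] = 0.0
    G[:, i] = 0.0
    G[i, i] = 1.0
    f[i] = 0.0
    v = np.linalg.solve(G, f)
    # Homogeneous part c * phi chosen so that phi^T phi' = 0
    c = -(phi @ v)
    return dmu, v + c * phi
```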

Using the adjoint method instead, we consider the augmented function

where \(\mathbf{P}_j\) and \(\lambda_j\) are the vector and scalar Lagrange multipliers associated with the j-th mode. Here, \(c = \mathbf{Y}^T\boldsymbol{\Phi}_j\).

Differentiating and rearranging terms, we arrive at

Now, choosing the pair (P j , λ j ), if possible, such that

we arrive at

Multiplying (35) by \(\boldsymbol{\Phi}_j^T\), we find \(2\lambda_j = F_j\), so the adjoint state \(\mathbf{P}_j\) satisfies the adjoint problem

We now have to justify that this last system admits a solution \(\mathbf{P}_j\), and therefore that there exists a pair \((\mathbf{P}_j, \lambda_j)\) satisfying (35).

Like (31), system (37) has N unknowns and N+1 equations; the last equation replaces one of the redundant equations in the first row of the system, which justifies the existence of a solution. We solve it with Nelson’s method, described here for the reader’s convenience. The advantage now is that the technique is applied only once for a given eigenvector, rather than \(N_e\) times, since the right-hand side of system (37) does not depend on the design variable.
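The adjoint computation can be sketched in the same spirit. Because equations (34) to (37) are not reproduced in this excerpt, the code assumes that the adjoint system is \((\mathbf{K}-\mu_j\mathbf{M})\mathbf{P}_j = \mathbf{Y} - F_j\boldsymbol{\Phi}_j\) together with \(\mathbf{P}_j^T\mathbf{M}\boldsymbol{\Phi}_j = 0\), the condition that removes the eigenvalue derivative from \(F_j'\) in our reading; with it, \(F_j' = \mathbf{Y}'^T\boldsymbol{\Phi}_j - \mathbf{P}_j^T(\mathbf{K}' - \mu_j\mathbf{M}')\boldsymbol{\Phi}_j\). \(\mathbf{P}_j\) is computed once per eigenvector with the Nelson-type solve detailed next, and the derivative for each element then only needs cheap products.

```python
import numpy as np


def adjoint_vector(K, M, mu, phi, Y):
    """Adjoint P for F = Y^T phi, with phi of unit Euclidean norm (assumed system):
        (K - mu M) P = Y - F phi   and   P^T M phi = 0.
    Solved as in Nelson's method: particular solution Q with a pinned entry,
    plus a multiple gamma * phi of the eigenvector."""
    F = Y @ phi
    f = Y - F * phi  # orthogonal to phi, so the singular system is consistent
    i = int(np.argmax(np.abs(phi)))
    G = K - mu * M
    G[i, :] = 0.0
    G[:, i] = 0.0
    G[i, i] = 1.0
    f[i] = 0.0
    Q = np.linalg.solve(G, f)
    gamma = -(phi @ M @ Q) / (phi @ M @ phi)
    return Q + gamma * phi


def coefficient_derivative(P, mu, phi, dK, dM, dY):
    """F' = Y'^T phi - P^T (K' - mu M') phi for one design variable."""
    return dY @ phi - P @ ((dK - mu * dM) @ phi)
```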

Nelson’s method proceeds as follows. The complete solution \(\mathbf{P}_j\) of (37) can be expressed in terms of a particular solution \(\mathbf{Q}_j\) and a homogeneous solution \(\gamma\boldsymbol{\Phi}_j\)

The particular solution is found by identifying the component of \(\boldsymbol{\Phi}_j\) with the largest absolute value (say the i-th) and constraining the i-th component of the particular solution to zero. That is, \(\mathbf{Q}_j\) is the solution of the linear system

where \(\mathbf{G}_j\) is the regular matrix obtained from \((\mathbf{K}-\mu_j\mathbf{M})\) by zeroing out the i-th row and column and setting the i-th diagonal element to 1, and \(\mathbf{f}_j\) is the column vector obtained from \(\mathbf{Y}-F_j\boldsymbol{\Phi}_j\) by zeroing out its i-th element. The unknown coefficient γ is obtained by substituting expression (38) into the last equation of (37), which gives

4.2 Repeated eigenfrequencies

Multiple eigenvalues may appear in different ways. The most obvious one is symmetry in the boundary conditions, as in a simply supported plate. However, a simple eigenvalue may also become multiple during the optimization process for no apparent reason. Another source of repeated eigenvalues is mode switching, when two eigenvalues cross in the spectrum diagram. In that case, the two eigenvalues can be considered identical for at least a few iterations, until they either separate into simple ones again or not.

In our situation, multiple eigenvalues occur in most of the examples, as we will see below, so we need to compute eigenvector derivatives in such cases. Nelson’s method applies only to simple eigenvalues, since it assumes that rank\((\mathbf{K}-\mu_j\mathbf{M}) = N-1\), which holds only when \(\mu_j\) is simple. This is not the only difficulty in computing derivatives of eigenvectors associated with multiple eigenvalues. The main one is that there are infinitely many M-orthogonal bases associated with a multiple eigenvalue, and the eigenvector derivatives can be computed for only one of them. The point is that, when a design variable is perturbed, the eigenvalue splits into m distinct eigenvalues (m being the eigenvalue multiplicity), as pointed out in Dailey (1989). For the eigenvector derivatives to exist, the eigenvector basis has to be adjacent to the m distinct eigenvectors that appear when the design variable is perturbed. For any other basis, the eigenvectors are discontinuous with respect to the design variable, and consequently non-differentiable.

As in the case of simple eigenvalues, computing eigenvector derivatives does not require computing eigenvalue derivatives first; indeed, the method we use obtains both at once. In contrast, for eigenfrequency optimization problems there are more direct methods that compute eigenvalue derivatives without computing eigenvector derivatives (see for instance Seyranian et al. 1994).

To compute eigenvector derivatives in this case, we find the method developed in Dailey (1989) the most convenient for our purposes, and we follow it. For simplicity, we assume an eigenvalue of multiplicity two. In fact, whenever multiple eigenfrequencies occur in our examples they are double; we denote this double eigenvalue by \(\mu_1 = \mu_2\), and by \(\boldsymbol{\Phi}_1, \boldsymbol{\Phi}_2\) an M-orthogonal, unitary basis of eigenvectors for \(\mu_1\). For convenience, we collect these two eigenvectors as the columns of the matrix \(\boldsymbol{\Phi} = (\boldsymbol{\Phi}_1, \boldsymbol{\Phi}_2)\).

The first step is to compute the new (adjacent) eigenvectors for which the derivatives can be calculated. They are found from the initial selection of eigenvectors Φ as

where the columns of Z are the new eigenvectors and Γ is an orthonormal matrix (\(\boldsymbol{\Gamma}^T\boldsymbol{\Gamma} = \mathbf{I}\)) to be computed. Note that Z does not depend on the initial choice of eigenvectors Φ. It is elementary to check that the columns of Z are M-orthogonal and unitary; that is, \(\mathbf{Z}^T\mathbf{M}\mathbf{Z}\) is a diagonal matrix but not the identity, since the same holds for \(\boldsymbol{\Phi}^T\mathbf{M}\boldsymbol{\Phi}\). Obviously, Z satisfies the eigenvalue equation

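where Λ is the eigenvalue matrix,

$$ \boldsymbol{\Lambda} = \begin{pmatrix} \mu_{1} & 0 \\ 0 & \mu_{2} \end{pmatrix} = \mu_{1}\mathbf{I}. \tag{43} $$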

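Differentiating (42) with respect to a design variable and rearranging terms, we have

$$ (\mathbf{K}-\mu_{1}\mathbf{M})\mathbf{Z}' = \mathbf{M}\mathbf{Z}\boldsymbol{\Lambda}' - (\mathbf{K}'-\mu_{1}\mathbf{M}')\mathbf{Z}. \tag{44} $$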

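Premultiplying this expression by \(\boldsymbol{\Phi}^{T}\), and noting that \(\boldsymbol{\Phi}^{T}(\mathbf{K}-\mu_{1}\mathbf{M}) = \mathbf{0}\) because K and M are symmetric, we arrive at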

that is, a small eigenproblem whose dimension equals the multiplicity of the repeated eigenvalue (two in our case), where

and Λ′ is the diagonal matrix of the eigenvalue derivatives, that is,

$$ \boldsymbol{\Lambda}' = \begin{pmatrix} \mu_{1}' & 0 \\ 0 & \mu_{2}' \end{pmatrix}. \tag{47} $$

Here, dividing by a matrix means multiplying by its inverse. Notice that, although we do not need the eigenvalue derivatives, they are computed at the same time as Γ. A word must now be said on eigenvalue derivatives for multiple eigenfrequencies. For a double eigenvalue, even though \(\mu_{1} = \mu_{2}\), so that there is really only one eigenvalue, we obtain two values for its derivative as a result of the double multiplicity. For the double eigenvalue \(\mu_{1}\), the subgradient, i.e. the set of slopes of the lines tangent to the graph of \(\mu_{1}\), is a closed interval (Clarke 1990), whose endpoints are precisely \(\mu_{1}^{\prime}\) and \(\mu_{2}^{\prime}\).
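The first step, which solves the small eigenproblem for Γ and the eigenvalue derivatives, can be sketched in Python/NumPy as follows. This is a minimal sketch under an assumption made only here: Φ is taken to be mass-normalized (\(\boldsymbol{\Phi}^{T}\mathbf{M}\boldsymbol{\Phi} = \mathbf{I}\)). In that case the small problem reduces to the symmetric eigenproblem \(\mathbf{D}\boldsymbol{\Gamma} = \boldsymbol{\Gamma}\boldsymbol{\Lambda}'\), where \(\mathbf{D} = \boldsymbol{\Phi}^{T}(\mathbf{K}'-\mu_{1}\mathbf{M}')\boldsymbol{\Phi}\) is notation introduced for this sketch.

With the unit-norm normalization described above, \(\boldsymbol{\Phi}^{T}\mathbf{M}\boldsymbol{\Phi}\) would have to be taken into account. The function names and toy data are hypothetical. The endpoints of the subgradient interval are simply the smallest and largest of the two computed derivatives.

```python
import numpy as np

def adjacent_basis(Phi, dK, dM, mu):
    """Small eigenproblem for a double eigenvalue mu (sketch).

    Phi    : (N, 2) eigenvectors of mu, assumed mass-normalized (Phi.T M Phi = I).
    dK, dM : derivatives of K and M with respect to one design variable.
    Returns Gamma (2x2 orthogonal), the eigenvalue derivatives dmu (ascending)
    and the adjacent eigenvectors Z = Phi @ Gamma.
    """
    D = Phi.T @ (dK - mu * dM) @ Phi      # 2x2 symmetric matrix
    D = 0.5 * (D + D.T)                   # symmetrize against round-off
    dmu, Gamma = np.linalg.eigh(D)        # D @ Gamma = Gamma @ diag(dmu)
    return Gamma, dmu, Phi @ Gamma

def subgradient_interval(dmu):
    """Closed interval of slopes of the double eigenvalue: [min dmu, max dmu]."""
    return float(np.min(dmu)), float(np.max(dmu))

# Hypothetical toy data: M = I (so dM = 0), K(x) = diag(2, 2, 5) + x*dK.
dK = np.array([[0.0, 1.0, 1.0],
               [1.0, 0.0, 0.0],
               [1.0, 0.0, 0.0]])
dM = np.zeros((3, 3))
Phi = np.eye(3)[:, :2]                    # basis of the double eigenvalue mu = 2
Gamma, dmu, Z = adjacent_basis(Phi, dK, dM, mu=2.0)
print(dmu, subgradient_interval(dmu))
```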

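The second step is to compute the eigenvector derivatives Z′. In this case \(\operatorname{rank}(\mathbf{K}-\mu_{1}\mathbf{M}) = N-2\), so Nelson's method does not apply, and we use instead Dailey's method, which proceeds as follows. Z′ can be expressed as

$$ \mathbf{Z}' = \mathbf{V} + \mathbf{Z}\mathbf{C}, \tag{48} $$

where V is the solution of the linear system

$$ \mathbf{G}\mathbf{V} = \mathbf{f}. \tag{49} $$

Here G is obtained from the singular matrix \(\mathbf{K}-\mu_{1}\mathbf{M}\) by zeroing out the two rows (and the corresponding columns) associated with the largest eigenvector components and setting both diagonal elements to 1. f is the right-hand side \(\mathbf{M}\mathbf{Z}\boldsymbol{\Lambda}'-(\mathbf{K}'-\mu_{1}\mathbf{M}')\mathbf{Z}\) with the same rows zeroed out. Finally, C is a matrix built as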

where

and

For further details on the computation of Z′, we refer the interested reader to Dailey (1989). A remark is required at this point. As pointed out in Friswell (1996), Dailey's method breaks down when \(\mu_{1}^{\prime}=\mu_{2}^{\prime}\), since the off-diagonal entries of C involve a division by \(\mu_{1}^{\prime}-\mu_{2}^{\prime}\). That paper introduced a method for computing eigenvector derivatives in such a case. If \(\mu_{1}^{\prime}=\mu_{2}^{\prime}\), then the subgradient of \(\mu_{1}\) is a singleton, which means that \(\mu_{1}\) is differentiable (Clarke 1990); this can only happen if the equality \(\mu_{1} = \mu_{2}\) holds independently of the chosen design variable. This does not happen in our case, not even for symmetric boundary conditions, and thus Dailey's method is enough for our purposes.
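A compact sketch of the second step for a double eigenvalue is given below. It is written from the standard derivation underlying Dailey's method rather than copied from Dailey (1989), so the formulas for C are our own hedged restatement. It assumes that Z is mass-normalized and adjacent, i.e. \(\mathbf{Z}^{T}(\mathbf{K}'-\mu_{1}\mathbf{M}')\mathbf{Z} = \boldsymbol{\Lambda}'\).

Under those assumptions, the diagonal entries of C follow from differentiating \(\mathbf{Z}^{T}\mathbf{M}\mathbf{Z} = \mathbf{I}\). The off-diagonal entries follow from differentiating the eigenvalue equation twice. That is why second derivatives \(\mathbf{K}''\), \(\mathbf{M}''\) appear, and why the computation fails when \(\mu_{1}' = \mu_{2}'\).

The index-selection heuristic, the function name and the toy data are hypothetical. The last line applies the heuristic \(\boldsymbol{\Phi}' = \mathbf{Z}'\boldsymbol{\Gamma}^{T}\) used in step (f) of the algorithm.

```python
import numpy as np

def dailey_derivatives(K, M, dK, dM, d2K, d2M, Z, mu, dmu):
    """Eigenvector derivatives Z' for a double eigenvalue mu (sketch).

    Z   : (N, 2) adjacent eigenvectors, mass-normalized, such that
          Z.T @ (dK - mu*dM) @ Z == diag(dmu) with dmu[0] != dmu[1].
    d2K, d2M : second derivatives of K and M (enter the off-diagonal of C).
    Returns Z' = V + Z @ C.
    """
    A = K - mu * M                                      # singular, rank N - 2
    F = M @ Z @ np.diag(dmu) - (dK - mu * dM) @ Z      # right-hand side

    # Choose two indices p, q with Z[[p, q], :] well conditioned (heuristic).
    p = int(np.argmax(np.abs(Z[:, 0])))
    q = int(np.argmax(np.abs(Z[p, 0] * Z[:, 1] - Z[:, 0] * Z[p, 1])))
    idx = [p, q]

    # Nelson-type modification: zero rows and columns p, q, unit diagonal.
    G = A.copy()
    G[idx, :] = 0.0
    G[:, idx] = 0.0
    G[idx, idx] = 1.0
    f = F.copy()
    f[idx, :] = 0.0
    V = np.linalg.solve(G, f)                           # particular solution

    # Coefficients along the eigenspace, Z' = V + Z @ C.
    C = np.zeros((2, 2))
    for k in range(2):
        C[k, k] = -Z[:, k] @ M @ V[:, k] - 0.5 * Z[:, k] @ dM @ Z[:, k]
    for k, l in ((0, 1), (1, 0)):
        num = (0.5 * Z[:, k] @ (d2K - mu * d2M) @ Z[:, l]
               + Z[:, k] @ (dK - mu * dM) @ V[:, l]
               - dmu[l] * (Z[:, k] @ dM @ Z[:, l] + Z[:, k] @ M @ V[:, l]))
        C[k, l] = num / (dmu[l] - dmu[k])               # fails if dmu[0] == dmu[1]
    return V + Z @ C

# Hypothetical toy data: M = I, K(x) = K0 + x*K1, double eigenvalue 2 at x = 0.
K0 = np.diag([2.0, 2.0, 5.0])
K1 = np.array([[0.0, 1.0, 1.0],
               [1.0, 0.0, 0.0],
               [1.0, 0.0, 0.0]])
I3, O3 = np.eye(3), np.zeros((3, 3))
Phi = I3[:, :2]
dmu, Gamma = np.linalg.eigh(Phi.T @ K1 @ Phi)           # small eigenproblem
Z = Phi @ Gamma                                         # adjacent basis
Zp = dailey_derivatives(K0, I3, K1, O3, O3, O3, Z, 2.0, dmu)
Phi_p = Zp @ Gamma.T                                    # heuristic Phi', cf. (62)
```

In the toy case, Z′ can be checked against central finite differences of the eigenvectors, tracked along each branch by their overlap with Z.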

We have not yet finished. Since in our problem we track mode shapes, we cannot replace Φ by Z in the problem formulation. We need an expression for Φ′ in order to update the design variables in our numerical algorithm. As pointed out by Friswell (1996), eigenvectors of multiple eigenvalues are in general not differentiable (not even continuous), and there is only one basis of eigenvectors for which the eigenvector derivatives can be computed, namely Z. To compute Φ′ we proceed heuristically, as follows. First we note that, for our fixed design variable \({\rho _{s}^{e}}\), the matrix Γ is constant, so that

$$ \mathbf{Z}' = \boldsymbol{\Phi}'\boldsymbol{\Gamma}, \tag{53} $$

and therefore, since Γ is orthogonal, we can compute Φ′ as

$$ \boldsymbol{\Phi}' = \mathbf{Z}'\boldsymbol{\Gamma}^{T}. \tag{54} $$

With this procedure we can construct a matrix containing the derivatives of a given mode shape with respect to the design variables. Owing to the general lack of differentiability, this matrix is not the gradient of the mode shape but a matrix of partial derivatives.

5 Numerical approach

In this section, we sketch the numerical algorithm implemented to solve our optimization problem. The optimizer used is the method of moving asymptotes (MMA) (Svanberg 1987). As the numerical examples in the next section show, our method works even in the multiple-eigenvalue case, using the sensitivities computed as explained above, without the need for a more sophisticated optimization algorithm based on nonsmooth analysis.

The steps of the optimization algorithm are as follows:

1. Choose J mode shapes of the homogeneous square plate with the same boundary conditions as the plate in our problem,

$$ \{ \overline{\boldsymbol{\Phi}}_{j}\}_{j=1}^{J}. \tag{55} $$

These will be our references in the first iteration step.

2. Initialize the design variable \(\boldsymbol{\rho} = (\boldsymbol{\rho}_{s}, \boldsymbol{\rho}_{p})\) and set the iteration counter to i = 1.

3. Compute L mode shapes (L > J, large enough) of the plate to be optimized,

$$ \{ \boldsymbol{\Phi}_{l}^{(i)}\}_{l=1}^{L}. \tag{56} $$

4. By means of the MAC, identify, among the L modes just computed, the J modes closest to the reference ones, and relabel them from 1 to J,

$$ \{ \boldsymbol{\Phi}_{j}^{(i)} \}_{j=1}^{J}. \tag{57} $$

5. Check the multiplicity of the eigenvalues. To do so, we check whether the relative distance between two consecutive eigenvalues is below a given tolerance, \(\left| \frac{\mu_{j+1}-\mu_{j}}{\mu_{j}}\right| \le \tau\). In our examples we choose \(\tau = 5\times 10^{-2}\). This value is divided by 1.5 every 10 iterations until it reaches the minimum value of \(10^{-5}\). For the j-th eigenvalue there are two options:

- It is simple: compute the coefficient \(F_{j}^{(i)}\) and its derivative \((F_{j}^{(i)})'\) using Nelson's method.

- It is multiple: we assume its multiplicity to be two (this is what happens whenever a multiple eigenvalue occurs in the examples we have simulated) and, for simplicity of exposition, that it coincides with the (j+1)-th eigenvalue. We then proceed as follows:

(a) Given an M-orthogonal basis of eigenvectors \(\{ \boldsymbol{\Psi}_{j}^{(i)}, \boldsymbol{\Psi}_{j+1}^{(i)}\}\) (for instance, the one given by the subspace iteration method), find a new basis of eigenvectors \(\{\tilde{\boldsymbol{\Phi}}_{j}^{(i)}, \tilde{\boldsymbol{\Phi}}_{j+1}^{(i)}\}\) that follows the previous mode shapes,

$$ (\tilde{\boldsymbol{\Phi}}_{j}^{(i)}, \tilde{\boldsymbol{\Phi}}_{j+1}^{(i)})= (\boldsymbol{\Psi}_{j}^{(i)}, \boldsymbol{\Psi}_{j+1}^{(i)}) \mathbf{H}, \tag{58} $$

where H is an orthogonal matrix solving the finite-dimensional optimization problem

$$ \max\limits_{\left\{\mathbf{H}\; : \; \mathbf{H}\mathbf{H}^{T}=\mathbf{I}\right\}} \quad \|(\boldsymbol{\Phi}_{j}^{(i-1)} ,\boldsymbol{\Phi}_{j+1}^{(i-1)})^{T}\mathbf{M}^{(i)}(\tilde{\boldsymbol{\Phi}}_{j}^{(i)}, \tilde{\boldsymbol{\Phi}}_{j+1}^{(i)})\|. \tag{59} $$

(b) Normalize \(\tilde{\boldsymbol{\Phi}}_{j}^{(i)}, \tilde{\boldsymbol{\Phi}}_{j+1}^{(i)}\) as

$$ \boldsymbol{\Phi}_{j}^{(i)}=\frac{1}{\|{\tilde{\boldsymbol{\Phi}}}_{j}^{(i)}\|}{\tilde{\boldsymbol{\Phi}}}_{j}^{(i)}, \quad \boldsymbol{\Phi}_{j+1}^{(i)}=\frac{1}{\|{\tilde{\boldsymbol{\Phi}}}_{j+1}^{(i)}\|}{\tilde{\boldsymbol{\Phi}}}_{j+1}^{(i)}. \tag{60} $$

(c) Compute the coefficients \(F_{j}^{(i)}\) and \(F_{j+1}^{(i)}\) using these eigenvectors.

(d) Calculate, according to Section 4.2, the new eigenvectors \(\mathbf{Z}^{(i)} = \boldsymbol{\Phi}^{(i)}\boldsymbol{\Gamma}\), where

$$ \boldsymbol{\Phi}^{(i)} = (\boldsymbol{\Phi}_{j}^{(i)}, \boldsymbol{\Phi}_{j+1}^{(i)}) \tag{61} $$

and Γ is the solution of the (small) eigenproblem (45).

(e) Compute their derivatives \((\mathbf{Z}^{(i)})'\) using Dailey's method.

(f) Obtain the required derivatives as

$$ (\boldsymbol{\Phi}^{(i)})^{\prime} = (\mathbf{Z}^{(i)})^{\prime} \boldsymbol{\Gamma}^{T}. \tag{62} $$

(g) Compute the derivatives of the coefficients, \((F_{j}^{(i)})^{\prime}\) and \((F_{j+1}^{(i)})^{\prime}\).


6. Update the design variables using MMA.

7. Go back to step 3, taking \(\{\boldsymbol{\Phi}_{j}^{(i)}\}_{j=1}^{J}\) as the new references, until convergence.
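Steps 4 and 5 of the algorithm above (MAC-based mode tracking and detection of double eigenvalues with a shrinking tolerance) can be sketched in Python/NumPy as below. This is a minimal sketch under stated assumptions, and all function names are hypothetical:

- The standard, unweighted MAC is used.
- When two references would pick the same computed mode, a greedy rule that never assigns a computed mode twice is applied; this is our choice, since the paper does not specify it.
- The iteration counter starts at i = 1, so the first 10 iterations use \(\tau = 5\times 10^{-2}\).

```python
import numpy as np

def mac(a, b):
    """Modal assurance criterion between two mode-shape vectors (0 <= MAC <= 1)."""
    return float((a @ b) ** 2 / ((a @ a) * (b @ b)))

def track_modes(ref, modes):
    """Step 4 (sketch): for each of the J reference modes (columns of ref) pick
    the computed mode (columns of modes, L > J of them) with the largest MAC,
    never assigning the same computed mode twice (greedy, an assumption)."""
    J, L = ref.shape[1], modes.shape[1]
    table = np.array([[mac(ref[:, j], modes[:, l]) for l in range(L)]
                      for j in range(J)])
    chosen, taken = [-1] * J, set()
    for j in np.argsort(-table.max(axis=1)):       # most confident reference first
        for l in np.argsort(-table[j]):
            if int(l) not in taken:
                chosen[j] = int(l)
                taken.add(int(l))
                break
    return chosen, table

def tolerance(i, tau0=5e-2, factor=1.5, every=10, tau_min=1e-5):
    """Step 5 tolerance at iteration i = 1, 2, ...: tau0 divided by `factor`
    every `every` iterations, never below tau_min."""
    return max(tau0 / factor ** ((i - 1) // every), tau_min)

def double_pairs(mu, tau):
    """Indices j with |(mu[j+1] - mu[j]) / mu[j]| <= tau (treated as double)."""
    mu = np.asarray(mu, dtype=float)
    rel = np.abs((mu[1:] - mu[:-1]) / mu[:-1])
    return [int(j) for j in np.flatnonzero(rel <= tau)]

# Hypothetical usage: the 2nd and 3rd computed modes have switched order.
ref = np.eye(4)[:, :3]
modes = np.eye(4)[:, [0, 2, 1, 3]]
print(track_modes(ref, modes)[0])          # -> [0, 2, 1]
print(tolerance(1), tolerance(11), double_pairs([1.0, 1.01, 2.0], tolerance(1)))
```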

The flowchart of the proposed approach is shown in Fig. 3.
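Sub-steps (a) and (b) of step 5, which rotate the basis of a double eigenvalue so that it follows the previous mode shapes, admit a short closed-form sketch. Criterion (59) cannot be read with the Frobenius (or spectral) norm: that norm is invariant under right multiplication by an orthogonal H, so the objective would not depend on H.

The sketch below therefore assumes, and this is our assumption rather than a statement of the paper, that the criterion is the trace of \((\boldsymbol{\Phi}_{j}^{(i-1)},\boldsymbol{\Phi}_{j+1}^{(i-1)})^{T}\mathbf{M}^{(i)}(\boldsymbol{\Psi}_{j}^{(i)},\boldsymbol{\Psi}_{j+1}^{(i)})\mathbf{H}\). This is an orthogonal Procrustes problem whose maximizer is \(\mathbf{H} = \mathbf{V}\mathbf{U}^{T}\) for the singular value decomposition \(\mathbf{U}\mathbf{S}\mathbf{V}^{T}\) of that 2×2 matrix. The function name and test data are hypothetical.

```python
import numpy as np

def follow_pair(Phi_prev, Psi, M):
    """Rotate/reflect the basis Psi (N x 2) of a double eigenvalue so that it best
    matches the previous mode shapes Phi_prev (N x 2), then normalize as in (60).

    Assumption: (59) is read as max trace(Phi_prev.T @ M @ Psi @ H) over
    orthogonal H (orthogonal Procrustes problem).
    """
    A = Phi_prev.T @ M @ Psi                 # 2 x 2 cross-correlation
    U, _, Vt = np.linalg.svd(A)
    H = Vt.T @ U.T                           # maximizer of trace(A @ H)
    Phi_tilde = Psi @ H                      # eq. (58)
    return Phi_tilde / np.linalg.norm(Phi_tilde, axis=0), H

# Hypothetical check: Psi is the previous basis rotated by 0.3 rad and with one
# column flipped; the alignment should undo both.
theta = 0.3
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
Phi_prev = np.eye(4)[:, :2]
Psi = Phi_prev @ R @ np.diag([1.0, -1.0])
Phi_new, H = follow_pair(Phi_prev, Psi, np.eye(4))
```

Because H may be a reflection as well as a rotation, the sketch also corrects sign flips of individual eigenvectors returned by the eigensolver.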

6 Numerical examples

Next we illustrate our approach with several examples corresponding to different boundary conditions. Common to all of them are the design domain, a square plate, and the number of modes considered, J = 4, for both the in-plane and the out-of-plane cases. Because of possible mode switching, more than 4 modes must be computed, since any mode from the 5th onward could, for instance, switch to the fourth position. In all the examples shown here we compute the first 8 modes, which proved sufficient; that is, in the previous notation, L = 8. The mesh used is 50 × 50, i.e. \(N_{e} = 2500\) elements. From now on, the expression “j-th mode shape” means “mode shape similar to the initial j-th mode shape”, because a tracked mode is very likely to change its position in the spectrum due to mode switching.

6.1 Example 1: plate clamped at its left and right edges

The first example is a plate clamped at its left and right edges (see Fig. 4), first vibrating in plane.

We look for the design that follows the second mode shape of the homogeneous square plate and cancels the first, third and fourth ones as much as possible. These are our initial references. Both the structure layout and the electrode profile are shown in Fig. 5. White indicates void, and black indicates structure, which is covered by electrode of opposite polarities (red and blue). In fact, the electrode profile (i.e. the polarity) contains all the information needed to interpret the results, so hereafter only this figure is shown instead of both.

Figure 6 compares the optimized polarity obtained with simultaneous optimization and the one obtained with single optimization, in which only the electrode profile is optimized over a homogeneous square plate. Since the host structure is not optimized in the latter case, no white areas appear. As expected, the former design is better. This is expressed through coefficients normalized with respect to the coefficient of the second mode shape under single optimization, which therefore takes the unit value. The percentage gain is defined as the ratio of the normalized coefficient maximized under simultaneous optimization to the one under single optimization, minus 1, multiplied by 100; for this particular example it is almost 20 %. The bar graph shows that the influence of the remaining modes is completely canceled, which means that α is very close to zero. The convergence curve of the coefficient \(F_{2}\) is shown in Fig. 7.
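The percentage gain defined above is simple arithmetic; a minimal sketch (hypothetical function name) makes the definition explicit.

```python
def percentage_gain(F_simultaneous, F_single):
    """Percentage gain of simultaneous (layout + electrode) optimization over
    electrode-only optimization: 100 * (F_simultaneous / F_single - 1)."""
    if F_single <= 0:
        raise ValueError("reference coefficient must be positive")
    return 100.0 * (F_simultaneous / F_single - 1.0)

# Hypothetical values: coefficients normalized so that F_single = 1.
print(round(percentage_gain(1.2, 1.0), 6))   # 20.0
```

With the normalization used in the text (single optimization gives the unit value), the gain is just 100 times the excess of the normalized coefficient over 1.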

In this example, the second mode shape interchanges its position during the optimization process, first with the third mode shape and later with the fourth one. This is marked with small circles in Figs. 8 and 9. Consequently, the fourth mode shape of the optimized design is the one most similar to the second mode shape of the homogeneous square plate, as illustrated in Fig. 11. This can also be confirmed by checking that the MAC between the fourth mode shape and the reference is very close to 1; the reference changes during the optimization but is initially the second mode shape of the homogeneous square plate.

When eigenvalues coalesce in a certain iteration they become double, and this has to be taken into account to compute the derivatives correctly. Moreover, mode switching may also occur among higher modes, as is indeed the case here. Fortunately, this does not affect the final results, since we are concerned with the first four modes only. However, it clearly justifies why more than four modes (for this example) should be considered from the very beginning.

Figure 10 shows the remaining electrode profiles, which isolate the first, third and fourth mode shapes, respectively. Note that the void region is generally small, and in some examples it is empty, as we will see later. Finally, Fig. 11 illustrates the correspondence between our four optimized mode shapes and the first four mode shapes of the homogeneous square plate.

The same analysis can be carried out when the plate vibrates out of plane. The electrode profiles for this case are depicted in Fig. 12.

6.2 Example 2: plate clamped at its left edge

Now the plate is clamped at its left edge only (see Fig. 13).

Figures 14 and 15 show the electrode profiles for these new boundary conditions, corresponding to the in-plane and out-of-plane cases, respectively. Again, the multiple-eigenvalue issue is present in most of the examples. For the sake of brevity, we have not included further figures, such as eigenfrequency histories or bar graphs of the gain obtained in each case. We just note that the void region in Figs. 14b and 15a is empty, so the gain is null. In contrast, the gain for the electrode in Fig. 14a exceeds 100 %, unlike in the two previous examples.

6.3 Example 3: plate clamped at all four edges

A plate clamped at all four edges is chosen as the final example (see Fig. 16).

Owing to these boundary conditions, it is well known that, for instance, the first eigenvalue of a homogeneous square plate vibrating in plane is double. In this case we have taken as references the mode shapes with horizontal and vertical nodal lines for the first and the second mode shapes, respectively. The eigenvalues of the designs are repeated at the beginning of the optimization process, but they become simple after a few iterations because of the appearance of small void regions. The electrode profiles make perfect sense at the end of the optimization process, since the optimized designs (Fig. 17a and b) have nodal lines with the same orientation as those of the references. As expected, the optimized electrode for the second mode is the same as the one for the first mode, rotated by 90°. In both cases the gain obtained is around 10 %. It is also observed in this case that, when maximizing either the third or the fourth mode shape, the eigenfrequencies corresponding to the first two modes remain double throughout.

When the plate vibrates out of plane, the first pair of repeated eigenvalues is formed by the second and the third modes. The electrode profiles for this case are shown in Fig. 18. As in the in-plane case, the eigenfrequencies corresponding to the second and the third modes remain double throughout when isolating either the first or the fourth mode shape.

7 Conclusions

In Donoso and Bellido (2009a), a method for designing piezoelectric modal sensors/actuators was introduced. The idea was to optimize the polarization profile of the piezoelectric layer bonded to a fixed host structure (typically a rectangular plate). To obtain better transducers, in this paper we extend our previous approach by proposing a model in which the host structure and the polarization profile of the piezoelectric layer are optimized simultaneously. In this model both cost and constraints depend explicitly on mode shapes, which, we remark, is a novelty in topology optimization problems. The typical problems of eigenproblem optimization also show up here: spurious modes, mode switching, mode tracking, multiple eigenvalues, and the computation of sensitivities in the latter case. Eigenmode sensitivity in the multiple-eigenvalue case is an issue, since eigenmodes are not differentiable in general. We have overcome this problem by defining a matrix of partial derivatives with respect to the design variables, as shown in Section 4.2. Our numerical results show this to be enough when using standard optimizers such as MMA. Further, the physics of this problem produces optimized designs with large gray areas, which has been fixed by using a suitable interpolation that penalizes intermediate values. Finally, we believe that the examples in Section 6 clearly show that our approach works.

References

Bathe KJ, Wilson EL (1976) Numerical methods in finite element analysis. Prentice-Hall, New Jersey

Bendsøe MP, Sigmund O (1999) Material interpolation schemes in topology optimization. Arch Appl Mech 69:635–654

Bendsøe MP, Sigmund O (2003) Topology optimization: theory, methods and applications. Springer, Berlin

Bruns TE, Tortorelli DA (2001) Topology optimization of non-linear elastic structures and compliant mechanisms. Comput Methods Appl Mech Eng 190(26–27):3443–3459

Bourdin B (2001) Filters in topology optimization. Int J Numer Methods Eng 50(9):2143–2158

Carbonari RC, Silva ECN, Nishiwaki S (2007) Optimal placement of piezoelectric material in piezoactuator design. Smart Mater Struct 16:207–220

Clarke FH (1990) Optimization and nonsmooth analysis. Classics in Applied Mathematics, SIAM

Clark RL, Burke SE (1996) Practical limitations in achieving shaped modal sensors with induced strain materials. J Vib Acoust 118:668–675

Cook RD, Malkus DS, Plesha ME (1989) Concepts and applications of finite element analysis, 3rd edn. Wiley, New York

Dailey RL (1989) Eigenvector derivatives with repeated eigenvalues. AIAA J 27(4):486–491

Díaz AR, Kikuchi N (1992) Solutions to shape and topology eigenvalue optimization problems using a homogenization method. Int J Numer Methods Eng 35:1487–1502

Donoso A, Bellido JC (2009a) Systematic design of distributed piezoelectric modal sensors/actuators for rectangular plates by optimizing the polarization profile. Struct Multidisc Optim 38:347–356

Donoso A, Bellido JC (2009b) Tailoring distributed modal sensors for in-plane modal filtering. Smart Mater Struct 18:037002

Donoso A, Bellido JC, Chacón JM (2010) Numerical and analytical method for the design of piezoelectric modal sensors/actuators for shell-type structures. Int J Numer Methods Eng 81:1700–1712

Du J, Olhoff N (2007) Topological design of freely vibrating continuum structures for maximum values of simple and multiple eigenfrequencies and frequency gaps. Struct Multidisc Optim 34:91–110

Friswell MI (1996) The derivatives of repeated eigenvalues and their associated eigenvectors. Trans ASME J Vib Acoust 118:390–397

Guest J, Prevost J, Belytschko T (2004) Achieving minimum length scale in topology optimization using nodal design variables and projection functions. Int J Numer Methods Eng 61(2):238–254

Hansen LV (2005) Topology optimization of free vibrations of fiber laser packages. Struct Multidisc Optim 29:341–348

Jensen JS, Pedersen NL (2006) On maximal eigenfrequency separation in two-material structures: the 1D and 2D scalar cases. J Sound Vib 289:967–986

Jian K, Friswell MI (2007) Distributed modal sensors for rectangular plate structures. J Intell Mater Syst Struct 18:939–948

Kang Z, Tong L (2011) Combined optimization of bi-material structural layout and voltage distribution for in-plane piezoelectric actuation. Comput Methods Appl Mech Eng 200:1467–1478

Kang Z, Wang X, Luo Z (2012) Topology optimization for static control of piezoelectric plates with penalization on intermediate actuation voltage. J Mech Des 134:051006

Kim J, Hwang JS, Kim SJ (2001) Design of modal transducers by optimizing spatial distribution of discrete gain weights. AIAA J 39(10):1969–1976

Kim TS, Kim YY (2000) MAC-based mode-tracking in structural topology optimization. Comput Struct 74:375–383

Kögl M, Silva ECN (2005) Topology optimization of smart structures: design of piezoelectric plate and shell actuators. Smart Mater Struct 14:387–399

Lee IW, Jung GH, Lee JW (1996) Numerical method for sensitivity analysis of eigensystems with non-repeated and repeated eigenvalues. J Sound Vib 195(1):17–32

Lee CK, Moon FC (1990) Modal sensors/actuators. J Appl Mech 57:434–441

Luo Z, Gao W, Song C (2010) Design of multi-phase piezoelectric actuators. J Intell Mater Syst Struct 21:1851–1865

Ma ZD, Cheng HC, Kikuchi N (1994) Structural design for obtaining desired eigenfrequencies by using the topology and shape optimization method. Comput Syst Eng 5(1):75–89

Maeda Y, Nishiwaki S, Izui K, Yoshimura M, Matsui K, Terada K (2006) Structural topology optimization of vibrating structures with specified eigenfrequencies and eigenmode shapes. Int J Numer Methods Eng 67:597–628

Nakasone PH, Silva ECN (2010) Dynamic design of piezoelectric laminated sensors and actuators using topology optimization. J Intell Mater Syst Struct 21:1627–1652

Pedersen NL (2000) Maximization of eigenvalues using topology optimization. Struct Multidisc Optim 20:2–11

Preumont A, Francois A, De Man P, Piefort V (2003) Spatial filters in structural control. J Sound Vib 265(1):61–79

Pulskamp JS, Bedair SS, Polcawich RG, Smith GL, Martin J, Power B, Bhave SA (2012) Electrode-shaping for the excitation and detection of permitted arbitrary modes in arbitrary geometries in piezoelectric resonators. IEEE Trans Ultrason Ferroelectr Freq Control 59(5):1043–1060

Ruiz D, Bellido JC, Donoso A, Sánchez-Rojas JL (2013) Design of in-plane piezoelectric sensors for static response by simultaneously optimizing the host structure and the electrode profile. Struct Multidisc Optim 48:1023–1026

Sánchez-Rojas JL, Hernando J, Donoso A, Bellido JC, Manzaneque T, Ababneh A, Seidel H, Schmid U (2010) Modal optimization and filtering in piezoelectric microplate resonators. J Micromech Microeng 20:055027

Seyranian AP, Lund E, Olhoff N (1994) Multiple eigenvalues in structural optimization problems. Struct Multidisc Optim 8:207–227

Sigmund O (2007) Morphology-based black and white filters for topology optimization. Struct Multidisc Optim 33(4–5):401–424

Stolpe M, Svanberg K (2001) An alternative interpolation scheme for minimum compliance topology optimization. Struct Multidisc Optim 22:116–124

Sun D, Tong L, Wang D (2002) Modal actuator/sensor by modulating thickness of piezoelectric layers for smart plates. AIAA J 40(8):1676–1679

Svanberg K (1987) The method of moving asymptotes - a new method for structural optimization. Int J Numer Methods Eng 24:359–373

Tsai TD, Cheng CC (2013) Structural design for desired eigenfrequencies and mode shapes using topology optimization. Struct Multidisc Optim 47(5):673–686

Tcherniak D (2002) Topology optimization of resonating structures using SIMP method. Int J Numer Methods Eng 54:1605–1622

Wein F, Kaltenbacher M, Kaltenbacher B, Leugering G, Bänsch E, Schury F (2011) On the effect of self-penalization of piezoelectric composites in topology optimization. Struct Multidisc Optim 43:405–417

Zhang X, Kang Z, Li M (2014) Topology optimization of electrode coverage of piezoelectric thin-walled structures with CGVF control for minimizing sound radiation. Struct Multidisc Optim 50:799–814

Acknowledgments

This work has been supported by the Ministerio de Economía y Competitividad (Spain) through grant MTM2013-47053-P, and by the Consejería de Educación, Cultura y Deportes of the Junta de Comunidades de Castilla-La Mancha (Spain) and the European Fund for Regional Development through grant PEII-2014-010-P. We would like to thank J.L. Sánchez-Rojas for several discussions on the model that is the subject of this paper.


Cite this article

Ruiz, D., Bellido, J.C. & Donoso, A. Design of piezoelectric modal filters by simultaneously optimizing the structure layout and the electrode profile. Struct Multidisc Optim 53, 715–730 (2016). https://doi.org/10.1007/s00158-015-1354-5
