Abstract

Numerous studies have examined how predator diets influence prey responses to predation risk, but the role predator diet plays in modulating prey responses remains equivocal. We reviewed 405 predator–prey studies in 109 published articles that investigated changes in prey responses when predators consumed different prey items. In 54 % of reviewed studies, prey responses were influenced by predator diet. The value of responding based on a predator’s recent diet increased when predators specialized more strongly on particular prey species, which may create patterns in diet cue use among prey depending upon whether they are preyed upon by generalist or specialist predators. Further, prey can alleviate costs or accrue greater benefits using diet cues as secondary sources of information to fine tune responses to predators and to learn novel risk cues from exotic predators or alarm cues from sympatric prey species. However, the ability to draw broad conclusions regarding use of predator diet cues by prey was limited by a lack of research identifying molecular structures of the chemicals that mediate these interactions. Conclusions are also limited by a narrow research focus. Seventy percent of reviewed studies were performed in freshwater systems, with a limited range of model predator–prey systems, and 98 % of reviewed studies were performed in laboratory settings. Besides identifying the molecules prey use to detect predators, future studies should strive to manipulate different aspects of prey responses to predator diet across a broader range of predator–prey species, particularly in marine and terrestrial systems, and to expand studies into the field.

Introduction

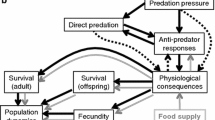

Prey possess a variety of characteristics that allow them to survive among potential consumers. Although some prey possess fixed traits to deter consumers (e.g., spines, armor), many prey use plastic responses including changes in behavior, morphology, or life history that are initiated or modified in situations where risk of injury or death is imminent (Kats and Dill 1998). Behavioral responses by prey to predation risk include reducing activity (Persons et al. 2001; Large et al. 2011), altering foraging behavior (Relyea 2002), and/or moving to a different location (Preisser et al. 2005, 2007; Flynn and Smee 2010) to reduce predatory encounters. Some prey alter their morphology to make it more difficult for predators to eat them (Schoeppner and Relyea 2005) or manufacture higher levels of chemical defenses to deter potential consumers (Baldwin 1998; Hay 2009). Life history changes, such as reproductive timing, can change with predation risk, and prey may accelerate or delay reproduction depending upon context (Covich and Crowl 1990; Fraser and Gilliam 1992; Li and Jackson 2005). Reducing predation risk may involve combinations of strategies across different temporal scales. For example, prey may react to predation risk with immediate behavioral responses while simultaneously initiating morphological changes which take longer to develop (Schoeppner and Relyea 2005). In other situations, prey may react only by changing their behavior or morphology, but not both (Schoeppner and Relyea 2005), or may incur a change in morphology caused by repeated behavioral alterations and not in direct response to predators (Bourdeau 2010a).

Prey must balance conflicting demands of predator avoidance with critical activities such as energy acquisition, growth, and reproduction. Predator avoidance or deterrence often incurs costs stemming from lost foraging opportunities or a diversion of resources away from growth and fecundity. To minimize these costs, risk assessment is essential, so that predator avoidance or deterrence is implemented only in situations posing significant risk of injury or death. The most effective systems of risk analysis should integrate the most valuable information available regarding local predation risk to the detecting prey species, and prey may use visual, mechanical, or chemical cues alone or in combination to gauge predatory threats (Munoz and Blumstein 2012).

Chemical cues are commonly used to gather information regarding predation risk by many taxa ranging from microbes to vertebrates (Hay 2009), even among organisms that rely heavily on other sensory modalities such as vision (Weissburg et al. 2014). This is perhaps because chemical cues often provide accurate, accessible information regarding the proximity and intentions of other organisms as predators may be cryptic and lie motionless to minimize visual, auditory, or mechanical cues. However, they cannot completely avoid releasing metabolites through waste products and body secretions (Brown et al. 2000a). Prey often evaluate the degree of predation risk from predator exudates, and cues in different concentrations and combinations allow for an unlimited degree of potential threat recognition (Weissburg et al. 2014).

For this review, we concentrated on chemoreception by prey to predator exudates, and how predator diet affected prey responses. Changes in predator foraging activity can trigger different responses by prey. Prey may interpret changes in predator diet to be indicative of changes in predation risk, particularly when a predator’s foraging activity reflects the immediate risk level and is predictive of future predation events (Sih 1980, 1984; Lima and Dill 1990). For instance, a predator consuming or having recently consumed conspecifics may be treated more cautiously by prey as they likely pose an immediate danger (Hews 1988; Madison et al. 1999; Hoefler et al. 2012). Yet, evidence for the importance of predator diet in modulating prey defensive decisions is equivocal. Diet cues can be an important source of information, sometimes necessary to induce prey responses to predators at all (Bronmark and Pettersson 1994; Stabell et al. 2003). In contrast, many prey species react to predators regardless of what species the predator has consumed recently (Smee and Weissburg 2006a, b; Large and Smee 2010). These differences may reflect the degree to which predator diet predicts future predation events or the availability of risk cues and prey ability to detect them. Here, we sought to develop a better understanding of how a predator’s diet affects responses of potential prey organisms by reviewing and compiling studies that examined the role of predator diet in modulating prey responses to predation risk.

Methods and terminology

In this review, we summarized studies from terrestrial, freshwater, and marine environments that examined how predator diet influenced the induction of plastic defenses in prey. We performed literature searches on ISI Web of Science and Google Scholar using the key words “predator diet” and “prey defenses.” We then expanded our search for studies by identifying references from the literature cited sections of papers found in the initial literature search that were cited as being relevant to the topic, but for which predator diet was not explicitly listed in the title, key words, or abstract. The result of this search was 109 primary publications and 5 reviews that are referenced in this article. Although many of these studies also included investigations of the role of alarm cues (e.g., injured conspecific) as an important source of information regarding predation risk, we focused solely on the responses of prey to either active predation (predators consuming prey) or digestive cues released by predators post-consumption to determine the role of predator diet.

The primary research articles are cataloged in the Supplementary Table 1 (supplementary materials), with publications given an entry for each independent experiment performed, each difference in diet studied, and each prey response type (i.e., behavioral, morphological, etc.) measured. This resulted in 203 investigations of differences in predator diet which were used to calculate the values in Table 6. Studies of predator diet can be highly complex, with the reviewed publications including variation in dietary differences studied (see Dietary Differences in Predator Cues section), number and types of diet treatments tested, the extent of pairwise comparisons between treatments, study systems, the number of predator and prey species tested, the number and type of responses measured, and investigations of other uses of diet cue discussed below. In many cases, complicated response patterns resulted in prey responses to some, but not all, combinations of these factors. To incorporate all this complexity, including contrasting or interacting results, the investigations were broken down into 405 studies of prey responses to predators reared on different diets. For example, a publication which investigated activity level and refuge use in two prey species would be counted as four separate studies, one for each combination of prey species and response variable. This approach ensured responses were not over-generalized if, for example, prey species responded differently or if diet cues induced responses in one measured variable (e.g., activity), but not in another (e.g., refuge use). As some publications focused narrowly and some included large amounts of variation in these factors, this approach allowed us to consider the relative amount of information provided by each study. 
For each study, we recorded the following information in Supplementary Table 2: study system, experiment type (laboratory or field), and response types measured (e.g., changes in behavior or morphology). We also report whether prey produced responses different from controls to four basic diet treatments: predators fed conspecifics, predators fed prey phylogenetically related to or living in the same habitat as the tested species, predators fed prey unrelated to the tested species, and predators that had been starved. This table also includes a description of how diet cues are used by prey in relation to predator labeling and learning (discussed below). If diet cues significantly changed prey reactions from control treatments or if responses to tested diets were significantly different from controls and from one another, we counted this study as a positive finding for the role of predator diet in modulating prey responses. Please see Supplementary Table 2 for greater detail. This table was used to calculate the values in Tables 1, 2, 3, and 4. The information on diet response was further summarized in Supplementary Table 3, where studies are organized by the types of cues tested and the types and number of cues that produced responses. Supplementary Table 3 was used to calculate the values in Table 5.
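The counting rule described above, in which one publication yields one study per combination of prey species and response variable, can be illustrated with a minimal sketch. The record layout, the field names, and the function `expand_into_studies` are hypothetical and are not taken from the supplementary tables. The sketch also ignores the further splitting by independent experiment and by dietary difference.

```python
from itertools import product


def expand_into_studies(publication):
    """Return one study record per (prey species, response variable) pair.

    `publication` is a hypothetical dict with the keys "id",
    "prey_species", and "responses"; this layout is an assumption.
    """
    return [
        {"publication": publication["id"], "prey": prey, "response": response}
        for prey, response in product(
            publication["prey_species"], publication["responses"]
        )
    ]


# The example from the text: activity level and refuge use measured
# in two prey species counts as four separate studies.
example_publication = {
    "id": "hypothetical_publication",
    "prey_species": ["prey_A", "prey_B"],
    "responses": ["activity level", "refuge use"],
}

studies = expand_into_studies(example_publication)
for study in studies:
    print(study["prey"], "/", study["response"])
print(len(studies), "studies")
```

Counting in this way keeps a response in one variable (e.g., activity) from being generalized to another variable (e.g., refuge use) or to a second prey species.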

Many studies which investigated the role of predator diet cues, either explicitly or implicitly, did not take steps to avoid prey exposure to undigested prey alarm cues. Thus, it was difficult to ascertain if prey were reacting to predators because those predators were excreting cues after digesting prey or if cues from consumed prey remained on the predators following an attack. The 405 studies reviewed here include exposures of prey to predators that were actively consuming prey as well as to cues released by predators having previously consumed various diets. For clarity, we define the terms we use to describe these situations here. We defined alarm cues as cues produced by prey organisms that induce a response in other prey organisms (sensu Kats and Dill 1998 among others). Active predation was defined as the presence of both predator and prey cues as a result of prey exposure to a predator actively feeding during experiments. We defined digestive cues as cues to which prey were exposed that were produced by the predator post-consumption, with any alarm cues present only as a result of passage through the predator’s digestive system. Diet cues refer collectively to exposure to active predation and to digestive cues alone, but not to injured prey cues only.

Below we summarize the costs and benefits of prey responses to predator diet cues, the dietary differences to which prey respond, what (little) is known about the chemical nature of predator diet cues, and some potential avenues for future research.

Costs and benefits of using predator diet cues

The benefits of responding to predator diet cues depend on the value of the information those cues provide in predicting future predator foraging behavior. That is, do cues in a predator’s diet reflect a difference in degree of risk posed by that predator? Using diet cues to scale responses to predators would allow prey to prioritize non-defensive processes, such as growth and reproduction, when predation risk is low, such as when a predator’s future diet is likely to consist of other species. The use of diet cues can also reduce the costs of prey defenses and/or extend their benefits if prey utilize them for learning or labeling of predators.

Prey survival

Prey survival, the most obvious benefit of inducible defenses, may increase after prior exposure to predators, and this positive effect can be strongly influenced by a predator’s diet. Thirteen studies were reviewed that examined whether predator diet affected prey survival in future interactions with predators, and 8 found diet cues to significantly increase prey survival in future encounters (Table 1). For example, juvenile brook charr Salvelinus fontinalis exposed to cues from adult yellow perch Perca flavescens fed charr or rainbow trout Oncorhynchus mykiss had higher rates of survival in ensuing encounters with predators than those exposed to predators fed stickleback Culaea inconstans (Mirza and Chivers 2003). Prior exposure to predators fed conspecifics also increased survival of goldfish Carassius auratus in subsequent predatory encounters with pike Esox lucius, relative to goldfish exposed to pike fed swordtails Xiphophorus helleri (Zhao et al. 2006).

In contrast to these results, defensive responses of the wolf spider Pardosa milvina increase significantly with exposure to its predator, another wolf spider Hogna helluo, but do not impact survival in laboratory conditions (Persons et al. 2001). Similar results were seen for P. milvina exposed to hungry or satiated H. helluo, with hungry predators inducing stronger responses that did not translate into increased survival (Bell et al. 2006). Hungry spiders were more dangerous predators, suggesting that P. milvina increased their responses to hungry H. helluo to maintain the same level of survival as the latter became more efficient or determined predators (Bell et al. 2006). Thus, maintaining a constant level of survival against a more dangerous predator might actually indicate an increased benefit from diet cues, even though these studies were scored in our analysis as showing no survival benefit in laboratory settings.

Generalist versus specialist

The value of predator diet cues lies in the ability of prey to predict future predation risk based on prior predation events. Thus, prey will receive benefits from responding more strongly to predators consuming “dangerous” diets if predators are likely to consume similar diets in the future. For example, specialist predators prey upon a narrow range of species, although they may switch prey targets seasonally. Diet cues would be invaluable by indicating to non-target organisms that risk is low and by indicating to potential target organisms when their risk is high.

This is the case for the predatory wolf spider H. helluo, which shows a stronger preference for paper exposed to its prey, another wolf spider P. milvina, when it has recently consumed P. milvina, suggesting H. helluo pose a greater risk to prey they have recently consumed (Persons and Rypstra 2000). Predictably, P. milvina hatch earlier and at smaller sizes in the presence of H. helluo that have recently consumed conspecifics, decreasing the time spent in the vulnerable egg state (Li and Jackson 2005). Predator-naïve fall field crickets Gryllus pennsylvanicus responded with increased speeds to H. helluo and to two other species of predatory wolf spider that had consumed crickets (Storm and Lima 2008). Results from both studies suggest this preference may be found with other prey species.

However, if predators are equally likely to consume a variety of species regardless of their previous diet, there is little additional value in diet cues beyond identifying predator presence. Prey that react only to predators consuming conspecifics would be at great risk when predators that have consumed other species still pose a threat to them. This would be the case for generalist predators, which are more opportunistic and consume a wider variety of prey than specialists (Meng et al. 2006). Diet cues would then provide little additional information regarding the threat posed by generalist predators, since their last meal would have little influence on what they might eat next.

Three studies were reviewed that specifically compared prey responses between generalist and specialist predators. All three found responses were always present and strong for specialists, but were weaker or absent for generalists (Ferrari et al. 2007; Crawford et al. 2012; Osburn and Cramer 2013; Table 6). For example, fathead minnows Pimephales promelas responded more strongly to feces of northern pike E. lucius, a piscivorous predator, than to cues of a more generalist predator, brook trout S. fontinalis (Ferrari et al. 2007). Porcupines reacted more strongly to scent from the fisher Martes pennanti, a specialist on porcupines, than to urine from the coyote Canis latrans, a generalist. In contrast, hares Lepus americanus reacted similarly to different predator scents (Osburn and Cramer 2013). These studies suggest that diet cues provide more valuable information to prey when released by specialist predators, because their previous diet provides accurate information about future risk. However, prey may react more strongly to generalist predators when they consume conspecifics if diet cues from those predators contain higher amounts of ‘risk’ cues such as combinations of predator and alarm cues. Generalists may also provide avenues for alternate use of diet cues, such as cue learning. For example, fathead minnows can learn the alarm cues of consumed heterospecifics when they are consumed with fathead minnows by the generalist yellow perch P. flavescens (Mirza and Chivers 2001b). Such overlap of known and novel cues would be unlikely in the diet of specialist predators and may provide selective pressure for responses to diet cues in generalist predators.

Within a population of generalist predators, individuals may actually specialize on a few prey types, and thus diet cues would provide an indication to potential prey about the threat an individual predator poses. For example, although cobras H. haemachatus are generalist predators, individual snakes show strong preferences for only one or two prey species (Alexander 1996, Greene 1997). Consequently, striped mice R. pumilio responded more strongly to feces from H. haemachatus fed conspecifics than to those that had eaten house mice M. musculus (Pillay et al. 2003). Laboratory and field studies of three trout species found individual trout often specialize on a few prey types (Bryan and Larkin 1972). Although generally short-lived, trout feeding preferences could last up to six months. Further studies of visual (Jackson and Li 2004) and chemical (Melcer and Chiszar 1989) search image formation in spiders and rattlesnakes, respectively, suggest the process results in a tradeoff between the efficiency of capture for one prey species with the ability to recognize other prey species, suggesting specialization may occur at the individual level in a number of species considered to be generalist predators.

For this review, we encountered great difficulty determining whether species were classified as generalist or specialist foragers since predators classified as generalist often have individuals that specialized on a few prey types. We therefore only used studies which explicitly investigated the role of predator specialization to compare responses of generalist and specialist predators, relying on the labels utilized by the authors to make distinctions between the two predator groups. We did not attempt to quantify differences in prey responses to generalist vs. specialist predators and the role of predator diet for the remaining studies, although this topic would be a fruitful area for future research endeavors given the limited number of studies explicitly testing this dietary difference.

Marine versus freshwater

Freshwater and marine systems differ in degree of isolation and allopatric division of populations, which has led to greater specialization of predator–prey systems in freshwater environments (Wellborn et al. 1996). In freshwater systems where specialists are common and diet cues valuable in predicting risk, there is likely greater selective pressure on prey to respond to predators that are consuming conspecifics because these predators pose the greatest risk. In contrast, marine environments experience high connectivity with few barriers between habitats and have generalist predators feeding on a vast array of species. Therefore, in marine systems selection may favor general mechanisms of predator recognition, such as responses to predator exudates regardless of recent foraging history and reacting to injured con- and heterospecifics. If use of diet cues by prey is common for marine species, cues relating to dietary classification (i.e., carnivore, herbivore, etc.), such as those investigated by Dixson et al. (2012), that reveal a particular habitat contains high numbers of predators, are likely to be more useful and are possibly more prevalent. Of the 405 studies reviewed, 332 (>80 %) focused on aquatic systems (including studies of larval amphibians and aquatic larval insects), and 282 (70 %) were in freshwater (if studies of organisms which are transitional between marine and freshwater, such as salmon, are considered marine; Table 2). Forty-two of the 50 studies (85 %) reviewed in marine systems found predator diet to have some effect on prey responses, a greater percentage than that observed in freshwater and terrestrial environments (~57 %; Table 2). However, there is a dearth of research in marine (and terrestrial) systems, which prevents accurate comparisons regarding the role of predator diet cues in prey evaluation of risk.
For example, studies of differences in diet based on prey relationships generally see high usage of diet cues (80–100 %, Supplementary Table 4), but are poorly tested in marine and terrestrial environments. Even in freshwater systems, testing prey responses to predators consuming phylogenetically related species accounted for the majority of studies and was tested twice as often as all other aspects of diet cues combined. This, in combination with recognized publication bias in favor of significant results, limits our ability to determine the relative role of predator diet in different environments. More research of all types of dietary differences will be necessary to test the extent of diet cue effects on prey responses, and these studies are especially needed in terrestrial and marine systems.
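The imbalance among study systems can be made concrete with a short tally of the counts quoted above. This is a minimal sketch: the terrestrial count is not stated in the text and is derived here by subtraction, which assumes every non-aquatic study was terrestrial. The variable names are illustrative only.

```python
TOTAL_STUDIES = 405

# Counts quoted in the text (salmon-like transitional species counted as marine).
counts = {"freshwater": 282, "marine": 50}

# Assumption: all studies that were not aquatic were terrestrial.
counts["terrestrial"] = TOTAL_STUDIES - sum(counts.values())

# Share of all reviewed studies per system, in percent.
shares = {
    system: round(100 * n / TOTAL_STUDIES, 1) for system, n in counts.items()
}

aquatic = counts["freshwater"] + counts["marine"]
aquatic_share = round(100 * aquatic / TOTAL_STUDIES, 1)

for system, share in shares.items():
    print(f"{system}: {counts[system]} studies ({share} %)")
print(f"aquatic (freshwater + marine): {aquatic} studies ({aquatic_share} %)")
```

Under these assumptions, freshwater studies outnumber marine studies by more than five to one, which is why percentages for marine and terrestrial systems rest on comparatively few observations.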

Primary and secondary information

The extent to which prey rely on predator diet cues to determine response induction may reflect the costs and benefits of such cues. Several studies have found prey to rely on predator diet cues as a primary or sole source of information, meaning they respond only when predators eat conspecifics or closely related species, but not to alarm cues from injured prey, to predators consuming other diets, or to starved predators (Brown and Godin 1999; Murray and Jenkins 1999; Stabell et al. 2003; Jacobsen and Stabell 2004; Griffiths and Richardson 2006; Dixson et al. 2012). For example, Daphnia spp. produced morphological defenses in response to cues from predators fed conspecifics, but not to predators that were starved or fed earthworms. They did not respond to undigested alarm cues alone or paired with predator cues, indicating that consumption of conspecifics by predators is necessary to induce responses in this interaction (Stabell et al. 2003). Primary dependence on predator diet cues could be an effective way for prey to mitigate costs associated with predator avoidance or deterrence when certain diets indicate low risk, as with specialist predators discussed above. However, by limiting responses to predators in this manner, prey may leave themselves vulnerable to predators that have been foraging on other species or that have not recently eaten (and are likely more motivated and dangerous). Thirty-seven percent (142 of 385) of reviewed studies that tested a conspecific diet found prey to limit predator responses to situations when predators were consuming conspecifics and not to any other tested diet treatments. Of these 142 studies, 37 also tested prey responses to conspecific alarm cues, and 17 found no response to alarm cues, evidence for strict, primary usage of diet cues as the sole trigger for responding.
Thus, it seems rare for organisms to fully limit responses to primary use of predator diet cues, suggesting it may be maladaptive for prey to completely ignore all other risk cues. Failure to detect potential predators likely causes injury or death, and thus there is stronger selective pressure on prey to avoid predators than on predators to consume prey (i.e., life-dinner principle, Dawkins and Krebs 1979). This suggests prey should be selected to play it safe and may explain why severe limitation of prey responses solely to predators consuming conspecifics appears to be a rare occurrence.
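The arithmetic behind this paragraph can be laid out explicitly. The sketch below uses only the counts quoted above; the helper `pct` and the variable names are illustrative. Because only 37 of the 142 conspecific-only studies also tested alarm cues, the 17 confirmed cases should be read as a lower bound on strict primary usage, not as an exact count.

```python
def pct(part, whole):
    """Percentage of `whole` represented by `part`, to one decimal place."""
    return round(100 * part / whole, 1)


tested_conspecific_diet = 385  # studies that tested a conspecific diet
conspecific_only = 142         # responded only to predators fed conspecifics
also_tested_alarm = 37         # of those, also tested conspecific alarm cues
no_alarm_response = 17         # of those, no response to alarm cues

# Share of studies in which responses were limited to conspecific diets.
conspecific_only_share = pct(conspecific_only, tested_conspecific_diet)

# Among studies that could confirm strict primary usage, the share that did.
strict_share_when_tested = pct(no_alarm_response, also_tested_alarm)

# Confirmed strict primary usage as a share of all conspecific-diet studies.
strict_share_overall = pct(no_alarm_response, tested_conspecific_diet)

print(conspecific_only_share)    # 36.9
print(strict_share_when_tested)  # 45.9
print(strict_share_overall)      # 4.4
```

On this reading, confirmed strict primary usage accounts for roughly 4 % of the studies that tested a conspecific diet, which is consistent with the conclusion that fully restricting responses to diet cues is rare.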

In contrast, prey may rely on predator diet cues as a secondary source of information to more accurately assess risk and fine tune responses to it. In such cases, use of diet cues may function analogously to using multiple sensory modalities for risk evaluation. Integration between multiple sensory modalities, such as vision and chemoreception, can provide more accurate assessment of risk than either sensory modality in isolation (Munoz and Blumstein 2012). In much the same way, many prey use diet cues in combination with other chemical cues to more accurately detect and evaluate risk (Jones and Paszkowski 1997, Brown et al. 2000b, Brodin et al. 2006, Bourdeau 2010b). In this scenario, prey react to predators regardless of diet, but react more strongly to diets which indicate increased risk. For example, gray tree frog tadpoles H. versicolor responded to alarm cues from crushed prey, but reacted more strongly to consumed prey, with graded responses proportional to phylogenetic distances between the consumed and responding prey species (Schoeppner and Relyea 2005). This suggests tadpoles interpret crushed conspecifics as an indication of predation risk, but consumed conspecifics as a more reliable cue because the source of prey mortality can be definitively attributed to predators. In addition, the degree of similarity between the current meal and the responding prey presumably reflects the current preference for the responding prey indicating increased risk. Using diet cues as a secondary source of information may represent a compromise by prey which allows them to gain benefits from more fine-tuned anti-predator responses without suffering the costs of failing to respond to dangerous predators which have not consumed a particular diet.

Some species use diet cues as primary information required before reacting to predators under some circumstances, but then use diet cues as a secondary information source to fine tune responses to predators in other circumstances. For example, the red-backed salamander P. cinereus forages at night to reduce exposure to its predators that are primarily active during the day. At night, when predator avoidance incurs the greatest costs via loss of time spent foraging, it uses dietary cues as a primary source of information and does not react to predators unless they have eaten conspecifics. During the day, when the salamanders are typically less active and predators most active, they use diet cues as a secondary source of information (Madison et al. 1999). A number of species that use chemical labeling to learn novel predator cues (see Learning and predator labeling section) initially respond only to cues from predators consuming conspecifics, but learn to respond to predators eating other prey after they have been exposed to these predators eating conspecifics and identified them as dangerous (Mathis and Smith 1993; Mirza and Chivers 2001a; Cai et al. 2011). These examples further represent a compromise by species which allows them to maximize benefits and minimize costs of responding to predator diet cues.

Types of prey responses to predator diet cues

Inducible defenses can include any changes in prey response which decrease their chance of consumption by predators, including changes in prey behavior, morphology, physiology, and life history. Response types often vary in lag times, reversibility, and cue sensitivity. Prey may require more reliable cues or cues that indicate a greater level of risk, such as cues from predators eating conspecifics, to produce responses which are more difficult to reverse or which represent a greater energy investment. For example, gray treefrog tadpoles Hyla versicolor produced behavioral responses, but not morphological responses to crushed prey cues. However, they produced stronger behavioral responses and altered their morphology when exposed to predators that had consumed related prey (Schoeppner and Relyea 2005).

Morphological changes in response to predation risk are often more costly to prey and are harder to reverse than are behavioral responses. We hypothesized that more reliable cues, such as those from a predator’s diet, would be required to trigger morphological as opposed to behavioral reactions by prey. Fourteen of the 109 publications reviewed studied both behavioral and morphological responses of prey to predators (Supplementary Table 2). In these studies, behavioral responses, which have short lag times and are easy to reverse, were induced by predator diet cues >70 % of the time and morphological responses, which are more expensive and difficult to reverse, were induced only 40 % of the time. When considering all the reviewed studies (405), diet cues influenced >60 % of tested behavioral responses and 50 % of morphological responses (Table 1), suggesting morphological responses are induced by a more limited range of predator diet cues. For organisms that produce both types of responses, more sensitive behavioral responses may be reduced after morphological responses are turned on to prevent redundancy of defenses. For example, when goldfish Carassius auratus changed to a deep-bodied morph to defend against gape-limited predators, they displayed significantly weaker behavioral responses than shallow-bodied morphs (Chivers et al. 2007). This may reduce the prevalence of behavioral responses and mask the greater sensitivity to various diets. Thus, the small difference in response sensitivity across all studies, together with the large difference in studies that tested both response types, suggests that diet cues may provide a means for prey to ensure more expensive or less flexible responses are produced only when risk is high.

It bears consideration, however, that the literature on predator diet cues strongly focuses on behavioral responses, with 75 % (299) of our 405 studies measuring behavior (Table 1). The next most commonly studied response type, morphological, was studied in <20 % (77) of these interactions, and physiological and life history changes were each the focus of 2 % (8) of these interactions (the remaining 13 interactions examined survival responses, discussed above). Thus, there is limited ability to statistically test the differences noted here, and comparisons between response types, as between systems, are influenced strongly by unbalanced data and publication bias. Additional work is needed regarding these other response types before the value of predator diet cues in inducing these responses or the use of diet cues for secondary induction of more expensive response types can be truly investigated.

Learning and predator labeling

The immediate value of predator diet cues is to label or identify riskier predators (Chivers and Smith 1998). However, the value of responding to diet cues can extend beyond the initial predator encounter if prey use them to learn novel risk cues and respond to these risk cues in subsequent encounters. The cues which label predators are often alarm substances produced by conspecifics that are innately recognized (Batabyal et al. 2014; Manek et al. 2014). Many prey species possess the ability to learn novel cues as dangerous when they are paired with innately recognized alarm cues (e.g., injured conspecific). Prey can learn to recognize cues released by predators (Magurran 1989; Brown and Smith 1996), heterospecifics (Chivers et al. 1995), and even habitats (Mathis and Unger 2012) that are indicative of predation risk. Thus, use of predator diet cues can be valuable for its potential to label current as well as future risk.

Predator labeling was examined in 342 studies and found to occur in 226 of them (65 %, Table 4). For example, fathead minnows P. promelas did not respond to unknown predatory pike E. lucius fed swordtails X. helleri, but did respond when pike were fed conspecific minnows (Mathis and Smith 1993). Similarly, naïve damselflies Enallagma spp. responded to pike fed damselflies or fathead minnows, but not to predators fed mealworms Tenebrio molitor (Chivers et al. 1996). Thirty-four studies directly tested predator diet as a mechanism for learning novel cues, and 31 (>90 %, Table 4) of them found diet to be a significant factor used by prey for learning predator cues. Additionally, 24 (~90 %) of 27 studies found learning via diet cues was necessary for prey to produce any responses at all to predator cues in the absence of alarm cues. For example, minnows and damselflies respond to swordtail and mealworm diets, respectively, only when they have previously experienced predators fed “dangerous” diets containing conspecifics (Chivers et al. 1996).
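The proportions in this paragraph follow from simple ratios of the study counts. The sketch below recomputes them as an illustration only: the counts are those quoted in the text, while the labels and the helper function `percent` are hypothetical names introduced here, and the results may differ by about a percentage point from the rounded values in the text.

```python
# (label, studies showing the effect, studies that tested it), as quoted in the text.
tallies = [
    ("predator labeling", 226, 342),
    ("diet as a mechanism for learning novel cues", 31, 34),
    ("learning required for any response without alarm cues", 24, 27),
]


def percent(hits, tested):
    """Return the percentage of tested studies in which the effect was found."""
    return 100 * hits / tested


for label, hits, tested in tallies:
    print(f"{label}: {hits} of {tested} ({percent(hits, tested):.1f} %)")
```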

Some prey can learn the alarm cues of heterospecifics when they are present in the diet of predators along with those of conspecifics or when the predator has previously been labeled as dangerous. Minnows responded to the alarm cue of brook sticklebacks C. inconstans, a heterospecific prey, after exposure to perch fed minnows and sticklebacks, but not when perch had been fed sticklebacks and swordtails (Mirza and Chivers 2001b; Chivers et al. 2002). Learning of heterospecific alarm cues may provide value for responses to diet cues produced by generalist predators. Although the immediate labeling of the predator may not provide information about the risk it presents, overlap between conspecific and heterospecific cues is unlikely in the diets of specialist predators. Thus, it may be valuable for prey to respond to generalist predator diets if it allows prey to learn novel heterospecific alarm cues and identify a larger range of dangerous situations in the future.

Biotic gradients

Inducible defenses represent a balance of defensive cost against survival benefits. This balance occurs against a backdrop of ecological factors which influence the relative costs and benefits prey experience when determining when to produce defenses. The value of cues, such as predator diet cues, and their use are often influenced by alteration of defense costs and benefits. Generally speaking, prey should make use of diet cues at intermediate risk levels, when risk is great enough to justify reaction to predation cues (Laurila et al. 1997, 1998), but is not so high as to justify strong responses to all risk cues (Madison et al. 1999; Jacobsen and Stabell 1999; Vilhuen and Hirvonen 2003; Ferrari et al. 2010). The role of predator diet cues in relation to other pressures on prey, such as variation in resources, competition, abiotic conditions, and temporal variation in predation risk, has received limited attention.

Prey susceptibility to predation influences the cost:benefit ratio of inducible defenses as well as the use of diet cues for risk assessment. Species that possess alternate defenses make less use of predator diet cues because they have reduced sensitivity to predation cues in general (Laurila et al. 1997, 1998; Chivers et al. 2007). Increased use of diet cues occurs among smaller individuals that are more susceptible to predators (Bronmark and Pettersson 1994; Levri 1998). Greater abundances of individuals can also decrease predation risk through the dilution effect, resulting in increased vigilance and decreased individual risk (Foster and Treherne 1981; Turner and Pitcher 1986). For example, Arctic charr S. alpinus tested in schools were found to respond to brown trout S. trutta only when trout were fed charr (Hirvonen et al. 2000), but to respond to trout regardless of diet when tested as solitary individuals (Vilhuen and Hirvonen 2003).

Resource availability can alter the value of energy allocated to defenses, causing prey responses to predator diet cues to wane as prey hunger level increases (Laurila et al. 1998; Brown and Cowan 2000; Bell et al. 2006). Competition may also alter resource availability and prey responses to predator diet cues. Some organisms can evaluate the potential risk from predators and competitors and adjust their behavior and morphology so as to react to the greatest threat (Relyea 2000). Toad tadpoles B. bufo react significantly less to predation risk than R. temporaria tadpoles, and Laurila et al. (1997) attributed this finding to high natural densities of toads resulting in intense competition for resources at this life stage. In contrast, Kiesecker et al. (2002) suggest red-legged tadpoles R. aurora use diet cues and alter their life history only in response to conspecific diet treatments because consumption of heterospecifics indicates reduced predation risk and competition.

The benefits of defending, and the costs prey are willing to incur to achieve them, are altered by the degree of risk a predator presents. Prey species utilize diet cues when predators are less aggressive or at times when they are less active, even when they respond equally to all predation cues under riskier conditions (Madison et al. 1999; Jacobsen and Stabell 1999; Vilhuen and Hirvonen 2003; Ferrari et al. 2010). Additionally, R. dalmatina tadpoles respond more strongly to newts T. vulgaris which have consumed greater densities of prey, possibly due to greater quantities of cue produced, suggesting newts pose a risk proportional to tadpole consumption (Hettyey et al. 2010). Ambient predation pressure is also known to influence prey use of predation cues generally (Large and Smee 2012) and likely influences use of diet cues in a similar manner, with less selective responses seen when pressure is greater. The relationship between ambient predation pressure and prey use of predator diet cues requires further investigation.

Evolutionary history and invasive species

Using predator diet cues to learn novel cues can be especially beneficial under conditions of shifting predator landscapes, such as the presence of invasive or exotic predators (Grostal and Dicke 2000; Cai et al. 2011; Roberts and de Leaniz 2011). For innate recognition of predation cues to occur, a shared history between the predator and prey species is needed to allow time for the recognition to develop through selection (Mathis et al. 1993; Chivers et al. 1995; Chivers and Mirza 2001; Schoeppner and Relyea 2009). Local predators may be recognized as dangerous regardless of diet, whereas prey may respond to novel species only when diet cues indicate they are a threat (Mathis and Smith 1993; Stabell et al. 2003; Marquis et al. 2004; Dixson et al. 2012; Rosell et al. 2013). For example, when nine species of tadpole were tested for responses to predator diet cues of native dragonfly Aeshna spp. and invasive crayfish P. clarkii predators, eight species responded to dragonflies regardless of their diet whereas five of nine responded to crayfish only when crayfish consumed prey conspecifics (Nunes et al. 2013). However, novel cues in general are often treated with caution (Crawford et al. 2012; Brown et al. 2013), and this response is likely to be heightened by the presence of diet cues recognized as dangerous (Brown et al. 2000b). Such a response to predator diet cues would be adaptive for species and communities faced with species invasion (Cai et al. 2011). Conversely, exotic prey species may benefit and be able to successfully colonize new habitats if they can recognize new predatory threats in their invasive range. Evolutionary history may diminish in importance for responses to diet cues over the course of an invasion as prey are selected for the ability to detect the new predator or learn to do so. In some cases, prey may even react more strongly to the invasive species over time if it poses a greater threat than native predators (Large and Smee 2010; Large et al. 2012).

Dietary differences in predator cues

In addition to studies assessing whether prey could distinguish between predators eating conspecifics versus an alternate diet, three other aspects of changes in prey responses to predator diet were reviewed. These investigations determined: (1) whether diet reflected the hunger state of a predator and indicated whether the predator was more or less dangerous; (2) whether prey could generally distinguish between types of consumer (e.g., carnivore vs. herbivore); and (3) whether related prey in a predator’s diet yielded stronger reactions and reflected greater risk (Table 6).

Hunger state of predators

A hungry predator may pose a greater threat than one that has recently eaten, but often, prey are less responsive or do not respond at all to predators that are starved. In some instances, prey can distinguish between satiated and starved predators or demonstrate a graded response based upon both the content of a predator’s last meal and the time since it last ate. We found four scenarios regarding prey reactions to predators based upon hunger state: (1) prey do not differentiate between fed and starved predators, reacting similarly to both (Saglio and Mandrillon 2006; Nunes et al. 2013); (2) prey react more strongly to starved predators (Licht 1989; Bell et al. 2006; Shin et al. 2009; Nunes et al. 2013); (3) prey react to both starved and satiated predators, but react more strongly to predators that had eaten conspecifics (Brown et al. 2000b; Brown and Schwarzbauer 2001; Vilhuen and Hirvonen 2003; Schoeppner and Relyea 2005); and (4) prey do not react to starved predators (Jachner 1997; Yamada et al. 1998; Madison et al. 1999, 2002; Brown et al. 2001; van Buskirk and Arioli 2002; Marquis et al. 2004; Saglio and Mandrillon 2006; Smee and Weissburg 2006a; Griffiths and Richardson 2006; Mortensen and Richardson 2008; Kesavaraju and Juliano 2010; Large and Smee 2010; Morishita and Barreto 2011; Mogali et al. 2012; Nunes et al. 2013).

Hungry predators are often more motivated and dangerous (Jachner 1997; Bell et al. 2006). Presumably, prey that can differentiate between satiated and hungry predators benefit by responding more strongly to more dangerous hungry predators. However, 36 studies investigated predator hunger and its effects on prey responses, and 16 of these contained at least one prey species that failed to react to a starved predator (Table 6). This finding may seem counterintuitive, but it likely results from an inability of prey to detect starved predators because they release fewer exudates than those recently fed (Large and Smee 2010). For predators, decreased cue production could increase their success as their need to find a meal increases. Under these circumstances, responses to injured prey cues may be an effective strategy for prey in allowing nearby conspecifics to detect the hungry foragers despite their reduced cue production, but only after the foragers have succeeded in acquiring a meal (Smee and Weissburg 2006a; Morishita and Barreto 2011).

Dietary classifications

Most studies of predator diet investigate changes in predator diet from species X to species Y, but 31 interactions tested changes in diet at a larger scale (i.e., changes in dietary classification, such as from carnivore to herbivore) (Table 6). Utilizing such diet cues could allow prey to identify potential predators by their general dietary classification (i.e., carnivorous, piscivorous, insectivorous), without the need to recognize unique compounds for each predator or to have prior experience with a novel predator. Two publications investigated the ability of mammals to recognize carnivorous predators when they were consuming a diet lacking meat. Four species of rodents fed significantly more from bowls marked with urine of coyotes Canis latrans fed cantaloupe than from bowls marked with urine of coyotes fed meat (Nolte et al. 1994). Chemical analysis suggested rodents reacted to sulfurous compounds resulting from the consumption of meat and that sulfurous compounds may be a general diet cue utilized by a number of species to identify carnivorous predators (Nolte et al. 1994). Similarly, predator-naïve mouse lemurs Microcebus murinus avoided areas at which they were conditioned to expect a reward when the area was marked with the feces of native or introduced predators, but not when the feces were of a non-predator, indicating an innate ability of mouse lemurs to recognize metabolites resulting from the digestion of meat (Sundermann et al. 2008).

Habitat selection by prey may also be affected by the presence of predator diet cues. Juvenile anemonefish A. percula responded to naturally non-piscivorous fish fed a piscivorous diet, but not an invertebrate diet (Dixson et al. 2012). In the field, coral reefs manipulated to release piscivorous diet cues saw significantly fewer fish recruits compared to those releasing cues from invertebrate-fed fish and un-manipulated control patches. These results indicate that reef fish use large-scale diet cues to quickly recognize new predators after settlement on the reef. Such large-scale cues may be especially valuable in habitats, such as coral reefs, where the predator base is too diverse to learn individual predator cues and where prey must quickly learn to recognize predators, as reef fish must in the post-settlement stage when mortality is very high (Dixson et al. 2012).

These findings are not universal, however, as C. carassius altered their morphology only when predators were consuming prey conspecifics and not simply in response to a piscivorous diet (Stabell and Lwin 1997). This result may be explained by the higher costs of altered morphology (compared to behavioral changes), which can require a more accurate indication of predation risk (see section on Types of prey responses). Regardless, general diet cues may provide an important source of information on predation risk for many prey species, but this has not been thoroughly investigated. In addition, many studies which are not necessarily focused on general diet cues, but which choose vastly unrelated diets for the predators producing the cues, may inadvertently provide information on the use of general diet cues by prey species (Hirvonen et al. 2000; van Buskirk and Arioli 2002).

Relationship

The relationship between prey consumed by the predator (the consumed prey) and other prey organisms detecting the predator (the responding prey) often influences how predator diet affects prey responses. Relationships between prey organisms can be through relatedness via shared evolutionary history or an ecological relationship in which prey live in the same habitat and are preyed upon by the same predator(s). Evolutionary relationships depend on prey responding more strongly to the consumption of other prey when phylogenetic distances between the two organisms are shorter. Prey that are more closely related may produce similar alarm cues or cause similar compounds to be present in predator excretions, which can lead to effects of predator diet on prey responses (Chivers and Smith 1998). This is commonly seen within the superorder Ostariophysi. The alarm cue for this group, an unknown compound or group of compounds referred to as Schreckstoff, appears to be highly conserved (Brown et al. 2000b, 2003). For this reason, species in this superorder are often used for investigations of diet cues with swordtails, a non-ostariophysan species, as a control diet. The level of evolutionary relationship prey can detect varies among interactions and environments, and some prey can differentiate between predators eating the same species from different populations, such as the salamander P. cinereus (Sullivan et al. 2005).

Knowing if an evolutionarily unrelated, but sympatric organism has been eaten by a predator may provide valuable information regarding predation risk in situations where unrelated prey organisms live in the same habitat and are eaten by the same predators. Selection for the ability of prey to detect consumption of ecologically related species [i.e., species that live in the same habitat and are vulnerable to the same predator(s)] depends on the value of responding to the consumption of other organisms with which prey share a habitat, a predator, and/or a trophic level. Such value can be influenced by factors such as species density, overlap in ontogeny, and competitive interactions (Laurila et al. 1997; Huryn and Chivers 1999). Fewer studies tested ecological relationships as compared to evolutionary relationships, but 19 investigations were found that tested prey responses to predators consuming unrelated, but co-occurring species, and in 17 (89.5 %) prey reactions to predators were stronger when predators consumed co-occurring species compared to control and unrelated diet treatments (Table 6). For example, red-backed salamanders P. cinereus and dusky salamanders Desmognathus ochrophaeus both share habitat with the two-lined salamander Eurycea bislineata, but do not overlap each other anywhere in their ranges. Both red-backed and dusky salamanders responded to garter snakes T. sirtalis fed conspecifics or sympatric two-lined salamanders, but not to snakes fed the third, allopatric salamander species (Sullivan et al. 2004, 2005). Similarly, predatory mites N. cucumeris responded to the heteropteran bug O. laevigatus only when O. laevigatus had been consuming a shared prey, the western flower thrips Frankliniella occidentalis (Magalhaes et al. 2004). The authors suggest responses are seen to the consumption of thrips and not prey conspecifics because N. cucumeris encounter O. laevigatus when they are both consuming thrips, suggesting the ecological relationship between bugs and thrips is more important than the evolutionary relationship between conspecifics.

Schoeppner and Relyea (2009) investigated the relative importance of these two types of relationships in inducing responses to predator diet cues. They compared responses of gray tree frog tadpoles H. versicolor to dragonfly predators fed one of seven diets of co-occurring species along a gradient of evolutionary relatedness and a single species of closely related, but allopatric prey. Although gray tree frog tadpoles H. versicolor react to predators that have eaten co-occurring species, their responses to predators fed evolutionarily related organisms were stronger.

Other factors in predator diet affecting prey responses

Life history may be an important diet factor for many species whose predator base changes with life stage by providing an avenue to learn new predators quickly and avoid responding to predators which no longer pose a threat. For example, juvenile western toads B. boreas react to red-spotted garter snakes Thamnophis sirtalis fed juvenile, but not larval toads (Belden et al. 2000). Belden et al. (2000) was the only study reviewed that investigated the ability of prey to recognize life stage differences in predator diet. Besides life stage, prey morphology may influence prey reactions. Prey may have different morphologies and may react differently to predators depending upon the morphology of the conspecifics consumed (Brown et al. 2004). For example, goldfish C. auratus react to gape-limited pike predators E. lucius by growing a deeper body to reduce their likelihood of being consumed. Chivers et al. (2007) tested responses of goldfish to pike that had eaten either a deep-bodied type or a shallow-bodied type without the morphological response. Goldfish behavioral responses were strongest to pike that had eaten goldfish with the same body type.

Rana dalmatina tadpoles responded more strongly to predators fed greater densities of conspecifics (Hettyey et al. 2010). The authors suggested the density of consumed prey potentially indicated the age or available prey options of predatory newts, as newts are more dangerous when fully grown or when lacking alternate prey. Similar results were found for pool frog tadpoles R. lessonae reacting to dragonfly predators (van Buskirk and Arioli 2002). However, this finding may be an issue of quantitative cue concentration rather than qualitative diet differences as predators consuming more food may simply exude more cues for prey to detect. Nonetheless, predators regularly consuming greater quantities of prey and producing greater quantities of cue are likely more dangerous, and more intense responses to these differences by prey are likely adaptive.

Some species can act as predator and prey when individuals practice cannibalism, presenting a unique situation as the cues produced by predators may be the same as those produced by damaged or consumed prey. Larvae of the long-toed salamander Ambystoma macrodactylum have been used in several studies of the role of predator diet cues in cannibalistic systems, as the species produces varying numbers of a cannibalistic morph depending on environmental cues which makes cannibalistic predation pressure unpredictable (Wildy and Blaustein 2001). The ability to differentiate between typical and cannibalistic morphs and to avoid the latter is therefore an advantage for individuals of this species. The salamanders are able to differentiate and respond accordingly to cannibalistic morphs, with stronger responses to cannibals fed conspecifics (Chivers et al. 1997), although individuals respond to salamanders which have been consuming conspecifics regardless of morph (Wildy et al. 1999). These responses are likely learned as naïve salamanders respond only to cannibals consuming conspecifics while older, experienced individuals respond to cannibals regardless of diet (Wildy and Blaustein 2001).

Nature of diet cues

The inability to identify the chemical compound(s) modulating prey reactions to predator diet cues limits much of the work in this area of chemical ecology. Unlike plant chemical defenses, diet cues are often waterborne, present at low concentrations, and likely to be a blend of primary metabolites rather than a single secondary metabolite, making their identification and quantification challenging (Weissburg et al. 2002). These methodological limitations make it difficult to ascertain how alarm and predator cues work together and whether concentration or the chemical make-up of the cue(s) is responsible for differences in prey responses observed. Yet, by manipulating predator diet, a better understanding of the role of diet cues in modulating prey responses can be obtained.

Prey may utilize cues emanating from two sources to determine predation risk: cues from injured prey (e.g., alarm cues) and cues from the predators themselves. Predator diet cues may contain both of these types of cues, either from active predation, which mingles alarm cues in the environment with predator exudates, or from the presence of alarm cues released in predator waste products, referred to here as digestive cues. The combination of both cues may inherently indicate an elevated risk by suggesting a predator is in the area and has already harmed or consumed other prey organisms. The greater reliability of combinations of risk cues could result in concomitant increases in prey responses. Alternatively, there may simply be a larger quantity of risk cues present, creating a situation in which prey are more likely to detect the cues and/or to detect greater cue concentrations. Prey may respond more strongly to greater concentrations of predation cues and, thus, an increase in cue concentration alone may explain the greater responses seen to diet cues (Weissburg et al. 2014). These possibilities are not mutually exclusive, and both may influence prey responses simultaneously.

If prey respond to the quality, rather than the quantity, of predatory diet cues, there are three possible sources for digestive dietary cues: (1) alarm cues are released unchanged in predator waste products, (2) digestion of prey changes the alarm cue in a manner that reflects that a prey was eaten, or (3) a novel cue is excreted by the predator after consuming a particular prey item. For the first possibility, prey alarm substances may be conserved during digestion, meaning prey are receiving alarm cues indirectly alongside predator cues post-consumption in essentially the same undigested form as they would during the attack. For example, nudibranchs Aeolidia papillosa which have fed on sea anemones contain the anemone alarm substance anthopleurine (Howe and Harris 1978). In the second case, the alarm cue of the prey would be altered by digestion to indicate the prey organism has been consumed. Daphnia spp. do not respond to either predator or alarm cues alone or in combination unless the alarm cues have been consumed by predators or homogenized with enzyme-rich tissue from the predator intestines or liver (Stabell et al. 2003). The final possible source of dietary information is completely novel cues produced during digestion which are in no way related to prey alarm cues, but indicate what a predator has eaten. Although a limited selection of studies, such as those presented here, lend support for one cue source or another, our understanding of the nature of cue sources is restricted by our limited understanding of predation cues at the molecular level. Further chemical analysis of these cues will be necessary to distinguish which of these sources produces predator diet cues. It is possible that sources differ between systems and are not mutually exclusive.

Predator counterresponses

Predator labeling may act as a selective force on predators to evolve counterresponses that may minimize the usefulness of diet cues to prey. In their review of alarm signaling in aquatic systems, Chivers and Smith (1998) suggest several ways in which predators may circumvent labeling by dietary cues, including breakdown of alarm cues in the digestive tract of predators. Our search did not find any studies empirically testing these possibilities, perhaps because of challenges associated with identifying waterborne chemical cues. However, behavioral counterresponses have been demonstrated in northern pike E. lucius which practice localized defecation. Pike readily consume fathead minnows P. promelas (Brown et al. 1995, 1996), and pike feeding on minnows defecated at the end of the tank farthest away from their “home range” where cover was provided and their ambush foraging strategy was possible. Pike defecated all around the tank when feeding on mice Mus musculus or swordtails X. helleri that do not cause predator labeling (Brown et al. 1996). The elapid snake Hemachatus haemachatus also used this strategy and defecated away from its retreat when preying on striped mice Rhabdomys pumilio (Pillay et al. 2003). These results suggest such selective pressures do exist and further study will be needed to determine whether physiological counter-adaptations also exist.

Future directions

Prey response to predation risk can affect entire food webs, often generating trophic cascades that occur without changes in the density of predators or prey (reviewed by Preisser et al. 2005, 2007; Weissburg et al. 2014). Understanding the mechanisms by which prey detect, evaluate, and respond to risk is necessary to more accurately predict and model nonlethal predator effects in food webs as well as to enhance understanding of context-dependent predator–prey interactions. To conclude our review, we offer several areas for future research that we believe will yield considerable insights into the nature and role of predator diet cues. The list is not exhaustive, but these approaches should clarify how predator diet affects prey responses.

There is a clear need to identify the chemical cues in predator diets that modulate prey responses. This will enhance understanding of the value of diet cues by providing insights into areas such as the conservation of risk cues across taxa, the role of cue quantity versus quality, temporal variation in risk, and the selective role of diet cues from a predator perspective. Although the specific identity of chemical structures will ultimately be necessary, many authors can assist in preliminary determination of the relative roles of alarm cues and direct predator cues by carefully considering predator diet in studies of inducible defenses and by explicitly describing predator treatments in detail. Not all studies can manipulate predator diet, but many fail to mention how predators were maintained [i.e., what they were fed, how much, and how often, and whether prey were exposed to active predation or simply to digestive cues; refer to Weissburg et al. (2014) for discussion]. Reporting these details will allow for general assessment of prey responses to alarm versus predator cues and the role of cue combination, as is seen with active predation events, versus cue digestion and release by predators. Further, prey diet should also be quantified because it may influence prey reactions and susceptibility to risk (Brooker et al. 2015).

Inducible prey defenses have been well studied, but this body of work has been biased towards freshwater species and studies on changes in prey behavior. Researchers need to investigate the role of diet cues in different habitat types and taxa, across different response types, and to differences in predator diet. The studies reviewed here showed a strong bias for studies in freshwater environments, testing behavioral responses, and focusing on relationship differences in predator diets. Over half (210) of the 405 studies reviewed here investigated behavioral responses in freshwater systems (Table 7). All reviewed life history responses were studied in freshwater environments and only two response types (behavior and survival) were measured in terrestrial environments. A large number of studies were performed with particular model species (31 studies performed with fathead minnows P. promelas and 121 on anurans, Supplementary Table 2). A broader testing of diet cues in other predator–prey interactions in marine and terrestrial habitats will provide important information on trends in the use of predator diet cues and the value and costs associated with detecting and responding to the information they provide.

Of the 405 experimental studies of predator diet cues reviewed here, only 8 were conducted in the field (Table 3). Although 5 (>60 %) found a significant effect of predator diet, many more such studies will be needed to determine the importance of patterns detected under laboratory conditions in nature. In addition, laboratory studies should work to increase the complexity of study design to incorporate factors which are potentially important under natural conditions. For example, very few studies have investigated mixed predator diets consisting of more than one prey species. Only 9 of 203 reviewed investigations contained treatments where predators fed on more than one species (Table 6). Further, only 2 of these studies explicitly tested mixed predator diets (Brown and Zachar 2002; Hoefler et al. 2012), while 4 had a single mixed diet treatment (Levri 1998; Huryn and Chivers 1999; Mirza and Chivers 2001a, b; Large et al. 2012) and 3 tested mixed diets by changing predator diets and testing the effects of previous diet (Murray and Jenkins 1999; Stabell et al. 2003; Meng et al. 2006). While feeding predators a single species may be realistic when predators are specialists or switch seasonally between prey species, under natural circumstances, many prey will encounter predators that have recently consumed multiple species of prey. The results of these studies suggest predator labeling occurs when other prey species are present, but the extent of their applicability is limited until more studies are completed. Lab studies should also investigate the role of factors such as environmental conditions and habitat complexity, which have been shown to influence induced defenses.

Finally, the costs of responding to diet cues need to be quantified in terms of growth, fecundity, and provisioning of offspring, both for prey and for a prey’s offspring. Although many responses have been demonstrated to be costly and to produce survival benefits, it is rare for researchers to explicitly test the effects of altering general responses to predation cues based on dietary information. This can be further complicated if variation in the risk presented by predators (for example, hungry predators are more motivated) causes shifts in prey survival that mask the effectiveness of more finely tuned responses.

References

Alexander GJ (1996) Thermal physiology of Hemachatus haemachatus and its implications to range limitation. PhD thesis, University of the Witwatersrand, Johannesburg, South Africa

Baldwin IT (1998) Jasmonate-induced responses are costly but benefit plants under attack in native populations. Proc Natl Acad Sci 95:8113–8118

Batabyal A, Gosavi S, Gramapurohit N (2014) Determining sensitive stages for learning to detect predators in larval bronzed frogs: importance of alarm cues in learning. J Biosci 39:701–710

Belden LK, Wildy EL, Hatch AC, Blaustein AR (2000) Juvenile western toads, Bufo boreas, avoid chemical cues of snakes fed juvenile, but not larval, conspecifics. Anim Behav 59:871–875

Bell RD, Rypstra AL, Persons MH (2006) The effect of predator hunger on chemically mediated antipredator responses and survival in the wolf spider Pardosa milvina (Araneae: Lycosidae). Ethology 112:903–910

Bourdeau PE (2010a) An inducible morphological defence is a passive by-product of behaviour in a marine snail. Proc R Soc B 277:455–462

Bourdeau PE (2010b) Cue reliability, risk sensitivity and inducible morphological defense in a marine snail. Oecologia 162:987–994

Brodin T, Mikolajewski DJ, Johansson F (2006) Behavioural and life history effects of predator diet cues during ontogeny in damselfly larvae. Oecologia 148:162–169

Bronmark C, Pettersson LB (1994) Chemical cues from piscivores induce a change in morphology in crucian carp. Oikos 70:396–402

Brooker RM, Munday PL, Chivers DP, Jones GP (2015) You are what you eat: diet-induced chemical crypsis in a coral-feeding reef fish. Proc R Soc B 282:20141887

Brown GE, Cowan J (2000) Foraging trade-offs and predator inspection in an ostariophysan fish: switching from chemical to visual cues. Behaviour 137:181–195

Brown GE, Godin J (1999) Who dares, learns: chemical inspection behaviour and acquired predator recognition in a characin fish. Anim Behav 57:475–481

Brown GE, Schwarzbauer EM (2001) Chemical predator inspection and attack cone avoidance in a characin fish: the effects of predator diet. Behaviour 138:727–739

Brown GE, Smith RJF (1996) Foraging trade-offs in fathead minnows (Pimephales promelas, Osteichthyes, Cyprinidae): acquired predator recognition in the absence of an alarm response. Ethology 102:776–785

Brown GE, Zachar MM (2002) Chemical predator inspection in a characin fish (Hemigrammus erythrozonus, Characidae, Ostariophysi): the effects of mixed predator diets. Ethology 108:451–461

Brown GE, Chivers DP, Smith RJF (1995) Localized defecation by pike: a response to labelling by cyprinid alarm pheromone? Behav Ecol Sociobiol 36:105–110

Brown GE, Chivers DP, Smith RJF (1996) Effects of diet on localized defecation by northern pike, Esox lucius. J Chem Ecol 22:467–475

Brown GE, Adrian JC, Smyth E, Leet H, Brennan S (2000a) Ostariophysan alarm pheromones: laboratory and field tests of the functional significance of nitrogen oxides. J Chem Ecol 26:139–154

Brown GE, Paige J, Godin J (2000b) Chemically mediated predator inspection behaviour in the absence of predator visual cues by a characin fish. Anim Behav 60:315–321

Brown GE, Golub JL, Plata DL (2001) Attack cone avoidance during predator inspection visits by wild finescale dace (Phoxinus neogaeus): the effects of predator diet. J Chem Ecol 27:1657–1666

Brown GE, Adrian JC, Naderi NT, Harvey MC, Kelly JM (2003) Nitrogen oxides elicit antipredator responses in juvenile channel catfish, but not in convict cichlids or rainbow trout: conservation of the ostariophysan alarm pheromone. J Chem Ecol 29:1781–1796

Brown GE, Foam PE, Cowell HE, Fiore PG, Chivers DP (2004) Production of chemical alarm cues in convict cichlids: the effects of diet, body condition and ontogeny. Ann Zool Fenn 41:487–499

Brown GE, Ferrari MCO, Elvidge CK, Ramnarine I, Chivers DP (2013) Phenotypically plastic neophobia: a response to variable predation risk. Proc R Soc B 280:20122712

Bryan JE, Larkin PA (1972) Food specialization by individual trout. J Fish Res Board Can 29:1615–1624

Cai F, Wu Z, He N, Wang Z, Huang C (2011) Can native species crucian carp Carassius auratus recognizes the introduced red swamp crayfish Procambarus clarkii? Curr Zool 57:330–339

Chivers DP, Mirza RS (2001) Predator diet cues and the assessment of predation risk by aquatic vertebrates: a review and prospectus. Chem Signals Vertebr 9:277–284

Chivers DP, Smith RJF (1998) Chemical alarm signalling in aquatic predator–prey systems: a review and prospectus. Ecoscience 5:338–352

Chivers DP, Brown GE, Smith RJF (1995) Acquired recognition of chemical stimuli from Pike, Esox lucius, by Brook Sticklebacks, Culaea inconstans (Osteichthyes, Gasterosteidae). Ethology 99:234–242

Chivers DP, Wisenden BD, Smith RJF (1996) Damselfly larvae learn to recognize predators from chemical cues in the predator’s diet. Anim Behav 52:315–320

Chivers DP, Wildy EL, Blaustein AR (1997) Eastern long-toed salamander (Ambystoma macrodactylum columbianum) larvae recognize cannibalistic conspecifics. Ethology 103:187–197

Chivers DP, Mirza RS, Johnston JG (2002) Learned recognition of heterospecific alarm cues enhances survival during encounters with predators. Behaviour 139:929–938

Chivers DP, Zhao X, Ferrari MCO (2007) Linking morphological and behavioural defences: prey fish detect the morphology of conspecifics in the odour signature of their predators. Ethology 113:733–739

Covich AP, Crowl TA (1990) Predator-induced life history shifts in a fresh-water snail. Science 246:949–951

Crawford BA, Hickman CR, Luhring TM (2012) Testing the threat-sensitive hypothesis with predator familiarity and dietary specificity. Ethology 118:41–48

Dawkins R, Krebs JR (1979) Arms races between and within species. Proc R Soc Lond B 205:489–511

Dixson DL, Pratchett MS, Munday PL (2012) Reef fishes innately distinguish predators based on olfactory cues associated with recent prey items rather than individual species. Anim Behav 84:45–51

Ferrari MCO, Brown MR, Pollock MS, Chivers DP (2007) The paradox of risk assessment: comparing responses of fathead minnows to capture-released and diet-released alarm cues from two different predators. Chemoecology 17:157–161

Ferrari MCO, Wisenden BD, Chivers DP (2010) Chemical ecology of predator–prey interactions in aquatic ecosystems: a review and prospectus. Can J Zool 88:698–724

Flynn AM, Smee DL (2010) Behavioral plasticity of the soft-shell clam, Mya arenaria (L.), in the presence of predators increases survival in the field. J Exp Mar Biol Ecol 383:32–38

Foster WA, Treherne JE (1981) Evidence for the dilution effect in the selfish herd from fish predation on a marine insect. Nature 293:466–467

Fraser DF, Gilliam JF (1992) Nonlethal impacts of predator invasion: facultative suppression of growth and reproduction. Ecology 73:959–970

Greene HW (1997) Snakes: the evolution of mystery in nature. University of California Press, Berkeley

Griffiths CL, Richardson CA (2006) Chemically induced predator avoidance behaviour in the burrowing bivalve Macoma balthica. J Exp Mar Biol Ecol 331:91–98

Grostal P, Dicke M (2000) Recognising one’s enemies: a functional approach to risk assessment by prey. Behav Ecol Sociobiol 47:258–264

Hay ME (2009) Marine chemical ecology: chemical signals and cues structure marine populations, communities, and ecosystems. Ann Rev Mar Sci 1:193–212

Hettyey A, Zsarnoczai S, Vincze K, Hoi H, Laurila A (2010) Interactions between the information content of different chemical cues affect induced defences in tadpoles. Oikos 119:1814–1822

Hews DK (1988) Alarm response in larval western toads, Bufo boreas: release of larval chemicals by a natural predator and its effects on predator capture efficiency. Anim Behav 36:125–133

Hirvonen H, Ranta E, Piironen J, Laurila A, Peuhkuri N (2000) Behavioural responses of naïve Arctic charr young to chemical cues from salmonid and non-salmonid fish. Oikos 88:191–199

Hoefler CD, Durso LC, McIntyre KD (2012) Chemical-mediated predator avoidance in the European house cricket (Acheta domesticus) is modulated by predator diet. Ethology 118:431–437

Howe NR, Harris LG (1978) Transfer of the sea anemone pheromone, Anthopleurine, by the nudibranch Aeolidia papillosa. J Chem Ecol 4:551–561

Huryn AD, Chivers DP (1999) Contrasting behavioral responses by detritivorous and predatory mayflies to chemicals released by injured conspecifics and their predators. J Chem Ecol 25:2729–2740

Jachner A (1997) The response of bleak to predator odour of unfed and recently fed pike. J Fish Biol 50:878–886

Jackson RR, Li D (2004) One-encounter search-image formation by araneophagic spiders. Anim Cogn 7:247–254

Jacobsen HP, Stabell OB (1999) Predator-induced alarm responses in the common periwinkle, Littorina littorea: dependence on season, light conditions, and chemical labelling of predators. Mar Biol 134:551–557

Jacobsen HP, Stabell OB (2004) Antipredator behaviour mediated by chemical cues: the role of conspecific alarm signalling and predator labelling in the avoidance response of a marine gastropod. Oikos 104:43–50

Jones HM, Paszkowski CA (1997) Effects of exposure to predatory cues on territorial behaviour of male fathead minnows. Environ Biol Fishes 49:97–109

Kats LB, Dill LM (1998) The scent of death: chemosensory assessment of predation risk by prey animals. Ecoscience 5:361–394

Kesavaraju B, Juliano SA (2010) Nature of predation risk cues in container systems: mosquito responses to solid residues from predation. Ann Entomol Soc Am 103:1038–1045

Kiesecker JM, Chivers DP, Anderson M, Blaustein AR (2002) Effect of predator diet on life history shifts of red-legged frogs, Rana aurora. J Chem Ecol 28:1007–1015

Large SI, Smee DL (2010) Type and nature of cues used by Nucella lapillus to evaluate predation risk. J Exp Mar Biol Ecol 396:10–17

Large SI, Smee DL (2012) Biogeographic variation in behavioral and morphological responses to predation risk. Oecologia. doi:10.1007/s00442-012-2450-5

Large SI, Smee DL, Trussell GC (2011) Environmental conditions influence the frequency of prey responses to predation risk. Mar Ecol Prog Ser 422:41–49

Large SI, Torres P, Smee DL (2012) Behavior and morphology of Nucella lapillus influenced by predator type and predator diet. Aquat Biol 16:189–196

Laurila A, Kujasalo J, Ranta E (1997) Different antipredator behavior in two anuran tadpoles: effects of predator diet. Behav Ecol Sociobiol 40:329–336

Laurila A, Kujasalo J, Ranta E (1998) Predator-induced changes in life history in two anuran tadpoles: effects of predator diet. Oikos 83:307–317

Levri EP (1998) Perceived predation risk, parasitism, and the foraging behavior of a freshwater snail (Potamopyrgus antipodarum). Can J Zool 76:1878–1884

Li D, Jackson RR (2005) Influence of diet-related chemical cues from predators on the hatching of egg-carrying spiders. J Chem Ecol 31:333–342

Licht T (1989) Discriminating between hungry and satiated predators: the response of guppies (Poecilia reticulata) from high and low predation sites. Ethology 82:238–243

Lima SL, Dill LM (1990) Behavioral decisions made under the risk of predation: a review and prospectus. Can J Zool 68:619–640

Madison DM, Maerz JC, McDarby JH (1999) Optimization of predator avoidance by salamanders using chemical cues: diet and diel effects. Ethology 105:1073–1086

Madison DM, Sullivan AM, Maerz JC, McDarby JH, Rohr JR (2002) A complex, cross-taxon, chemical releaser of antipredator behavior in amphibians. J Chem Ecol 28:2271–2282

Magalhaes S, Tudorache C, van Maanen R, Sabelis MW, Janssen A (2004) Diet of intraguild predators affects antipredator behavior in intraguild prey. Behav Ecol 16:364–370

Magurran A (1989) Acquired recognition of predator odour in the European minnow (Phoxinus phoxinus). Ethology 82:216–223

Manek AK, Ferrari MCO, Niyogi S, Chivers DP (2014) The interactive effects of multiple stressors on physiological stress responses and club cell investment in fathead minnows. Sci Total Environ 476–477:90–97

Marquis O, Saglio P, Neveu A (2004) Effects of predators and conspecific chemical cues on the swimming activity of Rana temporaria and Bufo bufo tadpoles. Arch Fur Hydrobiol 160:153–170

Mathis A, Smith RJF (1993) Chemical labeling of northern pike (Esox lucius) by the alarm pheromone of fathead minnows (Pimephales promelas). J Chem Ecol 19:1967–1979

Mathis A, Unger S (2012) Learning to avoid dangerous habitat types by aquatic salamanders, Eurycea tynerensis. Ethology 118:57–62

Mathis A, Chivers DP, Smith RJF (1993) Population differences in responses of fathead minnows (Pimephales promelas) to visual and chemical stimuli from predators. Ethology 93:31–40

Melcer T, Chiszar D (1989) Striking prey creates a specific chemical search image in rattlesnakes. Anim Behav 37:477–486

Meng R, Janssen A, Nomikou M, Zhang QW, Sabelis MW (2006) Previous and present diets of mite predators affect antipredator behaviour of whitefly prey. Exp Appl Acarol 38:113–124

Mirza RS, Chivers DP (2001a) Do juvenile yellow perch use diet cues to assess the level of threat posed by intraspecific predators? Behaviour 138:1249–1258