Abstract

In this article, we propose a new source separation method in which the dual-tree complex wavelet transform (DTCWT) and short-time Fourier transform (STFT) algorithms are used sequentially as dual transforms and sparse nonnegative matrix factorization (SNMF) is used to factorize the magnitude spectrum. STFT-based source separation faces issues related to time and frequency resolution because it cannot exactly determine which frequencies exist at what time. Discrete wavelet transform (DWT)-based source separation faces a time-variation-related problem (i.e., a small shift in the time-domain signal causes significant variation in the energy of the wavelet coefficients). To address these issues, we utilize the DTCWT, which comprises two-level trees with different sets of filters and provides additional information for analysis and approximate shift invariance; these properties enable the perfect reconstruction of the time-domain signal. Thus, the time-domain signal is transformed into a set of subband signals in which low- and high-frequency components are isolated. Next, each subband is passed through the STFT and a complex spectrogram is constructed. Then, SNMF is applied to decompose the magnitude part into a weighted linear combination of the trained basis vectors for both sources. Finally, the estimated signals can be obtained through a subband binary ratio mask by applying the inverse STFT (ISTFT) and the inverse DTCWT (IDTCWT). The proposed method is examined on speech separation tasks utilizing the GRID audiovisual and TIMIT corpora. The experimental findings indicate that the proposed approach outperforms the existing methods.

1 Introduction

Source separation (SS) is a procedure for isolating a set of source signals from an observed or mixed signal. Single-channel SS (SCSS) has become important in many real-world applications, such as communication, multimedia, and the cocktail-party problem. Although devices for SCSS have many obvious possible applications in hearing aids or as preprocessors in speech recognition systems, existing devices still show considerable room for improvement in performance. Research on SCSS for speech signals began a few decades ago [14, 20, 36] and continues to be conducted [21]. It is also a broadly examined issue in the machine learning community. Many signal models that consider numerous parameters (e.g., phase, magnitude, amplitude, frequency, energy, and the spectrogram of the speech signal) have been proposed.

S.T. Roweis suggested factorial hidden Markov models (HMMs), which have been incredibly successful for a single speaker [28, 29]. Jang and Lee [13] used a maximum likelihood approach to separate the mixed source signal that is perceived in a single channel. Pearlmutter and Olsson [24] exploited linear program differentiation on overcomplete dictionaries for maximally sparse representations of a speech corpus. Over the last several years, nonnegative matrix factorization (NMF) has become very popular with researchers for the separation of single-channel source signals. NMF was first introduced by Paatero and Tapper [3] and was proposed for use in SS by Lee and Seung [23]. NMF refers to a group of methods for multivariate analysis in which a matrix is decomposed into two other nonnegative matrices according to its components and weights.

Sliding windows and various types of mask-related NMF methods [4] have been used to decompose mixed signal magnitude spectra into weighted combinations of basis vectors for both sources. NMF-based algorithms are used to iteratively optimize a cost function [5]. Discriminative learning in NMF [41] is used to optimize all basis vectors jointly to reconstruct both clean and mixed signals. The magnitude spectrogram of a speech signal is primarily a two-dimensional matrix, and speech signals are typically sparse; thus, sparse NMF (SNMF) is applied to factorize them [9]. SCSS using SNMF was proposed by Schmidt and Olsson [30]. SNMF can learn a sparse representation of data [30] to solve the problem of separating multiple speech sources from a single microphone recording. SNMF enforces sparsity in both the basis matrix and the coefficient matrix. For signal detection, however, sparsity is enforced in only the coefficient matrix [40]. Wang et al. [40] showed that the performance is improved with a suitable choice of basis vectors. Group sparse NMF with divergence and graph regularization [25] has been utilized to separate source signals. Variable sparsity regularization factor-based SNMF [43] has also been used to separate monaural speech signals.

Dictionary learning (DL)-based algorithms [1, 7, 33, 34, 42] are another effective class of methods for model-based SCSS. Sequential discriminative DL (SDDL) was presented in [42], where both the distinctive and similar parts of the signals of different speakers were considered. The authors of [33] constructed a joint dictionary method with a common subdictionary (CJD), in which the common subdictionary was built from atoms that are similar across the identity subdictionaries trained on the source speech signals of each speaker; these similar atoms were then discarded from the identity subdictionaries. In [34], the authors proposed a new optimization function for learning a joint dictionary with multiple identity subdictionaries and a common subdictionary.

Recently, the wavelet transform (WT) algorithm has been utilized in many different fields, for example, speech recognition [8], noise reduction [22, 26], and electrocardiography [32]. The authors of [37] proposed an improved model for separating the mixed speech signals. In this model, the high-frequency components of the signal are rejected to reduce the computation time, and the low-frequency components are separated by using the WT. Specifically, the signal is decomposed by using the discrete wavelet transform (DWT), and the signal coming from the highest 50% of the frequency band is considered noise and is replaced with zero. The DWT does not yield a good estimate of the critical subband decomposition because the high-frequency portion of the signal is fully rejected; hence, the performance of the speech separation process is degraded. The authors of [39] offered a speech enhancement (SE) approach that utilizes the discrete wavelet packet transform (DWPT) and provides adequate information both for analysis and synthesis of the original signal, with a remarkable reduction in computation time. The authors of [11] presented an SE method based on the stationary wavelet transform (SWT) and NMF that overcomes the time-variation-related problem. The authors of [12] offered another SE method with limited redundancy and time-invariant properties, which outperforms conventional methods at low signal-to-noise ratios (SNRs).

Short-time Fourier transform (STFT)-based SS faces problems in time and frequency resolution because it cannot precisely determine which frequencies exist at what time. DWT-based SS [37] faces time-variation-related issues that hamper its separation performance. The DWPT suffers from the shift-variance problem; i.e., small shifts in the input signal can cause large variations in the distribution of energy among coefficients at different levels, causing signal reproduction errors [39]. The SWT introduces redundancy-related problems [11]. Our proposed method can solve all of the above-mentioned issues to a certain extent.

In our work, we propose a dual-transform SS strategy in which the DTCWT is applied to decompose the time-domain signal and produce a set of subband signals. Then, the STFT is applied to each subband signal, to convert each subband signal into the time–frequency domain, and a complex spectrogram is built for each subband signal. Finally, SNMF is applied to the magnitude spectrogram to obtain the weighted basis vectors to be used in the testing phase to separate the speech signals.

The contributions of this paper are briefly listed below:

i. We first use the DTCWT to divide the input signal into small parts in order to separate the low- and high-frequency components. Then, the STFT is applied to each subband signal, which tends to be stationary and to provide a better transformation than other transforms. The sequential use of the DTCWT and STFT improves the separation capability of the model due to the approximate shift invariance and perfect reconstruction capabilities of the DTCWT.

ii. SNMF is applied separately to the magnitude spectrogram of each subband signal to produce the weighted basis vectors that are then used during the testing process. The feature vectors can be more effectively extracted by applying SNMF to each subband signal.

iii. Several WT- and STFT-based separation methods are compared, and our method is found to outperform the previous strategies mentioned in this paper.

The rest of the paper is organized as follows. Section 2 presents a mathematical description of the single-channel speech separation problem. Section 3 provides a brief explanation of the WT and SNMF methods. Section 4 presents the existing SS algorithms. Section 5 presents the details of the proposed algorithm. Section 6 describes the experimental setup and speech database and compares the experimental results. Section 7 concludes the presented work and is followed by the references. The nomenclature is provided in Table 1.

2 Problem Formulation

We consider two sources in our SS process, where the first source signal is \({\mathbf{x}}\left( {\text{t}} \right) = \left[ {{\text{x}}\left( 1 \right);{\text{x}}\left( 2 \right); \ldots ;{\text{x}}\left( {\text{T}} \right)} \right]\), and the second source signal is \({\mathbf{y}}\left( {\text{t}} \right) = \left[ {{\text{y}}\left( 1 \right);{\text{y}}\left( 2 \right); \ldots ;{\text{y}}\left( {\text{T}} \right)} \right]\); here, \({\text{T}}\) and \({\text{t}}\) denote the number of samples and the time instance, respectively. The mixed signal \({\mathbf{z}}\left( {\text{t}} \right)\) is prepared by summing the two source signals. The expression for the mixed signal is defined in Eq. (1).

Now, the \({\text{DTCWT}}\) is applied to Eq. (1), as is shown in Eq. (2), to obtain the \({\text{DTCWT}}\) subbands as presented in Eq. (3).

where \({\mathbf{z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), \({\mathbf{x}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), and \({\mathbf{y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) represent the mixed, first source, and second source subband signals, respectively, and \({\text{J}}\), \({\text{b}}\), and \({\text{tl}}\) denote the level of the \({\text{DTCWT}}\), the subband index, and the tree level, respectively. The \({\text{STFT}}\) of Eq. (3) is defined in Eq. (4) and yields the complex matrices represented in Eq. (5).

where \({\mathbf{Z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\), \({\mathbf{X}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\), and \({\mathbf{Y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) are the \({\text{STFT}}\) coefficients of \({\mathbf{z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), \({\mathbf{x}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), and \({\mathbf{y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), respectively, and \({\text{f}}\) and \({\uptau }\) are the frequency and time bin indexes, respectively. \({\tilde{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) and \({\tilde{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) are the unknown complex coefficients for the sources and must be estimated via \({\text{SNMF}}\), the subband binary ratio mask of signal \({\mathbf{x}}\) (\({\text{SBRMX}}\)), and the subband binary ratio mask of signal \({\mathbf{y}}\) (\({\text{SBRMY}}\)) from Eq. (5) using only the magnitude part. Finally, the estimated first and second source signals are calculated via the following equations:

where \({\tilde{\mathbf{x}}}\left( {\text{t}} \right)\) and \({\tilde{\mathbf{y}}}\left( {\text{t}} \right){ }\) are the estimated first and second source signals, respectively, and \({\text{ISTFT}}\) and \({\text{IDTCWT}}\) denote the inverse \({\text{STFT}}\) and inverse \({\text{DTCWT}}\), respectively.
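The signal model of this section can be summarized compactly. The relations below are a sketch reconstructed from the definitions above, relying only on the linearity of the \({\text{DTCWT}}\) and the \({\text{STFT}}\); the exact form and numbering of the original equations are assumed rather than quoted. They cover, in order, the time-domain mixture, the subband mixture, the spectrogram mixture, and the reconstruction of the first source (the second source is analogous):

\[
{\mathbf{z}}\left( {\text{t}} \right) = {\mathbf{x}}\left( {\text{t}} \right) + {\mathbf{y}}\left( {\text{t}} \right)
\]

\[
{\mathbf{z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} = {\mathbf{x}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} + {\mathbf{y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}
\]

\[
{\mathbf{Z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right) = {\mathbf{X}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right) + {\mathbf{Y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)
\]

\[
{\tilde{\mathbf{x}}}\left( {\text{t}} \right) = {\text{IDTCWT}}\left( {{\text{ISTFT}}\left( {{\tilde{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)} \right)} \right)
\]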

3 Review of the WT and SNMF

The WT algorithm generates a collection of time–frequency representations of a signal at various resolutions. The WT uses a fully scalable window that is shifted along the signal, and the spectrum is computed for every position. Recently, the \({\text{DWT}}\) has been widely adopted in many signal processing applications because of its ability to provide an efficient time–frequency analysis of a signal. The \({\text{DWT}}\) decomposes the time-domain signal \({\mathbf{x}}\left( {\text{n}} \right)\) using a pair of low-pass (\({\mathbf{h}}\left( {\text{n}} \right)\)) and high-pass (\({\mathbf{g}}\left( {\text{n}} \right)\)) filters, and each filter output is then downsampled by a factor of two. The output of the low-pass filter is known as the approximation coefficient (\({\mathbf{x}}_{1}^{1}\)), where the superscript and subscript of \({\mathbf{x}}\) denote the \({\text{DWT}}\) level and subband index, respectively; it represents the low-frequency part of the signal. The output of the high-pass filter is called the detail coefficient (\({\mathbf{x}}_{2}^{1}\)) and represents the high-frequency part of the signal. For the next level of decomposition, only \({\mathbf{x}}_{1}^{1}\) is passed through similar low-pass and high-pass filters and is then downsampled to obtain \({\mathbf{x}}_{1}^{2}\) and \({\mathbf{x}}_{2}^{2}\), and so on. Figure 1 illustrates the filter bank (FB) implementations of the \({\text{DWT}}\) and \({\text{IDWT}}\).

The \({\text{DWPT}}\) is a generalized variant of the \({\text{DWT}}\). At the first level, the \({\text{DWPT}}\) decomposes the time-domain signal \({\mathbf{x}}\left( {\text{n}} \right)\) using a pair of low-pass (\({\mathbf{h}}\left( {\text{n}} \right)\)) and high-pass (\({\mathbf{g}}\left( {\text{n}} \right)\)) filters and performs downsampling by a factor of two. In second-level decomposition, both the approximation and detail coefficients are subjected to similar low-pass and high-pass filters and downsampling to obtain the approximation and detail coefficients at the next level, and so on. Figure 2 illustrates the FB implementations of the \({\text{DWPT}}\) and \({\text{IDWPT}}\). Although the \({\text{DWT}}\) and \({\text{DWPT}}\) have practical computational advantages, they also have some drawbacks, such as shift variance, oscillation, aliasing, and a lack of directionality.
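The shift-variance drawback can be seen in a very small example. The following is a minimal sketch, assuming a one-level Haar filter pair (the filters actually used in the cited works are not specified here) and an even-length input; the names `haar_dwt` and `energy` are illustrative only. A step signal and a copy delayed by one sample are decomposed, and the energy of their detail coefficients is compared.

```python
import math

def haar_dwt(x):
    """One-level Haar DWT: low-/high-pass filtering followed by downsampling by two.

    Assumes len(x) is even. Returns (approximation, detail) coefficient lists.
    """
    s = 1.0 / math.sqrt(2.0)
    approx = [s * (x[i] + x[i + 1]) for i in range(0, len(x), 2)]
    detail = [s * (x[i] - x[i + 1]) for i in range(0, len(x), 2)]
    return approx, detail

def energy(coeffs):
    """Sum of squared coefficients."""
    return sum(c * c for c in coeffs)

step = [0, 0, 0, 0, 1, 1, 1, 1]      # edge aligned with a downsampling pair boundary
shifted = [0] + step[:-1]            # same step, delayed by one sample

_, detail_step = haar_dwt(step)
_, detail_shifted = haar_dwt(shifted)

e_step = energy(detail_step)         # the edge falls between pairs, so no detail energy
e_shifted = energy(detail_shifted)   # the edge falls inside a pair, so detail energy appears
print(e_step, e_shifted)
```

In this toy case the detail energy goes from zero to roughly 0.5 after a one-sample delay, although the signal content is unchanged. This is the behavior the shift-invariant transforms discussed next are designed to reduce.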

In the \({\text{SWT}}\), the downsampling step after filtering at each level is removed; consequently, the coefficient length is the same as the length of the original time-domain signal. In the \({\text{SWT}}\), the time-domain signal is passed through the low-pass and high-pass filters at the first level to obtain the corresponding approximation and detail coefficients. Then, at the second level, the low-pass and high-pass filters are upsampled by inserting a zero between each pair of adjacent filter coefficients from the previous level, and only the approximation coefficient is passed through the newly updated low-pass and high-pass filters; a similar process is repeated at each subsequent level. Thus, the \({\text{SWT}}\) eliminates the shift-variance issue by disposing of the downsampling operators; however, it introduces redundancy. Figure 3 shows the second-level block diagrams of the \({\text{SWT}}\) and \({\text{ISWT}}\).
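The two ingredients of the \({\text{SWT}}\) described above, filtering without downsampling and zero-insertion in the filters, can be sketched as follows. This is an illustrative example only: it assumes Haar filters and periodic signal extension, and the helper names `upsample_filter` and `periodic_filter` are hypothetical.

```python
import math

def upsample_filter(taps):
    """Insert one zero between each pair of adjacent filter coefficients."""
    out = []
    for i, t in enumerate(taps):
        out.append(t)
        if i < len(taps) - 1:
            out.append(0.0)
    return out

def periodic_filter(x, taps):
    """Apply the taps to x with periodic extension and NO downsampling."""
    n = len(x)
    return [sum(t * x[(i + k) % n] for k, t in enumerate(taps)) for i in range(n)]

s = 1.0 / math.sqrt(2.0)
low, high = [s, s], [s, -s]          # Haar pair (an assumption for this sketch)

x = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]

# Level 1: both outputs keep the full signal length.
a1 = periodic_filter(x, low)
d1 = periodic_filter(x, high)

# Level 2: upsample the filters, then filter only the approximation.
low2, high2 = upsample_filter(low), upsample_filter(high)
a2 = periodic_filter(a1, low2)
d2 = periodic_filter(a1, high2)

total_coeffs = len(d1) + len(d2) + len(a2)
print(len(x), total_coeffs)
```

A two-level decomposition of an 8-sample signal therefore stores 24 coefficients, which is the redundancy referred to in the text.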

To mitigate the issues of shift variance and redundancy simultaneously, Kingsbury [17] presented a computationally advantageous method called the \({\text{DTCWT}}\), which is approximately shift-invariant and has limited redundancy. In the first level, it decomposes the time-domain signal into four subband signals corresponding to two trees, where the first tree provides the real part of the transform, while the second tree provides the imaginary part. The upper and lower trees each yield one approximation coefficient (\({\mathbf{x}}_{1,1}^{1}\)) and one detail coefficient (\({\mathbf{x}}_{2,1}^{1}\)), where the superscript denotes the \({\text{DTCWT}}\) level, and the first and second subscripts represent the subband index and tree level, respectively. Then, each subband signal is downsampled. In the second level of decomposition, only the approximation coefficients are passed through the filters to produce second-level subband signals, and so on. Figure 4 illustrates the FB implementation of the \({\text{DTCWT}}\) and \({\text{IDTCWT}}\).

\({\text{NMF}}\) is an algorithm for analysis in which a matrix of nonnegative elements is factorized into two nonnegative matrices according to its bases and weights. In the factorization process, the matrix \({\mathbf{Z}} \in {\mathbb{R}}^{{{\text{F}} \times {\text{T}}}}\) is decomposed as a linear combination of bases \({\mathbf{W}} \in {\mathbb{R}}^{{{\text{F}} \times {\text{R}}}}\) and weights \({\mathbf{H}} \in {\mathbb{R}}^{{{\text{R}} \times {\text{T}}}}\), where the inner dimension \({\text{R}}\) is much smaller than the product of \({\text{F}}\) and \({\text{T}}\) of the original matrix \({\mathbf{Z}}\):

\({\text{NMF}}\) has been a popular method for modeling speech signals, especially in single-channel speech separation applications. Regardless of the size of the corpus, it will not be possible to learn a substantial dictionary with more elements than the number of time–frequency bins [18]. To address this issue, the authors of [31] introduced sparsity penalties on the activations \({\mathbf{H}}\) for audio signals. Additionally, cost functions based on the Euclidean distance and Kullback–Leibler (KL) divergence were investigated in [19], and it was shown that the KL cost function works particularly well for audio SS. Therefore, we consider \({\text{SNMF}}\) with the KL cost function in our study. The KL divergence cost function is minimized as follows:

where \({\upmu }\) denotes the sparsity parameter. The matrices \({\mathbf{W}}\) and \({\mathbf{H}}\) are updated through the following iterative principles:

where \(1_{{\text{m}}}\) denotes a matrix of ones with the same dimensions as \({\mathbf{Z}}\), \(1_{{\text{v}}}\) denotes a column vector of ones with a number of entries equal to the number of columns of \({\mathbf{W}}\), and all divisions are elementwise.
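To make the factorization concrete, the following is a minimal NumPy sketch of sparse NMF with a KL cost. It is not a transcription of Eqs. (11) to (13): it assumes the common objective \(D_{KL}({\mathbf{Z}}\,\|\,{\mathbf{W}}{\mathbf{H}}) + {\upmu }\left| {\mathbf{H}} \right|_{1}\), uses the standard multiplicative updates with \({\upmu }\) added to the denominator of the \({\mathbf{H}}\) update, and rescales the columns of \({\mathbf{W}}\) to unit sum after each iteration. The function names, the rank, and the value of \({\upmu }\) are illustrative assumptions.

```python
import numpy as np

def kl_divergence(Z, V, eps=1e-12):
    """Generalized KL divergence D(Z || V), summed over all entries."""
    return float(np.sum(Z * np.log((Z + eps) / (V + eps)) - Z + V))

def sparse_nmf_kl(Z, rank, mu=0.1, n_iter=200, seed=0, eps=1e-12):
    """Factorize a nonnegative F x T matrix Z as W (F x rank) times H (rank x T)."""
    rng = np.random.default_rng(seed)
    F, T = Z.shape
    W = rng.random((F, rank)) + eps
    H = rng.random((rank, T)) + eps
    ones = np.ones_like(Z)                      # plays the role of the all-ones matrix
    for _ in range(n_iter):
        # Activation update: the sparsity weight mu enters the denominator.
        H *= (W.T @ (Z / (W @ H + eps))) / (W.T @ ones + mu)
        # Basis update: plain KL multiplicative rule.
        W *= ((Z / (W @ H + eps)) @ H.T) / (ones @ H.T + eps)
        # Rescale each basis vector to unit sum; H absorbs the scale so W @ H is unchanged.
        scale = W.sum(axis=0, keepdims=True) + eps
        W /= scale
        H *= scale.T
    return W, H

# Toy nonnegative "magnitude spectrogram" with an exact rank-4 structure.
rng = np.random.default_rng(1)
Z = rng.random((20, 4)) @ rng.random((4, 50))

W, H = sparse_nmf_kl(Z, rank=4)
kl_fit = kl_divergence(Z, W @ H)
kl_const = kl_divergence(Z, np.full_like(Z, Z.mean()))   # trivial constant model
print(kl_fit, kl_const)
```

The comparison against a constant model is only a sanity check that the factorization captures structure in the data. A larger \({\upmu }\) pushes more activations toward zero at the cost of a looser fit.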

4 SS and SE Algorithms Based on NMF or SNMF

The \({\text{STFT}} - {\text{SNMF}}\)-based SS algorithm [41] has a training stage and testing stage. In the training stage, the \({\text{STFT}}\) is applied to the clean speech signals \({\mathbf{x}}\left( {\text{t}} \right)\) and \({\mathbf{y}}\left( {\text{t}} \right)\) to produce complex-valued spectrograms \({\mathbf{X}}\left( {{\uptau },{\text{f}}} \right)\) and \({\mathbf{Y}}\left( {{\uptau },{\text{f}}} \right)\), respectively, where \({\uptau }\) is the time index and \({\text{f}}\) is the frequency index. From these complex spectrograms, only the magnitude spectra \({ }\left| {{\mathbf{X}}\left( {{\uptau },{\text{f}}} \right)} \right|\) and \(\left| {{\mathbf{Y}}\left( {{\uptau },{\text{f}}} \right)} \right|\) are considered and are passed through the \({\text{SNMF}}\) module to obtain clean speech spectral bases \({ }{\mathbf{W}}_{{\text{X}}}\) and \({ }{\mathbf{W}}_{{\text{Y}}}\). Finally, these spectral bases are concatenated to form \({\mathbf{W}}_{{{\text{XY}}}} = [{\mathbf{W}}_{{\text{X}}} { }{\mathbf{W}}_{{\text{Y}}} ]\), which is used to prepare the activation matrix \({ }{\mathbf{H}}_{{{\text{XY}}}}\) for the testing stage. In the testing stage, the \({\text{STFT}}\) is applied to the mixed speech signal \({\mathbf{z}}\left( {\text{t}} \right)\), to produce a complex-valued mixed speech spectrogram \({ }{\mathbf{Z}}\left( {{\uptau },{\text{f}}} \right)\). Then, only the magnitude spectrum \(\left| {{\mathbf{Z}}\left( {{\uptau },{\text{f}}} \right)} \right|\) and the previously formed spectral basis \({ }{\mathbf{W}}_{{{\text{XY}}}}\) are passed to the \({\text{SNMF}}\) module to update the activation matrix \({ }{\mathbf{H}}_{{{\text{XY}}}} = \left[ {{\mathbf{H}}_{{\text{X}}} { }{\mathbf{H}}_{{\text{Y}}} } \right]\). 
Finally, the primary estimated clean speech signal spectra \({ }\left| {{\tilde{\mathbf{X}}}\left( {{\uptau },{\text{f}}} \right)} \right|{ }\) and \(\left| {{\tilde{\mathbf{Y}}}\left( {{\uptau },{\text{f}}} \right)} \right|\) are obtained using Eqs. (14) and (15).

The \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\)-based SS algorithm was presented in [37]. In the training stage, clean speech bases (dictionaries) are estimated from clean speech databases by applying \({\text{SNMF}}\) after the \({\text{DWT}}\) and \({\text{STFT}}\). In the testing stage, the \({\text{DWT}}\) is applied to decompose the mixed speech signal \({\mathbf{z}}\left( {\text{t}} \right)\) into a set of coefficients consisting of one approximation coefficient and \({\text{J}}\) detail coefficients (where \({\text{J}}\) is the final decomposition level index). The \({\text{STFT}}\) is used to decompose the approximation coefficient of the mixed speech signal to produce a complex-valued mixed speech spectrogram \({ } {\mathbf{Z}}\left( {{\uptau },{\text{f}}} \right)\), where \({\uptau }\) and \({\text{f}}\) are the time index and frequency bin indexes, respectively, and all of the detail coefficients are replaced with zeros of the same length. Then, \({\text{SNMF}}\) is used to factorize only the magnitude part of the complex mixed speech spectrogram, while the phase spectrogram is preserved for further processing. The clean speech spectrograms \({\mathbf{X}}\left( {{\uptau },{\text{f}}} \right)\) and \({\mathbf{Y}}\left( {{\uptau },{\text{f}}} \right)\) are estimated by using the corresponding bases and weights. Finally, the estimated clean time-domain speech signals \({\mathbf{x}}\left( {\text{t}} \right)\) and \({\mathbf{y}}\left( {\text{t}} \right)\) are obtained by using the \({\text{ISTFT}}\) and \({\text{IDWT}}\).
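The effect of replacing the detail coefficients with zeros can be illustrated on a short signal. The sketch below assumes a one-level Haar \({\text{DWT}}\) and an even-length input, which are choices made here for brevity and not taken from [37]; `haar_dwt` and `haar_idwt` are hypothetical helper names.

```python
import math

S = 1.0 / math.sqrt(2.0)

def haar_dwt(x):
    """One-level Haar analysis (len(x) must be even)."""
    approx = [S * (x[i] + x[i + 1]) for i in range(0, len(x), 2)]
    detail = [S * (x[i] - x[i + 1]) for i in range(0, len(x), 2)]
    return approx, detail

def haar_idwt(approx, detail):
    """One-level Haar synthesis, the exact inverse of haar_dwt."""
    x = []
    for a, d in zip(approx, detail):
        x.extend([S * (a + d), S * (a - d)])
    return x

z = [1.0, 3.0, 2.0, 2.0, 5.0, 1.0, 0.0, 4.0]
a, d = haar_dwt(z)

exact = haar_idwt(a, d)                        # all coefficients kept
lowpass_only = haar_idwt(a, [0.0] * len(d))    # detail band replaced with zeros
print(exact)
print(lowpass_only)
```

With all coefficients kept, the input is recovered. With the detail band zeroed, each pair of samples collapses to its mean, so any rapid variation within a pair is lost and cannot be restored by later processing stages.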

The \({\text{DWPT}} - {\text{NMF}}\)-based SE algorithm was presented in [39]. In this algorithm, the training stage is the same as in the previous method except that the \({\text{DWPT}}\) is used instead of the \({\text{DWT}}\) (i.e., the bases \({ }{\mathbf{W}}_{{\text{X}}}^{{\text{b}}}\) and \({\mathbf{W}}_{{\text{N}}}^{{\text{b}}}\) are estimated from the clean speech signal \({\mathbf{x}}\left( {\text{t}} \right)\) and the noise \({\mathbf{n}}\left( {\text{t}} \right)\), and the basis matrix \({ }{\mathbf{W}}_{{{\text{XN}}}}^{{\text{b}}} = \left[ {{\mathbf{W}}_{{\text{X}}}^{{\text{b}}} { }{\mathbf{W}}_{{\text{N}}}^{{\text{b}}} } \right]\) is concatenated). In the second phase, the \({\text{DWPT}}\) and overlapping framing patterns are applied to the noisy speech signal \({\mathbf{z}}\left( {\text{t}} \right)\), and a set of nonnegative matrices \({\mathbf{Z}}_{{\text{b}}}^{{\text{J}}}\) is obtained for the noisy speech signal. Then, the weights \({\mathbf{H}}_{{\text{X}}}^{{\text{b}}}\) and \({\mathbf{H}}_{{\text{N}}}^{{\text{b}}}\) for the speech and noise signals, respectively, are obtained by performing \({\text{NMF}}\) on the matrix \({\mathbf{Z}}_{{\text{b}}}^{{\text{J}}}\) using the fixed training basis matrix \({ }{\mathbf{W}}_{{{\text{XN}}}}^{{\text{b}}} = \left[ {{\mathbf{W}}_{{\text{X}}}^{{\text{b}}} { }{\mathbf{W}}_{{\text{N}}}^{{\text{b}}} } \right]\) and the initial random weight matrix \({\mathbf{H}}_{{{\text{XN}}}}^{{\text{b}}} = \left[ {\begin{array}{*{20}c} {{\mathbf{H}}_{{\text{X}}}^{{\text{b}}} } \\ {{\mathbf{H}}_{{\text{N}}}^{{\text{b}}} } \\ \end{array} } \right]\). The gain sequence \({\text{G}}_{{\text{b}}}^{{\text{J}}}\) and the estimated subband signal \({\tilde{\mathbf{x}}}_{{\text{b}}}^{{\text{J}}}\) are obtained by using Eqs. (16) and (17), as follows:

The estimated subband signal \({\tilde{\mathbf{x}}}_{{\text{b}}}^{{\text{J}}}\) is generated by utilizing Eq. (18), where \({\upsigma }_{{{\text{b}},{\text{c}}}}\) and \({\upsigma }_{{\text{b}}}\) denote the root-mean-square values of the clean speech signal and \({\tilde{\mathbf{x}}}_{{\text{b}}}^{{\text{J}}}\), respectively. Finally, the de-framing scheme and the \({\text{IDWPT}}\) are applied to obtain the estimated signal \({\tilde{\mathbf{x}}}\left( {\text{t}} \right)\).

The \({\text{SWT}} - {\text{NMF}}\) SE method was presented in [11]. In the training stage, the nonnegative matrices \({\mathbf{X}}_{{\text{b}}}^{{{\text{J}}^{{{\text{Train}}}} { }}}\) and \({\mathbf{N}}_{{\text{b}}}^{{{\text{J}}^{{{\text{Train}}}} { }}}\) are obtained from the clean speech signal \({\mathbf{x}}\left( {\text{t}} \right)\) and the noise \({\mathbf{n}}\left( {\text{t}} \right)\) using the \({\text{SWT}}\), the overlapping framing scheme, the concatenated framing process, and an autoregressive moving average filter, where \({\text{J}}\) denotes the \({\text{SWT}}\) level, and \({\text{b}}\) denotes the subband index. Then, the basis matrices \({\mathbf{W}}_{{\text{X}}}^{{\text{b}}}\) and \({\mathbf{W}}_{{\text{N}}}^{{\text{b}}}\) that are obtained after \({\text{NMF}}\) are concatenated to prepare the basis matrix \({ }{\mathbf{W}}_{{{\text{XN}}}}^{{\text{b}}} = \left[ {{\mathbf{W}}_{{\text{X}}}^{{\text{b}}} { }{\mathbf{W}}_{{\text{N}}}^{{\text{b}}} } \right]\). In the testing stage, rough estimates of the clean speech signal (\({\overline{\mathbf{X}}}_{{\text{b}}}^{{\text{J}}} )\) and the noise \(\left( {{\overline{\mathbf{N}}}_{{\text{b}}}^{{\text{J}}} } \right)\) are calculated using Eqs. (19) and (20) after applying the \({\text{SWT}}\) to the noisy speech signal \({\mathbf{z}}\left( {\text{t}} \right)\) and performing \({\text{NMF}}\) on \({ }{\mathbf{Z}}_{{\text{b}}}^{{{\text{J}}^{{{\text{Test}}}} }}\). Finally, the estimated time-domain signal \({\tilde{\mathbf{x}}}\left( {\text{t}} \right)\) is obtained through the inverse concatenated framing process and the \({\text{ISWT}}\).

A recent study [12] proposed the \({\text{DTCWT}} - {\text{NMF}}\) SE strategy, which utilizes the \({\text{DTCWT}}\), \({\text{NMF}}\) with the KL cost function, and a proposed subband smooth ratio mask. We implement the \({\text{DWPT}} - {\text{SNMF}}\), \({\text{SWT}} - {\text{SNMF}}\), and \({\text{DTCWT}} - {\text{SNMF}}\) methods for SS, analogously to the \({\text{DWPT}} - {\text{NMF}}\) [39], \({\text{SWT}} - {\text{NMF}}\) [11], and \({\text{DTCWT}} - {\text{NMF}}\) [12] methods for SE, respectively, and compare the results with those of the proposed method.

5 Proposed SS Algorithm

This section describes the proposed SS method in detail. Speech signals contain both high-frequency and low-frequency components. The low-frequency components of a signal contain a substantial amount of information, whereas the high-frequency components contain a negligible amount of information. Nevertheless, the high-frequency information impacts the basis vectors of the lower-frequency components. For this reason, SS using only \({\text{NMF}}\) cannot properly estimate the contents of mixed speech signals. Therefore, the \({\text{DTCWT}}\) is used to separate the high- and low-frequency components of the signal. In our proposed method, we use the first-level decomposition, in which the time-domain signal is decomposed into four subband signals. For example, the \({\text{DTCWT}}\) decomposes the source signal \({\mathbf{x}}\left( {\text{t}} \right)\) into components denoted by \({\mathbf{x}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), where J denotes the \({\text{DTCWT}}\) level, b is the subband index, and tl represents the tree level. For the first-level decomposition, J = 1; b = 1, 2, 3, and 4; and tl = 1 and 2, where 1 is for the upper tree and 2 is for the lower tree (i.e., the subbands are \({\mathbf{x}}_{1,1}^{1}\), \({\mathbf{x}}_{2,1}^{1}\), \({\mathbf{x}}_{3,2}^{1}\), and \({\mathbf{x}}_{4,2}^{1}\), as explained in the DTCWT part of Sect. 3).

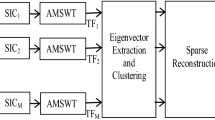

Then, the \({\text{STFT}}\) is applied to each subband signal to convert each subband signal into the time–frequency domain and build a complex spectrogram for each subband signal. The \({\text{STFT}}\) suffers from issues regarding time and frequency resolution because it cannot exactly determine which frequencies exist at what time. In our proposed method, we use the \({\text{DTCWT}}\) and \({\text{STFT}}\) sequentially to solve this problem of traditional \({\text{STFT}}\)-based methods. First, we use the \({\text{DTCWT}}\) to isolate the input signal into sufficiently small portions such that the low- and high-frequency components are separated. The sequential use of the \({\text{DTCWT}}\) and \({\text{STFT}}\) makes the input signal more stationary, thus resulting in a better transformation. Finally, \({\text{SNMF}}\) is applied to only the magnitude spectrogram in order to factorize the bases and weight vectors. Figure 5 shows the overall block diagram of the SS algorithm proposed in this paper. The proposed algorithm is divided into two stages: a training stage and a testing stage.
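The per-subband time–frequency step can be sketched with NumPy as follows. This is an illustrative example, not the configuration used in the experiments: the frame length, hop size, Hann window, and sampling rate are assumptions, and a pure tone stands in for an actual \({\text{DTCWT}}\) subband signal.

```python
import numpy as np

def stft(x, frame_len=256, hop=128):
    """Hann-windowed STFT; returns a complex array of shape (freq_bins, frames)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack(
        [x[i * hop : i * hop + frame_len] * window for i in range(n_frames)],
        axis=1,
    )
    return np.fft.rfft(frames, axis=0)

fs = 8000                                   # assumed sampling rate in Hz
t = np.arange(fs) / fs
subband = np.sin(2 * np.pi * 500 * t)       # hypothetical stand-in for one subband signal

spec = stft(subband)                        # complex spectrogram
magnitude = np.abs(spec)                    # the part passed on to SNMF
phase = np.angle(spec)                      # kept aside for resynthesis

peak_bin = int(np.argmax(magnitude.mean(axis=1)))
print(spec.shape, peak_bin)
```

Only `magnitude` would be factorized; the complex spectrogram can be rebuilt at any time as `magnitude * np.exp(1j * phase)`, which is why the phase is stored separately.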

5.1 Training Stage

The two signals \({\mathbf{x}}\left( {\text{t}} \right)\) and \({\mathbf{y}}\left( {\text{t}} \right)\) are utilized as source signals. We obtain the subband source signals \({\mathbf{x}}_{1,1}^{1} , \ldots ,{\mathbf{x}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\mathbf{y}}_{1,1}^{1} , \ldots ,{\mathbf{y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) from the signals \({\mathbf{x}}\left( {\text{t}} \right)\) and \({\mathbf{y}}\left( {\text{t}} \right)\) by utilizing the \({\text{DTCWT}}\), where \({\text{J}}\), \({\text{b}}\), and \({\text{tl}}\) denote the \({\text{DTCWT}}\) level, the subband index, and the tree level, respectively. The complex spectrograms \({\mathbf{X}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\mathbf{X}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) and \({\mathbf{Y}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\mathbf{Y}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) are calculated from the subband source signals via the \({\text{STFT}}\), where \({\uptau }\) and \({\text{f}}\) are the time and frequency bin indexes, respectively. The corresponding magnitude spectra \({\mathbf{XA}}_{1,1}^{{1^{{{\text{Train}}}} }} , \ldots ,{\mathbf{XA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Train}}}} }}\) and \({\mathbf{YA}}_{1,1}^{{1^{{{\text{Train}}}} }} , \ldots ,{\mathbf{YA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Train}}}} }}\) are factorized via \({\text{SNMF}}\) as in Eqs. (21) and (22).

where \({\mathbf{XW}}_{1,1}^{1} , \ldots ,{ }{\mathbf{XW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\mathbf{XH}}_{1,1}^{1} , \ldots ,{\mathbf{XH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) denote the basis and weight matrices for source signal one, \({\mathbf{YW}}_{1,1}^{1} , \ldots ,{ }{\mathbf{YW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\mathbf{YH}}_{1,1}^{1} , \ldots ,{\mathbf{YH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) denote the basis and weight matrices for source signal two, and \({\upmu }\) is the sparsity constant. The basis matrices \({\mathbf{XW}}_{1,1}^{1} , \ldots ,{\mathbf{XW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) can be generated by minimizing the distance between \({\mathbf{XA}}_{1,1}^{{1^{{{\text{Train}}}} }} , \ldots ,{\mathbf{XA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Train}}}} }}\) and \({\mathbf{XW}}_{1,1}^{1} {\mathbf{XH}}_{1,1}^{1} + {\upmu }\left| {{\mathbf{XH}}_{1,1}^{1} } \right|_{1} , \ldots ,{\mathbf{XW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} {\mathbf{XH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} + {\upmu }\left| {{\mathbf{XH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} } \right|_{1}\) using Eq. (11), with the help of Eqs. (12) and (13). The basis matrices \({\mathbf{YW}}_{1,1}^{1} , \ldots ,{\mathbf{YW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) are generated similarly and are then concatenated with \({\mathbf{XW}}_{1,1}^{1} , \ldots ,{\mathbf{XW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) as follows: \({\mathbf{XYW}}_{1,1}^{1} , \ldots ,{\mathbf{XYW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} = \left[ {{\mathbf{XW}}_{1,1}^{1} \;{\mathbf{YW}}_{1,1}^{1} } \right], \ldots ,\left[ {{\mathbf{XW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \;{\mathbf{YW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} } \right]\).
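The bookkeeping of the training stage, one pair of bases per subband followed by a column-wise concatenation, can be sketched as below. The matrix sizes are hypothetical, and random nonnegative matrices stand in for the bases that \({\text{SNMF}}\) would actually learn; the dictionary keys follow the (b, tl) indexing of the first-level subbands.

```python
import numpy as np

rng = np.random.default_rng(0)
n_freq, n_bases = 129, 40                       # hypothetical sizes, not from the paper
subbands = [(1, 1), (2, 1), (3, 2), (4, 2)]     # (b, tl) pairs for J = 1

# Random nonnegative stand-ins for the trained bases of each source.
XW = {key: rng.random((n_freq, n_bases)) for key in subbands}
YW = {key: rng.random((n_freq, n_bases)) for key in subbands}

# One concatenated basis [XW  YW] per subband, kept fixed in the testing stage.
XYW = {key: np.hstack([XW[key], YW[key]]) for key in subbands}

for key in subbands:
    print(key, XYW[key].shape)
```

Keeping the source-one bases in the first block of columns and the source-two bases in the second block is what later allows the activations of the mixture to be split by row index.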

5.2 Testing Stage

The mixed speech signal \({\text{z}}\left( {\text{t}} \right)\) is decomposed by applying the \({\text{DTCWT}}\) to generate a set of subband signals \({\mathbf{z}}_{1,1}^{1} , \ldots ,{\mathbf{z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\). The complex spectra \({\mathbf{Z}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\mathbf{Z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) are obtained from the individual subband signals using the \({\text{STFT}}\). The magnitude spectra \({\mathbf{ZA}}_{1,1}^{{1^{{{\text{Test}}}} }} , \ldots ,{\mathbf{ZA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Test}}}} }}\) and phase spectra \({\mathbf{ZP}}_{1,1}^{1} , \ldots ,{\mathbf{ZP}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) are computed from the complex spectra \({\mathbf{Z}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\mathbf{Z}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) by taking the absolute value and the angle, respectively. The magnitude spectra \({\mathbf{ZA}}_{1,1}^{{1^{{{\text{Test}}}} }} , \ldots ,{\mathbf{ZA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Test}}}} }}\) are decomposed via the \({\text{SNMF}}\) using Eq. (23):
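Assuming the same sparse-approximation form as in the training stage, with the weights of the two sources stacked row-wise to match the concatenated basis, Eq. (23) can be sketched as (a reconstruction under these assumptions; the published form may differ in detail):

\[{\mathbf{ZA}}_{1,1}^{{1^{{\text{Test}}}}} , \ldots ,{\mathbf{ZA}}_{{\text{b}},{\text{tl}}}^{{{\text{J}}^{{\text{Test}}}}} \approx {\mathbf{XYW}}_{1,1}^{1} {\mathbf{XYH}}_{1,1}^{1} + {\upmu }\left| {\mathbf{XYH}}_{1,1}^{1} \right|_{1} , \ldots ,{\mathbf{XYW}}_{{\text{b}},{\text{tl}}}^{{\text{J}}} {\mathbf{XYH}}_{{\text{b}},{\text{tl}}}^{{\text{J}}} + {\upmu }\left| {\mathbf{XYH}}_{{\text{b}},{\text{tl}}}^{{\text{J}}} \right|_{1} \quad (23)\]

\[{\mathbf{XYH}}_{1,1}^{1} , \ldots ,{\mathbf{XYH}}_{{\text{b}},{\text{tl}}}^{{\text{J}}} = \begin{bmatrix} {\mathbf{XH}}_{1,1}^{1} \\ {\mathbf{YH}}_{1,1}^{1} \end{bmatrix}, \ldots ,\begin{bmatrix} {\mathbf{XH}}_{{\text{b}},{\text{tl}}}^{{\text{J}}} \\ {\mathbf{YH}}_{{\text{b}},{\text{tl}}}^{{\text{J}}} \end{bmatrix}\]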

where \({\mathbf{XYH}}_{1,1}^{1} , \ldots ,{\mathbf{XYH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), \({\mathbf{XH}}_{1,1}^{1} , \ldots ,{\mathbf{XH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\), and \({\mathbf{YH}}_{1,1}^{1} , \ldots ,{\mathbf{YH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) denote the weight matrices of the mixed signal, source signal one, and source signal two, respectively. The weight matrices \({\mathbf{XYH}}_{1,1}^{1} , \ldots ,{\mathbf{XYH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) can be learned via SNMF by minimizing the distances between \({\mathbf{ZA}}_{1,1}^{{1^{{{\text{Test}}}} }} , \ldots ,{\mathbf{ZA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Test}}}} }}\) and \({\mathbf{XYW}}_{1,1}^{1} {\mathbf{XYH}}_{1,1}^{1} + {\upmu }\left| {{\mathbf{XYH}}_{1,1}^{1} } \right|_{1} , \ldots ,{\mathbf{XYW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} {\mathbf{XYH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} + {\upmu }\left| {{\mathbf{XYH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} } \right|_{1}\) using Eq. (11) with the help of Eq. (13), where \({\mathbf{XYH}}_{1,1}^{1} , \ldots ,{\mathbf{XYH}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) are initialized with random numbers and \({\mathbf{XYW}}_{1,1}^{1} , \ldots ,{\mathbf{XYW}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) are kept fixed at the values learned in the training stage. The initially estimated magnitudes for source signal one (\({\overline{\mathbf{X}}}_{1,1}^{1} , \ldots ,{\overline{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\)) and source signal two (\({\overline{\mathbf{Y}}}_{1,1}^{1} , \ldots ,{\overline{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\)) are obtained using Eqs. (24) and (25).
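For one subband, the weight inference with a fixed basis and the initial magnitude estimates can be sketched as follows. The sketch assumes a Euclidean cost with an L1 penalty and a multiplicative update for the weights only, and it assumes that Eqs. (24) and (25) take the form of a basis-times-weights product per source; the function name `infer_weights` and the random stand-in matrices are hypothetical.

```python
import numpy as np

def infer_weights(ZA, XYW, mu=0.1, n_iter=200, seed=0, eps=1e-12):
    """Update only the weights XYH for a fixed basis XYW.

    Assumes a Euclidean cost with an L1 penalty (an assumption, not
    necessarily the update rule of the paper).
    """
    rng = np.random.default_rng(seed)
    XYH = rng.random((XYW.shape[1], ZA.shape[1])) + eps  # random initialization
    for _ in range(n_iter):
        XYH *= (XYW.T @ ZA) / (XYW.T @ XYW @ XYH + mu + eps)
    return XYH

# Hypothetical stand-ins for one subband: trained bases and a test mixture.
rng = np.random.default_rng(1)
XW = rng.random((257, 20))
YW = rng.random((257, 20))
XYW = np.hstack([XW, YW])          # fixed concatenated basis
ZA_test = rng.random((257, 50))    # mixture magnitude spectrogram

XYH = infer_weights(ZA_test, XYW)

# Split the stacked weights by source, then form the initial estimates.
n_x = XW.shape[1]
XH, YH = XYH[:n_x], XYH[n_x:]
X_bar = XW @ XH   # initial magnitude estimate for source signal one
Y_bar = YW @ YH   # initial magnitude estimate for source signal two
```

Because the factorization is only approximate, `X_bar + Y_bar` generally differs from `ZA_test`.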

We observe that the sums of the initial estimates \({\overline{\mathbf{X}}}_{1,1}^{1} , \ldots ,{\overline{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\overline{\mathbf{Y}}}_{1,1}^{1} , \ldots ,{\overline{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) are not equal to the components of the mixed signal magnitude spectrum \({\mathbf{ZA}}_{1,1}^{{1^{{{\text{Test}}}} }} , \ldots ,{\mathbf{ZA}}_{{{\text{b}},{\text{tl}}}}^{{{\text{J}}^{{{\text{Test}}}} }}\). To eliminate errors, we calculate the subband ratio masks using Eqs. (26) and (27).

The estimated source signal magnitudes \({\tilde{\mathbf{X}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\tilde{\mathbf{Y}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) are obtained by using Eqs. (28) and (29).
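The masking step can be illustrated with a short NumPy sketch. It assumes a soft ratio mask of the form estimate divided by the sum of both estimates, applied element-wise to the mixture magnitude; the exact form of Eqs. (26) to (29) may differ (for example, by using squared magnitudes or a binarized mask). The function name `ratio_masks` and the random stand-in matrices are hypothetical.

```python
import numpy as np

def ratio_masks(X_bar, Y_bar, eps=1e-12):
    """Soft ratio masks for two sources (assumed form, see lead-in)."""
    total = X_bar + Y_bar + eps    # eps guards against division by zero
    return X_bar / total, Y_bar / total

# Hypothetical stand-ins for one subband.
rng = np.random.default_rng(0)
ZA_test = rng.random((257, 50))    # mixture magnitude spectrogram
X_bar = rng.random((257, 50))      # initial estimate, source signal one
Y_bar = rng.random((257, 50))      # initial estimate, source signal two

mask_x, mask_y = ratio_masks(X_bar, Y_bar)

# Element-wise masking of the mixture magnitude.
X_tilde = mask_x * ZA_test
Y_tilde = mask_y * ZA_test
```

With this choice of mask, the two masked magnitudes add up to the mixture magnitude in every time–frequency bin, which is the property the initial estimates lack.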

Now, we recombine the phase spectrum \({\mathbf{ZP}}_{1,1}^{1} , \ldots ,{\mathbf{ZP}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) with the estimated source signal magnitude spectra \({\tilde{\mathbf{X}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\tilde{\mathbf{Y}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} { }\) to obtain the modified complex spectra \({\tilde{\mathbf{X}}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\tilde{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) and \({\tilde{\mathbf{Y}}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\tilde{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) using Eqs. (30) and (31), respectively.
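The recombination of magnitude and phase can be sketched in NumPy as below, assuming that Eqs. (30) and (31) multiply each estimated magnitude by the complex exponential of the mixture phase. The random complex spectrum and the constant factor 0.7 that stands in for a mask are hypothetical.

```python
import numpy as np

# Hypothetical stand-in for the complex spectrum of one mixture subband.
rng = np.random.default_rng(0)
Z = rng.standard_normal((257, 50)) + 1j * rng.standard_normal((257, 50))

ZA = np.abs(Z)      # magnitude spectrum
ZP = np.angle(Z)    # phase spectrum

# Hypothetical masked magnitude for source signal one.
X_tilde_mag = 0.7 * ZA

# Reattach the mixture phase to obtain a complex spectrum for the ISTFT.
X_tilde_complex = X_tilde_mag * np.exp(1j * ZP)
```

Only the magnitude is modified by the separation; the phase of each estimated source is borrowed from the mixture.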

The \({\text{ISTFT}}\) is used to convert the modified complex source signal spectra \({\tilde{\mathbf{X}}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\tilde{\mathbf{X}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) and \({\tilde{\mathbf{Y}}}_{1,1}^{1} \left( {{\uptau },{\text{f}}} \right), \ldots ,{\tilde{\mathbf{Y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} \left( {{\uptau },{\text{f}}} \right)\) into the modified subband signals \({\tilde{\mathbf{x}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{x}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}} { }\) and \({\tilde{\mathbf{y}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\). Finally, the estimated source signals \({\tilde{\mathbf{x}}}\left( {\text{t}} \right)\) and \({\tilde{\mathbf{y}}}\left( {\text{t}} \right)\) are obtained by applying the \({\text{IDTCWT}}\) to the subband signals \({\tilde{\mathbf{x}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{x}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\) and \({\tilde{\mathbf{y}}}_{1,1}^{1} , \ldots ,{\tilde{\mathbf{y}}}_{{{\text{b}},{\text{tl}}}}^{{\text{J}}}\). The proposed algorithm for the training and testing stages is shown in Table 2.

6 Evaluations and Results

To evaluate the effectiveness of the proposed algorithm, we compare it with the \({\text{STFT}} - {\text{SNMF}}\) [11, 12, 33, 37, 39, 41] and \({\text{JDL}}\) [34] models. In these simulations, we use speech signals from the GRID audiovisual corpus [2] as the training and testing data (including different male and female speech samples). There are 34 speakers (18 male and 16 female speakers), and each speaker speaks 1000 utterances. We concatenate all the utterances together for each speaker. For each speaker, we randomly choose 500 utterances for training and 200 utterances for testing. In these simulations, we use two types of speech signal combinations: one for same-gender (male–male or female–female) speech separation, where the combined signals are denoted by M1 and M2 or by F1 and F2, and another for opposite-gender (male–female) speech separation, where the combined signals are denoted by M and F. For same-gender signal separation, eight utterances from same-gender speakers are utilized to form one experimental group, and eight different utterances from same-gender speakers are used to form another experimental group. For opposite-gender speech separation, we choose sixteen male speakers to compose one experimental group and sixteen female speakers to compose another experimental group. The length of each training signal is approximately 60 s, and the length of each testing signal is approximately 10 s. The sampling rate for each speech signal is 8000 Hz, and the signal is transformed into the time–frequency domain by using a 512-point \({\text{STFT}}\) with 50% overlap.
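To make the time–frequency settings concrete, the following NumPy sketch frames a 10 s signal sampled at 8000 Hz with a 512-sample frame and 50% overlap. It assumes that "512-point" refers to both the frame length and the FFT size, that a Hann window is used, and that no padding is applied at the signal edges; none of these details are stated in the text, and the helper `stft` is hypothetical.

```python
import numpy as np

fs = 8000          # sampling rate in Hz (from the text)
n_fft = 512        # frame length and FFT size (assumed to coincide)
hop = n_fft // 2   # 50% overlap gives a hop of 256 samples

def stft(x, n_fft=512, hop=256):
    """Minimal STFT: Hann-windowed frames, one-sided FFT, no edge padding."""
    window = np.hanning(n_fft)   # window type is an assumption
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack(
        [x[i * hop : i * hop + n_fft] * window for i in range(n_frames)],
        axis=1,
    )
    return np.fft.rfft(frames, axis=0)   # shape: (n_fft // 2 + 1, n_frames)

# Stand-in for a 10 s test signal.
x = np.random.default_rng(0).standard_normal(10 * fs)
Z = stft(x, n_fft, hop)

frame_ms = 1000 * n_fft / fs   # frame duration in milliseconds
hop_ms = 1000 * hop / fs       # frame shift in milliseconds
bin_hz = fs / n_fft            # frequency resolution in Hz
```

Under these assumptions each frame spans 64 ms with a 32 ms shift, and the one-sided spectrum has 257 frequency bins spaced 15.625 Hz apart.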

The speech separation performance is evaluated in terms of the signal-to-interference ratio (SIR) [10], source-to-distortion ratio (SDR) [10], average frequency-weighted segmental SNR (fwsegSNR) [38], short-time objective intelligibility (STOI) [35], perceptual evaluation of speech quality (PESQ) [27], Hearing-Aid Speech Perception Index (HASPI) [15], and Hearing-Aid Speech Quality Index (HASQI) [16]. The SDR value estimates the overall speech quality; it is defined as the ratio of the power of the input signal to the power of the difference between the input and reconstructed signals. Higher SDR scores indicate better performance. In addition to the SDR, the SIR captures the error caused by failure to remove interfering signal information during the SS procedure. A higher SIR indicates higher separation quality. The STOI is defined as the correlation between the short-term temporal envelopes of the clean and separated speech signals, and its value ranges from 0 to 1, with a higher STOI score indicating better intelligibility. We choose the fwsegSNR as the objective measure to evaluate the intelligibility of the captured speech signal; a higher value represents better performance. For both hearing-impaired patients and people with normal hearing, the HASQI and HASPI gauge sound quality and perception, respectively. Similar to the STOI, their values range from 0 to 1, and higher scores indicate better sound quality and intelligibility, respectively.
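The SDR definition given above (power of the input divided by the power of the difference between the input and the reconstruction) can be written as a few lines of NumPy. This is a sketch of that simple definition only; toolkits that decompose the error into interference and artifact terms compute the SDR differently. The function name `sdr_db` and the test tone are hypothetical.

```python
import numpy as np

def sdr_db(reference, estimate):
    """SDR in dB: input power over the power of (input - reconstruction)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    error = reference - estimate
    return 10.0 * np.log10(np.sum(reference ** 2) / np.sum(error ** 2))

# Hypothetical example: a tone reconstructed with a 10% amplitude error.
t = np.arange(8000) / 8000.0
clean = np.sin(2 * np.pi * 440.0 * t)
reconstructed = 0.9 * clean

score = sdr_db(clean, reconstructed)
```

In this example the error has one hundredth of the power of the clean signal, so the score is 20 dB.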

First, the separation results of the different strategies are shown in Fig. 6, where the original female and male speech spectrograms are displayed in Fig. 6a and b, respectively. The estimated male speech spectrograms are presented in Fig. 6c, e, and g for the \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\) algorithm, the \({\text{SWT}} - {\text{SNMF}}\) algorithm, and the proposed method (PM), respectively, and the female speech spectrograms are similarly presented in Fig. 6d, f, and h. From this figure, we can see that the SS quality of the \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\) method is poor due to the total rejection of the high-frequency component, whereas the \({\text{SWT}} - {\text{SNMF}}\) method adds unwanted speech components to the estimated male and female speech signals. By contrast, the PM recovers male and female speech signals that closely resemble the original signals.

Second, in Fig. 7, we compare the PM with the existing models in terms of the fwsegSNR. From this figure, it appears that the PM performs very well in all cases compared to the other current methods. In the various SS scenarios, our method improves the fwsegSNR scores by 20.33% for the M1 signal, 17.76% for the M2 signal, 24.93% for the F1 signal, 32.17% for the F2 signal, 30.49% for the M signal, and 15.70% for the F signal compared to the \({\text{DTCWT}} - {\text{SNMF}}\) method.

Third, in Fig. 8, we show that in terms of the SDR and SIR, the PM considerably outperforms the existing models, namely the \({\text{STFT}} - {\text{SNMF}}\), \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\), \({\text{DWPT}} - {\text{SNMF}}\), \({\text{SWT}} - {\text{SNMF}}\), \({\text{DTCWT}} - {\text{SNMF}}\), \({\text{CJD}}\), and \({\text{JDL}}\) algorithms. For all cases of speech separation, the SDR values of the PM are higher than those of the existing models. With the PM, the SDR is improved from 4.72 to 5.29 dB for the M1 signal, from 4.39 to 5.24 dB for the M2 signal, from 5.27 to 5.97 dB for the F1 signal, from 4.61 to 5.85 dB for the F2 signal, from 7.65 to 9.01 dB for the M signal, and from 6.35 to 9.12 dB for the F signal compared to the \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\) model. From this figure, we can also see that the SIR values of the estimated signals are better with the PM than with the existing models. Moreover, we find that the separation results for the opposite-gender signals are much better than those for the same-gender signals.
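The SDR figures quoted in this paragraph can be tabulated to make the gains explicit. The short Python sketch below uses only the six baseline and PM values stated in the text; the variable names and the grouping into same-gender and opposite-gender cases are for illustration.

```python
# SDR in dB as quoted in the text: (DWT-STFT-SNMF baseline, PM).
sdr_values = {
    "M1": (4.72, 5.29),
    "M2": (4.39, 5.24),
    "F1": (5.27, 5.97),
    "F2": (4.61, 5.85),
    "M": (7.65, 9.01),
    "F": (6.35, 9.12),
}

# Absolute SDR gain of the PM over the baseline, per signal.
gains = {name: round(pm - base, 2) for name, (base, pm) in sdr_values.items()}

same_gender = ["M1", "M2", "F1", "F2"]
opposite_gender = ["M", "F"]

# Mean SDR achieved by the PM in each scenario.
mean_pm_same = sum(sdr_values[k][1] for k in same_gender) / len(same_gender)
mean_pm_opposite = sum(sdr_values[k][1] for k in opposite_gender) / len(opposite_gender)

for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} dB")
print(f"mean PM SDR, same gender: {mean_pm_same:.2f} dB")
print(f"mean PM SDR, opposite gender: {mean_pm_opposite:.2f} dB")
```

The per-signal gains range from 0.57 dB (M1) to 2.77 dB (F), and the mean SDR of the PM is roughly 5.6 dB for the same-gender cases against roughly 9.1 dB for the opposite-gender cases.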

Fourth, Tables 3 and 4 present a comparative performance analysis of the PM and the existing methods in terms of the STOI and PESQ. Compared to the existing \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\) method, the PM improves the STOI scores by 13.24% and 10.98% for the M1 and M2 signals, respectively; by 13.20% and 11.73% for the F1 and F2 signals, respectively; and by 24.96% and 10.26% for the M and F signals, respectively. From Table 4, we can also see that the PESQ scores of the estimated signals are better with the PM than with the existing models.

Fifth, Tables 5 and 6 present the HASQI and HASPI results of the different methods, namely the \({\text{STFT}} - {\text{SNMF}}\), \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\), \({\text{DWPT}} - {\text{SNMF}}\), \({\text{SWT}} - {\text{SNMF}}\), \({\text{DTCWT}} - {\text{SNMF}}\), \({\text{CJD}}\), and \({\text{JDL}}\) methods and the PM for the same- and opposite-gender speech separation tasks. From Table 5, one can observe that the PM algorithm yields better HASPI values than the other algorithms for all cases of separation. The PM algorithm outperforms the other seven methods in terms of the HASQI results for all cases of separation.

Sixth, we present the SS performance achieved on the TIMIT database [6] to further confirm the superiority of the PM in mixed speech separation experiments. For these experiments, 24 speakers (12 male and 12 female speakers) were selected from the TIMIT database. Each speaker utters ten sentences, corresponding to a total of 240 sentences. Of the ten sentences uttered by each speaker, the first eight are selected for training, and the remaining two are used for testing. To investigate the performance of our proposed strategy, we consider the SDR, SIR, STOI, and PESQ scores. From Fig. 9 and Table 5, one can clearly see that the proposed strategy separates speech more effectively than the other seven techniques (\({\text{STFT}} - {\text{SNMF}}\), \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\), \({\text{DWPT}} - {\text{SNMF}}\), \({\text{SWT}} - {\text{SNMF}}\), \({\text{DTCWT}} - {\text{SNMF}}\), \({\text{CJD}}\), and \({\text{JDL}}\)) according to the SDR, SIR, STOI, and PESQ scores for opposite-gender signal separation.

Finally, for a practical comparison of the computational load, we compare the execution times required to generate an estimated signal during one iteration when the analysis is implemented in MATLAB on a PC equipped with an Intel® Core™ i7-4790 CPU @ 3.60 GHz. As seen from the results in Table 8, the execution time of the PM is shorter than those of all other methods except the \({\text{STFT}} - {\text{SNMF}}\) and \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\) algorithms, while the other metrics of the PM (see Tables 3, 4, 5, 6, 7 and Figs. 7, 8, 9) are better than those of the other methods. The P/O ratios (where P refers to the PM and O signifies another method considered for comparison) in terms of the execution times are also listed in Table 8. The results confirm that the proposed method can reduce the online computational load by factors of approximately 3.68, 1.36, 1.08, 4.36, and 3.94 relative to the conventional methods listed in the table from top to bottom, excluding the \({\text{STFT}} - {\text{SNMF}}\) and \({\text{DWT}} - {\text{STFT}} - {\text{SNMF}}\) algorithms.

7 Conclusion

In this paper, we have proposed an improved speech separation model using the DTCWT and the STFT with SNMF. The development of this dual-transform (DTCWT and STFT)-based speech separation model is the main focus of our research. First, we apply the DTCWT and STFT successively to the input speech signal to provide a more flexible basic framework for improved feature extraction. Second, the speech signal is sparsely represented by applying SNMF to obtain the corresponding weight matrices considering only the magnitude spectrograms used in the testing phase. Finally, the estimated separated speech signals are generated by applying the ISTFT and IDTCWT consecutively. The DTCWT separates the high- and low-frequency components of the time-domain signal by means of filters, and the STFT accurately characterizes the time–frequency components. For this reason, the quality and intelligibility of the separated speech signals are improved compared with the results of existing methods. In evaluations of the improvement in the separated speech signals using various evaluation methods, the experimental outcomes demonstrate that the overall performance of the proposed speech separation model is superior to that of the existing models. In the future, we plan to investigate alternative training and testing algorithms using deep neural networks.

Data availability

The datasets generated or analyzed during the current study are not publicly available because they are the subject of ongoing research but are available from the first author upon reasonable request.

References

G. Bao, Y. Xu, Z. Ye, Learning a discriminative dictionary for single-channel speech separation. IEEE Trans. Audio Speech Lang. Process. 22(7), 1130–1138 (2014)

M. Cooke, J. Barker, S. Cunningham, X. Shao, An audio-visual corpus for speech perception and automatic speech recognition. J. Acoust. Soc. Am. 120(5), 2421 (2006)

D.D. Lee, H.S. Seung, Learning the parts of objects by non-negative matrix factorization. Nature 401, 788–791 (1999)

M.G. Emad, E. Hakan, Single channel speech music separation using nonnegative matrix factorization with sliding windows and spectral masks. Digital Signal Processing (DSP), in 17th International Conference in August (2011)

G.G. Francois, J.M. Gautham, Stopping criteria for non-negative matrix factorization based supervised and semi-supervised source separation. IEEE Signal Processing Letters, November (2014)

J. Garofolo, et al., TIMIT acoustic-phonetic continuous speech corpus. LDC93S1 (1993)

E.M. Grais, H. Erdogan, Discriminative non-negative dictionary learning using cross-coherence penalties for single channel source separation, in Proceedings of the International Conference on Spoken Language Processing (INTERSPEECH). Lyon, France, 25–29 August (2013)

R. Hidayat, A. Bejo, S. Sumaryono, A. Winursito, Denoising speech for MFCC feature extraction using wavelet transformation in speech recognition system, in 10th International Conference on Information Technology and Electrical Engineering (2018)

P.O. Hoyer, Non-negative matrix factorization with sparseness constraints. J. Mach. Learn. Res. 5, 1457–1469 (2004)

Y. Hu, P.C. Loizou, Evaluation of objective quality measures for speech enhancement. IEEE Trans. Audio Speech Lang. Process. 16(1), 229–238 (2008)

M.S. Islam, T.H. Al Mahmud, W.U. Khan, Z. Ye, Supervised single-channel speech enhancement based on stationary wavelet transforms and non-negative matrix factorization with concatenated framing process and subband smooth ratio mask. J. Sig. Process. Syst. Signal. Image Video Technol. 1–14 (2019)

M.S. Islam, T.H. Al Mahmud, W.U. Khan, Z. Ye, Supervised single-channel speech enhancement based on dual-tree complex wavelet transforms and nonnegative matrix factorization using the joint learning process and subband smooth ratio mask. Electronics 8, 353 (2019)

G.J. Jang, T.W. Lee, A maximum likelihood approach to single-channel source separation. J. Mach. Learn. Res. 4, 1365–1392 (2003)

D.S. Kapoor, A.K. Kohli, Gain adapted optimum mixture estimation scheme for single-channel speech separation. Circuits Syst. Signal Process. 32(5), 2335–2351 (2013)

J.M. Kates, K.H. Arehart, The hearing-aid speech perception index (HASPI). Speech Commun. 65, 75–93 (2014)

J.M. Kates, K.H. Arehart, The hearing-aid speech quality index (HASQI). J. Audio Eng. Soc. 58(5), 363–381 (2010)

N.G. Kingsbury, The dual-tree complex wavelet transforms: a new efficient tool for image restoration and enhancement, in Proceedings of the 9th European Signal Process Conference. EUSIPCO, Rhodes, Greece. 8–11 Sept (1998)

J. Le Roux, F.J. Weninger, J.R. Hershey, Sparse NMF: half-baked or well done? Technical Report TR2015-023, Mitsubishi Electric Research Laboratories (MERL), Cambridge, MA, USA, March (2015)

D. Lee, H.S. Seung, Algorithms for non-negative matrix factorization. Adv. Neural Inf. Process. Syst. 13, 556–562 (2001)

A. Mahmoodzadeh, H.R. Abutalebi, Hybrid approach to single-channel speech separation based on coherent incoherent modulation filtering. Circuits Syst. Signal Process. 36(5), 1970–1988 (2017)

S. Mavaddati, A novel singing voice separation method based on sparse non-negative matrix factorization and low-rank modeling. Iran. J. Electr. Electron. Eng. 15, 2 (2019)

P. Mercorelli, A denoising procedure using wavelet packets for instantaneous detection of pantograph oscillations. Mech. Syst. Signal Process. 35, 137–149 (2013)

P. Paatero, U. Tapper, Positive matrix factorization: a non-negative factor model with optimal utilization of error estimates of data values. Environmetrics 5(2), 111–126 (1994)

B.A. Pearlmutter, R.K. Olsson, Linear program differentiation for single-channel speech separation, in 16th IEEE Signal Processing Society Workshop in MLSP, Arlington, VA, USA (2006)

T. Pham, Y.S. Lee, Y.B. Lin, T.C. Tai, J.C. Wang, Single channel source separation using sparse nmf and graph regularization. ASE Big Data Soc. Inform. 55, 1–7 (2015)

B. Premanode, J. Vongprasert, C. Toumazou, Noise reduction for nonlinear nonstationary time series data using averaging intrinsic mode function. Algorithms 6(3), 407–429 (2013)

A.W. Rix, J.G. Beerends, M.P. Hollier, A.P. Hekstra, Perceptual evaluation of speech quality (PESQ)-a new method for speech quality assessment of telephone networks and codecs, in IEEE International Conference on Acoustics, Speech, Signal Processing. 6, 7–11 May (2001)

S.T. Roweis, One microphone source separation. Advances in Neural Information Processing Systems. 793–799 (2001).

S.T. Roweis, Factorial models and refiltering for speech separation and denoising, in Eurospeech, Geneva, 1009–1012 (2003)

M.N. Schmidt, R.K. Olsson, Single-channel speech separation using sparse non-negative matrix factorization, in 9th International Conference on Spoken Language Processing. Pittsburgh, PA, USA (2006)

M.N. Schmidt, M. Morup, Sparse non-negative matrix factor 2-D deconvolution for blind single-channel source separation. Indep. Compon. Anal. Blind Signal Sep. 3889, 700–707 (2006)

S.M. Seedahmed, A generalised wavelet packet‐based anonymization approach for ECG security application. 9, 18, 6137–6147 (2016)

L. Sun, C. Zhao, M. Su, F. Wang, Single-channel blind source separation based on joint dictionary with common sub-dictionary. Int. J. Speech Technol. 21(1), 19–27 (2018)

L. Sun, K. Xie, T. Gu, J. Chen, Z. Yang, Joint dictionary learning using a new optimization method for single-channel blind source separation. Speech Commun. 106, 85–94 (2019)

C.H. Taal, R.C. Hendriks, R. Heusdens, J. Jensen, An algorithm for intelligibility prediction of time-frequency weighted noisy speech. IEEE Trans. Audio Speech Lang. Process. 19(7), 2125–2136 (2011)

P. Tianliang, C. Yang, L. Zengli, A time-frequency domain blind source separation method for underdetermined instantaneous mixtures. Circuits Syst. Signal Process. 34(12), 3883–3895 (2015)

Y.V. Varshney, Z.A. Abbasi, M.R. Abidi, O. Farooq, Frequency selection based separation of speech signals with reduced computational time using sparse NMF. Arch. Acoust. 42(2), 287–295 (2017)

E. Vincent, R. Gribonval, C. Févotte, Performance measurement in blind audio source separation. IEEE Trans. Audio Speech Lang. Process. 14(4), 1462–1469 (2006)

S. Wang, A. Chern, Y. Tsao, J. Hung, X. Lu, Y. Lai, B. Su, Wavelet speech enhancement based on non-negative matrix factorization. IEEE Signal Process. Lett. 23, 1101–1105 (2016)

Y. Wang, Y. Li, K.C. Ho, A. Zare, M. Skubic, Sparsity promoted non-negative matrix factorization for source separation and detection, in Proceedings of the 19th International Conference on Digital Signal Processing. IEEE. 20–23 August (2014).

Z. Wang, F. Sha, Discriminative non-negative matrix factorization for single-channel speech separation, in IEEE International Conference on Acoustics, Speech, and Signal Processing (2014)

Y. Xu, G. Bao, X. Xu, Z. Ye, Single-channel speech separation using sequential discriminative dictionary learning. Signal Process. 106, 134–140 (2015)

V.V. Yash, A.A. Zia, R.A. Musiur, O. Farooq, Variable sparsity regularization factor based SNMF for monaural speech separation, in 40th International Conference on Telecommunications and Signal Processing (TSP). 5–7 July (2017)

Acknowledgements

This research was supported by the National Natural Science Foundation of China (Grant No. 61671418).

Cite this article

Hossain, M.I., Islam, M.S., Khatun, M.T. et al. Dual-Transform Source Separation Using Sparse Nonnegative Matrix Factorization. Circuits Syst Signal Process 40, 1868–1891 (2021). https://doi.org/10.1007/s00034-020-01564-x

DOI: https://doi.org/10.1007/s00034-020-01564-x