Abstract

This paper considers the parameter identification for a special class of nonlinear systems, i.e., bilinear-in-parameter systems. Based on the hierarchical identification principle, a hierarchical stochastic gradient (HSG) estimation algorithm is presented. The basic idea is to decompose a bilinear-in-parameter system into two subsystems and to derive the HSG identification algorithm for estimating the system parameters by replacing the unknown variables in the information vectors with their estimates obtained at the previous time. The convergence analysis of the proposed algorithm indicates that the parameter estimation errors converge to zero under persistent excitation conditions. The simulation results show that the proposed algorithm is effective.

1 Introduction

Parameter estimation algorithms are often obtained by minimizing a criterion function. The gradient search, least squares search and Newton search are useful tools for solving nonlinear optimization problems [15, 23, 44–46]. Nonlinearities exist widely in industrial processes [21]. Typical nonlinear systems are the block-oriented systems, including input nonlinear systems [25, 30, 38, 42], output nonlinear systems [11, 41] or Wiener nonlinear systems [9], input–output (i.e., Hammerstein–Wiener) nonlinear systems [2, 31] and feedback nonlinear systems [14]. When the static nonlinear part of a block-oriented system can be expressed as a linear combination of known basis functions, the corresponding systems are the Hammerstein systems, Wiener systems and their combinations [16, 40]. A direct method of identifying block-oriented nonlinear systems is the over-parametrization method [3]. By re-parameterizing the nonlinear system, the output becomes linear in the unknown parameters, so that any linear identification algorithm can be applied [4]. However, the resulting identification model contains the cross-products between the parameters of the nonlinear part and those of the linear part, so more parameters have to be estimated than the original nonlinear system contains.

In the area of system identification, linear-in-parameter output error moving average systems are common, for which Wang and Tang [36] presented a recursive least squares estimation algorithm and discussed several gradient-based iterative estimation algorithms using the filtering technique [37]; Wang and Zhu [39] presented a multi-innovation parameter estimation algorithm. A system that includes product terms of the parameters is called a bilinear-in-parameter system. Bai and Liu [5] discussed the least squares solution of the normalized iterative method, the over-parametrization method and the numerical method for bilinear-in-parameter systems; Wang et al. [24] revisited the unweighted least squares solution and extended it to the case of colored noise; Abrahamsson et al. [1] presented a two-stage method based on the approximation of a weighting matrix and discussed its applications to submarine detection. Other methods include the Kalman filtering-based identification approaches [10, 23].

The convergence of identification algorithms is a basic topic in system identification and attracts much attention. Recently, an auxiliary model-based recursive least squares algorithm and an auxiliary model-based hierarchical gradient algorithm have been proposed for dual-rate state space systems [12] and for multivariable Box–Jenkins systems using the data filtering [32–34]. A modeling and multi-innovation parameter identification method has been proposed for Hammerstein nonlinear state space systems using the filtering technique [35]; a recursive parameter and state estimation algorithm has been proposed for an input nonlinear state space system using the hierarchical identification principle [29]; an auxiliary model-based gradient algorithm has been reported for time-delay systems by transforming the input–output representation into a regression model, and its convergence was studied [13]. The convergence of the hierarchical least squares algorithm has been analyzed for bilinear-in-parameter systems [26]. On the basis of the work in [26], this paper derives a hierarchical stochastic gradient (HSG) algorithm for bilinear-in-parameter systems based on the decomposition idea and analyzes its performance.

The rest of this paper is organized as follows. Section 2 presents an HSG algorithm for bilinear-in-parameter systems. Section 3 analyzes the performance of the HSG algorithm. Section 4 provides an illustrative example to show that the proposed algorithm is effective. Finally, a brief summary of the main contents is given in Sect. 5.

2 System Description and the HSG Algorithm

Consider the following bilinear-in-parameter system [5, 26],

\[y(t)=\varvec{a}^{\tiny \text{ T }}\varvec{F}(t)\varvec{b}+v(t), \tag{1}\]

where y(t) is the system output, \(\varvec{F}(t) \in {\mathbb {R}}^{m\times n}\) is composed of available measurement data, v(t) is a white noise sequence with zero mean and finite variance \(\sigma ^2\) and \(\varvec{a}=[a_1, a_2,\ldots , a_m]^{\tiny \text{ T }}\in {\mathbb {R}}^m\) and \(\varvec{b}=[b_1, b_2, \ldots , b_n]^{\tiny \text{ T }}\in {\mathbb {R}}^n\) are the unknown parameter vectors to be estimated.

For the identification model in (1), assume that m and n are known, and \(y(t)=0\), \(v(t)=0\) for \(t\leqslant 0\). Note that for any nonzero constant \(\lambda \), the pair \(\lambda \varvec{a}\), \(\varvec{b}/\lambda \) gives the same input–output relationship in (1), so the constant \(\lambda \) has to be fixed. Without loss of generality, we adopt the following assumption.

Assumption 1

\(\lambda =\Vert \varvec{b}\Vert \), and the first element of \(\varvec{b}\) is positive, i.e., \(b_1>0\), where the norm of the vector \(\varvec{X}\) is defined by \(\Vert \varvec{X}\Vert ^2:=\mathrm{tr}[\varvec{X}\varvec{X}^{\tiny \text{ T }}]\).
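The scaling ambiguity and the effect of Assumption 1 can be checked numerically. The following is a minimal sketch, not part of the original derivation: the helper name `normalize_pair` and the numerical values are hypothetical, and it assumes \(b_1\ne 0\) so that the sign can be fixed.

```python
import numpy as np

def normalize_pair(a, b):
    """Rescale (a, b) to (lam * a, b / lam) with lam = +/- ||b||.

    The sign of lam is chosen so that the first element of b / lam is
    positive (assumes b[0] != 0). The product a^T F b is unchanged.
    """
    lam = np.linalg.norm(b)
    if b[0] < 0:
        lam = -lam
    return a * lam, b / lam

# Hypothetical data with m = 2 and n = 3 (illustration only).
rng = np.random.default_rng(0)
F = rng.standard_normal((2, 3))
a = np.array([1.5, -0.7])
b = np.array([-2.0, 1.0, 0.5])

a_n, b_n = normalize_pair(a, b)
same_output = np.isclose(a @ F @ b, a_n @ F @ b_n)
```

Both pairs produce the same noise-free output, which is why only the normalized pair can be identified from input–output data.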

Define the vectors \({\varvec{\psi }}(t):=\varvec{F}(t)\varvec{b}\in {\mathbb {R}}^m\) and \({\varvec{\varphi }}(t):=\varvec{F}^{\tiny \text{ T }}(t)\varvec{a}\in {\mathbb {R}}^n\). Then Eq. (1) can be written as

\[y(t)={\varvec{\psi }}^{\tiny \text{ T }}(t)\varvec{a}+v(t), \tag{2}\]

or

\[y(t)={\varvec{\varphi }}^{\tiny \text{ T }}(t)\varvec{b}+v(t). \tag{3}\]

Define the following two cost functions:

Using the negative gradient search and minimizing \(J_1(\varvec{a})\) and \(J_2(\varvec{b})\), we obtain the estimates \(\hat{\varvec{a}}(t)\) of \(\varvec{a}\) in Subsystem (2) and \(\hat{\varvec{b}}(t)\) of \(\varvec{b}\) in Subsystem (3) at time t:

Since the vectors \({\varvec{\psi }}(t)\) and \({\varvec{\varphi }}(t)\) contain the unknown parameter vectors \(\varvec{b}\) and \(\varvec{a}\), the algorithm in (4)–(7) is impossible to implement. This problem can be solved by replacing \(\varvec{b}\) and \(\varvec{a}\) with their corresponding estimates \(\hat{\varvec{b}}(t-1)\) and \(\hat{\varvec{a}}(t-1)\) at time \(t-1\). Letting \(\hat{{\varvec{\psi }}}(t):=\varvec{F}(t)\hat{\varvec{b}}(t-1)\in {\mathbb {R}}^m\) and \(\hat{{\varvec{\varphi }}}(t):=\varvec{F}^{\tiny \text{ T }}(t)\hat{\varvec{a}}(t-1)\in {\mathbb {R}}^n\), we have the following HSG algorithm for bilinear-in-parameter systems in (1):

The initial values are taken to be \(\hat{\varvec{a}}(0)=\mathbf{1}_m/p_0\), \(\hat{\varvec{b}}(0)=\mathbf{1}_n/p_0\), where \(p_0\) is a large number, e.g., \(p_0=10^6\).
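The recursion described above can be sketched in code. The display equations (8)–(11) are not reproduced in this copy, so the sketch below assumes the usual stochastic gradient form: a common innovation \(e(t)=y(t)-\hat{{\varvec{\psi }}}^{\tiny \text{ T }}(t)\hat{\varvec{a}}(t-1)\), step sizes \(1/r_1(t)\) and \(1/r_2(t)\) with \(r_1(t)=r_1(t-1)+\Vert \hat{{\varvec{\psi }}}(t)\Vert ^2\) and \(r_2(t)=r_2(t-1)+\Vert \hat{{\varvec{\varphi }}}(t)\Vert ^2\), and \(r_1(0)=r_2(0)=1\). The function names `hsg_step` and `hsg` and the array layout are hypothetical.

```python
import numpy as np

def hsg_step(a_hat, b_hat, r1, r2, y_t, F_t):
    """One assumed HSG update from time t-1 to t.

    a_hat, b_hat: estimates at time t-1; F_t: the m x n data matrix F(t).
    """
    psi_hat = F_t @ b_hat        # psi_hat(t) = F(t) b_hat(t-1), in R^m
    phi_hat = F_t.T @ a_hat      # phi_hat(t) = F^T(t) a_hat(t-1), in R^n
    # Innovation; identical to y_t - phi_hat @ b_hat for both subsystems.
    e = y_t - psi_hat @ a_hat
    r1 = r1 + psi_hat @ psi_hat  # assumed recursion for r_1(t)
    r2 = r2 + phi_hat @ phi_hat  # assumed recursion for r_2(t)
    a_new = a_hat + psi_hat / r1 * e
    b_new = b_hat + phi_hat / r2 * e
    return a_new, b_new, r1, r2

def hsg(y, F, p0=1e6):
    """Run the sketch over data y of shape (L,) and F of shape (L, m, n)."""
    L, m, n = F.shape
    a_hat = np.ones(m) / p0      # a_hat(0) = 1_m / p0
    b_hat = np.ones(n) / p0      # b_hat(0) = 1_n / p0
    r1 = r2 = 1.0                # assumed r_1(0) = r_2(0) = 1
    for t in range(L):
        a_hat, b_hat, r1, r2 = hsg_step(a_hat, b_hat, r1, r2, y[t], F[t])
    return a_hat, b_hat
```

Both updates use the estimates from time \(t-1\), so \(\hat{{\varvec{\psi }}}(t)\) and \(\hat{{\varvec{\varphi }}}(t)\) are computed before either estimate is overwritten. Any renormalization of \(\hat{\varvec{b}}(t)\) to satisfy Assumption 1 is left out of this sketch.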

3 The Convergence Analysis

Lemma 1

[8] Assume that the nonnegative sequences T(t), \(\eta (t)\) and \(\zeta (t)\) satisfy the inequality

and \(\sum \limits _{t=1}^{\infty }\eta (t)<\infty \), then we have \(\sum \limits _{t=1}^{\infty }\zeta (t)<\infty \) and T(t) is bounded.

The proof of Lemma 1 is straightforward and hence omitted.
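For completeness, the argument can be sketched as follows. The inequality of Lemma 1 is not reproduced in this copy; the sketch assumes it has the common form \(T(t)\leqslant T(t-1)+\eta (t)-\zeta (t)\).

```latex
% Assumed inequality: T(t) <= T(t-1) + eta(t) - zeta(t).
% Summing it from i = 1 to t telescopes the T terms:
\begin{align*}
T(t) + \sum_{i=1}^{t}\zeta(i)
  &\leqslant T(0) + \sum_{i=1}^{t}\eta(i)
   \leqslant T(0) + \sum_{i=1}^{\infty}\eta(i) < \infty .
\end{align*}
% Since T(t) >= 0 and zeta(i) >= 0, each term on the left is bounded by
% the right-hand side, so T(t) is bounded and the partial sums of zeta,
% being nondecreasing and bounded, converge.
```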

Theorem 1

For the system in (1) and the HSG algorithm in (8)–(11), assume that v(t) is a white noise sequence with zero mean and variance \(\sigma ^2\), and that there exist an integer N and two positive constants \(c_1\) and \(c_2\) such that the following persistent excitation conditions hold:

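Then the parameter estimation errors converge to zero in the mean square sense, i.e.,

\[\lim \limits _{t\rightarrow \infty }\mathrm{E}\left[ \Vert \hat{\varvec{a}}(t)-\varvec{a}\Vert ^2\right] =0,\quad \lim \limits _{t\rightarrow \infty }\mathrm{E}\left[ \Vert \hat{\varvec{b}}(t)-\varvec{b}\Vert ^2\right] =0.\]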

Proof

Define two parameter error vectors:

Substituting (1) and (8) into (12), we have

where

Taking the norm of both sides of (15) and using (16) yield

Define \(\tilde{y}_2(t):=\hat{\varvec{a}}^{\tiny \text{ T }}(t-1)\varvec{F}(t)\tilde{\varvec{b}}(t-1)\in {\mathbb {R}}\), \(\xi _2(t):=\tilde{\varvec{a}}^{\tiny \text{ T }}(t-1)\varvec{F}(t)\varvec{b}\in {\mathbb {R}}\). Similarly, we have

Let \(T(t):=\Vert \tilde{\varvec{a}}(t)\Vert ^2+\Vert \tilde{\varvec{b}}(t)\Vert ^2\). Using (18), (19), (9) and (11) gives

where

When \(\xi _1^2>\varepsilon \) or \(\xi _2^2>\varepsilon \) or \(\gamma (t)<0\) (\(\varepsilon \) is a given positive number), we let \(\tilde{\varvec{a}}(t):=\tilde{\varvec{a}}(t-1)\) and \(\tilde{\varvec{b}}(t):=\tilde{\varvec{b}}(t-1)\), and thus we have \(T(t)=T(t-1)\). When \(\xi _1^2 \leqslant \varepsilon \) and \(\xi _2^2\leqslant \varepsilon \) and \(\gamma (t)\geqslant 0\) , since v(t) is a white noise with zero mean and variance \(\sigma ^2\), and \(\varvec{F}(t)\), \(\hat{\varvec{a}}(t-1)\), \(\hat{\varvec{b}}(t-1)\), \(r_1(t)\), \(r_2(t)\), \(\xi _1(t)\) and \(\xi _2(t)\) are independent of v(t), taking expectation of both sides of (20), we have

From (9), we have

Similarly, from (11), we have

Hence, the sum of the last term on the right-hand side of (21) from \(t=1\) to \(\infty \) is finite. Applying Lemma 1 to (21), we conclude that \(\mathrm{E}[T(t)]\) converges to a constant. So there exist a constant \(C>0\) and \(t_0\) such that \(\mathrm{E}[T(t)]\leqslant C\) for \(t>t_0\). From (21), it follows that

Noting that \(r_1(t)>0\) and \(r_2(t)>0\), we have

Define the identification innovation

From (14), we have

Replacing t in (23) with \(t+j\) and successive substitutions give

Using (16), it follows that

Substituting (24) into (25) gives

Squaring and summing for j from \(j=1\) to \(j=N-1\), dividing by \(r_1(t+j)\), and using (A1), (24) and (26), we have

Since \(\mathrm{E}[T(t)]=\mathrm{E}[\Vert \tilde{\varvec{a}}(t)\Vert ^2+\Vert \tilde{\varvec{b}}(t)\Vert ^2]\leqslant C\), we have \(\mathrm{E}[\Vert \tilde{\varvec{a}}(t)\Vert ^2]\leqslant C \). Taking the expectation and the limit of both sides of (27), it follows that

Assume that \(\lim \limits _{t\rightarrow \infty }\Vert \hat{{\varvec{\psi }}}(t+j)\Vert ^2/r_1(t+j)=0\). Using (22) gives \(\lim \limits _{t\rightarrow \infty }\mathrm{E}\left[ \Vert \tilde{\varvec{a}}(t)\Vert ^2\right] =0\). Similarly, we can obtain \(\lim \limits _{t\rightarrow \infty }\mathrm{E}[\Vert \tilde{\varvec{b}}(t)\Vert ^2]=0\). This completes the proof. \(\square \)

In order to improve the convergence rate of the HSG algorithm, we introduce a forgetting factor \(\lambda \) \((0\leqslant \lambda \leqslant 1)\) in (8)–(11) and the corresponding algorithm is called the forgetting factor HSG (FF-HSG) algorithm, which is as follows:

Obviously, when the forgetting factor \(\lambda =1\), the FF-HSG algorithm reduces to the HSG algorithm; when \(\lambda =0\), the FF-HSG algorithm degenerates into the hierarchical projection algorithm.
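The two limiting cases can be illustrated with a short sketch. The FF-HSG equations are not reproduced in this copy, so the code assumes that the forgetting factor multiplies the previous values of \(r_1\) and \(r_2\), i.e., \(r_1(t)=\lambda r_1(t-1)+\Vert \hat{{\varvec{\psi }}}(t)\Vert ^2\) and \(r_2(t)=\lambda r_2(t-1)+\Vert \hat{{\varvec{\varphi }}}(t)\Vert ^2\), which is consistent with both limits stated above. The function name `ff_hsg_step` and the default value of `lam` are hypothetical.

```python
import numpy as np

def ff_hsg_step(a_hat, b_hat, r1, r2, y_t, F_t, lam=0.98):
    """One assumed FF-HSG update with forgetting factor lam in [0, 1].

    With lam = 0 the step sizes become 1 / ||psi_hat||^2 and
    1 / ||phi_hat||^2, so psi_hat and phi_hat must be nonzero.
    """
    psi_hat = F_t @ b_hat
    phi_hat = F_t.T @ a_hat
    e = y_t - psi_hat @ a_hat            # common innovation
    r1 = lam * r1 + psi_hat @ psi_hat    # assumed forgetting recursion
    r2 = lam * r2 + phi_hat @ phi_hat
    a_new = a_hat + psi_hat / r1 * e
    b_new = b_hat + phi_hat / r2 * e
    return a_new, b_new, r1, r2

# Hypothetical single-sample check with m = n = 2.
a0, b0, F_t, y_t = np.ones(2), np.ones(2), np.eye(2), 1.0
a_sg, _, _, _ = ff_hsg_step(a0, b0, 1.0, 1.0, y_t, F_t, lam=1.0)  # HSG case
a_pj, _, _, _ = ff_hsg_step(a0, b0, 1.0, 1.0, y_t, F_t, lam=0.0)  # projection case
```

In the projection case the updated \(\hat{\varvec{a}}(t)\) reproduces the current output exactly, \(\hat{{\varvec{\psi }}}^{\tiny \text{ T }}(t)\hat{\varvec{a}}(t)=y(t)\), which makes the estimate track quickly but leaves it sensitive to the noise v(t).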

4 Example

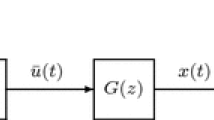

Consider the following bilinear-in-parameter system with \(m=2\) and \(n=3\),

where \(\Vert \varvec{b}\Vert =1\). In simulation, we generate a persistent excitation sequence with zero mean and unit variance as the input u(t) and take v(t) to be an uncorrelated noise sequence with zero mean and variance \(\sigma ^2=0.10^2\). Taking the data length \(L=3000\) and using the HSG algorithm to generate the parameter estimates \(\hat{\varvec{a}}(t)\) and \(\hat{\varvec{b}}(t)\) from the input–output data \(\{y(t),\varvec{F}(t)\): \(t=1,2,3\ldots \}\), the parameter estimates and their estimation errors are given in Tables 1, 2 and 3, and the estimation error \(\delta :=\Vert \hat{{\varvec{\theta }}}-{\varvec{\theta }}\Vert /\Vert {\varvec{\theta }}\Vert \) versus t is shown in Fig. 1.
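The error measure \(\delta \) can be computed as in the following minimal sketch, which assumes that \({\varvec{\theta }}\) stacks the two parameter vectors, \({\varvec{\theta }}:=[\varvec{a}^{\tiny \text{ T }},\varvec{b}^{\tiny \text{ T }}]^{\tiny \text{ T }}\). The function name `relative_error` and the numbers in the check are hypothetical and are not the parameters of the example.

```python
import numpy as np

def relative_error(a_hat, b_hat, a, b):
    """delta = ||theta_hat - theta|| / ||theta|| with theta = [a; b] (assumed)."""
    theta_hat = np.concatenate([a_hat, b_hat])
    theta = np.concatenate([a, b])
    return np.linalg.norm(theta_hat - theta) / np.linalg.norm(theta)

# Hypothetical values: estimates at zero give a relative error of exactly 1.
delta_zero = relative_error(np.zeros(2), np.zeros(1),
                            np.array([3.0, 4.0]), np.array([0.0]))
```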

From Tables 1, 2, 3 and Fig. 1, we can draw the following conclusions.

5 Conclusions

This paper investigates the performance of the HSG algorithm for bilinear-in-parameter systems. The theoretical analysis shows that the estimates converge to the true values under the persistent excitation conditions, and the simulation results verify the proposed convergence theorem. The method used in this paper can be extended to analyze the convergence of identification algorithms for linear or nonlinear control systems [7, 19, 20, 43], and can be applied to hybrid switching-impulsive dynamical networks [18], uncertain chaotic delayed nonlinear systems [17] or other fields [6, 27, 28].

References

R. Abrahamsson, S.M. Kay, P. Stoica, Estimation of the parameters of a bilinear model with applications to submarine detection and system identification. Digit. Signal Process. 17(4), 756–773 (2007)

A. Atitallah, S. Bedoui, K. Abderrahim, Identification of Wiener time delay systems based on hierarchical gradient approach, in The 8th Vienna International Conference on Mathematical Modelling—MATHMOD, IFAC-PapersOnLine 48(1), 403–408 (2015)

E.W. Bai, An optimal two-stage identification algorithm for Hammerstein–Wiener nonlinear systems. Automatica 34(3), 333–338 (1998)

E.W. Bai, A blind approach to the Hammerstein–Wiener model identification. Automatica 38(6), 967–979 (2002)

E.W. Bai, Y. Liu, Least squares solutions of bilinear equations. Syst. Control Lett. 55(6), 466–472 (2006)

X. Cao, D.Q. Zhu, S.X. Yang, Multi-AUV target search based on bioinspired neurodynamics model in 3-D underwater environments. IEEE Trans. Neural Netw. Learn. Syst. (2016). doi:10.1109/TNNLS.2015.2482501

Z.Z. Chu, D.Q. Zhu, S.X. Yang, Observer-based adaptive neural network trajectory tracking control for remotely operated vehicle. IEEE Trans. Neural Netw. Learn. Syst. (2016). doi:10.1109/TNNLS

F. Ding, G.J. Liu, X.P. Liu, Parameter estimation with scarce measurements. Automatica 47(8), 1646–1655 (2011)

F. Ding, X.M. Liu, M.M. Liu, The recursive least squares identification algorithm for a class of Wiener nonlinear systems. J. Franklin Inst. 353(7), 1518–1526 (2016)

F. Ding, X.M. Liu, X.Y. Ma, Kalman state filtering based least squares iterative parameter estimation for observer canonical state space systems using decomposition. J. Comput. Appl. Math. 301, 135–143 (2016)

F. Ding, X.H. Wang, Q.J. Chen, Y.S. Xiao, Recursive least squares parameter estimation for a class of output nonlinear systems based on the model decomposition. Circuits Syst. Signal Process. (2016). doi:10.1007/s00034-015-0190-6

F. Ding, X.M. Liu, Y. Gu, An auxiliary model based least squares algorithm for a dual-rate state space system with time-delay using the data filtering. J. Franklin Inst. 353(2), 398–408 (2016)

F. Ding, Y. Gu, Performance analysis of the auxiliary model-based stochastic gradient parameter estimation algorithm for state-space systems with one-step state delay. Circuits Syst. Signal Process. 32(2), 585–599 (2013)

M. Gilson, P. Van den Hof, Instrumental variable methods for closed-loop system identification. Automatica 41(2), 241–249 (2005)

G.C. Goodwin, K.S. Sin, Adaptive Filtering Prediction and Control (Prentice-Hall, Englewood Cliffs, 1984)

A. Haryanto, K.S. Hong, Maximum likelihood identification of Wiener–Hammerstein models. Mech. Syst. Signal Process. 41(1–2), 54–70 (2013)

Y. Ji, X.M. Liu, F. Ding, New criteria for the robust impulsive synchronization of uncertain chaotic delayed nonlinear systems. Nonlinear Dyn. 79(1), 1–9 (2015)

Y. Ji, X.M. Liu, Unified synchronization criteria for hybrid switching-impulsive dynamical networks. Circuits Syst. Signal Process. 34(5), 1499–1517 (2015)

H. Li, Y. Shi, W. Yan, On neighbor information utilization in distributed receding horizon control for consensus-seeking. IEEE Trans. Cybern. (2016). doi:10.1109/TCYB.2015.2459719

H. Li, Y. Shi, W. Yan, Distributed receding horizon control of constrained nonlinear vehicle formations with guaranteed \(\gamma \)-gain stability. Automatica 68, 148–154 (2016)

H. Li, Y. Shi, Robust H-infinity filtering for nonlinear stochastic systems with uncertainties and random delays modeled by Markov chains. Automatica 48(1), 159–166 (2012)

L. Ljung, System Identification: Theory for the User, 2nd edn. (Prentice Hall, Englewood Cliffs, 1999)

J. Pan, X.H. Yang, H.F. Cai, B.X. Mu, Image noise smoothing using a modified Kalman filter. Neurocomputing 173, 1625–1629 (2016)

J.D. Wang, Q.H. Zhang, L. Ljung, Revisiting Hammerstein system identification through the two-stage algorithm for bilinear parameter estimation. Automatica 45(11), 2627–2633 (2009)

D.Q. Wang, Hierarchical parameter estimation for a class of MIMO Hammerstein systems based on the reframed models. Appl. Math. Lett. 57, 13–19 (2016)

X.H. Wang, F. Ding, F.E. Alsaadi, T. Hayat, Convergence analysis of the hierarchical least squares algorithm for bilinear-in-parameter systems. Circuits Syst. Signal Process. (2016). doi:10.1007/s00034-016-0278-7

T.Z. Wang, J. Qi, H. Xu et al., Fault diagnosis method based on FFT–RPCA–SVM for cascaded-multilevel inverter. ISA Trans. 60, 156–163 (2016)

T.Z. Wang, H. Wu, M.Q. Ni et al., An adaptive confidence limit for periodic non-steady conditions fault detection. Mech. Syst. Signal Process. 72–73, 328–345 (2016)

X.H. Wang, F. Ding, Recursive parameter and state estimation for an input nonlinear state space system using the hierarchical identification principle. Signal Process. 117, 208–218 (2015)

D.Q. Wang, F. Ding, Parameter estimation algorithms for multivariable Hammerstein CARMA systems. Inf. Sci. 355, 237–248 (2016)

Y.J. Wang, F. Ding, Recursive least squares algorithm and gradient algorithm for Hammerstein–Wiener systems using the data filtering. Nonlinear Dyn. 84(2), 1045–1053 (2016)

Y.J. Wang, F. Ding, Novel data filtering based parameter identification for multiple-input multiple-output systems using the auxiliary model. Automatica 71, 308–313 (2016)

Y.J. Wang, F. Ding, The filtering based iterative identification for multivariable systems. IET Control Theory Appl. 10(8), 894–902 (2016)

Y.J. Wang, F. Ding, The auxiliary model based hierarchical gradient algorithms and convergence analysis using the filtering technique. Signal Process. 128, 212–221 (2016)

X.H. Wang, F. Ding, Modelling and multi-innovation parameter identification for Hammerstein nonlinear state space systems using the filtering technique. Math. Comput. Modell. Dyn. Syst. 22(2), 113–140 (2016)

C. Wang, T. Tang, Recursive least squares estimation algorithm applied to a class of linear-in-parameters output error moving average systems. Appl. Math. Lett. 29, 36–41 (2014)

C. Wang, T. Tang, Several gradient-based iterative estimation algorithms for a class of nonlinear systems using the filtering technique. Nonlinear Dyn. 77(3), 769–780 (2014)

D.Q. Wang, W. Zhang, Improved least squares identification algorithm for multivariable Hammerstein systems. J. Franklin Inst. 352(11), 5292–5370 (2015)

C. Wang, L. Zhu, Parameter identification of a class of nonlinear systems based on the multi-innovation identification theory. J. Franklin Inst. 352(10), 4624–4637 (2015)

A. Wills, T.B. Schön, L. Ljung et al., Identification of Hammerstein–Wiener models. Automatica 49(1), 70–81 (2013)

W.L. Xiong, J.X. Ma, R.F. Ding, An iterative numerical algorithm for modeling a class of Wiener nonlinear systems. Appl. Math. Lett. 26(4), 487–493 (2013)

X.P. Xu, F. Wang, G.J. Liu, Identification of Hammerstein systems using key-term separation principle, auxiliary model and improved particle swarm optimisation algorithm. IET Signal Process. 7(8), 766–773 (2013)

L. Xu, A proportional differential control method for a time-delay system using the Taylor expansion approximation. Appl. Math. Comput. 236, 391–399 (2014)

L. Xu, Application of the Newton iteration algorithm to the parameter estimation for dynamical systems. J. Comput. Appl. Math. 288, 33–43 (2015)

L. Xu, L. Chen, W.L. Xiong, Parameter estimation and controller design for dynamic systems from the step responses based on the Newton iteration. Nonlinear Dyn. 79(3), 2155–2163 (2015)

L. Xu, The damping iterative parameter identification method for dynamical systems based on the sine signal measurement. Signal Process. 120, 660–667 (2016)

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Nos. 61164015, 60474039) and the Key Research Project of Henan Higher Education Institutions (No. 16A120010).

Cite this article

Ding, F., Wang, X. Hierarchical Stochastic Gradient Algorithm and its Performance Analysis for a Class of Bilinear-in-Parameter Systems. Circuits Syst Signal Process 36, 1393–1405 (2017). https://doi.org/10.1007/s00034-016-0367-7

DOI: https://doi.org/10.1007/s00034-016-0367-7