Abstract

Febrile neutropenia is a common complication of antineoplastic chemotherapy, especially in patients with hematologic malignancies. Infection prevention strategies such as chemoprophylaxis or the administration of prophylactic growth factors have not been clearly shown to reduce complications of febrile neutropenia, to be cost-effective, or to have a significant impact on mortality. Since febrile neutropenic patients represent a heterogeneous population, extensive research has been conducted on how to reliably identify a low-risk subset and to develop risk-based treatment strategies for low-risk and non-low-risk subsets. For adult patients, two validated risk prediction models have been developed: the Talcott model and the MASCC risk index. Clinical criteria and the MASCC model have been used successfully to identify patients suitable for treatment with oral antimicrobial regimens, after early discharge from hospital or entirely as outpatients. The primary focus of risk assessment models has been the identification of patients at low risk for serious medical complications. Very little research has been conducted to identify intermediate-risk or high-risk patients. This area is open for further study.

1 Introduction

Febrile neutropenia (FN) is a common complication of antineoplastic chemotherapy, especially in patients with hematologic malignancies. It can be associated with substantial morbidity and some mortality. Febrile neutropenic patients, however, represent a heterogeneous group, and not all patients have the same risk of developing FN and/or related complications. A recent study [32] examined potential factors predicting the occurrence of FN in 266 patients who received 1,017 cycles of chemotherapy. Rates of FN following the administration of a single cycle of chemotherapy ranged from 20 % for patients with lymphoma or Hodgkin’s disease and 25 % in myeloma to more than 80 % in patients with chronic myeloid leukemia. Using patients with myeloma as the reference group, univariate odds ratios for the development of FN were 8.87 for acute myeloid leukemia and 12.11 for chronic myeloid leukemia. The overall risk of developing at least one febrile neutropenic episode has been reported to be >80 % in patients with hematologic malignancies [25]. A febrile neutropenic episode is a serious and potentially lethal event, with an associated mortality of approximately 5 %. Although patients with hematologic malignancies are at higher risk of developing FN, they do not appear to be at higher risk of death during a febrile neutropenic episode than patients with solid tumors, as shown in a descriptive study by Klastersky et al. [27]: deaths occurred in 64/1,223 episodes (5.2 %) in patients with hematologic malignancies versus 42/919 (4.6 %) in patients with solid tumors. However, aside from the risk of death, FN also causes serious medical complications and increased morbidity, which raise treatment-related costs and reduce patients’ quality of life [29, 52].
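The mortality comparison from Klastersky et al. [27] can be checked with a few lines of arithmetic. The sketch below is our own illustration, not an analysis from the cited study: it computes the difference between the two death rates with a simple Wald 95 % interval, treating each episode as an independent observation (an approximation, since one patient may contribute several episodes). The function name `risk_difference_wald_ci` is hypothetical.

```python
import math


def risk_difference_wald_ci(events_a: int, n_a: int, events_b: int, n_b: int,
                            z: float = 1.96) -> tuple:
    """Return (p_a - p_b, lower, upper) using a Wald interval for two proportions."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    diff = p_a - p_b
    # Standard error of the difference between two independent proportions
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, diff - z * se, diff + z * se


# Deaths per FN episode: hematologic malignancies (64/1,223) vs solid tumors (42/919)
diff, lower, upper = risk_difference_wald_ci(64, 1223, 42, 919)
print(f"difference {diff:.1%} (95% CI {lower:.1%} to {upper:.1%})")
```

The interval spans zero, so these counts are compatible with no difference in mortality between the two groups.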

Preventing febrile neutropenia is a worthwhile goal. Colony-stimulating factors do shorten the duration of neutropenia, especially in patients with solid tumors [7]. Nevertheless, various guidelines (ASCO, EORTC) recommend their use only when the risk of febrile neutropenia exceeds 20 % [1, 43], so that the strategy remains cost-effective. This level of risk remains difficult to assess or predict. Additionally, the role of growth factors in patients with hematologic malignancies continues to be debated [31]. Another preventive strategy is to administer prophylactic antibiotics during periods of increased risk, but this strategy is associated with the emergence of resistant pathogens [50]. However, at least in afebrile patients with hematologic malignancies who are neutropenic after chemotherapy, antibiotic prophylaxis reduces infection-related mortality and even all-cause mortality, as shown by meta-analyses [14, 30]. In the Gafter-Gvili meta-analysis, the relative risk of all-cause mortality was estimated at 0.66 (95 % CI: 0.55–0.79). Because all-cause mortality is reduced, the benefit of prophylaxis likely outweighs the harm, although only half of the studies could be evaluated for the mortality outcome.
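A relative risk translates into more intuitive quantities with simple arithmetic. The sketch below converts the pooled relative risk of 0.66 (95 % CI 0.55–0.79) into a relative risk reduction and shows how a number needed to treat would follow from a baseline mortality. The 10 % baseline is purely hypothetical (no baseline is reported here), so the resulting number needed to treat only illustrates the calculation; the function names are likewise illustrative.

```python
def relative_risk_reduction(rr: float) -> float:
    """Relative risk reduction implied by a relative risk: RRR = 1 - RR."""
    return 1.0 - rr


def number_needed_to_treat(baseline_risk: float, rr: float) -> float:
    """Patients treated per event prevented: 1 / (baseline_risk * (1 - RR))."""
    absolute_risk_reduction = baseline_risk * (1.0 - rr)
    return 1.0 / absolute_risk_reduction


rr, rr_low, rr_high = 0.66, 0.55, 0.79  # all-cause mortality RR and 95% CI [14]

# The upper RR bound gives the smallest reduction, the lower bound the largest
print(f"RRR {relative_risk_reduction(rr):.0%} "
      f"(95% CI {relative_risk_reduction(rr_high):.0%} "
      f"to {relative_risk_reduction(rr_low):.0%})")

HYPOTHETICAL_BASELINE_MORTALITY = 0.10  # assumption for illustration only
print(f"NNT ~ {number_needed_to_treat(HYPOTHETICAL_BASELINE_MORTALITY, rr):.0f}")
```

The relative risk reduction (about 34 %, with bounds of roughly 21 % and 45 %) does not depend on the baseline, whereas the number needed to treat does, which is why it cannot be quoted without knowing the untreated mortality.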

Standard management of FN patients includes hospital-based supportive care and the prompt administration of parenteral, broad-spectrum, empiric antibiotics. Although successful, this approach has some drawbacks (e.g., unnecessary hospitalization for some patients, exposure to resistant hospital microflora, increased costs) and may not be necessary in all FN patients. As previously stated, febrile neutropenic patients constitute a heterogeneous population, with a complication rate of approximately 15 % (95 % confidence interval: 12–17 %) in unselected patients [24]. In other words, 85 % of febrile episodes in neutropenic patients resolve without any complications when adequately treated with early empiric antibiotic therapy, appropriate follow-up, and treatment modification when necessary. Response rates may be even higher, and complications less frequent, in selected subgroups of FN patients. This knowledge has led investigators to try to identify more homogeneous patient populations in terms of the risk of complications and to model the probability of complications within them. The purpose of this chapter is to describe currently available risk stratification models and their predictive characteristics.
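A confidence interval such as 12–17 % around a 15 % complication rate expresses sampling uncertainty. As a minimal sketch, the Wilson score interval below computes such an interval for a single proportion. The counts in the example (150 complications in 1,000 episodes) are hypothetical, not the data of [24], and we do not assume that [24] used this particular interval method.

```python
import math


def wilson_interval(events: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score confidence interval for a binomial proportion events/n."""
    if n <= 0 or not 0 <= events <= n:
        raise ValueError("need 0 <= events <= n and n > 0")
    p = events / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical cohort: 150 episodes with serious complications out of 1,000
low, high = wilson_interval(150, 1000)
print(f"complications 15.0% (95% CI {low:.1%} to {high:.1%})")
print(f"uncomplicated {1 - 150 / 1000:.0%}")
```

The Wilson interval is used here rather than the simpler Wald interval because it behaves better with small counts or rates close to 0 or 1, as in small low-risk subgroups.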

2 Risk Stratification

When attempting risk stratification, it is important to define the outcome to be studied or predicted. The following outcomes may be considered: response to the initial empiric antibiotic regimen, the development of bacteremia or serious medical complications before the resolution of fever and neutropenia, and mortality related to the FN episode. Response to empiric antibiotic treatment has been a useful endpoint for most randomized clinical trials [6]. Although it is a fair indicator of the activity of a particular drug or regimen, the need to change antibiotics does not necessarily imply clinical deterioration and should not be used as a marker of worsening in risk prediction models. The same argument applies to bacteremic status: bacteremia does not necessarily represent increased clinical risk, at least in adult patients [27]. Death resulting from the febrile neutropenic episode is the most relevant endpoint. Fortunately, it is uncommon, which makes it difficult to conduct studies sufficiently powered to model the probability of death. Consequently, the occurrence of serious medical complications appears to be the only feasible endpoint for risk assessment. It is also highly relevant clinically, as highlighted in a discussion of risk assessment [22].

Before developing a risk stratification rule, it is important to consider its future application and the patient subgroup one wants to identify. Is it more meaningful to identify low-risk patients or high-risk patients? Is a binary rule satisfactory, or is a finer classification needed? Even if one models the probability of serious medical complications and obtains a “continuous” prediction rule, the intended use of the predicted probability will guide the choice of thresholds for defining low, intermediate, or high risk. Indeed, in clinical practice, an infectious diseases specialist who must decide how to treat a specific patient has little use for a “continuous” prediction and instead needs a tool that supports a specific therapeutic choice. To date, most studies of risk stratification for FN in adults and children have focused on identifying low-risk patients, with the subsequent goal of simplifying their therapy.
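The step from a continuous predicted probability to a therapeutic category can be sketched as a lookup against chosen cut-offs. The cut-offs below (5 % and 30 %) and the names `risk_category`, `CUTOFFS`, and `LABELS` are hypothetical and not taken from any validated model; choosing the cut-offs according to the intended use is precisely the decision discussed above.

```python
from bisect import bisect_right

# Hypothetical cut-offs on the predicted probability of a serious complication:
#   p < 0.05 -> low, 0.05 <= p < 0.30 -> intermediate, p >= 0.30 -> high
CUTOFFS = (0.05, 0.30)
LABELS = ("low", "intermediate", "high")


def risk_category(p_complication: float, cutoffs=CUTOFFS, labels=LABELS) -> str:
    """Map a continuous predicted probability onto a discrete risk category."""
    if len(labels) != len(cutoffs) + 1:
        raise ValueError("need exactly one more label than cut-offs")
    if not 0.0 <= p_complication <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    # bisect_right counts how many cut-offs are <= p, i.e. the category index
    return labels[bisect_right(cutoffs, p_complication)]


for p in (0.02, 0.12, 0.45):
    print(p, risk_category(p))

# A purely binary low-risk rule is the special case of a single cut-off
print(risk_category(0.12, cutoffs=(0.05,), labels=("low", "not low")))
```

The same model can therefore serve different clinical purposes simply by changing the cut-offs, without refitting anything.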

3 Risk Stratification Methods

3.1 Clinical Approach

This method relies on empirically combining a set of predictive factors published in the literature and/or chosen on the basis of clinical expertise, without formally analyzing the interactions between them. It was the methodology most frequently adopted in clinical trials that tested oral antibiotic regimens in hospitalized patients considered to be at low risk [12, 21, 51]. The University of Texas MD Anderson Cancer Center played a pioneering role in delineating clinical criteria for identifying patients at low risk of complications and therefore eligible for simplified therapy. Investigators at this institution were among the first to show that low-risk patients could be safely managed as outpatients [38, 41]. This “clinical” approach has the advantage that the definition of low risk is flexible and may be changed depending on the context of use and on the results of new studies. It is probably more applicable in busy clinical practice but is considered less scientifically rigorous for multicenter clinical trials (because of transportability and generalizability issues). Because of the flexible nature of clinical criteria, it is very difficult to determine accurately the sensitivity, specificity, and positive and negative predictive values of this approach. The clinical factors considered to delineate low risk include hemodynamic stability/absence of hypotension, no altered mental status, no respiratory failure, no renal failure, no hepatic dysfunction, good clinical condition, short expected duration of neutropenia, no acute leukemia, no marrow/stem cell transplant, absence of chills, no abnormal chest X-ray, no cellulitis or signs of focal infection, no catheter-related infection, and no need for intravenous supportive therapy [12, 19, 21, 28, 33, 51].
Of note, the first models excluded most patients with hematologic malignancies, either directly (by excluding patients with acute leukemia) or indirectly (through the criterion requiring a short expected duration of neutropenia).
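The clinical approach amounts to a checklist: a patient is called low risk only when none of the listed adverse findings is present, with no weighting and no modeling of interactions. A minimal sketch, assuming a hypothetical `ClinicalFindings` record whose fields paraphrase the criteria listed above; actual protocols used different subsets of these criteria, which is exactly the flexibility (and the limitation) described in the text.

```python
from dataclasses import dataclass, fields
from typing import List, Tuple


@dataclass
class ClinicalFindings:
    """True means the adverse finding is present (hypothetical field names)."""
    hypotension: bool = False
    altered_mental_status: bool = False
    respiratory_failure: bool = False
    renal_failure: bool = False
    hepatic_dysfunction: bool = False
    poor_clinical_condition: bool = False
    long_expected_neutropenia: bool = False
    acute_leukemia: bool = False
    marrow_or_stem_cell_transplant: bool = False
    chills: bool = False
    abnormal_chest_xray: bool = False
    cellulitis_or_focal_infection: bool = False
    catheter_related_infection: bool = False
    needs_iv_supportive_therapy: bool = False


def clinically_low_risk(findings: ClinicalFindings) -> Tuple[bool, List[str]]:
    """Low risk only if no exclusion criterion is present; also list those found."""
    present = [f.name for f in fields(findings) if getattr(findings, f.name)]
    return (not present), present


print(clinically_low_risk(ClinicalFindings()))
print(clinically_low_risk(ClinicalFindings(chills=True, acute_leukemia=True)))
```

Because every criterion acts as an all-or-nothing exclusion, a single finding removes a patient from the low-risk group regardless of how favorable the rest of the picture is.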

3.2 Modeling Approach

The second approach derives risk prediction models by combining several factors in a single model that takes into account their independent value and their interactions. This is a more systematic way of constructing prediction models, and their diagnostic characteristics can be studied and optimized according to the intended use of the model. Before being suitable for clinical practice, such models need to be tested in distinct patient populations to ensure that they are well calibrated (predicted outcomes match observed outcomes) and transportable to other settings (other institutions, other underlying tumors, or other antineoplastic therapy). Their discriminative ability needs to be monitored regularly. The advantages of this approach are numerous: the assessment of low risk and the definition of the outcome to be predicted are standardized and more objective; the classifications have known properties; and the models can be built parsimoniously from independent predictive factors, which makes them robust when used in other settings. However, the development process may take years, and the need for validation should not be underestimated, since the context of use has to be considered before a model is introduced into clinical practice.
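Calibration, as defined above, can be checked by sorting episodes by predicted risk, splitting them into groups, and comparing the mean predicted risk with the observed complication rate in each group (the idea behind calibration plots and the Hosmer–Lemeshow test). The sketch below is a minimal version under that assumption; the function name is hypothetical, the eight predictions and outcomes are invented, and a real validation would need far larger samples and a formal test.

```python
def calibration_table(predicted, observed, n_groups=4):
    """Group episodes by predicted risk and compare mean prediction with observed rate.

    Returns a list of (group size, mean predicted risk, observed event rate).
    """
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("need non-empty inputs of equal length")
    pairs = sorted(zip(predicted, observed))  # ascending predicted risk
    total = len(pairs)
    rows = []
    for g in range(n_groups):
        # Integer boundaries give groups whose sizes differ by at most one
        chunk = pairs[g * total // n_groups:(g + 1) * total // n_groups]
        if not chunk:
            continue
        mean_predicted = sum(p for p, _ in chunk) / len(chunk)
        observed_rate = sum(y for _, y in chunk) / len(chunk)
        rows.append((len(chunk), mean_predicted, observed_rate))
    return rows


# Invented validation data: predicted probability of complication, observed outcome (1 = yes)
predicted = [0.02, 0.03, 0.05, 0.06, 0.10, 0.12, 0.30, 0.40]
observed = [0, 0, 0, 0, 0, 1, 0, 1]

for size, mean_p, rate in calibration_table(predicted, observed):
    print(f"n={size}: predicted {mean_p:.1%}, observed {rate:.1%}")
```

A well-calibrated model shows observed rates close to the mean predictions in every group; systematic gaps in a new population are a sign that the model does not transport well.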

3.3 Validated Models

To date, two scoring systems have been developed and validated in adult patients, both using a similarly defined endpoint, i.e., the occurrence of serious medical complications (Table 8.1). The definition may appear somewhat arbitrary but has the merit of having been clearly formulated. Deviations from this definition have been observed in validation series, especially when the risk models were used to select patients for outpatient treatment. In that setting, it is logical to regard hospitalization as an event to be avoided, even though hospitalization is not necessarily a serious medical complication. Such adaptations were used in the studies conducted by Kern et al. [23] and Klastersky et al. [26].

3.3.1 The Talcott Model

Talcott and colleagues were the first to propose risk-based subsets in patients with chemotherapy-induced FN. Patients were classified into four groups (Table 8.2). Groups I, II, and III (all hospitalized patients) were not considered to be at low risk. However, patients in group IV (with controlled cancer and without medical comorbidity, who developed their febrile episode outside the hospital) were considered to be at low risk [44]. The groups were constructed on the basis of clinical arguments and expertise and were initially tested in a retrospective series of 261 patients from a single institution. The model was then validated in a prospective series of 444 episodes of FN at two institutions [45]. It was constructed without distinguishing patients with solid tumors from those with hematologic malignancies, although controlled cancer was defined differently for patients with leukemia (complete response at the last examination) than for patients with solid tumors (initiation of treatment or no documented progression). The validation series included 24 % of patients with non-Hodgkin’s lymphoma and 17 % of patients with acute myeloid leukemia. The diagnostic characteristics of the model were not stratified by underlying disease. The ultimate goal of the model was to identify low-risk patients; groups I to III were not designed to refine risk stratification further. The model was subsequently applied in a randomized trial [46] that assessed whether outpatient management of patients predicted to be at low risk increases the risk of medical events. Patients with fever and neutropenia persisting after 24 h of inpatient observation were randomized between continued inpatient care and early discharge, without changing the antibiotic regimen unless medically required. The study was initially designed to detect an increase in the medical complication rate from 4 to 8 % and was then revised to detect an increase from 4 to 10 %, with a planned sample size of 448 episodes.
The study was stopped early in 2000 because of poor accrual and was published with 66 episodes randomized to the hospital care arm and 47 episodes to the early discharge arm [46]. Although the study was underpowered, the authors concluded that there was no evidence of adverse medical consequences in the home arm (complication rate 9 % versus 8 %; 95 % confidence interval for the difference, −10 to 13 %). Because it included only patients predicted to be at low risk, the study cannot be viewed as a full validation study; one may also wonder why the study hypothesis was framed as the inferiority of the experimental arm.
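The effect of revising the design hypothesis on the required sample size can be illustrated with the standard normal-approximation formula for comparing two proportions. The significance level and power used in [46] are not reported here; the one-sided α = 0.05 and 80 % power below are assumptions, and `episodes_per_arm` is a hypothetical helper, so the output shows orders of magnitude rather than reproducing the trial’s own calculation.

```python
import math
from statistics import NormalDist


def episodes_per_arm(p_control: float, p_experimental: float, alpha: float = 0.05,
                     power: float = 0.80, one_sided: bool = True) -> int:
    """Normal-approximation sample size per arm for comparing two proportions."""
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha) if one_sided else z(1 - alpha / 2)
    z_beta = z(power)
    p_bar = (p_control + p_experimental) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_control * (1 - p_control)
                                   + p_experimental * (1 - p_experimental)))
    return math.ceil(spread ** 2 / (p_experimental - p_control) ** 2)


# Original (4% -> 8%) versus revised (4% -> 10%) hypothesis from the text
for target in (0.08, 0.10):
    n = episodes_per_arm(0.04, target)
    print(f"4% -> {target:.0%}: {n} episodes per arm, {2 * n} in total")
```

Under these assumptions, widening the difference to be detected from 4 to 6 percentage points roughly halves the number of episodes required.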

3.3.2 The MASCC Risk Index

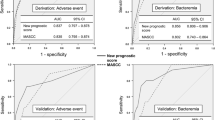

The second model was developed from an international prospective study conducted by the Multinational Association for Supportive Care in Cancer (MASCC) [24]. The original study design included a validation component: before any data analysis, the study episodes were split into a derivation set (n = 756 episodes) and a validation set (n = 383). The score was derived from the first set by multivariate logistic regression. A numeric risk index, the so-called MASCC score, was constructed by assigning weights to seven independent factors associated with a high probability of favorable outcome. This score is presented in Table 8.3. It ranges from 0 to 26, and a score of 21 or more is defined as predicting a low risk of complications. This threshold was chosen on the derivation set, targeting a complication rate of 5 %, as a compromise between the positive predictive value and the sensitivity of the prediction rule. As with the Talcott model, the intended purpose of this model was to identify patients at sufficiently low risk of serious complications. The positive predictive value of the score (i.e., the proportion of patients predicted to be at low risk who did not develop a serious medical complication) decreased, as expected, from the targeted 95 % to 93 % on the validation set. The characteristics of both models on the validation set are shown in Table 8.4. The MASCC study thus provides further validation of the Talcott classification in a multicenter setting. Compared with the Talcott scheme, the MASCC score improved the sensitivity and the overall misclassification rate. On the other hand, its positive predictive value might be considered suboptimal, at least at the threshold of 21. Raising the threshold might increase the positive predictive value but would also reduce the sensitivity of the model.
In the MASCC model, the underlying disease (solid tumor versus hematologic malignancy) affected the degree of risk only through its interaction with a previous or suspected fungal infection. The underlying disease was a predictive factor on univariate analysis but was not retained as an independent predictor of risk.
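As a minimal sketch, the MASCC score is a sum of integer weights over seven items. The weights below are those commonly reproduced for the MASCC index (for example, in the IDSA guidelines); Table 8.3 remains the authoritative definition, and the `MasccInputs` structure and its field names are hypothetical. The fourth item encodes the interaction between underlying disease and previous fungal infection.

```python
from dataclasses import dataclass

MASCC_LOW_RISK_THRESHOLD = 21  # score >= 21 is predicted low risk

# Burden-of-illness points are mutually exclusive (only one level applies)
BURDEN_POINTS = {"none_or_mild": 5, "moderate": 3, "severe": 0}


@dataclass
class MasccInputs:
    burden_of_illness: str               # key of BURDEN_POINTS
    systolic_bp_above_90: bool           # no hypotension
    copd: bool                           # chronic obstructive pulmonary disease
    solid_tumor: bool                    # False = hematologic malignancy
    previous_fungal_infection: bool
    dehydration_needing_iv_fluids: bool
    outpatient_at_fever_onset: bool
    age_years: int


def mascc_score(x: MasccInputs) -> int:
    """Sum of MASCC weights (0-26); a higher score means a lower predicted risk."""
    if x.burden_of_illness not in BURDEN_POINTS:
        raise ValueError(f"unknown burden of illness: {x.burden_of_illness!r}")
    score = BURDEN_POINTS[x.burden_of_illness]
    score += 5 if x.systolic_bp_above_90 else 0
    score += 4 if not x.copd else 0
    # A hematologic malignancy loses these points only after a previous fungal infection
    score += 4 if (x.solid_tumor or not x.previous_fungal_infection) else 0
    score += 3 if not x.dehydration_needing_iv_fluids else 0
    score += 3 if x.outpatient_at_fever_onset else 0
    score += 2 if x.age_years < 60 else 0
    return score


def is_predicted_low_risk(score: int) -> bool:
    return score >= MASCC_LOW_RISK_THRESHOLD


patient = MasccInputs("moderate", True, False, False, False, False, True, 54)
print(mascc_score(patient), is_predicted_low_risk(mascc_score(patient)))
```

Keeping the weights as plain integers mirrors how the index is used at the bedside: the score can be added up by hand and compared with the threshold of 21.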

3.3.3 Independent Validation of the MASCC Score

Because it was validated immediately, as planned in the study protocol, and because of its greater sensitivity than the Talcott scheme and its acceptable positive predictive value, the MASCC score has been proposed in the IDSA guidelines since 2002 as a useful tool for predicting low-risk febrile neutropenia [13, 18].

It has also been the subject of several independent validation studies. The primary objective of one of these studies was to try to improve the MASCC score by estimating the remaining duration of neutropenia. Indeed, the expected remaining duration of neutropenia, if correlated with the underlying tumor, could be the true factor behind the higher risk of patients with hematologic malignancies compared with patients with solid tumors. However, it is difficult to assess at presentation. A multicenter study was therefore conducted with detailed data collection about chemotherapy. This study [35] included 1,003 febrile episodes in 1,003 patients from 10 participating institutions. Of these, 546 had a hematologic malignancy, including 246 with acute leukemia. A model predicting the remaining duration of neutropenia as a binary variable (long versus short) was developed. Almost all leukemic patients were predicted to have a long duration and all patients with solid tumors a short duration, but the model could not split patients with hematologic malignancies other than leukemia into subgroups with short or long predicted duration. Unfortunately, adding this covariate did not yield a risk prediction model more satisfactory than the MASCC risk index.

Table 8.5 summarizes the results of the independent series that attempted to validate the MASCC score for identifying low-risk patients. Although some of the series are small, all show positive predictive values above 85 %, except for one study [8] not reported in the table. That study used a very different definition of complications, which included a change in the empiric antibiotic regimen. Consequently, the reported complication rate is very high (62 %), and the paper cannot be considered a true validation of the MASCC score. The data summarized in Table 8.5 show that as the proportion of patients with hematologic malignancies increases, the positive predictive value decreases, suggesting that the score should be used with greater caution in patients with hematologic malignancies. One could also consider raising the threshold for defining low risk in order to increase the positive predictive value, albeit at the price of decreased sensitivity. Table 8.6 shows how the diagnostic characteristics change with the threshold (see also Table 8.7).
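The trade-off between positive predictive value and sensitivity as the threshold moves can be made concrete with a short sketch. It follows the MASCC convention in which a “positive” prediction is a predicted low-risk episode and the target outcome is resolution without serious complication. The function names are hypothetical, and the twelve scored episodes are invented for illustration; they do not come from any series in Tables 8.5 or 8.6.

```python
import math


def _ratio(numerator: int, denominator: int) -> float:
    """Proportion, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else math.nan


def low_risk_rule_characteristics(scores, complicated, threshold):
    """Characteristics of the rule 'score >= threshold predicts low risk'.

    'Positive' = predicted low risk; the target outcome is an episode that
    resolves WITHOUT serious complication (MASCC convention).
    """
    if len(scores) != len(complicated):
        raise ValueError("scores and outcomes must have the same length")
    tp = fp = fn = tn = 0
    for score, had_complication in zip(scores, complicated):
        predicted_low = score >= threshold
        if predicted_low and not had_complication:
            tp += 1  # correctly predicted low risk
        elif predicted_low:
            fp += 1  # predicted low risk, but a complication occurred
        elif not had_complication:
            fn += 1  # uncomplicated episode not recognized as low risk
        else:
            tn += 1  # complicated episode correctly not called low risk
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
        "misclassification": _ratio(fp + fn, tp + fp + fn + tn),
    }


# Invented episodes for illustration only: MASCC score and complication flag (1 = yes)
scores = [26, 25, 24, 23, 22, 21, 21, 20, 19, 17, 15, 12]
complicated = [0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1]

for threshold in (21, 22, 23):
    c = low_risk_rule_characteristics(scores, complicated, threshold)
    print(f">= {threshold}: PPV {c['ppv']:.2f}, sensitivity {c['sensitivity']:.2f}, "
          f"misclassification {c['misclassification']:.2f}")
```

In this toy data, raising the threshold from 21 to 23 raises the positive predictive value from about 0.71 to 1.00 while the sensitivity falls from about 0.71 to 0.57, the same direction of trade-off described in the text.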

Numerous studies [4, 5, 20, 23, 26, 39, 40, 42] have used the MASCC score to select low-risk patients for simplified therapy, with suggested benefits such as improved quality of life for patients and their families. Some recently published studies confirm the hypothesis that costs are reduced [9, 16, 47]. The various therapeutic options for low-risk patients are beyond the scope of this chapter. Nevertheless, it must be stressed that predicting low risk and judging suitability for either oral treatment or outpatient treatment (the two approaches to simplifying treatment) are different issues. A prediction of low risk is a necessary but not sufficient condition for simplified, risk-based therapy. This should be kept in mind when designing studies for low-risk patients [10, 11, 22, 26, 39]. Nonetheless, numerous recent reports show that outpatient treatment can be as successful as in-hospital treatment, even for some patients with hematologic malignancies [5, 15, 42].

3.3.4 Predicting Intermediate or High-Risk Using the Validated Models

The Talcott model was designed to identify low-risk patients, and the classification of the remaining patients into groups I to III had no preplanned purpose. Consequently, this model is unlikely to be helpful outside the identification of low-risk patients. In the MASCC study, the probability of serious complications was modeled as a “continuous” range of predicted values. This information, however, may not be relevant to clinicians who need a binary answer to support therapeutic decisions. Initially, identifying low-risk patients was considered most relevant, in order to facilitate research on oral antibiotic therapy and/or outpatient management, and the threshold was set to achieve a positive predictive value of 95 %. A broader use of the score could be envisaged to select patients at intermediate or high risk of complications. Data collected in validation series show that, indeed, the higher the score, the higher the probability of resolution without serious medical complications, as depicted in Fig. 8.1 [34]. Intermediate or even the lowest values of the score may therefore help to categorize patients further. However, in none of the categories does the complication rate approach 100 %. Therefore, a rule that tries to identify patients who will develop complications using the available model will lack sensitivity and be unsatisfactory. Furthermore, few patients have the lowest score values, and studies focusing on patients at high risk of complications might be very difficult to conduct because of low accrual potential. Nevertheless, a study comparing a very “aggressive” therapeutic approach, including the administration of growth factors and/or immediate treatment in an intensive care unit, with standard hospital-based empiric therapy might be very interesting.

Fig. 8.1 Rate of serious complication or death according to MASCC score values [34]

3.3.5 How to Improve Risk Prediction Models?

Although the MASCC score has been satisfactorily validated and reported to be useful for predicting low risk in different patient populations and settings, it is far from optimal, with a misclassification rate of 30 % and low specificity and negative predictive value. Several attempts to improve the MASCC score have been made. As already mentioned, a multinational study [35] examined in detail the characteristics of the chemotherapy regimen that induced the febrile neutropenic episode and attempted to relate them to the remaining duration of neutropenia. The next step, incorporating this information into risk prediction, failed.

Utilizing the databases of the original MASCC study and the subsequent study that looked at duration of neutropenia, the issue of the significance of bacteremic status was reviewed [36]. This review found that (1) after stratification for the type of underlying cancer (hematologic malignancy versus solid tumor) and for bacteremic status (no bacteremia, single organism gram-negative bacteremia, single organism gram-positive bacteremia, polymicrobial bacteremia), the MASCC score had a predictive value in all the strata without any detectable interaction term; and (2) prior or early knowledge of bacteremic status, although predictive of outcome, would not be helpful in improving the accuracy of a clinical rule characterizing patients as low and high-risk.

Uys et al. [49] analyzed the predictive role of circulating markers of infection (C-reactive protein, procalcitonin, serum amyloid A, and interleukins IL-1β, IL-6, IL-8, IL-10) in a single-center series of 78 febrile neutropenic episodes. Although this study is limited by its sample size and power, the authors concluded that none of the markers had independent predictive value that would improve risk prediction.

De Souza Viana et al. [8] proposed adding information about complex infection status to the MASCC score and excluding patients with a complex infection (defined as infection of major organs, sepsis, soft tissue wound infection, or oral mucositis grade >2) from the group predicted to be at low risk. In a small series of 53 episodes (64 % in patients with hematologic tumors), they reported that their modification reduced the number of patients predicted to be at low risk from 21 to 15, while the complication rate in this group fell from 4/21 (19 %) to 0. However, this proposal used a different definition of complication, counting an antibiotic change as a complication, although there is no clinical justification for this definition. This model is therefore not comparable with the other proposals and, in our opinion, is less clinically meaningful.

In a recent study [37], the authors attempted to develop a risk model targeting the prediction of high risk in patients with hematologic malignancies. They suggested that, in this group of patients, infection might progress more quickly than in patients with solid tumors and that a specific model for predicting a high risk of complications in these patients would be very valuable. In a single-center study of 259 febrile neutropenic episodes (137 patients), they constructed a score with values of 0, 1, 2, or 3, attributing one point to each of the following factors (measured before the administration of chemotherapy): low albumin level (<3.3 g/dl), low bicarbonate level (<21 mmol/l), and high CRP (≥20 mg/dl). The complication rates were 7/117 (6 %), 21/71 (30 %), 24/43 (56 %), and 18/18 (100 %) for increasing values of the score. Park and coauthors suggested that patients with a score ≥2 should be considered at high risk. The model needs validation, and the characteristics of the proposed clinical prediction rule should be studied further before it is used. It is attractive because it is specific to patients with hematologic malignancies and is based on very objective factors (unlike the MASCC score, which requires an assessment of the burden of FN) that can be assessed even before FN develops (i.e., when chemotherapy is initiated). It can, however, be hypothesized that a high CRP level at the initiation of chemotherapy simply reflects an undiagnosed infection rather than predicting the outcome of a future FN episode.
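The score proposed in [37] is simple enough to express directly, and the per-score counts quoted above allow the characteristics of the “score ≥2 means high risk” rule to be worked out. In this sketch a “positive” prediction means predicted high risk (the opposite of the MASCC convention), the function names are hypothetical, and the quoted counts sum to 249 episodes rather than 259, so the figures are conditional on those 249.

```python
def park_score(albumin_g_dl: float, bicarbonate_mmol_l: float, crp_mg_dl: float) -> int:
    """One point each for albumin < 3.3 g/dl, bicarbonate < 21 mmol/l, CRP >= 20 mg/dl."""
    return (int(albumin_g_dl < 3.3)
            + int(bicarbonate_mmol_l < 21)
            + int(crp_mg_dl >= 20))


# (episodes with complications, episodes) per score value, as quoted in the text [37]
REPORTED = {0: (7, 117), 1: (21, 71), 2: (24, 43), 3: (18, 18)}


def high_risk_rule_characteristics(counts: dict, cutoff: int = 2) -> dict:
    """Characteristics of 'score >= cutoff predicts a serious complication'."""
    tp = sum(c for s, (c, n) in counts.items() if s >= cutoff)      # complicated, flagged
    fp = sum(n - c for s, (c, n) in counts.items() if s >= cutoff)  # uncomplicated, flagged
    fn = sum(c for s, (c, n) in counts.items() if s < cutoff)       # complicated, missed
    tn = sum(n - c for s, (c, n) in counts.items() if s < cutoff)   # uncomplicated, not flagged
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


for score, (events, n) in REPORTED.items():
    print(f"score {score}: {events}/{n} = {events / n:.0%}")
print(high_risk_rule_characteristics(REPORTED))
```

With these counts the rule flags 61 episodes, 42 of which had complications (positive predictive value about 69 %), and captures 42 of 70 complicated episodes (sensitivity 60 %); these are figures that would still need confirmation in an independent validation series.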

4 Conclusions

It is clear that febrile neutropenia occurs in a heterogeneous group of patients and that any accurate risk stratification system is valuable for guiding the management of selected subgroups of patients. Furthermore, evidence-based data and systematic reviews show that oral antibiotic therapy is a safe and feasible alternative to conventional intravenous therapy. The published scoring systems for predicting risk have been sufficiently validated to guide the selection of patients for an oral regimen. At present, the MASCC risk index is probably the preferred method. However, there is room for improvement, especially in the prediction of low risk in patients with hematologic malignancies. Further areas of research include the utility of rapid laboratory tests [22] and of pattern recognition molecules able to activate the lectin pathway, such as mannose-binding lectin or ficolins [2]. Variables collected during short-term follow-up should also be examined. Indeed, reassessment with the currently available scoring systems has not been studied much, but it is probably of limited value because most of the variables they include are unlikely to change. Using risk prediction models to select patients for ambulatory treatment is more complex, because factors other than risk, as well as local epidemiology, have to be taken into account. Recognizing patients at intermediate or high risk and providing them with aggressive therapy is a new area for research that needs formal study.

References

Aapro MS, Cameron DA, Pettengell R, Bohlius J, Crawford J, Ellis M, et al. EORTC guidelines for the use of colony-stimulating factor to reduce the incidence of chemotherapy-induced febrile neutropenia in adult patients with lymphomas and solid tumours. Eur J Cancer. 2006;42:2433–53.

Ameye L, Paesmans M, Aoun M, Thiel S, Jensenius JC. Severe infection is associated with lower M-ficolin concentration in patients with hematologic cancer undergoing chemotherapy. Abstract presented at the 12th International Symposium on Febrile Neutropenia, Luxembourg, Luxembourg, Mar 2010.

Baskaran ND, Gan GG, Adeeba K. Applying the multinational association for supportive care in cancer risk scoring in predicting outcome of febrile neutropenia patients in a cohort of patients. Ann Hematol. 2008;87:563–9.

Chamilos G, Bamias A, Efstathiou E, Zorzou PM, Kastritis E, Kostis E, et al. Outpatient treatment of low-risk neutropenic fever in cancer patients using oral moxifloxacin. Cancer. 2005;103:2629–35.

Cherif H, Johansson E, Björkholm M, Kalin M. The feasibility of early hospital discharge with oral antimicrobial therapy in low-risk patients with febrile neutropenia following chemotherapy for hematologic malignancies. Haematologica. 2006;91:215–22.

Cometta A, Calandra T, Gaya H, et al. Monotherapy with meropenem versus combination therapy with ceftazidime plus amikacin as empiric therapy for fever in granulocytopenic patients with cancer. The International Antimicrobial Therapy Cooperative Group of the European Organization for Research and Treatment of Cancer and the Gruppo Italiano Malattie Ematologiche Maligne dell’Adulto Infection Program. Antimicrob Agents Chemother. 1996;40:1108–15.

Cooper KL, Madan J, Whyte S, Stevenson MD, Akehurst RL. Granulocyte-colony stimulating factors for febrile neutropenia prophylaxis following chemotherapy: systematic review and meta-analysis. BMC Cancer. 2011;11:404.

de Souza VL, Serufo JC, da Costa Rocha MO, Costa RN, Duarte RC. Performance of a modified MASCC index score for identifying low-risk febrile neutropenia cancer patients. Support Care Cancer. 2008;16:841–6.

Elting LS, Lu C, Escalante CP, Giordano SH, Trent JC, Cooksley C, Avritscher BC, Tina Shih YC, Ensor J, Nebiyou Bekele B, Gralla RJ, Talcott JA, Rolston K. Outcomes and cost of outpatient or inpatient management of 712 patients with febrile neutropenia. J Clin Oncol. 2008;26:606–11.

Feld R, Paesmans M, Freifeld AG, Klastersky J, Pizzo PA, Rolston KV, Rubenstein E, Talcott JA, Walsh TJ. Methodology for clinical trials involving patients with cancer who have febrile neutropenia: updated guidelines of the Immunocompromised Host Society/Multinational Association for Supportive Care in Cancer, with emphasis on outpatient studies. Clin Infect Dis. 2002;35:1463–8.

Finberg RW, Talcott JA. Fever and neutropenia: how to use a new treatment strategy. N Engl J Med. 1999;341:362–3.

Freifeld A, Marchigiani D, Walsh T, Chanock S, Lewis L, Hiemenz J, et al. A double-blind comparison of empirical oral and intravenous antibiotic therapy for low-risk febrile patients with neutropenia during chemotherapy. N Engl J Med. 1999;341:305–11.

Freifeld AG, Bow EJ, Sepkowitz KA, Boeckh MJ, Ito JI, Mullen CA, Raad II, Rolston KV, Young JA, Wingard JR. Clinical practice guideline for the use of antimicrobial agents in neutropenic patients with cancer: 2010 update by the Infectious Diseases Society of America. Clin Infect Dis. 2011;52:427–31.

Gafter-Gvili A, Fraser A, Paul M, Vidal L, Lawrie TA, van de Wetering MD, Kremer LCM, Leibovici L. Antibiotic prophylaxis for bacterial infections in afebrile neutropenic patients following chemotherapy. Cochrane Database Syst Rev. 2012;(1):CD004386.

Girmenia C, Russo E, Carmosino I, Breccia M, Dragoni F, Latagliata R, Mecarocci S, Morano SG, Stefanizzi C, Alimena G. Early hospital discharge with oral antimicrobial therapy in patients with hematologic malignancies and low-risk febrile neutropenia. Ann Hematol. 2007;86:263–70.

Hendriks AM, Trice Loggers E, Talcott JA. Costs of home versus inpatient treatment for fever and neutropenia: analysis of a multicenter randomized trial. J Clin Oncol. 2011;29:3984–9.

Hui EP, Leung LKS, Poon TCW, Mo F, Chan VTC, Ma ATW, Poon A, Hui EK, Mak S-s, Kenny ML, Lei KIK, Ma BBY, Mok TSK, Yeo W, Zee BCY, Chan ATC. Prediction of outcome in cancer patients with febrile neutropenia: a prospective validation of the Multinational Association for Supportive Care in Cancer risk index in a Chinese population and comparison with the Talcott model and artificial neural network. Support Care Cancer. 2011;19:1625–35.

Hughes WT, Armstrong D, Bodey GP, Bow EJ, Brown AE, Calandra T, et al. 2002 guidelines for the use of antimicrobial agents in neutropenic patients with cancer. Clin Infect Dis. 2002;34:730–51.

Innes HE, Smith DB, O’Reilly SM, Clark PI, Kelly V, Marshall E. Oral antibiotics with early hospital discharge compared with in-patient intravenous antibiotics for low-risk febrile neutropenia in patients with cancer: a prospective randomised controlled single centre study. Br J Cancer. 2003;89:43–9.

Innes HE, Lim SL, Hall A, Chan SY, Bhalla N, Marshall E. Management of febrile neutropenia in solid tumors and lymphomas using the Multinational Association for Supportive Care in Cancer (MASCC) risk index: feasibility and safety in routine clinical practice. Support Care Cancer. 2008;16:485–91.

Kern WV, Cometta A, De Bock R, Langenaeken J, Paesmans M, Gaya H. Oral versus intravenous empirical antimicrobial therapy for fever in patients with granulocytopenia who are receiving cancer chemotherapy. N Engl J Med. 1999;341:312–8.

Kern WV. Risk assessment and treatment of low-risk patients with febrile neutropenia. Clin Infect Dis. 2006;42:533–40.

Kern WV, Marchetti O, Drgona L, Akan H, Aoun M, Akova M, de Bock R, Paesmans M, Maertens J, Viscoli C, Calandra T. Oral moxifloxacin versus ciprofloxacin plus amoxicillin/clavulanic acid for low-risk febrile neutropenia – a prospective, double-blind, randomised, multicentre EORTC-Infectious Diseases Group (IDG) Trial (protocol 46001, IDG trial XV). 18th European Congress of Clinical Microbiology and Infectious Diseases, Barcelona, 2008.

Klastersky J, Paesmans M, Rubenstein EB, Boyer M, Elting L, Feld R, et al. The Multinational Association for Supportive Care in Cancer risk index: a multinational scoring system for identifying low-risk febrile neutropenic cancer patients. J Clin Oncol. 2000;18:3038–51.

Klastersky J. Management of patients with different risks of complication. Clin Infect Dis. 2004;39:S32–7.

Klastersky J, Paesmans M, Georgala A, Muanza F, Plehiers B, Dubreucq L, et al. Management with oral antibiotics in an outpatient setting of febrile neutropenic cancer patients selected on the basis of a score predictive for complications. J Clin Oncol. 2006;24:4129–34.

Klastersky J, Ameye L, Maertens J, Georgala A, Muanza F, Aoun M, Ferrant A, Rapoport B, Rolston K, Paesmans M. Bacteremia in febrile neutropenic cancer patients. Int J Antimicrob Agents. 2007;30:51–9.

Klastersky J, Paesmans M. Risk-adapted strategy for the management of febrile neutropenia in cancer patients. Support Care Cancer. 2007;15:477–82.

Kuderer NM, Dale DC, Crawford J, Cosler LE, Lyman GH. Mortality, morbidity and cost associated with febrile neutropenia in adult cancer patients. Cancer. 2006;106:2258–66.

Leibovici L, Paul M, Cullen M, Bucaneve G, Gafter-Gvili A, Fraser A, Kern WV. Antibiotic prophylaxis in neutropenic patients: new evidence, practical decisions. Cancer. 2006;107:1743–51.

Levenga TH, Timmer-Bonte JNH. Review of the value of colony stimulating factors for prophylaxis of febrile neutropenic episodes in adult patients treated for hematologic malignancies. Br J Haematol. 2007;138:146–52.

Moreau M, Klastersky J, Schwarzbold A, Muanza F, Georgala A, Aoun M, Loizidou A, Barette M, Costantini S, Delmelle M, Dubreucq L, Vekemans M, Ferrant A, Bron D, Paesmans M. A general chemotherapy myelotoxicity score to predict febrile neutropenia in hematologic malignancies. Ann Oncol. 2009;20:513–9.

Paesmans M. Risk factors assessment in febrile neutropenia. Int J Antimicrob Agents. 2000;16:107–11.

Paesmans M. Factors predictive for low and high risk of complications during febrile neutropenia and their implications for the choice of empirical therapy: experience of the MASCC Study Group on Infectious Diseases. Abstract presented at the 6th International Symposium on Febrile Neutropenia, Brussels, Dec 2003.

Paesmans M, for the MASCC Study Section on Infectious Diseases. The duration of febrile neutropenia. Abstract presented at the 8th International Symposium on Febrile Neutropenia, Athens, Jan 2006.

Paesmans M, Klastersky J, Maertens J, Georgala A, Muanza F, Aoun M, Ferrant A, Rapoport B, Rolston K, Ameye L. Predicting febrile neutropenia at low-risk using the MASCC score: does bacteremia matter? Support Care Cancer. 2011;19:1001–8.

Park Y, Kim DS, Park SJ, Seo HY, Lee SR, Sung HJ, Park KH, Choi IK, Kim SJ, Oh SC, Seo JH, Choi CW, Kim BS, Shin SW, Kim YH, Kim JS. The suggestion of a risk stratification system for febrile neutropenia patients with hematologic disease. Leuk Res. 2010;34:294–300.

Rolston K, Rubenstein E, Elting L, Escalante C, Manzullo E, Bodey GP. Ambulatory management of febrile episodes in low-risk neutropenic patients (abstract LM 81). In: Program and Abstracts of the 35th Interscience Conference on Antimicrobial Agents and Chemotherapy, American Society for Microbiology, Washington DC, 2005.

Rolston KVI, Manzullo EF, Elting LS, Frisbee-Hume SE, McMahon L, Theriault RL, et al. Once daily, oral, outpatient quinolone monotherapy for low-risk cancer patients with fever and neutropenia: a pilot study. Cancer. 2006;106:2489–94.

Rolston KV, Frisbee-Hume SE, Patel S, et al. Oral moxifloxacin for outpatient treatment of low-risk, febrile neutropenic patients. Support Care Cancer. 2010;18:89–94.

Rubenstein EB, Rolston K, Benjamin RS, Loewy J, Escalante C, Manzullo E, et al. Outpatient treatment of febrile episodes in low-risk neutropenic patients with cancer. Cancer. 1993;71:3640–6.

Sebban C, Dussart S, Fuhrmann C, Ghesquières H, Rodrigues I, Geoffrois L, Devaux Y, Lancry L, Chvetzoff G, Bachelot T, Chelghoum M, Biron P. Oral moxifloxacin or intravenous ceftriaxone for the treatment of low-risk neutropenic fever in cancer patients suitable for early hospital discharge. Support Care Cancer. 2008;16:1017–23.

Smith TJ, Khatcheressian J, Lyman GH, Ozer H, Armitage JO, Balducci L, et al. Update of recommendations for the use of white blood cell growth factors: an evidence-based clinical practice guideline. J Clin Oncol. 2006;24:3187–205.

Talcott JA, Finberg R, Mayer RJ, Goldman L. The medical course of patients with fever and neutropenia: clinical identification of a low-risk subgroup at presentation. Arch Intern Med. 1988;148:2561–8.

Talcott JA, Siegel RD, Finberg R, Goldman L. Risk assessment in cancer patients with fever and neutropenia: a prospective, two-center validation of a prediction rule. J Clin Oncol. 1992;10:316–22.

Talcott JA, Yeap BY, Clark JA, Siegel RD, Trice Loggers E, Lu C, Godley PA. Safety of early discharge for low-risk patients with febrile neutropenia: a multicenter randomized controlled trial. J Clin Oncol. 2011;29:3977–83.

Teuffel O, Amir E, Alibhai S, Beyene J, Sung L. Cost effectiveness of outpatient treatment for febrile neutropenia in adult cancer patients. Br J Cancer. 2011;104:1377–83.

Uys A, Rapoport BL, Anderson R. Febrile neutropenia: a prospective study to validate the Multinational Association for Supportive Care in Cancer (MASCC) risk-index score. Support Care Cancer. 2004;12:555–60.

Uys A, Rapoport BL, Fickl H, Meyer PWA, Anderson R. Prediction of outcome in cancer patients with febrile neutropenia: comparison of the Multinational Association of Supportive Care in Cancer risk-index score with procalcitonin, C-reactive protein, serum amyloid A, and interleukins-1β, -6, -8 and -10. Eur J Cancer Care (Engl). 2007;16:475–83.

Vento S, Cainelli F. Infections in patients with cancer undergoing chemotherapy: aetiology, prevention and treatment. Lancet Oncol. 2003;4:595–604.

Vidal L, Paul M, Ben Dor I, Soares-Weiser K, Leibovici L. Oral versus intravenous antibiotic treatment for febrile neutropenia in cancer patients: a systematic review and meta-analysis of randomized trials. J Antimicrob Chemother. 2004;54:29–37.

Weycker D, Malin J, Edelsberg J, Glass A, Gokhale M, Oster G. Cost of neutropenic complications of chemotherapy. Ann Oncol. 2008;19:454–60.

Copyright information

© 2015 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Paesmans, M. (2015). Risk Stratification in Febrile Neutropenic Patients. In: Maschmeyer, G., Rolston, K. (eds) Infections in Hematology. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-44000-1_8

DOI: https://doi.org/10.1007/978-3-662-44000-1_8

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-43999-9

Online ISBN: 978-3-662-44000-1

eBook Packages: Medicine, Medicine (R0)