Abstract

This paper presents a low complexity digital self-calibration technique for a successive-approximation-register analog-to-digital converter (SAR ADC) for automotive applications. The ADC includes a digital-to-analog converter (DAC) having a binary, not calibrated, lsb part and a calibrated thermometric msb part. Very few extra elements are required in the analog part due to the self-calibration capability and only a limited amount of logic is added to the digital section.

The calibration sequence is executed once, during the testing process, and the results are stored on the device itself. The calibration process does not need any special precise external equipment.

The converter, realized in a 40 nm CMOS technology, achieves an INL of less than 0.25 lsb @ 12 bits thanks to the calibration procedure, which reduces the INL by a factor of eight.



1 Introduction

Automotive microcontrollers use SAR ADCs to manage a large variety of sensor input signals. This converter achieves very good absolute accuracy and, with proper multiplexing, can serve several inputs with a single converter instance.

In the new generation of microcontrollers for automotive applications, we observe a significant increase both in the required converter performance and in the number of converters to be hosted, in order to achieve the required bandwidth and the capability of sampling several signals simultaneously.

To keep the cost of the converters from diverging, it is key to reach the desired performance with a compact implementation.

Analog-to-Digital Converters (ADCs) require high linearity and, therefore, capacitors with a low voltage coefficient. We propose a built-in self-calibration and digital-trim algorithm that corrects static mismatches in the capacitive Digital-to-Analog Converter (CDAC) block.

1.1 Conventional SAR ADC Calibration/Trimming

Many techniques can be applied to compensate random mismatch errors in the matching-critical components of data converters. They can be divided into four categories: trimming, calibration, switching-sequence adjustment, and dynamic element matching (DEM).

Trimming compensates the random mismatch errors by adjusting the component parameters at the wafer stage. It requires accurate test equipment to continuously measure the trimmed parameters and compare them with their nominal values. There are mainly two types of trimming: the first changes the physical dimensions of the circuit elements; the second connects or disconnects an array of small elements using fuses or MOS switches [1, 2].

Calibration can be seen as an improved version of trimming since it characterizes errors on chip or board and subsequently corrects them. Yet, the obtained accuracy level is limited by the resolution and accuracy of error measurement circuits and correction signals [3, 4].

Switching-sequence adjustment is commonly used in layout design to compensate systematic gradient errors in electrical parameters [5, 6].

DEM is another popular solution to random mismatch errors in critical components. It dynamically changes the positions of the mismatched elements over time, so that the equivalent component at each position is nearly matched on a time average. Unlike the static random-mismatch compensation techniques, DEM translates mismatch errors into noise. However, this noise is only partially shaped, and the in-band residue can degrade the signal-to-noise ratio (SNR) of the data converter. Furthermore, since DEM only guarantees matching on average, the output can be inaccurate at any single time instant [7, 8].

2 SAR ADC Overview

The SAR principle of operation is well known: essentially, it performs a binary search to converge to the input signal. At the heart of the SAR there are two components: a comparator and a DAC.

The DAC generates a “guess” of the analogue level and the comparator evaluates the difference between the DAC output and the actual, analogue input (Fig. 10.1).

Very often the Track&Hold structure is merged with the DAC for implementation efficiency and holding simplicity.

The sequencing logic and the SAR register set the next “guess” level, according to a binary search algorithm, in order to match the DAC output to the analogue input voltage. At the end, this process generates the digital code corresponding to the analogue input.

There is a strict correlation between the conversion result and the accuracy of the DAC: any non-ideality in the DAC components has an impact on the behavior of the SAR ADC system.
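The binary search described above can be sketched as a short behavioural model. It assumes an ideal comparator and a DAC given as a function of the trial code, with voltages referred to VrefN; the names `sar_convert` and `ideal_dac` are illustrative and do not come from the paper.

```python
from typing import Callable


def ideal_dac(code: int, n_bits: int, vref: float) -> float:
    """Ideal DAC level for a given code, referred to VrefN = 0."""
    return vref * code / 2**n_bits


def sar_convert(vin: float, n_bits: int = 12, vref: float = 1.0,
                dac: Callable[[int, int, float], float] = ideal_dac) -> int:
    """Binary search: decide one bit per cycle, from the msb down to the lsb."""
    code = 0
    for bit in reversed(range(n_bits)):
        trial = code | (1 << bit)            # tentatively set the current bit
        # Comparator decision: keep the bit if the input is not below the DAC 'guess'
        if vin >= dac(trial, n_bits, vref):
            code = trial
    return code
```

For example, `sar_convert(0.3)` returns 1228, the largest 12-bit code whose DAC level does not exceed the input. Passing a `dac` function with mismatched elements moves the decision thresholds, which is why any DAC non-ideality appears directly in the conversion result.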

The main requirements of the comparator section are an offset compatible with the accuracy required, a noise contribution in line with the converter resolution and an evaluation speed adequate to the conversion timings.

2.1 DAC Architecture

A set of binary-weighted physical elements, normally capacitors or resistors, is the most straightforward implementation of the DAC. This implementation places heavy constraints on the accuracy of the elements, especially if good linearity and the absence of missing codes must be guaranteed.

A different DAC organization can reduce the matching needs: a set of identical, thermometer-coded elements implements the msbs, while binary-weighted elements implement the lsbs.

This approach guarantees by construction the monotonicity of the thermometric DAC and reduces the matching requirements needed for a good DNL at the transition between the thermometric and the binary DAC. However, it requires a much larger set of switches to control the individual elements, which can have a non-negligible impact on the area of the converter. The partitioning of the DAC elements into a number of thermometric bits (msb) and binary bits (lsb) depends on the accuracy the converter must reach, on the matching parameters of the unit element (a technology parameter) and on the area taken by the switches.

Choosing this kind of arrangement (driven by accuracy requirements) automatically brings a series of consequences that we exploit in our calibration process.

In our implementation, the DAC has been fully realized with capacitors, given the stringent requirements on speed and reference consumption. In fact, avoiding resistors removes any DC current component from the reference, and the settling time of the DAC depends on the time constant associated with the switches and the capacitors: the switch resistance can be lowered without affecting the reference consumption.
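As an illustration of the msb/lsb partitioning, the sketch below splits a 12-bit code into a thermometer-coded msb field and a binary lsb field, using the six/six split adopted later in this paper. It models a conventional decoder with a fixed element order and assumes the common arrangement of 2^6 - 1 unit elements; the function name `segment_code` is hypothetical.

```python
def segment_code(code: int, n_msb: int = 6, n_lsb: int = 6):
    """Split a code into a thermometer vector (msb part) and a binary field (lsb part)."""
    if not 0 <= code < 2**(n_msb + n_lsb):
        raise ValueError("code out of range")
    msb = code >> n_lsb                    # number of unit elements to switch on
    lsb = code & ((1 << n_lsb) - 1)        # drives the binary-weighted elements
    n_units = 2**n_msb - 1                 # size of a conventional thermometric array
    thermometer = [1 if i < msb else 0 for i in range(n_units)]
    return thermometer, lsb
```

For instance, `segment_code(197)` switches on three unit elements and sets the lsb field to 5. Each msb increment turns on exactly one more unit element, which is why the thermometric part is monotonic by construction.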

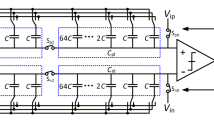

The proposed architecture implements a six-bit thermometric DAC and a six-bit binary DAC.

2.2 Thermometric and Binary Elements Organization

As noted above, the thermometric organization improves linearity at the expense of some redundancy in the element-selection circuitry. This redundancy is normally not exploited, because the thermometric sequence is hard-coded in the decoding logic.

The principle of the calibration method presented in this paper consists of exploiting the intrinsic redundancy offered by the thermometric decoding. The sequence of the thermometric elements is not hard-coded but can be changed arbitrarily.
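A minimal sketch of a thermometric decoder whose firing sequence is programmable rather than hard-coded, assuming the stored calibration result is a permutation of element indices (here the hypothetical list `sequence`). Code k switches on the first k entries of that list, so any permutation still yields a cumulative, thermometer-like selection.

```python
def elements_on(k: int, sequence: list[int]) -> set[int]:
    """Indices of the unit elements switched on for thermometric code k."""
    if sorted(sequence) != list(range(len(sequence))):
        raise ValueError("sequence must be a permutation of the element indices")
    if not 0 <= k <= len(sequence):
        raise ValueError("thermometric code out of range")
    return set(sequence[:k])
```

With `sequence = [2, 0, 3, 1]`, code 2 switches on elements 0 and 2, and every code includes all the elements of the previous one; only the order in which elements are added changes.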

Notice that in our implementation the thermometric DAC consists of a complete set of elements able to generate all the $2^{N_{bitTh}}+1$ reference levels from VrefN to VrefP (where NbitTh is the number of thermometer-coded bits). The reason for this choice will become clear later on.

Therefore, the binary DAC is not realized as a purely additive DAC, but is able to provide negative as well as positive voltage values.

Figure 10.2 represents a conventional approach for the generation of the references using a thermometric and a binary DAC arrangement while Fig. 10.3 describes the decoding strategy used for the proposed DAC.

As can be noticed, our implementation generates a further redundancy because, in principle, voltage references even above VrefP can be generated. We also exploit this redundancy in the measurement process.

3 Thermometric Elements Calibration

Any calibration process relies on the capability to measure the system to be calibrated: in our case, this is the set of unit capacitors constituting the thermometric DAC, but the concept works equally well with other physical elements (e.g. current sources).

The accurate evaluation of the values of the thermometric elements is, without doubt, a critical step in the calibration procedure: wrong measurements will produce, invariably, bad calibration results.

To be effective from an industrial point of view, the final calibration process has to satisfy two basic requirements:

1. no area-relevant extra analogue HW has to be designed and implemented in silicon for the calibration feature;
2. no high-accuracy references (internal and/or external) may be required by the calibration procedure.

3.1 Thermometric Elements Measurement

It is easy to recognize that, with the available hardware and without the help of any external equipment, we can measure, against a given arbitrary voltage reference, the difference between any two capacitors of the thermometric set.

In fact, what we really need to know is not the absolute value of each thermometric element (i.e. its capacitance in fF), but the difference between every element and its ‘ideal’ value. Actually, as we will see later on, the difference between each thermometric element and a single one of them, chosen arbitrarily as a reference, is sufficient to carry out the calibration procedure.

This is achieved by performing a simple SAR conversion of an error voltage generated on purpose by using the two capacitors under measurement.

Let’s sample the voltage VrefP on an arbitrarily chosen thermometric element, CTh-ref, while sampling VrefN on all the other elements.

Once the sampling phase has ended, and the charge is therefore frozen on the thermometric capacitors, we connect the CTh-ref capacitor to VrefN while connecting the capacitor to be measured, CTh-k, to VrefP.

The voltage at the input of the comparator will change by an amount equal to

$$\Delta V_k = \frac{C_{Th\text{-}k} - C_{Th\text{-}ref}}{C_{tot}}\,(V_{refP} - V_{refN})$$

where Ctot is the total capacitance connected to the comparator input.

At this point, the generated error signal can be converted into a digital code by means of the binary DAC. Obviously, ΔVk can be either positive or negative but, as mentioned before, the binary DAC can provide both positive and negative output voltage levels.

To improve the resolution of the measurement, the reference used by the binary DAC can be scaled down (VrefP_Cal): in our implementation, the scaling factor can be 8 or 16, providing an equivalent measurement resolution of 15 or 16 bits compared with the 12-bit resolution of the final converter. Note that the accuracy of the calibration voltage reference has no impact on the accuracy of the calibration process, so the area required to implement it is negligible compared with the area of the SAR ADC.

To enable the calibration process, the structure of the SAR ADC needs to be modified according to Fig. 10.4. The overhead of the calibration process is very low, being confined to a small analog add-on and a modification of the digital block, whose area in a 40 nm technology is negligible.
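The measurement described above can be modelled in a few lines. This is a hypothetical, noiseless sketch: it assumes the thermometric capacitors, plus an optional parasitic `c_par`, make up the whole capacitance at the comparator input, and that the binary DAC resolves the error voltage with a step equal to the 12-bit lsb divided by the scaling factor. The names `measure_e` and `equivalent_bits` are illustrative.

```python
import math


def measure_e(c: list[float], j: int, ref: int, scale: int = 8,
              n_bits: int = 12, vref: float = 1.0, c_par: float = 0.0) -> float:
    """Noiseless model of one E_j measurement, returned in lsb of the final converter."""
    c_tot = sum(c) + c_par                    # total capacitance at the comparator input
    dv = (c[j] - c[ref]) / c_tot * vref       # step when c[ref] -> VrefN and c[j] -> VrefP
    lsb_cal = vref / 2**n_bits / scale        # binary-DAC step with the scaled-down reference
    code = round(dv / lsb_cal)                # signed result: the binary DAC is bipolar
    return code / scale


def equivalent_bits(n_bits: int, scale: int) -> float:
    """Measurement resolution relative to the final converter."""
    return n_bits + math.log2(scale)
```

With 64 unit elements of value 1.0 and one element 1 % larger, the mismatch corresponds to about 0.64 lsb at 12 bits, and `measure_e` reads it as 0.625 lsb, i.e. five steps of 1/8 lsb. Since the scaled reference multiplies every reading by the same factor, its absolute accuracy only rescales all E_j together.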

In mathematical terms, let us call Tk the generic thermometric element.

The ‘ideal’ value is simply the average value of the elements, because if all the Tk had the same value they would realize a perfect thermometric DAC:

$$T_{ideal} = \frac{1}{N_{th}} \sum_{k=1}^{N_{th}} T_k$$

where Nth is the number of thermometric elements.

As described before, with the available HW it is easy to measure the following quantity, Ej,k:

$$E_{j,k} = T_j - T_k$$

This quantity is the basic element of the calibration process.

Choosing arbitrarily Tk ≡ Tref as the reference for all measurements, Eq. (10.3) is evaluated for all the thermometric elements, Tj, always using the same fixed element Tref.

Other, different, measurement policies can be adopted, but we will develop the next steps with the above assumption.

At the end of the measurement process, we have a numbered set of Nth values, Ej, representing the starting point of the calibration algorithm:

$$E_j = E_{j,ref} = T_j - T_{ref}, \qquad j = 1, \ldots, N_{th}$$

Again, Tref is a chosen element of the array.

The choice of this ‘reference’ element, Tref, is not unique, and it affects the numerical values computed during the calibration process. Nevertheless, it can be shown that it has no impact on the result.

Ej does not directly represent the difference between each thermometric element Tj and Tideal, but it can be conveniently used in the calculations.

3.2 Measurement Procedure

The measurements of the values of Ej are carried out using the binary section of the SAR ADC; the technology guarantees for this subsection a high degree of accuracy and we take advantage of it.

Moreover, the values to be measured are intrinsically very small: we are measuring the difference between two thermometric elements, which by technology are very similar. Hence only a limited dynamic range is needed for the measurement, and the dynamic range of the binary SAR subsection is adequate for the task.

Nevertheless, a single measurement of Ej is not accurate enough, due to the intrinsic noise of the comparator and the quantization error. Averaging over a number of measurements is mandatory.

In an ideal measurement environment, repeating the measurement does not give any real advantage: the code read out from the ADC is invariably the same (Fig. 10.5).

However, the situation is radically different if a limited, but not negligible, amount of noise is present. In such a case, the readouts spread over a certain interval and averaging becomes effective (Fig. 10.6).

The more noise is present, the more codes are exercised; however, an excess of noise degrades the measurement process.

In fact, for a given number of measurements available for the average there is an optimum noise level, and vice versa: given the noise level, it is possible to fix the optimum number of measurements (Fig. 10.7).

The accuracy of the measurement clearly depends on the noise level, given the number of samples used in the average. In this case, a noise of about 0.5 lsb is enough to guarantee a precision of almost 0.02 lsb after averaging 4096 samples. In fact, the ‘signal’, here a constant value, increases linearly with the number of measurements used in the average, Nave, whilst the noise, adding in power, increases like sqrt(Nave). In the end, the ‘signal’-to-noise ratio increases like

$$\frac{N_{ave}}{\sqrt{N_{ave}}} = \sqrt{N_{ave}}$$

Each doubling of the number of samples therefore improves the accuracy by 3 dB, i.e. by half a bit.
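The role of noise in the averaged measurement can be reproduced with a small Monte Carlo sketch. It assumes a constant input of 0.3 lsb, additive Gaussian noise with the given rms value (in lsb) and an ideal rounding quantizer; these figures and the name `averaged_reading` are illustrative, not taken from the paper.

```python
import random
import statistics


def averaged_reading(value: float, noise_rms: float, n_ave: int, seed: int = 1) -> float:
    """Average of n_ave quantized readings of a constant value (all quantities in lsb)."""
    rng = random.Random(seed)                 # fixed seed for a reproducible run
    readings = [round(value + rng.gauss(0.0, noise_rms)) for _ in range(n_ave)]
    return statistics.fmean(readings)
```

Without noise every reading rounds to the same code, so `averaged_reading(0.3, 0.0, 4096)` returns 0.0 however many samples are averaged. With 0.5 lsb of noise the readings spread over neighbouring codes and the average approaches 0.3; quadrupling `n_ave` roughly halves the residual random error, in line with the square-root law.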

3.3 Calculations and Data Processing

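Let us assume there are Nth thermometric elements in the DAC.

The output voltages generated by this DAC for every input code k are:

$$V_k = V_{ref}\,\frac{\sum_{i=1}^{k} T_i}{\sum_{i=1}^{N_{th}} T_i} = V_{ref}\,\frac{\sum_{i=1}^{k} T_i}{T_{tot}}, \qquad k = 0, \ldots, N_{th}$$

where the elements are numbered in firing order and the voltages are referred to VrefN.

It is implicit that for the ground level (code 0) no elements are used and V0 = 0; for the last level, where all elements are used, VNth = Vref, independently of the values of the Tk. The ground and full-scale levels are therefore always, by definition, correctly positioned, i.e. their INL is 0.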

Due to technology limitations, the Tk in fact have different values, each affected by an error with respect to the ideal value, Tideal:

$$T_k = T_{ideal} + t_k$$

where tk is the deviation of element k from the ideal value or, stated otherwise, the DNLk. Consequently, the DAC output voltage level becomes:

$$V_k = V_{ref}\,\frac{k\,T_{ideal} + \sum_{i=1}^{k} t_i}{N_{th}\,T_{ideal} + \sum_{i=1}^{N_{th}} t_i}$$

Note that, since Tideal is the average of the elements, the following holds by definition for the full-scale level:

$$\sum_{i=1}^{N_{th}} t_i = 0$$

The sum of all the DNL errors is 0, as it must be by the INL definition.

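The error between every level and the ideal level, i.e. the INLk, is:

$$INL_k = V_k - k\,\frac{V_{ref}}{N_{th}} = V_{ref}\,\frac{\sum_{i=1}^{k} T_i}{T_{tot}} - k\,\frac{V_{ref}}{N_{th}} = \frac{V_{ref}}{T_{tot}} \sum_{i=1}^{k} t_i$$

By definition:

$$INL_0 = INL_{N_{th}} = 0$$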

Here is the main point: the INLk depends not only on the tk values but also on their ordering, and the proposed implementation takes advantage of this property: by changing the ‘firing sequence’ of the Tk elements, the INL characteristic changes as well. The problem is to identify the permutation of the thermometric elements that minimizes the INL error.

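Using the measurement results, Ek, it is possible (see Appendix) to evaluate both the DNL and the INL errors, with the elements indexed in firing order:

$$DNL_k = t_k = E_k - \frac{1}{N_{th}} \sum_{j=1}^{N_{th}} E_j$$

$$INL_k = \frac{V_{ref}}{T_{tot}} \sum_{i=1}^{k} \left(E_i - \frac{1}{N_{th}} \sum_{j=1}^{N_{th}} E_j\right)$$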

It is worth pointing out that both INL and DNL expressions do not imply the knowledge, as previously claimed, of the selected reference element Tref.
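Computing the DNL and INL from the measured Ek amounts to subtracting the mean of the Ek and accumulating the result along the firing order. The sketch below assumes the Ej are listed in element-index order and expressed in any consistent unit (for example lsb); it returns the INL in that same unit, leaving out the Vref/Ttot scaling. The function names are hypothetical.

```python
from itertools import accumulate


def dnl_from_e(e: list[float]) -> list[float]:
    """DNL_k = E_k - mean(E): deviation of each element from T_ideal."""
    mean_e = sum(e) / len(e)
    return [x - mean_e for x in e]


def inl_from_e(e: list[float], order: list[int]) -> list[float]:
    """INL at codes 0..Nth for a given firing order (cumulative DNL)."""
    dnl = dnl_from_e(e)
    return [0.0] + list(accumulate(dnl[i] for i in order))
```

For E = [0.0, 0.4, -0.2, 0.2], the native order gives INL values of 0, -0.1, 0.2, -0.1, 0 (up to rounding), while the order [1, 2, 0, 3] gives 0, 0.3, 0, -0.1, 0. Adding the same constant to every Ej, which is what choosing a different Tref does, leaves both results unchanged.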

Every permutation of the thermometric elements generates a different INL characteristic for the DAC. The number of possibilities (Nth!) is huge even for a relatively small number of thermometric elements, and an exhaustive search over all possible permutations is practically impossible.
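To make the size of the search space concrete: for a handful of elements an exhaustive search is trivial, while for the 64 elements of a six-bit thermometric DAC it is hopeless. The sketch below uses hypothetical deviations `t` (arbitrary units, summing to zero), finds the best ordering of six elements by brute force, and prints the number of orderings for 64 elements.

```python
import math
from itertools import accumulate, permutations


def max_abs_inl(t: list[float], order) -> float:
    """Worst-case |INL| over all codes for a given firing order."""
    return max(abs(x) for x in accumulate(t[i] for i in order))


t = [0.3, -0.05, 0.02, -0.25, 0.01, -0.03]     # hypothetical deviations, sum = 0
native = max_abs_inl(t, range(len(t)))          # fixed, hard-coded order
best = min(permutations(range(len(t))), key=lambda p: max_abs_inl(t, p))
print(native, max_abs_inl(t, best))             # native vs. best of 720 orderings
print(f"{math.factorial(64):.3e} orderings for 64 elements")
```

No ordering can do better than half the largest deviation (0.15 here), because the INL just before and just after that element differ by its full deviation.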

3.4 Calibration Algorithms

At this point, after the Ek measurements, it is possible to evaluate the INL characteristic of the DAC for every permutation of the thermometric elements. Identifying the best one without an exhaustive search is highly desirable. With metaheuristic optimization approaches (Simulated Annealing and Tabu Search), simulations show a remarkable probability of getting trapped in local minima.

A deterministic approach solves the issue.

3.4.1 Calibration Algo I

The discussion of the rationale behind the calibration algorithms (Algo) and the placement strategy for the thermometric elements follows in the next paragraphs.

3.4.1.1 Calibration Algo I: Discussion

In a ‘perfect’ DAC, every thermometric element has a ‘perfect’ value, Tideal. In such a case, building the INL characteristic conceptually, when an element is placed in position ‘k’, the characteristic increases by Tideal and the resulting INL is 0. In reality, every element is affected by an error DNLk, and all of the elements have to be placed, including the one having the worst DNL value, DNLworst. Any placement process must eventually place this element as well, and this element defines the best achievable INL accuracy. Indeed, the best option is to place it exactly across the ideal staircase, which fixes the best INL performance of the DAC at DNLworst/2. Hence, from the measurement results alone, we immediately know the best possible INL performance of the DAC under test. What is needed now is a strategy able to identify one (out of many) permutation satisfying the following relationship (Figs. 10.8 and 10.9):

$$|INL_k| \le \frac{|DNL_{worst}|}{2}, \qquad k = 0, \ldots, N_{th}$$

3.4.1.2 Calibration Algo I: Elements Placement

Let us assume that the values of the thermometric elements follow a Gaussian distribution around their average value, Tideal; accordingly, a substantial number of them lie near Tideal and show good accuracy.

By definition, the INL error at the extremes (ground and full scale) is zero. So, starting from one of these points, it is possible to build, if not the optimal sequence, at least a very good approximation of it.

Here is the procedure:

0. Select ground (or full scale) as the ‘Current INL level’. Initialize the “Set of elements to be placed” with all the elements.
1. Select the element with the worst DNL, DNLworst, in the “Set of elements to be placed”.
2. Using the accurate elements, i.e. those with a low DNL error, bring the ‘Current INL level’ as close as possible to -DNLworst/2 (a value of opposite sign to DNLworst), taking care that the INL never exceeds ±DNLworst/2 in magnitude.
3. Place the sequence of low-DNL-error elements, followed by the DNLworst element, after the ‘Current INL level’.
4. Remove the used elements from the “Set of elements to be placed”. If -DNLworst/2 has been accurately approximated, the INL ‘jumps’ across the DNLworst element from -DNLworst/2 to +DNLworst/2, i.e. symmetrically about the ideal staircase.
5. Update the ‘Current INL level’ to the level after the DNLworst element (the last one placed).
6. If the “Set of elements to be placed” is not empty, restart from step 1; otherwise the INL is back to 0 (as it must be, by construction) and the procedure stops.

This procedure, by systematically consuming the thermometric elements with high DNL error, takes advantage of the relatively large number of elements with small DNL error. Most of the time, only elements with small DNL error remain at the end of the process. The result is the ‘stretching’ at the end of the INL plot, as sketched in Fig. 10.11.
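The placement procedure can be sketched in Python as follows. The sketch works on the DNL values tk (for example as computed from the Ek), starts from ground, and realizes step 2 with a simple hypothetical rule: keep appending the remaining element that brings the ‘Current INL level’ strictly closer to -DNLworst/2, and stop when no element helps. The ±DNLworst/2 limit is not enforced explicitly, and the paper does not specify how step 2 is solved, so this is one possible greedy implementation, not the authors' code.

```python
from itertools import accumulate


def algo1_order(t: list[float]) -> list[int]:
    """Greedy firing order following the Algo I steps (sketch)."""
    remaining = list(range(len(t)))       # "Set of elements to be placed"
    order: list[int] = []
    inl = 0.0                             # 'Current INL level', starting from ground
    while remaining:
        # Step 1: worst-DNL element still to be placed
        worst = max(remaining, key=lambda i: abs(t[i]))
        remaining.remove(worst)
        target = -t[worst] / 2            # so that the jump straddles the ideal staircase
        # Step 2: approach the target with the remaining elements
        while True:
            best, best_err = None, abs(inl - target)
            for i in remaining:
                err = abs(inl + t[i] - target)
                if err < best_err:
                    best, best_err = i, err
            if best is None:
                break
            order.append(best)
            remaining.remove(best)
            inl += t[best]
        # Steps 3-5: place the worst element and move the current level past it
        order.append(worst)
        inl += t[worst]
    return order


def max_abs_inl(t: list[float], order: list[int]) -> float:
    """Worst-case |INL| over all codes for a given firing order."""
    return max(abs(x) for x in accumulate(t[i] for i in order))
```

On the toy deviations `t = [0.3, -0.1, -0.1, -0.1, 0.0]` the sketch returns the order [1, 0, 3, 2, 4]: the worst element is preceded by a -0.1 element, which lowers the peak |INL| from 0.3 (native order) to about 0.2, against the DNLworst/2 = 0.15 bound.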

3.4.2 Calibration Algo II

3.4.2.1 Calibration Algo II: Discussion

The DNLworst element limits the performance of the “Algo I” calibration process. That element appears twice in the INL: first in the numerator of Eq. (10.6), when k ≥ ‘worst’ index; second in the denominator, where it contributes to Ttot jointly with all the other elements. In the denominator it does not cause any trouble; in the numerator, conversely, it is a problem, because it introduces a big ‘jump’ in the INL characteristic of the DAC at k = ‘worst’. A possible solution is therefore to use, in the numerator, only the elements having low DNL errors.

The following paragraph sketches the strategy adopted in order to avoid the presence of the elements with high DNL error in the numerator.

3.4.2.2 Calibration Algo II: Elements Selection and Placement

It is already established that

$$\sum_{k=1}^{N_{th}} t_k = 0$$

Now we can split the set of thermometric elements into two subsets; let us call them, for the time being, ‘set A’ and ‘set B’:

$$\sum_{k \in A} t_k + \sum_{k \in B} t_k = 0$$

or, equivalently:

$$\sum_{k \in A} t_k = -\sum_{k \in B} t_k$$

This means that, when the thermometric set is split into two subsets, the sum of subset ‘A’ is always the opposite of the sum of subset ‘B’, regardless of how the elements are selected.

Now we can run the following procedure:

1. Split the thermometric element set into two subsets of Nth/2 elements.
2. The first subset, called the ‘Good’ set, contains the elements with lower DNL; the remaining elements belong to the second subset, called the ‘Bad’ set.
3. The ‘Good’ set obeys a supplemental criterion: the absolute value of the sum of the DNL of its elements is minimized; consequently, the ‘Bad’ set has the same DNL sum with the opposite sign. Ideally, that value should be zero; in practice, it is possible to get very close to that target, with a value lower than most, if not all, of the individual DNL errors.
4. The ordering of the elements of the ‘Good’ set can be established with an algorithm similar to ‘Algo I’ (Fig. 10.10).

Then, for every DAC code, apply the following strategy:

1. For the first half of the thermometric scale, use only the ‘Good’ set.
2. For the second half of the thermometric scale:
   (a) substitute the ‘Bad’ set for the ‘Good’ set in the lower section of the array;
   (b) use the ‘Good’ set again in the upper section of the array, but with its elements in reversed order.

The substitution of the ‘Good’ set with the ‘Bad’ set is seamless if the sum of the ‘Good’ set is zero or very close to zero.

There is a price to pay for this procedure: the DAC characteristic is no longer monotonic ‘by construction’. Nevertheless, with a careful selection strategy it is always possible in practice to obtain an extremely good matching between the ‘Good’ and ‘Bad’ sets, much better than 1 lsb, thus avoiding non-monotonicity.

In this way:

1. Only the elements with low DNL error appear in the numerator as single-step ‘increments’. These DNL values are now the limiting factor for the performance of the DAC under test.
2. The discontinuity at half scale is minimized by the procedure that splits the thermometric set into the ‘Good’ and ‘Bad’ sets.
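The code-to-element mapping of Algo II can be sketched as follows. This is a simplified, hypothetical illustration: the ‘Good’ set is chosen simply as the Nth/2 elements with the smallest |DNL|, while the additional minimization of the ‘Good’ sum (step 3) and the Algo-I-like ordering of the ‘Good’ set (step 4) are left out, so `good_order` is used as given. The INL is computed in the units of tk, without the Vref/Ttot scaling.

```python
def split_good_bad(t: list[float]) -> tuple[list[int], list[int]]:
    """Simplified selection: 'Good' = the Nth/2 elements with the smallest |DNL|."""
    idx = sorted(range(len(t)), key=lambda i: abs(t[i]))
    half = len(t) // 2
    return idx[:half], idx[half:]


def algo2_levels(good_order: list[int], bad: list[int]) -> list[list[int]]:
    """Elements switched on at each code 0..Nth with the Good/Bad substitution."""
    half = len(good_order)
    if len(bad) != half:
        raise ValueError("'Good' and 'Bad' sets must have Nth/2 elements each")
    levels = [good_order[:k] for k in range(half + 1)]   # first half: 'Good' only
    reversed_good = good_order[::-1]
    for m in range(1, half + 1):                          # second half of the scale
        levels.append(bad + reversed_good[:m])            # 'Bad' below, reversed 'Good' above
    return levels


def inl_profile(t: list[float], levels: list[list[int]]) -> list[float]:
    """INL at each code: sum of the deviations of the elements switched on."""
    return [sum(t[i] for i in lv) for lv in levels]
```

Because the upper half reuses the ‘Good’ elements in reversed order on top of the ‘Bad’ set, and the two sets have opposite sums, the resulting profile satisfies INL_k = -INL_(Nth-k), i.e. it is antisymmetric about the central code. With the toy deviations `[0.3, -0.05, 0.02, -0.25, 0.01, -0.03]` the two large elements never appear as single steps, and the peak |INL| drops from 0.3 (native order) to 0.03.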

4 Statistical Simulations

A behavioral, statistical MATLAB model evaluates the performance of the above calibration concept. The model takes into account all the main technology parameters and includes an accurate model of the analog part as well as the calibration algorithms described above. It predicts the pre- and post-calibration INL characteristic of a single ADC and also describes the statistical behavior of a large set of ADCs.

4.1 Calibration Algo I Statistical Simulation

Figure 10.11 represents, for a single ADC, the profile of the INL before (native) and after calibration with Algo I.

Figures 10.12 and 10.13 show the simulation results on a population of 1024 simulated ADCs. As evident in Fig. 10.13, the statistical distribution of the INL is narrowed by roughly a factor of six.

4.2 Calibration Algo II Statistical Simulation

Figure 10.14 represents, for one particular case of the statistical distribution, the profile of the INL before (native ADC) and after calibration with Algo II.

Figures 10.15 and 10.16 instead show the results on a population of 1024 simulated ADCs. The statistical distribution of the INL is compacted even further than with Algo I (an improvement by a factor of eight), significantly reducing the minimum value. The worst-case DNL of the thermometric elements is no longer the limit (Fig. 10.17).

5 Silicon Measurements

The following paragraphs carry out a comparison of the simulation results vs. the laboratory measurements. All the measurements refer to the same device.

5.1 Uncalibrated ADC Silicon Measurement

The first measurement (Fig. 10.18) shows the native INL characteristic. Due to the initial random distribution of the thermometric element values, the maximum INL error is larger than 1 lsb.

The root cause of the oscillatory behavior (∼0.15 lsb) lies in the inaccuracy of the binary section implementing the six lsbs. Figure 10.19 shows the characteristic of the binary section of the ADC in detail.

Using the self-measurement capability of the calibration procedure, it is possible to estimate the native INL characteristic of the device without the aid of any external instrument. Figure 10.20 compares such estimation with the INL measured in the laboratory.

5.2 Calibration Algo I Silicon Measurement

Figure 10.21 shows the complete INL characteristic of the device after self-calibration using Algo I. The shape typical of Algo I matches the behavioral simulation results well (Fig. 10.8).

Figure 10.22 depicts the agreement, after calibration, between the estimated and the actually measured INL of the thermometric elements only.

5.3 Calibration Algo II Silicon Measurement

Figure 10.23 shows the INL after self-calibration using Algo II. The symmetry around the central code, peculiar to this calibration procedure, is evident.

Figure 10.24 depicts the agreement, after calibration, between the estimated and the actually measured INL of the thermometric elements only. In this case too, the agreement between the calculated and the measured characteristics is quite good. The deviation is in the range of 0.15 lsb @ 12 bits, and we suspect it is mainly due to inaccuracy in the measurement of the ADC characteristic after calibration (Figs. 10.25 and 10.26).

6 Conclusions

A low-cost, high-performance calibration method for SAR ADCs has been presented. The theory, the simulations and the experimental results are in close agreement, showing an INL improvement of up to a factor of eight and reaching 0.15 lsb of INL for the calibrated thermometric elements.

The overhead in terms of silicon area is negligible, and no external high-precision instruments are needed. The quality of the calibration measurements is high enough to allow the calculation of the native and calibrated INL profiles with an error in the range of 0.15 lsb @ 12 bits.

References

[1] A. Hastings, The Art of Analog Layout (Prentice-Hall, Englewood Cliffs, 2000)

[2] D. Marche, Y. Savaria, Y. Gagnon, Laser fine-tuneable deep-submicrometer CMOS 14-bit DAC. IEEE Trans. Circuits Syst. I Reg. Pap. 55(8), 2157–2165 (2008)

[3] M. Taherzadeh-Sani, A.A. Hamoui, Digital background calibration of capacitor-mismatch errors in pipelined ADCs. IEEE Trans. Circuits Syst. II 53(9), 966–970 (2006)

[4] W. Liu, P. Huang, Y. Chiu, A 12-bit, 45-MS/s, 3-mW redundant successive-approximation-register analog-to-digital converter with digital calibration. IEEE J. Solid-State Circuits 46(11), 2661–2672 (2011)

[5] J. Bastos, A.M. Marques, M.S.J. Steyaert, W. Sansen, A 12-bit intrinsic accuracy high-speed CMOS DAC. IEEE J. Solid-State Circuits 33(12), 1959–1969 (1998)

[6] G.A.M. Van der Plas, J. Vandenbussche, W. Sansen, M.S.J. Steyaert, G.G.E. Gielen, A 14-bit intrinsic accuracy Q² random walk CMOS DAC. IEEE J. Solid-State Circuits 34(12), 1708–1718 (1999)

[7] B.H. Leung, S. Sutarja, Multibit Σ-Δ A/D converter incorporating a novel class of dynamic element matching techniques. IEEE Trans. Circuits Syst. II 39(1), 35–51 (1992)

[8] E. Fogelman, I. Galton, W. Huff, H. Jensen, A 3.3-V single-poly CMOS audio ADC delta-sigma modulator with 98-dB peak SINAD and 105-dB peak SFDR. IEEE J. Solid-State Circuits 35(3), 297–307 (2000)


Appendix


The detailed calculations for DNLk and INLk follow, using the measurements Ek.

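Let us start from the quantities measured in the analog section:

$$E_j = T_j - T_{ref}, \qquad j = 1, \ldots, N_{th}$$

Solving for Tj:

$$T_j = T_{ref} + E_j$$

Let us evaluate:

$$T_{ideal} = \frac{1}{N_{th}} \sum_{j=1}^{N_{th}} T_j = T_{ref} + \frac{1}{N_{th}} \sum_{j=1}^{N_{th}} E_j$$

Using $T_k = T_{ideal} + t_k$:

$$DNL_k = t_k = T_k - T_{ideal} = E_k - \frac{1}{N_{th}} \sum_{j=1}^{N_{th}} E_j$$

It is now possible to evaluate INLk:

$$INL_k = \frac{V_{ref}}{T_{tot}} \sum_{i=1}^{k} \left(T_i - T_{ideal}\right) = \frac{V_{ref}}{T_{tot}} \sum_{i=1}^{k} \left(T_{ref} + E_i - T_{ideal}\right)$$

Substituting the previous result, we can eliminate Tref:

$$INL_k = \frac{V_{ref}}{T_{tot}} \sum_{i=1}^{k} \left(E_i - \frac{1}{N_{th}} \sum_{j=1}^{N_{th}} E_j\right)$$

Moreover, by definition:

$$INL_0 = INL_{N_{th}} = 0$$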


Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Burgio, C., Giacomini, M., Donze, E.M., Restivo, D.F. (2017). A Self-Calibrating SAR ADC for Automotive Microcontrollers. In: Baschirotto, A., Harpe, P., Makinwa, K. (eds) Wideband Continuous-time ΣΔ ADCs, Automotive Electronics, and Power Management. Springer, Cham. https://doi.org/10.1007/978-3-319-41670-0_10


DOI: https://doi.org/10.1007/978-3-319-41670-0_10


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41669-4

Online ISBN: 978-3-319-41670-0
