Abstract

This chapter is devoted to the design of a static state-feedback controller for a linear system subject to saturated quantization and delay in the input. Due to quantization and saturation, we consider, for the closed-loop system, a weaker notion of stability, namely local ultimate boundedness. The closed-loop system is modeled as a stable linear system subject to discontinuous perturbations. Then, by coupling a certain Lyapunov–Krasovskii functional with adequate sector conditions via the S-procedure, we derive sufficient conditions ensuring that the trajectories of the closed-loop system converge in finite time into a compact set \(S_u\) surrounding the origin, from every initial condition belonging to a compact set \(S_0\). Moreover, the sizes of the initial condition set \(S_0\) and of the ultimate set \(S_u\) are optimized by solving a convex optimization problem under linear matrix inequality (LMI) constraints. Finally, an example taken from the literature shows the effectiveness of the proposed methodology.

S. Tarbouriech—This work has been funded by the ANR under grant LIMICOS ANR-12-BS03-0005-01.


1 Introduction

Networked control systems have been extensively studied due to their practical interest (see for instance [12]) and also because the introduction of communication networks in control loops poses new theoretical challenges. Indeed, the constraints induced by networks can seriously degrade the performance of the closed-loop system. Among all these constraints, two are particularly important: quantization and delay. For a complete overview of the issues arising in networked control systems, see [10, 12, 15].

Quantization is a phenomenon occurring in all data networks, where a real-valued signal is transformed into a piecewise constant signal. Whenever quantization is uniform, global asymptotic stability of the closed loop cannot be established; see [6]. In particular, for large signals, the quantizer saturates and, as a consequence, global stabilization cannot be obtained except for open-loop stable systems; see [17]. Furthermore, as uniform quantizers exhibit a dead zone around zero, local asymptotic stability of the origin cannot, in general, be guaranteed; see [2, 3]. Several methods have been proposed in the literature to deal with quantized systems. A first method to cope with quantized closed-loop systems consists of adopting a robust control point of view. Namely, the closed-loop system is modeled as a nominal system affected by a perturbation, i.e., the quantization error. Then, by using sector conditions and classical tools such as the small gain theorem or Lyapunov functions coupled with input-to-state stability (ISS) properties [2, 3, 14] or the S-procedure [1, 8], closed-loop stability can be assessed [10, 18].
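
A minimal numerical sketch of the two input nonlinearities discussed above may help fix ideas. It assumes a rounding quantizer \(q(u)=\varDelta \,\mathrm{round}(u/\varDelta )\), which is an illustrative choice and not necessarily the quantizer used later in this chapter. With this choice, every component with \(|u_{(i)}|<\varDelta /2\) is mapped to zero (the dead zone), the quantization error is at most \(\varDelta /2\) per component, and large signals are clipped by the saturation.

```python
import numpy as np

def sat(u, ubar):
    """Componentwise symmetric saturation: sat(u)_i = sign(u_i) * min(ubar_i, |u_i|)."""
    u = np.asarray(u, dtype=float)
    return np.sign(u) * np.minimum(ubar, np.abs(u))

def quantize(u, delta):
    """Uniform quantizer (assumed rounding form): nearest multiple of delta, componentwise."""
    return delta * np.round(np.asarray(u, dtype=float) / delta)

u = np.array([0.3, -0.4, 1.7, -8.2])
q = quantize(u, delta=1.0)   # the first two entries fall in the dead zone |u_i| < delta/2
v = sat(q, ubar=5.0)         # the last entry is clipped to the saturation level
```

Near the origin the quantized signal vanishes, which is why, for an unstable plant, the state cannot be steered exactly to zero.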

Time delays naturally occur in networked control systems due to the finite propagation time of signals in communication media. Clearly, the presence of time delays can further degrade closed-loop performance; see [11]. In that case, the idea generally proposed is to extend classical results (without delay) to account for time delay by considering either a Lyapunov–Razumikhin function [14] or a Lyapunov–Krasovskii functional [5, 9]. Using a Lyapunov–Razumikhin function leads to very conservative results [11] but can deal with time-varying delays. Concerning the Lyapunov–Krasovskii-based approach, the applicability of this methodology requires a proper definition of the Lyapunov–Krasovskii functional. Such a choice may entail some conservatism and some technical problems; see [16].

In this chapter, we focus on the stabilization problem for linear systems subject to a constant time delay and saturated quantization in the input channel. The resulting closed-loop system is modeled as a linear time-delay system perturbed by two different nonlinearities, which allows the saturation and the quantization phenomena to be described separately and more precisely. Following the work of [19], the saturation function is embedded into a local sector condition, while the nonlinearity associated with the quantization error is handled via suitable sector conditions. At this point, due to the presence of the uniform quantizer (at least for unstable open-loop plants), it is impossible to prove asymptotic stability of the origin; see [18]. To overcome this problem, we focus on local ultimate boundedness for the closed-loop system. In particular, we design the controller gain K such that there exists a set (inner set) that is a finite-time attractor for every initial condition belonging to a compact set (outer set) containing it. Then, by relying on a Lyapunov–Krasovskii functional, we turn the solution to the considered control design problem into the solution to a quasi-LMI optimization problem. Finally, a procedure based on convex optimization is proposed to optimize the sizes of the outer and inner sets.

Notation: The notation \(P >\mathbf{0}\), for \(P \in \mathbb R^{n\times n}\), means that P is symmetric and positive definite. The sets \(\mathbb S_n\) and \(\mathbb S_n^+\) represent the sets of symmetric and symmetric positive definite matrices of \(\mathbb R^{n\times n}\), respectively. Given a vector x (a matrix A), \(x' (A')\) denotes the transpose of x (of A). The symmetric matrix \(\left[ {\begin{array}{cc} A&{}B\\ {*}&{}C \end{array}}\right] \) stands for \({\begin{bmatrix} A&B\\ B'&C \end{bmatrix}}\). The matrix diag(A, B) stands for the block-diagonal matrix \({\begin{bmatrix}A&0\\0&B\end{bmatrix}}\). Moreover, for any square matrix \(A\in \mathbb R^{n\times n}\), we define \(\mathrm {He}(A)=A+A'\). The matrix \(\mathbf{1}\) represents the identity matrix of appropriate dimension. The notation \(\mathbf{0}_{n,m}\) stands for the zero matrix in \(\mathbb R^{n\times m}\) and, when no confusion is possible, the subscript will be omitted. For any function \(x:\ [-h,\ +\infty )\rightarrow \mathbb R^n\), the notation \(x_t(\theta )\) stands for \(x(t+\theta )\), for all \(t\ge 0\) and all \(\theta \in [-h,\ 0]\). The notation |x| refers to the classical Euclidean norm and \(\Vert x_t\Vert \) refers to the induced norm defined by \(\Vert x_t\Vert =\mathop {\sup }\limits _{\theta \in [-h,0]} |x(t+\theta )|\). For \(P>0\), \(|x|_P\) refers to the modified Euclidean norm \(|x|_P=\sqrt{x'Px}\). Its induced norm is then denoted \(\Vert x_t\Vert _P\). For a generic positive \(\rho \), we define the functional sets \(L_V(\rho )=\left\{ x\in \mathscr {C}^1:V(x_t,\dot{x}_t) \le \rho \right\} \) and \({\text {int}}L_V(\rho )=\left\{ x\in \mathscr {C}^1:V(x_t,\dot{x}_t) < \rho \right\} .\) Similarly, we define the sets \(S(\rho )=\left\{ x\in \mathbb {R}^n:\eta (\vert x\vert ,0) \le \rho \right\} \) and \({\text {int}}S(\rho )=\left\{ x\in \mathbb {R}^n:\eta (\vert x \vert ,0)<\rho \right\} \), where \(\eta \) is a class \(\mathscr {K}\) function.

2 Problem Statement

Consider the following continuous-time system with delayed saturated quantized input:

where \(x\in \mathbb {R}^{n}\) is the state of the system, x(0) the initial state, u the control input, and \(h\ge 0\) the constant input delay. A and B are real constant matrices of appropriate dimensions. The saturation map \({\text {sat}}:\mathbb {R}^m\rightarrow \mathbb {R}^m\) is classically defined componentwise from the symmetric saturation function whose level is the positive vector \(\bar{u}\): \({\text {sat}}(u)_{(i)}={\text {sign}}(u_{(i)})\min \{\bar{u}_{(i)},\vert u_{(i)}\vert \}\), \(i=1,\ldots ,m\). The quantization function, depicted in Fig. 8.1, is defined as

Assuming that the full state x is measured, we want to stabilize system (8.1) (in a sense to be specified) by means of a static state-feedback controller, that is,

Plugging the above controller into (8.1) yields the following closed-loop dynamics:

Due to the delayed input, the closed-loop system is a time-delay system, so a suitable initial condition must be selected for the controller. Notice that the infinite dimensionality of the closed-loop system is actually introduced by the controller. Thus, for (8.3) to be an accurate description of the closed-loop system, the initial condition of the time-delay system (8.3) must be chosen as

In particular, this choice avoids jumps in the plant state at time \(t=h\). Furthermore, note that \(x_0\) is assumed to be a given constant vector of \(\mathbb R^n\). Then, the closed-loop system can be described by the following functional differential equation

By defining

system (8.3) can be rewritten equivalently as

Due to quantization, whenever the open-loop system (8.1) is unstable, asymptotic stabilization of the origin cannot be achieved with any gain K; see [15]. To overcome this drawback, we rely on the notion of ultimate boundedness for the closed-loop trajectories, whose definition is recalled below (see [13] for more details):

Definition 1

Let \(S_{0}\) and \(S_{u}\) be two compact sets containing the origin. The solutions to (8.4) with \(x_0\in S_{0}\) are said to be ultimately bounded in the set \(S_{u}\) if there exists a time \(T= T(|x_0|)\ge 0\) such that, for every \(t\ge T\),

Hence, the problem we solve can be summarized as follows:

Problem 1

Given real matrices A and B of adequate dimensions, determine a gain \(K\in \mathbb {R}^{m\times n}\) and two compact sets \(S_{0}\) and \(S_{u}\), with \(0\in S_u\subset S_{0}\), such that, for every \(x_0\in S_0\), the resulting trajectory of (8.5) is ultimately bounded in \(S_{u}\).
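
The behavior targeted by Problem 1 can be visualized with a crude simulation. The sketch below assumes closed-loop dynamics of the form \(\dot x(t)=Ax(t)+B\,{\text {sat}}(q(Kx(t-h)))\) with a constant initial function \(x(\theta )=x_0\), \(\theta \in [-h,0]\), a rounding quantizer, and hypothetical scalar data \(A=1\), \(B=1\), \(K=-3\), \(h=0.2\), \(\bar u=5\), \(\varDelta =1\); none of these values come from this chapter. The delay equation is integrated with forward Euler and a history buffer. Because the plant is open-loop unstable, the quantizer dead zone prevents convergence to the origin, but the trajectory remains in a small neighborhood of it, which is the ultimate boundedness property of Definition 1.

```python
import numpy as np

def sat(u, ubar):
    return np.sign(u) * np.minimum(ubar, np.abs(u))

def quantize(u, delta):
    # assumed rounding quantizer
    return delta * np.round(u / delta)

def simulate(A, B, K, h, ubar, delta, x0, t_end, dt=1e-3):
    """Forward-Euler simulation of x' = A x + B sat(q(K x(t - h))) (assumed model)."""
    A, B, K = (np.atleast_2d(np.asarray(M, dtype=float)) for M in (A, B, K))
    x0 = np.asarray(x0, dtype=float)
    n_delay = int(round(h / dt))        # delay expressed in integration steps
    n_steps = int(round(t_end / dt))
    xs = np.empty((n_steps + 1, x0.size))
    xs[0] = x0
    for k in range(n_steps):
        # constant initial function: x(theta) = x0 for theta in [-h, 0]
        x_delayed = xs[k - n_delay] if k >= n_delay else x0
        u = sat(quantize(K @ x_delayed, delta), ubar)
        xs[k + 1] = xs[k] + dt * (A @ xs[k] + B @ u)
    return xs

# Hypothetical scalar data: unstable plant, saturation level 5, quantization step 1
xs = simulate(A=1.0, B=1.0, K=-3.0, h=0.2, ubar=5.0, delta=1.0, x0=[2.0], t_end=10.0)
```

With these data, saturation creates unstable equilibria at \(x=\pm 5\); starting beyond them (e.g. \(x_0=6\)) the trajectory diverges, which illustrates why only a bounded set \(S_0\) of initial conditions can be guaranteed.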

3 Main Results

To solve Problem 1, we propose a Lyapunov-based result, which establishes local ultimate boundedness of a time-delay system, provided that certain inequalities involving a Lyapunov–Krasovskii functional hold.

Theorem 1

Given a functional \(V:\mathscr {C} \times \mathscr {C} \rightarrow \mathbb R^+\), assume that there exist three class \(\mathcal{K}\) functions \(\kappa \), \(\eta \), and \(\omega \) such that, for every positive t,

and that there exist two positive scalars \(\gamma \) and \(\beta \), with \(\beta <\gamma \), such that, for every positive t and every \(x_t\in L_V(\gamma )\setminus {\text {int}}L_V(\beta )\), one gets:

Then,

- for every initial condition \(x(0)\in S(\gamma ) \), the solutions to system (8.3) are bounded;

- for every \(x(0)\in S(\gamma )\), there exists a positive time T such that, for every \(t\ge T\),

$$x(t)\in S(\kappa ^{-1}(\beta )).$$

Proof

First, notice that

Thus, thanks to (8.7), for every positive t one has

Furthermore, due to (8.6), one has

which implies that

and then boundedness is proven.

To prove finite-time convergence, let \(T=\inf \{t>0:x_t \in L_V(\beta ) \}\). For every \(t\in [0,T]\) we have \(x_t\in L_V(\gamma )\); then, integrating (8.7) along the trajectories of (8.5) yields

Now, observe that, due to (8.6), whenever \(x_\tau \in L_V(\gamma )\) we have

Thus, one gets

and then

From (8.6), to ensure that \(\kappa (\vert x(T) \vert )\le \beta \), it suffices to impose that

which leads to

Moreover, since (8.7) also holds on the boundary of \(L_V(\beta )\), trajectories cannot leave this set and, for all \(t\ge T\),

and this concludes the proof. \(\blacksquare \)

Remark 1

In the previous result, no assumption on the considered norms was made. In particular, the norms involved in the above result may be chosen differently from each other. For example, one can assume that relations (8.6) and (8.7) hold as follows

where \(\vert \cdot \vert _a\), \(\vert \cdot \vert _b\), \(\vert \cdot \vert _c\) and \(\vert \cdot \vert _d\) are any vector norms and \(\Vert \cdot \Vert _b\) and \(\Vert \cdot \Vert _c\) are the corresponding induced function norms. The proof of Theorem 1 is almost the same, except that, when combining the left-hand sides of (8.8) and (8.9), one has to deal with different norms, i.e., \(\vert \cdot \vert _a\) and \(\vert \cdot \vert _d\). However, since all norms on \(\mathbb R^n\) are equivalent, there exists a positive scalar \(\delta \) such that

which allows a result similar to Theorem 1 to be obtained.
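
As a concrete instance of such an equivalence constant, take, hypothetically, \(\vert \cdot \vert _a=\vert \cdot \vert _P\) and \(\vert \cdot \vert _d=\vert \cdot \vert \). Then \(\sqrt{\lambda _{\min }(P)}\,|x|\le |x|_P\le \sqrt{\lambda _{\max }(P)}\,|x|\) for all x. The sketch below computes these constants for a hypothetical matrix P and checks the bounds on random vectors; it shows one admissible choice of \(\delta \), not the specific constant behind the inequality above.

```python
import numpy as np

def equivalence_constants(P):
    """Return (c_low, c_high) with c_low*|x| <= |x|_P <= c_high*|x| for symmetric P > 0."""
    eigvals = np.linalg.eigvalsh(P)   # ascending order for symmetric matrices
    return np.sqrt(eigvals[0]), np.sqrt(eigvals[-1])

def weighted_norm(x, P):
    return np.sqrt(x @ P @ x)

P = np.array([[2.0, 0.5], [0.5, 1.0]])   # hypothetical positive definite weight
c_low, c_high = equivalence_constants(P)
rng = np.random.default_rng(0)
for x in rng.standard_normal((1000, 2)):
    assert c_low * np.linalg.norm(x) <= weighted_norm(x, P) + 1e-12
    assert weighted_norm(x, P) <= c_high * np.linalg.norm(x) + 1e-12
```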

Now, we present two lemmas that will be useful in the sequel:

Lemma 1

([7]) For every \(u\in \mathbb {R}^m\) the following conditions hold:

for any diagonal positive definite matrices \(T_1,T_2\in \mathbb {R}^{m\times m}\).

Lemma 2

([19]) Consider a matrix \(G\in \mathbb {R}^{m\times n}\). The nonlinearity \(\phi (u)= {\text {sat}}(u)-u\) satisfies

for every diagonal positive definite matrix \(T_3\in \mathbb {R}^{m\times m}\), provided that x belongs to the set \(\mathscr {S}(\bar{u})\) defined by

Based on the use of a Lyapunov–Krasovskii functional, the following theorem provides sufficient conditions to solve Problem 1.

Theorem 2

If there exist a matrix \(W\in \mathbb {R}^{n\times n}\), three symmetric positive definite matrices \(J,L,U\in \mathbb {R}^{n\times n}\), two matrices \(Y,Z \in \mathbb {R}^{m\times n}\), three positive definite diagonal matrices \(T_1,T_2,T_3\in \mathbb {R}^{m\times m}\), and two positive scalars \(\sigma ,\sigma _2\) such that \(\sigma _2<\sigma \) and

then the gain \(K=YW'^{-1}\) and the sets \(S_0=L_V(1)\) and \(S_u=L_V(\beta )\), with \(\frac{\sigma _2}{\sigma }<\beta <1\), solve Problem 1, where \(P=W^{-1}L W'^{-1}\) and \(Q=W^{-1}U W'^{-1}\).
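
Once the conditions of Theorem 2 have been solved numerically, the controller gain and the matrices of the functional follow from the decision variables through the stated change of variables, where \(W'^{-1}\) denotes \((W')^{-1}\). The sketch below performs this recovery with linear solves instead of explicit inverses. The numerical values are hypothetical placeholders, not a solution of (8.13) and (8.14).

```python
import numpy as np

def recover_gain_and_weights(W, Y, L, U):
    """Undo the change of variables of Theorem 2:
    K = Y W'^{-1},  P = W^{-1} L W'^{-1},  Q = W^{-1} U W'^{-1}."""
    K = np.linalg.solve(W, Y.T).T             # (W^{-1} Y')' = Y W'^{-1}

    def congruence(M):
        WinvM = np.linalg.solve(W, M)         # W^{-1} M
        return np.linalg.solve(W, WinvM.T).T  # W^{-1} M W'^{-1}

    P, Q = congruence(L), congruence(U)
    return K, (P + P.T) / 2.0, (Q + Q.T) / 2.0   # symmetrize against round-off

# Hypothetical decision variables (n = 2, m = 1), not a solution of the LMIs
W = np.array([[1.0, 0.2], [0.0, 0.5]])
Y = np.array([[-0.3, 0.1]])
L = np.array([[0.5, 0.1], [0.1, 0.4]])
U = np.array([[0.2, 0.0], [0.0, 0.1]])
K, P, Q = recover_gain_and_weights(W, Y, L, U)
```

Since P and Q are congruent to L and U, they inherit positive definiteness; in practice W, Y, L, U would be returned by an LMI solver.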

Proof

Consider the following Lyapunov–Krasovskii functional

where P, Q, R are symmetric positive definite matrices. Notice that for \(t>0\) the functional satisfies

and then

Now, computing the time derivative of the above functional along the solutions to system (8.5), one gets

and

Moreover, by Jensen’s inequality, it follows that

We want to show that, if (8.13) and (8.14) hold, then there exists a sufficiently small positive constant \(\varepsilon \) such that, along the solutions to system (8.5),

whenever \(x_t\in L_V(1)\setminus {\text {int}}L_V(\beta )\). To prove (8.21), following S-procedure arguments, it suffices to show that there exists a positive scalar \(\theta \) such that

From (8.20), one can write

where \(\mathscr {L}_0\) denotes the right-hand side of (8.20). Define

Then, inequality (8.22) reads:

where

Moreover, by selecting \(\theta =\sigma \) and \(\beta =\frac{\sigma _2}{\sigma }\), from the above expression we obtain

Now define

The satisfaction of (8.14), with the change of variables \(P=W^{-1}L W'^{-1}\) and \(G=ZW'^{-1}\), guarantees that the ellipsoid \({\mathscr {E}}(P,1)= \{ x\in \mathbb {R}^n:x'Px\le 1\}\) is contained in the polyhedral set \({\mathscr {S}}(\bar{u})\) defined in Lemma 2. Moreover, due to (8.16), if \(x_t\in L_V(1)\), then \(x(t)\in \mathscr {E}(P,1)\) and therefore also \(x(t)\in \mathscr {S}(\bar{u})\), which guarantees the satisfaction of relation (8.12) in Lemma 2. Furthermore, note that from (8.15), one can write:

since \(x_0\) is assumed to be constant on \([-h,0]\). Then, from (8.16), any \(x_0\in \mathscr {E}(P+hQ,1)\) satisfies \(x_0\in L_V(1)\). Thus, by using Lemmas 1 and 2, for every \(x_t\in L_V(1)\), one has

where

with \(\mathcal{N}_4=\frac{1}{m}(-\sigma +\varDelta ^2{\text {trace}}(T_1))\mathbf{1}\) and

To prove our claim, we show that (8.13) implies (8.21). To this end, in light of (8.24), (8.26), and (8.28), it suffices to show that, along the trajectories of (8.5), one has

By Finsler’s lemma [18], the above inequality is equivalent to the existence of a matrix X of appropriate dimensions such that

where \( \bar{B}=\begin{bmatrix} -\mathbf{1}&A&BK&\mathbf{0}&B&B \end{bmatrix}. \) Now, select X as follows

Then, by pre- and post-multiplying \(\mathcal{Q}\), respectively, by \({\text {diag}}\{H^{-1},H^{-1},H^{-1},\sqrt{m}\mathbf{1},\mathbf{1},\mathbf{1}\}\) and \({\text {diag}}\{H'^{-1},H'^{-1},H'^{-1},\sqrt{m}\mathbf{1},\mathbf{1},\mathbf{1}\}\) with

we obtain the left-hand side of (8.13). Then the satisfaction of (8.13) implies that the inequality

holds along the trajectories of system (8.5). Thus, invoking Theorem 1, in view of Remark 1, with the choices

and

establishes the result. \(\blacksquare \)
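
The Finsler step above can also be checked numerically. For a symmetric matrix \(\mathcal Q\) and a matrix \(\bar B\), the condition \(\xi '\mathcal Q\xi <0\) for all \(\xi \ne 0\) with \(\bar B\xi =0\) is equivalent to the existence of \(\mu >0\) such that \(\mathcal Q-\mu \bar B'\bar B<0\), that is, \(\mathcal Q+{\mathrm {He}}(X\bar B)<0\) with \(X=-\frac{\mu }{2}\bar B'\). The sketch below uses hypothetical random data rather than the matrices of the proof: it first tests \(\mathcal Q\) on a basis of \(\ker \bar B\) and then finds such a \(\mu \) by doubling. The proof instead uses the structured choice of X given above, which is then exploited in the congruence transformation.

```python
import numpy as np

def kernel_basis(B, tol=1e-10):
    """Orthonormal basis (as columns) of the null space of B, computed via the SVD."""
    _, s, vh = np.linalg.svd(B)
    rank = int(np.sum(s > tol))
    return vh[rank:].T

def is_neg_def(M):
    return np.max(np.linalg.eigvalsh((M + M.T) / 2.0)) < 0.0

def finsler_multiplier(Q, Bbar, mu0=1.0, max_doublings=60):
    """Return mu > 0 such that Q - mu * Bbar' Bbar < 0, or None if N' Q N < 0 fails."""
    N = kernel_basis(Bbar)
    if N.shape[1] > 0 and not is_neg_def(N.T @ Q @ N):
        return None   # Q is not negative definite on ker(Bbar): no such mu exists
    mu = mu0
    for _ in range(max_doublings):
        if is_neg_def(Q - mu * Bbar.T @ Bbar):
            return mu
        mu *= 2.0
    return None

# Hypothetical data: Q is indefinite but negative definite on ker(Bbar)
rng = np.random.default_rng(1)
Bbar = rng.standard_normal((2, 5))
N = kernel_basis(Bbar)
Q = -N @ N.T + 10.0 * Bbar.T @ Bbar
mu = finsler_multiplier(Q, Bbar)
X = -0.5 * mu * Bbar.T   # then He(X Bbar) = -mu Bbar' Bbar
```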

Remark 2

The above result is based on the use of a classical Lyapunov–Krasovskii functional. As pointed out in [9], this result can be extended to the more realistic case of time-varying delays by considering a more complex functional and following arguments analogous to those used in the proof of Theorem 2.
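
In its standard integral form, which we assume is the one invoked in the proof of Theorem 2, Jensen's inequality gives, for \(R>0\), \(h\int _{t-h}^{t}\dot x'(s)R\dot x(s)\,ds\ge (x(t)-x(t-h))'R(x(t)-x(t-h))\), since \(\int _{t-h}^{t}\dot x(s)\,ds=x(t)-x(t-h)\). More complex functionals such as those mentioned above may call for sharper bounds, for instance the Wirtinger-based inequality of [16]. The sketch below evaluates the Jensen gap with the trapezoidal rule on hypothetical derivative samples; because the trapezoidal weights are positive and sum to h, the discrete gap is also nonnegative.

```python
import numpy as np

def trapezoid(y, s):
    """Trapezoidal rule along the first axis of y (scalar or vector samples)."""
    ds = np.diff(s)
    return np.tensordot(ds, (y[1:] + y[:-1]) / 2.0, axes=(0, 0))

def jensen_gap(xdot, s, R):
    """h * int xdot' R xdot ds - (int xdot ds)' R (int xdot ds), nonnegative by Jensen."""
    h = s[-1] - s[0]
    quad = np.einsum("ki,ij,kj->k", xdot, R, xdot)   # xdot(s_k)' R xdot(s_k)
    integral_xdot = trapezoid(xdot, s)               # approximates x(t) - x(t - h)
    return h * trapezoid(quad, s) - integral_xdot @ R @ integral_xdot

# Hypothetical derivative samples on [t - h, t] with t = 0 and h = 0.2
s = np.linspace(-0.2, 0.0, 401)
xdot = np.column_stack((np.cos(5.0 * s), s**2))
R = np.array([[2.0, 0.3], [0.3, 1.0]])
gap = jensen_gap(xdot, s, R)
```

The gap vanishes for a constant derivative, which shows that the Jensen bound is tight only for linear trajectories on the delay interval.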

4 Optimization Issues

In solving Problem 1, the implicit objective is to obtain a set \(S_0\) as large as possible and a set \(S_u\) as small as possible. Maximizing the size of \(S_0\) and minimizing the size of \(S_u\) requires a good measure of such sets. At this stage, it is interesting to remark that, as mentioned in the proof of Theorem 2, if \(x(0)\in \mathscr {E}(P+hQ,1)\), then \(x_0\in S_0=L_V(1)\). This implies that we can use the ellipsoid \(\mathscr {E}(P+hQ,1)\) to implicitly maximize \(S_0\). When considering ellipsoidal sets, several measures, and therefore associated criteria, can be considered. Volume, minor axis, directions of interest, and inclusion of a given shape set defined through its extreme points \(v_r\), \(r = 1, \ldots , n_r\) (see for example [1]) are some of the tools usually adopted to indirectly optimize the size of such sets. The natural choice to maximize the size of \(S_0\) in a homogeneous way consists in minimizing the trace of the matrix \(P+hQ\).
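
The link between the trace and the size of an ellipsoid can be made explicit. The semi-axes of \(\mathscr E(M,1)=\{x: x'Mx\le 1\}\), with \(M>0\), have lengths \(a_i=1/\sqrt{\lambda _i(M)}\), so that \({\text {trace}}(M)=\sum _i 1/a_i^2\). Decreasing the trace therefore tends to lengthen all semi-axes at once, which is why it serves as a homogeneous size criterion. The sketch below computes the semi-axes and checks this identity for a hypothetical matrix standing in for \(P+hQ\).

```python
import numpy as np

def semi_axes(M):
    """Semi-axis lengths and directions of E(M, 1) = {x : x' M x <= 1}, M symmetric positive definite."""
    eigvals, eigvecs = np.linalg.eigh(M)
    return 1.0 / np.sqrt(eigvals), eigvecs

M = np.array([[0.8, 0.2], [0.2, 0.3]])   # hypothetical stand-in for P + hQ
lengths, directions = semi_axes(M)
# trace(M) equals the sum of the inverse squared semi-axis lengths
assert np.isclose(np.trace(M), np.sum(1.0 / lengths**2))
# each semi-axis endpoint lies on the boundary x' M x = 1
for a, v in zip(lengths, directions.T):
    assert np.isclose((a * v) @ M @ (a * v), 1.0)
```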

In the same way, if \(x_t \in L_V(\beta )\), then from (8.16), \(x(t)\in \mathscr {E}(P,\beta )\). Then, to minimize the size of \(S_u\), one can simultaneously minimize \(\beta \) and maximize the trace of P. Therefore, the minimization of \({\text {trace}}(P+hQ)\) conflicts with the minimization of the set \(S_u\), and a trade-off between the two objectives needs to be considered. Moreover, the matrices P and Q do not directly appear in (8.13) and (8.14); thus, the trace minimization problem needs to be rewritten in terms of the decision variables. To this end, it is worthwhile to notice that, due to the positive definiteness of \(P+hQ\), the minimization of \({\text {trace}}(P+hQ)\) can be implicitly performed by maximizing \({\text {trace}}((P+hQ)^{-1})\). In particular, since

it turns out that \((P+hQ)^{-1}=W'(hU+L)^{-1}W.\) Then, thanks to [4], we get

Thus, given two tuning parameters \(\delta _1\) and \(\delta _2\), we can consider the following criterion

At this point, it is important to note that the conditions provided by Theorem 2 are nonlinear in the decision variables, which prevents them from being solved directly as a convex optimization problem. More specifically, this is an issue when products of decision matrices appear. On the other hand, products of a decision matrix with a scalar are numerically tractable if the scalar is either considered as a tuning parameter (but then there is no guarantee that the problem remains feasible) or fixed via an iterative search. Next, we consider the following additional constraints on the decision variables:

- \(T_2=\tau _2 \mathbf{1}\),

- \(T_3=\tau _3 \mathbf{1}\).

Considering \(\sigma ,\tau _2,\tau _3\) as tuning parameters, Problem 1 can be solved by solving the following LMI optimization problem:

5 Example

Consider the system described in [9]:

The time delay is fixed to \(h=0.2\) and the saturation level is \(\bar{u}=5\). We choose the quantization error bound as \(\varDelta =1\). Notice that, since the open-loop system is unstable (\({\text {spec}}(A)=\{-0.5,\,1\}\)), the closed-loop trajectories cannot converge to the origin due to the quantizer. Using an iterative search over the scalars \(\sigma ,\tau _2,\tau _3\), we obtain the gain

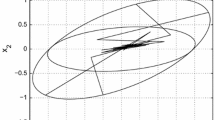

For different initial conditions, several trajectories are depicted in Fig. 8.2. The ellipsoids \(\mathscr {E}(P+hQ,1)\) and \(\mathscr {E}(P,\beta )\) are depicted in blue and dashed blue, respectively. The two red crosses are the two unstable equilibrium points due to the saturation. Furthermore, notice that a trajectory starting from an initial point outside the ellipsoid \(\mathscr {E}(P,1)\), and therefore such that \(x_0\) lies outside \(L_V(1)\), still converges into \(\mathscr {E}(P,\beta )\), which clearly indicates the conservatism of our technique. For \(x_0=[-7.7074 \;6.5731]'\), Fig. 8.3 shows the evolution of the control u. Its value saturates for less than half a second and does not converge to zero.
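
Level sets such as \(\mathscr {E}(P+hQ,1)\) and \(\mathscr {E}(P,\beta )\) can be drawn in the plane by mapping the unit circle through a factor of the weighting matrix: with \(M=CC'\) (Cholesky), every point \(x=\sqrt{\rho }\,(C')^{-1}z\) with \(|z|=1\) satisfies \(x'Mx=\rho \). The sketch below generates such boundary points; the matrix and the level are hypothetical, since the numerical values of P, Q, and \(\beta \) from the example are not reproduced here.

```python
import numpy as np

def ellipse_boundary(M, rho, num=200):
    """Points x with x' M x = rho (2-D, M symmetric positive definite), e.g. to plot E(M, rho)."""
    C = np.linalg.cholesky(M)                              # M = C C'
    theta = np.linspace(0.0, 2.0 * np.pi, num)
    circle = np.vstack((np.cos(theta), np.sin(theta)))     # unit circle, shape (2, num)
    return np.sqrt(rho) * np.linalg.solve(C.T, circle)     # x = sqrt(rho) (C')^{-1} z

P = np.array([[0.05, 0.01], [0.01, 0.03]])   # hypothetical weighting matrix
pts = ellipse_boundary(P, rho=0.2)
levels = np.einsum("in,ij,jn->n", pts, P, pts)   # x' P x for each column of pts
assert np.allclose(levels, 0.2)
```

The two rows of `pts` can be passed directly to any 2-D plotting routine to overlay the ellipsoid on simulated trajectories.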

6 Conclusion

In this chapter, we tackled the stabilization problem for linear systems with saturated, quantized, and delayed input. Specifically, the proposed methodology allows the controller to be designed so as to achieve local ultimate boundedness for the closed-loop system. Moreover, the control design problem is turned into an optimization problem aimed at optimizing the sizes of the outer set \(S_0\) and of the inner set \(S_u\). Such an optimization problem can be solved in a convex optimization setup, providing a computer-oriented solution. The effectiveness of the proposed strategy is supported by a numerical example. Future work should be devoted to the use of more complex Lyapunov functionals depending on extra states and slack variables, as well as to the extension of these results to the case of time-varying delays.

References

[1] S. Boyd, L. El Ghaoui, E. Feron, V. Balakrishnan, Linear Matrix Inequalities in System and Control Theory (Society for Industrial and Applied Mathematics, 1997)

[2] R.W. Brockett, D. Liberzon, Quantized feedback stabilization of linear systems. IEEE Trans. Autom. Control 45(7), 1279–1289 (2000)

[3] F. Ceragioli, C. De Persis, P. Frasca, Discontinuities and hysteresis in quantized average consensus. Automatica 47(9), 1919–1928 (2011)

[4] J. Daafouz, J. Bernussou, Parameter dependent Lyapunov functions for discrete time systems with time varying parametric uncertainties. Syst. Control Lett. 43(5), 355–359 (2001)

[5] C. De Persis, F. Mazenc, Stability of quantized time-delay nonlinear systems: a Lyapunov-Krasowskii-functional approach. Math. Control Signals Syst. 21(4), 337–370 (2010)

[6] D.F. Delchamps, Stabilizing a linear system with quantized state feedback. IEEE Trans. Autom. Control 35(8), 916–924 (1990)

[7] F. Ferrante, F. Gouaisbaut, S. Tarbouriech, Observer-based control for linear systems with quantized output, in European Control Conference (2014)

[8] F. Ferrante, F. Gouaisbaut, S. Tarbouriech, Stabilization of continuous-time linear systems subject to input quantization. Automatica 58, 167–172 (2015)

[9] E. Fridman, M. Dambrine, Control under quantization, saturation and delay: an LMI approach. Automatica 45(10), 2258–2264 (2009)

[10] M. Fu, L. Xie, The sector bound approach to quantized feedback control. IEEE Trans. Autom. Control 50(11), 1698–1711 (2005)

[11] K. Gu, V.L. Kharitonov, J. Chen, Stability of Time-Delay Systems. Control Engineering (Birkhäuser Boston, 2003)

[12] I.L. Hurtado, C.T. Abdallah, C. Canudas-de Wit, Control under limited information: special issue (part I). Int. J. Robust Nonlinear Control 19(16), 1767–1769 (2009)

[13] H.K. Khalil, Nonlinear Systems, 3rd edn. (Prentice-Hall, 2002)

[14] D. Liberzon, Quantization, time delays, and nonlinear stabilization. IEEE Trans. Autom. Control 51(7), 1190–1195 (2006)

[15] D. Liberzon, Nonlinear control with limited information. Commun. Inf. Syst. 9(1), 41–58 (2009)

[16] A. Seuret, F. Gouaisbaut, Wirtinger-based integral inequality: application to time-delay systems. Automatica 49(9), 2860–2866 (2013)

[17] S. Tarbouriech, G. Garcia, J.M. Gomes da Silva Jr., I. Queinnec, Stability and Stabilization of Linear Systems with Saturating Actuators (Springer, 2011)

[18] S. Tarbouriech, F. Gouaisbaut, Control design for quantized linear systems with saturations. IEEE Trans. Autom. Control 57(7), 1883–1889 (2012)

[19] S. Tarbouriech, C. Prieur, J.M. Gomes da Silva Jr., Stability analysis and stabilization of systems presenting nested saturations. IEEE Trans. Autom. Control 51(8), 1364–1371 (2006)


Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Ferrante, F., Gouaisbaut, F., Tarbouriech, S. (2016). Stabilization by Quantized Delayed State Feedback. In: Seuret, A., Hetel, L., Daafouz, J., Johansson, K. (eds) Delays and Networked Control Systems. Advances in Delays and Dynamics, vol 6. Springer, Cham. https://doi.org/10.1007/978-3-319-32372-5_8


DOI: https://doi.org/10.1007/978-3-319-32372-5_8


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-32371-8

Online ISBN: 978-3-319-32372-5

eBook Packages: EngineeringEngineering (R0)